Existing Buildings Recognition and BIM Generation Based on Multi-Plane Segmentation and Deep Learning

Abstract

1. Introduction

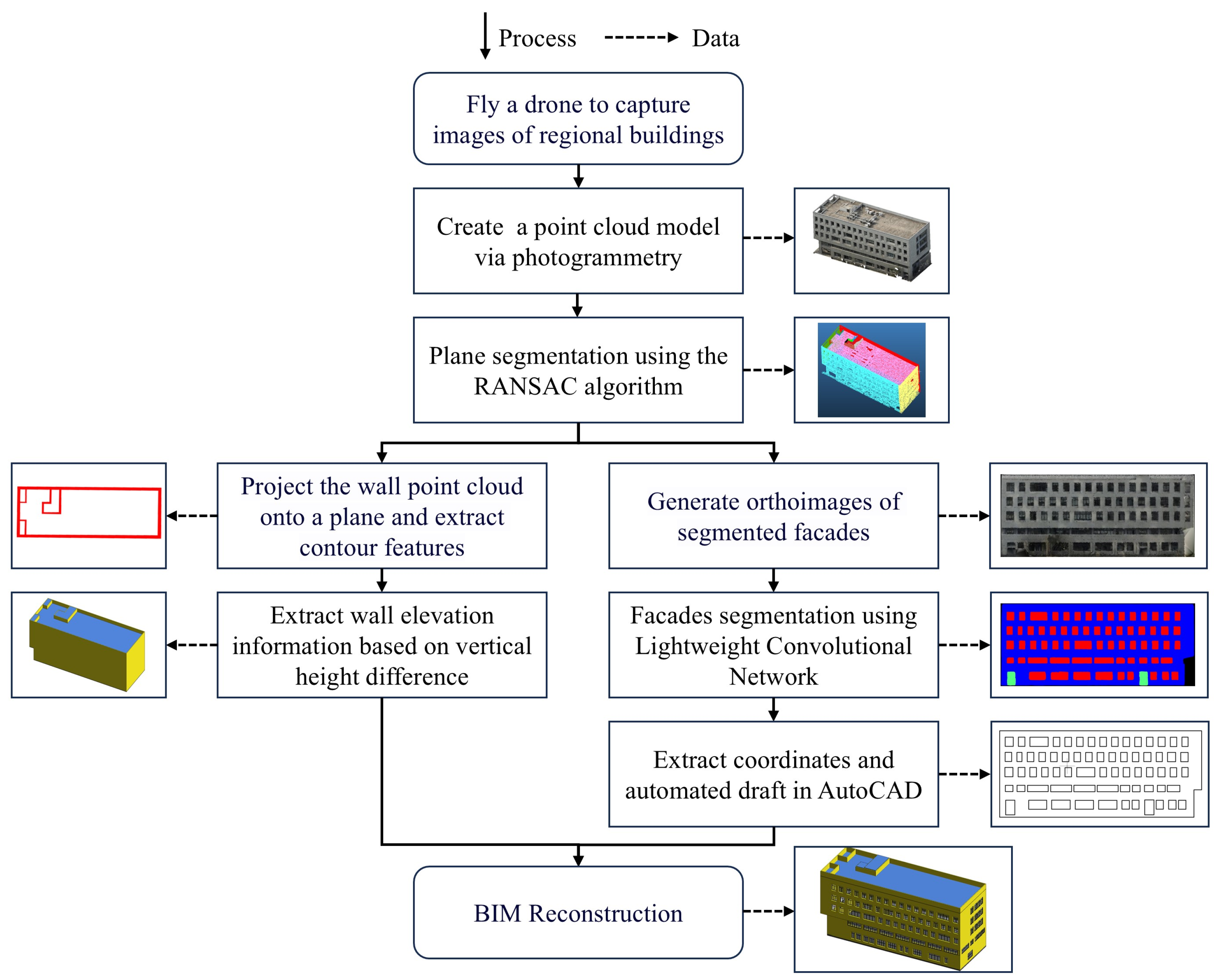

- Capturing multi-angle images of buildings using drones and generating point cloud data through 3D reconstruction software;

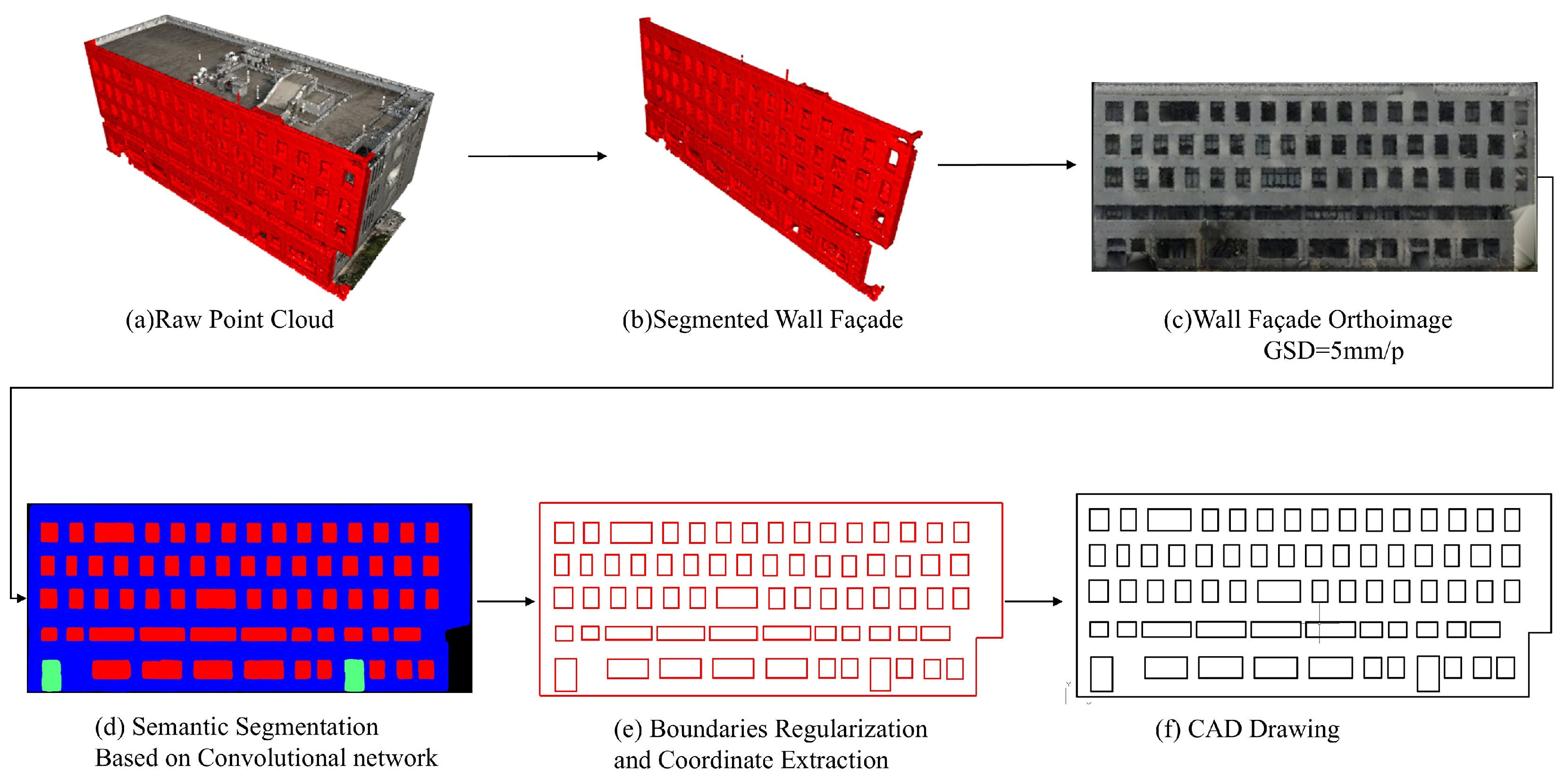

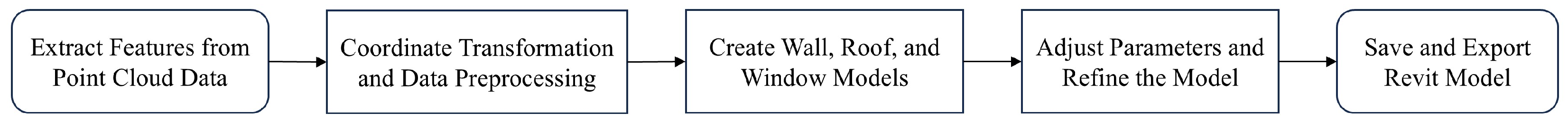

- Extracting key features from external walls and roofs, generating orthophotos of building façades, and performing semantic segmentation using a lightweight convolutional encoder–decoder model to identify door and window features, followed by the automatic generation of AutoCAD elevation drawings;

- Finally, integrating the extracted features to reconstruct the BIM.

2. Methodology

2.1. 3D Point Cloud Modeling

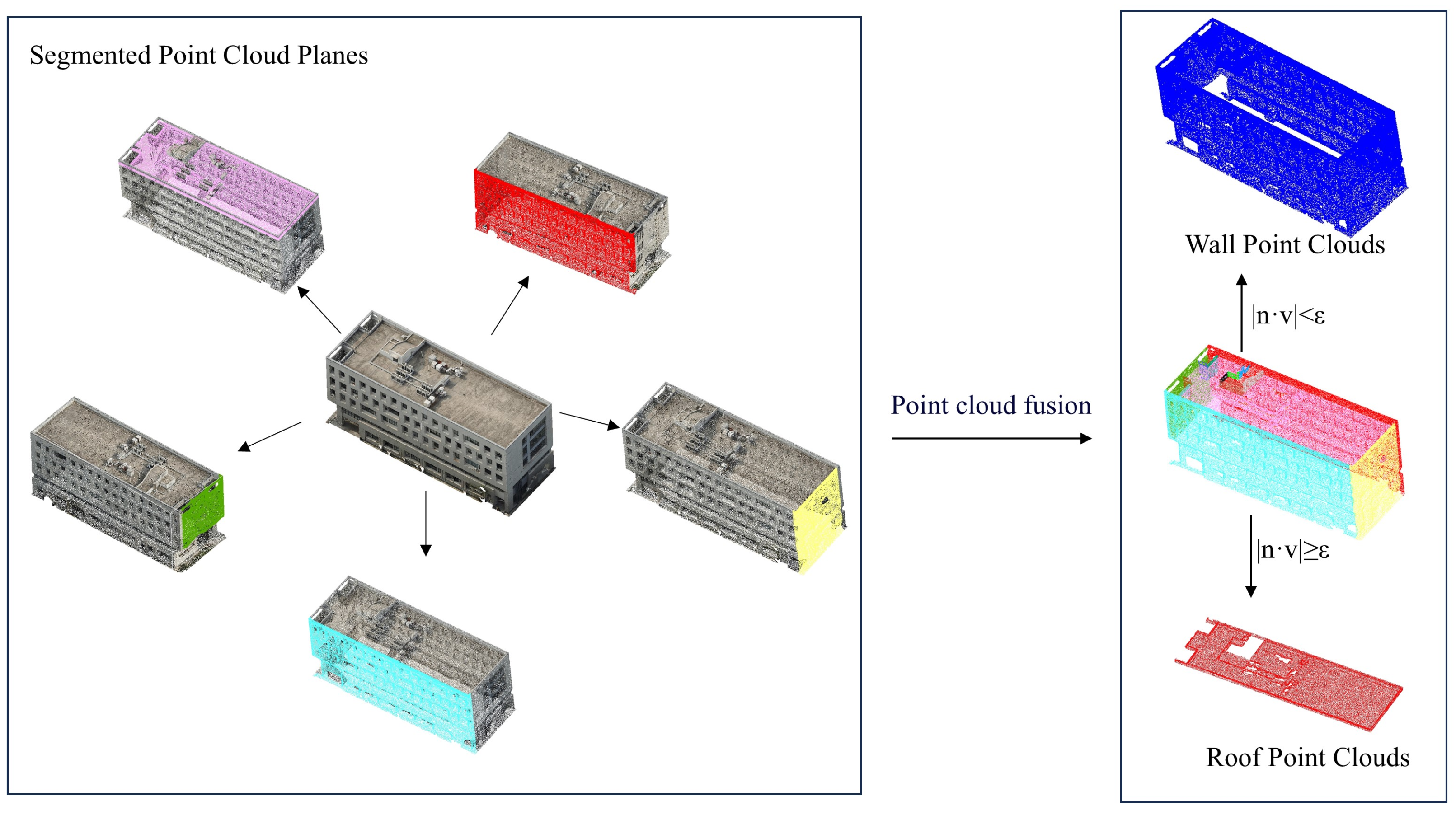

2.2. Multi-Plane Segmentation of Point Clouds

- Randomly sample three data points from the point cloud set P and compute the parameters Mp of the fitted plane;

- Validate the fitted plane by classifying points that satisfy the parameters Mp as inliers and those that do not as outliers. Record the current number of inliers;

- Check the termination conditions:

  - If the proportion of inliers in the current plane exceeds a predefined threshold, or if the number of random sampling iterations reaches the maximum limit, the algorithm terminates;

  - Otherwise, repeat the loop by sampling three new data points from P;

- During the iterations, fit new plane parameters and validate them. Models with fewer inliers are discarded, while those with more inliers are retained, so that the final model parameters p correspond to the best-supported plane among those sampled for the segmentation task.

| Algorithm 1. Simplified pseudocode representation of the RANSAC algorithm. |
|---|
| Require: Point cloud P, threshold T, number of iterations K |
| Ensure: Optimal fitted plane p |
| 1: p ← null |
| 2: best_score ← 0 |
| 3: for k = 1 to K do |
| 4: Randomly select the minimal sample set from point cloud P |
| 5: Fit the plane M using the sample set, obtaining the plane parameters |
| 6: inliers ← [] |
| 7: for each point q in P do |
| 8: if q is consistent with the fitted plane M then |
| 9: Add q to inliers |
| 10: end if |
| 11: end for |
| 12: if the number of inliers is greater than best_score then |
| 13: Update best_score ← number of inliers and p ← M |
| 14: end if |
| 15: end for |
| 16: Return p |
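
The loop in Algorithm 1 can be sketched in Python with NumPy. This is a minimal illustration rather than the authors' implementation: the function names `fit_plane` and `ransac_plane`, the use of a point-to-plane distance threshold as the consistency test, and the optional `stop_ratio` early exit (mirroring the inlier-proportion termination condition in the step list) are all assumptions made for the example.

```python
import numpy as np

def fit_plane(sample):
    """Plane through three points as (unit normal n, offset d) with n.x + d = 0.

    Returns None when the three points are (nearly) collinear.
    """
    p0, p1, p2 = sample
    normal = np.cross(p1 - p0, p2 - p0)
    length = np.linalg.norm(normal)
    if length < 1e-12:
        return None
    normal = normal / length
    return normal, -float(normal @ p0)

def ransac_plane(points, threshold, max_iters, stop_ratio=None, rng=None):
    """Hypothetical helper: keep the sampled plane with the most inliers."""
    rng = np.random.default_rng() if rng is None else rng
    n = len(points)
    best_model, best_mask = None, np.zeros(n, dtype=bool)
    for _ in range(max_iters):
        # Minimal sample set for a plane: three distinct points.
        sample = points[rng.choice(n, size=3, replace=False)]
        model = fit_plane(sample)
        if model is None:
            continue
        normal, d = model
        # Assumed consistency test: point-to-plane distance within threshold.
        mask = np.abs(points @ normal + d) <= threshold
        if mask.sum() > best_mask.sum():
            best_model, best_mask = model, mask
            # Optional early exit once the inlier proportion is high enough.
            if stop_ratio is not None and mask.mean() > stop_ratio:
                break
    return best_model, best_mask
```

Calling `ransac_plane(points, threshold=0.02, max_iters=500)` on an `(N, 3)` array returns the plane parameters and a boolean inlier mask; the threshold and iteration count shown here are placeholder values, not settings taken from the paper.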

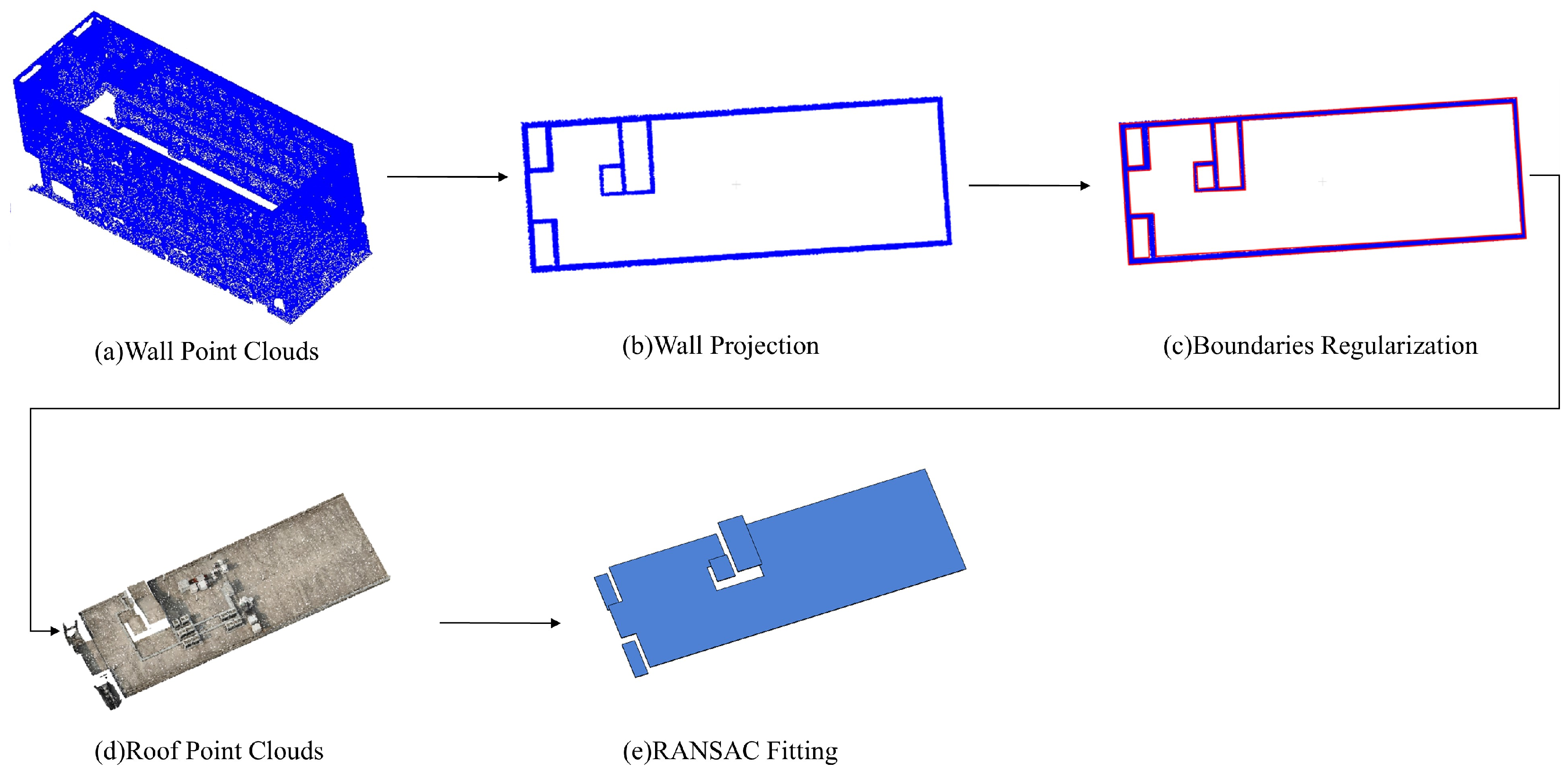

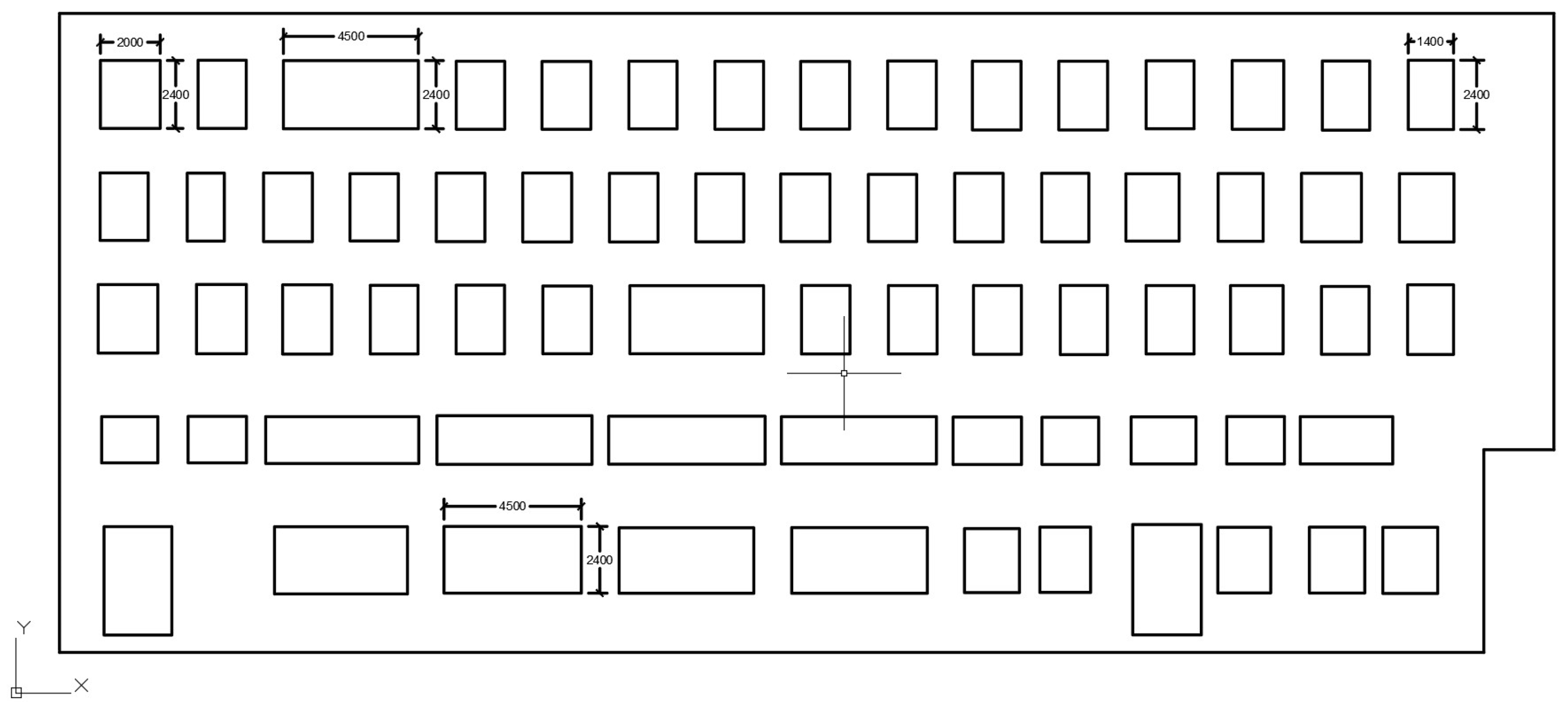

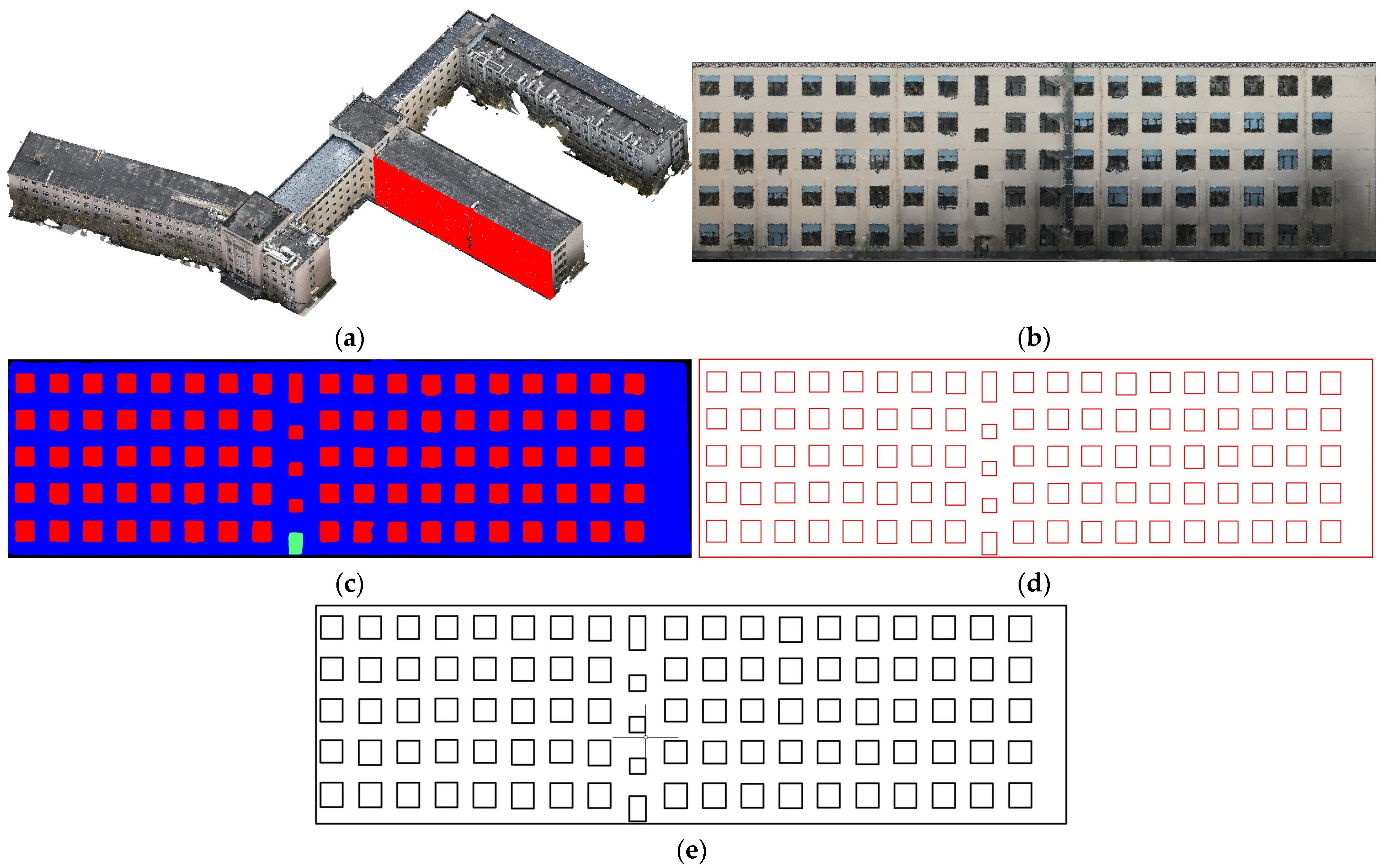

2.3. Key Building Feature Extraction

2.3.1. External Wall and Roof Feature Extraction

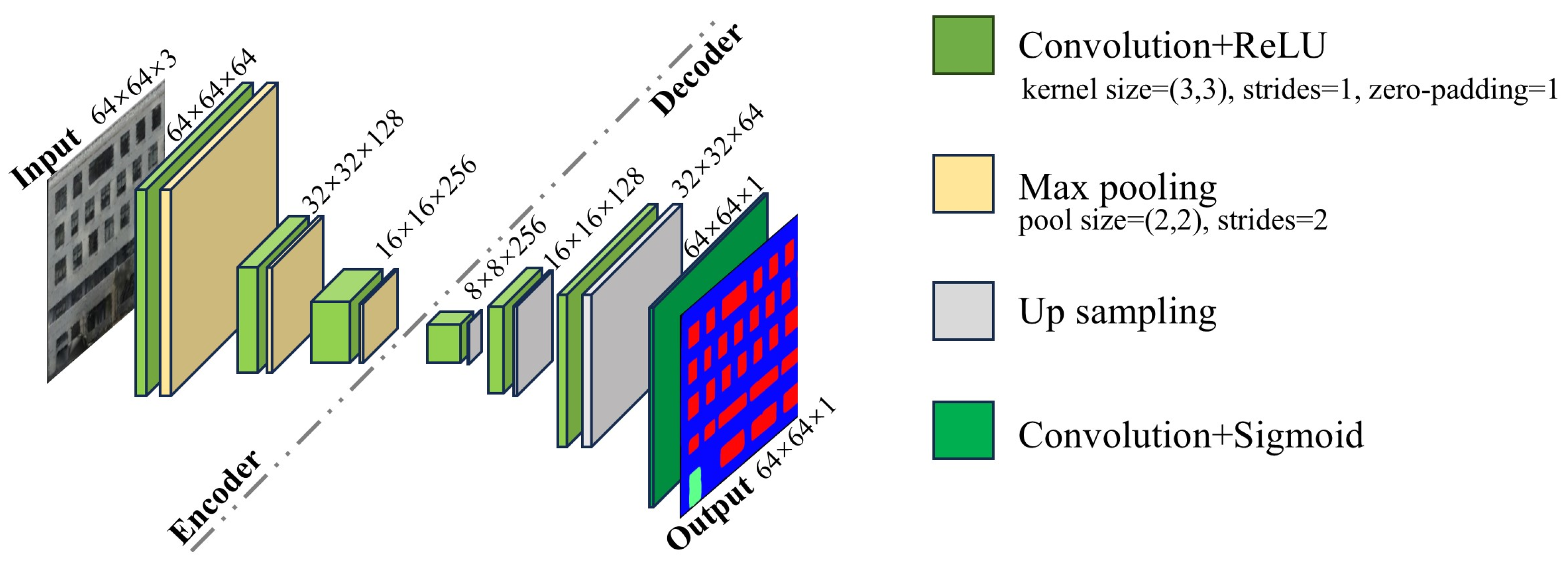

2.3.2. Door and Window Feature Extraction

2.4. BIM Generation

3. Experiments

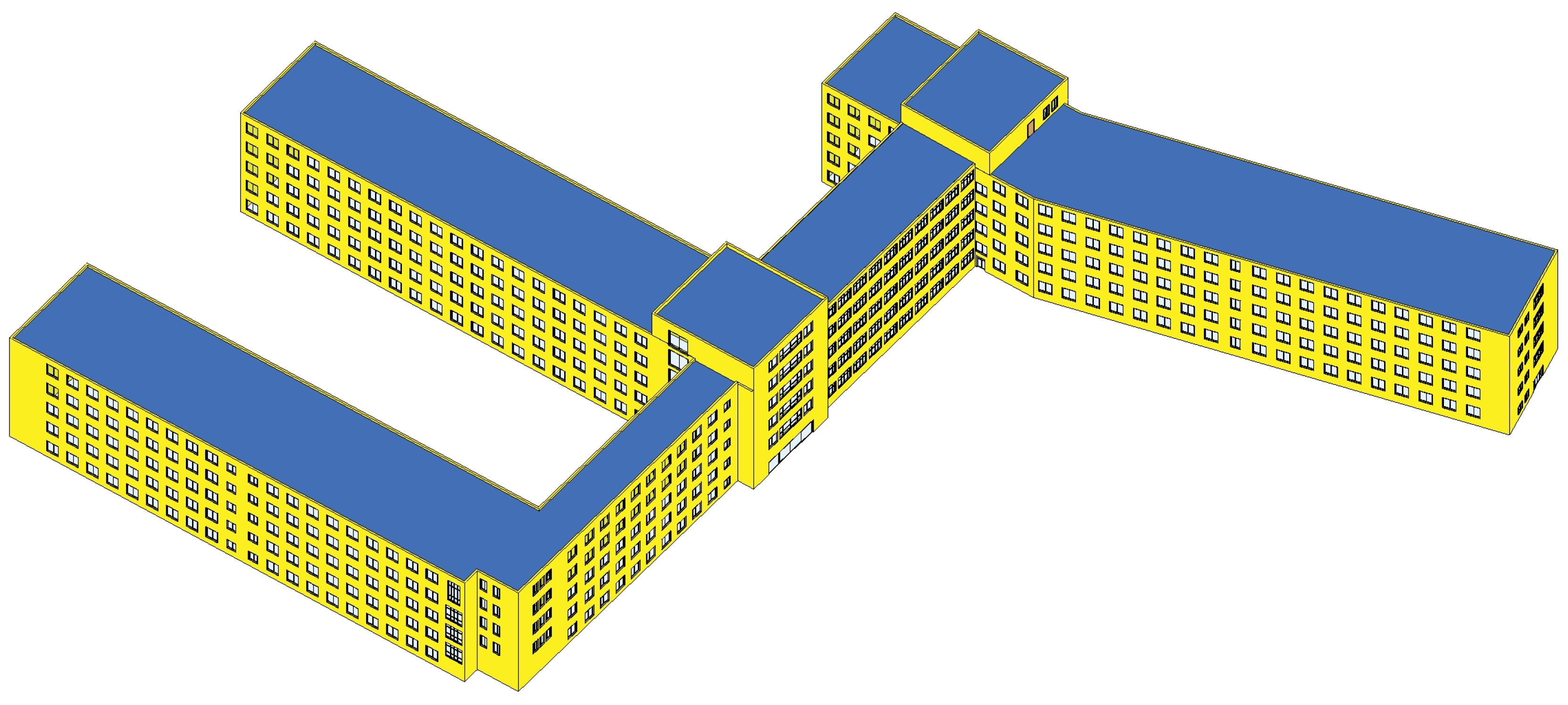

3.1. Case Study

3.2. Evaluation Metrics

3.2.1. Semantic Segmentation Metrics

3.2.2. Model Accuracy Metrics

4. Results

4.1. Performance of Deep Learning

4.2. Analysis of BIM Reconstruction Results

5. Conclusions

- Multi-plane segmentation: Using RANSAC-based multi-plane segmentation techniques, this method effectively separates wall, roof, and other planar features from point clouds, enabling the efficient and accurate extraction of building feature data. This automated approach not only enhances the speed of point cloud processing but also minimizes the need for manual intervention.

- Key feature extraction: A novel approach was developed for extracting external wall and roof features. Additionally, a lightweight convolutional encoder–decoder deep learning model was employed to perform pixel-level segmentation on façade orthoimages, enabling the accurate and efficient extraction of door and window features.

- Evaluation metrics: Length and position evaluation metrics were designed to assess the reconstruction accuracy of the building information model. These metrics consider both the dimensions of building elements and their spatial positions, giving a more complete picture of the model’s precision.

- In cases where buildings are obstructed by trees, dense vegetation, or other structures, missing point cloud data may occur, which limits the accuracy of feature extraction for walls and their associated doors and windows.

- This method is currently primarily suitable for buildings with relatively simple geometric shapes; improvements are needed to handle complex curved walls and irregular roof structures, particularly in terms of segmentation and feature fitting.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Value/Description |
|---|---|
| Dataset size | 300 images (240/30/30 split) |
| Data augmentation | Rotation, flipping, brightness adjustment |
| Batch size | 8 |
| Optimizer | Adam |
| Learning rate | 1 × 10⁻⁴ |
| Loss function | Cross-entropy loss |
| Epochs | 200 |
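
For readers who want to reproduce a comparable setup, the hyperparameters in the table above can be collected in a small configuration object. The sketch below is illustrative only: the class name `TrainConfig`, the field names, and the reading of "240/30/30" as training/validation/test counts in that order are assumptions, not details confirmed by the table.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    # Assumed order of the 240/30/30 split: train / validation / test.
    n_train: int = 240
    n_val: int = 30
    n_test: int = 30
    batch_size: int = 8
    optimizer: str = "adam"
    learning_rate: float = 1e-4
    loss: str = "cross_entropy"
    epochs: int = 200

    @property
    def split_fractions(self):
        """Fraction of the whole dataset in each subset."""
        total = self.n_train + self.n_val + self.n_test
        return tuple(n / total for n in (self.n_train, self.n_val, self.n_test))

    @property
    def steps_per_epoch(self):
        """Number of batches per epoch (ceiling division)."""
        return -(-self.n_train // self.batch_size)

    @property
    def total_updates(self):
        """Optimizer updates over the whole run."""
        return self.steps_per_epoch * self.epochs

cfg = TrainConfig()
```

Under these assumptions the split is 80/10/10, each epoch has 30 batches, and a full run performs 6000 optimizer updates.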

| Model | Parameters (M) | mIoU (%) | PA (%) | Inference Time (ms) |
|---|---|---|---|---|
| U-Net | 31.0 | 93.1 | 95.7 | 120 |
| DeepLabV3+ | 59.8 | 94.2 | 96.5 | 180 |
| Proposed Model | 12.4 | 92.3 | 94.1 | 70 |
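
The mIoU and PA columns above are standard semantic segmentation metrics. As a hedged sketch of how such values are commonly computed from label maps, the NumPy code below builds a confusion matrix and derives pixel accuracy and mean intersection over union from it. The function names are hypothetical, and the paper's exact definitions (for example, which classes are averaged) may differ.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are ground-truth classes, columns are predicted classes."""
    idx = n_classes * y_true.ravel() + y_pred.ravel()
    counts = np.bincount(idx, minlength=n_classes ** 2)
    return counts.reshape(n_classes, n_classes)

def pixel_accuracy(cm):
    """Share of pixels whose predicted class matches the ground truth."""
    return np.trace(cm) / cm.sum()

def mean_iou(cm):
    """Mean over classes of TP / (TP + FP + FN), skipping absent classes."""
    tp = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp
    valid = union > 0
    return (tp[valid] / union[valid]).mean()

# Tiny 2 x 3 label maps with three classes, for illustration only.
y_true = np.array([[0, 0, 1], [1, 2, 2]])
y_pred = np.array([[0, 1, 1], [1, 2, 0]])
cm = confusion_matrix(y_true, y_pred, n_classes=3)
pa = pixel_accuracy(cm)
miou = mean_iou(cm)
```

In this toy case four of six pixels are correct, so PA is 4/6, and the per-class IoU values 1/3, 2/3, and 1/2 average to an mIoU of 0.5.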

| Category | Measured Quantity |
|---|---|
| Door and window width | 104 |
| Door and window height | 104 |
| Window horizontal coordinate x | 104 |
| Window vertical coordinate y | 104 |
| Wall length | 18 |
| Wall height | 18 |

| Instance | (m) | (m) | Absolute difference (m) |
|---|---|---|---|
| Door width 1 | 1.35 | 1.32 | 0.03 |
| Door height 1 | 2.15 | 2.21 | 0.06 |
| Door width 2 | 1.34 | 1.39 | 0.05 |
| Door height 2 | 2.13 | 2.10 | 0.03 |
| Window width 1 | 2.03 | 1.98 | 0.05 |
| Window height 1 | 2.41 | 2.40 | 0.01 |
| Window width 2 | 4.53 | 4.55 | 0.02 |
| Window height 2 | 2.42 | 2.45 | 0.03 |
| ⋯ | | | |
| Total | L = 780.74 | | |
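
The last numeric column of the length table equals the absolute difference between the first two. The short Python check below verifies this for the eight rows shown and computes their mean absolute error. It is only an illustration on the visible rows: it does not reproduce the paper's length metric or the total L = 780.74, and the variable names are hypothetical.

```python
# (first value, second value, reported difference), all in metres,
# copied from the eight visible rows of the table.
rows = {
    "Door width 1": (1.35, 1.32, 0.03),
    "Door height 1": (2.15, 2.21, 0.06),
    "Door width 2": (1.34, 1.39, 0.05),
    "Door height 2": (2.13, 2.10, 0.03),
    "Window width 1": (2.03, 1.98, 0.05),
    "Window height 1": (2.41, 2.40, 0.01),
    "Window width 2": (4.53, 4.55, 0.02),
    "Window height 2": (2.42, 2.45, 0.03),
}

# Recompute each absolute difference from the two length columns.
abs_errors = {name: abs(a - b) for name, (a, b, _) in rows.items()}

# Compare with the reported column at the table's two-decimal precision.
matches_reported = all(
    round(abs_errors[name], 2) == reported
    for name, (_, _, reported) in rows.items()
)

# Mean absolute error over the visible rows only.
mae = sum(abs_errors.values()) / len(abs_errors)
```

For these eight rows the recomputed differences agree with the reported column, and the mean absolute error is about 0.035 m.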

| Instance | (m) | (m) | (m) | (m) |
|---|---|---|---|---|
| Door 1 | 1.74 | 0.40 | 1.69 | 0.39 |
| Door 2 | 37.8 | 0.41 | 38.2 | 0.38 |
| Window 1 | 0.66 | 17.55 | 0.62 | 17.60 |
| Window 2 | 3.57 | 17.60 | 3.55 | 17.64 |
| Window 3 | 6.22 | 17.62 | 6.25 | 17.63 |
| ⋯ | | | | |
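
A positional deviation can be derived from the coordinate table in the same spirit. The sketch below assumes, without confirmation from the extracted headers, that the four columns are an (x, y) pair from one source followed by the (x, y) pair from the other, and it uses the Euclidean distance between the two pairs as an illustrative error measure. The paper's own position metric may be defined differently.

```python
import math

# Assumed column order: x_a, y_a, x_b, y_b (metres).
rows = {
    "Door 1": (1.74, 0.40, 1.69, 0.39),
    "Door 2": (37.8, 0.41, 38.2, 0.38),
    "Window 1": (0.66, 17.55, 0.62, 17.60),
    "Window 2": (3.57, 17.60, 3.55, 17.64),
    "Window 3": (6.22, 17.62, 6.25, 17.63),
}

def position_error(x_a, y_a, x_b, y_b):
    """Euclidean distance between the two (x, y) positions."""
    return math.hypot(x_a - x_b, y_a - y_b)

errors = {name: position_error(*coords) for name, coords in rows.items()}

# Element with the largest positional deviation among the visible rows.
worst = max(errors, key=errors.get)
```

Under this reading, Door 2 shows the largest deviation among the visible rows (about 0.40 m, almost entirely along x), while the other four elements deviate by roughly 0.03 to 0.06 m.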


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, D.; Liu, J.; Jiang, H.; Liu, P.; Jiang, Q. Existing Buildings Recognition and BIM Generation Based on Multi-Plane Segmentation and Deep Learning. Buildings 2025, 15, 691. https://doi.org/10.3390/buildings15050691
