Determining the Most Consensus-Based Assessment Method for Social Sustainability—Case Study of a Suburb of Karlstad, Sweden

Abstract

1. Introduction and Background

- Measure the level of agreement among assessors using three distinct assessment methods.

- Measure the rate of consonance of various assessment methods by comparing them to Woxnerud’s [24] theory-based method.

2. Literature Review

Survey, Structured Interview, and Sampling in Qualitative Research

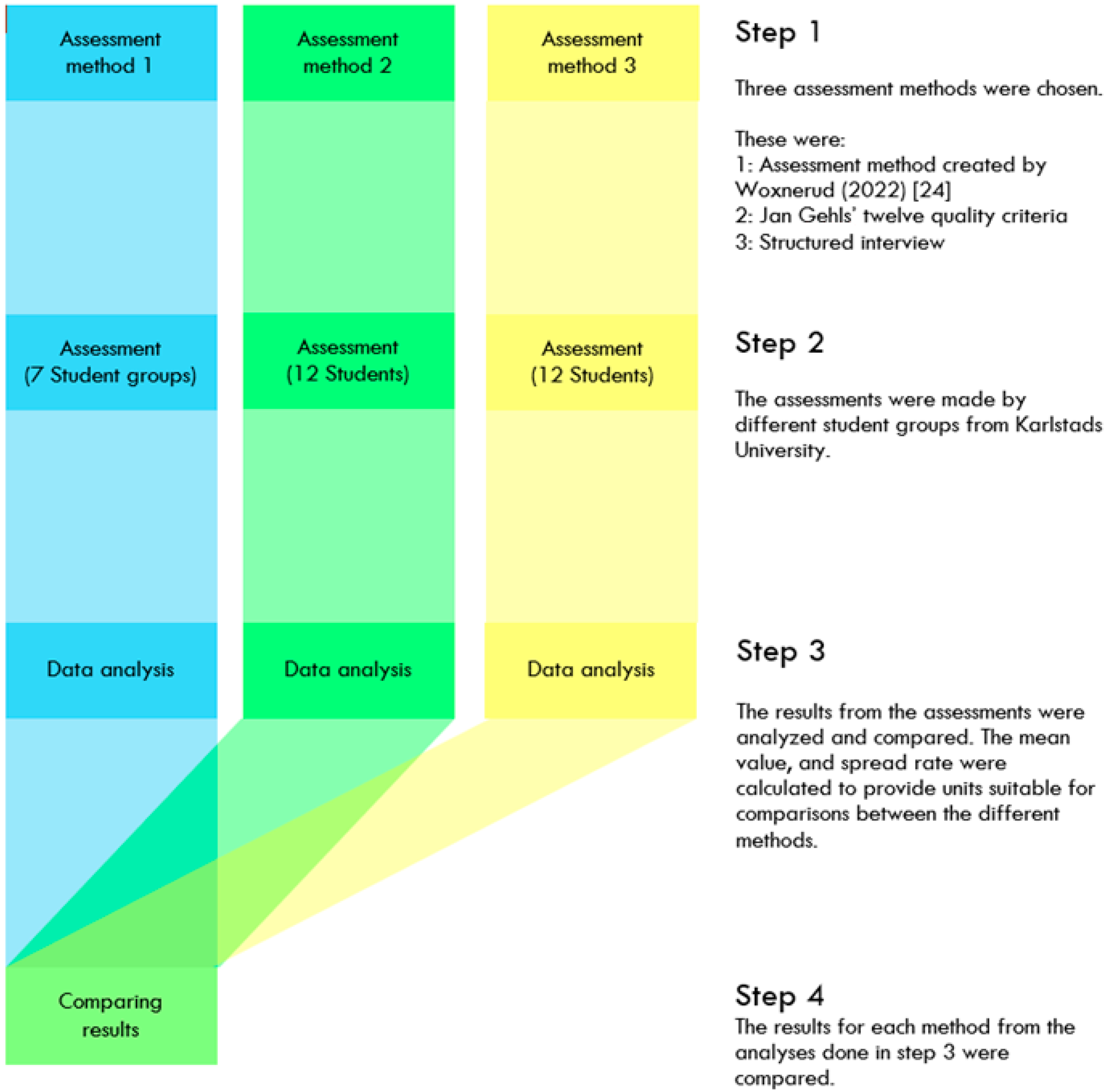

3. The Method

3.1. The Assessed Site

3.2. Methodology

3.3. Step 1: Selection of Three Assessment Methods

3.3.1. Step 1.1: Assessment Method Created by Woxnerud [24] (Assessment Method 1)

3.3.2. Step 1.2: Jan Gehl’s Twelve Quality Criteria (Assessment Method 2)

3.3.3. Step 1.3: Structured Interviews (Assessment Method 3)

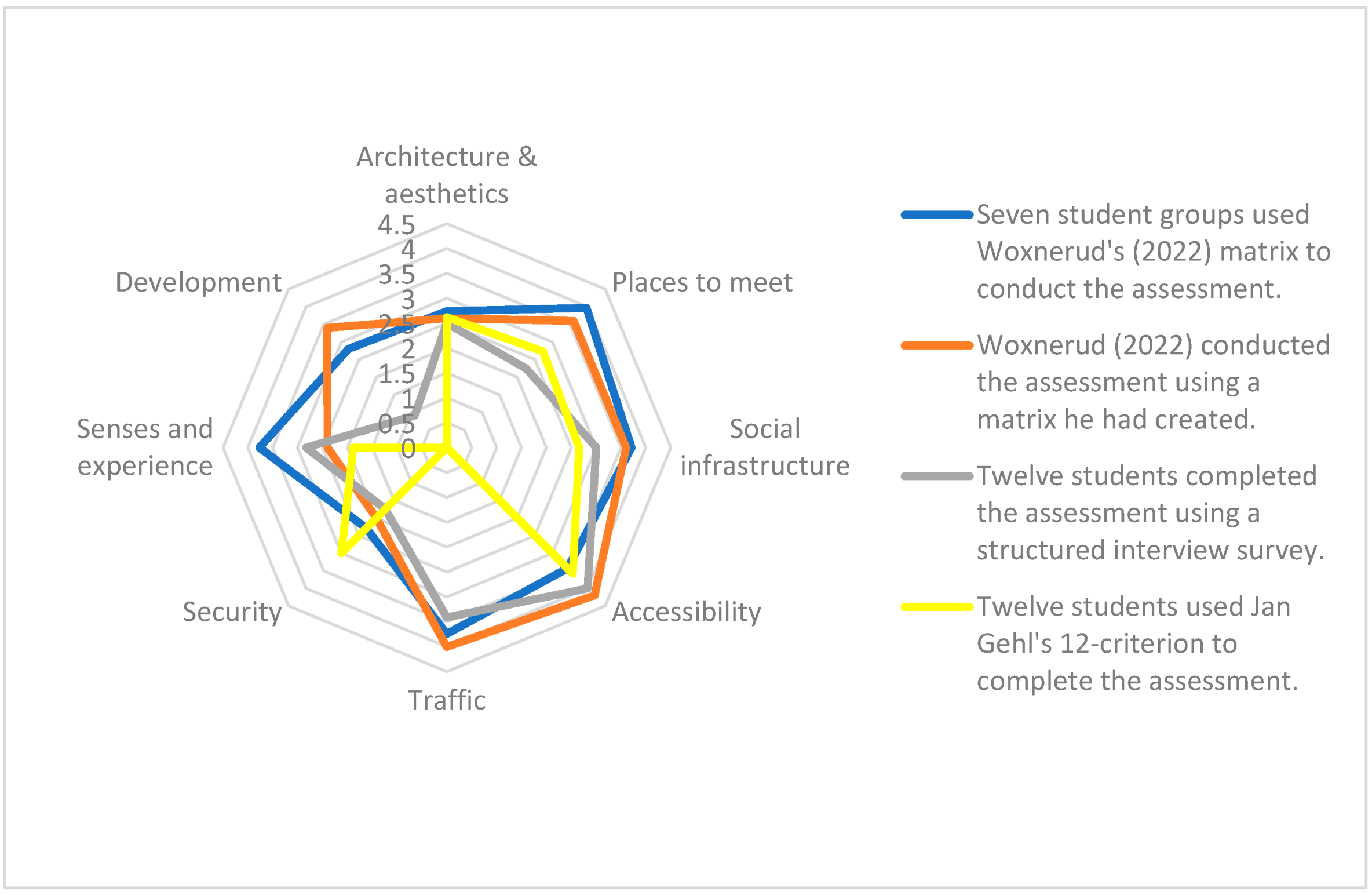

3.4. Step 2: Assessment

- Seven groups of five undergraduate students from Karlstad University’s Building and Construction Engineering program conducted the first assessment using Woxnerud’s 2022 method (Assessment Method 1) at the beginning of September 2022, in slightly colder weather than during the two later assessments. They graded all 40 questions on a five-point Likert scale as follows: very bad = 1, bad = 2, neutral = 3, good = 4, and very good = 5. After entering all the grades, they calculated the average score for each of the eight categories and presented the results graphically in a radar chart (see Figure 2).

- Twelve undergraduate students from the same Building and Construction Engineering program at Karlstad University conducted the second assessment in May 2024, under sunny and slightly windy conditions. This assessment was guided by Jan Gehl’s twelve quality criteria (Assessment Method 2).

- In May 2024, twelve undergraduate students from the same program at Karlstad University conducted the third assessment under sunny and slightly windy conditions, guided by the structured interview matrix (Assessment Method 3). The students first observed the site for approximately 20 min and then answered all the questions. The authors converted the answers to the last eight questions (Questions 3 to 10, one for each category of the matrix) into ratings on a five-point Likert scale: 1 = very bad impression, 2 = bad impression, 3 = neutral impression, 4 = good impression, and 5 = very good impression (refer to Table 4b). Weller and Romney [28] argue that rating scales can occasionally be used in this way, provided that both positive and negative items are carefully included and the entire range of scale values is used. After entering all the grades, the authors calculated the average score for each of the eight categories and presented the results graphically in a radar chart.
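The grading and averaging steps described above can be sketched in Python. The sketch assumes that each category score is the unweighted arithmetic mean of the question grades in that category (five questions per category in Assessment Method 1); the names `LIKERT`, `grade`, and `category_score` are illustrative and not taken from the study. The example uses Group A’s grades for the Accessibility questions 4a to 4e from the per-question table.

```python
from statistics import mean

# Five-point Likert scale used in the assessments (verbal grade -> score).
LIKERT = {
    "very bad": 1,
    "bad": 2,
    "neutral": 3,
    "good": 4,
    "very good": 5,
}


def grade(answer: str) -> int:
    """Convert a verbal grade such as 'good' into its Likert score."""
    return LIKERT[answer.strip().lower()]


def category_score(grades: list[int]) -> float:
    """Assumed rule: unweighted mean of the question grades in one category."""
    if not grades:
        raise ValueError("a category needs at least one graded question")
    if any(g not in LIKERT.values() for g in grades):
        raise ValueError("grades must be integers from 1 to 5")
    return mean(grades)


# Group A's verbal grades for Accessibility questions 4a to 4e.
answers = ["very good", "neutral", "good", "very good", "very bad"]
group_a_accessibility = [grade(a) for a in answers]

print(group_a_accessibility)                  # [5, 3, 4, 5, 1]
print(category_score(group_a_accessibility))  # 3.6
```

Repeating `category_score` for each of the eight categories yields the eight values that are plotted on the radar chart.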

3.5. Step 3: Analyzing Results

- Formula (1)—Mean value calculation

- Formula (2)—Spread rate calculation
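A minimal sketch of the two formulas follows, assuming that Formula (1) is the arithmetic mean (the sum of the n assessors’ scores divided by n) and that Formula (2) is the range, i.e., the highest score minus the lowest, as the per-question table labels its spread column “Spread (Highest–Lowest)”. The function names are illustrative. The example reuses the seven student groups’ Architecture & esthetics scores from Assessment Method 1.

```python
def mean_value(scores: list[float]) -> float:
    """Formula (1), assumed: arithmetic mean of the assessors' scores."""
    if not scores:
        raise ValueError("scores must not be empty")
    return sum(scores) / len(scores)


def spread(scores: list[float]) -> float:
    """Formula (2), assumed: highest score minus lowest score."""
    if not scores:
        raise ValueError("scores must not be empty")
    return max(scores) - min(scores)


# Architecture & esthetics scores of student groups A to G (Assessment Method 1).
architecture = [3.4, 1.8, 2.6, 3.2, 2.8, 2.0, 3.4]

print(round(mean_value(architecture), 2))  # 2.74
print(round(spread(architecture), 2))      # 1.6
```

Under this reading, a smaller spread indicates closer agreement among the assessors, which is how the consensus of each method can be compared.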

3.6. Step 4: Comparing Results

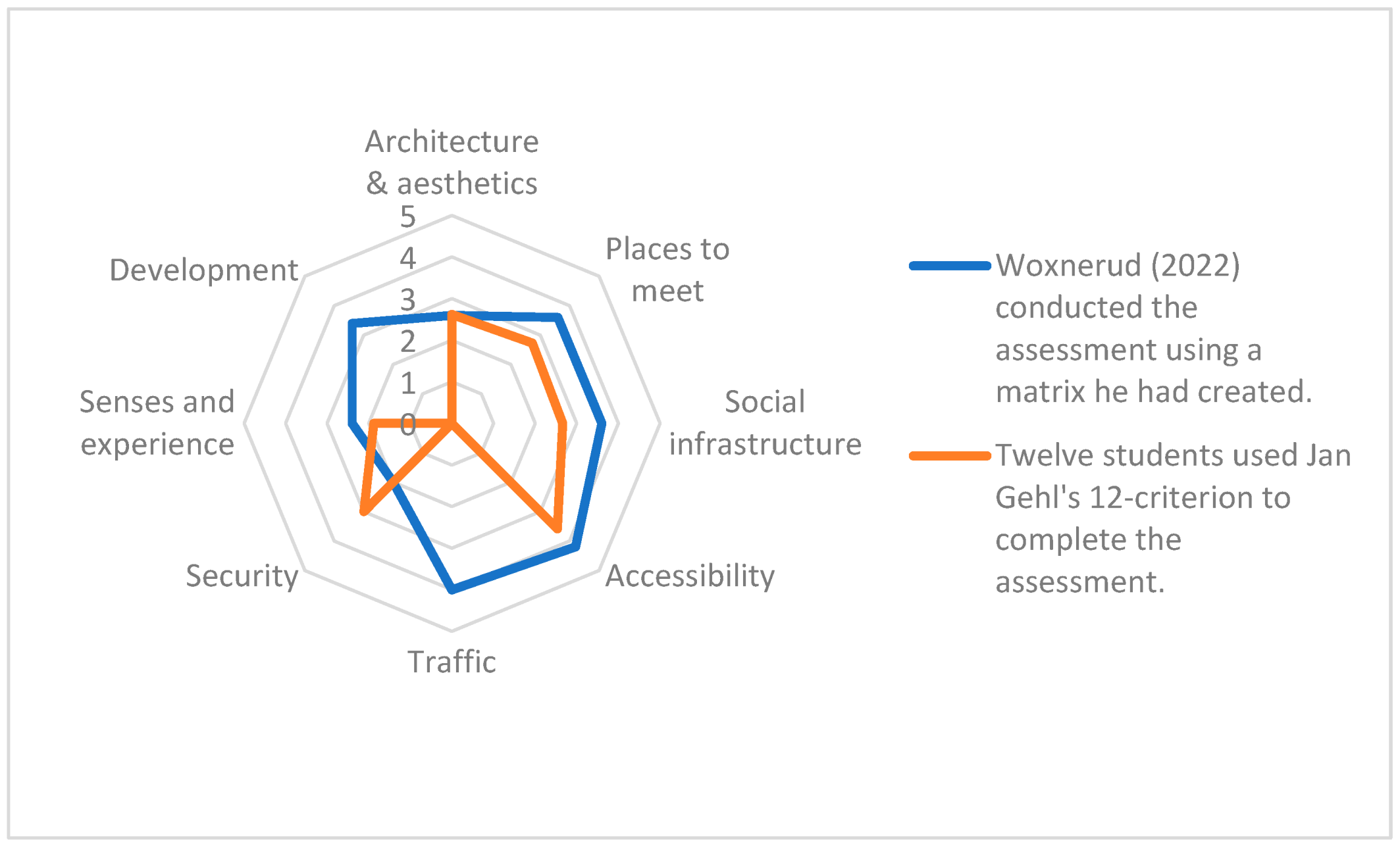

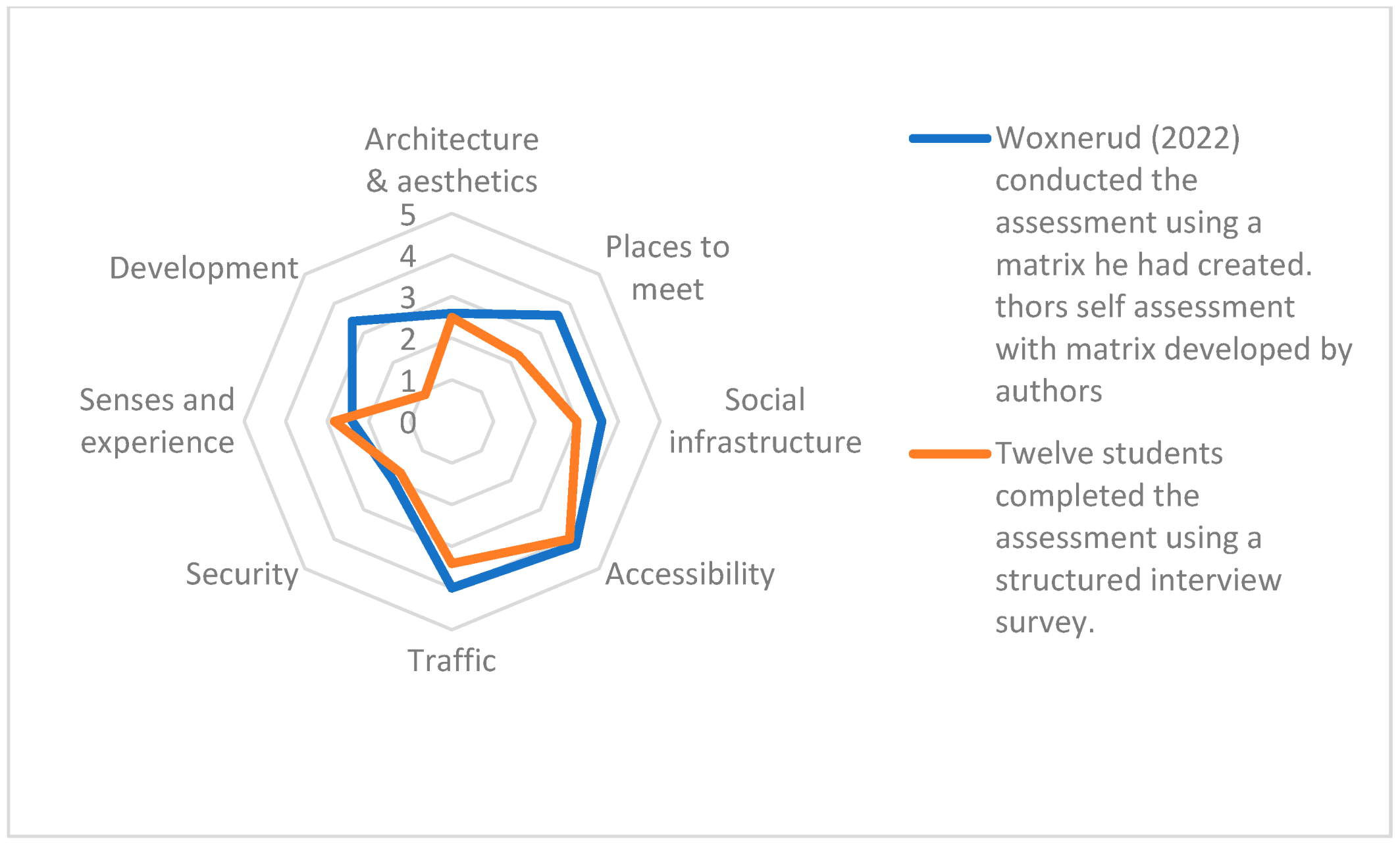
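The comparison step can be sketched as follows, assuming that each “result difference” is a method’s category mean minus the score from Woxnerud’s own assessment, and that the average difference is the sum of the eight signed differences divided by eight. With the Assessment Method 1 values from the comparison table, this reproduces the reported 0.65/8 = 0.08. The function names are illustrative.

```python
# Woxnerud's own category scores (reference) and the seven student groups'
# mean scores obtained with the same matrix (Assessment Method 1).
CATEGORIES = [
    "Architecture & esthetics", "Places to meet", "Social infrastructure",
    "Accessibility", "Traffic", "Security", "Senses and experience", "Development",
]
reference = [2.6, 3.6, 3.6, 4.2, 4.0, 2.0, 2.4, 3.4]
method_1 = [2.74, 3.97, 3.71, 3.43, 3.74, 2.29, 3.77, 2.80]


def differences(method: list[float], ref: list[float]) -> list[float]:
    """Signed difference per category: method mean minus reference score."""
    if len(method) != len(ref):
        raise ValueError("both lists must cover the same categories")
    return [round(m - r, 2) for m, r in zip(method, ref)]


def average_difference(diffs: list[float]) -> float:
    """Assumed definition: sum of signed differences divided by the category count."""
    return sum(diffs) / len(diffs)


diffs = differences(method_1, reference)
for name, d in zip(CATEGORIES, diffs):
    print(f"{name}: {d:+.2f}")
print(f"{sum(diffs):.2f}/{len(diffs)} = {average_difference(diffs):.2f}")  # 0.65/8 = 0.08
```

Because signed differences can cancel out, a method whose positive and negative deviations balance will show a small average difference even if individual categories deviate noticeably.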

4. Results

4.1. Assessment Method Created by Woxnerud [24] (Assessment Method 1)

4.2. Jan Gehl’s Twelve Quality Criteria (Assessment Method 2)

4.3. Structured Interviews (Assessment Method 3)

5. Discussion

6. Conclusions

7. Recommendations for Further Research

- The utility of the matrix can be reinforced and the assessments made more robust by testing for temporal and seasonal sensitivities of the results.

- Interviewing those who frequent the place most often could provide a more holistic and comprehensive perspective on the place.

- To improve clarity and measurability, the questions in the categories of Accessibility, Senses and Experience, and Development could be further developed.

- The number of participants in the study (i.e., the student groups) may influence the results to some extent. More accurate results might be achieved by recruiting additional students and increasing the number of assessment sets.

- Consideration could be given to the equal-weighting approach adopted in this study. If different weightings need to be assigned (both within categories, to the individual questions, and between categories), an expert panel comprising a wide range of stakeholders could be established and consulted to enhance the robustness of the matrix and methodology.
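The difference between the equal weighting used in this study and a weighted alternative can be illustrated with a short sketch. The category scores are the Assessment Method 1 means from the student-group table; the weights in the second call are hypothetical placeholders (Security counted twice) and do not come from the study or from any expert panel.

```python
from typing import Optional

# Assessment Method 1 category means (seven student groups).
category_means = {
    "Architecture & esthetics": 2.74,
    "Places to meet": 3.97,
    "Social infrastructure": 3.71,
    "Accessibility": 3.43,
    "Traffic": 3.74,
    "Security": 2.29,
    "Senses and experience": 3.77,
    "Development": 2.80,
}


def weighted_score(scores: dict[str, float],
                   weights: Optional[dict[str, float]] = None) -> float:
    """Weighted mean of category scores; categories without a weight get 1 (equal weighting)."""
    weights = weights or {}
    total_weight = sum(weights.get(c, 1.0) for c in scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(s * weights.get(c, 1.0) for c, s in scores.items()) / total_weight


equal = weighted_score(category_means)
# HYPOTHETICAL weights, for illustration only: Security counts twice.
weighted = weighted_score(category_means, {"Security": 2.0})
print(round(equal, 2), round(weighted, 2))  # 3.31 3.19
```

The same function applies within a category if the question grades are passed in place of the category means.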

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

| 1. ARCHITECTURE AND ESTHETICS | |
|---|---|
| 1a | Is the public space beautiful? Is it evident that there is good design both in terms of how things are shaped, as well as their durability? |
| 1b | Are the site and its buildings adapted to the human scale? Can people on the outer edges of the place be distinguished as people? |
| 1c | Are seating options placed in such a way that there are interesting things to look at? |
| 1d | Does the location’s area have a distinctive profile compared to nearby areas (for example, by virtue of having a different style of architecture)? |
| 1e | Is there access to any form of public art at the place? |
| 2. PLACES TO MEET | |
| 2a | How are the acoustics at the place? For example, is it possible to have a conversation, or is the ambient noise too loud? |
| 2b | Are there good “primary seats” such as benches, or are there only “secondary seats” such as stairs, facades, or fountain edges? |
| 2c | Does the place have details that make it possible to stop and lean against something (for example, bus shelters, benches, facades, trees, niches, or ledges)? |
| 2d | Is there access to places in the vicinity of the site that enable larger events (for instance, squares, parks, or wide sidewalks)? |
| 2e | Is the site free from hostile architecture, such as benches that are difficult to sit on? |
| 3. SOCIAL INFRASTRUCTURE | |
| 3a | Can the whole place be used for activities and play? Are there major obstacles to this? |
| 3b | Is there access to basic social infrastructure in the vicinity of the location (e.g., shops, gyms, community facilities, and green spaces)? |
| 3c | Are there schools, leisure centers, and preschools in close proximity to the site? |
| 3d | Are there “third places” such as cafes adjacent to the site? |
| 3e | Are there dedicated playgrounds in the vicinity of the site? |
| 4. ACCESSIBILITY | |
| 4a | Is the location accessible? Are there physical elements that limit the site’s accessibility? |
| 4b | Is the fastest route through the area clear, or is there a risk of detours? Is there a risk of disorientation? |
| 4c | Is the walking surface relatively flat? Are there ramps alongside the stairs for wheelchair users? |
| 4d | Is the location’s lighting sufficient for sign language to be read when it is dark outside? |
| 4e | Is there guidance for the visually impaired through, for example, tactile paving or handrails? |
| 5. TRAFFIC | |
| 5a | Is it possible to cycle and walk safely without the risk of accidents? |
| 5b | Does the area offer good connections through regular public transport? |
| 5c | Is the area well connected to nearby areas by roads and shared surfaces? |
| 5d | Is environmentally friendly transport made possible for both pedestrians and cyclists? Are these networks well developed? |
| 5e | How does the streetscape change in winter? Do the roof shapes look likely to shed snow onto the street? |
| 6. SECURITY | |
| 6a | Is the location safe both day and night? |
| 6b | Is there movement on the site at all hours of the day? |
| 6c | Does lighting create security and a good atmosphere? |
| 6d | Is there natural surveillance of the site, with many windows overlooking it? |
| 6e | Does the design of the place give an appearance of security, or are there, for example, iron bars and steel shutters on windows? |
| 7. SENSES AND EXPERIENCE | |
| 7a | Are there noises, dust, smells, or other pollution? |
| 7b | Does the character of the place change when it is windy or stormy? |
| 7c | Does the location offer a good microclimate all year round? |
| 7d | Where are the seats located? Full sun or shade? How are seats positioned in relation to the wind? Do they offer protection? |
| 7e | Are there many trees adjacent to the site? Are there big trees? |
| 8. DEVELOPMENT | |
| 8a | Do the location and the area offer flexible workplaces, such as cafes and libraries? |
| 8b | Does the site area have housing of different sizes, or is the variation minimal? |
| 8c | Does the site area have premises for varying needs (for instance, shop premises of different sizes)? |
| 8d | Does the area seem to be developing? |
| 8e | Is it clear that the area’s residents have had influence over the area’s design? |

Appendix C

| Categories | Corresponding Quality Criteria | Definition of Each Quality Criterion | Categories of the Matrix |
|---|---|---|---|
| Protection | Protection against traffic and accidents | Do groups across age and ability experience traffic safety in the public space? Can one safely bike and walk without fear of being hit by a driver? | Security |
| | Protection against harm by others | Is the public space perceived to be safe both day and night? Are there people and activities at all hours of the day because the area has, for example, both residents and offices? Does the lighting provide safety at night as well as a good atmosphere? | Security |
| | Protection against unpleasant sensory experience | Are there noises, dust, smells, or other pollution? Does the public space function well when it is windy? Is there shelter from strong sun, rain, or minor flooding? | Security |
| Comfort | Options for mobility | Is this space accessible? Are there physical elements that might limit or enhance personal mobility in the forms of walking, using a wheelchair, or pushing a stroller? Is it evident how to move through the space without having to take an illogical detour? | Accessibility |
| | Options to stand and linger | Does the place have features you can stay and lean on, like a façade that invites one to spend time next to it, a bus stop, a bench, a tree, or a small ledge or niche? | Places to meet |
| | Options for sitting | Are there good primary seating options such as benches or chairs? Or is there only secondary seating such as a stair, seat wall, or the edge of a fountain? Are there adequate non-commercial seating options so that sitting does not require spending money? | Places to meet |
| | Options for seeing | Are seating options placed so there are interesting things to look at? | Senses and experience |
| | Options for talking and listening/hearing | Is it possible to have a conversation here? Is it evident that you have the option to sit together and have a conversation? | Places to meet |
| Enjoyment | Options for play, exercise, and activities | Are there options to be active at multiple times of the day and year? | Social infrastructure |
| | Scale | Are the public space and the buildings that surround it at a human scale? If people are at the edges of the space, can we still relate to them as people, or are they lost in their surroundings? | Architecture and esthetics |
| | Opportunities to enjoy the positive aspects of climate | Are local climatic aspects such as wind and sun taken into account? Are there varied conditions for spending time in public spaces at different times of year? With this in mind, where are the seating options placed? Are they located entirely in the shadows or the sun? And how are they oriented/placed in relation to wind? Are they protected? | Senses and experience |
| | Experience of esthetic qualities and positive sensory experiences | Is the public space beautiful? Is it evident that there is good design both in terms of how things are shaped, as well as their durability? | Senses and experience |
| | No corresponding criteria | | Traffic |
| | No corresponding criteria | | Development |

References

- Garau, C.; Pavan, V.M. Evaluating urban quality: Indicators and assessment tools for smart sustainable cities. Sustainability 2018, 10, 575. [Google Scholar] [CrossRef]

- Drakakis-Smith, D. Third world cities: Sustainable urban development. Urban Stud. 1995, 32, 659–677. [Google Scholar] [CrossRef]

- Kunz, J. Social Sustainability and Community Involvement in Urban Planning: Lessons from the ECOCITY Project; University of Tampere, Department of Social Policy and Social Work, Unit for Research into Housing and the Environment: Tampere, Finland, 2006. [Google Scholar]

- Littig, B.; Griessler, E. Social sustainability: A catchword between political pragmatism and social theory. Int. J. Sustain. Dev. 2005, 8, 65–79. [Google Scholar] [CrossRef]

- Partridge, E. ‘Social sustainability’: A useful theoretical framework. In Australasian Political Science Association Annual Conference; University of Technology: Delft, The Netherlands, 2005. [Google Scholar]

- Cuthill, M. Strengthening the ‘social’ in sustainable development: Developing a conceptual framework for social sustainability in a rapid urban growth region in Australia. Sustain. Dev. 2010, 18, 362–373. [Google Scholar] [CrossRef]

- Colantonio, A. Social Sustainability: A Review and Critique of Traditional Versus Emerging Themes and Assessment Methods; Loughborough University: Loughborough, UK, 2009. [Google Scholar]

- Kordi, N.E.; Belayutham, S.; Ibrahim, C.K.I.C. Mapping of social sustainability attributes to stakeholders’ involvement in construction project life cycle. Constr. Manag. Econ. 2021, 39, 513–532. [Google Scholar] [CrossRef]

- Eizenberg, E.; Jabareen, Y. Social sustainability: A new conceptual framework. Sustainability 2017, 9, 68. [Google Scholar] [CrossRef]

- Nakamba, C.C.; Chan, P.W.; Sharmina, M. How does social sustainability feature in studies of supply chain management? A review and research agenda. Supply Chain. Manag. Int. J. 2017, 22, 522–541. [Google Scholar] [CrossRef]

- Valdes-Vasquez, R.; Klotz, L.E. Social sustainability considerations during planning and design: Framework of processes for construction projects. J. Constr. Eng. Manag. 2013, 139, 80–89. [Google Scholar] [CrossRef]

- Bebbington, J.; Dillard, J. Social sustainability: An organizational-level analysis. In Understanding the Social Dimension of Sustainability; Routledge: London, UK, 2008; pp. 173–189. [Google Scholar]

- Opp, S.M. The forgotten pillar: A definition for the measurement of social sustainability in American cities. Local Environ. 2017, 22, 286–305. [Google Scholar] [CrossRef]

- Woodcraft, S.; Hackett, T.; Caistor-Arendar, L. Design for Social Sustainability: A Framework for Creating Thriving New Communities; The Young Foundation: London, UK, 2011. [Google Scholar]

- Kelly, M. Inequality and crime. Rev. Econ. Stat. 2000, 82, 530–539. [Google Scholar] [CrossRef]

- Larsson, D. Exposure to Crime as a Consequence of Poverty: Five Investigations About Relative Deprivation, Poverty and Exposure to Crime; Sociologi: Copenhagen, Denmark, 2006. [Google Scholar]

- Birmingham, E. Reframing the ruins: Pruitt-Igoe, structural racism and African American rhetoric as a space for cultural critique. West. J. Commun. 1999, 63, 291–309. [Google Scholar] [CrossRef]

- Jobard, F. Policing the banlieues. In Policing in France; Routledge: London, UK, 2020; pp. 187–201. [Google Scholar]

- Miller, B.J. The struggle over redevelopment at Cabrini-Green 1989–2004. J. Urban Hist. 2008, 34, 944–960. [Google Scholar] [CrossRef]

- Swedish Police Utsatta Områden—Polisens Arbete (Swedish) [Marked/Targeted Areas—The Work of the Police]. 2015. Available online: https://polisen.se/om-polisen/polisens-arbete/utsatta-omraden/ (accessed on 4 September 2022).

- Delegationen Mot Segregation [The Delegation Opposing Segregation]. Segregation i Sverige: Årsrapport Om Den Socioekonomiska Boendesegregationens Utveckling. [Annual Report of the Status of the Socio-Economic Segregation of Inhabitants]. 2021. Available online: https://delmos.se/wp-content/uploads/2021/07/Segregation-i-Sverige.pdf (accessed on 4 September 2022).

- Marans, R.W.; Stimson, R.J. Investigating Quality of Urban Life: Theory, Methods, and Empirical Research; Springer Science & Business Media: New York, NY, USA, 2011; Volume 45. [Google Scholar]

- Stagl, S. Theoretical foundations of learning processes for sustainable development. Int. J. Sustain. Dev. World Ecol. 2007, 14, 52–62. [Google Scholar] [CrossRef]

- Woxnerud, W. Mänskligare städer: Framtagande av en ny bedömningsmetod för social hållbarhet och bedömning av Kronoparkens centrum i Karlstad. [More Humane Cities: Developing a New Assessment Method for Social Sustainability and Evaluating Central Kronoparken in Karlstad]. 2022. Available online: https://www.diva-portal.org/smash/record.jsf?pid=diva2%3A1675407&dswid=-5592 (accessed on 4 September 2022).

- Woxnerud, W.; Najar, K.; Nylander, O. The Human City: The Development of an Easy-to-Use Assessment Method Calibrated to Swedish Conditions. Sustainability 2024, 16, 5702. [Google Scholar] [CrossRef]

- Batchelder, W.H.; Anders, R. Cultural consensus theory: Comparing different concepts of cultural truth. J. Math. Psychol. 2012, 56, 316–332. [Google Scholar] [CrossRef]

- Ten Cate, O.; Regehr, G. The power of subjectivity in the assessment of medical trainees. Acad. Med. 2019, 94, 333–337. [Google Scholar] [CrossRef] [PubMed]

- Weller, S.C.; Romney, A.K. Systematic Data Collection; Sage Publications: Thousand Oaks, CA, USA, 1988; Volume 10. [Google Scholar]

- Romney, A.K.; Moore, C.C. Toward a theory of culture as shared cognitive structures. Ethos 1998, 26, 314–337. [Google Scholar] [CrossRef]

- Romney, A.K.; Weller, S.C.; Batchelder, W.H. Culture as consensus: A theory of culture and informant accuracy. Am. Anthropol. 1986, 88, 313–338. [Google Scholar] [CrossRef]

- Schnegg, M.; Lang, H. Die Analyse kultureller Domänen. Eine praxisorientierte Einführung. Methoden der Ethnographie [The analysis of cultural domains. A practice-oriented introduction. Methods of ethnography]. 2008. Available online: https://www.researchgate.net/publication/285282734_Die_Analyse_kultureller_Domanen_Eine_praxisorientierte_Einfuhrung (accessed on 4 September 2022).

- Rashidi, M.N.; Begum, R.A.; Mokhtar; Pereira, J.J. The conduct of structured interviews as research implementation method. J. Adv. Res. Des. 2014, 1, 28–34. [Google Scholar]

- Queirós, A.; Faria, D.; Almeida, F. Strengths and limitations of qualitative and quantitative research methods. Eur. J. Educ. Stud. 2017, 3, 369–386. [Google Scholar]

- Bernardi, S.; Merseguer, J.; Petriu, D.C. A dependability profile within MARTE. Softw. Syst. Model. 2011, 10, 313–336. [Google Scholar] [CrossRef]

- Weller, S.C. Structured interviewing and questionnaire construction. In Handbook of Methods in Cultural Anthropology; Rowman & Littlefield Publishers: Lanham, MD, USA, 1998; pp. 365–409. [Google Scholar]

- Fowler, F.J., Jr.; Cosenza, C. Design and evaluation of survey questions. In The SAGE Handbook of Applied Social Research Methods; SAGE Publications, Inc.: Newbury Park, CA, USA, 2009; Volume 2, pp. 375–412. [Google Scholar]

- Bryman, A. Social Research Methods; Oxford University Press: New York, NY, USA, 2016. [Google Scholar]

- Abdul-Rahman, H.; Wang, C.; Wood, L.C.; Low, S.F. Negative impact induced by foreign workers: Evidence in Malaysian construction sector. Habitat Int. 2012, 36, 433–443. [Google Scholar] [CrossRef]

- Gill, P.; Stewart, K.; Treasure, E.; Chadwick, B. Methods of data collection in qualitative research: Interviews and focus groups. Br. Dent. J. 2008, 204, 291–295. [Google Scholar] [CrossRef] [PubMed]

- Kumar, R. Research Methodology: A Step-by-Step Guide for Beginners; Sage Publications Ltd.: Thousand Oaks, CA, USA, 2018. [Google Scholar]

- Trull, T.J.; Widiger, T.A.; Useda, J.D.; Holcomb, J. A structured interview for the assessment of the Five-Factor Model of Personality. Psychol. Assess. 1998, 10, 229. [Google Scholar] [CrossRef]

- Mack, N. Qualitative Research Methods: A Data Collector’s Field Guide; Family Health International: Durham, NC, USA, 2005. [Google Scholar]

- Oppong, S.H. The problem of sampling in qualitative research. Asian J. Manag. Sci. Educ. 2013, 2, 202–210. [Google Scholar]

- DePaulo, P. How Large Should the Sample Size Be in a Qualitative Study? DePaulo Research Consulting: Montgomeryville, PA, USA, 2000. [Google Scholar]

- Devers, K.J.; Frankel, R.M. Study design in qualitative research—2: Sampling and data collection strategies. Educ. Health 2000, 13, 263–271. [Google Scholar]

- Marshall, M.N. Sampling for qualitative research. Fam. Pract. 1996, 13, 522–526. [Google Scholar] [CrossRef]

- Hanly, P. The sampling problem. Am. Cathol. Sociol. Rev. 1947, 8, 258–265. [Google Scholar]

- Hennink, M.; Kaiser, B.N. Sample sizes for saturation in qualitative research: A systematic review of empirical tests. Soc. Sci. Med. 2022, 292, 114523. [Google Scholar] [CrossRef]

- Tuckett, A.G. Qualitative research sampling: The very real complexities. Nurse Res. 2004, 12, 47–61. [Google Scholar] [CrossRef]

- Greene, J.; McClintock, C. Triangulation in evaluation: Design and analysis issues. Eval. Rev. 1985, 9, 523–545. [Google Scholar] [CrossRef]

- Fern, E.F. The use of focus groups for idea generation: The effects of group size, acquaintanceship, and moderator on response quantity and quality. J. Mark. Res. 1982, 19, 1–13. [Google Scholar] [CrossRef]

- Morgan, D.L. Focus groups. Annu. Rev. Sociol. 1996, 22, 129–152. [Google Scholar] [CrossRef]

- Murphy, E.; Dingwall, R. Informed consent, anticipatory regulation and ethnographic practice. Soc. Sci. Med. 2007, 65, 2223–2234. [Google Scholar] [CrossRef] [PubMed]

- Karlstads Kommun [Karlstad Municipality]. Kronoparken. 2020. Available online: https://karlstad.se/Bygga-och-bo/Bygglov-tillstand-och-fastighetsfragor/Kulturhistoriska-byggnader/Omradesbeskrivningar/kronoparken/ (accessed on 4 November 2022).

- Wiik, C. 50 år sedan de första flyttade in på Kronoparken [Fifty years since the first residents moved into Kronoparken]. Nya Wermlands-Tidningen, 23 September 2020. [Google Scholar]

- Dahlgren, B.; Gustafsson, G. Stadsdelen Kronoparken i Karlstad: Stadsplaneringens Vedermödor. [The Suburb of Kronoparken in Karlstad: Tribulations of Urban Planning]; Universitetstryckeriet: Karlstad, Sweden, 2016; Available online: https://karlstad.se/Uppleva-och-gora/Kultur/kommunarkiv/Kulturhistoria/Karlstads-lokalhistoria/samhallsbyggande/stadsdelen-kronoparken/ (accessed on 4 November 2022).

- Törnberg, M. Kronoparken skapades efter tidens ideal. [Kronoparken was created according to the ideals of the time]. Nya Wermlands-Tidningen, 1 October 2020. [Google Scholar]

- Karlstads Kommun [Karlstad Municipality]. Socioekonomiska Förutsättningar i Karlstad [Socio-Economic Conditions in Karlstad]. 2018. Available online: https://segregationsbarometern.boverket.se/kommun/karlstad/ (accessed on 2 May 2022).

- Karlstads Kommun [Karlstad Municipality]. Medborgardialog om Brottsbekämpning och Trygghetsarbete [Citizen Dialogue on Crime Prevention and Safety Work]. 2016. Available online: https://www.svt.se/nyheter/lokalt/varmland/vill-ha-fler-poliser-pa-stan (accessed on 4 November 2022).

- Krums-Vabins, M. Rösterna Från Kronoparken i Karlstad—Efter Larm om Droghandel Och Otrygghet. [Voices from Kronoparken in Karlstad After Reports of Drug Misuse and Safety Concerns]. Sveriges Television. 10 September 2021. Available online: https://www.svt.se/nyheter/lokalt/varmland/fyra-roster-om-kronoparken-i-karlstad (accessed on 4 November 2022).

- Asplid, Å. ”Det har blivit vanligare med skyddsväst”. [Protective vests have become more common]. Nya Wermlands-Tidningen, 25 September 2020. [Google Scholar]

- Spradley, J.P. The Ethnographic Interview; Waveland Press: Long Grove, IL, USA, 2016. [Google Scholar]

- Gehl Institute. Twelve Quality Criteria. 2022. Available online: https://issuu.com/gehlarchitects/docs/twelve-quality-criteria_gehl (accessed on 19 June 2024).

- Eken, A.; Magnusson, J.; Hildesson, A.; Molnar, S.; De Fine Licht, K. Rätt verktyg för jobbet? En översikt över verktyg för social hållbarhetsanalys i städer. [The right tool for the job?: An overview of tools for social sustainability analysis in cities]. Mistra Urban Futures Rep. 2017, 1, 17–36. [Google Scholar]

- Von Glasersfeld, E. Radical Constructivism: A Way of Knowing and Learning; The Falmer Press: London, UK, 1995. [Google Scholar]

- Lantmäteriet Karlstad [Land Survey Karlstad]. SWEREF 99 TM, RH 2000. [Kartografiskt Material] [Cartographic Material]. 2023. Available online: https://minkarta.lantmateriet.se/plats/3006/v1.0/?e=419232&n=6585933&z=9&mapprofile=karta&background=1&boundaries=false (accessed on 9 May 2023).

- Lantmäteriet (2022) Kronoparken. SWEREF 99 TM, RH 2000. [Kartografiskt Material]. Available online: https://minkarta.lantmateriet.se/plats/3006/v1.0/?e=418809&n=6585774&z=11&mapprofile=karta&background=1&boundaries=false (accessed on 29 May 2022).

| No | Question | Purpose |
|---|---|---|
| 1 | Do you live in Kronoparken? If not, have you ever visited the site? | Location familiarity |
| 2 | Describe the location with three adjectives. | First impressions |
| 3 | How do you experience the site’s architecture and esthetic appearance? What is good and what is bad? | Easing comparison |
| 4 | Do you think the venue works as a meeting place? For example, is it possible to have good conversations on site, and is it a tempting place to meet friends? What is good and what is bad? | |
| 5 | How do you experience the social infrastructure on site and in the area? (Social infrastructure can be described as areas and places that enable social interaction, for example, schools, cafes, and play and sports facilities.) What is good and what is bad? | |
| 6 | Does the location look accessible? Can people with reduced mobility use the site? What is good and what is bad? | |
| 7 | Is it easy to cycle and walk in the area? Is public transport well developed? What is good and what is bad? | |
| 8 | Do the location and the area feel safe? Does it feel like the place is alive, or is it desolate? What’s good/bad? | |
| 9 | How are the sensory impressions and the experience on site? Smell, sensation, hearing… How does the weather affect the experience of the location? What’s good/bad? | |
| 10 | Does it feel like the area is developing? Can the inhabitants be involved in directing the development? What’s good/bad? | |

| Categories | Group A | Group B | Group C | Group D | Group E | Group F | Group G | Mean | Spread |
|---|---|---|---|---|---|---|---|---|---|
| Architecture & esthetics | 3.4 | 1.8 | 2.6 | 3.2 | 2.8 | 2 | 3.4 | 2.74 | 1.6 |
| Places to meet | 4.2 | 3.2 | 4 | 3.6 | 4 | 4 | 4.8 | 3.97 | 1.6 |
| Social infrastructure | 4.8 | 3 | 4 | 4 | 3.6 | 3 | 3.6 | 3.71 | 1.8 |
| Accessibility | 3.6 | 2.2 | 3.4 | 4.2 | 3.6 | 4 | 3 | 3.43 | 2.0 |
| Traffic | 4.2 | 3.8 | 4.4 | 3.4 | 3.4 | 2.8 | 4.2 | 3.74 | 1.6 |
| Security | 3.8 | 1.4 | 2 | 2.4 | 1.8 | 2 | 2.6 | 2.29 | 2.4 |
| Senses and experience | 4 | 3.4 | 3.4 | 4.2 | 4.4 | 3.6 | 3.4 | 3.77 | 1.0 |
| Development | 4 | 2.4 | 3.8 | 2 | 3.8 | 1.8 | 1.8 | 2.80 | 2.2 |

| Categories of the Matrix | Corresponding Quality Criteria | A | B | C | D | E | F | G | H | I | J | K | L | Mean (Criterion) | Mean (Category) | Spread (Criterion) | Spread (Category) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Architecture & esthetics | Scale | 3 | 1 | 3 | 3 | 3 | 2 | 2 | 2.5 | 3 | 2 | 2 | 5 | 2.62 | | 4 | |
| Places to meet | Options to stand and linger | 4 | 2 | 3 | 5 | 2 | 3 | 1 | 2 | 5 | 2 | 3 | 5 | 3 | 2.73 | 4 | 3.1 |
| | Options for sitting | 5 | 2 | 1 | 3 | 3 | 3 | 2 | 4 | 5 | 2 | 1 | 2 | 2.75 | | 4 | |
| | Options for talking and listening/hearing | 3 | 3 | 2 | 2 | 3 | 2 | 2 | 3.5 | 3 | 2 | 2 | 2 | 2.45 | | 1.5 | |
| Social infrastructure | Options for play, exercise, and activities | 1 | 1 | 2 | 4 | 2 | 3 | 3 | 2 | 5 | 3 | 2 | 4 | 2.66 | | 4 | |
| Accessibility | Options for mobility | 3 | 1 | 2 | 4 | 3 | 4 | 4 | 4 | 5 | 4 | 4 | 5 | 3.58 | | 3 | |
| Security | Protection against traffic and accidents | 5 | 2 | 5 | 5 | 5 | 5 | 4 | 4 | 2 | 4 | 5 | 3 | 4 | 3 | 3 | 2.66 |
| | Protection against harm by others | 4 | 1 | 1 | 2 | 1 | 1 | 2 | 3 | 3 | 2 | 2 | 2 | 2 | | 3 | |
| | Protection against unpleasant sensory experience | 4 | 2 | 2 | 2 | 2 | 2 | 3 | 2 | 3 | 2 | 2 | 3 | 3.25 | | 2 | |
| Senses and experience | Options for seeing | 4 | 1 | 1 | 1 | 1 | 2 | 3 | 3 | 2 | 1 | 1 | 1 | 1.75 | 1.88 | 3 | 2.66 |
| | Opportunities to enjoy the positive aspects of climate | 2 | 2 | 1 | 1 | 2 | 2 | 3 | 3 | 2 | 2 | 3 | 4 | 2.25 | | 3 | |
| | Experience of esthetic qualities and positive sensory experiences | 3 | 1 | 2 | 1 | 2 | 1 | 2 | 3 | 2 | 1 | 1 | 1 | 1.66 | | 2 | |
| Development | No corresponding criteria | | | | | | | | | | | | | | | | |
| Traffic | No corresponding criteria | | | | | | | | | | | | | | | | |
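The two-level aggregation used for categories that map to several of Gehl’s quality criteria can be sketched in Python. The sketch assumes that a criterion mean is the unweighted mean over students A to L and that a category mean is the unweighted mean of its criterion means; the function names are illustrative. Run on the individual Places to meet scores, it gives criterion means of 3.08, 2.75, and 2.46 and a category mean of 2.76. The tabulated 2.73 appears to have been computed from criterion means already shortened to two decimals (3, 2.75, and 2.45), so the two can differ in the second decimal.

```python
from statistics import fmean

# Scores of students A to L for the three Gehl criteria mapped to the
# matrix category "Places to meet" (Assessment Method 2).
places_to_meet = {
    "Options to stand and linger": [4, 2, 3, 5, 2, 3, 1, 2, 5, 2, 3, 5],
    "Options for sitting": [5, 2, 1, 3, 3, 3, 2, 4, 5, 2, 1, 2],
    "Options for talking and listening/hearing": [3, 3, 2, 2, 3, 2, 2, 3.5, 3, 2, 2, 2],
}


def criterion_means(criteria: dict[str, list[float]]) -> dict[str, float]:
    """Level 1: mean score of each quality criterion across all students."""
    return {name: fmean(scores) for name, scores in criteria.items()}


def category_mean(criteria: dict[str, list[float]]) -> float:
    """Level 2 (assumed equal weights): mean of the criterion means."""
    return fmean(criterion_means(criteria).values())


for name, m in criterion_means(places_to_meet).items():
    print(f"{name}: {m:.2f}")
print(f"Places to meet: {category_mean(places_to_meet):.2f}")
```

Averaging criterion means, rather than pooling all scores, gives each criterion the same influence on the category score regardless of how its scores are distributed.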

(a) The answers of 12 students (A to L) on the structured interview matrix

| The Questions | A | B | C | D | E | F | G | H | I | J | K | L | Rate |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Do you live in Kronoparken? If not, have you ever visited the site? | 22 years | Live | Live | Live | Live | Live | Live | Lived some years | Live | Don’t live but visit often | Visited few times | Visited two times | |
| 2. Describe the location with three adjectives. | Boring | Nice to walk in | Crime | Multicultural | Multicultural | Multicultural | Very empty | Open | Gray | Boring | Uniform | Open | 94% |

(b)

| Question Category | A | B | C | D | E | F | G | H | I | J | K | L | Mean | Spread |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Architecture and esthetics | 3 | 3 | 1 | 4 | 2 | 4 | 2 | 3 | 2 | 2 | 2 | 2 | 2.5 | 3 |
| Places to meet | 1 | 5 | 3 | 1 | 3 | 2 | 1 | 1 | 3 | 3 | 1 | 3 | 2 | 2.25 |
| Social infrastructure | 4 | 3 | 3 | 3 | 4 | 3 | 4 | 5 | 2 | 1 | 2 | 2 | 3 | 4 |
| Accessibility | 3 | 2 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 2 |
| Traffic | 2 | 4 | 4 | 2 | 4 | 4 | 3 | 3 | 4 | 4 | 4 | 3 | 3.41 | 2 |
| Security | 2 | 3 | 1 | 2 | 3 | 2 | 1 | 1 | 1 | 1 | 2 | 2 | 1.75 | 2 |
| Senses and experience | 2 | 4 | 4 | 4 | 2 | 4 | 1 | 1 | 2 | 4 | 3 | 3 | 2.83 | 3 |
| Development | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| The Eight Distinct Categories of the Assessment Matrix | Woxnerud [24] Conducted the Assessment Using a Matrix He Had Created | Seven Student Groups Used Woxnerud’s [24] Matrix to Conduct the Assessment (Mean Value) | Result Differences | Twelve Students Completed the Assessment Using a Structured Interview Survey (Mean Value) | Result Differences | Twelve Students Used Jan Gehl’s Twelve Quality Criteria to Complete the Assessment (Mean Value) | Result Differences | Spread |
|---|---|---|---|---|---|---|---|---|
| Architecture & esthetics | 2.6 | 2.74 | +0.14 | 2.5 | −0.1 | 2.62 | +0.02 | 0.12 |
| Places to meet | 3.6 | 3.97 | +0.37 | 2.25 | −1.35 | 2.73 | −0.87 | 1.72 |
| Social infrastructure | 3.6 | 3.71 | +0.11 | 3 | −0.6 | 2.66 | −0.94 | 1.05 |
| Accessibility | 4.2 | 3.43 | −0.77 | 4 | −0.2 | 3.58 | −0.62 | 0.77 |
| Traffic | 4 | 3.74 | −0.26 | 3.41 | −0.59 | 0 | −4 | 0.59 |
| Security | 2 | 2.29 | +0.29 | 1.75 | −0.25 | 3 | +1 | 1.25 |
| Senses and experience | 2.4 | 3.77 | +1.37 | 2.83 | +0.43 | 1.88 | −0.52 | 1.89 |
| Development | 3.4 | 2.8 | −0.6 | 0.91 | −2.49 | 0 | −3.4 | 2.49 |
| Average difference across all categories | | | 0.65/8 = 0.08 | | 3.6/8 = 0.45 | | 10.35/8 = 1.29 | |

| Question | A | B | C | D | E | F | G | Spread (Highest–Lowest) |
|---|---|---|---|---|---|---|---|---|
| 4a | 5 | 4 | 4 | 5 | 5 | 5 | 4 | 1 |
| 4b | 3 | 1 | 3 | 5 | 3 | 5 | 2 | 4 |
| 4c | 4 | 3 | 5 | 5 | 5 | 5 | 4 | 2 |
| 4d | 5 | 2 | 2 | 4 | 3 | 4 | 3 | 3 |
| 4e | 1 | 1 | 3 | 2 | 2 | 1 | 2 | 2 |
| Average | 3.6 | 2.2 | 3.4 | 4.2 | 3.6 | 4 | 3 | 2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Najar, K.; Nylander, O.; Woxnerud, W. Determining the Most Consensus-Based Assessment Method for Social Sustainability—Case Study of a Suburb of Karlstad, Sweden. Buildings 2024, 14, 3395. https://doi.org/10.3390/buildings14113395
