Research on Automated Defect Classification Based on Visual Sensing and Convolutional Neural Network-Support Vector Machine for GTA-Assisted Droplet Deposition Manufacturing Process

Abstract

1. Introduction

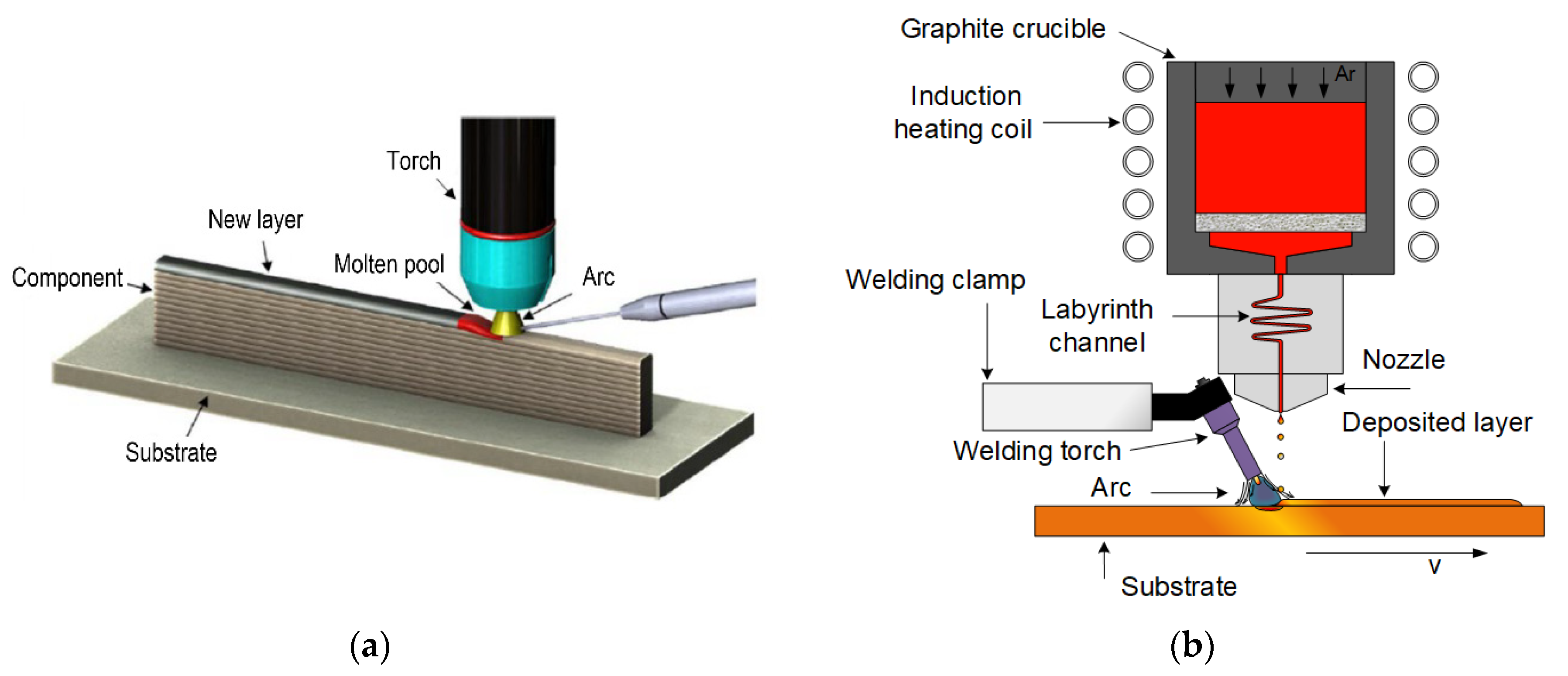

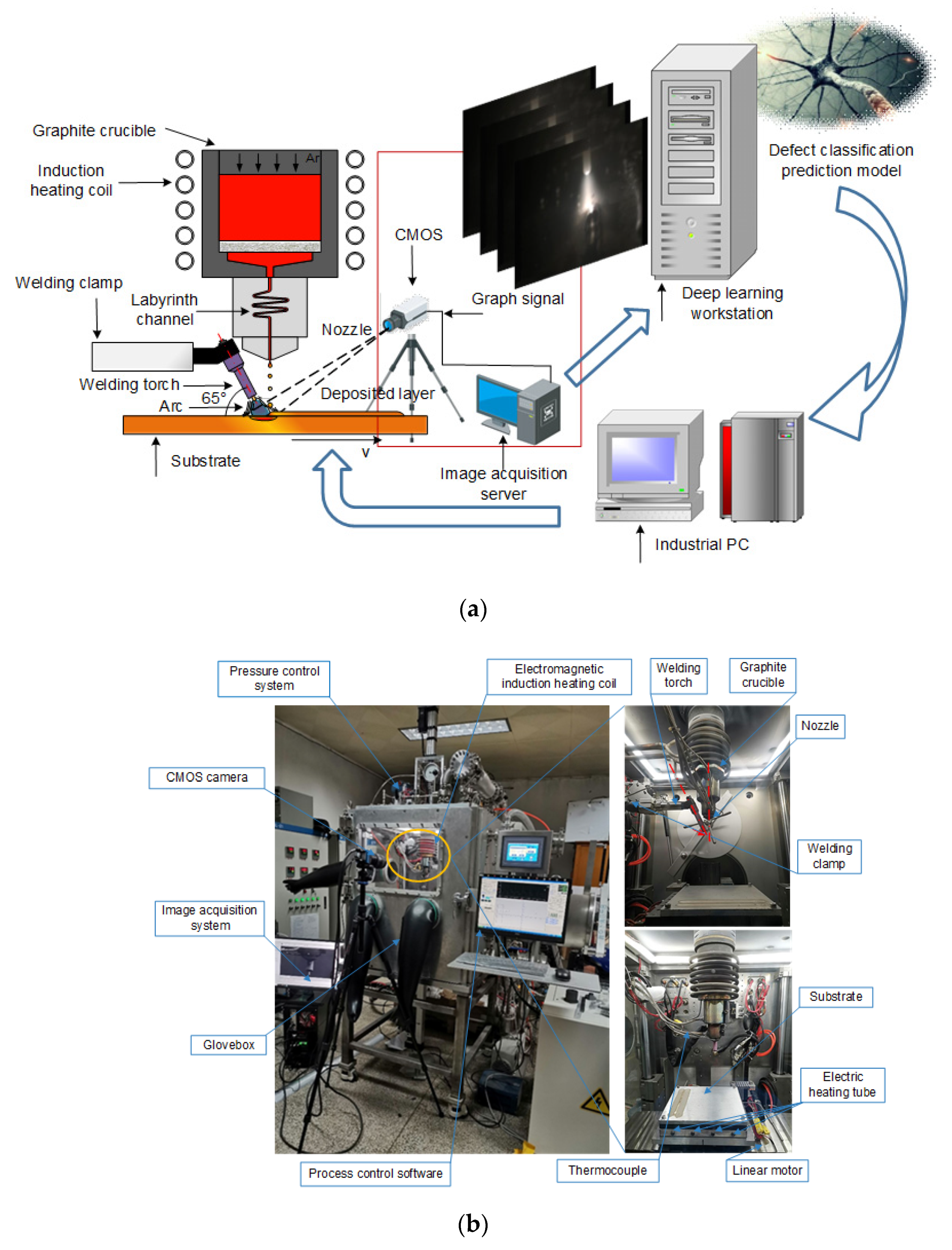

2. Experiment Platform and Methods

2.1. Experiment Platform

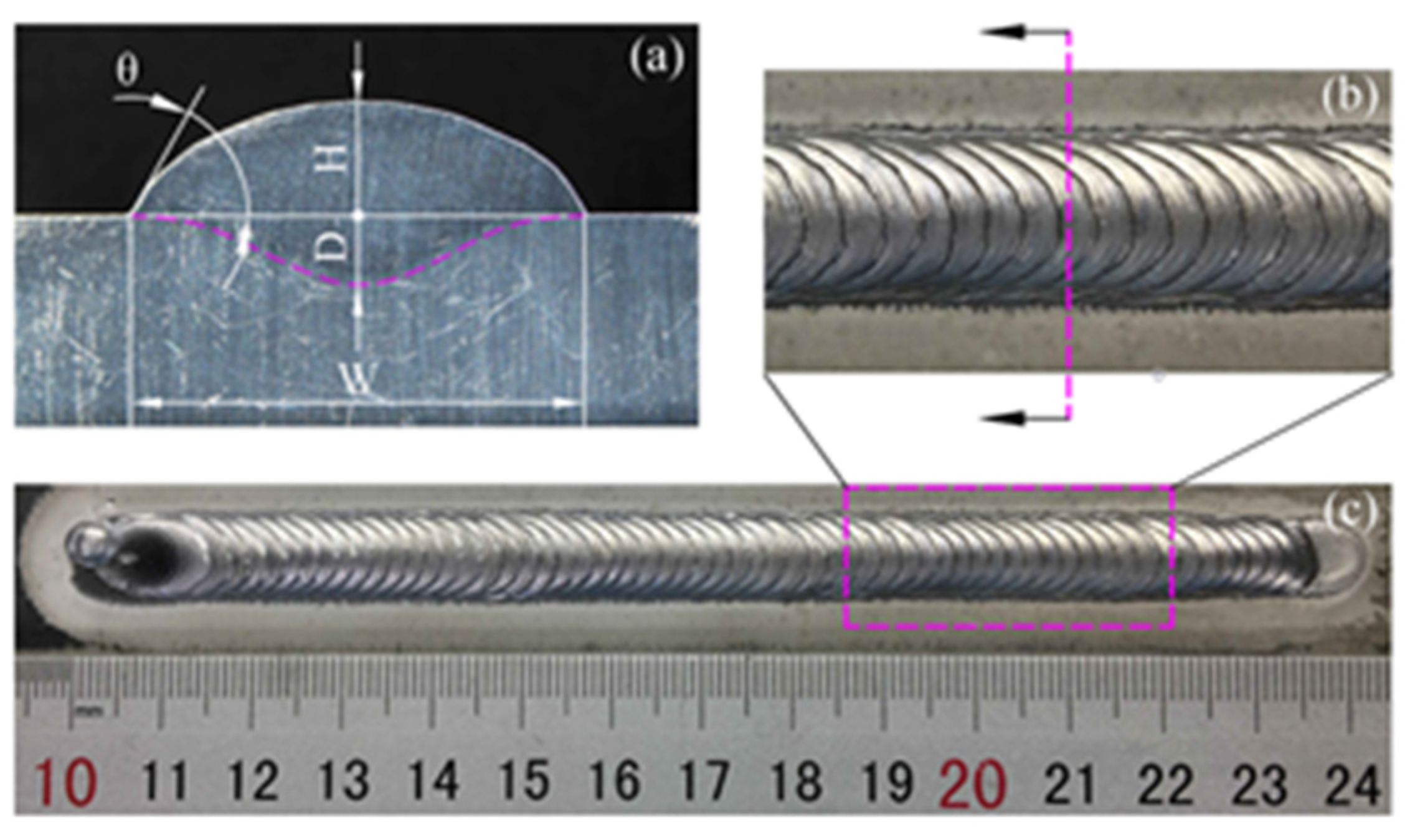

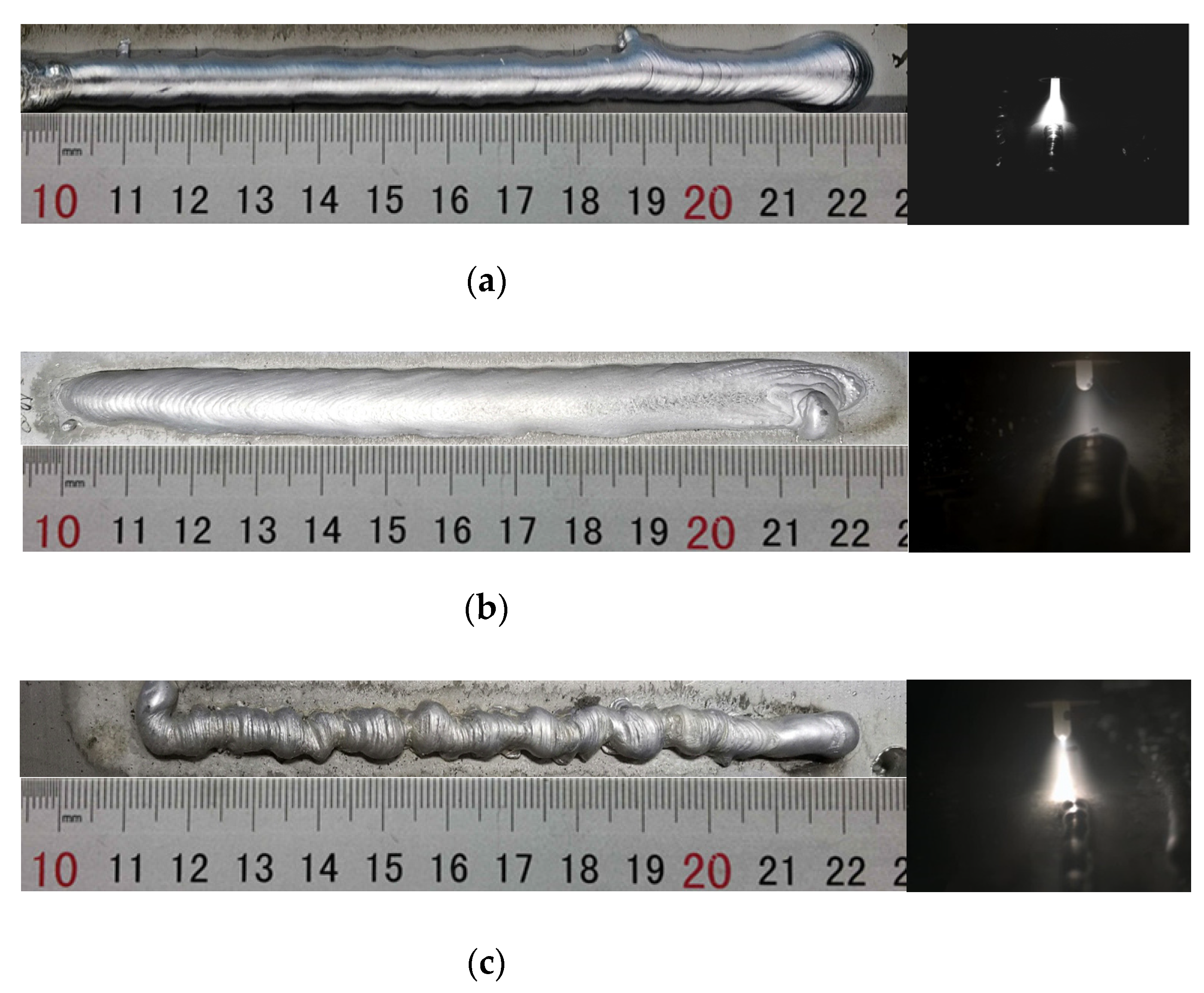

2.2. Experiment Methods

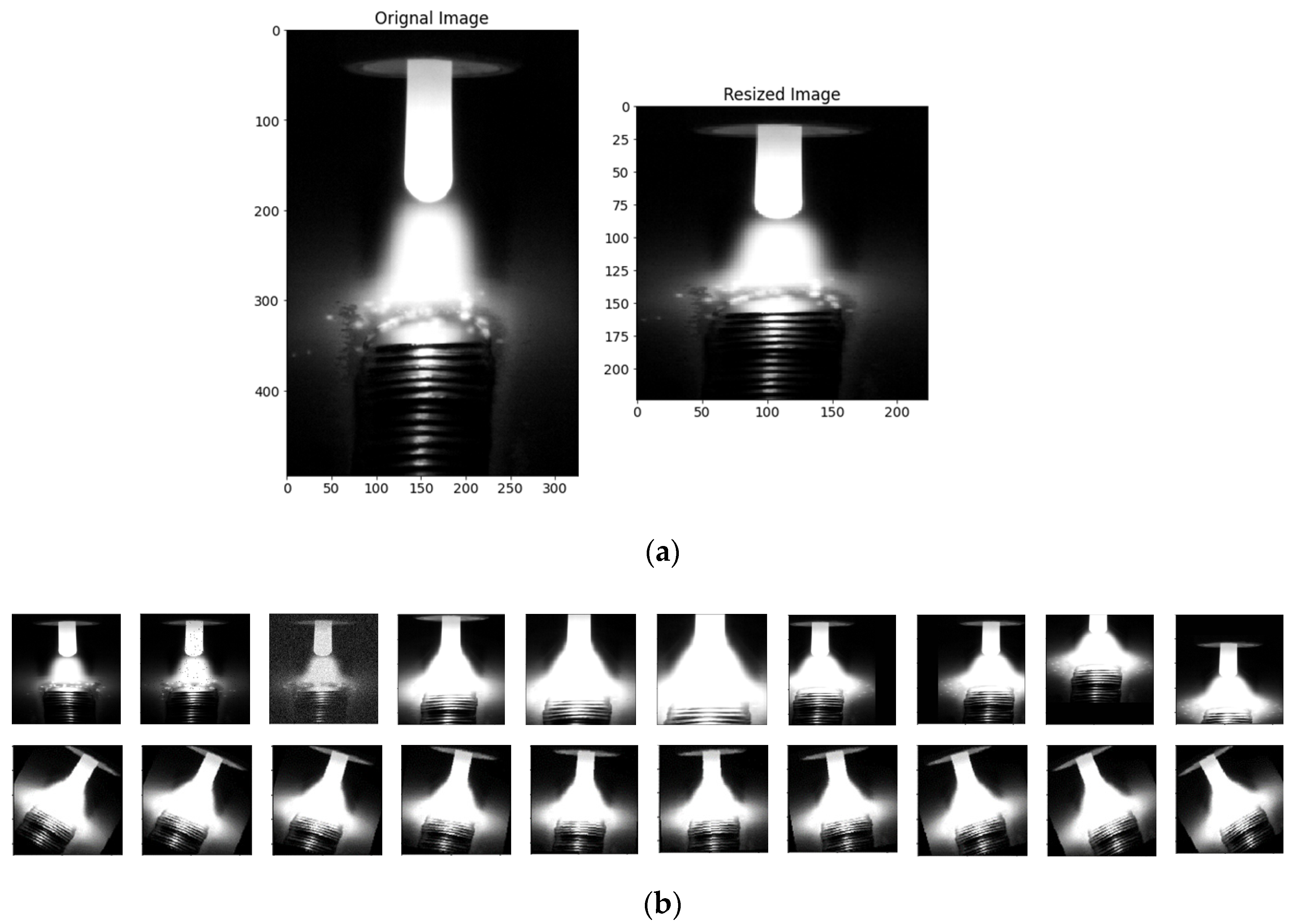

2.3. Preprocessing

2.4. Data Augmentation

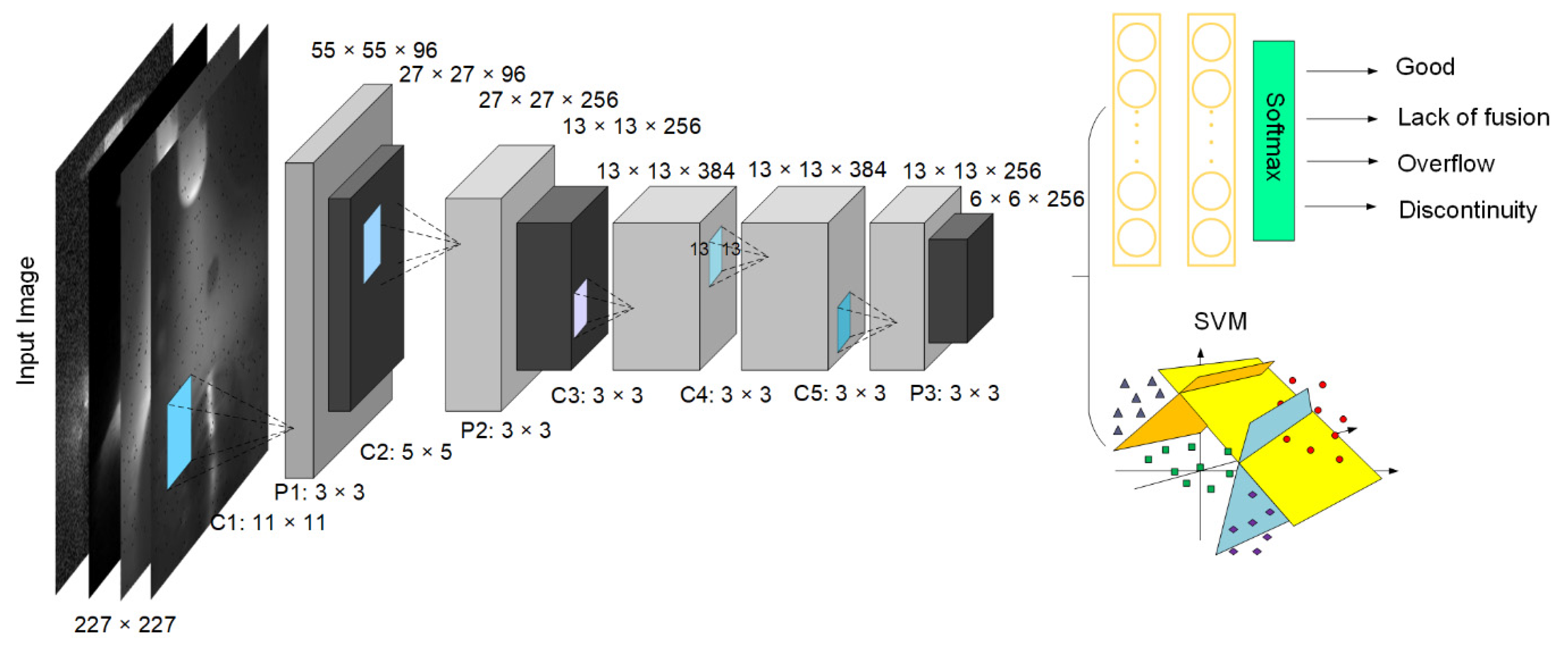

2.5. CNN+SVM Architecture

2.6. Evaluation Metrics

3. Results and Discussion

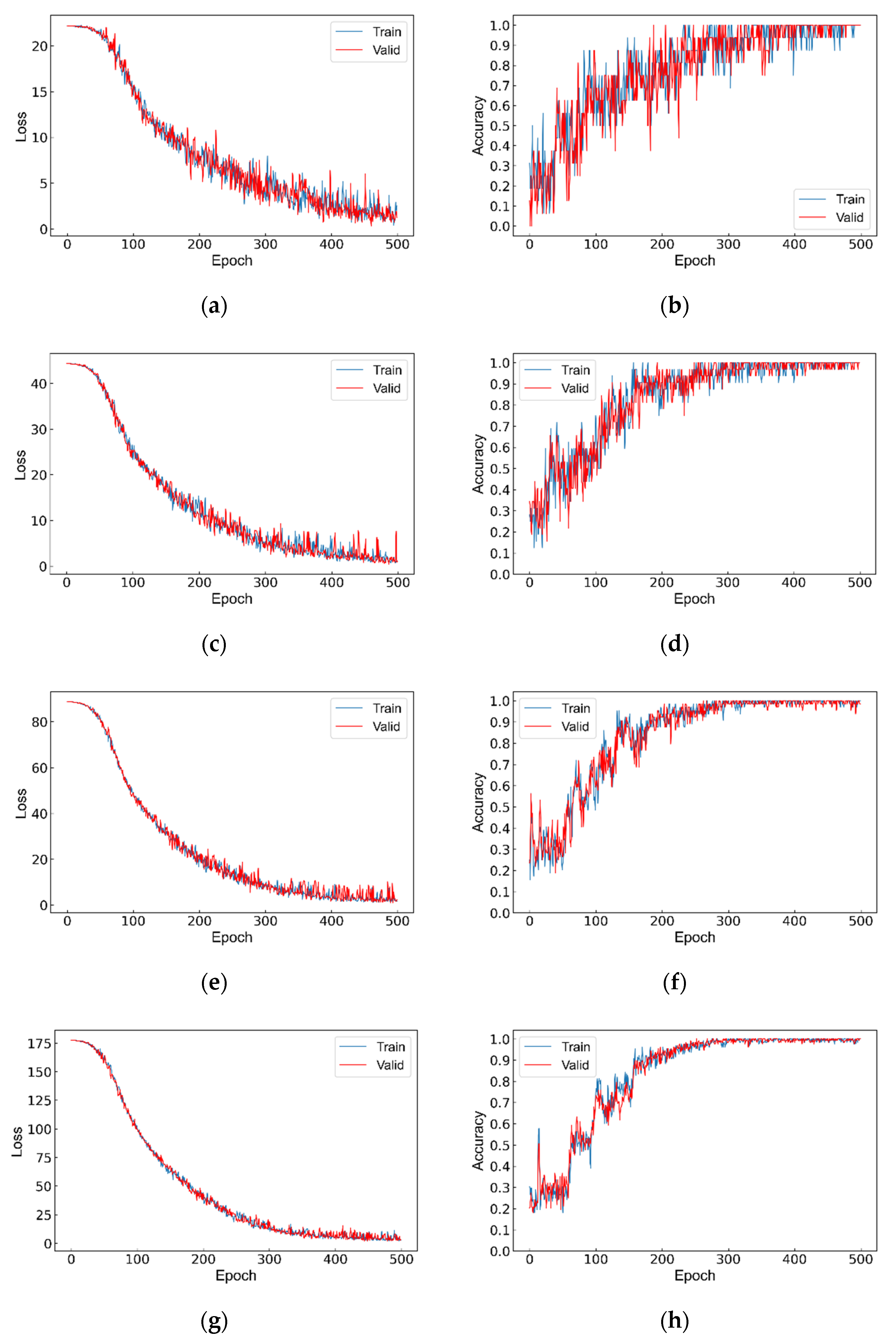

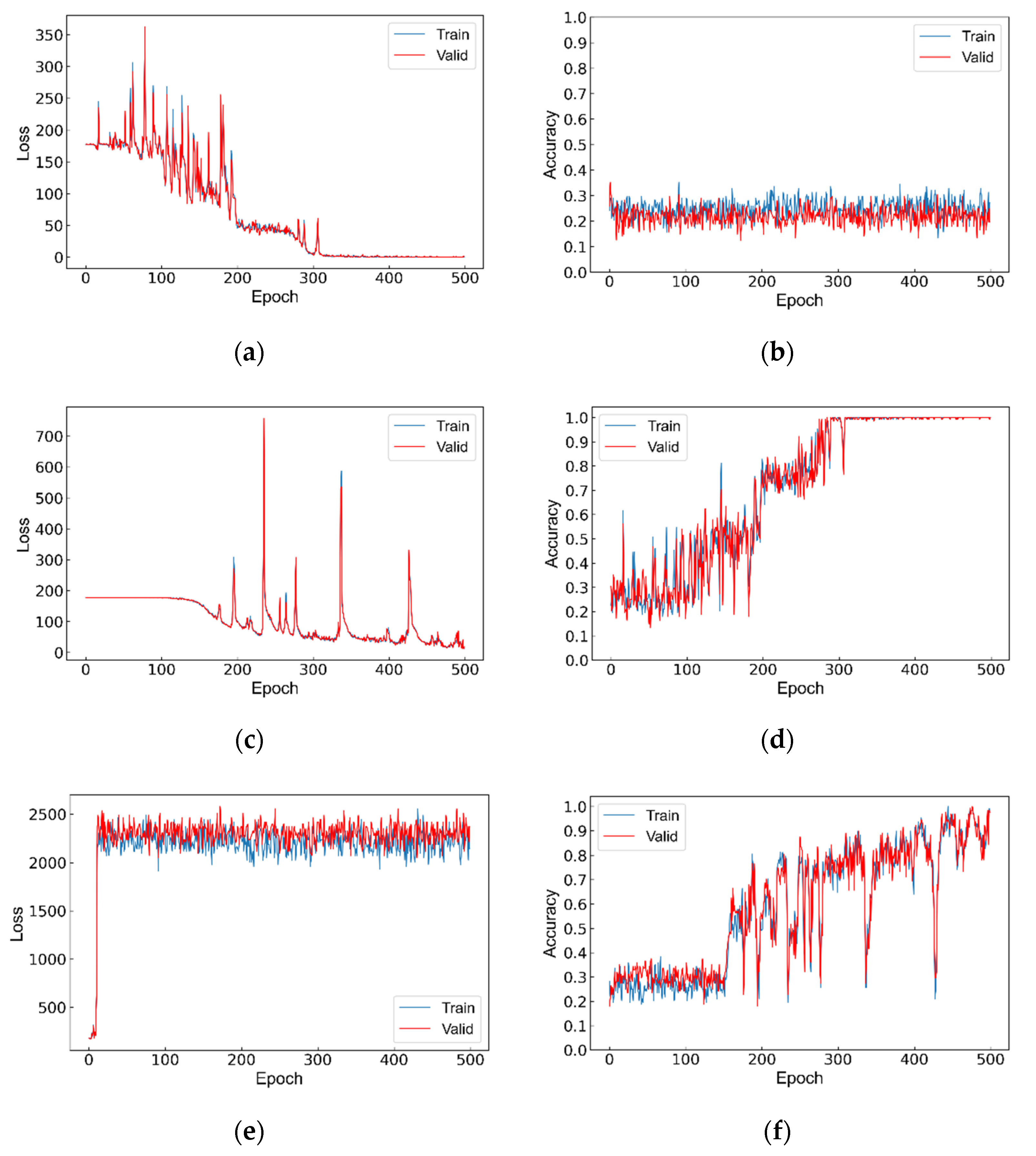

3.1. Tuning of CNN Architecture

3.2. Performance Evaluation

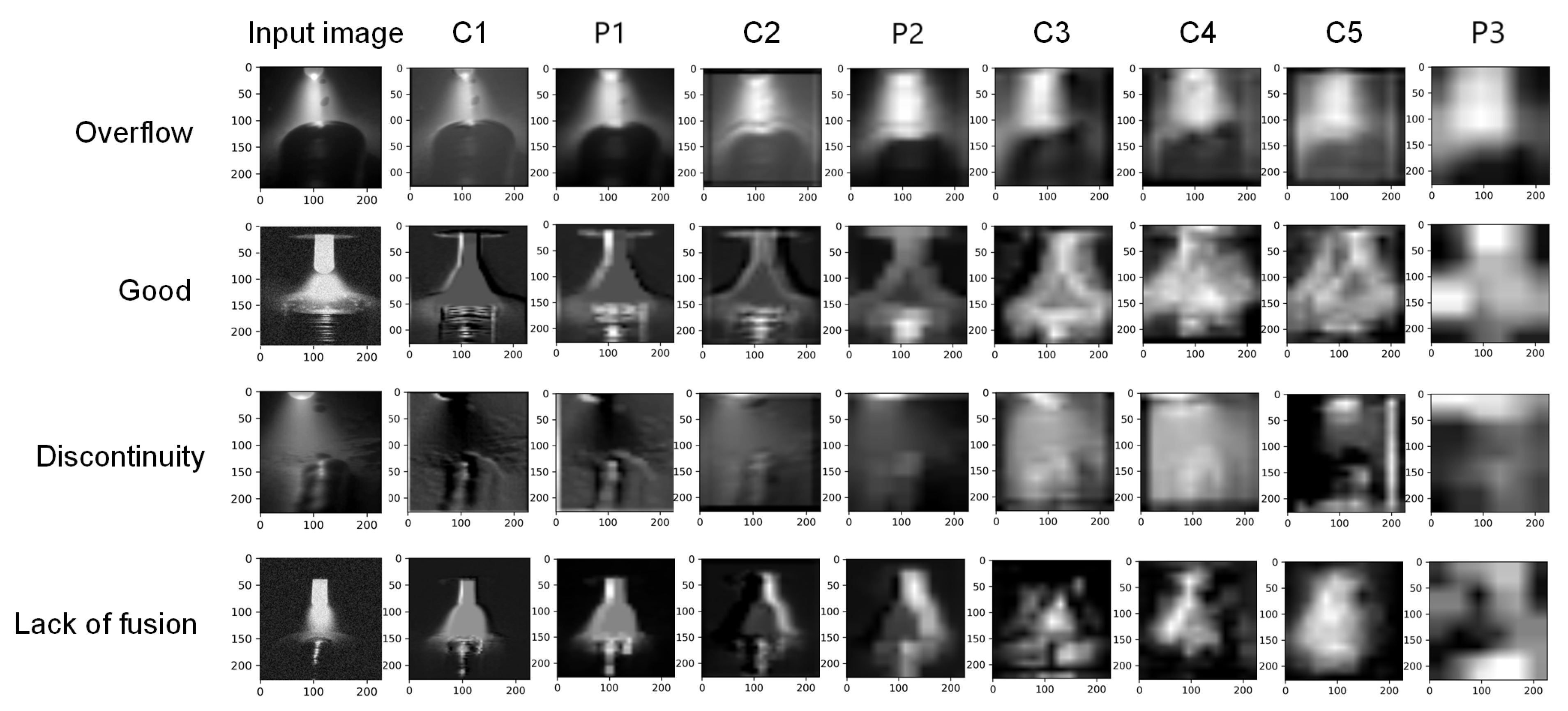

3.3. The Visualization of CNN Features

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Symbol | Description |
|---|---|
| GTA | Gas tungsten arc |
| Ts | Forming speed |
| Ip | Forming current |
| Qv | Forming flux |
| Tb | Substrate temperature |
| C1–C5 | Convolutional layers, each specified by filter size nx × ny, stride t, and padding (Yes or No) |
| P1–P3 | Pooling layers |
| ReLU | Rectified linear unit, f(x) = max(0, x), used as the nonlinear activation of neurons |
| α | Learning rate used during training |
| EPOCHs | Number of passes over the data set during training |
| DROPOUT | Dropout rate, set to 0.4 in the fully connected layers to prevent overfitting |
| KNN | k-nearest neighbors |
| SGD | Stochastic gradient descent |
| Adam | Adaptive moment estimation |
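The C1–C5 entries describe each convolutional layer by filter size, stride, and padding, and together with the pooling layers P1–P3 these settings determine the size of every feature map. A minimal sketch of the standard output-size rule, floor((n + 2p − f)/t) + 1 for input size n, filter size f, stride t, and p padding pixels per side, is given below. The function names, the 64 × 64 input, the layer settings, and the reading of "padding: Yes" as p = (f − 1)/2 are illustrative assumptions, not values taken from the paper.

```python
def conv_output_size(n: int, f: int, t: int, padded: bool) -> int:
    """Output size along one axis of a convolution with filter size f and stride t.

    Assumption: padded=True means zero padding of (f - 1) // 2 on each side,
    which preserves the input size for odd f and stride 1.
    """
    p = (f - 1) // 2 if padded else 0
    return (n + 2 * p - f) // t + 1


def pool_output_size(n: int, k: int, s: int) -> int:
    """Output size along one axis of a k x k pooling window with stride s, no padding."""
    return (n - k) // s + 1


# Hypothetical 64 x 64 input and layer settings, for illustration only.
size = 64
size = conv_output_size(size, f=5, t=1, padded=False)  # 60
size = pool_output_size(size, k=2, s=2)                 # 30
size = conv_output_size(size, f=3, t=1, padded=True)    # 30
print(size)  # 30
```

Chaining the two functions layer by layer is a quick way to check that a chosen combination of filter sizes, strides, and padding still leaves a positive spatial size before the fully connected layers.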


| Elements | Cu | Mn | Mg | Zn | Al |
|---|---|---|---|---|---|
| Composition | 4.75 | 0.5 | 1.56 | 0.25 | Balance |

| Current | Forming Flux | Substrate Temperature | Travel Speed | Shielding Gas Flow Rate |
|---|---|---|---|---|
| 260 A | 140 mm³/s | 280 °C | 8 mm/s | 15 L/min |

| Set | Good | Lack of Fusion | Overflow | Discontinuity | Total |
|---|---|---|---|---|---|
| Train | 6984 | 8136 | 7212 | 7056 | 29,388 |
| Test | 2328 | 2712 | 2404 | 2352 | 9796 |
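In the dataset table every class is split exactly 3:1 between training and test images (for example, 6984 = 3 × 2328), which is what a per-class (stratified) 75/25 split produces. The source does not say how the split was performed, so the sketch below is only an illustration: the function name, the shuffling, and the fixed seed are assumptions, and the per-class totals are simply the sums of the Train and Test rows.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.75, seed=0):
    """Split sample indices class by class so every class keeps the same train/test ratio.

    labels: sequence of class names, one per sample.
    Returns (train_indices, test_indices).
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(len(indices) * train_fraction)
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return train, test


# Per-class totals = Train + Test counts from the table.
counts = {"Good": 9312, "Lack of Fusion": 10848, "Overflow": 9616, "Discontinuity": 9408}
labels = [name for name, n in counts.items() for _ in range(n)]
train, test = stratified_split(labels)
print(len(train), len(test))  # 29388 9796
```

Splitting within each class, rather than over the pooled images, keeps the class proportions of the test set identical to those of the training set, so per-class precision and recall are measured on a representative sample.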

| Class | Precision | Recall | F1 Score |
|---|---|---|---|
| Good | 0.93 | 0.96 | 0.945 |
| Lack of Fusion | 0.92 | 0.89 | 0.905 |
| Overflow | 0.95 | 0.94 | 0.945 |
| Discontinuity | 0.91 | 0.88 | 0.895 |
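The F score in the per-class table is the harmonic mean of precision and recall, F1 = 2PR/(P + R). The short sketch below recomputes it from the reported precision and recall values; the function name is illustrative. Rounded to three decimals, the results agree with the reported scores (0.945, 0.905, 0.945, 0.895).

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (the F1 score)."""
    if precision + recall == 0:
        return 0.0  # undefined ratio; conventionally reported as 0
    return 2 * precision * recall / (precision + recall)


# Per-class (precision, recall) pairs copied from the table above.
table = {
    "Good": (0.93, 0.96),
    "Lack of fusion": (0.92, 0.89),
    "Overflow": (0.95, 0.94),
    "Discontinuity": (0.91, 0.88),
}

for name, (p, r) in table.items():
    print(f"{name}: F1 = {f1_score(p, r):.3f}")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, the classes with the lowest recall (lack of fusion and discontinuity) also have the lowest F1 scores.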

| Method | Accuracy | Time (s) |
|---|---|---|
| KNN | 0.926 | 0.61 |
| SVM | 0.864 | 0.13 |
| CNN | 0.96 | 0.008 |
| Our model | 0.989 | 0.012 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, C.; Dang, H.; Du, J.; He, P.; Jiang, M.; Wei, Z. Research on Automated Defect Classification Based on Visual Sensing and Convolutional Neural Network-Support Vector Machine for GTA-Assisted Droplet Deposition Manufacturing Process. Metals 2021, 11, 639. https://doi.org/10.3390/met11040639
