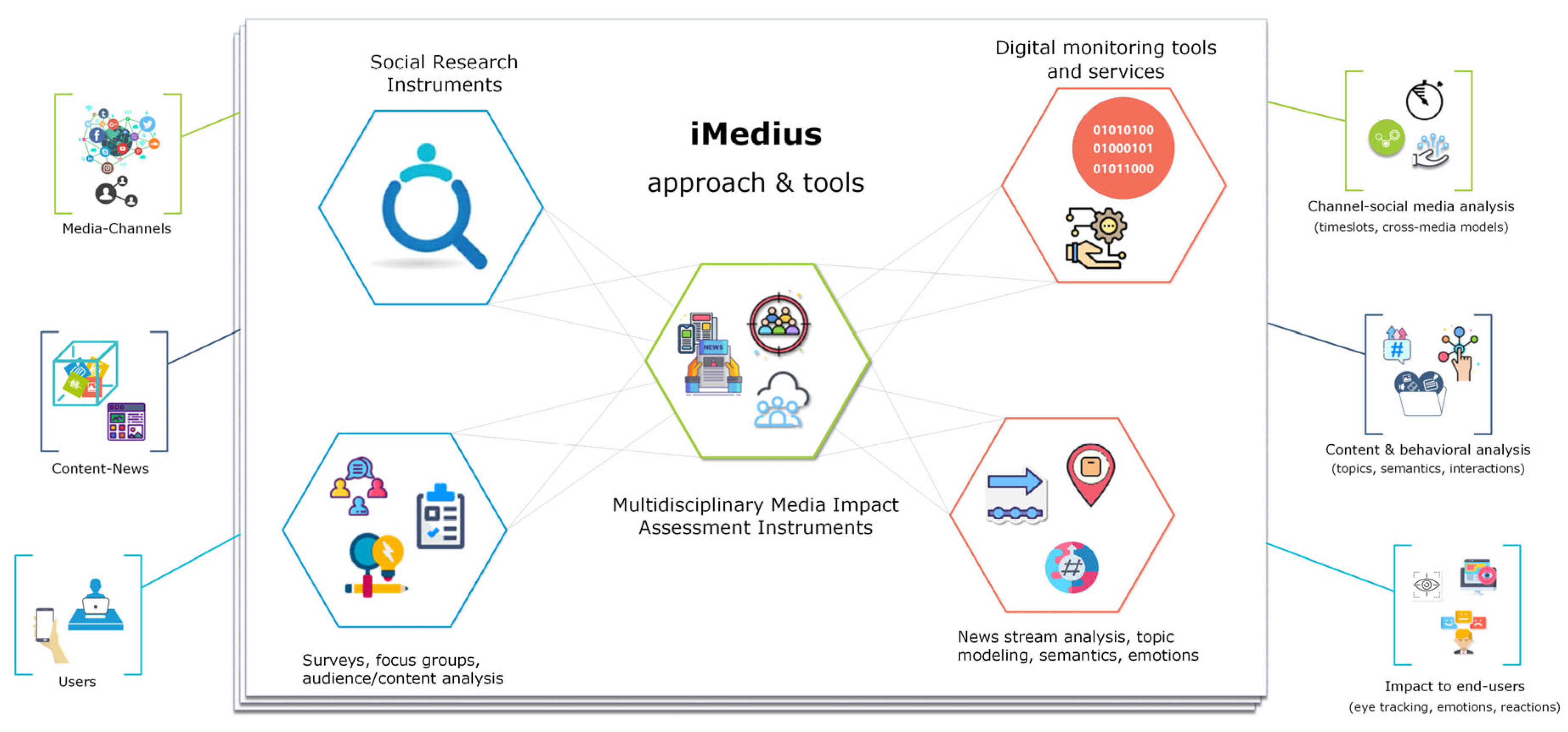

This section presents the results of the current work, including the development and integration of the iMedius environment and the eye-tracking and form-building services crafted for it. The value of the framework is validated through a usability analysis and an evaluation of its functionalities, as well as through the findings and broader research insights from the pilot implementation of a representative use-case scenario addressing the perception of tampered content. Based on these data, the stated research hypotheses and questions are answered and further discussed, highlighting the goals achieved and the potential of the proposed system.

5.1. Implemented Modules and System Integration

The iterative feedback from social scientists and multidisciplinary researchers highlighted the need for a user-friendly form-building tool. As a result, the design of the iMedius Form Builder focused on presenting its functionalities in an easy-to-understand manner, avoiding unnecessary styling that might cause distraction or confusion. Regarding development technologies and software frameworks, the Form Builder was developed with Next.js, a popular open-source React framework selected to align with modern development standards, as it provides server-side rendering, automatic code splitting, and file-based routing. In addition, building on the framework's support for reusable components ensured a modular structure suited for scalability, a critical requirement for production-ready applications. Eye-tracking functionality, in turn, was implemented through machine learning. Machine learning libraries were preferred over computationally heavy geometrical analysis because the goal was a lightweight, cost-effective tracking tool able to perform correctly without specialized equipment. Moreover, quick calibration processes allow the models to retrain and adapt to the conditions of each experiment, as explained later in this section. The system is hosted on a virtual machine, ensuring complete control over the deployment environment and independence from third-party cloud tools and services, and uses a PostgreSQL relational database for data management.

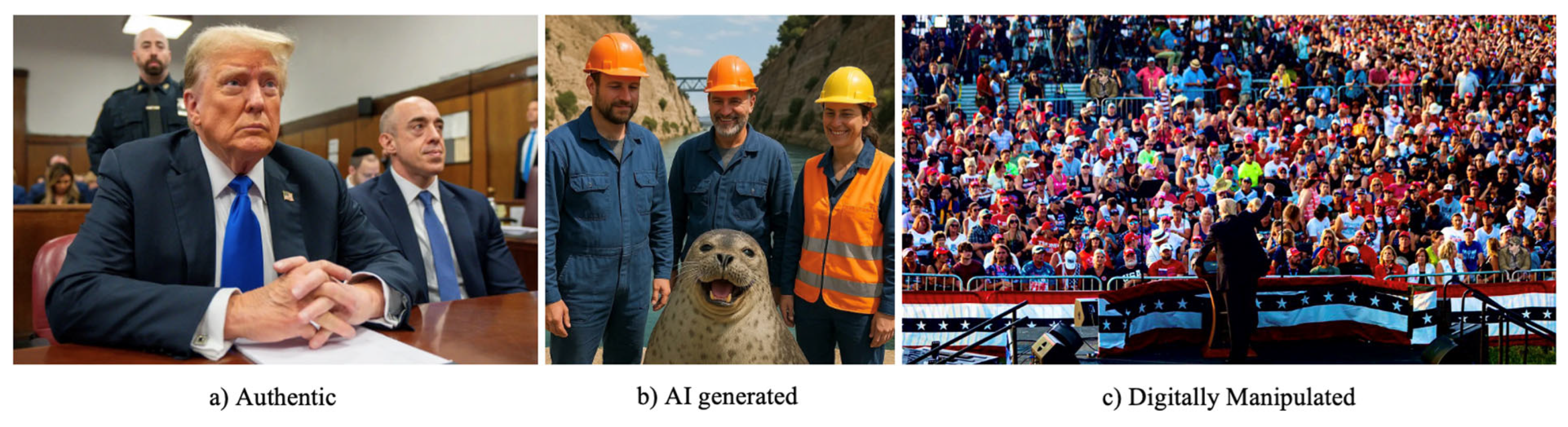

All features and functionalities of the tools in the iMedius framework were implemented after careful consideration of the iterative review and feedback processes described extensively in Section 5.2 (Usability and Functionalities Evaluation). Figure 4 presents the Form Builder dashboard and the form-building interface. As shown in this figure, the dashboard enables researchers to analyze aggregated statistics on form submissions through an interactive pie chart, and similar visualizations are presented for individual forms on their dedicated pages. Statistics visualization is an important feature, as it provides information that supports users and increases user interaction and engagement. The dashboard is the platform's main area, where researchers perform form-related actions and enable additional functionalities. From there, they can access the form-building interface, which presents a drop area where various form elements can be dragged and dropped to construct a survey. Using the drag-and-drop elements panel, the researcher adds the necessary components, such as Title and Paragraph fields to structure form sections and provide instructions or contextual information to participants; Image Fields for all images used in the study (with or without attention tracking); video sections; and various multiple-choice or option-selection elements, specialized to ensure the necessary structure of the data collected from responses.
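To make the element types listed above more concrete, the following is a minimal sketch of how a form definition could be represented as data. The class names (`FormElement`, `ImageField`, `Form`) and the `track_attention` flag are hypothetical and chosen only for illustration; the text does not describe the Form Builder's internal schema, which is implemented in Next.js rather than Python.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FormElement:
    """Base class for any element dropped onto the form area (hypothetical schema)."""
    element_id: str
    required: bool = False


@dataclass
class Title(FormElement):
    text: str = ""


@dataclass
class Paragraph(FormElement):
    text: str = ""


@dataclass
class ImageField(FormElement):
    src: str = ""
    # When True, opening the image in full size would start attention tracking.
    track_attention: bool = False


@dataclass
class VideoField(FormElement):
    src: str = ""


@dataclass
class MultipleChoice(FormElement):
    question: str = ""
    options: List[str] = field(default_factory=list)
    allow_multiple: bool = False


@dataclass
class Form:
    title: str
    elements: List[FormElement] = field(default_factory=list)

    def add(self, element: FormElement) -> "Form":
        """Append an element, mimicking a drop onto the form area."""
        if any(e.element_id == element.element_id for e in self.elements):
            raise ValueError(f"duplicate element id: {element.element_id}")
        self.elements.append(element)
        return self

    def tracked_elements(self) -> List[FormElement]:
        """Return the elements that require attention tracking."""
        return [e for e in self.elements
                if isinstance(e, ImageField) and e.track_attention]
```

Keeping the tracking flag on the element itself, rather than on the whole form, matches the description of tracking being enabled per image and only for specific parts of a survey.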

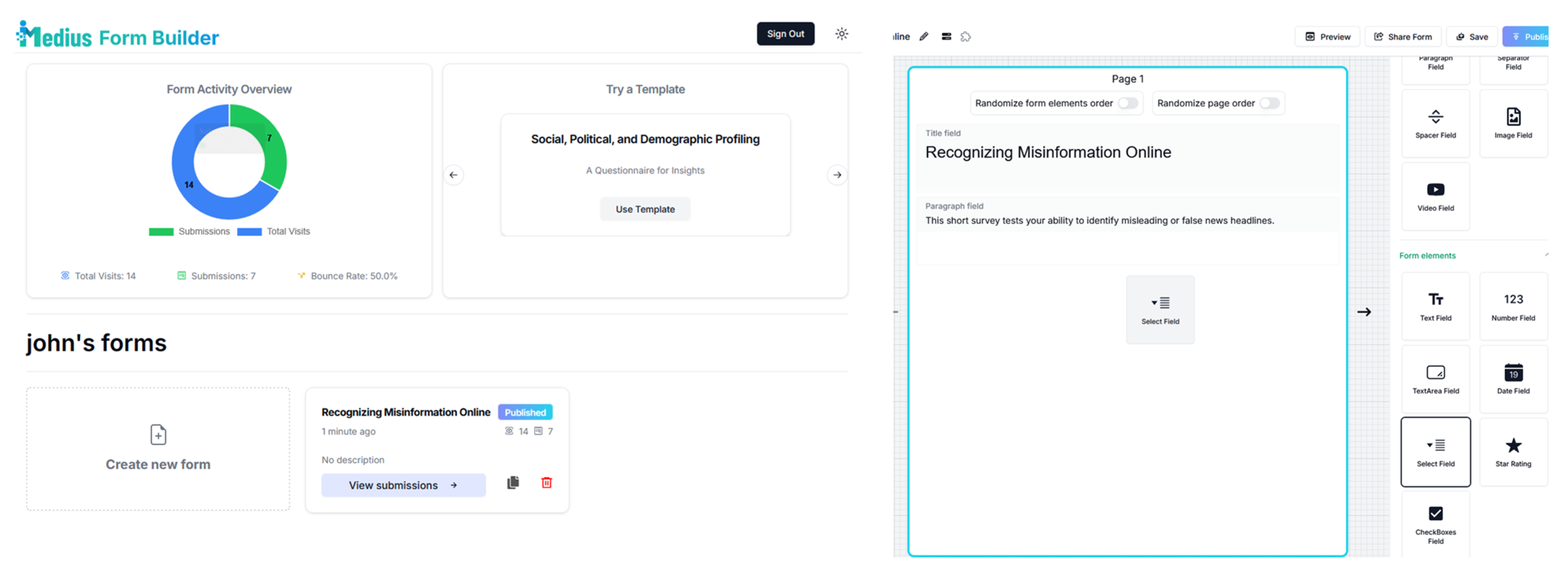

Forms with tracking mechanisms differ from basic forms in interactivity and functionality. To ensure data privacy and security while maintaining computational efficiency, the tracking algorithms collect data only during specific time intervals of each study. More specifically, each part of the form that requires eye or mouse tracking has a dedicated button that enables tracking and informs the participant. When an attention-tracking element is enabled, participants are notified accordingly and data extraction begins. For example, eye-tracking data collection is enabled when participants choose to inspect visual content and images in full size; selecting this option presents them with all the necessary information and data privacy instructions. When participants exit the attention-tracking view of an element, the survey's content is automatically revealed and attention tracking is disabled. Surveys that incorporate attention-tracking mechanisms require additional steps from participants. To ensure high precision in data collection, a dedicated calibration page for the eye-tracking algorithms has been implemented (Figure 5).

Eye-tracking calibration follows a systematic process of pointing and clicking on various parts of a detailed background to configure the initial algorithm measurements correctly. We chose a background that mimics a real web environment, rather than the blank canvas typically used in calibration processes, to increase engagement, and internal testing confirmed improved calibration accuracy with this approach. Extensive testing was also conducted to determine an optimal default threshold for calibration accuracy; participants who do not meet this threshold during calibration are advised to repeat the process. An accuracy value of 89% was selected as the default, offering a balanced trade-off between realistic experiment conditions (e.g., suboptimal lighting, participant movement) and reliable data collection, and researchers can modify this threshold to suit their study requirements. Notably, our real-world experiments yielded an average calibration accuracy of 95% (±2.1%), well above the default threshold, which further supports our decision to set a reasonable minimum value that ensures accurate tracking.
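The accuracy threshold can be illustrated with a simple distance-based score. The sketch below assumes that each gaze prediction made while the participant fixates a known target scores 100% at zero error and drops linearly to 0% at a distance of half the window height, and that the calibration accuracy is the mean of these scores. This scoring rule and the function names are assumptions, since the text does not specify how iMedius computes its percentage; only the 89% default comes from the text.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]

DEFAULT_THRESHOLD = 89.0  # default minimum calibration accuracy (%) reported in the text


def point_accuracy(pred: Point, target: Point, window_height: float) -> float:
    """Score one gaze prediction: 100% on the target, falling linearly to 0%
    at a distance of half the window height (assumed scoring rule)."""
    half = window_height / 2.0
    distance = math.dist(pred, target)
    return max(0.0, 100.0 - distance / half * 100.0)


def calibration_accuracy(preds: Iterable[Point], target: Point, window_height: float) -> float:
    """Mean accuracy over the predictions collected while the participant looks at `target`."""
    scores = [point_accuracy(p, target, window_height) for p in preds]
    if not scores:
        raise ValueError("no gaze predictions collected")
    return sum(scores) / len(scores)


def passes_calibration(accuracy: float, threshold: float = DEFAULT_THRESHOLD) -> bool:
    """True if the participant may proceed; otherwise they are advised to recalibrate."""
    return accuracy >= threshold
```

Exposing `threshold` as a parameter mirrors the option for researchers to relax or tighten the default for their own study conditions.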

Participants are required to complete this process once per survey, following the instructions carefully so that the system can analyze and correlate the data collected from mouse and eye movements. Since AI algorithms are used, these data serve as training samples that further train and fine-tune the algorithms to function correctly under the given test conditions. At all stages of survey submission, pseudo-anonymization techniques are employed to ensure that personal identifiers are not stored or linked to the collected data. With this approach, the system prioritizes participant privacy while enabling rich data collection about the surveyed topic.
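One common way to keep identifiers out of stored data is keyed hashing: the participant-supplied name is replaced by an HMAC digest computed with a server-side secret, so that records from the same participant can still be linked without the name itself being stored. This is an illustrative technique chosen for the sketch, not necessarily the one used in iMedius, and the `pseudonymize` and `store_response` helpers are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Dict, List

# Secret kept on the server only (in practice loaded from configuration, never stored with the data).
SECRET_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Map an identifier to a stable pseudonym with HMAC-SHA256.

    The same identifier always yields the same pseudonym under the same key,
    but the identifier cannot be read back from the stored digest.
    """
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def store_response(db: Dict[str, List[dict]], identifier: str, response: dict) -> str:
    """Store a response under its pseudonym; the raw identifier is never written."""
    pid = pseudonymize(identifier)
    db.setdefault(pid, []).append(dict(response))
    return pid
```

Because the mapping depends on the key, destroying the key after the study prevents anyone from re-linking pseudonyms to names by hashing candidate identifiers.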

The Browser Tracking extension (which logs browsing history) is designed as a complementary tool to the Form Builder; it is optional, not part of the core of the current research, and is presented here for completeness. At the form-building stage, researchers can activate the browser extension functionality and enable tracking during form submission. Forms involving the extension notify participants and request the permissions it requires. Participants complete a simple configuration on the same page as the form, providing a username that enables pseudo-anonymization of the collected data. If participants decline to activate the extension, no data are collected. Moreover, during the survey, participants can opt out of browser tracking and delete all relevant data. In the settings section, participants have full control over their data: they can preview what is recorded and delete unwanted entries or entire sessions. The extension records a detailed sequence of visited sites, capturing web URLs and queries, Facebook and YouTube links, and generic webpages. Additionally, browser tracking can be extended to incorporate attention tracking, similarly to the Form Builder, providing a complete data collection system that captures user browsing behavior, attention patterns, and screen activity during web sessions.
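The categories of recorded activity (search queries, Facebook and YouTube links, generic webpages) and the participant's control over entries and sessions can be illustrated with a small classifier over visited URLs. The category labels, the assumption that search engines carry the query in a `q` parameter, and the `BrowsingLog` class are hypothetical; the extension itself runs in the browser and is not described at this level of detail in the text.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional
from urllib.parse import parse_qs, urlparse

SEARCH_HOSTS = ("google.com", "bing.com", "duckduckgo.com")  # illustrative list


def _host(url: str) -> str:
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def classify_visit(url: str) -> Dict[str, Optional[str]]:
    """Label a visited URL as a search query, Facebook, YouTube or generic page."""
    host = _host(url)
    query = parse_qs(urlparse(url).query)
    if host in SEARCH_HOSTS and "q" in query:
        return {"category": "search", "query": query["q"][0]}
    if host == "facebook.com" or host.endswith(".facebook.com"):
        return {"category": "facebook", "query": None}
    if host in ("youtube.com", "youtu.be") or host.endswith(".youtube.com"):
        return {"category": "youtube", "query": None}
    return {"category": "generic", "query": None}


@dataclass
class BrowsingLog:
    """Per-participant log keyed by session, supporting preview and deletion."""
    sessions: Dict[str, List[dict]] = field(default_factory=dict)

    def record(self, session_id: str, url: str) -> None:
        entry = {"url": url, **classify_visit(url)}
        self.sessions.setdefault(session_id, []).append(entry)

    def preview(self, session_id: str) -> List[dict]:
        """What the participant sees on the settings page."""
        return list(self.sessions.get(session_id, []))

    def delete_entry(self, session_id: str, index: int) -> None:
        del self.sessions[session_id][index]

    def delete_session(self, session_id: str) -> None:
        self.sessions.pop(session_id, None)
```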

Both the Form Builder and the Browser Extension use the open-source JavaScript library WebGazer.js [39] to perform eye tracking. As a lightweight library, it offers a favorable trade-off between computational cost and tracking effectiveness. WebGazer uses machine learning techniques based on linear and regularized regression models to estimate gaze locations. As previously stated, our integrated, cross-tool attention-tracking approach enables modular development: as eye-tracking research advances, the tracking algorithms can be updated in both tools at once, avoiding data synchronization issues and preventing functionality conflicts between different tracking frameworks.
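To illustrate the regularized-regression idea, the sketch below fits a ridge regression that maps eye-feature vectors to screen coordinates, treating calibration clicks as training samples (the click position is taken as the true gaze target). It uses NumPy and synthetic features; the extraction of eye features from webcam frames, which WebGazer performs internally, is outside the sketch, and `fit_ridge`, `predict_gaze`, and the regularization strength are assumptions rather than WebGazer's API.

```python
import numpy as np


def fit_ridge(features: np.ndarray, targets: np.ndarray, lam: float = 1e-3) -> np.ndarray:
    """Closed-form ridge regression W = (X^T X + lam * I)^-1 X^T Y.

    features: (n_samples, n_features) eye-feature vectors from calibration clicks.
    targets:  (n_samples, 2) screen coordinates (x, y) of the clicked points.
    A bias column is appended so the model can learn an offset; for simplicity
    the bias is regularized too.
    """
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    reg = lam * np.eye(X.shape[1])
    return np.linalg.solve(X.T @ X + reg, X.T @ targets)


def predict_gaze(weights: np.ndarray, features: np.ndarray) -> np.ndarray:
    """Predict (x, y) screen coordinates for new eye-feature vectors."""
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    return X @ weights
```

Because the model is refitted from the accumulated samples, new calibration clicks can simply be appended to the training set, which is one way the model could adapt to each session's conditions.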

Data visualization is key to using the extracted data efficiently and assessing their usefulness. By visualizing survey results appropriately, researchers can enrich their analysis with the data representation best suited to their needs. The adopted data visualization methodology follows the same principles as those presented in [40]. Data extracted from the attention-tracking functionalities can be visualized in two ways: fixation maps and heatmaps. Fixation maps visualize participants' interaction with the content over time by leveraging the timestamps associated with each data point. In the iMedius framework, heatmaps are provided in a tunable form through adjustable sliders (Figure 6). Heatmaps present a time-intensity summary of the recorded attention activity (i.e., the areas that attracted the most attention are shown in more intense colors). Researchers can configure the sliders to select the appropriate representation, ranging from raw data points to smoothed heatmaps.
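The slider-controlled transition from raw points to smoothed heatmaps can be sketched as accumulating gaze samples into a grid and blurring it with a Gaussian kernel whose width is set by the slider; a width of zero leaves the raw counts. NumPy is assumed, and the grid resolution, the `sigma` parameter, and the separable-convolution approach are illustrative choices rather than a description of the iMedius implementation.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """1-D normalized Gaussian kernel covering +/- 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def gaze_heatmap(points, width: int, height: int, sigma: float = 0.0) -> np.ndarray:
    """Accumulate (x, y) gaze points into a height x width grid and smooth it.

    sigma == 0 returns the raw point counts (the slider's "raw data" end);
    larger sigma values produce progressively smoother heatmaps.
    """
    grid = np.zeros((height, width), dtype=float)
    for x, y in points:
        col, row = int(x), int(y)
        if 0 <= col < width and 0 <= row < height:
            grid[row, col] += 1.0
    if sigma <= 0:
        return grid
    k = gaussian_kernel(sigma)
    if len(k) > min(width, height):
        raise ValueError("kernel wider than the grid; reduce sigma")
    # Separable blur: convolve every row, then every column ("same" keeps the grid size).
    grid = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, grid)
    grid = np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, grid)
    return grid


def normalize(grid: np.ndarray) -> np.ndarray:
    """Scale to [0, 1] so the most-attended cell maps to the most intense color."""
    peak = grid.max()
    return grid / peak if peak > 0 else grid
```

A separable blur keeps the cost proportional to the kernel width rather than its square, which matters if the heatmap is recomputed each time the slider moves.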

The iMedius social media monitoring toolbox also plays a complementary role (especially in the present work): through its topic modeling capabilities, it detects thematic topics and media trends in the pre-intervention phases, helping to better organize the corresponding surveys and monitoring sessions. Topic modeling is the process of using statistical models to automatically discover abstract topics hidden in a collection of documents. On social media, topic modeling is used to make sense of large amounts of unstructured text by breaking it down into smaller groups, each representing a topic or theme, which allows researchers to explore how different conversations or narratives form across a dataset. In social research, this process is beneficial but is often performed manually, especially when the dataset is small. However, manual analysis becomes practically impossible for large datasets, which are now very common given the volume of content generated daily on social media platforms. Automatic topic modeling is therefore an important step for researchers who want to work efficiently and at scale. The iMedius social media monitoring toolbox supports users throughout this process: it offers a simple graphical user interface for applying topic modeling techniques and provides a full workflow that guides users through each step, making topic modeling more efficient and accessible.
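As a rough illustration of automatic topic discovery, the sketch below builds a document-term matrix and factorizes it with non-negative matrix factorization (NMF) using the Lee-Seung multiplicative updates, one of several standard topic-modeling techniques alongside, for example, LDA. The text does not state which algorithm the iMedius toolbox uses; the tokenizer, the tiny stopword list, and the function names are assumptions, and NumPy is required.

```python
import re
from collections import Counter

import numpy as np

STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "on", "is", "for", "with"}


def document_term_matrix(docs):
    """Tokenize, drop stopwords, and count terms per document."""
    tokenized = [[w for w in re.findall(r"[a-z]+", d.lower()) if w not in STOPWORDS]
                 for d in docs]
    vocab = sorted({w for toks in tokenized for w in toks})
    index = {w: i for i, w in enumerate(vocab)}
    V = np.zeros((len(docs), len(vocab)))
    for d, toks in enumerate(tokenized):
        for w, c in Counter(toks).items():
            V[d, index[w]] = c
    return V, vocab


def nmf(V, n_topics, n_iter=500, seed=0, eps=1e-10):
    """Factorize V (docs x terms) ~ W (docs x topics) @ H (topics x terms)
    with multiplicative updates, which keep W and H non-negative."""
    rng = np.random.default_rng(seed)
    W = rng.random((V.shape[0], n_topics)) + eps
    H = rng.random((n_topics, V.shape[1])) + eps
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


def top_terms(H, vocab, n=3):
    """Highest-weighted terms for each topic."""
    return [[vocab[i] for i in np.argsort(row)[::-1][:n]] for row in H]
```

Each row of `W` gives a document's mixture over topics, so grouping posts by their dominant topic is one way to surface the thematic clusters mentioned above.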

Table 2 presents a comprehensive comparison of tools for attention tracking and survey creation, highlighting the key features of the iMedius platform alongside other widely used tools, including iMotions, WebGazer, LimeSurvey, and Google Forms.

Table 2 outlines functionalities such as dynamic questionnaire creation, attention tracking (mouse and gaze), and integration with other features. The comparison offers a clear overview of how the iMedius platform stands out by providing an integrated and flexible solution for attention tracking and behavioral analysis, making it a more powerful choice for researchers in this field. As the table shows, many of the listed utilities are not covered at all by some competing tools (listed as NA, i.e., not applicable), a fact that reveals the superior integration and adaptability of the iMedius framework; according to the literature review and the conducted research, these qualities are essential for behavioral monitoring in many social science directions.

5.2. Usability and Functionalities Evaluation

As already stated in Section 4.2, the evaluation of the platform and its functionalities is of great importance to ensure its reliability, usability, and ease of use for the targeted users. This step (Alpha testing) involved assessing the tool in terms of its content (features) and operations. Iterative internal sessions were organized within the interdisciplinary research team to obtain feedback on how easily the platform and its features could be used (survey creation, questionnaire building, and the attention-tracking mechanisms).

The evaluation of the platform detected a number of issues that had to be addressed to facilitate its use and match social science researchers' expectations. To make the platform more usable and adaptable, several solutions were put in place: a form duplication mechanism, higher character limits for text inputs, text formatting options, and the ability to randomize questions and pages. These changes made the iMedius Form Builder easier to use and more useful for researchers involved in complex studies.

Specifically, a “Duplicate” option was added, allowing users to copy existing forms for research that requires repetition or comparison across multiple instances. The character limit for text input fields was raised to 2000 characters to accommodate more complex social science questions, enabling researchers to give participants more extensive instructions and context. Regarding style, basic text formatting options were added, including bold, italics, line breaks, and lists, which improved the appearance and clarity of questions, particularly complex ones. To help form creators identify required modifications and verify the form's visual appeal and functionality, a “Preview Mode” button was added, allowing the form to be inspected from the respondent's viewpoint. Furthermore, since randomizing the sequence of questions and pages helps reduce bias and produce reliable, trustworthy data, mechanisms were added that allow users to randomize the order of questions and pages.
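Randomizing the order of pages, and of questions within each page, can be sketched with a per-participant seeded shuffle: each participant sees a different order, while the order shown to any given participant can be reproduced during analysis. Seeding from a pseudonymous participant ID, and the `randomize_form` name, are assumptions made for illustration.

```python
import random
from typing import List, Sequence


def randomize_form(pages: Sequence[Sequence[str]], participant_id: str,
                   shuffle_pages: bool = True,
                   shuffle_questions: bool = True) -> List[List[str]]:
    """Return a randomized copy of the form for one participant.

    pages: list of pages, each a list of question IDs (the input is not modified).
    The RNG is seeded with the participant ID, so the same participant
    always receives the same order.
    """
    rng = random.Random(participant_id)
    result = [list(p) for p in pages]
    if shuffle_questions:
        for page in result:
            rng.shuffle(page)
    if shuffle_pages:
        rng.shuffle(result)
    return result
```

Keeping the two flags separate reflects that question-level and page-level randomization can be enabled independently.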

In terms of the platform's collaborative nature, a “Share” option was introduced. This feature enables co-researchers to collaborate on constructing forms, allowing multiple users to share and work on them simultaneously. It makes it easier to work together, share ideas, and ensure that multiple points of view are taken into account while designing and building the tools, thus making the research process more effective. Finally, in addition to the primary form creation process, form templates were added to help researchers structure their forms; the template collection can be broadened with further options as the project progresses and additional effective, validated, and engaging surveys are developed. Some of the points discussed above, including the “Preview Mode” button, the randomization of questions and pages, and the “Share” feature for collaboration, were examined thoroughly in a prior study focused on the UX evaluation of the platform through a focus group with subject matter experts [41].

Apart from this formative evaluation and the associated adaptation and debugging processes, all members of the multidisciplinary iMedius research team (18 in total) were asked in these sessions to answer and discuss the semi-structured interview questions of Table 1, which were mainly crafted to obtain qualitative feedback in the Beta testing. Admittedly, this procedure involves a significant amount of subjectivity (e.g., most iMedius researchers had prior knowledge of the targeted functionalities from their involvement in preparing the project proposal, and they gradually became more familiar with the desired features over time). Still, it was important to monitor these responses: confidence levels increased in all questionnaire items while the variance of the answers steadily decreased, thus validating the chain of testing, debugging, and updating, and the overall progress of the interdisciplinary participatory design.
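Monitoring confidence across testing rounds amounts to tracking, for each questionnaire item, the mean and spread of the team's ratings in each round. The sketch below assumes the answers were recorded on a numeric (e.g., Likert) scale, which the text does not specify, and the function names are hypothetical.

```python
from statistics import mean, pvariance
from typing import Dict, List


def round_summary(ratings_by_round: Dict[int, List[float]]) -> Dict[int, Dict[str, float]]:
    """Mean and population variance of one item's ratings in each testing round."""
    return {r: {"mean": mean(v), "variance": pvariance(v)}
            for r, v in sorted(ratings_by_round.items())}


def converging(ratings_by_round: Dict[int, List[float]]) -> bool:
    """True if, from one round to the next, the mean never decreases and the
    variance never increases (the pattern described in the text)."""
    s = list(round_summary(ratings_by_round).values())
    return all(b["mean"] >= a["mean"] and b["variance"] <= a["variance"]
               for a, b in zip(s, s[1:]))
```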

The Beta testing phase, in which actual users outside the project team provided feedback, is essentially the first real-world environment. As already noted, the associated sessions were conducted at the 2024 iMEdD International Journalism Forum, where an iMedius-driven workshop took place and an iMedius stand was set up in the venue's so-called Media Village, inviting participants to interact with the platform. Specifically, twenty (20) semi-structured interviews were conducted with ten (10) people with a theoretical/social science background (media professionals/journalists) and ten (10) with a background in media technology and software engineering, who answered the questions of Table 1 after interacting with the platform.

The five (5) questions aimed to collect data about the overall value of the iMedius platform and its potential use in media studies. Responses to Q1 showed that participants had different levels of comfort with using attention-monitoring technology for research purposes. Several participants were in favor of the proposal, provided that rigorous data protection procedures are in place. Social scientists stressed the need for honesty and openness, saying that people should know how their data will be used. Technologists noted that encrypting and anonymizing data could address privacy concerns and thus make these methods easier to adopt in research. Interestingly, none of the answers revealed participants being outright uncomfortable, which suggests that they would accept these technologies in the right circumstances.

Asked about the most significant characteristics of a form-building tool (Q2), participants mentioned usability, data analysis capabilities, and privacy as the most important. Many noted that ease of use, data analysis features, and security and privacy aspects are equally important, stating that these characteristics make form-building tools far more valuable and trustworthy. Another significant aspect that arose was the option to customize forms to meet individual needs, a feature many participants appreciated. These answers show that users favor form-building tools that are simple and safe to use without demanding technical know-how, so that average users with low technological expertise can operate them efficiently. Social scientists emphasized the importance of the tool adapting to the needs of different types of studies (cross-sectional studies, experiments, etc.), whereas participants from the technology domain underlined the importance of keeping data safe.

The feedback on the iMedius Form Builder was highly positive (Q3). Social scientists appreciated its ease of use, while technologists valued its customization and data-driven capabilities. Every interviewee found the iMedius platform useful, with 17 of the 20 describing it as very useful for creating surveys. Notably, none of the responses indicated dissatisfaction or suggested that the platform lacks practicality and usefulness. This result shows that iMedius can significantly assist diverse groups of researchers and media professionals in conducting their studies and collecting data.

Most of those who took part in the platform demonstration stated that attention tracking seems beneficial for studying how people consume news (Q4). Most answers characterized it as a desirable feature for acquiring more insightful information for media studies, stressing the importance of studying how people interact with news content in order to optimize it. A participant from the social scientists group stated:

“Attention tracking capabilities are very important because they help us understand how consumers interact with media on a deeper level, which we can then use to improve content and make it more interesting”.

Social scientists also noted that it can help determine how interested or engaged readers are with content. At the same time, technologists emphasized the use of AI services to visualize and interpret this data.

Finally, participants' experimentation with attention tracking provided valuable insights into its value for media studies (Q5). Most participants agreed that integrating attention-tracking technologies into the Form Builder is beneficial, as it will help them better understand how people read news and interact with media.

All interviewees mentioned that one of the best aspects of the implemented tool is that it enables richer data extraction, which enhances the study of user behavior. The statement of a media researcher illustrates this finding:

“This tool’s power to capture attention will significantly deepen my understanding of users’ behavior by providing insights that would have been difficult to gather otherwise”.

Most interviewees also stated that “they would definitely recommend the products to coworkers or peers in their sector” and, commenting on the open-access, free-of-charge nature of the tool, added that “the fact that it is free makes it even more accessible and beneficial for researchers with limited resources”.

Importantly, none of the participants found the attention-tracking utilities unhelpful, indicating strong approval of combining forms with attention monitoring in media studies. Moreover, social scientists indicated that combining qualitative and quantitative data can help researchers better understand user experience, whereas technology experts argued that acquiring more data can make studies more accurate. In all cases, the feedback was positive, with few or no specific comments indicating critical problems or difficulties in resolving them.

5.3. Pilot Implementation and Assessment: The Case of Tampered Images Perception Analysis

Following its configuration, the pilot real-world scenario on the perception of tampered images was implemented in three sessions of one and a half hours each, building on previous research [34] with the incorporation of attention-tracking mechanisms. This pilot study is included to assess the maturity and practical applicability of the developed framework in a real-world media impact analysis scenario, directly addressing research question RQ2. Given the exploratory nature of the study, the small sample is not intended to support definitive, generalizable conclusions but rather to serve as an initial step in the tool's validation.

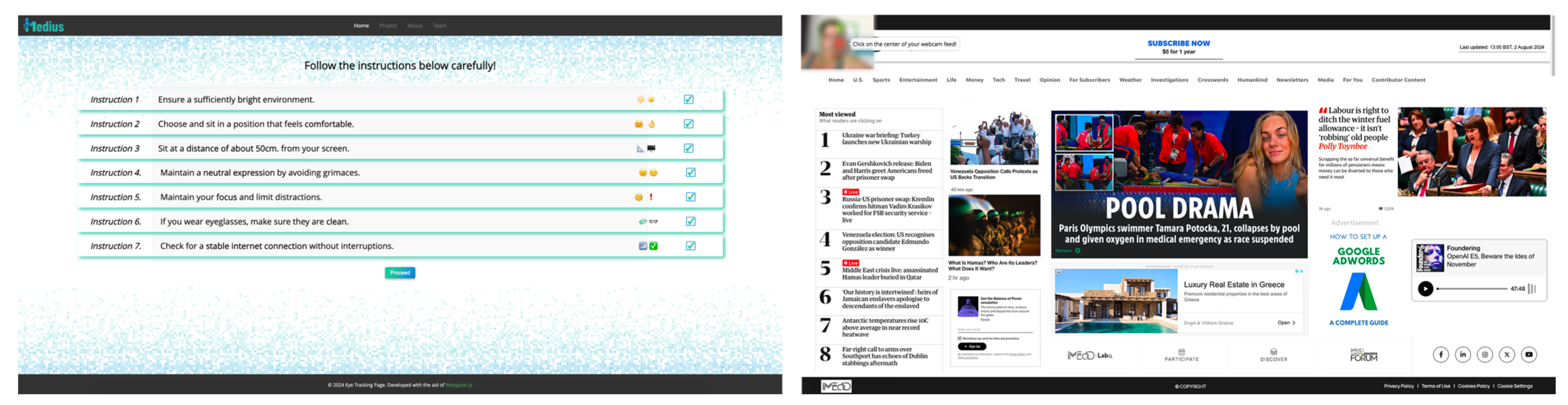

Using the iMedius Form Builder and its attention-tracking capabilities, the primary goal was to investigate how the progressive provision of information (technical tools, searches, AI detectors of tampered content, etc.) affects participants' ability to recognize authentic, AI-generated, and manipulated images. The participants were 28 students of Journalism, Digital Media, and Communication at three Greek universities (Ionian University, Aristotle University of Thessaloniki, and University of Western Macedonia).

Initially, participants were informed about the research scope and the inclusion of attention tracking in the survey. They were asked to work on their own laptops and carefully follow a series of instructions: ensure a sufficiently bright environment, sit in a comfortable position about 50 cm from the screen, maintain a neutral expression by avoiding grimaces, limit distractions, make sure their eyeglasses were clean, and check for a stable internet connection without interruptions. After completing the calibration process, participants filled out a standardized questionnaire covering demographics (e.g., gender, age, educational level, monthly income), their opinions on social and political issues such as criminality, justice, and equality, and their attitudes towards institutions and the media (e.g., trust and credibility).

Participants were then asked to determine whether a presented image was authentic, AI-generated, or digitally manipulated after inspecting it in full size, a viewing mode that also activated the attention-tracking mechanism. The evaluation of each image followed a sequential five-step protocol:

1. Simple observation: participants viewed the image without any additional context or tools.
2. Color map analysis: participants viewed color maps highlighting potential areas of alteration in the image.
3. Probability map analysis: participants examined probability maps indicating regions likely to have been generated or altered, along with a forgery probability percentage.
4. Reverse image search: participants were provided with the results of a reverse image search to assess the image's previous online presence.
5. AI probability assessment: participants were shown the results of an AI probability assessment tool indicating whether the image had been AI-generated or manipulated.

At each step, participants were required to classify the image as authentic, AI-generated, or digitally manipulated and to justify their decision by choosing from a predefined set of responses. These self-reported data were integrated with the attention-tracking data, allowing a comprehensive understanding of how participants' subjective justifications aligned with their visual engagement, as captured by the eye-tracking (gaze) data.

The image evaluation sequence began with an authentic image, for which only the first four steps were applied (i.e., the AI probability assessment tool was excluded). An AI-generated image and a digitally manipulated image followed, each undergoing the complete five-step evaluation process.
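The protocol above can be encoded as an ordered list of steps, with the authentic image skipping the AI probability assessment. The structure, the image identifiers, and the function names below are a hypothetical sketch of how such a study sequence could be configured, not the Form Builder's actual configuration format.

```python
from enum import Enum
from typing import Dict, List, Tuple

STEPS = [
    "Simple observation",
    "Color map analysis",
    "Probability map analysis",
    "Reverse image search",
    "AI probability assessment",
]


class Label(str, Enum):
    AUTHENTIC = "Authentic"
    AI_GENERATED = "AI generated"
    MANIPULATED = "Manipulated"


def steps_for(ground_truth: Label) -> List[str]:
    """Authentic image: Steps 1-4 only; other images: all five steps."""
    return STEPS[:4] if ground_truth is Label.AUTHENTIC else list(STEPS)


def build_sequence(images: Dict[str, Label]) -> List[Tuple[str, int, str]]:
    """Flatten the study into (image, step number, step name) prompts, in order.

    At each prompt the participant classifies the image and picks a justification.
    """
    return [(img, i, name)
            for img, truth in images.items()
            for i, name in enumerate(steps_for(truth), start=1)]
```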

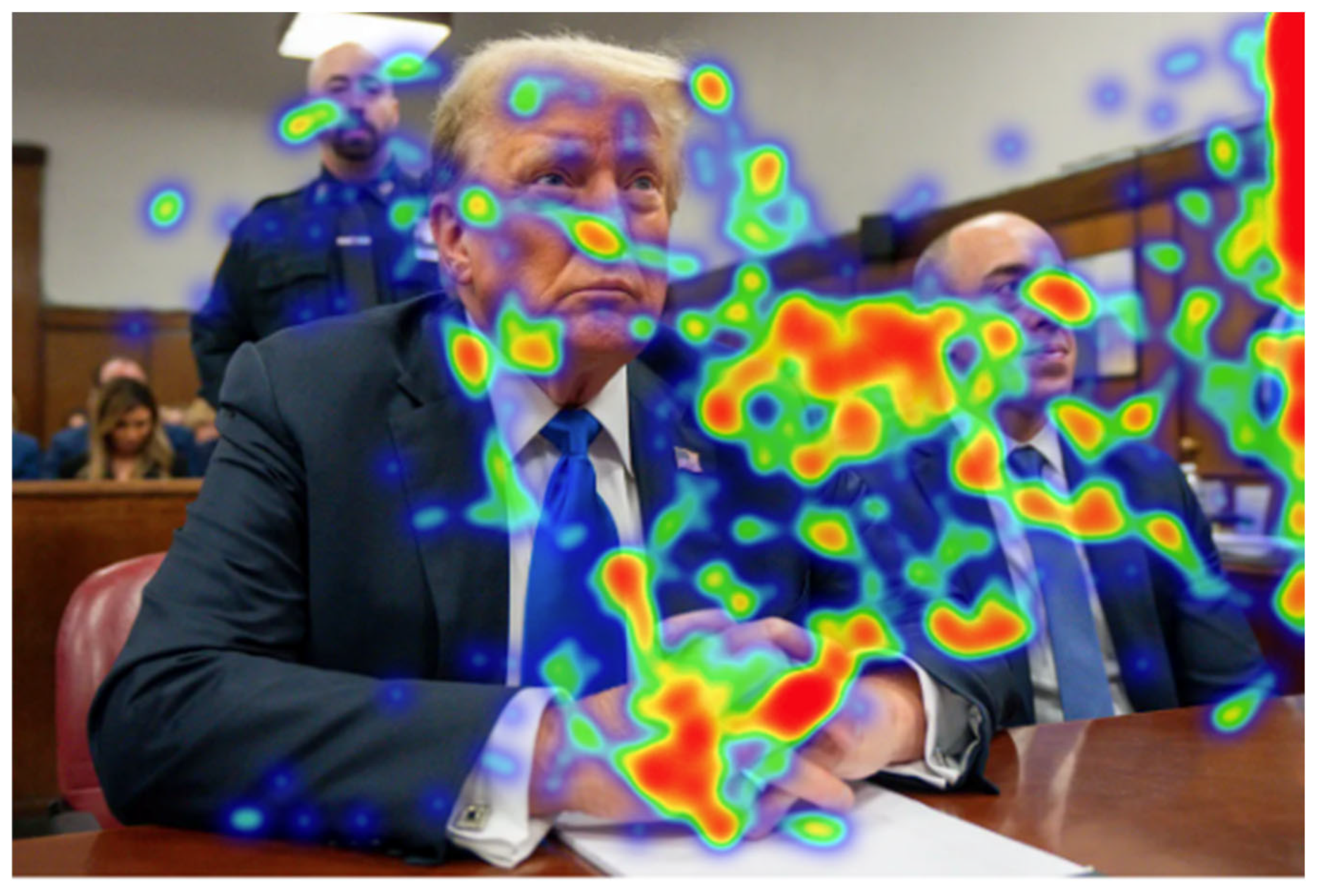

Initially, participants were asked to observe the authentic image without any prior knowledge. However, only 11 of them recognized it correctly as authentic, a number equal to those who considered it manipulated. However, this first step was the one with the highest accuracy, since the contribution of the color maps led three participants to change their mind and evaluate the image as manipulated rather than authentic. Simultaneously, the analysis of the iMedius platform extracted heatmaps provides additional data about participants’ visual strategies throughout the various steps of the study (

Figure 7). Specifically, in Step 1, participants focused closely on Trump’s face and hands, as these areas possess significant diagnostic value; faces indicate asymmetries and unnatural textures, while hands often reveal morphological inconsistencies in deepfake and AI-generated images. Their emphasis indicates an inherent quest for nuanced irregularities within intricate visual characteristics. This behavior is consistent with established research indicating that humans inherently focus on faces and extremities when identifying synthetic modifications [

63]. The introduction of Color maps (Step 2) significantly redirected visual attention to the artificial color patterns, resulting in possible over-analysis, thus reducing the number of correct answers. Neither did the probability map (Step 3) nor the reverse image search (Step 4) restore confidence in the authenticity of the image (

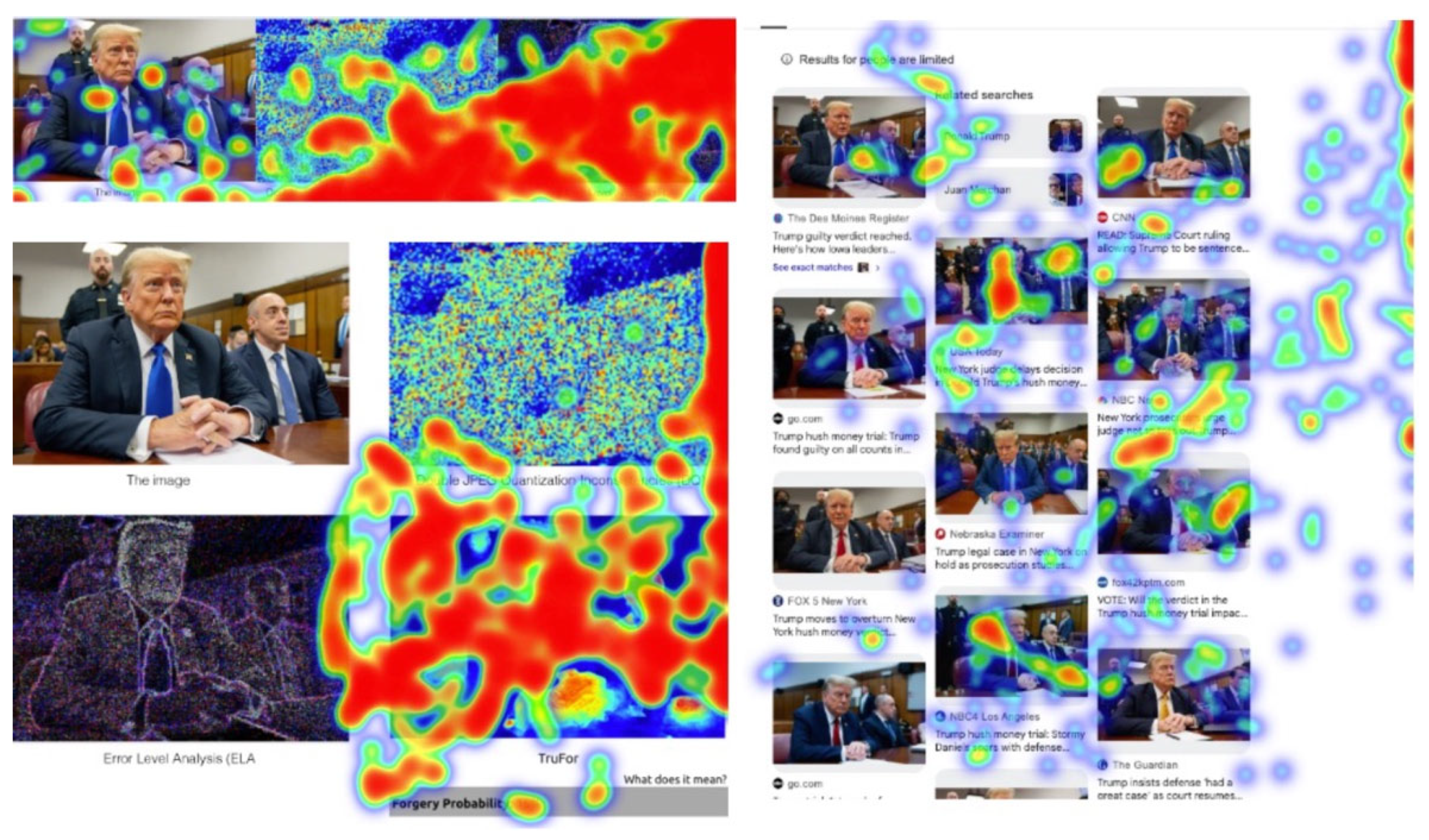

Table 3). In more detail, in Step 3, participants predominantly focused on the probability map instead of the image under examination. They focused on the tool’s visual indicators of manipulation, suggesting that the system’s technical output more influenced their answer than the content presented, indicating a transition from natural visual interpretation to tool-driven reasoning, which, in the case of an authentic image, may lead to “misdiagnosis”. The introduction of reverse image search results (Step 4) directs focus from the image itself to the contextual metadata, including titles, thumbnails, and source information, as participants pursued external validation (

Figure 8). Step 5 (AI probability assessment) was not applied to the authentic image and is therefore excluded from this heatmap interpretation.

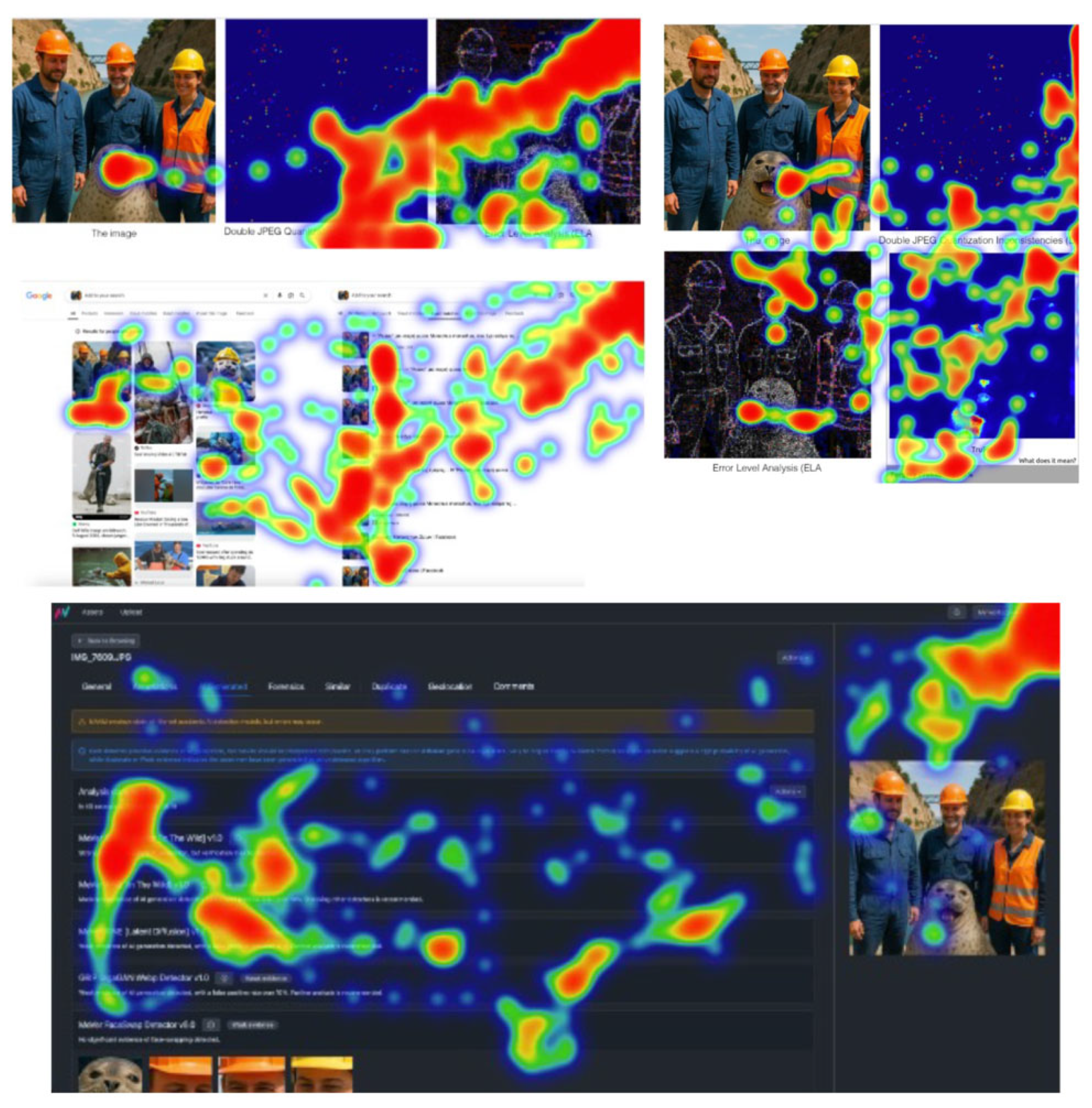

The second image was then presented, and most participants correctly identified it as AI-generated through simple observation alone (Step 1) (Figure 9). Color maps (Step 2) caused a slight decrease in accuracy, possibly due to over-analysis or misinterpretation. The probability map in Step 3 partially reversed this drop, confirming the initial intuitive judgment. The subsequent tools in Steps 4 and 5 (reverse image search and AI probability assessment) did not substantially change performance, indicating that recognition was already established (Table 4, Figure 10).

The attention heatmap analysis of the AI-generated image depicting the seal reveals a structured evolution in participants' visual behavior, moving from intuitive inspection to tool-assisted verification. In the initial observation (Step 1), gaze concentrated predominantly on the seal rather than on the human figures. This interest in the unusual element suggests cognitive dissonance, although it does not imply a definitive suspicion of manipulation at that point. With the introduction of technical cues (Step 2), visual attention was directed toward particular regions indicative of potential tampering. Participants approached these regions with curiosity rather than certainty, suggesting an exploratory rather than diagnostic use of the tool. The probability map (Step 3) intensified focus on the seal and adjacent facial areas; gaze patterns indicate that participants actively sought confirmation of their suspicions in the tool-generated markers, increasingly relying on the visual guidance offered by digital forensic platforms. In Step 4 (reverse image search), considerable attention was placed on the search results, especially images depicting alternative versions of the scene. This phase marked an apparent emergence of doubt, as participants began to consider that the seal might not belong to the original context of the image. In Step 5, the AI assessment incorporated both the original content and user-generated commentary or visual evidence sourced from online platforms. Participants systematically compared the suspect image with these external findings to obtain definitive confirmation, and this collective analysis reinforced their conclusions.
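Observations such as attention concentrating on the seal rather than on the human figures can be quantified with areas of interest (AOIs): rectangles drawn over image regions, together with the share of gaze samples falling inside each one. The rectangle coordinates and AOI names below are hypothetical, and counting samples rather than summing fixation durations is a simplifying assumption; at a fixed sampling rate, sample counts are proportional to dwell time.

```python
from typing import Dict, Iterable, Tuple

Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in image pixels


def aoi_shares(points: Iterable[Tuple[float, float]],
               aois: Dict[str, Rect]) -> Dict[str, float]:
    """Fraction of gaze samples inside each AOI; samples outside all AOIs count as 'other'.

    A sample inside overlapping AOIs is credited to the first matching AOI.
    """
    counts = {name: 0 for name in aois}
    counts["other"] = 0
    total = 0
    for x, y in points:
        total += 1
        for name, (x0, y0, x1, y1) in aois.items():
            if x0 <= x <= x1 and y0 <= y <= y1:
                counts[name] += 1
                break
        else:
            counts["other"] += 1
    if total == 0:
        return {name: 0.0 for name in counts}
    return {name: c / total for name, c in counts.items()}
```

Comparing these shares step by step (e.g., image region versus tool output versus search results) would express the shifts described above as numbers rather than visual impressions.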

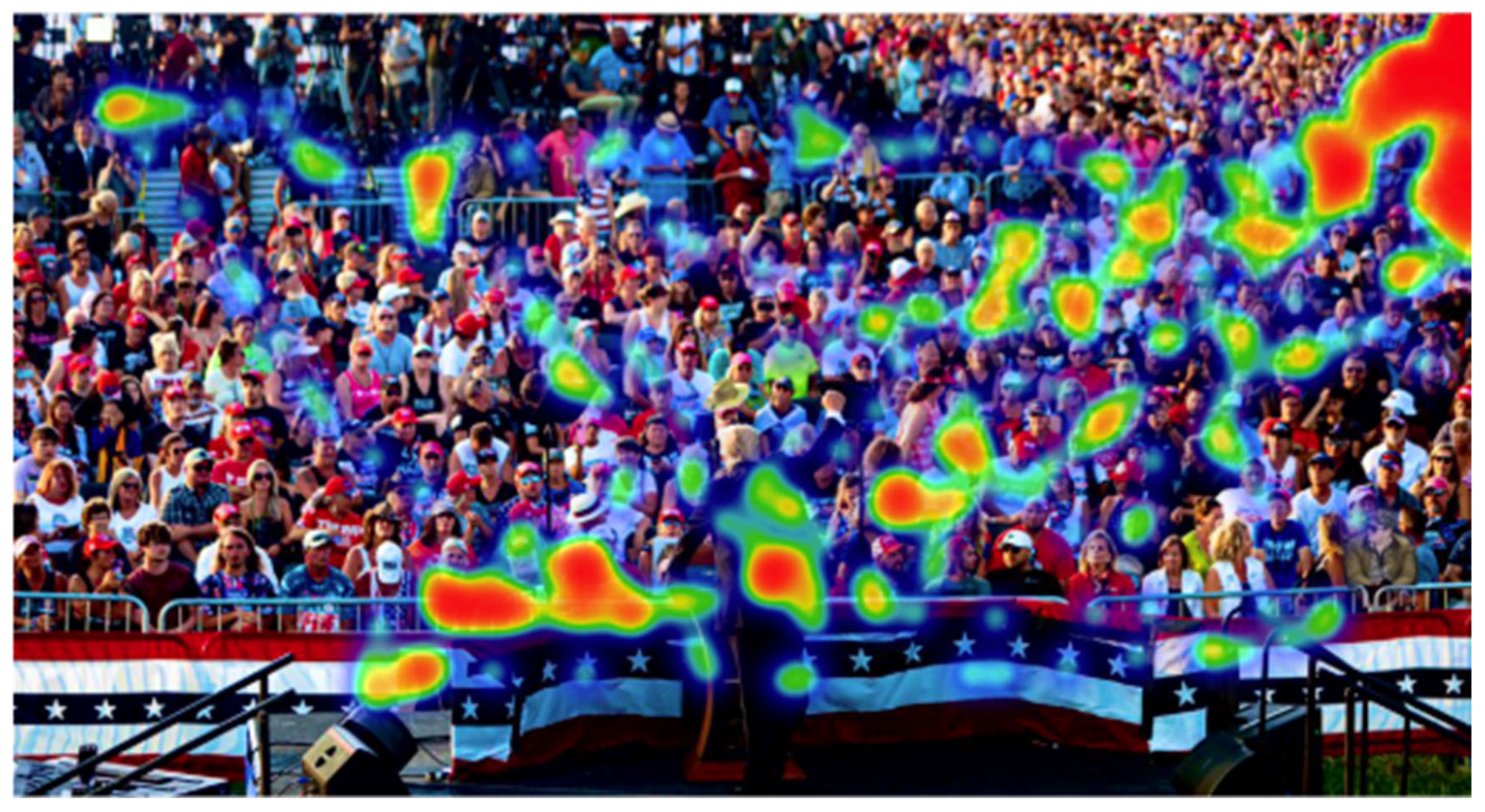

Regarding the third, digitally manipulated image, most participants incorrectly identified it as authentic after simple observation. The forensic tools led to a gradual improvement in the evaluation. Specifically, in Steps 2 and 3 (color and probability maps), a slight increase in accuracy was observed (up to 10%). The reverse image search was decisive: in Step 4, accuracy reached 62%, and no participant characterized the image as authentic. In the final step (Step 5), accuracy declined (~41%) and the responses became more fragmented, suggesting that the AI probability assessment tool may have created confusion or doubt rather than enhancing performance (Table 5).
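Per-step figures such as accuracy and the share of participants labelling an image as authentic can be derived from the raw responses with a simple tally. The sketch below assumes responses are stored per step as lists of labels; the data layout and the function name are hypothetical:

```python
from collections import Counter


def step_summary(responses, ground_truth):
    """Hypothetical helper: per-step accuracy and label shares for one image.

    responses: dict mapping step number -> list of labels chosen by participants
               ("Authentic", "AI generated", "Manipulated").
    ground_truth: the correct label for the image.
    """
    summary = {}
    for step, labels in sorted(responses.items()):
        counts = Counter(labels)
        n = len(labels)
        summary[step] = {
            # Share of participants who chose the correct label at this step.
            "accuracy": counts[ground_truth] / n if n else 0.0,
            # Share of each label actually chosen (absent labels are omitted).
            "shares": {label: k / n for label, k in counts.items()},
        }
    return summary
```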

The heatmaps for the digitally manipulated image reveal a progressive improvement in participants’ visual behavior following the introduction of external tools and context. In the initial observation (Step 1), attention was broadly dispersed across the crowd, with no distinct focal point. Step 2 directed focus toward distorted areas within the image; nonetheless, gaze remained disorganized, as participants searched for indications of manipulation without a coherent visual strategy.
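The contrast between dispersed attention (Step 1) and focused attention (later steps) could also be quantified rather than judged visually. One option, assumed here and not taken from the study, is the normalized Shannon entropy of gaze samples over a coarse grid; the function name and cell size are illustrative:

```python
import math
from collections import Counter


def gaze_entropy(points, width, height, cell=50):
    """Hypothetical helper: normalized Shannon entropy of gaze over grid cells.

    Returns a value in [0, 1]: near 1 when gaze is spread evenly over the
    stimulus (no focal point), near 0 when it is concentrated in few cells.
    """
    cols = math.ceil(width / cell)
    rows = math.ceil(height / cell)
    n_cells = cols * rows
    counts = Counter()
    for x, y in points:
        # Clamp so samples on or slightly beyond the border land in edge cells.
        c = max(0, min(int(x // cell), cols - 1))
        r = max(0, min(int(y // cell), rows - 1))
        counts[(r, c)] += 1
    total = sum(counts.values())
    if total == 0 or n_cells < 2:
        return 0.0
    h = -sum((k / total) * math.log(k / total) for k in counts.values())
    return h / math.log(n_cells)
```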

The probability map (Step 3) markedly changed visual engagement, suggesting a more deliberate effort to identify tampering through the tool’s forensic indicators. In Step 4 (Reverse Image Search), focus shifted to comparative evaluation: participants visually examined the various versions of the image displayed in the search results, systematically comparing them to detect discrepancies, which signals a shift from internal evaluation to external visual validation. In Step 5 (AI probability assessment), considerable attention was placed on the commentary and associated images. The heatmaps indicate that participants read the crowd-sourced interpretations closely and analyzed alternative versions of the image (Figure 11 and Figure 12).
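Shifts of focus, such as the move toward the search results in Step 4, could likewise be expressed as the share of gaze samples falling inside predefined areas of interest (AOIs). A minimal sketch, assuming rectangular AOIs defined in screen pixels; the function name and any AOI names or coordinates used with it are hypothetical:

```python
def aoi_shares(points, aois):
    """Hypothetical helper: fraction of gaze samples per rectangular AOI.

    aois: dict name -> (left, top, right, bottom) in the same pixel coordinates
    as the gaze samples. Overlapping AOIs are resolved by dict order (first
    match wins); samples outside every AOI are counted under "other".
    """
    counts = {name: 0 for name in aois}
    counts["other"] = 0
    for x, y in points:
        for name, (left, top, right, bottom) in aois.items():
            if left <= x <= right and top <= y <= bottom:
                counts[name] += 1
                break
        else:
            counts["other"] += 1
    n = len(points)
    return {name: (k / n if n else 0.0) for name, k in counts.items()}
```

Computing these shares per step for the same AOIs gives a compact, comparable summary of how attention moved between the image itself, the tool output, and external results.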

Building on the previous analysis, the relationship between participants’ classifications and their justifications was investigated. A chi-square test of independence was applied to examine the relationship between two categorical variables: the image classification (Authentic, AI generated, Manipulated) and the justification reasons participants gave for that classification. The test was performed at each of the five steps of the evaluation process, with the primary goal of determining whether the distribution of justification reasons differed significantly by classification, that is, whether certain justification reasons were more likely to be associated with a particular classification (Authentic, AI generated, or Manipulated).
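For reference, a chi-square test of independence on a classification × justification contingency table can be sketched in plain Python. This is an illustrative implementation of Pearson's test without continuity correction, not the analysis code used in the study; `scipy.stats.chi2_contingency` provides an equivalent, well-tested routine (note that it applies Yates' correction by default when there is one degree of freedom). The p-value here uses a series expansion of the regularized incomplete gamma function, which is adequate for moderate statistics but loses precision for extremely small p-values.

```python
import math


def chi2_sf(x, df):
    """Chi-square survival function P(X >= x) for df degrees of freedom."""
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    # Series for the regularized lower incomplete gamma function P(a, z):
    # P(a, z) = z^a e^{-z} / Gamma(a) * sum_n z^n / (a (a+1) ... (a+n))
    term = total = 1.0 / a
    n = 1
    while term > total * 1e-15 and n < 10_000:
        term *= z / (a + n)
        total += term
        n += 1
    lower = total * math.exp(a * math.log(z) - z - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def chi2_independence(table):
    """Pearson chi-square test of independence for an r x c table of counts.

    Rows: image classifications; columns: justification reasons (illustrative).
    Returns (statistic, degrees_of_freedom, p_value); no continuity correction.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("drop all-zero rows/columns before testing")
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence: row total * column total / N.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, dof, chi2_sf(stat, dof)
```

Applied per step, the function would be called once for each of the five contingency tables. With small pilot samples, cells with expected counts below about 5 weaken the chi-square approximation, so Fisher's exact test or merging sparse justification categories may be worth considering.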

The results indicate significant associations in the earlier steps of the evaluation process (Steps 1, 2, and 3), where participants relied more on personal criteria, such as visual, technical, or intuitive assessments, to justify their classification decisions. This suggests that, at these stages, participants’ reasoning was heavily influenced by their individual interpretations and perceptions.

In contrast, the analysis revealed that Step 4 (Reverse Image Search) had a significant influence on participants’ decisions, as it provided external information that could either confirm or challenge their personal justifications. This external validation seemed to reduce reliance on personal criteria, thus leading to stronger correlations between image classification and justification reasons. However, in some cases, the introduction of external information led to conflicting or inconsistent justifications, indicating that participants’ reasoning became more diverse and complex when they were exposed to additional tools.

Finally, Step 5 (MAAM) showed a mixed effect. In some cases, the MAAM platform improved the accuracy of participants’ decisions by providing further validation, while in others it caused divergence and inconsistency in justifications. These findings suggest that, while the MAAM tool can aid decision-making, it may also introduce variability into participants’ assessments because of its complexity and its reliance on multiple factors.

Overall, the chi-square tests revealed that image classification was significantly associated with participants’ justification reasons, particularly in the earlier steps of the evaluation process (Table 6).
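Because a significant chi-square result indicates that an association exists but not how strong it is, an effect size such as Cramér's V could complement the per-step tests. A minimal sketch, assuming the same contingency-table layout (rows as classifications, columns as justification reasons); the function name is illustrative:

```python
import math


def cramers_v(table):
    """Cramér's V for an r x c table of counts: 0 = no association, 1 = perfect.

    Assumes no all-zero rows or columns and at least two rows and two columns.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Pearson chi-square contribution of this cell.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    k = min(len(row_totals), len(col_totals)) - 1
    return math.sqrt(chi2 / (n * k))
```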

The overall findings of this pilot study indicate that the introduction of color and probability maps significantly differentiated the responses, enhancing either certainty or confusion depending on the image and the participant’s profile. In the reverse image search step, participants were exposed to similar information, which aligned their responses and reduced differences in justification. The AI probability assessment tool produced either confidence or doubt, as reflected in statistically significant differences in justifications, showing that users did not interpret its results in the same way. The statistically significant associations in the early stages, and their subsequent change, confirm that the applied procedure captures the dynamic change in perception and evaluation. These remarks point to considerably more insightful analysis perspectives and outcomes than baseline studies without attention tracking [34], even at a smaller scale (i.e., with fewer participants and image samples). Most importantly, however, the proposed iMedius platform makes it possible to organize such complex surveys, combining attention-tracking mechanisms with multiple experimental steps to investigate the impact of each digital forensics tool or practice. With its randomization, duplication, sharing, and database-handling utilities, such forms can be extended with larger numbers of randomly selected images (as in [34]) to reduce content-related bias and can involve many more participants, increasing statistical reliability and supporting broader, more substantial generalizations.
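The randomization utility mentioned above can be illustrated with a reproducible per-participant draw from an image pool. The sketch below is an assumption about how such a draw might work, not the iMedius implementation; the function name, seeding scheme, and pool format are hypothetical:

```python
import random


def assign_images(participant_ids, image_pool, k, seed=0):
    """Hypothetical helper: reproducible random subset of k images per participant."""
    assignments = {}
    for pid in participant_ids:
        # A string seed combining the survey seed and participant ID yields a
        # deterministic, participant-specific random stream.
        rng = random.Random(f"{seed}:{pid}")
        # sample() draws without replacement and returns the images in random order.
        assignments[pid] = rng.sample(list(image_pool), k)
    return assignments
```

Seeding each participant's generator from a survey-level seed and the participant ID keeps assignments stable if a form is duplicated or re-rendered, while still varying the image set across participants.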

5.4. Discussion and Answers to the Stated Research Hypothesis and Questions

Having accomplished the main objective of the current work, namely the end-to-end design, configuration, and implementation of the iMedius integration, the extracted experimental and assessment data can be used to answer the stated hypothesis and research questions. The central assumption motivating this work is that the envisioned media monitoring environment, combining form-building management automation with flexible attention-tracking mechanisms and supplemented with further data monitoring and handling modalities, is not currently available, depriving researchers of valuable tools and resources (RH). Hence, there is considerable room to develop these perspectives, incorporating multiple functionalities into a modular architecture that is dynamically assembled through an iterative, interdisciplinary, participatory design and refinement process grounded in information systems design science research. Based on the formative evaluation outcomes and the feedback received during the Alpha and Beta testing procedures, the vast majority of participants, who also represent the target audience of multidisciplinary researchers, strongly agreed that the envisioned multimodal instrument is not currently available in social science research. Participants also remarked that the offered functionalities are highly useful for interdisciplinary research collaborations, especially in the sub-field of media studies. The high approval and perceived usefulness of the tool reported by the participants support the success of the project. These findings align with the literature review and the associated analysis phase conducted during project development, which revealed that competing applications neither offer the desired integration of tools and services nor feature the targeted flexibility, adaptability, and ease of use.

Expanding on the research questions, the developed iMedius framework supports the envisioned multidisciplinary instruments for real-time news impact monitoring by analyzing user behavior through technologies such as dynamic questionnaires and visual attention tracking (RQ1). Strong evidence in this direction is that the iMedius platform is operational: it was successfully used in a featured workshop at the iMEdD 2024 International Journalism Forum (receiving favorable comments and feedback on future directions), and it also supported the implementation of a pilot use-case scenario that exemplifies the potential of the iMedius toolset. Furthermore, both the iterative assessment and refinement sessions within the project team (formative evaluation and Alpha testing) and the semi-structured interviews (Beta testing) confirmed the proper use and applicability of the developed functionalities, which have been further demonstrated in a related publication [41].

Together, the previous two paragraphs also answer a significant part of the second question (RQ2), verifying that the released platform is open, usable, and convenient in real-world media studies scenarios, which supports its future use by the research and academic communities, as well as its incorporation into professional media processes. Indeed, the workshop and pilot scenario discussed above represent real-world cases encountered in media studies research, as well as in digital literacy and training practices within newsrooms and media agencies (e.g., training journalists and other personnel to use digital forensic tools and making them aware of common mistakes to avoid). Furthermore, compared to baseline studies on evaluating the photo-truth impact through image verification algorithms [34], which were conducted without such sophisticated tools, iMedius has been shown to introduce deeper analysis perspectives with more meaningful perception and interpretation results. This was, in fact, the main reason for incorporating the use-case scenario into the current work: to test the capabilities and limitations of the iMedius framework and to highlight new, impactful, and more sophisticated visualization and analysis methods. Based on the feedback received from both the team members (especially those from the social sciences) and all involved participants (i.e., in the iMEdD workshop, the Beta testing, and the pilot use case), the tools are suitable for a broader range of multidisciplinary research, from cognitive and behavioral studies to public communication, marketing, digital literacy, and more.