1. Introduction—COVID-19 and Social Distancing

According to O’Steen and Perry [1], “a ‘disaster’ provides a form of societal shock which disrupts habitual, institutional patterns of behavior”. Given the abruptness of the COVID-19 outbreak, Bonaccorsi et al. [2] suggest that COVID-19 could produce effects similar to those of a large-scale disaster. The abruptness of the outbreak, the need for social distancing, and the move to remote working and learning are challenging teachers and researchers to find new or innovative methods to continue teaching and conducting research. This is especially true for social scientists, whose work frequently relies on and examines social interactions.

While research is still being done on the impacts of COVID-19 on the education system, it is evident that COVID-19 altered the normal functioning of school systems at all levels [3]. Prevented from meeting in person for classes, meetings, and other activities, faculty, staff and students found themselves in a new world order in which they had to adjust to new public health guidelines [4]. Higher education, specifically, faced many challenges: classes were disrupted, research was paused, and timelines were altered. Wigginton et al. describe a world in which academic institutions, especially in regions where community transmission was severe (e.g., United States, Europe and China), had to abruptly halt all ‘nonessential’ on-site research activities (both in-lab and field-based research) [4]. Still, the greatest impact will most likely be seen among early-career scientists (including master’s and PhD students) [4]. Wigginton et al. report that approximately 80% of on-site research was impacted at their affiliated institutions and that there will be long-term economic ramifications as a result [4]. In the United States in 2018, higher education institutions accounted for approximately 13 percent of national spending on research and development and approximately 50 percent of national spending on basic research [4]. Altogether, COVID-19 emphasized the need for scenario planning and disaster preparedness in all sectors of society, including the research community, so that learning could still occur [4,5].

For social scientists, much research requires human interaction, which is restricted or banned in the COVID-19 era. Engaging stakeholders in environmental decision making is essential for gaining a better understanding of people’s perceptions and for designing solutions that can be implemented to address the issue under consideration [6]. For example, the evaluation of social learning via stakeholder engagement workshops is threatened during these times because effective engagement requires the interplay of participants through dialogue and transparency [7]. According to Reed et al., social learning is a process in which societal change in social-ecological systems occurs when people have the opportunity to exchange thoughts, ideas and values with their peers while also learning from others additional ways to perceive these systems [8]. The elicitation of shared values, specifically, is most effective via social interaction, open dialogue and social learning [9].

The future functioning of sustainable, long-term businesses requires evaluating social learning through stakeholder engagement in order to understand and address the interests of employees, customers, suppliers and the community at large [7]. However, COVID-19 has changed how these interactions can happen for the indefinite future. The University of North Carolina provided examples of engagement modes that could assist researchers working with stakeholders during these times [10]. Examples include phone, email, postal mail, online file sharing, social media, and virtual meetings [10].

The impacts of COVID-19 can be seen in all sectors; however, the continuation of successful research and business practices is essential to moving the world forward. Given the uncertainty about how long we will need to remain socially distant, it could take a significant amount of time before research institutions reach a new normal [4]. Additionally, given the duration of remote working and the capabilities of today’s technology, it is an open question whether, and how, working practices will change back after COVID-19. Remote working and social distancing present challenges for data generation, career advancement, and global research overall, unless we learn to adapt [4]. While the new work-from-home modality places limitations on research, Shelley-Egan acknowledges that this is also an opportunity for the research community to “imagine, and actively design, a future academic modus operandi characterized by a more sustainable, equitable and societally relevant research system” [11]. In other words, research (both conducting it and participating in it) should not be constrained by geography [11].

This paper showcases how social science research, specifically stakeholder engagement using the Deliberative Multicriteria Evaluation (DMCE) method, can still take place in a socially distanced society. To the best of our knowledge, the DMCE methodology has previously been employed only in in-person workshops. Thus, this paper illustrates how the DMCE methodology can be adapted and moved completely online when this is forced by external factors such as a pandemic. As Shelley-Egan emphasizes, “the ontology of what it is to be a researcher and the temporalities, role identities, methodologies and epistemologies tied up with that will, most likely, adopt a different hue in—and beyond—this crisis” [11]. This paper is meant to highlight the resources that are available to make this transition smoothly and to encourage a new way of thinking when planning for stakeholder engagement.

3. Revisiting Our Methodology in a COVID-19 Era

Because large groups could not gather, we had two options for implementing this research: (1) wait until large group gatherings were allowed again or (2) modify the methodology and move the workshops online. Given the uncertainty of the times, we opted to explore the second option.

To determine whether the second option was viable given the resources available, we addressed each of the following dimensions: technology, modifications (to methodology, agenda and IRB), and scheduling.

Technology: To acquire the appropriate data for this research, participants needed to be able to interact and ‘move’ the scenario cards based on their preferences. Additionally, we needed to be able to record the workshops. Thus, ease of use, security and cost were the top priorities when determining which platforms to use. Zoom, Microsoft Teams, GoogleDrive and Google Hangouts, Webex, StarLeaf, GoToMeeting, iTricks and Digital Hives were platforms suggested by colleagues, with Zoom being the most commonly used and an inexpensive option. In combination with Zoom, GoogleDrive would allow us to provide participants with links to individual and group files that they could manipulate in real time when necessary. A folder was created in GoogleDrive for each participant so that their data could be stored, and only participants and hosts had access to it.

Modifications to Methodology: Fortunately, through the use of Zoom and GoogleDrive, the methodology did not need to be modified drastically. We were still able to evaluate individual and group preferences using the scenario cards, and group discussions could still take place. The tactile (‘moving’ printed versions of the cards) and in-person components were affected the most, but these were compensated for through the use of technology and a moderator.

Modifications to Agenda: Originally, the in-person workshop was scheduled for 5 h (plus travel time by participants). However, given other work obligations and childcare, participants expressed their concern about being able to participate for the entirety of the pre-planned agenda (even virtually).

While there are many benefits to the new work-from-home lifestyle that COVID-19 enforced (decreased commute time, flexible schedules, productivity gains, etc.) [19], we still had to be mindful of the negative aspects that reduced participants’ availability from all day to just a few hours as we moved these workshops online. Research has shown that COVID-19 created additional challenges for parents, especially those with younger children who were unable to go to school or childcare and could not be left unsupervised [20,21]. These challenges affected parents’ ability to work from home undisturbed [19]. Many co-parents also had to make decisions about who could work when and who would take care of the children throughout the day [20,21]. In email correspondence, one stakeholder acknowledged that her participation was now limited to half a day because she and her husband were both working and sharing childcare responsibilities (C. Tobin, personal communication, 3 April 2020).

A 2018 study found that American adults logged approximately 11 h of screen time per day [22]. Sanudo et al. found that smartphone use increased by ~2 h per day during the lockdown [23]. Another study found that adults who spend more than six hours per day in front of screens are more likely to suffer from moderate to severe depression [22]. Research has also documented the effect of virtual meetings, which lack the face-to-face contact that often reinvigorates attention, on people’s level of tiredness [11]. Given that our workshops took place at the beginning of the pandemic, participants may not yet have been as fatigued by screen time.

In addition to childcare challenges and increased screen time, COVID-19 also promoted a more sedentary lifestyle, which can have detrimental effects on physical and mental health [19,23,24,25]. Recent research found that the percentage of U.S. adults sitting more than 8 h per day increased by 24 percentage points, from 16% before confinement to 40% during confinement [23]. These factors (childcare, a more sedentary lifestyle, and increased screen time) could have discouraged stakeholders from wanting to participate in this type of virtual workshop for a full day. Aristovnik et al. acknowledge that the success of the new work-from-home environment cannot depend solely on ease of technology; other challenges include lack of motivation and the need for improved self-discipline [26]. While the authors were specifically referring to students’ study habits at home, those same challenges could affect non-students as well [26].
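The sedentary-time figures quoted above (16% before confinement, 40% during) can be read either as an absolute change in percentage points or as a relative change. The following is a minimal sketch of that arithmetic; the function names are hypothetical and are introduced here only for illustration.

```python
def percentage_point_change(before_pct: float, after_pct: float) -> float:
    """Absolute change between two percentages, in percentage points."""
    return after_pct - before_pct


def relative_change_pct(before_pct: float, after_pct: float) -> float:
    """Change relative to the starting value, expressed as a percent."""
    return (after_pct - before_pct) / before_pct * 100.0


# Figures quoted in the text: share of adults sitting more than 8 h per day.
before, after = 16.0, 40.0

pp = percentage_point_change(before, after)
rel = relative_change_pct(before, after)
print(f"{pp:.0f} percentage points, {rel:.0f}% relative increase")
```

Under these figures the difference is 24 percentage points, which corresponds to a relative increase of 150% over the pre-confinement share.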

Shelley-Egan discusses how COVID-19 has disproved the need for academics (and other professionals) to be physically present to conduct work [11]. Professionals now have access to a plethora of online webinars and conferences that they otherwise may not have been able to attend in person [11]. Thus, in combination with the factors discussed above, online events are all competing with one another for people’s time. Since the data for this research depended on full participation, we had to adjust accordingly.

To shorten the agenda without losing the time needed for group deliberation, we asked experts to pre-record presentations that would provide context for what we would discuss during the workshop. In doing so, we divided the workshop into three phases:

Phase I occurred prior to the participation date. Participants were expected to watch the pre-recorded presentations from the experts;

Phase II was the actual workshop on a given participation date; and

Phase III occurred after the participation date. Participants were expected to complete a post-survey within one week.

Modifications to IRB: The Institutional Review Board (IRB) at UMB had reviewed and approved our research proposal in early winter 2020. At that time, the workshops were planned to be held in person (over 2 days) with 3 deliberative groups per day, and the proposal did not include content pertaining to the use of Zoom and GoogleDrive. Thus, we needed to submit a modification to our research proposal that would allow us to conduct the research in this new manner. The modifications included changing the workshops to a web-based format using Zoom and GoogleDrive, increasing the number of workshop days from 2 to 3, and decreasing the number of deliberative groups per day from 3 to 1. Although the average IRB review time during COVID-19 was 3 weeks, the IRB reviewed and approved our modification in one day. Had the IRB not reviewed it so quickly, we would have had to move the workshops further into the summer.

Scheduling of workshops: The in-person workshops were planned for the end of April 2020. On 20 March 2020, UMB suspended all in-person meetings, especially those involving large groups. While participants had already agreed to the in-person dates, we knew that it would take time to move the workshops completely online. We therefore chose to push the dates back to the end of May 2020.

Scheduling for participants: Immediately upon learning about the impacts of COVID-19 on in-person stakeholder research, we contacted the participants and notified them that the workshops were postponed and that we were exploring ways to host them virtually. Once we had identified new dates in late May, we confirmed their willingness and ability to participate, with the caveat that we were still modifying the agenda and determining which technology platforms to use. Details were shared with participants as they were approved (IRB) and confirmed (technology platforms). It was essential to share the technology platforms ahead of time so that we could identify participants who were unfamiliar with them, unable to use them given their at-home workstations, or unable to use them given affiliation rules. One participant was unable to participate because of an agency-wide mandate restricting the use of Zoom.

4. Discussion

4.1. Opportunities in Virtual Stakeholder Engagement

Overall, hosting the stakeholder meetings virtually using Zoom and GoogleDrive was successful. After participating in the workshop, stakeholders had the opportunity to complete an evaluation form to let us know about their experience. Responses included:

“I appreciate how difficult this was over Zoom, how difficult surveys are in general and how difficult trying to get consensus on the topic of microplastic can be. I found the discussion interesting and informative for our own work communicating about this problem. I look forward to seeing the results!”

“Getting to consensus with a group of wide-ranging stakeholder views is challenging. A well-executed virtual workshop. Thanks.”

“The facilitators and format was excellent, and the zoom and google docs worked really well. This is a hard kind of stakeholder prioritization because it is kind of theoretical or hypothetical and not so real life. But this is the kind of information you need to better understand stakeholder values.”

“Very impressed at the effort it took to organize this and provide such clear direction for us.”

“Good learning experience and networking opportunity.”

Conducting in-person social science research can be costly in terms of funding and time. Expenses include room reservations (multiple if breakout groups are necessary), mileage reimbursement for participants, hotel reservations for experts, video-recording equipment, food, and supplies. Given the limited funding in the social sciences, these factors can be a major deterrent to conducting stakeholder workshops. Additionally, Shelley-Egan emphasizes that there is a suite of technological alternatives already in existence [11]. However, most of those alternatives were designed to be used in conjunction with face-to-face meetings [11]. Thus, a challenge for this research was to utilize platforms that would eliminate the need for in-person interactions.

We chose technology platforms that were not only familiar to participants but also low in cost to us (GoogleDrive services were free, and an upgraded Zoom account was already provided to professors by the University). There were no costs associated with on-boarding participants to these platforms, since Zoom is free and GoogleDrive allows the host to send materials for review and manipulation by non-Gmail users. There were also no travel expenses for hosts, experts, or participants. Funding often constrains the number of people who can participate, given the associated costs per person. With this virtual workshop, we were able to invite as many stakeholders as appropriate. Additionally, over the three days, only 10 percent of recruited participants did not show up, whereas 30 percent of participants recruited for previous work did not show up [16]. Altogether, using virtual platforms to conduct social science research removes the constraints detailed above and may offer a direction for future social science research.

We acknowledge that the success of this methodology is constrained by internet connectivity and quality and by users’ comfort with technology and remote meetings. Belzunegui-Eraso and Erro-Garces emphasize the important role technology plays in the successful development of telework [27]. According to Belzunegui-Eraso and Erro-Garces, the ideal teleworker is a “Millenial woman holding a higher education degree, with 4–10 years of professional experience, and working from home two days a week in the management and administration field” whereas the least ideal person is a “man of the baby boomers’ generation, holding a university degree, with 20 years or more of professional experience, and who started working remotely only during quarantine” [27]. However, human-centric research does not always allow for the ideal teleworker. Thus, technological literacy is an important indicator of successful research during these COVID-19 times; future work should consider the socioeconomic status and geographic location of participants to ensure that the data are not affected by technological challenges. For our research, we assumed that the participants were technologically literate, given their professions and backgrounds, but we also provided an opportunity prior to the workshop to confirm this and to adjust to their level of technological literacy as needed.

Participants were required to view presentations ahead of their participation date. The presentations came from experts in the field of microplastics and oysters, along with one from the host explaining the general agenda of the workshop and the indicators that participants would evaluate on the day. Typically, these presentations would have been given at the beginning of the workshops; however, in the initial discussions about changing the format from in-person to online, participants expressed that their availability had become limited, mainly because of other work obligations and childcare. Taking that into account, we shortened the workshop from 5 h to 3 h and moved certain agenda items (e.g., expert presentations) to before the workshop to ensure that participants had enough time for group deliberation. The virtual format may also have encouraged more people to participate, since they did not have to travel to Boston (as was the plan during initial outreach). As of March 2020, 20 stakeholders had agreed to participate in the in-person workshop (30% from the Academic sector, 30% from Government, 25% from NGO, and 15% from Industry). At the completion of the virtual workshops, 30 stakeholders had participated (21% from the Academic sector, 41% from Government, 24% from NGO, and 14% from Industry). This increase could be attributed to increased outreach and to the virtual nature of the workshops. However, given childcare constraints, as discussed in the previous section, some may still have been discouraged from participating.

The change from in-person to virtual was communicated via email through the description of the three participation phases:

Phase I took place before the participation date. Participants were asked to watch four presentations (totaling approximately 40 min). One provided context for the workshop activities and the other three were from experts in the field of shellfish and microplastics. The content of these presentations provided the necessary background information for the workshop.

Phase II took place on the respective participation date. Participants needed two weblinks: one for Google Slides and one for Zoom.

Phase III took place 1 week after the workshop. Participants were asked to complete an activity similar to what they did on the day (taking no longer than 10–15 min). Participants completed this survey via their individual Google Slides link.

The expert presentations were uploaded to a shared GoogleDrive folder and shared with participants 5 days prior to their participation date. One participant said “I liked participating, it was very interesting and the format was fine, nice job! I am happy to have been part of this. I liked being able to listen to the information ahead of time and to hear a range of comments from such a group of stakeholders…” When asked whether they were able to successfully view the presentations, 83 percent of participants indicated ‘yes,’ 10 percent indicated ‘partially’ and 7 percent indicated ‘no’ (Figure 4A). All participants found the instructional material helpful, either in totality (67 percent) or partially (33 percent) (Figure 4B). Additionally, participants were shown the key points of each expert’s pre-workshop presentation at the start of Phase II and were offered the opportunity to ask questions and/or clarify points made by the experts at that time. For future work, one participant suggested that the videos be placed on a secure YouTube channel rather than GoogleDrive. Furthermore, click rates could be monitored to confirm that the videos were actually viewed ahead of the workshops, and a question could be added to the post-workshop evaluation form asking participants whether they viewed the material undistracted or while doing other work.
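The timing of the three participation phases described above (materials shared 5 days before the participation date, a follow-up activity 1 week after) can be expressed as simple date arithmetic. The sketch below is illustrative only: the names `PhaseSchedule` and `build_schedule` are hypothetical, and the example date is an assumed placeholder rather than an actual workshop date.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Offsets taken from the text; the constant names are hypothetical.
MATERIALS_LEAD_DAYS = 5    # Phase I: presentations shared 5 days before
FOLLOW_UP_DELAY_DAYS = 7   # Phase III: follow-up activity 1 week after


@dataclass(frozen=True)
class PhaseSchedule:
    materials_shared: date  # Phase I starts when materials are shared
    workshop: date          # Phase II: the participation date itself
    follow_up: date         # Phase III: post-workshop activity


def build_schedule(workshop_day: date) -> PhaseSchedule:
    """Derive the Phase I and Phase III dates from a participation date."""
    return PhaseSchedule(
        materials_shared=workshop_day - timedelta(days=MATERIALS_LEAD_DAYS),
        workshop=workshop_day,
        follow_up=workshop_day + timedelta(days=FOLLOW_UP_DELAY_DAYS),
    )


# Assumed example date for illustration; not taken from the study.
example = build_schedule(date(2020, 5, 27))
print(example.materials_shared, example.workshop, example.follow_up)
```

A helper of this kind could be run once per participation date to generate the reminder dates sent to each group.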

When these workshops are video-recorded in person, it is evident to participants that a camera is recording their conversations and movements. This may be a hindrance for ‘camera shy’ individuals. With the new virtual format, participants were read a consent form pertaining to the use of video-recording at the start of the workshop. The camera was not as apparent throughout the virtual workshop as it would have been in person, which may have allowed those individuals to participate more comfortably.

We also decided to keep the daily groups smaller than they would have been in person. Acknowledging that free-flowing conversation is not as easy in a virtual workshop, we wanted to make sure that all voices could be heard. When needed, the facilitator called on participants to help move the conversation forward. One participant mentioned that they “appreciated the moderator calling on people to speak up. Often one person dominates the conversations, especially during remote meetings… Getting more people to speak can only improve the discussion…”. Even with the virtual format, however, participants were generally satisfied with the outcome of the group deliberation (Figure 4C).

4.2. Challenges in Virtual Stakeholder Engagement

At the crux of stakeholder engagement, and specifically the DMCE methodology, is the ability to interact with fellow participants. This interaction is important for both the researchers and the participants. For the researchers, the qualitative data (e.g., the discussions between participants) are just as important as, if not more important than, the quantitative data (e.g., the ranking values given to the scenarios). We wanted to understand the reasoning behind choices (why did participants’ preferences change during the group deliberation when compared to their pre-deliberation preferences?). For the participants, being able to learn from other participants during the group deliberation is essential to the learning process that takes place while implementing the DMCE methodology. While Zoom was a decent substitute for in-person interaction (one participant said “We are all becoming more comfortable and competent with Zoom. It will be a most valuable tool in the future.”), not all participants were able to use Zoom because of work-related mandates (one participant, who had initially agreed to participate, had to cancel because of the use of Zoom). Since participant and data security is integral to working with human subjects, using platforms that provide such security is essential. This experience helps us identify how the technological tools we used in this process need to be improved.

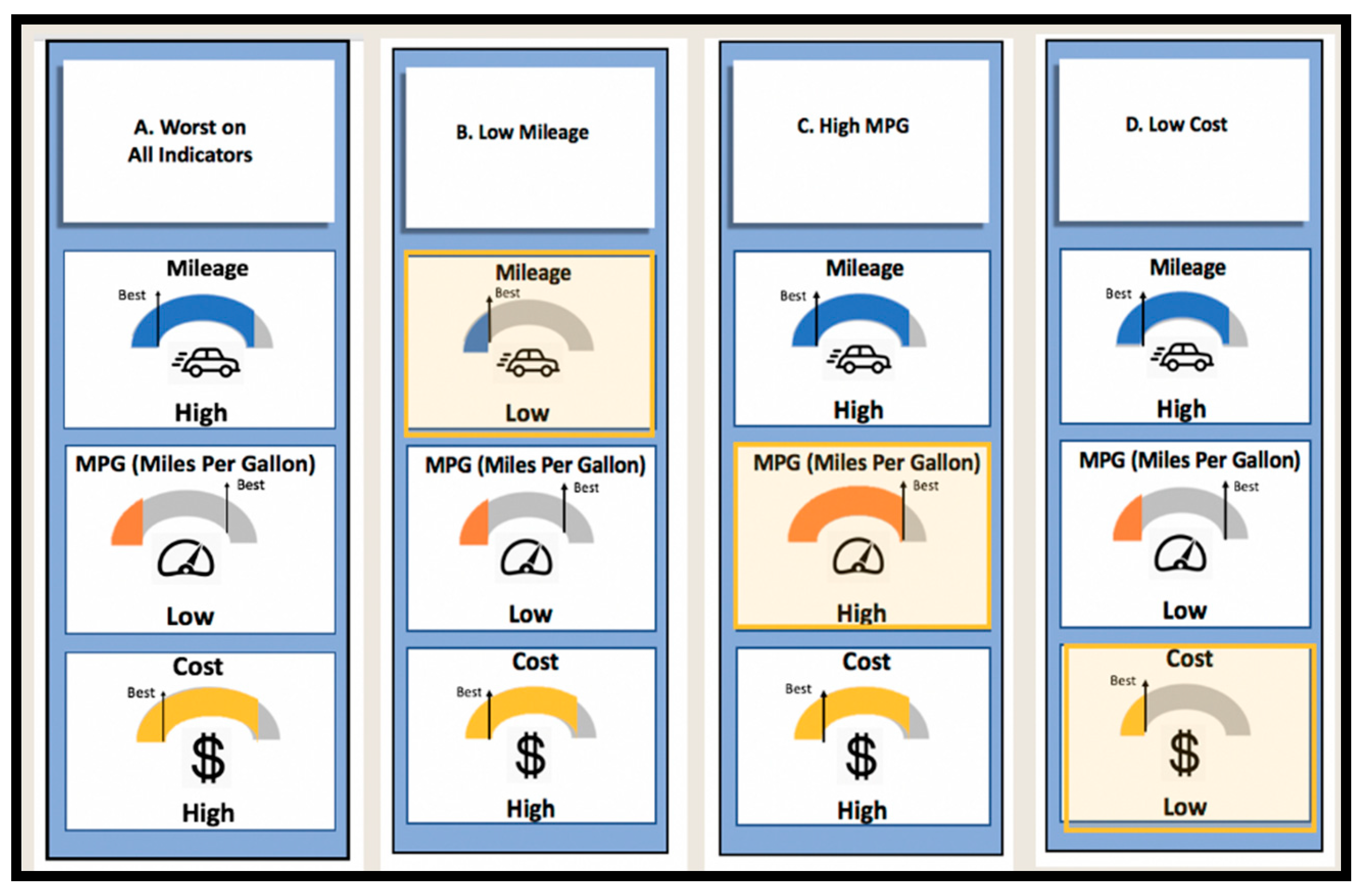

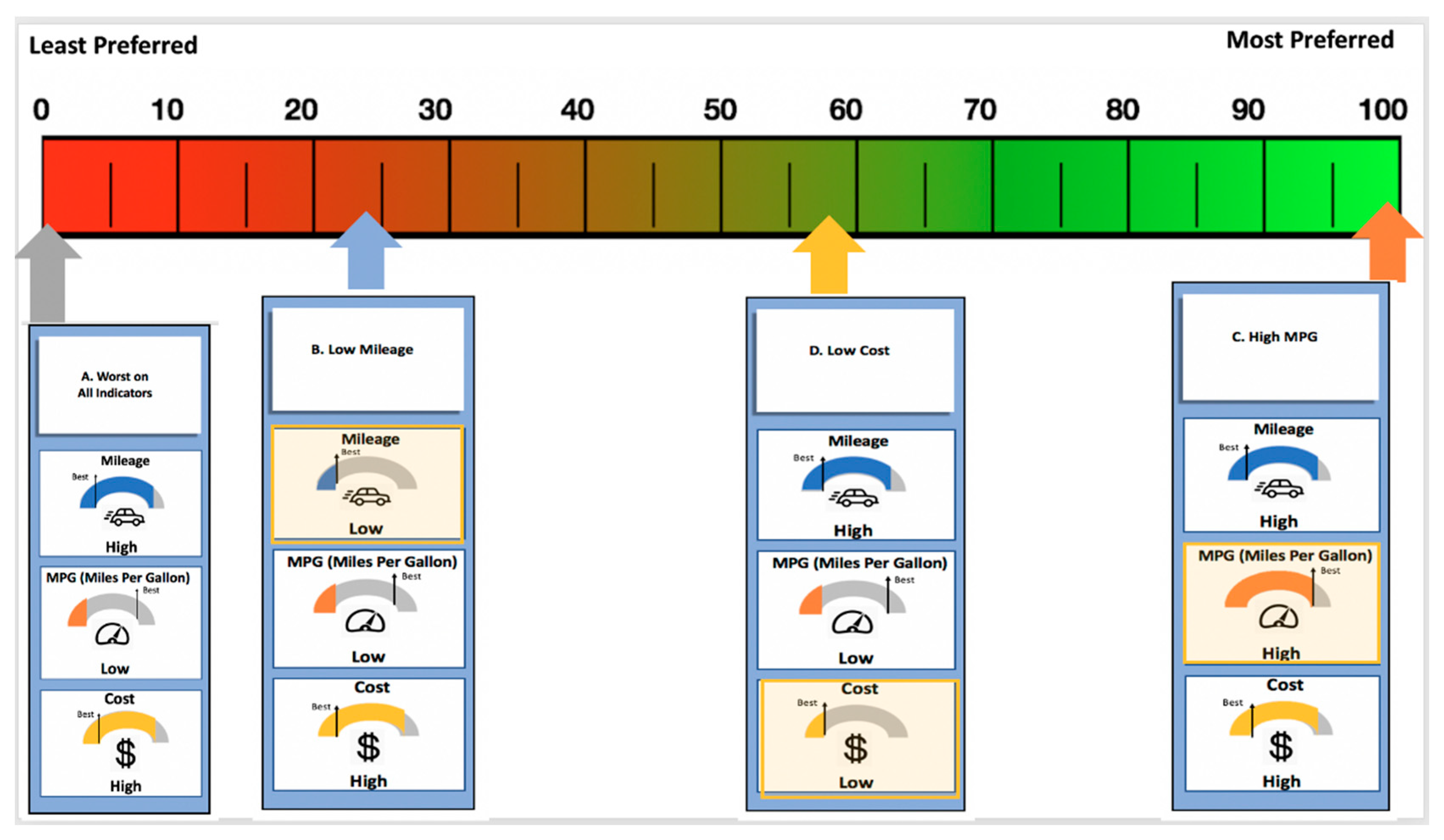

The lack of in-person interaction was one of the major challenges of this research. Previous work using this methodology emphasized the need for in-person interaction [16]. Typically, participants would have been provided with a printout of the scenario cards, in addition to the scale bar, so that individuals could move the cards based on their preferences in real time and others could evaluate and suggest changes, thus prompting conversation among participants. In hopes of providing some of that tactile learning, we provided images of the cards on a Google Slide (to which all participants had access) so that they could move the cards in real time. What we found, however, is that most participants did not use that function during the group deliberation section of the workshop, and thus we required a graduate student to help move the cards based on the discussion.

One of the unique aspects of this methodology is the ability to evaluate participant preferences before, during, and after knowledge acquisition in order to see whether preferences change or remain stable as participants gain knowledge. Typically, all of the information would have been provided on the day so that we could record initial preferences in real time, rather than allowing participants to contemplate the information for days beforehand. However, as mentioned above, we had to adapt to current times by shortening the workshop and providing materials ahead of time. Given that materials were provided 5 days in advance, participants had the opportunity to reflect on the content, specifically the indicators, before completing the initial, individual survey on the day. For future work, participants could be asked to complete the initial, individual survey when they receive the materials in advance.

Providing opportunities for participants to gain knowledge throughout the workshop is integral to successfully implementing this methodology. Knowledge building can happen during structured expert presentations, conversations with other participants, and candid conversations with experts throughout the day. For the latter, participants are told that experts are available throughout the workshop to clarify any points or answer questions. While experts were present for the entirety of each virtual workshop, participants often forgot that they were there, since the experts are not meant to interject unless called upon. This may have led to the discrepancies in how influential the scientists (‘experts’) were on the outcome of the group deliberation (Figure 4D).