Abstract

This study examined the level of agreement (Pearson product-moment correlation [rP]), within- and between-day reliability (intraclass correlation coefficient [ICC]), and minimal detectable change of the Musclelab™ Laser Speed (MLS) device for sprint times and force–velocity–power profiles in Division II collegiate athletes. Twenty-two athletes (soccer = 17, basketball = 2, volleyball = 3; 20.1 ± 1.5 y; 1.71 ± 0.11 m; 70.7 ± 12.5 kg) performed three 30-m sprints on two separate occasions seven days apart. Six split times (5, 10, 15, 20, 25, and 30 m), horizontal force (HZT F0; N·kg−1), peak velocity (VMAX; m·s−1), horizontal power (HZT P0; W·kg−1), and force–velocity slope (SFV; N·s·m−1·kg−1) were measured. Sprint data from the MLS were compared with those from the previously validated MySprint (MySp) app to assess the level of agreement. The MLS showed good to excellent reliability for within- and between-day trials (ICC = 0.69–0.98 and 0.77–0.98, respectively). Despite a low level of agreement for HZT F0 (rP = 0.44), the MLS showed moderate to excellent agreement across the other nine variables (rP = 0.68–0.98). Bland–Altman plots displayed significant proportional bias for VMAX (mean difference = 0.31 m·s−1, MLS < MySp). Overall, the MLS agrees with the MySp app and is a reliable device for assessing sprint times, VMAX, HZT P0, and SFV. Proportional bias should be considered for VMAX when comparing the MLS with the MySp app.

1. Introduction

One focus of sprint research is the interpretation of the force–velocity–power (FVP) profile to guide individualized program development [1]. The common FVP profile consists of the theoretical maximal horizontal force output per body mass (HZT F0; N·kg−1), theoretical maximal running velocity (VMAX; m·s−1), maximal mechanical horizontal power output per body mass (HZT P0; W·kg−1), the slope of the force–velocity curve (SFV), and the rate of decrease in the ratio of force with increasing velocity (DRF). Customizing training to the athlete’s specific FVP profile may allow coaches to develop optimal individual training interventions [1,2,3]. The benefits of FVP profiling include addressing variability in playing level [4,5], sport position [6], and age [7], as well as the ability to account for external variables, such as time of season [8]. Team sports, such as rugby [3,9], soccer [4,7], ice hockey [10], and netball [11], as well as individual athletic activities, such as ballet [12] and gymnastics [13], have used this approach.

An important concern with FVP profiling is the ability to obtain reliable data for within- and between-day testing. Inconsistencies have been reported for jumping [14] and short (<10 m) sprinting performance [15], raising concern about the efficacy of FVP profiling [16]. Therefore, the testing approach and its measurement accuracy are as important as the FVP profile itself. Technology for monitoring FVP continues to become more efficient, allowing coaches to assess and evaluate their players more quickly and effectively [17]. Currently, coaches and sports scientists have access to a variety of kinematic monitoring devices for sprinting performance, including high-speed cameras [18,19], iPhone apps [20], radar guns (e.g., Stalker II) [15], tether motor devices (e.g., 1080 Sprint) [21], inertial measurement units (IMUs) [22], global positioning systems (GPS; e.g., STATSports VIPER) [23,24], and laser displacement measuring devices [25,26,27,28,29,30,31]. Although high-speed cameras and radar technology have been shown to be valid for sprinting analysis, these systems are typically expensive and time-consuming, requiring further analysis beyond the raw data captured. Contemporary systems, such as laser displacement measuring devices, provide immediate feedback and are easier to use during regular training sessions [21].

Studies on laser displacement measuring devices report intraclass correlation coefficients (ICCs) ranging from moderate to good (0.64–0.83) [25] and good to excellent (ICC > 0.77) [27]. Recently, Musclelab developed a commercially available device, the Musclelab Laser Speed (MLS), as a feasible means of assessing the FVP profile during sprinting. Van den Tillaar et al. [32] examined the validity of the MLS combined with an IMU (MLS + IMU) against force plates for step length, step velocity, and step frequency in trained sprinters. The authors tested 14 trained sprinters over a 50-m sprint, using the MLS to record continuous distance over time, and reported significant positive correlations between the MLS + IMU and force plates for step velocity (r = 0.69) and step frequency (r = 0.53). In addition to kinematic data, the MLS calculates several FVP profile variables, which have not yet been studied. Therefore, the first objective of this study is to examine the level of agreement between the MLS and a previously validated field device, the MySprint (MySp) app [20], for FVP profile variables and split times during maximal 30-m sprints in a sample of Division II collegiate athletes. Equivalence testing [33], such as the comparison of the MLS and the MySp app, can provide coaches with the information needed to compare instruments. The same rationale, comparing commercial devices during the same exercise, has been applied to other field devices, such as linear transducers [34,35]. Such comparisons give coaches and practitioners a better understanding of which devices are practical for guiding programming and training [34]. The results of this study can be used to help monitor training and provide direct feedback to athletes.

The second objective is to report minimal detectable change (MDC) data for the MLS. MDC data are critical for practitioners to confidently distinguish actual change from typical within- and between-day variability [36]. Few studies have reported MDC for the FVP profile [9,36,37,38], and established MDC data are lacking, particularly for laser technology. Given that sport lasers have shown excellent validity and reliability, we hypothesize that the MLS will show a good level of agreement with the validated MySp app [20] and a high degree of reliability for FVP profiling.

2. Materials and Methods

2.1. Experimental Design

A repeated measures design was used to assess within- and between-session reliability for sprint times at six splits (5, 10, 15, 20, 25, 30 m) and the FVP profile, which consisted of HZT F0, VMAX, HZT P0 and SFV. The SFV for both devices was calculated using a published spreadsheet by Morin and Samozino [39]. The dependent variables were the six splits and the FVP profile. The independent variables were the number of sprint trials and testing days. The protocol consisted of each athlete performing three sprints on two occasions separated by seven days. To control for testing conditions, participants performed all sessions on the same day of the week, at the same time of day (~9 am), and on an indoor gymnasium floor to avoid the influence of weather. Participants were tested at the beginning of the week to ensure that they did not perform any team-organized strength training sessions 24 h prior to testing. Participants wore the same shoes to control for shoe–surface interface and were instructed not to perform any high-intensity training sessions 24 h prior to testing. All participants were compliant with the experimental guidelines.

2.2. Participants

Twenty-two Division II university athletes (20.1 ± 1.5 y; 1.71 ± 0.11 m; 70.7 ± 12.5 kg) volunteered to participate in this study. The sample included 16 females and 6 males (13 female soccer, 4 male soccer, 2 male basketball, and 3 female volleyball players). An a priori power analysis was performed using G*Power 3.1 software [40] for a one-tailed Pearson product-moment correlation between the two devices (MLS vs. MySp), with α = 0.05, ρ(H1) = 0.81, and ρ(H0) = 0.50; a sample size of 22 participants produced a power of 0.81. A post hoc power analysis, using a sample size of 22 participants and a Pearson product-moment correlation of 0.87, indicated an achieved power of 0.95.
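As a rough cross-check of the a priori calculation, the power of a one-tailed test of H0: ρ = 0.50 against H1: ρ = 0.81 can be approximated with the Fisher z transformation. The sketch below is an approximation under stated assumptions (normal approximation to Fisher's z with standard error 1/√(n − 3)), not G*Power's exact routine, so its results may differ slightly from the values reported above; the function name `correlation_power` is hypothetical.

```python
import math
from statistics import NormalDist


def correlation_power(rho_h1, rho_h0, n, alpha=0.05):
    """Approximate one-tailed power for H0: rho = rho_h0 vs H1: rho = rho_h1.

    Uses the Fisher z transformation, atanh(r), whose sampling SD is
    approximately 1 / sqrt(n - 3). This is a normal approximation,
    not G*Power's exact procedure.
    """
    std_normal = NormalDist()
    effect = math.atanh(rho_h1) - math.atanh(rho_h0)  # distance on the z scale
    se = 1.0 / math.sqrt(n - 3)
    z_crit = std_normal.inv_cdf(1.0 - alpha)          # one-tailed critical value
    return std_normal.cdf(effect / se - z_crit)


if __name__ == "__main__":
    # A priori inputs from the text: rho(H1) = 0.81, rho(H0) = 0.50, n = 22.
    print(round(correlation_power(0.81, 0.50, 22), 2))
```

For the a priori inputs, the approximation gives a power of roughly 0.81, in line with the G*Power result.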

Inclusion criteria were (1) 18 to 24 years old, (2) active members of their respective sports teams, and (3) free of any physical limitations, defined as having no lower or upper body musculoskeletal injuries that affected maximum sprinting ability. Exclusion criterion included having a musculoskeletal upper or lower body injury that affected the sprinting exercise. Before enrollment, all participants completed the Physical Activity Readiness Questionnaire and a medical history questionnaire.

2.3. Procedures

Participants reported to the research facility on two days for sprint testing, with visits separated by seven days. Prior to maximal sprint testing, participants were weighed on a digital scale (Taylor Precision Products, Oak Brook, IL, USA) in full clothing and shoes, and their body mass in kilograms was entered into the MLS and MySp app. Next, participants performed a standardized 15-min warm-up consisting of 5 min of jogging, 5 min of lower limb dynamic stretching, and 5 min of progressive 30-m sprints at 50%, 70%, and 90% effort. After the warm-up, participants performed three 30-m sprints at maximal effort, with 5 min of rest between sprints. The 30-m sprint distance has been used in previous research [15,27,36] and is the distance setting of the MySp app. All participants started from a two-point stance, with no false step, and were instructed to start the sprint whenever they were ready. All participants were familiar with sprint testing, and all warm-ups and sprinting sessions were supervised by a certified strength and conditioning specialist (CSCS-NSCA). Each sprint was assessed simultaneously by the MLS and the MySp app.

2.4. Musclelab Laser Speed

Measurements of instantaneous split times and FVP data were recorded using the MLS (Musclelab™ 6000 ML6LDU02 Laser Speed device, Ergotest Innovations, Stathelle, Norway), sampling at 2.5 kHz (Figure 1). Raw data were analyzed using the Musclelab software (version 10.213.98.5188), which measures continuous velocity ($v_h(t)$) and distance to create an individual FVP profile. A mathematical model is fitted to the recorded velocity/time data by calculating the time constant, tau ($\tau$). Thus, the velocity/time curve can be retrieved from the model as

$$v_h(t) = V_{MAX}\left(1 - e^{-t/\tau}\right),$$

where $V_{MAX}$ is the observed maximal velocity. A mathematical derivation allows calculation of horizontal acceleration/time:

$$a_h(t) = \frac{V_{MAX}}{\tau}\, e^{-t/\tau}.$$

Figure 1.

Musclelab™ Laser Speed with tripod.

The horizontal force/time can then be calculated:

$$F_h(t) = m\,a_h(t) + F_{air}(t),$$

where $m$ is body mass and $F_{air}$ is the force caused by wind drag. $F_h(t)$ is then expressed as a function of $v_h(t)$, and a linear fit is applied. This gives the form $F_h = A\,v_h + B$, also known as the F/V profile, where $A$ (slope) and $B$ (intercept) are the constants of the linear fit. The MLS is designed to operate in a typical indoor environment, such as a sports hall or gymnasium, on a flat surface of 29–60 m. The MLS was placed 3 m behind the starting line on a tripod at a height of 0.91 m and aimed at the participant’s lower back, close to his or her center of mass. The distance of the MLS behind the start line is similar to that of previous methodology [25]. The MLS has a standard aiming scope (Strike Red® Dot 1 × 30) with a pointer beam (605 nm) precision width of 1–5 mm and a range of 75 m. The MLS measures continuous distance and the FVP profile during sprinting. To ensure that all participants sprinted in a straight line, the investigators marked a lane 0.66 m wide, considerably narrower than a standard track lane (1.07–1.22 m, depending on the level of competition). Sprint performance measurements were available immediately after each sprint. The head strength and conditioning coach was the sole operator for all MLS sprint trials.
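The modeling steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the Musclelab software's documented implementation: τ and VMAX are fitted to velocity/time samples by a grid search over τ (for each τ, VMAX has a closed-form least-squares solution), and the drag term uses a hypothetical coefficient `k_drag` so that F_air = k_drag·v² (still air). The function names are illustrative. F0 and V0 come from the linear F–v fit, SFV is its slope, and maximal power is computed as F0·V0/4, the apex of a linear force–velocity relationship.

```python
import math


def fit_velocity_model(times, velocities, tau_grid=None):
    """Least-squares fit of v(t) = vmax * (1 - exp(-t / tau)).

    For a fixed tau the model is linear in vmax, so vmax has a closed form;
    tau is chosen by grid search (an assumption for illustration).
    """
    if tau_grid is None:
        tau_grid = [0.30 + 0.001 * i for i in range(2701)]  # 0.30 to 3.00 s
    best = None
    for tau in tau_grid:
        g = [1.0 - math.exp(-t / tau) for t in times]
        vmax = sum(v * gi for v, gi in zip(velocities, g)) / sum(gi * gi for gi in g)
        sse = sum((v - vmax * gi) ** 2 for v, gi in zip(velocities, g))
        if best is None or sse < best[0]:
            best = (sse, vmax, tau)
    _, vmax, tau = best
    return vmax, tau


def force_velocity_profile(vmax, tau, mass, k_drag=0.0, n_points=200):
    """Linear F-v profile from the fitted model.

    Relative force F_h/m = a_h(t) + F_air/m, with the hypothetical drag
    model F_air = k_drag * v**2 (k_drag in kg/m, still air assumed).
    """
    t_end = 6.0 * tau  # 1 - exp(-6) ~ 99.8% of vmax
    ts = [t_end * i / (n_points - 1) for i in range(n_points)]
    v = [vmax * (1.0 - math.exp(-t / tau)) for t in ts]
    f = [(vmax / tau) * math.exp(-t / tau) + k_drag * vi ** 2 / mass
         for t, vi in zip(ts, v)]
    mv, mf = sum(v) / len(v), sum(f) / len(f)
    slope = (sum((vi - mv) * (fi - mf) for vi, fi in zip(v, f))
             / sum((vi - mv) ** 2 for vi in v))  # A: SFV (N*s/m/kg)
    f0 = mf - slope * mv                         # B: F0 (N/kg)
    v0 = -f0 / slope                             # V0 (m/s), where F = 0
    return {"F0": f0, "V0": v0, "Pmax": f0 * v0 / 4.0, "SFV": slope}


if __name__ == "__main__":
    # Synthetic, noise-free velocity samples for a hypothetical athlete.
    times = [0.1 * i for i in range(1, 41)]
    vels = [8.5 * (1.0 - math.exp(-t / 1.2)) for t in times]
    vmax, tau = fit_velocity_model(times, vels)
    print(round(vmax, 2), round(tau, 3))
    print(force_velocity_profile(vmax, tau, mass=70.0))
```

Without drag, the relative force reduces to (VMAX − v)/τ, so the fit returns F0 = VMAX/τ, V0 = VMAX, and SFV = −1/τ exactly; a non-zero `k_drag` makes the F–v relation slightly non-linear, which is why a regression is used rather than a closed form.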

2.5. MySp App

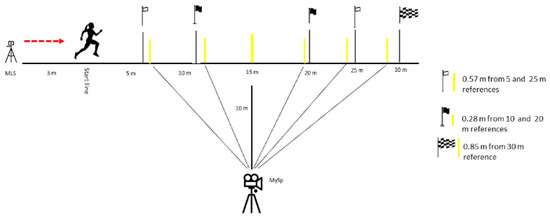

The MySp app videos were filmed with an iPad (7th generation; iOS 14.4.2, with built-in slow-motion video at 120 fps and 720p quality) according to previously validated methodologies [20]. Notably, Romero et al. [20] used a 40-m track, whereas the current study used a 30-m track, which slightly altered our parallax measurements (Figure 2). The iPad was mounted on a tripod (height, 1.46 m) to record each sprint, assessing the frontal plane to film the sprint from the side. The video parallax was corrected to ensure that the 5, 10, 15, 20, 25, and 30 m split times were measured accurately. Following [20], the marking poles were placed not at the nominal distances but at parallax-adjusted positions, so that, from the camera’s viewpoint, the participants’ hips aligned with each marker exactly when they reached the targeted distance (Figure 3).
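The parallax correction rests on similar triangles: a marking pole must lie on the camera's line of sight through the runner's hip at the target distance. The sketch below assumes a simplified plan view, with the camera lens at position `camera_x` along the track, the running line at perpendicular distance `lane_dist` from the camera, and the pole line at perpendicular distance `pole_dist`; camera height is ignored, and all names and numbers are hypothetical rather than the setup used in [20] or in this study.

```python
def pole_position(target_x: float, camera_x: float, lane_dist: float, pole_dist: float) -> float:
    """Track position at which to place a marking pole so that, seen from
    the camera, it lines up with a hip crossing target_x.

    Plan-view similar triangles: the sight line from the camera through the
    hip at (target_x, lane_dist) reaches the pole line y = pole_dist at
    camera_x + (target_x - camera_x) * pole_dist / lane_dist.
    """
    if lane_dist <= 0 or pole_dist <= 0:
        raise ValueError("distances from the camera must be positive")
    return camera_x + (target_x - camera_x) * pole_dist / lane_dist


if __name__ == "__main__":
    # Hypothetical layout: camera level with the 15-m mark, 10 m from the
    # running line, poles on a parallel line 11 m from the camera.
    for split in (5, 10, 15, 20, 25, 30):
        print(split, round(pole_position(split, 15.0, 10.0, 11.0), 2))
```

With the poles beyond the running line, markers before the camera shift toward the start and markers past it shift away; in this hypothetical layout, the 5-m pole sits at 4.0 m and the 30-m pole at 31.5 m.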

Figure 2.

Experimental setup of the testing sessions for the validation of the Musclelab™ Laser Speed and MySprint app. The yellow lines represent the marking poles of the MySprint setup.

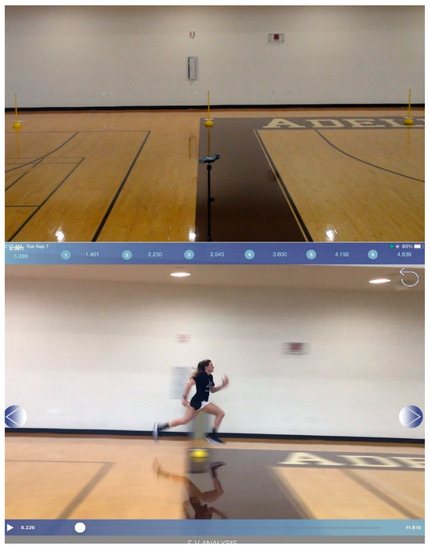

Figure 3.

Pictorial representation of the MySprint app.

2.6. Independent Observers

Two independent observers were asked to time-stamp the exact start of the sprint, initiated by the participant’s movement, and each split time for the entire sprint. Two independent observers were used with the MySp app to ensure that the start of the sprint and the crossing of the hips (i.e., split times) at each marking point were accurately identified. The start of the sprint was defined as the moment the back leg plantar flexed, indicating that the participant applied force to the ground. Time-stamping the start from the participant’s movement, instead of from an external verbal cue (e.g., “set, go”), avoided any confounding effect of reaction time. The first three successful repetitions for each testing day were used in the analysis.

2.7. Statistical Analysis

Data analysis was performed using IBM SPSS, Version 27.0 (SPSS Inc., Chicago, IL, USA) software for Windows. Descriptive data for participants’ characteristics and experimental variables were calculated as means and standard deviations with 95% confidence intervals. Normality of the distribution of each dependent variable was tested using the Shapiro–Wilk test. Relative reliability was assessed using ICCs for the within-day (across the three sprints of each session) and between-day (mean of each session) data to determine the reliability of the MLS.

The ICC results were interpreted as 0.20–0.49 = low, 0.50–0.74 = moderate, 0.75–0.89 = high, 0.90–0.98 = very high, and ≥0.99 = extremely high [41]. Internal consistency for the MLS was examined by calculating the coefficient of variation (CV = SD/mean × 100). A CV of <10% was considered acceptable for reliability, and a CV of <5% acceptable for fitness testing standards [42]. The CV was calculated for each participant for the three sprints on both testing days, and the average CV of all participants was reported for each dependent variable.
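The CV procedure above can be sketched in a few lines. This is a minimal illustration that assumes the sample standard deviation (n − 1 denominator) for each participant's three trials; the function names and the example times are hypothetical.

```python
from statistics import mean, stdev


def participant_cv(trials):
    """CV (%) of one participant's repeated trials: sample SD / mean x 100."""
    return 100.0 * stdev(trials) / mean(trials)


def average_cv(trials_by_participant):
    """Average of the per-participant CVs for one dependent variable."""
    return mean(participant_cv(t) for t in trials_by_participant.values())


def cv_rating(cv_percent):
    """Thresholds stated in the text: <5% fitness testing, <10% reliability."""
    if cv_percent < 5.0:
        return "acceptable for fitness testing"
    if cv_percent < 10.0:
        return "acceptable for reliability"
    return "not acceptable"


if __name__ == "__main__":
    # Hypothetical 30-m times (s) for two participants, three sprints each.
    day1 = {"P01": [4.52, 4.48, 4.55], "P02": [4.91, 4.87, 4.95]}
    cv = average_cv(day1)
    print(round(cv, 2), cv_rating(cv))
```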

The validated MySp app was selected as the reference device for assessing the level of agreement with the MLS [20]. Level of agreement was assessed by examining the significance of the paired t-test mean differences, ICCs, and Pearson product-moment correlations (rP) with 95% confidence intervals. Bland–Altman plots were created (GraphPad Prism version 9.2 for Windows; GraphPad Software, La Jolla, CA, USA), and the between-device differences were regressed on the device averages [20,43]. The 95% confidence intervals for the slope and y-intercept across the split times and FVP variables were examined to determine proportional and fixed bias, respectively; an interval that did not contain zero indicated bias.
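The Bland–Altman procedure above can be sketched as follows: the mean difference (bias), the 95% limits of agreement, and an ordinary least-squares regression of differences on averages whose slope and intercept confidence intervals flag proportional and fixed bias. This is a minimal sketch, not GraphPad Prism's implementation; `t_crit` should be the two-sided 95% t value for n − 2 degrees of freedom, and its default of 1.96 is only a large-sample approximation. The function name and example data are hypothetical.

```python
import math
from statistics import mean, stdev


def bland_altman(device_a, device_b, t_crit=1.96):
    """Bland-Altman statistics plus a regression of differences on averages."""
    diffs = [a - b for a, b in zip(device_a, device_b)]
    avgs = [(a + b) / 2.0 for a, b in zip(device_a, device_b)]
    n = len(diffs)
    bias = mean(diffs)
    sd = stdev(diffs)
    loa = (bias - 1.96 * sd, bias + 1.96 * sd)  # 95% limits of agreement

    # Ordinary least squares: difference = intercept + slope * average.
    m_avg = mean(avgs)
    sxx = sum((x - m_avg) ** 2 for x in avgs)
    slope = sum((x - m_avg) * (d - bias) for x, d in zip(avgs, diffs)) / sxx
    intercept = bias - slope * m_avg
    resid = [d - (intercept + slope * x) for x, d in zip(avgs, diffs)]
    s = math.sqrt(sum(r * r for r in resid) / (n - 2))
    se_slope = s / math.sqrt(sxx)
    se_intercept = s * math.sqrt(1.0 / n + m_avg ** 2 / sxx)
    slope_ci = (slope - t_crit * se_slope, slope + t_crit * se_slope)
    intercept_ci = (intercept - t_crit * se_intercept,
                    intercept + t_crit * se_intercept)
    return {
        "bias": bias,
        "loa": loa,
        "slope": slope,
        "slope_ci": slope_ci,
        "intercept_ci": intercept_ci,
        # A confidence interval that excludes zero is read as bias.
        "proportional_bias": not (slope_ci[0] <= 0.0 <= slope_ci[1]),
        "fixed_bias": not (intercept_ci[0] <= 0.0 <= intercept_ci[1]),
    }


if __name__ == "__main__":
    # Hypothetical VMAX values (m/s): device B reads ~10% higher than A.
    a = [6.5 + 0.25 * i for i in range(20)]
    b = [1.1 * x + (0.01 if i % 2 else -0.01) for i, x in enumerate(a)]
    print(bland_altman(a, b))
```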

A one-way repeated-measures analysis of variance (ANOVA) was used to calculate the mean square error for the within- and between-session data. The standard error of measurement (SEM) was calculated as the square root of the mean square error [44], and the MDC was calculated at the 95% confidence level (MDC95) as MDC95 = SEM × √2 × 1.96 [45]. The MDC95 indicates the smallest change that can be interpreted as a true change, outside of measurement error. The smallest worthwhile change (SWC) for within-day testing was calculated by selecting the best sprint trial for each session and multiplying the between-participant standard deviation by 0.2. For between-day testing, the average standard deviation across both days was multiplied by 0.2 [46].
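The SEM, MDC95, and SWC formulas above can be sketched as follows. This minimal illustration assumes that the mean square error is the residual (error) term of a one-way repeated-measures ANOVA, obtained by removing participant and trial sums of squares from the total, and that the SWC uses the sample SD; the function names are hypothetical.

```python
import math
from statistics import mean, stdev


def rm_anova_mse(scores):
    """Residual (error) mean square of a one-way repeated-measures ANOVA.

    scores: one row per participant, one column per trial or session.
    """
    n, k = len(scores), len(scores[0])
    grand = mean(x for row in scores for x in row)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_subjects = k * sum((mean(row) - grand) ** 2 for row in scores)
    ss_trials = n * sum((mean(row[j] for row in scores) - grand) ** 2
                        for j in range(k))
    ss_error = ss_total - ss_subjects - ss_trials
    return ss_error / ((n - 1) * (k - 1))


def sem_and_mdc95(mse):
    sem = math.sqrt(mse)                   # SEM = sqrt(MSE)
    return sem, sem * math.sqrt(2) * 1.96  # MDC95 = SEM x sqrt(2) x 1.96


def swc(values, factor=0.2):
    """SWC = 0.2 x between-participant SD (sample SD assumed)."""
    return factor * stdev(values)


if __name__ == "__main__":
    # Hypothetical 30-m times (s): four participants x three sprints.
    times = [[4.52, 4.48, 4.55], [4.91, 4.87, 4.95],
             [4.30, 4.35, 4.28], [5.10, 5.02, 5.06]]
    sem, mdc95 = sem_and_mdc95(rm_anova_mse(times))
    best = [min(row) for row in times]  # best trial per participant
    print(round(sem, 3), round(mdc95, 3), round(swc(best), 3))
```

The MDC95 is expressed in the units of the variable itself (seconds for split times), so an observed change must exceed it before being treated as real.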

To ensure the accuracy of the MySp app analysis, two independent observers analyzed each participant’s video for sprint times. Six independent t-tests were used to compare the observers’ sprint times at the six splits (5, 10, 15, 20, 25, 30 m) and determine whether significant differences existed between observers. If no significant differences were found, the data from the primary investigator were used in the analysis; if significant differences were found, the average of the two observers was recorded.

3. Results

3.1. Within-Day Reliability

Normality was satisfied for 95.8% of the data (Shapiro–Wilk test, p > 0.05). Of 120 variables (10 scores × 6 sprints × 2 devices), five did not satisfy normality: (1) MLS HZT F0 for Day 1, Sprint 2; (2) MLS HZT F0 for Day 1, Sprint 3; (3) MySp app HZT P0 for Day 2, Sprint 3; (4) MySp app 10-m time for Day 2, Sprint 3; and (5) MLS SFV for Day 1, Sprint 1.

Raw data means, standard deviations, ICCs, CVs, SWCs, SEMs, and MDC95 values with 95% confidence intervals were calculated for the sprint times and FVP profile for the three sprints on both testing days (Table 1, Table 2, Table 3 and Table 4). Except for HZT F0 (0.71 and 0.69 on Days 1 and 2) and SFV on Day 1 (0.71), within-day ICCs were high to very high (0.80–0.98) on both days. The average ICCs across all variables for Day 1 and Day 2 were 0.90 and 0.92, respectively. The average CVs across all variables for Day 1 and Day 2 were highly acceptable, at 2.4% and 1.8%, respectively. The MDC95 values for split times ranged from 0.06 to 0.11 s on Day 1 and from 0.05 to 0.14 s on Day 2. The MDC95 values for the FVP variables were 1.16 N·kg−1 (HZT F0), 0.25 m·s−1 (VMAX), 1.99 W·kg−1 (HZT P0), and 0.29 N·s·m−1·kg−1 (SFV) on Day 1, and 0.83 N·kg−1 (HZT F0), 0.30 m·s−1 (VMAX), 1.41 W·kg−1 (HZT P0), and 0.12 N·s·m−1·kg−1 (SFV) on Day 2.

Table 1.

Means ± standard deviation (95% confidence interval) across three sprints for Musclelab™ Laser Speed on Day 1; within-day trials.

Table 2.

Absolute reliability statistics for Musclelab™ Laser Speed on Day 1; within-day trials.

Table 3.

Means ± standard deviation (95% confidence interval) across three sprints for Musclelab™ Laser Speed on Day 2; within-day trials.

Table 4.

Absolute reliability statistics for Musclelab™ Laser Speed on Day 2; within-day trials.

3.2. Between-Day Reliability

Between-day reliability values are presented in Table 5 as an average across both testing days. Seven of the ten ICCs were above 0.90, and all CVs were ≤5%, indicating very high to acceptable reliability. The average ICC and CV across all variables between testing days were 0.90 and 1.6%, respectively. Between days, MDC95 values ranged from 0.08 to 0.14 s for split times and were 0.66 N·kg−1 (HZT F0), 0.28 m·s−1 (VMAX), 1.43 W·kg−1 (HZT P0), and 0.11 N·s·m−1·kg−1 (SFV) for the FVP variables.

Table 5.

Mean ± standard deviation (95% confidence interval) and absolute reliability statistics across the average of both days for Musclelab™ Laser Speed; between-day trials.

3.3. MySp App Reliability

The descriptive and reliability data for the MySp app are reported in Table 6. The average ICCs across all variables for Day 1 and Day 2 were excellent, at 0.91 and 0.90, respectively. The average coefficients of variation across all variables for Day 1 and Day 2 were highly acceptable, at 2.6% and 2.5%, respectively.

Table 6.

Mean ± standard deviation (95% confidence interval) and absolute reliability statistics for Day 1 and Day 2 for the MySprint app.

3.4. Level of Agreement

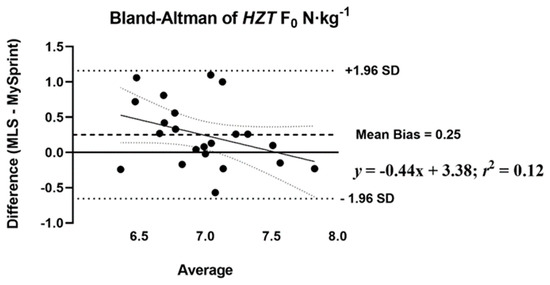

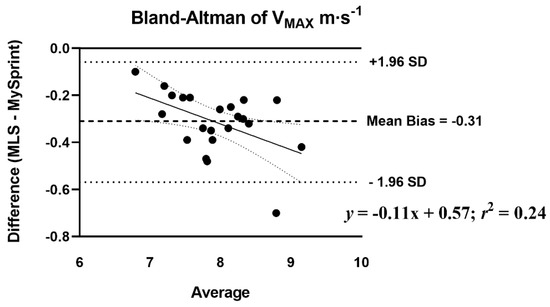

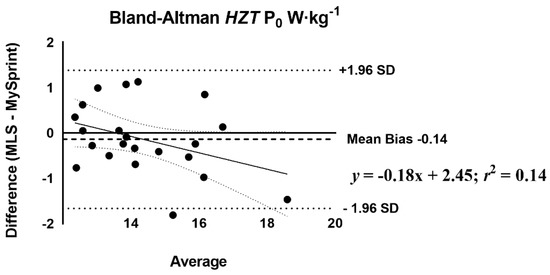

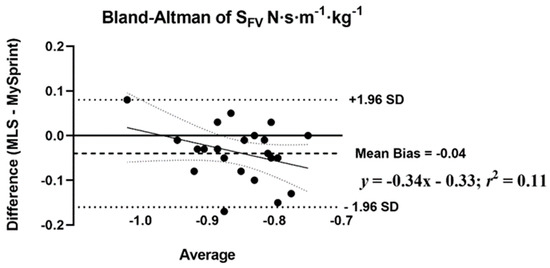

The mean difference scores and rP were calculated to assess the level of agreement between the MLS and MySp app (Table 7). The rP ranged from 0.44 to 0.98, with low agreement for HZT F0 (0.44), moderate agreement for SFV (0.60), and high agreement (>0.88) for the remaining variables. Three of the ten variables showed no statistically significant mean difference (p > 0.05) on the paired t-test. A statistically significant difference (p < 0.05) was found for the four split times from 15 to 30 m, with the MySp app reporting faster times than the MLS. A statistically significant difference (p < 0.05) was also found for HZT F0, with the MySp app reporting lower values than the MLS (6.85 vs. 7.1 N·kg−1, mean difference = 0.25 N·kg−1). Statistically significant differences were also found for VMAX (p < 0.001), with the MySp app reporting faster velocities than the MLS (8.08 vs. 7.77 m·s−1, mean difference = 0.31 m·s−1), and for SFV (p = 0.01), with the MySp app reporting a less steep slope than the MLS (−0.83 vs. −0.87, mean difference = 0.04). No proportional or fixed bias was observed for split times, HZT F0, HZT P0, and SFV, as indicated visually by the Bland–Altman plots (Figure 4, Figure 5, Figure 6 and Figure 7), which show a low R2 for the linear regressions of HZT F0, HZT P0, and SFV (0.12, 0.14, and 0.11, respectively). In addition, except for VMAX, all confidence intervals, including those for the split times, contained zero for the slope and intercept, indicating that the differences between the devices were the same across the six sprints. For VMAX, the linear regression had an R2 of 0.24, a large effect size. The 95% confidence interval for the slope did not contain zero (−0.20 to −0.02), showing proportional bias toward the MySp app. The negative slope (−0.11) indicated that the MySp app had a greater proportional bias for faster runners.

Table 7.

Level of agreement of Musclelab™ Laser Speed vs. MySprint app for the sprint profiling measurements across six trials, with 95% confidence intervals.

Figure 4.

Bland–Altman plot comparing the differences and the averages between the Musclelab™ Laser Speed and the MySprint app for HZT F0 (N·kg−1). The upper and lower lines represent the 95% limits of agreement (mean difference ± 1.96 SD).

Figure 5.

Bland–Altman plot comparing the differences and the averages between the Musclelab™ Laser Speed and the MySprint app for VMAX (m·s−1). The upper and lower lines represent the 95% limits of agreement (mean difference ± 1.96 SD).

Figure 6.

Bland–Altman plot comparing the differences and the averages between the Musclelab™ Laser Speed and the MySprint app for HZT P0 (W·kg−1). The upper and lower lines represent the 95% limits of agreement (mean difference ± 1.96 SD).

Figure 7.

Bland–Altman plot comparing the differences and the averages between the Musclelab™ Laser Speed and the MySprint app for SFV (N·s·m−1·kg−1). The upper and lower lines represent the 95% limits of agreement (mean difference ± 1.96 SD).

3.5. Inter-Observer Analysis

Six independent t-tests were used to determine significant differences among six sprint times between two independent observers. Mean difference and p-values for the 5 m (0.001 ± 0.01 s, p = 0.932), 10 m (0.008 ± 0.02 s, p = 0.735), 15 m (0.01 ± 0.03 s, p = 0.731), 20 m (0.01 ± 0.04 s, p = 0.756), 25 m (0.02 ± 0.05 s, p = 0.774), and 30 m (0.02 ± 0.07 s, p = 0.794) revealed no significant differences for sprint times between the observers.

4. Discussion

This study reported the level of agreement, reliability, and MDC of the MLS device during maximal 30-m sprints in a sample of Division II collegiate athletes. The major finding is that the MLS displayed a moderate to excellent level of agreement and reliability for nine of ten variables, the exception being HZT F0. A second finding is that there was significant proportional bias for VMAX when comparing the MLS with the MySp app, with the MySp app detecting faster velocities (mean difference = 0.31 m·s−1). Finally, significant differences between the two devices occurred for four split times (15–30 m), HZT F0, and SFV, with the MySp app showing faster times, lower HZT F0, and a velocity-dominant slope.

The low to moderate ICC and rP for HZT F0 and the moderate ICC and rP for SFV indicate a partial rejection of our primary hypothesis that the MLS would agree with the MySp app for all components of the FVP profile. Our second hypothesis, that the MLS is reliable for within- and between-day testing of split times and the FVP profile, was largely supported for nine of ten variables. Despite the proportional bias for VMAX, our finding (mean difference = 0.31 m·s−1) is consistent with a previous study showing that the mathematical model of the MySp app has a 0.32 m·s−1 bias compared with force plate analysis [47].

Our ICCs were higher than [25,28] or similar to [27,31] those reported in previous studies on the reliability of sports lasers. Within- and between-day ICCs and CVs across all split times were above 0.83 and below 5%, respectively. Both HZT P0 and VMAX within- and between-day data showed ICCs above 0.92 and CVs below 5%. Notably, HZT F0 showed acceptable internal consistency, with CVs below 5% for both within-day (4.7% and 3.8%) and between-day (2.7%) sessions. Pearson product-moment correlations and absolute-agreement ICCs for all split times, HZT P0, VMAX, and SFV ranged from 0.60 to 0.98 and from 0.68 to 0.95, respectively. Consistent with our reliability data, the MLS did not agree with the MySp app for HZT F0 (rP = 0.44; ICC = 0.55). Bland–Altman plots showed no fixed or proportional bias, except for proportional bias in VMAX (MySp > MLS). For all split times, HZT F0, HZT P0, and SFV, the 95% CIs for the slope and intercept contained zero, supporting the absence of bias. For VMAX, the 95% CI for the slope did not contain zero (−0.20 to −0.02), whereas that for the intercept did (−0.15 to 1.29), indicating proportional but not fixed bias.

MDC is defined as the smallest change in a variable that reflects a true change in performance [48]. MDC is important, particularly when monitoring athletes over several trials, because between-trial variation may suggest a change that has not actually exceeded the threshold of measurement error [36,49]. Edwards et al. [36] and Ferro et al. [37], using radar and laser technology, respectively, reported MDCs at the 90% confidence level. Our split time and FVP profile MDC data were similar to those of Edwards et al. [36] when comparing the average of three trials. SWC is defined as the smallest change in a metric that is likely to be of practical importance [50]. For four splits (15–30 m) and VMAX, the CV% was approximately equal to the SWC%, indicating that the MLS had an “okay” sensitivity rating for detecting real change [51]. In contrast, the CV% for HZT F0, HZT P0, and SFV exceeded the SWC% on both days: HZT F0, 4.7% vs. 1.6% (Day 1) and 3.8% vs. 1.2% (Day 2); HZT P0, 3.7% vs. 2.5% and 3.5% vs. 2.2%; and SFV, 7.0% vs. 1.5% and 4.0% vs. 1.1%. Therefore, all three measurements had “marginal to poor” within-day sensitivity [51,52].
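The CV-versus-SWC comparison used above can be expressed as a small rule. This sketch assumes a hypothetical relative tolerance (`tol`, default 25%) for treating CV as “approximately equal” to SWC, since no numeric cut-off is given in the text; the three labels follow the wording used in this section, and the function name is illustrative.

```python
def sensitivity_rating(cv_percent: float, swc_percent: float, tol: float = 0.25) -> str:
    """Rate sensitivity by comparing a measure's noise (CV%) with its SWC%.

    tol is a hypothetical relative tolerance for 'approximately equal'.
    """
    if swc_percent <= 0:
        raise ValueError("SWC must be positive")
    if cv_percent < swc_percent * (1.0 - tol):
        return "good"
    if cv_percent <= swc_percent * (1.0 + tol):
        return "okay"
    return "marginal to poor"


if __name__ == "__main__":
    # Day 1 and Day 2 within-day values reported above, as (CV%, SWC%).
    reported = {
        "HZT F0": [(4.7, 1.6), (3.8, 1.2)],
        "HZT P0": [(3.7, 2.5), (3.5, 2.2)],
        "SFV": [(7.0, 1.5), (4.0, 1.1)],
    }
    for name, days in reported.items():
        print(name, [sensitivity_rating(cv, swc) for cv, swc in days])
```

Under this assumed tolerance, every reported HZT F0, HZT P0, and SFV pair falls in the “marginal to poor” band, matching the interpretation above; a stricter or looser tolerance would only move borderline cases.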

Coaches find FVP profiling useful because it allows a more individualized training approach, and, if the correct data collection methodology is used, FVP profiling can provide accurate monitoring of progression [1]. However, the value of FVP profiling is subject to debate [14,16]. Studies report moderate reliability for HZT F0 [15,17,36,53], particularly for sprints <10 m [15,36]. Our data are consistent with this finding: HZT F0 achieved moderate ICCs for within-day (0.71 and 0.69) and between-day (0.77) testing. Similar inconsistencies, with large variations between trials, have been reported for F0 during jumping [14].

Comparisons between the MLS and MySp app can be made in terms of their practical use in real-world settings, considering that both are accurate and valid sprint testing devices. The first advantage of the MLS is that it provides immediate feedback to the coach and sprinter, allowing better coaching instruction during the training session, whereas the MySp app uses video technology that requires further analysis after the sprint. The second is that the MLS accommodates a variety of settings, such as a field or gymnasium, with minimal setup time, whereas the MySp app requires a 15- to 20-min setup (with two people) to ensure that all distances and parallaxes are accurate and that the viewing area is clear. This kind of setup can be challenging for short-staffed strength and conditioning programs that may have only one coach per training group. A final advantage is that the MLS can be combined with other measurement devices (e.g., IMU, contact grid) to access more sophisticated data, such as step velocity, step length, and contact time [32]. However, the MLS costs approximately $6000, whereas the MySp app costs $10. Further, unlike the MySp app, the MLS does not display the entire FVP profile. In this regard, the authors of [27] suggest that SFV and DRF are the most important factors in the FVP profile; the MLS does not record these, but the MySp app does. Nevertheless, according to [27], the use of laser technology with trained sprinters resulted in poor reliability for both SFV and DRF.

This study has certain limitations. First, the study lacked a research-grade criterion measure, such as a high-speed camera or force plate system, which would have provided a better means of validating the MLS device. The MySp app was used because the investigators wanted to compare two devices that are easily accessible to practitioners. A follow-up validation study comparing the MLS with a criterion measure is suggested for future research. Second, the significant differences across the four split times and HZT F0, and the proportional bias for VMAX, between the MLS and MySp app could be due to the sampling rate of the iPad camera (i.e., 120 fps), which is equivalent to that of a high-speed smartphone [19]. Romero et al. [20] suggest using an iPhone at a sampling rate of 240 fps with the MySp app. A camera with a higher sampling rate might have reduced some of the statistical differences across the split times, as well as the observed bias, by allowing more accurate identification of the sprinter’s start and of the hips crossing the marking poles. Notably, previous literature has used 120 fps to assess sprint performance [54] and treadmill running [55]; however, a practical suggestion for coaches is to have an iPhone, rather than an iPad, readily available when using the MySp app. Third, the inherent limitations of testing a sample of novice sprinters may have caused variation in our HZT F0 data. One contributor to unwanted variation in HZT F0, particularly in the early phase of the sprint (<10 m), is that the offset between the lumbar point (where the laser is aimed) and the sprinter’s center of mass decreases as the sprinter rises to an upright posture [30]. This notion is supported by Talukdar et al. [56], who reported HZT F0 to have the highest CV in their data set of young female team sport athletes (although that study reported acceptable overall reliability for HZT F0 [ICC = 0.89, CI 0.77–0.94] using radar). One reason for this variation may be that novice sprinters rise too early and are inconsistent at the start of the sprint and, thus, in applying force [56]. The start position (two-point vs. three-point) may also be a source of error for HZT F0, because different athletes are accustomed to different starts. The current study used a two-point stance, similar to recent research assessing 30-m sprint performance [57]. Nonetheless, teaching athletes to be more consistent in their start position and sprinting technique (i.e., avoiding rising too fast) in the first 10 m may reduce this variation. Therefore, caution should be used with HZT F0 data when evaluating progression and prescribing training.

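The fragility of HZT F0 relative to VMAX can be illustrated with one standard way of deriving the profile from a velocity–time curve, the simple method of Samozino et al. [47]: velocity is modelled as v(t) = v_max(1 − e^(−t/τ)), horizontal force per kilogram is the modelled acceleration plus air drag, and a straight line fitted to force against velocity gives HZT F0 (intercept), V0 (velocity intercept), SFV (slope), and HZT P0 = F0·V0/4. Because the acceleration term equals (v_max − v)/τ, F0 scales roughly with 1/τ, and τ is governed largely by the first metres of the sprint, where the lumbar-point offset and inconsistent starts add noise. This is a minimal sketch, not the MLS or MySp algorithm; the function name, the drag constant, and the input values are hypothetical, and wind is ignored.

```python
import numpy as np


def fv_profile(v_max: float, tau: float, drag_per_kg: float = 0.0037, n: int = 200):
    """Sketch of a horizontal force-velocity profile (per kg body mass) from a
    mono-exponential velocity model v(t) = v_max * (1 - exp(-t / tau)).

    drag_per_kg is a hypothetical air-drag constant k/m (1/m); wind is ignored.
    Returns (F0 [N/kg], V0 [m/s], Pmax [W/kg], SFV [N*s/m/kg]).
    """
    t = np.linspace(0.0, 6.0 * tau, n)        # time samples covering the acceleration
    v = v_max * (1.0 - np.exp(-t / tau))      # modelled running velocity
    a = (v_max / tau) * np.exp(-t / tau)      # modelled acceleration, dv/dt
    f = a + drag_per_kg * v ** 2              # net horizontal force per kg
    slope, f0 = np.polyfit(v, f, 1)           # straight-line F-v fit: f = slope*v + f0
    v0 = -f0 / slope                          # velocity at which force would reach zero
    p_max = f0 * v0 / 4.0                     # apex of the parabolic power-velocity curve
    return f0, v0, p_max, slope


if __name__ == "__main__":
    # Hypothetical athlete: same v_max, time constant perturbed by 10 %.
    for tau in (1.20, 1.32):
        f0, v0, p_max, sfv = fv_profile(9.0, tau)
        print(f"tau={tau:.2f} s  F0={f0:.2f} N/kg  V0={v0:.2f} m/s  "
              f"Pmax={p_max:.2f} W/kg  SFV={sfv:.3f}")
```

In this sketch a 10% change in τ moves F0 by a similar proportion while V0 changes far less, which mirrors the pattern of poorer agreement for HZT F0 than for VMAX.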
5. Conclusions

The current study found that the MLS displays excellent agreement with the MySp app for most performance measures and is a reliable (within- and between-day) device for measuring 30-m split times, VMAX, HZT P0, and SFV. Nevertheless, the MLS shows only moderate to poor agreement for HZT F0. Coaches and practitioners need to be aware of the significant proportional bias for VMAX, with the MySp app reporting higher sprint velocities than the MLS in faster runners, as well as a more velocity-dominant SFV. The MLS is sensitive to changes in the 15–30-m split times and VMAX. Finally, the MDC data help coaches and practitioners identify a real change in performance.
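Proportional bias of the kind reported for VMAX can be checked with a Bland–Altman analysis: compute each athlete's between-device difference and the mean of the two devices, then regress the differences on the means; a slope clearly different from zero indicates that the disagreement grows with the size of the measurement. The minimal sketch below uses NumPy only and returns the slope's t-statistic, to be compared with a t-distribution on n − 2 degrees of freedom. The function name and the six paired values are hypothetical, chosen only so that the second device reads progressively higher in faster runners; they are not study data.

```python
import numpy as np


def bland_altman(a, b):
    """Bland-Altman summary for paired readings from two devices (a - b).

    Returns (bias, (lower LoA, upper LoA), slope, t), where slope comes from an
    ordinary least-squares regression of the differences on the pair means and
    t is that slope divided by its standard error (df = n - 2). A |t| well
    beyond the critical value suggests proportional bias.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    diff = a - b
    mean = (a + b) / 2.0
    bias = diff.mean()
    sd = diff.std(ddof=1)
    loa = (bias - 1.96 * sd, bias + 1.96 * sd)   # 95% limits of agreement
    slope, intercept = np.polyfit(mean, diff, 1)
    resid = diff - (intercept + slope * mean)
    n = diff.size
    s_resid = np.sqrt(resid @ resid / (n - 2))    # residual standard error
    se_slope = s_resid / np.sqrt(((mean - mean.mean()) ** 2).sum())
    return bias, loa, slope, slope / se_slope


if __name__ == "__main__":
    # Hypothetical VMAX readings (m/s) for six athletes; not study data.
    device_a = [7.10, 7.52, 7.90, 8.31, 8.65, 9.02]
    device_b = [7.20, 7.70, 8.15, 8.62, 9.05, 9.50]
    bias, (lo, hi), slope, t = bland_altman(device_a, device_b)
    print(f"bias={bias:.3f} m/s  LoA=({lo:.3f}, {hi:.3f})  slope={slope:.3f}  t={t:.2f}")
```

Regressing the differences on the pair means, rather than on one device's readings, avoids favouring either device as the reference.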

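The MDC values mentioned in the conclusions can be derived from test–retest data. One common formulation, described by Weir [45], computes the standard error of measurement from the between-subject SD and the ICC, SEM = SD·√(1 − ICC), and then MDC95 = SEM × 1.96 × √2, where √2 accounts for measurement error at both the pre- and post-test. The sketch assumes this formulation; the helper names and the SD, ICC, and sprint times in the usage example are hypothetical, not the study's results.

```python
import math


def sem_from_icc(sd: float, icc: float) -> float:
    """Standard error of measurement, SEM = SD * sqrt(1 - ICC)."""
    if not 0.0 <= icc <= 1.0:
        raise ValueError("ICC must lie between 0 and 1")
    return sd * math.sqrt(1.0 - icc)


def mdc95(sem: float) -> float:
    """Minimal detectable change at 95% confidence: SEM * 1.96 * sqrt(2)."""
    return sem * 1.96 * math.sqrt(2.0)


def exceeds_mdc(pre: float, post: float, mdc: float) -> bool:
    """True if the observed pre-to-post change is larger than the MDC."""
    return abs(post - pre) > mdc


if __name__ == "__main__":
    # Hypothetical 30-m sprint-time reliability data (s); not study results.
    sem = sem_from_icc(sd=0.08, icc=0.90)
    mdc = mdc95(sem)
    print(f"SEM={sem:.3f} s  MDC95={mdc:.3f} s")
    print("Real change?", exceeds_mdc(pre=4.50, post=4.42, mdc=mdc))
```

A change smaller than the MDC cannot be distinguished from measurement error at the 95% level, so it should not be read as a training effect.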
Author Contributions

This project was conceptualized by J.J.G. and K.J.F.; J.J.G., K.J.F. and K.M.P. were responsible for the methodology; J.J.G., K.J.F. and K.M.P. assisted with visualization, supervision, and data curation; J.J.G., K.J.F. and C.F.V. were responsible for formal analysis and writing the original draft of the manuscript; A.M.G. and K.M.S. assisted with reviewing and editing of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and the Hofstra University Institutional Review Board approved the research protocol before participant enrollment (Approval Ref#: 20210615-HPHS-GHI-1).

Informed Consent Statement

After explaining all procedures, risks, and benefits, each participant provided informed consent prior to participation in this study.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

The authors would like to acknowledge the study participants and the research assistants involved in the data collection process.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Morin, J.B.; Samozino, P. Interpreting power-force-velocity profiles for individualized and specific training. Int. J. Sports Physiol. Perform. 2016, 11, 267–272.

2. Haugen, T.; Breitschädel, F.; Seiler, S. Sprint mechanical variables in elite athletes: Are force-velocity profiles sport specific or individual? PLoS ONE 2019, 14, e0215551.

3. Watkins, C.M.; Storey, A.; McGuigan, M.R.; Downes, P.; Gill, N.D. Horizontal force-velocity-power profiling of rugby players: A cross-sectional analysis of competition-level and position-specific movement demands. J. Strength Cond. Res. 2021; published ahead of print.

4. Devismes, M.; Aeles, J.; Philips, J.; Vanwanseele, B. Sprint force-velocity profiles in soccer players: Impact of sex and playing level. Sports Biomech. 2021, 20, 947–957.

5. Jiménez-Reyes, P.; Samozino, P.; García-Ramos, A.; Cuadrado-Peñafiel, V.; Brughelli, M.; Morin, J.B. Relationship between vertical and horizontal force-velocity-power profiles in various sports and levels of practice. PeerJ 2018, 6, e5937.

6. Haugen, T.A.; Breitschädel, F.; Seiler, S. Sprint mechanical properties in soccer players according to playing standard, position, age and sex. J. Sports Sci. 2020, 38, 1070–1076.

7. Fernández-Galván, L.M.; Boullosa, D.; Jiménez-Reyes, P.; Cuadrado-Peñafiel, V.; Casado, A. Examination of the sprinting and jumping force-velocity profiles in young soccer players at different maturational stages. Int. J. Environ. Res. Public Health 2021, 18, 4646.

8. Jiménez-Reyes, P.; Garcia-Ramos, A.; Párraga-Montilla, J.A.; Morcillo-Losa, J.A.; Cuadrado-Peñafiel, V.; Castaño-Zambudio, A.; Samozino, P.; Morin, J.B. Seasonal changes in the sprint acceleration force-velocity profile of elite male soccer players. J. Strength Cond. Res. 2020; published ahead of print.

9. Lahti, J.; Jiménez-Reyes, P.; Cross, M.R.; Samozino, P.; Chassaing, P.; Simond-Cote, B.; Ahtiainen, J.; Morin, J.B. Individual sprint force-velocity profile adaptations to in-season assisted and resisted velocity-based training in professional rugby. Sports 2020, 8, 74.

10. Perez, J.; Guilhem, G.; Brocherie, F. Reliability of the force-velocity-power variables during ice hockey sprint acceleration. Sports Biomech. 2019, 21, 56–70.

11. Escobar-Álvarez, J.A.; Fuentes-García, J.P.; Viana-da-Conceição, F.A.; Jiménez-Reyes, P. Association between vertical and horizontal force-velocity-power profiles in netball players. J. Hum. Sport Exerc. 2020, 17, 83–92.

12. Escobar Álvarez, J.A.; Reyes, P.J.; Pérez Sousa, M.; Conceição, F.; Fuentes García, J.P. Analysis of the force-velocity profile in female ballet dancers. J. Danc. Med. Sci. 2020, 24, 59–65.

13. Nakatani, M.; Murata, K.; Kanehisa, H.; Takai, Y. Force-velocity relationship profile of elbow flexors in male gymnasts. PeerJ 2021, 9, e10907.

14. Valenzuela, P.L.; Sánchez-Martínez, G.; Torrontegi, E.; Vázquez-Carrión, J.; Montalvo, Z.; Haff, G.G. Should we base training prescription on the force-velocity profile? Exploratory study of its between-day reliability and differences between methods. Int. J. Sports Physiol. Perform. 2021, 16, 1001–1007.

15. Simperingham, K.D.; Cronin, J.B.; Pearson, S.N.; Ross, A. Reliability of horizontal force–velocity–power profiling during short sprint-running accelerations using radar technology. Sports Biomech. 2019, 18, 88–99.

16. Lindberg, K.; Solberg, P.; Rønnestad, B.R.; Frank, M.T.; Larsen, T.; Abusdal, G.; Berntsen, S.; Paulsen, G.; Sveen, O.; Seynnes, O.; et al. Should we individualize training based on force-velocity profiling to improve physical performance in athletes? Scand. J. Med. Sci. Sports 2021, 31, 2198–2210.

17. Simperingham, K.D.; Cronin, J.B.; Ross, A. Advances in sprint acceleration profiling for field-based team-sport athletes: Utility, reliability, validity and limitations. Sports Med. 2016, 46, 1619–1645.

18. Busca, B.; Quintana, M.; Padulles, J.M. High-speed cameras in sport and exercise: Practical applications in sports training and performance analysis. Aloma 2016, 34, 13–23.

19. Pueo, B. High speed cameras for motion analysis in sports science. J. Hum. Sport Exerc. 2016, 11, 53–73.

20. Romero-Franco, N.; Jiménez-Reyes, P.; Castaño-Zambudio, A.; Capelo-Ramírez, F.; Rodríguez-Juan, J.J.; González-Hernández, J.M.; Toscano-Bendala, F.J.; Cuadrado-Peñafiel, V.; Balsalobre-Fernández, C. Sprint performance and mechanical outputs computed with an iPhone app: Comparison with existing reference methods. Eur. J. Sport Sci. 2017, 17, 386–392.

21. van den Tillaar, R. Comparison of step-by-step kinematics of elite sprinters’ unresisted and resisted 10-m sprints measured with Optojump or Musclelab. J. Strength Cond. Res. 2021, 35, 1419–1424.

22. Macadam, P.; Cronin, J.; Neville, J.; Diewald, S. Quantification of the validity and reliability of sprint performance metrics computed using inertial sensors: A systematic review. Gait Posture 2019, 73, 26–38.

23. Beato, M.; Devereux, G.; Stiff, A. Validity and reliability of global positioning system units (STATSports Viper) for measuring distance and peak speed in sports. J. Strength Cond. Res. 2018, 32, 2831–2837.

24. Lacome, M.; Peeters, A.; Mathieu, B.; Bruno, M.; Christopher, C.; Piscione, J. Can we use GPS for assessing sprinting performance in rugby sevens? A concurrent validity and between-device reliability study. Biol. Sport 2019, 36, 25–29.

25. Ashton, J.; Jones, P.A. The reliability of using a laser device to assess deceleration ability. Sports 2019, 7, 191.

26. Ferro, A.; Villacieros, J.; Floría, P.; Graupera, J.L. Analysis of speed performance in soccer by a playing position and a sports level using a laser system. J. Hum. Kinet. 2014, 44, 143–153.

27. Sarabon, N.; Kozinc, Ž.; García Ramos, A.; Knežević, O.; Čoh, M.; Mirkov, D. Reliability of sprint force-velocity-power profiles obtained with KiSprint system. J. Sports Sci. Med. 2021, 20, 357–364.

28. Harrison, A.J.; Jensen, R.L.; Donoghue, O. A comparison of laser and video techniques for determining displacement and velocity during running. Meas. Phys. Educ. Exerc. Sci. 2005, 9, 219–231.

29. Morin, J.B.; Samozino, P.; Murata, M.; Cross, M.R.; Nagahara, R. A simple method for computing sprint acceleration kinetics from running velocity data: Replication study with improved design. J. Biomech. 2019, 94, 82–87.

30. Bezodis, N.E.; Salo, A.I.; Trewartha, G. Measurement error in estimates of sprint velocity from a laser displacement measurement device. Int. J. Sports Med. 2012, 33, 439–444.

31. Buchheit, M.; Samozino, P.; Glynn, J.A.; Michael, B.S.; Al Haddad, H.; Mendez-Villanueva, A.; Morin, J.B. Mechanical determinants of acceleration and maximal sprinting speed in highly trained young soccer players. J. Sports Sci. 2014, 32, 1906–1913.

32. van den Tillaar, R.; Nagahara, R.; Gleadhill, S.; Jiménez-Reyes, P. Step-To-Step Kinematic Validation between an Inertial Measurement Unit (IMU) 3D System, a Combined Laser + IMU System and Force Plates during a 50 M Sprint in a Cohort of Sprinters. Sensors 2021, 21, 6560.

33. Dixon, P.M.; Saint-Maurice, P.F.; Kim, Y.; Hibbing, P.; Bai, Y.; Welk, G.J. A Primer on the Use of Equivalence Testing for Evaluating Measurement Agreement. Med. Sci. Sports Exerc. 2018, 50, 837–845.

34. Harris, N.K.; Cronin, J.; Taylor, K.-L.; Boris, J.; Sheppard, J. Understanding Position Transducer Technology for Strength and Conditioning Practitioners. Strength Cond. J. 2010, 32, 66–79.

35. Gonzalez, A.M.; Mangine, G.T.; Spitz, R.W.; Ghigiarelli, J.J.; Sell, K.M. Agreement between the Open Barbell and Tendo Linear Position Transducers for Monitoring Barbell Velocity during Resistance Exercise. Sports 2019, 7, 125.

36. Edwards, T.; Banyard, H.G.; Piggott, B.; Haff, G.G.; Joyce, C. Reliability and Minimal Detectable Change of Sprint Times and Force-Velocity-Power Characteristics. J. Strength Cond. Res. 2021; published ahead of print.

37. Ferro, A.; Floria, P.; Villacieros, J.; Aguado-Gomez, R. Validity and reliability of the laser sensor of BioLaserSport system for the analysis of running velocity. Int. J. Sport Sci. 2012, 8, 357–370.

38. Lahti, J.; Huuhka, T.; Romero, V.; Bezodis, I.; Morin, J.B.; Häkkinen, K. Changes in sprint performance and sagittal plane kinematics after heavy resisted sprint training in professional soccer players. PeerJ 2020, 8, e10507.

39. Morin, J.-B.; Samozino, P. Spreadsheet for Sprint Acceleration Force-Velocity-Power Profiling. J. Med. Sci. Sports 2017, 13, 2017.

40. Faul, F.; Erdfelder, E.; Lang, A.-G.; Buchner, A. G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 2007, 39, 175–191.

41. Hopkins, W.G.; Marshall, S.W.; Batterham, A.M.; Hanin, J. Progressive Statistics for Studies in Sports Medicine and Exercise Science. Med. Sci. Sports Exerc. 2009, 41, 3–13.

42. Turner, A.; Brazier, J.; Bishop, C.; Chavda, S.; Cree, J.; Read, P. Data Analysis for Strength and Conditioning Coaches: Using Excel to Analyze Reliability, Differences, and Relationships. Strength Cond. J. 2015, 37, 76–83.

43. Ludbrook, J. Linear regression analysis for comparing two measurers or methods of measurement: But which regression? Clin. Exp. Pharm. Physiol. 2010, 37, 692–699.

44. Stratford, P.W.; Goldsmith, C.H. Use of the standard error as a reliability index of interest: An applied example using elbow flexor strength data. Phys. Ther. 1997, 77, 745–750.

45. Weir, J.P. Quantifying test-retest reliability using the intraclass correlation coefficient and the SEM. J. Strength Cond. Res. 2005, 19, 231–240.

46. Hopkins, W.G. How to interpret changes in an athletic performance test. Sportscience 2004, 8, 1–7.

47. Samozino, P.; Rabita, G.; Dorel, S.; Slawinski, J.; Peyrot, N.; Saez de Villarreal, E.; Morin, J.B. A simple method for measuring power, force, velocity properties, and mechanical effectiveness in sprint running. Scand. J. Med. Sci. Sports 2016, 26, 648–658.

48. French, D.; Ronda, L.T. NSCA’s Essentials of Sport Science; Human Kinetics: Champaign, IL, USA, 2021.

49. Beekhuizen, K.S.; Davis, M.D.; Kolber, M.J.; Cheng, M.S. Test-retest reliability and minimal detectable change of the hexagon agility test. J. Strength Cond. Res. 2009, 23, 2167–2171.

50. Buchheit, M. Want to see my report, coach? ASPETAR Med. J. 2017, 6, 36–43.

51. Harper, D.J.; Morin, J.-B.; Carling, C.; Kiely, J. Measuring maximal horizontal deceleration ability using radar technology: Reliability and sensitivity of kinematic and kinetic variables. Sports Biomech. 2020, 1–17.

52. Buchheit, M.; Lefebvre, B.; Laursen, P.B.; Ahmaidi, S. Reliability, Usefulness, and Validity of the 30–15 Intermittent Ice Test in Young Elite Ice Hockey Players. J. Strength Cond. Res. 2011, 25, 1457–1464.

53. Shahab, S.; Steendahl, I.B.; Ruf, L.; Meyer, T.; Van Hooren, B. Sprint performance and force-velocity profiling does not differ between artificial turf and concrete. Int. J. Sports Sci. Coach. 2021, 16, 968–975.

54. Standing, R.J.; Maulder, P.S. The Biomechanics of Standing Start and Initial Acceleration: Reliability of the Key Determining Kinematics. J. Sports Sci. Med. 2017, 16, 154–162.

55. Pipkin, A.; Kotecki, K.; Hetzel, S.; Heiderscheit, B. Reliability of a Qualitative Video Analysis for Running. J. Orthop. Sports Phys. Ther. 2016, 46, 556–561.

56. Talukdar, K.; Harrison, C.; McGuigan, M. Intraday and Inter-day Reliability of Sprinting Kinetics in Young Female Athletes Measured Using a Radar Gun. Meas. Phys. Educ. Exerc. Sci. 2021, 25, 266–272.

57. Morin, J.B.; Capelo-Ramirez, F.; Rodriguez-Pérez, M.A.; Cross, M.R.; Jimenez-Reyes, P. Individual Adaptation Kinetics Following Heavy Resisted Sprint Training. J. Strength Cond. Res. 2022, 36, 1158–1161.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).