Deep Learning-Based Detection of Honey Storage Areas in Apis mellifera Colonies for Predicting Physical Parameters of Honey via Linear Regression

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

2.1.1. Experimental Setup

2.1.2. Image Acquisition

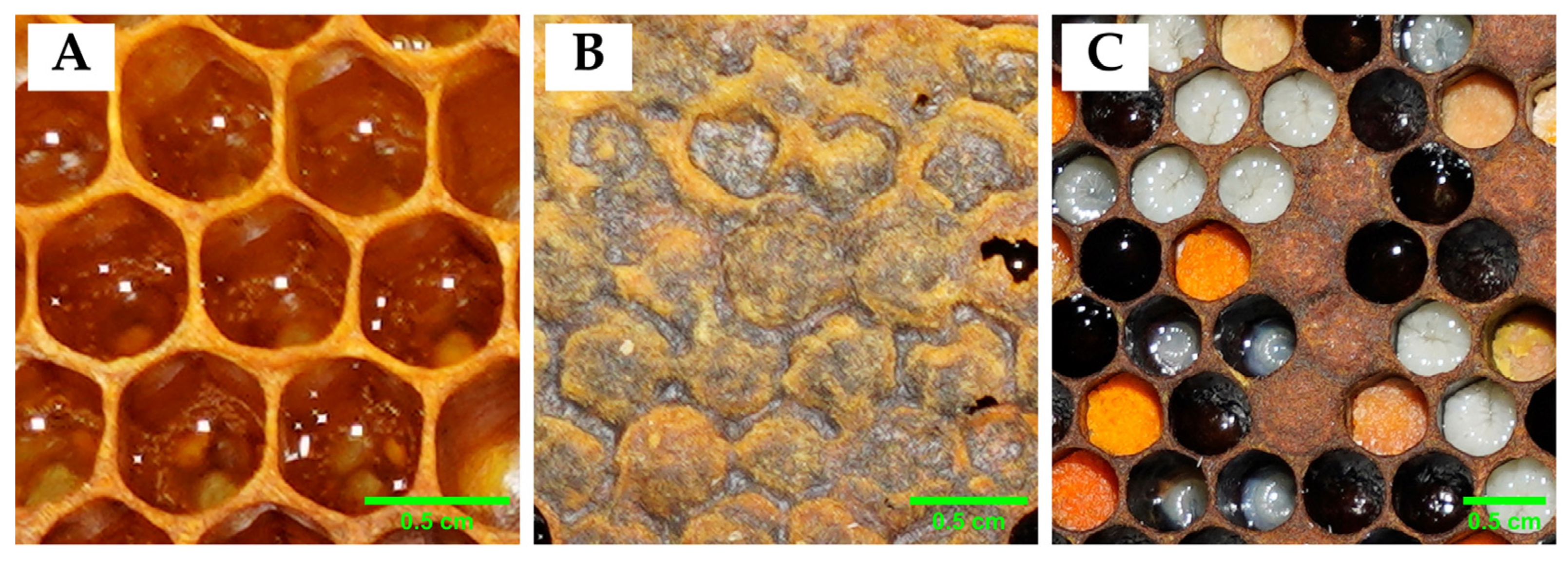

2.1.3. Image Annotation

2.1.4. Data Augmentation

2.2. Object Detection Model

Model Training and Validation

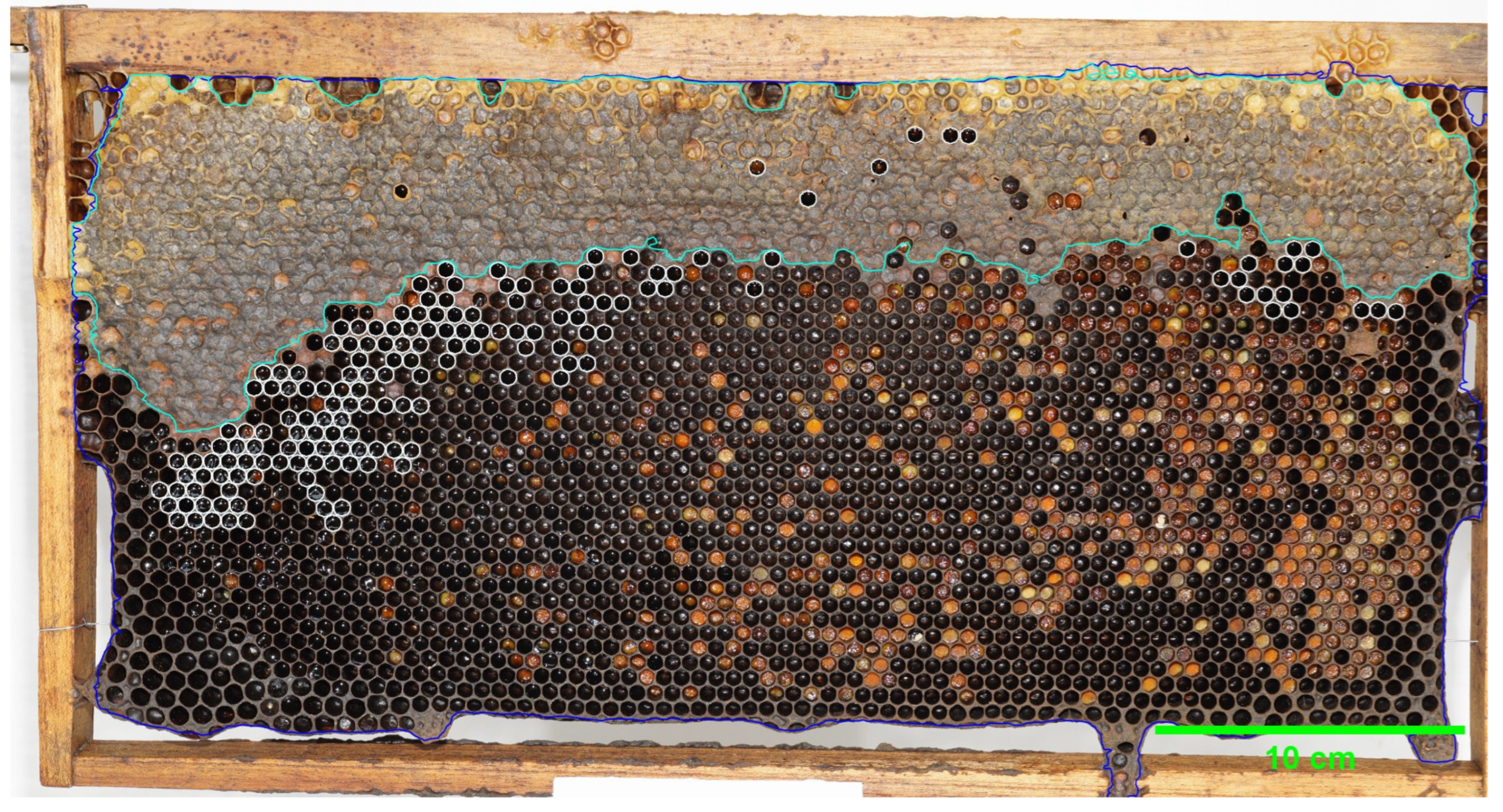

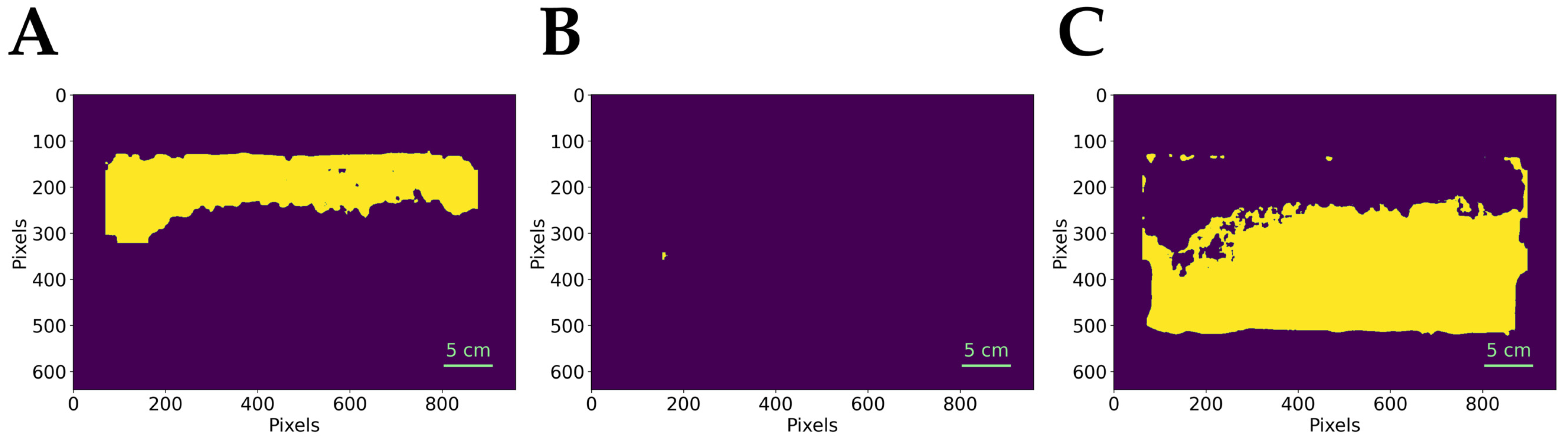

2.3. Data Extraction

2.4. Performance Metrics

2.4.1. Precision and Recall

2.4.2. Average Precision and Mean Average Precision
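Precision, recall, and the mAP@0.5 values used to compare the box and mask outputs all depend on matching each prediction to a ground-truth object at an IoU threshold. The sketch below is a minimal, single-image, single-class illustration under stated assumptions. Boxes are axis-aligned (x_min, y_min, x_max, y_max) tuples. A prediction counts as a true positive when its best-overlapping ground-truth box has IoU ≥ 0.5 and has not already been claimed. AP uses Pascal VOC-style all-point interpolation. The function names are hypothetical, and the framework used in the study may match and interpolate differently.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(preds: Sequence[Tuple[float, Box]],
                      gts: Sequence[Box],
                      iou_thr: float = 0.5) -> float:
    """AP for one class in one image; preds are (confidence, box) pairs."""
    if not gts:
        return 0.0
    ranked = sorted(preds, key=lambda p: p[0], reverse=True)
    matched = [False] * len(gts)
    precisions: List[float] = []
    recalls: List[float] = []
    tp = 0
    for k, (_, box) in enumerate(ranked, start=1):
        # Match to the ground-truth box with the highest IoU; a box already
        # claimed by a higher-confidence prediction makes this one a false
        # positive (Pascal VOC convention).
        overlaps = [iou(box, g) for g in gts]
        j = max(range(len(gts)), key=overlaps.__getitem__)
        if overlaps[j] >= iou_thr and not matched[j]:
            matched[j] = True
            tp += 1
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # All-point interpolation: make precision monotonically non-increasing
    # from right to left, then integrate it over recall.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)
```

For mask mAP, `iou` would be computed between pixel masks rather than boxes, and a full evaluator ranks detections pooled over all test images before computing AP for each class.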

2.4.3. Honey Area Acquisition and Linear Regression Fitting of the Datasets
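Fitting honey area to a physical parameter along a regression line, and summarizing the fit with r and R², can be sketched with ordinary least squares. This minimal version assumes a single predictor x (e.g., the detected honey area) and a single response y (e.g., pH, electrical conductivity, moisture content, or color); the function name is hypothetical and the authors' exact fitting procedure is not reproduced here.

```python
from math import sqrt
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float, float]:
    """Ordinary least squares fit of y = intercept + slope * x.

    Returns (slope, intercept, r, r_squared).
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired observations")
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must both vary")
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / sqrt(sxx * syy)  # Pearson correlation coefficient
    # Coefficient of determination from the residual sum of squares;
    # for simple linear regression with an intercept it equals r ** 2.
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    r_squared = 1.0 - ss_res / syy
    return slope, intercept, r, r_squared
```

As a toy check (not study data), `fit_line([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])` gives a slope of about 0.6, an intercept of about 2.2, and R² of about 0.6. For the P values listed in the Abbreviations, `scipy.stats.linregress` returns the slope, intercept, r, and two-sided p-value in a single call.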

2.5. Assessment and Measurement of Honey Physical Parameters in Honeybee Hives

2.5.1. pH Measurement

2.5.2. Electrical Conductivity (EC)

2.5.3. Moisture Content

2.5.4. Color Measurement

3. Results

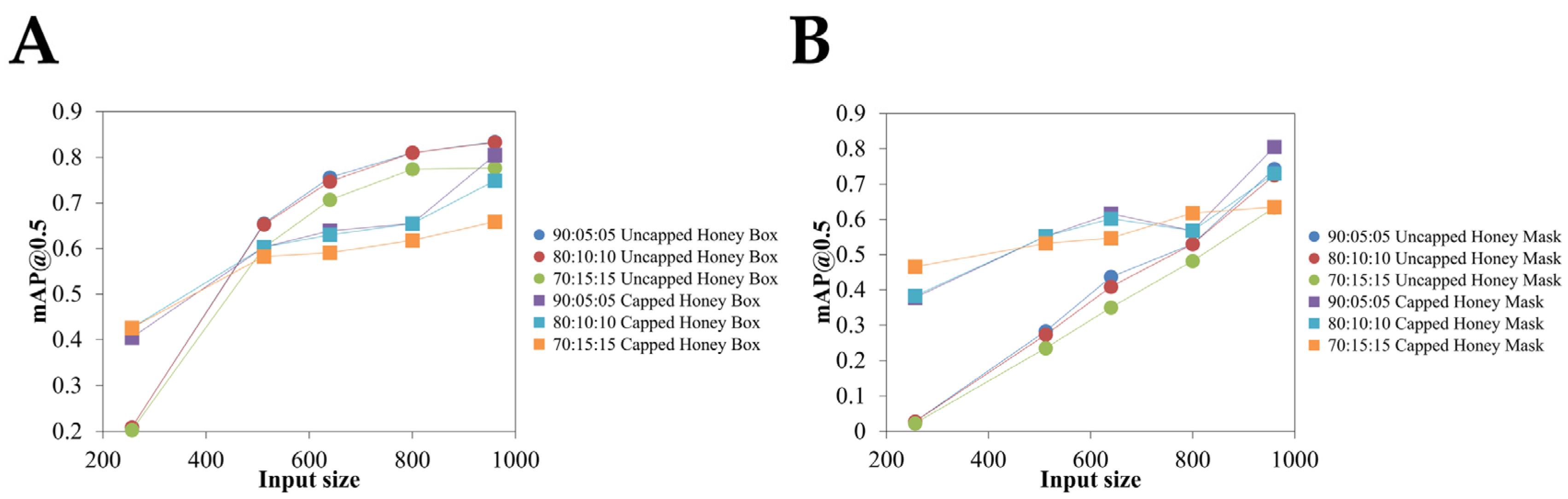

3.1. Model Performance

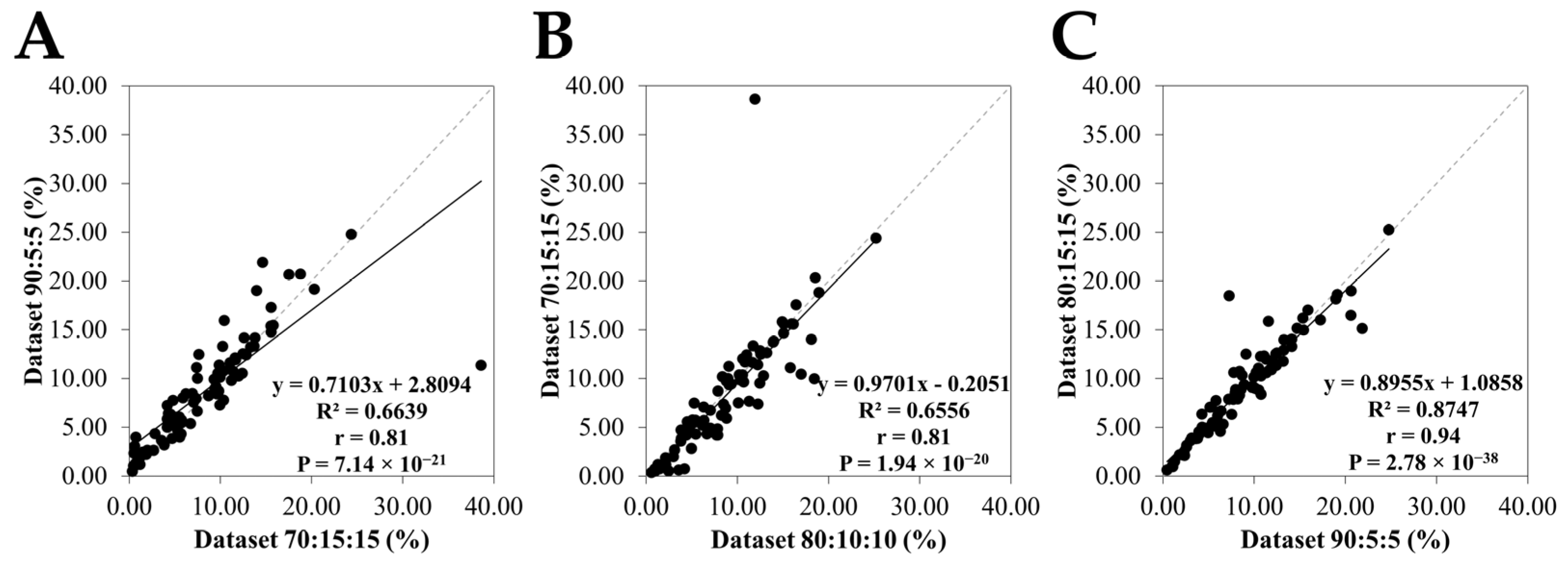

3.2. Comparison of Honey Area Estimates Among Different Datasets

3.3. Relationship Between the Physical Parameters of Honey and the Honey Area

4. Discussion

4.1. Model Performance and Scalability

4.2. Association Between Regional Factors and Honey Quality Parameters

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| AI | Artificial Intelligence |
| mAP | Mean average precision |
| mAP@0.5 | Mean average precision calculated at an intersection over union (IoU) threshold of 0.50 |
| RGB | Red, Green, Blue |
| R² | Coefficient of determination |
| r | Correlation coefficient |
| P | Probability value (statistical significance) |


| Class | Description | Number of Annotations |
|---|---|---|
| Uncap | Polygon around an uncapped honey cell | 62,520 |
| Cap | Polygon around a capped honey cell | 607 |
| Other | Polygon around an area of beeswax | 300 |

| Category | Techniques Used | Description |
|---|---|---|
| Preprocessing | Auto-orient | Ensured all images were correctly oriented |
| | Resize | Stretched images to 960 × 960 pixels |
| | Auto-adjust contrast | Applied adaptive equalization for contrast |
| | Filter null | Ensured all images contained annotations |
| Augmentation | Flip | Horizontal flipping |
| | Hue | Between −5° and +5° |
| | Saturation | Between −10% and +10% |
| | Brightness | Between −10% and +10% |
| | Exposure | Between −5% and +5% |
| | Noise | Up to 1% of the pixels |
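The preprocessing and augmentation settings in the table above can be approximated in code. The NumPy sketch below covers the stretch resize to 960 × 960 pixels, horizontal flipping, brightness changes within ±10%, and noise on up to 1% of the pixels; hue, saturation, exposure, and adaptive contrast equalization are omitted for brevity (scikit-image's `exposure.equalize_adapthist` is one CLAHE implementation). Nearest-neighbour interpolation, multiplicative brightness scaling, and random-colour pixel noise are assumptions rather than settings reported by the authors, and all function names are hypothetical. Geometric steps also transform the polygon labels so they stay aligned with the image.

```python
from typing import List, Tuple

import numpy as np

Poly = List[Tuple[float, float]]  # polygon vertices (x, y) in pixels, assumed format


def stretch_resize(img: np.ndarray, size: int = 960) -> np.ndarray:
    """Nearest-neighbour resize to size x size, ignoring aspect ratio ("stretch")."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows[:, None], cols[None, :]]


def scale_polygon(poly: Poly, src_w: int, src_h: int, size: int = 960) -> Poly:
    """Rescale polygon vertices to match the stretched image."""
    sx, sy = size / src_w, size / src_h
    return [(x * sx, y * sy) for x, y in poly]


def hflip(img: np.ndarray, poly: Poly) -> Tuple[np.ndarray, Poly]:
    """Mirror the image left-right and mirror the polygon's x coordinates."""
    w = img.shape[1]
    return img[:, ::-1].copy(), [(w - x, y) for x, y in poly]


def adjust_brightness(img: np.ndarray, rng: np.random.Generator,
                      max_delta: float = 0.10) -> np.ndarray:
    """Scale intensities by a random factor in [1 - max_delta, 1 + max_delta)."""
    factor = 1.0 + rng.uniform(-max_delta, max_delta)
    return np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)


def add_pixel_noise(img: np.ndarray, rng: np.random.Generator,
                    max_fraction: float = 0.01) -> np.ndarray:
    """Overwrite a random fraction (up to max_fraction) of pixels with random colours."""
    out = img.copy()
    h, w = out.shape[:2]
    n = int(rng.uniform(0.0, max_fraction) * h * w)
    ys = rng.integers(0, h, size=n)
    xs = rng.integers(0, w, size=n)
    out[ys, xs] = rng.integers(0, 256, size=(n,) + out.shape[2:], dtype=np.uint8)
    return out


def augment(img: np.ndarray, poly: Poly, rng: np.random.Generator) -> Tuple[np.ndarray, Poly]:
    """Stretch to 960 x 960, then apply a random flip, brightness change, and noise."""
    h, w = img.shape[:2]
    img, poly = stretch_resize(img), scale_polygon(poly, w, h)
    if rng.random() < 0.5:
        img, poly = hflip(img, poly)
    img = adjust_brightness(img, rng)
    img = add_pixel_noise(img, rng)
    return img, poly
```

Usage: `augment(frame, polygon, np.random.default_rng(0))` returns a 960 × 960 image together with its transformed polygon; seeding the generator makes an augmented dataset reproducible.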

| Input Resolution | Dataset Split (Training:Validation:Testing) | Uncapped Honey Cells, Box mAP@0.5 | Uncapped Honey Cells, Mask mAP@0.5 | Capped Honey Cells, Box mAP@0.5 | Capped Honey Cells, Mask mAP@0.5 |
|---|---|---|---|---|---|
| 960 × 960 | 90:5:5 | 0.834 | 0.743 | 0.805 | 0.805 |
| | 80:10:10 | 0.830 | 0.725 | 0.749 | 0.730 |
| | 70:15:15 | 0.777 | 0.634 | 0.659 | 0.635 |
| 800 × 800 | 90:5:5 | 0.842 | 0.513 | 0.775 | 0.681 |
| | 80:10:10 | 0.810 | 0.530 | 0.655 | 0.568 |
| | 70:15:15 | 0.774 | 0.482 | 0.604 | 0.618 |
| 640 × 640 | 90:5:5 | 0.773 | 0.434 | 0.790 | 0.720 |
| | 80:10:10 | 0.756 | 0.437 | 0.639 | 0.616 |
| | 70:15:15 | 0.709 | 0.405 | 0.591 | 0.547 |
| 512 × 512 | 90:5:5 | 0.655 | 0.273 | 0.685 | 0.638 |
| | 80:10:10 | 0.658 | 0.280 | 0.590 | 0.552 |
| | 70:15:15 | 0.597 | 0.255 | 0.559 | 0.582 |
| 256 × 256 | 90:5:5 | 0.229 | 0.028 | 0.505 | 0.378 |
| | 80:10:10 | 0.234 | 0.034 | 0.415 | 0.363 |
| | 70:15:15 | 0.209 | 0.028 | 0.428 | 0.466 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khokthong, W.; Kritangkoon, P.; Sinpoo, C.; Takioawong, P.; Phokasem, P.; Disayathanoowat, T. Deep Learning-Based Detection of Honey Storage Areas in Apis mellifera Colonies for Predicting Physical Parameters of Honey via Linear Regression. Insects 2025, 16, 575. https://doi.org/10.3390/insects16060575

Khokthong W, Kritangkoon P, Sinpoo C, Takioawong P, Phokasem P, Disayathanoowat T. Deep Learning-Based Detection of Honey Storage Areas in Apis mellifera Colonies for Predicting Physical Parameters of Honey via Linear Regression. Insects. 2025; 16(6):575. https://doi.org/10.3390/insects16060575

Chicago/Turabian Style: Khokthong, Watit, Panpakorn Kritangkoon, Chainarong Sinpoo, Phuwasit Takioawong, Patcharin Phokasem, and Terd Disayathanoowat. 2025. "Deep Learning-Based Detection of Honey Storage Areas in Apis mellifera Colonies for Predicting Physical Parameters of Honey via Linear Regression" Insects 16, no. 6: 575. https://doi.org/10.3390/insects16060575

APA Style: Khokthong, W., Kritangkoon, P., Sinpoo, C., Takioawong, P., Phokasem, P., & Disayathanoowat, T. (2025). Deep Learning-Based Detection of Honey Storage Areas in Apis mellifera Colonies for Predicting Physical Parameters of Honey via Linear Regression. Insects, 16(6), 575. https://doi.org/10.3390/insects16060575