On the Study of Joint YOLOv5-DeepSort Detection and Tracking Algorithm for Rhynchophorus ferrugineus

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

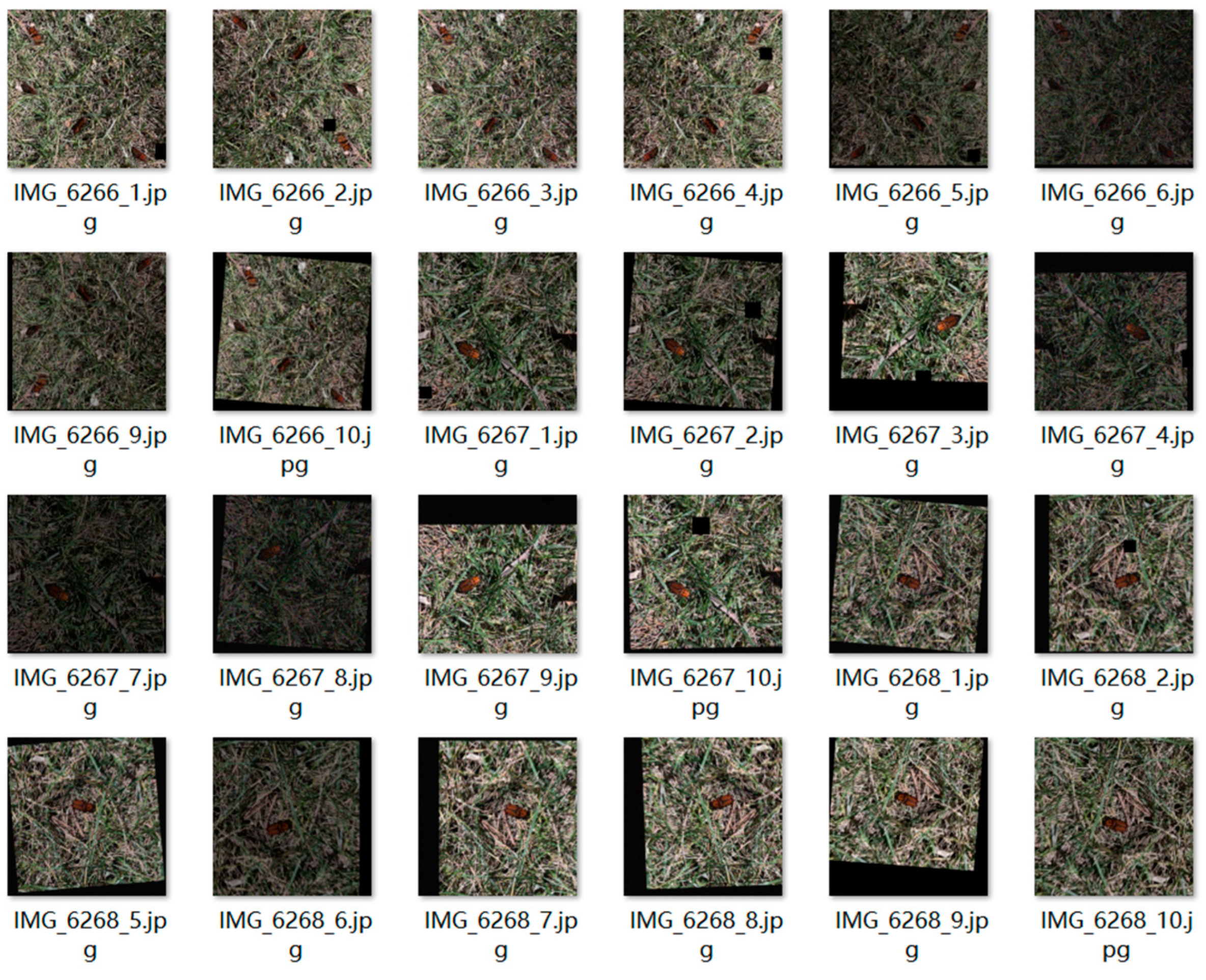

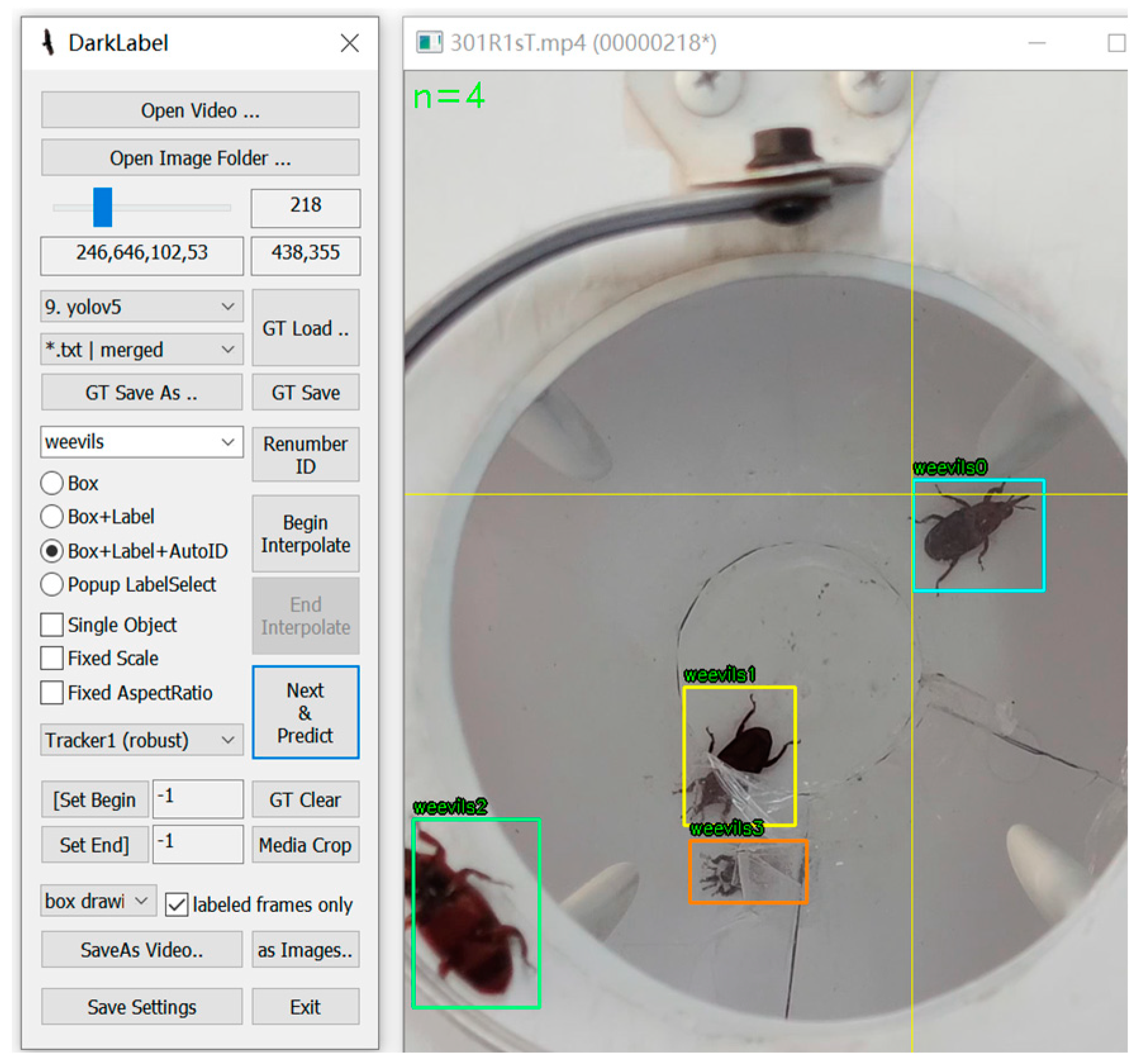

2.1. Dataset Acquisition and Labeling

2.2. Data Augmentation

3. Detecting and Tracking Principles

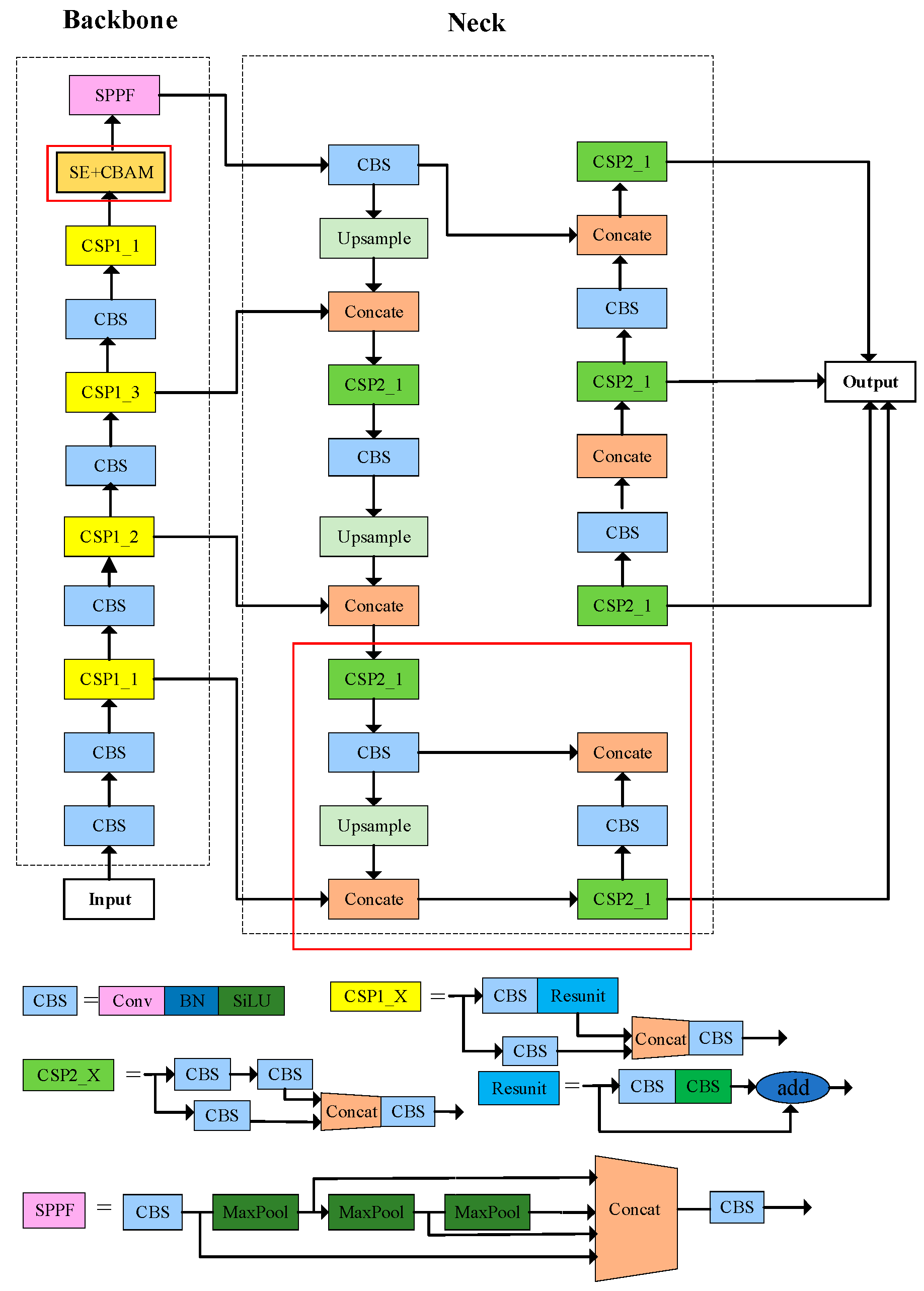

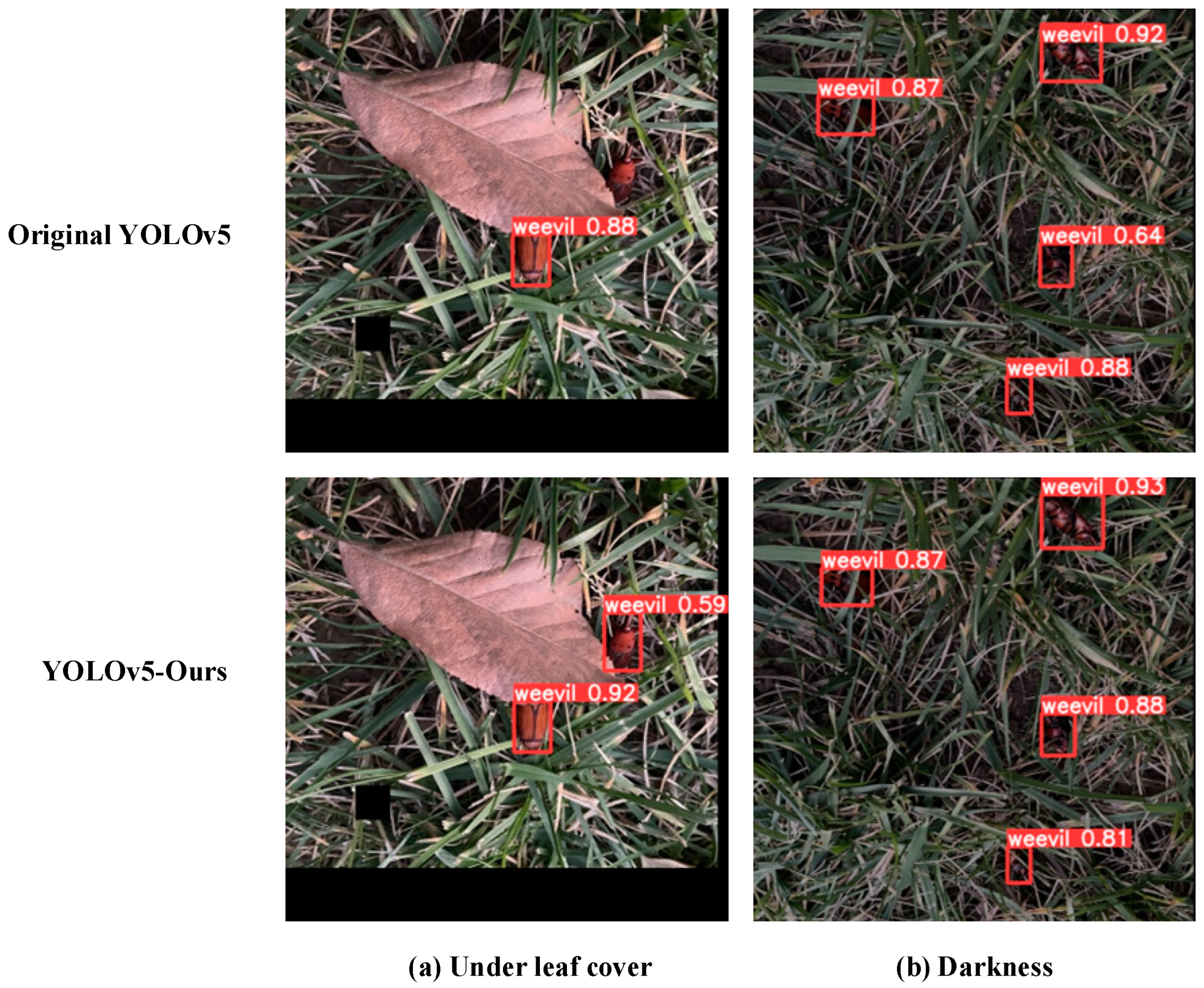

3.1. Improved YOLOv5 Model

- (1) Improving the feature extraction network: The red palm weevil (RPW) is a small target that occupies only a small proportion of each image, which makes its features difficult to extract. To address this, a 4× down-sampling feature layer is added to the feature extraction network, improving its ability to capture the location information of small targets.

- (2) Fusing attention mechanisms: To improve detection of the RPW when some of its features are missing, Squeeze-and-Excitation (SE) [28] and Convolutional Block Attention Module (CBAM) [29] attention mechanisms are added to the original YOLOv5. These mechanisms strengthen the algorithm's local feature extraction and improve detection performance. The improved YOLOv5 network is shown in Figure 5, with the modified parts outlined in a red box.
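The benefit of the extra 4× layer in item (1) can be made concrete with a little arithmetic. Standard YOLOv5 predicts on feature maps down-sampled 8×, 16× and 32×; the sketch below, which assumes a 640 × 640 input (YOLOv5's default image size) and a hypothetical 16-pixel RPW, counts how many feature-map cells such an object spans at each stride. The object size is illustrative only and is not taken from the paper's dataset.

```python
# Sketch: grid cells spanned by a small object at each down-sampling stride.
# Assumptions (hypothetical, not from the paper): 640x640 input, 16x16-pixel RPW.

INPUT_SIZE = 640       # YOLOv5's default image size
OBJECT_SIZE_PX = 16    # hypothetical side length of a small RPW, in pixels


def grid_size(input_size: int, stride: int) -> int:
    """Side length of the feature map produced at a given stride."""
    return input_size // stride


def cells_per_object(object_px: float, stride: int) -> float:
    """Number of feature-map cells the object covers along one side."""
    return object_px / stride


def summarize(strides):
    """Return (stride, grid side, cells per object side) for each stride."""
    return [(s, grid_size(INPUT_SIZE, s), cells_per_object(OBJECT_SIZE_PX, s))
            for s in strides]


# 8x, 16x and 32x are YOLOv5's default scales; 4x is the added small-target layer.
for stride, grid, cells in summarize([4, 8, 16, 32]):
    print(f"stride {stride:>2}: {grid}x{grid} grid, object spans {cells:.2f} cells per side")
```

Under these assumptions the object covers only half a cell per side at stride 32, so its position is encoded very coarsely, whereas at stride 4 it spans a 4 × 4 block of cells on a 160 × 160 grid. This is the intuition behind adding a higher-resolution layer for small targets, at the cost of extra computation.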

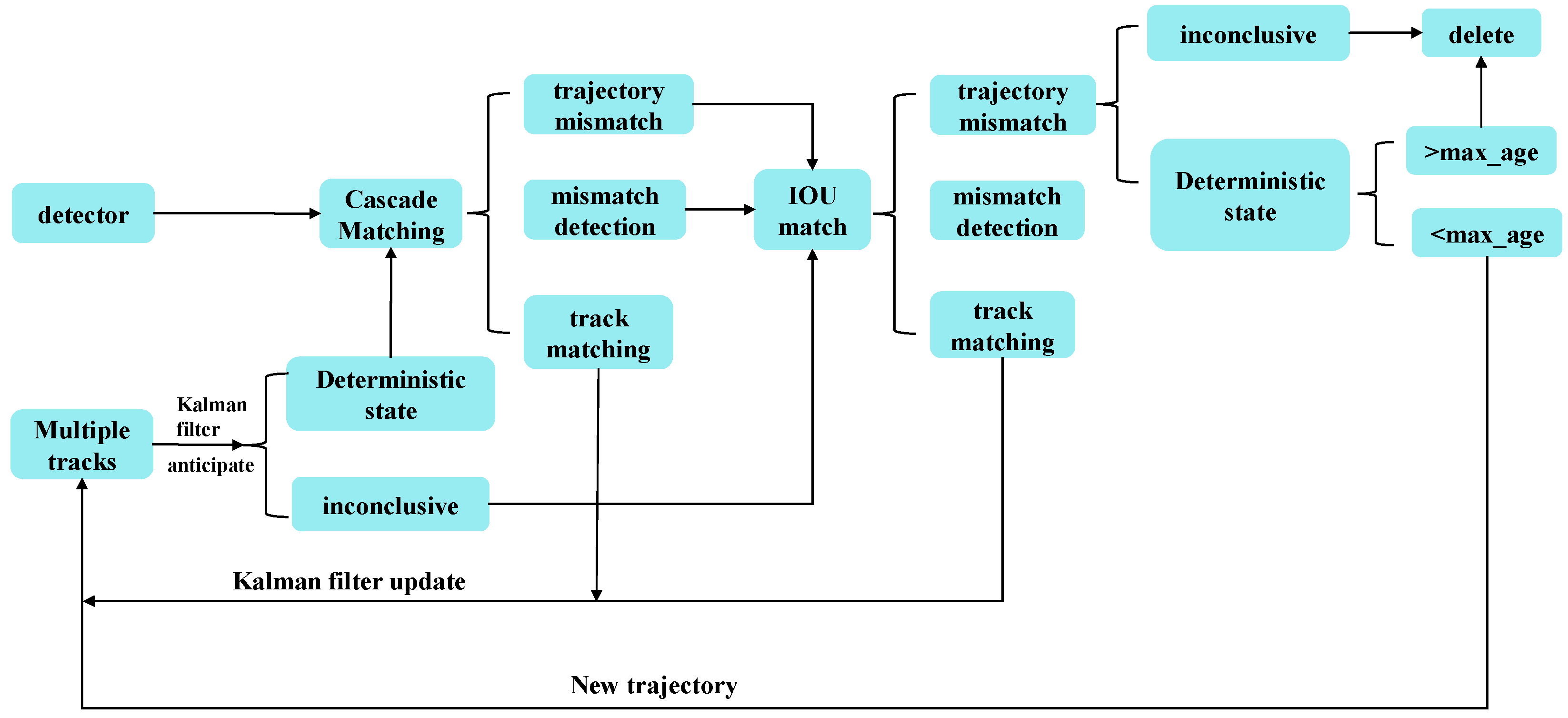

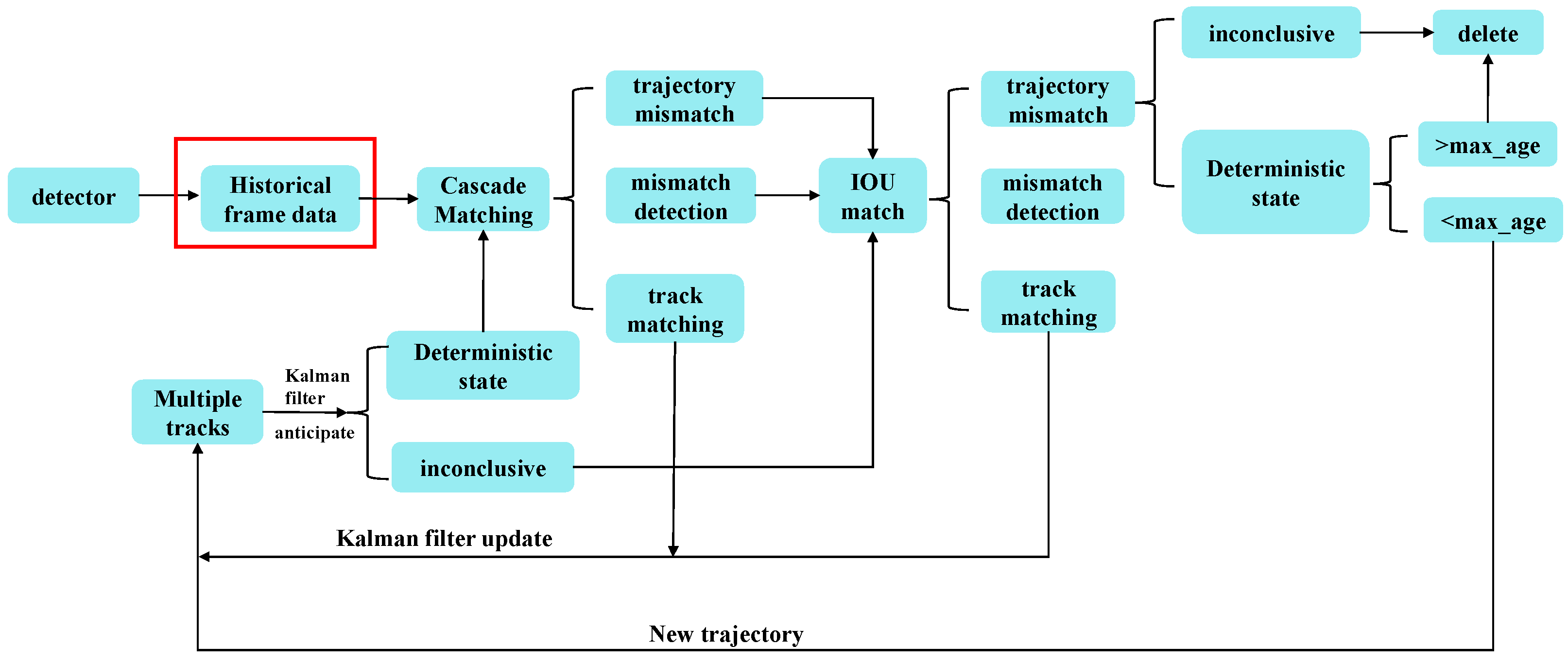

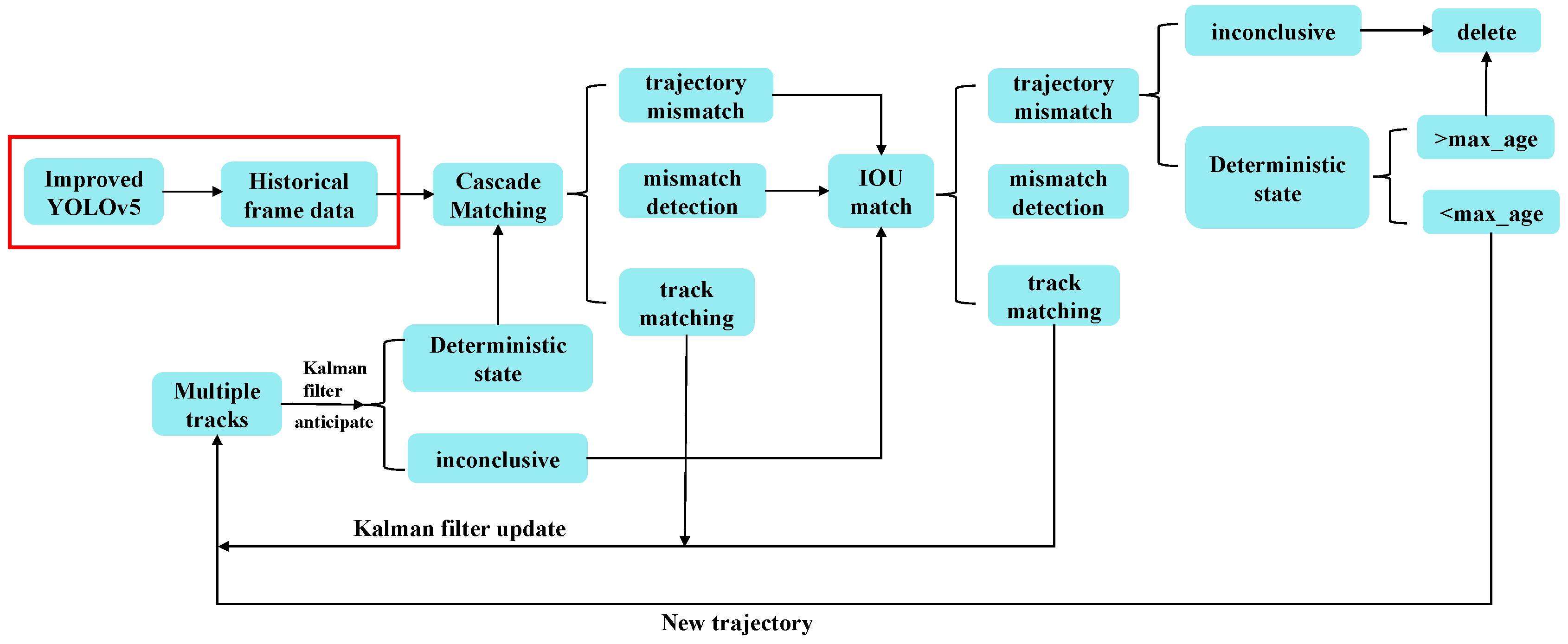
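The two attention modules named in item (2) can be sketched in NumPy as follows. SE squeezes each channel by global average pooling and re-weights channels through a two-layer bottleneck with a sigmoid; CBAM applies a channel attention (a shared MLP over average- and max-pooled descriptors) followed by a spatial attention (a 7 × 7 convolution over channel-wise mean and max maps). This is a minimal forward-pass sketch under stated assumptions: the weights are untrained random placeholders, biases are omitted, the sizes and reduction ratio r = 4 are hypothetical, and where the modules sit in the improved network (Figure 5) is not reproduced.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def relu(x):
    return np.maximum(x, 0.0)


def se_block(x, w1, w2):
    """Squeeze-and-Excitation on a (C, H, W) map; w1: (C//r, C), w2: (C, C//r)."""
    z = x.mean(axis=(1, 2))              # squeeze: global average pooling -> (C,)
    s = sigmoid(w2 @ relu(w1 @ z))       # excitation: one weight in (0, 1) per channel
    return x * s[:, None, None]          # re-weight channels


def cbam_channel(x, w1, w2):
    """CBAM channel attention: a shared MLP applied to average- and max-pooled descriptors."""
    def mlp(v):
        return w2 @ relu(w1 @ v)
    mc = sigmoid(mlp(x.mean(axis=(1, 2))) + mlp(x.max(axis=(1, 2))))
    return x * mc[:, None, None]


def cbam_spatial(x, kernel):
    """CBAM spatial attention: a k x k convolution (odd k; 7 in CBAM) over the
    channel-wise mean and max maps; kernel has shape (2, k, k)."""
    feat = np.stack([x.mean(axis=0), x.max(axis=0)])         # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(feat, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H, W
    h, w = feat.shape[1:]
    logits = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            logits[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return x * sigmoid(logits)[None, :, :]                   # re-weight spatial positions


def cbam(x, w1, w2, kernel):
    """Channel attention first, then spatial attention, as in CBAM."""
    return cbam_spatial(cbam_channel(x, w1, w2), kernel)


# Demo with hypothetical sizes and untrained random weights.
rng = np.random.default_rng(0)
C, H, W, r = 16, 20, 20, 4
x = rng.standard_normal((C, H, W))
se_w1, se_w2 = rng.standard_normal((C // r, C)) * 0.1, rng.standard_normal((C, C // r)) * 0.1
cb_w1, cb_w2 = rng.standard_normal((C // r, C)) * 0.1, rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
print(se_block(x, se_w1, se_w2).shape, cbam(x, cb_w1, cb_w2, kernel).shape)
```

Both modules only rescale the input by factors in (0, 1), so they keep the feature-map shape and can be dropped into an existing backbone or neck without changing the layers around them.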

3.2. DeepSort Target Tracking Algorithm
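As a generic illustration of the standard DeepSort association step (Wojke et al.), and not of the improved version described in Section 3.3, the sketch below builds a cost matrix between predicted tracks and new detections. Each pair is gated by the squared Mahalanobis distance of the detection box (x, y, aspect ratio, height) from the track's predicted state, compared against 9.4877 (the 0.95 chi-square quantile for 4 degrees of freedom used in DeepSort), and by a cosine distance between appearance embeddings; admissible pairs are then matched at minimum total cost. Simplifying assumptions, all hypothetical: a diagonal covariance stands in for the Kalman filter's projected covariance, each track keeps a single embedding, the appearance gate (0.2) and weight λ = 0 are example values, an exhaustive search replaces the Hungarian algorithm (valid only for small inputs), and the matching cascade is omitted.

```python
import itertools
import math

CHI2_GATE_4DOF = 9.4877   # 0.95 chi-square quantile, 4 DoF (DeepSort's motion gate)
MAX_COSINE = 0.2          # hypothetical appearance gate
LAMBDA = 0.0              # hypothetical weight of the motion term in the combined cost
GATED = 1e5               # large finite cost marking inadmissible pairs


def mahalanobis_sq(measurement, mean, var):
    """Squared Mahalanobis distance with a diagonal covariance (simplification)."""
    return sum((z - m) ** 2 / v for z, m, v in zip(measurement, mean, var))


def cosine_distance(a, b):
    dot = sum(p * q for p, q in zip(a, b))
    return 1.0 - dot / (math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b)))


def cost_matrix(tracks, detections):
    """Rows are tracks, columns are detections; gated pairs get GATED."""
    cost = []
    for trk in tracks:
        row = []
        for det in detections:
            d1 = mahalanobis_sq(det["box"], trk["mean"], trk["var"])
            d2 = cosine_distance(det["emb"], trk["emb"])
            if d1 > CHI2_GATE_4DOF or d2 > MAX_COSINE:
                row.append(GATED)
            else:
                row.append(LAMBDA * d1 + (1 - LAMBDA) * d2)
        cost.append(row)
    return cost


def best_assignment(cost):
    """Exhaustive minimum-cost matching (stand-in for the Hungarian algorithm)."""
    n_t = len(cost)
    n_d = len(cost[0]) if n_t else 0
    k = min(n_t, n_d)
    best_total, best_pairs = math.inf, []
    for trk_ids in itertools.combinations(range(n_t), k):
        for det_ids in itertools.permutations(range(n_d), k):
            pairs = list(zip(trk_ids, det_ids))
            total = sum(cost[t][d] for t, d in pairs)
            if total < best_total:
                best_total, best_pairs = total, pairs
    return [(t, d) for t, d in best_pairs if cost[t][d] < GATED]  # drop gated pairs


# Hypothetical example: two tracked weevils, detections arriving in swapped order.
tracks = [
    {"mean": (100, 100, 1.0, 20), "var": (4, 4, 0.01, 4), "emb": (1.0, 0.0, 0.0)},
    {"mean": (200, 150, 1.0, 20), "var": (4, 4, 0.01, 4), "emb": (0.0, 1.0, 0.0)},
]
detections = [
    {"box": (201, 149, 1.0, 21), "emb": (0.1, 0.99, 0.0)},
    {"box": (99, 101, 1.0, 20), "emb": (0.98, 0.05, 0.0)},
]
print(best_assignment(cost_matrix(tracks, detections)))  # (track, detection) pairs
```

Here track 0 is matched to detection 1 and track 1 to detection 0, because the cross pairings fail the motion gate. Matched detections update their tracks; unmatched detections would start new tentative tracks.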

3.3. Improved DeepSort Algorithm

3.4. The Joint YOLOv5-DeepSort Algorithm

4. Experiment and Results Analysis

4.1. Experimental Equipment and Parameter Settings

- (1) Learning rate: set to 0.01; controls the step size with which the model updates its weights at each training iteration.

- (2) Momentum: set to 0.937; speeds up convergence and damps oscillations during training.

- (3) Weight decay: set to 0.0005; an L2 regularization coefficient that penalizes large weights, reducing overfitting and helping to keep training stable.

- (4) Batch size: set to 8; the number of samples processed together in each iteration.
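To show how these four settings interact, the sketch below runs mini-batch SGD with momentum and L2 weight decay (the update form used by PyTorch's `torch.optim.SGD` without Nesterov momentum or dampening) on a toy one-parameter problem. The values 0.01, 0.937, 0.0005 and 8 are the ones listed above and also match YOLOv5's default hyperparameters; the quadratic loss and the target data are hypothetical, and YOLOv5's own training loop adds warm-up, a learning-rate schedule, Nesterov momentum and per-parameter-group decay, all omitted here.

```python
LR = 0.01             # learning rate: scales every update
MOMENTUM = 0.937      # fraction of the previous velocity carried forward
WEIGHT_DECAY = 0.0005 # L2 coefficient added to the gradient
BATCH_SIZE = 8        # samples averaged per update


def sgd_momentum_step(w, v, grad):
    """One SGD step with momentum and L2 weight decay (PyTorch-style, no Nesterov)."""
    g = grad + WEIGHT_DECAY * w   # weight decay acts on the weights via the gradient
    v = MOMENTUM * v + g          # momentum buffer (velocity)
    w = w - LR * v                # step scaled by the learning rate
    return w, v


def train(targets, epochs):
    """Fit a scalar w to minimise the mean of 0.5 * (w - t)^2 over hypothetical targets."""
    w, v = 0.0, 0.0
    for _ in range(epochs):
        for start in range(0, len(targets), BATCH_SIZE):
            batch = targets[start:start + BATCH_SIZE]
            grad = sum(w - t for t in batch) / len(batch)  # mean gradient over the batch
            w, v = sgd_momentum_step(w, v, grad)
    return w


targets = [2.0, 4.0] * 32   # hypothetical data with mean 3.0
print(train(targets, 50))   # converges close to 3 / (1 + WEIGHT_DECAY)
```

Without weight decay the optimum would be exactly 3.0; the L2 term pulls it slightly toward zero, to 3 / 1.0005 ≈ 2.9985, which illustrates that the decay coefficient regularizes the weights rather than changing the learning rate.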

4.2. Evaluation Metrics for the Red Palm Weevil Dataset

4.2.1. Target Detection Dataset Evaluation Indicators

4.2.2. Target Tracking Dataset Evaluation Indicators

4.3. Experiments and Analysis of Results

4.3.1. Improved YOLOv5 Experiments and Results Analysis

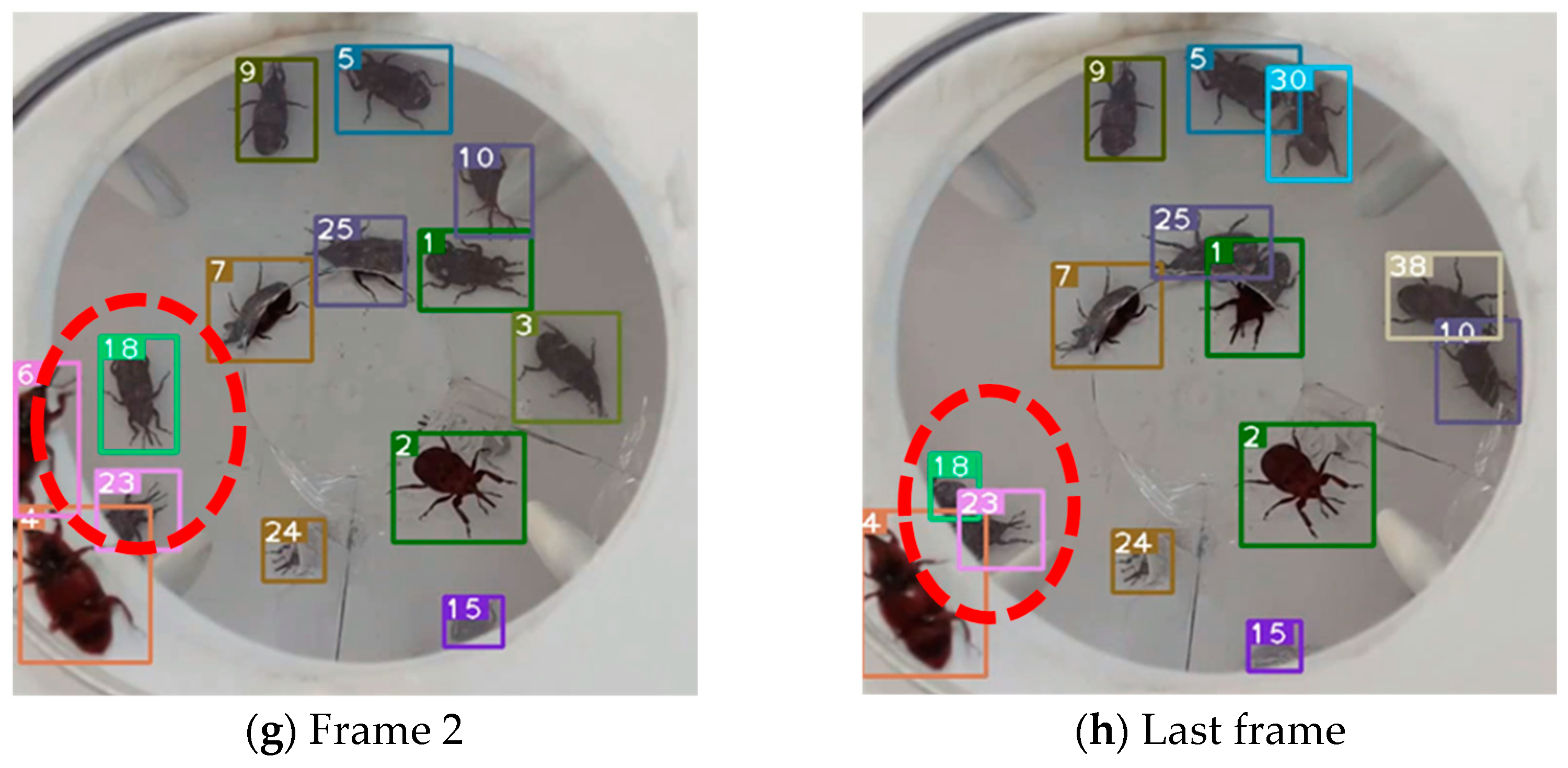

4.3.2. The Joint YOLOv5-DeepSort Performance Analysis

5. Conclusions

- (1) Expand the sample dataset. The proposed model is versatile in object detection and tracking and could be extended to detecting and counting other pests. To make the algorithm applicable to other pest management systems, future work will expand the dataset by collecting more raw data from similar insects, covering more species and targets. Because the RPW closely resembles some other insects, the current model may not classify and detect them accurately; further research is therefore needed to integrate information from other modalities to improve the accuracy of insect classification and detection.

- (2) Continue to improve model performance. Future work will further train and optimize deep learning models on the augmented data to improve recognition of species with similar shapes, especially small insects that look alike and have less distinctive features. In addition, the test experiments in this article involved a limited number of individuals, a single scenario, and few target types; hardware experiments using controlled variables could broaden the algorithm's applicability and improve the system's practical performance.

- (3) Research on model lightweighting. This work fused optimized detection and tracking algorithms to monitor and count the RPW, but the resulting joint model is relatively large, which increases the overall computational cost. Because lightweight models are important for practical deployment, future work can investigate reducing model complexity and storage requirements while maintaining performance. Lightweight fusion models would provide more useful references and guidance for applying RPW monitoring and counting models in practice.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Qin, W.; Zhao, H.; Han, C. Occurrence and control of RPW in Hainan. Yunnan Therm. Sci. Technol. 2002, 25, 29–30.

- Song, Y. Introduction to nineteen quarantine pests in forestry. Chin. For. Pests Dis. 2005, 24, 30–35.

- Zhao, X.; Li, H.; Hu, X. A review of deep learning-based techniques for automatic identification of field pests. J. Image Signal Process. 2023, 12, 77–88.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Salvador, A.; Giró-i-Nieto, X.; Marqués, F.; Satoh, S.I. Faster R-CNN features for instance search. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 27–30 June 2016; pp. 9–16.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA; pp. 779–788.

- He, B.; Zhang, Y.; Gong, J.; Fu, G.; Zhao, Y.; Wu, R. Fast identification of tomato fruits in greenhouse at night based on improved YOLO v5. J. Agric. Mach. 2022, 53, 201–208.

- Wu, S.; Wang, J.; Liu, L.; Chen, D.; Lu, H.; Xu, C.; Hao, R.; Li, Z.; Wang, Q. Enhanced YOLOv5 Object Detection Algorithm for Accurate Detection of Adult Rhynchophorus ferrugineus. Insects 2023, 14, 698.

- Ciaparrone, G.; Sánchez, F.L.; Tabik, S.; Troiano, L.; Tagliaferri, R.; Herrera, F. Deep Learning in Video Multi-Object Tracking: A Survey. arXiv 2019, arXiv:1907.12740.

- Wax, N. Signal-to-noise improvement and the statistics of track populations. J. Appl. Phys. 1955, 26, 586–595.

- Chan, Y.T.; Hu, A.G.C.; Plant, J.B. A Kalman filter based tracking scheme with input estimation. IEEE Trans. Aerosp. Electron. Syst. 1979, 15, 237–244.

- Xu, J.; Li, J.; Xu, S. Quantized innovations Kalman filter: Stability and modification with scaling quantization. J. Zhejiang Univ. Sci. C 2012, 13, 118–130.

- Zhang, Y.; Lu, H.; Zhang, L. A review of visual multi-target tracking algorithms based on deep learning. Comput. Eng. Appl. 2021, 57, 55–66.

- Li, X.; Cha, Y.; Zhang, T. A review of target tracking algorithms for deep learning. Chin. J. Image Graph. 2019, 24, 2057–2080.

- Zheng, C.; Yan, X.; Gao, J.; Zhao, W.; Zhang, W.; Li, Z.; Cui, S. Box-aware feature enhancement for single object tracking on point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 13199–13208.

- Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards real-time multi-object tracking. In Proceedings of the European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2020; pp. 107–122.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and real-time tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; IEEE: New York, NY, USA; pp. 3464–3468.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and real-time tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; IEEE: New York, NY, USA; pp. 3645–3649.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Li, Y.; Ma, R.; Zhang, M. Improvement of surveillance video traffic counting with YOLOv5s+DeepSORT. Comput. Eng. Appl. 2022, 58, 271–279.

- Jiang, L. Deep Learning Based Insect Recognition Method and Its Application. Master’s Thesis, Hunan Agricultural University, Changsha, China, 2019.

- Yuan, Z.; Gu, Y.; Ma, G. Improved CSTrack algorithm for multi-target tracking of multi-category ships. Opt. Eng. 2022, 49, 210372.

- Ting, L.; Baijun, Z.; Yongsheng, Z.; Shun, Y. Ship detection algorithm based on improved YOLO V5. In Proceedings of the 2021 6th International Conference on Automation, Control and Robotics Engineering (CACRE), Dalian, China, 15–17 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 483–487.

- Li, R.; Wu, Y. Improved YOLO v5 wheat ear detection algorithm based on attention mechanism. Electronics 2022, 11, 1673.

- Huang, L.; Yang, Y.; Yang, C.; Yang, W.; Li, Y. FS-YOLOv5: A lightweight infrared target detection method. Comput. Eng. Appl. 2023, 59, 215–224.

- Yang, L.; Yan, J.; Li, H.; Cao, X.; Ge, B.; Qi, Z.; Yan, X. Real-Time Classification of Invasive Plant Seeds Based on Improved YOLOv5 with Attention Mechanism. Diversity 2022, 14, 254.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Liu, Y.S.; Liu, W.K.; Liu, Q.H.; Zhe, T.T.; Wang, J.C. Pedestrian detection algorithm for shipboard vehicles based on YOLOX combined with DeepSort. Comput. Mod. 2023, 8, 60–67.

- Parico, A.I.B.; Ahamed, T. Real time pear fruit detection and counting using YOLOv4 models and deep SORT. Sensors 2021, 21, 4803.

- Qi, J.; Liu, X.; Liu, K. An improved YOLOv5 model based on visual attention mechanism: Application to recognition of tomato virus disease. Comput. Electron. Agric. 2022, 194, 106780.

- Song, L.; Xu, K.; Shi, X. Research on the method of multi-unmanned craft co-operation in rounding up intelligent escape targets. China Ship Res. 2023, 18, 52–59.

| 4× layer | SE | CBAM | P | R | F1 | mAP@0.5 | mAP@0.5:0.95 | FPS |
|---|---|---|---|---|---|---|---|---|
| × | × | × | 0.913 | 0.828 | 0.868 | 0.888 | 0.485 | 125 |
| √ | × | × | 0.923 | 0.813 | 0.865 | 0.893 | 0.486 | 123 |
| × | √ | × | 0.928 | 0.811 | 0.866 | 0.878 | 0.454 | 121 |
| × | × | √ | 0.932 | 0.795 | 0.858 | 0.849 | 0.437 | 122 |
| √ | √ | √ | 0.938 | 0.834 | 0.883 | 0.901 | 0.489 | 120 |
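The F1 column is the harmonic mean of precision P and recall R, F1 = 2PR / (P + R). As a consistency check, the short sketch below recomputes F1 from the P and R values in the ablation table; the row labels are informal names for the table rows, not the paper's terminology.

```python
def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


# (P, R, reported F1) copied from the ablation table above.
ABLATION = {
    "baseline": (0.913, 0.828, 0.868),
    "+4x": (0.923, 0.813, 0.865),
    "+SE": (0.928, 0.811, 0.866),
    "+CBAM": (0.932, 0.795, 0.858),
    "+4x+SE+CBAM": (0.938, 0.834, 0.883),
}

for name, (p, r, reported) in ABLATION.items():
    print(f"{name:<12} F1 = {f1_score(p, r):.3f} (reported {reported:.3f})")
```

Every recomputed value rounds to the reported F1. The harmonic mean is dominated by the smaller of P and R, which is why the CBAM-only row has the highest single-module precision but the lowest F1.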

| Model | P | R | F1 | mAP@0.5 | mAP@0.5:0.95 | FPS |
|---|---|---|---|---|---|---|
| YOLOv5 | 0.913 | 0.828 | 0.868 | 0.888 | 0.485 | 125 |
| YOLOv5-Ours | 0.938 | 0.834 | 0.883 | 0.901 | 0.489 | 120 |
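mAP@0.5 counts a detection as correct when its intersection over union (IoU) with an unmatched ground-truth box is at least 0.5, while mAP@0.5:0.95 averages the result over the ten IoU thresholds 0.50, 0.55, ..., 0.95, so it rewards tighter boxes. The sketch below illustrates both on hypothetical boxes, assuming a single RPW class (so the mean is taken over thresholds only), greedy score-ordered matching, and all-point interpolated AP; evaluation toolkits differ in interpolation details (COCO-style evaluation uses 101 recall points), so numbers from this sketch are not directly comparable with the table.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(dets, gts, thr):
    """Greedy matching in descending score order; returns one TP flag per detection."""
    used, flags = set(), []
    for _, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best_j, best_iou = None, thr
        for j, g in enumerate(gts):
            if j not in used and iou(box, g) >= best_iou:
                best_j, best_iou = j, iou(box, g)
        if best_j is not None:
            used.add(best_j)
        flags.append(best_j is not None)
    return flags


def average_precision(flags, n_gt):
    """All-point interpolated AP from score-ordered TP flags."""
    tp = fp = 0
    recalls, precisions = [], []
    for is_tp in flags:
        tp += int(is_tp)
        fp += int(not is_tp)
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    for i in range(len(precisions) - 2, -1, -1):   # make precision non-increasing
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def mean_ap(dets, gts, thresholds):
    return sum(average_precision(match(dets, gts, t), len(gts)) for t in thresholds) / len(thresholds)


COCO_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]  # 0.50 ... 0.95
GTS = [(10, 10, 30, 30), (50, 50, 70, 70)]                       # hypothetical ground truth
DETS = [(0.9, (11, 11, 31, 31)), (0.7, (50, 50, 66, 69)), (0.3, (100, 100, 120, 120))]
print(mean_ap(DETS, GTS, [0.5]), mean_ap(DETS, GTS, COCO_THRESHOLDS))
```

In this toy case both weevils are found at IoU 0.5, giving an AP of 1.0, but the two true positives have IoUs of about 0.82 and 0.76, so they fail the stricter thresholds and the 0.5:0.95 average drops to 0.65. The same effect explains why mAP@0.5:0.95 is much lower than mAP@0.5 in the tables.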

| Algorithm | MOTA | MOTP | IDS |
|---|---|---|---|
| Original DeepSort | 94.1% | 90.03% | 15 |
| Joint YOLOv5-DeepSort | 94.3% | 90.14% | 10 |
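The tracking metrics follow the CLEAR MOT definitions: MOTA = 1 − (FN + FP + IDSW) / GT, where false negatives, false positives and identity switches are summed over all frames and GT is the total number of ground-truth objects, while MOTP is the mean localization quality (here assumed to be box overlap) over matched track-object pairs. The sketch below encodes these formulas; the frame-level counts and overlaps are hypothetical, and only the ID-switch figures (15 and 10) come from the table.

```python
def mota(fn: int, fp: int, idsw: int, num_gt: int) -> float:
    """Multiple Object Tracking Accuracy (CLEAR MOT)."""
    return 1.0 - (fn + fp + idsw) / num_gt


def motp(matched_overlaps) -> float:
    """Multiple Object Tracking Precision as mean overlap of matched pairs."""
    return sum(matched_overlaps) / len(matched_overlaps)


def relative_reduction(before: float, after: float) -> float:
    return (before - after) / before


# Hypothetical counts, only to show the formulas.
print(f"MOTA = {mota(fn=12, fp=8, idsw=5, num_gt=500):.3f}")
print(f"MOTP = {motp([0.9, 0.8, 0.95, 0.85]):.3f}")

# ID switches from the table: original DeepSort 15, joint model 10.
print(f"ID switches reduced by {relative_reduction(15, 10):.1%}")  # 33.3%
```

Because ID switches enter MOTA only as one term among FN and FP, cutting them from 15 to 10 (a one-third reduction) moves MOTA by just 0.2 percentage points, yet it matters more for counting, where each switch can create a spurious new identity.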

| Method | Count |
|---|---|
| Manual | 17 |
| Anchor Frame | 19 |
| Joint YOLOv5-DeepSort | 16 |
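One common way to turn tracker output into a count is to count the distinct track IDs seen over a video; this section does not state that the joint model counts exactly this way, so the sketch below treats it as an assumption. It also computes the relative counting error of each method against the manual count of 17 from the table, which gives 11.8% for the anchor-frame method (19) and 5.9% for the joint YOLOv5-DeepSort model (16). The per-frame tracker output is hypothetical.

```python
def count_unique_ids(frames):
    """frames: per-frame lists of (track_id, box); counts distinct track IDs."""
    return len({track_id for frame in frames for track_id, _ in frame})


def relative_count_error(predicted: int, manual: int) -> float:
    return abs(predicted - manual) / manual


# Hypothetical tracker output: the second weevil's ID switches from 2 to 3,
# so two insects are counted as three.
EXAMPLE_FRAMES = [
    [(1, (0, 0, 10, 10)), (2, (20, 20, 30, 30))],
    [(1, (1, 1, 11, 11)), (3, (21, 21, 31, 31))],
]
print(count_unique_ids(EXAMPLE_FRAMES))

MANUAL = 17  # manual count from the table
for method, count in [("Anchor Frame", 19), ("Joint YOLOv5-DeepSort", 16)]:
    print(f"{method}: {relative_count_error(count, MANUAL):.1%}")  # 11.8%, 5.9%
```

The toy example shows why identity switches matter for counting by ID: a single switch inflates the count by one, so fewer ID switches translate directly into counts closer to the manual reference.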

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, S.; Wang, J.; Wei, W.; Ji, X.; Yang, B.; Chen, D.; Lu, H.; Liu, L. On the Study of Joint YOLOv5-DeepSort Detection and Tracking Algorithm for Rhynchophorus ferrugineus. Insects 2025, 16, 219. https://doi.org/10.3390/insects16020219
