Measuring the Flight Trajectory of a Free-Flying Moth on the Basis of Noise-Reduced 3D Point Cloud Time Series Data

Abstract

Simple Summary

1. Introduction

2. Materials and Methods

2.1. Sample Collection and Rearing

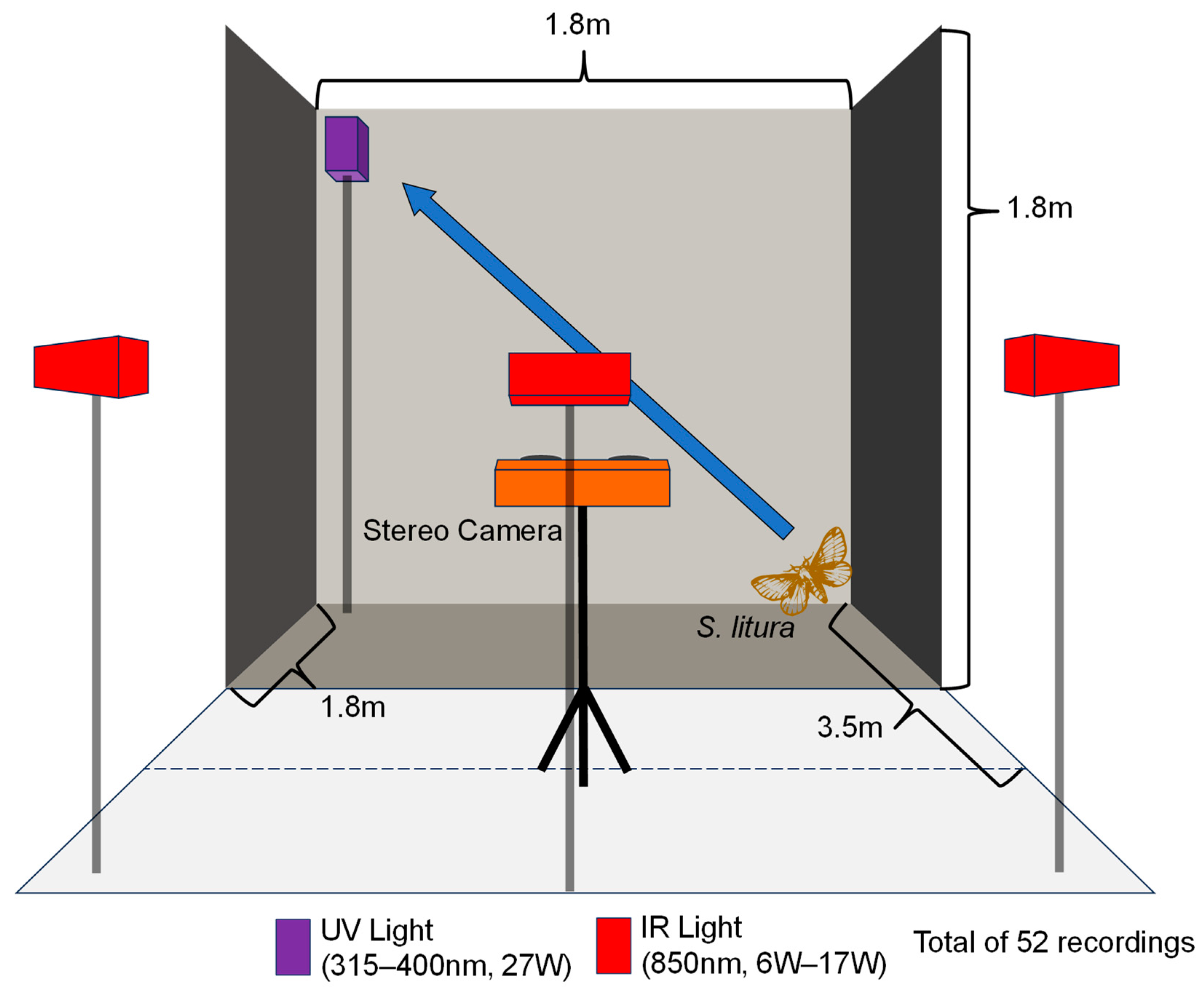

2.2. Video Recording Using Stereo Camera

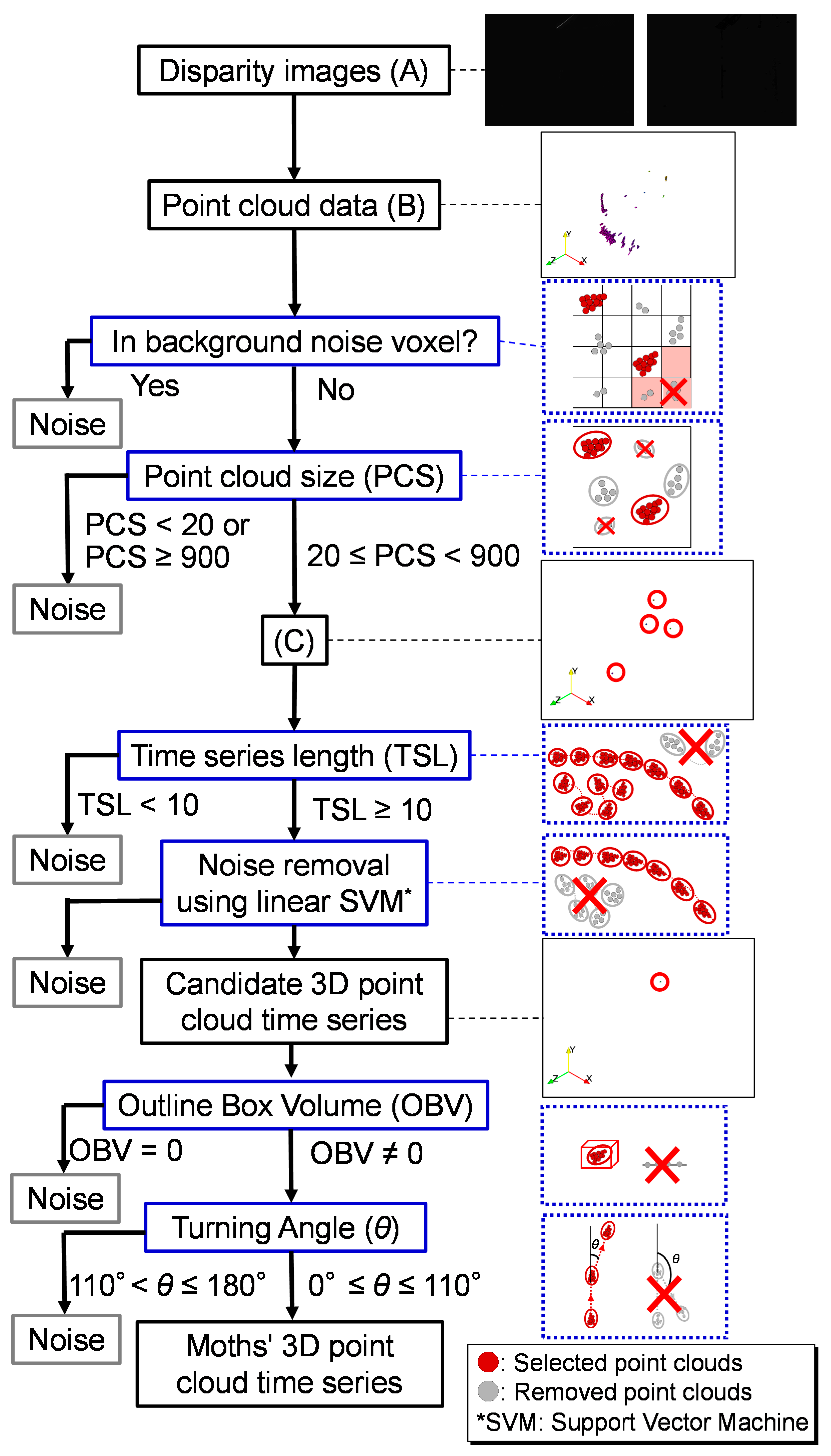

2.3. Extracting Spodoptera litura Flight Trajectories

2.4. Additional Noise Removal

2.4.1. Three-Dimensional Animation of Three-Dimensional Point Cloud Time Series

2.4.2. Remaining Noise Removal Process

3. Results

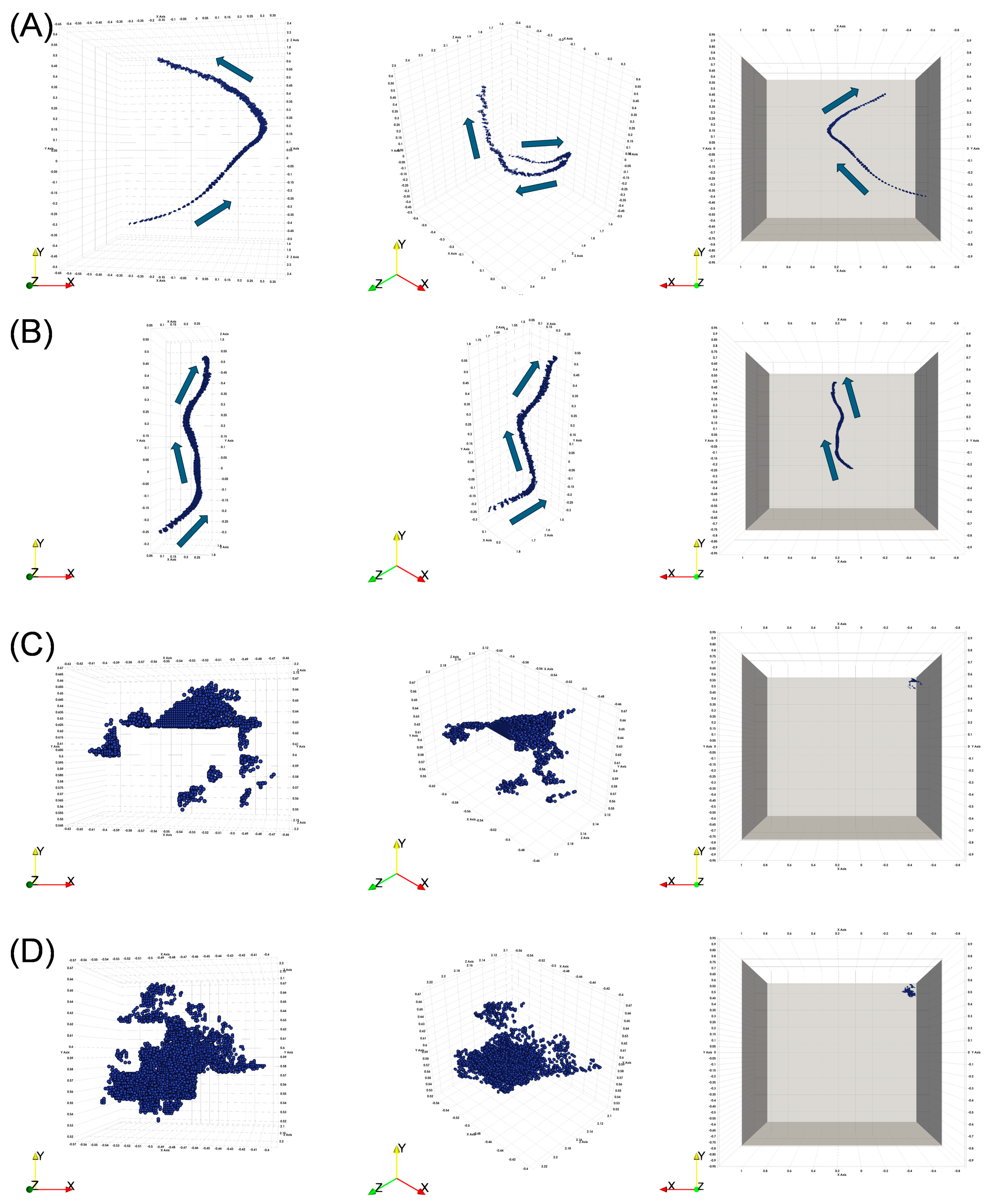

3.1. Three-Dimensional Point Cloud of Flight Trajectories

3.2. Removal of Remaining Noisy Point Cloud

3.3. Flight Speed Based on 3D Point Cloud Time Series

4. Discussion

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Item | Specification |
| --- | --- |
| Camera | Karmin3 |
| Processor | SceneScan Pro |
| Distance between cameras | 10 cm |
| Resolution | 1024 × 768 pixels |
| Horizontal angle | 47.2° |
| Vertical angle | 36.3° |
| Spatial resolution | 1.6 mm (at 2 m distance) |
| Frame rate | 55 fps |
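
As a rough illustration of how the 55 fps frame rate in the specifications above relates to flight speed, the sketch below estimates per-frame speed from consecutive 3D positions. It is a minimal example under stated assumptions, not the authors' procedure: the function name `speeds_from_positions`, the sample coordinates in `track`, and the use of one position per frame (for example, a point cloud centroid) in metres are all hypothetical.

```python
import math

# Frame rate taken from the camera specifications above.
FRAME_RATE_FPS = 55.0


def speeds_from_positions(positions, fps=FRAME_RATE_FPS):
    """Estimate speed (m/s) between consecutive frames.

    positions: sequence of (x, y, z) tuples in metres, one per frame
    (assumed here to be, e.g., the centroid of the moth's point cloud).
    """
    dt = 1.0 / fps  # time between consecutive frames, in seconds
    return [math.dist(p, q) / dt for p, q in zip(positions, positions[1:])]


# Hypothetical positions for three consecutive frames (metres).
track = [(0.0, 0.0, 2.0), (0.03, 0.0, 2.0), (0.06, 0.04, 2.0)]
speeds = speeds_from_positions(track)
```

With these made-up positions, displacements of 3 cm and 5 cm per frame correspond to roughly 1.65 m/s and 2.75 m/s. Frame-to-frame differences amplify any residual position noise, so in practice speeds computed this way are often averaged over several frames.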

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nishisue, K.; Sugiura, R.; Nakano, R.; Shibuya, K.; Fukuda, S. Measuring the Flight Trajectory of a Free-Flying Moth on the Basis of Noise-Reduced 3D Point Cloud Time Series Data. Insects 2024, 15, 373. https://doi.org/10.3390/insects15060373
