Attention-Based Multiscale Feature Pyramid Network for Corn Pest Detection under Wild Environment

Abstract

Simple Summary


1. Introduction

- (1)

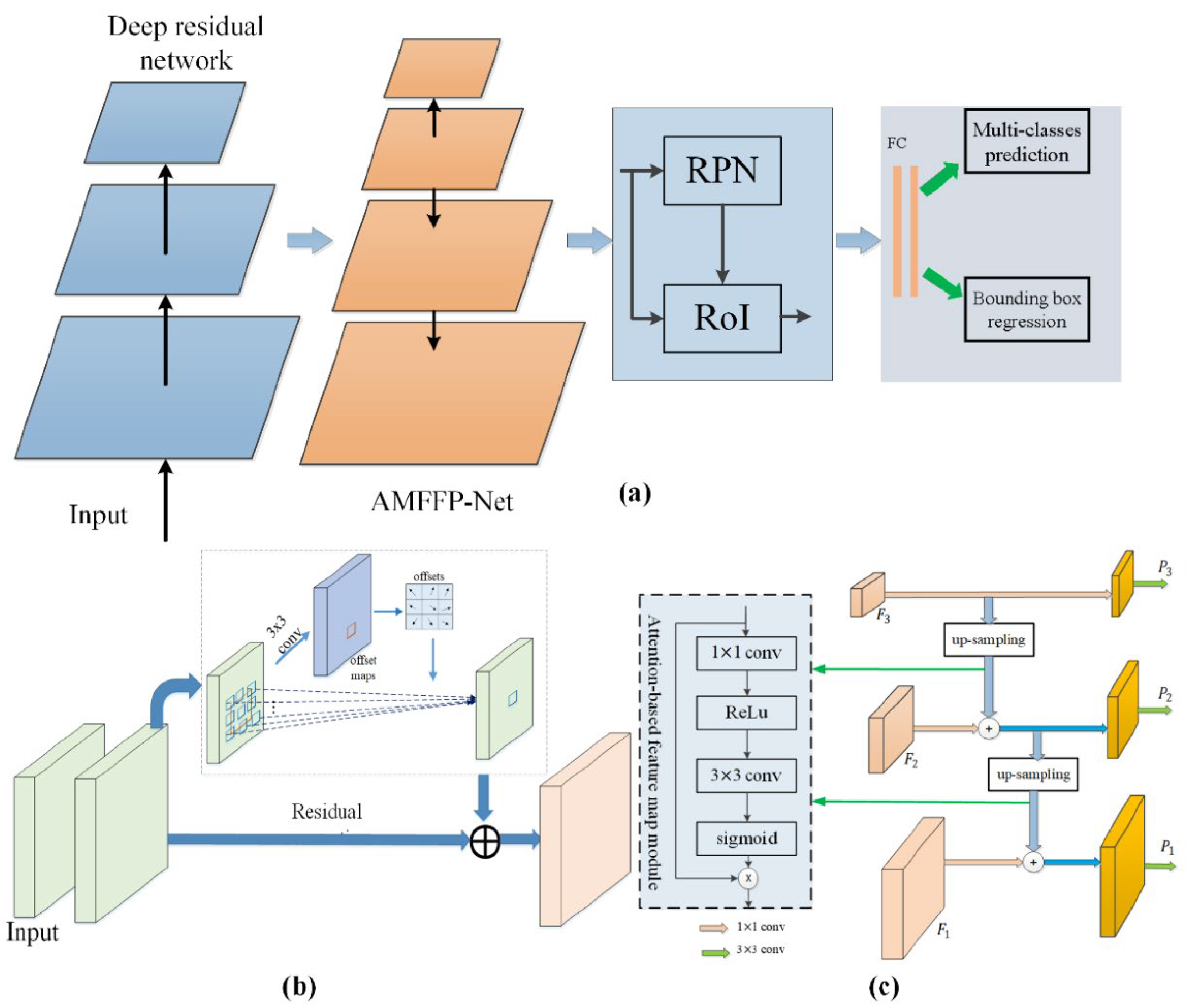

- A deep residual network with deformable convolution is introduced to extract rich feature information of corn pests, which improves the expression ability of information of the network.

- (2)

- An attention-based multi-scale feature pyramid network is used to address the detection of corn pests of different sizes.

- (3)

- We have constructed a large-scale corn pest dataset, including 7392 corn pest images and 10 types of corn pests. By combining our method with the two-stage detector, the proposed method can achieve 70.1%mAP and 74.3% recall on the corn pest dataset.
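Contribution (1) relies on deformable convolution, where each kernel tap samples the input at a learned fractional offset instead of a fixed grid position, using bilinear interpolation. The sketch below illustrates that generic mechanism for one output location of a single-channel 3x3 kernel. It is not the authors' implementation: the helper names `bilinear` and `deform_conv3x3_at`, the toy 5x5 feature map, and the hand-written offsets are all hypothetical, and in a real network the offsets would be predicted by an extra convolution layer.

```python
import math


def bilinear(img, y, x):
    """Sample the 2D list `img` at fractional (y, x) by bilinear interpolation.

    Neighbours outside the image contribute zero (zero padding).
    """
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                # Weight falls off linearly with distance to each neighbour.
                value += (1 - abs(y - yy)) * (1 - abs(x - xx)) * img[yy][xx]
    return value


def deform_conv3x3_at(img, kernel, offsets, py, px):
    """One output value of a single-channel 3x3 deformable convolution.

    offsets[k] = (dy, dx) shifts the k-th kernel tap (row-major order).
    Hypothetical helper: offsets are supplied by hand here, whereas a real
    network predicts them. All-zero offsets reduce to a plain 3x3 conv.
    """
    out = 0.0
    k = 0
    for i in (-1, 0, 1):
        for j in (-1, 0, 1):
            dy, dx = offsets[k]
            out += kernel[i + 1][j + 1] * bilinear(img, py + i + dy, px + j + dx)
            k += 1
    return out


# Toy 5x5 feature map whose value grows linearly with row and column.
img = [[float(5 * r + c) for c in range(5)] for r in range(5)]
ones = [[1.0] * 3 for _ in range(3)]

regular = deform_conv3x3_at(img, ones, [(0.0, 0.0)] * 9, 2, 2)
shifted = deform_conv3x3_at(img, ones, [(0.0, 0.5)] * 9, 2, 2)
print(regular, shifted)  # 108.0 112.5
```

With zero offsets the taps land on the regular 3x3 grid; shifting every tap half a pixel to the right makes each sample interpolate between two columns, which is how the receptive field can bend toward an irregularly shaped pest.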

2. Materials and Methods

2.1. Materials

2.1.1. Corn Pest Image Collection

2.1.2. Data Labeling

2.1.3. Data Splitting

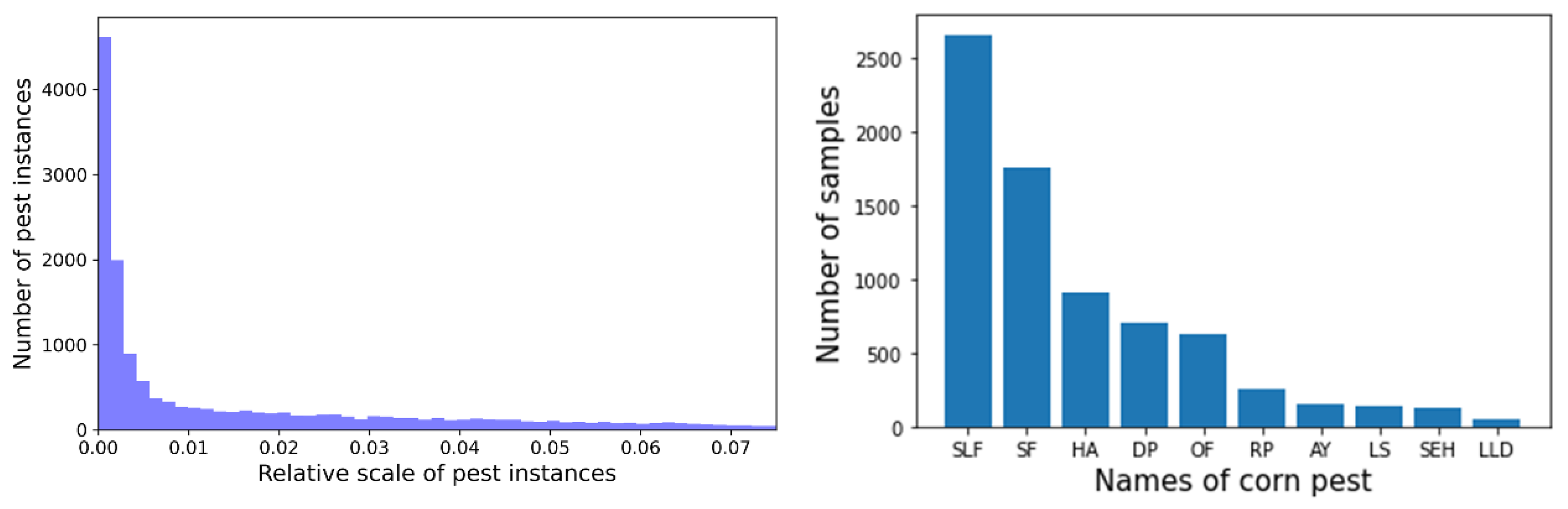

2.1.4. Analysis of Corn Pest Dataset

2.2. Methods

2.2.1. Deep Residual Network with Deformable Convolution Block

2.2.2. Attention-Based Multi-Scale Feature Fusion Pyramid Network (AMFFP-Net)

2.2.3. Joint Detection

2.3. Evaluation Metrics

3. Results and Analysis

3.1. Experimental Platform and Parameter Settings

3.2. Experimental Results and Analysis

3.3. Detection Efficiency

3.4. Ablation Experiment

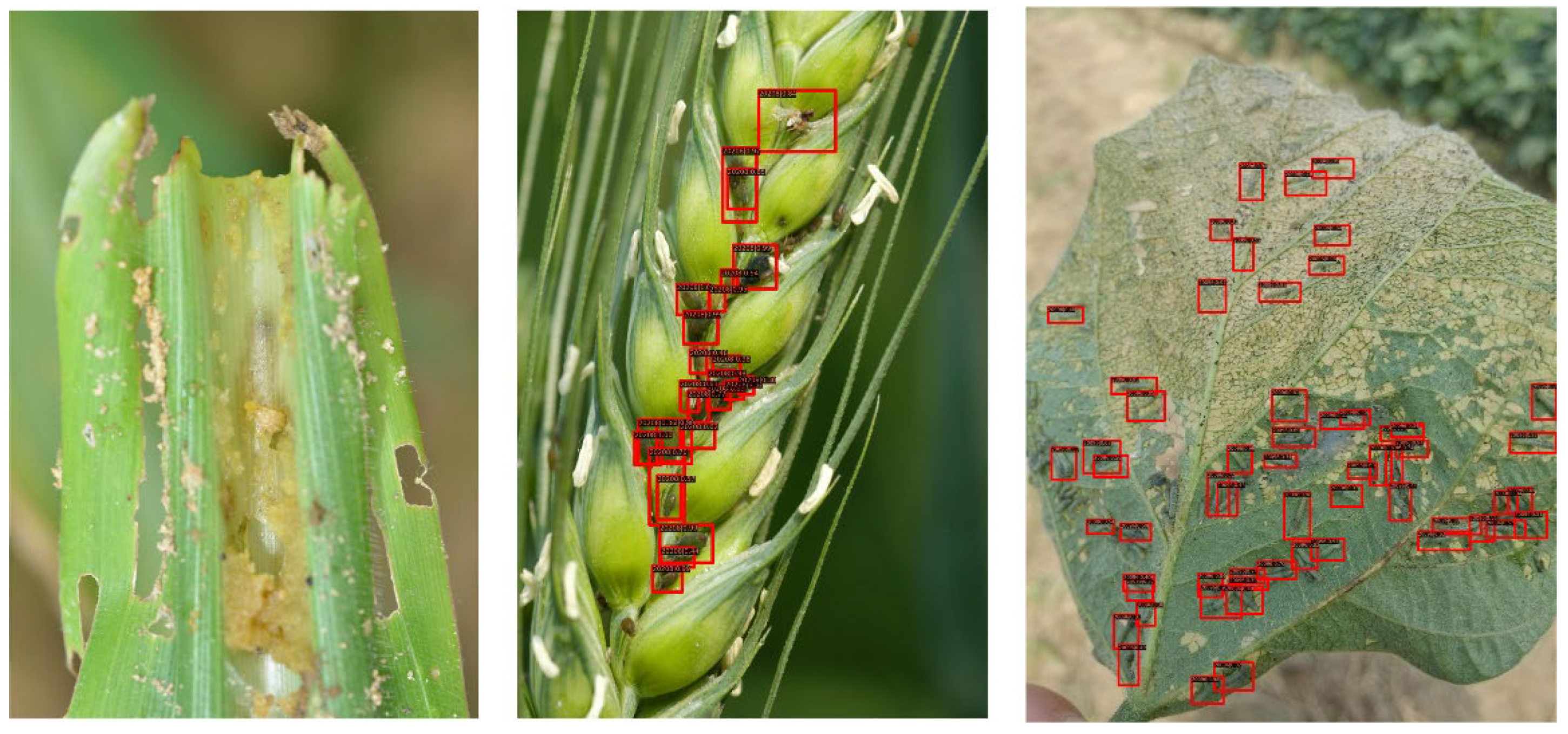

3.5. Visualized Detection Results and Analysis

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ID | Scientific Name (Abbreviation) | Number of Images | Number of Corn Pest Instances | Average Relative Size |
|---|---|---|---|---|
| 1 | Leucania loreyi Duponchel (LLD) | 55 | 55 | 0.192 |
| 2 | Ostrinia furnacalis (OF) | 629 | 650 | 0.042 |
| 3 | Agrotis ypsilon (AY) | 146 | 174 | 0.153 |
| 4 | Spodoptera litura Fabricius (SLF) | 2664 | 7976 | 0.306 |
| 5 | Dichocrocis punctiferalis (DP) | 709 | 849 | 0.038 |
| 6 | Helicoverpa armigera (HA) | 916 | 919 | 0.094 |
| 7 | Laodelphax striatellus (LS) | 139 | 140 | 0.061 |
| 8 | Spodoptera exigua Hübner (SEH) | 131 | 141 | 0.048 |
| 9 | Rhopalosiphum padi (RP) | 249 | 3875 | 0.007 |
| 10 | Spodoptera frugiperda (SF) | 1754 | 1970 | 0.057 |
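The per-class statistics in the dataset table can be cross-checked with a few lines of arithmetic. The sketch below copies the table rows into a hypothetical `PESTS` list and computes the totals, instances per image, and the spread of average relative sizes. The per-class image counts sum to 7392, the dataset size stated in the introduction, which is consistent with each image being counted under a single class (an inference, not something the table states). How "Average Relative Size" is defined is not given in this section, so the values are used exactly as reported.

```python
# (abbreviation, images, instances, average relative size), copied from the table above.
PESTS = [
    ("LLD", 55, 55, 0.192),
    ("OF", 629, 650, 0.042),
    ("AY", 146, 174, 0.153),
    ("SLF", 2664, 7976, 0.306),
    ("DP", 709, 849, 0.038),
    ("HA", 916, 919, 0.094),
    ("LS", 139, 140, 0.061),
    ("SEH", 131, 141, 0.048),
    ("RP", 249, 3875, 0.007),
    ("SF", 1754, 1970, 0.057),
]

total_images = sum(images for _, images, _, _ in PESTS)
total_instances = sum(inst for _, _, inst, _ in PESTS)
print(f"images: {total_images}, instances: {total_instances}")

# Instances per image indicate how crowded each class tends to be.
for name, images, inst, size in PESTS:
    print(f"{name:4s} {inst / images:5.2f} instances/image  relative size {size:.3f}")

sizes = [size for *_, size in PESTS]
print(f"largest/smallest average relative size: {max(sizes) / min(sizes):.1f}x")
```

The output makes the detection challenge concrete: Rhopalosiphum padi (RP) averages about 15.6 tiny instances per image, while the largest class (SLF) is roughly 44 times bigger in average relative size, which is the scale spread a multi-scale feature pyramid has to cover.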

| Class | FPN Recall (%) | FPN AP (%) | S-RPN Recall (%) | S-RPN AP (%) | Cascade R-CNN Recall (%) | Cascade R-CNN AP (%) | Our Method Recall (%) | Our Method AP (%) |
|---|---|---|---|---|---|---|---|---|
| LLD | 83.3 | 81.8 | 100 | 100 | 100 | 100 | 100 | 100 |
| OF | 60.6 | 56.4 | 67.6 | 59.1 | 59.2 | 51.6 | 69.0 | 60.0 |
| AY | 88.2 | 74.5 | 83.1 | 80.1 | 88.9 | 81.8 | 83.3 | 81.8 |
| SLF | 56.0 | 49.7 | 63.4 | 58.3 | 55.4 | 49.6 | 64.1 | 58.1 |
| DP | 48.8 | 45.5 | 46.0 | 44.6 | 46.3 | 44.7 | 46.3 | 44.0 |
| HA | 85.9 | 79.4 | 80.4 | 79.4 | 81.5 | 78.5 | 82.6 | 79.6 |
| LS | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| SEH | 60.0 | 54.5 | 58.8 | 53.6 | 58.8 | 52.9 | 58.8 | 53.6 |
| RP | 61.1 | 48.9 | 68.4 | 58.5 | 62.0 | 51.4 | 70.1 | 61.7 |
| SF | 69.4 | 61.4 | 68.6 | 62.1 | 67.0 | 62.1 | 69.1 | 62.5 |
| Mean | 71.3 | 65.2 | 73.6 | 69.6 | 71.9 | 67.3 | 74.3 | 70.1 |
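Every value in the "Mean" row of the comparison table is reproduced by an unweighted (macro) average of the ten per-class values, so a class with 55 images such as LLD influences mAP as much as SLF with 2664 images. The sketch below recomputes those means from the per-class numbers; `RESULTS`, `REPORTED_MEAN`, and `macro_mean` are hypothetical names, and the IoU threshold behind each AP value is not stated in this section, so the sketch only averages the reported figures rather than recomputing AP from detections.

```python
# Per-class (recall, AP) in %, copied from the comparison table above,
# in class order LLD, OF, AY, SLF, DP, HA, LS, SEH, RP, SF.
RESULTS = {
    "FPN": (
        [83.3, 60.6, 88.2, 56.0, 48.8, 85.9, 100, 60.0, 61.1, 69.4],
        [81.8, 56.4, 74.5, 49.7, 45.5, 79.4, 100, 54.5, 48.9, 61.4],
    ),
    "S-RPN": (
        [100, 67.6, 83.1, 63.4, 46.0, 80.4, 100, 58.8, 68.4, 68.6],
        [100, 59.1, 80.1, 58.3, 44.6, 79.4, 100, 53.6, 58.5, 62.1],
    ),
    "Cascade R-CNN": (
        [100, 59.2, 88.9, 55.4, 46.3, 81.5, 100, 58.8, 62.0, 67.0],
        [100, 51.6, 81.8, 49.6, 44.7, 78.5, 100, 52.9, 51.4, 62.1],
    ),
    "Our Method": (
        [100, 69.0, 83.3, 64.1, 46.3, 82.6, 100, 58.8, 70.1, 69.1],
        [100, 60.0, 81.8, 58.1, 44.0, 79.6, 100, 53.6, 61.7, 62.5],
    ),
}
# (mean recall, mAP) as printed in the "Mean" row.
REPORTED_MEAN = {
    "FPN": (71.3, 65.2),
    "S-RPN": (73.6, 69.6),
    "Cascade R-CNN": (71.9, 67.3),
    "Our Method": (74.3, 70.1),
}


def macro_mean(values):
    """Unweighted mean over classes: every class counts equally."""
    return sum(values) / len(values)


computed = {
    method: (round(macro_mean(recall), 1), round(macro_mean(ap), 1))
    for method, (recall, ap) in RESULTS.items()
}
for method, (m_recall, m_ap) in computed.items():
    print(f"{method:14s} mean recall {m_recall:5.1f}  mAP {m_ap:5.1f}")
```

Because the average is unweighted, the perfect scores on LLD and LS (both small classes) lift every method's mAP equally, and the ranking is decided mainly by the harder classes such as OF, SLF, and RP.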

| Method | Speed (FPS) | GFLOPs | Parameters (M) |
|---|---|---|---|
| FPN | 18.2 | 216.34 | 41.17 |
| S-RPN | 14.5 | 241.12 | 46.23 |
| Cascade R-CNN | 13.1 | 244.13 | 68.95 |
| Ours | 17.0 | 224.22 | 41.82 |
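The efficiency table is easier to read as per-image latency and as relative cost over the FPN baseline. The sketch below does that arithmetic; `EFFICIENCY`, `latency_ms`, and `relative_change` are hypothetical names, and the FPS values only hold for the experimental platform described in Section 3.1, so the latencies should not be transferred to other hardware.

```python
# (FPS, GFLOPs, parameters in millions), copied from the table above.
EFFICIENCY = {
    "FPN": (18.2, 216.34, 41.17),
    "S-RPN": (14.5, 241.12, 46.23),
    "Cascade R-CNN": (13.1, 244.13, 68.95),
    "Ours": (17.0, 224.22, 41.82),
}


def latency_ms(fps):
    """Average time per image implied by a throughput in frames per second."""
    return 1000.0 / fps


def relative_change(value, base):
    """Percentage change of `value` with respect to `base`."""
    return 100.0 * (value / base - 1.0)


_, base_gflops, base_params = EFFICIENCY["FPN"]
for method, (fps, gflops, params) in EFFICIENCY.items():
    print(
        f"{method:14s} {latency_ms(fps):5.1f} ms/image  "
        f"GFLOPs {relative_change(gflops, base_gflops):+5.1f}%  "
        f"params {relative_change(params, base_params):+5.1f}%"
    )
```

By this arithmetic the proposed detector costs about 3.9 ms more per image than FPN (58.8 ms versus 54.9 ms), with roughly 3.6% more GFLOPs and 1.6% more parameters, whereas Cascade R-CNN adds about 67% more parameters.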

| Deformable Convolution | AMFFP-Net | mAP (%) | Recall (%) |
|---|---|---|---|
| | | 65.2 | 71.3 |
| √ | | 66.3 | 69.5 |
| √ | √ | 70.1 | 74.3 |
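The ablation rows can be turned into per-component gains in percentage points. In the sketch below, `ABLATION` and `delta` are hypothetical names and the rows are copied from the ablation table. The baseline row (65.2 mAP, 71.3 recall) matches the FPN row of the comparison table, which suggests, though this section does not state it, that the baseline is the FPN detector.

```python
# (deformable convolution, AMFFP-Net, mAP %, recall %), copied from the ablation table above.
ABLATION = [
    (False, False, 65.2, 71.3),
    (True, False, 66.3, 69.5),
    (True, True, 70.1, 74.3),
]


def delta(row, ref):
    """Change in (mAP, recall) of `row` relative to `ref`, in percentage points."""
    return round(row[2] - ref[2], 1), round(row[3] - ref[3], 1)


baseline, with_dcn, full = ABLATION
print("deformable conv vs. baseline:", delta(with_dcn, baseline))  # (1.1, -1.8)
print("AMFFP-Net on top of deformable conv:", delta(full, with_dcn))  # (3.8, 4.8)
print("full model vs. baseline:", delta(full, baseline))  # (4.9, 3.0)
```

Read this way, deformable convolution alone raises mAP by 1.1 points but lowers recall by 1.8 points, and adding AMFFP-Net accounts for most of the overall improvement.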


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Kang, C.; Jiao, L.; Wang, R.; Liu, Z.; Du, J.; Hu, H. Attention-Based Multiscale Feature Pyramid Network for Corn Pest Detection under Wild Environment. Insects 2022, 13, 978. https://doi.org/10.3390/insects13110978

Kang C, Jiao L, Wang R, Liu Z, Du J, Hu H. Attention-Based Multiscale Feature Pyramid Network for Corn Pest Detection under Wild Environment. Insects. 2022; 13(11):978. https://doi.org/10.3390/insects13110978

Chicago/Turabian Style: Kang, Chenrui, Lin Jiao, Rujing Wang, Zhigui Liu, Jianming Du, and Haiying Hu. 2022. "Attention-Based Multiscale Feature Pyramid Network for Corn Pest Detection under Wild Environment" Insects 13, no. 11: 978. https://doi.org/10.3390/insects13110978

APA Style: Kang, C., Jiao, L., Wang, R., Liu, Z., Du, J., & Hu, H. (2022). Attention-Based Multiscale Feature Pyramid Network for Corn Pest Detection under Wild Environment. Insects, 13(11), 978. https://doi.org/10.3390/insects13110978