2.1. Fault Tree Creation and Qualitative Analysis

A fault tree is a model for the systematic analysis of faults. It consists of a top event, intermediate events, bottom events, and logic gates; the most commonly used gates are the OR gate and the AND gate. Fault trees are built layer by layer: the top event is first broken down into approximate fault categories, called intermediate events, and each intermediate event is then recursively broken down until the bottom events are reached. The tree must follow a clear logic and contain no logical contradictions, and every cause of failure should be considered regardless of its probability, so that events can later be prioritized. Events must be accounted for when conditions require it.

A fault tree constructed in this way gives a clear structure for sorting faults: it visually presents the causes and locations of a fault and provides a comprehensive description of the causes and the logical relationships among them. Once the fault tree is complete, it is analyzed qualitatively, which is the first step of fault diagnosis. The purpose of the qualitative analysis is to determine the minimal cut sets [17], that is, the smallest sets of bottom events whose occurrence causes the top event. They are usually derived by the downward (top-down) method [18]. Among the routine physical and chemical items of ship oil inspection, high viscosity of the engine oil was selected as the object of study, and the fault tree was established by combining available information with expert guidance.

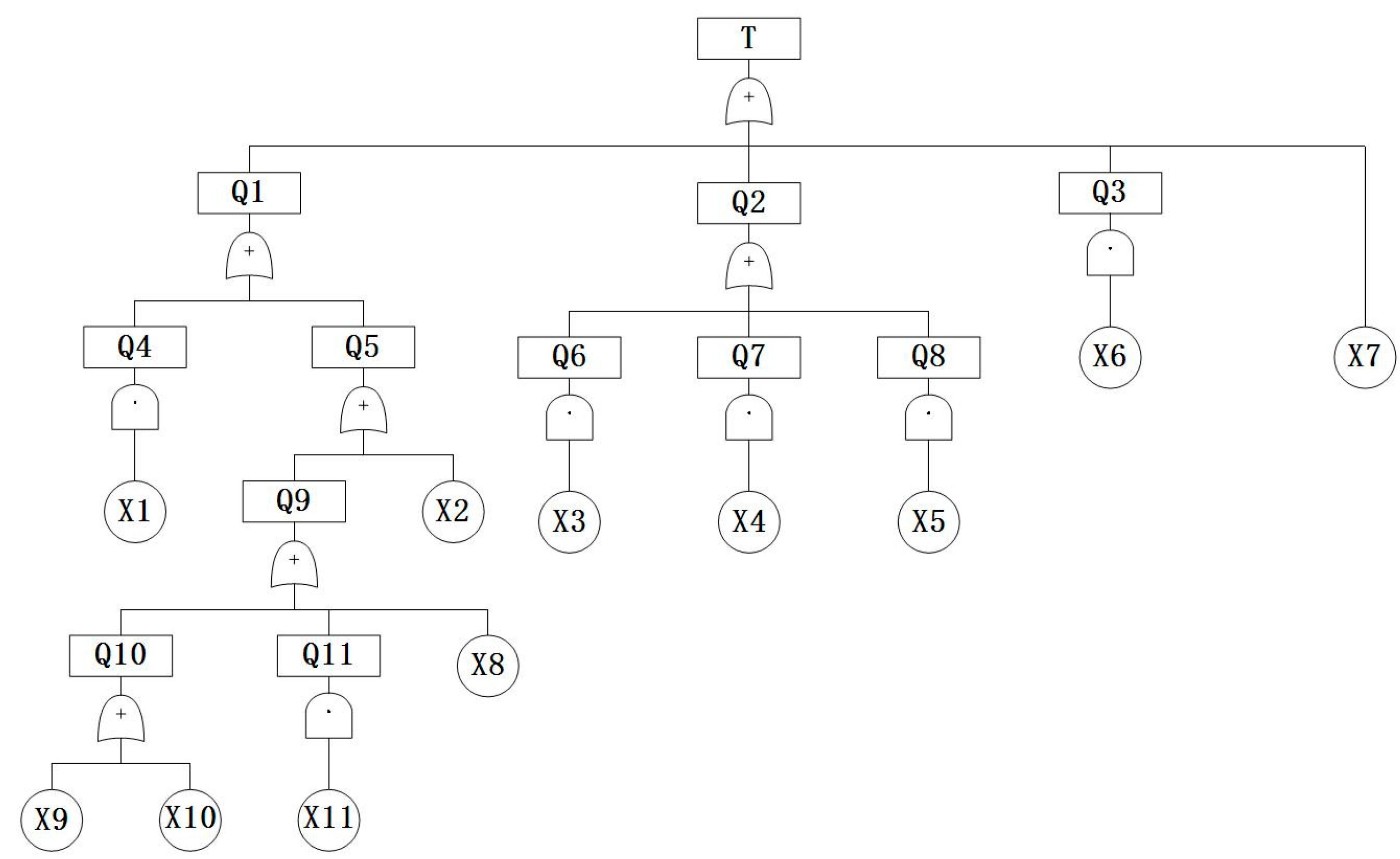

Table 1 shows the event table of the fault tree of the high viscosity of ship engine oil, and Figure 1 shows the fault tree of the high viscosity of ship engine oil.

The minimal cut sets of the high-viscosity marine engine oil fault tree established from Figure 1 are {X1}, {X2}, {X3}, {X4}, {X5}, {X6}, {X7}, {X8}, {X9}, {X10}, {X11}.
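The downward derivation of cut sets can be sketched in a few lines of Python. The tree below is a hypothetical example and not the tree of Figure 1; the gate names (`T`, `M1`, `M2`, `M3`) and the function `minimal_cut_sets` are illustrative assumptions. OR gates split a row into alternatives, AND gates extend the same row, and any cut set that strictly contains another is discarded.

```python
# Hypothetical fault tree: gate name -> (gate type, inputs).
# Any name that is not a key of GATES is treated as a bottom event.
GATES = {
    "T": ("OR", ["M1", "M2"]),
    "M1": ("OR", ["X1", "X2"]),
    "M2": ("AND", ["X3", "M3"]),
    "M3": ("OR", ["X1", "X4"]),
}


def minimal_cut_sets(gates, top):
    """Expand the tree from the top event downward and keep only minimal sets."""
    rows = [frozenset([top])]
    expanded = True
    while expanded:
        expanded = False
        next_rows = []
        for row in rows:
            gate = next((e for e in row if e in gates), None)
            if gate is None:  # row holds bottom events only
                next_rows.append(row)
                continue
            expanded = True
            kind, children = gates[gate]
            rest = row - {gate}
            if kind == "AND":  # all inputs join the same cut set
                next_rows.append(rest | frozenset(children))
            else:  # OR: each input starts its own cut set
                next_rows.extend(rest | {child} for child in children)
        rows = next_rows
    unique = set(rows)
    # Drop every cut set that strictly contains another cut set.
    minimal = [s for s in unique if not any(other < s for other in unique)]
    return sorted(minimal, key=sorted)


cuts = minimal_cut_sets(GATES, "T")
for cut in cuts:
    print(sorted(cut))
```

In a tree whose gates are all OR gates, every bottom event forms a cut set on its own, which is consistent with the single-event cut sets listed above.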

2.2. Experts’ Own Weights

The experts’ own weights have a large impact on the bottom event failure rates and therefore need to be set rationally. There are three types of methods for determining weights: subjective assignment, objective assignment, and combined assignment. The main subjective methods are the AHP (analytic hierarchy process), the Delphi method [19,20], the binomial coefficient method [21], the indicator assignment method, etc.; they rely on the subjective judgment of decision makers and do not consider the relationships within the data. In subjective assignment, experts usually act as the decision makers who judge the data and assign weights to them, but the experts themselves cannot be weighted accurately in the same way; the indicator assignment method is one of the methods that can assign weights to the experts. Objective assignment methods include the entropy method [22], the CRITIC assignment method [23,24], and the standard deviation method [25]. In contrast to subjective assignment, these methods determine the weights from the intrinsic relationships among the indicator data; their disadvantage is that they cannot be used when data are scarce or missing. Combined assignment uses different mathematical methods to combine subjective and objective weights, or to correct subjective weights objectively.

The indicator measurement method is a weighted judgment based on preset measurement indicators and is divided into two layers: the indicator layer and the judgment layer. The indicator layer establishes the weights of the indicators through a questionnaire scoring survey. The judgment layer uses known information to formulate the scoring criteria and methods for the indicators, and the combined information finally determines the weight. Here, the indicator measurement method sets three expert-level indicators (academic attainments, titles, and professional ethics) and uses them to rate a number of experts. These indicators were chosen for their openness and scientific nature: the experts’ personal academic information is freely available, and the professional ethics evaluation is published annually via the school’s official website and other channels. Academic attainments are mainly the experts’ research results and awards, and titles include school titles and personal qualifications. Professional ethics can be judged by the degree of importance that the experts attach to it, which directly affects their subsequent judgment of the bottom event failure rates. Because the importance of the indicators is not affected by time or place but does differ among indicators, the total score of the three indicators is set to 10 in the scoring questionnaire; the set score range is usually [0, 100]. The ratio of an indicator’s survey score to the total score is the weight of that expert-level indicator, and the weights of all indicators sum to 1. Criteria and grades are then set for each indicator.

Table 2 shows the weight ratio of expert-level indicators calculated by the questionnaire, and Table 3 shows the expert indicator judging criteria.

According to Table 3, each expert is first scored on every indicator, and the scores are combined with the expert-level index weights to obtain the comprehensive level score:

$$F = \sum_{s} E_s f_s \tag{1}$$

where $F$ is the comprehensive level score, $f_s$ is the evaluation score corresponding to the $s$-th expert-level index, and $E_s$ is the weight value of the $s$-th expert-level index.

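The expression for the calculation of the expert’s own weight is:

$$Z_j = \frac{F_j}{\sum_{k=1}^{m} F_k} \tag{2}$$

where $Z_j$ is the own weight of the $j$-th expert obtained by the index measurement method, $F_j$ is that expert’s comprehensive level score, and $m$ is the number of experts.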

Equations (1) and (2) show that the comprehensive expert-level scores are obtained by linear weighting, so the expert-level index weights and the evaluation scores directly affect the experts’ own weights, and the reasonableness of these data needs to be improved.
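As a rough illustration of this linear weighting, the sketch below scores three experts on three indicators and normalizes the comprehensive scores into own weights. The index weights and scores are invented for the example and are not the values of Table 2 or Table 3; the function names are hypothetical, and the normalization by the sum of all experts’ scores is an assumption about the form of Equation (2).

```python
def comprehensive_score(index_weights, scores):
    """Linear weighting of one expert's indicator scores (form of Equation (1))."""
    return sum(e * f for e, f in zip(index_weights, scores))


def expert_own_weights(index_weights, score_table):
    """Normalize each expert's comprehensive score by the total over all experts.

    Assumption: Equation (2) is a simple normalization so that the weights sum to 1.
    """
    totals = [comprehensive_score(index_weights, row) for row in score_table]
    grand_total = sum(totals)
    return [t / grand_total for t in totals]


# Hypothetical data: weights for academic attainments, titles, professional ethics.
index_weights = [0.5, 0.3, 0.2]
# One row per expert, one score per indicator, on a [0, 100] scale.
score_table = [
    [90, 80, 70],
    [70, 90, 80],
    [60, 60, 90],
]

own_weights = expert_own_weights(index_weights, score_table)
print([round(z, 3) for z in own_weights])
```

Because both steps are linear, any change in an index weight or a single score propagates directly into every expert’s own weight.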

2.3. Improving and Justifying the Combination Assignment to the Experts’ Own Weights

The experts’ evaluations have a large impact on the bottom event probabilities. The traditional assignment of the experts’ own weights considers only subjectivity and ignores objectivity, so the best scheme should combine subjective and objective weighting in order to obtain results that match the actual situation. Taking the questionnaire data of the indicator layer and the subjective expert rating data of the judgment layer of the indicator measurement method as the basis, the CRITIC assignment method is used to correct the experts’ own weights. The CRITIC assignment method assigns weights from two aspects: the contrast between evaluation objects under the same evaluation index, measured by the standard deviation, and the conflict between evaluation indexes, measured by their correlation. Note that the evaluation indexes and evaluation objects in the indicator layer differ from those in the judgment layer. In the indicator layer, the expert-level indicators are the evaluation indexes and each questionnaire item is an evaluation object; in the judgment layer, the experts themselves are the evaluation indexes and the evaluation scores of the expert-level indicators are the evaluation objects. In both cases, the weights obtained are those of the evaluation indexes.

To calculate the weights with the CRITIC assignment method, the data are first made dimensionless. With $u$ evaluation indicators and $v$ evaluation objects, let $L_{ij}$ be the initial value of the $i$-th evaluation object in the $j$-th evaluation index. The data are normalized as positive indicators:

$$L'_{ij} = \frac{L_{ij} - M_{\min}}{M_{\max} - M_{\min}}$$

where $M_{\max}$ is the maximum value of $L_{ij}$, $M_{\min}$ is the minimum value of $L_{ij}$, and $L'_{ij}$ is the value after dimensionless processing.

Afterwards, the expression for the amount of information is obtained:

$$C_j = \sigma_j \sum_{k=1}^{u} \left(1 - r_{kj}\right)$$

where $C_j$ is the amount of information, $\sigma_j$ is the standard deviation of the $j$-th evaluation index, and $r_{kj}$ is the correlation coefficient between the $k$-th and $j$-th evaluation indexes.

Finally, the indicator weight $H_j$ of the CRITIC assignment method is derived:

$$H_j = \frac{C_j}{\sum_{k=1}^{u} C_k}$$
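The three CRITIC steps (min-max normalization, amount of information, normalized weight) can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions: the data matrix is invented, rows are evaluation objects and columns are evaluation indexes, all indexes are treated as positive, the sample standard deviation (divisor v - 1) is used, and the function names are hypothetical.

```python
import math


def normalize_columns(data):
    """Min-max normalize each column (evaluation index) to [0, 1]."""
    columns = []
    for col in zip(*data):
        lo, hi = min(col), max(col)
        if hi == lo:
            raise ValueError("a constant column cannot be normalized")
        columns.append([(x - lo) / (hi - lo) for x in col])
    return columns  # list of normalized columns


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def critic_weights(data):
    """CRITIC weights: standard deviation times summed conflict, then normalized."""
    columns = normalize_columns(data)
    n = len(data)
    info = []
    for col_j in columns:
        mean = sum(col_j) / n
        # Assumption: sample standard deviation (divisor n - 1).
        sigma = math.sqrt(sum((x - mean) ** 2 for x in col_j) / (n - 1))
        conflict = sum(1 - pearson(col_k, col_j) for col_k in columns)
        info.append(sigma * conflict)
    total = sum(info)
    return [c / total for c in info]


# Hypothetical scores: 4 evaluation objects (rows) x 3 evaluation indexes (columns).
data = [
    [7, 5, 3],
    [8, 4, 2],
    [6, 6, 4],
    [9, 3, 3],
]
weights = critic_weights(data)
print([round(w, 3) for w in weights])
```

An index receives a larger weight when its values are more spread out across the evaluation objects and less correlated with the other indexes.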

The choice of combined assignment should satisfy three principles: first, it should have the merits of both subjective and objective weighting; second, those merits should be explainable (the experts’ own weights should lean towards subjective weights, and the data sought should lean towards objective weights); finally, methods should not be combined blindly or excessively. Based on these three principles, two combined assignment methods that objectively correct the subjective weights are used to obtain the experts’ own weights and to compare the resulting data:

- (1) Combined assignment method I: First, the CRITIC assignment method is applied to the evaluation index questionnaire data to derive the expert-level index weights. These weights are used to weight the expert rating data, and the final weights are then obtained by applying the CRITIC assignment method again. Because there are two layers of data, the subjective data are objectively corrected twice, and the overall approach is close to an objective assignment method.

- (2) Combined assignment method II: The expert-level indicator weights from combined assignment method I and those from the index measurement method are combined using the principle of minimum discriminative information [26], and the resulting weights are used to weight the expert rating data. The experts’ own weights are then calculated with the CRITIC assignment method and finally combined once more with the experts’ own weights from the index measurement method. The weights obtained by this method are therefore also corrected at both levels of data.

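Let $\alpha_j$ and $\beta_j$ be the subjective and objective weights obtained by the different methods, and let $W_j$ be the combined weight. The formula of the combined weighting method based on the principle of minimum discriminative information is:

$$W_j = \frac{\sqrt{\alpha_j \beta_j}}{\sum_{k} \sqrt{\alpha_k \beta_k}}$$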
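A common closed form of the minimum discriminative information combination is the normalized geometric mean of the two weight vectors; the sketch below assumes that form. The weight values are invented for illustration and the function name is hypothetical.

```python
import math


def combine_weights(subjective, objective):
    """Normalized geometric mean of two weight vectors.

    Assumption: this is the usual closed-form solution of the
    minimum discriminative information principle.
    """
    roots = [math.sqrt(a * b) for a, b in zip(subjective, objective)]
    total = sum(roots)
    return [r / total for r in roots]


# Hypothetical subjective and objective weights for three indicators.
subjective = [0.5, 0.3, 0.2]
objective = [0.4, 0.4, 0.2]

combined = combine_weights(subjective, objective)
print([round(w, 3) for w in combined])
```

The combined weight of each indicator lies between the two inputs only approximately, because of the final normalization, but the weights always sum to 1 and two identical input vectors are returned unchanged.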

The rationality of the combined weights is studied by defining the weight deviation ε, the maximum value of the weight deviation λ, and the weight distortion rate η. ε is the difference between the subjective weight and the weight obtained by another method for the same evaluation indicator. Because the subjective weights represent the public’s opinion, they are taken as the benchmark. λ is the ratio of the sum of the weights to the number of evaluation indicators u, that is, the average evaluation indicator weight; since the sum of the weights is usually set to 1, λ = 1/u. If ε > λ for an evaluation indicator, the deviation exceeds the average weight and the weight of that indicator may in effect be reduced to 0, which is contrary to reality; this is the basis for the setting of λ, and such a weight is counted as distorted. The weight distortion rate η is used to analyze the reasonableness of the combined weights: the smaller η is, the higher their credibility.

$$\eta = \frac{u_{sz}}{u} \times 100\%$$

where $u_{sz}$ is the number of distortions of evaluation index weights.

Few experts work in this research field, so five experts from the same field are selected, with serial numbers A1, A2, A3, A4 and A5. The survey data are processed according to Table 2 and Table 3. Table 4 and Table 5 give, respectively, the expert-level index weights and the five experts’ own weights calculated by the three assignment methods.

In Table 4, all three methods rank the expert-level indicator weights in the same order, academic attainment > job title > professional ethics; λ is 1/3, every ε is within range, and there is no distortion. In Table 5, λ = 0.2. Comparing the experts’ own weights obtained by combined assignment method I with those obtained by the indicator measurement method gives ε values of 0.153, 0.125, 0.201, 0.117, and 0.056, which indicates that the weight of expert A3 is distorted, so η is 20%. The experts’ own weights obtained by combined assignment method II show no distortion, so this method is more reasonable than combined assignment method I. However, this comparison alone cannot establish that combined assignment is superior to subjective assignment; a final judgment requires the data derived under the experts’ own weights from the different methods, for example whether the bottom event failure rates are better differentiated.
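The distortion check for Table 5 can be reproduced from the ε values quoted above. In this sketch the function name is hypothetical, and λ is taken as 1 divided by the number of experts, which matches the stated λ = 0.2.

```python
def distortion_rate(deviations, lam):
    """Return the indices whose deviation exceeds lam and the distortion rate."""
    distorted = [i for i, eps in enumerate(deviations) if eps > lam]
    return distorted, len(distorted) / len(deviations)


experts = ["A1", "A2", "A3", "A4", "A5"]
# Weight deviations for combined assignment method I, as quoted in the text.
epsilon = [0.153, 0.125, 0.201, 0.117, 0.056]
lam = 1 / len(epsilon)  # average weight when the weights sum to 1

distorted, eta = distortion_rate(epsilon, lam)
print([experts[i] for i in distorted], f"{eta:.0%}")  # ['A3'] 20%
```

Only A3 exceeds the threshold, and only by 0.001, so the outcome is sensitive to rounding in the underlying weights.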

2.4. Improving Quantitative Analysis of Expert’s Own Weight–Aggregate Fuzzy Number Fault Trees

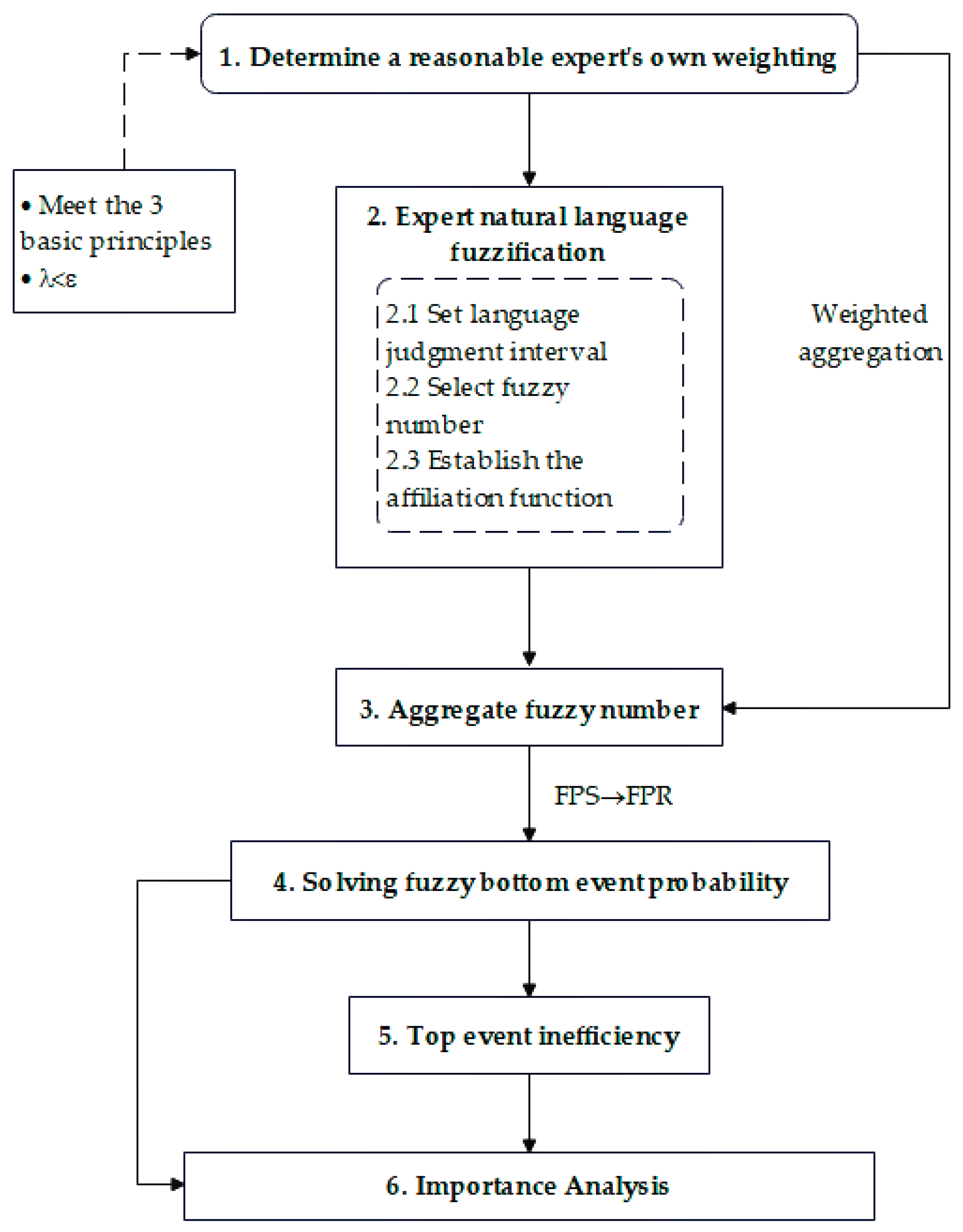

In order to perform an accurate processing and quantitative analysis of fault tree event probabilities, the following steps are listed:

Step 1: Confirm whether the experts’ own weight settings are reasonable and if they can satisfy the three principles.

Step 2: Fuzzification of the experts’ natural language. The bottom event failure rate is evaluated through a fuzzy representation of each expert’s natural-language judgment of the event, using seven judgment levels for event probability: very small (VS), small (S), fairly small (NS), medium (M), fairly large (FL), large (L), and very large (VL). For the fuzzy numbers [27], a combination of triangular and trapezoidal fuzzy numbers is used, each expressed by its membership function. The triangular fuzzy number is a special case of the trapezoidal fuzzy number, and combining the two allows a better description of faults. Because the fuzzy intervals are set subjectively, defining them with high precision is both difficult and of little value.

Step 3: Aggregation of fuzzy numbers. The natural-language judgments given by the experts for the failure rate of a given bottom event are aggregated with the experts’ own weights to obtain the average fuzzy number of that bottom event.

Step 4: Defuzzify the bottom event failure rate. Chen [28] proposed a left–right fuzzy ranking method that converts fuzzy numbers into exact values.

Step 5: Calculate the top event failure rate [29].

Step 6: Compute the critical importance [30] of each bottom event. It is derived from the bottom event and top event failure rates and is decisive for fault determination and ranking.
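Steps 3 and 4 can be illustrated with a short Python sketch. The trapezoidal fuzzy numbers assigned to the seven linguistic levels below are hypothetical placeholders, as are the expert weights and function names. The defuzzification uses a common form of left-right scoring on [0, 1], with the maximizing set μ(x) = x and the minimizing set μ(x) = 1 - x, which is assumed here to correspond to the method cited in Step 4.

```python
# Hypothetical trapezoidal fuzzy numbers (a, b, c, d) on [0, 1];
# a triangular fuzzy number is the special case b == c.
TERMS = {
    "VS": (0.0, 0.0, 0.1, 0.2),
    "S": (0.1, 0.2, 0.2, 0.3),
    "NS": (0.2, 0.3, 0.4, 0.5),
    "M": (0.4, 0.5, 0.5, 0.6),
    "FL": (0.5, 0.6, 0.7, 0.8),
    "L": (0.7, 0.8, 0.8, 0.9),
    "VL": (0.8, 0.9, 1.0, 1.0),
}


def aggregate(judgments, weights):
    """Weighted average of the experts' fuzzy numbers, component by component."""
    return tuple(
        sum(w * TERMS[term][k] for term, w in zip(judgments, weights))
        for k in range(4)
    )


def left_right_score(fuzzy):
    """Convert a trapezoidal fuzzy number on [0, 1] into one crisp score."""
    a, b, c, d = fuzzy
    right = d / (1 + d - c)        # intersection with the maximizing set
    left = (1 - a) / (1 + b - a)   # intersection with the minimizing set
    return (right + 1 - left) / 2


# Hypothetical judgments of three experts for one bottom event.
judgments = ["S", "M", "M"]
weights = [0.2, 0.3, 0.5]  # experts' own weights, summing to 1

aggregated = aggregate(judgments, weights)
score = left_right_score(aggregated)
print([round(x, 3) for x in aggregated], round(score, 3))
```

The crisp score is a possibility score on [0, 1]; converting it into a failure rate requires a further mapping that is not shown here.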

Overall, this is a process of transformation from fuzzy to non-fuzzy. However, the definition of the fuzzy intervals is itself influenced by human subjectivity, which underlines the importance of confirming the experts’ own weights.

Through the above steps, the flow chart of the quantitative fault tree analysis of the model can be drawn, as shown in Figure 2.

Once the model is built, the failure analysis can be performed. The experts’ linguistic descriptions of each bottom event are collected and, following the model steps, Table 6 is obtained, which includes the quantitative analysis data for the three methods.

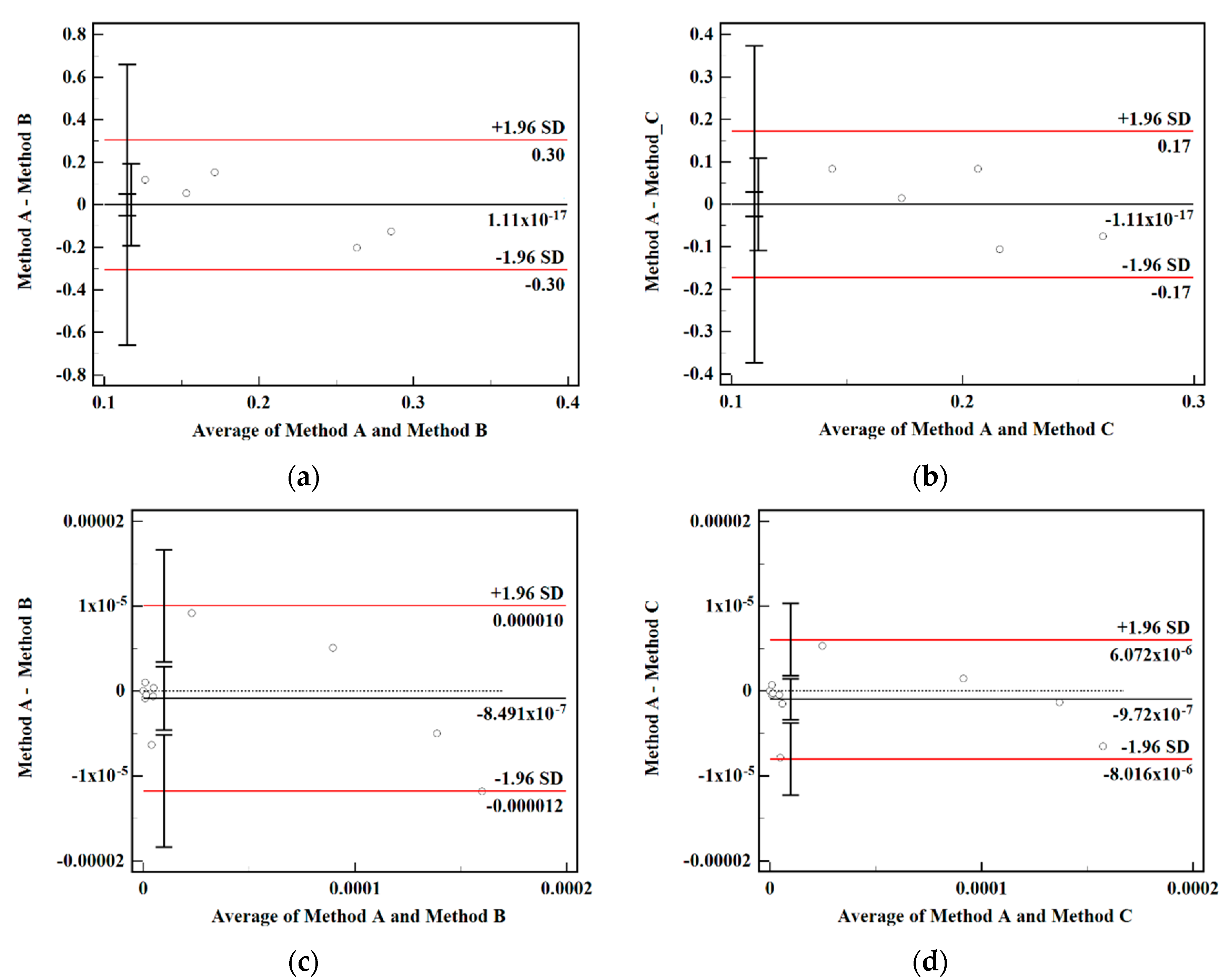

Table 6 lists the data obtained by the three methods for the fault analysis of high-viscosity ship engine oil. Failure analysis should give top priority to X11 (excessive cylinder liner/piston ring wear), X9 (too many machines), and X8 (poor combustion), which have the higher failure rates and importance. The most significant bottom event is excessive cylinder liner/piston ring wear, which is caused by constant reciprocating friction as well as working conditions, temperature, and a variety of other factors. The lubricant is in direct contact with these parts and carries away the wear particles, so prolonged use causes its viscosity to rise; the results therefore agree with practice. X3 (fuel system failure), X4 (severe emulsification of lubricating oil), X5 (cylinder oil leakage), X6 (high-viscosity lubricating oil is used) and other events have lower failure rates and an importance below 1%. X4 is the least important (0.000024): because the engine is the core component and water ingress would lead to serious accidents, the possibility of severe emulsification of the lubricating oil is the lowest and almost negligible. Combined with the fault tree, among the intermediate events the highest importance belongs to aging, deterioration, or abnormal oxidation, which should therefore be analyzed first when the engine oil viscosity is high.

In particular, for the same bottom event, the bottom event probability varies considerably with the experts’ own weights obtained by the different methods. For bottom events with small probability, such as X4 and X6, the bottom event failure rate fluctuates by more than 50 percent, indicating a large impact. In practical fault determination, importance is often used for ranking. The improved approach raises the importance of certain major fault events, which allows faults to be determined more rapidly.
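The ranking by importance can be sketched as follows. The sketch assumes independent bottom events and single-event minimal cut sets as in Section 2.1, so that the top event occurs if any bottom event occurs, and it uses the standard definition of critical importance (the partial derivative of the top event probability with respect to a bottom event probability, scaled by the ratio of the two probabilities). The failure rates are invented and are not the values of Table 6; the function names are hypothetical.

```python
import math


def top_event_probability(rates):
    """Top event probability for independent single-event cut sets (pure OR)."""
    return 1 - math.prod(1 - p for p in rates.values())


def critical_importance(rates):
    """Critical importance of each bottom event under the OR structure."""
    p_top = top_event_probability(rates)
    importance = {}
    for name, p in rates.items():
        # dP(top)/dp_i is the probability that no other bottom event occurs.
        others = math.prod(1 - q for k, q in rates.items() if k != name)
        importance[name] = others * p / p_top
    return importance


# Hypothetical bottom event failure rates.
rates = {"X4": 1e-6, "X8": 0.02, "X9": 0.03, "X11": 0.05}

p_top = top_event_probability(rates)
importance = critical_importance(rates)
ranking = sorted(importance, key=importance.get, reverse=True)
print(round(p_top, 4), ranking)
```

Under these assumptions the ranking follows the bottom event failure rates, so a change in the experts’ own weights that shifts a failure rate also shifts the diagnostic order.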