Artificial Intelligence in Gastrointestinal Surgery: A Systematic Review of Its Role in Laparoscopic and Robotic Surgery

Abstract

1. Introduction

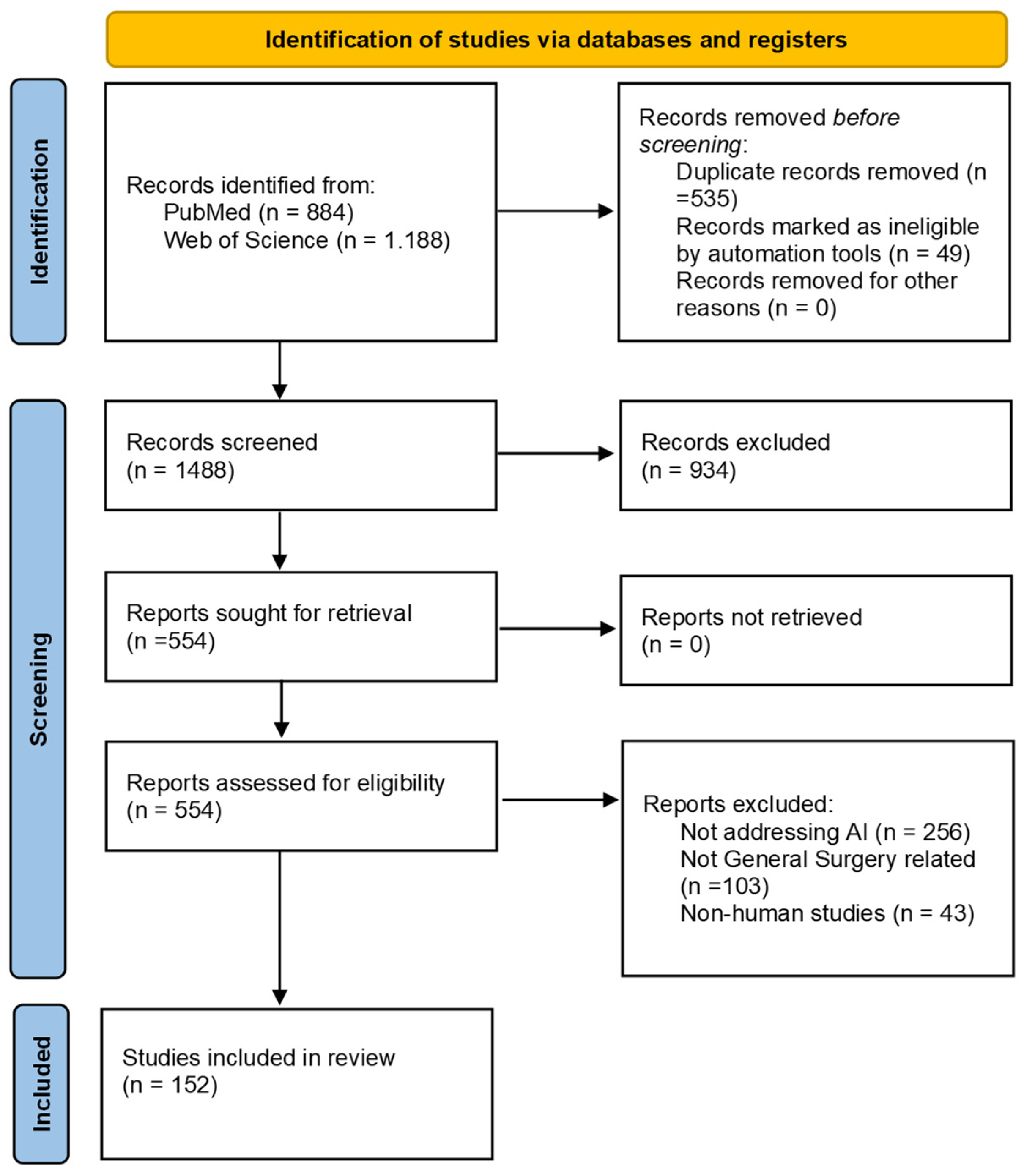

2. Materials and Methods

Inclusion criteria:

- Articles focusing on laparoscopic or robotic procedures performed in General Surgery settings (the term “General Surgery” was taken to include abdominal surgery, covering colorectal, upper gastrointestinal, bariatric, endocrine, abdominal wall, oncologic, hepatopancreaticobiliary, minimally invasive, and transplant surgery, as well as trauma and breast surgery).

- AI as the focus of the research, applied in a clinical context.

- Articles presenting original data.

Exclusion criteria:

- Studies focused on specialties outside General Surgery (e.g., Urology or Gynecology).

- AI not applied in a clinical setting (e.g., theoretical models or models without a specific surgical application).

- Reviews, meta-analyses, and abstracts.

- Non-human studies (studies using an animal model were nonetheless included when, for instance, the model was used in a training setting and the final objective was human surgery).

Study Classification

Included studies were classified by study design:

- Technical evaluations (evaluation of new AI-based tools or algorithms, often with proof-of-concept validation).

- Retrospective observational studies.

- Prospective observational studies.

- Feasibility studies (preliminary testing of AI applications in real or simulated surgical settings).

- Clinical trials.

- Dataset descriptions (description of publicly available or novel surgical datasets annotated for AI development and benchmarking).

- Simulation-based training assessments.

- Surveys or expert opinion studies.

Studies were also grouped into the following thematic domains:

- Surgical decision support and outcome prediction: studies that used AI to assist in preoperative or intraoperative clinical decision-making, risk stratification, or prediction of postoperative outcomes.

- Skill assessment and training: research focused on evaluating or improving surgical performance through AI-based performance metrics, simulation platforms, or feedback systems.

- Workflow recognition and intraoperative guidance: articles addressing the use of AI to identify surgical phases, provide context-aware support, or optimize procedural flow during surgery.

- Object or structure detection: studies aimed at recognizing anatomical structures, surgical instruments, or landmarks within the operative field.

- Augmented reality (AR) and navigation: research involving the integration of preoperative imaging with real-time intraoperative views.

- Image enhancement: studies focused on improving the visual quality of surgical imaging.

- Surgeon perception, preparedness, and attitudes: studies exploring surgeons’ knowledge, acceptance, and perceived challenges regarding the integration of AI and digital technologies into surgical practice.

3. Results

3.1. AI for Object or Structure Detection

3.2. AI for Surgical Skill Assessment and Training

3.3. AI for Workflow Recognition and Intraoperative Guidance

3.4. AI for Surgical Decision Support and Outcome Prediction

3.5. AI for Augmented Reality and Navigation

3.6. AI for Image Enhancement

3.7. Surgeon Perception, Preparedness, and Attitudes

3.8. Risk of Bias Assessment

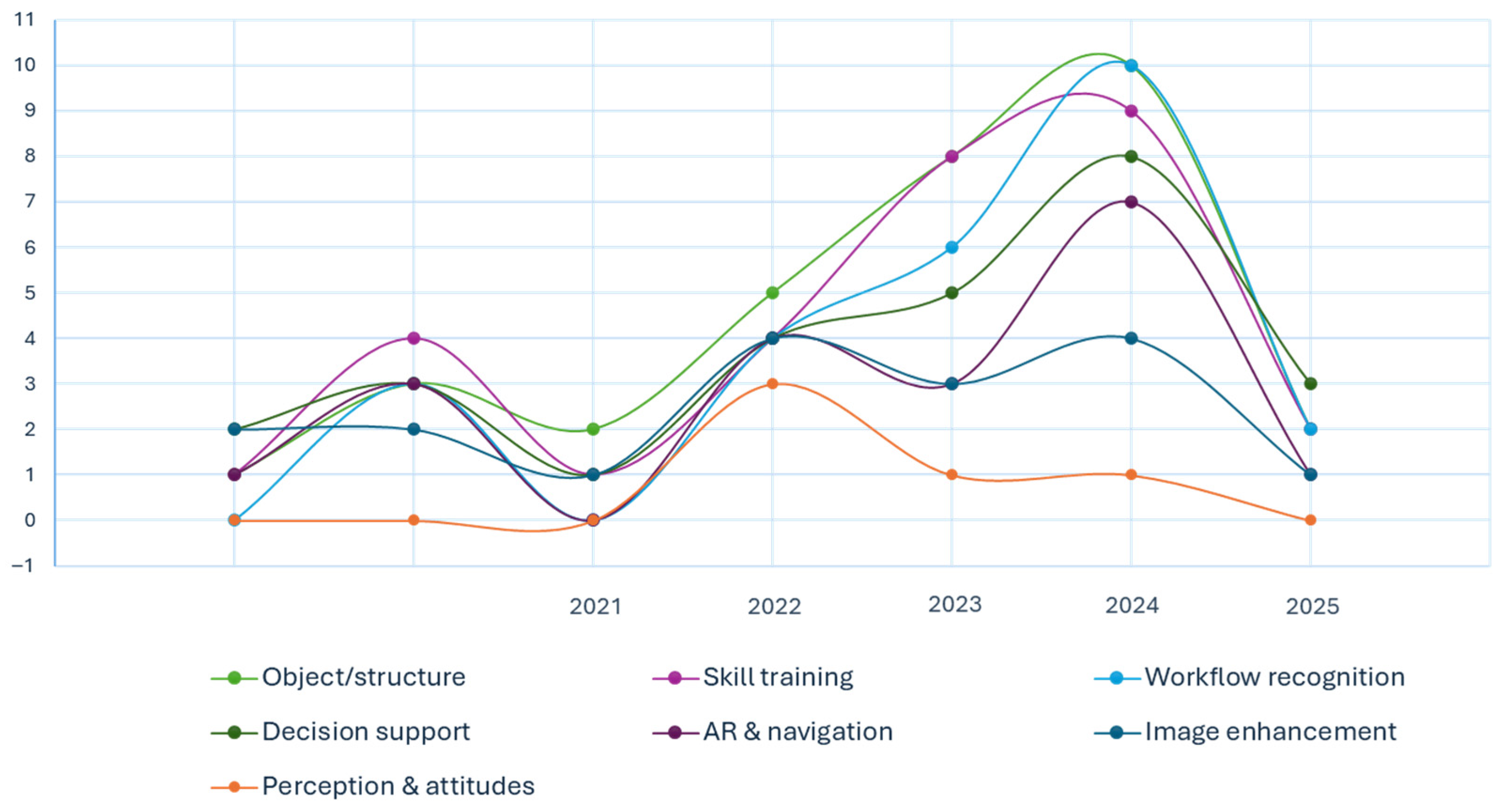

3.9. Quantitative Synthesis by Thematic Domain

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| CNN | Convolutional Neural Network |
| AR | Augmented Reality |
| TME | Total Mesorectal Excision |
| TaTME | Transanal Total Mesorectal Excision |
| CVS | Critical View of Safety |
| LLR | Laparoscopic Liver Resection |
| TAPP | Transabdominal Preperitoneal hernia repair |
| LC | Laparoscopic Cholecystectomy |
| TEP | Totally Extraperitoneal |
| VR | Virtual Reality |
| RALIHR | Robotic-Assisted Laparoscopic Inguinal Hernia Repair |
| NIR | Near-Infrared |
| MIS | Minimally Invasive Surgery |
| IoU | intersection over union |
| Dice | Dice similarity coefficient |
| F1 | F1-score |
| AUC | area under the receiver operating characteristic curve |
| TRE | target registration error |
| PSNR | peak signal-to-noise ratio |
| SSIM | structural similarity index |
| FPS | frames per second |
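The abbreviations above include several evaluation metrics (IoU, Dice, F1, AUC, TRE, PSNR, FPS). As a hedged illustration, not code taken from any reviewed study, the following minimal Python sketch shows how these quantities are commonly defined. Several conventions are assumptions that vary between papers: the value returned for two empty masks, the value of F1 when it is undefined, the PSNR peak value (255 for 8-bit images), and whether TRE is reported as a mean or a root-mean-square distance (a mean is used here). SSIM is omitted because it relies on windowed local image statistics that do not fit a short sketch.

```python
"""Illustrative definitions of evaluation metrics named in the abbreviations
table. A sketch for readers, not code from any reviewed study."""
import math
from typing import Sequence, Tuple


def _check_lengths(a: Sequence, b: Sequence) -> None:
    # zip() would silently truncate mismatched inputs, so fail loudly instead.
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")


def iou(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Intersection over union of two flattened binary masks (1 = structure)."""
    _check_lengths(pred, truth)
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    union = sum(1 for p, t in zip(pred, truth) if p or t)
    # Assumed convention: two empty masks count as perfect agreement.
    return inter / union if union else 1.0


def dice(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice similarity coefficient: 2|A ∩ B| / (|A| + |B|)."""
    _check_lengths(pred, truth)
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    return 2 * inter / total if total else 1.0


def f1(tp: int, fp: int, fn: int) -> float:
    """F1-score, the harmonic mean of precision and recall: 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0  # assumed value when undefined


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC as the probability that a random positive case outscores a
    random negative one (ties count one half), i.e. the Mann-Whitney form."""
    _check_lengths(scores, labels)
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def psnr(ref: Sequence[float], test: Sequence[float], max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    _check_lengths(ref, test)
    mse = sum((r - t) ** 2 for r, t in zip(ref, test)) / len(ref)
    return math.inf if mse == 0 else 10 * math.log10(max_val ** 2 / mse)


def tre(registered: Sequence[Tuple[float, ...]],
        ground_truth: Sequence[Tuple[float, ...]]) -> float:
    """Target registration error, here taken as the mean Euclidean distance
    between registered target points and their ground-truth positions."""
    _check_lengths(registered, ground_truth)
    dists = [math.dist(a, b) for a, b in zip(registered, ground_truth)]
    return sum(dists) / len(dists)


def fps(n_frames: int, elapsed_s: float) -> float:
    """Processing throughput in frames per second."""
    return n_frames / elapsed_s
```

For example, on the masks `[1, 1, 0, 0]` and `[1, 0, 1, 0]`, `iou` returns 1/3 and `dice` returns 0.5, consistent with the identity Dice = 2 × IoU / (1 + IoU); this is why the two overlap scores rank segmentations identically even though their numerical values differ.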

References

- Revolutionizing Patient Care: The Harmonious Blend of Artificial Intelligence and Surgical Tradition-All Databases. Available online: https://www.webofscience.com/wos/alldb/full-record/WOS:001179111800002 (accessed on 6 June 2025).

- Liao, W.; Zhu, Y.; Zhang, H.; Wang, D.; Zhang, L.; Chen, T.; Zhou, R.; Ye, Z. Artificial Intelligence-Assisted Phase Recognition and Skill Assessment in Laparoscopic Surgery: A Systematic Review. Front. Surg. 2025, 12, 1551838. [Google Scholar] [CrossRef]

- Hatcher, A.J.; Beneville, B.T.; Awad, M.M. The Evolution of Surgical Skills Simulation Education: Robotic Skills. Surgery 2025, 181, 109173. [Google Scholar] [CrossRef]

- Boal, M.W.E.; Anastasiou, D.; Tesfai, F.; Ghamrawi, W.; Mazomenos, E.; Curtis, N.; Collins, J.W.; Sridhar, A.; Kelly, J.; Stoyanov, D.; et al. Evaluation of Objective Tools and Artificial Intelligence in Robotic Surgery Technical Skills Assessment: A Systematic Review. Br. J. Surg. 2024, 111, znad331. [Google Scholar] [CrossRef] [PubMed]

- Knudsen, J.E.; Ghaffar, U.; Ma, R.; Hung, A.J. Clinical Applications of Artificial Intelligence in Robotic Surgery. J. Robot Surg. 2024, 18, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Zhang, C.; Hallbeck, M.S.; Salehinejad, H.; Thiels, C. The Integration of Artificial Intelligence in Robotic Surgery: A Narrative Review. Surgery 2024, 176, 552–557. [Google Scholar] [CrossRef] [PubMed]

- Gumbs, A.A.; Croner, R.; Abu-Hilal, M.; Bannone, E.; Ishizawa, T.; Spolverato, G.; Frigerio, I.; Siriwardena, A.; Messaoudi, N. Surgomics and the Artificial Intelligence, Radiomics, Genomics, Oncopathomics and Surgomics (AiRGOS) Project. Art. Int. Surg. 2023, 3, 180–185. [Google Scholar] [CrossRef]

- Guni, A.; Varma, P.; Zhang, J.; Fehervari, M.; Ashrafian, H. Artificial Intelligence in Surgery: The Future Is Now. Eur. Surg. Res. 2024, 65, 22–39. [Google Scholar] [CrossRef]

- Panesar, S.; Cagle, Y.; Chander, D.; Morey, J.; Fernandez-Miranda, J.; Kliot, M. Artificial Intelligence and the Future of Surgical Robotics. Ann. Surg. 2019, 270, 223–226. [Google Scholar] [CrossRef]

- Schijven, M.P.; Kroh, M. Editorial: Harnessing the Power of AI in Health Care: Benefits, Risks, and Preparation. Surg. Innov. 2023, 30, 417–418. [Google Scholar] [CrossRef]

- O’Sullivan, S.; Leonard, S.; Holzinger, A.; Allen, C.; Battaglia, F.; Nevejans, N.; van Leeuwen, F.W.B.; Sajid, M.I.; Friebe, M.; Ashrafian, H.; et al. Operational Framework and Training Standard Requirements for AI-Empowered Robotic Surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2020, 16, 1–13. [Google Scholar] [CrossRef]

- Vasey, B.; Lippert, K.A.N.; Khan, D.Z.; Ibrahim, M.; Koh, C.H.; Layard Horsfall, H.; Lee, K.S.; Williams, S.; Marcus, H.J.; McCulloch, P. Intraoperative Applications of Artificial Intelligence in Robotic Surgery: A Scoping Review of Current Development Stages and Levels of Autonomy. Ann. Surg. 2023, 278, 896–903. [Google Scholar] [CrossRef]

- Moglia, A.; Georgiou, K.; Georgiou, E.; Satava, R.M.; Cuschieri, A. A Systematic Review on Artificial Intelligence in Robot-Assisted Surgery. Int. J. Surg. 2021, 95, 106151. [Google Scholar] [CrossRef] [PubMed]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef] [PubMed]

- Petracchi, E.J.; Olivieri, S.E.; Varela, J.; Canullan, C.M.; Zandalazini, H.; Ocampo, C.; Quesada, B.M. Use of Artificial Intelligence in the Detection of the Critical View of Safety During Laparoscopic Cholecystectomy. J. Gastrointest. Surg. 2024, 28, 877–879. [Google Scholar] [CrossRef] [PubMed]

- Schnelldorfer, T.; Castro, J.; Goldar-Najafi, A.; Liu, L. Development of a Deep Learning System for Intra-Operative Identification of Cancer Metastases. Ann. Surg. 2024, 280, 1006–1013. [Google Scholar] [CrossRef]

- Chen, G.; Xie, Y.; Yang, B.; Tan, J.N.; Zhong, G.; Zhong, L.; Zhou, S.; Han, F. Artificial Intelligence Model for Perigastric Blood Vessel Recognition During Laparoscopic Radical Gastrectomy with D2 Lymphadenectomy in Locally Advanced Gastric Cancer. BJS Open 2025, 9, zrae158. [Google Scholar] [CrossRef]

- Tashiro, Y.; Aoki, T.; Kobayashi, N.; Tomioka, K.; Kumazu, Y.; Akabane, M.; Shibata, H.; Hirai, T.; Matsuda, K.; Kusano, T. Color-Coded Laparoscopic Liver Resection Using Artificial Intelligence: A Preliminary Study. J. Hepatobiliary Pancreat Sci. 2024, 31, 67–68. [Google Scholar] [CrossRef]

- Ryu, S.; Goto, K.; Kitagawa, T.; Kobayashi, T.; Shimada, J.; Ito, R.; Nakabayashi, Y. Real-Time Artificial Intelligence Navigation-Assisted Anatomical Recognition in Laparoscopic Colorectal Surgery. J. Gastrointest. Surg. 2023, 27, 3080–3082. [Google Scholar] [CrossRef]

- Wu, S.; Tang, M.; Liu, J.; Qin, D.; Wang, Y.; Zhai, S.; Bi, E.; Li, Y.; Wang, C.; Xiong, Y.; et al. Impact of an AI-Based Laparoscopic Cholecystectomy Coaching Program on the Surgical Performance: A Randomized Controlled Trial. Int. J. Surg. 2024, 110, 7816–7823. [Google Scholar] [CrossRef]

- Halperin, L.; Sroka, G.; Zuckerman, I.; Laufer, S. Automatic Performance Evaluation of the Intracorporeal Suture Exercise. Int. J. Comput. Assist. Radiol. Surg. 2023, 19, 83–86. [Google Scholar] [CrossRef]

- Chen, G.; Li, L.; Hubert, J.; Luo, B.; Yang, K.; Wang, X. Effectiveness of a Vision-Based Handle Trajectory Monitoring System in Studying Robotic Suture Operation. J. Robot Surg. 2023, 17, 2791–2798. [Google Scholar] [CrossRef]

- Hashimoto, D.A.; Rosman, G.; Witkowski, E.R.; Stafford, C.; Navarette-Welton, A.J.; Rattner, D.W.; Lillemoe, K.D.; Rus, D.L.; Meireles, O.R. Computer Vision Analysis of Intraoperative Video: Automated Recognition of Operative Steps in Laparoscopic Sleeve Gastrectomy. Ann. Surg. 2019, 270, 414–421. [Google Scholar] [CrossRef] [PubMed]

- Takeuchi, M.; Kawakubo, H.; Tsuji, T.; Maeda, Y.; Matsuda, S.; Fukuda, K.; Nakamura, R.; Kitagawa, Y. Evaluation of Surgical Complexity by Automated Surgical Process Recognition in Robotic Distal Gastrectomy Using Artificial Intelligence. Surg. Endosc. 2023, 37, 4517–4524. [Google Scholar] [CrossRef] [PubMed]

- Lopez-Lopez, V.; Morise, Z.; Albaladejo-González, M.; Gavara, C.G.; Goh, B.K.P.; Koh, Y.X.; Paul, S.J.; Hilal, M.A.; Mishima, K.; Krürger, J.A.P.; et al. Explainable Artificial Intelligence Prediction-Based Model in Laparoscopic Liver Surgery for Segments 7 and 8: An International Multicenter Study. Surg. Endosc. 2024, 38, 2411–2422. [Google Scholar] [CrossRef] [PubMed]

- Wang, H.N.; An, J.H.; Zong, L. Advances in Artificial Intelligence for Predicting Complication Risks Post-Laparoscopic Radical Gastrectomy for Gastric Cancer: A Significant Leap Forward. World J. Gastroenterol. 2024, 30, 4669–4671. [Google Scholar] [CrossRef]

- Guan, P.; Luo, H.; Guo, J.; Zhang, Y.; Jia, F. Intraoperative Laparoscopic Liver Surface Registration with Preoperative CT Using Mixing Features and Overlapping Region Masks. Int. J. Comput. Assist. Radiol. Surg. 2023, 18, 1521–1531. [Google Scholar] [CrossRef]

- Aoyama, Y.; Matsunobu, Y.; Etoh, T.; Suzuki, K.; Fujita, S.; Aiba, T.; Fujishima, H.; Empuku, S.; Kono, Y.; Endo, Y.; et al. Correction: Artificial Intelligence for Surgical Safety During Laparoscopic Gastrectomy for Gastric Cancer: Indication of Anatomical Landmarks Related to Postoperative Pancreatic Fistula Using Deep Learning. Surg. Endosc. 2024, 38, 6203. [Google Scholar] [CrossRef]

- Akbari, H.; Kosugi, Y.; Khorgami, Z. Image-Guided Preparation of the Calot’s Triangle in Laparoscopic Cholecystectomy. In Proceedings of the 31st Annual International Conference of the IEEE Engineering in Medicine and Biology Society: Engineering the Future of Biomedicine, EMBC 2009, Minneapolis, MN, USA, 3–6 September 2009; pp. 5649–5652. [Google Scholar] [CrossRef]

- Wagner, M.; Bihlmaier, A.; Kenngott, H.G.; Mietkowski, P.; Scheikl, P.M.; Bodenstedt, S.; Schiepe-Tiska, A.; Vetter, J.; Nickel, F.; Speidel, S.; et al. A Learning Robot for Cognitive Camera Control in Minimally Invasive Surgery. Surg. Endosc. 2021, 35, 5365–5374. [Google Scholar] [CrossRef]

- Acosta-Mérida, M.A.; Sánchez-Guillén, L.; Álvarez Gallego, M.; Barber, X.; Bellido Luque, J.A.; Sánchez Ramos, A. Encuesta Nacional Sobre La Gobernanza de Datos y Cirugía Digital: Desafíos y Oportunidades de Los Cirujanos En La Era de La Inteligencia Artificial. Cir. Esp. 2025, 103, 143–152. [Google Scholar] [CrossRef]

- Shafiei, S.B.; Shadpour, S.; Mohler, J.L. An Integrated Electroencephalography and Eye-Tracking Analysis Using EXtreme Gradient Boosting for Mental Workload Evaluation in Surgery. Hum. Factors J. Hum. Factors Ergon. Soc. 2025, 67, 464–484. [Google Scholar] [CrossRef]

- Khalid, M.U.; Laplante, S.; Masino, C.; Alseidi, A.; Jayaraman, S.; Zhang, H.; Mashouri, P.; Protserov, S.; Hunter, J.; Brudno, M.; et al. Use of Artificial Intelligence for Decision-Support to Avoid High-Risk Behaviors During Laparoscopic Cholecystectomy. Surg. Endosc. 2023, 37, 9467–9475. [Google Scholar] [CrossRef] [PubMed]

- Ward, T.M.; Hashimoto, D.A.; Ban, Y.; Rosman, G.; Meireles, O.R. Artificial Intelligence Prediction of Cholecystectomy Operative Course from Automated Identification of Gallbladder Inflammation. Surg. Endosc. 2022, 36, 6832–6840. [Google Scholar] [CrossRef]

- Orimoto, H.; Hirashita, T.; Ikeda, S.; Amano, S.; Kawamura, M.; Kawano, Y.; Takayama, H.; Masuda, T.; Endo, Y.; Matsunobu, Y.; et al. Development of an Artificial Intelligence System to Indicate Intraoperative Findings of Scarring in Laparoscopic Cholecystectomy for Cholecystitis. Surg. Endosc. 2025, 39, 1379–1387. [Google Scholar] [CrossRef] [PubMed]

- Kolbinger, F.R.; Rinner, F.M.; Jenke, A.C.; Carstens, M.; Krell, S.; Leger, S.; Distler, M.; Weitz, J.; Speidel, S.; Bodenstedt, S. Anatomy Segmentation in Laparoscopic Surgery: Comparison of Machine Learning and Human Expertise—An Experimental Study. Int. J. Surg. 2023, 109, 2962–2974. [Google Scholar] [CrossRef] [PubMed]

- Sato, Y.; Sese, J.; Matsuyama, T.; Onuki, M.; Mase, S.; Okuno, K.; Saito, K.; Fujiwara, N.; Hoshino, A.; Kawada, K.; et al. Preliminary Study for Developing a Navigation System for Gastric Cancer Surgery Using Artificial Intelligence. Surg. Today 2022, 52, 1753–1758. [Google Scholar] [CrossRef]

- Igaki, T.; Kitaguchi, D.; Kojima, S.; Hasegawa, H.; Takeshita, N.; Mori, K.; Kinugasa, Y.; Ito, M. Artificial Intelligence-Based Total Mesorectal Excision Plane Navigation in Laparoscopic Colorectal Surgery. Dis. Colon Rectum 2022, 65, E329–E333. [Google Scholar] [CrossRef]

- Jearanai, S.; Wangkulangkul, P.; Sae-Lim, W.; Cheewatanakornkul, S. Development of a Deep Learning Model for Safe Direct Optical Trocar Insertion in Minimally Invasive Surgery: An Innovative Method to Prevent Trocar Injuries. Surg. Endosc. 2023, 37, 7295–7304. [Google Scholar] [CrossRef]

- Oh, N.; Kim, B.; Kim, T.; Rhu, J.; Kim, J.; Choi, G.S. Real-Time Segmentation of Biliary Structure in Pure Laparoscopic Donor Hepatectomy. Sci. Rep. 2024, 14, 22508. [Google Scholar] [CrossRef]

- Benavides, D.; Cisnal, A.; Fontúrbel, C.; de la Fuente, E.; Fraile, J.C. Real-Time Tool Localization for Laparoscopic Surgery Using Convolutional Neural Network. Sensors 2024, 24, 4191. [Google Scholar] [CrossRef]

- Gazis, A.; Karaiskos, P.; Loukas, C. Surgical Gesture Recognition in Laparoscopic Tasks Based on the Transformer Network and Self-Supervised Learning. Bioengineering 2022, 9, 737. [Google Scholar] [CrossRef]

- Tomioka, K.; Aoki, T.; Kobayashi, N.; Tashiro, Y.; Kumazu, Y.; Shibata, H.; Hirai, T.; Yamazaki, T.; Saito, K.; Yamazaki, K.; et al. Development of a Novel Artificial Intelligence System for Laparoscopic Hepatectomy. Anticancer. Res. 2023, 43, 5235–5243. [Google Scholar] [CrossRef]

- Cui, P.; Zhao, S.; Chen, W. Identification of the Vas Deferens in Laparoscopic Inguinal Hernia Repair Surgery Using the Convolutional Neural Network. J. Healthc. Eng. 2021, 2021, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Memida, S.; Miura, S. Identification of Surgical Forceps Using YOLACT++ in Different Lighted Environments. In Proceedings of the 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023; IEEE: New York, NY, USA; pp. 1–4. [Google Scholar]

- Wesierski, D.; Jezierska, A. Instrument Detection and Pose Estimation with Rigid Part Mixtures Model in Video-Assisted Surgeries. Med. Image Anal. 2018, 46, 244–265. [Google Scholar] [CrossRef] [PubMed]

- Jurosch, F.; Wagner, L.; Jell, A.; Islertas, E.; Wilhelm, D.; Berlet, M. Extra-Abdominal Trocar and Instrument Detection for Enhanced Surgical Workflow Understanding. Int. J. Comput. Assist. Radiol. Surg. 2024, 19, 1939–1945. [Google Scholar] [CrossRef] [PubMed]

- Sánchez-Brizuela, G.; Santos-Criado, F.J.; Sanz-Gobernado, D.; de la Fuente-López, E.; Fraile, J.C.; Pérez-Turiel, J.; Cisnal, A. Gauze Detection and Segmentation in Minimally Invasive Surgery Video Using Convolutional Neural Networks. Sensors 2022, 22, 5180. [Google Scholar] [CrossRef]

- Lai, S.-L.; Chen, C.-S.; Lin, B.-R.; Chang, R.-F. Intraoperative Detection of Surgical Gauze Using Deep Convolutional Neural Network. Ann. Biomed. Eng. 2023, 51, 352–362. [Google Scholar] [CrossRef]

- Ehrlich, J.; Jamzad, A.; Asselin, M.; Rodgers, J.R.; Kaufmann, M.; Haidegger, T.; Rudan, J.; Mousavi, P.; Fichtinger, G.; Ungi, T. Sensor-Based Automated Detection of Electrosurgical Cautery States. Sensors 2022, 22, 5808. [Google Scholar] [CrossRef]

- Nwoye, C.I.; Mutter, D.; Marescaux, J.; Padoy, N. Weakly Supervised Convolutional LSTM Approach for Tool Tracking in Laparoscopic Videos. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1059–1067. [Google Scholar] [CrossRef]

- Carstens, M.; Rinner, F.M.; Bodenstedt, S.; Jenke, A.C.; Weitz, J.; Distler, M.; Speidel, S.; Kolbinger, F.R. The Dresden Surgical Anatomy Dataset for Abdominal Organ Segmentation in Surgical Data Science. Sci. Data 2023, 10, 3. [Google Scholar] [CrossRef]

- Yin, Y.; Luo, S.; Zhou, J.; Kang, L.; Chen, C.Y.C. LDCNet: Lightweight Dynamic Convolution Network for Laparoscopic Procedures Image Segmentation. Neural. Netw. 2024, 170, 441–452. [Google Scholar] [CrossRef]

- Tashiro, Y.; Aoki, T.; Kobayashi, N.; Tomioka, K.; Saito, K.; Matsuda, K.; Kusano, T. Novel Navigation for Laparoscopic Cholecystectomy Fusing Artificial Intelligence and Indocyanine Green Fluorescent Imaging. J. Hepatobiliary Pancreat Sci. 2024, 31, 305–307. [Google Scholar] [CrossRef] [PubMed]

- Kitaguchi, D.; Harai, Y.; Kosugi, N.; Hayashi, K.; Kojima, S.; Ishikawa, Y.; Yamada, A.; Hasegawa, H.; Takeshita, N.; Ito, M. Artificial Intelligence for the Recognition of Key Anatomical Structures in Laparoscopic Colorectal Surgery. Br. J. Surg. 2023, 110, 1355–1358. [Google Scholar] [CrossRef] [PubMed]

- Han, F.; Zhong, G.; Zhi, S.; Han, N.; Jiang, Y.; Tan, J.; Zhong, L.; Zhou, S. Artificial Intelligence Recognition System of Pelvic Autonomic Nerve During Total Mesorectal Excision. Dis. Colon Rectum 2024, 68, 308–315. [Google Scholar] [CrossRef] [PubMed]

- Frey, S.; Facente, F.; Wei, W.; Ekmekci, E.S.; Séjor, E.; Baqué, P.; Durand, M.; Delingette, H.; Bremond, F.; Berthet-Rayne, P.; et al. Optimizing Intraoperative AI: Evaluation of YOLOv8 for Real-Time Recognition of Robotic and Laparoscopic Instruments. J. Robot Surg. 2025, 19, 131. [Google Scholar] [CrossRef]

- ElMoaqet, H.; Janini, R.; Ryalat, M.; Al-Refai, G.; Abdulbaki Alshirbaji, T.; Jalal, N.A.; Neumuth, T.; Moeller, K.; Navab, N. Using Masked Image Modelling Transformer Architecture for Laparoscopic Surgical Tool Classification and Localization. Sensors 2025, 25, 3017. [Google Scholar] [CrossRef]

- Korndorffer, J.R.; Hawn, M.T.; Spain, D.A.; Knowlton, L.M.; Azagury, D.E.; Nassar, A.K.; Lau, J.N.; Arnow, K.D.; Trickey, A.W.; Pugh, C.M. Situating Artificial Intelligence in Surgery: A Focus on Disease Severity. Ann. Surg. 2020, 272, 523–528. [Google Scholar] [CrossRef]

- Park, S.H.; Park, H.M.; Baek, K.R.; Ahn, H.M.; Lee, I.Y.; Son, G.M. Artificial Intelligence Based Real-Time Microcirculation Analysis System for Laparoscopic Colorectal Surgery. World J. Gastroenterol. 2020, 26, 6945–6962. [Google Scholar] [CrossRef]

- Ryu, K.; Kitaguchi, D.; Nakajima, K.; Ishikawa, Y.; Harai, Y.; Yamada, A.; Lee, Y.; Hayashi, K.; Kosugi, N.; Hasegawa, H.; et al. Deep Learning-Based Vessel Automatic Recognition for Laparoscopic Right Hemicolectomy. Surg. Endosc. 2024, 38, 171–178. [Google Scholar] [CrossRef]

- Zygomalas, A.; Kalles, D.; Katsiakis, N.; Anastasopoulos, A.; Skroubis, G. Artificial Intelligence Assisted Recognition of Anatomical Landmarks and Laparoscopic Instruments in Transabdominal Preperitoneal Inguinal Hernia Repair. Surg. Innov. 2024, 31, 178–184. [Google Scholar] [CrossRef]

- Mita, K.; Kobayashi, N.; Takahashi, K.; Sakai, T.; Shimaguchi, M.; Kouno, M.; Toyota, N.; Hatano, M.; Toyota, T.; Sasaki, J. Anatomical Recognition of Dissection Layers, Nerves, Vas Deferens, and Microvessels Using Artificial Intelligence During Transabdominal Preperitoneal Inguinal Hernia Repair. Hernia 2025, 29, 1–5. [Google Scholar] [CrossRef]

- Horita, K.; Hida, K.; Itatani, Y.; Fujita, H.; Hidaka, Y.; Yamamoto, G.; Ito, M.; Obama, K. Real-Time Detection of Active Bleeding in Laparoscopic Colectomy Using Artificial Intelligence. Surg. Endosc. 2024, 38, 3461–3469. [Google Scholar] [CrossRef] [PubMed]

- Kinoshita, K.; Maruyama, T.; Kobayashi, N.; Imanishi, S.; Maruyama, M.; Ohira, G.; Endo, S.; Tochigi, T.; Kinoshita, M.; Fukui, Y.; et al. An Artificial Intelligence-Based Nerve Recognition Model Is Useful as Surgical Support Technology and as an Educational Tool in Laparoscopic and Robot-Assisted Rectal Cancer Surgery. Surg. Endosc. 2024, 38, 5394–5404. [Google Scholar] [CrossRef] [PubMed]

- Takeuchi, M.; Collins, T.; Lipps, C.; Haller, M.; Uwineza, J.; Okamoto, N.; Nkusi, R.; Marescaux, J.; Kawakubo, H.; Kitagawa, Y.; et al. Towards Automatic Verification of the Critical View of the Myopectineal Orifice with Artificial Intelligence. Surg. Endosc. 2023, 37, 4525–4534. [Google Scholar] [CrossRef] [PubMed]

- Une, N.; Kobayashi, S.; Kitaguchi, D.; Sunakawa, T.; Sasaki, K.; Ogane, T.; Hayashi, K.; Kosugi, N.; Kudo, M.; Sugimoto, M.; et al. Intraoperative Artificial Intelligence System Identifying Liver Vessels in Laparoscopic Liver Resection: A Retrospective Experimental Study. Surg. Endosc. 2024, 38, 1088–1095. [Google Scholar] [CrossRef]

- Kojima, S.; Kitaguchi, D.; Igaki, T.; Nakajima, K.; Ishikawa, Y.; Harai, Y.; Yamada, A.; Lee, Y.; Hayashi, K.; Kosugi, N.; et al. Deep-Learning-Based Semantic Segmentation of Autonomic Nerves from Laparoscopic Images of Colorectal Surgery: An Experimental Pilot Study. Int. J. Surg. 2023, 109, 813–820. [Google Scholar] [CrossRef]

- Nakanuma, H.; Endo, Y.; Fujinaga, A.; Kawamura, M.; Kawasaki, T.; Masuda, T.; Hirashita, T.; Etoh, T.; Shinozuka, K.; Matsunobu, Y.; et al. An Intraoperative Artificial Intelligence System Identifying Anatomical Landmarks for Laparoscopic Cholecystectomy: A Prospective Clinical Feasibility Trial (J-SUMMIT-C-01). Surg. Endosc. 2023, 37, 1933–1942. [Google Scholar] [CrossRef]

- Loukas, C.; Gazis, A.; Schizas, D. Multiple Instance Convolutional Neural Network for Gallbladder Assessment from Laparoscopic Images. Int. J. Med. Robot. Comput. Assist. Surg. 2022, 18, e2445. [Google Scholar] [CrossRef]

- Endo, Y.; Tokuyasu, T.; Mori, Y.; Asai, K.; Umezawa, A.; Kawamura, M.; Fujinaga, A.; Ejima, A.; Kimura, M.; Inomata, M. Impact of AI System on Recognition for Anatomical Landmarks Related to Reducing Bile Duct Injury During Laparoscopic Cholecystectomy. Surg. Endosc. 2023, 37, 5752–5759. [Google Scholar] [CrossRef]

- Fried, G.M.; Ortenzi, M.; Dayan, D.; Nizri, E.; Mirkin, Y.; Maril, S.; Asselmann, D.; Wolf, T. Surgical Intelligence Can Lead to Higher Adoption of Best Practices in Minimally Invasive Surgery. Ann. Surg. 2024, 280, 525–534. [Google Scholar] [CrossRef]

- Mascagni, P.; Vardazaryan, A.; Alapatt, D.; Urade, T.; Emre, T.; Fiorillo, C.; Pessaux, P.; Mutter, D.; Marescaux, J.; Costamagna, G.; et al. Artificial Intelligence for Surgical Safety Automatic Assessment of the Critical View of Safety in Laparoscopic Cholecystectomy Using Deep Learning. Ann. Surg. 2022, 275, 955–961. [Google Scholar] [CrossRef]

- Fujinaga, A.; Endo, Y.; Etoh, T.; Kawamura, M.; Nakanuma, H.; Kawasaki, T.; Masuda, T.; Hirashita, T.; Kimura, M.; Matsunobu, Y.; et al. Development of a Cross-Artificial Intelligence System for Identifying Intraoperative Anatomical Landmarks and Surgical Phases During Laparoscopic Cholecystectomy. Surg. Endosc. 2023, 37, 6118–6128. [Google Scholar] [CrossRef]

- Kawamura, M.; Endo, Y.; Fujinaga, A.; Orimoto, H.; Amano, S.; Kawasaki, T.; Kawano, Y.; Masuda, T.; Hirashita, T.; Kimura, M.; et al. Development of an Artificial Intelligence System for Real-Time Intraoperative Assessment of the Critical View of Safety in Laparoscopic Cholecystectomy. Surg. Endosc. 2023, 37, 8755–8763. [Google Scholar] [CrossRef]

- Tokuyasu, T.; Iwashita, Y.; Matsunobu, Y.; Kamiyama, T.; Ishikake, M.; Sakaguchi, S.; Ebe, K.; Tada, K.; Endo, Y.; Etoh, T.; et al. Development of an Artificial Intelligence System Using Deep Learning to Indicate Anatomical Landmarks During Laparoscopic Cholecystectomy. Surg. Endosc. 2021, 35, 1651–1658. [Google Scholar] [CrossRef]

- Zhang, K.; Qiao, Z.; Yang, L.; Zhang, T.; Liu, F.; Sun, D.; Xie, T.; Guo, L.; Lu, C. Computer-Vision-Based Artificial Intelligence for Detection and Recognition of Instruments and Organs During Radical Laparoscopic Gastrectomy for Gastric Cancer: A Multicenter Study. Chin. J. Gastrointest. Surg. 2024, 27, 464–470. [Google Scholar] [CrossRef]

- Ortenzi, M.; Rapoport Ferman, J.; Antolin, A.; Bar, O.; Zohar, M.; Perry, O.; Asselmann, D.; Wolf, T. A Novel High Accuracy Model for Automatic Surgical Workflow Recognition Using Artificial Intelligence in Laparoscopic Totally Extraperitoneal Inguinal Hernia Repair (TEP). Surg. Endosc. 2023, 37, 8818–8828. [Google Scholar] [CrossRef] [PubMed]

- Belmar, F.; Gaete, M.I.; Escalona, G.; Carnier, M.; Durán, V.; Villagrán, I.; Asbun, D.; Cortés, M.; Neyem, A.; Crovari, F.; et al. Artificial Intelligence in Laparoscopic Simulation: A Promising Future for Large-Scale Automated Evaluations. Surg. Endosc. 2023, 37, 4942–4946. [Google Scholar] [CrossRef] [PubMed]

- Ismail Fawaz, H.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.A. Accurate and Interpretable Evaluation of Surgical Skills from Kinematic Data Using Fully Convolutional Neural Networks. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1611–1617. [Google Scholar] [CrossRef]

- Nguyen, X.A.; Ljuhar, D.; Pacilli, M.; Nataraja, R.M.; Chauhan, S. Surgical Skill Levels: Classification and Analysis Using Deep Neural Network Model and Motion Signals. Comput. Methods Programs Biomed. 2019, 177, 1–8. [Google Scholar] [CrossRef]

- SATR-DL: Improving Surgical Skill Assessment and Task Recognition in Robot-Assisted Surgery with Deep Neural Networks-All Databases. Available online: https://www.webofscience.com/wos/alldb/full-record/WOS:000596231902067 (accessed on 25 May 2025).

- Funke, I.; Mees, S.T.; Weitz, J.; Speidel, S. Video-Based Surgical Skill Assessment Using 3D Convolutional Neural Networks. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1217–1225. [Google Scholar] [CrossRef]

- Partridge, R.W.; Hughes, M.A.; Brennan, P.M.; Hennessey, I.A.M. Accessible Laparoscopic Instrument Tracking (“InsTrac”): Construct Validity in a Take-Home Box Simulator. J. Laparoendosc. Adv. Surg. Tech. 2014, 24, 578–583. [Google Scholar] [CrossRef]

- Derathé, A.; Reche, F.; Guy, S.; Charrière, K.; Trilling, B.; Jannin, P.; Moreau-Gaudry, A.; Gibaud, B.; Voros, S. LapEx: A New Multimodal Dataset for Context Recognition and Practice Assessment in Laparoscopic Surgery. Sci. Data 2025, 12, 342. [Google Scholar] [CrossRef]

- Bogar, P.Z.; Virag, M.; Bene, M.; Hardi, P.; Matuz, A.; Schlegl, A.T.; Toth, L.; Molnar, F.; Nagy, B.; Rendeki, S.; et al. Validation of a Novel, Low-Fidelity Virtual Reality Simulator and an Artificial Intelligence Assessment Approach for Peg Transfer Laparoscopic Training. Sci. Rep. 2024, 14, 16702. [Google Scholar] [CrossRef]

- Matsumoto, S.; Kawahira, H.; Fukata, K.; Doi, Y.; Kobayashi, N.; Hosoya, Y.; Sata, N. Laparoscopic Distal Gastrectomy Skill Evaluation from Video: A New Artificial Intelligence-Based Instrument Identification System. Sci. Rep. 2024, 14, 12432. [Google Scholar] [CrossRef]

- Gillani, M.; Rupji, M.; Paul Olson, T.J.; Sullivan, P.; Shaffer, V.O.; Balch, G.C.; Shields, M.C.; Liu, Y.; Rosen, S.A. Objective Performance Indicators During Robotic Right Colectomy Differ According to Surgeon Skill. J. Surg. Res. 2024, 302, 836–844. [Google Scholar] [CrossRef]

- Yang, J.H.; Goodman, E.D.; Dawes, A.J.; Gahagan, J.V.; Esquivel, M.M.; Liebert, C.A.; Kin, C.; Yeung, S.; Gurland, B.H. Using AI and Computer Vision to Analyze Technical Proficiency in Robotic Surgery. Surg. Endosc. 2023, 37, 3010–3017. [Google Scholar] [CrossRef] [PubMed]

- Caballero, D.; Pérez-Salazar, M.J.; Sánchez-Margallo, J.A.; Sánchez-Margallo, F.M. Applying Artificial Intelligence on EDA Sensor Data to Predict Stress on Minimally Invasive Robotic-Assisted Surgery. Int. J. Comput. Assist. Radiol. Surg. 2024, 19, 1953–1963. [Google Scholar] [CrossRef] [PubMed]

- Yanik, E.; Ainam, J.P.; Fu, Y.; Schwaitzberg, S.; Cavuoto, L.; De, S. Video-Based Skill Acquisition Assessment in Laparoscopic Surgery Using Deep Learning. Glob. Surg. Educ.-J. Assoc. Surg. Educ. 2024, 3, 26. [Google Scholar] [CrossRef]

- Nakajima, K.; Kitaguchi, D.; Takenaka, S.; Tanaka, A.; Ryu, K.; Takeshita, N.; Kinugasa, Y.; Ito, M. Automated Surgical Skill Assessment in Colorectal Surgery Using a Deep Learning-Based Surgical Phase Recognition Model. Surg. Endosc. 2024, 38, 6347–6355. [Google Scholar] [CrossRef]

- Yamazaki, Y.; Kanaji, S.; Kudo, T.; Takiguchi, G.; Urakawa, N.; Hasegawa, H.; Yamamoto, M.; Matsuda, Y.; Yamashita, K.; Matsuda, T.; et al. Quantitative Comparison of Surgical Device Usage in Laparoscopic Gastrectomy Between Surgeons’ Skill Levels: An Automated Analysis Using a Neural Network. J. Gastrointest. Surg. 2022, 26, 1006–1014. [Google Scholar] [CrossRef]

- Allen, B.; Nistor, V.; Dutson, E.; Carman, G.; Lewis, C.; Faloutsos, P. Support Vector Machines Improve the Accuracy of Evaluation for the Performance of Laparoscopic Training Tasks. Surg. Endosc. 2010, 24, 170–178. [Google Scholar] [CrossRef]

- Fukuta, A.; Yamashita, S.; Maniwa, J.; Tamaki, A.; Kondo, T.; Kawakubo, N.; Nagata, K.; Matsuura, T.; Tajiri, T. Artificial Intelligence Facilitates the Potential of Simulator Training: An Innovative Laparoscopic Surgical Skill Validation System Using Artificial Intelligence Technology. Int. J. Comput. Assist. Radiol. Surg. 2024, 20, 597–603. [Google Scholar] [CrossRef]

- Moglia, A.; Morelli, L.; D’Ischia, R.; Fatucchi, L.M.; Pucci, V.; Berchiolli, R.; Ferrari, M.; Cuschieri, A. Ensemble Deep Learning for the Prediction of Proficiency at a Virtual Simulator for Robot-Assisted Surgery. Surg. Endosc. 2022, 36, 6473–6479. [Google Scholar] [CrossRef]

- Ju, S.; Jiang, P.; Jin, Y.; Fu, Y.; Wang, X.; Tan, X.; Han, Y.; Yin, R.; Pu, D.; Li, K. Automatic Gesture Recognition and Evaluation in Peg Transfer Tasks of Laparoscopic Surgery Training. Surg. Endosc. 2025, 39, 3749–3759. [Google Scholar] [CrossRef]

- Cruz, E.; Selman, R.; Figueroa, Ú.; Belmar, F.; Jarry, C.; Sanhueza, D.; Escalona, G.; Carnier, M.; Varas, J. A Scalable Solution: Effective AI Implementation in Laparoscopic Simulation Training Assessments. Glob. Surg. Educ. J. Assoc. Surg. Educ. 2025, 4, 46. [Google Scholar] [CrossRef]

- Chen, Z.; Yang, D.; Li, A.; Sun, L.; Zhao, J.; Liu, J.; Liu, L.; Zhou, X.; Chen, Y.; Cai, Y.; et al. Decoding Surgical Skill: An Objective and Efficient Algorithm for Surgical Skill Classification Based on Surgical Gesture Features—Experimental Studies. Int. J. Surg. 2024, 110, 1441–1449. [Google Scholar] [CrossRef]

- Erlich-Feingold, O.; Anteby, R.; Klang, E.; Soffer, S.; Cordoba, M.; Nachmany, I.; Amiel, I.; Barash, Y. Artificial Intelligence Classifies Surgical Technical Skills in Simulated Laparoscopy: A Pilot Study. Surg. Endosc. 2025, 39, 3592–3599. [Google Scholar] [CrossRef] [PubMed]

- Power, D.; Burke, C.; Madden, M.G.; Ullah, I. Automated Assessment of Simulated Laparoscopic Surgical Skill Performance Using Deep Learning. Sci. Rep. 2025, 15, 13591. [Google Scholar] [CrossRef] [PubMed]

- Alonso-Silverio, G.A.; Pérez-Escamirosa, F.; Bruno-Sanchez, R.; Ortiz-Simon, J.L.; Muñoz-Guerrero, R.; Minor-Martinez, A.; Alarcón-Paredes, A. Development of a Laparoscopic Box Trainer Based on Open Source Hardware and Artificial Intelligence for Objective Assessment of Surgical Psychomotor Skills. Surg. Innov. 2018, 25, 380–388. [Google Scholar] [CrossRef]

- Pan, J.J.; Chang, J.; Yang, X.; Zhang, J.J.; Qureshi, T.; Howell, R.; Hickish, T. Graphic and Haptic Simulation System for Virtual Laparoscopic Rectum Surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2011, 7, 304–317. [Google Scholar] [CrossRef] [PubMed]

- Ershad, M.; Rege, R.; Majewicz Fey, A. Automatic and near Real-Time Stylistic Behavior Assessment in Robotic Surgery. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 635–643. [Google Scholar] [CrossRef]

- Kowalewski, K.F.; Garrow, C.R.; Schmidt, M.W.; Benner, L.; Müller-Stich, B.P.; Nickel, F. Sensor-Based Machine Learning for Workflow Detection and as Key to Detect Expert Level in Laparoscopic Suturing and Knot-Tying. Surg. Endosc. 2019, 33, 3732–3740. [Google Scholar] [CrossRef] [PubMed]

- St John, A.; Khalid, M.U.; Masino, C.; Noroozi, M.; Alseidi, A.; Hashimoto, D.A.; Altieri, M.; Serrot, F.; Kersten-Oertel, M.; Madani, A. LapBot-Safe Chole: Validation of an Artificial Intelligence-Powered Mobile Game App to Teach Safe Cholecystectomy. Surg. Endosc. 2024, 38, 5274–5284. [Google Scholar] [CrossRef] [PubMed]

- Yen, H.H.; Hsiao, Y.H.; Yang, M.H.; Huang, J.Y.; Lin, H.T.; Huang, C.C.; Blue, J.; Ho, M.C. Automated Surgical Action Recognition and Competency Assessment in Laparoscopic Cholecystectomy: A Proof-of-Concept Study. Surg. Endosc. 2025, 39, 3006–3016. [Google Scholar] [CrossRef] [PubMed]

- Nakajima, K.; Takenaka, S.; Kitaguchi, D.; Tanaka, A.; Ryu, K.; Takeshita, N.; Kinugasa, Y.; Ito, M. Artificial Intelligence Assessment of Tissue-Dissection Efficiency in Laparoscopic Colorectal Surgery. Langenbecks Arch. Surg. 2025, 410, 80. [Google Scholar] [CrossRef]

- Igaki, T.; Kitaguchi, D.; Matsuzaki, H.; Nakajima, K.; Kojima, S.; Hasegawa, H.; Takeshita, N.; Kinugasa, Y.; Ito, M. Automatic Surgical Skill Assessment System Based on Concordance of Standardized Surgical Field Development Using Artificial Intelligence. JAMA Surg. 2023, 158, e231131. [Google Scholar] [CrossRef]

- Smith, R.; Julian, D.; Dubin, A. Deep Neural Networks Are Effective Tools for Assessing Performance During Surgical Training. J. Robot. Surg. 2022, 16, 559–562. [Google Scholar] [CrossRef]

- Loukas, C.; Seimenis, I.; Prevezanou, K.; Schizas, D. Prediction of Remaining Surgery Duration in Laparoscopic Videos Based on Visual Saliency and the Transformer Network. Int. J. Med. Robot. Comput. Assist. Surg. 2024, 20, e2632. [Google Scholar] [CrossRef]

- Wagner, M.; Müller-Stich, B.P.; Kisilenko, A.; Tran, D.; Heger, P.; Mündermann, L.; Lubotsky, D.M.; Müller, B.; Davitashvili, T.; Capek, M.; et al. Comparative Validation of Machine Learning Algorithms for Surgical Workflow and Skill Analysis with the HeiChole Benchmark. Med. Image Anal. 2023, 86, 102770. [Google Scholar] [CrossRef]

- Zhang, B.; Goel, B.; Sarhan, M.H.; Goel, V.K.; Abukhalil, R.; Kalesan, B.; Stottler, N.; Petculescu, S. Surgical Workflow Recognition with Temporal Convolution and Transformer for Action Segmentation. Int. J. Comput. Assist. Radiol. Surg. 2023, 18, 785–794. [Google Scholar] [CrossRef]

- Park, B.; Chi, H.; Park, B.; Lee, J.; Jin, H.S.; Park, S.; Hyung, W.J.; Choi, M.K. Visual Modalities-Based Multimodal Fusion for Surgical Phase Recognition. Comput. Biol. Med. 2023, 166, 107453. [Google Scholar] [CrossRef]

- Twinanda, A.P.; Yengera, G.; Mutter, D.; Marescaux, J.; Padoy, N. RSDNet: Learning to Predict Remaining Surgery Duration from Laparoscopic Videos Without Manual Annotations. IEEE Trans. Med. Imaging 2019, 38, 1069–1078. [Google Scholar] [CrossRef]

- Zang, C.; Turkcan, M.K.; Narasimhan, S.; Cao, Y.; Yarali, K.; Xiang, Z.; Szot, S.; Ahmad, F.; Choksi, S.; Bitner, D.P.; et al. Surgical Phase Recognition in Inguinal Hernia Repair—AI-Based Confirmatory Baseline and Exploration of Competitive Models. Bioengineering 2023, 10, 654. [Google Scholar] [CrossRef]

- Cartucho, J.; Weld, A.; Tukra, S.; Xu, H.; Matsuzaki, H.; Ishikawa, T.; Kwon, M.; Jang, Y.E.; Kim, K.J.; Lee, G.; et al. SurgT Challenge: Benchmark of Soft-Tissue Trackers for Robotic Surgery. Med. Image Anal. 2024, 91, 102985. [Google Scholar] [CrossRef]

- Zheng, Y.; Leonard, G.; Zeh, H.; Fey, A.M. Frame-Wise Detection of Surgeon Stress Levels During Laparoscopic Training Using Kinematic Data. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 785–794. [Google Scholar] [CrossRef]

- Zhai, Y.; Chen, Z.; Zheng, Z.; Wang, X.; Yan, X.; Liu, X.; Yin, J.; Wang, J.; Zhang, J. Artificial Intelligence for Automatic Surgical Phase Recognition of Laparoscopic Gastrectomy in Gastric Cancer. Int. J. Comput. Assist. Radiol. Surg. 2024, 19, 345–353. [Google Scholar] [CrossRef]

- You, J.; Cai, H.; Wang, Y.; Bian, A.; Cheng, K.; Meng, L.; Wang, X.; Gao, P.; Chen, S.; Cai, Y.; et al. Artificial Intelligence Automated Surgical Phases Recognition in Intraoperative Videos of Laparoscopic Pancreatoduodenectomy. Surg. Endosc. 2024, 38, 4894–4905. [Google Scholar] [CrossRef] [PubMed]

- Zheng, Q.; Yang, R.; Yang, S.; Ni, X.; Li, Y.; Jiang, Z.; Wang, X.; Wang, L.; Chen, Z.; Liu, X. Development and Validation of a Deep-Learning Based Assistance System for Enhancing Laparoscopic Control Level. Int. J. Med. Robot. Comput. Assist. Surg. 2023, 19, e2449. [Google Scholar] [CrossRef] [PubMed]

- Dayan, D. Implementation of Artificial Intelligence–Based Computer Vision Model for Sleeve Gastrectomy: Experience in One Tertiary Center. Obes. Surg. 2024, 34, 330–336. [Google Scholar] [CrossRef] [PubMed]

- Kitaguchi, D.; Takeshita, N.; Matsuzaki, H.; Oda, T.; Watanabe, M.; Mori, K.; Kobayashi, E.; Ito, M. Automated Laparoscopic Colorectal Surgery Workflow Recognition Using Artificial Intelligence: Experimental Research. Int. J. Surg. 2020, 79, 88–94. [Google Scholar] [CrossRef]

- Yoshida, M.; Kitaguchi, D.; Takeshita, N.; Matsuzaki, H.; Ishikawa, Y.; Yura, M.; Akimoto, T.; Kinoshita, T.; Ito, M. Surgical Step Recognition in Laparoscopic Distal Gastrectomy Using Artificial Intelligence: A Proof-of-Concept Study. Langenbecks Arch. Surg. 2024, 409, 213. [Google Scholar] [CrossRef]

- Fer, D.; Zhang, B.; Abukhalil, R.; Goel, V.; Goel, B.; Barker, J.; Kalesan, B.; Barragan, I.; Gaddis, M.L.; Kilroy, P.G. An Artificial Intelligence Model That Automatically Labels Roux-En-Y Gastric Bypasses, a Comparison to Trained Surgeon Annotators. Surg. Endosc. 2023, 37, 5665–5672. [Google Scholar] [CrossRef]

- Liu, Y.; Zhao, S.; Zhang, G.; Zhang, X.; Hu, M.; Zhang, X.; Li, C.; Zhou, S.K.; Liu, R. Multilevel Effective Surgical Workflow Recognition in Robotic Left Lateral Sectionectomy with Deep Learning: Experimental Research. Int. J. Surg. 2023, 109, 2941–2952. [Google Scholar] [CrossRef] [PubMed]

- Khojah, B.; Enani, G.; Saleem, A.; Malibary, N.; Sabbagh, A.; Malibari, A.; Alhalabi, W. Deep Learning-Based Intraoperative Visual Guidance Model for Ureter Identification in Laparoscopic Sigmoidectomy. Surg. Endosc. 2025, 39, 3610–3623. [Google Scholar] [CrossRef] [PubMed]

- Lavanchy, J.L.; Ramesh, S.; Dall’Alba, D.; Gonzalez, C.; Fiorini, P.; Müller-Stich, B.P.; Nett, P.C.; Marescaux, J.; Mutter, D.; Padoy, N. Challenges in Multi-Centric Generalization: Phase and Step Recognition in Roux-En-Y Gastric Bypass Surgery. Int. J. Comput. Assist. Radiol. Surg. 2024, 19, 2249–2257. [Google Scholar] [CrossRef] [PubMed]

- Komatsu, M.; Kitaguchi, D.; Yura, M.; Takeshita, N.; Yoshida, M.; Yamaguchi, M.; Kondo, H.; Kinoshita, T.; Ito, M. Automatic Surgical Phase Recognition-Based Skill Assessment in Laparoscopic Distal Gastrectomy Using Multicenter Videos. Gastric Cancer 2024, 27, 187–196. [Google Scholar] [CrossRef]

- Sasaki, K.; Ito, M.; Kobayashi, S.; Kitaguchi, D.; Matsuzaki, H.; Kudo, M.; Hasegawa, H.; Takeshita, N.; Sugimoto, M.; Mitsunaga, S.; et al. Automated Surgical Workflow Identification by Artificial Intelligence in Laparoscopic Hepatectomy: Experimental Research. Int. J. Surg. 2022, 105, 106856. [Google Scholar] [CrossRef]

- Madani, A.; Namazi, B.; Altieri, M.S.; Hashimoto, D.A.; Rivera, A.M.; Pucher, P.H.; Navarrete-Welton, A.; Sankaranarayanan, G.; Brunt, L.M.; Okrainec, A.; et al. Artificial Intelligence for Intraoperative Guidance: Using Semantic Segmentation to Identify Surgical Anatomy during Laparoscopic Cholecystectomy. Ann. Surg. 2022, 276, 363–369. [Google Scholar] [CrossRef]

- Cheng, K.; You, J.; Wu, S.; Chen, Z.; Zhou, Z.; Guan, J.; Peng, B.; Wang, X. Artificial Intelligence-Based Automated Laparoscopic Cholecystectomy Surgical Phase Recognition and Analysis. Surg. Endosc. 2022, 36, 3160–3168. [Google Scholar] [CrossRef]

- Golany, T.; Aides, A.; Freedman, D.; Rabani, N.; Liu, Y.; Rivlin, E.; Corrado, G.S.; Matias, Y.; Khoury, W.; Kashtan, H.; et al. Artificial Intelligence for Phase Recognition in Complex Laparoscopic Cholecystectomy. Surg. Endosc. 2022, 36, 9215–9223. [Google Scholar] [CrossRef]

- Shinozuka, K.; Turuda, S.; Fujinaga, A.; Nakanuma, H.; Kawamura, M.; Matsunobu, Y.; Tanaka, Y.; Kamiyama, T.; Ebe, K.; Endo, Y.; et al. Artificial Intelligence Software Available for Medical Devices: Surgical Phase Recognition in Laparoscopic Cholecystectomy. Surg. Endosc. 2022, 36, 7444–7452. [Google Scholar] [CrossRef]

- Masum, S.; Hopgood, A.; Stefan, S.; Flashman, K.; Khan, J. Data Analytics and Artificial Intelligence in Predicting Length of Stay, Readmission, and Mortality: A Population-Based Study of Surgical Management of Colorectal Cancer. Discov. Oncol. 2022, 13, 11. [Google Scholar] [CrossRef]

- Lopez-Lopez, V.; Maupoey, J.; López-Andujar, R.; Ramos, E.; Mils, K.; Martinez, P.A.; Valdivieso, A.; Garcés-Albir, M.; Sabater, L.; Valladares, L.D.; et al. Machine Learning-Based Analysis in the Management of Iatrogenic Bile Duct Injury During Cholecystectomy: A Nationwide Multicenter Study. J. Gastrointest. Surg. 2022, 26, 1713–1723. [Google Scholar] [CrossRef]

- Cai, Z.H.; Zhang, Q.; Fu, Z.W.; Fingerhut, A.; Tan, J.W.; Zang, L.; Dong, F.; Li, S.C.; Wang, S.L.; Ma, J.J. Magnetic Resonance Imaging-Based Deep Learning Model to Predict Multiple Firings in Double-Stapled Colorectal Anastomosis. World J. Gastroenterol. 2023, 29, 536–548. [Google Scholar] [CrossRef]

- Dayan, D.; Dvir, N.; Agbariya, H.; Nizri, E. Implementation of Artificial Intelligence-Based Computer Vision Model in Laparoscopic Appendectomy: Validation, Reliability, and Clinical Correlation. Surg. Endosc. 2024, 38, 3310–3319. [Google Scholar] [CrossRef] [PubMed]

- Arpaia, P.; Bracale, U.; Corcione, F.; De Benedetto, E.; Di Bernardo, A.; Di Capua, V.; Duraccio, L.; Peltrini, R.; Prevete, R. Assessment of Blood Perfusion Quality in Laparoscopic Colorectal Surgery by Means of Machine Learning. Sci. Rep. 2022, 12, 14682. [Google Scholar] [CrossRef] [PubMed]

- Gillani, M.; Rupji, M.; Paul Olson, T.J.; Sullivan, P.; Shaffer, V.O.; Balch, G.C.; Shields, M.C.; Liu, Y.; Rosen, S.A. Objective Performance Indicators Differ in Obese and Nonobese Patients during Robotic Proctectomy. Surgery 2024, 176, 1591–1597. [Google Scholar] [CrossRef] [PubMed]

- Emile, S.H.; Horesh, N.; Garoufalia, Z.; Gefen, R.; Rogers, P.; Wexner, S.D. An Artificial Intelligence-Designed Predictive Calculator of Conversion from Minimally Invasive to Open Colectomy in Colon Cancer. Updates Surg. 2024, 76, 1321–1330. [Google Scholar] [CrossRef]

- Velmahos, C.S.; Paschalidis, A.; Paranjape, C.N. The Not-So-Distant Future or Just Hype? Utilizing Machine Learning to Predict 30-Day Post-Operative Complications in Laparoscopic Colectomy Patients. Am. Surg. 2023, 89, 5648–5654. [Google Scholar] [CrossRef]

- Jo, S.J.; Rhu, J.; Kim, J.; Choi, G.-S.; Joh, J.W. Indication Model for Laparoscopic Repeat Liver Resection in the Era of Artificial Intelligence: Machine Learning Prediction of Surgical Indication. HPB 2025, 27, 832–843. [Google Scholar] [CrossRef]

- Li, Y.; Su, Y.; Shao, S.; Wang, T.; Liu, X.; Qin, J. Machine Learning–Based Prediction of Duodenal Stump Leakage Following Laparoscopic Gastrectomy for Gastric Cancer. Surgery 2025, 180, 108999. [Google Scholar] [CrossRef]

- Lippenberger, F.; Ziegelmayer, S.; Berlet, M.; Feussner, H.; Makowski, M.; Neumann, P.-A.; Graf, M.; Kaissis, G.; Wilhelm, D.; Braren, R.; et al. Development of an Image-Based Random Forest Classifier for Prediction of Surgery Duration of Laparoscopic Sigmoid Resections. Int. J. Color. Dis. 2024, 39, 21. [Google Scholar] [CrossRef]

- Zhou, C.M.; Li, H.J.; Xue, Q.; Yang, J.J.; Zhu, Y. Artificial Intelligence Algorithms for Predicting Post-Operative Ileus after Laparoscopic Surgery. Heliyon 2024, 10, e26580. [Google Scholar] [CrossRef]

- Du, C.; Li, J.; Zhang, B.; Feng, W.; Zhang, T.; Li, D. Intraoperative Navigation System with a Multi-Modality Fusion of 3D Virtual Model and Laparoscopic Real-Time Images in Laparoscopic Pancreatic Surgery: A Preclinical Study. BMC Surg. 2022, 22, 139. [Google Scholar] [CrossRef] [PubMed]

- Kasai, M.; Uchiyama, H.; Aihara, T.; Ikuta, S.; Yamanaka, N. Laparoscopic Projection Mapping of the Liver Portal Segment, Based on Augmented Reality Combined with Artificial Intelligence, for Laparoscopic Anatomical Liver Resection. Cureus 2023, 15, e48450. [Google Scholar] [CrossRef] [PubMed]

- Ryu, S.; Imaizumi, Y.; Goto, K.; Iwauchi, S.; Kobayashi, T.; Ito, R.; Nakabayashi, Y. Feasibility of Simultaneous Artificial Intelligence-Assisted and NIR Fluorescence Navigation for Anatomical Recognition in Laparoscopic Colorectal Surgery. J. Fluoresc. 2024, 35, 6755–6761. [Google Scholar] [CrossRef] [PubMed]

- Garcia-Granero, A.; Jerí Mc-Farlane, S.; Gamundí Cuesta, M.; González-Argente, F.X. Application of 3D-Reconstruction and Artificial Intelligence for Complete Mesocolic Excision and D3 Lymphadenectomy in Colon Cancer. Cir. Esp. 2023, 101, 359–368. [Google Scholar] [CrossRef]

- Ali, S.; Espinel, Y.; Jin, Y.; Liu, P.; Güttner, B.; Zhang, X.; Zhang, L.; Dowrick, T.; Clarkson, M.J.; Xiao, S.; et al. An Objective Comparison of Methods for Augmented Reality in Laparoscopic Liver Resection by Preoperative-to-Intraoperative Image Fusion from the MICCAI2022 Challenge. Med. Image Anal. 2025, 99, 103371. [Google Scholar] [CrossRef]

- Robu, M.R.; Edwards, P.; Ramalhinho, J.; Thompson, S.; Davidson, B.; Hawkes, D.; Stoyanov, D.; Clarkson, M.J. Intelligent Viewpoint Selection for Efficient CT to Video Registration in Laparoscopic Liver Surgery. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1079–1088. [Google Scholar] [CrossRef]

- Wei, R.; Li, B.; Mo, H.; Lu, B.; Long, Y.; Yang, B.; Dou, Q.; Liu, Y.; Sun, D. Stereo Dense Scene Reconstruction and Accurate Localization for Learning-Based Navigation of Laparoscope in Minimally Invasive Surgery. IEEE Trans. Biomed. Eng. 2023, 70, 488–500. [Google Scholar] [CrossRef]

- Nicolaou, M.; James, A.; Lo, B.P.L.; Darzi, A.; Yang, G.-Z. Invisible Shadow for Navigation and Planning in Minimal Invasive Surgery. Med. Image Comput. Comput. Assist. Interv. 2005, 8, 25–32. [Google Scholar]

- Calinon, S.; Bruno, D.; Malekzadeh, M.S.; Nanayakkara, T.; Caldwell, D.G. Human-Robot Skills Transfer Interfaces for a Flexible Surgical Robot. Comput. Methods Programs Biomed. 2014, 116, 81–96. [Google Scholar] [CrossRef]

- Zheng, Q.; Yang, R.; Ni, X.; Yang, S.; Jiang, Z.; Wang, L.; Chen, Z.; Liu, X. Development and Validation of a Deep Learning-Based Laparoscopic System for Improving Video Quality. Int. J. Comput. Assist. Radiol. Surg. 2022, 18, 257–268. [Google Scholar] [CrossRef]

- Cheng, Q.; Dong, Y. Da Vinci Robot-Assisted Video Image Processing under Artificial Intelligence Vision Processing Technology. Comput. Math. Methods Med. 2022, 2022, 2752444. [Google Scholar] [CrossRef] [PubMed]

- Katić, D.; Wekerle, A.-L.; Görtler, J.; Spengler, P.; Bodenstedt, S.; Röhl, S.; Suwelack, S.; Kenngott, H.G.; Wagner, M.; Müller-Stich, B.P.; et al. Context-Aware Augmented Reality in Laparoscopic Surgery. Comput. Med. Imaging Graph. 2013, 37, 174–182. [Google Scholar] [CrossRef] [PubMed]

- Beyersdorffer, P.; Kunert, W.; Jansen, K.; Miller, J.; Wilhelm, P.; Burgert, O.; Kirschniak, A.; Rolinger, J. Detection of Adverse Events Leading to Inadvertent Injury during Laparoscopic Cholecystectomy Using Convolutional Neural Networks. Biomed. Tech. 2021, 66, 413–421. [Google Scholar] [CrossRef] [PubMed]

- Salazar-Colores, S.; Moreno, H.A.; Moya, U.; Ortiz-Echeverri, C.J.; Tavares de la Paz, L.A.; Flores, G. Removal of Smoke Effects in Laparoscopic Surgery via Adversarial Neural Network and the Dark Channel Prior. Cir. Y Cir. (Engl. Ed.) 2022, 90, 74–83. [Google Scholar] [CrossRef]

- He, W.; Zhu, H.; Rao, X.; Yang, Q.; Luo, H.; Wu, X.; Gao, Y. Biophysical Modeling and Artificial Intelligence for Quantitative Assessment of Anastomotic Blood Supply in Laparoscopic Low Anterior Rectal Resection. Surg. Endosc. 2025, 39, 3412–3421. [Google Scholar] [CrossRef]

- Lünse, S.; Wisotzky, E.L.; Beckmann, S.; Paasch, C.; Hunger, R.; Mantke, R. Technological Advancements in Surgical Laparoscopy Considering Artificial Intelligence: A Survey among Surgeons in Germany. Langenbecks Arch. Surg. 2023, 408, 405. [Google Scholar] [CrossRef]

- Iftikhar, M.; Saqib, M.; Zareen, M.; Mumtaz, H. Artificial Intelligence: Revolutionizing Robotic Surgery: Review. Ann. Med. Surg. 2024, 86, 5401–5409. [Google Scholar] [CrossRef]

- Chatterjee, S.; Das, S.; Ganguly, K.; Mandal, D. Advancements in Robotic Surgery: Innovations, Challenges and Future Prospects. J. Robot. Surg. 2024, 18, 28. [Google Scholar] [CrossRef]

- Reza, T.; Bokhari, S.F.H. Partnering with Technology: Advancing Laparoscopy with Artificial Intelligence and Machine Learning. Cureus 2024, 16, e56076. [Google Scholar] [CrossRef]

- Khanam, M.; Akther, S.; Mizan, I.; Islam, F.; Chowdhury, S.; Ahsan, N.M.; Barua, D.; Hasan, S.K. The Potential of Artificial Intelligence in Unveiling Healthcare’s Future. Cureus 2024, 16, e71625. [Google Scholar] [CrossRef]

- Hamilton, A. The Future of Artificial Intelligence in Surgery. Cureus 2024, 16, e63699. [Google Scholar] [CrossRef]

| Characteristic | Number of Studies (Total n = 152) |
|---|---|
| Year of publication | |
| Articles published before 2015 | 7 |
| Articles published between 2015 and 2020 | 18 |
| Articles published in 2021 | 5 |
| Articles published in 2022 | 28 |
| Articles published in 2023 | 34 |
| Articles published in 2024 | 49 |
| Articles published in 2025 | 11 |
| Type of surgery | |
| Laparoscopic surgery | 125 |
| Robotic surgery | 19 |
| Laparoscopic and robotic surgery | 8 |
| Study type | |
| Technical evaluations | 65 |
| Retrospective observational studies | 35 |
| Prospective observational studies | 14 |
| Feasibility studies | 13 |
| Clinical trials | 8 |
| Dataset descriptions | 7 |
| Simulation-based training assessments | 6 |
| Surveys or expert opinion studies | 4 |
| Study categories | |
| Object or structure detection | 51 |
| Skill assessment and training | 37 |
| Workflow recognition and intraoperative guidance | 28 |
| Surgical decision support and outcome prediction | 14 |
| Augmented reality and navigation | 11 |
| Image enhancement | 8 |
| Surgeon perception, preparedness, and attitudes | 3 |
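Each characteristic in the study-characteristics table partitions the same 152 included studies, so every block of rows should sum to the stated total. The minimal Python sketch below is only an arithmetic check and is not part of the review's methodology. It transcribes the published counts, using shortened labels chosen purely for illustration, and recomputes each subtotal along with each level's share as a percentage.

```python
"""Arithmetic check of the study-characteristics table (total n = 152)."""

TOTAL = 152

# Counts transcribed from the table; labels are shortened for readability.
characteristics: dict[str, dict[str, int]] = {
    "Year of publication": {
        "Before 2015": 7,
        "2015-2020": 18,
        "2021": 5,
        "2022": 28,
        "2023": 34,
        "2024": 49,
        "2025": 11,
    },
    "Type of surgery": {
        "Laparoscopic": 125,
        "Robotic": 19,
        "Laparoscopic and robotic": 8,
    },
    "Study type": {
        "Technical evaluations": 65,
        "Retrospective observational": 35,
        "Prospective observational": 14,
        "Feasibility": 13,
        "Clinical trials": 8,
        "Dataset descriptions": 7,
        "Simulation-based training": 6,
        "Surveys or expert opinion": 4,
    },
    "Study category": {
        "Object or structure detection": 51,
        "Skill assessment and training": 37,
        "Workflow recognition and guidance": 28,
        "Decision support and outcome prediction": 14,
        "Augmented reality and navigation": 11,
        "Image enhancement": 8,
        "Surgeon perception and attitudes": 3,
    },
}


def shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each level's share of its block total as a percentage, to 1 decimal."""
    total = sum(counts.values())
    return {level: round(100 * n / total, 1) for level, n in counts.items()}


if __name__ == "__main__":
    for name, counts in characteristics.items():
        subtotal = sum(counts.values())
        # Each characteristic should account for every included study exactly once.
        assert subtotal == TOTAL, f"{name}: {subtotal} != {TOTAL}"
        print(f"{name} (n = {subtotal})")
        for level, pct in shares(counts).items():
            print(f"  {level}: {counts[level]} ({pct}%)")
```

When run, the script prints each block with its percentages. For example, laparoscopic procedures account for 125 of the 152 studies (82.2%).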

| First Author and Year | DOI | Type of Surgery | Study Type | Study Objective |

|---|---|---|---|---|

| Object or Structure Detection | ||||

| Khalid 2023 [33] | 10.1007/s00464-023-10403-4 | Laparoscopic | Retrospective validation study | Prediction of safe and unsafe dissection zones during laparoscopic cholecystectomy. |

| Ward 2022 [34] | 10.1007/s00464-022-09009-z | Laparoscopic | Retrospective model development | To classify gallbladder inflammation from images. |

| Orimoto 2025 [35] | 10.1007/s00464-024-11514-2 | Laparoscopic | Retrospective model development | To identify intraoperative scarring in laparoscopic cholecystectomy for acute cholecystitis. |

| Kolbinger 2023 [36] | 10.1097/JS9.0000000000000595 | Laparoscopic | Algorithm development + comparison study | Segmentation of abdominal anatomy. |

| Sato 2022 [37] | 10.1007/s00595-022-02508-5 | Laparoscopic and Robotic | Feasibility study | Pancreas contouring for navigation in lymphadenectomy. |

| Igaki 2022 [38] | 10.1097/DCR.0000000000002393 | Laparoscopic | Single-center feasibility study | Segmentation-based image-guided navigation system for TME dissection. |

| Jearanai 2023 [39] | 10.1007/s00464-023-10309-1 | Laparoscopic | Technical development + validation | Detect abdominal wall layers during trocar insertion. |

| Oh 2024 [40] | 10.1038/s41598-024-73434-4 | Laparoscopic | Algorithm development + intraoperative support | Identify biliary structures. |

| Benavides 2024 [41] | 10.3390/s2413419 | Laparoscopic | Technical development | Localization of surgical tools in laparoscopic surgery. |

| Gazis 2022 [42] | 10.3390/bioengineering9120737 | Laparoscopic | Technical development | To recognize surgical gestures. |

| Tomioka 2023 [43] | 10.21873/anticanres.16725 | Laparoscopic | Technical development | Recognition of hepatic veins and Glissonean pedicle. |

| Cui 2021 [44] | 10.1155/2021/5578089 | Laparoscopic | Technical development | To detect vas deferens in laparoscopic inguinal hernia repair. |

| Memida 2023 [45] | 10.1109/EMBC40787.2023.10341025 | Laparoscopic | Technical development | To identify surgical instruments in laparoscopic procedures. |

| Wesierski 2018 [46] | 10.1016/j.media.2018.03.012 | Robotic | Technical development | To estimate the pose of multiple non-rigid and robotic surgical tools. |

| Jurosch 2024 [47] | 10.1007/s11548-024-03220-0 | Laparoscopic | Technical development | To detect trocars and assess their occupancy. |

| Sánchez-Brizuela 2022 [48] | 10.3390/s22145180 | Laparoscopic | Technical development | To identify surgical gauze in real time. |

| Lai 2023 [49] | 10.1007/s10439-022-03033-9 | Laparoscopic | Technical development | To detect surgical gauze in real time. |

| Ehrlich 2022 [50] | 10.3390/s22155808 | Robotic | Technical development | To detect energy events from electrosurgical tools. |

| Nwoye 2019 [51] | 10.1007/s11548-019-01958-6 | Laparoscopic | Methodological innovation | To enable real-time tool tracking. |

| Carstens 2023 [52] | 10.1038/s41597-022-01719-2 | Laparoscopic | Dataset publication | Semantic segmentations of abdominal organs and vessels. |

| Yin 2024 [53] | 10.1016/j.neunet.2023.11.055 | Laparoscopic | Technical development | Segmentation model for TaTME procedures. |

| Tashiro 2024 [54] | 10.1002/jhbp.1422 | Laparoscopic | Retrospective analysis | To recognize and color-code loose connective tissue. |

| Petracchi 2024 [15] | 10.1016/j.gassur.2024.03.018 | Laparoscopic | Prospective observational study | To detect the critical view of safety during elective LC. |

| Schnelldorfer 2024 [16] | 10.1097/SLA.0000000000006294 | Laparoscopic | Prototype development | Guidance system for identifying peritoneal metastases. |

| Kitaguchi 2023 [55] | 10.1093/bjs/znad249 | Laparoscopic | Prospective observational study | Organ recognition models. |

| Chen 2025 [17] | 10.1093/bjsopen/zrae158 | Laparoscopic | Retrospective study | Perigastric vessel recognition. |

| Han 2024 [56] | 10.1097/DCR.0000000000003547 | Laparoscopic | Model development | Neurorecognition during total mesorectal excision. |

| Tashiro 2024 [18] | 10.1002/jhbp.1388 | Laparoscopic and Robotic | Proof-of-concept demonstration | Identification of intrahepatic vascular structures during liver resection. |

| Frey 2025 [57] | 10.1007/s11701-025-02284-7 | Laparoscopic and Robotic | Model evaluation | Detecting instruments in robot-assisted abdominal surgeries. |

| El Moaqet 2025 [58] | 10.3390/s25103017 | Laparoscopic | Model development and evaluation | To classify and localize surgical tools. |

| Korndorffer 2020 [59] | 10.1097/SLA.0000000000004207 | Laparoscopic | Observational study | Assessing critical view of safety and intraoperative events during LC. |

| Ryu 2023 [19] | 10.1007/s11605-023-05819-1 | Laparoscopic | Prospective observational study | Recognition of nerves during colorectal surgery. |

| Park 2020 [60] | 10.3748/wjg.v26.i44.6945 | Laparoscopic | Feasibility study | Analysis of microperfusion for predicting anastomotic complications in laparoscopic colorectal cancer surgery. |

| Ryu 2024 [61] | 10.1007/s00464-023-10524-w | Laparoscopic | Model development | Recognition and visualization of major blood vessels during laparoscopic right hemicolectomy. |

| Zygomalas 2024 [62] | 10.1177/15533506241226502 | Laparoscopic | Feasibility study | Recognition of anatomical landmarks and tools in TAPP hernia repair. |

| Mita 2024 [63] | 10.1007/s10029-024-03223-5 | Laparoscopic | Validation study | Anatomical recognition during TAPP hernia repair. |

| Horita 2024 [64] | 10.1007/s00464-024-10874-z | Laparoscopic | Model development | To detect active intraoperative bleeding during laparoscopic colectomy. |

| Kinoshita 2024 [65] | 10.1007/s00464-024-10939-z | Laparoscopic and Robotic | Validation study | Nerve recognition in rectal cancer surgery. |

| Takeuchi 2023 [66] | 10.1007/s00464-023-09934-7 | Laparoscopic | Model development | Landmark recognition in TAPP hernia repair. |

| Une 2024 [67] | 10.1007/s00464-023-10637-2 | Laparoscopic | Feasibility study | Liver vessel recognition during parenchymal dissection in laparoscopic liver resection (LLR). |

| Kojima 2023 [68] | 10.1097/JS9.0000000000000317 | Laparoscopic | Model development | Segmentation of autonomic nerves during colorectal surgery. |

| Nakanuma 2023 [69] | 10.1007/s00464-022-09678-w | Laparoscopic | Clinical feasibility study | Detecting landmarks during laparoscopic cholecystectomy. |

| Loukas 2022 [70] | 10.1002/rcs.2445 | Laparoscopic | Model development | To classify vascularity of gallbladder wall. |

| Endo 2023 [71] | 10.1007/s00464-023-10224-5 | Laparoscopic | Prospective experimental study | Anatomical landmark identification during laparoscopic cholecystectomy. |

| Fried 2024 [72] | 10.1097/SLA.0000000000006377 | Laparoscopic | Implementation study | Monitoring superior vena cava in laparoscopic cholecystectomy. |

| Mascagni 2022 [73] | 10.1097/SLA.0000000000004351 | Laparoscopic | Model development | To segment hepatocystic anatomy. |

| Fujinaga 2023 [74] | 10.1007/s00464-023-10097-8 | Laparoscopic | Clinical feasibility study | Landmark recognition to reduce bile duct injury. |

| Kawamura 2023 [75] | 10.1007/s00464-023-10328-y | Laparoscopic | Model development | To automatically score critical view of safety (CVS) criteria. |

| Tokuyasu 2021 [76] | 10.1007/s00464-020-07548-x | Laparoscopic | Model development and validation | Anatomical landmarks during laparoscopic cholecystectomy. |

| Zhang 2024 [77] | 10.3760/cma.j.cn441530-20240125-00041 | Laparoscopic | Model development and validation | Detect organs and instruments in laparoscopic radical gastrectomy. |

| Skill Assessment and Training | ||||

| Ortenzi 2023 [78] | 10.1007/s00464-023-10375-5 | Laparoscopic | Model development and validation | Surgical steps recognition in totally extraperitoneal (TEP) inguinal hernia repairs. |

| Wu 2024 [20] | 10.1097/JS9.0000000000001798 | Laparoscopic | Multicenter randomized controlled trial | AI-based surgical coaching program for laparoscopic cholecystectomy. |

| Belmar 2023 [79] | 10.1007/s00464-022-09576-1 | Laparoscopic | Validation study | Assessing basic laparoscopic simulation training exercises. |

| Halperin 2023 [21] | 10.1007/s11548-023-02963-6 | Laparoscopic | Prospective validation study (simulator-based) | Automated feedback on intracorporeal suture performance. |

| Chen 2023 [22] | 10.1007/s11701-023-01713-9 | Robotic | Prospective observational study (simulator-based) | To evaluate robotic suturing skills. |

| Fawaz 2019 [80] | 10.1007/s11548-019-02039-4 | Robotic | Retrospective model development | To classify robotic surgical skill levels and provide personalized feedback. |

| Nguyen 2019 [81] | 10.1016/j.cmpb.2019.05.008 | Robotic | Model development | Objective surgical skill assessment. |

| Wang 2025 [82] | 10.1109/EMBC.2018.8512575 | Robotic | Model development | Recognize surgical tasks and assess surgeon skill levels in robot-assisted training. |

| Funke 2019 [83] | 10.1007/s11548-019-01995-1 | Robotic | Model development | Surgical skill assessment system. |

| Partridge 2014 [84] | 10.1089/lap.2014.0015 | Laparoscopic | Tool development and validation | To track instrument movement for performance feedback in laparoscopic simulators. |

| Dereathe 2025 [85] | 10.1038/s41597-025-04588-7 | Laparoscopic | Dataset development and evaluation | To assess quality of field exposure in sleeve gastrectomy. |

| Bogar 2024 [86] | 10.1038/s41598-024-67435-6 | Laparoscopic | Simulation study | Custom VR simulator and AI-based peg transfer evaluator. |

| Matsumoto 2024 [87] | 10.1038/s41598-024-63388-y | Laparoscopic | Kinematic analysis | To analyze kinematic differences in laparoscopic distal gastrectomy by surgical skill level. |

| Gillani 2024 [88] | 10.1016/j.jss.2024.07.103 | Robotic | Skill classification study | To distinguish expert, intermediate, and novice surgeons in robotic right colectomy. |

| Yang 2023 [89] | 10.1007/s00464-022-09781-y | Robotic | Algorithm validation | Surgical skill grading in colorectal robotic surgery. |

| Caballero 2024 [90] | 10.1007/s11548-024-03218-8 | Robotic | Observational study | To predict surgeon stress levels during robotic surgery using ergonomic and physiological parameters. |

| Yanik 2024 [91] | 10.1007/s44186-023-00223-4 | Laparoscopic | Learning curve analysis | To predict surgical skill acquisition through self-supervised video-based learning. |

| Nakajima 2024 [92] | 10.1007/s00464-024-11208-9 | Laparoscopic | Retrospective analysis | To validate automated surgical skill assessment in sigmoidectomy. |

| Yamazaki 2022 [93] | 10.1007/s11605-021-05161-4 | Laparoscopic | Retrospective analysis | To compare surgical device usage patterns during laparoscopic gastrectomy by surgeon skill level. |

| Allen 2010 [94] | 10.1007/s00464-009-0556-6 | Laparoscopic | Experimental study | Automatic evaluation of laparoscopic skills. |

| Fukuta 2024 [95] | 10.1007/s11548-024-03253-5 | Laparoscopic | Development study | To assess laparoscopic surgical skills. |

| Moglia 2022 [96] | 10.1007/s00464-021-08999-6 | Robotic | Observational study | To predict proficiency acquisition rates in robotic-assisted surgery trainees. |

| Ju 2025 [97] | 10.1007/s00464-025-11730-4 | Laparoscopic | Development study | Automatic gesture recognition model for laparoscopic training. |

| Cruz 2025 [98] | 10.1007/s44186-025-00355-9 | Laparoscopic | Validation study | Skill assessment in laparoscopic simulation training. |

| Chen 2024 [99] | 10.1097/JS9.0000000000000975 | Laparoscopic | Development study | Evaluate surgical skills based on surgical gestures. |

| Erlich-Feingold 2025 [100] | 10.1007/s00464-025-11715-3 | Laparoscopic | Development study | To classify basic laparoscopic skills (precision cutting tasks). |

| Power 2025 [101] | 10.1038/s41598-025-96336-5 | Laparoscopic | Development study | Evaluate laparoscopic surgical skill across expertise levels. |

| Alonso-Silverio 2018 [102] | 10.1177/1553350618777045 | Laparoscopic | Development and evaluation study | Affordable laparoscopic trainer with AI, CV, and AR for online surgical skills assessment. |

| Pan 2011 [103] | 10.1002/rcs.399 | Laparoscopic | Development study | Laparoscopic rectal surgery training. |

| Ershad 2019 [104] | 10.1007/s11548-019-01920-6 | Robotic | Development study | Automatic stylistic behaviour recognition using joint position data in robotic surgery. |

| Kowalewski 2019 [105] | 10.1007/s00464-019-06667-4 | Laparoscopic | Experimental study | Skill level assessment and phase detection. |

| St John 2024 [106] | 10.1007/s00464-024-11068-3 | Laparoscopic | Validation study | AI-powered mobile game for learning safe dissection in LC. |

| Yen 2025 [107] | 10.1007/s00464-025-11663-y | Laparoscopic | Development and validation study | To assess surgical actions and develop automated models for competency assessment in LC. |

| Nakajima 2025 [108] | 10.1007/s00423-025-03641-8 | Laparoscopic | Retrospective multicenter study | To assess surgical dissection skill. |

| Igaki 2023 [109] | 10.1001/jamasurg.2023.1131 | Laparoscopic | Development and validation study | To recognize standardized surgical fields and assess skill. |

| Smith 2022 [110] | 10.1007/s11701-021-01284-7 | Robotic | Validation study | Classification of surgical skill level. |

| Workflow recognition and intraoperative guidance | ||||

| Loukas 2024 [111] | 10.1002/rcs.2632 | Laparoscopic | Model development | To predict the remaining surgery duration. |

| Wagner 2023 [112] | 10.1016/j.media.2023.102770 | Laparoscopic | Multicenter dataset development and benchmark study | To evaluate generalizability of workflow, instrument, action, and skill recognition models in laparoscopic cholecystectomy. |

| Zhang 2023 [113] | 10.1007/s11548-022-02811-z | Laparoscopic and Robotic | Model development and validation | Automatic surgical workflow recognition. |

| Park 2023 [114] | 10.1016/j.compbiomed.2023.107453 | Laparoscopic | Multimodal model development | Surgical phase recognition by integrating tool interaction and visual modality in laparoscopic surgery. |

| Twinanda 2019 [115] | 10.1109/TMI.2018.2878055 | Laparoscopic | Model development | To estimate remaining surgery duration intraoperatively. |

| Zang 2023 [116] | 10.3390/bioengineering10060654 | Robotic | Model comparison | Surgical phase recognition in RALIHR. |

| Cartucho 2024 [117] | 10.1016/j.media.2023.102985 | Laparoscopic | Model development and validation | To track soft tissue movement during laparoscopic procedures. |

| Zheng 2022 [118] | 10.1007/s11548-022-02568-5 | Laparoscopic | Experimental study | To detect stress in surgical motion. |

| Zhai 2024 [119] | 10.1007/s11548-023-03027-5 | Laparoscopic | Model development and validation | Surgical phase recognition in gastric cancer surgery. |

| Takeuchi 2022 [23] | 10.1007/s10029-022-02621-x | Laparoscopic | Model development | To recognize phases in TAPP repair and assess their links to surgical skill. |

| Hashimoto 2020 [23] | 10.1097/SLA.0000000000003460 | Laparoscopic | Algorithm development and validation | To identify operative steps in laparoscopic sleeve gastrectomy. |

| You 2024 [120] | 10.1007/s00464-024-10916-6 | Laparoscopic | Model development and validation | Automated surgical phase recognition in laparoscopic pancreaticoduodenectomy. |

| Takeuchi 2023 [24] | 10.1007/s00464-023-09924-9 | Robotic | Model development | Surgical phase recognition and prediction of complexity in robotic distal gastrectomy. |

| Zheng 2023 [121] | 10.1002/rcs.2449 | Laparoscopic | Model development | To improve intraoperative field visualization. |

| Dayan 2024 [122] | 10.1007/s11695-023-07043-x | Laparoscopic | External validation study | AI model for identifying sleeve gastrectomy safety milestones. |

| Kitaguchi 2020 [123] | 10.1016/j.ijsu.2020.05.015 | Laparoscopic | Model development | Recognition of surgical phases, actions, and tools. |

| Yoshida 2024 [124] | 10.1007/s00423-024-03411-y | Laparoscopic | Model development and evaluation | Surgical step recognition in laparoscopic distal gastrectomy. |

| Fer 2023 [125] | 10.1007/s00464-023-09870-6 | Laparoscopic | Model development | Step labelling in Roux-en-Y gastric bypass. |

| Liu 2023 [126] | 10.1097/JS9.0000000000000559 | Robotic | Model development | Workflow recognition model for robotic left lateral sectionectomy. |

| Khojah 2025 [127] | 10.1007/s00464-025-11694-5 | Laparoscopic | Model development and intraoperative validation | Real-time ureter localization during laparoscopic sigmoidectomy. |

| Lavanchy 2024 [128] | 10.1007/s11548-024-03166-3 | Laparoscopic | Dataset creation and benchmarking | Improving AI model generalizability. |

| Komatsu 2024 [129] | 10.1007/s10120-023-01450-w | Laparoscopic | Model development and feasibility study | Phase recognition for laparoscopic distal gastrectomy. |

| Sasaki 2022 [130] | 10.1016/j.ijsu.2022.106856 | Laparoscopic | Model development | Automated surgical step identification in laparoscopic hepatectomy. |

| Madani 2022 [131] | 10.1097/SLA.0000000000004594 | Laparoscopic | Model development and validation | Intraoperative guidance by identifying safe/dangerous zones and anatomical landmarks in cholecystectomy. |

| Cheng 2022 [132] | 10.1007/s00464-021-08619-3 | Laparoscopic | Model development and multicenter validation | Phase recognition in laparoscopic cholecystectomy. |

| Golany 2022 [133] | 10.1007/s00464-022-09405-5 | Laparoscopic | Model development | Surgical phase recognition. |

| Shinozuka 2022 [134] | 10.1007/s00464-022-09160-7 | Laparoscopic | Model development and validation | Surgical phase recognition in laparoscopic cholecystectomy. |

| Laplante 2022 [25] | 10.1007/s00464-022-09439-9 | Laparoscopic | Model validation | Identifying safe and dangerous zones in left colectomy. |

| Surgical decision support and outcome prediction | ||||

| López 2024 [25] | 10.1007/s00464-024-10681-6 | Laparoscopic | Retrospective multicenter study | To predict surgical complexity and postoperative outcomes in laparoscopic liver surgery. |

| Masum 2022 [135] | 10.1007/s12672-022-00472-7 | Laparoscopic and Robotic | Retrospective study | Prediction of LOS, readmission, and mortality. |

| López 2022 [136] | 10.1007/s11605-022-05398-7 | Laparoscopic | Retrospective multi-institutional cohort study | To identify factors associated with successful initial repair of IBDI and predict the success of definitive repair using AI. |

| Cai 2023 [137] | 10.3748/wjg.v29.i3.536 | Laparoscopic | Retrospective study | Prediction of the number of stapler cartridges needed to avoid high-risk anastomosis. |

| Dayan 2024 [138] | 10.1007/s00464-024-10847-2 | Laparoscopic | Retrospective observational validation study | Grading intraoperative complexity and safety adherence in laparoscopic appendectomy. |

| Arpaia 2022 [139] | 10.1038/s41598-022-16030-8 | Laparoscopic | Development and validation study | Assessment of perfusion quality during laparoscopic colorectal surgery. |

| Gillani 2024 [140] | 10.1016/j.surg.2024.08.015 | Robotic | Feasibility study | Performance indicators during robotic proctectomy. |

| Emile 2024 [141] | 10.1007/s13304-024-01915-2 | Laparoscopic and Robotic | Retrospective case–control study | Prediction of conversion from minimally invasive (laparoscopic or robotic) to open colectomy. |

| Wang 2024 [26] | 10.3748/wjg.v30.i43.4669 | Laparoscopic | Multicenter retrospective cohort study (model development) | Prediction of postoperative complication risk in laparoscopic radical gastrectomy. |

| Velmahos 2023 [142] | 10.1177/00031348231167397 | Laparoscopic | Comparative model analysis | Morbidity prediction after laparoscopic colectomy. |

| Jo 2025 [143] | 10.1016/j.hpb.2025.02.016 | Laparoscopic | Retrospective study | Predictors of conversion to open surgery in laparoscopic repeat liver resection. |

| Li 2025 [144] | 10.1016/j.surg.2024.108999 | Laparoscopic | Retrospective study | Estimate the risk of duodenal stump leakage in laparoscopic gastrectomy. |

| Lippenberger 2024 [145] | 10.1007/s00384-024-04593-z | Laparoscopic | Retrospective single-center cohort study | To predict procedure duration of laparoscopic sigmoid resections. |

| Zhou 2024 [146] | 10.1016/j.heliyon.2024.e26580 | Laparoscopic | Retrospective predictive modeling study | Predicting postoperative intestinal obstruction in laparoscopic colorectal cancer surgery. |

| Augmented reality and navigation | ||||

| Aoyama 2024 [28] | 10.1007/s00464-024-11160-8 | Laparoscopic | Feasibility study | To identify anatomical landmarks associated with postoperative pancreatic fistula during laparoscopic gastrectomy. |

| Du 2022 [147] | 10.1186/s12893-022-01585-0 | Laparoscopic | System development and preclinical evaluation | Intraoperative navigation system. |

| Kasai 2024 [148] | 10.7759/cureus.48450 | Laparoscopic | System development | Mapping for portal segment identification in laparoscopic liver surgery. |

| Ryu 2024 [149] | 10.1007/s10895-024-04030-y | Laparoscopic | Feasibility study | Combining AI and NIR fluorescence for anatomical recognition during colorectal surgery. |

| Garcia-Granero 2023 [150] | 10.1016/j.ciresp.2022.10.023 | Laparoscopic | Case report | 3D image reconstruction system in mesocolic excision and lymphadenectomy. |

| Guan 2023 [27] | 10.1007/s11548-023-02846-w | Laparoscopic | Laboratory/technical study | Mapping using stereo 3D laparoscopy and CT registration for liver resection. |

| Ali 2024 [151] | 10.1016/j.media.2024.103371 | Laparoscopic | Challenge dataset + algorithm development | Automated landmark detection for CT-to-laparoscopic image registration. |

| Robu 2017 [152] | 10.1007/s11548-017-1584-7 | Laparoscopic | Proof-of-concept study | View-planning strategy to improve AR registration using CT and laparoscopic video. |

| Wei 2022 [153] | 10.1109/TBME.2022.3195027 | Laparoscopic | Technical/methodological study | 3D localization method for anatomical navigation during MIS. |

| Nicolau 2005 [154] | 10.1007/11566489_4 | Laparoscopic | Feasibility study | Enhance depth perception. |

| Calinon 2014 [155] | 10.1016/j.cmpb.2013.12.015 | Laparoscopic | Technical development | Skill transfer interfaces for soft surgical robots using context-aware learning. |

| Image enhancement | ||||

| Zheng 2022 [156] | 10.1007/s11548-022-02777-y | Laparoscopic | Algorithm development | Remove visual impairments. |

| Cheng 2022 [157] | 10.1155/2022/2752444 | Robotic | Comparative experimental study | Image edge detection algorithm in robotic gastric surgery. |

| Akbari 2009 [29] | 10.1109/IEMBS.2009.5333766 | Laparoscopic | Prospective evaluation | Artery detection in laparoscopic cholecystectomy. |

| Katic 2013 [158] | 10.1016/j.compmedimag.2013.03.003 | Laparoscopic | Conceptual/methodological article | Reduce information overload in surgery. |

| Beyersdorffer 2021 [159] | 10.1515/BMT-2020-0106 | Laparoscopic | Feasibility study | Detect the presence of the dissecting tool within the camera field. |

| Salazar-Colores 2022 [160] | 10.24875/CIRU.20000951 | Laparoscopic | Algorithm development | Remove surgical smoke. |

| Wagner 2021 [30] | 10.1007/s00464-021-08509-8 | Laparoscopic | Prospective experimental study | Autonomous camera-guiding robot. |

| He 2025 [161] | 10.1007/s00464-025-11693-6 | Laparoscopic | Prospective feasibility study | Intraoperative perfusion assessment in colorectal surgery. |

| Surgeon perception, preparedness, and attitudes | ||||

| Acosta 2025 [31] | 10.1016/j.ciresp.2024.12.003 | Robotic | Survey study | Evaluate Spanish surgeons’ knowledge, attitudes, and preparedness toward Digital Surgery and AI, comparing robotic vs. non-robotic users. |

| Luense 2023 [162] | 10.1007/s00423-023-03134-6 | Laparoscopic | Survey study | Survey of German surgeons to identify limitations of current laparoscopy and desired AI features in future systems. |

| Shafiei 2025 [32] | 10.1177/00187208241285513 | Laparoscopic and Robotic | Experimental study using simulation data | To predict mental workload during surgical tasks. |

| Category | Low Risk | Moderate Risk | High Risk | External Validation | Multicenter Design |
|---|---|---|---|---|---|
| Object/Structure Detection | 28.0% | 60.0% | 12.0% | 12.0% | 18.0% |
| Skill Assessment & Training | 21.2% | 66.7% | 12.1% | 9.1% | 15.2% |
| Workflow Recognition & Guidance | 35.0% | 55.0% | 10.0% | 25.0% | 30.0% |
| Decision Support & Outcome Prediction | 31.8% | 59.1% | 9.1% | 27.3% | 40.9% |
| Augmented Reality & Navigation | 40.0% | 50.0% | 10.0% | 33.3% | 46.7% |
| Image Enhancement | 20.0% | 60.0% | 20.0% | 10.0% | 20.0% |
| Perception/Preparedness | 100.0% | 0.0% | 0.0% | — | 100.0% |
| Global | 31.4% | 58.0% | 10.6% | 20.0% | 27.1% |
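The performance table that follows pools overlap metrics (IoU, Dice, F1-score) reported by object and structure detection studies. Because these metrics are derived from the same pixel-level counts, a short sketch can help when comparing studies that report different ones. The example is a minimal illustration only, assuming binary masks stored as flat 0/1 lists; the function names are hypothetical and are not taken from any included study.

```python
"""Minimal sketch of the overlap metrics (IoU, Dice, pixel-level F1).

Assumption: masks are flat lists of 0/1 pixel labels of equal length.
Function names are illustrative, not from any reviewed study."""


def confusion_counts(pred, truth):
    """Return (tp, fp, fn) pixel counts for two binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if t and not p)
    return tp, fp, fn


def iou(pred, truth):
    """Intersection over union (Jaccard): TP / (TP + FP + FN)."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = tp + fp + fn
    return tp / denom if denom else 1.0  # two empty masks agree perfectly


def dice(pred, truth):
    """Dice coefficient: 2TP / (2TP + FP + FN), equal to pixel-level F1."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


def dice_from_iou(j):
    """Convert IoU J to Dice D for the same mask pair: D = 2J / (1 + J)."""
    return 2 * j / (1 + j)


if __name__ == "__main__":
    truth = [1, 1, 1, 1, 0, 0, 0, 0]
    pred = [1, 1, 1, 0, 1, 0, 0, 0]  # 3 TP, 1 FP, 1 FN
    print(f"IoU={iou(pred, truth):.3f}  Dice={dice(pred, truth):.3f}")
    # prints: IoU=0.600  Dice=0.750
```

On this toy pair, `dice_from_iou(0.6)` also returns 0.75, confirming the conversion. The conversion holds only for a single mask pair: mean or median scores pooled across images or studies do not convert exactly, so Dice and IoU values from different studies are not directly interchangeable. Object-level F1, computed from detections matched at an IoU threshold, is also a different quantity from the pixel-level F1 shown here.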

| Category | Typical Metrics Reported | Median/Representative Values | External Validation (%) | Clinical or Ex Vivo Validation (%) | Multicenter Studies (%) | Main Limitations |

|---|---|---|---|---|---|---|

| Object/Structure Detection | Accuracy, IoU, Dice, F1-score | Accuracy 0.87 (range 0.72–0.96); Dice 0.83 (0.68–0.92) | 12% | 48% | 18% | Lack of standard annotation, limited generalizability |