Evaluating Medical Text Summaries Using Automatic Evaluation Metrics and LLM-as-a-Judge Approach: A Pilot Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

- Two radiology reports for the same anatomical region, acquired with the same modality;
- An examination report created by the specialist who referred the patient for the last of these imaging studies;
- A report for an additional imaging study acquired using a different modality or covering a different anatomical area;
- Two values of a lab test (initial and follow-up);
- An additional lab test value different from the above;
- An examination report by a physician of any specialty not related to imaging studies;
- A hospital discharge summary.

2.2. LLMs for Summarizers and LLMs-as-a-Judge

- Released no earlier than Q1 2024;
- Available under the Apache 2.0 open-source license;
- Architecture type: transformer;
- Capable of handling large volumes of text data;
- Deployment environment: Ollama, two RTX 3090 Ti GPUs.

2.3. Reference Summary and Expert Review

2.4. Automatic Evaluation Metrics

1. ROUGE (Recall-Oriented Understudy for Gisting Evaluation). This class of metrics evaluates the recall and precision of a summary on a scale from 0 to 1 by measuring how many n-grams and word sequences it shares with a reference summary.

2. BLEU (Bilingual Evaluation Understudy) uses a weighted combination of n-gram matches to estimate similarity and applies a brevity penalty to overly short outputs. It also allows a generated text to be compared against multiple reference summaries, which makes the assessment more robust.

3. METEOR (Metric for Evaluation of Translation with Explicit Ordering) extends word-overlap matching of the kind used in ROUGE by accounting for morphological variation of words through stemming and lemmatization. The algorithm penalizes unrelated fragments, permutations, and duplication.

4. BERTScore (a metric based on BERT, Bidirectional Encoder Representations from Transformers) evaluates the similarity between generated and reference summaries by comparing the contextual embedding vectors of their individual tokens, obtained from a pre-trained BERT model. Unlike the metrics above, BERTScore takes into account the semantic and syntactic similarity between the generated and the reference summaries, but it does not capture key quality criteria such as coherence and factual accuracy. The method is computationally intensive and depends on the quality of the pre-trained BERT model.
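To make the n-gram overlap and the brevity penalty described above concrete, the following is a minimal sketch in plain Python. It is not the implementation used in the study: whitespace tokenization, lowercasing, and the function names `ngrams`, `rouge_n`, and `brevity_penalty` are assumptions made for illustration, and the example sentences are invented.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a multiset (Counter) of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, reference, n=1):
    """ROUGE-N recall, precision, and F1 from clipped n-gram overlap.

    Assumption: naive lowercasing and whitespace tokenization.
    """
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # each n-gram counted at most min(cand, ref) times
    recall = overlap / max(sum(ref.values()), 1)
    precision = overlap / max(sum(cand.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"recall": recall, "precision": precision, "f1": f1}


def brevity_penalty(candidate_len, reference_len):
    """BLEU brevity penalty: 1 for candidates longer than the reference,
    exp(1 - r/c) otherwise, so short outputs are penalized."""
    if candidate_len == 0:
        return 0.0
    if candidate_len > reference_len:
        return 1.0
    return math.exp(1 - reference_len / candidate_len)


# Invented example sentences, for illustration only.
reference = "no focal lesions in the liver"
candidate = "no lesions in the liver"

print(rouge_n(candidate, reference, n=1))
print(rouge_n(candidate, reference, n=2))
print(brevity_penalty(len(candidate.split()), len(reference.split())))
```

In this toy case the candidate drops one word, so unigram recall falls below 1 while unigram precision stays at 1, and the brevity penalty is below 1 because the candidate is shorter than the reference.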

2.5. Statistical Analysis

3. Results

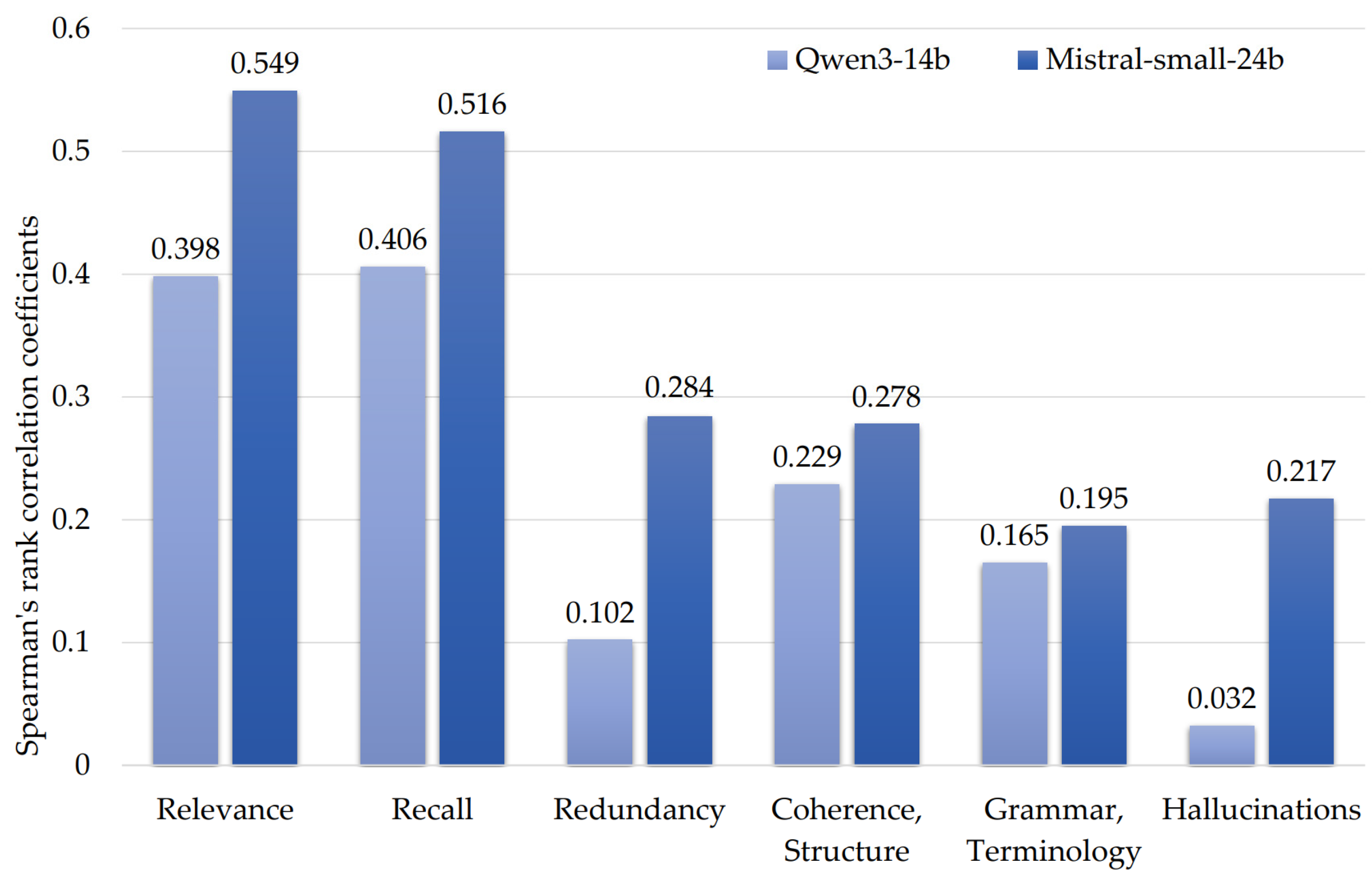

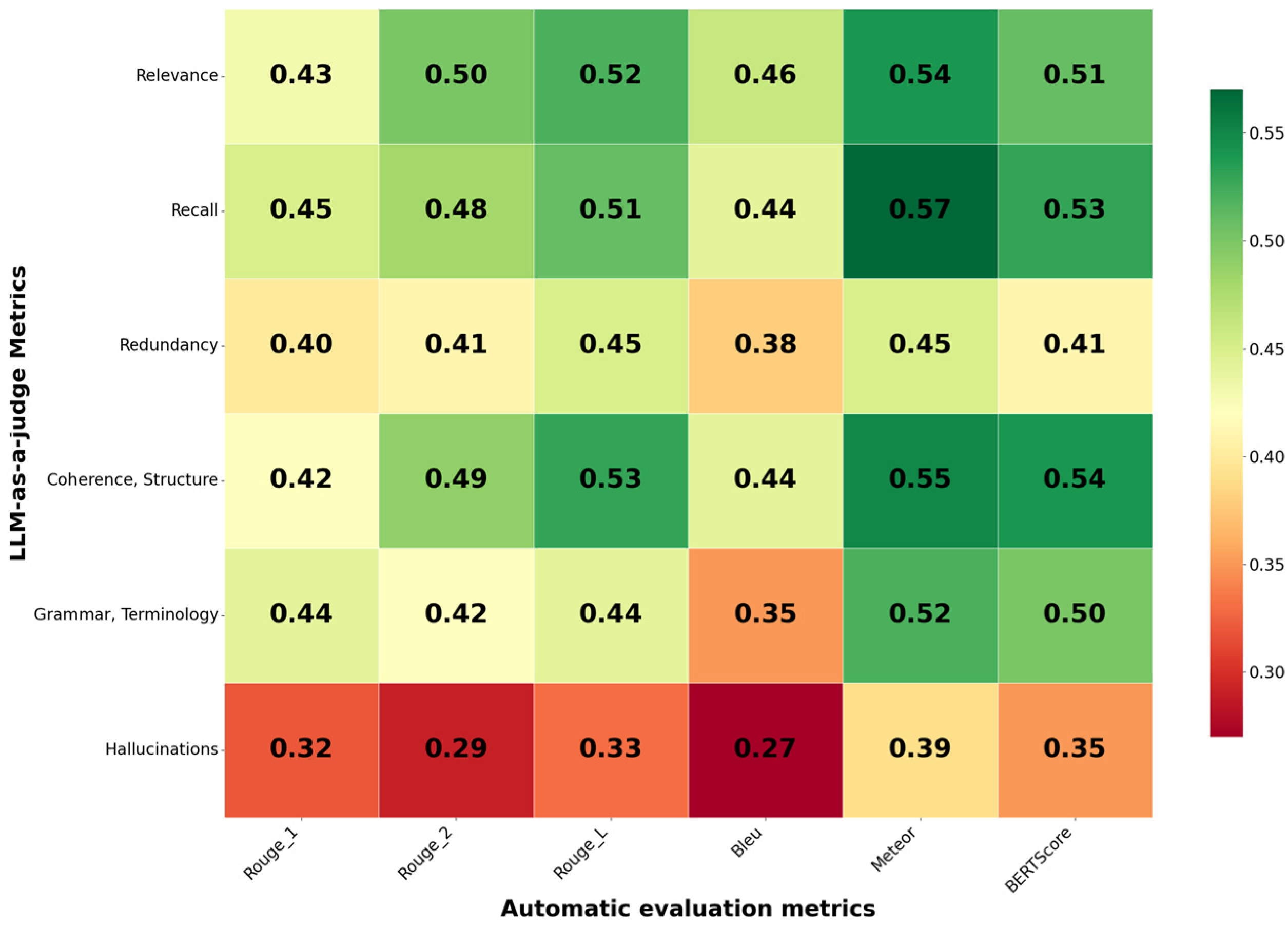

3.1. Performance of the Automatic Evaluation Metrics

3.2. Standalone Integrated Assessment of Summarization Performance

4. Discussion

5. Limitations and Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| BERT | Bidirectional Encoder Representations from Transformers |
| BLEU | Bilingual Evaluation Understudy |
| CT | Computed Tomography |
| EHR | Electronic Health Record |
| LLM | Large Language Model |
| METEOR | Metric for Evaluation of Translation with Explicit Ordering |
| RAG | Retrieval-Augmented Generation |
| RMSE | Root Mean Square Error |
| ROUGE | Recall-Oriented Understudy for Gisting Evaluation |
| SD | Standard Deviation |

Appendix A

| Criteria | Explanation | 1 Point | 5 Points |
|---|---|---|---|
| Relevance | How closely does the generated summary match the inquiry? | The information is useless and irrelevant to the inquiry. | Perfectly meets the inquiry; all key aspects are considered, and the information is useful and accurate. |
| Recall | How completely does the generated summary reflect all relevant information? | Most clinically relevant data is omitted. | All clinically relevant findings and important details are present. |
| Redundancy | Does the generated summary contain redundant information that is irrelevant to the task? | Contains a large amount of redundant information that is not relevant to the inquiry. | Contains only the information necessary to the task. |
| Coherence, Structure | How clear, logical, and structured is the generated summary? | Unclear, lacks logic. | Perfectly understandable, logical, and structured. |
| Grammar, Terminology | How linguistically and terminologically correct is the generated summary? | Contains numerous errors in language and terminology, making it unsuitable for use. | Perfectly complies with language and professional terminology standards; no errors. |
| Hallucinations | Does the generated summary contain information that is missing from the original data? | It contains information that is missing from the source text. | It does not contain information that is missing from the source text. |
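As an illustration of how a rubric like the one above could be handed to an LLM-as-a-judge and how its answers could be checked, here is a minimal sketch. The criterion names and questions are taken from the table; everything else is hypothetical: the prompt wording, the names `RUBRIC`, `build_judge_prompt`, and `validate_scores`, and the assumption that the judge returns one integer per criterion. It does not reproduce the prompts used in the study.

```python
# Criteria and their guiding questions, copied from the Appendix A rubric.
RUBRIC = {
    "Relevance": "How closely does the generated summary match the inquiry?",
    "Recall": "How completely does the generated summary reflect all relevant information?",
    "Redundancy": "Does the generated summary contain redundant information that is irrelevant to the task?",
    "Coherence, Structure": "How clear, logical, and structured is the generated summary?",
    "Grammar, Terminology": "How linguistically and terminologically correct is the generated summary?",
    "Hallucinations": "Does the generated summary contain information that is missing from the original data?",
}
MIN_SCORE, MAX_SCORE = 1, 5  # the rubric anchors 1 point and 5 points


def build_judge_prompt(source_text, summary):
    """Assemble a judge prompt (hypothetical wording, not the study's prompt)."""
    lines = [f"Rate the summary on each criterion from {MIN_SCORE} to {MAX_SCORE}."]
    lines += [f"- {name}: {question}" for name, question in RUBRIC.items()]
    lines += ["", "Source text:", source_text, "", "Summary:", summary]
    return "\n".join(lines)


def validate_scores(scores):
    """Check that a judge's answer covers exactly the rubric criteria
    with integer scores inside the allowed range."""
    if set(scores) != set(RUBRIC):
        raise ValueError("scores must cover exactly the rubric criteria")
    for name, value in scores.items():
        if isinstance(value, bool) or not isinstance(value, int):
            raise ValueError(f"{name}: score must be an integer")
        if not MIN_SCORE <= value <= MAX_SCORE:
            raise ValueError(f"{name}: score {value} is out of range")
    return scores


# Usage with placeholder texts.
prompt = build_judge_prompt("<source documents>", "<generated summary>")
checked = validate_scores({name: 4 for name in RUBRIC})
```

Validating the judge's output before analysis keeps malformed or out-of-range answers from silently entering the score tables.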


| LLM | Model Size (Parameters) | Developer |
|---|---|---|
| Gemma-2-27b-it | 27 billion | Google LLC, Mountain View, CA, USA |
| Llama-3.3-70B-Instruct_Q4 | 70 billion | Meta Platforms Inc., Menlo Park, CA, USA |
| Llama3.1-8b | 8 billion | Meta Platforms Inc., Menlo Park, CA, USA |
| Llama-3.2-3B-Instruct | 3 billion | Meta Platforms Inc., Menlo Park, CA, USA |
| Gemma-3-12b-it | 12 billion | Google LLC, Mountain View, CA, USA |
| Qwen3-32B_Q4-nt | 32 billion | Qwen Ltd., Beijing, China |

| Criteria/Model | Gemma-2-27b-it | Llama-3.3-70B-Instruct_Q4 | Llama3.1-8b | Llama-3.2-3B-Instruct | Gemma-3-12b-it | Qwen3-32B_Q4-nt |
|---|---|---|---|---|---|---|
| Relevance | 2.6 ± 1.3 | 3.8 ± 1.0 | 3.3 ± 0.9 | 2.4 ± 1.0 | 3.6 ± 1.2 | 3.8 ± 1.3 |
| Recall | 2.6 ± 1.3 | 3.7 ± 1.0 | 3.1 ± 1.1 | 2.3 ± 1.1 | 3.9 ± 1.1 | 3.9 ± 1.2 |
| Redundancy | 3.2 ± 1.4 | 4.2 ± 0.8 | 3.5 ± 1.1 | 3.2 ± 1.2 | 4.0 ± 1.0 | 3.2 ± 1.3 |
| Coherence, Structure | 3.6 ± 1.2 | 4.4 ± 1.0 | 3.6 ± 1.2 | 3.1 ± 1.1 | 4.2 ± 1.0 | 4.2 ± 1.2 |
| Grammar, Terminology | 4.5 ± 0.6 | 4.7 ± 0.7 | 4.4 ± 0.8 | 3.6 ± 1.2 | 4.7 ± 0.5 | 4.3 ± 1.1 |
| Hallucinations | 3.8 ± 1.9 | 4.6 ± 1.2 | 3.6 ± 1.9 | 3.4 ± 2.0 | 3.9 ± 1.8 | 3.8 ± 1.9 |

| LLM-as-a-Judge/Criteria | Relevance | Recall | Redundancy | Coherence, Structure | Grammar, Terminology |
|---|---|---|---|---|---|
| Qwen3-14b (M ± SD; Me [Q1; Q3]) | 5.0 ± 0.0; 5 [5; 5] | 4.8 ± 0.6; 5 [5; 5] | 5.0 ± 0.0; 5 [5; 5] | 5.0 ± 0.0; 5 [5; 5] | 5.0 ± 0.0; 5 [5; 5] |
| Mistral-small-24b (M ± SD; Me [Q1; Q3]) | 4.4 ± 0.8; 5 [5; 5] | 4.2 ± 0.7; 4 [4; 5] | 3.6 ± 0.8; 4 [3; 4] | 4.8 ± 0.4; 5 [5; 5] | 4.8 ± 0.4; 5 [5; 5] |
| p-value | <0.001 | 0.001 | <0.001 | 0.014 | 0.025 |

| Criteria | ROUGE-1 | ROUGE-2 | ROUGE-L | BLEU | METEOR | BERTScore |
|---|---|---|---|---|---|---|
| Relevance | 0.44 | 0.45 | 0.46 | 0.45 | 0.48 | 0.57 |
| Recall | 0.40 | 0.41 | 0.40 | 0.37 | 0.49 | 0.53 |
| Redundancy | 0.35 | 0.29 | 0.31 | 0.27 | 0.21 | 0.33 |
| Coherence, Structure | 0.30 | 0.35 | 0.32 | 0.31 | 0.27 | 0.42 |
| Grammar, Terminology | 0.28 | 0.30 | 0.31 | 0.28 | 0.21 | 0.29 |
| Hallucinations | 0.17 | 0.10 | 0.19 | 0.11 | 0.14 | 0.23 |

| Expert Score Metric | Metrics with the Greatest Impact | R (Three Metrics) | R (BERTScore) | R (LLM-as-a-Judge) | R (Full Model) | R² (Full Model) | RMSE (Full Model) |
|---|---|---|---|---|---|---|---|
| Relevance | BERTScore; Relevance (LLM-as-a-judge); Hallucinations (LLM-as-a-judge) | 0.673 | 0.610 | 0.585 | 0.688 | 0.474 | 0.944 |
| Recall | BERTScore; Relevance (LLM-as-a-judge); METEOR | 0.502 | 0.377 | 0.281 | 0.528 | 0.278 | 1.070 |
| Redundancy | ROUGE-1; Relevance (LLM-as-a-judge); METEOR | 0.502 | 0.377 | 0.281 | 0.528 | 0.278 | 1.070 |
| Coherence, Structure | ROUGE-2; METEOR; BERTScore | 0.437 | 0.406 | 0.224 | 0.471 | 0.222 | 1.072 |
| Grammar, Terminology | ROUGE-L; METEOR; BERTScore | 0.420 | 0.377 | 0.247 | 0.463 | 0.214 | 0.826 |
| Hallucinations | ROUGE-2; ROUGE-L; BERTScore | 0.306 | 0.226 | 0.217 | 0.355 | 0.126 | 1.750 |
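The R, R2, and RMSE columns in the table above can be related with a short sketch. It assumes, without confirmation from the source, that R is the correlation between expert scores and model-predicted scores and that RMSE is computed on the same pairs; the function names `pearson_r` and `rmse` are illustrative. The reported full-model values are at least consistent with R2 being the square of R, up to rounding.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equally long numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def rmse(observed, predicted):
    """Root mean square error between observed and predicted scores."""
    n = len(observed)
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)


# Full-model (R, R2) pairs as reported in the table above.
reported = [(0.688, 0.474), (0.528, 0.278), (0.471, 0.222),
            (0.463, 0.214), (0.355, 0.126)]
for r, r2 in reported:
    # The squared R matches the reported R2 to within rounding error.
    assert abs(r * r - r2) < 0.001
```

Read this way, the full model accounts for roughly 47% of the variance in expert Relevance scores and about 13% for Hallucinations.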

| LLM-as-a-Judge Comment | Criterion for Which the LLM-as-a-Judge Lowered the Score | Correct Criterion |
|---|---|---|
| The summary contains a lot of information, but it is not always logically structured or connected to the main objective: the description of an abdominal CT scan. The text includes data not relevant to this task, such as a detailed description of the cardiovascular system’s condition, treatment, and recommendations related to paroxysmal atrial fibrillation. This creates unnecessary cognitive load and may hinder the radiologist’s ability to extract the necessary information. | Coherence, Structure | Relevance, Redundancy |
| The statement “Male, age not specified” does not correspond to the source text, which states the age as 77 years. Additionally, there are repetitions in the “Recommendations” section, making the text less structured. | Grammar, Terminology | Hallucinations; Coherence, Structure |
| It is claimed that “the patient is in serious condition,” which is not substantiated in the source text. The source text describes only symptoms (pain, weakness) without an assessment of the general condition as “serious.” | Grammar, Terminology | Hallucinations, Relevance |
| The summary includes the statement “harmful habits: smoking, alcohol consumption (unspecified).” This information is absent from the source text and represents an unwarranted addition. Moreover, assertions regarding the “patient’s serious condition” and the “necessity of an urgent CT scan” are interpretative in nature and are not directly derived from the factual data presented in the original text. | Redundancy | Hallucinations, Relevance |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Share and Cite

Vasilev, Y.; Raznitsyna, I.; Pamova, A.; Burtsev, T.; Bobrovskaya, T.; Kosov, P.; Vladzymyrskyy, A.; Omelyanskaya, O.; Arzamasov, K. Evaluating Medical Text Summaries Using Automatic Evaluation Metrics and LLM-as-a-Judge Approach: A Pilot Study. Diagnostics 2026, 16, 3. https://doi.org/10.3390/diagnostics16010003
