Enhancing Radiologist Productivity with Artificial Intelligence in Magnetic Resonance Imaging (MRI): A Narrative Review

Abstract

1. Introduction

2. Materials and Methods

2.1. Literature Search Strategy

2.2. Study Screening and Selection Criteria

2.3. Data Extraction and Reporting

- Study details: title, authorship, year of publication and journal name.

- Application and primary outcome measure.

- Study details: sample size, region studied, MRI sequences used.

- Artificial intelligence technique used.

- Key results and conclusion.

3. Results

3.1. Search Results

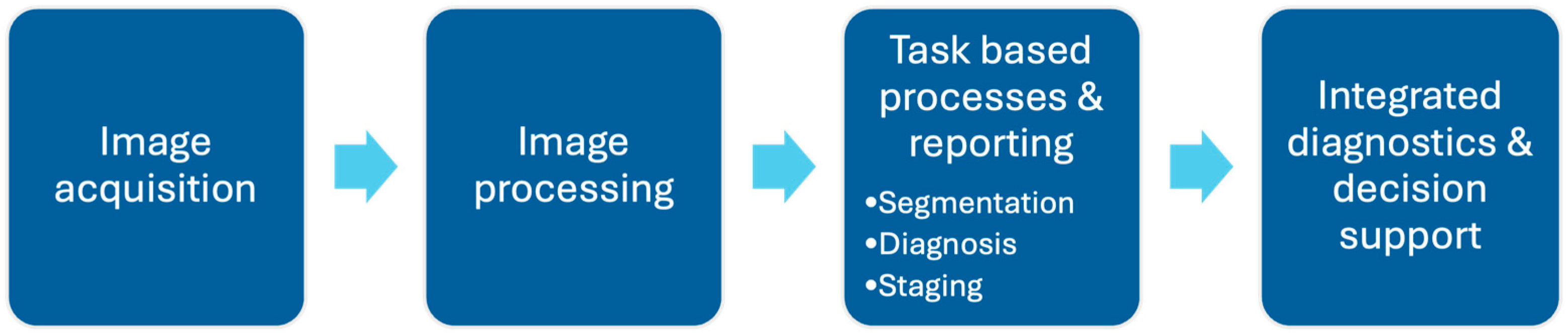

3.2. Overview of How Can AI Help in MRI Interpretation

3.3. Reducing Scan Times to Improve Efficiency

3.4. Automating Segmentation

3.5. Optimizing Worklist Triage and Workflow Processes

3.6. Decreasing Reading Times

3.7. Time-Saving and Workload Reduction

4. Discussion

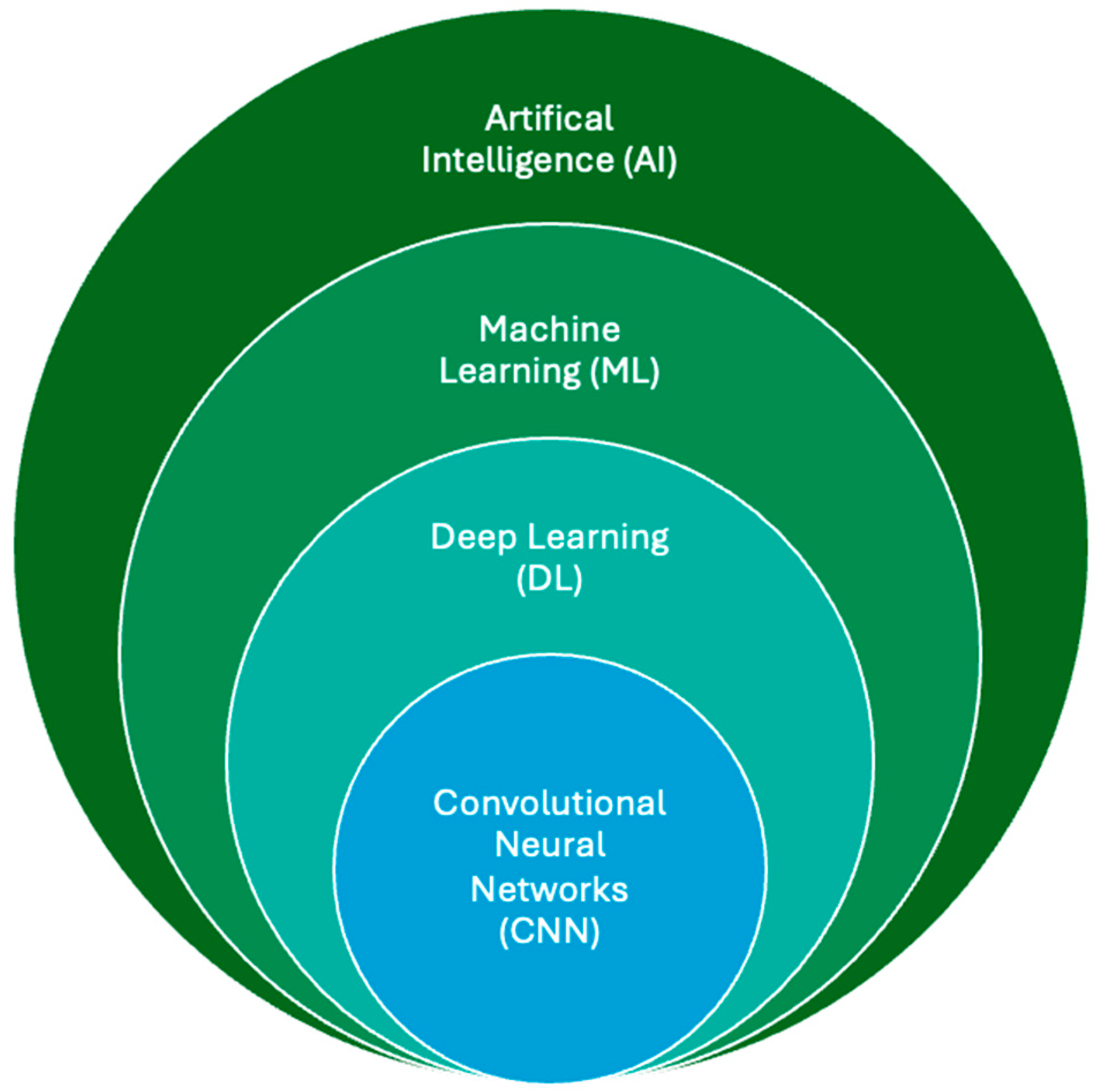

4.1. Dominant AI Architectures and Approaches

4.2. Strengths and Limitations of Current Evidence

4.3. Recommended Enhancements

4.3.1. Regulatory Frameworks and Compliance

4.3.2. Generalizability and Multi-Center Validation

4.3.3. Ethical Considerations

4.3.4. Current Recommendations

4.3.5. Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement

References

- Alowais, S.A.; Alghamdi, S.S.; Alsuhebany, N.; Alqahtani, T.; Alshaya, A.I.; Almohareb, S.N.; Aldairem, A.; Alrashed, M.; Bin Saleh, K.; Badreldin, H.A.; et al. Revolutionizing Healthcare: The Role of Artificial Intelligence in Clinical Practice. BMC Med. Educ. 2023, 23, 689. [Google Scholar] [CrossRef] [PubMed]

- Najjar, R. Redefining Radiology: A Review of Artificial Intelligence Integration in Medical Imaging. Diagnostics 2023, 13, 2760. [Google Scholar] [CrossRef]

- GE Healthcare—Committing to Sustainability in MRI. Available online: https://www.gehealthcare.com/insights/article/committing-to-sustainability-in-mri (accessed on 15 November 2024).

- Zhou, M.; Zhao, X.; Luo, F.; Luo, J.; Pu, H.; Tao, X. Robust RGB-T Tracking via Adaptive Modality Weight Correlation Filters and Cross-modality Learning. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 20, 95. [Google Scholar] [CrossRef]

- Zhou, M.; Lan, X.; Wei, X.; Liao, X.; Mao, Q.; Li, Y. An End-to-End Blind Image Quality Assessment Method Using a Recurrent Network and Self-Attention. IEEE Trans. Broadcast. 2023, 69, 369–377. [Google Scholar] [CrossRef]

- Zhou, M.; Chen, L.; Wei, X.; Liao, X.; Mao, Q.; Wang, H. Perception-Oriented U-Shaped Transformer Network for 360-Degree No-Reference Image Quality Assessment. IEEE Trans. Broadcast. 2023, 69, 396–405. [Google Scholar] [CrossRef]

- Zhou, M.; Shen, W.; Wei, X.; Luo, J.; Jia, F.; Zhuang, X.; Jia, W. Blind Image Quality Assessment: Exploring Content Fidelity Perceptibility via Quality Adversarial Learning. Int. J. Comput. Vis. 2025. [Google Scholar] [CrossRef]

- Zhou, M.; Wu, X.; Wei, X.; Tao, X.; Fang, B.; Kwong, S. Low-Light Enhancement Method Based on a Retinex Model for Structure Preservation. IEEE Trans. Multimed. 2024, 26, 650–662. [Google Scholar] [CrossRef]

- McDonald, R.J.; Schwartz, K.M.; Eckel, L.J.; Diehn, F.E.; Hunt, C.H.; Bartholmai, B.J.; Erickson, B.J.; Kallmes, D.F. The Effects of Changes in Utilization and Technological Advancements of Cross-Sectional Imaging on Radiologist Workload. Acad. Radiol. 2015, 22, 1191–1198. [Google Scholar] [CrossRef] [PubMed]

- Quinn, L.; Tryposkiadis, K.; Deeks, J.; De Vet, H.C.W.; Mallett, S.; Mokkink, L.B.; Takwoingi, Y.; Taylor-Phillips, S.; Sitch, A. Interobserver Variability Studies in Diagnostic Imaging: A Methodological Systematic Review. Br. J. Radiol. 2023, 96, 20220972. [Google Scholar] [CrossRef]

- Khalifa, M.; Albadawy, M. AI in Diagnostic Imaging: Revolutionising Accuracy and Efficiency. Comput. Methods Programs Biomed. Update 2024, 5, 100146. [Google Scholar] [CrossRef]

- Potočnik, J.; Foley, S.; Thomas, E. Current and Potential Applications of Artificial Intelligence in Medical Imaging Practice: A Narrative Review. J. Med. Imaging Radiat. Sci. 2023, 54, 376–385. [Google Scholar] [CrossRef] [PubMed]

- Younis, H.A.; Eisa, T.A.; Nasser, M.; Sahib, T.M.; Noor, A.A.; Alyasiri, O.M.; Salisu, S.; Hayder, I.M.; Younis, H.A. A Systematic Review and Meta-Analysis of Artificial Intelligence Tools in Medicine and Healthcare: Applications, Considerations, Limitations, Motivation and Challenges. Diagnostics 2024, 14, 109. [Google Scholar] [CrossRef]

- Gitto, S.; Serpi, F.; Albano, D.; Risoleo, G.; Fusco, S.; Messina, C.; Sconfienza, L.M. AI Applications in Musculoskeletal Imaging: A Narrative Review. Eur. Radiol. Exp. 2024, 8, 22. [Google Scholar] [CrossRef]

- Vogrin, M.; Trojner, T.; Kelc, R. Artificial Intelligence in Musculoskeletal Oncological Radiology. Radiol. Oncol. 2021, 55, 1–6. [Google Scholar] [CrossRef]

- Wenderott, K.; Krups, J.; Zaruchas, F.; Weigl, M. Effects of Artificial Intelligence Implementation on Efficiency in Medical Imaging—A Systematic Literature Review and Meta-Analysis. NPJ Digit. Med. 2024, 7, 265. [Google Scholar] [CrossRef]

- Stec, N.; Arje, D.; Moody, A.R.; Krupinski, E.A.; Tyrrell, P.N. A Systematic Review of Fatigue in Radiology: Is It a Problem? Am. J. Roentgenol. 2018, 210, 799–806. [Google Scholar] [CrossRef]

- Ranschaert, E.; Topff, L.; Pianykh, O. Optimization of Radiology Workflow with Artificial Intelligence. Radiol. Clin. N. Am. 2021, 59, 955–966. [Google Scholar] [CrossRef] [PubMed]

- Clifford, B.; Conklin, J.; Huang, S.Y.; Feiweier, T.; Hosseini, Z.; Goncalves Filho, A.L.M.; Tabari, A.; Demir, S.; Lo, W.-C.; Longo, M.G.F.; et al. An Artificial Intelligence-Accelerated 2-Minute Multi-Shot Echo Planar Imaging Protocol for Comprehensive High-Quality Clinical Brain Imaging. Magn. Reson. Med. 2022, 87, 2453–2463. [Google Scholar] [CrossRef] [PubMed]

- Jing, X.; Wielema, M.; Cornelissen, L.J.; van Gent, M.; Iwema, W.M.; Zheng, S.; Sijens, P.E.; Oudkerk, M.; Dorrius, M.D.; van Ooijen, P.M.A. Using Deep Learning to Safely Exclude Lesions with Only Ultrafast Breast MRI to Shorten Acquisition and Reading Time. Eur. Radiol. 2022, 32, 8706–8715. [Google Scholar] [CrossRef]

- Chen, G.; Fan, X.; Wang, T.; Zhang, E.; Shao, J.; Chen, S.; Zhang, D.; Zhang, J.; Guo, T.; Yuan, Z.; et al. A Machine Learning Model Based on MRI for the Preoperative Prediction of Bladder Cancer Invasion Depth. Eur. Radiol. 2023, 33, 8821–8832. [Google Scholar] [CrossRef]

- Ivanovska, T.; Daboul, A.; Kalentev, O.; Hosten, N.; Biffar, R.; Völzke, H.; Wörgötter, F. A Deep Cascaded Segmentation of Obstructive Sleep Apnea-Relevant Organs from Sagittal Spine MRI. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 579–588. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Wang, Y.; Qi, H.; Hu, Z.; Chen, Z.; Yang, R.; Qiao, H.; Sun, J.; Wang, T.; Zhao, X.; et al. Deep Learning–Enhanced T1 Mapping with Spatial-Temporal and Physical Constraint. Magn. Reson. Med. 2021, 86, 1647–1661. [Google Scholar] [CrossRef]

- Herington, J.; McCradden, M.D.; Creel, K.; Boellaard, R.; Jones, E.C.; Jha, A.K.; Rahmim, A.; Scott, P.J.H.; Sunderland, J.J.; Wahl, R.L.; et al. Ethical Considerations for Artificial Intelligence in Medical Imaging: Data Collection, Development, and Evaluation. J. Nucl. Med. 2023, 64, 1848–1854. [Google Scholar] [CrossRef]

- Martín-Noguerol, T.; Paulano-Godino, F.; López-Ortega, R.; Górriz, J.M.; Riascos, R.F.; Luna, A. Artificial Intelligence in Radiology: Relevance of Collaborative Work between Radiologists and Engineers for Building a Multidisciplinary Team. Clin. Radiol. 2021, 76, 317–324. [Google Scholar] [CrossRef] [PubMed]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Cui, Z.; Shi, Z.; Jiang, Y.; Zhang, Z.; Dai, X.; Yang, Z.; Gu, Y.; Zhou, L.; Han, C.; et al. A Robust and Efficient AI Assistant for Breast Tumor Segmentation from DCE-MRI via a Spatial-Temporal Framework. Patterns 2023, 4, 100826. [Google Scholar] [CrossRef]

- Pötsch, N.; Dietzel, M.; Kapetas, P.; Clauser, P.; Pinker, K.; Ellmann, S.; Uder, M.; Helbich, T.; Baltzer, P.A.T. An A.I. Classifier Derived from 4D Radiomics of Dynamic Contrast-Enhanced Breast MRI Data: Potential to Avoid Unnecessary Breast Biopsies. Eur. Radiol. 2021, 31, 5866–5876. [Google Scholar] [CrossRef]

- Zhao, Z.; Du, S.; Xu, Z.; Yin, Z.; Huang, X.; Huang, X.; Wong, C.; Liang, Y.; Shen, J.; Wu, J.; et al. SwinHR: Hemodynamic-Powered Hierarchical Vision Transformer for Breast Tumor Segmentation. Comput. Biol. Med. 2024, 169, 107939. [Google Scholar] [CrossRef]

- Lim, R.P.; Kachel, S.; Villa, A.D.M.; Kearney, L.; Bettencourt, N.; Young, A.A.; Chiribiri, A.; Scannell, C.M. CardiSort: A Convolutional Neural Network for Cross Vendor Automated Sorting of Cardiac MR Images. Eur. Radiol. 2022, 32, 5907–5920. [Google Scholar] [CrossRef]

- Parker, J.; Coey, J.; Alambrouk, T.; Lakey, S.M.; Green, T.; Brown, A.; Maxwell, I.; Ripley, D.P. Evaluating a Novel AI Tool for Automated Measurement of the Aortic Root and Valve in Cardiac Magnetic Resonance Imaging. Cureus 2024, 16, e59647. [Google Scholar] [CrossRef]

- Duan, C.; Deng, H.; Xiao, S.; Xie, J.; Li, H.; Zhao, X.; Han, D.; Sun, X.; Lou, X.; Ye, C.; et al. Accelerate Gas Diffusion-Weighted MRI for Lung Morphometry with Deep Learning. Eur. Radiol. 2022, 32, 702–713. [Google Scholar] [CrossRef]

- Xie, L.; Udupa, J.K.; Tong, Y.; Torigian, D.A.; Huang, Z.; Kogan, R.M.; Wootton, D.; Choy, K.R.; Sin, S.; Wagshul, M.E.; et al. Automatic Upper Airway Segmentation in Static and Dynamic MRI via Anatomy-Guided Convolutional Neural Networks. Med. Phys. 2022, 49, 324–342. [Google Scholar] [CrossRef]

- Wang, J.; Peng, Y.; Jing, S.; Han, L.; Li, T.; Luo, J. A deep-learning approach for segmentation of liver tumors in magnetic resonance imaging using UNet++. BMC Cancer 2023, 23, 1060. [Google Scholar] [CrossRef] [PubMed]

- Gross, M.; Huber, S.; Arora, S.; Ze’evi, T.; Haider, S.P.; Kucukkaya, A.S.; Iseke, S.; Kuhn, T.N.; Gebauer, B.; Michallek, F.; et al. Automated MRI Liver Segmentation for Anatomical Segmentation, Liver Volumetry, and the Extraction of Radiomics. Eur. Radiol. 2024, 34, 5056–5065. [Google Scholar] [CrossRef] [PubMed]

- Oestmann, P.M.; Wang, C.J.; Savic, L.J.; Hamm, C.A.; Stark, S.; Schobert, I.; Gebauer, B.; Schlachter, T.; Lin, M.; Weinreb, J.C.; et al. Deep Learning–Assisted Differentiation of Pathologically Proven Atypical and Typical Hepatocellular Carcinoma (HCC) versus Non-HCC on Contrast-Enhanced MRI of the Liver. Eur. Radiol. 2021, 31, 4981–4990. [Google Scholar] [CrossRef] [PubMed]

- Yan, M.; Zhang, X.; Zhang, B.; Geng, Z.; Xie, C.; Yang, W.; Zhang, S.; Qi, Z.; Lin, T.; Ke, Q.; et al. Deep Learning Nomogram Based on Gd-EOB-DTPA MRI for Predicting Early Recurrence in Hepatocellular Carcinoma after Hepatectomy. Eur. Radiol. 2023, 33, 4949–4961. [Google Scholar] [CrossRef]

- Wei, H.; Zheng, T.; Zhang, X.; Wu, Y.; Chen, Y.; Zheng, C.; Jiang, D.; Wu, B.; Guo, H.; Jiang, H.; et al. MRI Radiomics Based on Deep Learning Automated Segmentation to Predict Early Recurrence of Hepatocellular Carcinoma. Insights Imaging 2024, 15, 120. [Google Scholar] [CrossRef]

- Gross, M.; Haider, S.P.; Ze’evi, T.; Huber, S.; Arora, S.; Kucukkaya, A.S.; Iseke, S.; Gebauer, B.; Fleckenstein, F.; Dewey, M.; et al. Automated Graded Prognostic Assessment for Patients with Hepatocellular Carcinoma Using Machine Learning. Eur. Radiol. 2024, 34, 6940–6952. [Google Scholar] [CrossRef]

- Cunha, G.M.; Hasenstab, K.A.; Higaki, A.; Wang, K.; Delgado, T.; Brunsing, R.L.; Schlein, A.; Schwartzman, A.; Hsiao, A.; Sirlin, C.B.; et al. Convolutional Neural Network-Automated Hepatobiliary Phase Adequacy Evaluation May Optimize Examination Time. Eur. J. Radiol. 2020, 124, 108837. [Google Scholar] [CrossRef]

- Liu, G.; Pan, S.; Zhao, R.; Zhou, H.; Chen, J.; Zhou, X.; Xu, J.; Zhou, Y.; Xue, W.; Wu, G. The Added Value of AI-Based Computer-Aided Diagnosis in Classification of Cancer at Prostate MRI. Eur. Radiol. 2023, 33, 5118–5130. [Google Scholar] [CrossRef]

- Simon, B.D.; Merriman, K.M.; Harmon, S.A.; Tetreault, J.; Yilmaz, E.C.; Blake, Z.; Merino, M.J.; An, J.Y.; Marko, J.; Law, Y.M.; et al. Automated Detection and Grading of Extraprostatic Extension of Prostate Cancer at MRI via Cascaded Deep Learning and Random Forest Classification. Acad. Radiol. 2024, 31, 4096–4106. [Google Scholar] [CrossRef] [PubMed]

- Wang, K.; Xing, Z.; Kong, Z.; Yu, Y.; Chen, Y.; Zhao, X.; Song, B.; Wang, X.; Wu, P.; Wang, X.; et al. Artificial Intelligence as Diagnostic Aiding Tool in Cases of Prostate Imaging Reporting and Data System Category 3: The Results of Retrospective Multi-Center Cohort Study. Abdom. Radiol. 2023, 48, 3757–3765. [Google Scholar] [CrossRef] [PubMed]

- Hosseinzadeh, M.; Saha, A.; Brand, P.; Slootweg, I.; de Rooij, M.; Huisman, H. Deep Learning–Assisted Prostate Cancer Detection on Bi-Parametric MRI: Minimum Training Data Size Requirements and Effect of Prior Knowledge. Eur. Radiol. 2022, 32, 2224–2234. [Google Scholar] [CrossRef] [PubMed]

- Oerther, B.; Engel, H.; Nedelcu, A.; Schlett, C.L.; Grimm, R.; von Busch, H.; Sigle, A.; Gratzke, C.; Bamberg, F.; Benndorf, M. Prediction of Upgrade to Clinically Significant Prostate Cancer in Patients under Active Surveillance: Performance of a Fully Automated AI-Algorithm for Lesion Detection and Classification. Prostate 2023, 83, 871–878. [Google Scholar] [CrossRef]

- Li, Q.; Xu, W.-Y.; Sun, N.-N.; Feng, Q.-X.; Hou, Y.-J.; Sang, Z.-T.; Zhu, Z.-N.; Hsu, Y.-C.; Nickel, D.; Xu, H.; et al. Deep Learning-Accelerated T2WI: Image Quality, Efficiency, and Staging Performance against BLADE T2WI for Gastric Cancer. Abdom. Radiol. 2024, 49, 2574–2584. [Google Scholar] [CrossRef]

- Goel, A.; Shih, G.; Riyahi, S.; Jeph, S.; Dev, H.; Hu, R.; Romano, D.; Teichman, K.; Blumenfeld, J.D.; Barash, I.; et al. Deployed Deep Learning Kidney Segmentation for Polycystic Kidney Disease MRI. Radiol. Artif. Intell. 2022, 4, e210205. [Google Scholar] [CrossRef]

- Marka, A.W.; Luitjens, J.; Gassert, F.T.; Steinhelfer, L.; Burian, E.; Rübenthaler, J.; Schwarze, V.; Froelich, M.F.; Makowski, M.R.; Gassert, F.G. Artificial Intelligence Support in MR Imaging of Incidental Renal Masses: An Early Health Technology Assessment. Eur. Radiol. 2024, 34, 5856–5865. [Google Scholar] [CrossRef]

- Chen, Y.; Ruan, D.; Xiao, J.; Wang, L.; Sun, B.; Saouaf, R.; Yang, W.; Li, D.; Fan, Z. Fully Automated Multiorgan Segmentation in Abdominal Magnetic Resonance Imaging with Deep Neural Networks. Med. Phys. 2020, 47, 4971–4982. [Google Scholar] [CrossRef]

- Hallinan, J.T.P.D.; Zhu, L.; Yang, K.; Makmur, A.; Algazwi, D.A.R.; Thian, Y.L.; Lau, S.; Choo, Y.S.; Eide, S.E.; Yap, Q.V.; et al. Deep Learning Model for Automated Detection and Classification of Central Canal, Lateral Recess, and Neural Foraminal Stenosis at Lumbar Spine MRI. Radiology 2021, 300, 130–138. [Google Scholar] [CrossRef]

- Lim, D.S.W.; Makmur, A.; Zhu, L.; Zhang, W.; Cheng, A.J.L.; Sia, D.S.Y.; Eide, S.E.; Ong, H.Y.; Jagmohan, P.; Tan, W.C.; et al. Improved Productivity Using Deep Learning–Assisted Reporting for Lumbar Spine MRI. Radiology 2022, 305, 160–166. [Google Scholar] [CrossRef]

- McSweeney, T.P.; Tiulpin, A.; Saarakkala, S.; Niinimäki, J.; Windsor, R.; Jamaludin, A.; Kadir, T.; Karppinen, J.; Määttä, J. External Validation of SpineNet, an Open-Source Deep Learning Model for Grading Lumbar Disk Degeneration MRI Features, Using the Northern Finland Birth Cohort 1966. Spine 2023, 48, 484–491. [Google Scholar] [CrossRef] [PubMed]

- Grob, A.; Loibl, M.; Jamaludin, A.; Winklhofer, S.; Fairbank, J.C.T.; Fekete, T.; Porchet, F.; Mannion, A.F. External Validation of the Deep Learning System “SpineNet” for Grading Radiological Features of Degeneration on MRIs of the Lumbar Spine. Eur. Spine J. 2022, 31, 2137–2148. [Google Scholar] [CrossRef]

- Bharadwaj, U.U.; Christine, M.; Li, S.; Chou, D.; Pedoia, V.; Link, T.M.; Chin, C.T.; Majumdar, S. Deep Learning for Automated, Interpretable Classification of Lumbar Spinal Stenosis and Facet Arthropathy from Axial MRI. Eur. Radiol. 2023, 33, 3435–3443. [Google Scholar] [CrossRef]

- van der Graaf, J.W.; Brundel, L.; van Hooff, M.L.; de Kleuver, M.; Lessmann, N.; Maresch, B.J.; Vestering, M.M.; Spermon, J.; van Ginneken, B.; Rutten, M.J.C.M. AI-Based Lumbar Central Canal Stenosis Classification on Sagittal MR Images Is Comparable to Experienced Radiologists Using Axial Images. Eur. Radiol. 2024, 35, 2298–2306. [Google Scholar] [CrossRef] [PubMed]

- Yoo, H.; Yoo, R.-E.; Choi, S.H.; Hwang, I.; Lee, J.Y.; Seo, J.Y.; Koh, S.Y.; Choi, K.S.; Kang, K.M.; Yun, T.J. Deep Learning–Based Reconstruction for Acceleration of Lumbar Spine MRI: A Prospective Comparison with Standard MRI. Eur. Radiol. 2023, 33, 8656–8668. [Google Scholar] [CrossRef]

- Wang, D.; Liu, S.; Ding, J.; Sun, A.; Jiang, D.; Jiang, J.; Zhao, J.; Chen, D.; Ji, G.; Li, N.; et al. A Deep Learning Model Enhances Clinicians’ Diagnostic Accuracy to More Than 96% for Anterior Cruciate Ligament Ruptures on Magnetic Resonance Imaging. Arthroscopy 2024, 40, 1197–1205. [Google Scholar] [CrossRef] [PubMed]

- Ro, K.; Kim, J.Y.; Park, H.; Cho, B.H.; Kim, I.Y.; Shim, S.B.; Choi, I.Y.; Yoo, J.C. Deep-Learning Framework and Computer Assisted Fatty Infiltration Analysis for the Supraspinatus Muscle in MRI. Sci. Rep. 2021, 11, 15065. [Google Scholar] [CrossRef]

- Ni, M.; Zhao, Y.; Zhang, L.; Chen, W.; Wang, Q.; Tian, C.; Yuan, H. MRI-Based Automated Multitask Deep Learning System to Evaluate Supraspinatus Tendon Injuries. Eur. Radiol. 2024, 34, 3538–3551. [Google Scholar] [CrossRef]

- Feuerriegel, G.C.; Weiss, K.; Kronthaler, S.; Leonhardt, Y.; Neumann, J.; Wurm, M.; Lenhart, N.S.; Makowski, M.R.; Schwaiger, B.J.; Woertler, K.; et al. Evaluation of a Deep Learning-Based Reconstruction Method for Denoising and Image Enhancement of Shoulder MRI in Patients with Shoulder Pain. Eur. Radiol. 2023, 33, 4875–4884. [Google Scholar] [CrossRef]

- Liu, J.; Li, W.; Li, Z.; Yang, J.; Wang, K.; Cao, X.; Qin, N.; Xue, K.; Dai, Y.; Wu, P.; et al. Magnetic Resonance Shoulder Imaging Using Deep Learning–Based Algorithm. Eur. Radiol. 2023, 33, 4864–4874. [Google Scholar] [CrossRef]

- Nowak, S.; Henkel, A.; Theis, M.; Luetkens, J.; Geiger, S.; Sprinkart, A.M.; Pieper, C.C.; Attenberger, U.I. Deep Learning for Standardized, MRI-Based Quantification of Subcutaneous and Subfascial Tissue Volume for Patients with Lipedema and Lymphedema. Eur. Radiol. 2023, 33, 884–892. [Google Scholar] [CrossRef] [PubMed]

- Hu, X.; Liu, Z.; Zhou, H.; Fang, J.; Lu, H. Deep HT: A Deep Neural Network for Diagnose on MR Images of Tumors of the Hand. PLoS ONE 2020, 15, e0237606. [Google Scholar] [CrossRef]

- Li, Y.; Li, H.; Fan, Y. ACEnet: Anatomical Context-Encoding Network for Neuroanatomy Segmentation. Med. Image Anal. 2021, 70, 101991. [Google Scholar] [CrossRef]

- Yamada, S.; Otani, T.; Ii, S.; Kawano, H.; Nozaki, K.; Wada, S.; Oshima, M.; Watanabe, Y. Aging-Related Volume Changes in the Brain and Cerebrospinal Fluid Using Artificial Intelligence-Automated Segmentation. Eur. Radiol. 2023, 33, 7099–7112. [Google Scholar] [CrossRef] [PubMed]

- Dar, S.U.H.; Öztürk, Ş.; Özbey, M.; Oguz, K.K.; Çukur, T. Parallel-Stream Fusion of Scan-Specific and Scan-General Priors for Learning Deep MRI Reconstruction in Low-Data Regimes. Comput. Biol. Med. 2023, 167, 107610. [Google Scholar] [CrossRef] [PubMed]

- Jun, Y.; Cho, J.; Wang, X.; Gee, M.; Grant, P.E.; Bilgic, B.; Gagoski, B. SSL-QALAS: Self-Supervised Learning for Rapid Multiparameter Estimation in Quantitative MRI Using 3D-QALAS. Magn. Reson. Med. 2023, 90, 2019–2032. [Google Scholar] [CrossRef]

- Kemenczky, P.; Vakli, P.; Somogyi, E.; Homolya, I.; Hermann, P.; Gál, V.; Vidnyánszky, Z. Effect of Head Motion-Induced Artefacts on the Reliability of Deep Learning-Based Whole-Brain Segmentation. Sci. Rep. 2022, 12, 1618. [Google Scholar] [CrossRef]

- Bash, S.; Wang, L.; Airriess, C.; Zaharchuk, G.; Gong, E.; Shankaranarayanan, A.; Tanenbaum, L.N. Deep Learning Enables 60% Accelerated Volumetric Brain MRI While Preserving Quantitative Performance: A Prospective, Multicenter, Multireader Trial. Am. J. Neuroradiol. 2021, 42, 2130–2137. [Google Scholar] [CrossRef]

- Boaro, A.; Kaczmarzyk, J.R.; Kavouridis, V.K.; Harary, M.; Mammi, M.; Dawood, H.; Shea, A.; Cho, E.Y.; Juvekar, P.; Noh, T.; et al. Deep Neural Networks Allow Expert-Level Brain Meningioma Segmentation and Present Potential for Improvement of Clinical Practice. Sci. Rep. 2022, 12, 15462. [Google Scholar] [CrossRef]

- Gab Allah, A.M.; Sarhan, A.M.; Elshennawy, N.M. Classification of Brain MRI Tumor Images Based on Deep Learning PGGAN Augmentation. Diagnostics 2021, 11, 2343. [Google Scholar] [CrossRef]

- Amini, A.; Shayganfar, A.; Amini, Z.; Ostovar, L.; HajiAhmadi, S.; Chitsaz, N.; Rabbani, M.; Kafieh, R. Deep Learning for Discrimination of Active and Inactive Lesions in Multiple Sclerosis Using Non-Contrast FLAIR MRI: A Multicenter Study. Mult. Scler. Relat. Disord. 2024, 87, 105642. [Google Scholar] [CrossRef] [PubMed]

- Cui, X.; Chen, N.; Zhao, C.; Li, J.; Zheng, X.; Liu, C.; Yang, J.; Li, X.; Yu, C.; Liu, J.; et al. An Adaptive Weighted Attention-Enhanced Deep Convolutional Neural Network for Classification of MRI Images of Parkinson’s Disease. J. Neurosci. Methods 2023, 394, 109884. [Google Scholar] [CrossRef] [PubMed]

- Sun, F.; Lyu, J.; Jian, S.; Qin, Y.; Tang, X. Accurate Measurement of Magnetic Resonance Parkinsonism Index by a Fully Automatic and Deep Learning Quantification Pipeline. Eur. Radiol. 2023, 33, 8844–8853. [Google Scholar] [CrossRef] [PubMed]

- Li, C.; Hui, D.; Wu, F.; Xia, Y.; Shi, F.; Yang, M.; Zhang, J.; Peng, C.; Feng, J.; Li, C. Automatic Diagnosis of Parkinson’s Disease Using Artificial Intelligence Base on Routine T1-Weighted MRI. Front. Med. 2024, 10, 1303501. [Google Scholar] [CrossRef]

- Yasaka, K.; Akai, H.; Sugawara, H.; Tajima, T.; Akahane, M.; Yoshioka, N.; Kabasawa, H.; Miyo, R.; Ohtomo, K.; Abe, O.; et al. Impact of Deep Learning Reconstruction on Intracranial 1.5 T Magnetic Resonance Angiography. Jpn. J. Radiol. 2022, 40, 476–483. [Google Scholar] [CrossRef]

- Tian, T.; Gan, T.; Chen, J.; Lu, J.; Zhang, G.; Zhou, Y.; Li, J.; Shao, H.; Liu, Y.; Zhu, H.; et al. Graphic Intelligent Diagnosis of Hypoxic-Ischemic Encephalopathy Using MRI-Based Deep Learning Model. Neonatology 2023, 120, 441–449. [Google Scholar] [CrossRef]

- Lew, C.O.; Calabrese, E.; Chen, J.V.; Tang, F.; Chaudhari, G.; Lee, A.; Faro, J.; Juul, S.; Mathur, A.; McKinstry, R.C.; et al. Artificial Intelligence Outcome Prediction in Neonates with Encephalopathy (AI-OPiNE). Radiol. Artif. Intell. 2024, 6, e240076. [Google Scholar] [CrossRef]

- She, J.; Huang, H.; Ye, Z.; Huang, W.; Sun, Y.; Liu, C.; Yang, W.; Wang, J.; Ye, P.; Zhang, L.; et al. Automatic Biometry of Fetal Brain MRIs Using Deep and Machine Learning Techniques. Sci. Rep. 2023, 13, 17860. [Google Scholar] [CrossRef]

- Zhang, Y.; Xu, H.-R.; Wen, J.-H.; Hu, Y.-J.; Diao, Y.-L.; Chen, J.-L.; Xia, Y.-F. A Novel LVPA-UNet Network for Target Volume Automatic Delineation: An MRI Case Study of Nasopharyngeal Carcinoma. Heliyon 2024, 10, e30763. [Google Scholar] [CrossRef]

- Winkel, D.J.; Tong, A.; Lou, B.; Kamen, A.; Comaniciu, D.; Disselhorst, J.A.; Rodríguez-Ruiz, A.; Huisman, H.; Szolar, D.; Shabunin, I.; et al. A Novel Deep Learning Based Computer-Aided Diagnosis System Improves the Accuracy and Efficiency of Radiologists in Reading Biparametric Magnetic Resonance Images of the Prostate: Results of a Multireader, Multicase Study. Investig. Radiol. 2021, 56, 605–613. [Google Scholar] [CrossRef]

- Rudie, J.D.; Duda, J.; Duong, M.T.; Chen, P.-H.; Xie, L.; Kurtz, R.; Ware, J.B.; Choi, J.; Mattay, R.R.; Botzolakis, E.J.; et al. Brain MRI Deep Learning and Bayesian Inference System Augments Radiology Resident Performance. J. Digit. Imaging 2021, 34, 1049–1058. [Google Scholar] [CrossRef]

- Langkilde, F.; Masaba, P.; Edenbrandt, L.; Gren, M.; Halil, A.; Hellström, M.; Larsson, M.; Naeem, A.A.; Wallström, J.; Maier, S.E.; et al. Manual Prostate MRI Segmentation by Readers with Different Experience: A Study of the Learning Progress. Eur. Radiol. 2024, 34, 4801–4809. [Google Scholar] [CrossRef]

- Park, J.; Oh, K.; Han, K.; Lee, Y.H. Patient-Centered Radiology Reports with Generative Artificial Intelligence: Adding Value to Radiology Reporting. Sci. Rep. 2024, 14, 13218. [Google Scholar] [CrossRef]

- Federau, C.; Hainc, N.; Edjlali, M.; Zhu, G.; Mastilovic, M.; Nierobisch, N.; Uhlemann, J.-P.; Paganucci, S.; Granziera, C.; Heinzlef, O.; et al. Evaluation of the Quality and the Productivity of Neuroradiological Reading of Multiple Sclerosis Follow-up MRI Scans Using an Intelligent Automation Software. Neuroradiology 2024, 66, 361–369. [Google Scholar] [CrossRef]

- Lee, Y.H. Efficiency Improvement in a Busy Radiology Practice: Determination of Musculoskeletal Magnetic Resonance Imaging Protocol Using Deep-Learning Convolutional Neural Networks. J. Digit. Imaging 2018, 31, 604–610. [Google Scholar] [CrossRef] [PubMed]

- Yu, Y.; He, Z.; Ouyang, J.; Tan, Y.; Chen, Y.; Gu, Y.; Mao, L.; Ren, W.; Wang, J.; Lin, L.; et al. Magnetic Resonance Imaging Radiomics Predicts Preoperative Axillary Lymph Node Metastasis to Support Surgical Decisions and Is Associated with Tumor Microenvironment in Invasive Breast Cancer: A Machine Learning, Multicenter Study. EBioMedicine 2021, 69, 103460. [Google Scholar] [CrossRef] [PubMed]

- Schlemper, J.; Caballero, J.; Hajnal, J.V.; Price, A.N.; Rueckert, D. A Deep Cascade of Convolutional Neural Networks for Dynamic MR Image Reconstruction. IEEE Trans. Med. Imaging 2018, 37, 491–503. [Google Scholar] [CrossRef] [PubMed]

- Hammernik, K.; Klatzer, T.; Kobler, E.; Recht, M.P.; Sodickson, D.K.; Pock, T.; Knoll, F. Learning a Variational Network for Reconstruction of Accelerated MRI Data. Magn. Reson. Med. 2017, 79, 3055. [Google Scholar] [CrossRef]

- Dai, X.; Lei, Y.; Wang, T.; Zhou, J.; Roper, J.; McDonald, M.; Beitler, J.J.; Curran, W.J.; Liu, T.; Yang, X. Automated Delineation of Head and Neck Organs at Risk Using Synthetic MRI-Aided Mask Scoring Regional Convolutional Neural Network. Med. Phys. 2021, 48, 5862–5873. [Google Scholar] [CrossRef]

- Amisha; Malik, P.; Pathania, M.; Rathaur, V.K. Overview of Artificial Intelligence in Medicine. J. Fam. Med. Prim. Care 2019, 8, 2328–2331. [Google Scholar] [CrossRef]

- Kobayashi, H. Potential for Artificial Intelligence in Medicine and Its Application to Male Infertility. Reprod. Med. Biol. 2024, 23, e12590. [Google Scholar] [CrossRef] [PubMed]

- Erickson, B.J.; Korfiatis, P.; Akkus, Z.; Kline, T.L. Machine Learning for Medical Imaging. RadioGraphics 2017, 37, 505–515. [Google Scholar] [CrossRef]

- Zaharchuk, G.; Gong, E.; Wintermark, M.; Rubin, D.; Langlotz, C.P. Deep Learning in Neuroradiology. Am. J. Neuroradiol. 2018, 39, 1776–1784. [Google Scholar] [CrossRef]

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J.W.L. Artificial Intelligence in Radiology. Nat. Rev. Cancer 2018, 18, 500–510. [Google Scholar] [CrossRef] [PubMed]

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional Neural Networks: An Overview and Application in Radiology. Insights Imaging 2018, 9, 611–629. [Google Scholar] [CrossRef]

- Cabitza, F.; Campagner, A.; Sconfienza, L.M. Studying Human-AI Collaboration Protocols: The Case of the Kasparov’s Law in Radiological Double Reading. Health Inf. Sci. Syst. 2021, 9, 8. [Google Scholar] [CrossRef]

- Almansour, H.; Herrmann, J.; Gassenmaier, S.; Afat, S.; Jacoby, J.; Koerzdoerfer, G.; Nickel, D.; Mostapha, M.; Nadar, M.; Othman, A.E. Deep Learning Reconstruction for Accelerated Spine MRI: Prospective Analysis of Interchangeability. Radiology 2022, 306, e212922. [Google Scholar] [CrossRef] [PubMed]

- Hallinan, J.T.P.D. Deep Learning for Spine MRI: Reducing Time Not Quality. Radiology 2022, 306, e222410. [Google Scholar] [CrossRef]

- Fritz, J.; Raithel, E.; Thawait, G.K.; Gilson, W.; Papp, D.F. Six-Fold Acceleration of High-Spatial Resolution 3D SPACE MRI of the Knee Through Incoherent k-Space Undersampling and Iterative Reconstruction—First Experience. Investig. Radiol. 2016, 51, 400–409. [Google Scholar] [CrossRef]

- Han, Z.; Wang, Y.; Wang, W.; Zhang, T.; Wang, J.; Ma, X.; Men, K.; Shi, A.; Gao, Y.; Bi, N. Artificial Intelligence-Assisted Delineation for Postoperative Radiotherapy in Patients with Lung Cancer: A Prospective, Multi-Center, Cohort Study. Front. Oncol. 2024, 14, 1388297. [Google Scholar] [CrossRef]

- Wang, F.; Chen, Q.; Chen, Y.; Zhu, Y.; Zhang, Y.; Cao, D.; Zhou, W.; Liang, X.; Yang, Y.; Lin, L.; et al. A Novel Multimodal Deep Learning Model for Preoperative Prediction of Microvascular Invasion and Outcome in Hepatocellular Carcinoma. Eur. J. Surg. Oncol. 2023, 49, 156–164. [Google Scholar] [CrossRef]

- Fatima, A.; Shahid, A.R.; Raza, B.; Madni, T.M.; Janjua, U.I. State-of-the-Art Traditional to the Machine- and Deep-Learning-Based Skull Stripping Techniques, Models, and Algorithms. J. Digit. Imaging 2020, 33, 1443–1464. [Google Scholar] [CrossRef] [PubMed]

- Hallinan, J.T.P.D.; Leow, N.W.; Ong, W.; Lee, A.; Low, Y.X.; Chan, M.D.Z.; Devi, G.K.; Loh, D.D.L.; He, S.S.; Nor, F.E.M.; et al. MRI Spine Request Form Enhancement and Auto Protocoling Using a Secure Institutional Large Language Model. Spine J. 2024, 25, 505–514. [Google Scholar] [CrossRef] [PubMed]

- Beauchamp, N.J.; Bryan, R.N.; Bui, M.M.; Krestin, G.P.; McGinty, G.B.; Meltzer, C.C.; Neumaier, M. Integrative Diagnostics: The Time Is Now—A Report from the International Society for Strategic Studies in Radiology. Insights Imaging 2023, 14, 54. [Google Scholar] [CrossRef] [PubMed]

- Forsberg, D.; Rosipko, B.; Sunshine, J.L. Radiologists’ Variation of Time to Read Across Different Procedure Types. J. Digit. Imaging 2017, 30, 86–94. [Google Scholar] [CrossRef]

- Nowak, S.; Mesropyan, N.; Faron, A.; Block, W.; Reuter, M.; Attenberger, U.I.; Luetkens, J.A.; Sprinkart, A.M. Detection of Liver Cirrhosis in Standard T2-Weighted MRI Using Deep Transfer Learning. Eur. Radiol. 2021, 31, 8807–8815. [Google Scholar] [CrossRef]

- Nai, Y.H.; Loi, H.Y.; O’Doherty, S.; Tan, T.H.; Reilhac, A. Comparison of the performances of machine learning and deep learning in improving the quality of low dose lung cancer PET images. Jpn. J. Radiol. 2022, 40, 1290–1299. [Google Scholar] [CrossRef]

- Chauhan, D.; Anyanwu, E.; Goes, J.; Besser, S.A.; Anand, S.; Madduri, R.; Getty, N.; Kelle, S.; Kawaji, K.; Mor-Avi, V.; et al. Comparison of machine learning and deep learning for view identification from cardiac magnetic resonance images. Clin. Imaging. 2022, 82, 121–126. [Google Scholar] [CrossRef]

- Zhang, C.; Zheng, H.; Gu, Y. Dive into the details of self-supervised learning for medical image analysis. Med. Image Anal. 2023, 89, 102879. [Google Scholar] [CrossRef]

- Frueh, M.; Kuestner, T.; Nachbar, M.; Thorwarth, D.; Schilling, A.; Gatidis, S. Self-supervised learning for automated anatomical tracking in medical image data with minimal human labeling effort. Comput. Methods Programs Biomed. 2022, 225, 107085. [Google Scholar] [CrossRef]

- Xu, J.; Turk, E.A.; Grant, P.E.; Golland, P.; Adalsteinsson, E. STRESS: Super-Resolution for Dynamic Fetal MRI using Self-Supervised Learning. Med. Image Comput. Comput. Assist. Interv. 2021, 12907, 197–206. [Google Scholar] [CrossRef] [PubMed]

- Peng, L.; Wang, N.; Xu, J.; Zhu, X.; Li, X. GATE: Graph CCA for Temporal Self-Supervised Learning for Label-Efficient fMRI Analysis. IEEE Trans. Med. Imaging 2023, 42, 391–402. [Google Scholar] [CrossRef]

- Hasan, S.M.K.; Linte, C. STAMP: A Self-training Student-Teacher Augmentation-Driven Meta Pseudo-Labeling Framework for 3D Cardiac MRI Image Segmentation. Med. Image Underst. Anal. 2022, 13413, 371–386. [Google Scholar] [CrossRef]

- Azad, R.; Kazerouni, A.; Heidari, M.; Aghdam, E.K.; Molaei, A.; Jia, Y.; Jose, A.; Roy, R.; Merhof, D. Advances in medical image analysis with vision Transformers: A comprehensive review. Med. Image Anal. 2024, 91, 103000. [Google Scholar] [CrossRef] [PubMed]

- Singhal, K.; Azizi, S.; Tu, T.; Mahdavi, S.S.; Wei, J.; Chung, H.W.; Tanwani, A.; Cole-Lewis, H.; Pfohl, S.; Payne, P.; et al. Large language models encode clinical knowledge. Nature 2023, 620, 172–180. [Google Scholar] [CrossRef]

- Kim, J.K.; Chua, M.; Rickard, M.; Lorenzo, A. ChatGPT and Large Language Model (LLM) Chatbots: The Current State of Acceptability and a Proposal for Guidelines on Utilization in Academic Medicine. J. Pediatr. Urol. 2023, 19, 598–604. [Google Scholar] [CrossRef]

- Han, H. Challenges of reproducible AI in biomedical data science. BMC Med. Genom. 2025, 18 (Suppl. 1), 8. [Google Scholar] [CrossRef] [PubMed]

- Bradshaw, T.J.; Huemann, Z.; Hu, J.; Rahmim, A. A Guide to Cross-Validation for Artificial Intelligence in Medical Imaging. Radiol. Artif. Intell. 2023, 5, e220232. [Google Scholar] [CrossRef]

- Kim, D.W.; Jang, H.Y.; Kim, K.W.; Shin, Y.; Park, S.H. Design Characteristics of Studies Reporting the Performance of Artificial Intelligence Algorithms for Diagnostic Analysis of Medical Images: Results from Recently Published Papers. Korean J. Radiol. 2019, 20, 405–410. [Google Scholar] [CrossRef]

- Kelly, C.J.; Karthikesalingam, A.; Suleyman, M.; Corrado, G.; King, D. Key Challenges for Delivering Clinical Impact with Artificial Intelligence. BMC Med. 2019, 17, 195. [Google Scholar] [CrossRef]

- Filkins, B.L.; Kim, J.Y.; Roberts, B.; Armstrong, W.; Miller, M.A.; Hultner, M.L.; Castillo, A.P.; Ducom, J.C.; Topol, E.J.; Steinhubl, S.R. Privacy and Security in the Era of Digital Health: What Should Translational Researchers Know and Do about It? Am. J. Transl. Res. 2016, 8, 1560. [Google Scholar]

- Hill, D.L.G. AI in Imaging: The Regulatory Landscape. Br. J. Radiol. 2024, 97, 483. [Google Scholar] [CrossRef]

- Quaranta, M.; Amantea, I.A.; Grosso, M. Obligation for AI Systems in Healthcare: Prepare for Trouble and Make It Double? Rev. Socionetwork Strateg. 2023, 17, 275–295. [Google Scholar] [CrossRef]

- van Leeuwen, K.G.; Schalekamp, S.; Rutten, M.J.C.M.; van Ginneken, B.; de Rooij, M. Artificial Intelligence in Radiology: 100 Commercially Available Products and Their Scientific Evidence. Eur. Radiol. 2021, 31, 3797. [Google Scholar] [CrossRef] [PubMed]

- Brady, A.P.; Allen, B.; Chong, J.; Kotter, E.; Kottler, N.; Mongan, J.; Oakden-Rayner, L.; Pinto dos Santos, D.; Tang, A.; Wald, C.; et al. Developing, Purchasing, Implementing and Monitoring AI Tools in Radiology: Practical Considerations. A Multi-Society Statement from the ACR, CAR, ESR, RANZCR & RSNA. J. Med. Imaging Radiat. Oncol. 2024, 68, 7–26. [Google Scholar] [CrossRef] [PubMed]

- Alis, D.; Tanyel, T.; Meltem, E.; Seker, M.E.; Seker, D.; Karakaş, H.M.; Karaarslan, E.; Öksüz, İ. Choosing the Right Artificial Intelligence Solutions for Your Radiology Department: Key Factors to Consider. Diagn. Interv. Radiol. 2024, 30, 357. [Google Scholar] [CrossRef]

- Huisman, M.; Hannink, G. The AI Generalization Gap: One Size Does Not Fit All. Radiol. Artif. Intell. 2023, 5, e230246. [Google Scholar] [CrossRef]

- Eche, T.; Schwartz, L.H.; Mokrane, F.Z.; Dercle, L. Toward Generalizability in the Deployment of Artificial Intelligence in Radiology: Role of Computation Stress Testing to Overcome Underspecification. Radiol. Artif. Intell. 2021, 3, e210097. [Google Scholar] [CrossRef]

- Giganti, F.; da Silva, N.M.; Yeung, M.; Davies, L.; Frary, A.; Rodriguez, M.F.; Sushentsev, N.; Ashley, N.; Andreou, A.; Bradley, A.; et al. AI-powered prostate cancer detection: A multi-centre, multi-scanner validation study. Eur. Radiol. 2025. [Google Scholar] [CrossRef]

- Rehman, M.H.U.; Hugo Lopez Pinaya, W.; Nachev, P.; Teo, J.T.; Ourselin, S.; Cardoso, M.J. Federated learning for medical imaging radiology. Br. J. Radiol. 2023, 96, 20220890. [Google Scholar] [CrossRef]

- Aouedi, O.; Sacco, A.; Piamrat, K.; Marchetto, G. Handling Privacy-Sensitive Medical Data With Federated Learning: Challenges and Future Directions. IEEE J. Biomed. Health Inform. 2023, 27, 790–803. [Google Scholar] [CrossRef] [PubMed]

- Young, A.T.; Fernandez, K.; Pfau, J.; Reddy, R.; Cao, N.A.; von Franque, M.Y.; Johal, A.; Wu, B.V.; Wu, R.R.; Chen, J.Y.; et al. Stress Testing Reveals Gaps in Clinic Readiness of Image-Based Diagnostic Artificial Intelligence Models. NPJ Digit. Med. 2021, 4, 10. [Google Scholar] [CrossRef]

- Lovejoy, C.A.; Arora, A.; Buch, V.; Dayan, I. Key considerations for the use of artificial intelligence in healthcare and clinical research. Future Healthc. J. 2022, 9, 75–78. [Google Scholar] [CrossRef] [PubMed]

- Theriault-Lauzier, P.; Cobin, D.; Tastet, O.; Langlais, E.L.; Taji, B.; Kang, G.; Chong, A.Y.; So, D.; Tang, A.; Gichoya, J.W.; et al. A Responsible Framework for Applying Artificial Intelligence on Medical Images and Signals at the Point of Care: The PACS-AI Platform. Can. J. Cardiol. 2024, 40, 1828–1840. [Google Scholar] [CrossRef]

- Sohn, J.H.; Chillakuru, Y.R.; Lee, S.; Lee, A.Y.; Kelil, T.; Hess, C.P.; Seo, Y.; Vu, T.; Joe, B.N. An Open-Source, Vender Agnostic Hardware and Software Pipeline for Integration of Artificial Intelligence in Radiology Workflow. J. Digit. Imaging 2020, 33, 1041–1046. [Google Scholar] [CrossRef] [PubMed]

- Arkoh, S.; Akudjedu, T.N.; Amedu, C.; Antwi, W.K.; Elshami, W.; Ohene-Botwe, B. Current Radiology Workforce Perspective on the Integration of Artificial Intelligence in Clinical Practice: A Systematic Review. J. Med. Imaging Radiat. Sci. 2025, 56, 101769. [Google Scholar] [CrossRef]

- Naik, N.; Hameed, B.M.Z.; Shetty, D.K.; Swain, D.; Shah, M.; Paul, R.; Aggarwal, K.; Brahim, S.; Patil, V.; Smriti, K.; et al. Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility? Front. Surg. 2022, 9, 862322. [Google Scholar] [CrossRef]

- Doherty, G.; McLaughlin, L.; Hughes, C.; McConnell, J.; Bond, R.; McFadden, S. A Scoping Review of Educational Programmes on Artificial Intelligence (AI) Available to Medical Imaging Staff. Radiography 2024, 30, 474–482. [Google Scholar] [CrossRef]

- Bouderhem, R. Shaping the future of AI in healthcare through ethics and governance. Humanit. Soc. Sci. Commun. 2024, 11, 416. [Google Scholar] [CrossRef]

- Zayas-Cabán, T.; Haque, S.N.; Kemper, N. Identifying Opportunities for Workflow Automation in Health Care: Lessons Learned from Other Industries. Appl. Clin. Inform. 2021, 12, 686. [Google Scholar] [CrossRef]

- Nair, M.; Svedberg, P.; Larsson, I.; Nygren, J.M. A Comprehensive Overview of Barriers and Strategies for AI Implementation in Healthcare: Mixed-Method Design. PLoS ONE 2024, 19, e0305949. [Google Scholar] [CrossRef] [PubMed]

- Petitgand, C.; Motulsky, A.; Denis, J.L.; Régis, C. Investigating the Barriers to Physician Adoption of an Artificial Intelligence- Based Decision Support System in Emergency Care: An Interpretative Qualitative Study. Stud. Health Technol. Inform. 2020, 270, 1001–1005. [Google Scholar] [CrossRef] [PubMed]

- Maleki Varnosfaderani, S.; Forouzanfar, M. The Role of AI in Hospitals and Clinics: Transforming Healthcare in the 21st Century. Bioengineering 2024, 11, 337. [Google Scholar] [CrossRef]

- Avanzo, M.; Stancanello, J.; Pirrone, G.; Drigo, A.; Retico, A. The Evolution of Artificial Intelligence in Medical Imaging: From Computer Science to Machine and Deep Learning. Cancers 2024, 16, 3702. [Google Scholar] [CrossRef]

- Al Qurri, A.; Almekkawy, M. Improved UNet with Attention for Medical Image Segmentation. Sensors 2023, 23, 8589. [Google Scholar] [CrossRef] [PubMed]

- Siddique, N.; Paheding, S.; Reyes Angulo, A.A.; Alom, M.Z.; Devabhaktuni, V.K. Fractal, recurrent, and dense U-Net architectures with EfficientNet encoder for medical image segmentation. J. Med. Imaging 2022, 9, 064004. [Google Scholar] [CrossRef]

- Kim, H.E.; Cosa-Linan, A.; Santhanam, N.; Jannesari, M.; Maros, M.E.; Ganslandt, T. Transfer learning for medical image classification: A literature review. BMC Med. Imaging 2022, 22, 69. [Google Scholar] [CrossRef]

- Kiryu, S.; Akai, H.; Yasaka, K.; Tajima, T.; Kunimatsu, A.; Yoshioka, N.; Akahane, M.; Abe, O.; Ohtomo, K. Clinical Impact of Deep Learning Reconstruction in MRI. Radiographics 2023, 43, e220133. [Google Scholar] [CrossRef]

- Lin, D.J.; Johnson, P.M.; Knoll, F.; Lui, Y.W. Artificial Intelligence for MR Image Reconstruction: An Overview for Clinicians. J Magn Reson Imaging. 2021, 53, 1015–1028. [Google Scholar] [CrossRef]

- Keshavarz, P.; Bagherieh, S.; Nabipoorashrafi, S.A.; Chalian, H.; Rahsepar, A.A.; Kim, G.H.J.; Hassani, C.; Raman, S.S.; Bedayat, A. ChatGPT in Radiology: A Systematic Review of Performance, Pitfalls, and Future Perspectives. Diagn. Interv. Imaging 2024, 105, 251–265. [Google Scholar] [CrossRef]

- Wang, W.T.; Tan, N.; Hanson, J.A.; Crubaugh, C.A.; Hara, A.K. Initial Experience with a COVID-19 Screening Chatbot Before Radiology Appointments. J. Digit. Imaging 2022, 35, 1303. [Google Scholar] [CrossRef] [PubMed]

- Al-Aiad, A.; Momani, A.K.; Alnsour, Y.; Alsharo, M. The impact of speech recognition systems on the productivity and the workflow in radiology departments: A systematic review. In Proceedings of the AMCIS 2020 TREOs, Salt Lake City, UT, USA, 10–14 August 2020. [Google Scholar]

- Srivastav, S.; Ranjit, M.; Pérez-García, F.; Bouzid, K.; Bannur, S.; Castro, D.C.; Schwaighofer, A.; Sharma, H.; Ilse, M.; Salvatelli, V.; et al. MAIRA at RRG24: A specialised large multimodal model for radiology report generation. In Proceedings of the 23rd Workshop on Biomedical Natural Language Processing, Bangkok, Thailand, 16 August 2024; Association for Computational Linguistics: Kerrville, TX, USA, 2024; pp. 597–602. [Google Scholar]

- Kim, S.H.; Wihl, J.; Schramm, S.; Berberich, C.; Rosenkranz, E.; Schmitzer, L.; Serguen, K.; Klenk, C.; Lenhart, N.; Zimmer, C.; et al. Human-AI collaboration in large language model-assisted brain MRI differential diagnosis: A usability study. Eur. Radiol. 2025. [Google Scholar] [CrossRef] [PubMed]

- Kwong, J.C.C.; Wang, S.C.Y.; Nickel, G.C.; Cacciamani, G.E.; Kvedar, J.C. The Long but Necessary Road to Responsible Use of Large Language Models in Healthcare Research. NPJ Digit. Med. 2024, 7, 177. [Google Scholar] [CrossRef]

- Cacciamani, G.E.; Chu, T.N.; Sanford, D.I.; Abreu, A.; Duddalwar, V.; Oberai, A.; Kuo, C.C.J.; Liu, X.; Denniston, A.K.; Vasey, B.; et al. PRISMA AI Reporting Guidelines for Systematic Reviews and Meta-Analyses on AI in Healthcare. Nat. Med. 2023, 29, 14–15. [Google Scholar] [CrossRef] [PubMed]

- Fawzy, N.A.; Tahir, M.J.; Saeed, A.; Ghosheh, M.J.; Alsheikh, T.; Ahmed, A.; Lee, K.Y.; Yousaf, Z. Incidence and Factors Associated with Burnout in Radiologists: A Systematic Review. Eur. J. Radiol. Open 2023, 11, 100530. [Google Scholar] [CrossRef]

- Liu, H.; Ding, N.; Li, X.; Chen, Y.; Sun, H.; Huang, Y.; Liu, C.; Ye, P.; Jin, Z.; Bao, H.; et al. Artificial Intelligence and Radiologist Burnout. JAMA Netw. Open 2024, 7, e2448714. [Google Scholar] [CrossRef]

- Wiggins, W.F.; Caton, M.T.; Magudia, K.; Glomski, S.A.; George, E.; Rosenthal, M.H.; Gaviola, G.C.; Andriole, K.P. Preparing Radiologists to Lead in the Era of Artificial Intelligence: Designing and Implementing a Focused Data Science Pathway for Senior Radiology Residents. Radiol. Artif. Intell. 2020, 2, e200057. [Google Scholar] [CrossRef]

- van Kooten, M.J.; Tan, C.O.; Hofmeijer, E.I.S.; van Ooijen, P.M.A.; Noordzij, W.; Lamers, M.J.; Kwee, T.C.; Vliegenthart, R.; Yakar, D. A Framework to Integrate Artificial Intelligence Training into Radiology Residency Programs: Preparing the Future Radiologist. Insights Imaging 2024, 15, 15. [Google Scholar] [CrossRef]

- Kim, B.; Romeijn, S.; van Buchem, M.; Mehrizi, M.H.R.; Grootjans, W. A Holistic Approach to Implementing Artificial Intelligence in Radiology. Insights Imaging 2024, 15, 22. [Google Scholar] [CrossRef]

- Filice, R.W.; Mongan, J.; Kohli, M.D. Evaluating Artificial Intelligence Systems to Guide Purchasing Decisions. J. Am. Coll. Radiol. 2020, 17, 1405–1409. [Google Scholar] [CrossRef]

- Topff, L.; Ranschaert, E.R.; Bartels-Rutten, A.; Negoita, A.; Menezes, R.; Beets-Tan, R.G.H.; Visser, J.J. Artificial Intelligence Tool for Detection and Worklist Prioritization Reduces Time to Diagnosis of Incidental Pulmonary Embolism at CT. Radiol. Cardiothorac. Imaging 2023, 5, e220163. [Google Scholar] [CrossRef] [PubMed]

- Baltruschat, I.; Steinmeister, L.; Nickisch, H.; Saalbach, A.; Grass, M.; Adam, G.; Knopp, T.; Ittrich, H. Smart Chest X-Ray Worklist Prioritization Using Artificial Intelligence: A Clinical Workflow Simulation. Eur. Radiol. 2021, 31, 3837–3845. [Google Scholar] [CrossRef] [PubMed]

| No. | Ref No. | Application and Primary Outcome Measure | Sample Size * | Region Studied | MRI Sequences Used | AI Technique Used | Key Results |

|---|---|---|---|---|---|---|---|

| 1 | [27] | Develop AI assistant to automatically segment breast tumors by capturing dynamic changes in multi-phase DCE-MRI with spatial-temporal framework | 2190 | Breast | DCE-MRI | UNet | DSC 92.6% internal + external testing set |

| 2 | [28] | Evaluate temporally and spatially resolved (4D) radiomics approach to distinguish benign from malignant enhancing breast lesions—avoiding unnecessary biopsies | 329 | Breast | DCE-MRI | Neural Network (PCA AI classifier) | AUC 80.6% (training dataset) + 83.5% (testing dataset) |

| 3 | [29] | Propose a SwinHR scheme for breast tumor segmentation on DCE-MRI, which integrates prior hemodynamic knowledge of DCE-MRI and Swin Transformer with RLK blocks to extract temporal and spatial information | 2246 | Breast | DCE-MRI | CNN | Dice 0.75–0.81 across different datasets |

| 4 | [30] | Develop image-based automatic deep learning method to classify CMR images by sequence type and imaging plane for improved clinical post-processing efficiency | 334 | Chest (Cardiac) | Cine-CMR, T1w/T2w/T2*w, Perfusion, LGE | CNN | Accuracy and F1-scores = 81.8% and 0.82 for SVT, 94.3% and 0.94 MVT on the hold-out test set |

| 5 | [31] | Evaluating AI tool in interpreting MR imaging to produce aortic root and valve measurements by comparing accuracy and efficiency with cardiologists | 35 | Chest (Cardiac) | Cine-CMR | CNN | ICC = 0.98 between AI assessment of aortic root and valve with cardiologists |

| 6 | [32] | Accelerate multiple b-value gas DW-MRI for lung morphometry using deep learning | 101 | Chest (Lungs) | DW-MRI | DC -RDN | Improved reconstruction acceleration factor of 4 |

| 7 | [33] | Develop and test a comprehensive deep learning-based segmentation system to improve automatic upper airway segmentation in static and dynamic MRI | 160 | Chest (Lungs) | Static/dynamic 2D + 3D MR | UNet | DSC 0.84 to 0.89 across different sequences |

| 8 | [34] | Implement a deep-learning approach for segmentation of liver tumors in magnetic resonance imaging using UNet++ | 105 | Abdomen (Liver) | T1w arterial phase, T2w | UNet ++ | DSC liver and tumor segmentations > 0.9 and >0.6 respectively |

| 9 | [35] | Develop and evaluate a DCNN for automated liver segmentation, volumetry, and radiomic feature extraction on contrast-enhanced portal venous MRI | 470 | Abdomen (Liver) | 3D T1w portal venous | DCNN | DSC internal + external + public testing set 0.93–0.97 |

| 10 | [36] | Train deep learning model to differentiate pathologically proven HCC and non-HCC lesions including lesions with atypical imaging features on MRI. | 118 | Abdomen (Liver) | Multi-phasic T1w | CNN | Sensitivities/specificities for HCC + non-HCC lesions = 92.7%/82.0% and 82.0%/92.7%; AOC = 0.912 |

| 11 | [37] | Explore the feasibility of deep learning (DL) features derived from gadoxetate disodium (Gd-EOB-DTPA) MRI, qualitative features, and clinical variables for predicting early recurrence. | 285 | Abdomen (Liver) | Contrast enhanced MRI | VGGNet-19 | DL vs. clinical nomogram AUC 0.91–0.95 vs. 0.72–0.75 for training and validation set |

| 12 | [38] | Investigate the utility of DL automated segmentation-based MRI radiomic features and clinical-radiological characteristics in predicting early recurrence after curative resection of single HCC | 434 | Abdomen (Liver) | T2w | UNet | AUC 0.743 vs. 0.55–0.64 in widely adopted BCLC and CNLC systems |

| 13 | [39] | Develop and validate a ML mortality risk quantification method for HCC patients using clinical data and liver radiomics on baseline MRI | 555 | Abdomen (Liver) | T1w breath-hold sequences before + after contrast | CNN | C-indices of 0.8503 and 0.8234 |

| 14 | [40] | Develop and evaluate a CNN based algorithm to evaluate hepatobiliary phase (HBP) adequacy of gadoxetate disodium (EOB)-enhanced MRI and reduce examination time | 484 | Abdomen (Liver) | EOB-enhanced MRI-HBP | CNN | Kappa 0.67–0.80 and AUC = 0.95–0.97 for internal and external test set |

| 15 | [41] | Develop AI model for prostate segmentation and PCa detection, and explore CAD compared to conventional PI-RADS assessment | 100 | Abdomen (Prostate) | Not specified | CNN | Prostate mean DSCs fine + coarse segmentation = 0.91 + 0.88. PCa diagnosis AI CAD > consistency in internal (kappa 1.00) + external (kappa 0.96) tests vs. radiologists (kappa 0.75) |

| 16 | [42] | Utilize a DL AI workflow for automated EPE grading from prostate T2W MRI, ADC map, and High B DWI. | 634 | Abdomen (Prostate) | T2W MRI, ADC map, and High B DWI | CNN | Sensitivity of 0.67, specificity of 0.73, accuracy of 0.72 |

| 17 | [43] | Study the effect of artificial intelligence (AI) on the diagnostic performance of radiologists in interpreting prostate mpMRI images of the PI-RADS category 3. | 87 | Abdomen (Prostate) | mpMRI | CNN | AI with radiologists => diagnostic specificity + accuracy (0.695 vs. 0.000 and 0.736 vs. 0.322, p < 0.001) |

| 18 | [44] | Assess PI-RADS-trained DL algorithm performance and investigate the effect of data size and prior knowledge on the detection of csPCa in biopsy-naïve men with a suspicion of PCa. | 2734 | Abdomen (Prostate) | mpMRI | UNet | DL sensitivity for detecting PI-RADS ≥ 4 lesions = 87%; AUC of 0.88. |

| 19 | [45] | To evaluate whether an AI-driven algorithm can detect clinically significant PCa (csPCa) in patients under AS | 56 | Abdomen (Prostate) | mpMRI | CNN | Patient sensitivity/specificity = 92.5%/31% detection of ISUP ≥ 1 and 96.4%/25% detection of ISUP ≥ 2 |

| 20 | [46] | Investigate image quality, efficiency, and diagnostic performance of a DLSB against BLADE for T2WI for GC | 112 | Abdomen (Stomach) | DLSB - T2w | CNN | W test 0.679–0.869 subjective IQS; ICC 0.597–0.670 measure SNR and CNR; Kappa 0.683–0.703 agreement cT stage |

| 21 | [47] | Develop, validate, and deploy deep learning for automated TKV measurement on T2WI MRI studies of ADPKD | 129 | Abdomen (Kidney) | T2w | UNet, EfficientNet | DSC > 0.97 + Bland–Altman mean percentage difference < 3.6%. |

| 22 | [48] | Analyzes potential cost-effectiveness of integrating an AI–assisted system into differentiation of incidental renal lesions as benign or malignant on MRI follow-up | NA | Abdomen (Kidney) | T2w and T1 post contrast | ResNet | 8.76 and 8.77 QALYs for MRI vs. MRI + AI strategy |

| 23 | [49] | Develop a DL technique for fully automated segmentation of multiple OARs on clinical abdominal MRI with high accuracy, reliability, and efficiency | 102 | Abdomen | T1-VIBE | U-Net | DSC 0.87–0.96 |

| 24 | [50] | Develop a DL model for automated detection and classification of lumbar central canal, lateral recess, and neural foraminal stenosis | 446 | Spine | Axial T2w, Sagittal T1w | CNN | Internal testing classification = kappa 0.89–0.95. External testing classification = kappa 0.95–0.96 |

| 25 | [51] | To assess the speed and interobserver agreement of radiologists for reporting lumbar spinal stenosis with and without DL assistance | 25 | Spine | Axial T2w, sagittal T1w | CNN | Kappa 0.71 + 0.70 (DL) vs. 0.39 + 0.39 (no DL) for DL-assisted general + in-training radiologists (p < 0.001) |

| 26 | [52] | To externally validate SpineNet predictions for disk degeneration (DD) using Pfirrmann classification and Modic changes (MCs) | 1331 | Spine | T2w | SpineNet (DCNN) | DD and MC accuracy = 78% and 86%. PG = Lin concordance correlation coefficient 0.86 and kappa 0.68 |

| 27 | [53] | To evaluate SpineNet (SN) ratings compared with those of an expert radiologist in analysis of degenerative features in MRI scans | 882 | Spine | Not specified | SpineNet (DCNN) | Kappa 0.63–0.77 for PG, kappa 0.07–60 for SL, kappa 0.17–0.57 for CCS |

| 28 | [54] | Evaluate a deep learning model for automated and interpretable classification of central canal stenosis, neural foraminal stenosis, and facet arthropathy from lumbar spine MRI | 200 | Spine | T2WI axial | V-Net, ResNet-50 | Dural sac + intervertebral disk = DSC 0.93 and 0.94. Localization foramen and facet = 0.72 and 0.83. CCS = kappa 0.54. Neural foraminal stenosis and facet arthropathy = AUC 0.92 and 0.93 |

| 29 | [55] | Develop and validate an AI-based model that automatically classifies lumbar central canal stenosis (LCCS) using sagittal T2w MRIs | 186 | Spine | Sagittal T2w MRI | CNN | Multiclass model = kappa 0.86 vs. 0.73–0.85 for readers. Binary model = AUC 0.98; sensitivity 93% + specificity 91% vs. 98–99% and 54–74% for readers |

| 30 | [56] | To compare the image quality and diagnostic performance between standard TSE MRI and accelerated MRI with deep learning (DL)-based image reconstruction for degenerative lumbar spine diseases | 50 | Spine | T1WI, T2WI | CNN | DL_coarse + DL_fine = significantly higher SNRs T1WI and T2WI, higher CNRs on T1WI, similar CNRs on T2WI |

| 31 | [57] | To develop a DL model to accurately detect ACL ruptures on MRI and evaluate effect on accuracy and efficiency of clinicians | 3800 MRIs | MSK | Not specified | CNN | AUC 0.987, sensitivity + specificity 95.1% |

| 32 | [58] | To analyze occupation ratio using a DL framework and study fatty infiltration of supraspinatus muscle using automated region-based Otsu thresholding technique for rotator cuff tears | 240 | MSK | T1WI fast spin echo | CNN | Mean DSC/accuracy/sensitivity/specificity/relative area difference segmented lesion = 0.97/99.84/96.89/99.92/0.07 |

| 33 | [59] | To establish an automated, multitask, MRI-based deep learning system for the detailed evaluation of supraspinatus tendon (SST) injuries | 3087 | MSK | Not specified | VGG16 | AUC of VGG16 = 0.92, 0.97–0.99 for RC-MTL classifiers. ICCs of the radiologists = 0.97–0.99 |

| 34 | [60] | Evaluate performance of automated reconstruction algorithm to reconstruct denoised images from undersampled MR images in patients with shoulder pain | 38 | MSK | TSE fat saturation, sagittal T2w TSE, coronal T1w TSE | CNN | Kappa 0.95; Improved SNR and CNR (p < 0.04) |

| 35 | [61] | Investigate feasibility of DL-MRI in shoulder imaging | 400 | MSK | PD, T2w | CNN | DL-MRI (Kendall W: 0.588~0.902); non-DL-MRI (Kendall W: 0751~0.865) |

| 36 | [62] | DL assessment of lower extremities in patients with lipedema or lymphedema. Develop pipeline for landmark detection methods and segmentation | 45 | MSK | 3D DIXON MR lymphangiography | EfficientNet-B1 UNet | Z-deviation (landmark) = 4.5 mm and segmentation DSC = 0.989 |

| 37 | [63] | To propose a deep neural network for diagnosis of MR Images of tumors of the hand in order to better define preoperative diagnosis and standardize surgical treatment | 221 | MSK | T1w axial/sagittal/coronal | DeepLabv3+ DCNN, TensorFlow | Average confidence level of 71.6% in segmentation of hand tumors |

| 38 | [64] | Develop an Anatomical Context-Encoding Network (ACEnet) to incorporate 3D spatial and anatomical contexts in 2D CNNs for efficient and accurate segmentation of brain structures from MRI | 30 | Brain | T1w | UNet | Skull stripping DSC > 0.976 |

| 39 | [65] | To verify reliability of volumes automatically segmented using AI-based application and evaluate changes in brain and CSF volume with healthy aging | 133 | Brain | 3D T1w | UNet | Mean ICC 0.986; 95% CI, 0.981–0.990 |

| 40 | [66] | To evaluate inference times of novel PSFNet that synergistically fuses SS (scan specific) and SG (scan general) priors for performant MRI reconstruction in low-data regimes | NA | Brain | Not specified | PSFNet | 3.1 dB higher PSNR, 2.8% higher SSIM, and 0.3 × lower RMSE vs. baselines |

| 41 | [67] | Rapid estimation of multiparametric T1, T2, PD, 3D-QALAS measurements using SSL | NA | Brain | T1w, T2w, PD, and inversion efficiency maps | SSL-based QALAS | RMSE dictionary matching and SSL-QALAS methods = 8.62, 8.75, 9.98, and 2.42% for T1, T2, PD, and IE sequences |

| 42 | [68] | Compare effect of head motion-induced artifacts on the consistency of MR segmentation performed by DL and non-DL methods | 110 | Brain | T1w | ReSeg, FastSurfer, Kwyk | DSC 0.91–0.96 for DL vs. 0.88–0.91 non-DL |

| 43 | [69] | Evaluate and compare both image quality and quantitative image-analysis consistency of 60% accelerated volumetric MR imaging sequences with SubtleMR with standard of care (SOC) | 40 | Brain | 3D T1w | SubtleMR CNN | Both FAST-DL and SOC statistically superior to FAST for all analyzed features (p < 0.001) |

| 44 | [70] | To demonstrate a 3D CNN that performs expert-level, automated meningioma segmentation and volume estimation on MRI | 10,099 | Brain | T1w | UNet | Tumor segmentation DSC = 85.2% vs. experts 80.0–90.3% |

| 45 | [71] | Examine efficacy of new approach to classification of brain tumor MRIs through VGG19 features extractor and PGGAN augmentation model | 233 | Brain | T1w CE | VGG19 + PGGAN | VGG19 + model multiplied dataset ~ 6-fold + 96.59% accuracy. PGGAN model > accuracy VGG19 + model by >1.96% |

| 46 | [72] | Investigate the potential of DL to differentiate active and inactive lesions in MS using non-contrast FLAIR-type MRI data | 130 | Brain | T1w FLAIR | Multiple CNNs | Accuracy 85%, sensitivity 95%, specificity 75%, AUC = 0.90. ResNet50 most effective |

| 47 | [73] | To propose a DL framework that combines multi-scale attention guidance and multi-branch feature processing modules to diagnose PD by learning sMRI T2w | 504 | Brain | T2w | DCNN | Accuracy 92%, sensitivity 94%, specificity 90% F1 score 95% for identifying PD and HC |

| 48 | [74] | To propose a fully automatic pipeline for measuring the magnetic resonance parkinsonism index (MRPI) using deep learning methods | 400 | Brain | T1w | nnUNet, HRNet | Inter-rater APE on external datasets = 11.31% |

| 49 | [75] | Automatically diagnose PD from HCs with segmentation using VB-net and radiomics brain features | 246 | Brain | T1w | 3D VB-net | AUCs 0.988 and 0.976 in training and testing set |

| 50 | [76] | Evaluate whether DLR improves the image quality of intracranial MRA at 1.5 T | 40 | Brain | Axial and coronal MIP images | AI Clear IQ Engine—DLR | SNR and CNR for basilar artery > in DLR images (p < 0.001) |

| 51 | [77] | Develop and validate intelligent hypoxic–ischemic encephalopathy (HIE) identification model | 186 (HIE) + 219 (HC) | Brain | NA | DLCRN | AUC 0.868/0.813/0.798 for training/internal/independent cohorts |

| 52 | [78] | DL model to predict 2-year neurodevelopmental outcomes in neonates with HIE using MRI | 414 | Brain | T1w, T2w, and diffusion tensor imaging | OPiNE (CNN) | AUC 0.74 and 63% accuracy in-distribution test set + AUC 0.77 and 78% accuracy out-of-distribution test set |

| 53 | [79] | Automatic segmentation-based method for 2D biometric measurements fetal brain on MRI | 268 | Brain | T2w SSFSE, (BSSFP), T1w and DWI | nnUNet | Correlation coefficients CBPD, TCD, LAD and RAD = 0.977, 0.990, 0.817, 0.719 |

| 54 | [80] | LVPA-UNet model based on 2D-3D architecture to improve GTV segmentation for radiotherapy in nasopharyngeal carcinoma | 1010 | ENT | T1w | LVPA-UNet | >DSC by 2.14%, precision by 2.96%, recall by 1.01%, reduction HD95 0.543 mm |

| 55 | [81] | Evaluate DL-CAD system on radiologists’ interpretation accuracy and efficiency in reading bi-parametric prostate magnetic resonance imaging scans | 100 | Abdomen (Prostate) | Not specified | DL-CAD | DL-CAD vs. radiologist = AUC 0.88 vs. 0.84 |

| 56 | [82] | Determine if AI systems for brain MRI could be used as a clinical decision support tool to augment radiologist performance | 390 | Brain | T1w, FLAIR, GRE, T1-post diffusion | CNNs + Bayesian network | Radiology resident performance > with ARIES for = TDx (55% vs. 30%; p < 0.001) and T3DDx (79% vs. 52%; p = 0.002) |

| 57 | [83] | To evaluate the learning progress of less experienced readers in prostate MRI segmentation—in preparation for datasets in AI development | 100 MRIs | Abdomen (Prostate) | T2w, DWI | RECOMIA | Using DSC > 0.8 as threshold, residents = 99.2%; novices = 47.5%/68.3%/84.0% with subsequent rounds of teaching on accurate segmentations |

| 58 | [84] | Efficacy of AI-generated radiology reports and contribution to the advancement of radiology workflow | 685 MRI reports | NA | NA | GPT-3.5-turbo | High scores comprehension (2.71 ± 0.73) + patient-friendliness (4.69 ± 0.48) (p < 0.001). 1.12% hallucinations and 7.40% potentially harmful translations |

| 59 | [85] | Evaluate intelligent automation software (Jazz) in assessing new, slowly expanding, and contrast-enhancing MS lesions | 117 MRIs | Neurology | T1w | Jazz | Kappa 0.5 |

| 60 | [86] | Evaluate protocol determination with CNN classifier based on short-text classification and comparing protocols determined by MSK radiologists | 6275 MRIs | MSK | NA | ConvNet | CNN and radiologist = Kappa 0.88. Routine protocols and tumor protocols = AUC 0.977 |

| 61 | [22] | Efficient approach for segmentation relevant for diagnosis and treatment of OSAS | 181 | ENT | T1w, T2w TSE | UNet | DSC (test set) = 0.89, 0.87, 0.79 (tongue, pharynx, soft palate) |

| 62 | [87] | Develop an efficient preoperative MRI radiomics evaluation approach of ALN status | 1088 | Breast | T2w, DWI-ADC, T1 + contrast | ML random forest algorithm | ALN-tumor radiomic signature AUC = 0.87–0.88 in training, external, prospective-retrospective validation cohort |

| 63 | [20] | Investigate automatically identifying normal scans in ultrafast breast MRI with AI to increase efficiency and reduce workload | 438 | Breast | TWIST sequences | ResNet-34 | AUC 0.81 |

| 64 | [88] | Framework for reconstructing dynamic sequences of 2D CMR from undersampled data using CNNs | 5 | Chest (Cardiac) | 2D CMR | CNN | Preserves anatomical structures up to 11-fold under sampling |

| 65 | [89] | Fast and high-quality reconstruction of clinical accelerated multi-coil MR data by learning a VN using DL | 50 | MSK | 2D TSE | CNN | VN reconstructions > standard algorithms and acceleration factor 4 |

| 66 | [90] | Develop DL based automated OAR delineation method achieving reliable expert performance | 70 | Head and Neck | T1w | UNet | Average DSC 0.86 (0.73–0.97)—6% better than competing methods |

| No. | Relevant Study Finding(s) | Study Center Size | Reduced Scan Time | Automated Segmentation | Optimized Worklist Triage and Workflow | Time Savings and Workload Reduction | Decreased Reading Time | Overall Productivity Gain |

|---|---|---|---|---|---|---|---|---|

| 1 | Time for manual annotation reduced by factor of 20, while maintaining physician accuracy. | M | No | Yes | No | No | No | Gain |

| 2 | Fewer unnecessary biopsies of benign breast lesions by ~36.2%, p < 0.001 at the cost of up to 4.5% (n = 4) false negative low-risk cancers | S | No | Yes | No | No | No | Unclear—no dedicated reproducibility analysis of the automated lesion segmentation and feature extraction |

| 3 | Experimental evidence showed superiority to other available automated segmentation methods | M | No | Yes | No | No | No | Unclear—ablation studies used but no reasoning time or comparison to manual methods |

| 4 | Fully automated multivendor model that classifies cardiac MR images directly from scanner for post processing, validated on external data with high accuracy | M | No | No | Yes | No | No | Unclear—no reasoning time and only trained to recognize standard cardiac anatomy |

| 5 | AI delivered measurements in 2.6 s vs. mean of 334.5 s by cardiologists, without compromising accuracy. | S | No | No | No | Yes | No | Gain |

| 6 | Breath-holding reduced 17.8 s to 4.7 s; slice reconstruction within 7.2 ms | S | Yes | No | No | No | No | Gain |

| 7 | Segmentation time per subject/per slice for static 3D and dynamic 2D MRI less than 1.2 and 0.01 s respectively | S | No | Yes | No | No | No | Gain |

| 8 | Automated segmentation of liver and liver tumor saved >160 and >20 s, respectively, on average per case | S | No | Yes | No | No | No | Unclear—Despite time gains, DSC liver tumor segmentation < 0.7, raising concerns with accuracy |

| 9 | Total training time 6.35 h. Diverse training dataset of different hepatic etiologies. Mean run time of 0.52 s. | M | No | Yes | No | No | No | Gain |

| 10 | CNN accuracy of 87.3% and computational time of <3 ms | S | No | No | Yes | No | No | Gain |

| 11 | Proposed DL nomogram superior to clinical nomogram in predicting early recurrence for HCC patients post hepatectomy | M | No | No | Yes | No | No | Unclear—no reasoning time provided |

| 12 | DL-assisted automated segmentation using radiomic signatures allows better prediction of early HCC recurrence | S | No | Yes | No | No | No | Unclear—no reasoning time provided |

| 13 | 1.11 min risk prediction framework on average | S | No | No | Yes | No | No | Gain |

| 14 | 48% examinations achieved <20 min HPB delay normally applied | M | Yes | No | No | No | No | Gain |

| 15 | AI-first read (8.54 s) was faster than readers (92.72 s) and the concurrent-read method (29.15 s) | M | No | Yes | No | No | Yes | Gain |

| 16 | Improved accuracy compared against expert genitourinary radiologist | S | No | No | Yes | No | No | Unclear—no reasoning time; only compared with the performance of one expert radiologist |

| 17 | AI reduced reading time (mean: 351 s, p < 0.001) | M | No | No | No | No | Yes | Gain |

| 18 | DL sensitivity for the detection of Gleason > 6 lesions was 85% compared to 91% for a consensus panel of expert radiologists | M | No | No | No | No | Yes | Loss—no improvement in reasoning time shown, poor accuracy vs. expert unless high training case count met |

| 19 | Accurate automated AI-algorithm for prostate lesion detection and classification | S | No | No | Yes | No | No | Unclear—no reasoning time provided; adopting to clinical practice unclear |

| 20 | Vs. BLADE, DLSB reduced acquisition time of T2WI from 4 min 42 s/patient to 18 s/patient, with better overall image and comparable accuracy | S | Yes | No | No | No | No | Gain |

| 21 | 51% reduction in radiologist time for model-assisted segmentation | S | No | Yes | No | No | No | Gain |

| 22 | Additional use of AI-based algorithm may be cost-effective alternative in differentiation of incidental renal lesions on MRI | NA | No | Yes | No | No | No | Unclear—integration into clinical practice not clarified |

| 23 | Inference completed within 1 min for a three-dimensional volume of 320 × 288 × 180 | S | No | Yes | No | No | No | Gain |

| 24 | DL model detecting and classifying lumbar spinal stenosis at MRI comparable with radiologists for central canal and lateral recess stenosis | S | No | No | No | No | Yes | Unclear—improvements on interpretation time not discussed |

| 25 | DL-assisted radiologists = reduced interpretation time per spine MRI study. Mean of 124–274 s reduced to 47–71 s (p < 0.001) | S | No | No | No | No | Yes | Gain |

| 26 | 20.83% of disks rated differently by SpineNet compared to human raters, but only 0.85% of disks had a grade difference > 1 | S | No | No | No | No | Yes | Unclear—improvements on interpretation time not discussed |

| 27 | SpineNet recorded > pathological features in PG vs. radiologist | S | No | No | No | No | Yes | Unclear—improvements on interpretation time not discussed |

| 28 | Accurate automated grading lumbar spinal stenosis and facet arthropathy using axial sequences only | S | No | No | No | No | Yes | Unclear—improvements on interpretation time not discussed |

| 29 | Accurate AI-based lumbar central canal stenosis classification on sagittal MR images | S | No | No | No | No | Yes | Unclear—improvements on interpretation time not discussed, no external test set |

| 30 | Accelerated protocol reduced MRI acquisition time by 32.3% compared to standard protocol | S | Yes | No | No | No | No | Gain |

| 31 | AI assistance significantly improved accuracy (>96%) with reduction in diagnostic time observed (up to 9.7 s in expert or 24.5 s in trainee groups) | M | No | No | No | No | Yes | Gain |

| 32 | Analysis using freeware computer program that analyzed muscle atrophy and fatty infiltration MR images in <than 1 s | S | No | Yes | No | No | No | Gain |

| 33 | Automated multitask DL system in diagnosing SST injuries with comparable accuracy to experts | S | No | No | No | No | Yes | Unclear—improvements on interpretation time not discussed |

| 34 | Reconstruction performed on the scanner in ~80 s for CS DL reconstructions compared to approximately ~40 s for standard CS reconstructions | S | Yes | No | No | No | No | Loss |

| 35 | Scan time of DL-MRI (6 min 1 s) ~50% decreased compared with that of non-DL-MRI (11 min 25 s) | S | Yes | No | No | No | No | Gain |

| 36 | Prediction time/patient for first + second landmark detection = 2.7 s + 0.1 s. Mean prediction time/patient for tissue segmentation 8 s | S | No | Yes | No | No | No | Gain |

| 37 | Automated segmentation of hand tumors with 71.6% accuracy | S | No | Yes | No | No | No | Unclear—poor accuracy, improvements on segmentation time not discussed |

| 38 | Slight computation cost of 16.6% increase from baseline; MRI head scan segmentation within ~9 s | S | No | Yes | No | No | No | Gain |

| 39 | Brain and intracranial CSF volume ratios measured within 1 min | S | No | Yes | No | No | No | Gain |

| 40 | PSFNet required order of magnitude lower samples and faster inference vs. SG methods | S | Yes | No | No | No | No | Gain |

| 41 | Reconstruction multiparametric maps using pre-trained SSL-QALAS model < 10 s, no external dictionary required. Scan-specific tuning with target subject < 15 min | S | Yes | No | No | No | No | Gain |

| 42 | ReSeg DCNN inference time 11 s with >consistent segmentations | S | No | Yes | No | No | No | Gain |

| 43 | 60% reduction sequence scan time using FAST-DL—from 4 to 6 min 56 s to 2 min 40–44 s, with >quality | S | Yes | No | No | No | No | Gain |

| 44 | Reduced segmentation time by 99% (2 s/segmentation p < 0.001) + more accurate | S | No | Yes | No | No | No | Gain |

| 45 | Accurate DL model helping radiologists classify brain tumor types on MRI with less training data needed | S | No | No | No | Yes | No | Unclear—training time not compared, limited brain tumor types, inconsistent image sizes due to computational limits |

| 46 | Multiple CNNs allow accurate discerning active vs. inactive lesions without reliance on contrast agents on FLAIR images | M | No | No | No | No | Yes | Unclear—improvements on interpretation time not discussed |

| 47 | DL model can accurately distinguish PD and HC using T2w MRI | S | No | No | No | No | Yes | Unclear—improvements on interpretation time not discussed |

| 48 | Automated DL pipeline can accurately predict MRPI comparable with manual measurements | S | No | Yes | No | No | No | Unclear—limited training data, segmentation time not discussed |

| 49 | Automated segmentation of brain into 109 regions with distinguishing PD from HC in 1 min | S | No | No | No | No | Yes | Gain |

| 50 | DLR = higher quality 1.5 T intracranial MRA images with improved visualization of arteries | S | Yes | No | No | No | No | Loss—multifold increase in imaging time, benefits high image quality on disease detectability not detailed |

| 51 | DLCRN model allowing accurate identification of HIE in neonates using MRI | S | No | Yes | No | No | No | Unclear—improvement on segmentation time and clinical application not detailed |

| 52 | DL analysis neonatal brain MRI yielded high performance for predicting 2-year neurodevelopmental outcomes | M | No | Yes | No | No | No | Unclear—segmentation times and potential real time clinical application not discussed |

| 53 | Accurate deep segmentation network using 2D biometric measurements of fetal brain on MRI | S | No | Yes | No | No | No | Unclear—only normal fetal brains in training data, segmentation time vs. manual methods not detailed |

| 54 | Accurate GTV segmentation using T1w MRI for radiotherapy in nasopharyngeal carcinoma | M | No | Yes | No | No | No | Unclear—segmentation time not discussed |

| 55 | Median reading time reduced by 21% from 103 to 81 s (p < 0.001) | M | No | No | No | No | Yes | Gain |

| 56 | Improved diagnostic accuracy of radiology resident using ARIES support tool | S | No | No | No | Yes | No | Unclear—time gains or losses with support tool not discussed |

| 57 | Segmentation time of experienced resident 7 min 22 s—7 min 50 s per case | S | No | No | No | Yes | No | Gain |

| 58 | Good efficacy AI-generated radiology in terms of report summary accuracy and patient-friendliness | S | No | No | No | Yes | No | Unclear—artificial hallucinations and harmful translations might equate to more work to verify reports |

| 59 | Significantly more MS lesions detected vs. baseline. Reading time 2 min 33 s/case. | M | No | No | No | No | Yes | Gain |

| 60 | Training time approximately 10 min | S | No | No | Yes | No | No | Gain |

| 61 | Consistent with expert readings. Processing one dataset = ~2 s using modern GPU | S | No | Yes | No | No | No | Gain |

| 62 | Accurate signature that could be preoperatively used for identifying patients with ALN metastasis in early-stage invasive breast cancer | M | No | No | No | Yes | No | Unclear—potential time gains or losses with clinical application not discussed |

| 63 | Workload and scanning time reductions = 15.7% and 16.6% | S | Yes | No | No | Yes | No | Gain |

| 64 | Reconstruction < 10 s with each complete dynamic sequence and <23 ms for 2D case | S | Yes | No | No | No | No | Gain |

| 65 | Reconstruction time of 193 ms on single graphics card | S | Yes | No | No | No | No | Gain |

| 66 | Accurate DL framework for automated delineation of OARs in HN radiotherapy treatment planning | S | No | Yes | No | No | No | Unclear—segmentation time not discussed |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nair, A.; Ong, W.; Lee, A.; Leow, N.W.; Makmur, A.; Ting, Y.H.; Lee, Y.J.; Ong, S.J.; Tan, J.J.H.; Kumar, N.; et al. Enhancing Radiologist Productivity with Artificial Intelligence in Magnetic Resonance Imaging (MRI): A Narrative Review. Diagnostics 2025, 15, 1146. https://doi.org/10.3390/diagnostics15091146

Nair A, Ong W, Lee A, Leow NW, Makmur A, Ting YH, Lee YJ, Ong SJ, Tan JJH, Kumar N, et al. Enhancing Radiologist Productivity with Artificial Intelligence in Magnetic Resonance Imaging (MRI): A Narrative Review. Diagnostics. 2025; 15(9):1146. https://doi.org/10.3390/diagnostics15091146

Chicago/Turabian StyleNair, Arun, Wilson Ong, Aric Lee, Naomi Wenxin Leow, Andrew Makmur, Yong Han Ting, You Jun Lee, Shao Jin Ong, Jonathan Jiong Hao Tan, Naresh Kumar, and et al. 2025. "Enhancing Radiologist Productivity with Artificial Intelligence in Magnetic Resonance Imaging (MRI): A Narrative Review" Diagnostics 15, no. 9: 1146. https://doi.org/10.3390/diagnostics15091146

APA StyleNair, A., Ong, W., Lee, A., Leow, N. W., Makmur, A., Ting, Y. H., Lee, Y. J., Ong, S. J., Tan, J. J. H., Kumar, N., & Hallinan, J. T. P. D. (2025). Enhancing Radiologist Productivity with Artificial Intelligence in Magnetic Resonance Imaging (MRI): A Narrative Review. Diagnostics, 15(9), 1146. https://doi.org/10.3390/diagnostics15091146