Abstract

Background: Robot-assisted radical prostatectomy (RARP) is a common treatment option for prostate cancer. A 3D model for surgical guidance can improve surgical outcomes. Manual expert radiologist segmentation of the prostate and tumor in prostate MRI to create 3D models is labor-intensive and prone to inter-observer variability, highlighting the need for automated segmentation methods. Methods: This study evaluates the performance of the prostate and tumor segmentation using a commercially available AI tool without (fully automated) and with manual adjustment (AI-assisted) compared to manual segmentations on 120 patients, using several metrics, including Dice Coefficient and Hausdorff distance. Tumor detection rates were assessed with recall and precision. Results: For the prostate, both the fully automated AI model and AI-assisted model achieved a mean Dice score of 0.88, while AI-assisted had a lower Hausdorff distance (7.22 mm) compared to the fully automated (7.40 mm). For tumor segmentations, the Dice scores were 0.53 and 0.62, with Hausdorff distances of 9.53 mm and 6.62 mm obtained for fully automated AI and AI-assisted methods, respectively. The fully automated AI method had a recall of 0.74 and a precision of 0.76 in tumor detection, while the AI-assisted method achieved 0.95 recall and 0.94 precision. Fully automated segmentation required less than 1 min, while adjustments for the AI-assisted segmentation took an additional 81 s, and manual segmentation took approximately 15–30 min. Conclusions: The fully automated AI model shows promising results, offering high tumor detection rates and acceptable segmentation metrics. The AI-assisted strategy improved the relevant metrics with minimal additional time investment. Therefore, the AI-assisted segmentation method is promising for allowing 3D-model-guided surgery for all patients undergoing RARP.

1. Introduction

Prostate cancer is the most common cancer among men in Europe, and robot-assisted radical prostatectomy (RARP) is one of the common treatment options [1,2]. Successful radical prostatectomy is defined as a trifecta: the complete removal of the tumor while preserving continence and erectile function. Virtual or printed 3D models derived from prostate MRI have shown several useful applications for planning and guiding RARP in order to achieve this trifecta [3]. For example, 3D models can help with nerve-sparing planning to preserve continence and erectile function [4], guide the location of frozen sections during surgery to allow for secondary resections with negative surgical margins [5], and significantly reduce the incidence of positive surgical margins [6]. However, manual segmentations of the prostate and tumor tissue from the prostate MRI that are necessary for the generation of these 3D models are labor-intensive [7,8]. This highlights the need for automated segmentation methods. AI models have shown good results in prostate cancer detection, usually retrospectively [9]. One study showed superior results compared with 62 reads, although not superior to expert clinical practice reads [10]. These AI algorithms can generate the segmentations of the prostate and tumor needed to create 3D models. Although many AI algorithms have been developed and studied, only a few have become commercially available. One of these commercially available tools is the Prostate MR application on syngo.via (Siemens Healthineers, Forchheim, Germany), which can generate segmentations of both the tumor and prostate. Previous studies have primarily evaluated the diagnostic accuracy of AI algorithms for prostate lesion detection [11,12,13,14,15,16,17]. However, these studies did not evaluate the quality of the segmentation as expressed by factors such as volume, overlap, and center, which are essential for 3D-model-guided surgery.
In this study, we compare the relevant voxel-based, lesion-based, and volume-based metrics of the fully automated AI segmentations, without and with adjustments, against expert radiologist segmentations as a step towards AI-driven 3D-model-guided RARP.

2. Materials and Methods

2.1. Study Design

A retrospective cohort design was utilized to evaluate the performance of the Siemens AI tool for prostate and tumor segmentation compared to a manual segmentation performed by an expert radiologist. The study cohort consisted of 142 patients diagnosed with biopsy-proven localized prostate cancer, who were visiting the Netherlands Cancer Institute-Antoni van Leeuwenhoek Hospital (NKI-AVL) from February 2021 to October 2023. All included patients were scheduled for an RARP and had undergone a preoperative prostate MRI that showed at least one lesion with a Prostate Imaging Reporting and Data System (PI-RADS) score of 3–5. Patients were excluded if their MRI was older than six months prior to the RARP or if no nerve sparing was performed. The study was approved by the Institutional Review Board of the hospital (IRBd25-011) and adhered to the Declaration of Helsinki. Informed consent was obtained from all patients.

The MRI scans included in this study were acquired with various MRI machines from different manufacturers, including Philips Medical Systems, Siemens Healthineers, and GE Medical Systems; for details, see Appendix A, Table A1. Our center is part of a large prostate cancer network, ‘Prostate Cancer Network the Netherlands’, consisting of 13 centers with centralized robot-assisted radical prostatectomy performed only in our center. Also, we are a tertiary referral cancer center where second opinions are performed with MRI scans from the referring centers. Therefore, many different MRI machines were involved. All patients underwent T2-weighted MRI, apparent diffusion coefficient (ADC), and diffusion-weighted imaging (DWI) scans. For some of the patients, a dynamic contrast-enhanced (DCE) sequence was employed.

2.2. Methodology

2.2.1. Prostate and Tumor Segmentation Process

Manual Segmentation

The manual segmentations of the prostate and tumor were performed independently by a certified expert radiologist with over nine years of experience in reading prostate MRI scans. For the manual segmentation, the radiologist used the Vue PACS software v.12 (Philips Medical Systems, Best, the Netherlands). All segmentations were performed on the axial T2 sequence. For tumor detection and size estimation, the T2, DWI, and ADC sequences were used (and DCE if available). In cases where an anomaly was detected on one sequence, the others were reviewed to confirm the lesion’s presence and characteristics. Additional information such as biopsy results (usually) or PSMA PET scans (in some of the patients) was also available. The radiologist used this information to enhance the accuracy of the segmentation masks. In this study, the results of the manual segmentation served as the reference standard (ground truth) for evaluating the performance of the fully automated AI method and the AI-assisted method.

Fully Automated AI Segmentation

MRI scans were annotated using the Prostate MR reading workflow on syngo.via VB60 (Siemens Healthineers, Erlangen, Germany), which offers an AI-based fully automatic segmentation of the tumor and prostate [11,12,13,14,15,16,17]. To create these segmentations, the MRI scans were loaded into the software and automatically pre-processed using the MR prostate module. Only the axial T2 and the DWI images were used as input for the algorithm. Subsequently, all segmentations were accepted and exported via the biopsy support in an RT Struct format.

AI-Assisted Segmentation

After the radiologist had completed the manual segmentation and the AI tool had generated the automated segmentations, the radiologist adjusted the automated segmentations to create the AI-assisted segmentation. In this set of segmentations, the radiologist performed a thorough review and adjustment of the results obtained by the automated AI method. False positives identified by the automated AI method were removed after careful consideration. Conversely, any lesions that the radiologist identified as missed by the automated AI method were manually added to ensure all tumors were captured. For lesions confirmed to be true positives, the radiologist carefully examined the boundaries and made adjustments when deemed necessary. This process was not limited to basic edits; it also involved integrating expert knowledge and cross-referencing with other imaging modalities, such as ADC, DWI, and T2-weighted sequences, to verify the presence and characteristics of the lesions. Furthermore, the time required for these adjustments was systematically measured and recorded to evaluate the efficiency of the AI-assisted approach compared to fully manual segmentation.

2.2.2. Performance Evaluation

Both the fully automatic and the AI-assisted segmentations were evaluated against the reference standard of manual segmentations (ground truth) using various metrics to ensure a comprehensive performance assessment. The metrics included lesion-based metrics (lesion-based recall and precision), voxel-based metrics (Dice score, Hausdorff distance, Average Surface Distance, voxel-based recall and precision), and volume-based metrics (Absolute Volume Difference, Relative Volume Difference).

For all detected and annotated tumor lesions, recall and precision values were calculated to evaluate the model’s performance. Recall represents the proportion of actual positive lesions correctly identified by the model, while precision measures the proportion of positive predictions that were accurate. These metrics provide insight into the model’s ability to detect and correctly classify lesions.

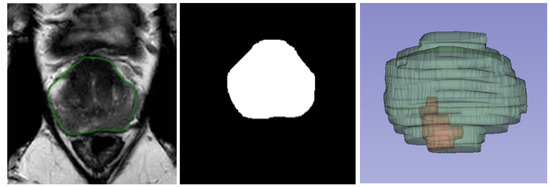

In addition to the lesion-based metrics, the segmentation performance was also assessed with the voxel-based and volume-based performance metrics. This involved calculating metrics for the prostate and all correctly detected tumor lesions classified as true positives. For each overlapping true positive lesion, a comprehensive set of metrics was applied, including the Dice Coefficient, Hausdorff distance, Average Surface Distance, Euclidean Distance of Centers, and volume-based calculations. These metrics quantified the spatial accuracy, boundary alignment, and volumetric consistency of the segmentations, enabling a detailed evaluation of the AI and AI-assisted approaches relative to the manual reference standard. This multi-level analysis, lesion-based, voxel-based, and volume-based, provided a robust framework for assessing the segmentation performance across varying levels of granularity, ensuring both clinical and technical relevance. A detailed description of all used evaluation metrics including the calculation formulas is included in Appendix A.2. For voxel-wise metric calculations, the manual boundary segmentations were converted into segmentation masks (Figure 1). In these masks, each voxel was assigned a value of 1 if it was classified as part of the segmented prostate or tumor and a value of 0 if it was categorized as background. This binary representation enabled a precise and standardized comparison across segmentation methods.

Figure 1.

From left to right: Boundary segmentation on T2-weighted MRI and segmentation mask, and 3D representation of prostate (in green) and tumor (in red).
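The binary masks described above are also the starting point for the volume-based metrics and the Euclidean Distance of Centers. The following is a minimal sketch, not the implementation used in this study: it assumes a mask stored as nested lists indexed `[z][y][x]`, a hypothetical voxel spacing in millimeters, and a Relative Volume Difference defined as (predicted − reference) / reference, which is an assumption because the formula is not given in this section.

```python
from math import dist


def mask_volume_ml(mask, spacing_mm):
    """Volume of a binary mask in milliliters (1 mL = 1000 mm^3)."""
    dz, dy, dx = spacing_mm
    n_voxels = sum(v for plane in mask for row in plane for v in row)
    return n_voxels * dz * dy * dx / 1000.0


def mask_center_mm(mask, spacing_mm):
    """Centroid of the foreground voxels in millimeters, as (z, y, x)."""
    dz, dy, dx = spacing_mm
    coords = [
        (z * dz, y * dy, x * dx)
        for z, plane in enumerate(mask)
        for y, row in enumerate(plane)
        for x, v in enumerate(row)
        if v == 1
    ]
    if not coords:
        raise ValueError("an empty mask has no center")
    return tuple(sum(c[i] for c in coords) / len(coords) for i in range(3))


# Hypothetical toy masks: one slice of 2 x 2 voxels, spacing (z, y, x) in mm.
spacing = (3.0, 0.5, 0.5)
reference = [[[1, 1], [1, 1]]]
predicted = [[[1, 1], [0, 0]]]

v_ref = mask_volume_ml(reference, spacing)
v_pred = mask_volume_ml(predicted, spacing)
abs_volume_diff = abs(v_pred - v_ref)
rel_volume_diff = (v_pred - v_ref) / v_ref  # assumed definition
center_distance = dist(mask_center_mm(predicted, spacing),
                       mask_center_mm(reference, spacing))
```

In this toy case the predicted mask covers half of the reference voxels, so the relative volume difference is −0.5 and the two centers are 0.25 mm apart.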

2.2.3. Data Analysis

Voxel-based and volume-based metrics were calculated only for lesions detected by the fully automated AI or AI-assisted models that overlapped with a lesion identified by the radiologist.

When multiple lesions detected by the automated AI or AI-assisted model overlapped with a single lesion in the manual segmentation, the union of these overlapping lesions was used for metric calculations. Similarly, if multiple manually annotated lesions overlapped with a single automated AI or AI-assisted lesion, the union of the overlapping manual segmentations was considered.
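The matching rule above can be sketched as a grouping of overlapping lesions. This is an illustrative sketch under stated assumptions, not the code used in the study: each lesion is represented as a set of `(z, y, x)` voxel coordinates, "overlap" means at least one shared voxel, and groups are formed as connected components of the overlap graph, which generalizes the two union cases described in the text. The function name `match_lesions` is hypothetical.

```python
def match_lesions(predicted, reference):
    """Pair overlapping lesions; each lesion is a set of (z, y, x) voxels.

    Returns (pairs, false_positives, false_negatives), where each pair is
    (union of predicted voxels, union of reference voxels).
    """
    # Undirected overlap graph: nodes are ("p", i) and ("r", j).
    neighbours = {("p", i): set() for i in range(len(predicted))}
    neighbours.update({("r", j): set() for j in range(len(reference))})
    for i, p in enumerate(predicted):
        for j, r in enumerate(reference):
            if p & r:  # at least one shared voxel
                neighbours[("p", i)].add(("r", j))
                neighbours[("r", j)].add(("p", i))

    pairs, false_pos, false_neg, seen = [], [], [], set()
    for start in neighbours:
        if start in seen:
            continue
        # Depth-first search collects one connected group of lesions.
        group, stack = [], [start]
        seen.add(start)
        while stack:
            node = stack.pop()
            group.append(node)
            for nxt in neighbours[node] - seen:
                seen.add(nxt)
                stack.append(nxt)
        pred_union = set().union(*(predicted[k] for s, k in group if s == "p"))
        ref_union = set().union(*(reference[k] for s, k in group if s == "r"))
        if pred_union and ref_union:
            pairs.append((pred_union, ref_union))  # true positive group
        elif pred_union:
            false_pos.append(pred_union)  # no reference lesion overlaps
        else:
            false_neg.append(ref_union)  # missed by the model
    return pairs, false_pos, false_neg


# Hypothetical example: two predicted lesions overlap one reference lesion.
predicted = [{(0, 0, 0), (0, 0, 1)}, {(0, 0, 3)}, {(0, 5, 5)}]
reference = [{(0, 0, 1), (0, 0, 2), (0, 0, 3)}, {(0, 9, 9)}]
pairs, false_pos, false_neg = match_lesions(predicted, reference)
```

Here the first two predicted lesions are merged and compared against the single reference lesion they overlap, the third predicted lesion counts as a false positive, and the second reference lesion counts as a false negative.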

In some instances, the radiologist segmented a single lesion into two separate parts, resulting in distinct segmentations. For lesions appearing very close to one another, the radiologist reviewed the segmentations to identify unintentional separations. If the separation was deemed unintentional, the two parts were combined and treated as a single lesion for analysis.

2.2.4. Statistical Tests

Descriptive statistics were employed to provide a comprehensive evaluation of the model’s performance across multiple metrics. For each metric—Dice Coefficient, Hausdorff distance, Average Surface Distance, recall, precision, Euclidean Distance of Centers, Volume Difference, and Relative Volume Difference—the mean, standard deviation, and median were calculated across all subjects (patients) to capture the overall performance trends.

The Shapiro–Wilk test was applied to assess the normality of data distributions. Based on the results, appropriate statistical tests were selected: either the Wilcoxon signed-rank test or the paired t-test was used for paired prostate datasets, while the unpaired t-test or the Mann–Whitney U test was employed for comparisons in unpaired lesion datasets, depending on the normality of the data.
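The test-selection logic described above might be sketched as follows with SciPy. This is a hedged illustration, not the study's analysis script: the significance level of 0.05, the rule that both samples must pass the Shapiro–Wilk test before a parametric test is chosen, and the function names are all assumptions.

```python
from scipy import stats

ALPHA = 0.05  # assumed threshold for the normality check


def is_normal(sample, alpha=ALPHA):
    """Shapiro-Wilk: normality is not rejected when p > alpha."""
    return stats.shapiro(sample).pvalue > alpha


def compare(a, b, paired, alpha=ALPHA):
    """Return (test name, p-value) for two samples of one metric."""
    parametric = is_normal(a, alpha) and is_normal(b, alpha)
    if paired:
        if parametric:
            return "paired t-test", stats.ttest_rel(a, b).pvalue
        return "Wilcoxon signed-rank", stats.wilcoxon(a, b).pvalue
    if parametric:
        return "unpaired t-test", stats.ttest_ind(a, b).pvalue
    result = stats.mannwhitneyu(a, b, alternative="two-sided")
    return "Mann-Whitney U", result.pvalue


# Hypothetical Dice scores for the same eight prostates under two methods.
auto_ai = [0.88, 0.90, 0.85, 0.91, 0.87, 0.89, 0.86, 0.92]
assisted = [0.89, 0.90, 0.87, 0.90, 0.88, 0.91, 0.85, 0.93]
name, p_value = compare(auto_ai, assisted, paired=True)
```

The paired branch corresponds to the prostate comparisons (one prostate per patient under both methods), while the unpaired branch corresponds to the lesion comparisons, where the sets of true positive lesions can differ between methods.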

To account for multiple comparisons across the eight metrics and control the false discovery rate, p-values were adjusted using the Benjamini–Yekutieli procedure. This ensured a rigorous and statistically robust analysis of the model’s performance [18].
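For illustration, the Benjamini–Yekutieli adjustment can be written in a few lines of plain Python. This is a minimal sketch of the standard procedure, not the study's own code, and the input p-values are hypothetical. Each sorted p-value is multiplied by m · c(m) / rank, where m is the number of tests and c(m) is the harmonic sum 1 + 1/2 + … + 1/m, and monotonicity is then enforced from the largest rank downward.

```python
def benjamini_yekutieli(p_values):
    """Return Benjamini-Yekutieli adjusted p-values in the input order."""
    m = len(p_values)
    c_m = sum(1.0 / k for k in range(1, m + 1))  # harmonic correction term
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, ascending p
    adjusted = [0.0] * m
    running_min = 1.0  # also caps adjusted values at 1
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m * c_m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical raw p-values for three metrics.
adjusted = benjamini_yekutieli([0.01, 0.04, 0.03])
```

Compared with the Benjamini–Hochberg procedure, the extra factor c(m) makes the adjustment more conservative but keeps it valid under arbitrary dependence between the tests, which is relevant here because the segmentation metrics are computed from the same masks.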

2.2.5. Clinical Subgroups

PI-RADS

To evaluate the system’s performance across varying clinical contexts, the patient cohort was stratified into clinical subgroups. Each lesion identified by the radiologist was assigned a PI-RADS score ranging from 1 to 5, where a score of 1 represents the lowest likelihood of clinically significant malignancy, and a score of 5 represents the highest likelihood [19]. Higher PI-RADS scores are associated with more visually prominent lesions on MRI scans, potentially making them easier for the AI tool to detect. Based on these scores, the cohort was divided into three subgroups: PI-RADS 3, 4, and 5. For each subgroup, lesion-based recall values were calculated to assess the system’s detection performance. Additionally, for all true positive lesions within each subgroup, the mean, standard deviation, and median were calculated for voxel-based and volume-based performance metrics.

Due to the small sample size in the PI-RADS 3 subgroup (n = 5 lesions), this group was excluded from further statistical analysis to avoid unreliable results. For the PI-RADS 4 and 5 subgroups, the normality of metric distributions was assessed using the Shapiro–Wilk test. Depending on the results, either the unpaired t-test or the Mann–Whitney U test was applied. To account for multiple comparisons and control the false discovery rate, p-values were adjusted using the Benjamini–Yekutieli procedure.

Prostate Zone

Given that prostate cancer predominantly arises in the peripheral zone (PZ) [20], the performance of the AI tool was also evaluated across different prostatic zones. Lesions were categorized into the PZ and transition zone (TZ). Only these two zones contained a sufficient number of lesions for meaningful analysis. Recall values were calculated for both zones to assess detection rates. As in the PI-RADS analysis, the Benjamini–Yekutieli procedure was used to adjust for multiple comparisons to correct for the false discovery rate.
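The stratified recall used in this section (per PI-RADS category or per prostatic zone) amounts to a grouped ratio of detected to total reference lesions. The sketch below uses hypothetical lesion records and field names (`pirads`, `zone`, `detected`); it is not derived from the study data.

```python
from collections import defaultdict


def recall_by_subgroup(lesions, key):
    """Lesion-based recall per subgroup: detected / total reference lesions."""
    counts = defaultdict(lambda: [0, 0])  # subgroup -> [detected, total]
    for lesion in lesions:
        group = lesion[key]
        counts[group][1] += 1
        if lesion["detected"]:
            counts[group][0] += 1
    return {g: detected / total for g, (detected, total) in counts.items()}


# Hypothetical reference lesions with a detection flag from the AI output.
lesions = [
    {"pirads": 4, "zone": "PZ", "detected": True},
    {"pirads": 4, "zone": "TZ", "detected": False},
    {"pirads": 5, "zone": "PZ", "detected": True},
    {"pirads": 5, "zone": "PZ", "detected": True},
]
recall_pirads = recall_by_subgroup(lesions, "pirads")
recall_zone = recall_by_subgroup(lesions, "zone")
```

Only recall is computed per subgroup because false positive predictions have no radiologist-assigned PI-RADS score or zone, so a per-subgroup precision is not defined in the same way.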

3. Results

A total of 142 patients were initially included in this study. Of these, all three segmentation methods (manual, fully automated, and AI-assisted) were available for 120 patients. For 22 patients, only the manual segmentation was available because the AI model was unable to annotate the prostate and lesion(s). Errors were caused by the following: split B series, no low b values, representation state series present, wrong T2 series chosen, and unknown causes. This resulted in a primary analysis of 120 patients for whom complete data were available.

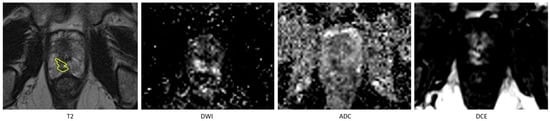

3.1. Tumor Detection

In the comparison between the radiologist and the fully automated AI method, a total of 175 lesions were manually annotated across the 120 included patients. The fully automated AI method achieved a recall of 0.74 and a precision of 0.76 in detecting these lesions. When comparing the radiologist’s segmentations to those of the AI-assisted model, the AI-assisted method demonstrated substantially improved performance, achieving a recall of 0.95 and a precision of 0.94. Detailed metrics are provided in Table 1. An example of a missed lesion (false negative) by the fully automated AI method is shown in Figure 2.

Table 1.

Lesion detection performance of the fully automated AI and AI-assisted models compared to 175 manually annotated lesions.

Figure 2.

An example of a missed lesion (false negative) by the fully automated AI method. The lesion annotated by the radiologist (in yellow) is a T2-hypointense area in the peripheral zone in the right apex, with corresponding high signal on the high-b-value DWI, low signal on the ADC, and early enhancement on the DCE.

3.2. Prostate and Tumor Segmentation

Among the voxel-based and volume-based metrics tested, only voxel-based recall in the radiologist-versus-automated AI tumor comparison and Relative Volume Difference in the radiologist-versus-AI-assisted prostate comparison exhibited normal distributions, with p-values of 0.13 and 0.12, respectively. All other metrics had p-values less than 0.05, indicating non-normal distributions.

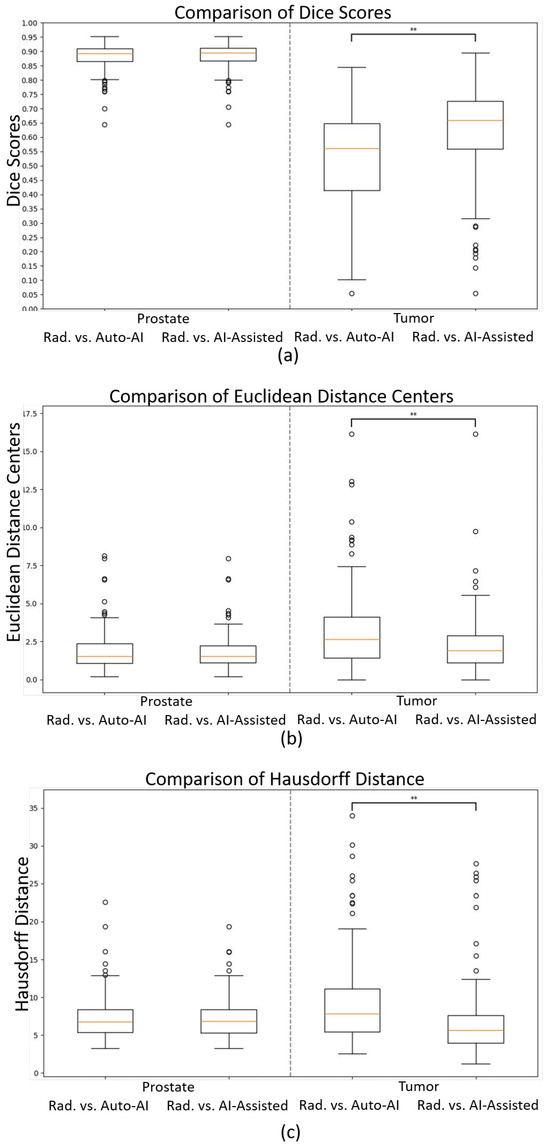

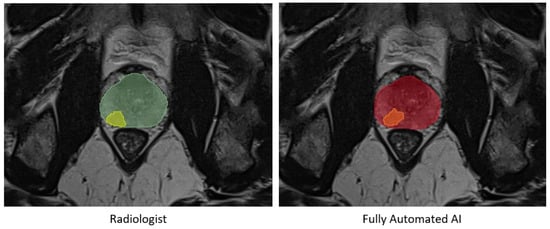

No statistically significant differences were observed in the prostate segmentation comparisons. However, in the tumor comparisons, the statistical tests on all metrics, except for precision, exhibited differences with a p-value less than 0.01 between the automated AI and AI-assisted models. Detailed results are presented in Figure 3 and Table 2 and Table 3. An example of prostate and tumor segmentation performed by a radiologist, compared to the fully automated AI method, is shown in Figure 4.

Figure 3.

Boxplots of (a) Dice scores, (b) Euclidean distances of the centers, and (c) Hausdorff distances comparing the radiologist (“Rad.”) vs. fully automated AI (“Auto-AI”) and AI-assisted models for prostate (left side) and tumor segmentations (right side). ** p < 0.01.

Table 2.

Comparison of segmentation performance metrics for prostate segmentations between the radiologist and the fully automated AI model versus the AI-assisted model, including mean, standard deviation (STD), median, and p-values for statistical significance.

Table 3.

Comparison of segmentation performance metrics for tumor segmentations between the radiologist and the fully automated AI model versus the AI-assisted model, including mean, standard deviation (STD), median, and p-values for statistical significance.

Figure 4.

An example of prostate segmentation and tumor segmentation by a radiologist on the left, compared to fully automated AI method output on the right. Green: Prostate segmentation by radiologist, Yellow: Tumor segmentation by radiologist, Red: Prostate segmentation by fully automated AI, Orange: Tumor segmentation by fully automated AI.

The mean time required for reviewing and adjusting the segmentations was 81 s, with a standard deviation of 65 s and a median of 69 s.

3.3. Clinical Subgroups

Of the 175 manually annotated lesions, 34 lesions were excluded from the subgroup analysis due to the unavailability of PI-RADS scores. An additional 31 lesions were excluded because the region of the lesion was not specified. Seven lesions were further excluded because they spanned multiple regions, making them unsuitable for per-region comparisons. Only one lesion was located in the anterior fibromuscular stroma (AFS); this lesion was excluded due to insufficient representation for statistical analysis.

The recall for tumor detection demonstrated an upward trend with increasing PI-RADS scores, indicating improved detection accuracy for lesions with higher malignancy likelihood. Furthermore, recall was notably higher for lesions located in the PZ compared to those in the TZ, suggesting that the system performed better in regions where prostate cancer is more commonly observed. Detailed results are provided in Table 4 and Table 5, highlighting the variations in detection performance across PI-RADS scores and prostate regions. These findings underscore the importance of lesion characteristics and anatomical context in evaluating AI-based segmentation performance.

Table 4.

Lesion detection performance metrics of the fully automatic approach by PI-RADS score, including the number of true positives, false negatives, total lesions, and recall values for each PI-RADS category.

Table 5.

Lesion detection performance metrics of the fully automatic approach by prostatic region, including the number of true positives, false negatives, total lesions, and recall values for lesions located in the peripheral zone (PZ) and transition zone (TZ).

The performance comparison across different subgroups for lesion segmentation is provided in Appendix A.3.

4. Discussion

In this study, we compared the relevant metrics of AI-based segmentations from commercially available software, without and with adjustments by an expert radiologist, against the radiologist’s manual segmentations as a step towards AI-driven 3D-model-guided RARP.

The prostate segmentation performance of this fully automated model was good with a Dice score of 0.88 and a Hausdorff distance of 7.4 mm. Adjustments to the AI segmentation were only rarely made by the radiologist. In a systematic review of deep learning models for prostate MRI segmentation, reported mean Dice results of 0.90 are very similar to those achieved by the AI software utilized in the present work [21]. Also, similar interobserver differences are found between radiologists (Dice 0.86 and 0.92) [22,23].

For the fully automated lesion segmentation, the metrics of the automated AI method were inferior to those of the prostate volume with a Dice score of 0.53 and a Hausdorff distance of 9.46 mm, reflecting the complexity of this task. Other automated lesion segmentation models have shown comparable results, with Dice scores of 0.53–0.63 [24,25,26,27], but these were internal validations, which generally give better results than external validations such as ours. The lesion segmentation metrics are also similar to the interobserver results reported between radiologists (Dice 0.53–0.55 and Hausdorff 11.02–11.60 mm) [24]. The AI-assisted method improved the relevant metrics: the Dice to 0.62 and the Hausdorff distance to 6.62 mm. While the segmentation performance of the AI model appears comparable to that of the radiologist in terms of descriptive statistics, our statistical analysis revealed important differences. Specifically, several metrics for tumor segmentation (including the Dice Coefficient, Hausdorff distance, Average Surface Distance, and recall) showed statistically significant differences (p < 0.05) between the fully automated AI and AI-assisted approaches, as presented in Table 3. These findings underscore that visual or numerical similarity in mean performance does not imply equivalence. Therefore, we emphasize that while the AI-based methods demonstrate promising results, they are not statistically identical to expert radiologist performance. This highlights the added value of AI-assisted approaches and the continued importance of expert oversight in clinical applications.

An AI-assisted method might improve the detection rates compared to a radiologist alone. Ten ‘false positive lesions’ convinced the radiologist that a lesion was present, which could be interpreted as false-negative findings by the radiologist. By subtracting these ten cases from the 41 false positives generated by the AI tool, the precision of the AI tool would increase from 0.76 to 0.81.
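The precision adjustment above can be checked with a short worked calculation. The true positive count is not stated in this section, so the value of roughly 130 used below is an assumption inferred from the reported recall of 0.74 on 175 reference lesions; the 41 false positives and the ten reclassified cases are taken from the text.

$$
\begin{aligned}
TP &\approx 0.74 \times 175 \approx 130 \\
\text{Precision}_{\text{reported}} &= \frac{TP}{TP + FP} = \frac{130}{130 + 41} \approx 0.76 \\
\text{Precision}_{\text{adjusted}} &= \frac{130}{130 + (41 - 10)} = \frac{130}{161} \approx 0.81
\end{aligned}
$$

Under this assumption, the reported increase from 0.76 to 0.81 corresponds to removing the ten cases from the false positives without adding them to the true positives.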

The fully automated method provides a fast method for generating segmentations, less than a minute. While manual segmentation requires approximately 15–30 min per patient for the radiologist, the AI-assisted approach reduces this time to an average of 81 s (in addition to the automated generation time).

The AI tool relied solely on T2 and DWI MRI images, whereas the radiologist, in certain cases, used additional information, such as biopsy results and PSMA-PET scans. This makes the AI results even more impressive but also highlights the human ability to integrate a broader range of data sources.

The fully automated method results demonstrate higher recall for lesions with higher PI-RADS scores, reflecting their greater visibility and likelihood of malignancy. Similarly, the better segmentation performance in the PZ compared to the TZ aligns with the higher prevalence and distinct characteristics of prostate cancer in the peripheral zone. These findings suggest that the AI model effectively prioritizes clinically significant lesions and regions, which is consistent with the clinical relevance of PI-RADS and prostate zonal anatomy.

This study is not without limitations. The AI segmentations were compared with a single expert radiologist. The results should be confirmed with multiple radiologists. Some of the prostate MRIs were not segmented by the algorithm and could therefore not be included in the analysis. Most of these errors (16 of 22) can be easily avoided by making sure the B-values of the DWI sequence are not split into separate series by the MRI machine when sent to the PACS. Furthermore, for optimal validation of AI software, 3D MRI models should also be compared with the ‘gold standard’, i.e., 3D pathology of the prostatectomy specimen. While this research offers a comprehensive assessment of the technical performance of the automated AI and AI-assisted models, it does not address the clinical impact of implementing the AI tool. For example, precise tumor segmentation may not be essential for creating a 3D model that is effective for surgical guidance. Annotation of the approximate location of the tumor may be sufficient to support surgical guidance. Additionally, using both biparametric (without DCE) and multiparametric MRI could have resulted in some variability in PI-RADS scores, because in the multiparametric MRI, the contrast enhancement may have caused some upgrades of the PI-RADS category. The retrospective nature of the study is also a limitation. Furthermore, a relatively small number of patients were included, especially considering the multiple variables investigated. Future studies should address these limitations.

Although the segmentations for 3D-model-guided RARP are important, ideally, more information should be provided by AI. For example, the segmentation of seminal vesicles, urethra, and bladder could improve surgical navigation. Furthermore, AI measurement/segmentation of the neurovascular bundle and membranous urethral length could improve estimated personalized incontinence and erectile dysfunction risk. Providing all these parameters could increase the benefit from AI for prostate MRI evaluation.

5. Conclusions

Although the fully automated prostate segmentation method is accurate, tumor detection still requires radiologist evaluation to enhance recall and precision and improve relevant performance metrics. Thus, an AI-assisted method presents a promising approach, while substantially reducing segmentation time. The AI-assisted segmentation method seems a feasible approach to facilitate 3D-model-guided RARP for all prostate cancer patients.

Author Contributions

Conceptualization, T.N.B. and P.J.v.L.; methodology, B.D., T.N.B. and L.d.B.; formal analysis, R.v.E., T.N.B. and B.D.; investigation, R.v.E., T.N.B., L.d.B. and S.H.v.d.G.; data curation, T.N.B., L.d.B. and S.H.v.d.G.; writing—original draft preparation, R.v.E.; writing—review and editing, T.N.B., L.d.B., L.S.M., P.J.v.L., S.H.v.d.G., H.G.v.d.P. and B.D.; visualization, R.v.E.; supervision, T.N.B. and B.D.; project administration, L.S.M., H.G.v.d.P. and P.J.v.L.; funding acquisition, T.N.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of The Netherlands Cancer Institute—Antoni van Leeuwenhoek hospital (IRBd25-011; 4 March 2025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available.

Acknowledgments

We thank Siemens Healthineers for providing their software and server access free of charge.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| 3D | Three-dimensional |
| ADC | Apparent diffusion coefficient |
| AI | Artificial intelligence |
| DCE | Dynamic contrast enhanced |
| DWI | Diffusion-weighted imaging |
| FN | False negative |
| FP | False positive |
| MRI | Magnetic resonance imaging |
| PI-RADS | Prostate Imaging Reporting and Data System |
| PSMA-PET | Prostate-specific membrane antigen positron emission tomography |
| PZ | Peripheral zone |
| RARP | Robot-assisted radical prostatectomy |
| STD | Standard deviation |
| TZ | Transition zone |

Appendix A

Appendix A.1. MRI Machine Models and Manufacturers

Table A1.

MRI machine models and manufacturers.

| Manufacturer | Model | Field strength (T) |
|---|---|---|
| Philips Medical Systems | Achieva dStream | 3 |
| Philips Medical Systems | Ingenia Elition X | 3 |
| Philips Medical Systems | Ingenia Elition S | 3 |
| Philips Medical Systems | Ingenia | 1.5 or 3 |
| Siemens Healthineers | MAGNETOM Vida | 3 |
| Siemens Healthineers | MAGNETOM Aera | 1.5 |
| Siemens Healthineers | MAGNETOM Verio | 3 |
| Siemens Healthineers | MAGNETOM Avanto fit | 1.5 |
| Siemens Healthineers | MAGNETOM Avanto DOT | 1.5 |
| Siemens Healthineers | MAGNETOM Skyra | 3 |
| Siemens Healthineers | MAGNETOM Sola | 1.5 |
| GE Medical Systems | Discovery MR750 | 3 |

Appendix A.2. Performance Evaluation Metrics

- Lesion-based Metrics

- Lesion-based Recall (Sensitivity):

This metric measures the model’s ability to detect all clinically relevant lesions. A higher recall indicates fewer missed lesions, which is crucial for comprehensive lesion detection. It can be calculated as

$$\text{Recall} = \frac{TP}{TP + FN}$$

where $TP$ represents the number of lesions detected by the fully automated AI/AI-assisted models that overlap with a lesion identified by the radiologist, and $FN$ represents the number of lesions identified by the radiologist that do not overlap with any lesions from the AI/AI-assisted model.

- Lesion-based Precision:

Precision evaluates the accuracy of the detected lesions. A high precision value ensures that most of the identified lesions are truly present, reducing false positives. It can be calculated as

$$\text{Precision} = \frac{TP}{TP + FP}$$

where $TP$ is the number of true positive lesions and $FP$ represents the number of lesions detected by the fully automated AI or AI-assisted models that do not overlap with any lesions identified by the radiologist.
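As a small illustration of the two lesion-based ratios, recall is the number of true positives divided by true positives plus false negatives, and precision is the number of true positives divided by true positives plus false positives. The sketch below uses hypothetical counts and function names; it does not reproduce the values in Table 1.

```python
def lesion_recall(tp, fn):
    """Fraction of reference lesions that the model detected."""
    if tp + fn == 0:
        raise ValueError("recall is undefined without reference lesions")
    return tp / (tp + fn)


def lesion_precision(tp, fp):
    """Fraction of model detections that match a reference lesion."""
    if tp + fp == 0:
        raise ValueError("precision is undefined without detections")
    return tp / (tp + fp)


# Hypothetical counts: 8 matched lesions, 2 missed, 2 spurious detections.
recall = lesion_recall(tp=8, fn=2)
precision = lesion_precision(tp=8, fp=2)
```

Both functions raise an error instead of returning 0 when the denominator is empty, so that a patient without lesions or without detections is not silently counted as a failure.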

- Voxel-based Metrics

- Dice Score:

To assess the spatial overlap of the segmented regions, the Dice score (DSC) was calculated [28]. The Dice Coefficient measures the overlap between the predicted and reference segmentations. It ranges from 0 to 1, where 1 indicates perfect agreement between the two segmentations, demonstrating accurate segmentation. The Dice score is calculated as

$$\text{DSC} = \frac{2\,|A \cap B|}{|A| + |B|}$$

where $|A|$ and $|B|$ represent the total number of voxels in the predicted and reference segmentation masks, respectively. $|A \cap B|$ represents the count of voxels overlapping in both segmentations.
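A minimal sketch of the Dice score follows, assuming each binary mask is represented as the set of `(z, y, x)` coordinates of its foreground voxels. Returning 1.0 for two empty masks is a convention chosen here, not something stated in the text.

```python
def dice_score(pred_voxels, ref_voxels):
    """Dice score: twice the overlap divided by the summed mask sizes."""
    total = len(pred_voxels) + len(ref_voxels)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2 * len(pred_voxels & ref_voxels) / total


# Hypothetical masks of three voxels each, sharing two voxels.
pred = {(0, 0, 0), (0, 0, 1), (0, 0, 2)}
ref = {(0, 0, 1), (0, 0, 2), (0, 0, 3)}
dsc = dice_score(pred, ref)  # 2 * 2 / (3 + 3)
```

Because the score depends only on voxel counts, a small lesion that is shifted by one or two voxels loses a large share of its overlap, which is one reason tumor Dice scores tend to be lower than prostate Dice scores.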

- Hausdorff Distance:

The Hausdorff distance captures the largest boundary discrepancy between the predicted and reference segmentations, highlighting areas with the greatest segmentation error [29]. A lower value signifies a better match. For every boundary point in one segmentation, the distance to the closest boundary point in the other segmentation is determined; the Hausdorff distance is the greatest of these distances, taken over both the predicted segmentation A and the reference segmentation B.

Hausdorff distance can be calculated as

$$HD(A, B) = \max\left\{ \sup_{a \in A} \inf_{b \in B} d(a, b),\; \sup_{b \in B} \inf_{a \in A} d(a, b) \right\}$$

where $d(a, b)$ represents the Euclidean distance between points $a$ and $b$. sup represents the supremum (least upper bound) and inf represents the infimum (greatest lower bound).

In this formula, $\inf_{b \in B} d(a, b)$ is the shortest distance from a point $a \in A$ to its closest point in B. Similarly, $\inf_{a \in A} d(a, b)$ is the shortest distance from a point $b \in B$ to its closest point in A. The terms $\sup_{a \in A} \inf_{b \in B} d(a, b)$ and $\sup_{b \in B} \inf_{a \in A} d(a, b)$ represent the largest of these shortest distances for points in A and B, respectively.

A smaller HD value indicates better alignment, reflecting fewer outliers or significant discrepancies, while a larger HD value highlights areas of substantial error. This metric is particularly useful for identifying extreme misalignments.
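For finite sets of boundary points, the supremum and infimum reduce to a maximum and a minimum, so the symmetric Hausdorff distance can be sketched with a brute-force search. This is a sketch under stated assumptions: boundary points are given as coordinate tuples in mm, the function names are hypothetical, and the quadratic-time search is chosen for clarity rather than speed.

```python
import math


def directed_hausdorff(a_points, b_points):
    """Largest distance from any point in A to its closest point in B."""
    return max(min(math.dist(a, b) for b in b_points) for a in a_points)


def hausdorff_distance(a_points, b_points):
    """Symmetric Hausdorff distance: the larger of the two directed distances."""
    return max(
        directed_hausdorff(a_points, b_points),
        directed_hausdorff(b_points, a_points),
    )


# Hypothetical boundary points (mm)
A = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
B = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
hd = hausdorff_distance(A, B)  # A->B gives 1.0, B->A gives 3.0
```

The example shows why both directions matter: the directed distance from A to B alone would miss the outlying point in B.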

- Average Surface Distance:

To assess the average alignment of the boundaries, the Average Surface Distance (ASD) was calculated. This metric provides insight into how closely the predicted and reference boundaries match, with smaller values signifying better alignment. The ASD is calculated as

$$ASD(P, Q) = \frac{1}{|P| + |Q|} \left( \sum_{p \in P} d(p, Q) + \sum_{q \in Q} d(q, P) \right)$$

where P and Q are the sets of boundary points in the predicted segmentation and reference segmentation, respectively. The terms $|P|$ and $|Q|$ represent the number of boundary points in the predicted and reference segmentations. The distance $d(p, Q)$ denotes the shortest Euclidean distance from a boundary point $p \in P$ to the closest point in Q, and $d(q, P)$ represents the shortest Euclidean distance from a boundary point $q \in Q$ to the closest point in P.

Unlike the Hausdorff distance, which captures the worst-case error, the ASD focuses on the overall alignment quality, offering a more generalized view of segmentation performance. This metric is useful for evaluating the smoothness and precision of boundary matches between the predicted and reference segmentations.
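The contrast with the Hausdorff distance can be made concrete with a short sketch. It assumes the symmetric form of the ASD in which the closest-point distances from both boundaries are pooled and divided by the total number of boundary points; the function name and the brute-force search are illustrative assumptions.

```python
import math


def average_surface_distance(p_points, q_points):
    """Symmetric average surface distance between two boundary point sets."""
    # Sum of closest-point distances from P to Q
    d_pq = sum(min(math.dist(p, q) for q in q_points) for p in p_points)
    # Sum of closest-point distances from Q to P
    d_qp = sum(min(math.dist(q, p) for p in p_points) for q in q_points)
    return (d_pq + d_qp) / (len(p_points) + len(q_points))


# Hypothetical boundary points (mm)
P = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
Q = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
asd = average_surface_distance(P, Q)  # (0 + 1 + 0 + 3) / 4
```

For these points the worst-case boundary error is 3 mm, whereas the average over all boundary points is 1 mm.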

- Centroid Euclidean Distance:

To assess how effectively the centers of the segmentation masks are aligned, the Euclidean distance between the centroids of the estimated masks and reference masks was calculated as

$$d(p, q) = \sqrt{(x_p - x_q)^2 + (y_p - y_q)^2 + (z_p - z_q)^2}$$

where $(x_p, y_p, z_p)$ represent the coordinates of center point p in 3D space, and $(x_q, y_q, z_q)$ represent the coordinates of point q in 3D space. A smaller distance indicates better localization accuracy.
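A minimal sketch of the centroid distance follows. It assumes masks are sets of voxel indices and that a hypothetical `spacing` argument converts index offsets to mm; neither detail is specified in the text.

```python
import math


def centroid(voxels):
    """Mean (x, y, z) index of a mask given as a set of voxel indices."""
    n = len(voxels)
    return tuple(sum(v[i] for v in voxels) / n for i in range(3))


def centroid_distance(pred, ref, spacing=(1.0, 1.0, 1.0)):
    """Euclidean distance between mask centroids, scaled by voxel spacing (mm)."""
    p, q = centroid(pred), centroid(ref)
    return math.sqrt(sum(((pc - qc) * s) ** 2 for pc, qc, s in zip(p, q, spacing)))


# Hypothetical example: centroids at (1, 0, 0) and (4, 4, 0)
pred = {(0, 0, 0), (2, 0, 0)}
ref = {(4, 4, 0)}
dist = centroid_distance(pred, ref)  # sqrt(3^2 + 4^2)
```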

- Voxel-based Recall and Precision:

To quantify the model’s ability to correctly classify all voxels that belong to the prostate or tumor, the voxel-based recall was calculated, ensuring that relevant voxels are included in the segmentation. Additionally, voxel-based precision evaluates how accurately the model identifies positive voxels; higher precision indicates fewer false positives within the segmentation. Voxel-based recall and precision are calculated as

$$\text{Recall}_{\text{voxel}} = \frac{TP}{TP + FN}, \qquad \text{Precision}_{\text{voxel}} = \frac{TP}{TP + FP}$$

where true positives (TPs) represent the voxels that the model correctly identifies as positive, false negatives (FNs) represent the positive voxels that the model misses, and false positives (FPs) represent the negative voxels that the model incorrectly identifies as positive.
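With masks stored as sets of voxel indices, the three voxel counts fall out of basic set operations. The sketch below is illustrative only; the function name and the set-based mask representation are assumptions.

```python
def voxel_recall_precision(pred, ref):
    """Voxel-based recall and precision for masks given as sets of voxel indices."""
    tp = len(pred & ref)  # voxels labeled positive in both masks
    fn = len(ref - pred)  # reference voxels the prediction missed
    fp = len(pred - ref)  # predicted voxels outside the reference
    recall = tp / (tp + fn) if (tp + fn) else float("nan")
    precision = tp / (tp + fp) if (tp + fp) else float("nan")
    return recall, precision


# Hypothetical example: TP = 2, FN = 1, FP = 2
pred = {(0, 0, 0), (0, 0, 1), (0, 1, 0), (1, 0, 0)}
ref = {(0, 0, 0), (0, 0, 1), (5, 5, 5)}
recall, precision = voxel_recall_precision(pred, ref)
```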

- Volume-based Metrics

To obtain a comprehensive understanding of the size of the annotated volumes, the volumes of the segmentations were calculated. Mathematically, the volume can be expressed as

$$V = N \times V_{\text{voxel}}$$

where $V$ represents the total volume of the segmentation, $N$ represents the number of voxels in the annotated region, and $V_{\text{voxel}}$ represents the volume of a single voxel.

The volume of a single voxel can be expressed as

$$V_{\text{voxel}} = \Delta x \times \Delta y \times \Delta z$$

where $\Delta x$, $\Delta y$, and $\Delta z$ represent the voxel size in mm in the x, y, and z directions, respectively.
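The volume calculation amounts to one multiplication plus a unit conversion. The sketch below assumes voxel spacing in mm and reports the result in cc (1 cc = 1000 mm³); the function name and the example spacing are hypothetical.

```python
def segmentation_volume_cc(n_voxels, spacing_mm):
    """Volume of a segmentation in cc from its voxel count and voxel spacing (mm)."""
    dx, dy, dz = spacing_mm
    voxel_volume_mm3 = dx * dy * dz           # volume of a single voxel
    return n_voxels * voxel_volume_mm3 / 1000.0  # 1 cc = 1000 mm^3


# Hypothetical example: 40,000 voxels of 0.5 x 0.5 x 3.0 mm
volume = segmentation_volume_cc(40_000, (0.5, 0.5, 3.0))
```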

- Absolute Volume Difference:

This metric quantifies the absolute difference in volume between the predicted and reference segmentations. A smaller value indicates closer volumetric consistency. The Absolute Volume Difference can be expressed as

$$\Delta V = \left| V_{\text{manual}} - V_{\text{AI}} \right|$$

where $\Delta V$ represents the difference in volume, $V_{\text{manual}}$ represents the volume of the manual segmentation, and $V_{\text{AI}}$ represents the volume of the AI tool segmentation or the AI-assisted segmentation.

- Relative Volume Difference:

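The Relative Volume Difference can be expressed as

$$RVD = \frac{\Delta V}{V_{\text{manual}}}$$

where $\Delta V$ is the Absolute Volume Difference and $V_{\text{manual}}$ is the volume of the manual (reference) segmentation. This expresses the Volume Difference as a proportion of the reference segmentation (multiplied by 100 to give a percentage), highlighting proportional size accuracy.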
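Both volume differences can be sketched together. The sketch assumes the relative difference is the absolute difference divided by the manual (reference) volume and is reported as a fraction, which matches the scale of the values in Tables A2 and A3; the function name is hypothetical.

```python
def volume_differences(v_manual, v_ai):
    """Absolute and relative volume difference.

    v_manual: volume of the manual (reference) segmentation
    v_ai:     volume of the AI or AI-assisted segmentation (same unit)
    Assumption: the relative difference is returned as a fraction of v_manual.
    """
    abs_diff = abs(v_manual - v_ai)
    rel_diff = abs_diff / v_manual
    return abs_diff, rel_diff


# Hypothetical example: 2.0 cc manual lesion vs. 1.5 cc AI lesion
abs_diff, rel_diff = volume_differences(2.0, 1.5)  # 0.5 cc, 0.25 (25%)
```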

Appendix A.3. Subgroup Analysis of Lesion Segmentation Performance

The PI-RADS 3 group, containing only 5 lesions, was excluded from further analysis due to the small sample size. Among the metrics tested, only recall in the PI-RADS 5 group exhibited a normal distribution, with a p-value of 0.40. Consequently, the Mann–Whitney U test was used to evaluate statistical differences between the subgroups.

In the comparison between PI-RADS 4 and PI-RADS 5 lesions, statistically significant differences were observed only for voxel-based precision and Absolute Volume Difference. No significant differences were found in any metrics between lesions located in the peripheral zone (PZ) and the transition zone (TZ). Detailed results are provided in Table A2 and Table A3.

Table A2.

Segmentation performance metrics for tumor comparison based on PI-RADS 4 and PI-RADS 5 scores, including mean, standard deviation, median, and p-values for statistical differences. * indicates a statistically significant difference.

| Metric | PI-RADS 4 Mean | PI-RADS 4 STD | PI-RADS 4 Median | PI-RADS 5 Mean | PI-RADS 5 STD | PI-RADS 5 Median | p-Value |
|---|---|---|---|---|---|---|---|
| Dice Coefficient | 0.54 | 0.16 | 0.57 | 0.53 | 0.17 | 0.55 | 1.00 |
| Hausdorff Distance [mm] | 9.46 | 6.51 | 7.24 | 9.88 | 5.67 | 7.88 | 1.00 |
| Average Surface Distance [mm] | 1.70 | 1.51 | 1.35 | 1.99 | 1.15 | 1.78 | 0.21 |
| Recall (voxel-based) | 0.55 | 0.21 | 0.53 | 0.44 | 0.20 | 0.46 | 0.20 |
| Precision (voxel-based) | 0.64 | 0.23 | 0.69 | 0.79 | 0.17 | 0.84 | * |
| Euclidean Distance Centres [mm] | 2.77 | 2.82 | 2.19 | 3.15 | 2.34 | 2.52 | 0.80 |
| Absolute Volume Difference [cc] | 1.28 | 4.71 | 0.32 | 2.17 | 6.00 | 1.01 | * |
| Relative Volume Difference | 0.80 | 1.61 | 0.41 | 0.47 | 0.32 | 0.44 | 1.00 |

Table A3.

Segmentation performance metrics for tumor comparison by prostatic region (peripheral zone and transition zone), including mean, standard deviation, median, and p-values for statistical differences.

| Metric | Peripheral Zone Mean | Peripheral Zone STD | Peripheral Zone Median | Transition Zone Mean | Transition Zone STD | Transition Zone Median | p-Value |
|---|---|---|---|---|---|---|---|
| Dice Coefficient | 0.52 | 0.16 | 0.56 | 0.50 | 0.22 | 0.61 | 1.00 |
| Hausdorff Distance [mm] | 9.13 | 5.18 | 7.42 | 14.62 | 9.16 | 14.26 | 0.64 |
| Average Surface Distance [mm] | 1.73 | 0.92 | 1.66 | 3.19 | 2.63 | 1.68 | 1.00 |
| Recall (voxel-based) | 0.48 | 0.20 | 0.51 | 0.43 | 0.20 | 0.48 | 1.00 |
| Precision (voxel-based) | 0.72 | 0.21 | 0.76 | 0.76 | 0.26 | 0.82 | 1.00 |
| Euclidean Distance Centres [mm] | 2.88 | 2.12 | 2.52 | 5.43 | 5.25 | 2.89 | 1.00 |
| Absolute Volume Difference [cc] | 0.90 | 1.01 | 0.56 | 8.73 | 15.26 | 1.49 | 0.66 |
| Relative Volume Difference | 0.64 | 1.32 | 0.41 | 0.92 | 1.63 | 0.44 | 1.00 |

References

1. Bray, F.; Laversanne, M.; Sung, H.; Ferlay, J.; Siegel, R.L.; Soerjomataram, I.; Jemal, A. Global cancer statistics 2022: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2024, 74, 229–263.

2. Cornford, P.; van den Bergh, R.C.; Briers, E.; Van den Broeck, T.; Brunckhorst, O.; Darraugh, J.; Eberli, D.; De Meerleer, G.; De Santis, M.; Farolfi, A.; et al. EAU-EANM-ESTRO-ESUR-ISUP-SIOG guidelines on prostate cancer—2024 update. Part I: Screening, diagnosis, and local treatment with curative intent. Eur. Urol. 2024, 86, 148–163.

3. Boellaard, T.N.; Hagens, M.J.; Veerman, H.; Yakar, D.; Mertens, L.S.; Heijmink, S.W.; van der Poel, H.G.; van Leeuwen, P.J.; Schoots, I.G.; van Dijk-de Haan, M.C. Prostate MRI for improving personalized risk prediction of incontinence and surgical planning: The role of membranous urethral length measurements and the use of 3D models. Life 2023, 13, 830.

4. Veerman, H.; Boellaard, T.N.; van der Eijk, J.A.; Sluijter, J.H.; Roeleveld, T.A.; van der Sluis, T.M.; Nieuwenhuijzen, J.A.; Wit, E.; van Alphen, M.J.; van Veen, R.L.; et al. Development and clinical applicability of MRI-based 3D prostate models in the planning of nerve-sparing robot-assisted radical prostatectomy. J. Robot. Surg. 2023, 17, 509–517.

5. Engesser, C.; Philipp, B.; Gahl, B.; Matthias, W.; Julian, G.; Seifert, H.; Subotic, S.; Rentsch, C.; Wetterauer, C.; Bubendorf, L.; et al. 3D-printed model for resection of positive surgical margins in robot-assisted prostatectomy. BJU Int. 2024, 135, 657–667.

6. Checcucci, E.; Pecoraro, A.; Amparore, D.; De Cillis, S.; Granato, S.; Volpi, G.; Sica, M.; Verri, P.; Piana, A.; Piazzolla, P.; et al. The impact of 3D models on positive surgical margins after robot-assisted radical prostatectomy. World J. Urol. 2022, 40, 2221–2229.

7. Shahedi, M.; Cool, D.W.; Romagnoli, C.; Bauman, G.S.; Bastian-Jordan, M.; Rodrigues, G.; Ahmad, B.; Lock, M.; Fenster, A.; Ward, A.D. Postediting prostate magnetic resonance imaging segmentation consistency and operator time using manual and computer-assisted segmentation: Multiobserver study. J. Med. Imaging 2016, 3, 046002.

8. Bleker, J.; Kwee, T.C.; Rouw, D.; Roest, C.; Borstlap, J.; de Jong, I.J.; Dierckx, R.A.; Huisman, H.; Yakar, D. A deep learning masked segmentation alternative to manual segmentation in biparametric MRI prostate cancer radiomics. Eur. Radiol. 2022, 32, 6526–6535.

9. Molière, S.; Hamzaoui, D.; Ploussard, G.; Mathieu, R.; Fiard, G.; Baboudjian, M.; Granger, B.; Roupret, M.; Delingette, H.; Renard-Penna, R. A Systematic Review of the Diagnostic Accuracy of Deep Learning Models for the Automatic Detection, Localization, and Characterization of Clinically Significant Prostate Cancer on Magnetic Resonance Imaging. Eur. Urol. Oncol. 2024; in press.

10. Saha, A.; Bosma, J.S.; Twilt, J.J.; van Ginneken, B.; Bjartell, A.; Padhani, A.R.; Bonekamp, D.; Villeirs, G.; Salomon, G.; Giannarini, G.; et al. Artificial intelligence and radiologists in prostate cancer detection on MRI (PI-CAI): An international, paired, non-inferiority, confirmatory study. Lancet Oncol. 2024, 25, 879–887.

11. Winkel, D.J.; Wetterauer, C.; Matthias, M.O.; Lou, B.; Shi, B.; Kamen, A.; Comaniciu, D.; Seifert, H.H.; Rentsch, C.A.; Boll, D.T. Autonomous detection and classification of PI-RADS lesions in an MRI screening population incorporating multicenter-labeled deep learning and biparametric imaging: Proof of concept. Diagnostics 2020, 10, 951.

12. Winkel, D.J.; Tong, A.; Lou, B.; Kamen, A.; Comaniciu, D.; Disselhorst, J.A.; Rodríguez-Ruiz, A.; Huisman, H.; Szolar, D.; Shabunin, I.; et al. A novel deep learning based computer-aided diagnosis system improves the accuracy and efficiency of radiologists in reading biparametric magnetic resonance images of the prostate: Results of a multireader, multicase study. Investig. Radiol. 2021, 56, 605–613.

13. Hu, L.; Fu, C.; Song, X.; Grimm, R.; Von Busch, H.; Benkert, T.; Kamen, A.; Lou, B.; Huisman, H.; Tong, A.; et al. Automated deep-learning system in the assessment of MRI-visible prostate cancer: Comparison of advanced zoomed diffusion-weighted imaging and conventional technique. Cancer Imaging 2023, 23, 6.

14. Youn, S.Y.; Choi, M.H.; Kim, D.H.; Lee, Y.J.; Huisman, H.; Johnson, E.; Penzkofer, T.; Shabunin, I.; Winkel, D.J.; Xing, P.; et al. Detection and PI-RADS classification of focal lesions in prostate MRI: Performance comparison between a deep learning-based algorithm (DLA) and radiologists with various levels of experience. Eur. J. Radiol. 2021, 142, 109894.

15. Labus, S.; Altmann, M.M.; Huisman, H.; Tong, A.; Penzkofer, T.; Choi, M.H.; Shabunin, I.; Winkel, D.J.; Xing, P.; Szolar, D.H.; et al. A concurrent, deep learning–based computer-aided detection system for prostate multiparametric MRI: A performance study involving experienced and less-experienced radiologists. Eur. Radiol. 2023, 33, 64–76.

16. Oerther, B.; Engel, H.; Nedelcu, A.; Schlett, C.L.; Grimm, R.; von Busch, H.; Sigle, A.; Gratzke, C.; Bamberg, F.; Benndorf, M. Prediction of upgrade to clinically significant prostate cancer in patients under active surveillance: Performance of a fully automated AI-algorithm for lesion detection and classification. Prostate 2023, 83, 871–878.

17. Thimansson, E.; Bengtsson, J.; Baubeta, E.; Engman, J.; Flondell-Sité, D.; Bjartell, A.; Zackrisson, S. Deep learning algorithm performs similarly to radiologists in the assessment of prostate volume on MRI. Eur. Radiol. 2023, 33, 2519–2528.

18. Benjamini, Y.; Yekutieli, D. The control of the false discovery rate in multiple testing under dependency. Ann. Stat. 2001, 29, 1165–1188.

19. Steenbergen, P.; Haustermans, K.; Lerut, E.; Oyen, R.; De Wever, L.; Van den Bergh, L.; Kerkmeijer, L.G.; Pameijer, F.A.; Veldhuis, W.B.; Pos, F.J.; et al. Prostate tumor delineation using multiparametric magnetic resonance imaging: Inter-observer variability and pathology validation. Radiother. Oncol. 2015, 115, 186–190.

20. McNeal, J.E.; Redwine, E.A.; Freiha, F.S.; Stamey, T.A. Zonal distribution of prostatic adenocarcinoma: Correlation with histologic pattern and direction of spread. Am. J. Surg. Pathol. 1988, 12, 897–906.

21. Fassia, M.K.; Balasubramanian, A.; Woo, S.; Vargas, H.A.; Hricak, H.; Konukoglu, E.; Becker, A.S. Deep learning prostate MRI segmentation accuracy and robustness: A systematic review. Radiol. Artif. Intell. 2024, 6, e230138.

22. Montagne, S.; Hamzaoui, D.; Allera, A.; Ezziane, M.; Luzurier, A.; Quint, R.; Kalai, M.; Ayache, N.; Delingette, H.; Renard-Penna, R. Challenge of prostate MRI segmentation on T2-weighted images: Inter-observer variability and impact of prostate morphology. Insights Imaging 2021, 12, 71.

23. Becker, A.S.; Chaitanya, K.; Schawkat, K.; Muehlematter, U.J.; Hötker, A.M.; Konukoglu, E.; Donati, O.F. Variability of manual segmentation of the prostate in axial T2-weighted MRI: A multi-reader study. Eur. J. Radiol. 2019, 121, 108716.

24. Jeganathan, T.; Salgues, E.; Schick, U.; Tissot, V.; Fournier, G.; Valéri, A.; Nguyen, T.A.; Bourbonne, V. Inter-Rater Variability of Prostate Lesion Segmentation on Multiparametric Prostate MRI. Biomedicines 2023, 11, 3309.

25. Jiang, M.; Yuan, B.; Kou, W.; Yan, W.; Marshall, H.; Yang, Q.; Syer, T.; Punwani, S.; Emberton, M.; Barratt, D.C.; et al. Prostate cancer segmentation from MRI by a multistream fusion encoder. Med. Phys. 2023, 50, 5489–5504.

26. Lai, C.C.; Wang, H.K.; Wang, F.N.; Peng, Y.C.; Lin, T.P.; Peng, H.H.; Shen, S.H. Autosegmentation of prostate zones and cancer regions from biparametric magnetic resonance images by using deep-learning-based neural networks. Sensors 2021, 21, 2709.

27. Chen, Y.; Xing, L.; Yu, L.; Bagshaw, H.P.; Buyyounouski, M.K.; Han, B. Automatic intraprostatic lesion segmentation in multiparametric magnetic resonance images with proposed multiple branch UNet. Med. Phys. 2020, 47, 6421–6429.

28. Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

29. Beauchemin, M.; Thomson, K.P.; Edwards, G. On the Hausdorff distance used for the evaluation of segmentation results. Can. J. Remote Sens. 1998, 24, 3–8.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).