Classification of the ICU Admission for COVID-19 Patients with Transfer Learning Models Using Chest X-Ray Images

Abstract

1. Introduction

- Compare CNN models pre-trained on natural images vs. X-rays to assess the impact of domain-specific pre-training;

- Introduce a dataset extension strategy, incorporating an external dataset (n = 417) with different yet clinically relevant labels;

- Evaluate model performance across different training strategies;

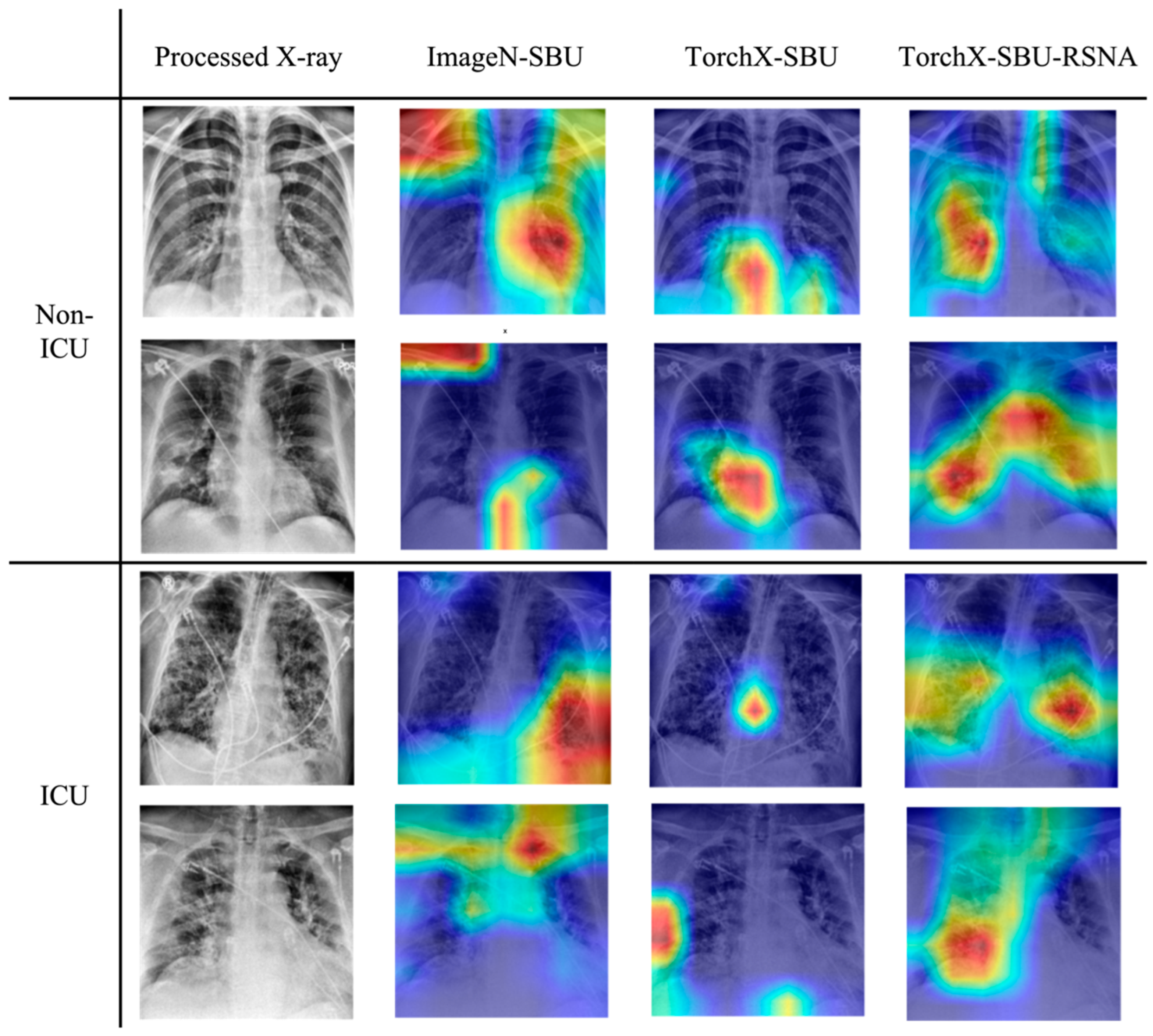

- Use Grad-CAM analysis to examine whether the models focus on lung regions that are physiologically meaningful for severe lung infections.
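The core Grad-CAM computation from Selvaraju et al. can be illustrated with a minimal sketch: each feature map is weighted by the spatial average of its gradient, the weighted maps are summed, and a ReLU keeps only the regions that support the target class. The function name `grad_cam` and the toy 2 x 2 inputs are assumptions made for illustration; in practice the activations and gradients would be taken from the last convolutional layer of the trained network, and this is not the authors' implementation.

```python
def grad_cam(activations, gradients):
    """Toy Grad-CAM on nested lists.

    activations, gradients: K feature maps, each an H x W list of lists.
    gradients[k][i][j] is d(class score) / d(activations[k][i][j]).
    Returns an H x W heatmap scaled to [0, 1].
    """
    height, width = len(activations[0]), len(activations[0][0])

    # Channel weights: global average of each channel's gradient map.
    weights = [
        sum(sum(row) for row in grad) / (height * width)
        for grad in gradients
    ]

    # Weighted sum of the feature maps.
    cam = [[0.0] * width for _ in range(height)]
    for alpha, fmap in zip(weights, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha * fmap[i][j]

    # ReLU: keep only locations with a positive influence on the class.
    cam = [[max(value, 0.0) for value in row] for row in cam]

    # Normalize to [0, 1] so the map can be overlaid on the image.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Hypothetical inputs: two 2 x 2 feature maps and their gradients.
toy_activations = [[[1, 0], [0, 0]], [[0, 0], [0, 1]]]
toy_gradients = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]
heatmap = grad_cam(toy_activations, toy_gradients)
print(heatmap)
```

In this toy case the second channel has a negative average gradient, so the location it activates is suppressed by the ReLU and only the top-left location remains highlighted.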

2. Materials and Methods

2.1. Datasets

2.1.1. Overview of the Datasets

2.1.2. COVID-19-NY-SBU

2.1.3. MIDRC-RICORD-1c

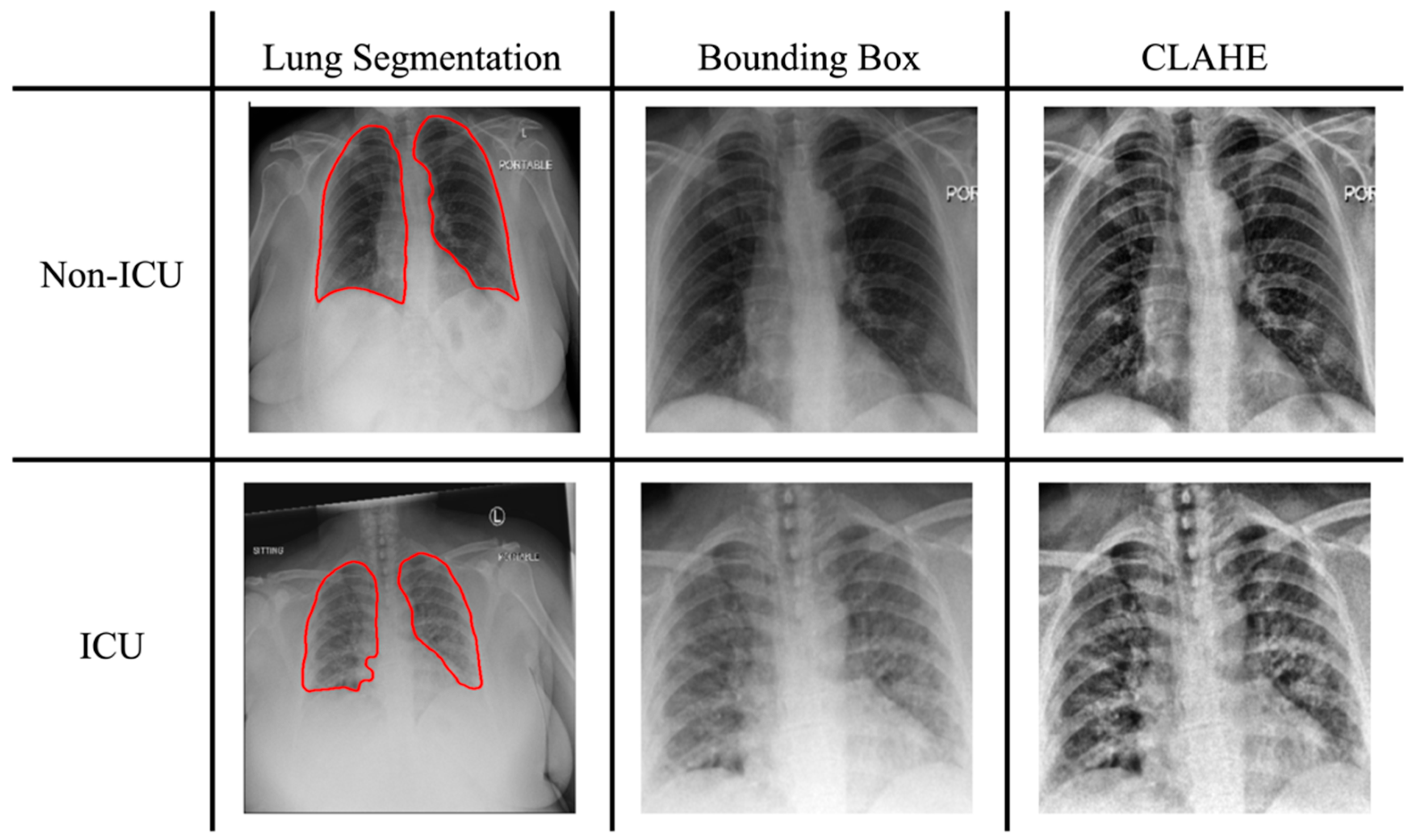

2.2. Image Preprocessing

2.2.1. Lung Segmentation

2.2.2. Oversampling and Augmentation

2.2.3. Histogram Equalization and Normalization

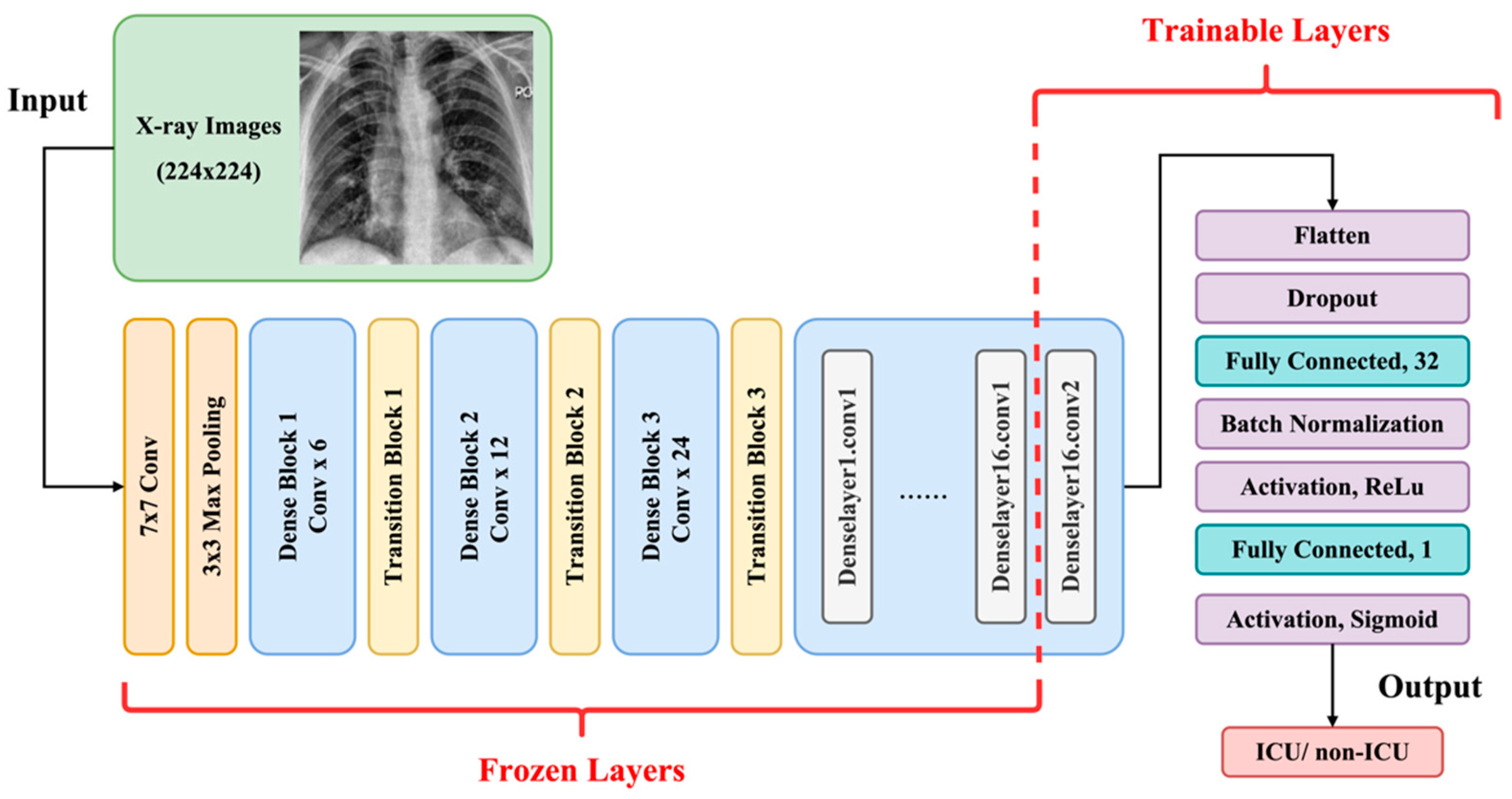

2.3. Transfer Learning and Model Architectures

2.4. Evaluation

2.5. Experiment

- ImageN-SBU: ImageNet pre-trained model re-trained with COVID-19-NY-SBU;

- TorchX-SBU: TorchXRayVision pre-trained model re-trained with COVID-19-NY-SBU;

- TorchX-SBU-RSNA: TorchXRayVision pre-trained model re-trained using an extended training dataset containing both the COVID-19-NY-SBU and the MIDRC-RICORD-1c data;

- ELIXR-SBU-RSNA: X-ray-pre-trained image encoder, with classification layers trained on both the COVID-19-NY-SBU and the MIDRC-RICORD-1c data.
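The four experimental settings listed above can be summarized as a small configuration table. The sketch below is only one possible way to organize them; the class name `Experiment` and its field names are hypothetical, and the values are restated from the list rather than taken from the authors' code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Experiment:
    name: str               # label used in the results tables
    pretraining: str        # source of the pre-trained weights
    train_datasets: tuple   # datasets used for training
    trained_part: str       # which part is trained, as described in the list


SBU = "COVID-19-NY-SBU"
RICORD = "MIDRC-RICORD-1c"

EXPERIMENTS = (
    Experiment("ImageN-SBU", "ImageNet", (SBU,), "model re-trained"),
    Experiment("TorchX-SBU", "TorchXRayVision", (SBU,), "model re-trained"),
    Experiment("TorchX-SBU-RSNA", "TorchXRayVision", (SBU, RICORD), "model re-trained"),
    Experiment("ELIXR-SBU-RSNA", "ELIXR image encoder", (SBU, RICORD), "classification layers"),
)

# Experiments that use the extended training set.
extended = [exp.name for exp in EXPERIMENTS if RICORD in exp.train_datasets]
print(extended)
```

Laid out this way, the first two settings isolate the effect of the pre-training domain, and the last two add the external training data.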

3. Results

3.1. Comparison of Performance Between Different Pre-Trained Models

3.2. Impact of Training Data Expansion on Model Performance

3.3. Grad-CAM Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- WHO COVID-19 Dashboard. Geneva: World Health Organization. Available online: https://covid19.who.int/ (accessed on 21 December 2024).

- El-Shabasy, R.M.; Nayel, M.A.; Taher, M.M.; Abdelmonem, R.; Shoueir, K.R.; Kenawy, E.R. Three waves changes, new variant strains, and vaccination effect against COVID-19 pandemic. Int. J. Biol. Macromol. 2022, 204, 161–168.

- COVID-19 Epidemiological Update—22 December 2023. World Health Organization. Available online: https://www.who.int/publications/m/item/covid-19-epidemiological-update---22-december-2023 (accessed on 21 December 2024).

- Cascella, M.; Rajnik, M.; Aleem, A.; Dulebohn, S.C.; Di Napoli, R. Features, Evaluation, and Treatment of Coronavirus (COVID-19). In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2023.

- Stasi, C.; Fallani, S.; Voller, F.; Silvestri, C. Treatment for COVID-19: An overview. Eur. J. Pharmacol. 2020, 889, 173644.

- Murakami, N.; Hayden, R.; Hills, T.; Al-Samkari, H.; Casey, J.; Del Sorbo, L.; Lawler, P.R.; Sise, M.E.; Leaf, D.E. Therapeutic advances in COVID-19. Nat. Rev. Nephrol. 2023, 19, 38–52.

- Dougan, M.; Azizad, M.; Mocherla, B.; Gottlieb, R.L.; Chen, P.; Hebert, C.; Perry, R.; Boscia, J.; Heller, B.; Morris, J.; et al. A Randomized, Placebo-Controlled Clinical Trial of Bamlanivimab and Etesevimab Together in High-Risk Ambulatory Patients with COVID-19 and Validation of the Prognostic Value of Persistently High Viral Load. Clin. Infect. Dis. 2022, 75, e440–e449.

- Gupta, A.; Gonzalez-Rojas, Y.; Juarez, E.; Crespo Casal, M.; Moya, J.; Rodrigues Falci, D.; Sarkis, E.; Solis, J.; Zheng, H.; Scott, N.; et al. Effect of Sotrovimab on Hospitalization or Death Among High-risk Patients with Mild to Moderate COVID-19: A Randomized Clinical Trial. JAMA 2022, 327, 1236–1246.

- Takashita, E.; Kinoshita, N.; Yamayoshi, S.; Sakai-Tagawa, Y.; Fujisaki, S.; Ito, M.; Iwatsuki-Horimoto, K.; Chiba, S.; Halfmann, P.; Nagai, H.; et al. Efficacy of Antibodies and Antiviral Drugs against COVID-19 Omicron Variant. N. Engl. J. Med. 2022, 386, 995–998.

- Gottlieb, R.L.; Vaca, C.E.; Paredes, R.; Mera, J.; Webb, B.J.; Perez, G.; Oguchi, G.; Ryan, P.; Nielsen, B.U.; Brown, M.; et al. Early Remdesivir to Prevent Progression to Severe COVID-19 in Outpatients. N. Engl. J. Med. 2022, 386, 305–315.

- Shiri, I.; Arabi, H.; Salimi, Y.; Sanaat, A.; Akhavanallaf, A.; Hajianfar, G.; Askari, D.; Moradi, S.; Mansouri, Z.; Pakbin, M.; et al. COLI-Net: Deep learning-assisted fully automated COVID-19 lung and infection pneumonia lesion detection and segmentation from chest computed tomography images. Int. J. Imaging Syst. Technol. 2022, 32, 12–25.

- Li, Z.; Zhao, S.; Chen, Y.; Luo, F.; Kang, Z.; Cai, S.; Zhao, W.; Liu, J.; Zhao, D.; Li, Y. A deep-learning-based framework for severity assessment of COVID-19 with CT images. Expert Syst. Appl. 2021, 185, 115616.

- Ho, T.T.; Park, J.; Kim, T.; Park, B.; Lee, J.; Kim, J.Y.; Kim, K.B.; Choi, S.; Kim, Y.H.; Lim, J.-K.; et al. Deep Learning Models for Predicting Severe Progression in COVID-19-Infected Patients: Retrospective Study. JMIR Med. Inform. 2021, 9, e24973.

- Chao, H.; Fang, X.; Zhang, J.; Homayounieh, F.; Arru, C.D.; Digumarthy, S.R.; Babaei, R.; Mobin, H.K.; Mohseni, I.; Saba, L.; et al. Integrative analysis for COVID-19 patient outcome prediction. Med. Image Anal. 2021, 67, 101844.

- Wang, R.; Jiao, Z.; Yang, L.; Choi, J.W.; Xiong, Z.; Halsey, K.; Tran, T.M.L.; Pan, I.; Collins, S.A.; Feng, X.; et al. Artificial intelligence for prediction of COVID-19 progression using CT imaging and clinical data. Eur. Radiol. 2022, 32, 205–212.

- Laino, M.E.; Ammirabile, A.; Lofino, L.; Lundon, D.J.; Chiti, A.; Francone, M.; Savevski, V. Prognostic findings for ICU admission in patients with COVID-19 pneumonia: Baseline and follow-up chest CT and the added value of artificial intelligence. Emerg. Radiol. 2022, 29, 243–262.

- Gülbay, M.; Baştuğ, A.; Özkan, E.; Öztürk, B.Y.; Mendi, B.A.R.; Bodur, H. Evaluation of the models generated from clinical features and deep learning-based segmentations: Can thoracic CT on admission help us to predict hospitalized COVID-19 patients who will require intensive care? BMC Med. Imaging 2022, 22, 110.

- Mary Shyni, H.; Chitra, E. A Comparative Study of X-Ray and CT Images in COVID-19 Detection Using Image Processing and Deep Learning Techniques. Comput. Methods Programs Biomed. Update 2022, 2, 100054.

- Willemink, M.J.; Koszek, W.A.; Hardell, C.; Wu, J.; Fleischmann, D.; Harvey, H.; Folio, L.R.; Summers, R.M.; Rubin, D.L.; Lungren, M.P. Preparing Medical Imaging Data for Machine Learning. Radiology 2020, 295, 4–15.

- Mei, X.; Liu, Z.; Robson, P.M.; Marinelli, B.; Huang, M.; Doshi, A.; Jacobi, A.; Cao, C.; Link, K.E.; Yang, T.; et al. RadImageNet: An Open Radiologic Deep Learning Research Dataset for Effective Transfer Learning. Radiol. Artif. Intell. 2022, 4, e210315.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2021, 109, 43–76.

- Alyasseri, Z.A.A.; Al-Betar, M.A.; Doush, I.A.; Awadallah, M.A.; Abasi, A.K.; Makhadmeh, S.N.; Alomari, O.A.; Abdulkareem, K.H.; Adam, A.; Damasevicius, R.; et al. Review on COVID-19 diagnosis models based on machine learning and deep learning approaches. Expert Syst. 2022, 39, e12759.

- Gomes, R.; Kamrowski, C.; Langlois, J.; Rozario, P.; Dircks, I.; Grottodden, K.; Martinez, M.; Tee, W.Z.; Sargeant, K.; Lafleur, C.; et al. A Comprehensive Review of Machine Learning Used to Combat COVID-19. Diagnostics 2022, 12, 1853.

- Minaee, S.; Kafieh, R.; Sonka, M.; Yazdani, S.; Jamalipour Soufi, G. Deep-COVID: Predicting COVID-19 from chest X-ray images using deep transfer learning. Med. Image Anal. 2020, 65, 101794.

- Apostolopoulos, I.D.; Mpesiana, T.A. COVID-19: Automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020, 43, 635–640.

- Hoang-Thi, T.N.; Tran, D.T.; Tran, H.D.; Tran, M.C.; Ton-Nu, T.M.; Trinh-Le, H.M.; Le-Huu, H.N.; Le-Thi, N.M.; Tran, C.T.; Le-Dong, N.N.; et al. Usefulness of Hospital Admission Chest X-ray Score for Predicting Mortality and ICU Admission in COVID-19 Patients. J. Clin. Med. 2022, 11, 3548.

- Schalekamp, S.; Huisman, M.; van Dijk, R.A.; Boomsma, M.F.; Freire Jorge, P.J.; de Boer, W.S.; Herder, G.J.M.; Bonarius, M.; Groot, O.A.; Jong, E.; et al. Model-based Prediction of Critical Illness in Hospitalized Patients with COVID-19. Radiology 2021, 298, E46–E54.

- Au-Yong, I.; Higashi, Y.; Giannotti, E.; Fogarty, A.; Morling, J.R.; Grainge, M.; Race, A.; Juurlink, I.; Simmonds, M.; Briggs, S.; et al. Chest Radiograph Scoring Alone or Combined with Other Risk Scores for Predicting Outcomes in COVID-19. Radiology 2022, 302, E11.

- Galloway, J.B.; Norton, S.; Barker, R.D.; Brookes, A.; Carey, I.; Clarke, B.D.; Jina, R.; Reid, C.; Russell, M.D.; Sneep, R.; et al. A clinical risk score to identify patients with COVID-19 at high risk of critical care admission or death: An observational cohort study. J. Infect. 2020, 81, 282–288.

- Chamberlin, J.H.; Aquino, G.; Nance, S.; Wortham, A.; Leaphart, N.; Paladugu, N.; Brady, S.; Baird, H.; Fiegel, M.; Fitzpatrick, L.; et al. Automated diagnosis and prognosis of COVID-19 pneumonia from initial ER chest X-rays using deep learning. BMC Infect. Dis. 2022, 22, 637.

- Li, M.D.; Arun, N.T.; Gidwani, M.; Chang, K.; Deng, F.; Little, B.P.; Mendoza, D.P.; Lang, M.; Lee, S.I.; O’Shea, A.; et al. Automated Assessment and Tracking of COVID-19 Pulmonary Disease Severity on Chest Radiographs using Convolutional Siamese Neural Networks. Radiol. Artif. Intell. 2020, 2, e200079.

- Shamout, F.E.; Shen, Y.; Wu, N.; Kaku, A.; Park, J.; Makino, T.; Jastrzebski, S.; Witowski, J.; Wang, D.; Zhang, B.; et al. An artificial intelligence system for predicting the deterioration of COVID-19 patients in the emergency department. npj Digit. Med. 2021, 4, 80.

- Li, H.; Drukker, K.; Hu, Q.; Whitney, H.M.; Fuhrman, J.D.; Giger, M.L. Predicting intensive care need for COVID-19 patients using deep learning on chest radiography. J. Med. Imaging 2023, 10, 044504.

- Saltz, J.; Saltz, M.; Prasanna, P.; Moffitt, R.; Hajagos, J.; Bremer, E.; Balsamo, J.; Kurc, T. Stony Brook University COVID-19 Positive Cases [Data set]. 2021. Available online: https://doi.org/10.7937/TCIA.BBAG-2923 (accessed on 27 June 2022).

- Tsai, E.B.; Simpson, S.; Lungren, M.P.; Hershman, M.; Roshkovan, L.; Colak, E.; Erickson, B.J.; Shih, G.; Stein, A.; Kalpathy-Cramer, J.; et al. The RSNA International COVID-19 Open Radiology Database (RICORD). Radiology 2021, 299, E204–E213.

- Sharma, R.; Kamra, A. A Review on CLAHE Based Enhancement Techniques. In Proceedings of the 2023 6th International Conference on Contemporary Computing and Informatics (IC3I), Gautam Buddha Nagar, India, 14–16 September 2023.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2012; Volume 1, pp. 1097–1105.

- Cohen, J.P.; Viviano, J.D.; Bertin, P.; Morrison, P.; Torabian, P.; Guarrera, M.; Lungren, M.P.; Chaudhari, A.; Brooks, R.; Hashir, M.; et al. TorchXRayVision: A library of chest X-ray datasets and models. arXiv 2021, arXiv:2111.00595.

- Kassani, S.H.; Kassani, P.H.; Wesolowski, M.J.; Schneider, K.A.; Deters, R. Automatic Detection of Coronavirus Disease (COVID-19) in X-ray and CT Images: A Machine Learning Based Approach. Biocybern. Biomed. Eng. 2021, 41, 867–879.

- Sellergren, A.B.; Chen, C.; Nabulsi, Z.; Li, Y.; Maschinot, A.; Sarna, A.; Huang, J.; Lau, C.; Kalidindi, S.R.; Etemadi, M.; et al. Simplified Transfer Learning for Chest Radiography Models Using Less Data. Radiology 2022, 305, 454–465.

- Xu, S.; Yang, L.; Kelly, C.; Sieniek, M.; Kohlberger, T.; Ma, M.; Weng, W.-H.; Kiraly, A.; Kazemzadeh, S.; Melamed, Z.; et al. ELIXR: Towards a general purpose X-ray artificial intelligence system through alignment of large language models and radiology vision encoders. arXiv 2023, arXiv:2308.01317.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Raghu, M.; Zhang, C.; Kleinberg, J.; Bengio, S. Transfusion: Understanding Transfer Learning for Medical Imaging. In Advances in Neural Information Processing Systems 32; Curran Associates Inc.: Red Hook, NY, USA, 2019.

- Niu, S.; Liu, M.; Liu, Y.; Wang, J.; Song, H. Distant Domain Transfer Learning for Medical Imaging. IEEE J. Biomed. Health Inform. 2021, 25, 3784–3793.

- Wen, Y.; Chen, L.; Deng, Y.; Zhou, C. Rethinking pre-training on medical imaging. J. Vis. Commun. Image Represent. 2021, 78, 103145.

- Islam, T.; Hafiz, S.M.; Jim, J.R.; Kabir, M.M.; Mridha, M.F. A systematic review of deep learning data augmentation in medical imaging: Recent advances and future research directions. Healthc. Anal. 2024, 5, 100340.

- Guan, C.S.; Lv, Z.B.; Yan, S.; Du, Y.N.; Chen, H.; Wei, L.G.; Xie, R.M.; Chen, B.D. Imaging Features of Coronavirus disease 2019 (COVID-19): Evaluation on Thin-Section CT. Acad. Radiol. 2020, 27, 609–613.

- Hosseiny, M.; Kooraki, S.; Gholamrezanezhad, A.; Reddy, S.; Myers, L. Radiology Perspective of Coronavirus Disease 2019 (COVID-19): Lessons From Severe Acute Respiratory Syndrome and Middle East Respiratory Syndrome. AJR Am. J. Roentgenol. 2020, 214, 1078–1082.

- Salehi, S.; Abedi, A.; Balakrishnan, S.; Gholamrezanezhad, A. Coronavirus Disease 2019 (COVID-19): A Systematic Review of Imaging Findings in 919 Patients. AJR Am. J. Roentgenol. 2020, 215, 87–93.

- Wong, H.Y.F.; Lam, H.Y.S.; Fong, A.H.; Leung, S.T.; Chin, T.W.; Lo, C.S.Y.; Lui, M.M.; Lee, J.C.Y.; Chiu, K.W.; Chung, T.W.; et al. Frequency and Distribution of Chest Radiographic Findings in Patients Positive for COVID-19. Radiology 2020, 296, E72–E78.

- Smith, D.L.; Grenier, J.P.; Batte, C.; Spieler, B. A Characteristic Chest Radiographic Pattern in the Setting of the COVID-19 Pandemic. Radiol. Cardiothorac. Imaging 2020, 2, e200280.

- Rod, J.E.; Oviedo-Trespalacios, O.; Cortes-Ramirez, J. A brief-review of the risk factors for COVID-19 severity. Rev. Saúde Pública 2020, 54, 60.

- Reddy, R.K.; Charles, W.N.; Sklavounos, A.; Dutt, A.; Seed, P.T.; Khajuria, A. The effect of smoking on COVID-19 severity: A systematic review and meta-analysis. J. Med. Virol. 2021, 93, 1045–1056.

- Kompaniyets, L.; Goodman, A.B.; Belay, B.; Freedman, D.S.; Sucosky, M.S.; Lange, S.J.; Gundlapalli, A.V.; Boehmer, T.K.; Blanck, H.M. Body Mass Index and Risk for COVID-19–Related Hospitalization, Intensive Care Unit Admission, Invasive Mechanical Ventilation, and Death—United States, March–December 2020. MMWR. Morb. Mortal. Wkly. Rep. 2021, 70, 355–361.

- Gimeno-Miguel, A.; Bliek-Bueno, K.; Poblador-Plou, B.; Carmona-Pírez, J.; Poncel-Falcó, A.; González-Rubio, F.; Ioakeim-Skoufa, I.; Pico-Soler, V.; Aza-Pascual-Salcedo, M.; Prados-Torres, A.; et al. Chronic diseases associated with increased likelihood of hospitalization and mortality in 68,913 COVID-19 confirmed cases in Spain: A population-based cohort study. PLoS ONE 2021, 16, e0259822.

- Bradley, S.A.; Banach, M.; Alvarado, N.; Smokovski, I.; Bhaskar, S.M.M. Prevalence and impact of diabetes in hospitalized COVID-19 patients: A systematic review and meta-analysis. J. Diabetes 2022, 14, 144–157.

- Corona, G.; Pizzocaro, A.; Vena, W.; Rastrelli, G.; Semeraro, F.; Isidori, A.M.; Pivonello, R.; Salonia, A.; Sforza, A.; Maggi, M. Diabetes is most important cause for mortality in COVID-19 hospitalized patients: Systematic review and meta-analysis. Rev. Endocr. Metab. Disord. 2021, 22, 275–296.

- Van Dam, P.M.E.L.; Zelis, N.; Van Kuijk, S.M.J.; Linkens, A.E.M.J.H.; Brüggemann, R.A.G.; Spaetgens, B.; Van Der Horst, I.C.C.; Stassen, P.M. Performance of prediction models for short-term outcome in COVID-19 patients in the emergency department: A retrospective study. Ann. Med. 2021, 53, 402–409.

- Wilfong, E.M.; Lovly, C.M.; Gillaspie, E.A.; Huang, L.-C.; Shyr, Y.; Casey, J.D.; Rini, B.I.; Semler, M.W. Severity of illness scores at presentation predict ICU admission and mortality in COVID-19. J. Emerg. Crit. Care Med. 2021, 5, 7.

- Mejía-Vilet, J.M.; Córdova-Sánchez, B.M.; Fernández-Camargo, D.A.; Méndez-Pérez, R.A.; Morales-Buenrostro, L.E.; Hernández-Gilsoul, T. A Risk Score to Predict Admission to the Intensive Care Unit in Patients with COVID-19: The ABC-GOALS score. Salud Pública 2020, 63, 1–11.

- Izquierdo, J.L.; Ancochea, J.; Soriano, J.B. Clinical Characteristics and Prognostic Factors for Intensive Care Unit Admission of Patients with COVID-19: Retrospective Study Using Machine Learning and Natural Language Processing. J. Med. Internet Res. 2020, 22, e21801.

- Estiri, H.; Strasser, Z.H.; Murphy, S.N. Individualized prediction of COVID-19 adverse outcomes with MLHO. Sci. Rep. 2021, 11, 5322.

- Subudhi, S.; Verma, A.; Patel, A.B.; Hardin, C.C.; Khandekar, M.J.; Lee, H.; Mcevoy, D.; Stylianopoulos, T.; Munn, L.L.; Dutta, S.; et al. Comparing machine learning algorithms for predicting ICU admission and mortality in COVID-19. npj Digit. Med. 2021, 4, 87.

- Jimenez-Solem, E.; Petersen, T.S.; Hansen, C.; Hansen, C.; Lioma, C.; Igel, C.; Boomsma, W.; Krause, O.; Lorenzen, S.; Selvan, R.; et al. Developing and validating COVID-19 adverse outcome risk prediction models from a bi-national European cohort of 5594 patients. Sci. Rep. 2021, 11, 3246.

- Patel, D.; Kher, V.; Desai, B.; Lei, X.; Cen, S.; Nanda, N.; Gholamrezanezhad, A.; Duddalwar, V.; Varghese, B.; Oberai, A.A. Machine learning based predictors for COVID-19 disease severity. Sci. Rep. 2021, 11, 4673.

- Nazir, A.; Ampadu, H.K. Interpretable deep learning for the prediction of ICU admission likelihood and mortality of COVID-19 patients. PeerJ Comput. Sci. 2022, 8, e889.

- Wang, X.; Che, Q.; Ji, X.; Meng, X.; Zhang, L.; Jia, R.; Lyu, H.; Bai, W.; Tan, L.; Gao, Y. Correlation between lung infection severity and clinical laboratory indicators in patients with COVID-19: A cross-sectional study based on machine learning. BMC Infect. Dis. 2021, 21, 192.

- Johnson, J.M.; Khoshgoftaar, T.M. Survey on deep learning with class imbalance. J. Big Data 2019, 6, 27.

- Buda, M.; Maki, A.; Mazurowski, M.A. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 2018, 106, 249–259.

- Krawczyk, B. Learning from imbalanced data: Open challenges and future directions. Prog. Artif. Intell. 2016, 5, 221–232.

- Kaur, A.; Dong, G. A Complete Review on Image Denoising Techniques for Medical Images. Neural Process. Lett. 2023, 55, 7807–7850.

- Motamed, S.; Rogalla, P.; Khalvati, F. Data augmentation using Generative Adversarial Networks (GANs) for GAN-based detection of Pneumonia and COVID-19 in chest X-ray images. Inform. Med. Unlocked 2021, 27, 100779.

| Sets | Label | NY-SBU | Augmented |
|---|---|---|---|
| Train | ICU | 172 | 403 |
| Train | Non-ICU | 403 | 403 |
| Validation | ICU | 43 | 101 |
| Validation | Non-ICU | 101 | 101 |
| Test | ICU | 54 | 54 |
| Test | Non-ICU | 126 | 126 |
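The class balancing reflected in the table above (172 ICU training images brought up to 403, matching the non-ICU count) can be sketched as random oversampling of the minority class. This is an illustrative sketch under stated assumptions, not the authors' pipeline: the function name `oversample_to_balance` and the placeholder image identifiers are hypothetical, and sampling with replacement stands in for whatever selection rule was actually used. In a real pipeline each duplicated image would typically also receive a random augmentation.

```python
import random


def oversample_to_balance(samples, labels, seed=0):
    """Randomly duplicate minority-class samples until every class
    is as large as the largest class."""
    rng = random.Random(seed)

    # Group samples by class label.
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)

    target = max(len(items) for items in by_class.values())

    out_samples, out_labels = [], []
    for label, items in by_class.items():
        # Draw the missing samples with replacement from the same class.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for sample in items + extra:
            out_samples.append(sample)
            out_labels.append(label)
    return out_samples, out_labels


# Training-set sizes from the table: 172 ICU and 403 non-ICU images.
samples = [f"icu_{i}" for i in range(172)] + [f"non_icu_{i}" for i in range(403)]
labels = ["ICU"] * 172 + ["Non-ICU"] * 403

balanced_samples, balanced_labels = oversample_to_balance(samples, labels)
print(balanced_labels.count("ICU"), balanced_labels.count("Non-ICU"))  # 403 403
```

Consistent with the table, only the training and validation sets would be balanced this way; the test set keeps its original 54 / 126 distribution.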

| Sets | Label | NY-SBU | RICORD | Total | Augmented |
|---|---|---|---|---|---|
| Train | ICU | 172 | 94 | 266 | 642 |
| Train | Non-ICU | 403 | 239 | 642 | 642 |
| Validation | ICU | 43 | 24 | 67 | 161 |
| Validation | Non-ICU | 101 | 60 | 161 | 161 |
| Test | ICU | 54 | - | 54 | 54 |
| Test | Non-ICU | 126 | - | 126 | 126 |
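The arithmetic behind the extended-dataset table can be checked with a short sketch: external images are added only to the training and validation splits, those splits are then balanced to the majority-class size, and the test split is left untouched. The function name `extend_and_balance` and the dictionary layout are hypothetical; the counts are taken from the table.

```python
def extend_and_balance(primary, external):
    """Pool per-class counts from two datasets, split by split.

    Training and validation splits are balanced to the majority-class
    size; the test split keeps its natural class distribution.
    """
    result = {}
    for split, counts in primary.items():
        extra = external.get(split, {})
        total = {label: n + extra.get(label, 0) for label, n in counts.items()}
        if split == "test":
            balanced = dict(total)
        else:
            target = max(total.values())
            balanced = {label: target for label in total}
        result[split] = {"total": total, "balanced": balanced}
    return result


ny_sbu = {
    "train": {"ICU": 172, "Non-ICU": 403},
    "validation": {"ICU": 43, "Non-ICU": 101},
    "test": {"ICU": 54, "Non-ICU": 126},
}
ricord = {
    "train": {"ICU": 94, "Non-ICU": 239},
    "validation": {"ICU": 24, "Non-ICU": 60},
    # No RICORD images in the test split.
}

summary = extend_and_balance(ny_sbu, ricord)
for split, info in summary.items():
    print(split, info["total"], info["balanced"])
```

Keeping the test split restricted to COVID-19-NY-SBU means the models trained with and without the external data are evaluated on the same 180 images.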

| Model | Precision | Recall | Specificity | Accuracy | Balanced Accuracy | AUC | PR AUC |
|---|---|---|---|---|---|---|---|
| ImageN-SBU | 43.6% | 55.6% | 67.9% | 64.2% | 61.7% | 0.657 | 0.426 |
| TorchX-SBU | 48.3% | 58.9% | 71.3% | 67.6% | 65.1% | 0.711 | 0.488 |
| TorchX-SBU-RSNA | 55% | 58% | 80% | 73.3% | 68.9% | 0.772 | 0.632 |
| ELIXR-SBU-RSNA | 55.8% | 62.2% | 77.5% | 72.9% | 69.8% | 0.777 | 0.629 |
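The threshold-based metrics in the table above follow from the four cells of a binary confusion matrix, with ICU admission as the positive class. The sketch below uses the standard definitions; the function name `classification_metrics` and the confusion counts are hypothetical (chosen only to match the 54 ICU / 126 non-ICU test set size) and do not reproduce any row of the table.

```python
def _ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts."""
    recall = _ratio(tp, tp + fn)            # sensitivity: ICU cases detected
    specificity = _ratio(tn, tn + fp)       # non-ICU cases correctly rejected
    return {
        "precision": _ratio(tp, tp + fp),
        "recall": recall,
        "specificity": specificity,
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        # Mean of the per-class recalls; insensitive to class imbalance.
        "balanced_accuracy": (recall + specificity) / 2,
    }


# Hypothetical counts for a test set of 54 ICU and 126 non-ICU images.
metrics = classification_metrics(tp=30, fp=36, tn=90, fn=24)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because only about 30% of the test images are ICU cases, accuracy is pulled toward specificity, whereas balanced accuracy weights both classes equally. For example, the TorchX-SBU-RSNA row gives (58% + 80%) / 2 = 69%, which agrees with the reported 68.9% up to rounding.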

| Study | Data | Model | Performance |
|---|---|---|---|
| Chamberlin et al. [30] | 2456 chest X-rays (50% COVID-negative) | dCNNs + logistic regression (chest X-ray pre-trained) | AUC = 0.870 |
| Li et al. [33] | 8357 chest X-rays (all COVID patients) | DenseNet121 (chest X-ray pre-trained) | AUC = 0.76 |
| Shamout et al. [32] | 6449 chest X-rays (all COVID patients) | COVID-GMIC * (ChestX-ray14 pre-trained) | X-ray only: AUC = 0.738, PR AUC = 0.439; X-ray + laboratory tests: AUC = 0.786, PR AUC = 0.517 |
| ImageN-SBU | COVID-19-NY-SBU (n = 899, all COVID patients) | DenseNet121 (ImageNet pre-trained) | AUC = 0.657, PR AUC = 0.426 |
| TorchX-SBU | COVID-19-NY-SBU (n = 899, all COVID patients) | DenseNet121 (TorchXRayVision pre-trained) | AUC = 0.711, PR AUC = 0.488 |
| TorchX-SBU-RSNA | COVID-19-NY-SBU and MIDRC-RICORD-1c (n = 1316, all COVID patients) | DenseNet121 (TorchXRayVision pre-trained) | AUC = 0.772, PR AUC = 0.632 |
| ELIXR-SBU-RSNA | COVID-19-NY-SBU and MIDRC-RICORD-1c (n = 1316, all COVID patients) | ELIXR (chest X-ray pre-trained) | AUC = 0.777, PR AUC = 0.629 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, Y.-C.; Fang, Y.-H.D. Classification of the ICU Admission for COVID-19 Patients with Transfer Learning Models Using Chest X-Ray Images. Diagnostics 2025, 15, 845. https://doi.org/10.3390/diagnostics15070845
