Diabetic Foot Ulcers Detection Model Using a Hybrid Convolutional Neural Networks–Vision Transformers

Abstract

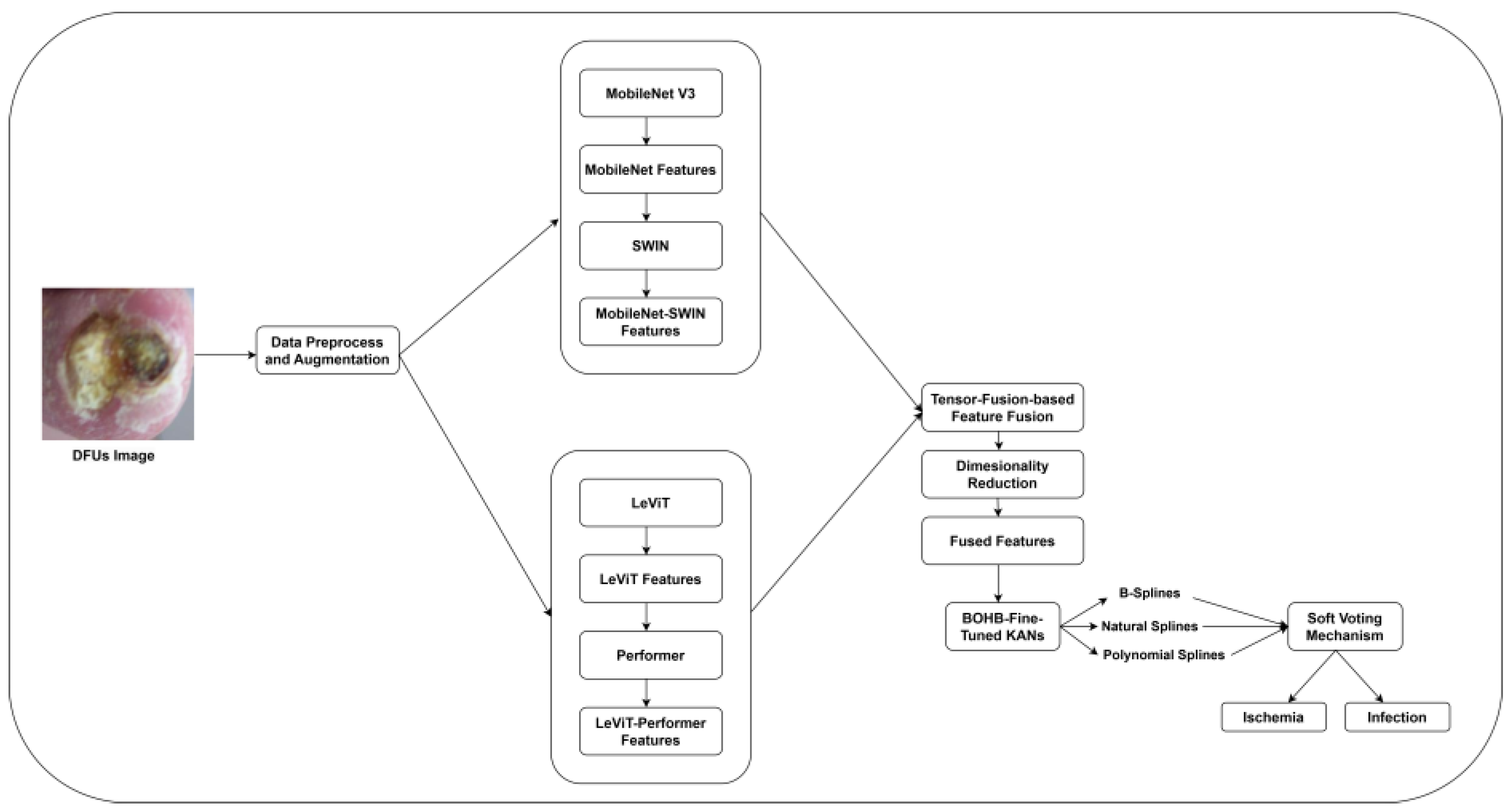

1. Introduction

1. A hybrid feature extraction framework that combines the strengths of CNNs and ViTs.

2. A tensor fusion-based technique for identifying critical features.

3. An ensemble of spline-driven Kolmogorov–Arnold Networks (KANs) for DFU classification.

2. Materials and Methods

2.1. Data Acquisition and Preparation

2.2. MobileNet V3-SWIN Feature Extraction

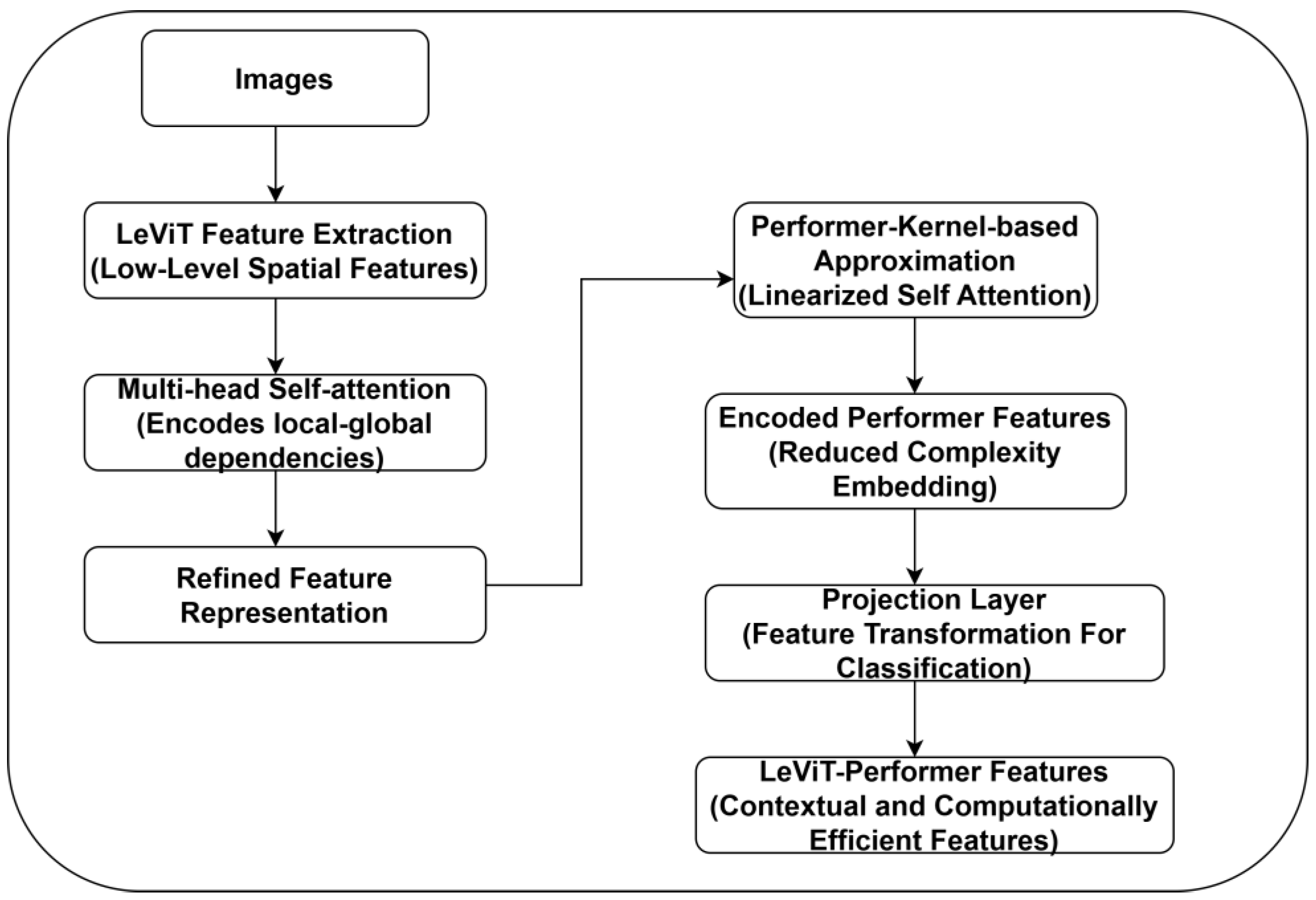
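The hyperparameter table lists the SWIN head as using windowed self-attention. The sketch below is a minimal pure-Python illustration. It assumes a token grid whose height and width are divisible by the window size M. It shows how Swin [24] restricts attention to non-overlapping M×M windows and cyclically shifts the grid by M/2 between consecutive blocks so that neighboring windows exchange information. The helper names `cyclic_shift` and `window_partition` and the 4×4 example grid are hypothetical. The attention mask that Swin applies to shifted windows is omitted.

```python
from typing import List

Grid = List[List[int]]


def cyclic_shift(grid: Grid, shift: int) -> Grid:
    """Roll the grid contents up and to the left by `shift` rows/columns (Swin's shifted-window step)."""
    h, w = len(grid), len(grid[0])
    return [[grid[(i + shift) % h][(j + shift) % w] for j in range(w)] for i in range(h)]


def window_partition(grid: Grid, m: int) -> List[List[int]]:
    """Split an H x W grid into non-overlapping m x m windows, each flattened row-major."""
    h, w = len(grid), len(grid[0])
    if h % m or w % m:
        raise ValueError("grid height and width must be divisible by the window size")
    windows = []
    for top in range(0, h, m):
        for left in range(0, w, m):
            windows.append([grid[top + i][left + j] for i in range(m) for j in range(m)])
    return windows


# Token ids 0..15 on a 4 x 4 grid; self-attention would then run only inside each window.
# Simplification: the attention mask Swin uses for shifted windows is not modeled here.
tokens = [[4 * r + c for c in range(4)] for r in range(4)]
regular = window_partition(tokens, 2)
shifted = window_partition(cyclic_shift(tokens, 1), 2)  # shift = M // 2
print(regular[0])  # [0, 1, 4, 5]
print(shifted[0])  # [5, 6, 9, 10]
```

After the shift, the first window mixes tokens that sat in four different regular windows. This cross-window mixing is what lets Swin build global context while keeping attention cost proportional to the window size.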

2.3. LeViT-Performer Feature Extraction
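The hyperparameter table describes the Performer component's self-attention as linearized attention. The code below is a minimal sketch of the FAVOR+ mechanism from Choromanski et al. [26]. It assumes non-causal attention and plain Gaussian projections, whereas Performer additionally orthogonalizes them. It approximates softmax(QKᵀ/√d)V with positive random features, so keys and values are summarized once and cost grows linearly with the number of tokens. `performer_attention`, `num_features`, and `seed` are illustrative names and values, not the authors' settings.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def positive_features(x: List[float], proj: Matrix) -> List[float]:
    """FAVOR+ positive random features: exp(w . x - |x|^2 / 2) / sqrt(m) for each projection row w."""
    half_sq_norm = sum(xi * xi for xi in x) / 2.0
    m = len(proj)
    return [
        math.exp(sum(wi * xi for wi, xi in zip(w, x)) - half_sq_norm) / math.sqrt(m)
        for w in proj
    ]


def performer_attention(q: Matrix, k: Matrix, v: Matrix,
                        num_features: int = 64, seed: int = 0) -> Matrix:
    """Approximate softmax(Q K^T / sqrt(d)) V in time linear in the number of tokens."""
    d = len(q[0])
    rng = random.Random(seed)
    # Gaussian projections w ~ N(0, I_d); actual Performers orthogonalize these (omitted here).
    proj = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(num_features)]
    scale = d ** -0.25  # puts half of the 1/sqrt(d) temperature on Q and half on K
    phi_q = [positive_features([x * scale for x in row], proj) for row in q]
    phi_k = [positive_features([x * scale for x in row], proj) for row in k]
    d_v = len(v[0])
    # Key/value summaries computed once: kv = sum_j phi(k_j) v_j^T and z = sum_j phi(k_j).
    kv = [[sum(pk[f] * vj[c] for pk, vj in zip(phi_k, v)) for c in range(d_v)]
          for f in range(num_features)]
    z = [sum(pk[f] for pk in phi_k) for f in range(num_features)]
    out = []
    for pq in phi_q:
        denom = sum(pf * zf for pf, zf in zip(pq, z))  # row normalizer (softmax denominator)
        out.append([sum(pq[f] * kv[f][c] for f in range(num_features)) / denom
                    for c in range(d_v)])
    return out


q = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
k = [[0.2, 0.1], [-0.4, 0.3], [0.5, 0.0]]
v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
approx = performer_attention(q, k, v, num_features=128)
print(approx)
```

Every feature value is positive, so each output row is a convex combination of the value rows, as in exact softmax attention. The approximation error shrinks as `num_features` grows.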

2.4. Tensor Fusion-Based Feature Fusion
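The hyperparameter table describes the fusion stage as feature attention scaling, implemented as a weighted sum with trainable coefficients and followed by PCA. The sketch below covers only the weighted sum. It assumes both branch embeddings have already been projected to a common length. It also assumes the coefficients are softmax-normalized logits, since the document does not say how they are constrained. During training, the logits would be learned by backpropagation together with the rest of the network. PCA is not shown. This simplified weighted sum should not be read as the outer-product formulation of classic tensor fusion networks. The embedding values and names are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_fusion(branches: Sequence[Sequence[float]], logits: Sequence[float]) -> List[float]:
    """Fuse equal-length branch embeddings as sum_s alpha_s * f_s with alpha = softmax(logits)."""
    if len(branches) != len(logits):
        raise ValueError("expected one fusion logit per branch")
    dim = len(branches[0])
    if any(len(f) != dim for f in branches):
        raise ValueError("branch embeddings must share one length; project them first")
    alphas = softmax(logits)
    return [sum(a * f[i] for a, f in zip(alphas, branches)) for i in range(dim)]


# Hypothetical 4-d embeddings standing in for the two feature-extraction branches.
mobilenet_swin = [0.2, 0.8, 0.1, 0.5]
levit_performer = [0.6, 0.4, 0.3, 0.1]
fused_equal = weighted_fusion([mobilenet_swin, levit_performer], [0.0, 0.0])   # plain average
fused_skewed = weighted_fusion([mobilenet_swin, levit_performer], [2.0, 0.0])  # favors branch 1
print(fused_equal, fused_skewed)
```

Softmax keeps the weights positive and summing to one, so a branch cannot dominate through feature scale alone. Unconstrained scalar weights are an equally plausible reading of the table.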

2.5. Ensembled Splines Driven KANs-Based Classification
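According to the hyperparameter table, the classifier uses third-order (cubic) splines, 64 hidden nodes, and a sigmoid output. In the KAN formulation of Liu et al. [29], each edge carries a learnable univariate function φ(x) = w_b·SiLU(x) + w_s·Σ_c c_c·B_c(x), and each node sums its incoming edges. The forward-pass sketch below evaluates cubic B-splines with the Cox–de Boor recursion on a uniform grid. Several details are assumptions: the grid size of 5, the input range [-1, 1], the random initialization, the 8 hidden nodes (used instead of 64 for brevity), and the three-member averaging ensemble. This document does not specify how the KANs are ensembled. Training, including the BOHB-tuned settings, is not shown.

```python
import math
import random
from typing import List


def make_knots(lo: float, hi: float, grid: int, order: int) -> List[float]:
    """Uniform knots on [lo, hi] extended by `order` knots per side (grid + 2*order + 1 knots)."""
    h = (hi - lo) / grid
    return [lo + (i - order) * h for i in range(grid + 2 * order + 1)]


def bspline_basis(x: float, knots: List[float], order: int) -> List[float]:
    """All degree-`order` B-spline basis values at x via the Cox-de Boor recursion."""
    basis = [1.0 if knots[i] <= x < knots[i + 1] else 0.0 for i in range(len(knots) - 1)]
    for p in range(1, order + 1):
        basis = [
            (x - knots[i]) / (knots[i + p] - knots[i]) * basis[i]
            + (knots[i + p + 1] - x) / (knots[i + p + 1] - knots[i + 1]) * basis[i + 1]
            for i in range(len(basis) - 1)
        ]
    return basis  # grid + order values


def silu(x: float) -> float:
    return x / (1.0 + math.exp(-x))


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class KANLayer:
    """One KAN layer: out_j = sum_i phi_ji(x_i), phi(x) = w_b * silu(x) + w_s * sum_c coef_c * B_c(x)."""

    def __init__(self, n_in: int, n_out: int, grid: int = 5, order: int = 3,
                 lo: float = -1.0, hi: float = 1.0, seed: int = 0):
        rng = random.Random(seed)
        self.order = order
        self.knots = make_knots(lo, hi, grid, order)
        n_basis = grid + order
        # Small random spline coefficients; w_b and w_s start at 1 (all would be trained).
        self.coef = [[[rng.gauss(0.0, 0.1) for _ in range(n_basis)] for _ in range(n_in)]
                     for _ in range(n_out)]
        self.w_b = [[1.0] * n_in for _ in range(n_out)]
        self.w_s = [[1.0] * n_in for _ in range(n_out)]

    def edge(self, j: int, i: int, x: float) -> float:
        basis = bspline_basis(x, self.knots, self.order)
        spline = sum(c * b for c, b in zip(self.coef[j][i], basis))
        return self.w_b[j][i] * silu(x) + self.w_s[j][i] * spline

    def forward(self, x: List[float]) -> List[float]:
        return [sum(self.edge(j, i, xi) for i, xi in enumerate(x)) for j in range(len(self.coef))]


# Hypothetical ensemble: average the sigmoid outputs of three independently seeded 4-8-1 KANs.
ensemble = [(KANLayer(4, 8, seed=s), KANLayer(8, 1, seed=100 + s)) for s in range(3)]
features = [0.2, -0.5, 0.7, 0.1]  # stands in for the fused, PCA-reduced feature vector
probs = [sigmoid(out.forward(hidden.forward(features))[0]) for hidden, out in ensemble]
print(sum(probs) / len(probs))
```

Inside [lo, hi] the cubic basis values sum to one, so the spline term is a local, smoothly weighted combination of a few coefficients. This locality is what makes the per-edge functions of a KAN inspectable.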

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Yap, M.H.; Hachiuma, R.; Alavi, A.; Brüngel, R.; Cassidy, B.; Goyal, M.; Zhu, H.; Rückert, J.; Olshansky, M.; Huang, X.; et al. Deep learning in diabetic foot ulcers detection: A comprehensive evaluation. Comput. Biol. Med. 2021, 135, 104596.

2. Tulloch, J.; Zamani, R.; Akrami, M. Machine learning in the prevention, diagnosis and management of diabetic foot ulcers: A systematic review. IEEE Access 2020, 8, 198977–199000.

3. Guan, H.; Wang, Y.; Niu, P.; Zhang, Y.; Zhang, Y.; Miao, R.; Fang, X.; Yin, R.; Zhao, S.; Liu, J.; et al. The role of machine learning in advancing diabetic foot: A review. Front. Endocrinol. 2024, 15, 1325434.

4. Wang, X.; Zhu, S.; Wang, B.Q.; Li, W.; Gu, S.; Chen, H.; Qin, C.; Dai, Y.; Li, J. Enhancing diabetic foot ulcer prediction with machine learning: A focus on localized examinations. Heliyon 2024, 10, e37635.

5. Goyal, M.; Reeves, N.D.; Rajbhandari, S.; Yap, M.H. Robust methods for real-time diabetic foot ulcer detection and localization on mobile devices. IEEE J. Biomed. Health Inform. 2018, 23, 1730–1741.

6. Weatherall, T.; Avsar, P.; Nugent, L.; Moore, Z.; McDermott, J.H.; Sreenan, S.; Wilson, H.; McEvoy, N.L.; Derwin, R.; Chadwick, P.; et al. The impact of machine learning on the prediction of diabetic foot ulcers–a systematic review. J. Tissue Viability 2024, 33, 853–863.

7. Nanda, R.; Nath, A.; Patel, S.; Mohapatra, E. Machine learning algorithm to evaluate risk factors of diabetic foot ulcers and its severity. Med. Biol. Eng. Comput. 2022, 60, 2349–2357.

8. Mousa, K.M.; Mousa, F.A.; Mohamed, H.S.; Elsawy, M.M. Prediction of foot ulcers using artificial intelligence for diabetic patients at Cairo University Hospital, Egypt. SAGE Open Nurs. 2023, 9, 23779608231185873.

9. Yazdanpanah, L.; Nasiri, M.; Adarvishi, S. Literature review on the management of diabetic foot ulcer. World J. Diabetes 2015, 6, 13.

10. Anbarasi, L.J.; Jawahar, M.; Jayakumari, R.B.; Narendra, M.; Ravi, V.; Neeraja, R. An overview of current developments and methods for identifying diabetic foot ulcers: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2024, 14, e1562.

11. Das, S.K.; Roy, P.; Singh, P.; Diwakar, M.; Singh, V.; Maurya, A.; Kumar, S.; Kadry, S.; Kim, J. Diabetic foot ulcer identification: A review. Diagnostics 2023, 13, 1998.

12. Goyal, M.; Reeves, N.D.; Rajbhandari, S.; Ahmad, N.; Wang, C.; Yap, M.H. Recognition of ischaemia and infection in diabetic foot ulcers: Dataset and techniques. Comput. Biol. Med. 2020, 117, 103616.

13. Zhang, J.; Qiu, Y.; Peng, L.; Zhou, Q.; Wang, Z.; Qi, M. A comprehensive review of methods based on deep learning for diabetes-related foot ulcers. Front. Endocrinol. 2022, 13, 945020.

14. Fadhel, M.A.; Alzubaidi, L.; Gu, Y.; Santamaría, J.; Duan, Y. Real-time diabetic foot ulcer classification based on deep learning & parallel hardware computational tools. Multimedia Tools Appl. 2024, 83, 70369–70394.

15. Khandakar, A.; Chowdhury, M.E.; Reaz, M.B.I.; Ali, S.H.M.; Hasan, M.A.; Kiranyaz, S.; Rahman, T.; Alfkey, R.; Bakar, A.A.A.; Malik, R.A. A machine-learning model for early detection of diabetic foot using thermogram images. Comput. Biol. Med. 2021, 137, 104838.

16. Biswas, S.; Mostafiz, R.; Uddin, M.S.; Paul, B.K. XAI-FusionNet: Diabetic foot ulcer detection based on multi-scale feature fusion with explainable artificial intelligence. Heliyon 2024, 10, e31228.

17. Prabhu, M.S.; Verma, S. A deep learning framework and its implementation for diabetic foot ulcer classification. In Proceedings of the 2021 9th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 3–4 September 2021; pp. 1–5.

18. Reyes-Luévano, J.; Guerrero-Viramontes, J.A.; Romo-Andrade, J.R.; Funes-Gallanzi, M. DFU_VIRNet: A novel Visible-InfraRed CNN to improve diabetic foot ulcer classification and early detection of ulcer risk zones. Biomed. Signal Process. Control 2023, 86, 105341.

19. Sharma, A.; Kaushal, A.; Dogra, K.; Mohana, R. Deep Learning Perspectives for Prediction of Diabetic Foot Ulcers. In Metaverse Applications for Intelligent Healthcare; IGI Global: Hershey, PA, USA, 2024; pp. 203–228.

20. Bansal, N.; Vidyarthi, A. DFootNet: A Domain Adaptive Classification Framework for Diabetic Foot Ulcers Using Dense Neural Network Architecture. Cogn. Comput. 2024, 16, 2511–2527.

21. Amin, J.; Sharif, M.; Anjum, M.A.; Khan, H.U.; Malik, M.S.A.; Kadry, S. An integrated design for classification and localization of diabetic foot ulcer based on CNN and YOLOv2-DFU models. IEEE Access 2020, 8, 228586–228597.

22. Diabetic Foot Ulcers Dataset. Available online: https://dfu-challenge.github.io/dfuc2021.html (accessed on 22 October 2024).

23. Koonce, B. MobileNetV3. In Convolutional Neural Networks with Swift for Tensorflow: Image Recognition and Dataset Categorization; O'Reilly Media: Sebastopol, CA, USA, 2021; pp. 125–144.

24. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

25. Graham, B.; El-Nouby, A.; Touvron, H.; Stock, P.; Joulin, A.; Jégou, H.; Douze, M. LeViT: A vision transformer in ConvNet's clothing for faster inference. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 12259–12269.

26. Choromanski, K.; Likhosherstov, V.; Dohan, D.; Song, X.; Gane, A.; Sarlos, T.; Hawkins, P.; Davis, J.; Mohiuddin, A.; Kaiser, L.; et al. Rethinking attention with performers. arXiv 2020, arXiv:2009.14794.

27. Mungoli, N. Adaptive Feature Fusion: Enhancing Generalization in Deep Learning Models. arXiv 2023, arXiv:2304.03290.

28. Wang, T.; Li, M.; Chen, Z.; Chen, X.; Chen, H.; Wu, B.; Wu, X.; Li, Y.; Lu, Y. Tensor based multi-modality fusion for prediction of postoperative early recurrence of single hepatocellular carcinoma. World J. Gastroenterol. 2023, 12465, 1246524.

29. Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y.; Tegmark, M. KAN: Kolmogorov–Arnold Networks. arXiv 2024, arXiv:2404.19756.

30. Marcílio, W.E.; Eler, D.M. From explanations to feature selection: Assessing SHAP values as feature selection mechanism. In Proceedings of the 2020 33rd SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Porto de Galinhas, Brazil, 7–10 November 2020; pp. 340–347.

31. Al-Garaawi, N.; Ebsim, R.; Alharan, A.F.; Yap, M.H. Diabetic foot ulcer classification using mapped binary patterns and convolutional neural networks. Comput. Biol. Med. 2022, 140, 105055.

32. Galdran, A.; Carneiro, G.; Ballester, M.A. Convolutional nets versus vision transformers for diabetic foot ulcer classification. In Diabetic Foot Ulcers Grand Challenge; Springer: Cham, Switzerland, 2022; pp. 21–29.

33. Bloch, L.; Brüngel, R.; Friedrich, C.M. Boosting EfficientNets ensemble performance via pseudo-labels and synthetic images by pix2pixHD for infection and ischaemia classification in diabetic foot ulcers. In Diabetic Foot Ulcers Grand Challenge; Springer: Cham, Switzerland, 2022; pp. 30–49.

34. Ahmed, S.; Naveed, H. Bias adjustable activation network for imbalanced data. In Diabetic Foot Ulcers Grand Challenge; Yap, M.H., Cassidy, B., Kendrick, C., Eds.; Springer: Cham, Switzerland, 2022; pp. 50–61.

35. Toofanee, M.S.A.; Dowlut, S.; Hamroun, M.; Tamine, K.; Petit, V.; Duong, A.K.; Sauveron, D. DFU-SIAM a novel diabetic foot ulcer classification with deep learning. IEEE Access 2023, 11, 98315–98332.

36. Qayyum, A.; Benzinou, A.; Mazher, M.; Meriaudeau, F. Efficient multi-model vision transformer based on feature fusion for classification of DFUC 2021 challenge. In Diabetic Foot Ulcers Grand Challenge; Yap, M.H., Cassidy, B., Kendrick, C., Eds.; Springer: Cham, Switzerland, 2022; pp. 62–75.

37. Sarmun, R.; Chowdhury, M.E.; Murugappan, M.; Aqel, A.; Ezzuddin, M.; Rahman, S.M.; Khandakar, A.; Akter, S.; Alfkey, R.; Hasan, A. Diabetic Foot Ulcer Detection: Combining Deep Learning Models for Improved Localization. Cogn. Comput. 2024, 16, 1413–1431.

38. Ahsan, M.; Naz, S.; Ahmad, R.; Ehsan, H.; Sikandar, A. A deep learning approach for diabetic foot ulcer classification and recognition. Information 2023, 14, 36.

39. Salam, A.; Ullah, F.; Amin, F.; Khan, I.A.; Villena, E.G.; Castilla, A.K.; de la Torre, I. Efficient prediction of anticancer peptides through deep learning. PeerJ Comput. Sci. 2024, 10, e2171.

40. Khan, I.; Akbar, W.; Soomro, A.; Hussain, T.; Khalil, I.; Khan, M.N.; Salam, A. Enhancing Ocular Health Precision: Cataract Detection Using Fundus Images and ResNet-50. IECE Trans. Intell. Syst. 2024, 1, 145–160.

41. Asif, M.; Naz, S.; Ali, F.; Salam, A.; Amin, F.; Ullah, F.; Alabrah, A. Advanced Zero-Shot Learning (AZSL) Framework for Secure Model Generalization in Federated Learning. IEEE Access 2024, 12, 184393–184407.

| Feature Name | Description | Importance for Model Interpretability |
|---|---|---|
| Ulcer shape | Geometric structure of the ulcer | Distinguishing ischemia from infection |
| Ulcer texture | Granulation tissue pattern, surface roughness, and irregularities | Differentiating healthy and ulcer patterns |
| Ulcer color | RGB distribution of the ulcer region | Distinguishing necrotic and infected ulcers |
| Wound exudate | Presence of fluid in the ulcer | Indicator of infection |
| Depth information | Shallow or deep ulcers based on color gradients | Assessing ulcer severity |
| Lighting condition | Brightness, contrast, and exposure variation | Ensuring model robustness under clinical image variability |
| Foot region | Forefoot, midfoot, and heel ulcer regions | Supporting clinical decision-making |

| Model | Parameter | Value |
|---|---|---|
| MobileNet V3-SWIN | Backbone | MobileNet V3 |
| | Transformer head | SWIN transformer with windowed self-attention |
| | Batch size | 64 |
| | Optimizer | AdamW |
| | Learning rate | |
| | Weight decay | |
| LeViT-Performer | CNN-Transformer fusion | LeViT combines CNN spatial efficiency with Performer-optimized self-attention |
| | Self-attention | Linearized attention |
| | Activation | GELU |
| | Batch size | 32 |
| | Optimizer | SGD |
| | Learning rate | |
| | Dropout | 0.3 |
| Tensor fusion-based fusion | Feature attention scaling | Weighted sum with trainable coefficients |
| | Feature reduction | PCA |
| KANs-based classification | Hyperparameter tuning | BOHB |
| | Regularization | 0.007 |
| | Number of hidden nodes | 64 |
| | Learning rate | |
| | Batch size | 32 |
| | Spline order | 3rd-order splines |
| | Output layer | Sigmoid |

| Dataset / Class | Acc (%) | Prec (%) | Rec (%) | F1 (%) | Spec (%) |
|---|---|---|---|---|---|
| **DFUC 2021** | | | | | |
| Ischemia | 98.6 | 97.1 | 97.3 | 97.2 | 96.5 |
| Infection | 98.9 | 97.5 | 97.6 | 97.5 | 96.7 |
| **DFUC 2020** | | | | | |
| Ischemia | 97.2 | 95.8 | 96.3 | 95.9 | 95.9 |
| Infection | 96.7 | 96.1 | 96.1 | 96.0 | 95.8 |
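The per-class rows report accuracy, precision, recall, F1-score, and specificity. The sketch below shows how these metrics follow from confusion-matrix counts. It assumes each condition (ischemia, infection) is scored as a binary present-versus-absent task, which the per-class layout suggests but the document does not state. `binary_metrics` and the toy labels are hypothetical.

```python
from typing import Dict, Sequence


def _ratio(num: float, den: float) -> float:
    """Safe division that returns 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall (sensitivity), F1, and specificity for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "acc": _ratio(tp + tn, len(pairs)),
        "prec": precision,
        "rec": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),  # harmonic mean
        "spec": _ratio(tn, tn + fp),  # true-negative rate
    }


# Toy labels: 4 positives (3 detected) and 6 negatives (1 false alarm).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
scores = binary_metrics(y_true, y_pred)
print({name: round(value, 3) for name, value in scores.items()})
# {'acc': 0.8, 'prec': 0.75, 'rec': 0.75, 'f1': 0.75, 'spec': 0.833}
```

Averaging the two class rows gives 98.75, 97.3, 97.45, 97.35, and 96.6 for DFUC 2021, and 96.95, 95.95, 96.2, 95.95, and 95.85 for DFUC 2020. These values are within 0.05 points of the overall figures reported for the proposed model (98.7/97.3/97.4/97.3/96.6 and 96.9/95.9/96.2/96.0/95.8). This is consistent with unweighted macro-averaging, although the document does not say so explicitly.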

| Model Variant | Acc (%) | Prec (%) | Rec (%) | F1 (%) | Spec (%) |
|---|---|---|---|---|---|
| Proposed Model | 98.7 | 97.3 | 97.4 | 97.3 | 96.6 |
| Without LeViT-Performer and Fusion (MobileNet V3-SWIN + KANs) | 96.4 | 94.5 | 94.9 | 94.7 | 94.1 |
| Without MobileNet V3-SWIN and Fusion (LeViT-Performer + KANs) | 95.8 | 93.9 | 94.2 | 94.0 | 93.7 |
| Without Tensor Fusion (MobileNet V3-SWIN + LeViT-Performer + Concatenation + KANs) | 95.1 | 93.1 | 92.8 | 93.0 | 92.1 |
| Without KANs (MobileNet V3-SWIN + LeViT-Performer + Fusion + Fully Connected Layer) | 94.7 | 92.5 | 92.3 | 92.4 | 91.8 |

| Model | Acc (%) | Prec (%) | Rec (%) | F1 (%) | Spec (%) | SD | CI |
|---|---|---|---|---|---|---|---|
| Proposed Model | 98.7 | 97.3 | 97.4 | 97.3 | 96.6 | 0.0004 | [96.3–97.1] |
| SWIN | 93.8 | 93.4 | 93.7 | 93.5 | 93.9 | 0.0003 | [96.1–96.8] |
| MobileNet V3 | 94.9 | 94.1 | 94.3 | 94.2 | 94.1 | 0.0005 | [95.3–95.9] |
| RegNet X | 92.1 | 91.3 | 90.8 | 91.0 | 90.2 | 0.0005 | [95.8–96.7] |
| LeViT | 93.7 | 92.4 | 91.9 | 92.1 | 91.4 | 0.0005 | [96.1–97.4] |
| Performer | 93.1 | 92.9 | 93.4 | 93.1 | 93.1 | 0.0003 | [96.3–96.7] |
| Linformer | 94.5 | 93.6 | 94.1 | 93.8 | 93.9 | 0.0003 | [95.9–96.4] |

| Input Image | Ground Truth | Prediction | SHAP Values | Clinical Relevance |
|---|---|---|---|---|
| | Infection | Infection | 0.35, 0.45, and 0.20 | Highlighted regions show redness, swelling, and potential pus, which produce infection-specific patterns. |
| | Infection | Infection | 0.40, 0.50, and 0.10 | Yellowish discharge and wound edges are the key indicators of infection; swelling and wound patterns also influenced the prediction. |
| | Infection | Infection | 0.38, 0.47, and 0.15 | Central ulcer discharge and edge discoloration indicate a bacterial infection. |
| | Ischemia | Ischemia | 0.45, 0.40, and 0.18 | Dark necrotic tissue and areas of reduced blood supply support the ischemic interpretation. |
| | Ischemia | Ischemia | 0.50, 0.35, and 0.11 | Yellowish dry tissue without surrounding swelling indicates ischemia. |
| | Ischemia | Ischemia | 0.42, 0.16, and 0.37 | Shrunken tissue regions reflect ischemic patterns. |

| Model | Dataset | Feature Extraction | Classification | Interpretability | Performance |
|---|---|---|---|---|---|
| Proposed Model | DFUC 2021 [22] | MobileNet V3-SWIN and LeViT-Performer | Ensembled splines-based KANs | ✓ | Acc: 98.7%, Prec: 97.3%, Rec: 97.4%, F1: 97.3%, Spec: 96.6%, AUROC: 0.93 |
| Proposed Model | DFUC 2020 [22] | MobileNet V3-SWIN and LeViT-Performer | Ensembled splines-based KANs | ✓ | Acc: 96.9%, Prec: 95.9%, Rec: 96.2%, F1: 96.0%, Spec: 95.8%, AUROC: 0.91 |
| Al-Garaawi et al. (2022) [31] | DFUC 2020 [22] | Customized CNNs | Fully connected layer | × | Average Acc: 86.7%, Average F1: 86.7%, AUC: 0.90 |
| Galdran et al. (2022) [32] | DFUC 2020 [22] | ResNeXt 50—EfficientNet-ViT-based DeiT | Fully connected layer | × | Average Prec: 70.0%, Average Rec: 68.5%, Average F1: 75.7%, AUC: 0.88 |
| Bloch et al. (2022) [33] | DFUC 2021 [22] | EfficientNet B0-B6 | Fully connected layer | × | Average F1: 53.5%, Average Prec: 68.5%, Average Rec: 66.5%, AUC: 0.86 |
| Ahmed and Naveed (2022) [34] | DFUC 2021 [22] | EfficientNet B0-B6—ResNet 50 | Fully connected layer | × | Average F1: 53.9%, Average Prec: 67.3%, Average Rec: 67.1%, AUC: 0.59 |
| Toofanee et al. (2023) [35] | DFUC 2021 [22] | EfficientNet-BeiT | KNN-based classification | × | Acc: 95.0%, AUC: 0.8298, Prec: 93.9%, Rec: 93.9%, F1: 93.7% |
| Qayyum et al. (2022) [36] | DFUC 2021 [22] | Customized ViTs | Fully connected layer | × | Average F1: 43.47%, Average Prec: 68.0%, Average Rec: 66.3%, AUC: 0.84 |
| Sarmun et al. (2024) [37] | DFUC 2021 [22] | YOLO V8X | Fully connected layer | × | Prec: 89.7%, Rec: 74.0%, F1: 81.1% |
| Sarmun et al. (2024) [37] | DFUC 2021 [22] | YOLO V8X + ResNet | EL approach | × | Prec: 83.5%, Rec: 75.2%, F1: 79.1% |
| Ahsan et al. (2023) [38] | DFUC 2020 [22] | ResNet 50 | Fully connected layer | × | Average Acc: 92.12%, Average F1: 92.24%, AUC: 0.87 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sait, A.R.W.; Nagaraj, R. Diabetic Foot Ulcers Detection Model Using a Hybrid Convolutional Neural Networks–Vision Transformers. Diagnostics 2025, 15, 736. https://doi.org/10.3390/diagnostics15060736
