Learning Architecture for Brain Tumor Classification Based on Deep Convolutional Neural Network: Classic and ResNet50

Abstract

1. Introduction

2. Related Work

3. Proposed Method

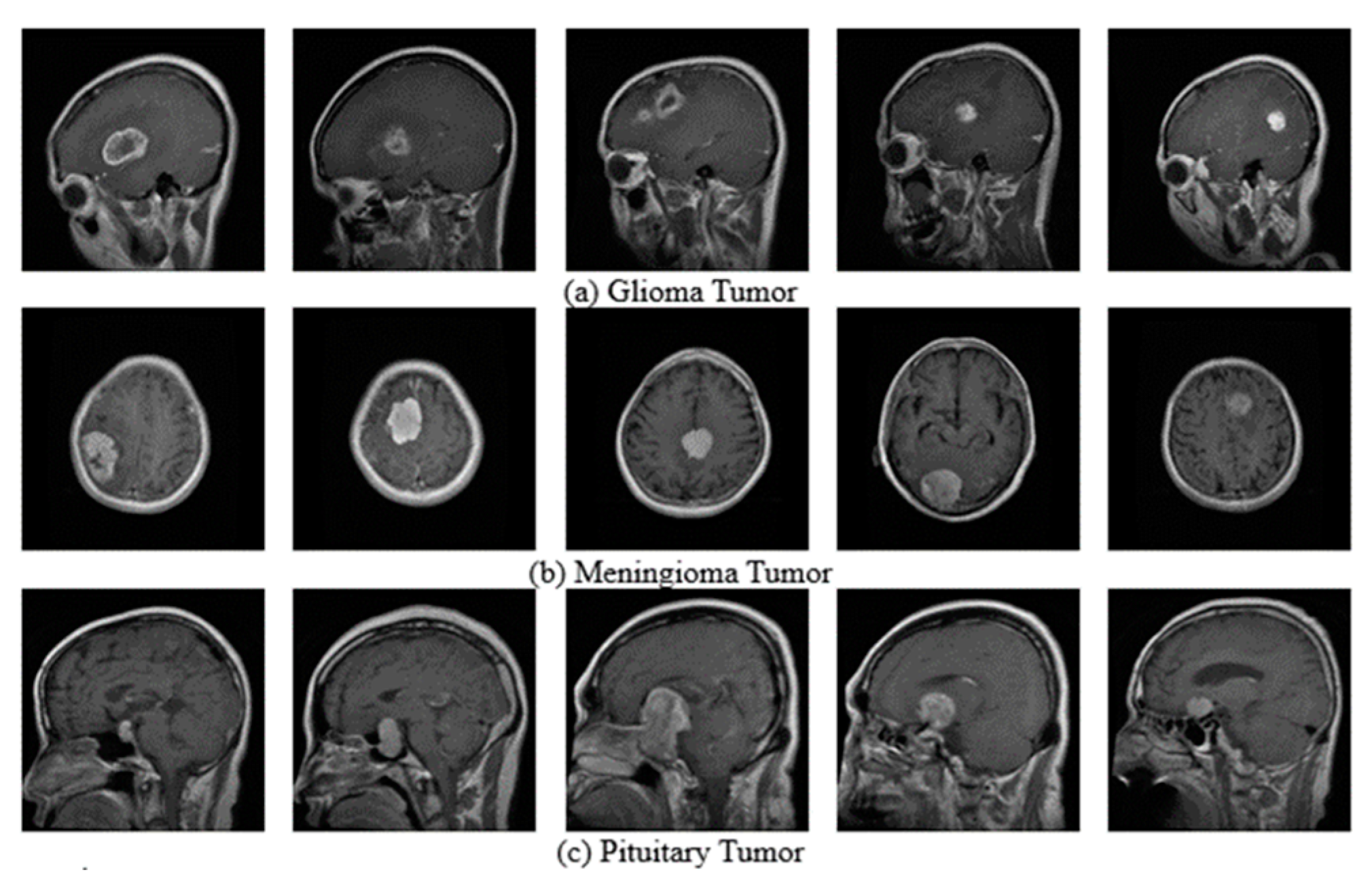

3.1. Datasets

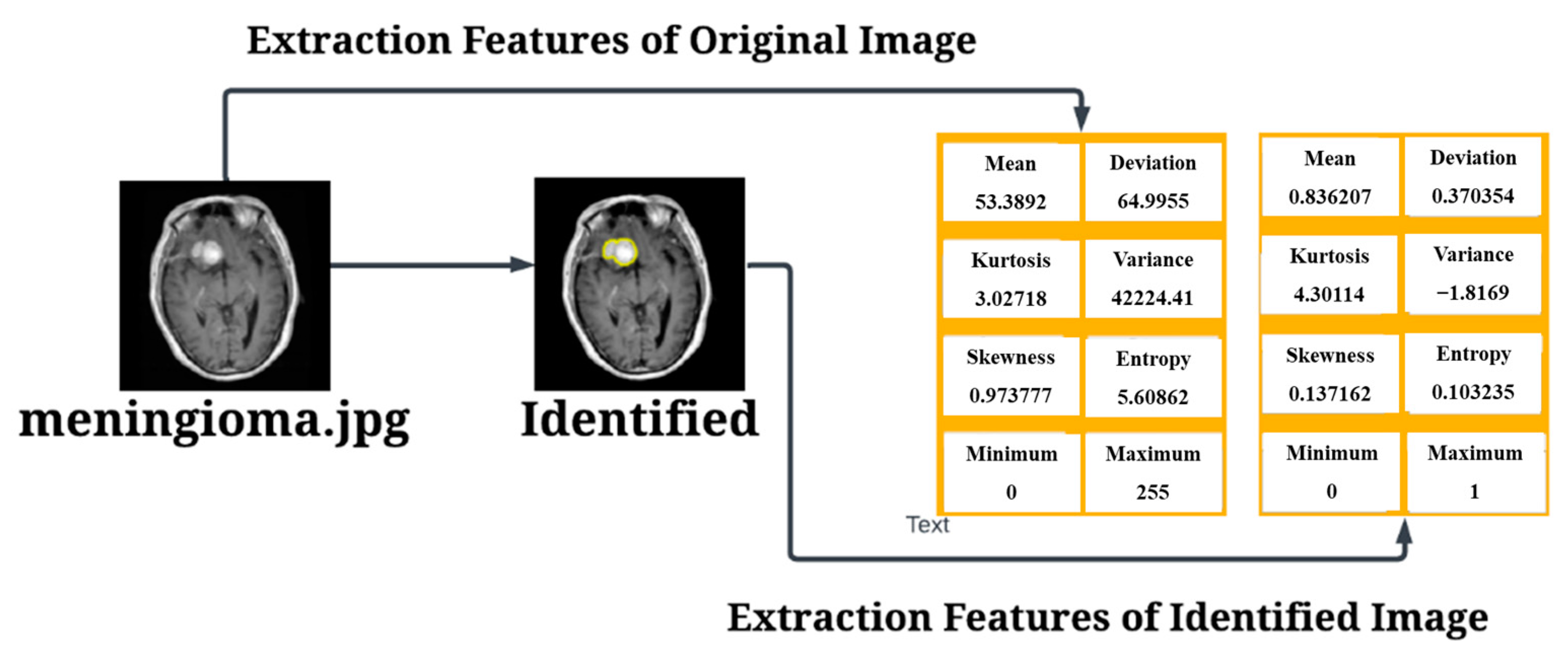

3.2. Pre-Processing

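- Input image: $I(x, y)$.

- Image thresholding with a specified threshold $T$ to obtain a black-and-white image: $\text{bw}(x, y) = 1$ if $I(x, y) > T$; $\text{bw}(x, y) = 0$ if $I(x, y) \le T$; else "Wrong Input".

- Labeling connected objects: $\text{label}(x, y) = k$ if $\text{bw}(x, y)$ is part of the connected object labeled $k$; $\text{label}(x, y) = 0$ if $\text{bw}(x, y)$ is part of the background.

- Computing the Area and ConvexArea properties from the label: $\text{Area}_k$ is the number of pixels in object $k$, and $\text{ConvexArea}_k$ is the area of its convex hull.

- Computing the Solidity property from the label: $\text{density}_k = \text{Solidity}_k = \text{Area}_k / \text{ConvexArea}_k$.

- Creating a binary vector based on the Solidity property (density): $h_k = 1$ if $\text{density}_k > T_d$; $h_k = 0$ if $\text{density}_k \le T_d$, where $T_d$ is the density threshold.

- Finding the maximum area value among the objects with high density: $\text{max\_area} = \max \{\text{Area}_k : h_k = 1\}$.

- Selecting the label(s) with the maximum area: $\text{tumor\_label} = \{k : \text{Area}_k = \text{max\_area}\}$.

- Creating a binary image with the selected label(s) after dilation: Tumor detected $= 1$ if $\text{label}(x, y)$ is an element of tumor_label after dilation; Tumor detected $= 0$ if $\text{label}(x, y)$ is not an element of tumor_label after dilation.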
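The pre-processing steps listed above can be sketched in plain Python. This is a minimal illustration and not the authors' implementation: the function names, the 8-connectivity, the square structuring element, and the polygon-based convex area (hull of the pixel corners rather than a pixel count) are all assumptions made for the example, and the two thresholds are left as parameters because their values are not stated here.

```python
from collections import deque


def threshold(image, t):
    """Binarize: 1 where the pixel intensity exceeds t, else 0."""
    return [[1 if px > t else 0 for px in row] for row in image]


def label_objects(bw):
    """Label 8-connected foreground objects 1..n; background stays 0."""
    h, w = len(bw), len(bw[0])
    label = [[0] * w for _ in range(h)]
    n = 0
    for r in range(h):
        for c in range(w):
            if not bw[r][c] or label[r][c]:
                continue
            n += 1
            label[r][c] = n
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of one object
                y, x = queue.popleft()
                for yy in range(max(0, y - 1), min(h, y + 2)):
                    for xx in range(max(0, x - 1), min(w, x + 2)):
                        if bw[yy][xx] and not label[yy][xx]:
                            label[yy][xx] = n
                            queue.append((yy, xx))
    return label, n


def convex_area(pixels):
    """Convex hull area of the pixel squares (monotone chain + shoelace)."""
    pts = sorted({(x + dx, y + dy) for y, x in pixels
                  for dx in (0, 1) for dy in (0, 1)})

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half_hull(points):
        hull = []
        for p in points:
            while len(hull) >= 2 and cross(hull[-2], hull[-1], p) <= 0:
                hull.pop()
            hull.append(p)
        return hull[:-1]

    hull = half_hull(pts) + half_hull(pts[::-1])
    twice_area = sum(x1 * y2 - x2 * y1
                     for (x1, y1), (x2, y2) in zip(hull, hull[1:] + hull[:1]))
    return abs(twice_area) / 2


def dilate(mask, radius=1):
    """Binary dilation with a (2 * radius + 1)-wide square (assumed shape)."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if mask[r][c]:
                for yy in range(max(0, r - radius), min(h, r + radius + 1)):
                    for xx in range(max(0, c - radius), min(w, c + radius + 1)):
                        out[yy][xx] = 1
    return out


def detect_tumor(image, t, t_density, radius=1):
    """Return a binary mask of the largest high-density object, dilated."""
    label, n = label_objects(threshold(image, t))
    pixels = {k: [] for k in range(1, n + 1)}
    for r, row in enumerate(label):
        for c, k in enumerate(row):
            if k:
                pixels[k].append((r, c))
    area = {k: len(p) for k, p in pixels.items()}
    density = {k: area[k] / convex_area(p) for k, p in pixels.items()}
    high_dense = [k for k in area if density[k] > t_density]
    if not high_dense:  # nothing dense enough: empty mask
        return [[0] * len(row) for row in image]
    max_area = max(area[k] for k in high_dense)
    tumor_label = {k for k in area if area[k] == max_area}
    mask = [[1 if k in tumor_label else 0 for k in row] for row in label]
    return dilate(mask, radius)
```

Calling `detect_tumor(image, t, t_density)` on a grayscale image stored as a list of rows returns a mask of the same size. A compact, solid object passes the density test, while a thin or strongly concave object of larger area is rejected because its solidity falls below `t_density`.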

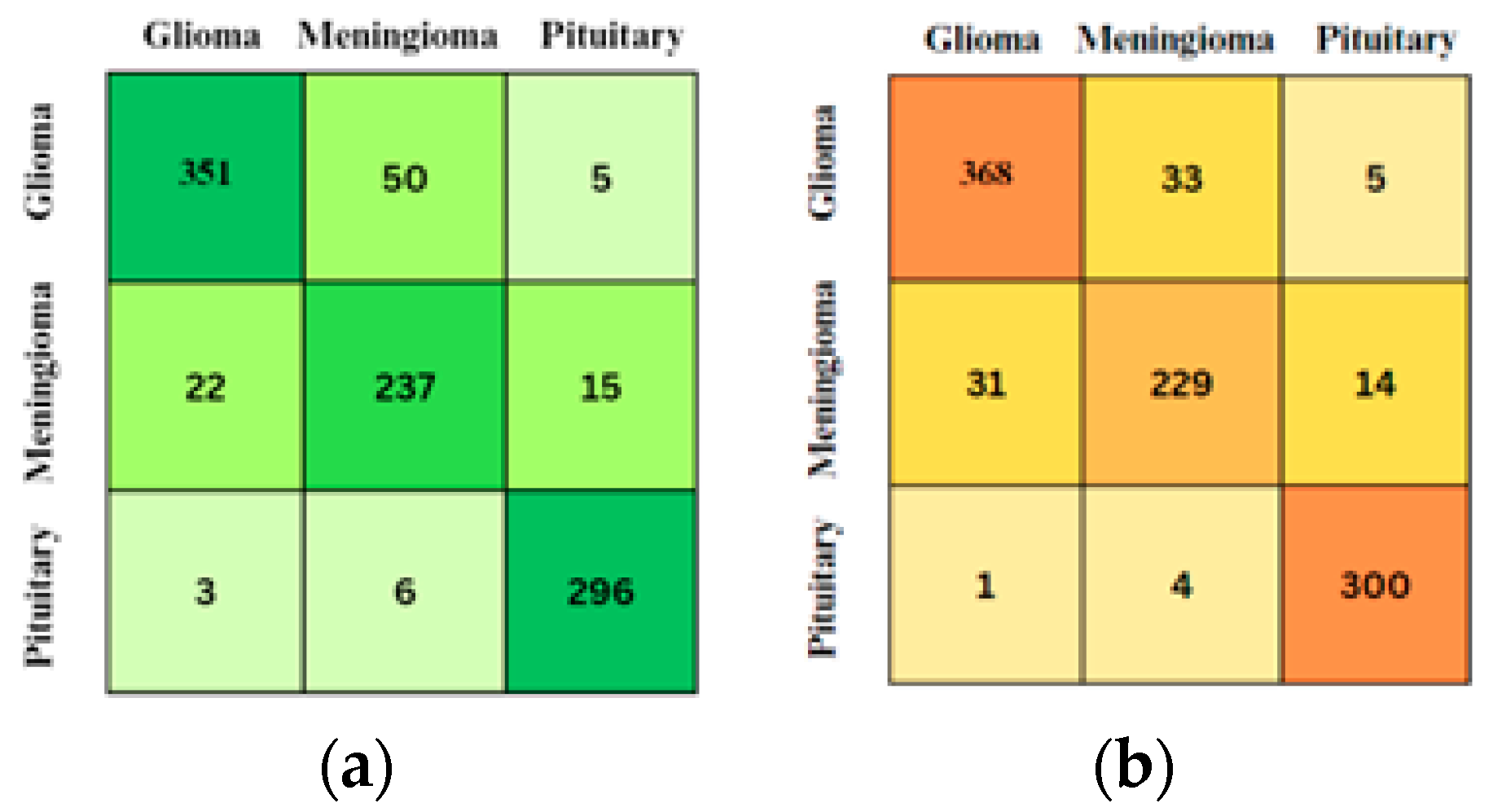

3.3. Confusion Matrix

3.4. Convolutional Neural Network (CNN)

3.5. Convolution Layers

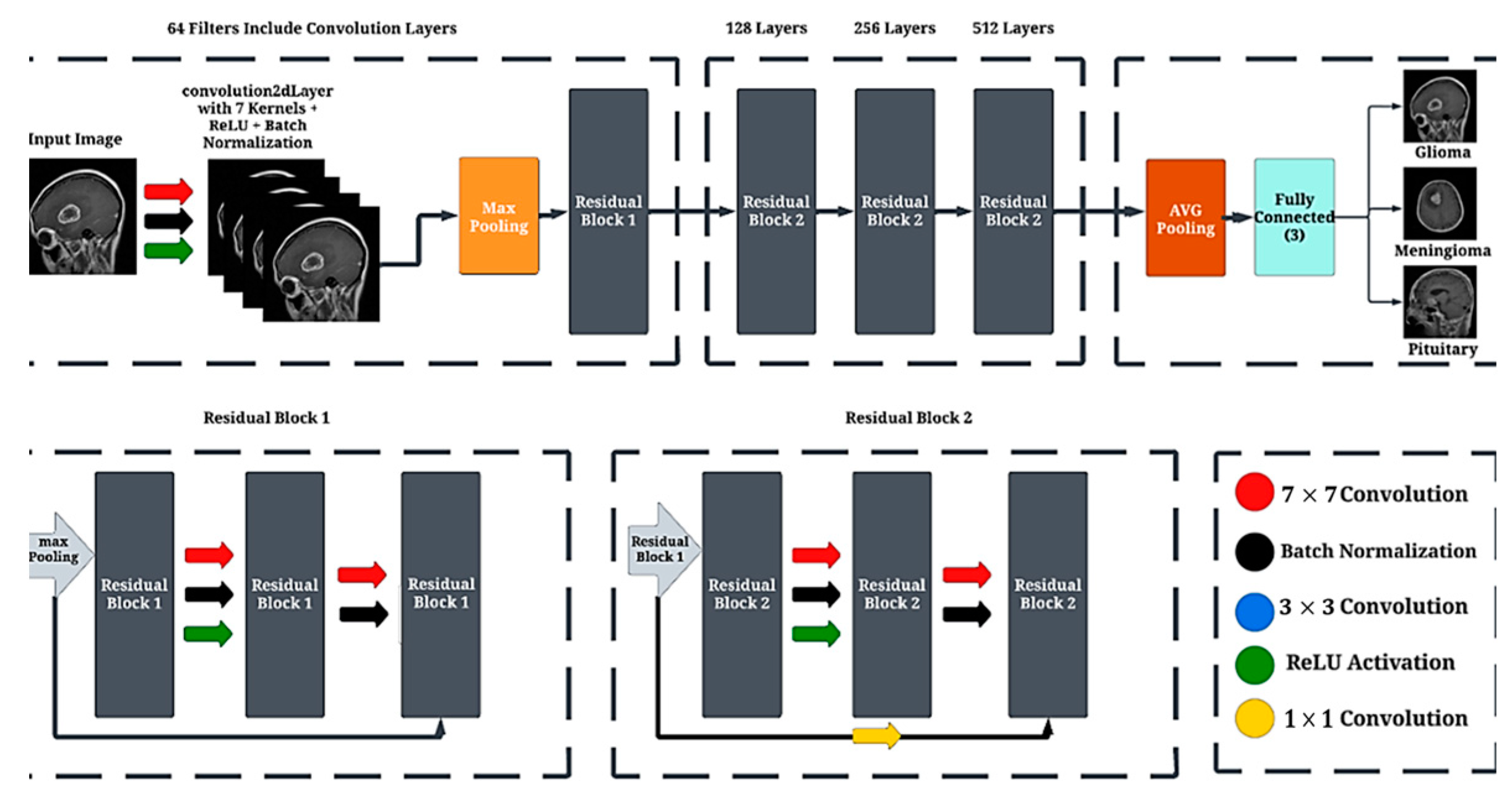

3.6. Residual Block Layer

3.7. Batch Normalization

3.8. Max Pooling

3.9. Fully Connected Layer

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameters | Value | Classic Layers | ResNet50 Layers |
|---|---|---|---|
| Optimizer | Adam | Input Image (200 × 200 × 1) | Input Image (200 × 200 × 1) |
| MaxEpoch | 24 | Convolutional Layer (3 × 3 × 8), Batch Normalization, ReLU | Convolutional Layer (7 × 7 × 64), Batch Normalization Layer, ReLU |
| Validation frequency | 30 | Max Pooling | Max Pooling |
| InitialLearnRate | 0.001 | Convolutional Layer (3 × 3 × 16), Batch Normalization, ReLU | Residual Block (64, 64) (64, 64) (64, 64) |
| MiniBatchSize | 64 | Max Pooling | Residual Block (128, 128) (128, 128) (128, 128) |
| Execution by GPU | | Convolutional Layer (3 × 3 × 32), Batch Normalization, ReLU | Residual Block (256, 256) (256, 256) (256, 256) |
| | | Max Pooling | Residual Block (512, 512) (512, 512) (512, 512) |
| | | Fully Connected (3) | Average Pooling Layer (1 × 1) |
| | | Softmax Layer | Fully Connected Layer (3) |
| | | Classified | Softmax, Classified |
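To make the classic layer stack in the table easier to follow, the sketch below traces feature-map shapes and learnable-parameter counts through it. It is only an illustration under stated assumptions: the table gives neither padding, stride, nor pooling size, so the code assumes 'same'-padded stride-1 convolutions and 2 × 2 max pooling with stride 2. The function names are hypothetical.

```python
def conv_bn_params(kernel, in_ch, out_ch):
    """Conv weights and biases plus batch-norm scale and offset."""
    return kernel * kernel * in_ch * out_ch + out_ch + 2 * out_ch


def classic_summary(size=200, in_ch=1, filters=(8, 16, 32), kernel=3, classes=3):
    """Trace shapes and parameter counts through the classic stack.

    Assumes 'same'-padded, stride-1 convolutions and 2 x 2 max pooling
    with stride 2; neither setting is given in the table.
    """
    rows, total, ch = [], 0, in_ch
    for f in filters:
        params = conv_bn_params(kernel, ch, f)
        total += params
        rows.append((f"conv {kernel}x{kernel}x{f} + BN + ReLU", (size, size, f), params))
        size //= 2  # pooling halves each spatial dimension (assumption)
        rows.append(("max pooling", (size, size, f), 0))
        ch = f
    fc_params = size * size * ch * classes + classes
    total += fc_params
    rows.append((f"fully connected ({classes})", (classes,), fc_params))
    return rows, total


if __name__ == "__main__":
    layers, total = classic_summary()
    for name, shape, params in layers:
        print(f"{name:28s} {str(shape):16s} {params}")
    print("total learnable parameters:", total)
```

Under these assumptions the 200 × 200 × 1 input shrinks to 25 × 25 × 32 before the fully connected layer, which then holds most of the 66,003 learnable parameters. Different padding or pooling settings would change both figures.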

| Image | Method | Results | TP or TN |
|---|---|---|---|
| | Classic Layer | Glioma | TP |
| | Classic Layer | Meningioma | TP |
| | Classic Layer | Pituitary | TP |
| | ResNet-50 Layers | Glioma | TP |
| | ResNet-50 Layers | Meningioma | TP |
| | ResNet-50 Layers | Pituitary | TP |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ali, R.R.; Yaacob, N.M.; Alqaryouti, M.H.; Sadeq, A.E.; Doheir, M.; Iqtait, M.; Rachmawanto, E.H.; Sari, C.A.; Yaacob, S.S. Learning Architecture for Brain Tumor Classification Based on Deep Convolutional Neural Network: Classic and ResNet50. Diagnostics 2025, 15, 624. https://doi.org/10.3390/diagnostics15050624
