Comparative Study of AI Modes in Ultrasound Diagnosis of Breast Lesions

Abstract

1. Introduction

2. Materials and Methods

2.1. Patient Selection

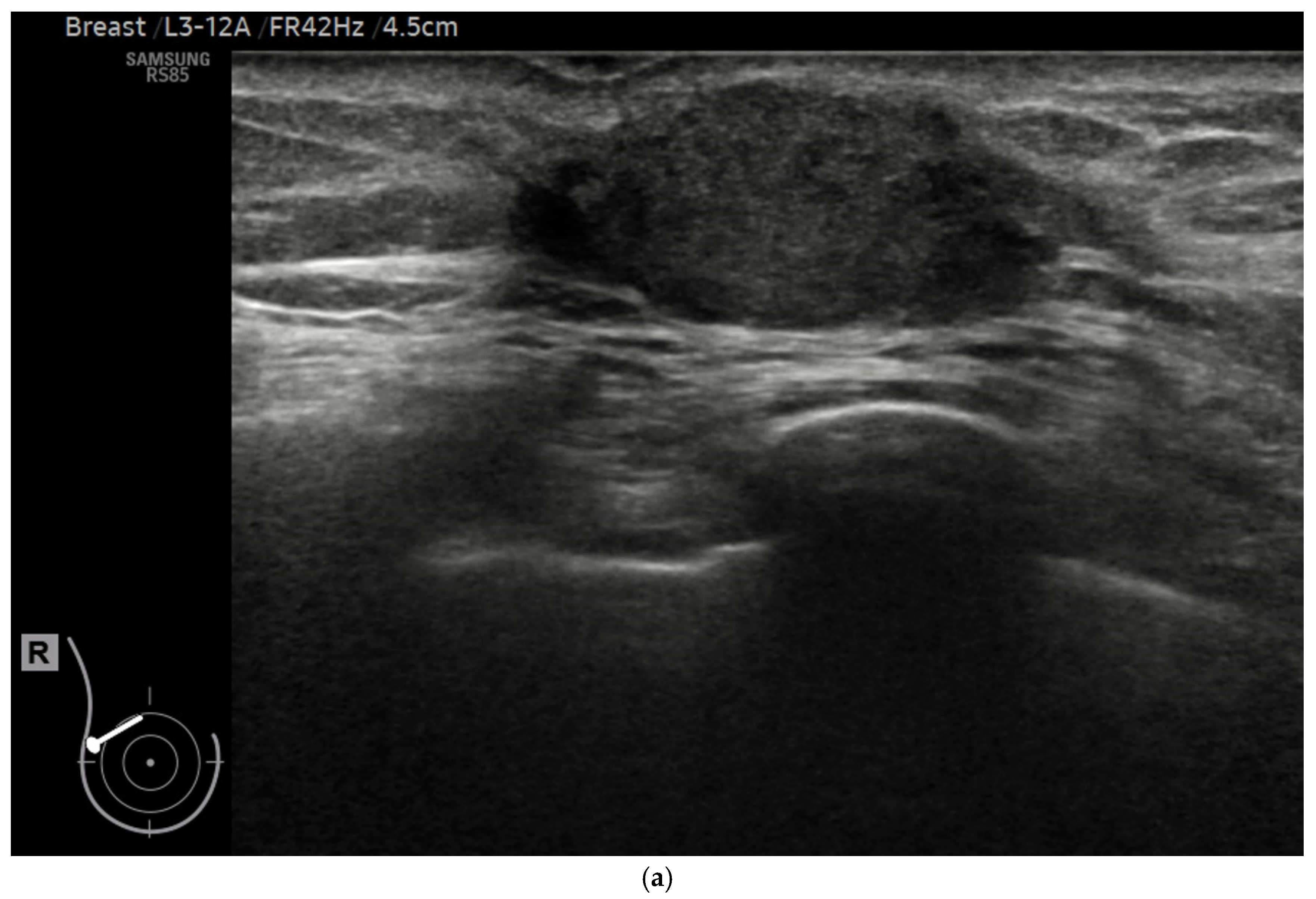

2.2. Ultrasound Procedure

2.3. Imaging Selection

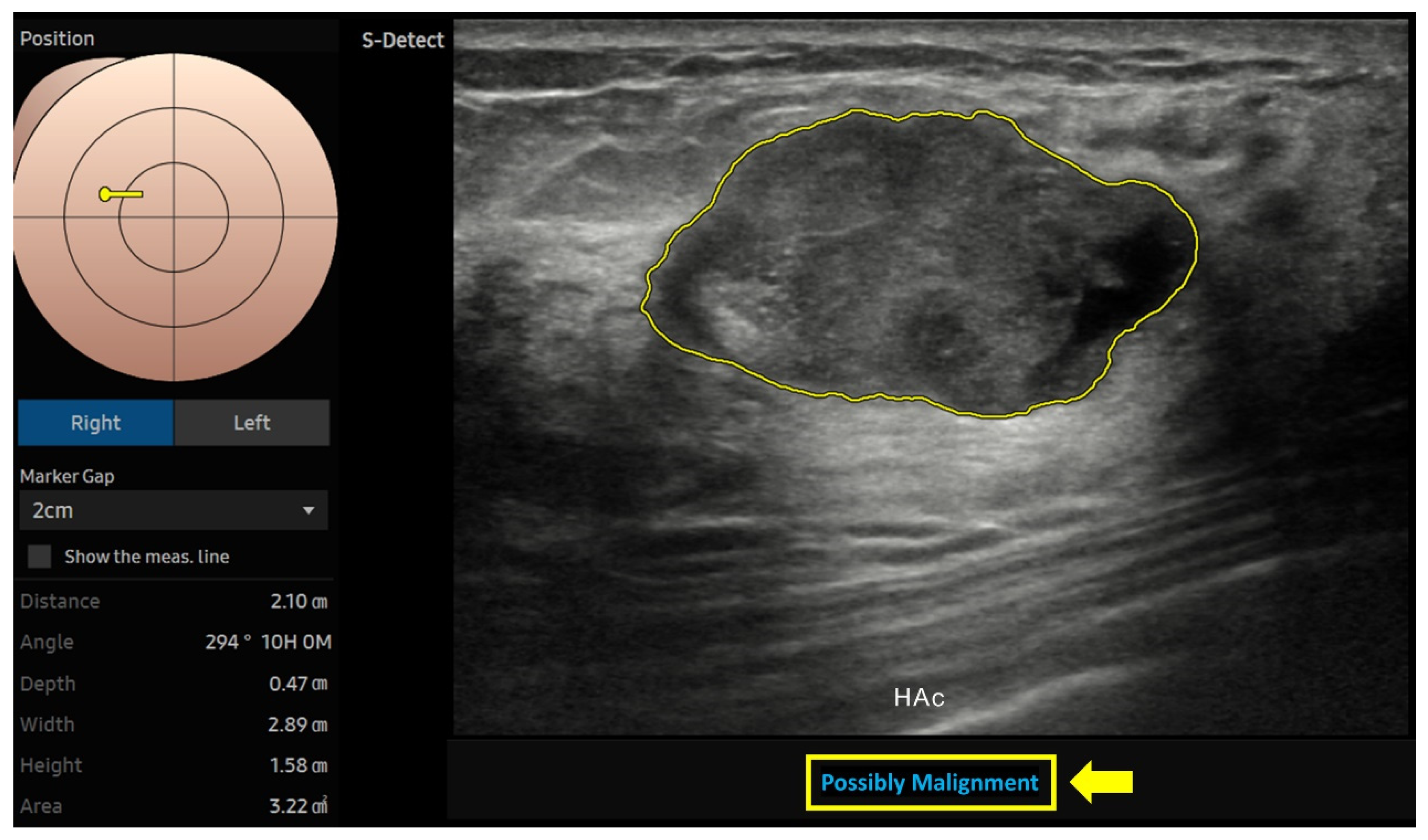

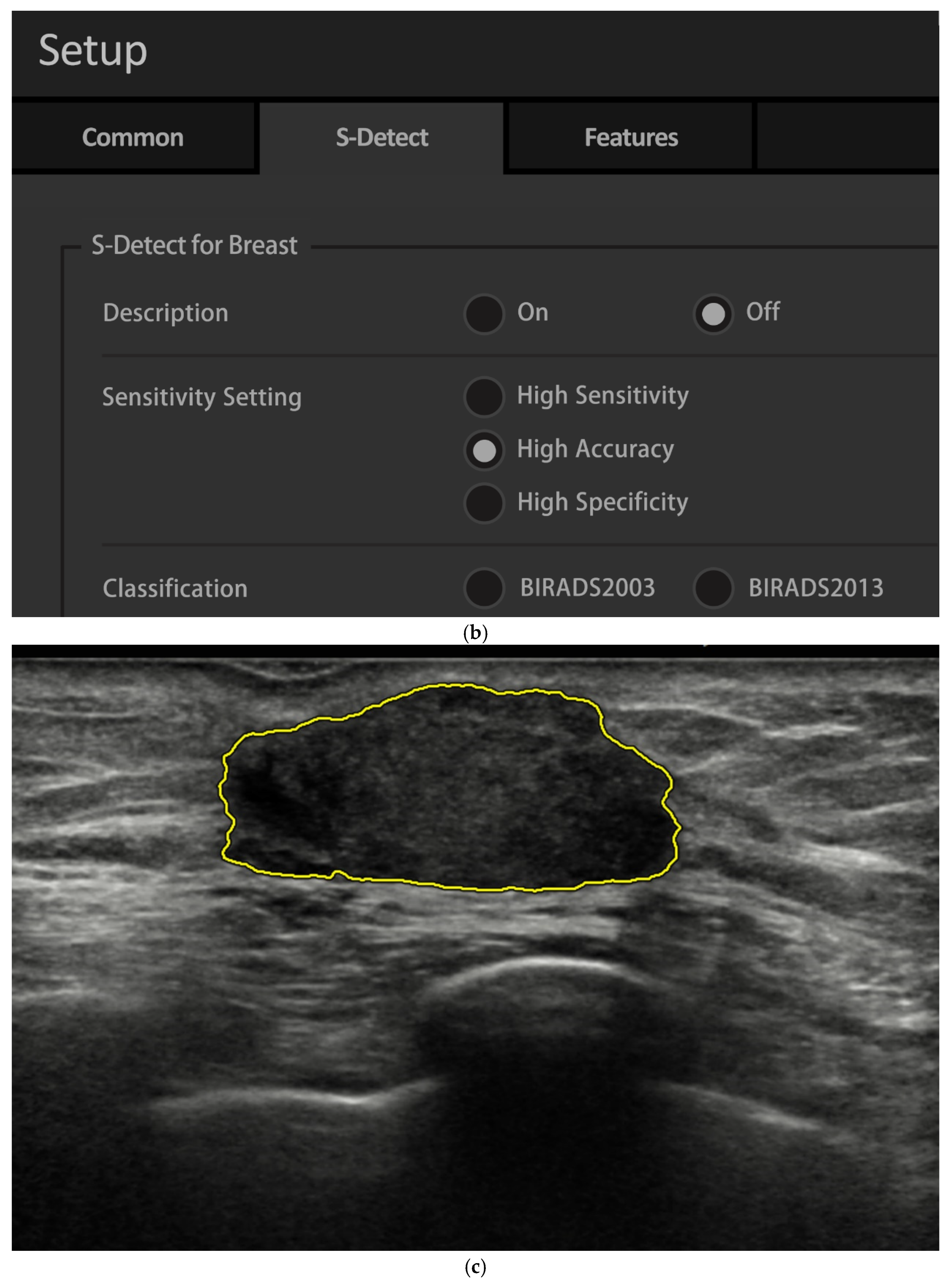

2.4. AI Analysis

2.5. Diagnosis

2.6. Performance Metrics

2.7. Statistical Analysis

3. Results

3.1. Patient Demographics and Clinical Data

3.2. Breast Lesion Imaging Features

3.3. Biopsy Histopathologic Findings

3.4. Radiologist vs. AI Performance

3.5. F1 Score: Radiologists vs. AI

3.6. AUC: Radiologist vs. AI Accuracy

3.7. Performance by Age and Size

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

References

- Ding, S.C.; Lo, Y.D. Cell-free DNA fragmentomics in liquid biopsy. Diagnostics 2022, 12, 978.

- Akkus, Z.; Cai, J.; Boonrod, A.; Zeinoddini, A.; Weston, A.D.; Philbrick, K.A.; Erickson, B.J. A Survey of Deep-Learning Applications in Ultrasound: Artificial Intelligence-Powered Ultrasound for Improving Clinical Workflow. J. Am. Coll. Radiol. 2019, 16, 1318–1328.

- Song, P.; Zhang, L.; Bai, L.; Wang, Q.; Wang, Y. Diagnostic performance of ultrasound with computer-aided diagnostic system in detecting breast cancer. Heliyon 2023, 9, e20712.

- He, P.; Chen, W.; Bai, M.-Y.; Li, J.; Wang, Q.-Q.; Fan, L.-H.; Zheng, J.; Liu, C.-T.; Zhang, X.-R.; Yuan, X.-R. Application of computer-aided diagnosis to predict malignancy in BI-RADS 3 breast lesions. Heliyon 2024, 10, e24560.

- Wu, G.G.; Zhou, L.Q.; Xu, J.W.; Wang, J.Y.; Wei, Q.; Deng, Y.B.; Cui, X.W.; Dietrich, C.F. Artificial intelligence in breast ultrasound. World J. Radiol. 2019, 11, 19–26.

- Catalano, O.; Fusco, R.; De Muzio, F.; Simonetti, I.; Palumbo, P.; Bruno, F.; Borgheresi, A.; Agostini, A.; Gabelloni, M.; Varelli, C. Recent advances in ultrasound breast imaging: From industry to clinical practice. Diagnostics 2023, 13, 980.

- Wang, X.; Meng, S. Diagnostic accuracy of S-Detect to breast cancer on ultrasonography: A meta-analysis (PRISMA). Medicine 2022, 101, e30359.

- Brunetti, N.; Calabrese, M.; Martinoli, C.; Tagliafico, A.S. Artificial Intelligence in Breast Ultrasound: From Diagnosis to Prognosis-A Rapid Review. Diagnostics 2022, 13, 58.

- Berg, W.A.; Gur, D.; Bandos, A.I.; Nair, B.; Gizienski, T.-A.; Tyma, C.S.; Abrams, G.; Davis, K.M.; Mehta, A.S.; Rathfon, G. Impact of original and artificially improved artificial intelligence–based computer-aided diagnosis on breast US interpretation. J. Breast Imaging 2021, 3, 301–311.

- Du, L.-W.; Liu, H.-L.; Cai, M.-J.; Pan, J.-Z.; Zha, H.-L.; Nie, C.-L.; Lin, M.-J.; Li, C.-Y.; Zong, M.; Zhang, B. Ultrasound S-detect system can improve diagnostic performance of less experienced radiologists in differentiating breast masses. A retrospective dual-center study. Br. J. Radiol. 2024, 98, 404–411.

- Chen, P.; Tong, J.; Lin, T.; Wang, Y.; Yu, Y.; Chen, M.; Yang, G. The added value of S-detect in the diagnostic accuracy of breast masses by senior and junior radiologist groups: A systematic review and meta-analysis. Gland Surg. 2022, 11, 1946.

- O’Connell, A.M.; Bartolotta, T.V.; Orlando, A.; Jung, S.H.; Baek, J.; Parker, K.J. Diagnostic Performance of an Artificial Intelligence System in Breast Ultrasound. J. Ultrasound Med. 2022, 41, 97–105.

- Antico, M.; Sasazawa, F.; Wu, L.; Jaiprakash, A.; Roberts, J.; Crawford, R.; Pandey, A.K.; Fontanarosa, D. Ultrasound guidance in minimally invasive robotic procedures. Med. Image Anal. 2019, 54, 149–167.

- Yongping, L.; Zhou, P.; Juan, Z.; Yongfeng, Z.; Liu, W.; Shi, Y. Performance of Computer-Aided Diagnosis in Ultrasonography for Detection of Breast Lesions Less and More Than 2 cm: Prospective Comparative Study. JMIR Med. Inform. 2020, 8, e16334.

- Wu, J.Y.; Zhao, Z.Z.; Zhang, W.Y.; Liang, M.; Ou, B.; Yang, H.Y.; Luo, B.M. Computer-Aided Diagnosis of Solid Breast Lesions With Ultrasound: Factors Associated With False-negative and False-positive Results. J. Ultrasound Med. 2019, 38, 3193–3202.

- Cheng, Y.; Xia, Q.; Wang, J.; Xie, H.; Yu, Y.; Liu, H.; Yao, Z.; Hu, J. Value of ultrasonic S-Detect technique in diagnosis of breast masses. J. South. Med. Univ. 2022, 42, 1044–1049.

- Choi, J.S.; Han, B.K.; Ko, E.S.; Bae, J.M.; Ko, E.Y.; Song, S.H.; Kwon, M.R.; Shin, J.H.; Hahn, S.Y. Effect of a Deep Learning Framework-Based Computer-Aided Diagnosis System on the Diagnostic Performance of Radiologists in Differentiating between Malignant and Benign Masses on Breast Ultrasonography. Korean J. Radiol. 2019, 20, 749–758.

- Bartolotta, T.V.; Orlando, A.A.M.; Di Vittorio, M.L.; Amato, F.; Dimarco, M.; Matranga, D.; Ienzi, R. S-Detect characterization of focal solid breast lesions: A prospective analysis of inter-reader agreement for US BI-RADS descriptors. J. Ultrasound 2021, 24, 143–150.

- Hicks, S.A.; Strümke, I.; Thambawita, V.; Hammou, M.; Riegler, M.A.; Halvorsen, P.; Parasa, S. On evaluation metrics for medical applications of artificial intelligence. Sci. Rep. 2022, 12, 5979.

- Hand, D.J.; Christen, P.; Kirielle, N.F. An interpretable transformation of the F-measure. Mach. Learn. 2021, 110, 451–456.

- Park, S.H.; Han, K. Methodologic guide for evaluating clinical performance and effect of artificial intelligence technology for medical diagnosis and prediction. Radiology 2018, 286, 800–809.

- Qureshi, S.A.; Hussain, L.; Sadiq, T.; Shah, S.T.H.; Mir, A.A.; Nadim, M.A.; Williams, D.K.A.; Duong, T.Q.; Habib, N.; Ahmad, A. Breast Cancer Detection using Mammography: Image Processing to Deep Learning. IEEE Access 2024.

- Li, H.-Y.; Lin, Y.; Hong, Y.-T.; Chou, C.-P. Comparative Study of Artificial Intelligence-based System Alone in Synthesized Mammography Versus Radiologist’s Interpretation of Digital Breast Tomosynthesis in Screening Women. J. Radiol. Sci. 2024, 49, 59–65.

- Kim, K.; Song, M.K.; Kim, E.K.; Yoon, J.H. Clinical application of S-Detect to breast masses on ultrasonography: A study evaluating the diagnostic performance and agreement with a dedicated breast radiologist. Ultrasonography 2017, 36, 3–9.

- Wei, Q.; Yan, Y.-J.; Wu, G.-G.; Ye, X.-R.; Jiang, F.; Liu, J.; Wang, G.; Wang, Y.; Song, J.; Pan, Z.-P. The diagnostic performance of ultrasound computer-aided diagnosis system for distinguishing breast masses: A prospective multicenter study. Eur. Radiol. 2022, 32, 4046–4055.

- Park, H.J.; Kim, S.M.; La Yun, B.; Jang, M.; Kim, B.; Jang, J.Y.; Lee, J.Y.; Lee, S.H. A computer-aided diagnosis system using artificial intelligence for the diagnosis and characterization of breast masses on ultrasound: Added value for the inexperienced breast radiologist. Medicine 2019, 98, e14146.

- Shreffler, J.; Huecker, M.R. Diagnostic Testing Accuracy: Sensitivity, Specificity, Predictive Values and Likelihood Ratios. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2020. Available online: www.ncbi.nlm.nih.gov/books/NBK557491/ (accessed on 28 December 2024).

- Xing, B.; Chen, X.; Wang, Y.; Li, S.; Liang, Y.K.; Wang, D. Evaluating breast ultrasound S-detect image analysis for small focal breast lesions. Front. Oncol. 2022, 12, 1030624.

- Cho, E.; Kim, E.K.; Song, M.K.; Yoon, J.H. Application of Computer-Aided Diagnosis on Breast Ultrasonography: Evaluation of Diagnostic Performances and Agreement of Radiologists According to Different Levels of Experience. J. Ultrasound Med. 2018, 37, 209–216.

- Chiang, C.-L.; Liang, H.-L.; Chou, C.-P.; Huang, J.-S.; Yang, T.-L.; Chou, Y.-H.; Pan, H.-B. Easily recognizable sonographic patterns of ductal carcinoma in situ of the breast. J. Chin. Med. Assoc. 2016, 79, 493–499.

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510.

- Bahl, M.; Chang, J.M.; Mullen, L.A.; Berg, W.A. Artificial intelligence for breast ultrasound: AJR expert panel narrative review. Am. J. Roentgenol. 2024, 223, e2330645.

- Park, S.H. Artificial intelligence for ultrasonography: Unique opportunities and challenges. Ultrasonography 2020, 40, 3.

| Characteristic | n (%) |
|---|---|
| Total patients | 232 |
| Age, y | |
| Mean ± SD | 50.2 ± 14.2 |
| Median, range | 48, 19–87 |
| >50 | 108 (47) |
| ≤50 | 124 (53) |
| Menopausal status | |
| Premenopausal state | 137 (59) |
| Postmenopausal state | 95 (41) |
| Laterality of lesion | |
| Left breast | 116 (50) |
| Right breast | 103 (44) |
| Bilateral breasts | 13 (6) |
| Lesion number per patient | |
| 1 | 209 (90) |
| 2 | 18 (8) |
| ≥3 | 5 (2) |
| Purpose of exam | |
| Screening | 67 (29) |
| Diagnosis | 165 (71) |
| Methods of diagnosis | |
| Biopsy | 147 (63) |
| Follow-up (24 months) | 85 (37) |

| Characteristic | n (%) |
|---|---|
| Final diagnosis | |
| Malignant | 70 (27) |
| Benign | 190 (73) |
| Diagnosis method | |
| Biopsy | 160 (62) |
| 24-month follow-up | 100 (38) |
| Shape | |
| Oval | 150 (58) |
| Round | 21 (8) |
| Irregular | 89 (34) |
| Margin | |
| Circumscribed | 128 (50) |
| Indistinct | 49 (19) |
| Angular | 7 (2) |
| Microlobulated | 57 (22) |
| Spiculated | 19 (7) |
| Lesion size (cm) | |
| <1 | 79 (30) |
| ≥1, ≤2 | 113 (44) |
| >2 | 68 (26) |
| BI-RADS determined by radiologists | |
| 2 | 59 (23) |
| 3 | 63 (24) |
| 4 | 133 (51) |
| 5 | 5 (2) |

| Characteristics | n (%) |
|---|---|
| Benign (n = 90) | |
| Fibroadenoma | 40 (44) |
| FCD (fibrocystic changes) | 32 (36) |
| Inflammatory breast disease | 5 (6) |
| Papilloma | 4 (4) |
| Phyllodes tumor | 2 (2) |
| Other benign pathology | 7 (8) |
| Malignant (n = 70) | |
| IDC (invasive ductal carcinoma) | 57 (81) |
| ILC (invasive lobular carcinoma) | 5 (7) |
| DCIS (ductal carcinoma in situ) | 2 (3) |
| Papillary ductal carcinoma in situ | 2 (3) |
| Other invasive cancer | 4 (6) |

| Mode | Sensitivity (%) | Specificity (%) | Accuracy (%) | PPV (%) | NPV (%) | F1 Score |
|---|---|---|---|---|---|---|
| Rad | 98.6 (92.4–100) | 64.2 (56.7–70.8) | 73.5 (67.7–78.7) | 50.7 (45.9–55.5) | 99.2 (94.5–100) | 0.668 (0.588–0.737) |
| HSe | 95.7 (87.9–99.1) p = 0.049 * | 68.4 (61.3–75) p = 0.288 | 75.8 (70.1–80.9) p = 0.545 | 52.8 (47.4–58.1) p = 0.643 | 97.7 (95.6–99.8) p = 0.183 | 0.678 (0.599–0.751) |
| HAc | 81.4 (70.3–89.7) p < 0.001 *** | 87.9 (82.4–92.2) p < 0.001 *** | 86.2 (81.4–90.1) p < 0.001 *** | 71.3 (62.4–78.7) p < 0.001 *** | 92.8 (88.7–95.5) p < 0.001 *** | 0.760 (0.677–0.832) |
| HSp | 45.7 (33.7–58.1) p < 0.001 *** | 95.8 (91.9–98.2) p < 0.001 *** | 82.3 (77.1–86.8) p = 0.015 ** | 80.0 (66.0–89.2) p < 0.001 *** | 82.7 (79.4–85.6) p < 0.001 *** | 0.579 (0.465–0.685) |
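The point estimates in the table appear to follow the usual confusion-matrix definitions, with a malignant call treated as positive. The minimal Python sketch below recomputes them from lesion-level counts. The names `ConfusionMatrix` and `diagnostic_metrics` are hypothetical, the counts are illustrative values chosen to be consistent with the HAc row and the 70 malignant and 190 benign lesions (they are not reported in the article), and half-up rounding is an assumption about how the table was rounded. Confidence intervals and p-values are not reproduced.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP


@dataclass(frozen=True)
class ConfusionMatrix:
    """Lesion-level counts; 'positive' means malignant."""
    tp: int  # malignant lesions called malignant
    fn: int  # malignant lesions called benign
    tn: int  # benign lesions called benign
    fp: int  # benign lesions called malignant


def _ratio(num: int, den: int, scale: int, places: str) -> float:
    """Exact scale * num / den, rounded half-up (assumed convention)."""
    value = Decimal(scale * num) / Decimal(den)
    return float(value.quantize(Decimal(places), rounding=ROUND_HALF_UP))


def diagnostic_metrics(cm: ConfusionMatrix) -> dict[str, float]:
    """Percentages to one decimal place; F1 as a fraction to three places."""

    def pct(num: int, den: int) -> float:
        return _ratio(num, den, 100, "0.1")

    total = cm.tp + cm.fn + cm.tn + cm.fp
    return {
        "sensitivity": pct(cm.tp, cm.tp + cm.fn),
        "specificity": pct(cm.tn, cm.tn + cm.fp),
        "accuracy": pct(cm.tp + cm.tn, total),
        "ppv": pct(cm.tp, cm.tp + cm.fp),
        "npv": pct(cm.tn, cm.tn + cm.fn),
        # F1 = harmonic mean of PPV and sensitivity = 2TP / (2TP + FP + FN)
        "f1": _ratio(2 * cm.tp, 2 * cm.tp + cm.fp + cm.fn, 1, "0.001"),
    }


# Hypothetical counts consistent with the HAc row (70 malignant, 190 benign).
hac = ConfusionMatrix(tp=57, fn=13, tn=167, fp=23)
print(diagnostic_metrics(hac))
# {'sensitivity': 81.4, 'specificity': 87.9, 'accuracy': 86.2, 'ppv': 71.3, 'npv': 92.8, 'f1': 0.76}
```

Exact decimal arithmetic is used because Python's built-in `round` rounds halves to even, so a PPV of exactly 71.25% would otherwise print as 71.2 rather than 71.3. Because F1 depends only on TP, FP, and FN, it ignores true negatives, which is consistent with HSp having the highest specificity but the lowest F1 in the table.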

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hong, Y.-T.; Yu, Z.-H.; Chou, C.-P. Comparative Study of AI Modes in Ultrasound Diagnosis of Breast Lesions. Diagnostics 2025, 15, 560. https://doi.org/10.3390/diagnostics15050560
