3.1. Model Performance Evaluation

The main objective of the four experiments was to detect PCa in order to improve patient outcomes, streamline the diagnostic process, and reduce the time and costs incurred by patients. We allocated 70% of the TPP dataset (1072 images) to training and the remaining 30% (456 images) to testing. Four DL models, DenseNet-169, MobileNet-V2, ResNet-50, and VGG-19, were pre-trained on the ImageNet dataset in a supervised manner.

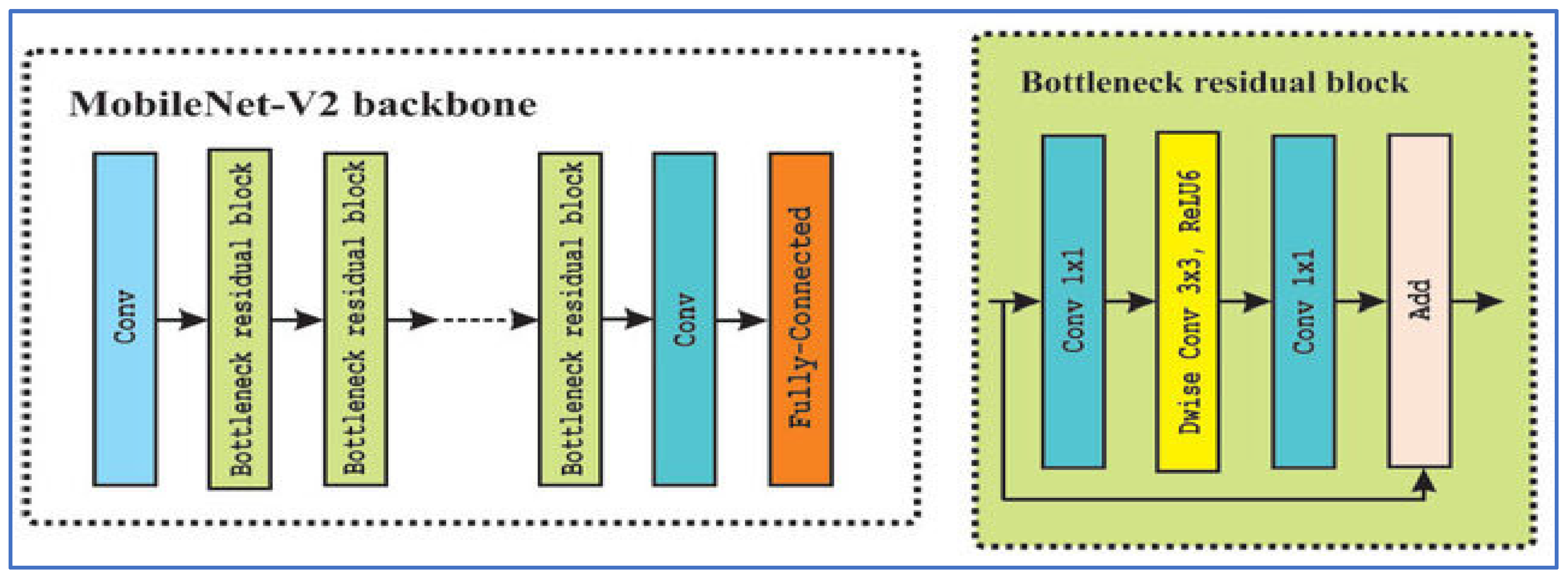

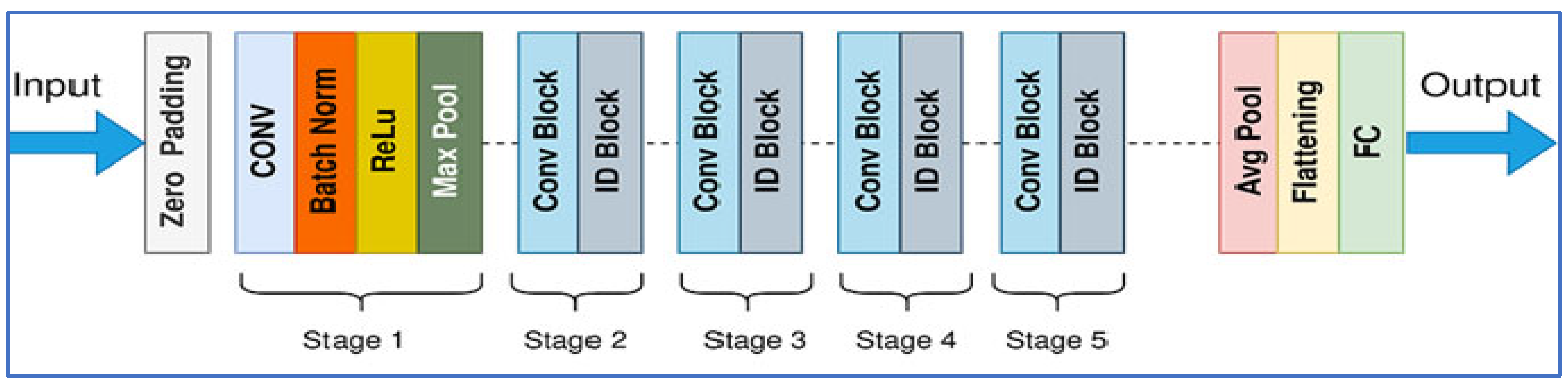

In the first experiment, the four DL models were used both to extract deep features that capture high-level image representations and to classify the images. In the second experiment, we again used the four DL models for deep feature extraction but applied SVD to reduce dimensionality, decrease computational complexity, and preserve important information; the reduced features were classified with four ML techniques: XGB, CatBoost, RF, and SVM.

In the third experiment, we extracted handcrafted HOG features to capture structural patterns and classified them with the same ML techniques.

Finally, in the fourth experiment, we combined deep features from each of the four DL models with handcrafted HOG features. We again used SVD to reduce dimensionality, lower computational complexity, and retain critical information, and employed the same ML techniques as classifiers.

All four experiments used five-fold cross-validation. The training dataset was split into five equal parts; in each iteration, one part served as the validation set and the other four were used to train the model, so each iteration was an independent training and validation run. The process was repeated five times, with each part serving as the validation set once, and performance was averaged across the five iterations to assess the model's generalization ability. The DL and ML models were evaluated using the metrics defined in Equations (1)–(7).
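A minimal sketch of the five-fold procedure described above, assuming scikit-learn's `KFold` and a synthetic dataset of 1072 samples standing in for the training images' features. The logistic-regression classifier is a placeholder, not one of the models used in the study.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import KFold

# Synthetic stand-in for 1072 training samples (assumption: not the TPP data).
X, y = make_classification(n_samples=1072, n_features=32, random_state=0)

kf = KFold(n_splits=5, shuffle=True, random_state=0)   # five (near-)equal parts
fold_scores, val_sizes = [], []
for fold, (train_idx, val_idx) in enumerate(kf.split(X), start=1):
    model = LogisticRegression(max_iter=1000)          # fresh model for every fold
    model.fit(X[train_idx], y[train_idx])              # train on the other four parts
    score = accuracy_score(y[val_idx], model.predict(X[val_idx]))
    fold_scores.append(score)
    val_sizes.append(len(val_idx))
    print(f"Fold {fold}: train={len(train_idx)}, val={len(val_idx)}, accuracy={score:.4f}")

# Generalization estimate: the mean of the five validation scores.
mean_accuracy = float(np.mean(fold_scores))
print(f"Mean accuracy over 5 folds: {mean_accuracy:.4f}")
```

Because 1072 is not divisible by five, `KFold` makes two of the folds one sample larger, so the parts are equal only up to one sample.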

The results of the five-fold cross-validation for the first experiment, in which the four DL models served as both feature extractors and classifiers, are presented in Table 2, Table 3, Table 4, Table 5, and Figure 7. The average accuracies were 94.37% for DenseNet-169, 74.08% for MobileNet-V2, 79.33% for ResNet-50, and 98.10% for VGG-19, so VGG-19 achieved the highest accuracy among the models evaluated.

Table 2 illustrates that DenseNet-169 achieved an accuracy of 94.37%, with a specificity of 94.25%, precision of 94.45%, recall of 94.51%, and an F1-score of 94.38%. The model consistently exhibited strong discriminative capabilities, obtaining an average AUC of 98.70%. These findings indicate that DenseNet-169 maintained a well-balanced trade-off between sensitivity and specificity, with stable precision and recall across different folds. Although minor fluctuations were noted—particularly a decrease in specificity during the fourth fold and recall in the second fold—the overall averages suggested a solid and dependable classification performance for the dataset.

In the first fold, DenseNet-169 recorded an accuracy of 94.44%, accompanied by high specificity (96.08%) and precision (95.95%). However, recall was slightly lower at 92.81%, resulting in an F1-score of 94.35% and an impressive AUC of 98.71%. The second fold saw a slight decline in performance, with accuracy at 92.48% and recall at 88.89%, although specificity (96.08%) and precision (95.77%) remained robust. The decreased recall lowered the F1-score to 92.20%, while AUC remained strong at 97.85%. The third fold exhibited balanced performance, achieving an accuracy of 95.10% with precision (94.81%) and recall (95.42%) nearly equal, leading to an F1-score of 95.11%. AUC reached 99.15%, indicating strong discriminative capability. In the fourth fold, recall peaked at 98.68%, but specificity fell to 86.27%, the lowest observed across all folds. This imbalance resulted in an accuracy of 92.46% and an F1-score of 92.88%, while AUC still maintained a high value at 98.15%. The fifth fold demonstrated the highest overall performance, achieving an accuracy of 97.38%, specificity of 98.03%, precision of 98.01%, and recall of 96.73%, culminating in an F1-score of 97.37% and the best AUC of 99.63%.
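The reported averages are the arithmetic means of the fold-wise values. The sketch below recomputes them from the DenseNet-169 fold results quoted above, using only the Python standard library; the sample standard deviation is added here as one way to quantify the fold-to-fold fluctuations and is not reported in the original tables.

```python
from statistics import mean, stdev

# Fold-wise DenseNet-169 results (%) as quoted in the text.
densenet169_folds = {
    "accuracy": [94.44, 92.48, 95.10, 92.46, 97.38],
    "recall":   [92.81, 88.89, 95.42, 98.68, 96.73],
    "f1":       [94.35, 92.20, 95.11, 92.88, 97.37],
    "auc":      [98.71, 97.85, 99.15, 98.15, 99.63],
}

summary = {}
for metric, values in densenet169_folds.items():
    # (mean, sample standard deviation) across the five folds, rounded to 2 decimals.
    summary[metric] = (round(mean(values), 2), round(stdev(values), 2))
    print(f"{metric:>8}: mean = {summary[metric][0]:.2f}%, sd = {summary[metric][1]:.2f}")
```

The means reproduce the reported averages (94.37%, 94.51%, 94.38%, and 98.70%).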

From Table 3, the MobileNet-V2 model achieved an average accuracy of 74.08%, with noticeably unbalanced performance across metrics. The average specificity was 80.38%, indicating that the model identified most negative cases, and the average precision was 79.60%, showing that its positive predictions were generally reliable. However, the average recall was only 67.82%, meaning that the model frequently missed positive cases. This weakness in recall pulled the average F1-score down to 71.66%. The average AUC of 81.86% indicated moderate separability between positive and negative cases across thresholds.

In the first fold, the model recorded a moderate accuracy of 71.57%, with a specificity of 80.39% and a precision of 76.19% but a lower recall of 62.75%, resulting in an F1-score of 68.82% and an AUC of 78.70%. In the second fold, accuracy increased to 77.45% and recall rose to 84.97%, yielding the highest F1-score across all folds (79.03%); however, specificity declined to 69.93%, indicating more false positives. The third fold showed high specificity (92.16%) and strong precision (86.81%), but recall was notably low at 51.63%, which lowered the F1-score to 64.75% despite a respectable AUC of 81.59%. In the fourth fold, the model achieved an accuracy of 74.75%, with recall increasing to 84.21% and specificity falling to 65.36%, reflecting a trade-off between sensitivity and false-positive control. Finally, in the fifth fold, the model recorded the highest specificity (94.08%) and precision (90.43%), but recall decreased to 55.56%, limiting the F1-score to 68.83% despite the best AUC across all folds (85.62%).

Table 4 shows that the ResNet-50 model achieved an average accuracy of 79.33%, a precision of 75.37%, and an F1-score of 81.92%. The average recall was high at 92.41%, underscoring the model's sensitivity to positive cases, which is essential in medical applications such as PCa detection. However, the average specificity was relatively low at 66.23%, reflecting a tendency to misclassify negative cases as positive. The average AUC of 93.00% indicated good overall discriminative capability, despite large fluctuations in specificity across folds.

Examining the fold-wise results, in Fold 1 the model achieved an accuracy of 70.92% with a notably low specificity of 41.83% but perfect recall (100%). Although the model detected all positive cases, it misclassified many negative cases, which reduced precision to 63.22%. In Fold 2, accuracy rose slightly to 74.51% and specificity to 49.67%, while recall remained very high at 99.35%; the gap between sensitivity and specificity still indicated frequent false positives. In Fold 3, the model recorded its highest specificity (89.54%) and precision (87.60%), although recall dropped to 73.86%, suggesting a more conservative behavior that favored correct negative predictions at the cost of missing some positive cases. Fold 4 showed the best overall balance, with the highest accuracy (87.54%), a recall of 90.13%, a specificity of 84.97%, a precision of 85.63%, and an F1-score of 87.82%, indicating that the model handled both positive and negative cases well in this fold. In Fold 5, the model again favored recall (98.69%) at the expense of specificity (65.13%), with an accuracy of 81.97% and an F1-score of 84.59%.
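The Fold 1 pattern (perfect recall, low specificity, low precision) follows directly from the confusion-matrix definitions. The sketch below uses the standard binary definitions, which may differ in notation from Equations (1)–(7); the counts are hypothetical, chosen under the assumption of 153 positive and 153 negative validation images because they reproduce the reported Fold 1 percentages.

```python
def metrics_from_counts(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Standard binary metrics, in percent, from confusion-matrix counts."""
    recall = tp / (tp + fn)          # sensitivity: share of positives detected
    specificity = tn / (tn + fp)     # share of negatives correctly rejected
    precision = tp / (tp + fp)       # share of positive predictions that are correct
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    f1 = 2 * precision * recall / (precision + recall)
    values = {"accuracy": accuracy, "specificity": specificity,
              "precision": precision, "recall": recall, "f1": f1}
    return {name: round(100 * v, 2) for name, v in values.items()}

# Hypothetical counts (assumes 153 positive and 153 negative images in the fold):
# all positives detected (FN = 0), but 89 of 153 negatives flagged as positive.
fold1 = metrics_from_counts(tp=153, fn=0, tn=64, fp=89)
print(fold1)
```

With no false negatives, recall is 100%, but the 89 false positives pull precision down to 63.22% and specificity to 41.83%, matching the reported Fold 1 values.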

Table 5 shows that the VGG-19 model achieved an accuracy of 98.10%, indicating highly reliable classification performance across all folds. The average specificity was 98.95%, and the average precision was 98.99%, both suggesting that the model consistently minimized false positives. The average recall was 97.25%, showing strong sensitivity in identifying positive cases. The corresponding F1-score averaged 98.07%, confirming the balance between precision and recall. Importantly, the AUC value remained at a perfect 100% across all folds, highlighting the model’s excellent discriminatory ability.

In the fold-wise analysis, the VGG-19 model achieved an accuracy of 97.06% in both the first and second folds, with perfect specificity and precision (100%) and a slightly lower recall of 94.12%, resulting in an F1-score of 96.97% and an AUC of 100%. In the third fold, the model reached its highest accuracy of 99.67%, with a recall of 100%, specificity and precision of 99.35%, an F1-score of 99.67%, and a perfect AUC of 100%. The fourth fold performed slightly lower than the third, with an accuracy of 97.70%, specificity of 95.42%, and precision of 95.60%; recall remained at 100%, giving an F1-score of 97.75% and an AUC of 100%. In the fifth fold, the model achieved an accuracy of 99.02%, with specificity and precision of 100%, recall of 98.04%, an F1-score of 99.01%, and an AUC of 100%.

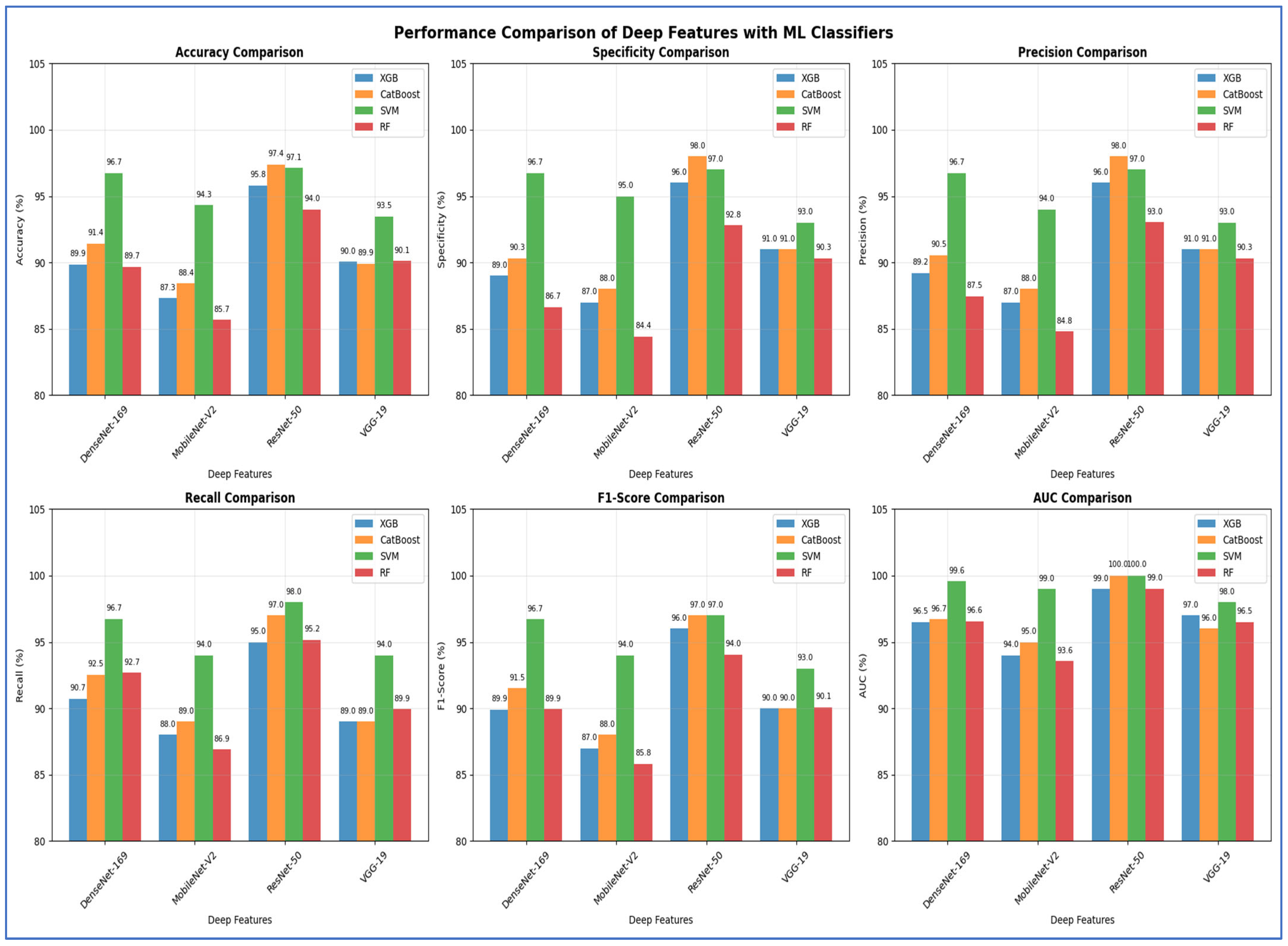

In the second experiment, we again used the four DL models to extract deep features, but this time applied SVD to reduce dimensionality, decrease computational complexity, and retain essential information. The reduced features were classified with four ML techniques: XGBoost, CatBoost, RF, and SVM. The results of the five-fold cross-validation for these models, along with their evaluation metrics, are presented in Table 6 and Figure 8.
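A minimal sketch of the second experiment's pipeline, assuming scikit-learn. Synthetic 2048-dimensional vectors stand in for pooled CNN features (2048 is the pooled feature size of ResNet-50), `TruncatedSVD` performs the reduction, and only SVM and RF are shown because XGBoost and CatBoost are separate libraries. The number of SVD components (64), the scaling step, and the classifier settings are illustrative assumptions, not the study's values.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import TruncatedSVD
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for pooled CNN features (2048-D, as for ResNet-50); not real data.
X_deep, y = make_classification(n_samples=600, n_features=2048,
                                n_informative=40, random_state=0)

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
classifiers = {
    "SVM": SVC(kernel="rbf"),
    "RF": RandomForestClassifier(n_estimators=100, random_state=0),
}

results = {}
for name, clf in classifiers.items():
    # SVD (64 components, an illustrative choice) -> scaling -> classifier.
    pipe = make_pipeline(TruncatedSVD(n_components=64, random_state=0),
                         StandardScaler(), clf)
    scores = cross_val_score(pipe, X_deep, y, cv=cv, scoring="accuracy")
    results[name] = float(scores.mean())
    print(f"{name}: mean 5-fold accuracy = {results[name]:.4f}")
```

Placing SVD inside the pipeline means it is refitted on the training folds only, so the validation fold never influences the learned projection.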

Table 6 and Figure 8 show that, with DenseNet-169 features reduced by SVD, SVM was clearly the best-performing classifier. It outperformed the other models on all evaluated metrics, achieving an accuracy of 96.73%, more than five percentage points higher than the next best model, CatBoost. SVM was also well balanced, with identical accuracy, specificity, precision, and recall (all 96.73%), the highest F1-score (96.71%), and a near-perfect AUC of 99.57%. In contrast, XGBoost, CatBoost, and RF formed a lower performance tier, with accuracies ranging from 89.66% to 91.43%, and their metrics were less consistent; for example, RF showed a notable gap between its specificity (86.65%) and recall (92.67%). Therefore, SVM was the most accurate and robust ML model for this backbone.

As shown in Table 6 and Figure 8, SVM was also clearly the best-performing classifier with MobileNet-V2 features. It achieved an accuracy of 94.31%, almost six percentage points higher than the next best model, CatBoost, and the highest scores in specificity (0.95), precision (0.94), recall (0.94), F1-score (0.94), and AUC (0.99). The other models, XGBoost, CatBoost, and RF, performed at a lower level, with accuracies ranging from 85.67% to 88.42%, and their metrics were less consistent. Thus, SVM was the most accurate and reliable ML model for this backbone.

With ResNet-50 features, CatBoost emerged as the best-performing classifier, closely followed by SVM. CatBoost achieved the highest accuracy at 97.38%, just above SVM at 97.12%, together with a perfect AUC of 1.00. It was well balanced across metrics, with top-tier specificity (0.98) and precision (0.98) and strong recall (0.97) and F1-score (0.97). SVM was a very close second, also achieving a perfect AUC with similarly balanced results. XGBoost and RF performed well but were clearly outperformed by the top two. Therefore, CatBoost was the most accurate model for this backbone, with SVM an almost equally strong alternative.

With VGG-19 features, SVM was the top-performing classifier, achieving an accuracy of 93.46%, more than three percentage points higher than the next best model, RF. SVM also achieved the highest specificity (0.93), precision (0.93), recall (0.94), F1-score (0.93), and AUC (0.98). XGBoost, CatBoost, and RF grouped closely together with accuracies of around 90%, and none consistently challenged SVM across the metrics. Therefore, SVM was the most accurate and robust ML model for this backbone.

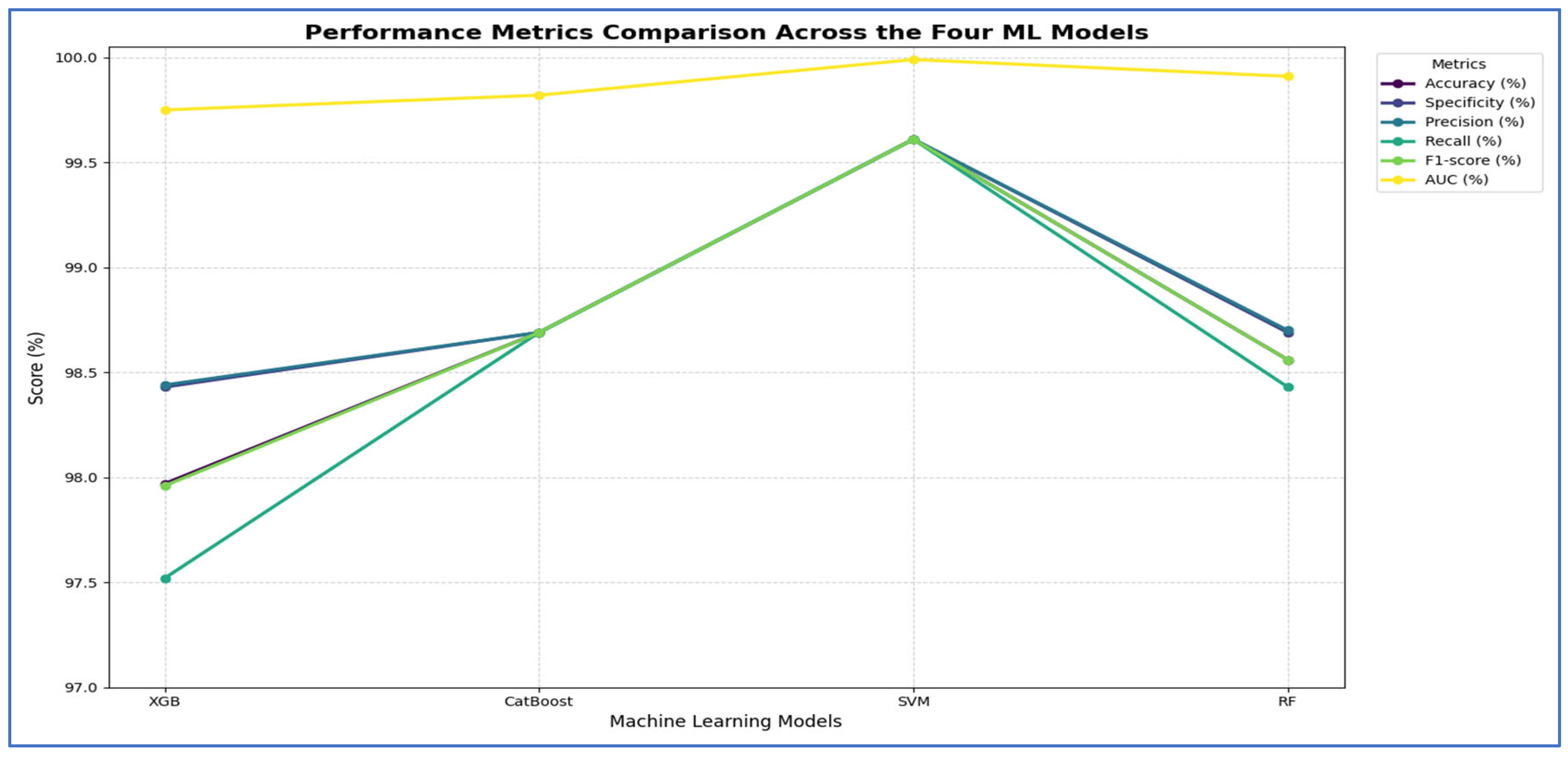

In the third experiment, we extracted handcrafted HOG features to capture structural patterns and classified them with the same ML techniques. The results of the five-fold cross-validation for this experiment, along with the evaluation metrics, are presented in Table 7 and Figure 9. Based on Table 7, SVM was the best-performing model overall, outperforming the other three models on all evaluation metrics. It achieved an accuracy of 98.30%, more than four percentage points higher than the next best models, together with the highest specificity (97.77%), precision (97.80%), recall (98.82%), and F1-score (98.31%) and a nearly perfect AUC (99.84%).

In contrast, XGBoost and CatBoost performed similarly, forming a distinct second tier with accuracies of 94.05% and 94.24%, respectively. While these models performed well, their results were consistently lower than those of SVM across all metrics. RF was the least effective, with all of its metrics, including an accuracy of 89.53%, falling noticeably below the others.

Thus, the SVM model proved to be the most accurate, balanced, and robust choice for the task.
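HOG descriptors summarize local gradient orientations. The sketch below is a deliberately simplified NumPy version: magnitude-weighted orientation histograms over non-overlapping cells, followed by a single global L2 normalization instead of the overlapping block normalization of standard HOG. The cell size (8 pixels) and the 9 unsigned orientation bins are common defaults assumed here, not the study's reported settings; libraries such as scikit-image provide a full implementation.

```python
import numpy as np

def simple_hog(image: np.ndarray, cell: int = 8, bins: int = 9) -> np.ndarray:
    """Simplified HOG: magnitude-weighted, unsigned orientation histograms per
    non-overlapping cell, L2-normalized as one vector (no block normalization)."""
    img = image.astype(np.float64)
    gy, gx = np.gradient(img)                       # derivatives along rows, columns
    magnitude = np.hypot(gx, gy)
    orientation = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned angle in [0, 180)
    bin_idx = np.minimum((orientation / (180.0 / bins)).astype(int), bins - 1)

    n_rows, n_cols = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((n_rows, n_cols, bins))
    for r in range(n_rows):
        for c in range(n_cols):
            rows = slice(r * cell, (r + 1) * cell)
            cols = slice(c * cell, (c + 1) * cell)
            # Each pixel votes into its orientation bin, weighted by gradient magnitude.
            hist[r, c] = np.bincount(bin_idx[rows, cols].ravel(),
                                     weights=magnitude[rows, cols].ravel(),
                                     minlength=bins)
    features = hist.ravel()
    norm = np.linalg.norm(features)
    return features / norm if norm > 0 else features

# Usage on a random 64x64 array standing in for a grayscale MRI slice.
rng = np.random.default_rng(0)
f = simple_hog(rng.random((64, 64)))
print(f.shape)  # (576,) = 8 x 8 cells x 9 bins
```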

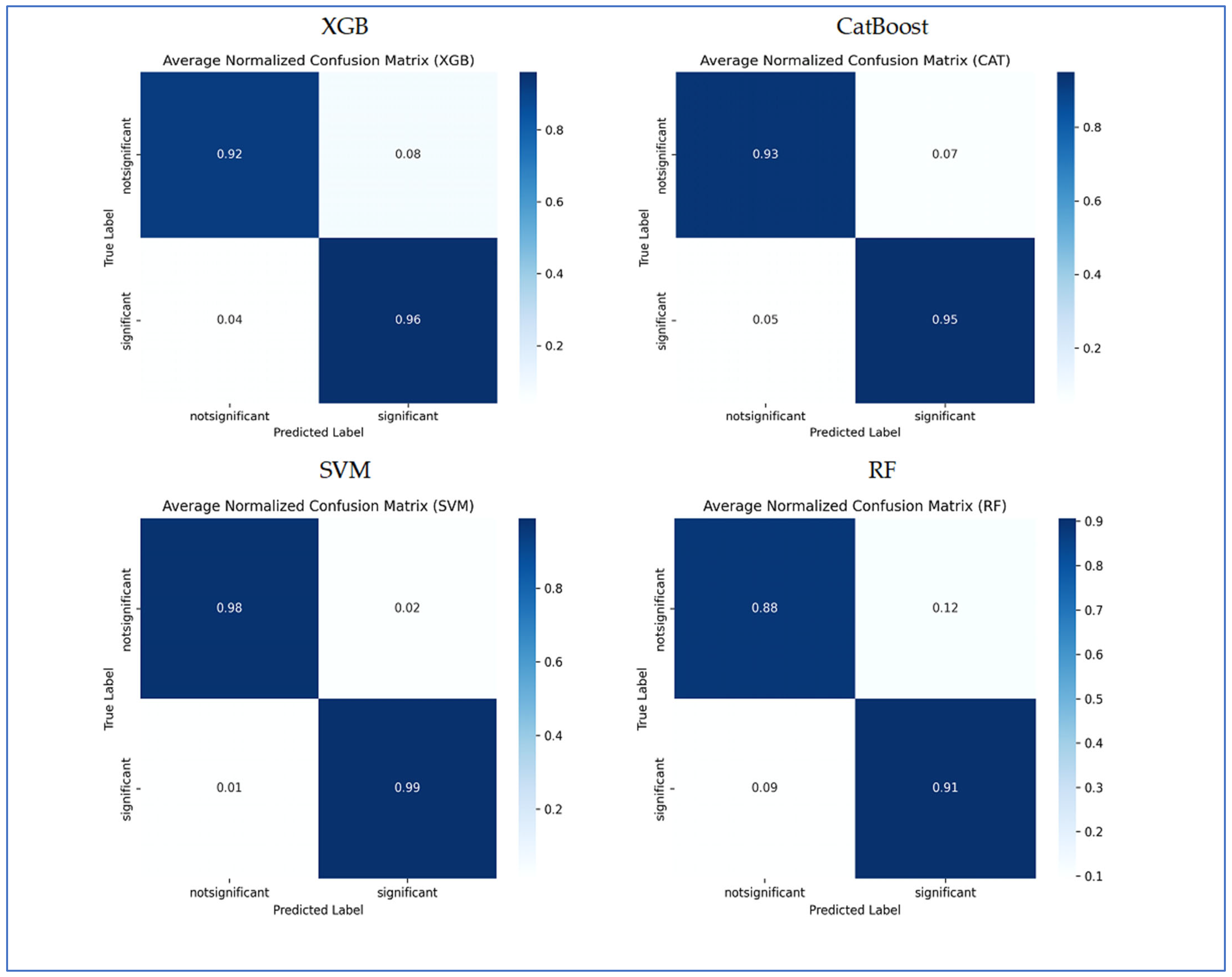

In Figure 10, the normalized confusion matrices illustrate the classification performance of the four ML models. XGB correctly classified 92% of the non-significant cases and 96% of the significant cases, misclassifying 8% of non-significant cases as significant and 4% of significant cases as non-significant; overall, it performed strongly, with slightly higher accuracy on the significant class. CatBoost correctly identified 93% of non-significant and 95% of significant cases, misclassifying 7% of non-significant cases as significant and 5% of significant cases as non-significant, which indicates balanced classification ability with a slight tendency to mislabel non-significant samples. SVM delivered the highest performance, correctly classifying 98% of non-significant and 99% of significant cases, with only 2% of non-significant cases predicted as significant and 1% of significant cases predicted as non-significant. RF correctly detected 88% of non-significant and 91% of significant cases; misclassification was more frequent than for the other models, with 12% of non-significant cases predicted as significant and 9% of significant cases predicted as non-significant. In conclusion, SVM achieved the best overall performance with the fewest misclassifications, followed by XGBoost and CatBoost, which yielded very similar results, while RF underperformed the other models, particularly on the non-significant class.
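Row-normalized confusion matrices such as those in Figure 10 divide each row by the number of true samples in that class, so the diagonal gives the per-class accuracy. A minimal sketch with scikit-learn's `confusion_matrix(..., normalize="true")` on toy labels, not the study's predictions:

```python
from sklearn.metrics import confusion_matrix

# Toy labels: 0 = non-significant, 1 = significant (illustrative only).
y_true = [0] * 10 + [1] * 10
y_pred = [0] * 9 + [1] + [1] * 10   # one non-significant case mislabelled

# normalize="true" divides each row by the count of that true class.
cm = confusion_matrix(y_true, y_pred, labels=[0, 1], normalize="true")
print(cm)
```

Here the first row reads 0.9 and 0.1 (90% of non-significant cases correct, 10% predicted as significant), the same reading used for the percentages above.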

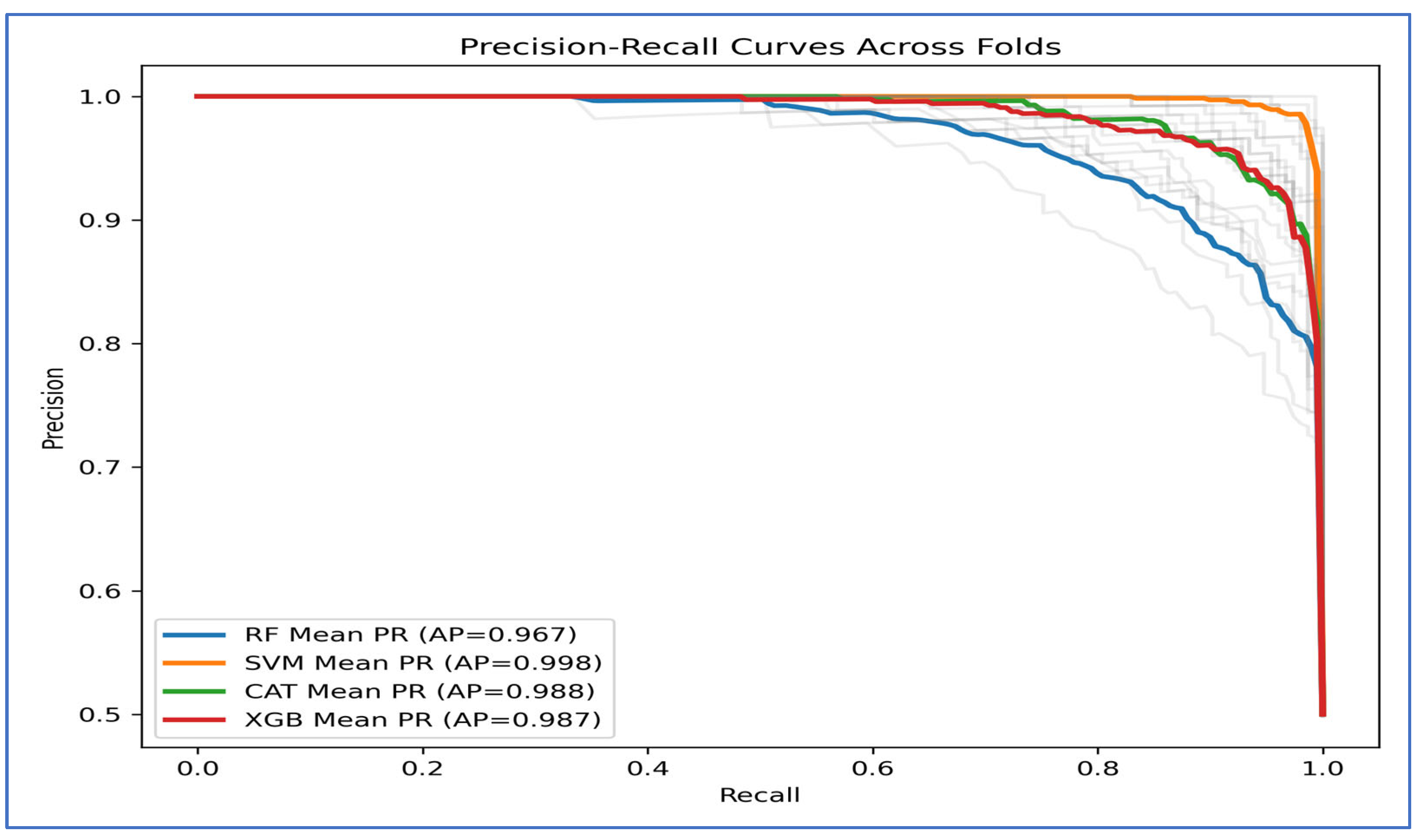

Figure 11 depicts the precision-recall (PR) curves for the four ML models, all of which achieved high precision and recall and thus distinguished well between the classes. The average precision (AP) values show that SVM performed best (AP = 0.998), followed closely by CatBoost (0.988) and XGBoost (0.987), while RF had the lowest AP (0.967).

The PR curve for SVM remained near the top-right corner, indicating that it maintained high precision and recall across thresholds. CatBoost and XGBoost also showed strong, consistent results, though slightly below SVM. In contrast, the RF curve declined earlier, sacrificing precision at higher recall levels, which contributed to its lower AP.

Overall, Figure 11 demonstrated that SVM consistently outperformed the other models, whereas CatBoost and XGBoost achieved nearly identical outcomes. RF fell behind, especially at higher recall values.
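AP summarizes a PR curve as the precision at each threshold weighted by the increase in recall, AP = sum over n of (R_n - R_(n-1)) * P_n, which is how scikit-learn's `average_precision_score` computes it. The toy scores below are illustrative only; computing one AP per fold and averaging is an assumption about how the values in Figure 11 were obtained.

```python
from sklearn.metrics import average_precision_score, precision_recall_curve

# Toy labels and classifier scores (illustrative, not from the study).
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]

precision, recall, thresholds = precision_recall_curve(y_true, y_score)
ap = average_precision_score(y_true, y_score)

# Same value by hand: precision weighted by each step in recall.
# precision_recall_curve returns recall in decreasing order, ending at 0.
ap_manual = sum((recall[i] - recall[i + 1]) * precision[i]
                for i in range(len(recall) - 1))
print(f"AP = {ap:.4f}, manual = {ap_manual:.4f}")
```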

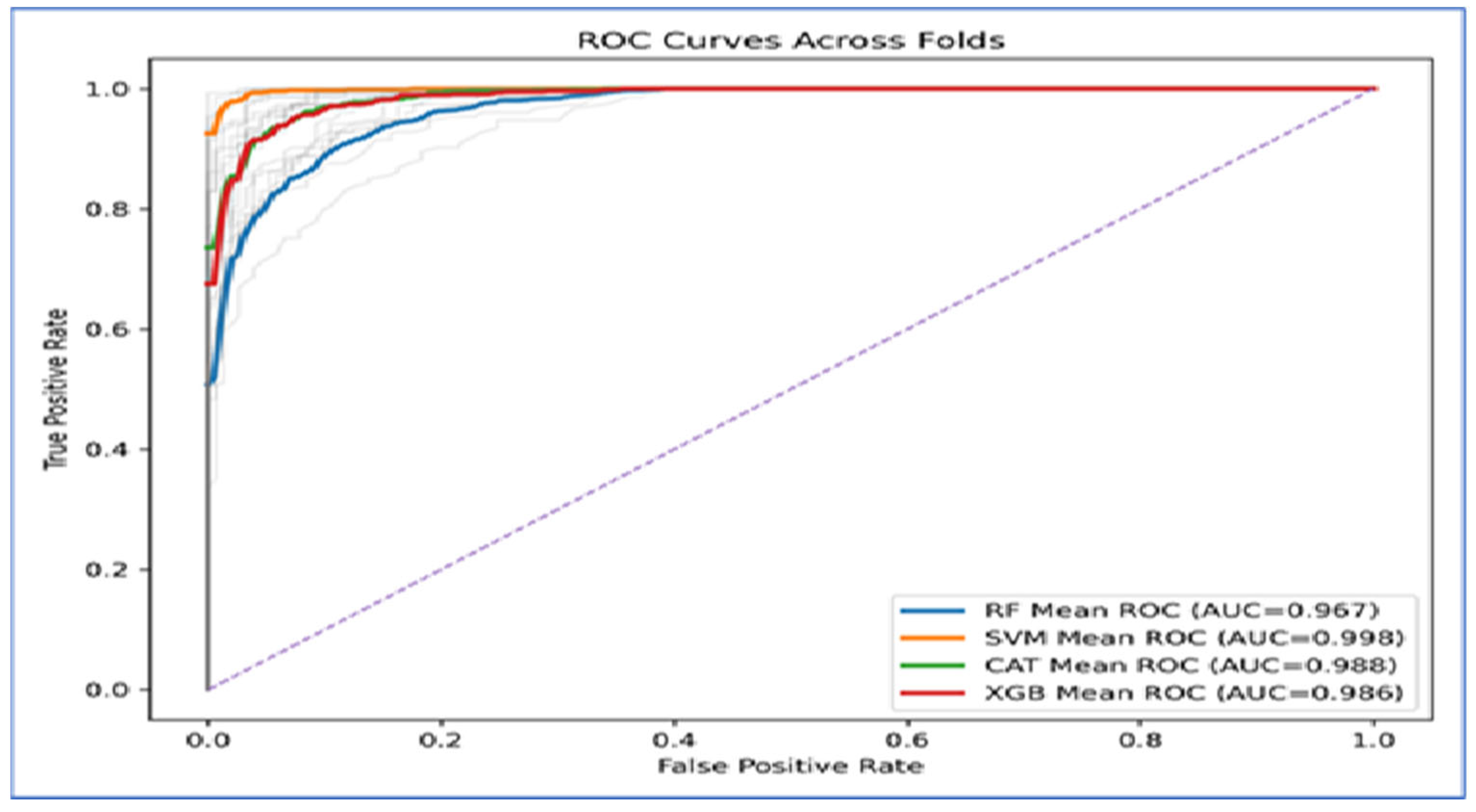

The ROC curves across folds in Figure 12 indicate that all four ML models exhibited strong classification ability, as their curves lay well above the diagonal baseline. The area under the curve (AUC) values support this finding. SVM achieved the highest mean AUC of 0.998, suggesting nearly perfect separability between the classes. CatBoost (AUC = 0.988) and XGBoost (AUC = 0.986) followed closely, both showing excellent performance with only minor deviations from the ideal curve. RF produced the lowest AUC (0.967), which, while still strong, indicated less discriminative power than the other models.

The curves also showed that SVM maintained a consistently high true positive rate across all false positive rates, and CatBoost and XGBoost exhibited similar robustness. In contrast, the RF curve lay farther from the top-left corner, which accounted for its relatively lower AUC.

In conclusion, the ROC analysis revealed that SVM outperformed all other models with near-perfect results. CatBoost and XGBoost were highly competitive, while RF lagged behind but still delivered reliable classification.
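Fold-averaged ROC curves are commonly built by interpolating each fold's curve onto a shared false-positive-rate grid and averaging the true positive rates, which works because TPR never decreases as FPR increases. The sketch below follows that approach with scikit-learn's `roc_curve` and `auc`; whether Figure 12 was produced exactly this way is an assumption, and the toy folds are illustrative.

```python
import numpy as np
from sklearn.metrics import auc, roc_curve

def mean_roc(fold_results, grid_size=101):
    """Average fold ROC curves on a common FPR grid; also return the mean per-fold AUC."""
    fpr_grid = np.linspace(0.0, 1.0, grid_size)
    tprs, aucs = [], []
    for y_true, y_score in fold_results:
        fpr, tpr, _ = roc_curve(y_true, y_score)
        aucs.append(auc(fpr, tpr))
        interp_tpr = np.interp(fpr_grid, fpr, tpr)  # TPR is non-decreasing in FPR
        interp_tpr[0] = 0.0                         # every ROC curve starts at (0, 0)
        tprs.append(interp_tpr)
    mean_tpr = np.mean(tprs, axis=0)
    mean_tpr[-1] = 1.0                              # and ends at (1, 1)
    return fpr_grid, mean_tpr, float(np.mean(aucs))

# Two toy folds: one perfectly separated, one with a positive ranked below a negative.
folds = [
    ([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9]),
    ([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]),
]
fpr_grid, mean_tpr, mean_auc = mean_roc(folds)
print(f"mean AUC = {mean_auc:.3f}")
```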

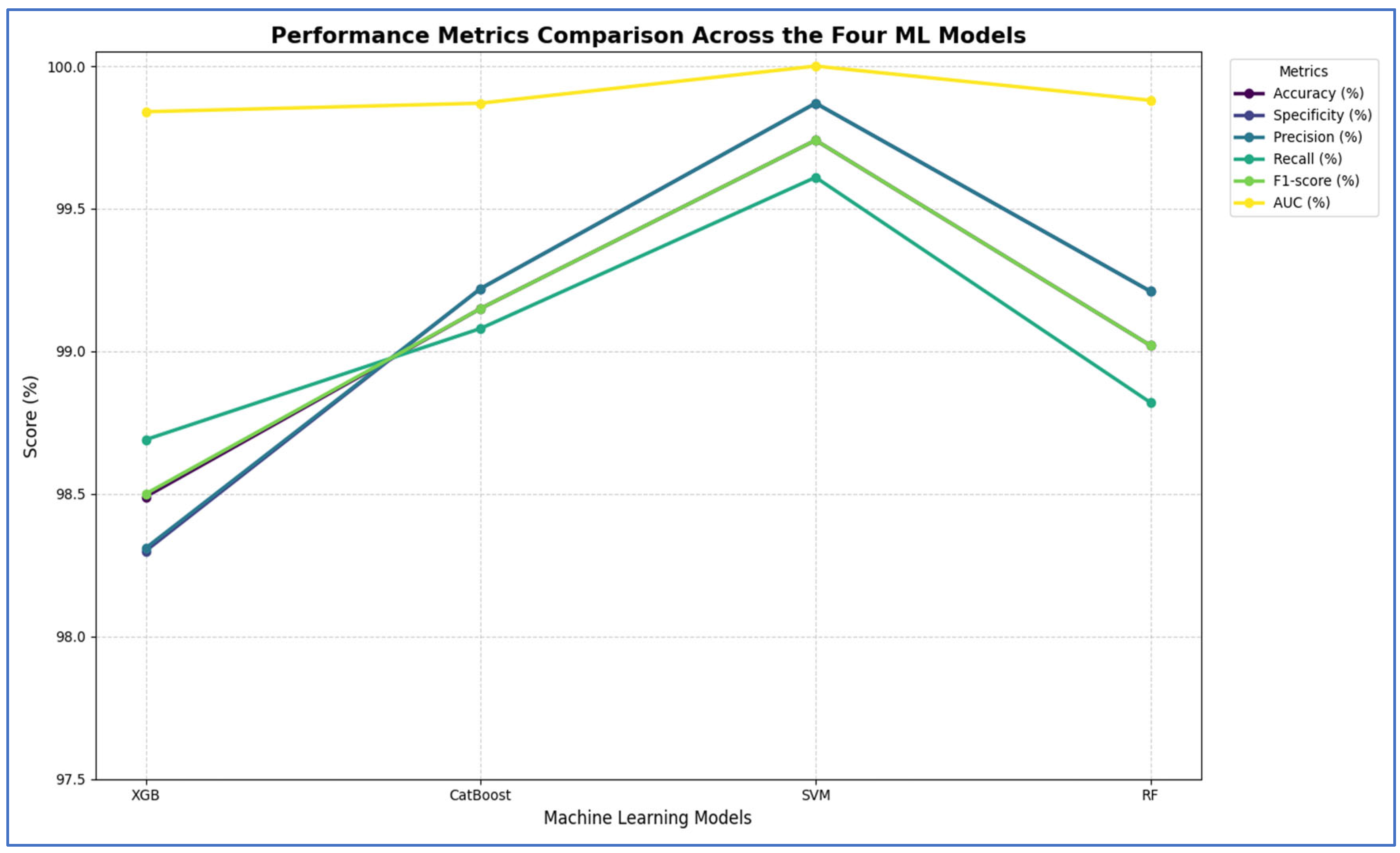

In the fourth experiment, we combined deep features from each of the four DL models (DenseNet-169, MobileNet-V2, ResNet-50, and VGG-19) with handcrafted HOG features. SVD was used to reduce dimensionality, decrease computational complexity, and preserve essential information, and the classifiers were XGBoost, CatBoost, SVM, and RF. The results of the five-fold cross-validation for these ML models, along with their evaluation metrics, are shown in Table 8, Table 9, Table 10, and Table 11. The highest average accuracy, 99.74%, was achieved by SVM combined with MobileNet-V2, ResNet-50, or VGG-19 features.
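Feature-level fusion of this kind can be sketched as concatenating the two feature blocks before SVD. In the sketch below, the 1280-dimensional block stands in for pooled MobileNet-V2 features and the 576-dimensional block for HOG descriptors; both are random placeholders, and the per-block standardization and the choice of 100 SVD components are illustrative assumptions rather than the study's settings.

```python
import numpy as np
from sklearn.decomposition import TruncatedSVD
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n_images = 200
deep_feats = rng.normal(size=(n_images, 1280))  # placeholder for pooled MobileNet-V2 features
hog_feats = rng.random(size=(n_images, 576))    # placeholder for HOG descriptors

# Standardize each block separately so neither dominates because of its scale,
# then concatenate them into one fused feature vector per image.
fused = np.hstack([
    StandardScaler().fit_transform(deep_feats),
    StandardScaler().fit_transform(hog_feats),
])

# Reduce the fused vectors to 100 components (illustrative choice).
svd = TruncatedSVD(n_components=100, random_state=0)
reduced = svd.fit_transform(fused)

print(fused.shape, "->", reduced.shape)
print(f"Variance retained: {svd.explained_variance_ratio_.sum():.3f}")
```

In a cross-validated evaluation, the scalers and SVD would be fitted on the training folds only (for example, inside a scikit-learn `Pipeline` with a `ColumnTransformer`) to avoid leaking information from the validation fold.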

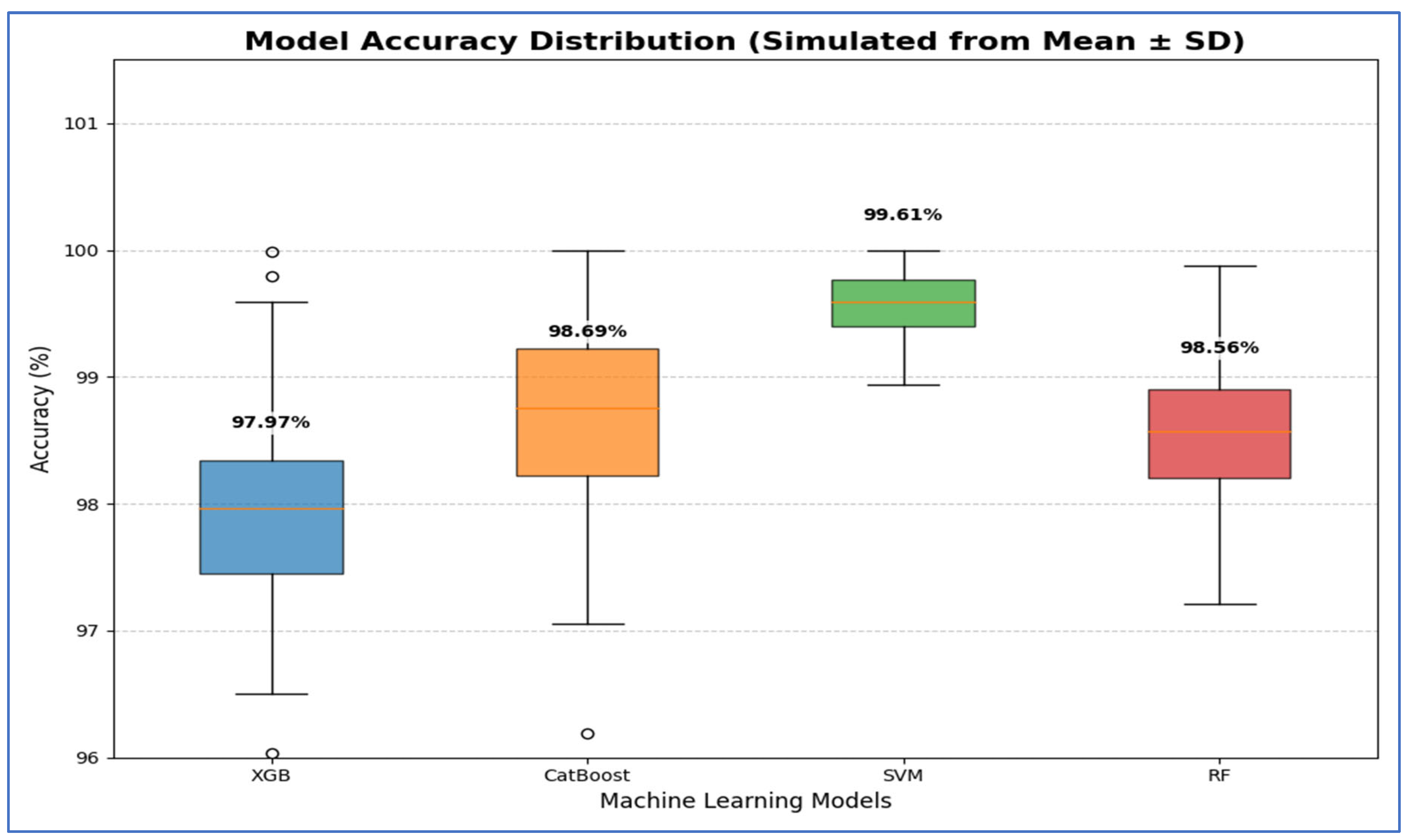

From

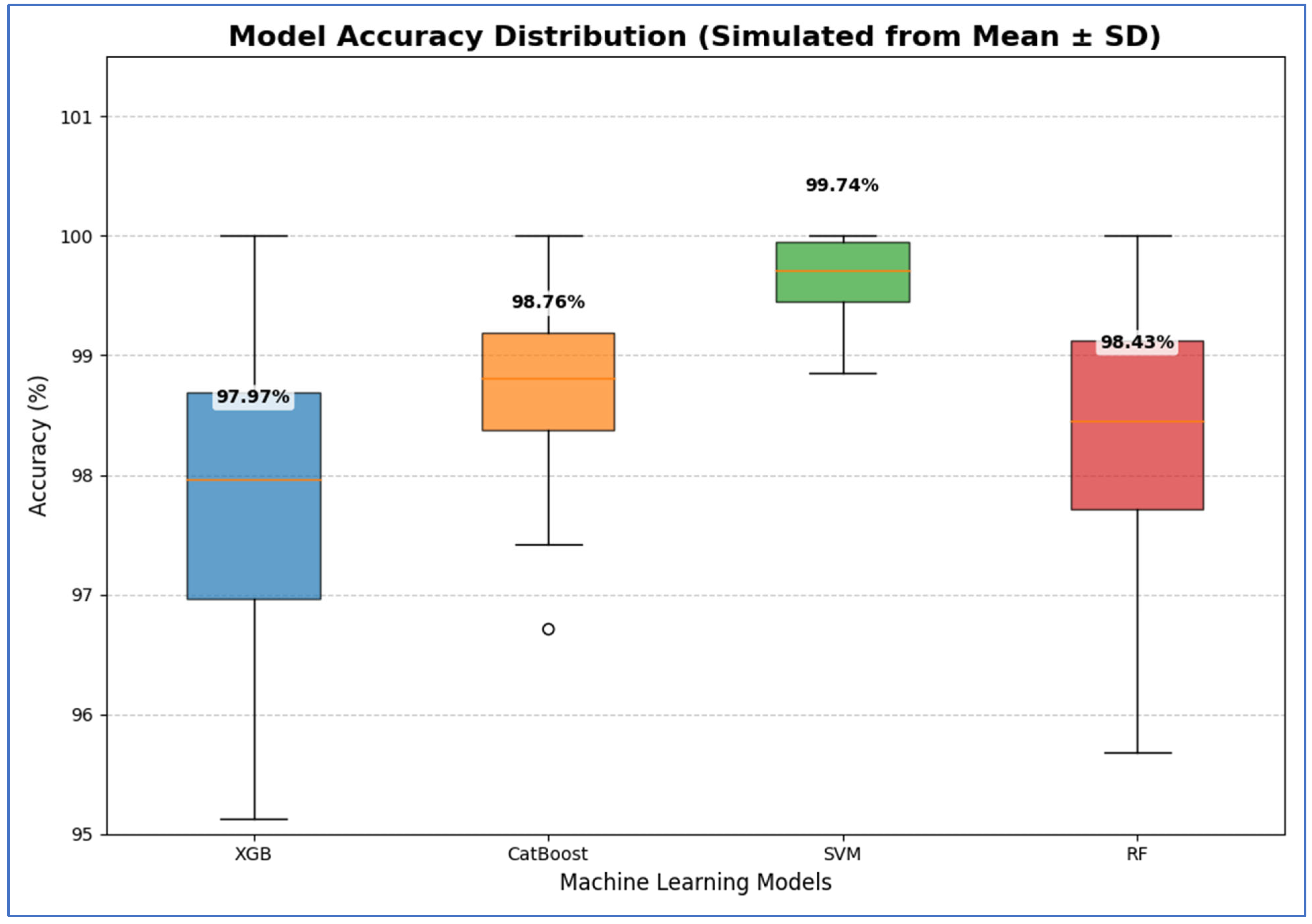

Table 8 and

Figure 13, the integration of DenseNet-169 with four ML classifiers—XGB, CatBoost, SVM, and RF—showed strong performance across all evaluation metrics. Among these models, SVM achieved the highest results, with an accuracy, specificity, precision, recall, and F1-score of 99.61% each, along with an AUC of 99.99%, indicating near-perfect classification.

CatBoost also performed exceptionally well, attaining 98.69% on all five metrics and an AUC of 99.82%, demonstrating stable and balanced results. RF was similarly competitive, with an accuracy of 98.56%, precision of 98.70%, recall of 98.43%, and an AUC of 99.91%; it slightly exceeded CatBoost in AUC and precision but fell marginally short in accuracy and recall.

XGB had the lowest results among the four, although still high, with an accuracy of 97.97%, precision of 98.44%, recall of 97.52%, and an AUC of 99.75%. While its performance was strong, it lagged slightly behind CatBoost, RF, and SVM.

In summary, all four ML models performed well when used with DenseNet-169. However, SVM had the best overall performance, followed by CatBoost and RF, while XGB showed slightly lower but still strong results.

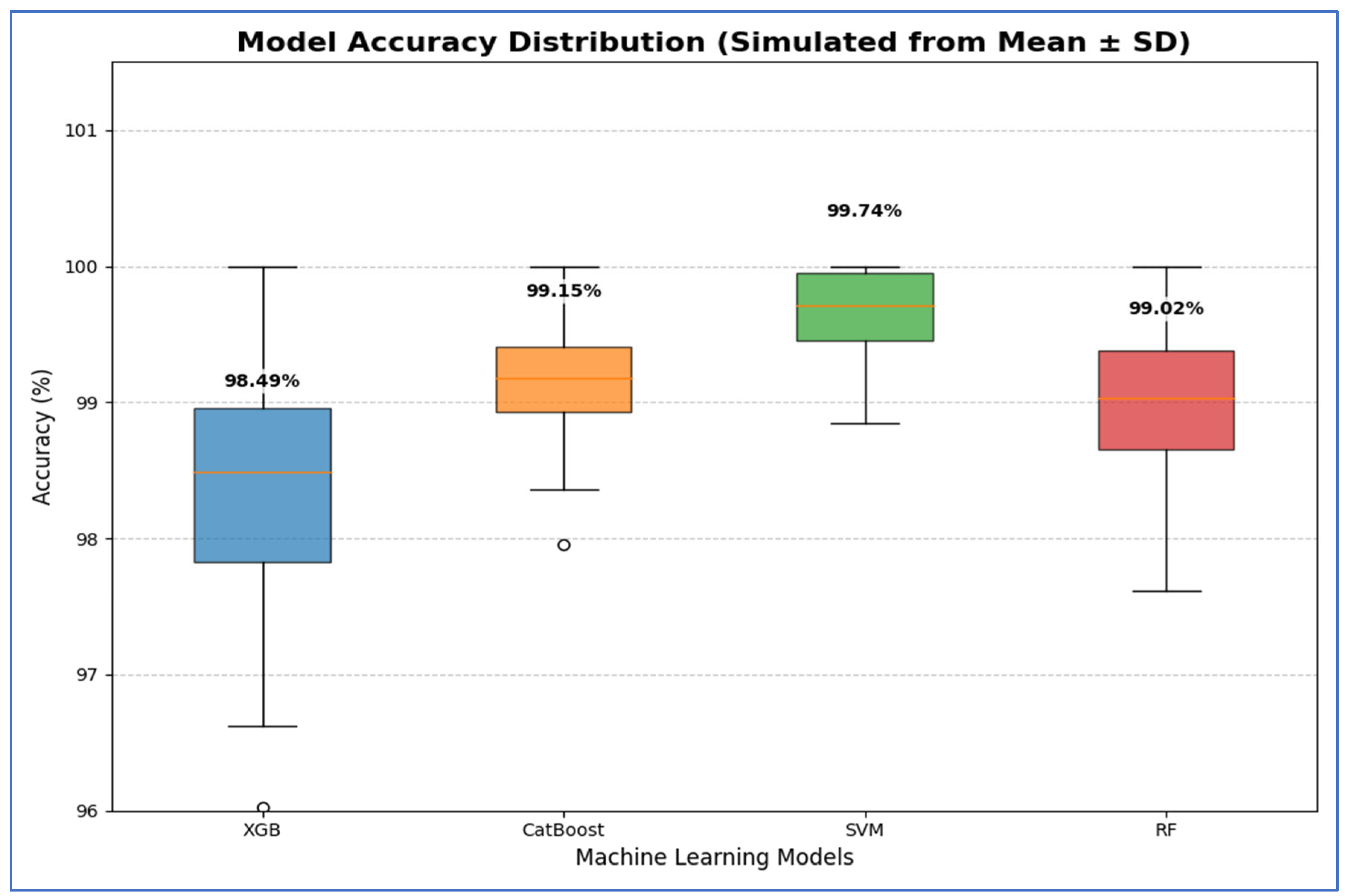

From Table 9 and Figure 14, the integration of MobileNet-V2 with the four ML classifiers (XGB, CatBoost, SVM, and RF) showed excellent performance across all evaluation metrics. SVM achieved the highest performance overall, with accuracy, specificity, precision, recall, and F1-score all reaching 99.74% or higher, and an AUC of 100%, reflecting perfect discriminative ability.

CatBoost also performed strongly, obtaining an accuracy of 98.76%, a recall of 99.08%, and an AUC of 99.87%, with stable and well-balanced results across metrics. RF achieved an accuracy, specificity, precision, and recall of 98.43% and an AUC of 99.89%; its AUC was marginally higher than that of CatBoost, but its accuracy and recall were slightly lower than those of CatBoost and SVM. XGB provided the lowest performance among the four, with 97.97% accuracy, 97.52% specificity, and an AUC of 99.72%, though it still maintained high values overall.

In conclusion, although all four ML models performed well when combined with MobileNet-V2, the SVM was the most effective. It was followed by CatBoost, then RF, while XGB produced lower results, though still reliable.

Table 10 and Figure 15 show that integrating ResNet-50 with SVM resulted in the best overall performance, with the highest accuracy (99.74%), specificity (99.87%), precision (99.87%), and AUC (100%). Its F1-score (99.74%) was also among the highest, demonstrating exceptional and balanced classification performance. RF and CatBoost also performed strongly. CatBoost slightly outperformed RF in accuracy, recall, and F1-score, but the differences were minimal. Both models had nearly identical specificity (99.21% for RF and 99.22% for CatBoost) and precision (99.21% for RF and 99.22% for CatBoost), and their AUC scores were virtually indistinguishable from each other and from that of SVM. Their performances were therefore practically comparable.

XGB was the least effective classifier in this comparison, recording the lowest scores on all metrics, including accuracy (98.49%), specificity (98.3%), precision (98.31%), recall (98.69%), and F1-score (98.5%). Although its AUC was still high (99.84%), it was the lowest among the four models.

In summary, the evaluation established a clear performance hierarchy: SVM was the top-performing model, followed closely by CatBoost and RF, with XGB being the least accurate.

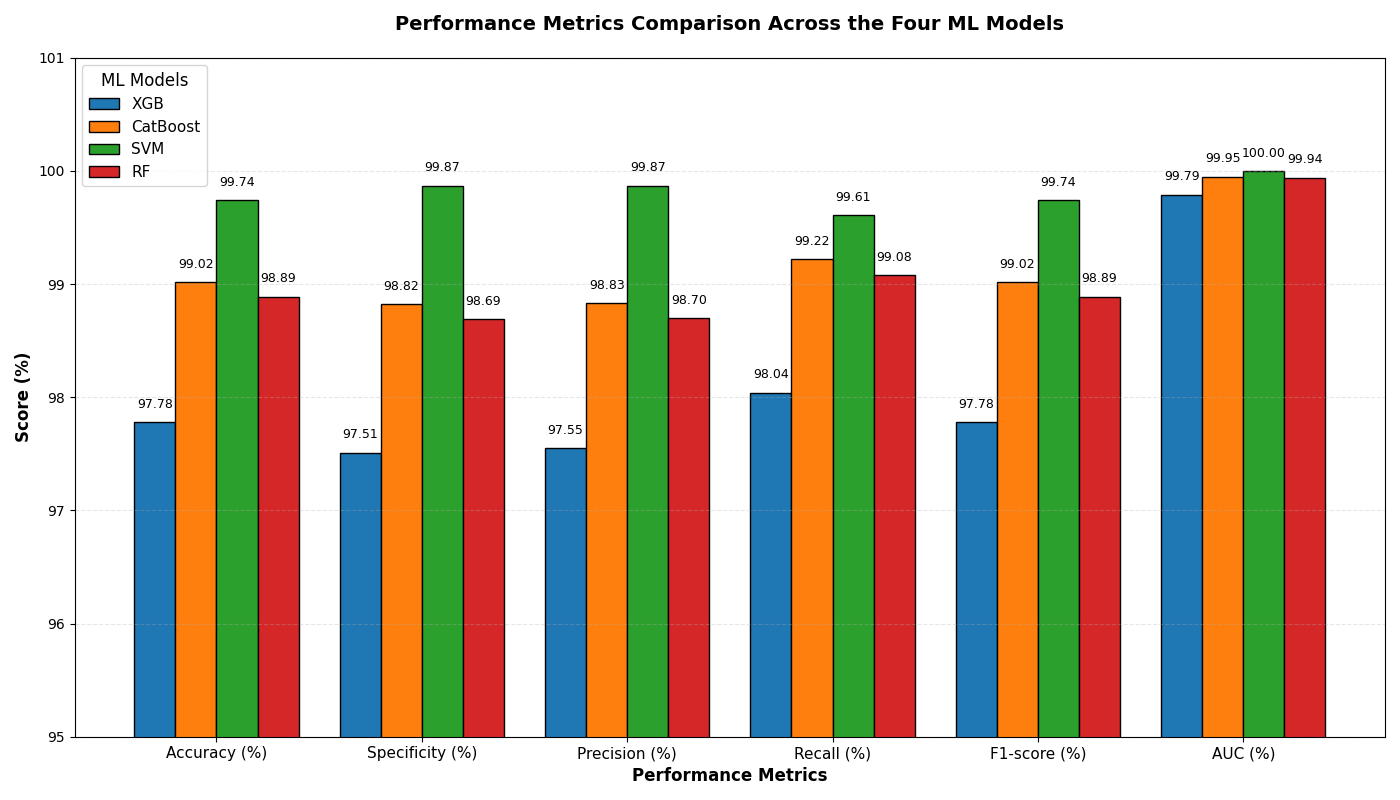

The results presented in Table 11 and Figure 16 show that the integration of VGG-19 with the four ML classifiers yielded strong overall performance across all evaluation metrics. Among these models, SVM achieved the highest results, with an accuracy of 99.74%, specificity and precision of 99.87%, recall of 99.61%, F1-score of 99.74%, and an AUC of 100%, indicating near-perfect classification ability.

CatBoost also performed well, attaining an accuracy of 99.02%, precision of 98.83%, recall of 99.22%, and an AUC of 99.95%, demonstrating high consistency and stability across all metrics. RF closely followed, with an accuracy of 98.89%, specificity of 98.69%, precision of 98.70%, recall of 99.08%, F1-score of 98.89%, and an AUC of 99.94%. Its performance was slightly lower than that of CatBoost, particularly in precision and recall. XGB recorded the lowest performance among the four models, yet remained competitive, with an accuracy of 97.78%, specificity of 97.51%, precision of 97.55%, recall of 98.04%, F1-score of 97.78%, and an AUC of 99.79%.

In summary, when combined with VGG-19, SVM proved to be the best classifier, followed by CatBoost and RF, while XGB provided the lowest performance, though it was still strong.

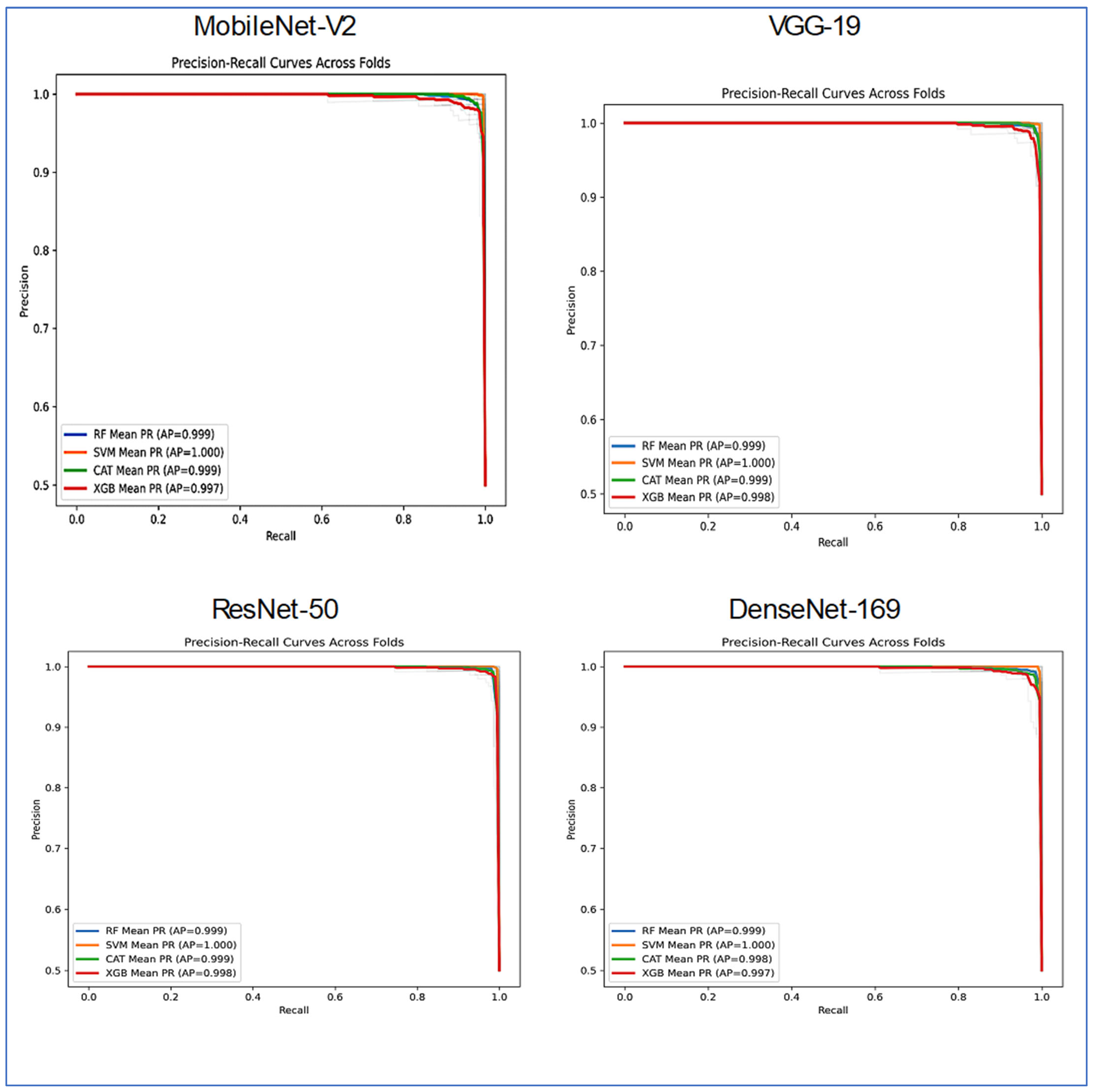

Figure 17 presents a comparative analysis of classifier performance across the four DL architectures: DenseNet-169, MobileNet-V2, ResNet-50, and VGG-19. The four ML models were evaluated, and the results were visualized as PR curves averaged across the cross-validation folds.

Most model-architecture combinations performed exceptionally well, with AP scores approaching the ideal value of 1.0. With VGG-19 and MobileNet-V2 features, the classifiers achieved nearly perfect results, with AP scores for RF, SVM, CatBoost, and XGBoost ranging from approximately 0.997 to 1.000. DenseNet-169 also remained very strong, with all four classifiers achieving AP scores above 0.99. ResNet-50 showed a noticeable drop for some classifiers: RF and CatBoost recorded lower AP scores (approximately 0.917 and 0.909, respectively), while SVM and XGBoost maintained very high performance (AP of approximately 1.000 and 0.995, respectively).

SVM stood out as the most consistent top performer, achieving a perfect or near-perfect AP of approximately 1.000 with all four backbones. XGBoost also performed robustly across all backbones, with AP scores ranging from 0.987 to 0.998. RF and CatBoost excelled with VGG-19, MobileNet-V2, and DenseNet-169 features, but their performance was lower with the ResNet-50 feature extractor.

In summary, Figure 17 illustrates that while all combinations were highly effective, the SVM classifier consistently yielded the best and most stable results across all feature extraction architectures. The ResNet-50 backbone proved the most challenging for some models, though it still supported very high performance.

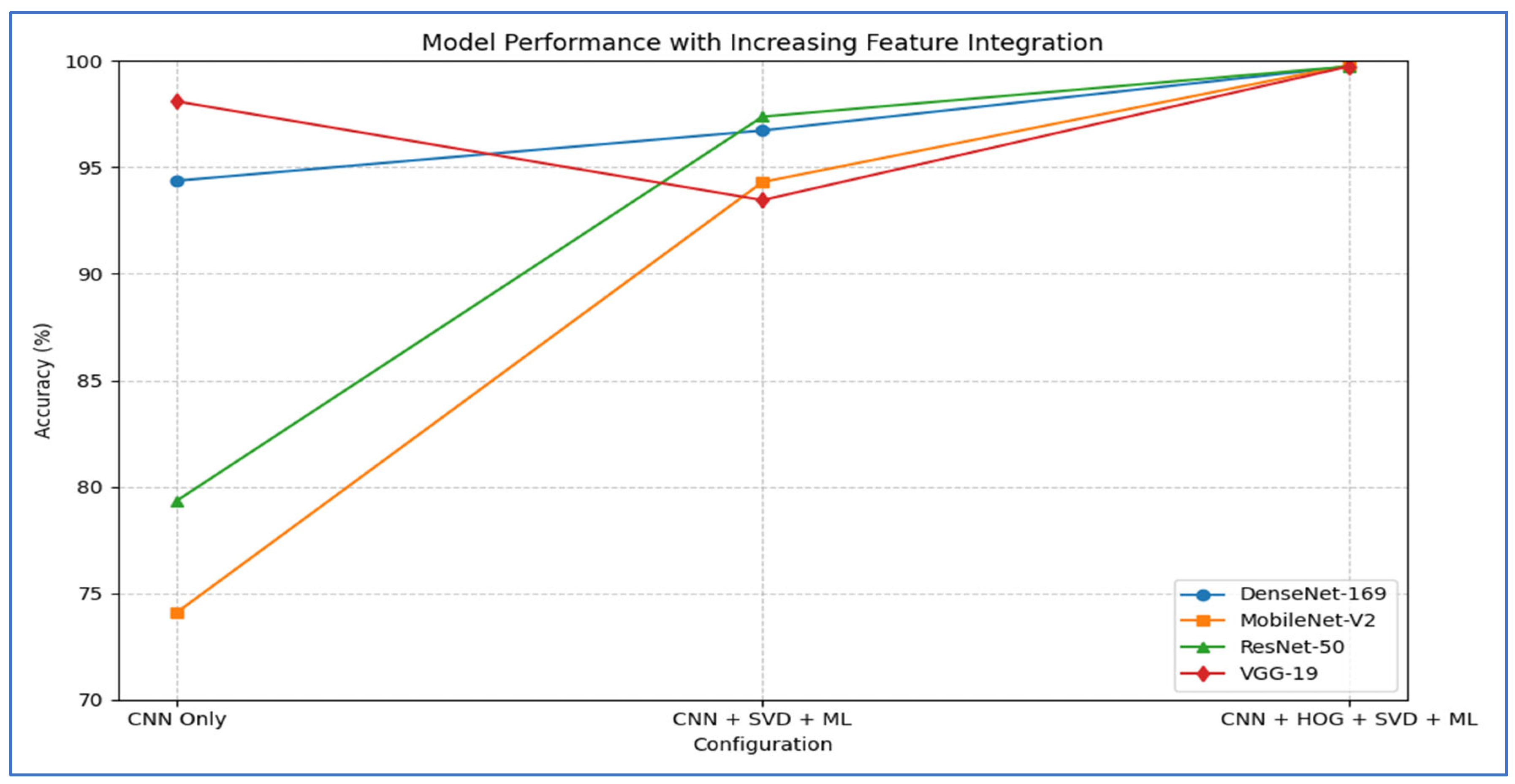

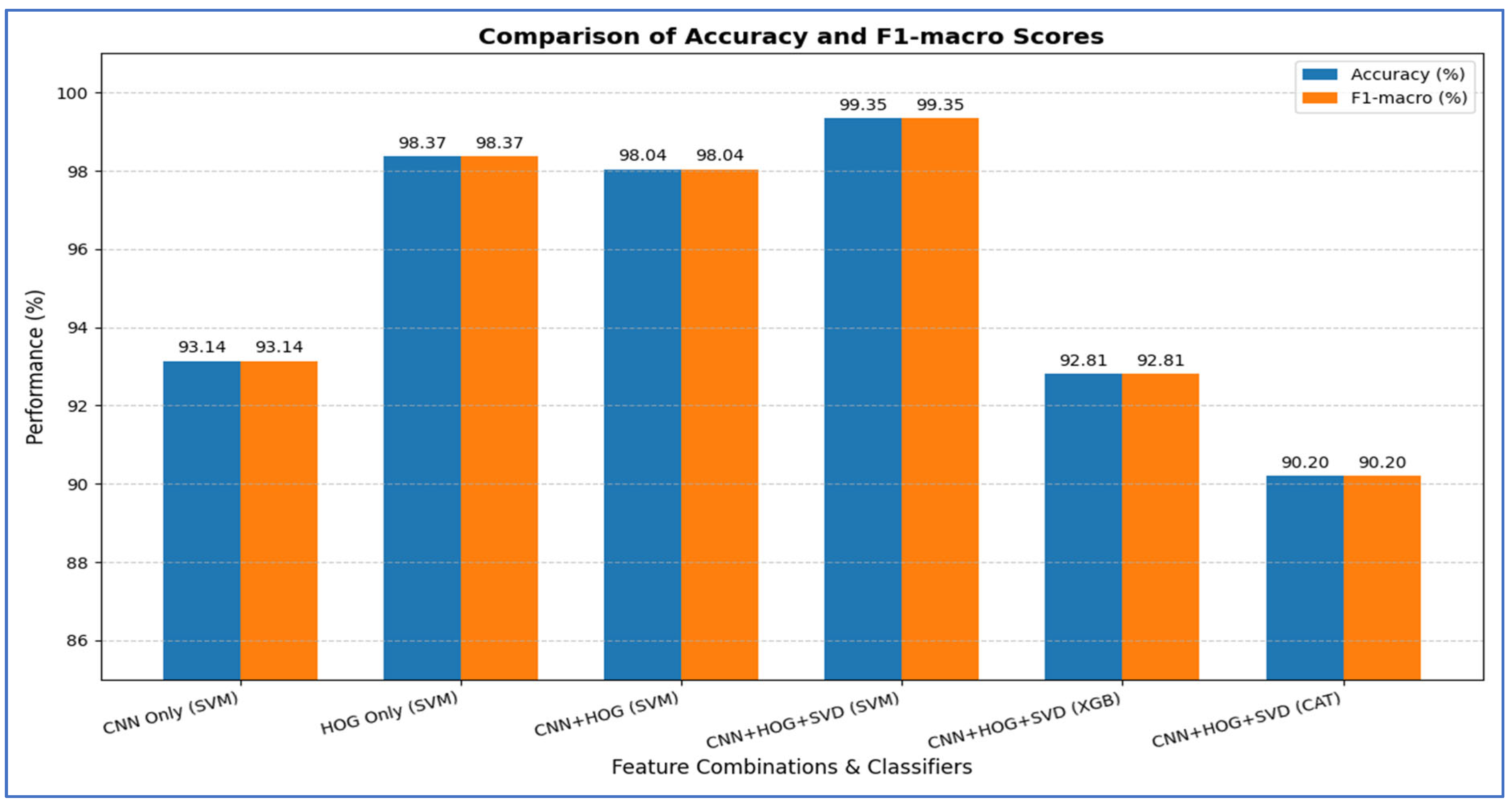

Table 12 and Figure 18 show the impact on classification performance of combining CNN features with additional feature processing and ML classifiers. With CNN features only, DenseNet-169 achieved 94.37%, MobileNet-V2 74.08%, ResNet-50 79.33%, and VGG-19 98.10%, showing that standalone CNN performance varied considerably across architectures.

When SVD and ML classifiers were applied to the CNN features, three of the four backbones improved. DenseNet-169 and MobileNet-V2 achieved their highest accuracies with SVM, reaching 96.73% and 94.31%, respectively, and ResNet-50 reached 97.38% with CatBoost. VGG-19, however, dropped from 98.10% to 93.46% (with SVM). Dimensionality reduction followed by ML classification therefore improved accuracy most for the backbones that initially performed less strongly (MobileNet-V2 and ResNet-50), but did not help the strongest standalone model.

Finally, when CNN features were combined with HOG features, reduced with SVD, and classified with ML models, all backbones reached near-perfect performance: DenseNet-169 achieved 99.61%, and MobileNet-V2, ResNet-50, and VGG-19 each achieved 99.74%, all with SVM. This demonstrates that integrating deep and handcrafted features with ML classifiers maximized accuracy across all architectures and overcame the limitations of the standalone CNNs.
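The percentage-point changes behind this comparison can be checked directly from the accuracies quoted above (best classifier per backbone); the dictionary names are illustrative.

```python
# Mean five-fold accuracies (%) quoted above: best classifier per backbone.
cnn_only = {"DenseNet-169": 94.37, "MobileNet-V2": 74.08,
            "ResNet-50": 79.33, "VGG-19": 98.10}
svd_ml = {"DenseNet-169": 96.73, "MobileNet-V2": 94.31,
          "ResNet-50": 97.38, "VGG-19": 93.46}
hog_svd_ml = {"DenseNet-169": 99.61, "MobileNet-V2": 99.74,
              "ResNet-50": 99.74, "VGG-19": 99.74}

gains = {}
for backbone, base in cnn_only.items():
    # Percentage-point change relative to the CNN-only baseline.
    gains[backbone] = (round(svd_ml[backbone] - base, 2),
                       round(hog_svd_ml[backbone] - base, 2))
    print(f"{backbone:>12}: SVD+ML {gains[backbone][0]:+.2f} pp, "
          f"HOG+SVD+ML {gains[backbone][1]:+.2f} pp")
```

This gives gains of +2.36, +20.23, and +18.05 percentage points for DenseNet-169, MobileNet-V2, and ResNet-50 in the second experiment but a drop of 4.64 points for VGG-19, while the fourth experiment improves on the CNN-only baseline for every backbone.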

From the four experiments, we concluded that the highest performance, an accuracy of 99.74%, was achieved by SVM when deep features from MobileNet-V2 (or ResNet-50 or VGG-19) were combined with HOG features and reduced with SVD.

3.5. Interpretation of Results in the Context of Literature

PCa is among the most frequently diagnosed cancers in men globally and a primary contributor to cancer-related deaths in various regions [3]. It develops when cells in the prostate begin to grow abnormally and can spread to adjacent organs and tissues [4]. PCa primarily affects middle-aged men, particularly those between 45 and 60 years old, and is the leading cause of cancer deaths in Western countries [5]. A notable challenge in diagnosing PCa is that many men show no symptoms, particularly in the early stages of the disease. Because the cancer often grows slowly, early detection significantly improves survival rates, so efficient monitoring and prompt diagnosis are essential for improving patient outcomes.

Numerous approaches exist for diagnosing PCa, with screening tests considered the most effective for early detection. Key methods include PSA testing, digital rectal examination (DRE), biopsy, and MRI [14]. Although these techniques are fundamental for screening, they have limitations, such as invasiveness, subjective interpretation, and a considerable rate of false positives and false negatives [1]. Therefore, there is an urgent need for a fully automated and dependable system to classify PCa mpMRI images.

To address these challenges, this research introduced a hybrid method for classifying PCa that integrates deep features derived from MobileNet-V2 (or ResNet-50 or VGG-19) with handcrafted HOG descriptors. The resulting feature set undergoes dimensionality reduction using truncated SVD, followed by classification with SVM. The model is intended to help healthcare professionals detect PCa at an earlier stage, thereby reducing both diagnostic time and cost. The research used five-fold cross-validation to obtain a more reliable estimate of the hybrid method's performance: the data are divided into five subsets, and training and validation are repeated five times so that each subset serves as the validation set once. Five-fold cross-validation is a specific instance of k-fold cross-validation, where k can be any integer greater than 1, and it is widely used because it gives a more dependable estimate of performance on unseen data than a single train/validation split.
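A minimal sketch of the final pipeline (truncated SVD followed by SVM, evaluated with five-fold cross-validation) using scikit-learn. The synthetic 300-dimensional input stands in for the fused deep and HOG features; the component count, the RBF kernel, the scaling step after SVD, and the use of stratified folds are assumptions for illustration. Specificity has no built-in scorer, so it is computed here as the recall of the negative class.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import TruncatedSVD
from sklearn.metrics import make_scorer, recall_score
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for the fused deep + HOG feature matrix (not the TPP data).
X, y = make_classification(n_samples=500, n_features=300,
                           n_informative=20, random_state=0)

pipeline = Pipeline([
    ("svd", TruncatedSVD(n_components=50, random_state=0)),  # dimensionality reduction
    ("scale", StandardScaler()),                              # SVMs are scale-sensitive
    ("svm", SVC(kernel="rbf")),
])

scoring = {
    "accuracy": "accuracy",
    "precision": "precision",
    "recall": "recall",                                       # sensitivity
    "specificity": make_scorer(recall_score, pos_label=0),   # recall of the negative class
    "f1": "f1",
    "roc_auc": "roc_auc",                                     # uses SVC.decision_function
}

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
cv_results = cross_validate(pipeline, X, y, cv=cv, scoring=scoring)

# Average each metric over the five validation folds.
summary = {name: float(cv_results[f"test_{name}"].mean()) for name in scoring}
for name, value in summary.items():
    print(f"{name:>11}: {value:.4f}")
```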

We implemented four experiments, where the main objective of them was to detect PCa with the intention of enhancing patient outcomes, streamlining the diagnostic process, and significantly lowering both the time and costs incurred by patients. In our experiments, we allocated 70% of the TPP dataset, which comprised 1072 images, for training purposes, while the remaining 30% (456 images) was reserved for testing. We utilized supervised pre-training to prepare four DL models—DenseNet-169, MobileNet-V2, ResNet-50, and VGG-19—training them on the ImageNet dataset.

In the first experiment, we employed four DL models to extract deep features for capturing high-level image representations, which were then used as classifiers. In the second experiment, we again used the four DL models for deep feature extraction but applied SVD to reduce dimensionality, decrease computational complexity, and preserve important information. We utilized ML techniques—XGB, CatBoost, RF, and SVM—as classifiers.

In the third experiment, we extracted handcrafted features using HOG to effectively detect structural patterns. Similar to the previous experiment, we applied SVD for dimensionality reduction, computational efficiency, and information retention, using the same machine learning techniques for classification.
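To make the handcrafted HOG features concrete, the following is a simplified NumPy version of the descriptor for a 2-D grayscale image. It is not the implementation used in the experiments, whose HOG parameters are not stated here. It uses hard orientation binning with no bilinear interpolation, and plain L2 block normalization rather than L2-Hys. The cell size, bin count, and block size are assumed defaults, and `simple_hog` is a hypothetical name.

```python
import numpy as np


def simple_hog(image: np.ndarray, cell: int = 8, bins: int = 9,
               block: int = 2, eps: float = 1e-6) -> np.ndarray:
    """Simplified HOG for a 2-D grayscale image: per-cell orientation histograms,
    L2-normalized over overlapping blocks of `block` x `block` cells."""
    img = image.astype(np.float64)
    # Central-difference gradients; border pixels keep a zero gradient
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]
    gy[1:-1, :] = img[2:, :] - img[:-2, :]
    mag = np.hypot(gx, gy)
    # Unsigned orientation in [0, 180) degrees, mapped to a bin index
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0
    bin_idx = np.minimum((ang / (180.0 / bins)).astype(int), bins - 1)

    n_cy, n_cx = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((n_cy, n_cx, bins))
    for i in range(n_cy):
        for j in range(n_cx):
            sl = (slice(i * cell, (i + 1) * cell), slice(j * cell, (j + 1) * cell))
            # Each pixel votes its gradient magnitude into one orientation bin
            hist[i, j] = np.bincount(bin_idx[sl].ravel(),
                                     weights=mag[sl].ravel(), minlength=bins)

    # Slide a block window one cell at a time and L2-normalize each block vector
    feats = []
    for i in range(n_cy - block + 1):
        for j in range(n_cx - block + 1):
            v = hist[i:i + block, j:j + block].ravel()
            feats.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(feats) if feats else np.zeros(0)
```

With the defaults, a 64 x 64 image yields 8 x 8 cells and 7 x 7 overlapping blocks, giving a 7 x 7 x (2 x 2 x 9) = 1764-dimensional descriptor. Block normalization is what makes the descriptor robust to local changes in contrast.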

Finally, in the fourth experiment, we combined the four DL models for deep feature extraction with HOG for handcrafted feature detection. We again used SVD to reduce dimensionality, lower computational complexity, and maintain critical information, employing the ML techniques as classifiers.

In all four experiments, we used five-fold cross-validation. This method splits the training dataset into five approximately equal parts. In each iteration, one part is designated as the validation set while the other four are used to train the model, so each iteration is a distinct training and validation run. The process is repeated five times, with each part serving as the validation set once, and the average performance across the five iterations is used to assess the model's generalization ability. At the end of each experiment, we evaluated the DL models and the ML classifiers using the metrics defined in Equations (1)–(7).
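The five-fold procedure can be written out in plain Python to show that every training image is used for validation exactly once. This is a sketch only. The shuffling seed, the handling of folds when the count is not divisible by five, and the `fit_and_score` callback are assumptions introduced for illustration. In practice, a library splitter such as scikit-learn's `StratifiedKFold` would also preserve class proportions in each fold.

```python
import random
from typing import Callable, List, Sequence, Tuple


def k_fold_indices(n: int, k: int = 5, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Shuffle indices 0..n-1 and split them into k folds; each fold is the validation set once."""
    if k < 2 or k > n:
        raise ValueError("need 2 <= k <= n")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    # The first n % k folds get one extra sample, so fold sizes differ by at most 1
    sizes = [n // k + (1 if f < n % k else 0) for f in range(k)]
    splits, start = [], 0
    for size in sizes:
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]  # the remaining k-1 folds
        splits.append((train, val))
        start += size
    return splits


def cross_validate(n: int, fit_and_score: Callable[[Sequence[int], Sequence[int]], float],
                   k: int = 5) -> float:
    """Train on k-1 folds, score on the held-out fold, and average over the k iterations."""
    scores = [fit_and_score(train, val) for train, val in k_fold_indices(n, k)]
    return sum(scores) / len(scores)
```

With the 1072 training images, `k_fold_indices(1072)` produces validation folds of 215, 215, 214, 214, and 214 images, which together cover every image exactly once.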

Using five-fold cross-validation, the SVM classifier combined with MobileNet-V2 (or ResNet-50 or VGG-19) features, HOG, and SVD achieved the highest overall performance among the classifiers tested. It recorded an accuracy of 99.74%, indicating that nearly all test samples were classified correctly. The specificity of 99.87% demonstrated the model's effectiveness in identifying true negatives, while the recall of 99.61% showed its ability to capture almost all true positives. The precision of 99.87% indicated that false positives were minimal, resulting in a very high F1-score of 99.74%, which balances precision and recall. The AUC of 100% confirmed that the SVM classifier provided perfect class separability. Overall, these results suggest that combining MobileNet-V2 (or ResNet-50 or VGG-19) as a feature extractor with HOG, SVD, and an SVM classifier consistently outperformed XGB, CatBoost, and RF, making it the most reliable and robust approach in this experiment.
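The reported figures can be cross-checked with the standard confusion-matrix definitions. We assume these match Equations (1)–(7), which are not reproduced in this section. The F1-score follows from precision and recall alone. The accuracy equals the mean of recall and specificity only if the two classes are equally sized, which is an assumption about the TPP split rather than a stated fact. The helper names are hypothetical.

```python
def f1_from(precision: float, recall: float) -> float:
    """F1-score: harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def accuracy_if_balanced(recall: float, specificity: float) -> float:
    """With equal positive and negative counts, accuracy = (TPR + TNR) / 2."""
    return (recall + specificity) / 2


def metrics_from_counts(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard metrics for the positive (PCa) class from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)         # sensitivity, true-positive rate
    specificity = tn / (tn + fp)    # true-negative rate
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1_from(precision, recall),
    }


# Values reported for the MobileNet-V2 + HOG + SVD + SVM model
precision, recall, specificity = 0.9987, 0.9961, 0.9987
print(round(100 * f1_from(precision, recall), 2))                   # 99.74
print(round(100 * accuracy_if_balanced(recall, specificity), 2))    # 99.74
```

Both checks reproduce 99.74%. The reported precision, recall, F1-score, and accuracy are therefore mutually consistent, and the accuracy check is consistent with a roughly balanced evaluation set.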

SVM outperformed the XGB, CatBoost, and RF classifiers when used with features from MobileNet-V2 (or ResNet-50 or VGG-19). This advantage can be attributed to SVM's ability to handle the high-dimensional feature space produced by the DL model effectively. Kernel-based optimization allows the SVM to find a maximum-margin separating hyperplane while penalizing training errors, which resulted in strong performance: 99.74% accuracy, a 99.74% F1-score, and 100% AUC.
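For reference, the decision rule of a standard soft-margin kernel SVM is given below. The RBF kernel shown is an assumption, since the kernel used in the experiments is not stated in this section. Only training points with $\alpha_i > 0$ (the support vectors) contribute to the decision. The box constraint $C$ sets the trade-off between a wide margin and training errors.

$$
f(\mathbf{x}) = \operatorname{sign}\!\Big(\sum_{i=1}^{n} \alpha_i y_i K(\mathbf{x}_i, \mathbf{x}) + b\Big), \qquad 0 \le \alpha_i \le C, \qquad \sum_{i=1}^{n} \alpha_i y_i = 0,
$$

$$
K(\mathbf{x}_i, \mathbf{x}) = \exp\!\big(-\gamma \lVert \mathbf{x}_i - \mathbf{x} \rVert^2\big)
$$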

In contrast, although XGB and CatBoost are robust ensemble methods, they produced slightly more misclassifications. This is possibly because they rely on iteratively boosted decision trees, which can struggle to capture the complex nonlinear boundaries present in deep features. The RF classifier performed worst, likely because its simpler tree structure was more prone to overfitting and did not generalize as well in such a complex feature space. These differences help explain why SVM consistently achieved nearly perfect class separability, whereas the other models performed comparatively worse.
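A comparison like the one discussed above is only fair if every classifier sees the same folds. The sketch below shows one way to set this up with scikit-learn. It is not a reproduction of the reported experiment. To keep the dependencies within scikit-learn, `GradientBoostingClassifier` stands in for XGB and CatBoost, which are separate libraries. The hyperparameters and the `compare_classifiers` helper are hypothetical.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def compare_classifiers(X: np.ndarray, y: np.ndarray, seed: int = 0) -> dict:
    """Mean five-fold accuracy per classifier, using identical stratified folds for all of them."""
    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=seed)
    models = {
        "SVM": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
        "RF": RandomForestClassifier(n_estimators=100, random_state=seed),
        # Stand-in for XGB/CatBoost: scikit-learn's own gradient-boosted trees
        "GBT": GradientBoostingClassifier(random_state=seed),
    }
    return {
        name: float(cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean())
        for name, model in models.items()
    }
```

Passing the same `StratifiedKFold` object to every model makes score differences reflect the classifiers rather than different data partitions.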

Table 20 and Figure 24 show that state-of-the-art studies reported strong classification performance on the TPP dataset, but our hybrid approach (MobileNet-V2 (or ResNet-50 or VGG-19), HOG, SVD, and SVM) outperformed them all.

In Table 20, the reviewed studies show considerable variation in accuracy and AUC for PCa detection using different DL and ML methods on the TPP dataset. Hashem et al. [1] used InceptionResNetV2 and achieved an accuracy of 89.20%, indicating moderate performance. Yoo et al. [3] implemented a CNN model and reported an AUC of 87%, while Liu et al. [24] applied XmasNet (a CNN) and obtained a lower AUC of 84%; both show limited discriminative ability compared with more advanced architectures. Giganti et al. [28] also used CNNs and achieved an AUC of 91%, outperforming the earlier CNN-based studies but still falling short of the top-performing methods.

In contrast, Sarıateş and Özbay [23] employed DenseNet-201 with RMSProp optimization and reported an accuracy of 98.63%, a significant improvement in classification performance. Similarly, Pellicer-Valero et al. [27] achieved an AUC of 96% using CNNs, reflecting strong discriminative capacity. Mehta et al. [25] used a PCF-based SVM model and reported an area under the ROC curve of 86%, suggesting weaker performance than the deep learning methods. Meanwhile, Salama and Aly [26] combined ResNet-50 and VGG-16 with SVM to achieve an accuracy of 98.79%, one of the top-performing results in the literature.

The proposed hybrid model, combining MobileNet-V2 (or ResNet-50 or VGG-19), HOG, SVD, and SVM, outperformed all previously reported approaches, achieving an accuracy of 99.74% and an AUC of 100%. This performance exceeded even the strongest baselines, such as DenseNet-201 (98.63%) [23] and the combination of ResNet-50 and VGG-16 with SVM (98.79%) [26]. The hybrid approach fuses handcrafted HOG features with the deep representations from MobileNet-V2 (or ResNet-50 or VGG-19), compresses the fused features with SVD, and classifies them with a robust SVM. In this way, it captured both high-level abstract features and low-level discriminative details, leading to near-perfect class separability.

Overall, while several prior studies achieved high accuracy and AUC values using state-of-the-art CNN architectures, the proposed hybrid model exhibited superior generalization and discriminative power, establishing it as the most effective approach among the works compared on the TPP dataset.