1. Introduction

Major Depressive Disorder (MDD) is a severe mental illness characterized by persistent low mood, loss of interest, and recurrent suicidal thoughts, significantly impairing patient quality of life [1]. According to the latest epidemiological data from the World Health Organization (WHO), approximately 280 million people worldwide suffer from depression, making it one of the leading causes of disability [2]. However, the diagnosis of MDD primarily relies on clinicians’ subjective evaluation of patients’ symptoms. These diagnostic approaches depend largely on patients’ self-reports and physicians’ clinical experience and lack objective biomarkers. As a result, diagnostic consistency among different doctors is low. Moreover, MDD’s symptom spectrum overlaps significantly with other psychiatric disorders, which further increases the difficulty of early differential diagnosis [3]. Although a growing body of research has explored potential biomarkers for MDD [4], the field has not yet converged on a standardized set of criteria for clinical application. Developing an objective and quantifiable biomarker system has therefore become an urgent challenge in psychiatry.

Structural magnetic resonance imaging (sMRI) and functional magnetic resonance imaging (fMRI) are core components of neuroimaging. They provide complementary objective evidence for the pathological mechanisms of MDD. Specifically, sMRI focuses on brain morphology [5], while fMRI focuses on dynamic neural activity [6,7]. sMRI, through high-resolution brain scanning, enables the quantification of structural metrics such as gray matter volume (GMV), white matter volume, and cerebrospinal fluid. Research has shown that MDD patients often exhibit structural abnormalities in brain regions including the prefrontal cortex and occipital lobe [8,9]. These alterations may be associated with neuroplasticity impairment resulting from chronic mood disorders. fMRI captures temporal variations in blood-oxygen-level-dependent (BOLD) signals, reflecting functional connectivity (FC) patterns and neural network dynamics during both resting-state and task-based conditions. For instance, functional abnormalities in the default mode network (DMN) have been linked to MDD’s negative cognitive biases and emotional dysregulation [10,11]. These analyses show that sMRI and fMRI offer complementary insights into MDD, together providing an objective perspective on its neurobiological basis. Nevertheless, transforming these information-rich imaging datasets into clinically applicable diagnostic tools still requires overcoming a series of technical bottlenecks.

Machine learning has become a pivotal tool in biomedical research. Its impactful applications range from the accurate prediction of diabetes [12] to the management of hematological disorders [13], demonstrating its broad utility. In the specific domain of psychiatric neuroimaging, however, translating these insights into clinically applicable diagnostic tools for MDD faces three major challenges: data harmonization, model construction, and multimodal fusion. First, data harmonization remains a significant hurdle. While multi-center studies expand sample sizes, the resulting data often exhibit significant site heterogeneity due to variations in MRI scanners, sequence parameters, and acquisition protocols. This heterogeneity introduces systematic biases that can confound disease-related signals. Existing harmonization methods like ComBat [14] typically assume site effects are independent of biological signals, representing a static correction approach. Although recent frameworks such as the adversarial dual-branch method of Guan et al. [15], USMDA [16], and FSM-MSDA [17] have advanced site adaptation via adversarial learning and domain adaptation, they are primarily validated on a limited number of sites (e.g., 2–3 centers) and face scalability and stability challenges when applied to large-scale multi-center settings involving 25 sites. Second, effective model construction for multimodal MRI data demands a paradigm shift from generic architectures to designs that explicitly encode modality-specific features. MRI data are high-dimensional and inherently noisy (e.g., head motion artifacts, field inhomogeneity), posing challenges such as the curse of dimensionality and feature extraction bottlenecks for conventional machine learning methods. Traditional analytical approaches rely on predefined imaging features (e.g., functional connectivity [11,18]) and shallow machine learning models (e.g., support vector machines, linear regression [10,19]), which are ill-suited to capture the complex nonlinear pathological patterns of MDD. Current research tends to directly model raw signals through end-to-end deep learning frameworks (e.g., convolutional neural networks (CNNs) [20,21], Swin Transformers [22,23]). While these approaches avoid the limitations of handcrafted feature design, they do not address the critical need for modeling modality-specific characteristics. Third, achieving deep multimodal fusion is nontrivial. Most existing methods adopt simple concatenation or traditional cross-attention fusion strategies and therefore fail to fully explore the deep correlations between structural and functional modalities. Traditional cross-attention fusion methods [24] typically use a unidirectional mechanism: one modality serves as the Query, while the other acts as the Key and Value, forming a unidirectional information flow. The main flaws of this design lie in asymmetric information flow and insufficient modal interaction.
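
To make the Query/Key/Value roles in unidirectional cross-attention concrete, the following is a minimal sketch in plain Python. It is not the implementation of any cited method: the learned projection matrices are omitted, the token values are hypothetical toy numbers, and the names `cross_attention`, `smri_tokens`, and `fmri_tokens` are introduced here purely for illustration.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query_tokens, context_tokens):
    """Scaled dot-product attention with learned projections omitted.

    query_tokens:   d-dimensional vectors from the Query modality
    context_tokens: d-dimensional vectors used as both Key and Value
    Returns (updated query tokens, attention weights).
    """
    d = len(query_tokens[0])
    weights = []
    for q in query_tokens:
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d)
            for k in context_tokens
        ]
        weights.append(softmax(scores))
    # Each updated query token is a weighted average of the context tokens.
    updated = [
        [sum(w * v[j] for w, v in zip(row, context_tokens)) for j in range(d)]
        for row in weights
    ]
    return updated, weights


# Hypothetical toy features: 2 structural tokens, 3 functional tokens, d = 2.
smri_tokens = [[1.0, 0.0], [0.0, 1.0]]
fmri_tokens = [[1.0, 1.0], [2.0, 0.0], [0.0, 2.0]]

# Unidirectional flow: sMRI queries fMRI, so only the sMRI side is updated.
smri_updated, smri_to_fmri = cross_attention(smri_tokens, fmri_tokens)
```

In this sketch the functional tokens are never updated, which is the asymmetry noted above. Calling `cross_attention(fmri_tokens, smri_tokens)` as well would give the reverse direction.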

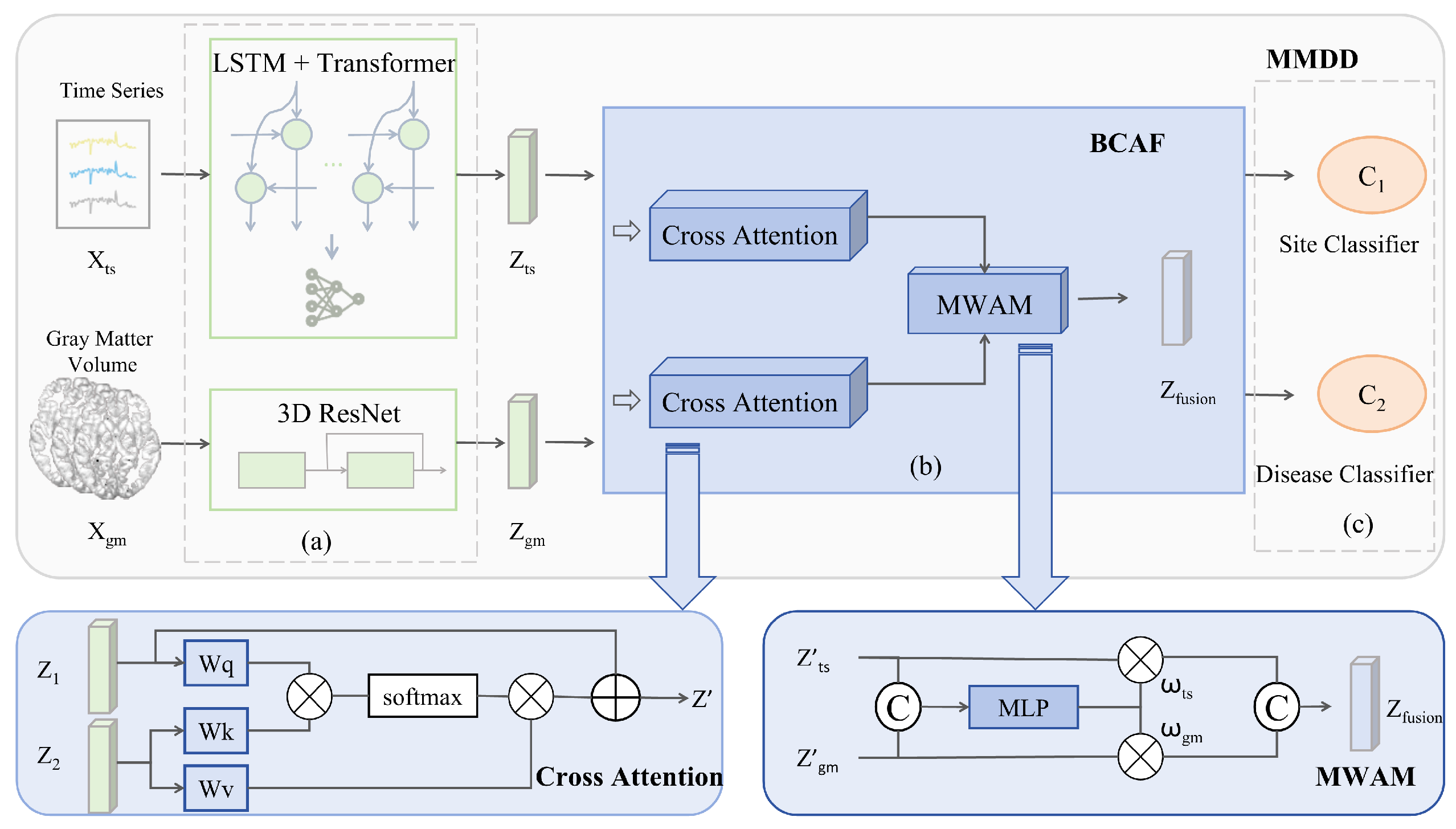

To overcome these challenges, we propose the Multimodal Multitask Dynamic Disentanglement Framework (MMDD). The key contributions of this work are outlined below:

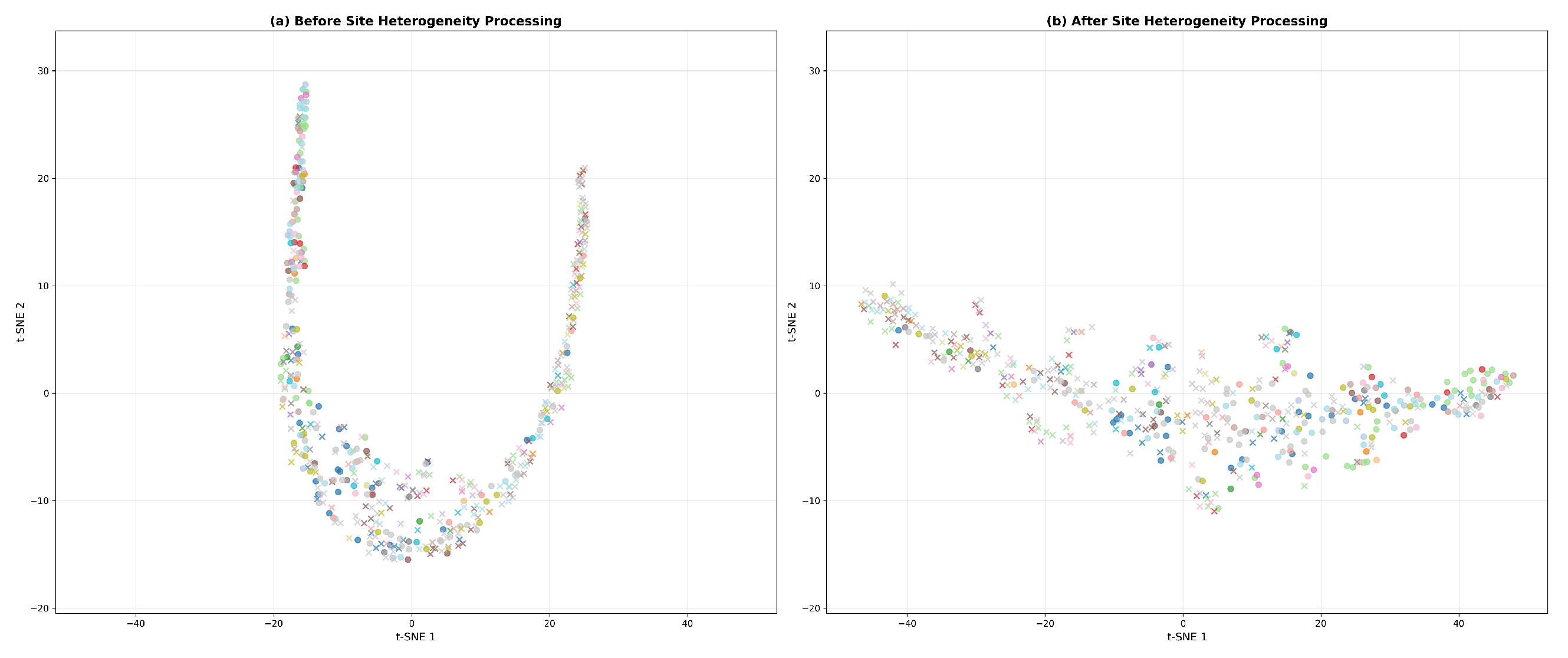

To tackle the critical issue of site heterogeneity in large-scale multi-center MDD studies, we propose a Gradient Reversal Layer-based Multitask Learning (GRL-MTL) strategy. Instead of applying a static correction, our method reframes site harmonization as an auxiliary task. By leveraging a Gradient Reversal Layer, it dynamically learns site-invariant representations that are robust to scanner and protocol variations. This approach demonstrates superior scalability and stability in experiments involving a large number of sites.

We designed a dual-pathway feature extraction network to accurately capture modality-specific characteristics from sMRI and fMRI data. Specifically, a 3D ResNet is employed to extract rich spatial structural features from sMRI, while an LSTM–Transformer hybrid encoder is designed to model the complex temporal dynamics of fMRI. This specialized design ensures the optimal extraction and representation of each modality’s unique information before fusion, forming a more informed basis for multimodal integration.

For the core challenge of multimodal integration, we introduce a Bidirectional Cross-Attention Fusion (BCAF) mechanism. Unlike traditional unidirectional fusion methods, BCAF establishes bidirectional interaction paths, allowing sMRI and fMRI to mutually and simultaneously guide each other’s representation learning. This process dynamically integrates complementary information through attention-based weighting, thus forming a more holistic and discriminative feature representation.

On the REST-meta-MDD dataset (comprising 1300 MDD patients and 1128 healthy controls across 25 sites), our model achieved an overall classification accuracy of 77.76%. In a more challenging leave-one-site-out cross-validation setting (using all available sites as independent test sets), our method consistently outperformed baseline approaches across all test sites, demonstrating strong generalizability across unseen sites.

Interpretability analysis, based on SHAP values and two-sample t-tests, revealed distinct neurobiological patterns: temporal features from fMRI were predominantly localized to subcortical hubs and the cerebellum, while gray matter volume (GMV) features mainly involved higher-order cognitive and emotion-regulation cortical regions. Notably, the middle cingulate gyrus consistently exhibited significant abnormalities in both imaging modalities.
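
The gradient reversal idea behind the GRL-MTL strategy listed among the contributions above can be illustrated with a deliberately tiny scalar example. This is a sketch under stated assumptions, not the proposed network: the encoder, disease head, and site head are each reduced to a single hypothetical scalar weight (`w`, `a`, `b`), both losses are squared errors, and gradients are written out by hand rather than computed by a deep learning library.

```python
class GradientReversal:
    """Identity in the forward pass; scales the gradient by -lam going back."""

    def __init__(self, lam=1.0):
        self.lam = lam

    def forward(self, x):
        return x

    def backward(self, grad_output):
        return -self.lam * grad_output


def encoder_gradient(w, x, y, site, a, b, grl):
    """Gradient of the combined objective w.r.t. the shared encoder weight w.

    Toy model (all scalars, purely illustrative):
      feature       f = w * x
      disease loss  (a * f - y) ** 2
      site loss     (b * grl(f) - site) ** 2
    """
    f = w * x
    # Ordinary gradient from the disease head.
    d_disease_df = 2 * (a * f - y) * a
    # Site-head gradient, sign-flipped as it passes back through the GRL.
    d_site_df = grl.backward(2 * (b * grl.forward(f) - site) * b)
    return (d_disease_df + d_site_df) * x


grl = GradientReversal(lam=1.0)
grad_w = encoder_gradient(w=0.5, x=2.0, y=1.0, site=3.0, a=1.0, b=1.0, grl=grl)
```

With these toy numbers the disease head is already satisfied, so the whole gradient comes from the reversed site term. A gradient-descent step on `w` then moves the feature away from the value that would help the site head, which is how the shared encoder is pushed toward site-invariant features while the site head itself is still trained normally.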

2. Related Work

Based on the multi-center REST-meta-MDD dataset, computer-aided diagnosis of MDD using resting-state fMRI (rs-fMRI) has become an important research direction. Existing work primarily revolves around the core challenge of effectively modeling brain FC using Graph Neural Networks (GNNs). It has evolved from static FC extraction to dynamic FC generation, and from general architectures to specifically optimized ones. Several recent studies (2023–2025) on MRI-based MDD classification were selected for analysis in this review, as summarized in Table 1. Early research, such as DGCNN [25], initially validated the effectiveness of GNNs in processing static FC. DGCNN achieved performance superior to traditional machine learning methods (with an accuracy of 72.1%) on a sample of 1601 subjects, laying the foundation for subsequent studies. However, research by Gallo et al. [26] demonstrated that simply applying standard Graph Convolutional Network (GCN) or support vector machine (SVM) models leads to a significant decline in generalization performance (62% accuracy) on large multi-center datasets (2338 subjects) due to site heterogeneity. To address this challenge, the FGDN [27] framework integrates linear and nonlinear functional connectivity information through its Dual Graph Attention Network (DGAT) module to extract more robust features. More importantly, it reconstructs the disrupted graph structural connections across different sites via the Federated Graph Convolutional Network (FedGCN) module. However, its classification accuracy of 61.8% on 841 subjects from three sites of the REST-meta-MDD dataset indicates that multi-site depression diagnosis still faces significant challenges. This highlights the necessity for model innovation.

Subsequent researchers have deepened this work along multiple dimensions, although validation is often not performed on the complete dataset. Firstly, in terms of feature learning, GAE-FCNN [18] learns low-dimensional topological embeddings of brain networks in an unsupervised manner, aiming to more fully capture complex relationships between nodes. The N2V-GAT [28] framework innovatively combines Node2Vec graph embedding with graph attention mechanisms to differentially evaluate the contributions of different brain regions, achieving high classification performance (78.73% accuracy). Secondly, on the data modeling level, research expanded from static to dynamic networks. For instance, the DSFGNN [29] framework attempts to capture temporal dynamics of brain activity by simultaneously modeling static and dynamic FC and fusing them using an attention mechanism. Meanwhile, BrainFC-CGAN [30] adopts a generative approach, specifically designing layers to preserve the symmetry and topological properties of FC. Thirdly, regarding data quality, DDN-Net [31] directly addresses noise in fMRI data by introducing deep residual shrinkage denoising and subgraph normalization, enhancing model robustness. Although the aforementioned methods have advanced MDD classification, they typically rely on predefined brain atlases to extract FC as input, which carries potential information loss. This limitation is also evident in broader neuroimaging research.
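
As a concrete picture of the atlas-based FC inputs discussed above, static FC is commonly computed as the Pearson correlation between region-of-interest (ROI) time series, and a sliding window over the same series gives a simple dynamic variant. The sketch below illustrates that general recipe only. It does not reproduce the preprocessing of any cited study, and the toy time series, window length, and step size are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length series (undefined if constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (norm_x * norm_y)


def static_fc(roi_series):
    """ROI-by-ROI correlation matrix over the full scan."""
    return [[pearson(a, b) for b in roi_series] for a in roi_series]


def dynamic_fc(roi_series, window, step):
    """One correlation matrix per sliding window of `window` time points."""
    n_timepoints = len(roi_series[0])
    return [
        static_fc([s[start:start + window] for s in roi_series])
        for start in range(0, n_timepoints - window + 1, step)
    ]


# Hypothetical toy data: 3 ROIs, 8 time points each.
series = [
    [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0],
    [2.0, 4.0, 6.0, 8.0, 10.0, 12.0, 14.0, 16.0],
    [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0],
]
fc = static_fc(series)
windows = dynamic_fc(series, window=4, step=2)
```

Because every entry of `fc` summarizes a whole ROI, any signal that varies within a region is averaged away before the classifier sees it, which is the information loss referred to above.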

The current trend is to bypass handcrafted feature extraction and perform end-to-end learning directly on raw signals. For example, Muksimova et al. [20] proposed a CNN architecture integrated with an attention mechanism for end-to-end learning from original MRI slices, enabling automatic multi-scale feature capture and fusion. Xin et al. [22] employed a CNN + Swin Transformer dual-stream network to process static 3D sMRI, focusing on capturing spatial patterns of gray/white matter atrophy. SwiFT [23] adapted the Swin Transformer for dynamic 4D fMRI analysis by introducing a temporal modeling mechanism, enabling dynamic characterization of FC networks. These methods each focus on a single modality. Despite significant progress in deep learning-based MRI analysis, comprehensively parsing the complex human brain often requires integrating multimodal data.

Multimodal medical imaging research has made remarkable progress [32,33,34], focusing on integrating data from different modalities to enhance model perception, understanding, and reasoning. Early studies like camAD [24] used CNN-based multi-scale feature extraction and cross-attention fusion, dynamically weighting different modalities to significantly improve Alzheimer’s disease classification accuracy. Similarly, AGGN [35] further developed a dual-domain (channel + spatial) attention mechanism combined with multi-scale feature extraction for precise glioma grading. In terms of architectural innovation, OmniVec2 [36] built a universal Transformer framework supporting 12 modalities, using cross-attention in a dual-stream architecture for intermodal feature fusion and enabling cross-modal knowledge transfer via shared transformer parameters. To improve computational efficiency, MDDMamba [37] introduced the CrossMamba module, replacing the traditional Transformer’s quadratic complexity with a linear alternative for efficient multimodal fusion. For modeling high-order relationships, CIA-HGCN [38] applied hypergraph convolutional networks to mental disorder classification, capturing complex biomarker associations by constructing brain region-gene hypergraph networks. These methods collectively drive the paradigm shift in multimodal analysis from simple feature concatenation to deep, intelligent fusion, focusing on uncovering latent biological correlations through adaptive mechanisms.

Site heterogeneity in multi-center data poses a significant challenge, as existing static harmonization methods struggle to dynamically disentangle site-specific variances from disease biomarkers. Multi-site neuroimaging has become a key paradigm, enhancing statistical power and generalizability by aggregating data from different scanners and populations. Early methods focused on data-level harmonization; for instance, ComBat [14], which uses an empirical Bayes framework for static correction of site effects, became a widely used baseline. Subsequently, SiMix [39] introduced data augmentation via cross-site image mixing and test-time multi-view boosting to improve generalization to unseen sites. With the rise of deep learning, Svanera et al. [40] used a progressive level-of-detail network, learning site-invariant anatomical priors at lower levels while adaptively handling site-specific intensity distributions at higher levels, achieving implicit heterogeneity disentanglement. The domain adaptation paradigm spurred advances in feature-space alignment techniques: Guan et al. [15] used an adversarial dual-branch structure to separate shared and site-specific features; USMDA [16] enabled unsupervised multi-source domain adaptation; FSM-MSDA [17] established semantic feature matching mechanisms. Recent research also addresses privacy preservation and heterogeneous collaboration: SFPGCL [41] innovatively combined federated learning with contrastive learning, using a shared branch to capture global invariant features and a personalized branch to retain site-specific characteristics, mitigating client heterogeneity via contrastive learning; SAN-Net [42] employed self-adaptive normalization and Gradient Reversal Layers to dynamically suppress site-related variations at the feature level.
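
To illustrate what a static, data-level correction of site effects looks like, the sketch below standardizes one feature within each site and maps it back to the pooled mean and standard deviation. It is a deliberately simplified location-scale adjustment and not ComBat itself: there is no empirical Bayes shrinkage and no covariate modeling, and the function name and toy values are hypothetical.

```python
from statistics import mean, pstdev


def site_location_scale(values, sites):
    """Remove per-site mean and scale from one feature.

    Each value is standardized with its own site's mean and standard
    deviation, then rescaled to the pooled mean and standard deviation.
    Requires every site to have a non-zero standard deviation.
    """
    pooled_mean, pooled_std = mean(values), pstdev(values)
    site_stats = {}
    for site in set(sites):
        site_values = [v for v, s in zip(values, sites) if s == site]
        site_stats[site] = (mean(site_values), pstdev(site_values))
    return [
        (v - site_stats[s][0]) / site_stats[s][1] * pooled_std + pooled_mean
        for v, s in zip(values, sites)
    ]


# Hypothetical toy feature measured at two sites with a large scanner offset.
values = [1.0, 2.0, 3.0, 11.0, 12.0, 13.0]
sites = ["A", "A", "A", "B", "B", "B"]
harmonized = site_location_scale(values, sites)
```

The correction is fixed once the site statistics are estimated and is applied without reference to the downstream task. If diagnosis rates differed between sites, a correction of this kind would also remove part of the disease-related difference, which is one motivation for the feature-level approaches described above.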

In summary, research on automated MDD diagnosis and MRI analysis clearly illustrates a technological development path from methodological innovation to addressing real-world challenges. Initially, various neural network models significantly enhanced the characterization of brain functional connectivity networks by incorporating attention mechanisms, dynamic fusion, and embedding learning. However, the inherent site heterogeneity and scanner effects in multi-center data have highlighted the necessity of moving beyond mere model performance optimization. This has driven the development of technologies such as domain adaptation, feature disentanglement, and federated learning, aimed at enhancing model generalizability and robustness. Looking ahead, research in this field will increasingly focus on integrating the depth of end-to-end learning with the breadth of multimodal fusion, while leveraging explainable artificial intelligence technologies to ensure model transparency and trustworthiness. Ultimately, through the synergistic optimization of algorithmic architecture, data harmonization, and multimodal information integration, we can expect to develop objective diagnostic tools that are truly applicable in clinical practice and demonstrate high generalizability.

Table 1. Recent research on MRI-based MDD classification on the REST-meta-MDD dataset.

| Study | Methodology | Sample | Data Characteristics | Site Harmonization | Accuracy |
|---|---|---|---|---|---|
| Zhu et al. (2023) [25] | Deep Graph CNN | HC, n = 771; MDD, n = 830 | fMRI | No (Random split) | 72.10% |
| Gallo et al. (2023) [26] | SVM and GCN | HC, n = 1083; MDD, n = 1255 | fMRI | No (Random split) | 62.00% |
| Zheng et al. (2023) [43] | Brain function encoder and brain structure encoder for feature extraction, with a function and structure co-attention fusion module | HC, n = 1179; MDD, n = 1008 | fMRI and sMRI | No (Random split) | 75.20% |
| Noman et al. (2024) [18] | Graph autoencoder (GAE) architecture built upon GCN | HC, n = 227; MDD, n = 250 | fMRI | No (Random split) | 65.07% |
| Tan et al. (2024) [30] | Generative Adversarial Network (GAN) | HC, n = 684; MDD, n = 747 | fMRI | No (Random split) | 76.28% |
| Zhao et al. (2024) [29] | GNN | HC, n = 779; MDD, n = 832 | fMRI | No (Random split) | 67.12% |
| Zhang et al. (2024) [31] | Deep Residual Shrinkage Denoising Network | Unknown | fMRI | No (Random split) | 72.43% |
| Xi et al. (2025) [44] | Random Forest and an ensemble classifier | HC, n = 742; MDD, n = 821 | fMRI | No (Random split) | 75.30% |
| Su et al. (2025) [28] | Graph Attention Network (GAT) | HC, n = 765; MDD, n = 821 | fMRI | No (Random split) | 78.73% |
| Liu et al. (2025) [27] | Federated Graph Convolutional Network framework with Dual Graph Attention Network | HC, n = 384; MDD, n = 457 | fMRI | Yes | 61.80% |
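
Most entries in Table 1 use a random split, in which subjects from one site can appear in both the training and the test set. For contrast, the leave-one-site-out setting mentioned in the Introduction holds out every subject from one site at a time. The sketch below shows only the index bookkeeping for such a split. The site labels are hypothetical, and it is not the evaluation code used in this study.

```python
def leave_one_site_out(site_labels):
    """Yield (held_out_site, train_indices, test_indices) for each site."""
    for held_out in sorted(set(site_labels)):
        train = [i for i, s in enumerate(site_labels) if s != held_out]
        test = [i for i, s in enumerate(site_labels) if s == held_out]
        yield held_out, train, test


# Hypothetical site label for each of six subjects.
sites = ["S1", "S1", "S2", "S3", "S3", "S2"]
folds = list(leave_one_site_out(sites))

for held_out, train, test in folds:
    # No subject from the held-out site ever appears in the training set.
    print(held_out, "train:", train, "test:", test)
```

Under this protocol the model never sees the scanner or protocol of the test site during training, so the reported accuracy reflects generalization to an unseen site rather than to unseen subjects from familiar sites.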

5. Discussion

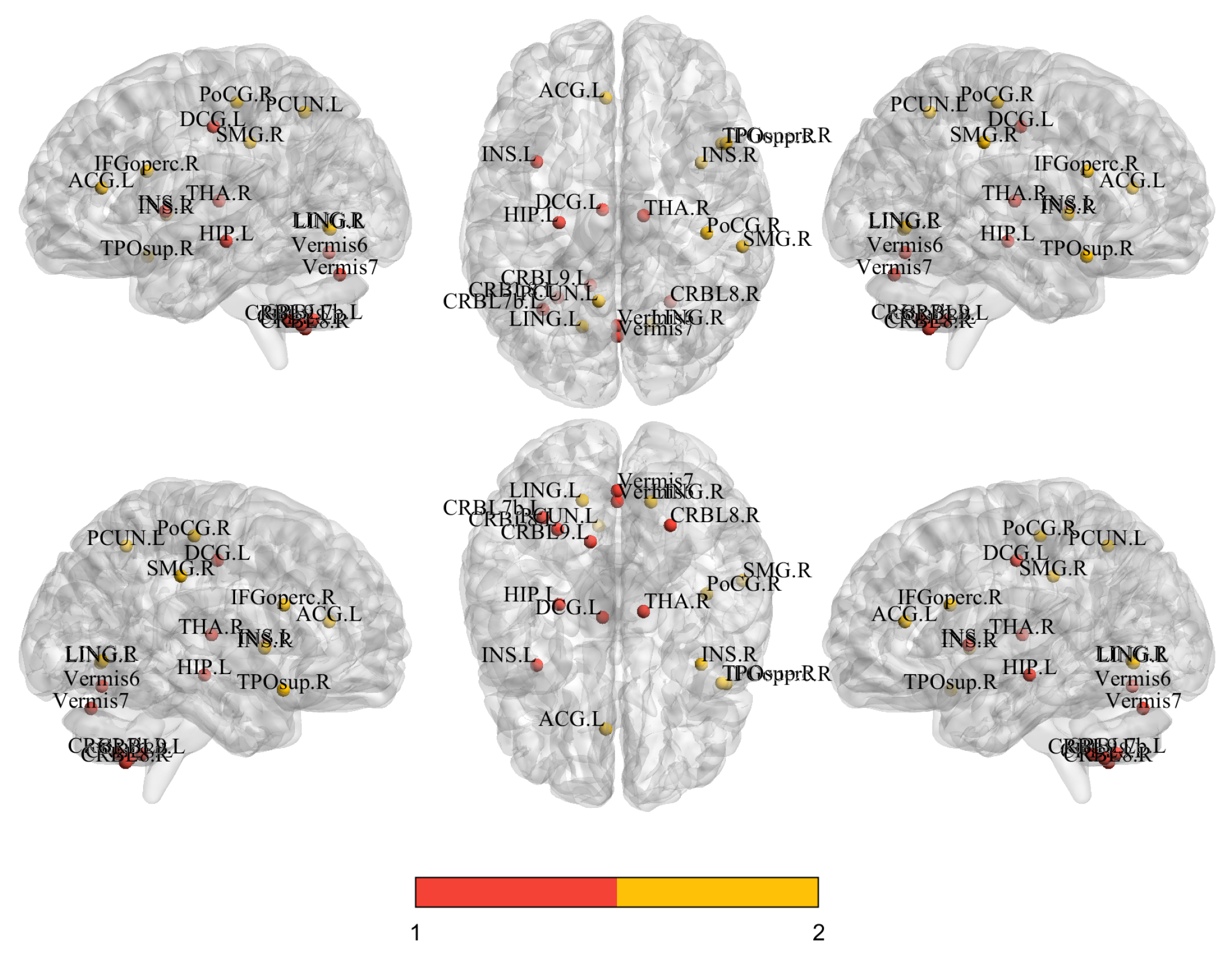

To elucidate the key neural mechanisms underpinning the model’s prediction of major depressive disorder (MDD), we employed the SHAP (SHapley Additive exPlanations [60]) methodology to systematically quantify the contribution strengths of temporal sequence dynamic functional features and gray matter volume (GMV) structural features for the classification decision. Through comprehensive multimodal interpretability analysis, we identified brain regions with significant modality-specific influences and further interpreted their potential pathophysiological implications. The feature importance rankings derived from SHAP analysis, along with their statistical significance validated by independent t-tests, are presented in Table 7. The corresponding brain network visualization [61] results are shown in Figure 4.
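
For intuition about what a SHAP-style attribution measures, the sketch below computes exact Shapley values for a tiny hypothetical scoring function by averaging each feature's marginal contribution over all feature orderings. It is not the SHAP library, which relies on approximations for realistic models, and it is not the analysis pipeline of this study. The function `toy_score` and its three features are invented for illustration.

```python
from itertools import permutations


def shapley_values(value_fn, n_features):
    """Exact Shapley values: mean marginal contribution over all orderings."""
    totals = [0.0] * n_features
    orderings = list(permutations(range(n_features)))
    for order in orderings:
        present = set()
        for feature in order:
            before = value_fn(frozenset(present))
            present.add(feature)
            totals[feature] += value_fn(frozenset(present)) - before
    return [t / len(orderings) for t in totals]


def toy_score(present):
    """Hypothetical model output given the set of available features."""
    score = 0.0
    if 0 in present:
        score += 2.0
    if 1 in present:
        score += 1.0
    if 0 in present and 2 in present:
        score += 1.0  # feature 2 matters only together with feature 0
    return score


phi = shapley_values(toy_score, n_features=3)
```

The attributions sum to the difference between the score with all features and the score with none, and the interaction term is shared equally between features 0 and 2. Ranking features by the magnitude of such values gives an importance ordering of the kind reported in Table 7.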

In distinguishing between patients with MDD and HC, the key neuroimaging features based on time series and gray matter volume exhibited distinct yet complementary patterns. The key brain regions identified at the time series level were primarily concentrated in subcortical hubs and the cerebellum, such as “Thalamus_R”, “Hippocampus_L”, and various cerebellar subregions (“Cerebellum_8_R”, “Vermis_7”). This finding is consistent with previous reports of cerebellar abnormalities in MDD patients [62,63]. In contrast, the critical regions identified at the gray matter volume level were clustered in cortical areas associated with higher-order cognition and emotion regulation, including “Cingulum_Mid_L”, “Frontal_Inf_Oper_R”, “Insula_R”, and “Precuneus_L”. The structural alterations in these regions are more directly linked to the core symptoms of depression, such as emotional dysregulation and cognitive dysfunction. Notably, the middle part of the left cingulate gyrus was identified as a significant feature in both modalities. This multimodal comparison suggests that the pathological mechanisms of depression simultaneously involve functional dysregulation in deep brain regions responsible for basic information processing and structural alterations in superficial cortical centers for cognition and emotion.

Collectively, these findings demonstrate a clear bimodal complementarity: while subcortical hubs and the cerebellum primarily manifest as abnormalities in temporal activation patterns, higher-order cognitive and emotion-related cortices predominantly exhibit structural volumetric alterations. These distinct patterns likely reflect divergent neuropathological processes, involving both functional dysregulation in information processing hubs and structural degradation in cognitive–emotional centers. The observed bimodal heterogeneity underscores that reliance on single-modality data may insufficiently capture MDD’s pathological complexity. Future investigations should prioritize the integration of multimodal data to achieve a more comprehensive delineation of the disorder’s neural underpinnings.

Regarding clinical translational potential, these findings have several implications. First, the identified multimodal biomarker combinations show promise for objective neuroimaging-based auxiliary diagnostic tools. This would help address the current over-reliance on subjective clinical symptoms for diagnosis, particularly by providing a biological basis for differentiating complex or ambiguous cases. Second, these features could be used to construct disease prediction models, for instance, to identify individuals at high risk, thereby advancing precision medicine. Finally, this deeper understanding of aberrant brain regions (such as the cerebellum) may inspire novel treatment targets, for example, by guiding non-invasive brain stimulation techniques to more precisely target previously overlooked key nodes. Therefore, this study not only deepens our understanding of the neural mechanisms underlying depression but also lays a foundation for advancing its clinical diagnosis and treatment towards a more objective and precise paradigm.

This study has several limitations that should be acknowledged. While we conducted statistical tests that revealed significant differences in sex distribution and education level between the MDD and HC groups, these variables were not included as covariates in our primary neuroimaging analyses. This leaves open the possibility that the observed group differences are partially influenced by these demographic disparities. Future studies should incorporate sex and education as covariates to verify the robustness of our findings.

6. Conclusions

This study proposes an innovative multimodal multitask deep learning framework, MMDD, for the objective diagnosis of major depressive disorder (MDD). By integrating gray matter volume (GMV) features and temporal sequence data, the framework identifies neuropsychiatric biomarkers of depression. In terms of model architecture, a dual-pathway deep neural network is adopted. Specifically, a 3D ResNet is employed to extract spatial structural features from GMV, while an LSTM–Transformer hybrid encoder is utilized to model temporal sequence information. Furthermore, a Bidirectional Cross-Attention Fusion (BCAF) mechanism is implemented to achieve dynamic interaction and complementary fusion of spatiotemporal features. To enhance cross-center generalization, a Gradient Reversal Layer-based Multitask Learning (GRL-MTL) strategy is designed to optimize both site-invariant features and disorder-specific signatures.

To uncover the neural mechanisms driving the model’s decisions, we employed SHAP analysis, with key findings validated by two-sample t-tests. This approach revealed a clear functional–structural dichotomy: the model’s predictions relied on temporal abnormalities in functional circuits encompassing subcortical hubs and the cerebellum, and on structural volume alterations in higher-order cognitive–emotional cortices. The left middle cingulate gyrus emerged as a convergent hub across both modalities. This bimodal complementarity suggests MDD involves concurrent functional dysregulation in deep brain regions and structural degradation in cortical centers.

In conclusion, this integrative research combines advanced deep learning techniques with interpretability analysis. Beyond establishing a novel paradigm for developing robust and generalizable objective diagnostic tools for depression, the study significantly advances our understanding of the multimodal neural mechanisms underlying MDD, with profound clinical implications for advancing precision medicine in psychiatric disorders.