Prediction of Neonatal Length of Stay in High-Risk Pregnancies Using Regression-Based Machine Learning on Computerized Cardiotocography Data

Abstract

1. Introduction

2. Materials and Methods

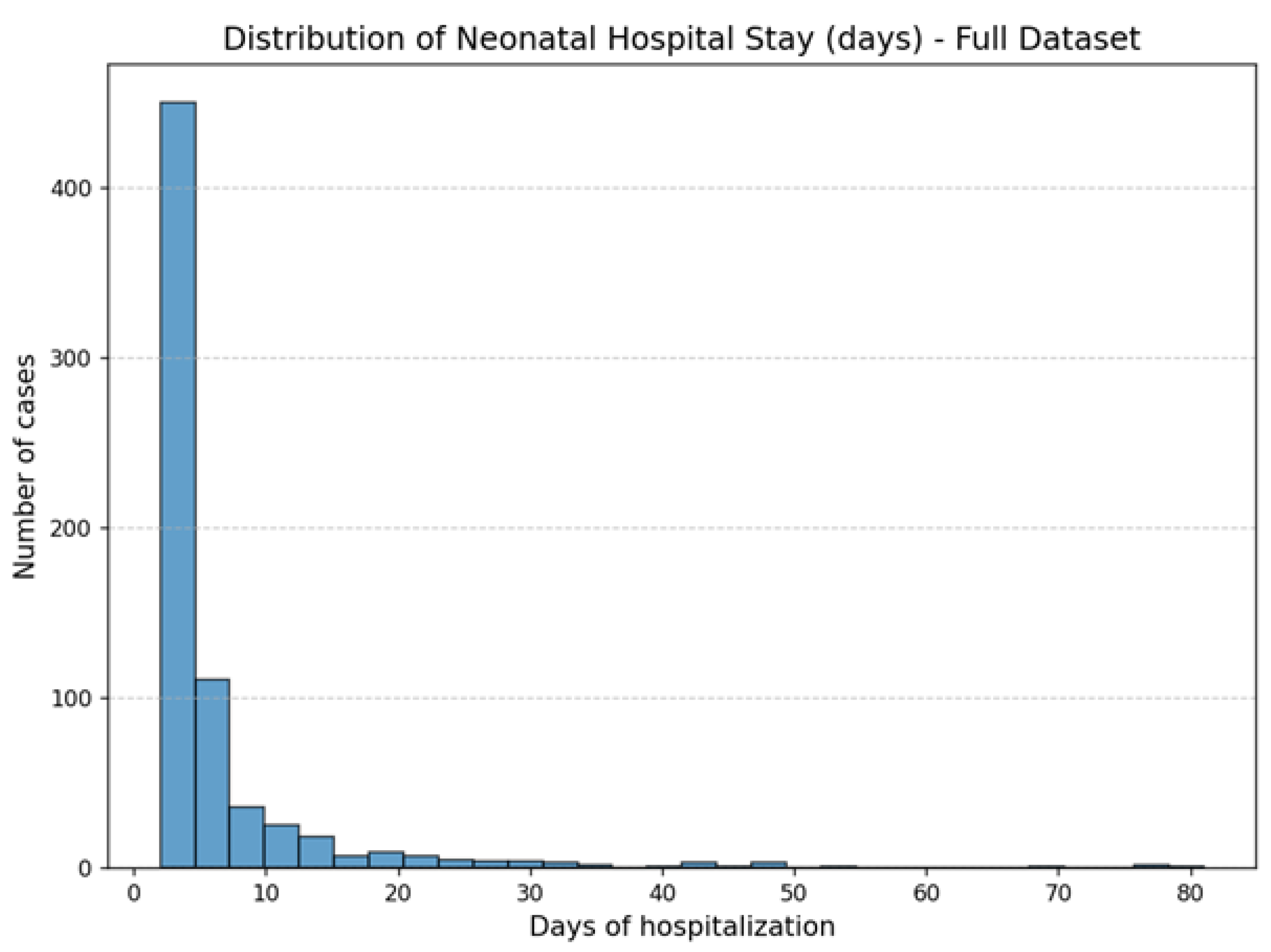

2.1. Dataset

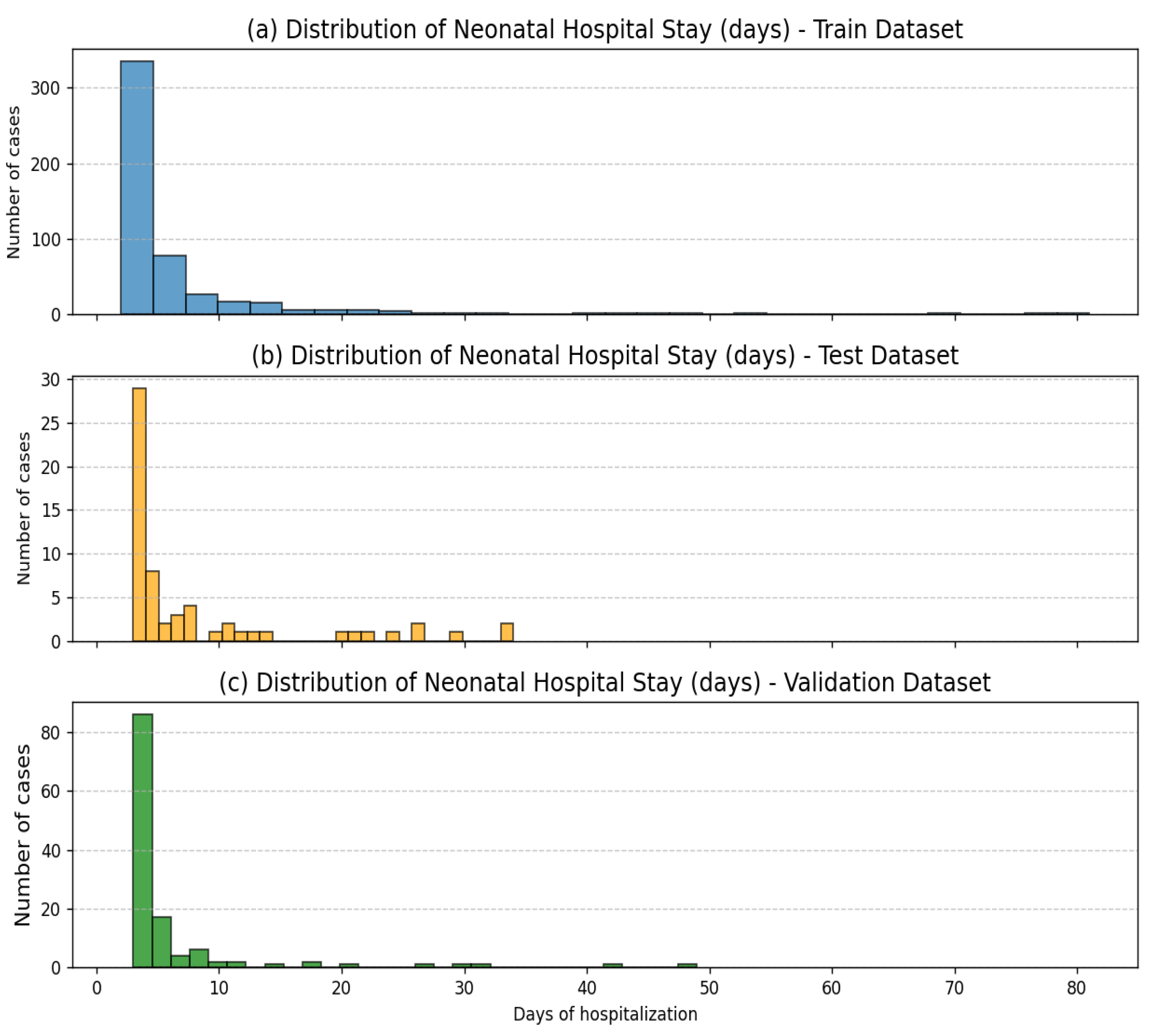

2.2. Dataset Preparation and Splitting

2.3. Machine Learning Methods

3. Results

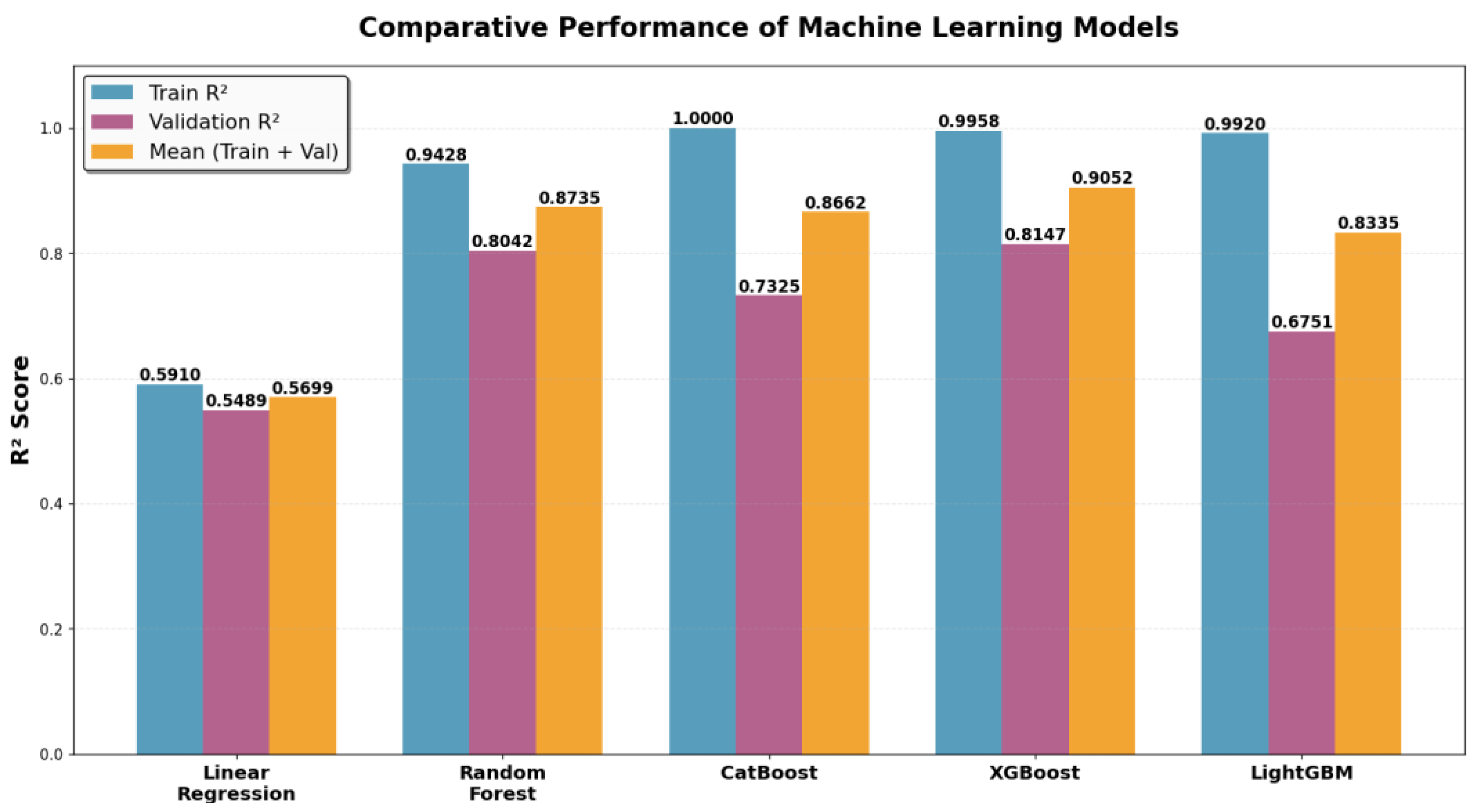

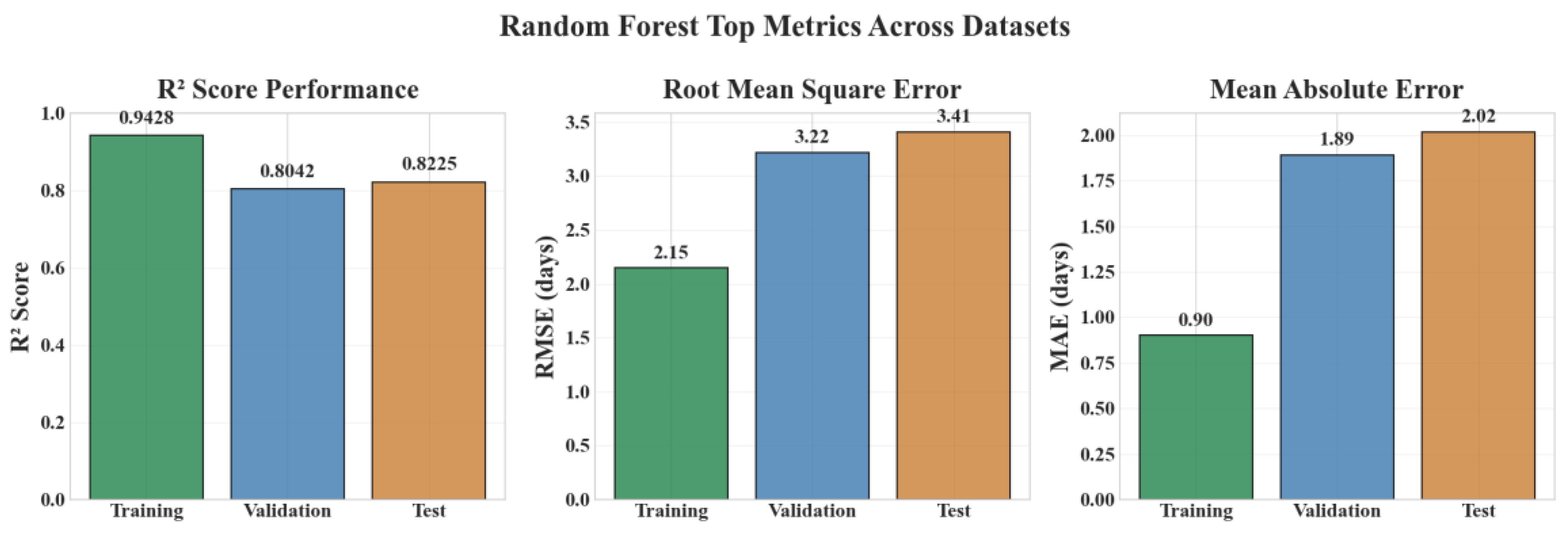

3.1. Performance on Training and Validation Sets

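- Random Forest: Strongest generalization and the most balanced performance, with the smallest train-validation gap among the ensemble models.

- XGBoost: Strong predictive accuracy, including the highest validation R², with moderate overfitting.

- CatBoost: Perfect training fit (R² = 1.0000) but reduced validation performance, indicating overfitting.

- LightGBM: Lowest validation score among the ensemble models and the largest train-validation gap.

- Linear Regression: Poor performance on both the training and validation sets.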
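The qualitative ranking above can be cross-checked against the train and validation R² values reported in the model comparison table. The sketch below computes the train-validation gap as a simple overfitting indicator. The gap metric and the helper name `generalization_gap` are illustrative choices, not necessarily the criterion the authors used.

```python
# Train and validation R² values as reported in the model comparison table.
reported_r2 = {
    "Random Forest": (0.9428, 0.8042),
    "CatBoost": (1.0000, 0.7325),
    "XGBoost": (0.9958, 0.8147),
    "LightGBM": (0.9920, 0.6751),
    "Linear Regression": (0.5910, 0.5489),
}


def generalization_gap(train_r2: float, val_r2: float) -> float:
    """Hypothetical helper: train R² minus validation R².

    Larger values suggest the model fits the training data much better
    than unseen data, i.e. more overfitting.
    """
    return round(train_r2 - val_r2, 4)


gaps = {name: generalization_gap(tr, va) for name, (tr, va) in reported_r2.items()}

# Print models from smallest to largest gap.
for name, gap in sorted(gaps.items(), key=lambda kv: kv[1]):
    train_r2, val_r2 = reported_r2[name]
    print(f"{name:<18} train={train_r2:.4f} val={val_r2:.4f} gap={gap:.4f}")

# Restrict to the tree ensembles, since a small gap alone is not enough:
# Linear Regression has the smallest gap but low scores on both sets.
ensembles = {n: g for n, g in gaps.items() if n != "Linear Regression"}
print("smallest ensemble gap:", min(ensembles, key=ensembles.get))
print("largest gap:", max(gaps, key=gaps.get))
```

Linear Regression shows the smallest gap only because it scores poorly on both sets, so the gap is best read together with the validation R² itself.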

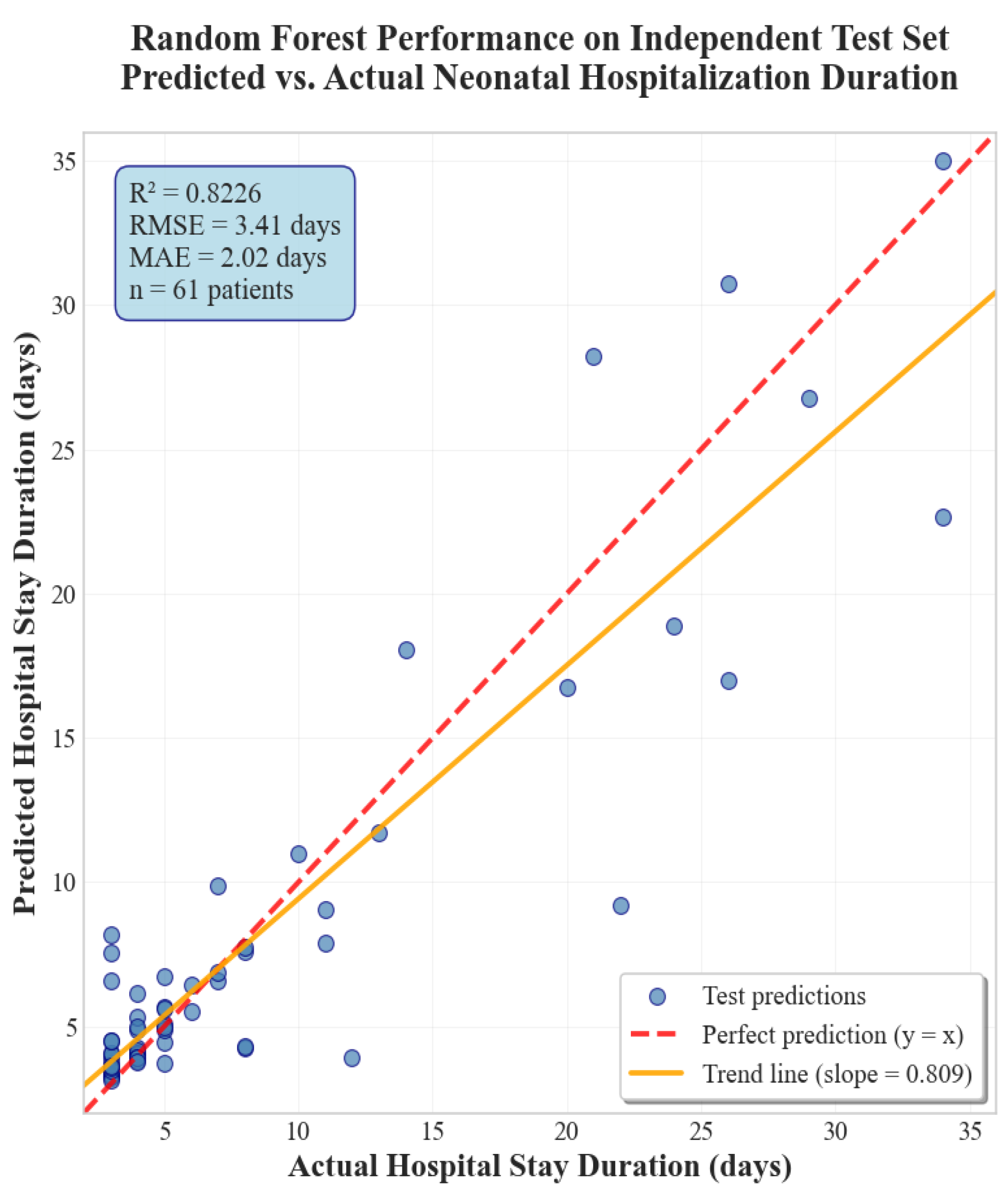

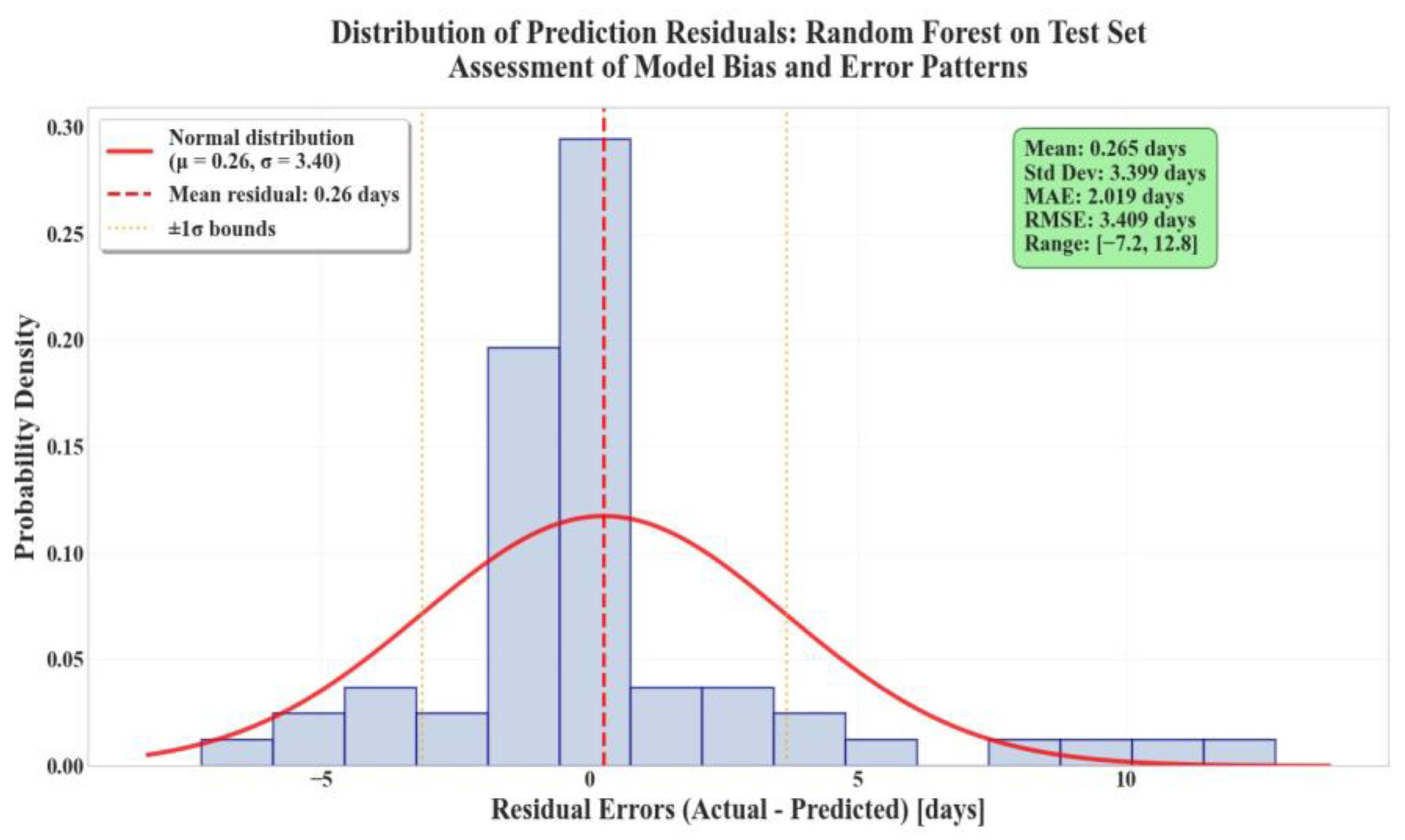

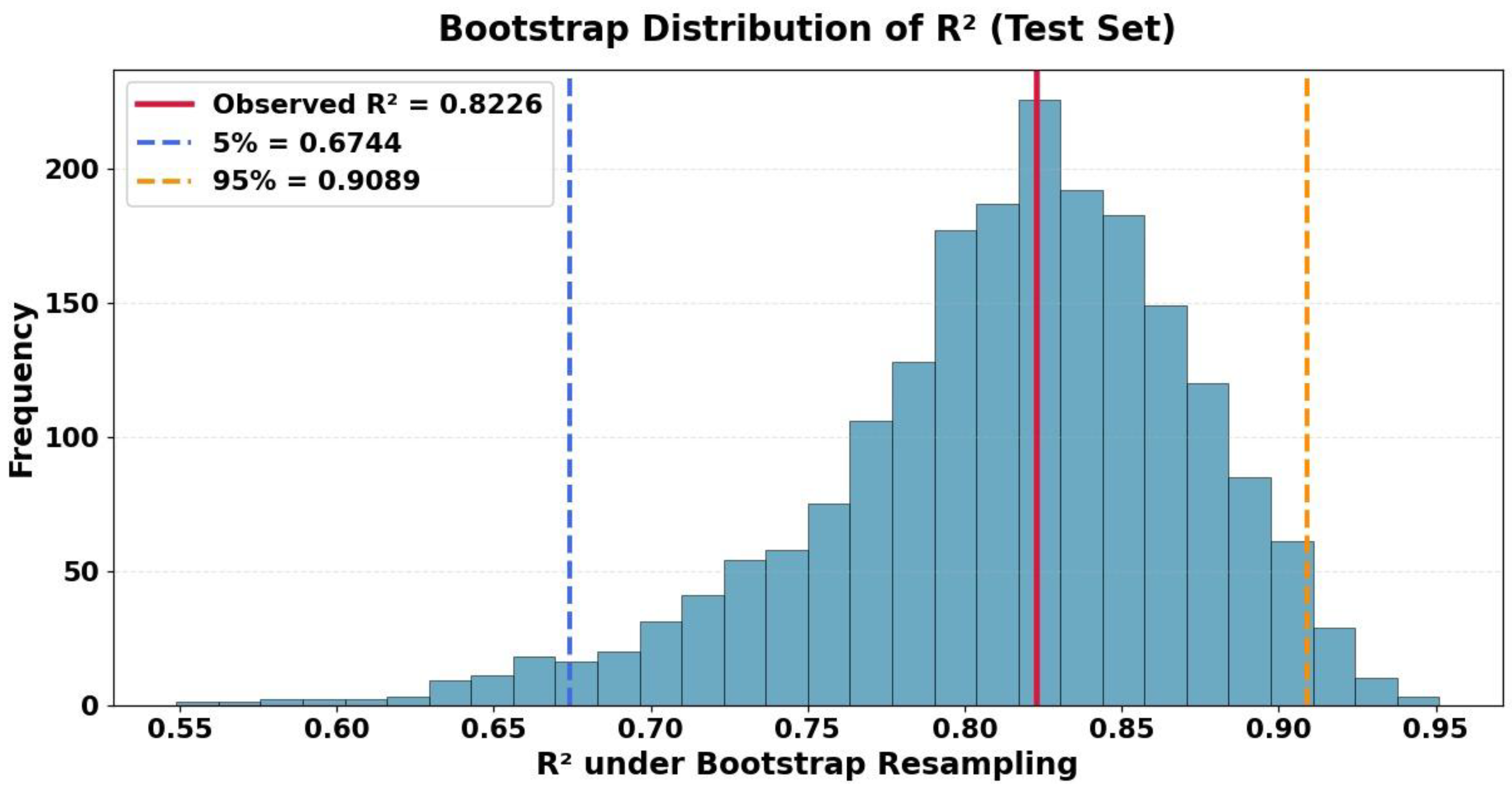

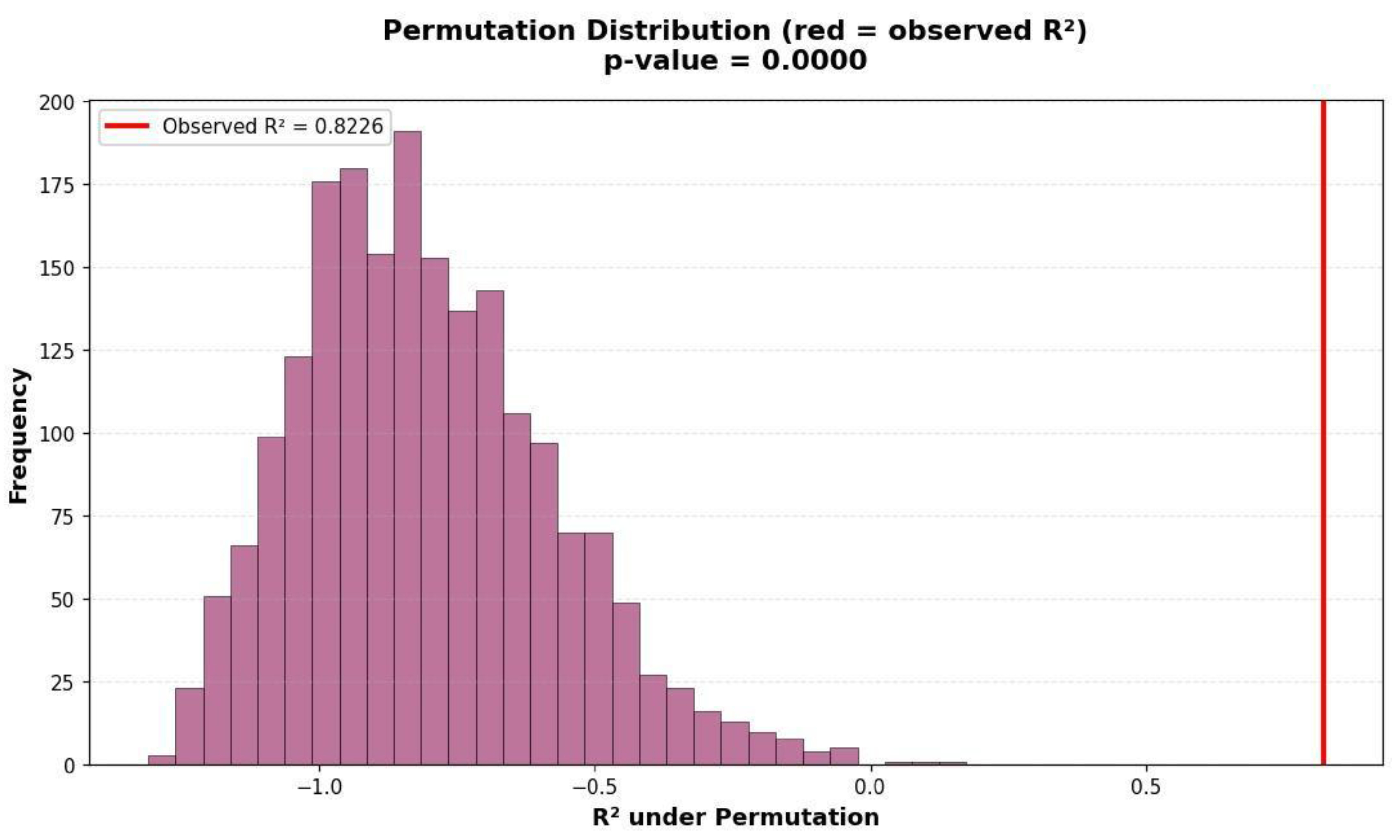

3.2. Performance on Test Set

3.3. Summary of Findings

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACOG | American College of Obstetricians and Gynecologists |
| AI | Artificial Intelligence |
| AUC | Area Under the Curve |
| ANN | Artificial Neural Network |
| BERT | Bidirectional Encoder Representations from Transformers |
| CART | Classification and Regression Tree |
| CatBoost | Categorical Boosting |
| CTG | Cardiotocography |
| cCTG | Computerized Cardiotocography |
| DL | Deep Learning |
| ELM | Extreme Learning Machine |
| FHR | Fetal Heart Rate |
| ICU | Intensive Care Unit |
| IUGR | Intrauterine Growth Restriction |
| KNN | K-Nearest Neighbor |
| LightGBM | Light Gradient Boosting Machine |
| LoS/LOS | Length of Stay |
| LSTM | Long Short-Term Memory |
| LTV | Long-Term Variability |
| MAE | Mean Absolute Error |
| ML | Machine Learning |
| MIMIC-III/MIMIC-IV | Medical Information Mart for Intensive Care III/IV |
| NICU | Neonatal Intensive Care Unit |
| PCA | Principal Component Analysis |
| RBFN | Radial Basis Function Neural Network |
| RF | Random Forest |
| RFR | Random Forest Regressor |
| RMSE | Root Mean Squared Error |
| ROC-AUC | Receiver Operating Characteristic Area Under the Curve |
| STV | Short-Term Variability |
| SVM | Support Vector Machine |
| WRF | Weighted Random Forest |
| XGBoost | eXtreme Gradient Boosting |


| Variable | Mean | Std | Min | Median | Max |
|---|---|---|---|---|---|
| Hypertension (HTA) | 0.32 | 0.46 | 0 | 0 | 1 |
| IUGR | 0.32 | 0.46 | 0 | 0 | 1 |
| Gestational diabetes (GD) | 0.27 | 0.44 | 0 | 0 | 1 |
| Cholestasis | 0.19 | 0.39 | 0 | 0 | 1 |
| Gestational age at monitoring (days) | 255.8 | 16.0 | 197 | 260 | 281 |
| Gestational age at delivery (days) | 263.0 | 12.9 | 200 | 266 | 285 |
| Neonatal length of stay (days, target variable) | 6.57 | 8.65 | 2 | 4 | 81 |
| Signal loss (%) | 5.13 | 5.61 | 0 | 3 | 29 |
| Signal quality (%) | 94.99 | 5.47 | 71 | 97 | 100 |
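The target variable has a mean (6.57 days) well above its median (4 days) and a maximum of 81 days, which suggests a right-skewed distribution dominated by short stays with a long tail of prolonged admissions. The sketch below reproduces the summary columns of the descriptive table using Python's standard `statistics` module. The input values are hypothetical, not the study data, and whether the authors reported the sample or population standard deviation is not stated; the sample version is assumed here.

```python
import statistics


def describe(values: list[float]) -> dict[str, float]:
    """Summary statistics in the column order of the descriptive table."""
    return {
        "mean": statistics.mean(values),
        "std": statistics.stdev(values),  # sample standard deviation (n - 1), an assumption
        "min": min(values),
        "median": statistics.median(values),
        "max": max(values),
    }


# Hypothetical neonatal length-of-stay values in days (NOT the study data).
los_days = [2, 3, 3, 4, 4, 4, 5, 6, 9, 20]
summary = describe(los_days)
print({k: round(v, 2) for k, v in summary.items()})

# A mean well above the median points to a right-skewed distribution.
print("right-skewed:", summary["mean"] > summary["median"])
```

A single long admission (20 days here) pulls the mean above the median, which mirrors the pattern in the reported length-of-stay row.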

| Model | Train R² | Validation R² | Mean (Train + Val) |
|---|---|---|---|
| Random Forest | 0.9428 | 0.8042 | 0.8735 |
| CatBoost | 1.0000 | 0.7325 | 0.8662 |
| XGBoost | 0.9958 | 0.8147 | 0.9052 |
| LightGBM | 0.9920 | 0.6751 | 0.8335 |
| Linear Regression | 0.5910 | 0.5489 | 0.5699 |
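The Mean (Train + Val) column appears to be the simple arithmetic average of the training and validation R² values; the worked check below reproduces the Random Forest and XGBoost entries under that assumption.

$$
\bar{R}^2 = \frac{R^2_{\text{train}} + R^2_{\text{val}}}{2}
$$

$$
\bar{R}^2_{\text{RF}} = \frac{0.9428 + 0.8042}{2} = \frac{1.7470}{2} = 0.8735,
\qquad
\bar{R}^2_{\text{XGBoost}} = \frac{0.9958 + 0.8147}{2} = \frac{1.8105}{2} \approx 0.9052
$$

Because this average weights the training score equally with the validation score, it can favor models that fit the training data closely: XGBoost has the highest mean (0.9052) even though its train-validation gap (0.1811) is larger than that of Random Forest (0.1386).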

| Dataset | R² | RMSE (days) | MAE (days) |
|---|---|---|---|
| Training | 0.9428 | 2.1456 | 0.9009 |
| Validation | 0.8042 | 3.2177 | 1.8904 |
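The three error measures in this table are standard regression metrics. The sketch below computes R², RMSE and MAE from paired observed and predicted lengths of stay, and also returns MSE, because MSE is simply RMSE squared and MAE can never exceed RMSE, which makes reported values easy to cross-check. The function name `regression_metrics` and the input values are hypothetical; this is not the authors' evaluation code.

```python
import math


def regression_metrics(y_true: list[float], y_pred: list[float]) -> dict[str, float]:
    """Hypothetical helper returning R², RMSE, MAE and MSE (same units as the target)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    n = len(y_true)
    mean_true = sum(y_true) / n
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)               # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    mse = ss_res / n
    return {
        "r2": 1.0 - ss_res / ss_tot,                     # undefined if y_true is constant
        "rmse": math.sqrt(mse),
        "mae": sum(abs(r) for r in residuals) / n,
        "mse": mse,
    }


# Hypothetical observed vs. predicted length of stay in days (NOT study data).
observed = [2, 4, 4, 6, 14]
predicted = [3, 4, 5, 6, 11]
metrics = regression_metrics(observed, predicted)
print({k: round(v, 4) for k, v in metrics.items()})
```

Here the residuals are -1, 0, -1, 0 and 3 days, so R² = 1 - 11/88 = 0.875, MSE = 2.2, RMSE ≈ 1.4832 and MAE = 1.0; the single large miss inflates RMSE relative to MAE.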

| Model | Train R² | Validation R² | Test R² | Mean (Train + Val) |
|---|---|---|---|---|
| Random Forest | 0.9428 | 0.8042 | 0.8226 | 0.8735 |
| CatBoost | 1.0000 | 0.7325 | 0.7059 | 0.8662 |
| XGBoost | 0.9958 | 0.8147 | 0.6911 | 0.9052 |
| LightGBM | 0.9920 | 0.6751 | 0.6851 | 0.8335 |
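The test results suggest that the model ranked first on validation is not the one ranked first on held-out data. The sketch below, using only the reported validation and test R² values, identifies the best model on each set and the change in R² from validation to test. The variable names are illustrative.

```python
# Validation and test R² as reported in the test-set comparison table.
reported = {
    "Random Forest": {"val": 0.8042, "test": 0.8226},
    "CatBoost": {"val": 0.7325, "test": 0.7059},
    "XGBoost": {"val": 0.8147, "test": 0.6911},
    "LightGBM": {"val": 0.6751, "test": 0.6851},
}

best_on_val = max(reported, key=lambda m: reported[m]["val"])
best_on_test = max(reported, key=lambda m: reported[m]["test"])

# Change from validation to test R²; negative values mean the score dropped.
shift = {m: round(r["test"] - r["val"], 4) for m, r in reported.items()}

print("best on validation:", best_on_val)
print("best on test:", best_on_test)
for m, d in sorted(shift.items(), key=lambda kv: kv[1]):
    print(f"{m:<14} val->test change: {d:+.4f}")
```

XGBoost leads on validation but loses about 0.12 R² on the test set, whereas Random Forest is stable (and slightly higher on test), which is consistent with its smaller train-validation gap.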

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Danciu, B.M.; Șișială, M.-E.; Dumitru, A.-I.; Simionescu, A.A.; Sebacher, B. Prediction of Neonatal Length of Stay in High-Risk Pregnancies Using Regression-Based Machine Learning on Computerized Cardiotocography Data. Diagnostics 2025, 15, 2964. https://doi.org/10.3390/diagnostics15232964
