1. Introduction

Skin cancer is one of the most common types of cancer worldwide, and its incidence has increased steadily over the past few decades. According to the 2020 global cancer statistics from the World Health Organization (WHO), cancer is one of the leading causes of death globally, with nearly 10 million cancer deaths in 2020. Non-melanoma skin cancer (NMSC) accounts for approximately 1.2 million new cases annually, making it one of the most prevalent cancer types. Although melanoma represents only a small proportion of all skin cancer cases, it is biologically highly aggressive and is the leading cause of skin cancer-related death. According to the American Cancer Society (ACS), the five-year survival rate of melanoma patients can exceed 99% when the disease is detected early and treated promptly; however, once cancer cells have spread to distant organs, it drops to about 32%.

Dermoscopy is a non-invasive imaging technique that enhances the visibility of skin lesions by reducing surface light reflection and uniformly illuminating the area [1]. However, dermoscopic diagnosis relies on clinicians’ subjective judgment, making it susceptible to factors such as lighting changes, lesion complexity, and hair interference, which leads to diagnostic instability. This highlights the potential of computer-aided diagnosis (CAD) systems for automatic skin lesion analysis [2]. Automatic segmentation is a crucial step in CAD for accurately extracting lesion areas; however, it faces challenges such as diverse lesion shapes, indistinct boundaries, low contrast, and imaging artifacts. Traditional segmentation methods, such as thresholding [3], clustering [4], and region merging [5], rely on shallow features and generalize poorly to the complexity of skin lesions.

In recent years, deep learning has greatly advanced medical image segmentation. U-Net and its variants [6] remain strong baselines because their encoder–decoder topology with skip connections enables multi-scale feature fusion. Nevertheless, their locality-dominated receptive fields often struggle with blurred boundaries, low contrast, and irregular lesion geometry. To mitigate these issues, multi-scale CNN designs, typified by the Atrous Spatial Pyramid Pooling (ASPP) module in DeepLab [7], enlarge the effective receptive field through parallel dilated convolutions, thereby enriching context while preserving resolution. At the same time, Transformer-based models [8] have been introduced to capture long-range dependencies. TransFuse [9] combines a convolutional branch and a Transformer branch and fuses them bidirectionally so that local texture cues and global semantic relations can be aligned across stages. Swin-UNETR [10] adopts a Swin Transformer encoder with shifted windows to realize hierarchical self-attention at multiple scales and couples it with a U-Net-style decoder for dense prediction. MedT [11] employs a gated axial attention mechanism with learnable gates on the positional encodings and introduces a Local–Global training scheme that combines a global full-image branch with a local patch branch, enabling the model to capture long-range dependencies effectively with limited medical data. Building on these trends, hybrid CNN–Transformer systems and dual-encoder frameworks decouple local detail from global semantics and then reunify them along the decoding path.

In this paper, we propose a novel dual-encoder segmentation network, MSDTCN-Net, specifically designed for skin lesion segmentation. We conduct extensive experiments on four publicly available datasets: ISIC 2016 [12], ISIC 2017 [13], ISIC 2018 [14,15], and PH2 [16]. Comparative experiments with current state-of-the-art segmentation methods demonstrate the effectiveness of the proposed approach. Our main contributions are summarized as follows:

- (1) We propose a dual-encoder architecture that balances local feature extraction and long-range dependency modeling. ConvNeXt [17] captures local detail information, while a Deformable Transformer [18] models long-range dependencies through its deformable attention mechanism.

- (2) We introduce the Squeeze-and-Excitation (SE) [19] mechanism and a Multi-Scale Receptive Field (MSRF) module to enhance feature fusion and improve the model’s adaptability to lesions of different scales.

- (3) We propose a Hierarchical Feature Transfer (HFT) mechanism and optimize the decoder structure to improve boundary recovery for lesion areas.

2. Related Works

Deep learning has made significant progress in medical image segmentation, with U-Net being one of the most representative convolutional neural network (CNN) architectures. However, because many skin lesions have blurred boundaries and low contrast, the original U-Net often struggles to segment lesion areas accurately. Consequently, researchers have proposed many U-Net variants. For example, U-Net++ [20] enhances feature fusion through dense skip connections, improving segmentation accuracy; ResU-Net [21] incorporates residual connections to mitigate the vanishing gradient problem, improving training stability; AttU-Net [22] introduces attention mechanisms in the decoder to focus the model on key regions; and DCSAU-Net [23] combines depthwise separable convolutions with adaptive attention to improve computational efficiency and boundary segmentation. Although these variants have advanced medical image segmentation, the local receptive fields of CNNs remain a limitation, making it difficult to capture global information across distant pixels.

To address this limitation, researchers have introduced the Transformer self-attention mechanism to model long-range dependencies. By computing self-attention globally over the input features, a Transformer models the relationships between distant pixels, which makes it well suited to medical image segmentation tasks involving complex morphological structures. The success of the Vision Transformer (ViT) [24] in image classification has driven Transformer-based research in medical image segmentation. Swin Transformer [25], an improved version of ViT, computes self-attention within local windows and shifts the window partition between consecutive layers (the shifted-window mechanism), which reduces computational complexity and strengthens local information modeling while still allowing information to flow across windows.

With the widespread application of Transformers in medical image segmentation, researchers have further explored hybrid architectures that combine the strength of CNNs in extracting local fine-grained features with the capability of Transformers to model long-range dependencies. For instance, TransAttUNet [26] uses Transformer Self-Attention (TSA) and Global Spatial Attention (GSA) modules to extract both deep and shallow features, improving segmentation when lesion boundaries are complex and contrast is low. SUTrans-Net [27] employs a Spatial Group Attention (SGA) module to optimize the spatial attention mechanism, enhancing the feature representation of key regions. FAT-Net [28] uses a dual-encoder structure and designs a Feature Adaptation Module (FAM) to match the feature distributions of different layers in skip connections. DEU-Net [29] utilizes a dual-encoder U-Net structure, combining an EfficientNet-B6 convolutional encoder with a MaxViT Transformer encoder to simultaneously extract local details and global context. Pact-Net [30] designs a Channel-Space Fusion (CSF) module and a Self-Selection Multi-Scale Fusion (SSMF) module to optimize the fusion of features from different branches. DEMF-Net [31] integrates a dual encoder composed of a multi-scale Transformer branch and a CNN branch, and further introduces Dual-branch Attention Fusion (DAF), an Advanced Feature Fusion Module (AFFM), and Characterization Supplementary Blocks (CSBs) to reinforce edge details during decoding. DefNet [32] combines a convolutional distillation module (CDM) with a dual-branch attention transformer (DBAT) and employs a Multi-Scale Feature Enhancement Module (MFEM) to improve skip-level fusion. MEFP-Net [33] adopts an asymmetric dual encoder in which a Global Information Extraction Module (GIEM) complements the main CNN pathway; its decoder employs a Multi-Scale Adaptive Feature Fusion Module (MAFFM) with attention to suppress background noise, followed by an Atrous Pooling Dense Perception Module (APDPM) that strengthens boundary perception.

3. Methods

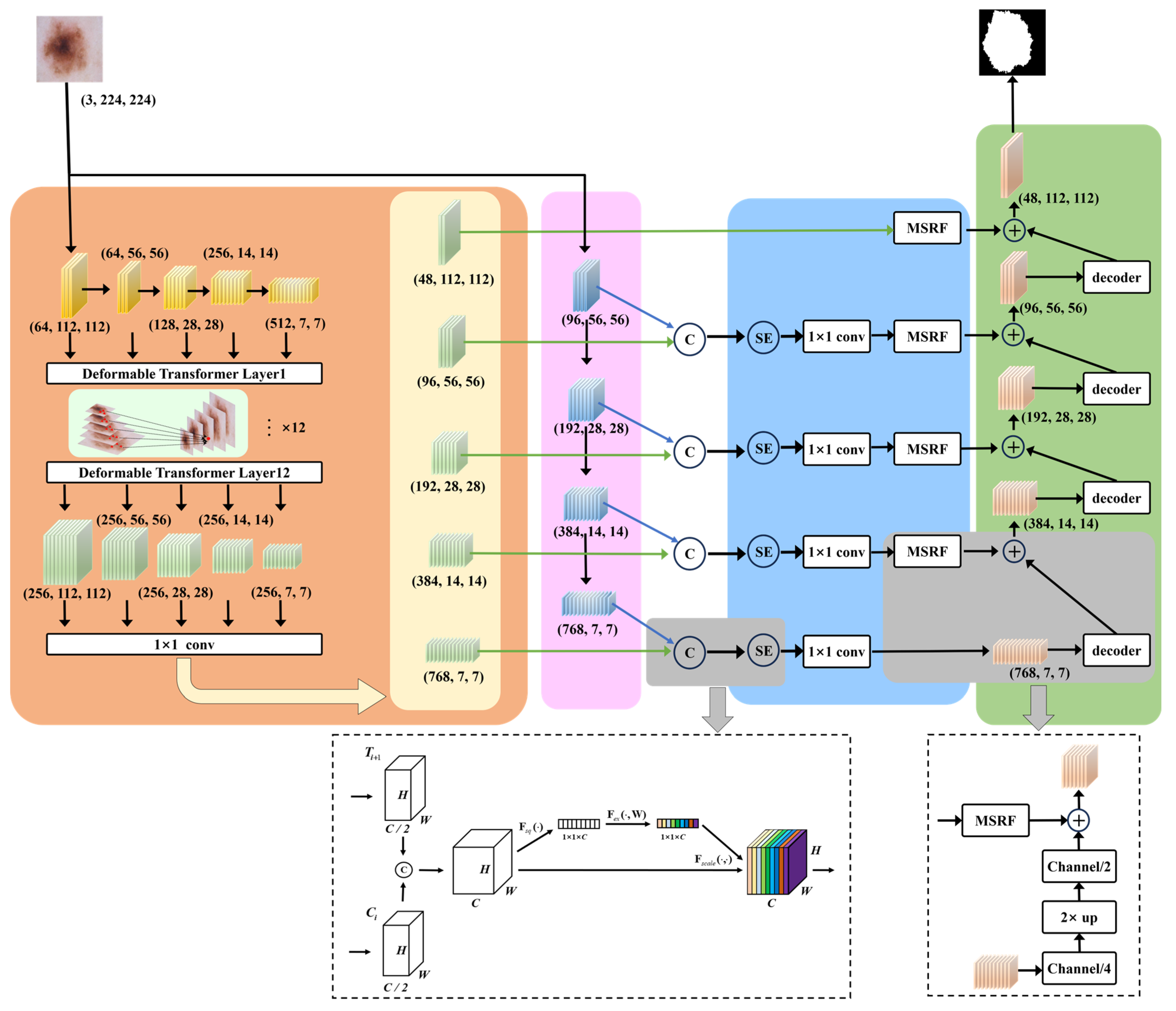

The overview of our proposed MSDTCN-Net is shown in Figure 1. This section introduces the overall architecture of the network, including data preprocessing, feature extraction, feature enhancement, decoding, and the loss function. For data preprocessing, we apply a series of morphological operations to remove hair artifacts from the images, providing cleaner inputs for segmentation. For feature extraction, we use a dual-encoder architecture in which ConvNeXt captures local details and a Deformable Transformer models long-range dependencies to enhance global context. For feature enhancement, we introduce the Squeeze-and-Excitation (SE) mechanism to perform channel-wise adaptive weighting, together with the Multi-Scale Receptive Field (MSRF) module, which captures features across multiple scales using multi-branch and dilated convolutions. For decoding, we propose the Hierarchical Feature Transfer (HFT) mechanism, which restores spatial details by combining high-level semantic information with low-level features. Finally, we adopt a hybrid loss function to improve overall segmentation accuracy and the detection of lesion areas.

3.1. Preprocessing of Skin Lesion Images

Skin lesion images may suffer from pixel intensity variations and artifacts such as hair, which can degrade segmentation accuracy. To address these issues, we designed a standardized image preprocessing pipeline.

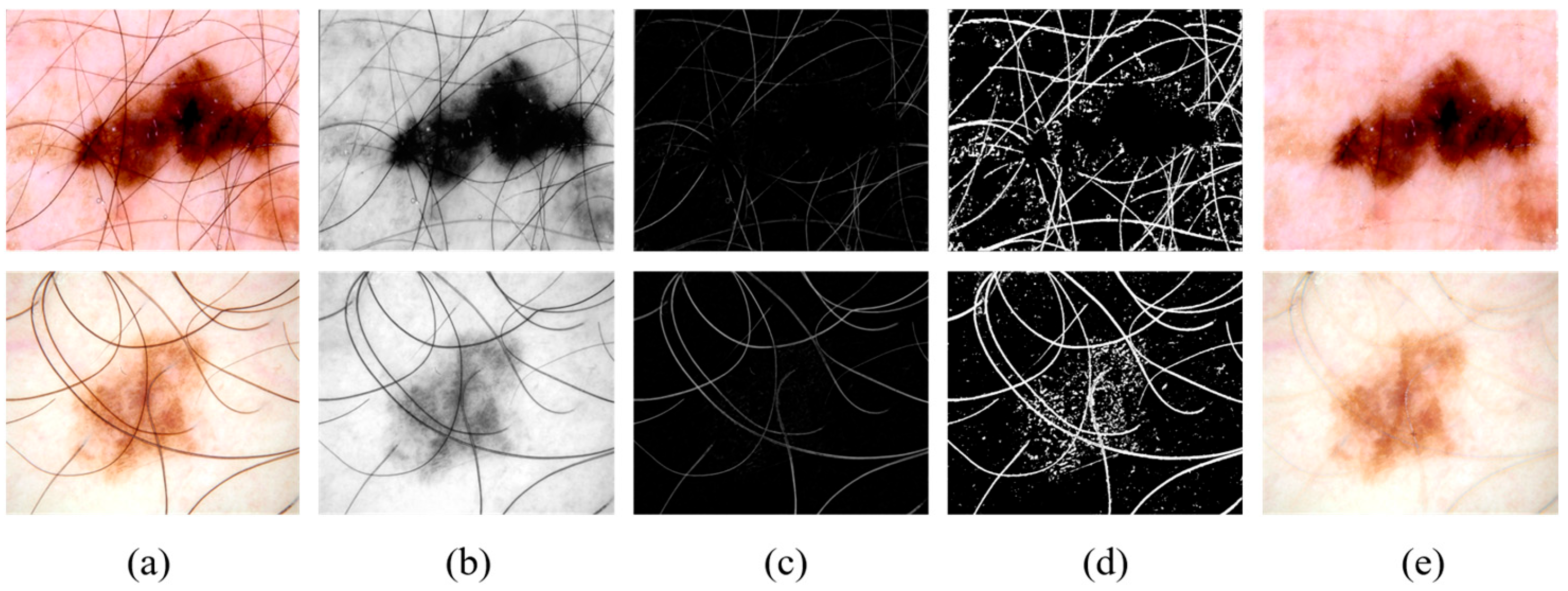

As shown in Figure 2a,b, each image is first converted to grayscale. Discarding color information simplifies the image and focuses the subsequent steps on texture and structure.

As shown in Figure 2b,c, a morphological black-hat transform (the morphological closing of the image minus the image itself) is applied to highlight hair, i.e., thin dark structures that can interfere with precise lesion segmentation.

As shown in Figure 2c,d, a thresholding operation is applied to the black-hat image, producing a binary mask of the hair regions.

As shown in Figure 2d,e, the INPAINT_TELEA algorithm (the fast-marching inpainting method provided by OpenCV) fills in the masked regions, restoring the structure and texture of the underlying skin.
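The black-hat and thresholding steps can be illustrated with a minimal pure-Python sketch on a toy grayscale grid (lists of ints). The kernel size `k` and threshold `thresh` are hypothetical values, since the section does not report them, and the inpainting step is omitted. In OpenCV, the same steps would typically be written with `cv2.morphologyEx(gray, cv2.MORPH_BLACKHAT, kernel)`, `cv2.threshold(..., cv2.THRESH_BINARY)`, and `cv2.inpaint(image, mask, radius, cv2.INPAINT_TELEA)`.

```python
from typing import Callable, List, Sequence

Image = List[List[int]]


def _morph(img: Image, k: int, op: Callable[[Sequence[int]], int]) -> Image:
    """Flat k x k morphology: op=max gives dilation, op=min gives erosion.

    Only neighbours inside the image are considered at the borders.
    """
    h, w, r = len(img), len(img[0]), k // 2
    return [[op([img[yy][xx]
                 for yy in range(max(0, y - r), min(h, y + r + 1))
                 for xx in range(max(0, x - r), min(w, x + r + 1))])
             for x in range(w)]
            for y in range(h)]


def black_hat(gray: Image, k: int = 3) -> Image:
    """Black-hat = closing(gray) - gray, where closing = erosion(dilation(gray)).

    Dark structures narrower than the k x k element (e.g. hairs) are filled in by
    the closing, so they give large positive values; wide dark regions stay near 0.
    """
    closed = _morph(_morph(gray, k, max), k, min)
    return [[c - g for c, g in zip(c_row, g_row)] for c_row, g_row in zip(closed, gray)]


def hair_mask(gray: Image, k: int = 3, thresh: int = 10) -> Image:
    """Binary hair mask: 255 where the black-hat response exceeds thresh, else 0."""
    return [[255 if v > thresh else 0 for v in row] for row in black_hat(gray, k)]


if __name__ == "__main__":
    # Bright skin (200) crossed by a one-pixel-wide dark "hair" (50) in column 2.
    img = [[200, 200, 50, 200, 200] for _ in range(5)]
    print(hair_mask(img)[0])  # [0, 0, 255, 0, 0]
```

On full-resolution dermoscopy images the structuring element has to be wider than the hairs, so realistic kernel sizes are larger than in this toy example.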

To further enhance robustness, we also apply data augmentation: random rotations bounded to ±15°, random horizontal and vertical flips (each with probability 0.5), and mild color jitter of brightness, contrast, saturation, and hue, each with a magnitude of 0.03.
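For segmentation, geometric augmentations must be applied identically to the image and its mask, while color jitter applies to the image only. A minimal sketch of this, assuming one parameter set is sampled per image/mask pair, is shown below; it also assumes that the magnitude 0.03 is interpreted as in torchvision's `ColorJitter` (multiplicative factors in [0.97, 1.03] for brightness, contrast, and saturation; a hue shift in [-0.03, 0.03]). The section does not say which library was used, and the names `AugParams`, `sample_aug_params`, and `apply_flips` are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class AugParams:
    angle_deg: float   # rotation angle in degrees
    hflip: bool
    vflip: bool
    brightness: float  # multiplicative factor
    contrast: float    # multiplicative factor
    saturation: float  # multiplicative factor
    hue_shift: float   # shift as a fraction of the hue circle


def sample_aug_params(rng: random.Random, max_angle: float = 15.0,
                      p_flip: float = 0.5, jitter: float = 0.03) -> AugParams:
    """Draw ONE set of parameters, shared by an image and its mask."""
    return AugParams(
        angle_deg=rng.uniform(-max_angle, max_angle),
        hflip=rng.random() < p_flip,
        vflip=rng.random() < p_flip,
        brightness=rng.uniform(1 - jitter, 1 + jitter),
        contrast=rng.uniform(1 - jitter, 1 + jitter),
        saturation=rng.uniform(1 - jitter, 1 + jitter),
        hue_shift=rng.uniform(-jitter, jitter),
    )


def apply_flips(grid: List[List[int]], params: AugParams) -> List[List[int]]:
    """Apply the sampled flips to a 2-D grid; call it on BOTH image and mask."""
    out = [row[::-1] if params.hflip else row[:] for row in grid]
    return out[::-1] if params.vflip else out


if __name__ == "__main__":
    p = sample_aug_params(random.Random(0))
    image, mask = [[1, 2], [3, 4]], [[0, 1], [0, 1]]
    print(p)
    print(apply_flips(image, p), apply_flips(mask, p))
```

Sampling the parameters once and reusing them keeps the lesion mask aligned with the transformed image; drawing them independently for image and mask would silently corrupt the labels.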

3.2. Dual-Encoder Feature Extraction

ConvNeXt is a modernized, purely convolutional network that revisits the design of traditional convolutional neural networks (CNNs). Its core computation module, the ConvNeXt block, extracts features with a 7 × 7 depthwise convolution and then applies normalization and channel expansion, with a residual connection, to strengthen feature learning. The computation is given by Equation (1):

$$F_{\mathrm{out}} = F_{\mathrm{in}} + W_2\,\mathrm{GELU}\!\left(W_1\,\mathrm{LN}\!\left(\mathrm{DWConv}_{7\times7}(F_{\mathrm{in}})\right)\right) \tag{1}$$

where $F_{\mathrm{in}}$ and $F_{\mathrm{out}}$ represent the input and output feature maps, $\mathrm{DWConv}_{7\times7}$ represents the 7 × 7 depthwise convolution, $\mathrm{LN}$ represents LayerNorm, $W_1$ and $W_2$ are the weight matrices of the two 1 × 1 pointwise convolutions, and $\mathrm{GELU}$ is the GELU activation function. In our model, the input image $X$ is passed through the ConvNeXt encoder, producing four feature maps at different scales: $C_1$, $C_2$, $C_3$, and $C_4$ (at 1/4, 1/8, 1/16, and 1/32 of the input resolution, respectively). These multi-scale features provide information ranging from low-level textures to high-level semantics, which facilitates subsequent feature fusion and lesion region segmentation.

The Deformable Transformer introduces a deformable attention mechanism with a sparse sampling strategy: instead of attending to every position, each query adaptively selects a small set of sampling locations and computes attention only at these key points of the feature map. To fully leverage multi-scale information, we use ResNet-34 as the backbone of the Transformer branch and extract features at five different scales. To better capture low-level boundary information, we also retain the feature map produced before the max-pooling operation, which preserves more spatial detail. These five feature maps are denoted as $R_1$, $R_2$, $R_3$, $R_4$, and $R_5$. Because they have different numbers of channels, we apply 1 × 1 convolutions to map all of them to a common channel count (256 in Algorithm 1) before they enter the Deformable Transformer, as shown in Equation (2):

$$A_j = \mathrm{Conv}^{(j)}_{1\times1}(R_j),\quad j = 1, \dots, 5 \tag{2}$$

where $A_j$ denotes the channel-aligned feature map at scale $j$.
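The resolutions produced by the two encoders at a 224 × 224 input can be checked with the standard convolution output-size formula (the one documented for `torch.nn.Conv2d` and `MaxPool2d`). The plain-Python sketch below models only the downsampling layers, using the published layer settings of ConvNeXt-Tiny and ResNet-34; it shows that each ConvNeXt map has the same spatial size as the ResNet-34 map one level deeper, which is what the SE fusion in Section 3.3 relies on when it concatenates them.

```python
from typing import List, Tuple


def conv_out(size: int, kernel: int, stride: int, padding: int, dilation: int = 1) -> int:
    """Output size of a Conv2d / MaxPool2d along one axis (floor mode)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def convnext_tiny_shapes(size: int = 224) -> List[Tuple[int, int]]:
    """(channels, spatial size) of the four ConvNeXt-Tiny stage outputs."""
    dims = [96, 192, 384, 768]
    s = conv_out(size, kernel=4, stride=4, padding=0)    # 4x4 "patchify" stem
    shapes = [(dims[0], s)]
    for c in dims[1:]:
        s = conv_out(s, kernel=2, stride=2, padding=0)   # 2x2 stride-2 downsampling layer
        shapes.append((c, s))
    return shapes


def resnet34_shapes(size: int = 224) -> List[Tuple[int, int]]:
    """(channels, spatial size) of five ResNet-34 features, incl. the pre-max-pool map."""
    s1 = conv_out(size, kernel=7, stride=2, padding=3)   # conv1 output, kept before max-pool
    s = conv_out(s1, kernel=3, stride=2, padding=1)      # max-pool; layer1 keeps this size
    shapes = [(64, s1), (64, s)]
    for c in (128, 256, 512):                            # layer2..layer4 each halve the size
        s = conv_out(s, kernel=3, stride=2, padding=1)
        shapes.append((c, s))
    return shapes


if __name__ == "__main__":
    cnx, res = convnext_tiny_shapes(), resnet34_shapes()
    print(cnx)  # [(96, 56), (192, 28), (384, 14), (768, 7)]
    print(res)  # [(64, 112), (64, 56), (128, 28), (256, 14), (512, 7)]
    # Each ConvNeXt map matches the ResNet map one level deeper in spatial size.
    print(all(c[1] == r[1] for c, r in zip(cnx, res[1:])))  # True
```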

We feed the aligned features into a 12-layer Deformable Transformer for multi-scale feature fusion, obtaining five enhanced representations $T_1$, $T_2$, $T_3$, $T_4$, and $T_5$.
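The sparse sampling idea behind deformable attention can be made concrete with a minimal single-head, single-scale sketch in plain Python. It is a simplification, not the module used in MSDTCN-Net: the sampling offsets and attention logits are passed in directly, whereas in the actual mechanism they are predicted from each query by linear layers, and sampling spans several heads and all feature scales. Coordinates are in pixels of a one-channel toy map.

```python
import math
from typing import List, Sequence, Tuple

FeatureMap = List[List[float]]  # H x W grid of scalar features (one channel)


def bilinear_sample(feat: FeatureMap, x: float, y: float) -> float:
    """Bilinearly interpolate feat at continuous pixel coords (x, y).

    Neighbours that fall outside the map contribute zero (zero padding).
    """
    h, w = len(feat), len(feat[0])
    x0, y0 = math.floor(x), math.floor(y)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(x - xx)) * (1 - abs(y - yy))
                value += weight * feat[yy][xx]
    return value


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def deformable_attention(feat: FeatureMap, ref: Tuple[float, float],
                         offsets: Sequence[Tuple[float, float]],
                         logits: Sequence[float]) -> float:
    """One query attends to K sampled points: sum_k A_k * feat(ref + offset_k)."""
    weights = softmax(logits)  # attention weights over the K points sum to 1
    rx, ry = ref
    return sum(a * bilinear_sample(feat, rx + dx, ry + dy)
               for a, (dx, dy) in zip(weights, offsets))


if __name__ == "__main__":
    grid = [[3.0 * y + x for x in range(4)] for y in range(4)]  # value = 3y + x
    out = deformable_attention(grid, ref=(1.0, 1.0),
                               offsets=[(0.5, 0.0), (0.0, 1.0)], logits=[0.0, 0.0])
    print(out)  # 5.75
```

Only K locations per query are read, so the cost scales with K rather than with the number of pixels, which is what makes attention over the high-resolution scales affordable.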

3.3. Squeeze-and-Excitation Mechanism and Multi-Scale Receptive Field

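The SE mechanism is applied to the concatenated feature pairs (C1, T2), (C2, T3), (C3, T4), and (C4, T5) to exploit the complementarity between local and global information. We first compute a global descriptor for each channel using global average pooling. These channel descriptors are then passed through two fully connected layers with reduction ratio r = 16 to obtain channel attention weights, which are applied to the input features by channel-wise multiplication. The final fused feature representation is given by Equation (3):

$$Z_i = [\,C_i \,\|\, T_{i+1}\,],\qquad w_i = \sigma\big(\mathrm{FC}_2(\delta(\mathrm{FC}_1(\mathrm{GAP}(Z_i))))\big),\qquad F_i = \mathrm{Conv}_{1\times1}(w_i \otimes Z_i),\quad i = 1, \dots, 4 \tag{3}$$

where $[\cdot \,\|\, \cdot]$ denotes concatenation along the channel dimension, $w_i$ represents the channel attention weights computed by SE, $\mathrm{GAP}$ is global average pooling, $\delta$ and $\sigma$ are the ReLU and sigmoid functions of the SE block, $\otimes$ denotes channel-wise multiplication, and $\mathrm{Conv}_{1\times1}$ projects the result back to the channel width of $C_i$ (Algorithm 1).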
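The squeeze, excitation, and scale steps of Equation (3) can be traced with a minimal pure-Python sketch on a tiny C × H × W tensor stored as nested lists. The weights below are toy, hypothetical values chosen for illustration (C = 2 with r = 2); in the network the block operates on the concatenated pair with r = 16, and the trailing 1 × 1 convolution is not shown.

```python
import math
from typing import List, Tuple

Tensor3D = List[List[List[float]]]  # C x H x W
Matrix = List[List[float]]


def se_reweight(x: Tensor3D, fc1_w: Matrix, fc1_b: List[float],
                fc2_w: Matrix, fc2_b: List[float]) -> Tuple[Tensor3D, List[float]]:
    """Squeeze-and-Excitation: GAP -> FC (C -> C/r) -> ReLU -> FC (C/r -> C) -> sigmoid -> scale."""
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation, first FC layer + ReLU (reduces C to C/r).
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z)) + b)
              for row, b in zip(fc1_w, fc1_b)]
    # Second FC layer + sigmoid (restores C): per-channel weights in (0, 1).
    s = [1.0 / (1.0 + math.exp(-(sum(w * h for w, h in zip(row, hidden)) + b)))
         for row, b in zip(fc2_w, fc2_b)]
    # Scale: multiply every value of channel c by s[c].
    out = [[[s_c * v for v in row] for row in ch] for s_c, ch in zip(s, x)]
    return out, s


if __name__ == "__main__":
    x = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
    _, s = se_reweight(x, [[1.0, 1.0]], [0.0], [[1.0], [-1.0]], [0.0, 0.0])
    print([round(v, 3) for v in s])  # [0.982, 0.018]
```

Because the weights come from a sigmoid rather than a softmax, each channel is gated independently, so the block can emphasize ConvNeXt and Transformer channels at the same time instead of forcing them to compete.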

To better capture lesions of widely varying scales, we introduce the MSRF module to improve spatial representation. The MSRF module uses a three-branch multi-scale convolutional architecture; its computation path is illustrated in Figure 3.

The base branch applies a 1 × 1 convolution directly to the input features to provide baseline information. The medium receptive field branch first applies a 1 × 1 convolution to the input features, followed by a combination of 1 × 3 and 3 × 1 convolutions and then a 3 × 3 dilated convolution (dilation = 3). The large receptive field branch first applies a 1 × 1 convolution, followed by a combination of 1 × 5 and 5 × 1 convolutions and then a 5 × 5 dilated convolution (dilation = 3) to extract features at a larger scale. Finally, the outputs of all branches are concatenated along the channel dimension and fused by a 3 × 3 convolution, and a residual connection adds the input features to the fused result to enhance the deep features.
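The scale each MSRF branch covers follows from receptive-field arithmetic: a k × k kernel with dilation d spans d(k − 1) + 1 pixels, and a stack of stride-1 convolutions adds the spans minus one. The sketch below assumes that the asymmetric convolutions in each branch are applied sequentially (the text says "a combination of" them without giving the order) and that all layers have stride 1.

```python
from typing import Dict, List, Tuple

Layer = Tuple[int, int, int]  # (kernel_h, kernel_w, dilation), stride 1


def effective_kernel(k: int, dilation: int) -> int:
    """Span of a dilated kernel: dilation * (k - 1) + 1."""
    return dilation * (k - 1) + 1


def receptive_field(layers: List[Layer]) -> Tuple[int, int]:
    """Receptive field (height, width) of a stack of stride-1 convolutions."""
    rf_h = rf_w = 1
    for kh, kw, d in layers:
        rf_h += effective_kernel(kh, d) - 1
        rf_w += effective_kernel(kw, d) - 1
    return rf_h, rf_w


# Branch layouts as described for MSRF (asymmetric convs assumed sequential).
BRANCHES: Dict[str, List[Layer]] = {
    "base": [(1, 1, 1)],
    "medium": [(1, 1, 1), (1, 3, 1), (3, 1, 1), (3, 3, 3)],
    "large": [(1, 1, 1), (1, 5, 1), (5, 1, 1), (5, 5, 3)],
}

if __name__ == "__main__":
    for name, layers in BRANCHES.items():
        print(name, receptive_field(layers))
    # base (1, 1), medium (9, 9), large (17, 17)
```

Under these assumptions the three branches see 1 × 1, 9 × 9, and 17 × 17 neighbourhoods, and the final 3 × 3 fusion convolution widens each by two pixels, so the module mixes roughly three distinct context sizes at a single resolution.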

3.4. Hierarchical Feature Transfer and Decoder

To fully utilize features from different levels, we propose an HFT mechanism that effectively transmits deep semantic information to the shallow layers. The first layer of the decoder directly receives the deepest fused feature $F_4$ as input, ensuring that high-level semantic information is fully preserved during decoding. The intermediate layers of the decoder sequentially receive $F_3$, $F_2$, and $F_1$, each of which first passes through an MSRF module to enhance its multi-scale adaptability. The decoder inputs can be written as Equation (4):

$$D_4 = F_4,\qquad D_i = \mathrm{Up}_{i+1}(D_{i+1}) \oplus \mathrm{MSRF}(F_i),\quad i = 3, 2, 1 \tag{4}$$

where $\mathrm{Up}_{i+1}$ upsamples $D_{i+1}$ to the resolution and channel width of $F_i$, and $\oplus$ denotes element-wise addition.

In the shallow layers of the decoder, we additionally introduce the low-level Transformer-branch features $T_1$ (refined by MSRF, as in Algorithm 1). These features contain rich boundary and texture information, which helps to complement the fine structure of the lesion regions.

To further illustrate the complete data flow and the interaction among modules, the overall computation process of MSDTCN-Net is summarized in Algorithm 1.

| Algorithm 1 The PyTorch-Style Pseudo-Code of MSDTCN-Net | | |
|---|---|---|
| Input | X with shape [B, 3, 224, 224] | |
| Output | Y with shape [B, n_classes, 224, 224] | |
| Operators | CNX = ConvNeXt-Tiny encoder; RES = ResNet-34 encoder; PE = positional embedding; DefT = Deformable Transformer encoder | |
| | Out_k = Conv2d(256 → c_k, kernel = 3, padding = 1), with (c_1, c_2, c_3, c_4, c_5) = (48, 96, 192, 384, 768) | |
| | Head = ConvTranspose2d(48 → 32, k = 4, s = 2, p = 1) → Conv2d(32 → 32, 3, p = 1) → Conv2d(32 → n_classes, 3, p = 1) | |
| | ⊕ = element-wise addition; ‖ = channel concatenation | |
| Step | Operation | Output (channels, H × W) |
| 1 | C1..C4 = CNX(X) | (96, 56 × 56), (192, 28 × 28), (384, 14 × 14), (768, 7 × 7) |
| 2 | R1..R5 = RES(X) | (64, 112 × 112), (64, 56 × 56), (128, 28 × 28), (256, 14 × 14), (512, 7 × 7) |
| 3 | A1..A5 = Align1×1(R1)..Align1×1(R5) | |
| 4 | P1..P5 = PE(A1)..PE(A5); M1..M5 = zeros_bool_masks() | |
| 5 | mem, shapes, offsets = DefT([A1..A5], [M1..M5], [P1..P5]) | |
| 6 | O1..O5 = Split(mem) | each Oj: (256, Hj × Wj) |
| 7 | T1..T5 = Out_1(O1)..Out_5(O5) | (48, 112 × 112), (96, 56 × 56), (192, 28 × 28), (384, 14 × 14), (768, 7 × 7) |
| 8 | F4_cat = C4 ‖ T5; F4_se = SE(F4_cat); F4 = Conv1×1(F4_se) | (768, 7 × 7) |
| 9 | F3_cat = C3 ‖ T4; F3_se = SE(F3_cat); F3 = Conv1×1(F3_se); F3m = MSRF(F3) | (384, 14 × 14) |
| 10 | F2_cat = C2 ‖ T3; F2_se = SE(F2_cat); F2 = Conv1×1(F2_se); F2m = MSRF(F2) | (192, 28 × 28) |
| 11 | F1_cat = C1 ‖ T2; F1_se = SE(F1_cat); F1 = Conv1×1(F1_se); F1m = MSRF(F1) | (96, 56 × 56) |
| 12 | F0m = MSRF(T1) | (48, 112 × 112) |
| 13 | D4 = F4; D3 = UP4(D4) ⊕ F3m; D2 = UP3(D3) ⊕ F2m; D1 = UP2(D2) ⊕ F1m; D0 = UP1(D1) ⊕ F0m | |
| 14 | Y = Head(D0) | (n_classes, 224 × 224) |
| 15 | return Y | |
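The decoder path of Algorithm 1 can be sanity-checked with shape arithmetic alone. The sketch below assumes, hypothetically, that each UP block doubles the spatial size and halves the channel count (the UP operators are not specified in the text, but this is what the shapes require for ⊕), and applies the output-size formula documented for `torch.nn.ConvTranspose2d` to the first layer of the head.

```python
from typing import List, Tuple


def conv_transpose2d_out(size: int, kernel: int, stride: int, padding: int,
                         output_padding: int = 0, dilation: int = 1) -> int:
    """Output size of a ConvTranspose2d along one spatial axis."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1


def decoder_trace(input_size: int = 224) -> Tuple[List[Tuple[str, int, int]], int]:
    # Features added at each decoder stage, (channels, spatial size), per Algorithm 1.
    skips = {"D3": (384, input_size // 16), "D2": (192, input_size // 8),
             "D1": (96, input_size // 4), "D0": (48, input_size // 2)}
    channels, size = 768, input_size // 32            # D4 = F4
    trace = [("D4", channels, size)]
    for name in ("D3", "D2", "D1", "D0"):
        # Hypothetical UP block: 2x spatial upsampling and channel halving.
        channels, size = channels // 2, size * 2
        if (channels, size) != skips[name]:
            raise ValueError(f"{name}: shapes must match for element-wise addition")
        trace.append((name, channels, size))
    # Head: ConvTranspose2d(48 -> 32, k=4, s=2, p=1); the 3x3 convs (p=1) keep the size.
    out_size = conv_transpose2d_out(size, kernel=4, stride=2, padding=1)
    return trace, out_size


if __name__ == "__main__":
    trace, out_size = decoder_trace()
    for stage in trace:
        print(stage)
    print("head output:", out_size)  # head output: 224
```

With kernel 4, stride 2, and padding 1 the transposed convolution exactly doubles the size (112 → 224), so the prediction Y matches the 224 × 224 input without any cropping.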

3.5. Loss Function

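We adopt a hybrid loss function that combines binary cross-entropy (BCE) loss and Dice loss to improve lesion recognition while keeping pixel-wise classification stable. The binary cross-entropy loss $\mathcal{L}_{BCE}$ is defined in Equation (5):

$$\mathcal{L}_{BCE} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right] \tag{5}$$

where $N$ is the total number of pixels in the image, $y_i \in \{0, 1\}$ is the true class of pixel $i$, and $p_i$ is the predicted lesion probability for pixel $i$. The Dice loss $\mathcal{L}_{Dice}$ is defined in Equation (6):

$$\mathcal{L}_{Dice} = 1 - \frac{2\sum_{i=1}^{N} y_i p_i + \epsilon}{\sum_{i=1}^{N} y_i + \sum_{i=1}^{N} p_i + \epsilon} \tag{6}$$

where $y_i$ and $p_i$ represent the true label and the model’s predicted probability, respectively, and $\epsilon$ is a small smoothing term. Combining the advantages of $\mathcal{L}_{BCE}$ and $\mathcal{L}_{Dice}$, we adopt a weighted loss function to balance overall classification performance and lesion-region recognition [28], as shown in Equation (7):

$$\mathcal{L} = \lambda_1 \mathcal{L}_{BCE} + \lambda_2 \mathcal{L}_{Dice} \tag{7}$$

where $\lambda_1$ and $\lambda_2$ are the weighting coefficients of the two terms.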
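Equations (5)–(7) can be sketched in plain Python over flattened per-pixel labels and probabilities. The clipping constant `eps`, the smoothing value `smooth = 1.0`, and the default weights `lambda_bce = lambda_dice = 1.0` are placeholder assumptions, since the section does not report the values used.

```python
import math
from typing import Sequence


def bce_loss(y: Sequence[float], p: Sequence[float], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy over N pixels (Equation (5)); p is clipped for log-safety."""
    total = 0.0
    for yi, pi in zip(y, p):
        pi = min(max(pi, eps), 1 - eps)
        total += yi * math.log(pi) + (1 - yi) * math.log(1 - pi)
    return -total / len(y)


def dice_loss(y: Sequence[float], p: Sequence[float], smooth: float = 1.0) -> float:
    """Soft Dice loss (Equation (6)); `smooth` plays the role of epsilon."""
    inter = sum(yi * pi for yi, pi in zip(y, p))
    return 1 - (2 * inter + smooth) / (sum(y) + sum(p) + smooth)


def hybrid_loss(y: Sequence[float], p: Sequence[float],
                lambda_bce: float = 1.0, lambda_dice: float = 1.0) -> float:
    """Weighted sum as in Equation (7); the default weights are placeholders."""
    return lambda_bce * bce_loss(y, p) + lambda_dice * dice_loss(y, p)


if __name__ == "__main__":
    y = [1.0, 1.0, 0.0, 0.0]
    print(hybrid_loss(y, [0.9, 0.6, 0.2, 0.1]))
```

The two terms are complementary: BCE gives every pixel its own gradient, while the Dice term is computed over the whole mask and therefore does not let the (usually larger) background dominate small lesions.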

4. Experiments

4.1. Datasets

To evaluate the effectiveness of our method, we conducted experiments on four publicly available datasets that are widely used for benchmarking and evaluating skin lesion analysis models. The first three, ISIC 2016, ISIC 2017, and ISIC 2018, were released by the International Skin Imaging Collaboration (ISIC). The fourth, PH2, was collected at the Dermatology Department of Pedro Hispano Hospital in Matosinhos, Portugal. The datasets were divided as follows:

- (1) ISIC 2016: This dataset contains 1279 RGB skin lesion images with corresponding annotations. We randomly split it into 788 training, 112 validation, and 379 test images.

- (2) ISIC 2017: This dataset consists of 2750 annotated RGB images, divided into 2000 training, 150 validation, and 600 test images.

- (3) ISIC 2018: This dataset includes 2594 fully annotated RGB images. We randomly split it into 1815 training, 259 validation, and 520 test images.

- (4) PH2: This dataset contains 200 finely annotated RGB dermoscopic images. We randomly split it into 140 training, 20 validation, and 40 test images.

It should be noted that ISIC 2016, ISIC 2017, and ISIC 2018 are all derived from the ISIC archive and may contain overlapping or visually similar lesions. In this study, we follow the common practice in existing work and do not exclude any images as duplicates.

4.2. Implementation Details

Our experiments were conducted with Python 3.8 and PyTorch 2.0.0 on an NVIDIA GeForce RTX 4090 GPU with 24 GB of VRAM. All training, validation, and test images were resized to 224 × 224 to ensure consistent input dimensions. We used ConvNeXt-Tiny and ResNet-34 as pre-trained backbones and the Adam optimizer [34] to improve convergence speed and optimization stability. The learning rate was set to 0.001 to ensure stable weight updates. The momentum parameters betas = (0.5, 0.999) control the decay rates of the first- and second-moment estimates. Compared with the conventional β1 = 0.9, β1 = 0.5 shortens the memory of the first-moment estimate (roughly 1/(1 − β1) = 2 steps instead of 10), making the optimizer more responsive to the current gradient, while β2 = 0.999 smooths the second-moment estimate and suppresses gradient fluctuations; these settings were chosen to keep training stable. The weight decay was set to 0 to avoid the influence of additional L2 regularization, and the batch size was set to 4. During training, we evaluated the model on the validation set and saved a new checkpoint whenever the validation Dice score improved, so that the best-performing model was used for testing.
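The effect of β1 can be checked numerically with a short pure-Python sketch of Adam's bias-corrected first-moment estimate (only the moment estimate is modeled, not the full parameter update). The gradient stream, ten positive gradients followed by a sign flip, is a hypothetical example chosen to expose how quickly each setting reacts.

```python
from typing import List


def first_moment_estimates(grads: List[float], beta1: float) -> List[float]:
    """Bias-corrected first-moment estimates m_hat_t of Adam for a scalar gradient stream."""
    m, out = 0.0, []
    for t, g in enumerate(grads, start=1):
        m = beta1 * m + (1 - beta1) * g     # exponential moving average of gradients
        out.append(m / (1 - beta1 ** t))    # bias correction for the zero initialization
    return out


def effective_window(beta: float) -> float:
    """Rough number of recent steps an EMA with decay beta averages over: 1 / (1 - beta)."""
    return 1.0 / (1.0 - beta)


if __name__ == "__main__":
    grads = [1.0] * 10 + [-1.0] * 2          # gradient sign flips after step 10
    for beta1 in (0.5, 0.9):
        m_hat = first_moment_estimates(grads, beta1)[-1]
        print(beta1, round(effective_window(beta1), 1), round(m_hat, 3))
```

After two flipped gradients the β1 = 0.5 estimate has already changed sign, while the β1 = 0.9 estimate still points in the old direction, which is the responsiveness described above.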

4.3. Evaluation Metrics

We employed five metrics commonly used in skin lesion segmentation to evaluate our method: Accuracy (ACC), Intersection over Union (IoU), Dice coefficient (Dice), Sensitivity (SE), and Specificity (SP), defined in Equations (8)–(12):

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN} \tag{8}$$

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN} \tag{9}$$

$$\mathrm{Dice} = \frac{2TP}{2TP + FP + FN} \tag{10}$$

$$\mathrm{SE} = \frac{TP}{TP + FN} \tag{11}$$

$$\mathrm{SP} = \frac{TN}{TN + FP} \tag{12}$$

where TP and TN represent the correctly identified skin lesion regions and background regions, respectively, FP refers to background regions incorrectly identified as skin lesions, and FN represents skin lesion regions incorrectly identified as background.
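Equations (8)–(12) translate directly into code once the confusion counts are taken over flattened binary masks. This pure-Python sketch is illustrative: returning 0.0 for a zero denominator is a convention chosen here, and the section does not state whether scores are averaged per image or pooled over the test set.

```python
from typing import Dict, Sequence


def _safe_div(num: float, den: float) -> float:
    # Convention chosen here: report 0.0 when the denominator is zero (e.g. an empty mask).
    return num / den if den else 0.0


def segmentation_metrics(pred: Sequence[int], gt: Sequence[int]) -> Dict[str, float]:
    """ACC, IoU, Dice, SE, SP from flattened binary masks (1 = lesion, 0 = background)."""
    tp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 1)
    tn = sum(1 for p, g in zip(pred, gt) if p == 0 and g == 0)
    fp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 0)
    fn = sum(1 for p, g in zip(pred, gt) if p == 0 and g == 1)
    return {
        "ACC": _safe_div(tp + tn, tp + tn + fp + fn),
        "IoU": _safe_div(tp, tp + fp + fn),
        "Dice": _safe_div(2 * tp, 2 * tp + fp + fn),
        "SE": _safe_div(tp, tp + fn),
        "SP": _safe_div(tn, tn + fp),
    }


if __name__ == "__main__":
    print(segmentation_metrics(pred=[1, 1, 0, 0, 1], gt=[1, 0, 0, 1, 1]))
```

For a single mask, Dice and IoU are linked by Dice = 2·IoU / (1 + IoU), so the two always rank predictions on one image the same way; they can diverge only after averaging over many images.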

4.4. Ablation Studies

To evaluate the contribution of each component of the proposed architecture to skin lesion segmentation, we designed and conducted eight ablation experiments on the ISIC 2017 dataset, using the same computational environment throughout to ensure a fair comparison. The results are summarized in Table 1. We used CE (ConvNeXt Encoder), a U-Net-like U-shaped architecture with ConvNeXt as the encoder, as the baseline. We then progressively added DE (Dual Encoder), SE (Squeeze-and-Excitation), HFT (Hierarchical Feature Transfer), and MSRF (Multi-Scale Receptive Field) to construct the different experimental setups.

Compared to the CE method, the DE method achieved improvements of 1.44% and 1.01% in IoU and Dice, respectively. Compared to the CE + MSRF + HFT method, the IoU and Dice of the DE + MSRF + HFT method are improved by 3.03% and 2.44%, respectively.

Compared to the DE method, the DE + SE method showed improvements of 0.62% and 0.68% in IoU and Dice, respectively. Compared to the DE + MSRF + HFT method, the DE + SE + MSRF + HFT method achieved improvements of 1.07% and 0.63% in IoU and Dice, respectively.

Compared to the DE method, the DE + HFT method showed improvements of 3.83% and 2.70% in IoU and Dice, respectively. Compared to the DE + SE method, the DE + SE + HFT method improved IoU and Dice by 3.85% and 2.52%, respectively.

Compared to the DE + HFT method, the DE + MSRF + HFT method showed improvements of 0.88% and 0.72% in IoU and Dice, respectively. Compared to the DE + SE + HFT method, the DE + SE + MSRF + HFT method improved IoU and Dice by 1.31% and 0.85%, respectively.

4.5. Results on the ISIC 2016 Dataset

On the ISIC 2016 skin lesion segmentation dataset, we compared our method with 10 state-of-the-art segmentation methods.

Table 2 summarizes the experimental results of each model in terms of ACC, IoU, Dice, SE, and SP. Our model achieved the highest scores in the three core metrics: ACC, IoU, and Dice, reaching 97.27%, 89.23%, and 94.02%, respectively. Notably, ACC improved by 0.63% over the second-best DEU-Net, IoU improved by 1.70% over the second-best ULFAC-Net, and Dice improved by 1.09% over ULFAC-Net, demonstrating the superiority of our method in terms of overall segmentation accuracy and region matching.

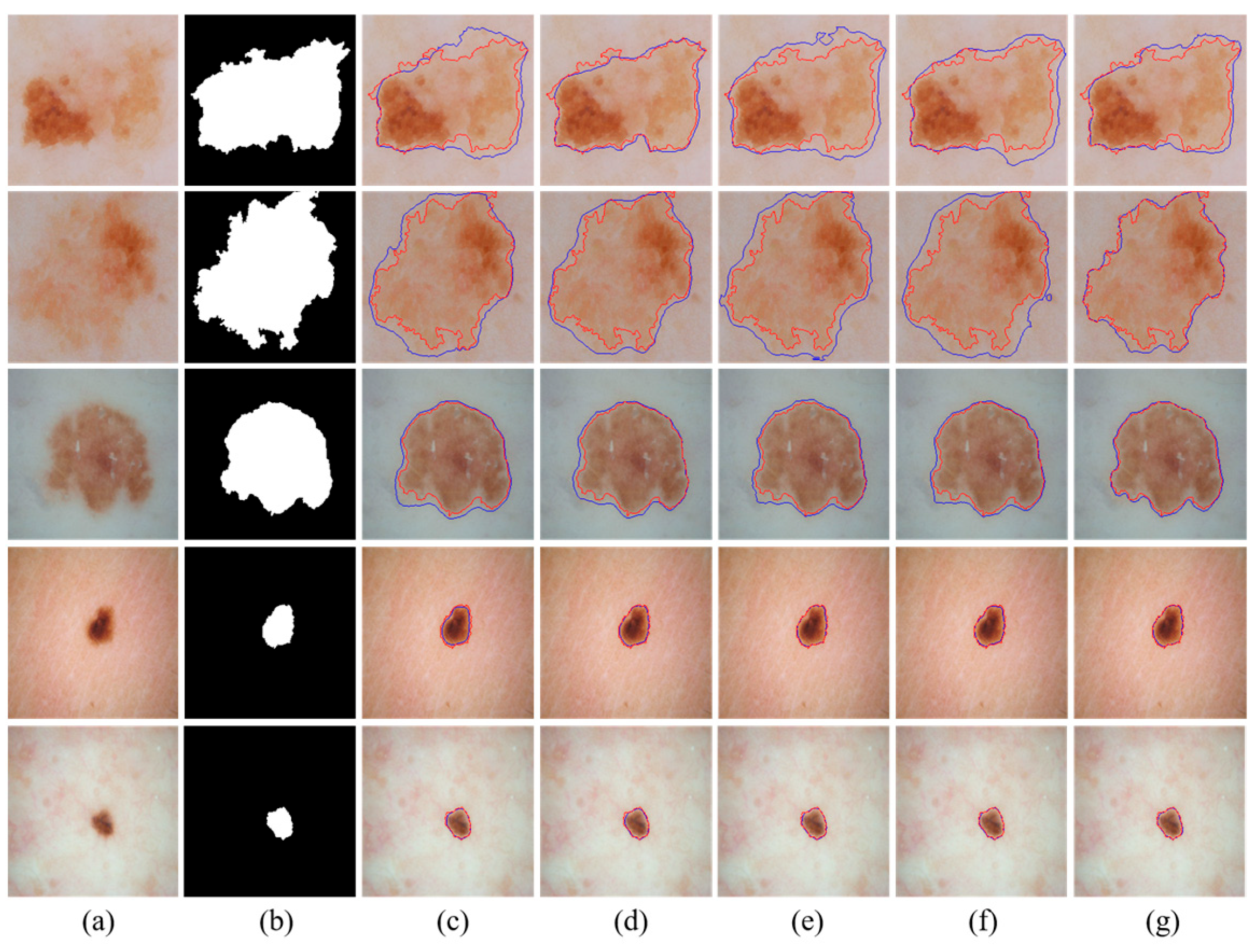

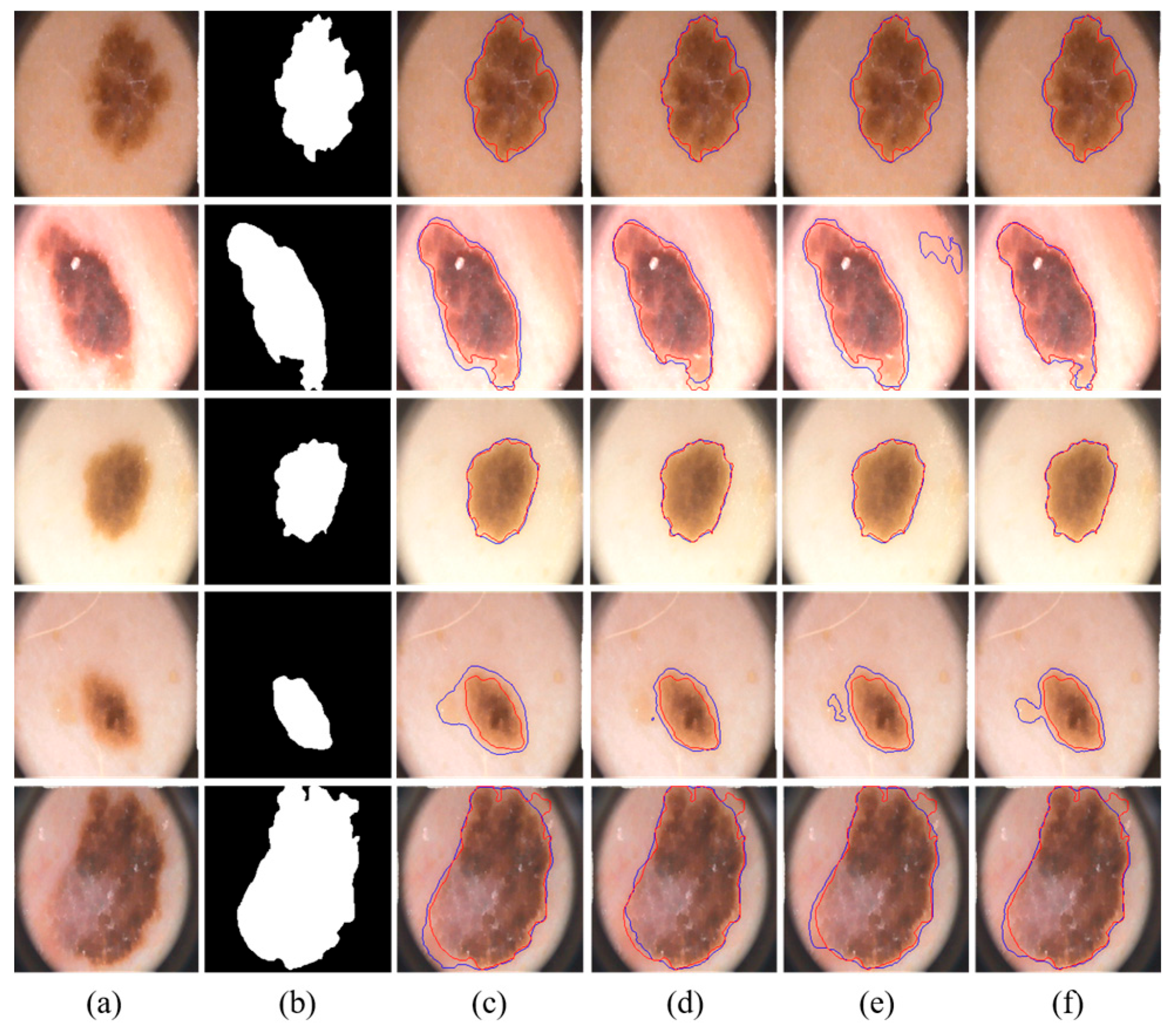

We visually compared the segmentation results of our proposed MSDTCN-Net with those of four other methods, as shown in Figure 4. The results demonstrate that MSDTCN-Net outperforms the other methods in boundary contour, segmentation accuracy, and region matching. Even when the lesion has faint color and low contrast, MSDTCN-Net still segments the lesion region well.

It should be noted that the U-Net results reported in Table 2, Table 3, and Table 4 come from our retrained model, whereas the quantitative results of the other methods in these tables are taken directly from their original papers. In addition, all visualization results in Figure 4, Figure 5, and Figure 6 are generated from our retrained models.

4.6. Results on the ISIC 2018 Dataset

On the ISIC 2018 skin lesion segmentation dataset, we compared our method with 10 state-of-the-art segmentation methods.

Table 3 summarizes the experimental results of each model on the ISIC 2018 skin lesion segmentation task. Our method achieved the highest scores on the two core metrics, IoU and Dice, with 85.01% and 90.92%, respectively. Specifically, IoU improved by 0.36% compared to the second-best model, ULFAC-Net, and Dice improved by 0.01%, indicating that our method is competitive in terms of overall segmentation accuracy and region matching.

We visually compared the segmentation results of our proposed MSDTCN-Net with those of four other methods, as shown in Figure 5. The results show that MSDTCN-Net excels in both overall segmentation and local detail recovery: it keeps lesion shapes and boundaries consistent and distinguishes fine details along complex, blurred edges.

4.7. Results on the PH2 Dataset

On the PH2 skin lesion segmentation dataset, we compared our method with 5 state-of-the-art segmentation methods.

Table 4 summarizes the experimental results of each model on the PH2 skin lesion segmentation task. Our model achieved the highest scores in the three core metrics, ACC, IoU, and Dice, reaching 97.24%, 88.54%, and 93.74%, respectively. ACC improved by 0.2% over the second-best U-Net, IoU improved by 1.15% over the second-best SUTrans-Net, and Dice improved by 1.03% over CACDU-Net.

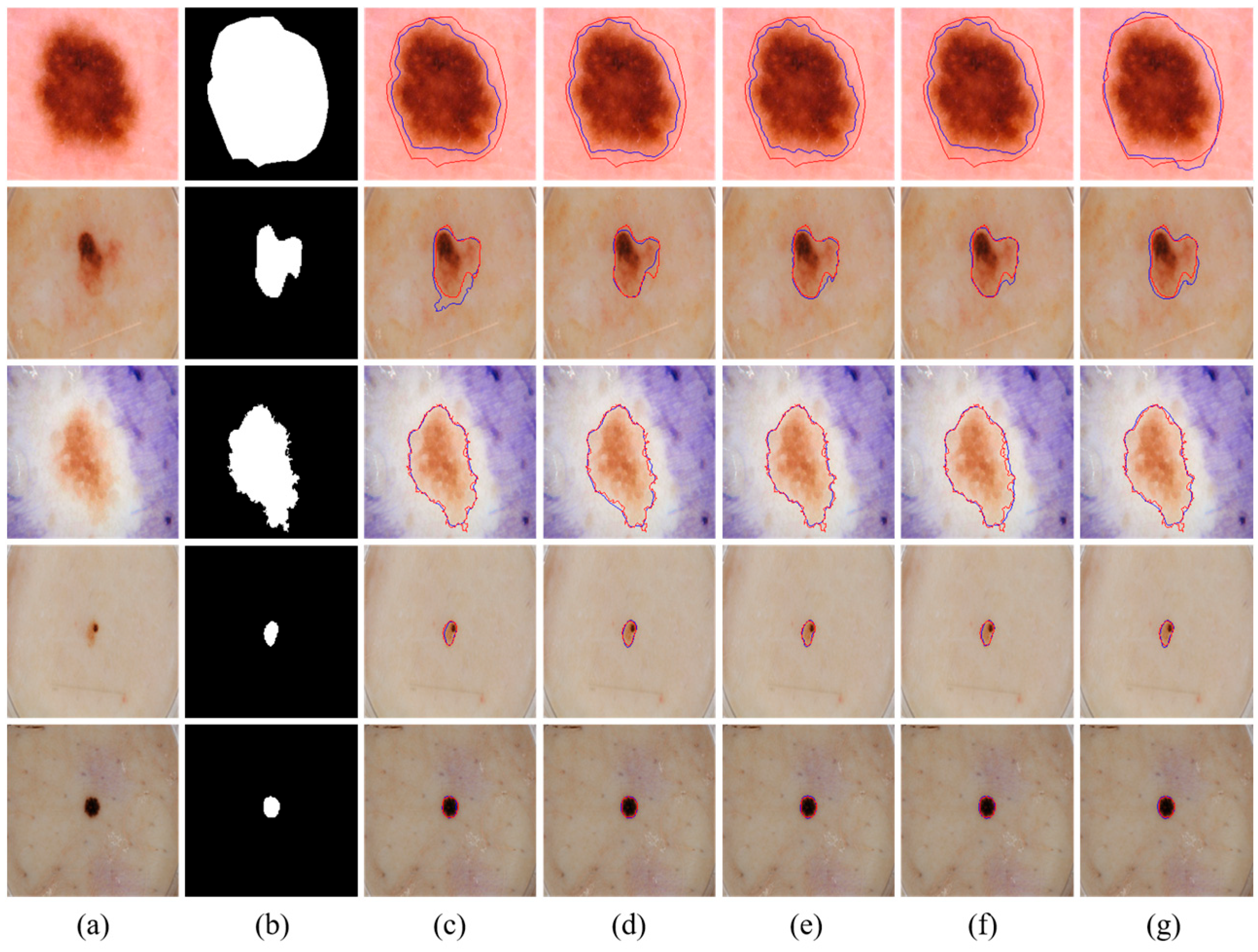

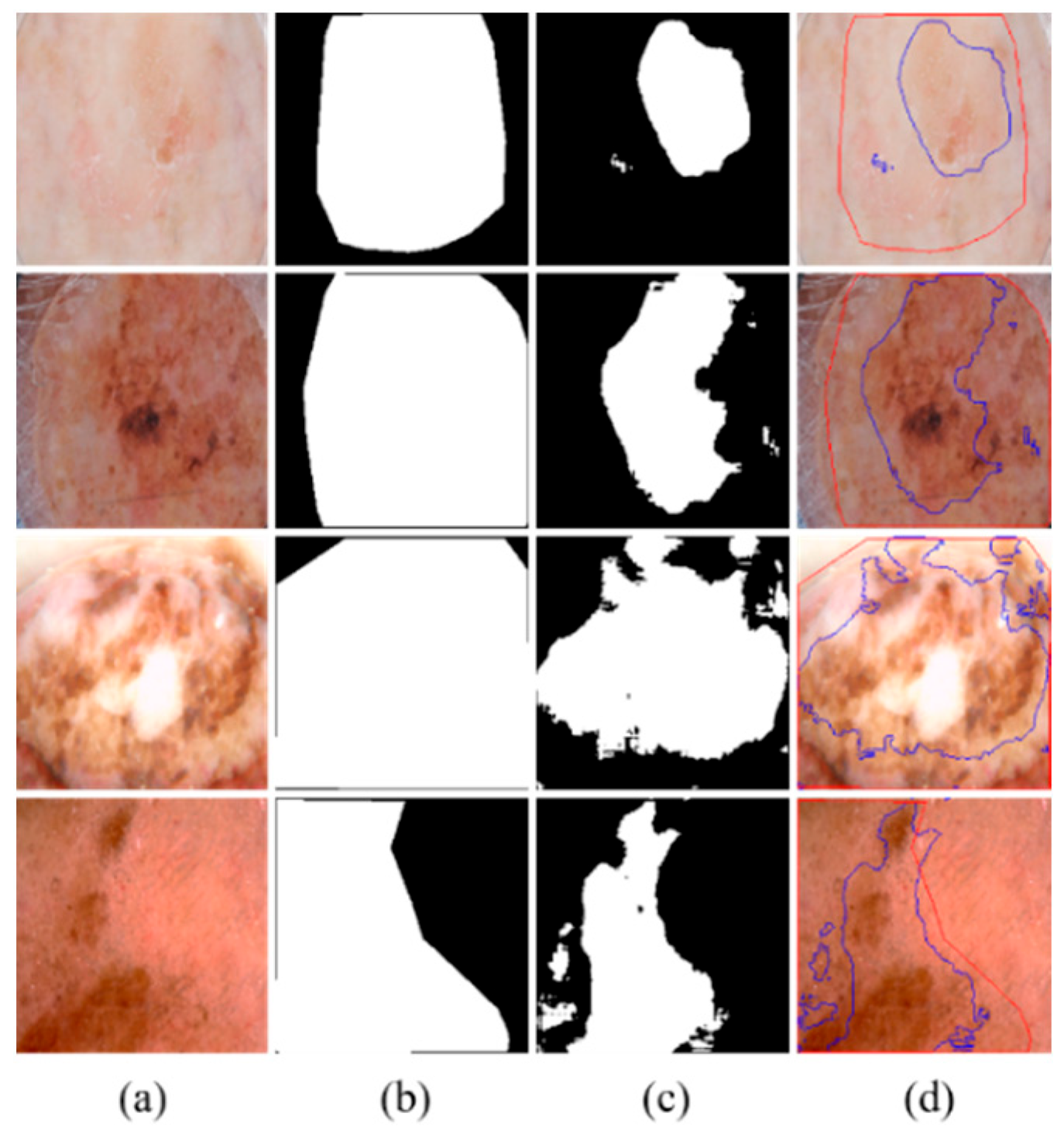

We visually compared the segmentation results of our proposed MSDTCN-Net with those of three other methods, U-Net, DCSAU-Net, and TransAttUNet, as shown in Figure 6. The results show that MSDTCN-Net delineates complex boundaries well while effectively capturing small lesions with faint colors.

5. Discussion and Limitations

The encoder–decoder structure has been widely used in medical image segmentation, achieving good performance on multiple datasets thanks to its hierarchical feature extraction and its ability to progressively recover spatial information. However, existing methods still have limitations in capturing local details and modeling global context, which makes high-precision segmentation of complex lesion regions difficult. To address these issues, we propose MSDTCN-Net, a dual-encoder architecture that combines ConvNeXt and a Deformable Transformer to capture local details and enhance global context modeling, improving lesion area perception. Our HFT mechanism optimizes feature flow during decoding, progressively fusing high-level semantics with low-level spatial details to improve the recovery of complex boundaries. The MSRF module, which combines multi-branch convolutions with dilated convolutions, improves the model’s adaptability to lesion areas of varying sizes. Ablation experiments on the ISIC 2017 dataset validated the contribution of each module, and experiments on the ISIC 2016, ISIC 2018, and PH2 datasets demonstrate strong generalization and robustness across datasets and lesion types.

Although MSDTCN-Net achieves significant performance improvements across multiple datasets, it still has limitations. When there is substantial color variation within the lesion, or when the contrast between the lesion and the background is extremely low, the model may struggle to delineate the lesion accurately, leading to boundary discrepancies (as shown in Figure 7). Although the dual-encoder architecture combines the advantages of CNNs and Transformers, its feature representation capability can still be limited under low contrast or uneven texture, which may hinder accurate segmentation in such challenging scenarios.

Beyond these accuracy-side limitations under challenging imaging conditions, there is also a cost-side constraint: the dual-encoder design introduces computational overhead. Incorporating a Deformable Transformer increases parameters relative to single-encoder baselines, which raises resource consumption during training and inference. However, this trade-off yields accuracy benefits. In practice, efficiency can be improved via model compression and architectural simplification (e.g., reducing Transformer encoder depth), seeking a better balance between performance and cost.

From a clinical deployment standpoint, the modular design of MSDTCN-Net enables flexible adoption. Lightweight variants are suitable for hospital servers or edge devices for near–real-time screening, whereas the full model can support offline, high-precision analysis. Future work will prioritize improving computational efficiency and exploring adaptive inference schemes to balance segmentation accuracy, latency, and hardware constraints.

6. Conclusions

In this study, we propose MSDTCN-Net, a model based on the dual-encoder architecture combining ConvNeXt and Deformable Transformer, aimed at improving the accuracy of skin lesion segmentation, particularly in regions with complex boundaries and irregular lesion shapes. The proposed HFT mechanism passes multi-scale features layer by layer, ensuring effective interaction between high-level semantic information and low-level spatial details, thereby enhancing the decoder’s ability to recover lesion boundaries. The designed MSRF module combines multi-branch convolutions and dilated convolutions, boosting the model’s adaptability to lesions of varying scales and ensuring stable segmentation performance even in cases of complex morphology or fuzzy boundaries. To validate the effectiveness of MSDTCN-Net, we conducted extensive experiments on four publicly available skin lesion datasets: ISIC 2016, ISIC 2017, ISIC 2018, and PH2. The experimental results demonstrate that our method exhibits competitive segmentation performance across key metrics such as IoU, Dice, and ACC, surpassing existing state-of-the-art segmentation methods on multiple datasets. Additionally, intuitive visual analysis further confirms MSDTCN-Net’s capability in segmenting complex boundary regions.

Author Contributions

D.L.: Writing—review and editing, Supervision, Conceptualization, Methodology, Formal analysis. X.W.: Writing—review and editing, Investigation, Methodology, Software, Visualization, Validation. Q.W.: Writing—review and editing, Supervision, Conceptualization, Validation. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kittler, H.; Pehamberger, H.; Wolff, K.; Binder, M.J. Diagnostic accuracy of dermoscopy. Lancet Oncol. 2002, 3, 159–165.

- Luo, N.; Zhong, X.; Su, L.; Cheng, Z.; Ma, W.; Hao, P. Artificial intelligence-assisted dermatology diagnosis: From unimodal to multimodal. Comput. Biol. Med. 2023, 165, 107413.

- Pare, S.; Kumar, A.; Singh, G.K.; Bajaj, V.J. Image segmentation using multilevel thresholding: A research review. Iran. J. Sci. Technol. Trans. Electr. Eng. 2020, 44, 1–29.

- Sathya, B.; Manavalan, R. Image segmentation by clustering methods: Performance analysis. Int. J. Comput. Appl. 2011, 29, 27–32.

- Celebi, M.E.; Kingravi, H.A.; Iyatomi, H.; Lee, J.; Aslandogan, Y.A.; Van Stoecker, W.; Moss, R.; Malters, J.M.; Marghoob, A.A. Fast and accurate border detection in dermoscopy images using statistical region merging. In Medical Imaging 2007: Image Processing 2007; SPIE: Bellingham, WA, USA, 2007; Volume 6512, pp. 1297–1306.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Part III. Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Zhang, Y.; Liu, H.; Hu, Q. TransFuse: Fusing Transformers and CNNs for medical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; Springer International Publishing: Cham, Switzerland, 2021; pp. 14–24.

- Hatamizadeh, A.; Nath, V.; Tang, Y.; Yang, D.; Roth, H.R.; Xu, D. Swin UNETR: Swin Transformers for semantic segmentation of brain tumors in MRI images. In International MICCAI Brainlesion Workshop; Springer International Publishing: Cham, Switzerland, 2021; pp. 272–284.

- Valanarasu, J.M.; Oza, P.; Hacihaliloglu, I.; Patel, V.M. Medical transformer: Gated axial-attention for medical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; Springer International Publishing: Cham, Switzerland, 2021; pp. 36–46.

- Gutman, D.; Codella, N.C.; Celebi, E.; Helba, B.; Marchetti, M.; Mishra, N.; Halpern, A. Skin lesion analysis toward melanoma detection: A challenge at the international symposium on biomedical imaging (ISBI) 2016, hosted by the international skin imaging collaboration (ISIC). arXiv 2016, arXiv:1605.01397.

- Codella, N.C.F.; Gutman, D.; Celebi, M.E.; Helba, B.; Marchetti, M.A.; Dusza, S.W.; Kalloo, A.; Liopyris, K.; Mishra, N.; Kittler, H.; et al. Skin lesion analysis toward melanoma detection: A challenge at the 2017 international symposium on biomedical imaging (ISBI), hosted by the international skin imaging collaboration (ISIC). In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; IEEE: New York, NY, USA, 2018; pp. 168–172.

- Codella, N.; Rotemberg, V.; Tschandl, P.; Celebi, M.E.; Dusza, S.; Gutman, D.; Halpern, A. Skin lesion analysis toward melanoma detection 2018: A challenge hosted by the international skin imaging collaboration (ISIC). arXiv 2019, arXiv:1902.03368.

- Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161.

- Mendonça, T.; Ferreira, P.M.; Marques, J.S.; Marçal, A.R.S.; Rozeira, J. PH2—A dermoscopic image database for research and benchmarking. In Proceedings of the 35th International Conference of the IEEE Engineering in Medicine and Biology Society, Osaka, Japan, 3–7 July 2013.

- Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A nested U-Net architecture for medical image segmentation. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 20 September 2018; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–11.

- Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

- Schlemper, J.; Oktay, O.; Schaap, M.; Heinrich, M.; Kainz, B.; Glocker, B.; Rueckert, D. Attention gated networks: Learning to leverage salient regions in medical images. Med. Image Anal. 2019, 53, 197–207.

- Xu, Q.; Ma, Z.; Duan, W. DCSAU-Net: A deeper and more compact split-attention U-Net for medical image segmentation. Comput. Biol. Med. 2023, 154, 106626.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Chen, B.; Liu, Y.; Zhang, Z.; Lu, G.; Kong, A.W. TransAttUnet: Multi-level attention-guided U-Net with Transformer for medical image segmentation. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 8, 55–68.

- Li, Y.; Tian, T.; Hu, J.; Yuan, C. SUTrans-NET: A hybrid transformer approach to skin lesion segmentation. PeerJ Comput. Sci. 2024, 10, e1935.

- Wu, H.; Chen, S.; Chen, G.; Wang, W.; Lei, B.; Wen, Z. FAT-Net: Feature adaptive transformers for automated skin lesion segmentation. Med. Image Anal. 2022, 76, 102327.

- Karimi, A.; Faez, K.; Nazari, S. DEU-NET: Dual-encoder U-Net for automated skin lesion segmentation. IEEE Access 2023, 11, 134804–134821.

- Chen, W.; Zhang, R.; Zhang, Y.; Bao, F.; Lv, H.; Li, L.; Zhang, C. Pact-Net: Parallel CNNs and Transformers for medical image segmentation. Comput. Methods Programs Biomed. 2023, 242, 107782.

- Cao, X.; Yu, H.; Yan, K.; Cui, R.; Guo, J.; Li, X.; Xing, X.; Huang, T. DEMF-Net: A dual encoder multi-scale feature fusion network for polyp segmentation. Biomed. Signal Process. Control 2024, 96, 106487.

- Xiong, B.; Hong, R.; Wang, J.; Li, W.; Zhang, J.; Lv, S.; Ge, D. DefNet: A multi-scale dual-encoding fusion network aggregating Transformer and CNN for crack segmentation. Constr. Build. Mater. 2024, 448, 138206.

- Hao, S.; Yu, Z.; Zhang, B.; Dai, C.; Fan, Z.; Ji, Z.; Ganchev, I. MEFP-Net: A dual-encoding multi-scale edge feature perception network for skin lesion segmentation. IEEE Access 2024, 12, 140039–140052.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Ma, Y.; Wu, L.; Gao, Y.; Gao, F.; Zhang, J.; Luo, Z. ULFAC-Net: Ultra-lightweight fully asymmetric convolutional network for skin lesion segmentation. IEEE J. Biomed. Health Inform. 2023, 27, 2886–2897.

- Qiu, S.; Li, C.; Feng, Y.; Zuo, S.; Liang, H.; Xu, A. GFANet: Gated fusion attention network for skin lesion segmentation. Comput. Biol. Med. 2023, 155, 106462.

- Yang, B.; Zhang, R.; Peng, H.; Guo, C.; Luo, X.; Wang, J.; Long, X. SLP-Net: An efficient lightweight network for segmentation of skin lesions. Biomed. Signal Process. Control 2025, 101, 107242.

- Zhong, L.; Li, T.; Cui, M.; Cui, S.; Wang, H.; Yu, L. DSU-Net: Dual-Stage U-Net based on CNN and Transformer for skin lesion segmentation. Biomed. Signal Process. Control 2025, 100, 107090.

- Hao, S.; Wu, H.; Du, C.; Zeng, X.; Ji, Z.; Zhang, X.; Ganchev, I. CACDU-Net: A novel DoubleU-Net based semantic segmentation model for skin lesions detection in images. IEEE Access 2023, 11, 82449–82463.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).