Deep Learning Performance in Analyzing Nailfold Videocapillaroscopy Images in Systemic Sclerosis

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

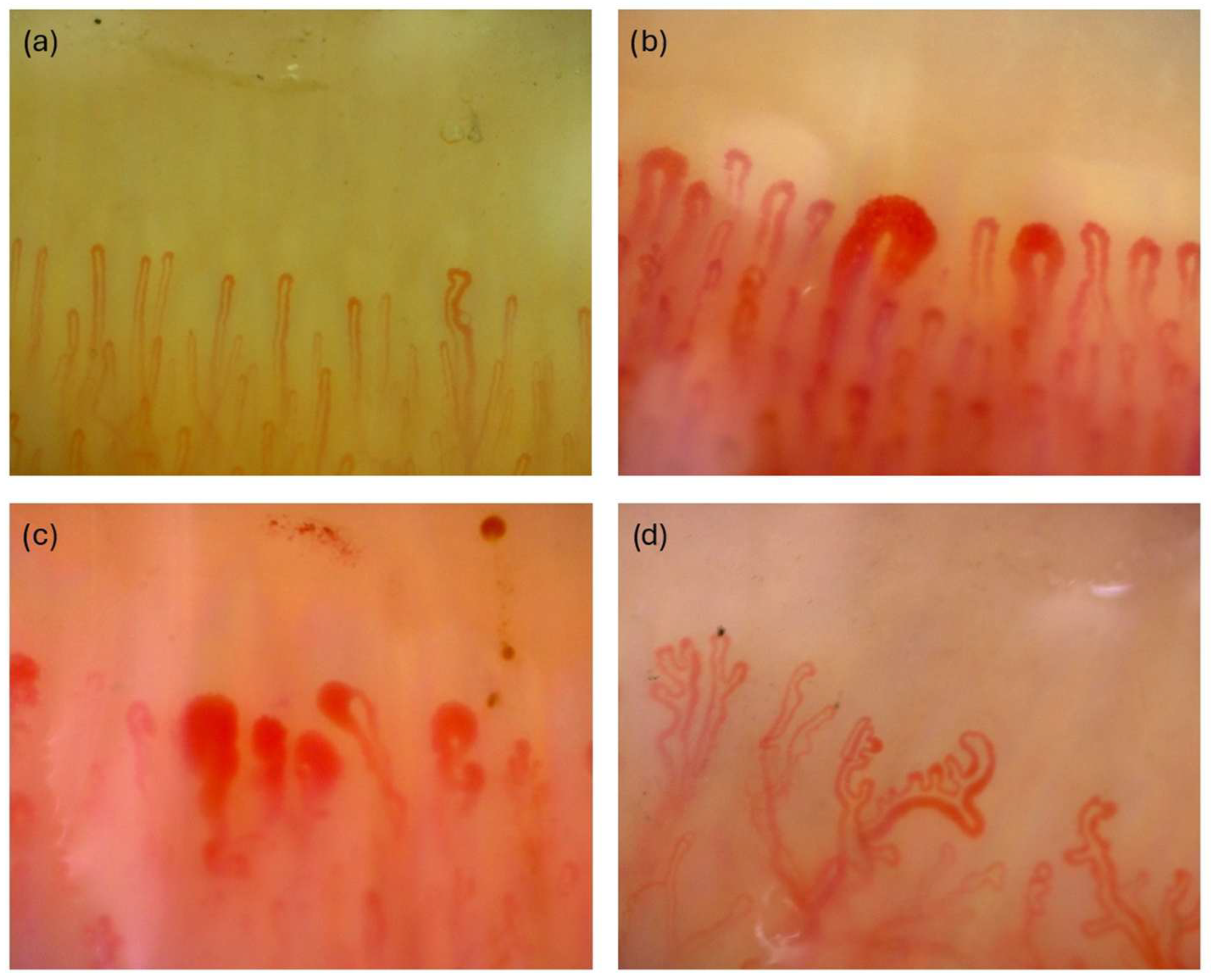

2.2. Obtaining NVC Images

2.3. Data Preparation

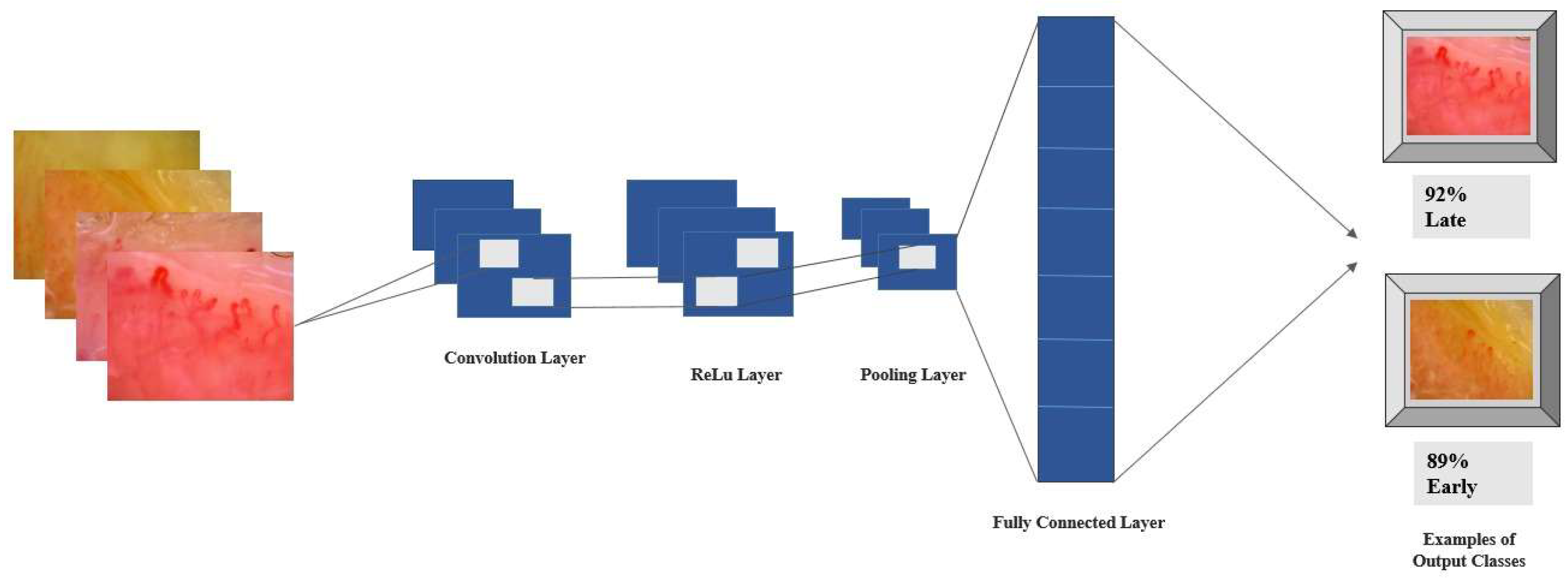

2.4. Deep Learning Approach

2.5. Model Evaluation Metrics

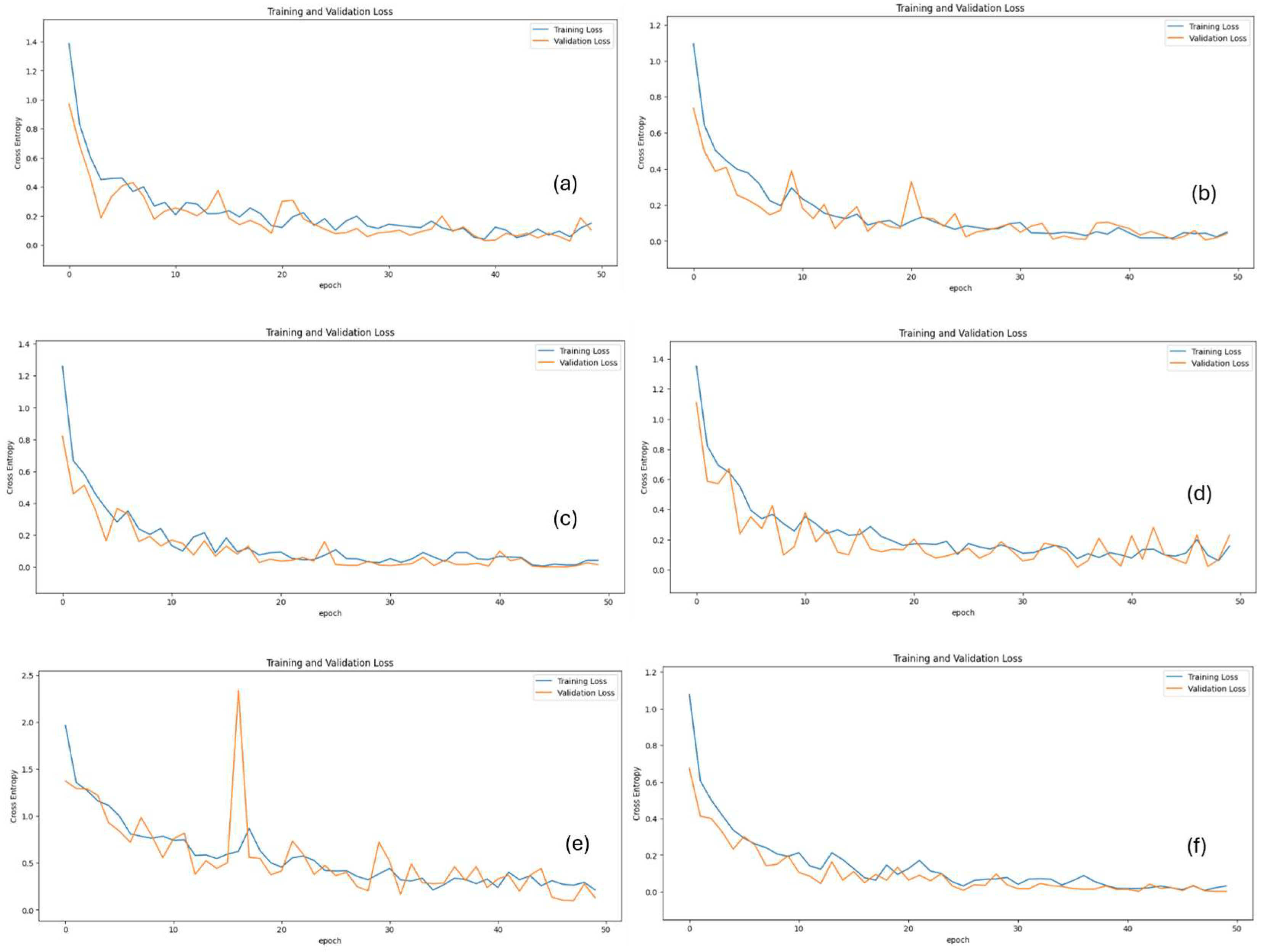

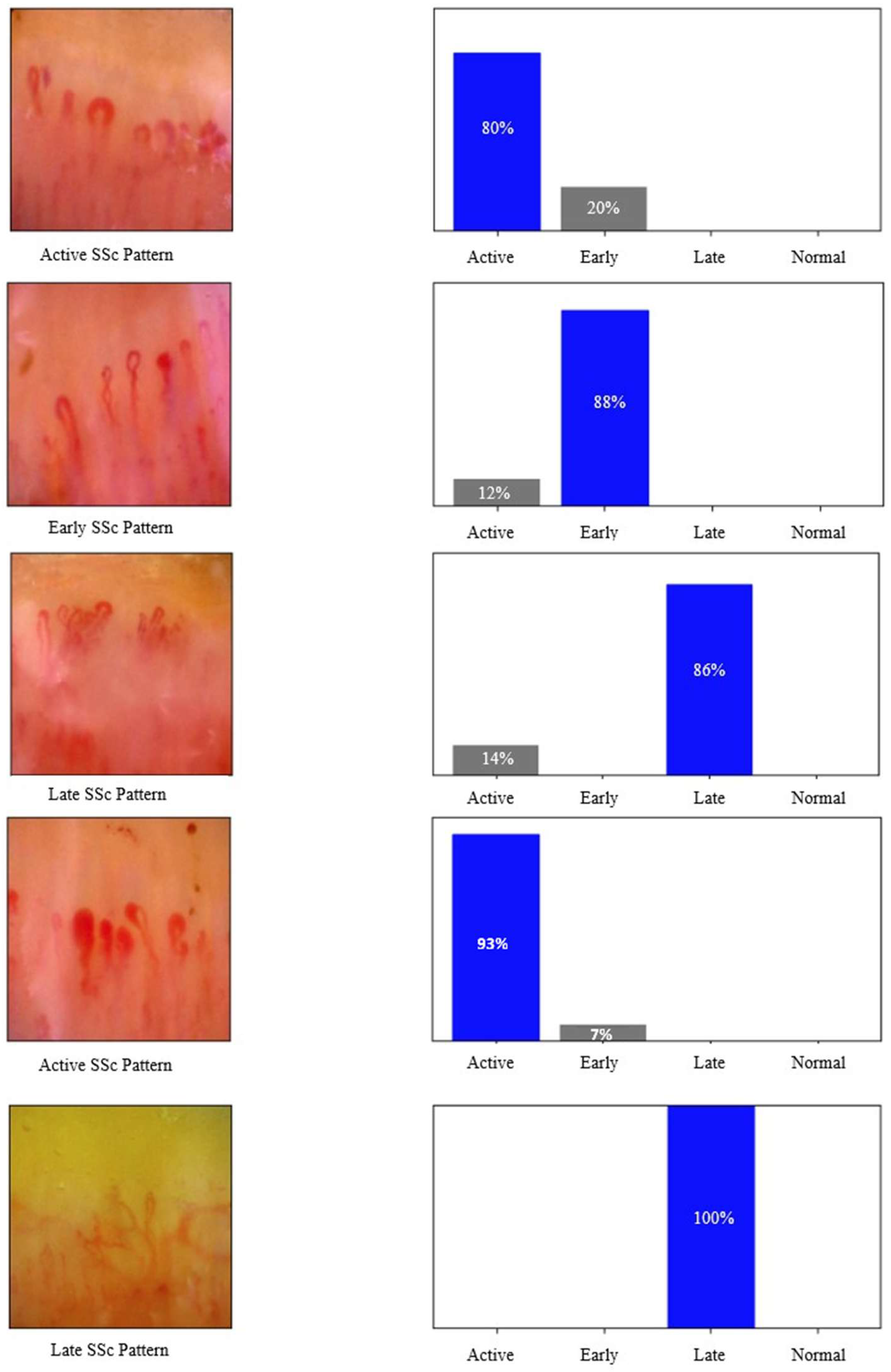

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACR | American College of Rheumatology |
| AUC | Area under curve |
| EULAR | European Alliance of Associations for Rheumatology |
| EUSTAR | European Scleroderma Trials and Research |
| NAS | Neural architecture search |
| NVC | Nailfold videocapillaroscopy |
| ReLU | Rectified linear unit |
| ROC | Receiver-operating characteristic curve |
| SE | Squeeze-and-Excitation |
| SSc | Systemic sclerosis |
| VEDOSS | Very Early Diagnosis of Systemic Sclerosis |
| VGG | Visual geometry group |
| ViT | Vision transformer |

| Class | Training | Validation | Test | Total |
|---|---|---|---|---|
| Active | 122 | 35 | 17 | 174 |
| Early | 115 | 33 | 16 | 164 |
| Late | 104 | 30 | 15 | 149 |
| Normal | 172 | 49 | 24 | 245 |
| Total | 513 | 147 | 72 | 732 |
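As a quick check on the split sizes in the table above, the following minimal sketch recomputes each subset's share of the 732 images, overall and per class. The dictionary layout and the `split_fractions` helper are illustrative assumptions; only the counts come from the table.

```python
# Image counts per class, copied from the table above: (training, validation, test).
counts = {
    "Active": (122, 35, 17),
    "Early": (115, 33, 16),
    "Late": (104, 30, 15),
    "Normal": (172, 49, 24),
}


def split_fractions(row):
    """Return the share of a row's images that falls in each subset."""
    total = sum(row)
    return tuple(n / total for n in row)


# Column totals across all four classes.
totals = tuple(sum(col) for col in zip(*counts.values()))
overall = split_fractions(totals)

for name, row in counts.items():
    train, val, test = split_fractions(row)
    print(f"{name:<7} train={train:.1%} val={val:.1%} test={test:.1%}")
print(f"Overall train={overall[0]:.1%} val={overall[1]:.1%} test={overall[2]:.1%}")
```

With these counts the split works out to roughly 70% training, 20% validation, and 10% test in every class, which is consistent with a stratified partition, although the table itself does not state how the partition was made.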

| Metric | Formula |
|---|---|
| Accuracy | |
| Precision | |
| Recall | |
| F1 Score | |
| Cross-entropy loss | |
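For reference, the standard textbook definitions of these five metrics can be written as below. This is a sketch under stated assumptions, not necessarily the authors' exact notation: $TP$, $TN$, $FP$, and $FN$ are assumed to denote the true-positive, true-negative, false-positive, and false-negative counts for a given class, $N$ the number of images, $C$ the number of classes (four here), $y_{i,c}$ the one-hot ground-truth label, and $\hat{y}_{i,c}$ the predicted class probability.

```latex
\begin{align}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall} &= \frac{TP}{TP + FN} \\
\text{F1 Score} &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \\
\text{Cross-entropy loss} &= -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{C} y_{i,c} \log \hat{y}_{i,c}
\end{align}
```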

| Model | Loss | Accuracy | ROC AUC |
|---|---|---|---|
| MobileNetV3Large | 0.08 ± 0.01 | 95.83% ± 0.20% | 100.00% ± 0.00% |
| ResNet152V2 | 0.26 ± 0.04 | 90.62% ± 0.23% | 99.98% ± 0.06% |
| Xception | 0.07 ± 0.01 | 96.87% ± 0.08% | 99.95% ± 0.01% |
| VGG-19 | 0.18 ± 0.02 | 94.79% ± 0.11% | 98.99% ± 0.10% |
| InceptionV3 | 0.03 ± 0.01 | 98.95% ± 0.08% | 99.99% ± 0.01% |
| NasNetLarge | 0.03 ± 0.01 | 97.91% ± 0.08% | 100.00% ± 0.00% |

| Model | Precision | Recall | F1 Score |
|---|---|---|---|
| MobileNetV3Large | 96.87% ± 0.19% | 94.49% ± 0.25% | 95.66% ± 0.20% |
| ResNet152V2 | 93.38% ± 0.27% | 90.62% ± 0.29% | 91.98% ± 0.26% |
| Xception | 96.83% ± 0.10% | 97.08% ± 0.09% | 96.95% ± 0.2% |
| VGG-19 | 95.52% ± 0.12% | 91.60% ± 0.19% | 93.52% ± 0.19% |
| InceptionV3 | 98.94% ± 0.02% | 98.80% ± 0.05% | 98.88% ± 0.01% |
| NasNetLarge | 98.21% ± 0.08% | 97.91% ± 0.09% | 98.06% ± 0.09% |
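Because the F1 score is the harmonic mean of precision and recall, the tabulated values can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the `reported` dictionary and the `f1_from` helper are hypothetical names, and it assumes each reported F1 score was derived from the mean precision and recall shown in the table, which the source does not confirm.

```python
# Mean precision, recall and F1 score (in %) copied from the table above.
reported = {
    "MobileNetV3Large": (96.87, 94.49, 95.66),
    "ResNet152V2": (93.38, 90.62, 91.98),
    "Xception": (96.83, 97.08, 96.95),
    "VGG-19": (95.52, 91.60, 93.52),
    "InceptionV3": (98.94, 98.80, 98.88),
    "NasNetLarge": (98.21, 97.91, 98.06),
}


def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Largest absolute gap between a recomputed and a reported F1 score.
max_gap = 0.0
for model, (p, r, f1) in reported.items():
    recomputed = f1_from(p, r)
    max_gap = max(max_gap, abs(recomputed - f1))
    print(f"{model:<17} reported={f1:.2f} recomputed={recomputed:.2f}")
print(f"largest gap: {max_gap:.3f} percentage points")
```

Under that assumption, the recomputed values agree with the reported ones to within about 0.01 percentage points, so the three columns are mutually consistent.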

| Model | Normal | Active | Early | Late |
|---|---|---|---|---|
| MobileNetV3Large | 96.12% ± 0.31% | 95.40% ± 0.44% | 94.82% ± 0.3% | 97.5% ± 0.2% |
| ResNet152V2 | 91.34% ± 0.37% | 90.22% ± 0.31% | 89.60% ± 0.54% | 91.80% ± 0.41% |
| Xception | 97.03% ± 0.16% | 96.80% ± 0.31% | 96.33% ± 0.29% | 97.94% ± 0.21% |
| VGG-19 | 94.56% ± 0.30% | 93.81% ± 0.34% | 92.70% ± 0.39% | 95.93% ± 0.% |
| InceptionV3 | 99.04% ± 0.12% | 98.82% ± 0.19% | 98.60% ± 0.12% | 99.21% ± 0.14% |
| NasNetLarge | 98.25% ± 0.23% | 97.90% ± 0.20% | 97.40% ± 0.31% | 98.70% ± 0.24% |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yayla, M.E.; Aydın, A.; Kılıçaslan, M.; Kalkan, M.; Güzel, M.S.; Shikhaliyeva, A.; Sezer, S.; Uslu, E.; Ateş, A.; Turgay, T.M. Deep Learning Performance in Analyzing Nailfold Videocapillaroscopy Images in Systemic Sclerosis. Diagnostics 2025, 15, 2912. https://doi.org/10.3390/diagnostics15222912
