From Machine Learning to Ensemble Approaches: A Systematic Review of Mammogram Classification Methods

Abstract

1. Introduction

- An evaluation of a wide range of classification methods, from machine learning and deep learning to hybrid/ensemble models, applied specifically to breast cancer diagnosis from mammogram images, along with their integration into computer-aided detection (CAD) systems.

- A critical comparative analysis of recent works that highlights performance trends and trade-offs and provides a taxonomy to assist researchers and practitioners in choosing appropriate models.

- An exploration of limitations encountered in current research and practical implementation, followed by recommendations intended to guide future investigations and support the advancement of more effective detection tools.

2. Materials and Methods

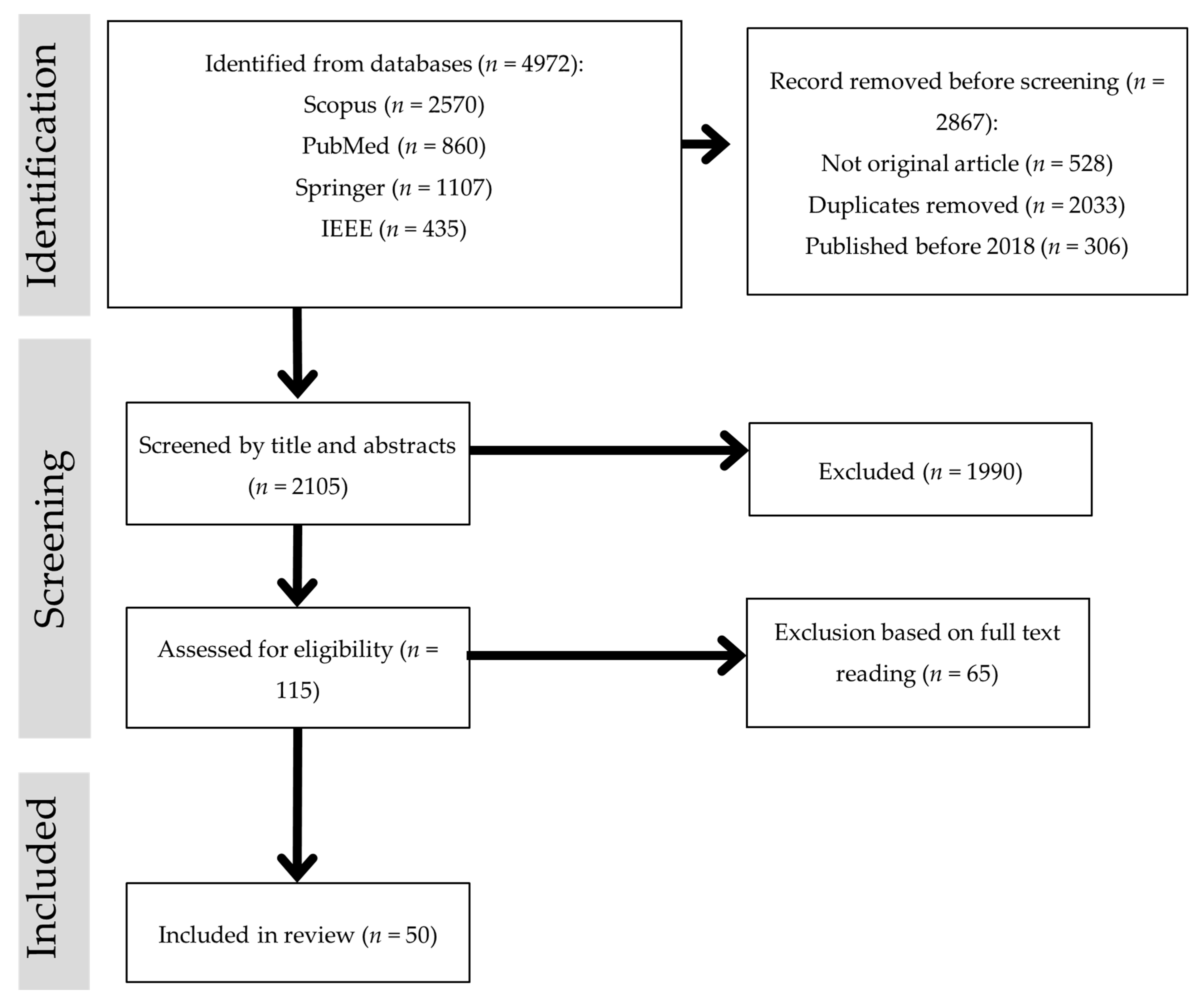

- Identification: A comprehensive search was conducted in four major databases—Scopus, PubMed, SpringerLink, and IEEE Xplore—to identify studies on mammogram-based computer-aided detection (CAD). The search covered publications from 2018 to 2025 using keywords such as “CAD for mammogram”, “mammogram classification”, “deep learning mammogram”, “machine learning mammogram”, and “hybrid/ensemble mammogram”. A total of 4972 records were retrieved (Scopus = 2570; PubMed = 860; SpringerLink = 1107; IEEE Xplore = 435). Before screening, 2867 records were removed, including 528 non-original articles (e.g., reviews, editorials), 2033 duplicates, and 306 studies published before 2018.

- Screening and eligibility assessment: After initial removals, 2105 records underwent title and abstract screening to remove studies that were clearly irrelevant to mammogram-based segmentation or classification. This step excluded 1990 records. The remaining 115 studies were then subjected to a full-text eligibility assessment. Articles were excluded at this stage if they:

- were non-journal publications (e.g., conference papers, book chapters),

- lacked a primary focus on classification (e.g., preprocessing techniques, feature extraction/selection, segmentation, optimization algorithms),

- used imaging modalities other than mammography,

- were inaccessible due to paywalls, or

- did not provide sufficient methodological or result details relevant to classification.

- Inclusion: After applying these criteria, 50 studies were included in the review, offering insights into various classification techniques pertinent to mammogram-based breast cancer detection.
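
The record counts in the selection flow above can be cross-checked with a few lines of arithmetic. The following is a minimal sketch that uses only the numbers reported in the text; the variable names are illustrative, and the number of full-text exclusions is derived here from those counts rather than stated in the text.

```python
# Record counts as reported in the identification and screening steps.
retrieved = {"Scopus": 2570, "PubMed": 860, "SpringerLink": 1107, "IEEE Xplore": 435}
removed_before_screening = {
    "non_original": 528,
    "duplicates": 2033,
    "published_before_2018": 306,
}
excluded_title_abstract = 1990
included = 50

total_retrieved = sum(retrieved.values())
total_removed = sum(removed_before_screening.values())
screened = total_retrieved - total_removed
full_text_assessed = screened - excluded_title_abstract

# Derived value (not stated in the text): exclusions implied at the full-text stage.
excluded_full_text = full_text_assessed - included

print(total_retrieved, total_removed, screened, full_text_assessed, excluded_full_text)
```

Each stage total computed this way matches the figure quoted in the corresponding step (4972 retrieved, 2867 removed, 2105 screened, 115 assessed in full text).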

3. Results

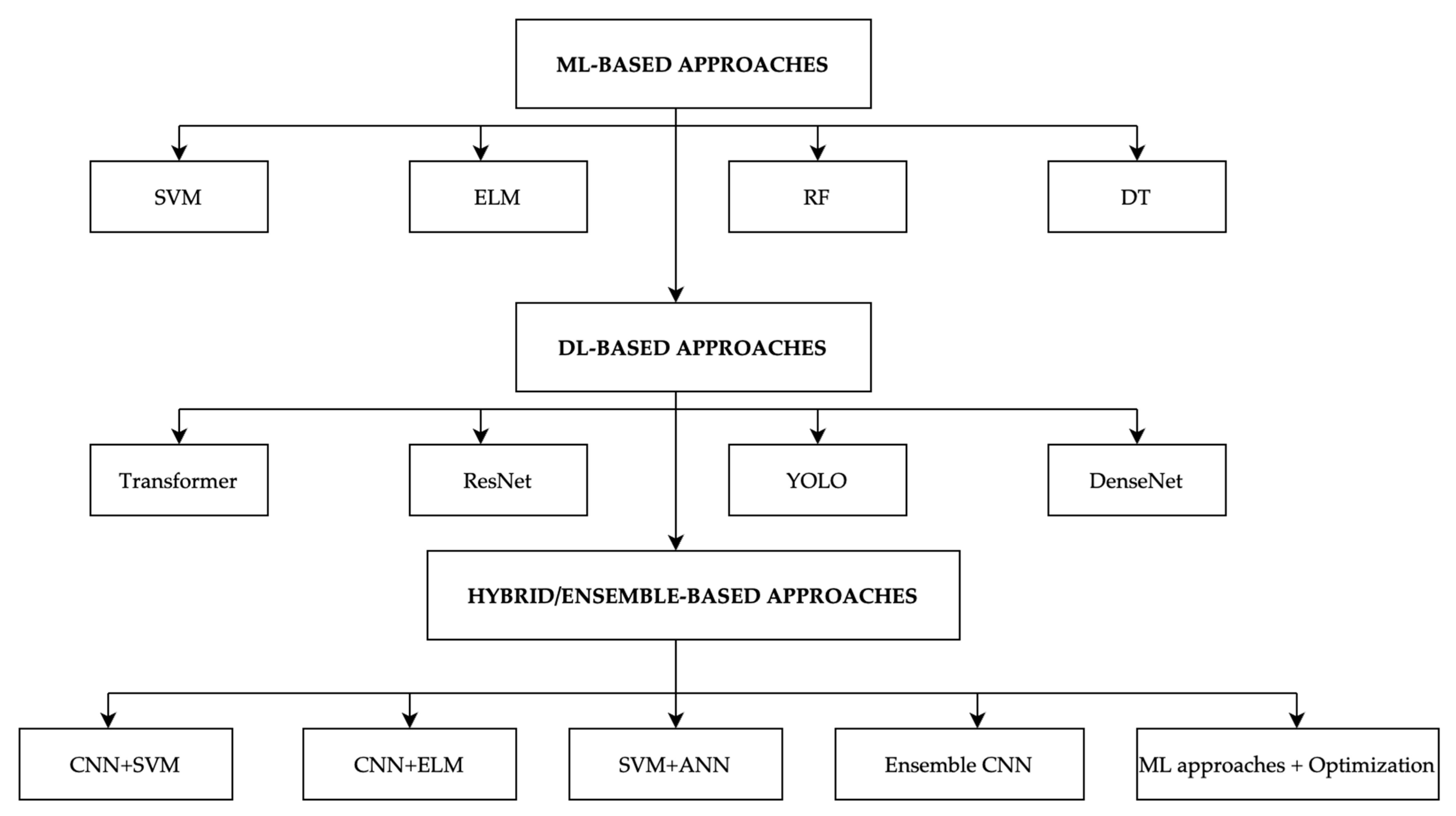

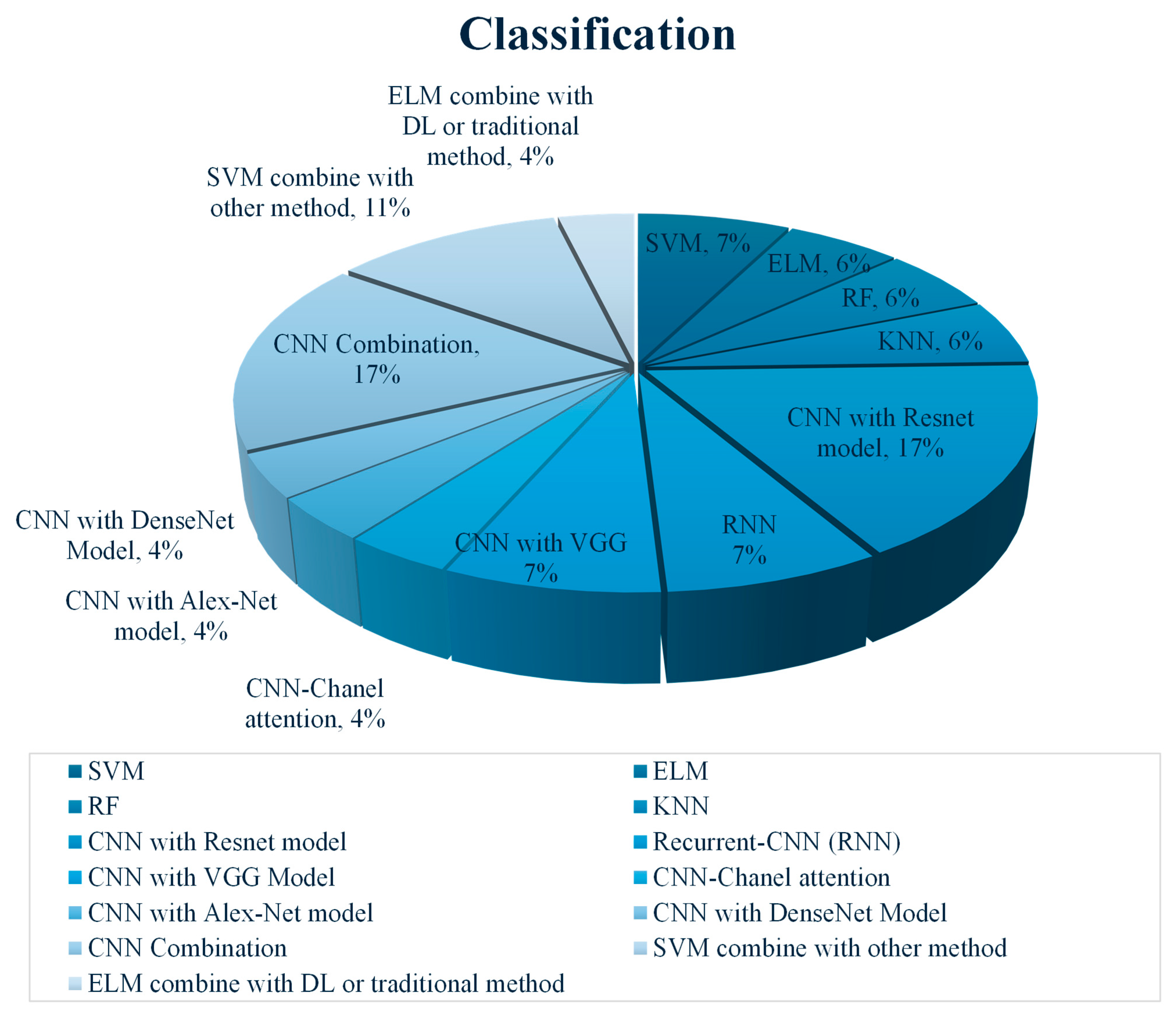

3.1. Machine Learning (ML)-Based Classification

3.2. Deep Learning (DL)-Based Classification

3.3. Hybrid/Ensemble Classification Methods

4. Discussion

5. Challenges, Opportunities, and Future Directions in Breast Cancer Detection

5.1. Classification Challenges

- Feature Extraction: Classification models depend heavily on the quality of feature extraction [72,73]. Classifiers such as SVM and Decision Trees (DTs) rely on handcrafted feature extraction techniques such as the Gray Level Co-occurrence Matrix (GLCM) or Histogram of Oriented Gradients (HOG), which may not capture the full complexity of tumor characteristics [74,75]. Even in DL models, where features are learned automatically, extracting meaningful features from small or low-contrast tumors remains a challenge [19,31].

- Overfitting: Overfitting is a common issue in ML and DL classifiers, particularly when models are trained on small or imbalanced datasets like MIAS or INbreast [21,28]. Models such as SVM, ELM, and even advanced CNN-based classifiers tend to perform well on training data but often fail to generalize to new, unseen data [16,17]. Hybrid models that combine multiple classifiers also risk overfitting when trained on small datasets [57].

- Computational resources and time: DL models and hybrid approaches often require significant computational resources for training and inference. Models such as YOLO combined with Mask R-CNN or DenseNet architectures are computationally expensive and may not be feasible for real-time clinical applications [29,30]. Moreover, hybrid approaches that combine optimization algorithms with classification models, such as MODPSO-ELM, can further increase training times, limiting clinical implementation [21].
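
To make the handcrafted-feature point above concrete, the following is a minimal pure-Python sketch of a GLCM and two texture descriptors commonly derived from it (contrast and homogeneity). The function names, the toy 4-level patch, and the single horizontal offset are assumptions chosen for illustration; they do not reproduce the pipeline of any reviewed study.

```python
def glcm(image, levels, dx=1, dy=0):
    """Normalized (non-symmetric) gray-level co-occurrence matrix for one offset."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def contrast(p):
    """Weights each co-occurrence by the squared gray-level difference."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def homogeneity(p):
    """Inverse difference moment: high when neighboring pixels are similar."""
    n = len(p)
    return sum(p[i][j] / (1 + (i - j) ** 2) for i in range(n) for j in range(n))


# Hypothetical 4x4 patch already quantized to 4 gray levels (0..3).
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
p = glcm(patch, levels=4)
features = [contrast(p), homogeneity(p)]
print(features)
```

A feature vector of this kind would then be passed to a classifier such as an SVM. Because the descriptors summarize only pairwise gray-level statistics at a fixed offset, they can miss the shape and context cues that the bullet on feature extraction refers to.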

5.2. Opportunities and Future Directions for Breast Cancer Detection

- Combining Feature Extraction with Classification: Hybrid models that integrate DL-based feature extraction (e.g., using CNNs) with ML classifiers, such as SVM and Random Forest (RF), have shown notable improvements in classification performance [52,55]. This fusion makes better use of the hierarchical features learned by DL models, while the ML classifier handles the final decision-making step. In studies such as [21,56], hybrid models significantly improved accuracy in both segmentation and classification. Future research should explore more efficient combinations of these models and identify which pairings yield the best results under varying conditions.

- Optimization Algorithms and Metaheuristics: Many hybrid methods include the use of metaheuristic algorithms [76], such as Particle Swarm Optimization (PSO), Genetic Algorithms (GA), or Moth Flame Optimization (MFO), to optimize model parameters and enhance performance [67]. These algorithms have proven effective in tuning model weights and improving the learning process [77], particularly when dealing with complex datasets like MIAS, DDSM, and INbreast. Future work should focus on integrating more sophisticated optimization techniques, such as reinforcement learning [78] or evolutionary algorithms, to further refine breast cancer detection models.

- Transfer Learning: Transfer learning has emerged as a key opportunity for leveraging pre-trained DL models, such as ResNet, DenseNet, and EfficientNet, to reduce the computational burden associated with training deep models from scratch [34]. These models, trained on large datasets like ImageNet, can be fine-tuned [69] for specific breast cancer detection tasks [79], which allows researchers to overcome the challenges of limited mammogram datasets. Transfer learning has shown promise in improving classification accuracy while reducing the training time. Beyond efficiency, transfer learning also improves representation quality. For example, ResNet/DenseNet/EfficientNet backbones preserve fine-grained image details through skip connections and multi-scale feature extraction layers [80], which is particularly beneficial for subtle or low-contrast mammographic lesions. By reusing pretrained convolutional filters that already capture edge, gradient, and texture patterns, the model can enhance lesion visibility even when intensity differences are minimal. Fine-tuning only the higher-level layers allows for adaptation to mammography’s domain characteristics, such as glandular tissue density and microcalcification patterns, while maintaining robust low-level representations learned from large-scale datasets. Accordingly, future directions should involve exploring more domain-specific pre-training techniques that are tailored to medical images [81], ensuring that models are better suited to the nuances of mammogram data.

- Integration with Other Imaging Modalities: Another promising direction for future research is the integration of mammogram analysis with other imaging modalities [82], such as ultrasound and MRI. Combining information from multiple imaging techniques [83] could improve the accuracy and robustness of breast cancer detection models by providing complementary views of the same region, reducing the likelihood of false negatives [84]. Such multimodal fusion is especially valuable for subtle or low-contrast lesions that are difficult to identify on mammograms alone, as ultrasound and MRI provide richer tissue contrast, clearer margin definition, and contextual cues that help delineate ambiguous structures [84,85,86]. Beyond imaging, multimodal learning can also combine mammograms with clinical records, pathology reports, or other tabular data, enabling richer feature representation and potentially improving diagnostic performance. Recent work [87] has shown that integrating imaging with structured clinical data enhances model generalization and supports more clinically relevant decision-making.

- Real-time Application and Model Efficiency: A major future goal is to develop models that can be deployed in real-time clinical environments. Techniques such as model pruning [88], quantization, and knowledge distillation can be explored to reduce the size and computational requirements of deep learning models with little loss of accuracy. Recent studies have shown that pruning removes redundant connections and quantization reduces numerical precision from 32-bit to lower bit widths (e.g., INT8), significantly decreasing inference latency and energy consumption without major accuracy loss [89,90,91]. These methods will be essential for integrating AI-driven breast cancer detection systems into everyday clinical workflows, especially in under-resourced healthcare settings.

- Clinical Perspective and Translation: Beyond technical performance, clinical adoption is essential for breast cancer classification models. For real-world use, models must provide interpretability, reliability, and validation across diverse patient populations and imaging protocols. Interpretability can be supported through visualization tools such as Grad-CAM [36] or attention heatmaps [53], which help radiologists understand the basis of the model's decision. Meanwhile, clinical reliability requires rigorous external validation using independent and multi-institutional datasets to verify robustness beyond training conditions [92]. In practice, this involves testing models on data from different hospitals or imaging devices, reporting results at clinically relevant operating points (e.g., maintaining high sensitivity with corresponding specificity), and evaluating whether AI assistance improves radiologist performance or reading efficiency. Difficult or uncertain cases should be referred for manual review rather than left to automated decision-making. Finally, practical deployment also depends on the clear reporting of inference time, hardware needs, and integration into daily clinical workflow [93]. Integration into radiology practice further requires efficiency, regulatory approval, and minimization of false positives to ensure radiologist trust. While current systems show promising accuracy, most remain at the proof-of-concept stage, emphasizing the need for large-scale validation and collaboration between engineers and healthcare professionals [94].

- Foundation and Large Vision Models: Although transfer learning models such as ResNet, DenseNet, and EfficientNet have demonstrated strong performance by reusing pretrained representations from large-scale datasets, recent advancements have shifted toward foundation models and large vision models (LVMs) that offer broader generalization and adaptability. Foundation models are large-scale deep architectures trained on massive and diverse image or multimodal datasets, enabling them to serve as general-purpose backbones that can be adapted to various medical imaging tasks with minimal fine-tuning. In medical imaging, LVMs such as Vision Transformers (ViT) [42], Segment Anything Model (SAM) [95], MedCLIP [96], and BioViL have shown strong potential in capturing fine-grained anatomical patterns, handling cross-domain variations, and linking visual features with textual clinical information. These models not only provide richer visual-semantic representations but also allow zero-shot or few-shot adaptation, which is particularly beneficial when labeled medical data are limited. Applying such models to mammography could improve lesion localization and classification performance by leveraging their multi-scale and multimodal understanding. Future research should explore how foundation and large vision models can be effectively fine-tuned, compressed, or adapted for mammographic imaging, balancing their computational cost with clinical feasibility while maintaining interpretability and reliability for real-world deployment.
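
The precision reduction described under real-time application and model efficiency (32-bit values mapped to INT8) can be illustrated with a minimal sketch. It assumes symmetric per-tensor quantization with a single scale factor, which is only one of several possible schemes; the weight values and function names are hypothetical and are not taken from any reviewed model or library.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float values."""
    return [v * scale for v in q]


# Hypothetical FP32 weights from a single layer.
weights = [0.42, -1.27, 0.003, 0.9, -0.55]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half of the scale factor.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most half the scale factor. Whether that error is acceptable for a mammogram classifier has to be confirmed by re-evaluating the quantized model on held-out data.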

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CAD | Computer-Aided Detection |
| ML | Machine Learning |
| DL | Deep Learning |
| SVM | Support Vector Machine |
| CNN | Convolutional Neural Network |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| RoI | Region of Interest |
| GLCM | Gray Level Co-occurrence Matrix |
| YOLO | You Only Look Once |
| IoU | Intersection over Union |
| AUC | Area Under the Curve |
| ROC | Receiver Operating Characteristic |
| ELM | Extreme Learning Machine |
| KNN | k-Nearest Neighbors |
| MFO | Moth Flame Optimization |
| PSO | Particle Swarm Optimization |
| ECA | Efficient Channel Attention |
| MLO | Mediolateral-Oblique |
| CC | Cranio-Caudal |

References

- Ferlay, J.; Ervik, M.; Lam, F.; Laversanne, M.; Colombet, M.; Mery, L.; Piñeros, M.; Znaor, A.; Soerjomataram, I.; Bray, F. Global Cancer Observatory: Cancer Today. Available online: https://gco.iarc.who.int/today (accessed on 4 February 2025).

- Arnold, M.; Morgan, E.; Rumgay, H.; Mafra, A.; Singh, D.; Laversanne, M.; Vignat, J.; Gralow, J.R.; Cardoso, F.; Siesling, S.; et al. Current and Future Burden of Breast Cancer: Global Statistics for 2020 and 2040. Breast 2022, 66, 15–23.

- American Cancer Society. Understanding a Breast Cancer Diagnosis. Available online: https://www.cancer.org/Cancer/Breast-Cancer/About/Types-of-Breast-Cancer.Html#References (accessed on 4 November 2024).

- Rezaei, Z. A Review on Image-Based Approaches for Breast Cancer Detection, Segmentation, and Classification. Expert Syst. Appl. 2021, 182, 115204.

- Meenalochini, G.; Ramkumar, S. A Deep Learning Based Breast Cancer Classification System Using Mammograms. J. Electr. Eng. Technol. 2024, 19, 2637–2650.

- López-Úbeda, P.; Martín-Noguerol, T.; Paulano-Godino, F.; Luna, A. Comparative Evaluation of Image-Based vs. Text-Based vs. Multimodal AI Approaches for Automatic Breast Density Assessment in Mammograms. Comput. Methods Programs Biomed. 2024, 255, 108334.

- Ranjbarzadeh, R.; Dorosti, S.; Jafarzadeh Ghoushchi, S.; Caputo, A.; Tirkolaee, E.B.; Ali, S.S.; Arshadi, Z.; Bendechache, M. Breast Tumor Localization and Segmentation Using Machine Learning Techniques: Overview of Datasets, Findings, and Methods. Comput. Biol. Med. 2023, 152, 106443.

- Ramadan, S.Z. Methods Used in Computer-Aided Diagnosis for Breast Cancer Detection Using Mammograms: A Review. J. Healthc. Eng. 2020, 2020, 9162464.

- Jalloul, R.; Chethan, H.K.; Alkhatib, R. A Review of Machine Learning Techniques for the Classification and Detection of Breast Cancer from Medical Images. Diagnostics 2023, 13, 2460.

- Gao, Y.; Lin, J.; Zhou, Y.; Lin, R. The Application of Traditional Machine Learning and Deep Learning Techniques in Mammography: A Review. Front. Oncol. 2023, 13, 1213045.

- Abhisheka, B.; Biswas, S.K.; Purkayastha, B. A Comprehensive Review on Breast Cancer Detection, Classification and Segmentation Using Deep Learning. Arch. Comput. Methods Eng. 2023, 30, 5023–5052.

- Zebari, D.A.; Ibrahim, D.A.; Zeebaree, D.Q.; Haron, H.; Salih, M.S.; Damaševičius, R.; Mohammed, M.A. Systematic Review of Computing Approaches for Breast Cancer Detection Based Computer Aided Diagnosis Using Mammogram Images. Appl. Artif. Intell. 2021, 35, 2157–2203.

- Loizidou, K.; Elia, R.; Pitris, C. Computer-Aided Breast Cancer Detection and Classification in Mammography: A Comprehensive Review. Comput. Biol. Med. 2023, 153, 106554.

- Agrawal, S.; Oza, P.; Kakkar, R.; Tanwar, S.; Jetani, V.; Undhad, J.; Singh, A. Analysis and Recommendation System-Based on PRISMA Checklist to Write Systematic Review. Assess. Writ. 2024, 61, 100866.

- Sahu, A.; Das, P.K.; Meher, S. Recent Advancements in Machine Learning and Deep Learning-Based Breast Cancer Detection Using Mammograms. Phys. Medica 2023, 114, 103138.

- Wang, Z.; Li, M.; Wang, H.; Jiang, H.; Yao, Y.; Zhang, H.; Xin, J. Breast Cancer Detection Using Extreme Learning Machine Based on Feature Fusion with CNN Deep Features. IEEE Access 2019, 7, 105146–105158.

- Avcı, H.; Karakaya, J. A Novel Medical Image Enhancement Algorithm for Breast Cancer Detection on Mammography Images Using Machine Learning. Diagnostics 2023, 13, 348.

- Ketabi, H.; Ekhlasi, A.; Ahmadi, H. A Computer-Aided Approach for Automatic Detection of Breast Masses in Digital Mammogram via Spectral Clustering and Support Vector Machine. Phys. Eng. Sci. Med. 2021, 44, 277–290.

- Sha, Z.; Hu, L.; Rouyendegh, B.D. Deep Learning and Optimization Algorithms for Automatic Breast Cancer Detection. Int. J. Imaging Syst. Technol. 2020, 30, 495–506.

- Sannasi Chakravarthy, S.R.; Bharanidharan, N.; Rajaguru, H. Deep Learning-Based Metaheuristic Weighted K-Nearest Neighbor Algorithm for the Severity Classification of Breast Cancer. IRBM 2023, 44, 100749.

- Muduli, D.; Dash, R.; Majhi, B. Fast Discrete Curvelet Transform and Modified PSO Based Improved Evolutionary Extreme Learning Machine for Breast Cancer Detection. Biomed. Signal Process. Control 2021, 70, 102919.

- Thawkar, S.; Ingolikar, R. Classification of Masses in Digital Mammograms Using Biogeography-Based Optimization Technique. J. King Saud Univ.-Comput. Inf. Sci. 2020, 32, 1140–1148.

- Mannarsamy, V.; Mahalingam, P.; Kalivarathan, T.; Amutha, K.; Paulraj, R.K.; Ramasamy, S. Sift-BCD: SIFT-CNN Integrated Machine Learning-Based Breast Cancer Detection. Biomed. Signal Process. Control 2025, 106, 107686.

- Mohanty, F.; Rup, S.; Dash, B.; Majhi, B.; Swamy, M.N.S. An Improved Scheme for Digital Mammogram Classification Using Weighted Chaotic Salp Swarm Algorithm-Based Kernel Extreme Learning Machine. Appl. Soft Comput. J. 2020, 91, 106266.

- Thawkar, S.; Ingolikar, R. Classification of Masses in Digital Mammograms Using the Genetic Ensemble Method. J. Intell. Syst. 2020, 29, 831–845.

- Ragab, D.A.; Sharkas, M.; Attallah, O. Breast Cancer Diagnosis Using an Efficient CAD System Based on Multiple Classifiers. Diagnostics 2019, 9, 165.

- Kayode, A.A.; Akande, N.O.; Adegun, A.A.; Adebiyi, M.O. An Automated Mammogram Classification System Using Modified Support Vector Machine. Med. Devices Evid. Res. 2019, 12, 275–284.

- Han, B.; Sun, L.; Li, C.; Yu, Z.; Jiang, W.; Liu, W.; Tao, D.; Liu, B. Deep Location Soft-Embedding-Based Network with Regional Scoring for Mammogram Classification. IEEE Trans. Med. Imaging 2024, 43, 3137–3148.

- Anas, M.; Haq, I.U.; Husnain, G.; Jaffery, S.A.F. Advancing Breast Cancer Detection: Enhancing YOLOv5 Network for Accurate Classification in Mammogram Images. IEEE Access 2024, 12, 16474–16488.

- Liu, W.; Shu, X.; Zhang, L.; Li, D.; Lv, Q. Deep Multiscale Multi-Instance Networks with Regional Scoring for Mammogram Classification. IEEE Trans. Artif. Intell. 2022, 3, 485–496.

- Shu, X.; Zhang, L.; Wang, Z.; Lv, Q.; Yi, Z. Deep Neural Networks with Region-Based Pooling Structures for Mammographic Image Classification. IEEE Trans. Med. Imaging 2020, 39, 2246–2255.

- Hamed, G.; Marey, M.; Amin, S.; Tolba, M.F. Automated Breast Cancer Detection and Classification in Full Field Digital Mammograms Using Two Full and Cropped Detection Paths Approach. IEEE Access 2021, 9, 116898–116913.

- Nasir Khan, H.; Shahid, A.R.; Raza, B.; Dar, A.H.; Alquhayz, H. Multi-View Feature Fusion Based Four Views Model for Mammogram Classification Using Convolutional Neural Network. IEEE Access 2019, 7, 165724–165733.

- Le, T.L.; Bui, M.H.; Nguyen, N.C.; Ha, M.T.; Nguyen, A.; Nguyen, H.P. Transfer Learning for Deep Neural Networks-Based Classification of Breast Cancer X-Ray Images. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2024, 12, 2275708.

- Basha, A.A.; Vivekanandan, S.; Mubarakali, A.; Alqahtani, A.S. Enhanced Mammogram Classification with Convolutional Neural Network: An Improved Algorithm for Automated Breast Cancer Detection. Measurement 2023, 221, 113551.

- Lou, Q.; Li, Y.; Qian, Y.; Lu, F.; Ma, J. Mammogram Classification Based on a Novel Convolutional Neural Network with Efficient Channel Attention. Comput. Biol. Med. 2022, 150, 106082.

- Viegas, L.; Domingues, I.; Mendes, M. Study on Data Partition for Delimitation of Masses in Mammography. J. Imaging 2021, 7, 174.

- Mohammed, A.D.; Ekmekci, D. Breast Cancer Diagnosis Using YOLO-Based Multiscale Parallel CNN and Flattened Threshold Swish. Appl. Sci. 2024, 14, 2680.

- Salh, C.H.; Ali, A.M. Unveiling Breast Tumor Characteristics: A ResNet152V2 and Mask R-CNN Based Approach for Type and Size Recognition in Mammograms. Trait. Du Signal 2023, 40, 1821–1832.

- Prodan, M.; Paraschiv, E.; Stanciu, A. Applying Deep Learning Methods for Mammography Analysis and Breast Cancer Detection. Appl. Sci. 2023, 13, 4272.

- Kumbhare, S.; Kathole, A.B.; Shinde, S. Federated Learning Aided Breast Cancer Detection with Intelligent Heuristic-Based Deep Learning Framework. Biomed. Signal Process. Control 2023, 86, 105080.

- Ayana, G.; Dese, K.; Dereje, Y.; Kebede, Y.; Barki, H.; Amdissa, D.; Husen, N.; Mulugeta, F.; Habtamu, B.; Choe, S.W. Vision-Transformer-Based Transfer Learning for Mammogram Classification. Diagnostics 2023, 13, 178.

- Jiang, J.; Peng, J.; Hu, C.; Jian, W.; Wang, X.; Liu, W. Breast Cancer Detection and Classification in Mammogram Using a Three-Stage Deep Learning Framework Based on PAA Algorithm. Artif. Intell. Med. 2022, 134, 102419.

- Ibrokhimov, B.; Kang, J.Y. Two-Stage Deep Learning Method for Breast Cancer Detection Using High-Resolution Mammogram Images. Appl. Sci. 2022, 12, 4616.

- Adedigba, A.P.; Adeshina, S.A.; Aibinu, A.M. Performance Evaluation of Deep Learning Models on Mammogram Classification Using Small Dataset. Bioengineering 2022, 9, 161.

- Alruwaili, M.; Gouda, W. Automated Breast Cancer Detection Models Based on Transfer Learning. Sensors 2022, 22, 876.

- Maqsood, S.; Damaševičius, R.; Maskeliunas, R. TTCNN: A Breast Cancer Detection and Classification towards Computer-Aided Diagnosis Using Digital Mammography in Early Stages. Appl. Sci. 2022, 12, 3273.

- Montaha, S.; Azam, S.; Rakibul Haque Rafid, A.K.M.; Ghosh, P.; Hasan, M.Z.; Jonkman, M.; De Boer, F. BreastNet18: A High Accuracy Fine-Tuned VGG16 Model Evaluated Using Ablation Study for Diagnosing Breast Cancer from Enhanced Mammography Images. Biology 2021, 10, 1347.

- Xie, L.; Zhang, L.; Hu, T.; Huang, H.; Yi, Z. Neural Networks Model Based on an Automated Multi-Scale Method for Mammogram Classification. Knowl.-Based Syst. 2020, 208, 106465.

- Prinzi, F.; Insalaco, M.; Orlando, A.; Gaglio, S.; Vitabile, S. A Yolo-Based Model for Breast Cancer Detection in Mammograms. Cogn. Comput. 2024, 16, 107–120.

- Sathesh Raaj, R. Breast Cancer Detection and Diagnosis Using Hybrid Deep Learning Architecture. Biomed. Signal Process. Control 2023, 82, 104558.

- Ahmad, J.; Akram, S.; Jaffar, A.; Rashid, M.; Bhatti, S.M. Breast Cancer Detection Using Deep Learning: An Investigation Using the DDSM Dataset and a Customized AlexNet and Support Vector Machine. IEEE Access 2023, 11, 108386–108397.

- Berghouse, M.; Bebis, G.; Tavakkoli, A. Exploring the Influence of Attention for Whole-Image Mammogram Classification. Image Vis. Comput. 2024, 147, 105062.

- Yan, F.; Huang, H.; Pedrycz, W.; Hirota, K. Automated Breast Cancer Detection in Mammography Using Ensemble Classifier and Feature Weighting Algorithms. Expert Syst. Appl. 2023, 227, 120282.

- Sureshkumar, V.; Prasad, R.S.N.; Balasubramaniam, S.; Jagannathan, D.; Daniel, J.; Dhanasekaran, S. Breast Cancer Detection and Analytics Using Hybrid CNN and Extreme Learning Machine. J. Pers. Med. 2024, 14, 792.

- Kalpana, P.; Selvy, P.T. A Novel Machine Learning Model for Breast Cancer Detection Using Mammogram Images. Med. Biol. Eng. Comput. 2024, 62, 2247–2264.

- Chakravarthy, S.; Bharanidharan, N.; Khan, S.B.; Kumar, V.V.; Mahesh, T.R.; Almusharraf, A.; Albalawi, E. Multi-Class Breast Cancer Classification Using CNN Features Hybridization. Int. J. Comput. Intell. Syst. 2024, 17, 191.

- Luong, H.H.; Vo, M.D.; Phan, H.P.; Dinh, T.A.; Nguyen, L.Q.T.; Tran, Q.T.; Thai-Nghe, N.; Nguyen, H.T. Improving Breast Cancer Prediction via Progressive Ensemble and Image Enhancement. Multimed. Tools Appl. 2024, 84, 8623–8650.

- Huynh, H.N.; Tran, A.T.; Tran, T.N. Region-of-Interest Optimization for Deep-Learning-Based Breast Cancer Detection in Mammograms. Appl. Sci. 2023, 13, 6894.

- Azevedo, V.; Silva, C.; Dutra, I. Quantum Transfer Learning for Breast Cancer Detection. Quantum Mach. Intell. 2022, 4, 5.

- Lim, T.S.; Tay, K.G.; Huong, A.; Lim, X.Y. Breast Cancer Diagnosis System Using Hybrid Support Vector Machine-Artificial Neural Network. Int. J. Electr. Comput. Eng. 2021, 11, 3059–3069.

- Zhang, Y.D.; Satapathy, S.C.; Guttery, D.S.; Górriz, J.M.; Wang, S.H. Improved Breast Cancer Classification Through Combining Graph Convolutional Network and Convolutional Neural Network. Inf. Process. Manag. 2021, 58, 102439.

- Chouhan, N.; Khan, A.; Shah, J.Z.; Hussnain, M.; Khan, M.W. Deep Convolutional Neural Network and Emotional Learning Based Breast Cancer Detection Using Digital Mammography. Comput. Biol. Med. 2021, 132, 104318.

- Altameem, A.; Mahanty, C.; Poonia, R.C.; Saudagar, A.K.J.; Kumar, R. Breast Cancer Detection in Mammography Images Using Deep Convolutional Neural Networks and Fuzzy Ensemble Modeling Techniques. Diagnostics 2022, 12, 1812.

- Deshmukh, J.; Bhosle, U. A Study of Mammogram Classification Using AdaBoost with Decision Tree, KNN, SVM and Hybrid SVM-KNN as Component Classifiers. J. Inf. Hiding Multimed. Signal Process. 2018, 9, 548–557.

- Niranjana, R.; Ravi, A.; Sivadasan, J. Performance Analysis of Novel Hybrid Deep Learning Model IEU Net++ for Multiclass Categorization of Breast Mammogram Images. Biomed. Signal Process. Control 2025, 105, 107607.

- Muduli, D.; Dash, R.; Majhi, B. Automated Breast Cancer Detection in Digital Mammograms: A Moth Flame Optimization Based ELM Approach. Biomed. Signal Process. Control 2020, 59, 101912.

- Vijayarajeswari, R.; Parthasarathy, P.; Vivekanandan, S.; Basha, A.A. Classification of Mammogram for Early Detection of Breast Cancer Using SVM Classifier and Hough Transform. Measurement 2019, 146, 800–805.

- Falconi, L.G.; Perez, M.; Aguilar, W.G.; Conci, A. Transfer Learning and Fine Tuning in Breast Mammogram Abnormalities Classification on CBIS-DDSM Database. Adv. Sci. Technol. Eng. Syst. 2020, 5, 154–165.

- Logan, J.; Kennedy, P.J.; Catchpoole, D. A Review of the Machine Learning Datasets in Mammography, Their Adherence to the FAIR Principles and the Outlook for the Future. Sci. Data 2023, 10, 595.

- Chris, C.; Felipe, K.; George, P.; Jayashree, K.-C.; John, M.; Katherine, A.; Lavender; Maryam, V.; Michelle, R.; Robyn, B.; et al. RSNA Screening Mammography Breast Cancer Detection. Available online: https://kaggle.com/competitions/rsna-breast-cancer-detection (accessed on 24 October 2024).

- Chakraborty, S.; Das, H. Performance Analysis of Feature Extraction Techniques for Medical Data Classification. In Proceedings of the Advances in Power Systems and Energy Management; Priyadarshi, N., Padmanaban, S., Ghadai, R.K., Panda, A.R., Patel, R., Eds.; Springer Nature: Singapore, 2021; pp. 387–401.

- Hassooni, A.J.; Naser, M.A.; Al-Mamory, S.O. A Proposed Method for Feature Extraction to Enhance Classification Algorithms Performance. In Proceedings of the New Trends in Information and Communications Technology Applications; Al-Bakry, A.M., Al-Mamory, S.O., Sahib, M.A., Hasan, H.S., Oreku, G.S., Nayl, T.M., Al-Dhaibani, J.A., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 157–166.

- Mishra, S.; Prakash, M. Digital Mammogram Inferencing System Using Intuitionistic Fuzzy Theory. Comput. Syst. Sci. Eng. 2022, 41, 1099–1115.

- Shinde, V.D.; Rao, B.T. A Novel Approach to Mammogram Classification Using Spatio-Temporal and Texture Feature Extraction Using Dictionary Based Sparse Representation Classifier. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 320–332.

- Pattnaik, R.K.; Siddique, M.; Mishra, S.; Gelmecha, D.J.; Singh, R.S.; Satapathy, S. Breast Cancer Detection and Classification Using Metaheuristic Optimized Ensemble Extreme Learning Machine. Int. J. Inf. Technol. 2023, 15, 4551–4563.

- Gudhe, N.R.; Behravan, H.; Sudah, M.; Okuma, H.; Vanninen, R.; Kosma, V.M.; Mannermaa, A. Area-Based Breast Percentage Density Estimation in Mammograms Using Weight-Adaptive Multitask Learning. Sci. Rep. 2022, 12, 12060.

- Thakur, N.; Kumar, P.; Kumar, A. Reinforcement Learning (RL)-Based Semantic Segmentation and Attention Based Backpropagation Convolutional Neural Network (ABB-CNN) for Breast Cancer Identification and Classification Using Mammogram Images. Neural Comput. Appl. 2024, 36, 14797–14823.

- Wei, T.; Aviles-Rivero, A.I.; Wang, S.; Huang, Y.; Gilbert, F.J.; Schönlieb, C.B.; Chen, C.W. Beyond Fine-Tuning: Classifying High Resolution Mammograms Using Function-Preserving Transformations. Med. Image Anal. 2022, 82, 102618.

- Anari, S.; Sadeghi, S.; Sheikhi, G.; Ranjbarzadeh, R.; Bendechache, M. Explainable Attention Based Breast Tumor Segmentation Using a Combination of UNet, ResNet, DenseNet, and EfficientNet Models. Sci. Rep. 2025, 15, 1027.

- Saber, A.; Sakr, M.; Abo-Seida, O.M.; Keshk, A.; Chen, H. A Novel Deep-Learning Model for Automatic Detection and Classification of Breast Cancer Using the Transfer-Learning Technique. IEEE Access 2021, 9, 71194–71209.

- Sushanki, S.; Bhandari, A.K.; Singh, A.K. A Review on Computational Methods for Breast Cancer Detection in Ultrasound Images Using Multi-Image Modalities. Arch. Comput. Methods Eng. 2024, 31, 1277–1296.

- Sahu, A.; Das, P.K.; Meher, S. An Efficient Deep Learning Scheme to Detect Breast Cancer Using Mammogram and Ultrasound Breast Images. Biomed. Signal Process. Control 2024, 87, 105377.

- Atrey, K.; Singh, B.K.; Bodhey, N.K. Integration of Ultrasound and Mammogram for Multimodal Classification of Breast Cancer Using Hybrid Residual Neural Network and Machine Learning. Image Vis. Comput. 2024, 145, 104987.

- Xu, C.; Qi, Y.; Wang, Y.; Lou, M.; Pi, J.; Ma, Y. ARF-Net: An Adaptive Receptive Field Network for Breast Mass Segmentation in Whole Mammograms and Ultrasound Images. Biomed. Signal Process. Control 2022, 71, 103178.

- Mann, R.M.; Cho, N.; Moy, L. Breast MRI: State of the Art. Radiology 2019, 292, 520–536.

- Hussain, S.; Ali, M.; Naseem, U.; Avalos, D.B.A.; Cardona-Huerta, S.; Tamez-Pena, J.G. Multiview Multimodal Feature Fusion for Breast Cancer Classification Using Deep Learning. IEEE Access 2024, 13, 9265–9275.

- Qasem, A.; Sahran, S.; Abdullah, S.N.H.S.; Albashish, D.; Hussain, R.I.; Arasaratnam, S. Heterogeneous Ensemble Pruning Based on Bee Algorithm for Mammogram Classification. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 231–239.

- Chen, F.Y.; Hsu, Y.J.; Lu, C.H.; Shuai, H.H.; Yeh, L.Y.; Shen, C.Y. Compressing Deep Neural Networks with Goal-Specific Pruning and Self-Distillation. ACM Trans. Knowl. Discov. Data 2025, 19, 1–27.

- Dinsdale, N.K.; Jenkinson, M.; Namburete, A.I.L. STAMP: Simultaneous Training and Model Pruning for Low Data Regimes in Medical Image Segmentation. Med. Image Anal. 2022, 81, 102583.

- Blott, M.; Fraser, N.J.; Gambardella, G.; Halder, L.; Kath, J.; Neveu, Z.; Umuroglu, Y.; Vasilciuc, A.; Leeser, M.; Doyle, L. Evaluation of Optimized CNNs on Heterogeneous Accelerators Using a Novel Benchmarking Approach. IEEE Trans. Comput. 2021, 70, 1654–1669.

- Wang, X.; Liang, G.; Zhang, Y.; Blanton, H.; Bessinger, Z.; Jacobs, N. Inconsistent Performance of Deep Learning Models on Mammogram Classification. J. Am. Coll. Radiol. 2020, 17, 796–803.

- Barba, D.; León-Sosa, A.; Lugo, P.; Suquillo, D.; Torres, F.; Surre, F.; Trojman, L.; Caicedo, A. Breast Cancer, Screening and Diagnostic Tools: All You Need to Know. Crit. Rev. Oncol. Hematol. 2021, 157, 103174.

- Santos, C.S.; Amorim-Lopes, M. Externally Validated and Clinically Useful Machine Learning Algorithms to Support Patient-Related Decision-Making in Oncology: A Scoping Review. BMC Med. Res. Methodol. 2025, 25, 45.

- Zhang, B.; Rigall, E.; Huang, Y.; Zou, X.; Zhang, S.; Dong, J.; Yu, H. A Method for Breast Mass Segmentation Using Image Augmentation with SAM and Receptive Field Expansion. In Proceedings of the ACM International Conference Proceeding Series; Association for Computing Machinery: New York, NY, USA, 2023; pp. 387–394.

- Wang, Z.; Wu, Z.; Agarwal, D.; Sun, J. MedCLIP: Contrastive Learning from Unpaired Medical Images and Text. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; Association for Computational Linguistics (ACL): Kerrville, TX, USA, 2022; pp. 3876–3887.

| No. | Ref. | Datasets | Segmentation Method | Feature Extraction and Selection | Classification Methods | Result | Limitation |

|---|---|---|---|---|---|---|---|

| 1 | [16] | 400 private mammogram images | Not applicable | Morphological, texture, and density features | Extreme Learning Machine (ELM) | Benign and malignant categories: Accuracy: 96.2%, sensitivity: 95.8%, and specificity: 96.6% | Manual extraction of features (such as morphological features), which can still be prone to human error or variability based on expert experience. |

| 2 | [17] | Mammographic Image Analysis Society (MIAS) | k-means clustering algorithm | Gray Level Co-occurrence Matrix (GLCM) and Gray Level Run Length Matrix (GLRLM) | SVM, RF, ANN, k-NN, Naive Bayes (NB), DT | Normal vs. Abnormal, and benign vs. malignant: SVM, RF, and Neural Networks showed the best performance | Reliance on the mini-MIAS dataset, which is relatively small, potentially limiting the generalizability of the results to larger datasets or more diverse populations. |

| 3 | [18] | DDSM dataset | Spectral clustering | Feature extraction: GLCM, Feature selection: Genetic Algorithm (GA) | SVM: classify regions as mass or non-mass | Sensitivity: 89.5%, Specificity: 91.2%, Accuracy: 90% | Spectral clustering method may struggle with complex mass boundaries and overlapping tissues, leading to reduced accuracy in highly heterogeneous breast images. |

| 4 | [19] | MIAS, DDSM | CNN optimized by the Grasshopper Optimization Algorithm (GOA) | Geometric features, texture features, statistical features. GOA is used for feature selection | SVM | Sensitivity: 96%, Specificity: 93%, Accuracy: 92% | The learning time is relatively high due to the large number of iterations required for optimization |

| 5 | [20] | MIAS, INbreast | Not applicable | Feature extraction: ResNet18 extracts 512 features from each mammogram Feature selection: PSO, DFOA, CSOA | Weighted K-Nearest Neighbor (wKNN) | Benign and malignant classification: MIAS: accuracy: 84.35% using CSOA-wKNN INbreast: accuracy: 83.19% using CSOA-wKNN | The computational complexity of metaheuristic algorithms, particularly DFOA, which requires significant parameter tuning and exhibits a slower convergence rate compared to PSO and CSOA. |

| 6 | [21] | MIAS, DDSM, INbreast | Not applicable | Feature extraction: Fast discrete curvelet transform (FDCT-WRP) Feature selection: PCA and LDA | Extreme Learning Machine (ELM) | Benign vs. malignant classification: Accuracy achieved on MIAS: 100%. Accuracy on DDSM: 98.94%. Accuracy on INbreast: 98.76%. | ELM model’s complexity can be an issue, particularly regarding the computational cost involved in feature extraction and optimization |

| 7 | [22] | DDSM | Not applicable | Feature Extraction: Intensity-based Features, texture-based Features, shape-based Features Feature Selection: Biogeography-Based Optimization (BBO) | Adaptive Neuro-Fuzzy Inference System (ANFIS), ANN | Benign vs. malignant classification: Accuracy: 98.92% Sensitivity: 99.10% Specificity: 98.72% | The model was tested on a relatively small dataset, and the study did not explore other advanced classifiers or deeper neural networks |

| 8 | [23] | CBIS-DDSM | RoI-based U-Net | Deep learning-based Scale Invariant Feature Transform (SIFT) | Fuzzy Decision Tree (FDT) | Normal, benign, and malignant: Accuracy: 99.2%, Sensitivity: 95.36%, Specificity: 97.41% | Relies on a handcrafted feature (SIFT), which may limit performance; generalizability is restricted because the method was tested only on CBIS-DDSM. |

| 9 | [24] | MIAS, DDSM, BCDR | Not applicable | Feature extraction: Discrete Wavelet Packet Transform (DWPT). Feature selection: PCA and Weighted Chaotic Salp Swarm Algorithm (WC-SSA) | Kernel Extreme Learning Machine (KELM) | Normal vs. Abnormal Classification: MIAS dataset: Accuracy of 99.62%. DDSM dataset: Accuracy of 99.92%. Benign vs. Malignant Classification: MIAS dataset: Accuracy of 99.28%. DDSM dataset: Accuracy of 99.63%. | The combination of wavelet-based feature extraction and the complex WC-SSA optimization adds computational overhead |

| 10 | [25] | DDSM | Not applicable | Feature Extraction: Intensity-based Features, texture-based Features, and shape-based Features Feature Selection: Genetic Algorithm (GA) | AdaBoost, RF, and DT. | Benign and malignant classification: AdaBoost achieved: Accuracy: 96.15%. Random Forest achieved: Accuracy: 92.70% | The use of Genetic Algorithms, along with ensemble methods, increases the computational complexity of the model |

| 11 | [26] | MIAS, and Digital Mammography DREAM Challenge Dataset | Not applicable | Feature Extraction: Statistical features. Feature Selection: Best First Search | k-NN, Decision Trees (J48, Random Forest, Random Tree). | Normal vs. abnormal classification: Adaboosting J48, Decision Tree, and Random Forest: Accuracy: 100%. AUC: 1.000 (MIAS dataset). | Although data augmentation was applied, the number of abnormal samples was still limited compared to normal ones |

| 12 | [27] | MIAS Dataset | Thresholding-based segmentation | Feature Extraction: Gray Level Co-occurrence Matrix (GLCM) Feature Selection: Genetic Algorithm (GA) | Modified SVM | Accuracy: 96.34%. Sensitivity: 94.28% (ability to correctly detect malignant cases). | The study used a relatively small dataset (322 images), which could limit the generalizability of the results when applied to larger or more diverse datasets |
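
Several of the machine learning studies in the table above (for example [17], [18], and [27]) derive texture features from a Gray Level Co-occurrence Matrix (GLCM). As a minimal sketch of that idea, assuming a tiny image already quantized to a few gray levels and a single horizontal pixel offset, the following Python builds a normalized symmetric GLCM and three common texture descriptors. The function names, the toy patch, and the choice of descriptors are illustrative assumptions and are not taken from any reviewed study.

```python
from collections import Counter


def glcm(image, dx=1, dy=0, symmetric=True):
    """Return a normalized co-occurrence matrix as a dict {(i, j): probability}.

    image is a list of rows of integer gray levels; (dx, dy) is the pixel offset.
    """
    counts = Counter()
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = image[r][c], image[r2][c2]
                counts[(a, b)] += 1
                if symmetric:
                    # Count the pair in both directions so the matrix is symmetric.
                    counts[(b, a)] += 1
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}


def texture_features(p):
    """Compute contrast, angular second moment (ASM), and homogeneity from a GLCM."""
    contrast = sum(prob * (i - j) ** 2 for (i, j), prob in p.items())
    asm = sum(prob ** 2 for prob in p.values())
    homogeneity = sum(prob / (1 + (i - j) ** 2) for (i, j), prob in p.items())
    return {"contrast": contrast, "asm": asm, "homogeneity": homogeneity}


# Hypothetical 4 x 4 patch with gray levels 0..3 (not real mammogram data).
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
features = texture_features(glcm(patch))
print(features)
```

In practice such descriptors would be computed over several offsets and angles on a segmented region of interest and then passed to a classifier such as an SVM; those steps are omitted here.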

| No. | Ref. | Datasets | Segmentation Method | Feature Extraction | Classification Methods | Result | Limitation |

|---|---|---|---|---|---|---|---|

| 1 | [28] | CBIS-DDSM, INbreast | Otsu Thresholding | CNN: DenseNet-169 | Deep Location Soft-Embedding-Based Network-Regional Scoring (DLSEN-RS) | Benign, and malignant: INbreast dataset: accuracy: 91.5%. CBIS-DDSM dataset: accuracy: 89.4%. | A limitation of the DLSEN-RS model is the challenge of determining the optimal k value. If “k” is too small, important information might be missed, and if too large, redundant and irrelevant features may be selected, leading to reduced performance. |

| 2 | [29] | INbreast, CBIS-DDSM, BNS | Mask R-CNN | YOLOv5 and Mask R-CNN | Mask R-CNN | Benign and malignant: False Positive Rate (FPR): 0.049%; False Negative Rate (FNR): 0.029% | Computational complexity associated with training both YOLOv5 and Mask R-CNN |

| 3 | [30] | INbreast and HX dataset (private dataset) | Otsu segmentation | Pretrained DenseNet model | Deep Multiscale Multi-Instance Networks | Benign and malignant: INbreast dataset: Accuracy: 93.2%. HX dataset: Accuracy: 87.2% | Performance depends on the choice of k, which determines the number of patches selected for classification. A suboptimal value of k could lead to the inclusion of irrelevant regions, reducing performance. |

| 4 | [31] | INbreast, CBIS-DDSM | Otsu segmentation | DenseNet (ImageNet-pretrained) | DenseNet (ImageNet-pretrained) | Benign and malignant: INbreast dataset: Accuracy: 91.6%; CBIS-DDSM dataset: Accuracy: 83.9% | Dividing the image into many smaller regions and calculating probabilities for each increases the time and computational resources needed for processing. |

| 5 | [32] | INbreast Dataset | Not applicable | YOLO-V4 | ResNet, VGG, and InceptionNet. | Benign, and malignant: Inception-V3 accuracy: 98% | The need for significant computational resources for processing large mammograms and handling cropped slices, which may slow down real-time applications. |

| 6 | [33] | MIAS, CBIS-DDSM | Not applicable | CNN | Various CNN architectures (VGGNet, ResNet, and GoogLeNet). | AUC of 0.932 for mass and calcification and 0.84 for malignant and benign. | Computational complexity of fusing multi-view data. |

| 7 | [34] | DDSM, Hanoi Medical University (HMU) dataset | Not applicable | ResNet-34 | ResNet-34 | Normal, benign, and malignant: macAUC of 0.766 | The limited availability of annotated mammogram datasets, which restricts the fine-tuning process and may limit the generalization of the model. |

| 8 | [35] | Unknown mammogram dataset | Morphological clustering, dilation and erosion | Gray Level Run Length Matrix (GLRLM) | Kernel-Based Convolutional Neural Network (KBCNN) | Benign and malignant accuracy: 95% | The computational cost is associated with using KBCNN, which may hinder real-time processing. |

| 9 | [36] | INbreast Dataset | Not applicable | ECA-Net50 model | Efficient Channel Attention (ECA-Net50) | Benign and malignant categories: Accuracy: 92.9%. Precision: 88.3%. | The study relies on the INbreast dataset, which is relatively small compared to large-scale datasets. |

| 10 | [37] | INbreast Dataset | Mask R-CNN (Region-based Convolutional Neural Network) | Not applicable | Mask R-CNN framework | Benign and malignant: True Positive Rate (TPR) of 0.936 with a standard deviation of 0.063 | The small size of the INbreast dataset, which may not provide enough diversity to fully train modern deep learning models like Mask R-CNN. |

| 11 | [38] | CBIS-DDSM, INbreast | Not applicable | Parallel Feature Extraction Stem (PFES), Dense Connection Blocks (DCB) and Inception Blocks (IB) | YOLO-based multiscale parallel CNN architecture. | INbreast Dataset: Accuracy: 98.72% | YOLO model can be biased toward smaller lesions due to its default loss function |

| 12 | [39] | 510 digital mammogram images collected from Erbil hospital (Zheen) | Mask R-CNN | ResNet152V2 | CNN: ResNet152V2, and Mask R-CNN | ResNet152V2: Accuracy: 100% for classifying breast density types and distinguishing normal or abnormal tissue. | The difficulty in detecting tumors in extremely dense breasts (types C and D), which may affect the system’s accuracy in these specific cases. |

| 13 | [40] | Radiological Society of North America (RSNA) | Not applicable | CNN-based architectures (ResNet18, ResNet34, ResNet152, EfficientNetB0, MaxViT) | Multiple pre-trained models (ResNet, EfficientNet, and MaxViT) | Normal and abnormal categories: ResNet18: Accuracy: 94% EfficientNetB0: Accuracy: 95% MaxViT: Accuracy: 89% | The scaling of images to 256 × 256 and 512 × 512 pixels, which might reduce classification performance |

| 14 | [41] | CBIS-DDSM Dataset | Not applicable | DenseNet | Enhanced Recurrent Neural Network (E-RNN) | Benign and malignant categories: Accuracy: 95% Matthews Correlation Coefficient (MCC): 91% | Difficulty in balancing data across institutions in federated learning setups and the challenge of optimizing communication between clients and the central server. |

| 15 | [42] | DDSM Dataset | Not applicable | Vision transformers (ViT) | Vision transformers | Benign and malignant categories: Accuracy: 1.00 ± 0 | Vision transformers require high computational resources, particularly in large variants like ViT-large. |

| 16 | [43] | CBIS-DDSM, INbreast, and MIAS | The Probabilistic Anchor Assignment (PAA) algorithm, an anchor-free object detection approach | EfficientNet-B3 as the backbone network | Faster R-CNN and PAA | AUC for ROI classifier: 0.889. | The non-maximum suppression (NMS) and weighted box fusion (WBF) algorithms used in post-processing may still miss detections in dense breasts. |

| 17 | [44] | INbreast and private dataset | Square small patches (sliding window) are generated from the region | Not applicable | Faster R-CNN | Mean Average Precision (mAP): 0.94 | Although the model achieves high accuracy, the use of small patches might still miss certain lesions if not appropriately handled during training. |

| 18 | [45] | INbreast Dataset | Not applicable | DenseNet and AlexNet | DenseNet and AlexNet | Benign and malignant categories: DenseNet: accuracy: 99.8%. AlexNet: accuracy: 98.8% | The study does not address potential biases in the dataset that could arise from its small size and class imbalance. |

| 19 | [46] | MIAS Dataset | Not applicable | Not applicable | NASNet-Mobile: classifying mammographic images into benign or malignant categories. Modified ResNet50 (MOD-RES): fine-tuned for classifying breast masses | MOD-RES: accuracy: 89.5%. NASNet-Mobile: accuracy: 70% | The models are trained on a relatively small dataset, so they might not generalize well to larger and more diverse populations |

| 20 | [47] | MIAS, DDSM, INbreast Dataset | Not applicable | CNN models: InceptionResNet-V2, Inception-V3, VGG-16, VGG-19, GoogLeNet, ResNet-18, ResNet-50, and ResNet-101 | Transferable Texture Convolutional Neural Network (TTCNN) | Benign and malignant categories: DDSM: Accuracy: 99.08%. INbreast: Accuracy: 96.82%. MIAS: Accuracy: 96.57% | Though the proposed method shows significant improvement, there is still room for enhancing accuracy, especially in detecting smaller tumors at very early stages. |

| 21 | [48] | CBIS-DDSM | Not applicable | CNN: VGG16 network | BreastNet18 model, which is a fine-tuned version of VGG16. | Benign and malignant: Training accuracy: 96.72% Validation accuracy: 97.91% Test accuracy: 98.02% | The relatively small size of the dataset could lead to overfitting despite data augmentation |

| 22 | [49] | INbreast | Not applicable | CNN: DenseNet121 and MobileNet | CNN: DenseNet121 and MobileNet | DenseNet Accuracy: 96.34% MobileNet Accuracy: 95.12% | Although DenseNet provides excellent performance, it is computationally expensive. While MobileNet is more efficient, its performance is slightly lower. |

| 23 | [50] | CBIS-DDSM, INbreast, and private dataset collected from University Hospital “Paolo Giaccone,” Palermo, Italy | YOLO-based model | YOLO model | YOLO-based model | Benign and malignant: CBIS-DDSM dataset: mAP (mean Average Precision) of 0.498. INbreast dataset: mAP of 0.835 | The proprietary dataset is heavily imbalanced, with 82.4% of the lesions being malignant, which may affect the model’s ability to generalize well |
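
Otsu thresholding appears as the segmentation step in several of the deep learning pipelines listed above ([28], [30], [31]). The sketch below shows the core of the method under simple assumptions: pixels are given as a flat list of integer gray levels in the range 0 to 255, and the threshold is chosen to maximize the between-class variance of the two resulting groups. The function name and the toy pixel values are hypothetical and do not reproduce any reviewed implementation.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes between-class variance.

    Pixels with value <= t form the background class; the rest form the foreground.
    """
    hist = [0] * levels
    for value in pixels:
        hist[value] += 1

    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0  # running pixel count and intensity sum of the background class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue  # no background pixels yet
        if w1 == 0:
            break  # no foreground pixels left
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        # Between-class variance, up to a constant factor of 1 / total**2.
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


# Toy data: a dark cluster (background) and a bright cluster (candidate tissue region).
pixels = [10] * 6 + [12] * 4 + [200] * 5 + [210] * 5
t = otsu_threshold(pixels)
mask = [1 if v > t else 0 for v in pixels]
print(t, sum(mask))
```

When several thresholds give the same variance, this sketch keeps the lowest one; library implementations may resolve ties differently.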

| No. | Ref. | Datasets | Segmentation Method | Feature Extraction | Classification Methods | Result | Limitation |

|---|---|---|---|---|---|---|---|

| 1 | [52] | DDSM dataset | Not applicable | CNN: AlexNet | BreastNet + SVM | Benign, and malignant categories: Accuracy: 99.16% Sensitivity: 97.13% Specificity: 99.30% | The model’s performance may be impacted by the choice of optimizers, with varying results based on hyperparameter tuning. |

| 2 | [53] | CBIS-DDSM, INbreast dataset | Not applicable | Not applicable | Attention mechanisms + CNN architectures (ResNet50, DenseNet169, RegNetX064) | CBIS-DDSM dataset: DenseNet169 + Attention Module: AU-ROC of 0.79. INbreast dataset: DenseNet169 + Squeeze and Excitation (SE): AU-ROC of 0.88 | Attention improved performance inconsistently across different datasets and abnormality types. Additionally, complex models with higher pooling tended to overfit on smaller datasets, and certain attention mechanisms such as convolutional bottleneck attention module (CBAM) were harder to optimize. |

| 3 | [54] | MIAS and DDSM dataset | Thresholding and region growing method | GLCM, shape and margin features. | An ensemble classifier model including KNN, bagging, and EigenClass algorithms | Normal, benign, or malignant categories: DDSM’s accuracy: 93.26%. MIAS’s accuracy: 91%. | Computational complexity due to the ensemble classification and feature weighting algorithms. |

| 4 | [55] | MIAS Dataset | A Gabor filter | CNN model | CNN + Extreme Learning Machine (ELM) | Benign and malignant categories: The hybrid CNN-ELM model Accuracy: 86% on the MIAS dataset. | The model was tested on a relatively small dataset (MIAS dataset with 322 images), which may limit its generalizability to larger datasets. |

| 5 | [56] | MIAS, INbreast, BCDR Dataset | Not applicable | Probabilistic Principal Component Analysis (PPCA) | Naïve Bayes + Firefly Binary Grey Optimization (FBGO), Transfer Convolutional Neural Network (TCNN) + Moth Flame Lion Optimization (MMFLO) | Benign and malignant categories: Naïve Bayes + FBGO (MIAS dataset): Accuracy: 96.3% TCNN + MMFLO (MIAS dataset): Accuracy: 98% | The high computational complexity associated with the ensemble model, especially when combining both Naïve Bayes and TCNN models. |

| 6 | [57] | MIAS, INbreast, CBIS-DDSM Dataset | Not applicable | CNN architecture: VGG16, VGG19, ResNet50, and DenseNet121 | CNN hybrid approach: VGG16, VGG19, ResNet50, DenseNet121. | Normal, benign, and malignant categories: MIAS accuracy: 98.70%; INbreast accuracy: 98.83%. | Computational complexity due to the combination of multiple CNN models. Additionally, the model had slight difficulty distinguishing malignant cases from normal and benign cases. |

| 7 | [58] | CBIS-DDSM Dataset | Not applicable | Pre-trained models (ResNet-50, EfficientNet-B5, and Xception) | ResNet-50 + Xception + EfficientNet-B5. | Mass/calcification classification: accuracy: 91.36%. Benign/malignant classification: accuracy: 76.79%. | Computational complexity due to the combination of multiple CNN models. |

| 8 | [59] | VinDr-Mammo, DDSM, CMMD, CDD-CESM, BMCD, RSNA | YOLOX | The features for classification are extracted using two CNN architectures: EfficientNet and ConvNeXt | EfficientNet-B7 + ConvNeXt-101 | VinDr-Mammo’s accuracy: 90% CMMD’s accuracy: 92% BMCD’s accuracy: 92% | The primary limitation of the study lies in the reliance on gradCAM for visualizing the important regions of the ROIs, which may sometimes produce noisy or incomplete heat maps. |

| 9 | [60] | Breast Cancer Digital Repository (BCDR) dataset | Not applicable | Pre-trained classical neural networks (ResNet18) | Combination of pre-trained classical models (ResNet18) + quantum neural networks | Normal and abnormal categories: Accuracy: 84%. | The study notes that quantum devices are still in the early stages of development (NISQ era), and the results from real quantum devices showed slightly lower accuracy (81%) compared to the quantum simulator (84%). |

| 10 | [61] | MIAS Dataset | Not applicable | GLCM and statistical features | A hybrid model combining SVM for the first stage of classification (normal vs. abnormal) and ANN for the second stage (benign vs. malignant) | Hybrid SVM + ANN model: Accuracy: 99.4% for normal vs. abnormal classification. | The use of only 160 mammograms for training and testing limits the generalizability of the model. The small dataset size could introduce overfitting or bias in the model’s performance. |

| 11 | [62] | MIAS Dataset | Not applicable | Convolutional Neural Network (CNN) + Graph Convolutional Network (GCN) | CNN + Graph Convolutional Network (GCN) | Sensitivity: 96.20 ± 2.90% Specificity: 96.00 ± 2.31% Accuracy: 96.10 ± 1.60% | The dataset was imbalanced, with fewer abnormal images (113) compared to normal images (209), which may affect the model’s generalizability |

| 12 | [63] | IRMA Dataset | Not applicable | Statistical Features, Local Binary Pattern (LBP) Features, Taxonomic Features, and Dynamic Features | SVM + Emotional Learning inspired Ensemble Classifier (ELiEC) | Accuracy: 80.54%. | While hybrid features improved accuracy, the margin of improvement was around 2–3%, which, while notable, may still leave room for optimization. |

| 13 | [64] | BCDR, MINI-MIAS, DDSM, INbreast Dataset | Not applicable | Deep convolutional neural networks (CNN): VGG-11, ResNet-164, DenseNet121, and Inception V4 | Combination of fuzzy ensemble modeling + deep CNNs (VGG-11, ResNet-164, DenseNet121, and Inception V4) | Normal, benign, and malignant categories: Accuracy: 99.32%. | The use of multiple CNNs in an ensemble increases computational complexity. |

| 14 | [65] | MIAS, DDSM Dataset | Not applicable | Feature Extraction: GLCM Feature Selection: semi-supervised K-means clustering algorithm | AdaBoost combined with multiple base classifiers: Decision Tree (DT), k-Nearest Neighbors (KNN), Support Vector Machine (SVM), and hybrid SVM-KNN | Benign and malignant classification: DDSM Dataset: AdaBoost with hybrid SVM-KNN: Accuracy: 90.625%. | The study relies on manual ROI segmentation, which may limit its applicability in fully automated systems. |

| 15 | [66] | MIAS, INbreast, CBIS-DDSM | IEUNet++ | Not applicable | Hybrid deep learning IEUNet++ | Normal, benign, malignant: INbreast: Accuracy: 99.87%, Sensitivity: 99.77%, Specificity: 99.8% | Computationally expensive due to ensemble of InceptionResNetV2 + EfficientNetB7. |
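
Many of the hybrid and ensemble entries above combine the outputs of several base models. The reviewed studies use a range of fusion strategies (fuzzy ensembles, AdaBoost, stacked networks), so the following is only a generic illustration: a soft-voting sketch that averages per-model class probabilities, optionally with weights, and picks the class with the highest averaged score. The function name, class labels, probabilities, and weights are all hypothetical.

```python
def soft_vote(prob_lists, weights=None):
    """Average class probabilities across models and return (label_index, averaged_probs).

    prob_lists holds one probability vector per model, all of the same length.
    weights, if given, holds one non-negative weight per model.
    """
    if weights is None:
        weights = [1.0] * len(prob_lists)
    total_weight = sum(weights)
    n_classes = len(prob_lists[0])
    averaged = [
        sum(w * probs[k] for w, probs in zip(weights, prob_lists)) / total_weight
        for k in range(n_classes)
    ]
    label_index = max(range(n_classes), key=averaged.__getitem__)
    return label_index, averaged


CLASSES = ["normal", "benign", "malignant"]

# Hypothetical softmax outputs of three base models for one mammogram.
model_outputs = [
    [0.10, 0.60, 0.30],
    [0.05, 0.35, 0.60],
    [0.10, 0.30, 0.60],
]

label, averaged = soft_vote(model_outputs)
print(CLASSES[label], averaged)

# Giving the first model three times the weight of the others changes the decision.
weighted_label, _ = soft_vote(model_outputs, weights=[3.0, 1.0, 1.0])
print(CLASSES[weighted_label])
```

The example also hints at the computational cost noted in the limitation column: every base model must be evaluated for each image before the votes can be combined.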

| Method Approach | Accuracy Range | Sensitivity Range | Precision Range | Specificity Range |

|---|---|---|---|---|

| Machine Learning | 82.42–100% (normal and abnormal class) | 86.67–99.1% | 82.42–83.87% | 83.33–98.72% |

| Deep Learning | 70–100% (normal and abnormal class) | X | 88.3–99.16% | X |

| Hybrid | 74.96–99.87% (normal, benign, malignant) | 96.2–99.77% | X | 96–99.8% |
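
The ranges above mix accuracy, sensitivity, precision, and specificity, and one deep learning study also reports the Matthews Correlation Coefficient (MCC). As a reminder of how these quantities relate for a binary task, the sketch below computes them from the four cells of a confusion matrix. The counts are invented for illustration, and the function assumes every denominator is non-zero except for the MCC, which is set to 0 when undefined.

```python
import math


def binary_metrics(tp, fp, tn, fn):
    """Compute common binary classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # true positive rate (recall)
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)     # positive predictive value
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "mcc": mcc,
    }


# Hypothetical test set: 100 malignant and 100 benign cases.
m = binary_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

Because binary accuracy is a prevalence-weighted average of sensitivity and specificity, it always lies between the two; this gives a quick consistency check when comparing reported results.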

| Approaches | Datasets | Pre-Processing | Feature Extraction | Feature Selection | Optimization | Classification | Accuracy |

|---|---|---|---|---|---|---|---|

| Machine learning [21] | MIAS, DDSM, INbreast | X | Fast discrete curvelet transform (FDCT-WRP) | Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) | Modified Particle Swarm Optimization | Extreme Learning Machine (ELM) | 100% (benign and malignant class) |

| Deep learning [42] | DDSM | Data balancing (augmentation), color jitter, gamma correction, salt and pepper noise | Vision transformers and pretrained weights from ImageNet | X | X | Vision transformer | 100% (benign and malignant class) |

| Hybrid/Ensemble [66] | MIAS, CBIS-DDSM, INbreast Dataset | Segmentation: IEUNet++ | X | X | X | InceptionResNet + EfficientNetB7 + UNet (IEUNet++) | 99.87% (normal, benign, and malignant) |

| Datasets | Total Images | Lesion Type | Image Category | Usage in This Review Article | Remarks |

|---|---|---|---|---|---|

| MIAS/MINI-MIAS | 322 | All kinds | Normal, Benign, and Malignant | 23 studies | Classic, widely used despite small size |

| DDSM | 10,480 | Mass, calcification | Normal, Benign, and Malignant | 23 studies | One of the earliest large datasets; image quality is relatively low |

| CBIS-DDSM | 3012 | Mass, calcification | Benign, Malignant | 14 studies | Curated, more consistent version of DDSM |

| INbreast | 410 | All kinds | Normal, Benign, and Malignant | 21 studies | High-quality, pixel-level annotation |

| BCDR | 7315 | All kinds | Normal, Cancer | 4 studies | Includes RoI annotation |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fajrin, H.R.; Min, S.D. From Machine Learning to Ensemble Approaches: A Systematic Review of Mammogram Classification Methods. Diagnostics 2025, 15, 2829. https://doi.org/10.3390/diagnostics15222829
