1. Introduction

Acute kidney injury (AKI) represents a significant adverse event among hospitalized patients [1]. Given that kidney function is frequently affected by cardiovascular dysfunction [2], sepsis [3], autoimmune diseases, and various causes of circulatory collapse [4], AKI serves as a critical indicator of in-hospital mortality and prolonged hospital stays [5]. Consequently, the early detection of AKI is a strategic approach for enhancing patient outcomes in hospital settings [6,7].

The implementation of an electronic AKI alert system holds considerable promise for early detection. A multicenter cohort study demonstrated that such a system improved renal function recovery in patients admitted to the intensive care units [8]. Another multicenter study reported that the use of a computerized decision support system was associated with reduced in-hospital mortality, fewer dialysis sessions, and shorter hospital stays [9]. In a prospective study conducted in Korea, an electronic AKI alert system linked to automated nephrologist consultation revealed that early consultation and intervention by a nephrologist increased the likelihood of renal recovery from AKI in hospitalized patients [10]. Although the electronic AKI alert system does not consistently alter clinical management or improve AKI outcomes, it shows potential for optimization [11,12].

Nevertheless, constructing an electronic AKI alert system is challenging. Such computerized algorithms detect AKI events based on an increase in serum creatinine (SCr) exceeding 1.5 times the baseline level within 7 days [13,14]. For patients lacking “baseline SCr within 7 days,” computerized algorithms must deduce a diagnosis, potentially lowering diagnostic accuracy. To address this limitation, machine learning models that detect AKI based on point-of-care clinical features may offer a solution. AKI is considered an ideal syndrome for the application of artificial intelligence due to its standardized and readily identifiable definition [15]. Patients with AKI may exhibit various clinical features, including demographic characteristics, comorbidities, changes in vital signs, and diverse laboratory findings [16]. These features may be input into machine learning models to facilitate point-of-care AKI diagnoses in the absence of baseline SCr levels. For instance, when a patient with no known medical history presents with abnormal SCr, it can be challenging for an inexperienced physician to differentiate between AKI and CKD, and to initiate an accurate diagnostic and therapeutic plan. In such cases, our machine learning model may assist less experienced clinicians in making this distinction, thereby enabling timely and appropriate clinical decision-making. Therefore, the present study aimed to construct machine learning models in the context of absent baseline SCr within seven days, utilizing clinical features at a single time point. To achieve this, we first assess model stability using a repeated sampling methodology (Method 1) before evaluating their real-world generalizability on an independent, temporally distinct test set (Method 2). We then benchmark these models against both routine clinician diagnoses and the traditional computerized algorithm to fully characterize their clinical potential.

2. Methods

2.1. Study Design and Participants

This retrospective study was conducted at Wan Fang Hospital, Taipei Medical University, Taipei, Taiwan. The study was approved by the Ethics Committee and Institutional Review Board of Taipei Medical University (approval no. N202111017, date 30 September 2021) and adhered to the tenets of the 1975 Declaration of Helsinki, as revised in 2013. Informed consent for participation is not required as per the Ethics Committee and Institutional Review Board of Taipei Medical University. The study population comprised hospitalized patients possessing one or more records of SCr levels exceeding 1.3 mg/dL. Two datasets, designated as Datasets A and B, were used for patients meeting this criterion. Dataset A served both as the training and testing set in the repeated machine learning model (Method 1), and as the training set for the single machine learning model (Method 2). Dataset B was exclusively used as the testing dataset for the single machine-learning model (Method 2). To mitigate selection bias, all patients were included through simple randomization. Given that 26 features were input into the machine learning models, the study aimed to enroll over 2600 patients in the training dataset and more than 1000 patients in the testing dataset.

The study was intentionally designed to use two separate datasets (Dataset A for training and Dataset B for testing) to ensure a rigorous and clinically relevant evaluation of the models. The primary purpose of this approach is temporal validation: the models are trained on older data (January 2018 to June 2020) and evaluated on newer, unseen data (July 2020 to December 2020). This simulates a real-world deployment scenario and tests the model’s robustness to potential shifts in patient characteristics or clinical practices over time.

For Dataset A, patients hospitalized from January 2018 to June 2020 were randomly screened for eligibility for inclusion in the training dataset. The inclusion criteria were as follows: (1) at least one SCr value > 1.3 mg/dL during hospitalization and (2) age > 20 years. The exclusion criterion was the absence of baseline SCr within 7 days preceding the indexed abnormal SCr level. Patients with abnormal SCr levels were categorized into AKI and non-AKI groups using a computerized algorithm for AKI diagnosis, which will be detailed subsequently. The AKI and non-AKI groups were then randomly balanced to form Dataset A, with 1423 patients in each group.

For Dataset B, patients hospitalized from July 2020 to December 2020 with (1) at least one SCr value > 1.3 mg/dL during hospitalization and (2) age > 20 years were randomly selected for inclusion in the testing dataset. Notably, the availability of baseline SCr within 7 days was not a requirement for Dataset B. For each patient in Dataset B, a final diagnosis of AKI or non-AKI was retrospectively established by our researcher nephrologists based on a comprehensive review of the patient’s record according to KDIGO guidelines; this expert diagnosis served as the ground truth for evaluating all other methods. AKI was defined by the following criteria: (1) For patients with available baseline SCr values within 7 days before the indexed abnormal SCr level, an increase in SCr > 1.5 times satisfied the diagnosis of AKI. (2) For patients with available SCr values more than 7 days before the indexed abnormal SCr, the nearest previous SCr value was assumed to be the baseline SCr, and an increase in SCr > 1.5 times above this baseline value satisfied the diagnosis of AKI. (3) For cases in which previous SCr values were unavailable, patients were assumed to have normal baseline SCr levels, and AKI was defined as present. Notably, the approach to patients without baseline SCr within 7 days stated above may lead to an AKI diagnosis in some CKD patients. In addition to this ground truth, two baseline diagnostic labels were collected for comparison: the routine clinician’s diagnosis documented in the final discharge summary, and the diagnosis generated by our pre-existing computerized algorithm. Dataset B included 334 and 997 patients in the AKI and non-AKI groups, respectively. To address the imbalance in Dataset B, additional trials using balanced and refined subsets were conducted. These trials showed improved or consistent model performance, indicating the imbalance was mitigated. Thus, the impact of dataset imbalance on model metrics was carefully evaluated and controlled.

2.2. Features of the Machine Learning Models

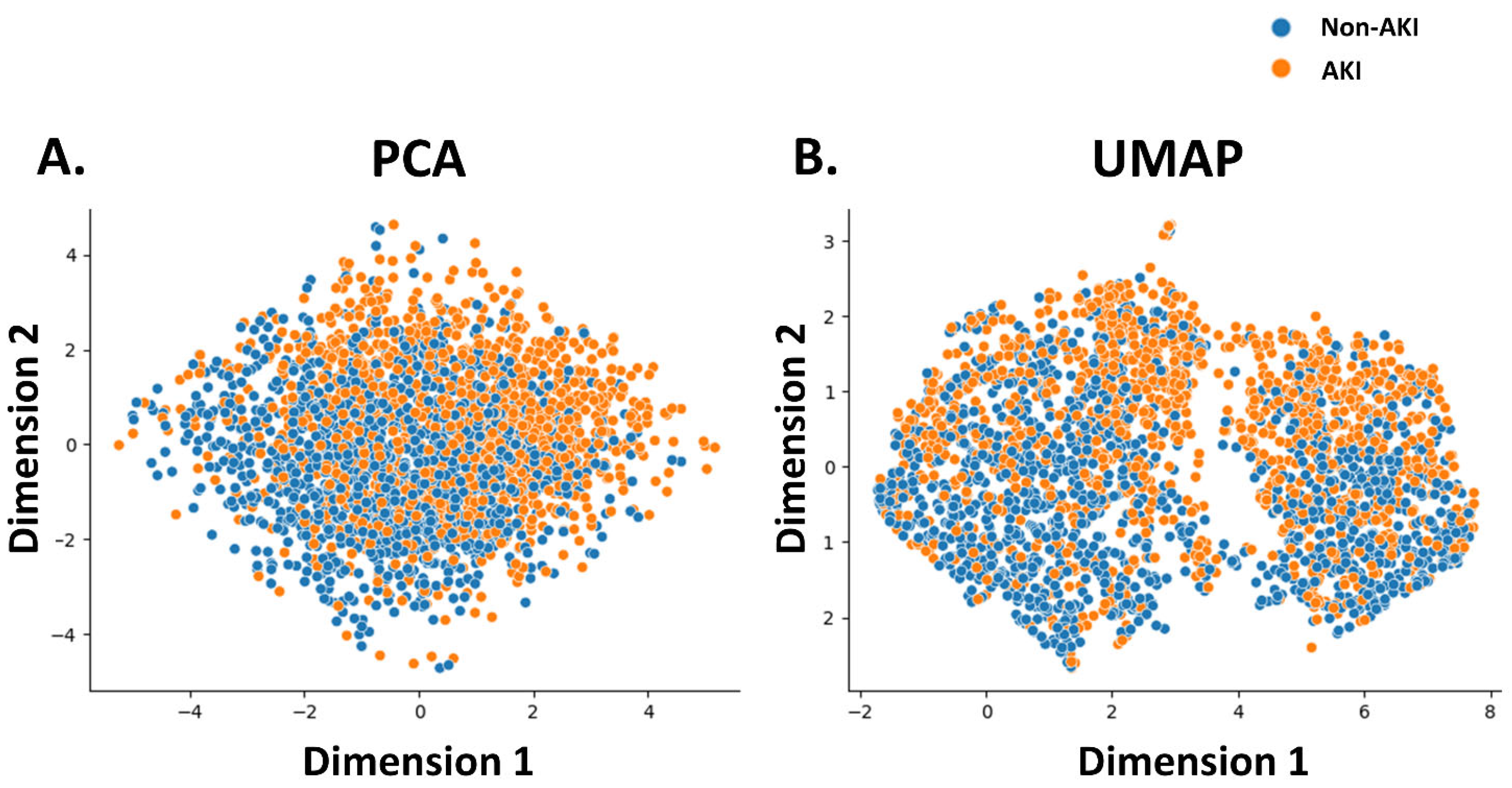

The features utilized in the machine learning models were sex, age, and laboratory and physical readings obtained at the time of admission. Notably, the present study aimed to diagnose AKI in patients with no known medical history who presented with abnormal SCr. Consequently, comorbidities were excluded from the feature set used by the machine learning model. Laboratory parameters included SCr, Na, K, aspartate aminotransferase (AST), alanine aminotransferase (ALT), red blood cell (RBC) count, hemoglobin, hematocrit (Hct), red cell distribution width (RDW-CV), white blood cell (WBC) count, fractions of neutrophils, lymphocytes, monocytes, eosinophils, and basophils, platelet count, and platelet distribution width (PDW). Physical readings included respiration rate, systolic blood pressure (SBP), diastolic blood pressure (DBP), oxygen saturation (SpO2), body temperature, pulse rate, weight, and height. Before running the machine learning models, the association between the model features and AKI events was evaluated using principal component analysis (PCA) and Uniform Manifold Approximation and Projection (UMAP). Physical readings were recorded by attending nurses at 7:00 on the day the indexed laboratory data were obtained. Biochemical data were measured using a Beckman Coulter DxC AU5800 (Beckman Coulter Inc., Brea, CA, USA), and hematological data were measured using a Beckman Coulter DxH 1601 (Beckman Coulter Inc., Brea, CA, USA).

2.3. Computerized Algorithm for Defining AKI

The accuracy of the computerized algorithm was validated in a separate study conducted by our research team [15]. Briefly, if an azotemic patient (SCr > 1.3 mg/dL) with a previous SCr value within 90 days was identified, an increase in SCr > 1.5 times satisfied the diagnosis of AKI; if an azotemic patient without any previous SCr value was identified, the baseline SCr was assumed to be normal and AKI was diagnosed by default; for an azotemic patient whose previous SCr was measured more than 90 days before the indexed abnormal SCr, the nearest previous SCr was assumed as the baseline value, and an increase in SCr > 1.5 times satisfied the AKI diagnosis. The program code was developed using Node.js 14.19.1 (OpenJS Foundation, San Francisco, CA, USA).
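The decision rule described above can be sketched in a few lines. The following is an illustrative Python rendering only, not the original Node.js program, which is not reproduced in this article. The record type `ScrRecord`, the function `classify_aki`, and the choice of the most recent earlier measurement as the baseline in every case are assumptions made for the sake of the example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Sequence

AZOTEMIA_THRESHOLD = 1.3  # mg/dL, the screening threshold used in this study
AKI_RATIO = 1.5           # fold increase over baseline that defines AKI


@dataclass(frozen=True)
class ScrRecord:
    """One serum creatinine measurement (hypothetical record type)."""
    measured_on: date
    value: float  # mg/dL


def classify_aki(index: ScrRecord, history: Sequence[ScrRecord]) -> Optional[bool]:
    """Return True for AKI, False for non-AKI, None if the index SCr is not azotemic."""
    if index.value <= AZOTEMIA_THRESHOLD:
        return None  # the rule is only applied to azotemic patients

    earlier = [r for r in history if r.measured_on < index.measured_on]
    if not earlier:
        # No previous SCr: baseline assumed normal, so AKI is assigned by default.
        return True

    # Assumption: the nearest earlier value serves as the baseline, whether it
    # lies inside or outside the 90-day window mentioned in the text.
    baseline = max(earlier, key=lambda r: r.measured_on)
    return index.value > AKI_RATIO * baseline.value
```

For example, an index SCr of 2.4 mg/dL with a value of 1.0 mg/dL five days earlier would be labeled AKI, whereas the same index value with a prior value of 2.2 mg/dL would be labeled non-AKI.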

2.4. Researcher’s Definition for AKI and Clinician’s Diagnosis

AKI was defined according to the KDIGO Clinical Practice Guidelines for AKI [17]. Once an SCr value > 1.3 mg/dL was identified, the previous SCr values were reviewed. AKI was defined as follows: (1) In patients with previous SCr tests within 7 days preceding the indexed SCr values, an increase in SCr > 1.5 times was defined as AKI. However, no cases of AKI were observed in the present study. (2) In patients with previous SCr tests exceeding 7 days before the indexed abnormal SCr values, the nearest previous SCr value was assumed as the baseline SCr, and an increase in SCr > 1.5 times above this value was defined as AKI. However, AKI was not observed in the present study. (3) In patients without previous SCr values, the patient was assumed to have normal baseline SCr levels, and AKI was defined as present. This diagnosis was utilized as the standard in the present study. Notably, the AKI criteria for decreased urine output in the KDIGO Clinical Practice Guidelines were not applied in this study. The AKI diagnosis documented in the discharge summaries was considered as the clinician’s diagnosis. In cases in which AKI was not included in the discharge diagnosis of patients with AKI, the diagnosis was considered inaccurate.

2.5. Data Preprocessing and Imputation

A standardized preprocessing pipeline was applied to the data before model training to ensure consistency and optimal performance. This pipeline, which includes imputation and feature scaling, was developed using only the training dataset (Dataset A) to prevent any information leakage from the test set (Dataset B).

First, to handle missing data, we employed mean imputation. In this procedure, any missing value for a given feature was replaced with the arithmetic mean of all observed values of that feature within the training data. This approach ensures a complete dataset for model training.

Second, following imputation, all features were standardized. This transformation rescales each feature so that it has a mean of zero and a standard deviation of one. Standardization is a critical step that prevents features with larger numeric ranges from disproportionately influencing the model’s learning process, which is particularly important for distance-based and gradient-based algorithms.
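The two preprocessing steps can be illustrated with a minimal, dependency-free sketch. It is not the study's code. The function names `fit_preprocessor` and `transform`, the use of `None` to mark missing values, and the use of the population standard deviation are assumptions. The point it illustrates is that the means and standard deviations are learned from the training rows only and then reused unchanged on the test rows.

```python
from statistics import fmean, pstdev
from typing import List, Optional, Sequence, Tuple

Row = Sequence[Optional[float]]  # None marks a missing value (assumed encoding)


def fit_preprocessor(train: Sequence[Row]) -> Tuple[List[float], List[float]]:
    """Learn per-feature means and standard deviations from training rows only."""
    means: List[float] = []
    stds: List[float] = []
    for j in range(len(train[0])):
        observed = [row[j] for row in train if row[j] is not None]
        mean = fmean(observed)
        # Spread is measured on the column after mean imputation has been applied.
        imputed = [row[j] if row[j] is not None else mean for row in train]
        std = pstdev(imputed)
        means.append(mean)
        stds.append(std if std > 0 else 1.0)  # guard against constant features
    return means, stds


def transform(rows: Sequence[Row], means: Sequence[float],
              stds: Sequence[float]) -> List[List[float]]:
    """Impute missing values with the training mean, then standardize."""
    return [
        [((x if x is not None else m) - m) / s for x, m, s in zip(row, means, stds)]
        for row in rows
    ]
```

A test-set row is always transformed with the statistics returned by `fit_preprocessor` on the training data, so no information from the test set enters the pipeline.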

2.6. Development of Machine Learning Models

We employed two distinct approaches to develop and validate our machine learning models. Method 1 (Repeated Learning) was designed to assess the inherent stability and internal validity of the models by repeatedly training and testing on subsets of a single dataset (Dataset A). In contrast, Method 2 (Single Learning on an Independent Test Set) was designed to assess the models’ real-world generalizability on new, unseen data (Dataset B) and to investigate the impact of factors like data imbalance.

For Method 1, only Dataset A was used in the repeated machine learning models. In each iteration, 70% of Dataset A was randomly selected as the training dataset and the remaining 30% served as the testing dataset. This procedure was repeated 1000 times to obtain the average performance of the machine learning models. In each iteration, seven machine learning models were employed: the Support Vector Machine (SVM), Logistic Regression (LR), Gradient Boosting (GB), Extreme Gradient Boosting (XGBoost), Random Forest (RF), Naive Bayes classifier (NB), and Neural Network (NN).
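The repeated 70/30 procedure of Method 1 can be sketched as follows. This is an illustrative outline under stated assumptions rather than the study's implementation. `repeated_holdout` and the `evaluate` callback (which would train a model on the training indices and return a score on the test indices) are hypothetical names, and the fixed random seed is added only for reproducibility.

```python
import random
from statistics import fmean
from typing import Callable, List


def repeated_holdout(
    n_samples: int,
    evaluate: Callable[[List[int], List[int]], float],
    n_repeats: int = 1000,
    train_fraction: float = 0.7,
    seed: int = 0,
) -> float:
    """Average a score over repeated random train/test splits of one dataset."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    n_train = round(train_fraction * n_samples)
    scores = []
    for _ in range(n_repeats):
        rng.shuffle(indices)  # new random partition in every iteration
        train_idx, test_idx = indices[:n_train], indices[n_train:]
        scores.append(evaluate(train_idx, test_idx))
    return fmean(scores)
```

With the 2846 patients of Dataset A (1423 per group), each iteration would train on 1992 patients and test on the remaining 854.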

For Method 2, we used the entire Dataset A as the training dataset to build a single, final version of each model. The performance of these models was then evaluated on the independent Dataset B, which served exclusively as the testing dataset.

The evaluation in Method 2 was conducted under three distinct clinical scenarios using the independent Dataset B:

Trial 1: Performance on the Full, Unbalanced Test Set

First, we tested the models on the entire Dataset B to evaluate their performance in a scenario that mirrors a typical clinical setting, where non-AKI cases are often more prevalent than AKI cases.

Trial 2: Performance on a Balanced Test Set

Second, to mitigate the potential effects of class imbalance on performance metrics like precision and F1-score, we evaluated the models on a reduced subset of Dataset B containing a balanced number of AKI and non-AKI patients.

Trial 3: Performance on a Post-Exclusion Test Set

Finally, to assess model performance on the most diagnostically definitive cases, we used a third subset of Dataset B that excluded patients who lacked baseline SCr values within the preceding seven days. This created a “cleaner” dataset to test the models’ core diagnostic capability.
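As an illustration of how a balanced subset such as the one in Trial 2 could be drawn, the sketch below randomly undersamples the majority class. The text does not specify the sampling procedure, so random undersampling, the function name `balanced_indices`, and the 1/0 label encoding are all assumptions.

```python
import random
from typing import List, Sequence


def balanced_indices(labels: Sequence[int], seed: int = 0) -> List[int]:
    """Return row indices with equal numbers of AKI (1) and non-AKI (0) cases."""
    rng = random.Random(seed)
    positives = [i for i, y in enumerate(labels) if y == 1]
    negatives = [i for i, y in enumerate(labels) if y == 0]
    k = min(len(positives), len(negatives))
    # Randomly keep k cases from each class; the smaller class is kept whole.
    return sorted(rng.sample(positives, k) + rng.sample(negatives, k))
```

Applied to the class counts of Dataset B (334 AKI and 997 non-AKI), this would keep all 334 AKI patients and a random 334 of the non-AKI patients.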

The models were implemented in Python (version 3.12.12) using the scikit-learn and XGBoost libraries. For the more complex models, key hyperparameters were selected following a tuning process that utilized a randomized search with 5-fold cross-validation on the training data to optimize for accuracy. Specifically, the Random Forest model was constructed as an ensemble of 400 decision trees, with a maximum tree depth of 14 and a maximum of 8 features considered at each split. The XGBoost model was configured with 250 boosting rounds, a learning rate of 0.06, and a maximum tree depth of 4. Similarly, the Gradient Boosting model used 60 estimators and a learning rate of 0.11. The Neural Network was a Multi-layer Perceptron configured with a single hidden layer of 100 neurons and was set to stop training early if validation performance ceased to improve over 10 consecutive epochs to prevent overfitting. For the Support Vector Machine, Logistic Regression, and Naive Bayes models, we utilized the standard, well-established default parameters from the scikit-learn library, as they provided robust baseline performance without extensive modification.
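For reference, the reported settings can be collected in a single configuration mapping. This is a sketch, not the study's code. The short model keys are hypothetical, and the mapping of each reported setting to a scikit-learn or XGBoost constructor argument (for example, "boosting rounds" to `n_estimators`) is an assumption about how the settings were passed.

```python
# Reported hyperparameters, keyed by a short (hypothetical) model name.
# Keyword names follow the scikit-learn and XGBoost constructor arguments.
HYPERPARAMETERS = {
    # sklearn.ensemble.RandomForestClassifier
    "RF": {"n_estimators": 400, "max_depth": 14, "max_features": 8},
    # xgboost.XGBClassifier
    "XGBoost": {"n_estimators": 250, "learning_rate": 0.06, "max_depth": 4},
    # sklearn.ensemble.GradientBoostingClassifier
    "GB": {"n_estimators": 60, "learning_rate": 0.11},
    # sklearn.neural_network.MLPClassifier
    "NN": {
        "hidden_layer_sizes": (100,),
        "early_stopping": True,
        "n_iter_no_change": 10,
    },
    # Library defaults were used for these three models.
    "SVM": {},
    "LR": {},
    "NB": {},
}
```

Each entry could then be unpacked into the corresponding constructor, for instance `RandomForestClassifier(**HYPERPARAMETERS["RF"])`.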

2.7. Evaluation of Model Performance

The performance of each machine learning model was evaluated using accuracy, precision, recall (sensitivity), specificity, and the F1 score, calculated as (2 × precision × recall)/(precision + recall). Discriminative ability was evaluated using the area under the receiver operating characteristic curve (AUROC). Multiple metrics were reported to capture different aspects of diagnostic performance, especially under data imbalance; collectively, they assess the trade-offs between false positives and false negatives, which are critical in AKI diagnosis.
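The threshold-based metrics follow directly from the confusion-matrix counts. The short sketch below shows the arithmetic. The function name `classification_metrics` and the 1/0 encoding of AKI and non-AKI are assumptions, and undefined ratios are returned as 0.0 by convention.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute accuracy, precision, recall, specificity, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0  # convention for empty denominators

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "precision": precision,
        "recall": recall,
        "specificity": ratio(tn, tn + fp),
        "f1": ratio(2 * precision * recall, precision + recall),
    }
```

Because precision depends on the number of false positives relative to true positives, it shifts with class prevalence, which is one reason the unbalanced and balanced test sets can yield different precision and F1 values for the same model.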

2.8. Statistical Analyses

Continuous variables with a normal distribution were presented as mean ± standard deviation; continuous variables deviating from a normal distribution were presented as median and interquartile range; categorical variables were presented as frequency and percentage. Continuous variables with a normal distribution were compared using the two-tailed t-test for independent samples; continuous variables deviating from a normal distribution were compared using the Wilcoxon rank-sum test; categorical variables were compared using the chi-squared test. p values of <0.05 were considered significant. The distribution of data was examined using Q-Q plots. Statistical analysis was performed using SAS 9.4 (SAS Institute Inc., Cary, NC, USA).

To compare the performance between all machine learning models and baseline algorithms, we conducted pairwise statistical tests using the expert nephrologists’ diagnosis as the ground truth. For accuracy, recall (sensitivity), and specificity, we used McNemar’s test. For precision and F1-score, we employed a non-parametric bootstrap procedure with 2000 resamples. For the area under the receiver operating characteristic curve (AUROC), we used DeLong’s test. A p-value of <0.05 was considered statistically significant. The results of these pairwise comparisons are presented in the results tables using superscript letter notations, where models sharing a letter are not significantly different from one another.
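To make the paired comparison concrete, the sketch below computes McNemar's test for two classifiers evaluated on the same patients. The text does not state whether the exact or the chi-squared form was used, so the exact binomial version shown here, along with the function name `mcnemar_exact`, is an assumption.

```python
from math import comb
from typing import Sequence


def mcnemar_exact(y_true: Sequence[int], pred_a: Sequence[int],
                  pred_b: Sequence[int]) -> float:
    """Two-sided exact McNemar p-value for two classifiers on the same cases."""
    triples = list(zip(y_true, pred_a, pred_b))
    # Discordant pairs: exactly one of the two classifiers is correct.
    only_a = sum(1 for t, a, b in triples if a == t and b != t)
    only_b = sum(1 for t, a, b in triples if a != t and b == t)
    n = only_a + only_b
    if n == 0:
        return 1.0  # the classifiers never disagree in correctness
    # Under the null hypothesis, discordant pairs split as Binomial(n, 0.5).
    tail = sum(comb(n, k) for k in range(min(only_a, only_b) + 1)) / 2 ** n
    return min(1.0, 2 * tail)
```

Applied to all patients, this compares accuracy; restricting the inputs to patients with a ground-truth AKI diagnosis, or to those without one, yields the corresponding comparison of recall or specificity.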

4. Discussion

In summary, the repeated machine learning models employed in the present study demonstrated accuracy ranging from 0.65 to 0.69 and an AUROC ranging from 0.73 to 0.76 for the diagnosis of AKI in the absence of baseline SCr. Conversely, the single machine learning models exhibited an accuracy range of 0.53 to 0.74 and an AUROC range of 0.70 to 0.74 for the diagnosis of AKI without available baseline SCr. These findings suggest that repeated machine learning models offer superior accuracy and predictive value for AKI diagnosis. Additionally, while the single machine learning models did not exhibit better accuracy and predictive value in the balanced testing dataset (Method 2, Trial 2), they exhibited better performance in the testing dataset after exclusion of patients with uncertain AKI status (Method 2, Trial 3). Notably, with available past SCr records, the computerized algorithm exhibited superiority in every index compared to either repeated or single machine learning models. While RF, XGBoost, and GB consistently ranked among the top models, our statistical analysis revealed no significant performance difference between them across most metrics. This suggests that several advanced algorithms can achieve a similar performance ceiling. The results imply that future improvements may lie more in feature engineering and addressing data-driven challenges like class imbalance, rather than in selecting a single ‘best’ algorithm.

As the diagnosis of AKI is based on an increase in SCr over a 7-day period [16], computerized algorithms can accurately diagnose AKI in patients with available baseline SCr or a recent record of SCr. Nevertheless, for patients without such reference SCr values, the diagnosis of AKI is challenging using computerized algorithms, and even for clinicians. The present study attempted to overcome this impediment using machine learning models to identify AKI events based on point-of-care features of patients presenting with abnormal SCr. In cases where a patient with no known medical history presents with abnormal SCr, our machine learning model can assist inexperienced physicians in distinguishing between AKI and CKD, thereby facilitating the initiation of appropriate diagnostic and therapeutic strategies. Remarkably, all included patients had abnormal SCr values; thus, the function of our models was to distinguish AKI events from preexisting chronic kidney disease. To date, the application of machine learning in the management of AKI has primarily focused on AKI prediction. Thus, AKI prediction models with short time windows can be compared to our AKI diagnosis models [18]. In an AKI prediction model for all-care settings conducted by Cronin et al. in 2015 [19], pre-admission laboratory tests of −5 days to +48 h from the admission date were obtained for over 1.6 million hospitalizations for model training. They found that the models (LR, LASSO regression, and RF) exhibited an AUROC of 0.746–0.758 for predicting in-hospital AKI events [19]. In another study, He et al. tested machine learning models to differentiate AKI in different prediction time windows. Their models exhibited AUROC values ranging from 0.720 to 0.764. Among the tested models, the best model performance was achieved in predicting AKI one day in advance [20]. A similar study by Cheng et al. tested different data collection time windows to train datasets of AKI prediction models. The results suggested that the RF algorithm showed the best performance for AKI prediction 1–3 days in advance, with AUROC of 0.765, 0.733, and 0.709, respectively [21]. Compared with these studies, our repeated machine learning models exhibited AUROC of 0.73–0.76, depending on the training model used, showing that repeated machine learning could exhibit similar performance with different model algorithms and is comparable to machine learning models with large training datasets.

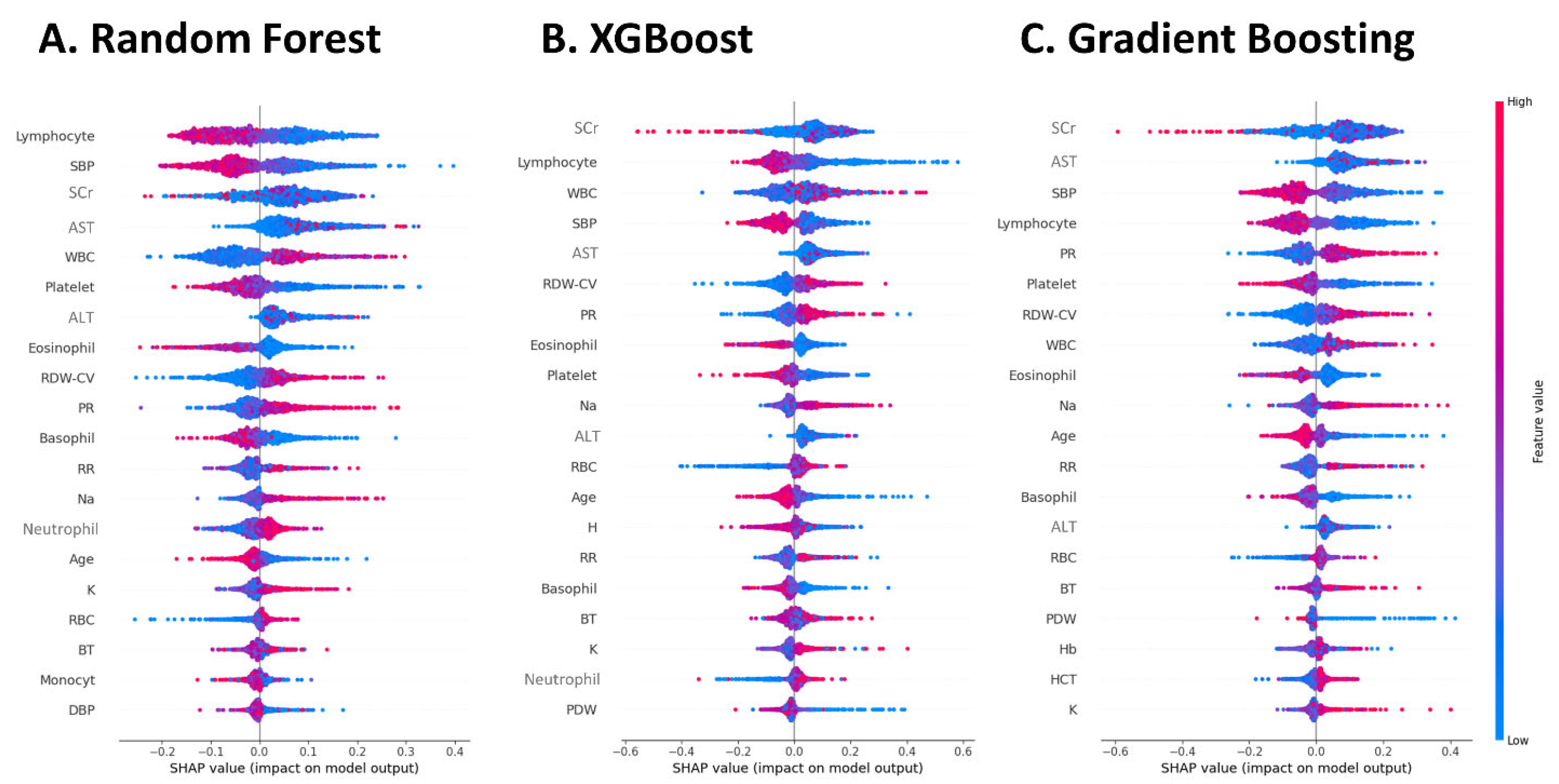

Although we compared the present AKI diagnostic model with AKI prediction models, a difference existed between these two models. Koyner et al. tested models with and without a change in SCr from the baseline in an all-care setting. The results showed that excluding “change in SCr” from input features did not affect the model’s AKI prediction ability [22]. In contrast, in the present study, SCr was an important feature to be input into the machine learning model for AKI diagnosis, regardless of the algorithm used. The reason for this difference may be that AKI prediction relies more on the severity of comorbidities than on the existing abnormal SCr readings. In contrast, the SCr value at point-of-care is an important feature for the identification of AKI events.

Among the studies developing AKI prediction models, researchers have been seeking the best machine learning algorithm for predicting the risk of AKI events. The AKI prediction model developed by Cronin et al. in 2015, using a training dataset of 1.6 million hospitalizations, revealed that the performance of traditional LR and LASSO regression models was slightly superior to that of the RF model [19]. In a 2021 study by Kim et al., in which they intended to develop a continuous real-time prediction model for AKI events, a recurrent neural network algorithm was found to be most suitable for predicting AKI events 48 h in advance [23]. In the single machine learning models used in the present study, we found that the RF, XGBoost, and GB algorithms exhibited superior performance in AKI diagnosis. Nonetheless, in the case of repeated machine learning models, the differences between the different algorithms were not evident. This finding suggests that with repeated training, the performance of different machine learning algorithms may approach a consistent level.

Yue et al. developed a machine learning model for AKI prediction in patients with sepsis, identifying key features such as urine output, mechanical ventilation, body mass index, estimated glomerular filtration rate, SCr, partial thromboplastin time, and blood urea nitrogen [24]. In addition to features directly associated with renal function, those indicative of general disease severity are crucial in this model of sepsis-related AKI. In the present model, which was designed for an all-care setting, features related to sepsis, including lymphocyte fraction, white blood cell (WBC) count, platelet count, pulse rate, SBP, and AST, also play important roles. This finding suggests that, in an all-care setting, sepsis is the most important cause of AKI in hospitalized patients.

Electronic diagnostic tools have been integrated into decision support and electronic alert systems for AKI, but studies of these systems showed heterogeneous designs and mixed results [25]. Previous research has shown that electronic AKI alert systems possess acceptable accuracy and applicability [26,27]. Furthermore, Hodgson et al. demonstrated that their electronic AKI alert system reduced the incidence of hospital-acquired AKI and in-hospital mortality [28]. Conversely, a study by Wilson et al. involving 6030 patients indicated that the electronic AKI alert system did not reduce the risk of the primary outcome, with variable effects across clinical centers [29]. The findings of the present study suggest that, while electronic diagnostic tools may enhance the accuracy of AKI diagnosis, timely differential diagnosis and management are imperative to improve outcomes.

The results of the present study showed that the single model trials were associated with a wide range of accuracy. The variation in accuracy (0.53–0.74) across single model trials reflects differences in Dataset B’s characteristics, specifically data imbalance and the inclusion of deduced diagnoses. When tested with balanced or refined subsets, model performance became more consistent (accuracy 0.63–0.72), with RF, XGBoost, and GB generally outperforming others. This demonstrates that the observed inconsistency is largely driven by dataset quality and composition. In addition, we found that machine learning models underperform compared to traditional computerized algorithms in diagnosing AKI. A possible explanation is that the computerized algorithm achieved higher accuracy (up to 0.95) because it directly relied on detecting a defined increase in serum creatinine (SCr) over baseline, as per AKI diagnostic criteria. In contrast, our machine learning models were designed for cases lacking recent baseline SCr, a scenario where traditional algorithms fail or rely on assumptions. A key finding of our study, supported by formal statistical analysis, is that machine learning models significantly outperform routine clinician’s diagnoses in identifying AKI when baseline SCr is unavailable. This was consistently observed across all three distinct evaluation scenarios (Table 4, Table 5 and Table 6). This suggests that in data-limited, real-world settings where clinicians may rely on subjective judgment, the models provide a valuable and more accurate diagnostic support tool.

The present study unexpectedly demonstrates that machine learning models outperform clinicians in diagnosing acute kidney injury (AKI). One possible explanation is that AKI may not have been the primary clinical concern in many cases, with clinicians focusing more on dominant conditions such as sepsis or heart failure. As a result, timely recognition and management of AKI were sometimes overlooked. Additionally, when patients presented with renal impairment but lacked baseline renal function data, clinicians often relied on subjective judgment to diagnose AKI. In such scenarios—where objective diagnostic criteria are unavailable—machine learning models offer valuable support, enabling timely and accurate decision-making. Moving forward, we aim to incorporate clinician feedback into model development to explore the root causes of misdiagnoses and further enhance diagnostic performance.

A limitation of the present study is the relatively small sample size, particularly for the testing dataset. However, considering the all-care setting in the present study, our machine learning models may be applied to hospitalized patients admitted to both critical care units and general wards. Another limitation was the single-center design that limits the generalizability of the results. To compensate for this, an independent testing dataset was used for validation (Dataset B). Nevertheless, external validation is restricted by the institutional review board and is therefore not feasible. Furthermore, this study did not exclude patients based on specific comorbidities. Clinical features used in our models, such as inflammatory markers and vital signs, can be influenced by a wide range of conditions beyond AKI, such as sepsis, heart failure, or diabetes. This could introduce confounding factors and affect model stability. However, this approach was intentional, as our goal was to develop models that could function in a real-world clinical setting where patients often present with multiple, complex health issues. The models were thus trained to identify diagnostic patterns within this inherent clinical complexity. Nevertheless, future research should aim to quantify the impact of specific comorbidities on model performance. Integrating established comorbidity indices, such as the Charlson Comorbidity Index, as input features could potentially improve model robustness and accuracy.

Looking ahead, several avenues for future research could build upon our findings. Future studies should explore more advanced machine learning algorithms, such as deep learning models or sophisticated ensembles, to potentially capture more complex relationships in the data. To improve the generalizability of these models, conducting multicenter studies with diverse data sources—including time-series clinical data and novel inputs like genetic markers—is crucial. Most importantly, prospective validation in real-time clinical settings is essential to assess the models’ true clinical impact, their utility in decision support, and their seamless integration into existing hospital workflows.

In conclusion, machine learning models were able to diagnose AKI without baseline SCr records. Additionally, the machine learning models for AKI diagnosis demonstrated superior accuracy compared to clinicians. Also, repeated machine learning models exhibited more consistent and superior performance than single machine learning models. Notably, the computerized AKI diagnostic algorithms showed superior accuracy compared to the machine learning models when baseline SCr was available. Therefore, these two approaches can be combined to develop a more comprehensive electronic AKI diagnostic system.