Diagnostic Accuracy of a Multi-Target Artificial Intelligence Service for the Simultaneous Assessment of 16 Pathological Features on Chest and Abdominal CT

Abstract

1. Introduction

2. Materials and Methods

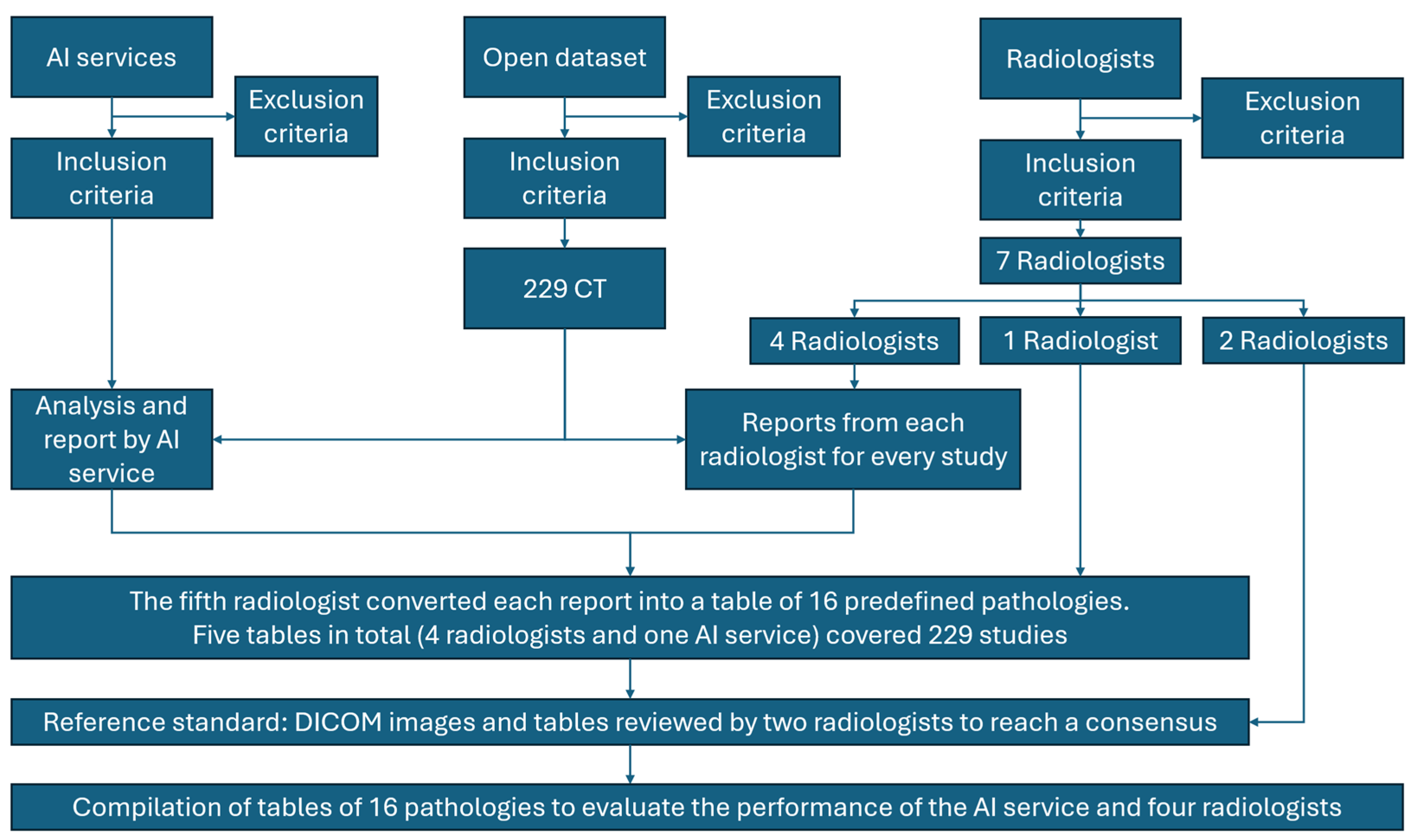

2.1. The Study Design

2.2. Study Registration

2.3. Data Source

2.3.1. Inclusion Criteria

2.3.2. Exclusion Criteria

2.4. Data Preprocessing

2.5. Data Partitions

2.5.1. Assignment of Data to Partitions

2.5.2. Level of Disjointness Between Partitions

2.6. Intended Sample Size

2.7. De-Identification Methods

2.8. Handling of Missing Data

2.9. Image Acquisition Protocol

2.10. Human Readers

2.11. Annotation Workflow

2.12. Reference Standard

2.13. Model

2.13.1. Model Description

2.13.2. AI Service Inclusion and Exclusion Criteria

Inclusion Criteria

Exclusion Criteria

2.13.3. Software and Environment

2.13.4. Initialization of Model Parameters

2.13.5. Training

Details of Training Approach

Method of Selecting the Final Model

Ensembling Techniques

2.14. Evaluation

2.14.1. Metrics

2.14.2. Robustness Analysis

2.14.3. Methods for Explainability

2.14.4. Data Independence

2.14.5. Comparison and Evaluation Methodology

2.14.6. Conversion of AI Outputs to Binary Labels

2.14.7. Handling of Small Segmentations and Borderline Cases

2.15. Outcomes

2.15.1. Primary Outcome

- Minor—no change in patient management or follow-up needed (examples: missed simple cysts < 5 mm, false-positive osteosclerosis misclassified as rib fracture).

- Intermediate—unlikely to affect primary disease treatment but requiring further testing or follow-up (examples: false-positive enlarged lymph nodes, over-detection of small pulmonary nodules).

- Major—likely to change treatment strategy or primary diagnosis (examples: missed liver/renal masses, missed intrathoracic lymphadenopathy suggestive of metastases).

2.15.2. Secondary Outcomes

2.16. Sample Size Calculation

2.17. Statistical Analysis

3. Results

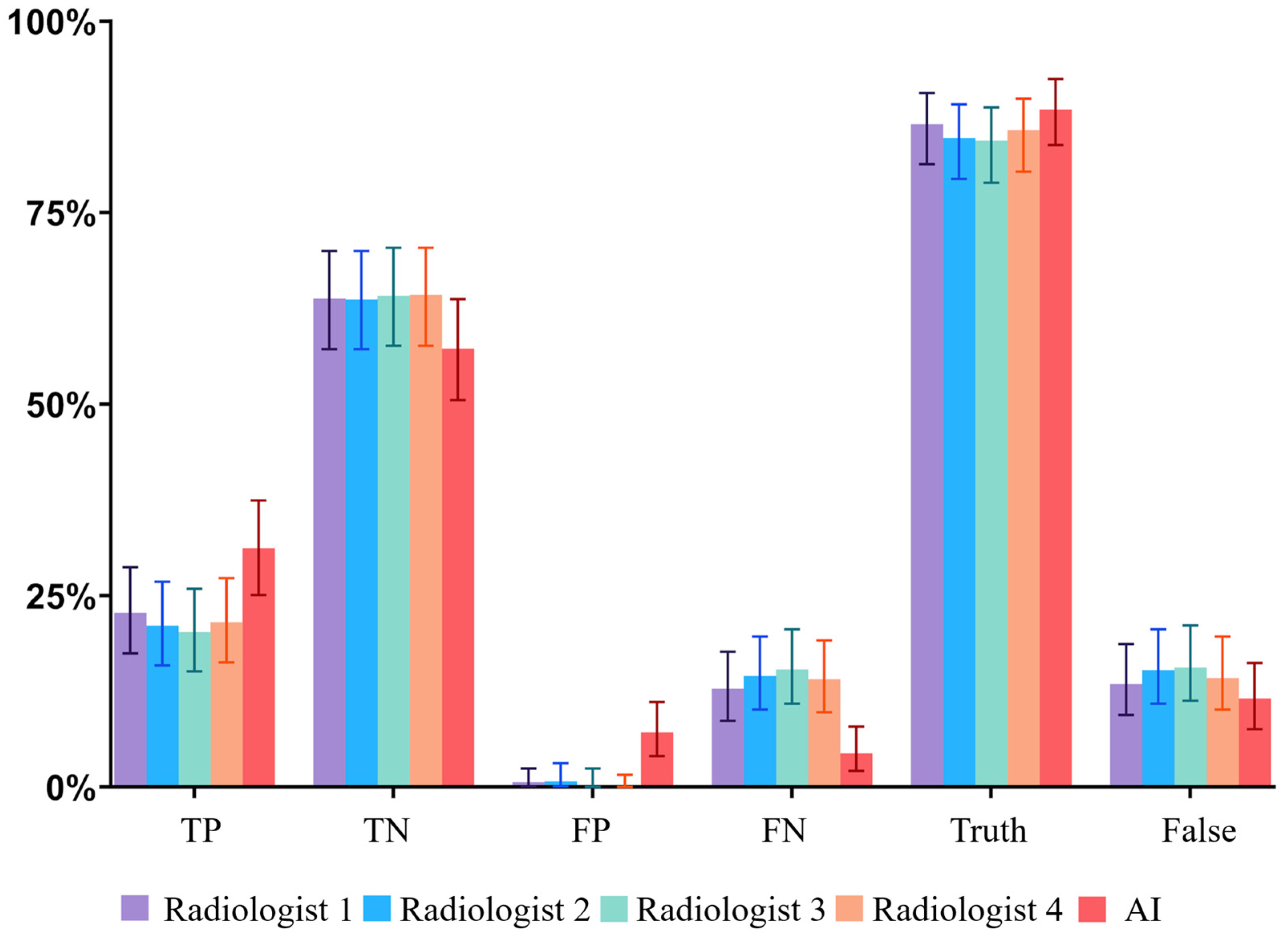

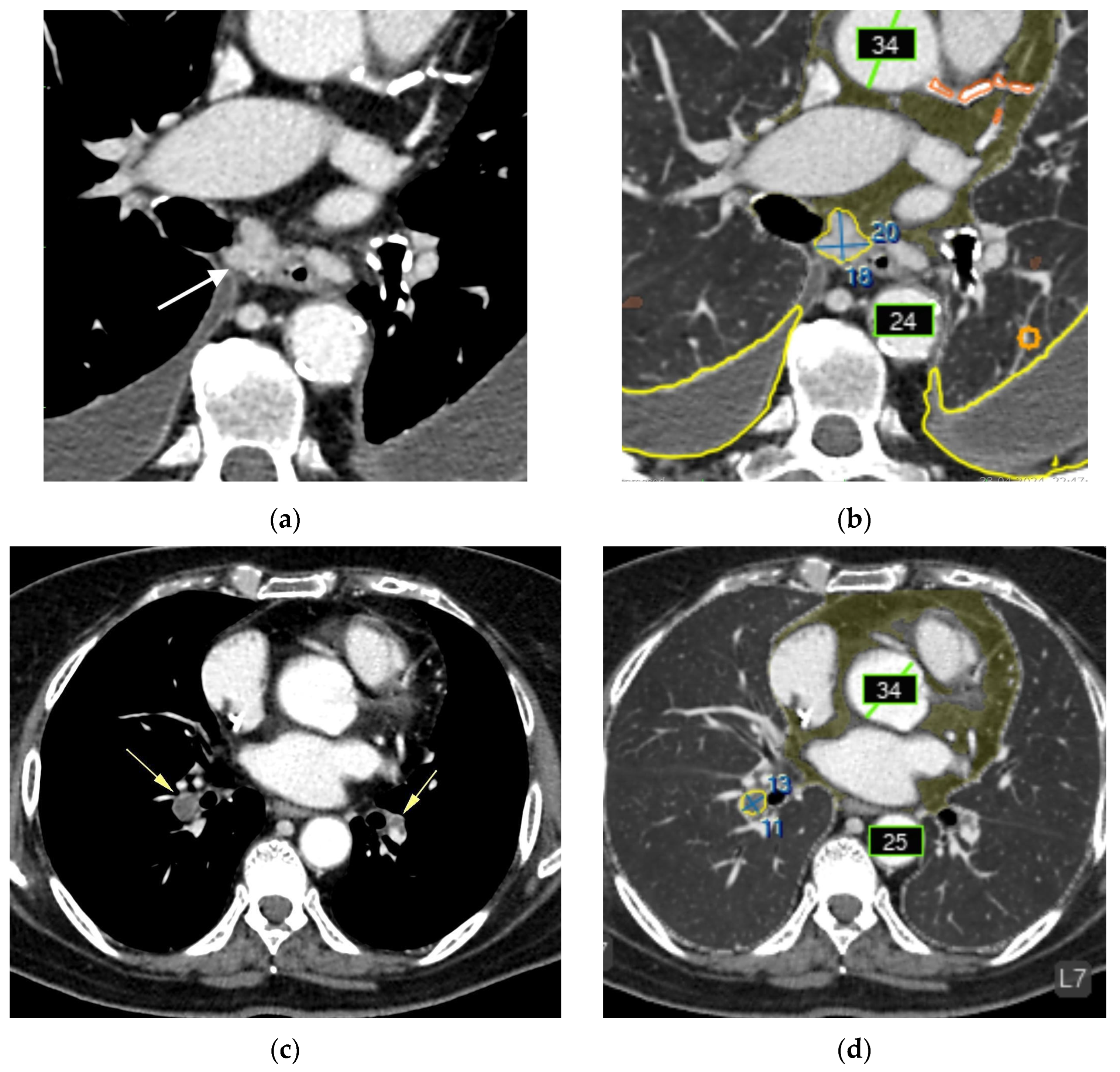

3.1. Overall Diagnostic Performance

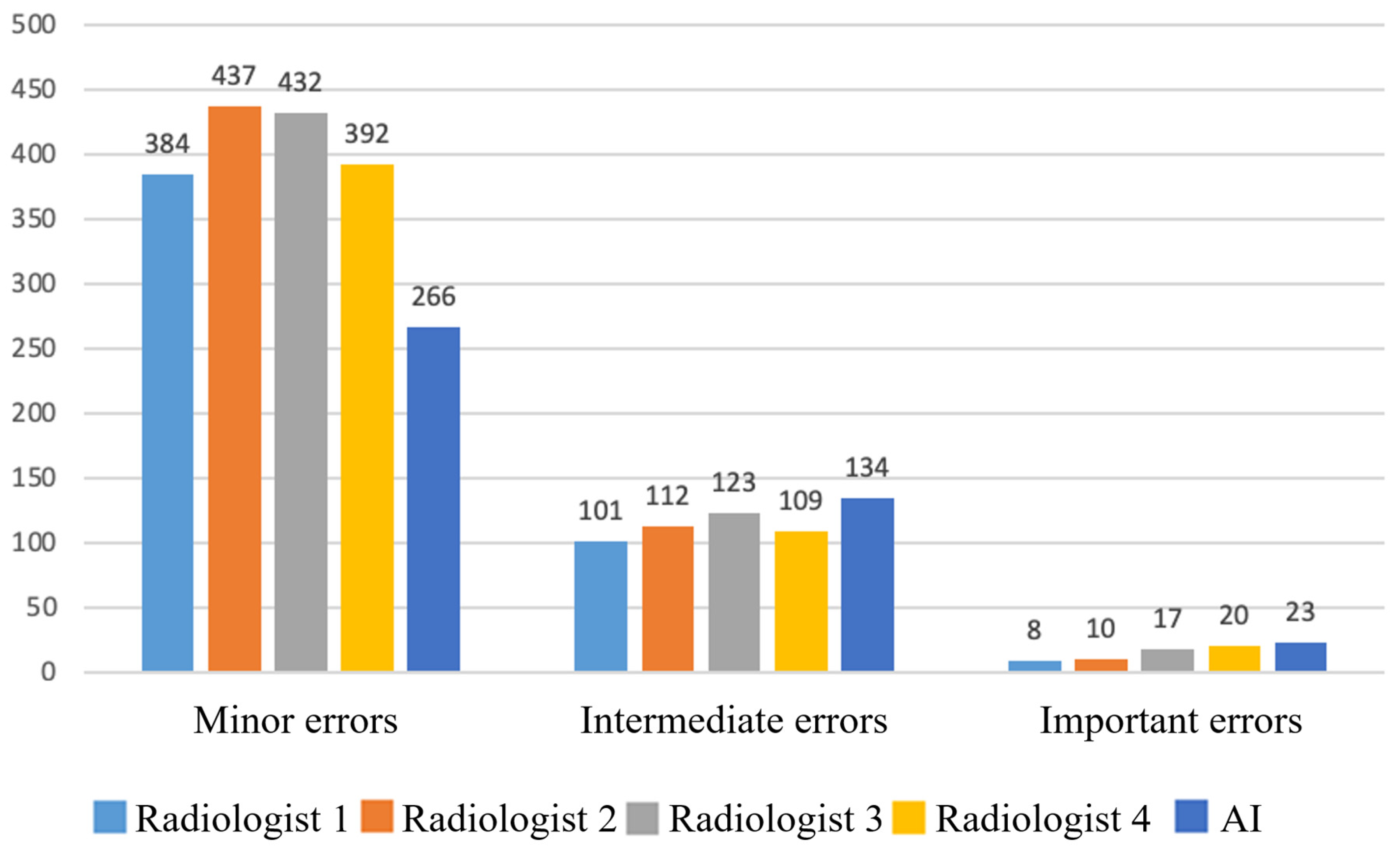

3.2. Clinical Significance of Errors

3.3. Breakdown of Clinically Significant AI Errors

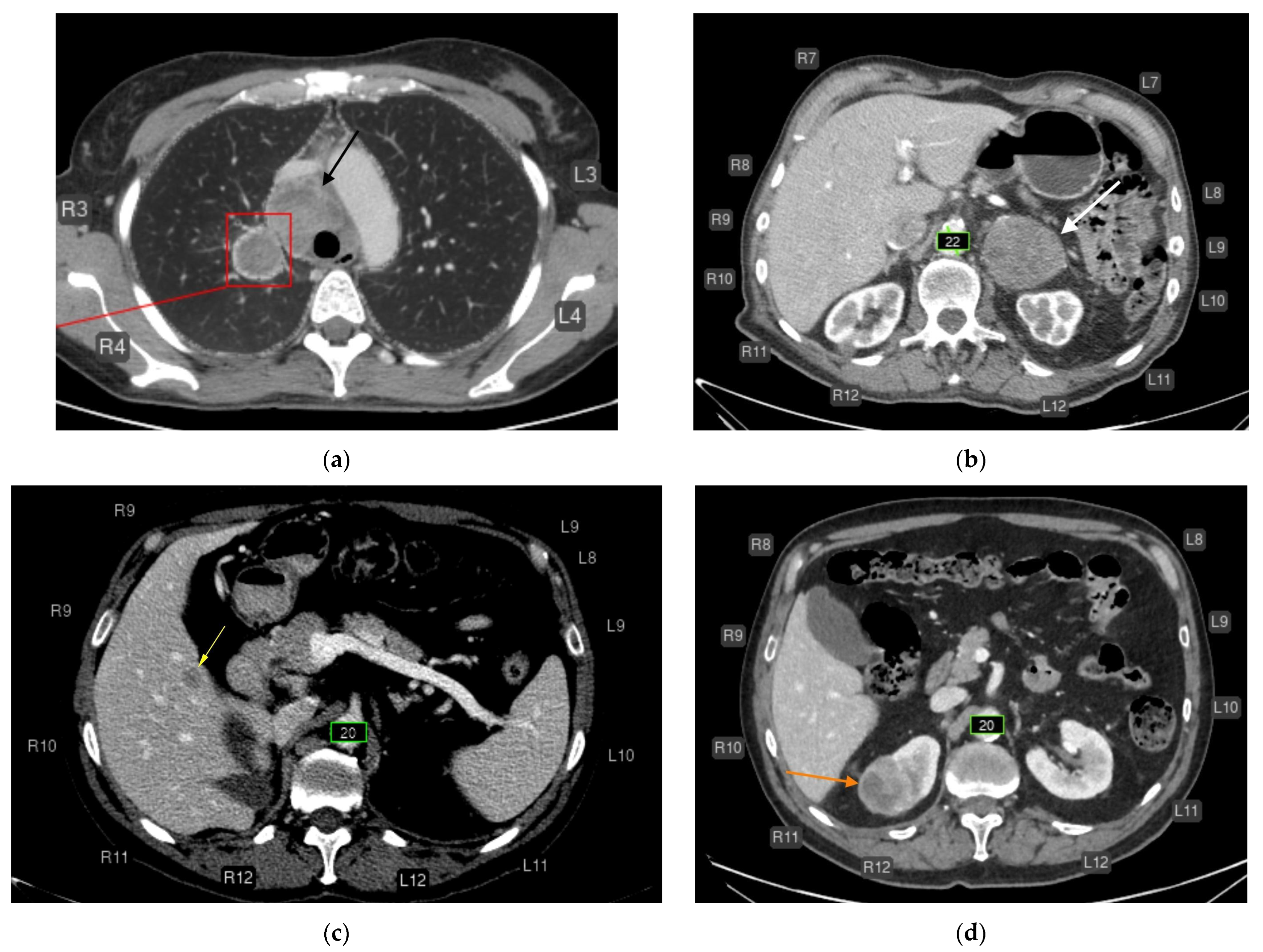

- Liver lesions (8);

- Renal lesions (2);

- Adrenal lesions (2);

- Impaired lung aeration (atelectasis, 2);

- Enlarged intrathoracic lymph nodes (3);

- Pulmonary nodule (1);

- Low vertebral body density (1);

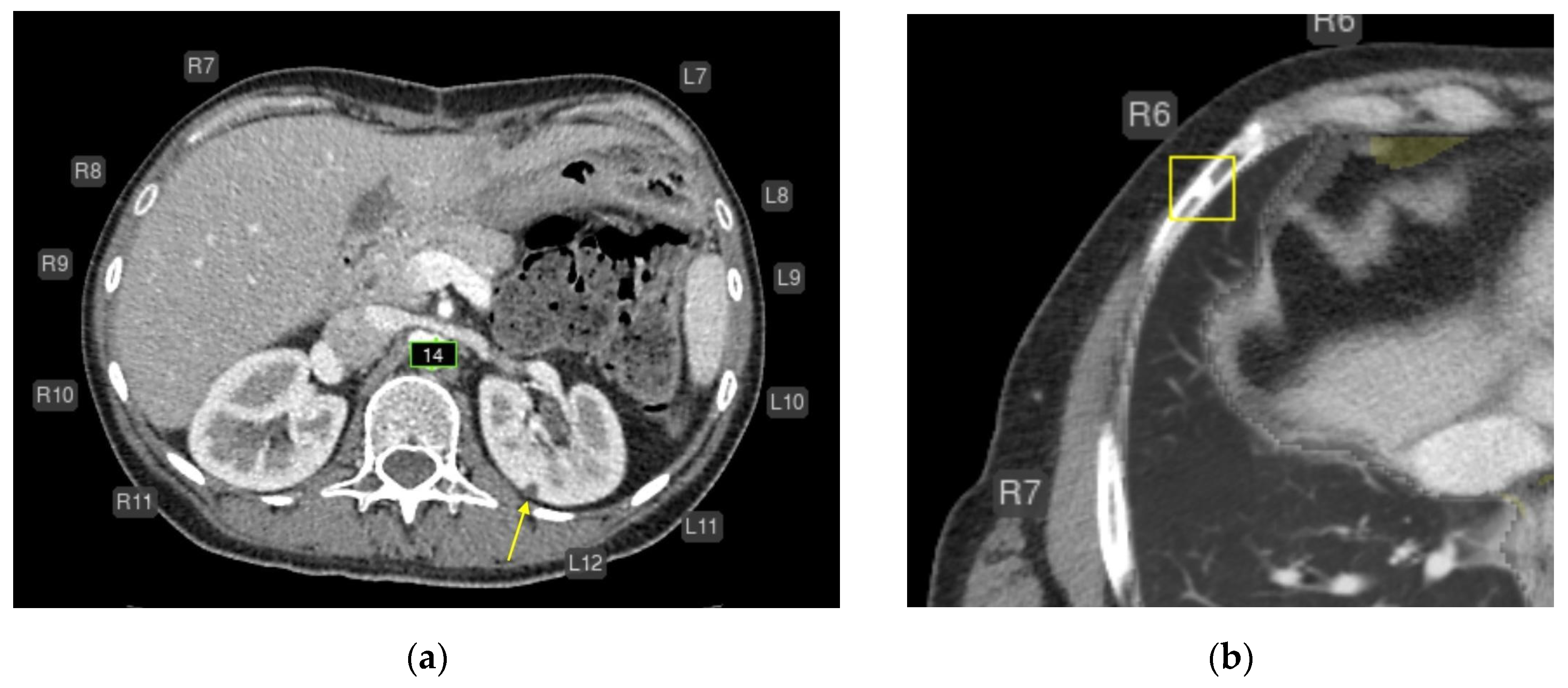

- Urolithiasis (1) (Figure 4).

- Intrathoracic lymph nodes (16);

- Pulmonary nodules (15);

- Impaired aeration (15);

- Aortic dilatation/aneurysm (10);

- Adrenal thickening (10) (Figure 5).

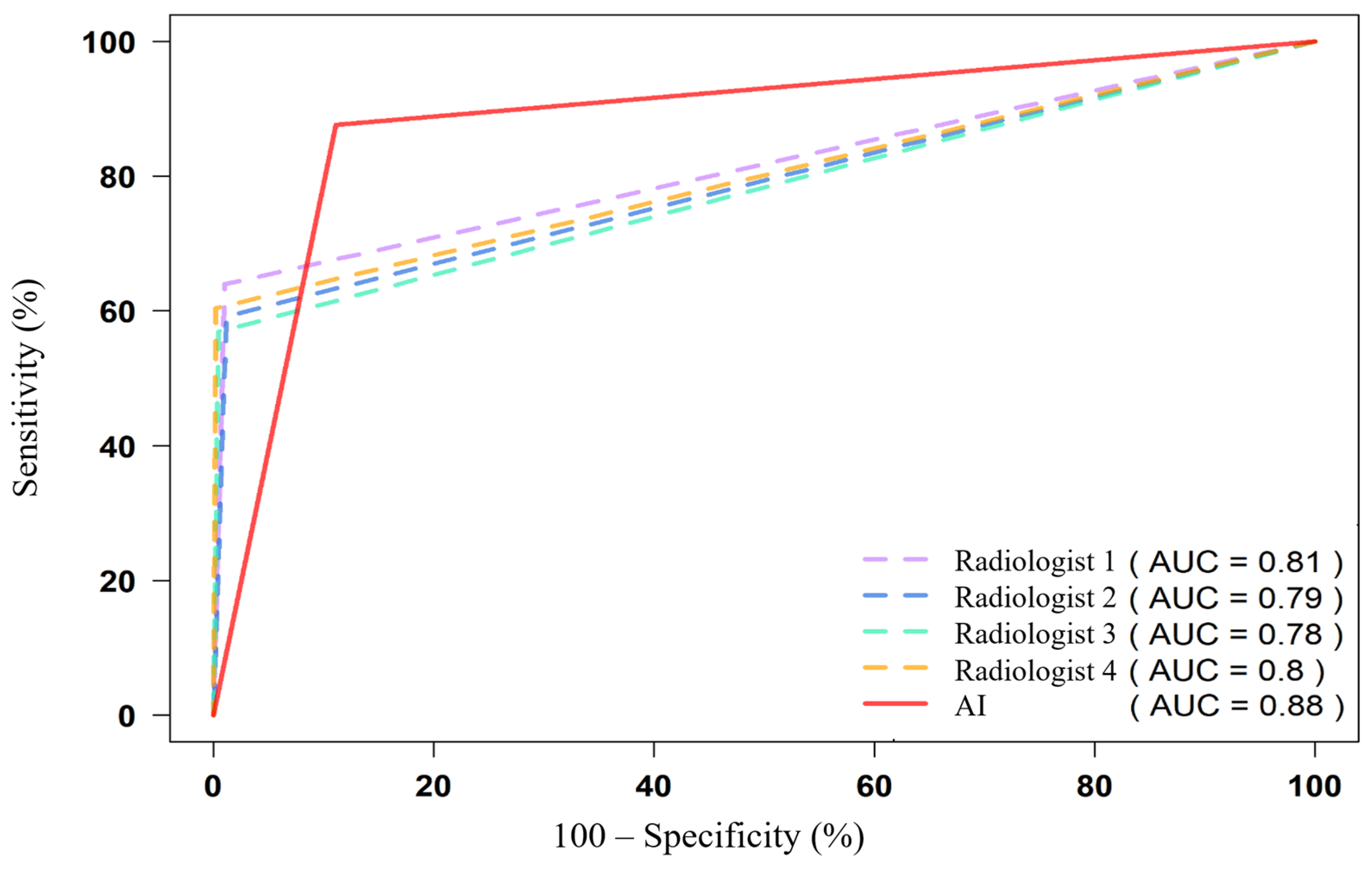

3.4. ROC Analysis and AUC Values

3.5. Diagnostic Performance Categories

- Aortic dilatation/aneurysm;

- Vertebral compression fractures;

- Rib fractures;

- Pulmonary artery dilatation;

- Low vertebral body density;

- Increased epicardial fat volume.

4. Discussion

4.1. Strengths and Limitations

- Key strengths include:

4.2. Public Health Implications

4.3. Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| CT | Computed tomography |
| AUC | Area under the ROC curve |
| ROC | Receiver operating characteristic |
| CI | Confidence interval |
| DICOM | Digital Imaging and Communications in Medicine |
| SR | Structured report |
| IRB | Institutional review board |
| APC | Article processing charge |
| MRMC | Multi-reader multi-case |
| HU | Hounsfield unit |
| TP | True positive |
| FP | False positive |
| TN | True negative |
| FN | False negative |
| CAC | Coronary artery calcification |
| UMLS | Unified Medical Language System |
| BIMCV-COVID-19+ | Biomedical Imaging and COVID-19 dataset (Valencia region) |

References

- Katal, S.; York, B.; Gholamrezanezhad, A. AI in Radiology: From Promise to Practice—A Guide to Effective Integration. Eur. J. Radiol. 2024, 181, 111798.

- Buijs, E.; Maggioni, E.; Mazziotta, F.; Lega, F.; Carrafiello, G. Clinical Impact of AI in Radiology Department Management: A Systematic Review. Radiol. Med. 2024, 129, 1656–1666.

- Pokataev, I.A.; Dudina, I.A.; Kolomiets, L.A.; Morkhov, K.Y.; Nechushkina, V.M.; Rumyantsev, A.A.; Tyulyadin, S.A.; Urmancheeva, A.F.; Khokhlova, S.V. Ovarian Cancer, Primary Peritoneal Cancer, and Fallopian Tube Cancer: Practical Recommendations of RUSSCO, Part 1.2. Malig. Tumors 2024, 14, 82–101. (In Russian)

- Chernina, V.Y.; Belyaev, M.G.; Silin, A.Y.; Avetisov, I.O.; Pyatnitskiy, I.A.; Petrash, E.A.; Basova, M.V.; Sinitsyn, V.E.; Omelyanovskiy, V.V.; Gombolevskiy, V.A.; et al. Diagnostic and Economic Evaluation of a Comprehensive Artificial Intelligence Algorithm for Detecting Ten Pathological Findings on Chest Computed Tomography. Diagnostics 2023, 4, 105–132. (In Russian)

- Wildman-Tobriner, B.; Allen, B.C.; Maxfield, C.M. Common Resident Errors When Interpreting Computed Tomography of the Abdomen and Pelvis: A Review of Types, Pitfalls, and Strategies for Improvement. Curr. Probl. Diagn. Radiol. 2019, 48, 4–9.

- Nechaev, V.A.; Vasiliev, A.Y. Risk Factors for Perception Errors among Radiologists in the Analysis of Imaging Studies. Vestnik Surgu. Med. 2024, 17, 14–22. (In Russian)

- Mongan, J.; Moy, L.; Kahn, C.E., Jr. Checklist for Artificial Intelligence in Medical Imaging (CLAIM): A Guide for Authors and Reviewers. Radiol. Artif. Intell. 2020, 2, e200029.

- Bossuyt, P.M.; Reitsma, J.B.; Bruns, D.E.; Gatsonis, C.A.; Glasziou, P.P.; Irwig, L.; Lijmer, J.G.; Moher, D.; Rennie, D.; de Vet, H.C.W.; et al. STARD 2015: An Updated List of Essential Items for Reporting Diagnostic Accuracy Studies. BMJ 2015, 351, h5527.

- de la Iglesia Vayá, M.; Saborit-Torres, J.M.; Serrano, J.A.M.; Oliver-Garcia, E.; Pertusa, A.; Bustos, A.; Cazorla, M.; Galant, J.; Barber, X.; Orozco-Beltrán, D.; et al. BIMCV COVID-19: A Large Annotated Dataset of RX and CT Images from COVID-19 Patients. IEEE Dataport 2021.

- Kulberg, N.S.; Reshetnikov, R.V.; Novik, V.P.; Elizarov, A.B.; Gusev, M.A.; Gombolevskiy, V.A.; Vladzymyrskyy, A.V.; Morozov, S.P. Inter-Observer Variability Between Readers of CT Images: All for One and One for All. Digit. Diagn. 2021, 2, 105–118. (In Russian)

- Gombolevskiy, V.A.; Masri, A.G.; Kim, S.Y.; Morozov, S.P. Manual for Radiology Technicians on Performing CT Examination Protocols. In Methodological Guidelines No. 17; SBHI “SPCC for Diagnostics and Telemedicine Tech.”; Moscow Healthcare Department: Moscow, Russia, 2017; 56p. (In Russian)

- Morozov, S.P.; Vladzimirskiy, A.V.; Klyashtorny, V.G. Clinical Trials of Software Based on Intelligent Technologies (Radiology). In Best Practices in Radiology and Instrumental Diagnostics, Issue 23; SBHI “SPCC for Diagnostics and Telemedicine Tech.”; Moscow Healthcare Department: Moscow, Russia, 2019; 33p. (In Russian)

- Vasiliev, Y.A. (Ed.) Computer Vision in Radiology: The First Stage of the Moscow Experiment, 2nd rev. and exp. ed.; Izdatelskie Resheniya: Moscow, Russia, 2023; 376p. (In Russian)

- DeLong, E.R.; DeLong, D.M.; Clarke-Pearson, D.L. Comparing the Areas under Two or More Correlated ROC Curves: A Nonparametric Approach. Biometrics 1988, 44, 837–845.

- RStudio Team. RStudio (Posit). 2023. Available online: https://posit.co/ (accessed on 16 September 2025).

- Gamer, M.; Lemon, J.; Fellows, I.; Singh, P. irr: Various Coefficients of Interrater Reliability and Agreement. R Package Version 0.84.1. Available online: https://CRAN.R-project.org/package=irr (accessed on 16 September 2025).

- Robin, X.; Turck, N.; Hainard, A.; Tiberti, N.; Lisacek, F.; Sanchez, J.-C.; Müller, M. pROC: An Open-Source Package to Analyze and Compare ROC Curves. BMC Bioinform. 2011, 12, 77.

- GraphPad Software. GraphPad Prism, Version 10.2.2. 2024. Available online: https://www.graphpad.com/ (accessed on 16 September 2025).

- Chakraborty, D.P. Observer Performance Methods for Diagnostic Imaging: Foundations, Modeling, and Applications with R-Based Examples; CRC Press: Boca Raton, FL, USA, 2017.

- Plesner, L.L.; Müller, F.C.; Brejnebøl, M.W.; Laustrup, L.C.; Rasmussen, F.; Nielsen, O.W.; Boesen, M.; Andersen, M.B. Commercially Available Chest Radiograph AI Tools for Detecting Airspace Disease, Pneumothorax, and Pleural Effusion. Radiology 2023, 308, e231236.

- Räty, P.; Mentula, P.; Lampela, H.; Nykänen, T.; Helanterä, I.; Haapio, M.; Lehtimäki, T.; Skrifvars, M.B.; Vaara, S.T.; Leppäniemi, A.; et al. Intravenous Contrast CT versus Native CT in Acute Abdomen with Impaired Renal Function (INCARO): Study Protocol. BMJ Open 2020, 10, e037928.

- Vasilev, Y.A.; Vladzimirskyy, A.V.; Omelyanskaya, O.V.; Reshetnikov, R.V.; Blokhin, I.A.; Kodenko, M.M.; Nanova, O.G. A Review of Meta-Analyses on the Application of AI in Radiology. Med. Vis. 2024, 28, 22–41. (In Russian)

- Aggarwal, R.; Sounderajah, V.; Martin, G.; Ting, D.S.W.; Karthikesalingam, A.; King, D.; Ashrafian, H.; Darzi, A. Diagnostic Accuracy of Deep Learning in Medical Imaging: A Systematic Review and Meta-Analysis. npj Digit. Med. 2021, 4, 65.

- Seah, J.C.Y.; Tang, C.H.M.; Buchlak, Q.D.; Holt, X.G.; Wardman, J.B.; Aimoldin, A.; Esmaili, N.; Ahmad, H.; Pham, H.; Lambert, J.F.; et al. Effect of a Comprehensive Deep-Learning Model on Chest X-Ray Interpretation Accuracy: A Retrospective MRMC Study. Lancet Digit. Health 2021, 3, e496–e506.

- Bernstein, M.H.; Atalay, M.K.; Dibble, E.H.; Maxwell, A.W.P.; Karam, A.R.; Agarwal, S.; Ward, R.C.; Healey, T.T.; Baird, G.L. Can Incorrect AI Results Impact Radiologists? A Multi-Reader Pilot Study of Lung Cancer Detection with Chest Radiography. Eur. Radiol. 2023, 33, 8263–8269.

- Zeng, A.; Houssami, N.; Noguchi, N.; Nickel, B.; Marinovich, M.L. Frequency and Characteristics of Errors by Artificial Intelligence (AI) in Reading Screening Mammography: A Systematic Review. Breast Cancer Res. Treat. 2024, 207, 1–13.

- Behzad, S.; Tabatabaei, S.M.H.; Lu, M.Y.; Eibschutz, L.S.; Gholamrezanezhad, A. Pitfalls in Interpretive Applications of AI in Radiology. Am. J. Roentgenol. 2024, 223, e2431493.

- Pedrosa, J.; Aresta, G.; Ferreira, C.; Carvalho, C.; Silva, J.; Sousa, P.; Ribeiro, L.; Mendonça, A.M.; Campilho, A. Assessing Clinical Applicability of COVID-19 Detection in Chest Radiography with Deep Learning. Sci. Rep. 2022, 12, 6596.

- Vasilev, Y.A.; Vladzymyrskyy, A.V.; Arzamasov, K.M.; Shulkin, I.M.; Astapenko, E.V.; Pestrenin, L.D. Limitations in the Application of AI Services for Chest Radiograph Analysis. Digit. Diagn. 2024, 5, 407–420. (In Russian)

| Abnormalities | Thresholds |
|---|---|
| Pulmonary nodules | Presence of at least one pulmonary nodule or lesion larger than 6 mm in short-axis diameter |
| Airspace opacities (including consolidations/infiltrates) | Presence of any size/volume |
| Emphysema | Presence of any volume |
| Aortic dilatation/aneurysm | For aortic dilatation, the threshold diameters were defined as follows: ≥40 mm for the ascending aorta and aortic arch, ≥30 mm for the descending thoracic aorta, ≥25 mm for the abdominal aorta. For aortic aneurysm, the threshold was defined as a diameter > 55 mm in any segment. |
| Pulmonary artery dilatation | >30 mm in diameter |
| Coronary artery calcium | Agatston score > 1 |
| Enlarged intrathoracic lymph nodes | Presence of at least one intrathoracic lymph node enlarged to >15 mm in short-axis diameter |
| Adrenal thickening | Presence of thickening >10 mm |
| Urolithiasis | Presence of at least one urinary calculus |
| Rib fractures | Presence of at least one fracture |
| Low vertebral body density | Attenuation < +150 HU |
| Vertebral compression fractures | Vertebral body deformity > 25% (Genant grade 2) |
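The thresholds above amount to a set of simple comparison rules. The following Python sketch shows how such rules could be applied to per-study measurements to obtain binary labels. It is not the AI service's actual implementation: the `Measurements` fields, the `binarize` function and the aortic segment keys are hypothetical names chosen for illustration, and the presence-only features (airspace opacities, emphysema, urolithiasis, rib fractures) are left out because they need no numeric cut-off. Strict and non-strict inequalities follow the table, for example ≥40 mm for the ascending aorta but >30 mm for the pulmonary artery.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Segment-specific aortic dilatation thresholds (mm, inclusive) from the table.
# The segment keys are hypothetical names chosen for this sketch.
AORTA_DILATATION_MM: Dict[str, float] = {
    "ascending": 40.0,
    "arch": 40.0,
    "descending_thoracic": 30.0,
    "abdominal": 25.0,
}
AORTA_ANEURYSM_MM = 55.0  # aneurysm: diameter > 55 mm in any segment


@dataclass
class Measurements:
    """Hypothetical per-study measurements; field names are illustrative only."""
    max_nodule_short_axis_mm: float = 0.0
    aorta_diameters_mm: Optional[Dict[str, float]] = None  # segment -> diameter
    pulmonary_artery_mm: float = 0.0
    agatston_score: float = 0.0
    max_lymph_node_short_axis_mm: float = 0.0
    adrenal_thickness_mm: float = 0.0
    vertebral_attenuation_hu: Optional[float] = None
    max_vertebral_deformity_pct: float = 0.0


def binarize(m: Measurements) -> Dict[str, bool]:
    """Apply the table's cut-offs; raises KeyError for an unknown aortic segment."""
    aorta = m.aorta_diameters_mm or {}
    dilated = any(d >= AORTA_DILATATION_MM[seg] for seg, d in aorta.items())
    aneurysm = any(d > AORTA_ANEURYSM_MM for d in aorta.values())
    return {
        "pulmonary_nodules": m.max_nodule_short_axis_mm > 6.0,
        "aortic_dilatation_or_aneurysm": dilated or aneurysm,
        "pulmonary_artery_dilatation": m.pulmonary_artery_mm > 30.0,
        "coronary_artery_calcium": m.agatston_score > 1.0,
        "enlarged_intrathoracic_lymph_nodes": m.max_lymph_node_short_axis_mm > 15.0,
        "adrenal_thickening": m.adrenal_thickness_mm > 10.0,
        # A missing attenuation value is treated as "not low" in this sketch.
        "low_vertebral_body_density": (
            m.vertebral_attenuation_hu is not None
            and m.vertebral_attenuation_hu < 150.0
        ),
        # Deformity > 25% (Genant grade 2 per the table).
        "vertebral_compression_fractures": m.max_vertebral_deformity_pct > 25.0,
    }


# Example with hypothetical values.
example = Measurements(
    max_nodule_short_axis_mm=7.0,
    aorta_diameters_mm={"ascending": 36.0, "abdominal": 26.0},
    vertebral_attenuation_hu=120.0,
)
print(binarize(example))
```

Because any diameter above 55 mm also exceeds every segment's dilatation threshold, the aneurysm check does not change the combined label; it is kept separate only to mirror the two definitions in the table.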

| Pathology | Positive Cases (n) | Negative Cases (n) | Prevalence (%) |
|---|---|---|---|
| Coronary artery calcium | 162 | 67 | 70.7 |
| Renal lesions | 148 | 81 | 64.6 |
| Airspace opacities | 144 | 85 | 62.9 |
| Low vertebral density | 126 | 103 | 55.0 |
| Emphysema | 98 | 131 | 42.8 |
| Liver lesions | 97 | 132 | 42.4 |
| Pulmonary nodules | 96 | 133 | 41.9 |
| Pleural effusion | 73 | 156 | 31.9 |
| Adrenal thickening | 69 | 160 | 30.1 |
| Rib fractures | 63 | 166 | 27.5 |
| Enlarged intrathoracic lymph nodes | 55 | 174 | 24.0 |
| Pulmonary artery dilatation | 54 | 175 | 23.6 |
| Vertebral compression fractures | 51 | 178 | 22.3 |
| Epicardial fat | 38 | 191 | 16.6 |
| Aortic dilatation/aneurysm | 32 | 197 | 14.0 |
| Urolithiasis | 22 | 207 | 9.6 |
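Prevalence in the table is the share of positive cases among the 229 studies. A short Python check, using only the counts copied from the table, reproduces each percentage and the total number of positive cases. The 16 positive counts sum to 1328, the figure given for "All pathologies" in the reader comparison table. The `COUNTS` dictionary and `prevalence_pct` helper are names introduced for this sketch.

```python
# Positive and negative case counts copied from the prevalence table.
COUNTS = {
    "Coronary artery calcium": (162, 67),
    "Renal lesions": (148, 81),
    "Airspace opacities": (144, 85),
    "Low vertebral density": (126, 103),
    "Emphysema": (98, 131),
    "Liver lesions": (97, 132),
    "Pulmonary nodules": (96, 133),
    "Pleural effusion": (73, 156),
    "Adrenal thickening": (69, 160),
    "Rib fractures": (63, 166),
    "Enlarged intrathoracic lymph nodes": (55, 174),
    "Pulmonary artery dilatation": (54, 175),
    "Vertebral compression fractures": (51, 178),
    "Epicardial fat": (38, 191),
    "Aortic dilatation/aneurysm": (32, 197),
    "Urolithiasis": (22, 207),
}


def prevalence_pct(positive: int, negative: int) -> float:
    """Prevalence (%) = positives / all studies, rounded to one decimal."""
    return round(100 * positive / (positive + negative), 1)


for name, (pos, neg) in COUNTS.items():
    assert pos + neg == 229, name  # every row covers the same 229 studies
    print(f"{name}: {prevalence_pct(pos, neg)}%")

total_positive = sum(pos for pos, _ in COUNTS.values())
print("Total positive cases:", total_positive)
```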

| Pathology (positive cases, n of 229) | Radiologist 1, AUC [95% CI] | Radiologist 2, AUC [95% CI] | Radiologist 3, AUC [95% CI] | Radiologist 4, AUC [95% CI] | AI, AUC [95% CI] |
|---|---|---|---|---|---|
| Enlarged intrathoracic lymph nodes (55) | 0.952 [0.916–0.989] | 0.964 [0.932–0.996] | 0.891 [0.836–0.946] | 0.936 [0.892–0.981] | 0.854 [0.803–0.904] |
| Aortic dilatation/aneurysm (32) | 0.681 [0.593–0.769] | 0.567 [0.505–0.629] | 0.567 [0.505–0.629] | 0.55 [0.495–0.605] | 0.947 [0.926–0.969] |
| Vertebral compression fractures (51) | 0.6 [0.544–0.656] | 0.55 [0.508–0.592] | 0.56 [0.515–0.605] | 0.55 [0.508–0.592] | 0.943 [0.913–0.972] |
| Coronary artery calcification (CAC) (162) | 0.838 [0.784–0.893] | 0.73 [0.665–0.794] | 0.837 [0.788–0.885] | 0.864 [0.825–0.903] | 0.875 [0.824–0.926] |
| Lung nodules (96) | 0.906 [0.867–0.945] | 0.887 [0.845–0.929] | 0.849 [0.803–0.895] | 0.909 [0.87–0.948] | 0.863 [0.818–0.909] |
| Urolithiasis (22) | 0.818 [0.715–0.921] | 0.773 [0.666–0.879] | 0.795 [0.69–0.901] | 0.773 [0.666–0.879] | 0.523 [0.478–0.567] |
| Airspace opacities (infiltrates, consolidations) (144) | 0.92 [0.89–0.95] | 0.941 [0.915–0.967] | 0.948 [0.923–0.973] | 0.965 [0.944–0.986] | 0.81 [0.757–0.862] |
| Liver masses (97) | 0.969 [0.945–0.993] | 0.918 [0.88–0.955] | 0.852 [0.806–0.898] | 0.928 [0.893–0.963] | 0.793 [0.741–0.846] |
| Renal masses (148) | 0.964 [0.94–0.988] | 0.949 [0.924–0.974] | 0.949 [0.924–0.974] | 0.967 [0.945–0.989] | 0.778 [0.732–0.824] |
| Rib fractures (63) | 0.574 [0.529–0.619] | 0.574 [0.529–0.619] | 0.557 [0.517–0.598] | 0.541 [0.506–0.576] | 0.899 [0.868–0.929] |
| Pleural effusion (73) | 0.942 [0.905–0.979] | 0.952 [0.918–0.986] | 0.952 [0.918–0.986] | 0.938 [0.9–0.976] | 0.929 [0.9–0.959] |
| Pulmonary artery dilatation (54) | 0.574 [0.526–0.622] | 0.565 [0.52–0.61] | 0.528 [0.497–0.559] | 0.519 [0.493–0.544] | 0.959 [0.929–0.988] |
| Low vertebral body density (126) | 0.504 [0.496–0.513] | 0.513 [0.498–0.528] | 0.504 [0.496–0.513] | 0.509 [0.497–0.521] | 0.9 [0.863–0.937] |
| Adrenal thickening (69) | 0.848 [0.793–0.903] | 0.75 [0.691–0.81] | 0.726 [0.666–0.786] | 0.768 [0.709–0.827] | 0.849 [0.795–0.902] |
| Emphysema (98) | 0.738 [0.687–0.79] | 0.704 [0.654–0.755] | 0.742 [0.691–0.793] | 0.78 [0.729–0.83] | 0.941 [0.914–0.968] |
| All pathologies (1328) | 0.815 [0.802–0.828] | 0.790 [0.777–0.804] | 0.782 [0.769–0.796] | 0.801 [0.788–0.814] | 0.883 [0.872–0.894] |
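The AUC values above are reported with 95% confidence intervals, and the reference list cites DeLong et al. and the pROC package. As an illustrative sketch only, not the authors' analysis code, the Python below computes the empirical AUC as a Mann-Whitney statistic together with a DeLong standard error and a normal-approximation interval. It also shows a property that helps when reading the table: if a reader's or the AI's output is a single binary label, the empirical AUC reduces to (sensitivity + specificity) / 2, so a reader who almost never reports a finding would score close to 0.5. Whether every output in the table was binarized is an assumption here; `psi`, `delong_auc_ci` and the toy scores are hypothetical, and clipping the interval to [0, 1] is a choice made in this sketch.

```python
import math
from statistics import NormalDist
from typing import Sequence, Tuple


def psi(x: float, y: float) -> float:
    """Mann-Whitney kernel: 1 if the positive case scores higher, 0.5 on a tie."""
    if x > y:
        return 1.0
    if x == y:
        return 0.5
    return 0.0


def delong_auc_ci(
    pos_scores: Sequence[float],
    neg_scores: Sequence[float],
    level: float = 0.95,
) -> Tuple[float, Tuple[float, float]]:
    """Empirical AUC with a DeLong (1988) variance and a normal-approximation CI."""
    m, n = len(pos_scores), len(neg_scores)
    if m < 2 or n < 2:
        raise ValueError("need at least two positive and two negative cases")
    # Structural components: mean kernel value per positive and per negative case.
    v10 = [sum(psi(x, y) for y in neg_scores) / n for x in pos_scores]
    v01 = [sum(psi(x, y) for x in pos_scores) / m for y in neg_scores]
    auc = sum(v10) / m
    s10 = sum((v - auc) ** 2 for v in v10) / (m - 1)
    s01 = sum((v - auc) ** 2 for v in v01) / (n - 1)
    se = math.sqrt(s10 / m + s01 / n)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    # Clipping to [0, 1] is a choice made in this sketch.
    return auc, (max(0.0, auc - z * se), min(1.0, auc + z * se))


# Hypothetical binary outputs: 10 positive and 20 negative studies.
pos = [1] * 8 + [0] * 2   # 8 of 10 positives flagged: sensitivity 0.8
neg = [0] * 18 + [1] * 2  # 18 of 20 negatives cleared: specificity 0.9
auc, (lo, hi) = delong_auc_ci(pos, neg)

# For a binary output, P(X > Y) = Se*Sp and P(X = Y) = Se*(1-Sp) + (1-Se)*Sp,
# so AUC = P(X > Y) + 0.5*P(X = Y) = (Se + Sp) / 2.
print(round(auc, 3), round((0.8 + 0.9) / 2, 3), (round(lo, 3), round(hi, 3)))
```

The kernel counts ties as one half, which is what makes a binary output collapse to the average of sensitivity and specificity rather than to sensitivity times specificity.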

| Pathology | AUC [95% CI] |
|---|---|
| Airspace opacities (infiltrates, consolidations) | 0.90 [0.85–0.95] |
| Emphysema | 1.00 [0.95–1.00] |
| Lung nodules | 0.97 [0.93–1.00] |
| Enlarged intrathoracic lymph nodes | 0.94 [0.89–0.99] |
| Pleural effusion | 1.00 [0.98–1.00] |
| Aortic dilatation/aneurysm | 1.00 [1.00–1.00] |
| Coronary artery calcification (CAC) | 0.96 [0.69–1.00] |
| Adrenal thickening | 0.95 [0.93–1.00] |
| Rib fractures | 0.98 [0.89–1.00] |
| Vertebral compression fractures | 0.73 [0.32–1.00] |
| Liver masses | 0.87 [0.79–0.95] |
| Renal masses | 0.86 [0.69–1.00] |
| Urolithiasis | 0.55 [0.00–1.00] |
| Average value for all pathologies | 0.90 [0.71–1.00] |
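The "Average value for all pathologies" row appears to be consistent with an unweighted arithmetic mean of the 13 per-pathology point estimates. The Python check below uses only the values copied from the table; the `AUCS` dictionary is a name introduced for this sketch, and it does not attempt to reproduce the reported interval, whose derivation is not described in this section.

```python
from statistics import mean

# Per-pathology AUC point estimates copied from the table above.
AUCS = {
    "Airspace opacities": 0.90,
    "Emphysema": 1.00,
    "Lung nodules": 0.97,
    "Enlarged intrathoracic lymph nodes": 0.94,
    "Pleural effusion": 1.00,
    "Aortic dilatation/aneurysm": 1.00,
    "Coronary artery calcification": 0.96,
    "Adrenal thickening": 0.95,
    "Rib fractures": 0.98,
    "Vertebral compression fractures": 0.73,
    "Liver masses": 0.87,
    "Renal masses": 0.86,
    "Urolithiasis": 0.55,
}

# Each pathology counts equally, regardless of its number of positive cases.
unweighted_mean = mean(AUCS.values())
print(f"{len(AUCS)} pathologies, mean AUC = {unweighted_mean:.2f}")
# prints: 13 pathologies, mean AUC = 0.90
```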

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nechaev, V.A.; Kashtanova, N.Y.; Kopeykin, E.V.; Magomedova, U.M.; Gribkova, M.S.; Hardin, A.V.; Sekacheva, M.I.; Sanikovich, V.D.; Chernina, V.Y.; Gombolevskiy, V.A. Diagnostic Accuracy of a Multi-Target Artificial Intelligence Service for the Simultaneous Assessment of 16 Pathological Features on Chest and Abdominal CT. Diagnostics 2025, 15, 2778. https://doi.org/10.3390/diagnostics15212778
