Precision Through Detail: Radiomics and Windowing Techniques as Key for Detecting Dens Axis Fractures in CT Scans

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design and Patient Selection

2.2. Image Data Acquisition and Data Processing

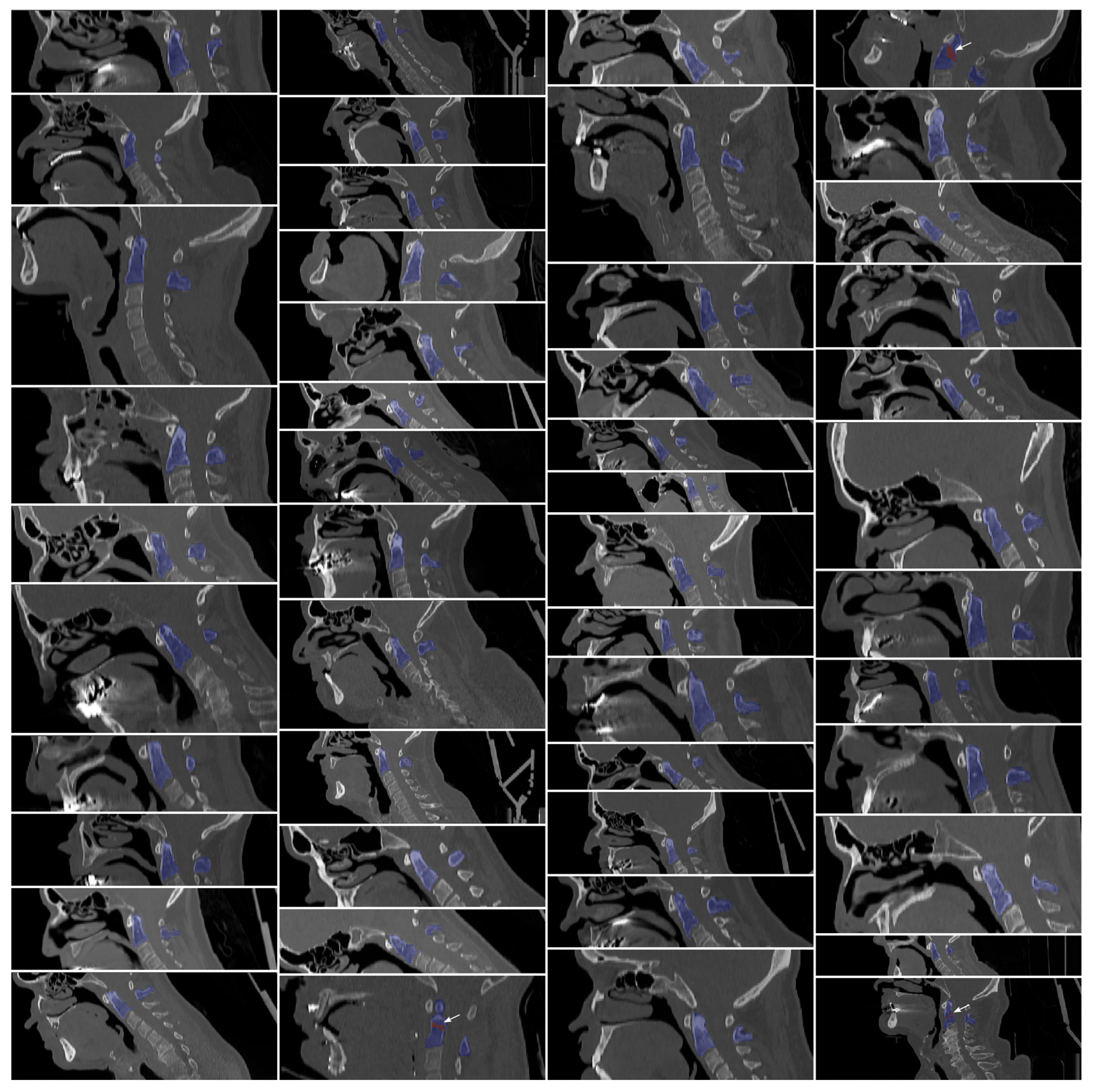

2.2.1. Manual Segmentations

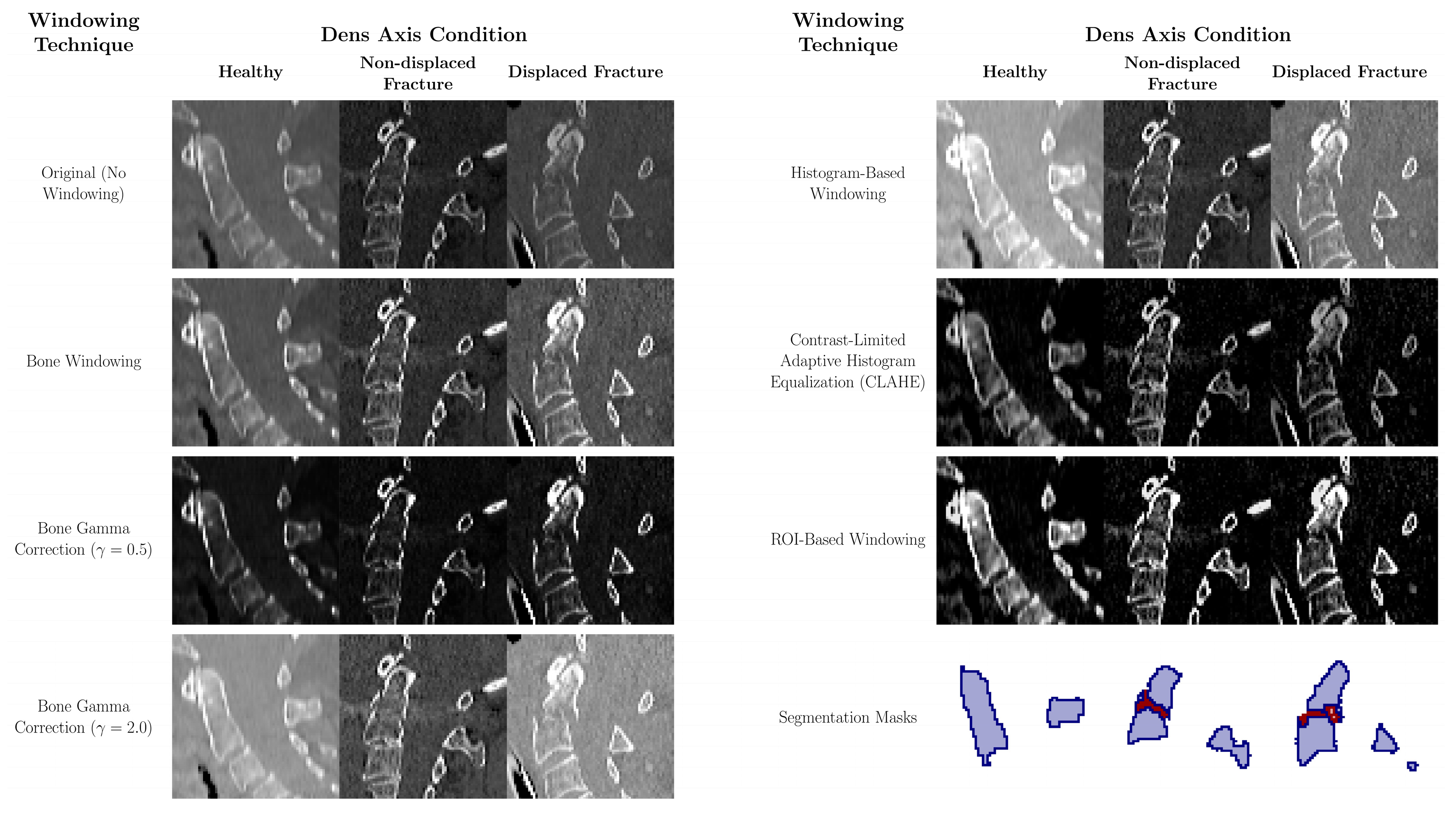

2.2.2. Data Pre-Processing and Contrast Adjustment

- Bone Windowing: A conventional windowing technique that enhances bone visualization using a standard clinical bone window with a window level of 400 Hounsfield units (HU) and a window width of 2000 HU [21]. HU values within the resulting range of -600 to 1400 HU are mapped linearly to the display intensity range, and values outside it are clipped. This setting highlights bone density and structure, facilitating the identification and assessment of bone pathologies.

- Bone Gamma Correction: This post-processing technique modifies the intensity distribution of bone-windowed images by applying gamma correction. Two gamma values were tested: γ = 0.5 to increase brightness and γ = 2.0 to reduce it [16]. With intensities normalized to [0, 1], gamma values below 1 brighten the image and stretch contrast among darker intensities, whereas values above 1 darken it and stretch contrast among brighter intensities. Together, the two settings allow finer details in both low- and high-intensity regions to emerge, particularly aiding in the detection of bone fractures.

- Histogram-Based Windowing: In this method, the window boundaries are derived from the intensity histogram of the entire image: the 5th and 95th percentiles of the pixel intensities define the lower and upper window limits, effectively clipping extreme pixel values [22]. This technique adapts the contrast to each image's intensity distribution, reducing over- and underexposure and thereby improving image quality.

- Contrast-Limited Adaptive Histogram Equalization (CLAHE): CLAHE increases local contrast by dividing the image into small tiles and applying histogram equalization to each tile individually; each tile's histogram is clipped at a predefined limit to restrict noise amplification, and neighboring tiles are blended to avoid boundary artifacts [17,18]. This method improves the visibility of subtle structures, especially in low-contrast areas, without excessively increasing global contrast. While commonly applied in lung imaging [18,19], recent evidence supports its utility in bone imaging; for example, Park et al. demonstrated that CLAHE improved classification performance in bone scintigraphy [19].

- ROI-Based Windowing: This targeted method uses the segmentation mask of the dens to compute the 5th and 95th percentile intensity values within the ROI and its immediate surroundings. These values are then applied uniformly to the entire image to maintain consistent contrast and avoid localized discrepancies. Because a single window is applied to the whole image, the result remains clinically readable, and in a two-stage pipeline such as Model M2, the procedure can be fully automated using the upstream segmentation results.
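The five contrast-adjustment strategies described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the study's implementation: all function names are illustrative; outputs are scaled to [0, 1]; gamma correction is assumed to take the power-law form I_out = I_in^γ applied after bone windowing; CLAHE uses scikit-image's `equalize_adapthist` with its default clip limit as a stand-in, applied to a min-max-normalized image; and the "immediate surroundings" of the dens in ROI-based windowing are approximated by the mask's bounding box padded by a hypothetical `margin` of voxels.

```python
from typing import Optional

import numpy as np


def apply_window(hu: np.ndarray, level: float, width: float) -> np.ndarray:
    """Linearly map HU values in [level - width/2, level + width/2] to [0, 1]; clip the rest."""
    lower = level - width / 2.0
    upper = level + width / 2.0
    return np.clip((hu - lower) / (upper - lower), 0.0, 1.0)


def bone_window(hu: np.ndarray, level: float = 400.0, width: float = 2000.0) -> np.ndarray:
    """Conventional bone window: level 400 HU, width 2000 HU (i.e. -600 to 1400 HU)."""
    return apply_window(hu, level, width)


def gamma_correct(windowed: np.ndarray, gamma: float) -> np.ndarray:
    """Power-law gamma correction on a [0, 1] image (assumed form: out = in ** gamma).

    gamma < 1 brightens and stretches dark intensities;
    gamma > 1 darkens and stretches bright intensities.
    """
    return np.power(windowed, gamma)


def percentile_window(hu: np.ndarray, values: Optional[np.ndarray] = None,
                      lower_pct: float = 5.0, upper_pct: float = 95.0) -> np.ndarray:
    """Window the whole image between percentiles of `values` (default: the whole image)."""
    source = hu if values is None else values
    lower, upper = np.percentile(source, [lower_pct, upper_pct])
    if upper <= lower:
        # Degenerate case (e.g. constant intensities): no usable window.
        return np.zeros(hu.shape, dtype=float)
    return np.clip((hu - lower) / (upper - lower), 0.0, 1.0)


def roi_window(hu: np.ndarray, mask: np.ndarray, margin: int = 5) -> np.ndarray:
    """ROI-based windowing: percentiles from the dens ROI plus surroundings, applied globally.

    Assumption (hypothetical): "immediate surroundings" = the mask's bounding box
    padded by `margin` voxels on every side, cropped to the image extent.
    """
    coords = np.argwhere(mask)
    if coords.size == 0:
        raise ValueError("segmentation mask is empty")
    start = np.maximum(coords.min(axis=0) - margin, 0)
    stop = np.minimum(coords.max(axis=0) + margin + 1, mask.shape)
    region = hu[tuple(slice(a, b) for a, b in zip(start, stop))]
    # The window is derived locally but applied uniformly to the entire image.
    return percentile_window(hu, values=region)


def clahe(hu: np.ndarray, clip_limit: float = 0.01) -> np.ndarray:
    """CLAHE via scikit-image as a stand-in; the study's settings are not given here."""
    from skimage import exposure  # lazy import: the other functions need only NumPy

    lo, hi = float(hu.min()), float(hu.max())
    scaled = (hu - lo) / (hi - lo) if hi > lo else np.zeros(hu.shape, dtype=float)
    # equalize_adapthist expects floats in [0, 1]; it equalizes tiles, clips each
    # tile's histogram at clip_limit and interpolates between neighboring tiles.
    return exposure.equalize_adapthist(scaled, clip_limit=clip_limit)
```

In a two-stage pipeline, `mask` for `roi_window` would come from the upstream segmentation network rather than from manual labels; the window derived from the dens region is still applied to the whole image, consistent with the description above.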

2.2.3. Data Augmentation

2.3. CNN-Based Segmentation and Classification

2.3.1. M1—CNN- and FNN-Based Classification

2.3.2. M2—CNN-Based Segmentation and Radiomics Analysis

2.3.3. Computational Implementation and Evaluation

2.4. Evaluation Metrics and Statistical Analysis

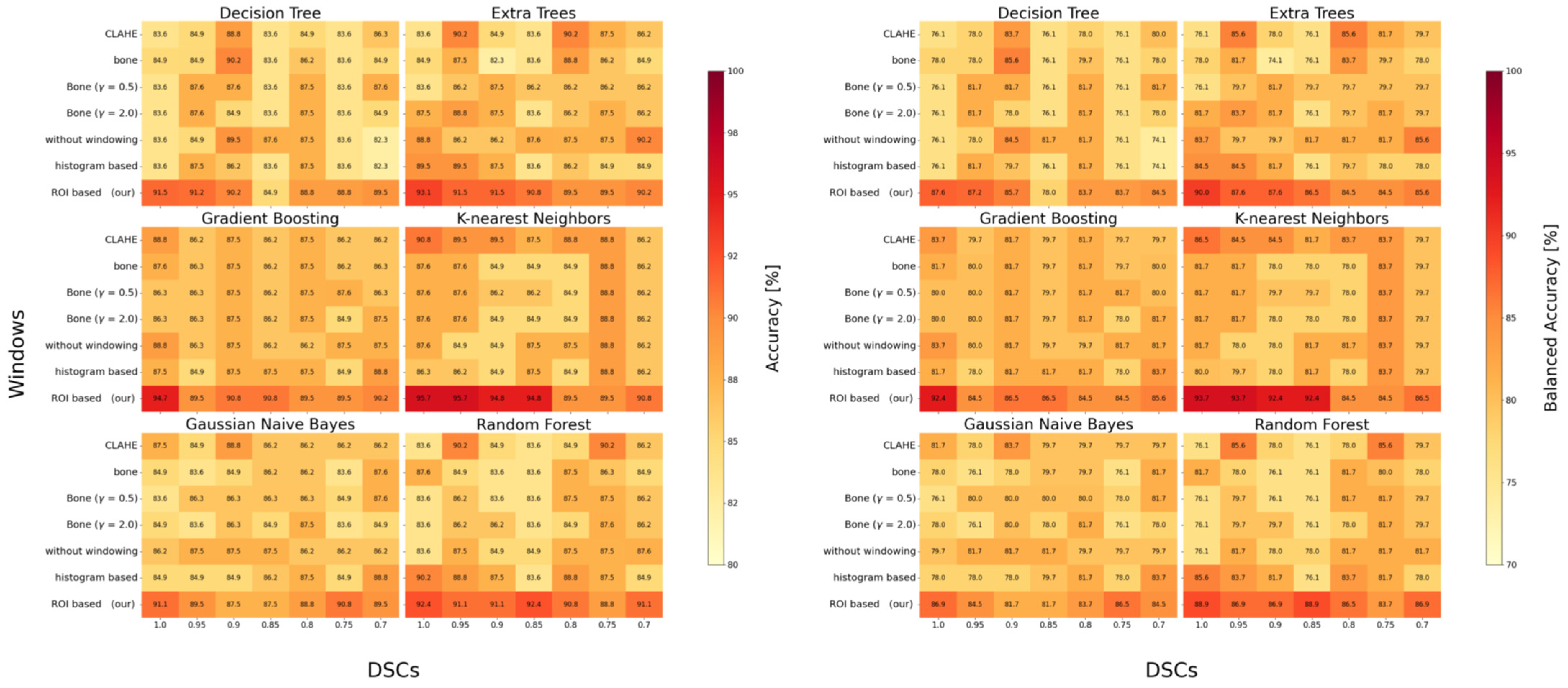

3. Results

3.1. Segmentation Performance

3.2. Classification Performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CT | Computed Tomography |
| DL | Deep Learning |
| CNN | Convolutional Neural Network |
| FNN | Feedforward Neural Network |
| ROI | Region of Interest |
| ML | Machine Learning |
| U-Net | U-shaped Convolutional Neural Network Architecture |
| 3D | Three-Dimensional |
| CLAHE | Contrast-Limited Adaptive Histogram Equalization |
| DSC | Dice Similarity Coefficient |
| SD | Standard Deviation |
| HU | Hounsfield Unit |
| CTDI_vol | Computed Tomography Dose Index Volume |
| PACS | Picture Archiving and Communication System |
| Adam | Adaptive Moment Estimation (optimizer) |
| CPU | Central Processing Unit |
| GPU | Graphics Processing Unit |
| MRI | Magnetic Resonance Imaging |
| AI | Artificial Intelligence |

References

1. Good, A.E.; Ramponi, D.R. Odontoid/Dens Fractures. Adv. Emerg. Nurs. J. 2024, 46, 38–43.
2. Krupinski, E.A.; Williams, M.B.; Andriole, K.; Strauss, K.J.; Applegate, K.; Wyatt, M.; Bjork, S.; Seibert, J.A. Digital radiography image quality: Image processing and display. J. Am. Coll. Radiol. 2007, 4, 389–400.
3. Kabasawa, H. MR Imaging in the 21st Century: Technical Innovation over the First Two Decades. Magn. Reson. Med. Sci. 2022, 21, 71–82.
4. Pruessmann, K.P.; Weiger, M.; Scheidegger, M.B.; Boesiger, P. SENSE: Sensitivity encoding for fast MRI. Magn. Reson. Med. 1999, 42, 952–962.
5. van Vaals, J.J.; Brummer, M.E.; Dixon, W.T.; Tuithof, H.H.; Engels, H.; Nelson, R.C.; Gerety, B.M.; Chezmar, J.L.; den Boer, J.A. “Keyhole” method for accelerating imaging of contrast agent uptake. J. Magn. Reson. Imaging 1993, 3, 671–675.
6. Chen, G.-H.; Tang, J.; Leng, S. Prior image constrained compressed sensing (PICCS): A method to accurately reconstruct dynamic CT images from highly undersampled projection data sets. Med. Phys. 2008, 35, 660–663.
7. Radke, K.L.; Hußmann, J.; Röwer, L.; Voit, D.; Frahm, J.; Antoch, G.; Klee, D.; Pillekamp, F.; Wittsack, H.-J. “shortCardiac”—An open-source framework for fast and standardized assessment of cardiac function. SoftwareX 2023, 23, 101453.
8. Zhang, R.; Shi, J.; Liu, S.; Chen, B.; Li, W. Performance of radiomics models derived from different CT reconstruction parameters for lung cancer risk prediction. BMC Pulm. Med. 2023, 23, 132.
9. Wang, W.; Fan, Z.; Zhen, J. MRI radiomics-based evaluation of tuberculous and brucella spondylitis. J. Int. Med. Res. 2023, 51, 3000605231195156.
10. Salahuddin, Z.; Woodruff, H.C.; Chatterjee, A.; Lambin, P. Transparency of deep neural networks for medical image analysis: A review of interpretability methods. Comput. Biol. Med. 2022, 140, 105111.
11. Bhatt, R.B.; Gopal, M. Neuro-fuzzy decision trees. Int. J. Neural Syst. 2006, 16, 63–78.
12. Zheng, Y.; Zhou, D.; Liu, H.; Wen, M. CT-based radiomics analysis of different machine learning models for differentiating benign and malignant parotid tumors. Eur. Radiol. 2022, 32, 6953–6964.
13. Benfante, V.; Salvaggio, G.; Ali, M.; Cutaia, G.; Salvaggio, L.; Salerno, S.; Busè, G.; Tulone, G.; Pavan, N.; Di Raimondo, D.; et al. Grading and Staging of Bladder Tumors Using Radiomics Analysis in Magnetic Resonance Imaging. In Image Analysis and Processing-ICIAP 2023 Workshops; Foresti, G.L., Fusiello, A., Hancock, E., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 93–103. ISBN 978-3-031-51025-0.
14. Liang, C.; Cheng, Z.; Huang, Y.; He, L.; Chen, X.; Ma, Z.; Huang, X.; Liang, C.; Liu, Z. An MRI-based Radiomics Classifier for Preoperative Prediction of Ki-67 Status in Breast Cancer. Acad. Radiol. 2018, 25, 1111–1117.
15. Gutsche, R.; Lohmann, P.; Hoevels, M.; Ruess, D.; Galldiks, N.; Visser-Vandewalle, V.; Treuer, H.; Ruge, M.; Kocher, M. Radiomics outperforms semantic features for prediction of response to stereotactic radiosurgery in brain metastases. Radiother. Oncol. 2022, 166, 37–43.
16. Zhou, Y.; Shi, C.; Lai, B.; Jimenez, G. Contrast enhancement of medical images using a new version of the World Cup Optimization algorithm. Quant. Imaging Med. Surg. 2019, 9, 1528–1547.
17. Yoshimi, Y.; Mine, Y.; Ito, S.; Takeda, S.; Okazaki, S.; Nakamoto, T.; Nagasaki, T.; Kakimoto, N.; Murayama, T.; Tanimoto, K. Image preprocessing with contrast-limited adaptive histogram equalization improves the segmentation performance of deep learning for the articular disk of the temporomandibular joint on magnetic resonance images. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2023, 138, 128–141.
18. Alwakid, G.; Gouda, W.; Humayun, M. Deep Learning-Based Prediction of Diabetic Retinopathy Using CLAHE and ESRGAN for Enhancement. Healthcare 2023, 11, 863.
19. Park, K.S.; Cho, S.-G.; Kim, J.; Song, H.-C. Effect of contrast-limited adaptive histogram equalization on deep learning models for classifying bone scans. J. Nucl. Med. 2022, 63, 3240.
20. Yushkevich, P.A.; Piven, J.; Hazlett, H.C.; Smith, R.G.; Ho, S.; Gee, J.C.; Gerig, G. User-guided 3D active contour segmentation of anatomical structures: Significantly improved efficiency and reliability. Neuroimage 2006, 31, 1116–1128.
21. O’Broin, E.S.; Morrin, M.; Breathnach, E.; Allcutt, D.; Earley, M.J. Titanium mesh and bone dust calvarial patch during cranioplasty. Cleft Palate Craniofac. J. 1997, 34, 354–356.
22. Hemminger, B.M.; Zong, S.; Muller, K.E.; Coffey, C.S.; DeLuca, M.C.; Johnston, R.E.; Pisano, E.D. Improving the detection of simulated masses in mammograms through two different image-processing techniques. Acad. Radiol. 2001, 8, 845–855.
23. Khan, Z.; Yahya, N.; Alsaih, K.; Ali, S.S.A.; Meriaudeau, F. Evaluation of Deep Neural Networks for Semantic Segmentation of Prostate in T2W MRI. Sensors 2020, 20, 3183.
24. Radke, K.L.; Wollschläger, L.M.; Nebelung, S.; Abrar, D.B.; Schleich, C.; Boschheidgen, M.; Frenken, M.; Schock, J.; Klee, D.; Frahm, J.; et al. Deep Learning-Based Post-Processing of Real-Time MRI to Assess and Quantify Dynamic Wrist Movement in Health and Disease. Diagnostics 2021, 11, 1077.
25. Turečková, A.; Tureček, T.; Komínková Oplatková, Z.; Rodríguez-Sánchez, A. Improving CT Image Tumor Segmentation Through Deep Supervision and Attentional Gates. Front. Robot. AI 2020, 7, 106.
26. van Griethuysen, J.J.M.; Fedorov, A.; Parmar, C.; Hosny, A.; Aucoin, N.; Narayan, V.; Beets-Tan, R.G.H.; Fillion-Robin, J.-C.; Pieper, S.; Aerts, H.J.W.L. Computational Radiomics System to Decode the Radiographic Phenotype. Cancer Res. 2017, 77, e104–e107.
27. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 8024–8035.
28. Falcon, W.; Borovec, J.; Wälchli, A.; Eggert, N.; Schock, J.; Jordan, J.; Skafte, N.; Ir1dXD; Bereznyuk, V.; Harris, E.; et al. PyTorchLightning/Pytorch-Lightning: 0.7.6 Release. 2020. Available online: https://zenodo.org/records/3828935 (accessed on 5 September 2025).
29. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. 2014. Available online: https://arxiv.org/pdf/1412.6980.pdf (accessed on 5 September 2025).
30. Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Jorge Cardoso, M. Generalised Dice Overlap as a Deep Learning Loss Function for Highly Unbalanced Segmentations. 2017. Available online: https://arxiv.org/pdf/1707.03237.pdf (accessed on 13 May 2025).
31. Ma, Y.; Liu, Q.; Quan, Z. Automated image segmentation using improved PCNN model based on cross-entropy. In Proceedings of the 2004 International Symposium on Intelligent Multimedia, Video and Speech Processing, ISIMP 2004, Hong Kong, China, 20–22 October 2004; IEEE Operations Center: Piscataway, NJ, USA, 2004; pp. 743–746, ISBN 0-7803-8687-6.
32. Giaccone, P.; Benfante, V.; Stefano, A.; Cammarata, F.P.; Russo, G.; Comelli, A. PET Images Atlas-Based Segmentation Performed in Native and in Template Space: A Radiomics Repeatability Study in Mouse Models. In Image Analysis and Processing. ICIAP 2022 Workshops; Mazzeo, P.L., Frontoni, E., Sclaroff, S., Distante, C., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 351–361. ISBN 978-3-031-13320-6.
33. Ren, M.; Yi, P.H. Deep learning detection of subtle fractures using staged algorithms to mimic radiologist search pattern. Skeletal Radiol. 2022, 51, 345–353.
34. Li, W.-G.; Zeng, R.; Lu, Y.; Li, W.-X.; Wang, T.-T.; Lin, H.; Peng, Y.; Gong, L.-G. The value of radiomics-based CT combined with machine learning in the diagnosis of occult vertebral fractures. BMC Musculoskelet. Disord. 2023, 24, 819.
35. Salehinejad, H.; Ho, E.; Lin, H.-M.; Crivellaro, P.; Samorodova, O.; Arciniegas, M.T.; Merali, Z.; Suthiphosuwan, S.; Bharatha, A.; Yeom, K.; et al. Deep Sequential Learning for Cervical Spine Fracture Detection on Computed Tomography Imaging. 2020. Available online: http://arxiv.org/pdf/2010.13336v4 (accessed on 3 October 2025).
36. Small, J.E.; Osler, P.; Paul, A.B.; Kunst, M. CT Cervical Spine Fracture Detection Using a Convolutional Neural Network. AJNR Am. J. Neuroradiol. 2021, 42, 1341–1347.
37. Chłąd, P.; Ogiela, M.R. Deep Learning and Cloud-Based Computation for Cervical Spine Fracture Detection System. Electronics 2023, 12, 2056.
38. Zhang, J.; Xia, L.; Zhang, X.; Liu, J.; Tang, J.; Xia, J.; Liu, Y.; Zhang, W.; Liang, Z.; Tang, G.; et al. Development and validation of a predictive model for vertebral fracture risk in osteoporosis patients. Eur. Spine J. 2024, 33, 3242–3260.
39. Singh, M.; Tripathi, U.; Patel, K.K.; Mohit, K.; Pathak, S. An efficient deep learning based approach for automated identification of cervical vertebrae fracture as a clinical support aid. Sci. Rep. 2025, 15, 25651.
40. Liu, J.; Zhang, L.; Yuan, Y.; Tang, J.; Liu, Y.; Xia, L.; Zhang, J. Deep Learning Radiomics Model Based on Computed Tomography Image for Predicting the Classification of Osteoporotic Vertebral Fractures: Algorithm Development and Validation. JMIR Med. Inform. 2025, 13, e75665.
41. Bruschetta, R.; Tartarisco, G.; Lucca, L.F.; Leto, E.; Ursino, M.; Tonin, P.; Pioggia, G.; Cerasa, A. Predicting Outcome of Traumatic Brain Injury: Is Machine Learning the Best Way? Biomedicines 2022, 10, 686.
42. Fricke, C.; Alizadeh, J.; Zakhary, N.; Woost, T.B.; Bogdan, M.; Classen, J. Evaluation of Three Machine Learning Algorithms for the Automatic Classification of EMG Patterns in Gait Disorders. Front. Neurol. 2021, 12, 666458.
43. Choy, G.; Khalilzadeh, O.; Michalski, M.; Do, S.; Samir, A.E.; Pianykh, O.S.; Geis, J.R.; Pandharipande, P.V.; Brink, J.A.; Dreyer, K.J. Current Applications and Future Impact of Machine Learning in Radiology. Radiology 2018, 288, 318–328.
44. Koçak, B.; Durmaz, E.Ş.; Ateş, E.; Kılıçkesmez, Ö. Radiomics with artificial intelligence: A practical guide for beginners. Diagn. Interv. Radiol. 2019, 25, 485–495.

| Windowing | Model 1: Accuracy/Balanced Accuracy | Model 2: Segmentation DSC (Mean ± SD) | Model 2: Accuracy/Balanced Accuracy |
|---|---|---|---|
| Without windowing | 0.937/0.811 | 0.91 ± 0.01 | 0.853/0.865 |
| CLAHE | 0.875/0.734 | 0.92 ± 0.01 | 0.895/0.817 |
| Bone (γ = 1.0) | 0.918/0.834 | 0.85 ± 0.01 | 0.849/0.817 |
| Bone (γ = 0.5) | 0.932/0.862 | 0.82 ± 0.03 | 0.853/0.817 |
| Bone (γ = 2.0) | 0.891/0.817 | 0.90 ± 0.01 | 0.849/0.817 |
| Histogram-based | 0.902/0.825 | 0.94 ± 0.01 | 0.862/0.800 |
| ROI-based ¹ | (0.942/0.881) | n/a | 0.957/0.937 |
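The table above reports segmentation quality as the Dice similarity coefficient (DSC) and classification performance as accuracy and balanced accuracy. The following sketch assumes the standard definitions, DSC = 2|A ∩ B| / (|A| + |B|) and balanced accuracy = mean per-class recall (the definition used by scikit-learn's `balanced_accuracy_score`); the study's own evaluation code is not shown in this section, and the function names and toy labels are hypothetical. It illustrates why accuracy can exceed balanced accuracy, as in the Model 1 "without windowing" row (0.937 vs. 0.811): when a classifier performs better on the more frequent class, overall accuracy rewards that class, whereas balanced accuracy weights each class equally.

```python
import numpy as np


def dice(pred: np.ndarray, truth: np.ndarray) -> float:
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) for binary masks."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)


def accuracy(y_true, y_pred) -> float:
    """Fraction of correctly classified cases."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return float(np.mean(y_true == y_pred))


def balanced_accuracy(y_true, y_pred) -> float:
    """Mean per-class recall; for two classes, (sensitivity + specificity) / 2."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    recalls = [np.mean(y_pred[y_true == c] == c) for c in np.unique(y_true)]
    return float(np.mean(recalls))


if __name__ == "__main__":
    # Toy labels (hypothetical, not study data): 90 non-fractured and 10 fractured
    # cases; the classifier misses 5 fractures and makes no false-positive calls.
    y_true = [0] * 90 + [1] * 10
    y_pred = [0] * 90 + [1] * 5 + [0] * 5
    print(accuracy(y_true, y_pred), balanced_accuracy(y_true, y_pred))  # 0.95 0.75
```

In this toy case, accuracy stays high because the 90 negatives are all correct, while balanced accuracy drops to 0.75 because only half of the fractures are detected.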

| Study | Region | Preprocessing | Pipeline/Classifier | Performance |
|---|---|---|---|---|
| Salehinejad et al., 2021 [35] | Cervical fracture (C1–C7) | CT | ResNet50-CNN + BLSTM | Acc: 70.9–79.2% |
| Small et al., 2021 [36] | Cervical fracture (C1–C7) | CT | CNN (Aidoc) | Acc: 92% |
| Chłąd et al., 2023 [37] | Cervical fracture (slice-based) | CT, bone window | YOLOv5 + Vision Transformer | Acc: 98% |
| Li et al., 2023 [34] | Occult vertebral fractures (cervical + thoracolumbar) | CT, bone window | Radiomics + ML | Acc: 84.6% |
| Zhang et al., 2024 [38] | Osteoporotic vertebral fractures (cervical + thoracolumbar) | CT, RadImageNet feature extraction | CNN (RadImageNet vs. ImageNet) | C-index: 0.795 |
| Singh et al., 2025 [39] | Cervical fracture (C1–C7) | HU normalization | Inception-ResNet-v2 + U-Net decoder | Acc: 98.4% |
| Liu et al., 2025 [40] | Vertebral fractures + osteoporosis (incl. cervical, thoracolumbar) | CT, RadImageNet-pretrained features | CNN (RadImageNet vs. ImageNet) | Acc: 76–80% (per class) |
| This study | Dens axis fracture | ROI-based windowing | U-Net segmentation + radiomics + KNN | Acc: 95.7% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Radke, K.L.; Müller-Lutz, A.; Abrar, D.B.; Vach, M.; Rubbert, C.; Latz, D.; Antoch, G.; Wittsack, H.-J.; Nebelung, S.; Wilms, L.M. Precision Through Detail: Radiomics and Windowing Techniques as Key for Detecting Dens Axis Fractures in CT Scans. Diagnostics 2025, 15, 2599. https://doi.org/10.3390/diagnostics15202599
