1. Introduction

Breast cancer is one of the most common types of cancer in women. The mortality rates due to breast cancer are high worldwide [

1]. Millions of women are diagnosed with breast cancer every year. Mortality rates of this disease are directly related to the time of diagnosis. Mortality rates can be reduced with an early breast cancer diagnosis and treatment. Therefore, the development of cancer screening, examination, and imaging technologies is extremely important [

2,

3]. According to World Health Organization (WHO) data, in 2022, 670,000 people died due to breast cancer [

4]. According to research conducted worldwide, breast cancer is the most common type of cancer in women in 157 of 185 countries. Breast cancer is a type of disease seen in every country worldwide [

4]. The World Health Organization (WHO) aims to prevent 2.5 million deaths from breast cancer between 2020 and 2040 by reducing global breast cancer mortality rates by 2.5% per year. To reduce the breast cancer mortality rate worldwide and prevent 25% of deaths by 2030 and 40% by 2040. To achieve these goals, regular health check-ups, early diagnosis, and comprehensive breast cancer management are required [

4]. Early diagnosis methods are usually performed with medical imaging techniques such as mammography, magnetic resonance imaging (MRI) [

5], and ultrasound (US) [

2,

6,

7,

8,

9]. Early diagnosis methods include medical imaging techniques such as mammography, magnetic resonance imaging (MRI), and ultrasound (US). Ultrasound offers an important alternative, especially in women with dense breast tissue, because it is non-invasive and easily accessible. However, the interpretation of ultrasound images usually depends on the experience of the radiologist, which increases subjectivity. In recent years, artificial intelligence and deep learning-based systems have been developed for the automatic classification of breast lesions from ultrasound images and are integrated into clinical applications as an auxiliary tool in early diagnosis processes. Thus, the development of artificial intelligence-based automatic detection and diagnosis methods for medical image analysis increases diagnostic accuracy and reduces clinical workload. The accuracy rate is constantly increased when the studies conducted in this field in recent years are examined, and new generation architectures such as Vision Transformer (ViT) are being developed.

1.1. Related Works

Many artificial intelligence-based models are used for automatic detection using cancer images. Different models are used for the detection of cancerous images, segmentation of cancerous areas, and determination of cancer type. In this study, cancer images were classified using the BUSI dataset. Studies developed using the BUSI dataset in the literature were investigated and their contributions were examined.

Table 1 summarizes the current studies and methods in the literature using the BUSI dataset.

Table 1 summarizes current studies on classification and segmentation using the BUSI dataset and highlights two major trends: (i) a transition from CNN-based models to hybrid CNN–transformer and pure transformer architectures, and (ii) an increasing use of segmentation methods to support classification. Classification approaches are generally developed either for binary setups (cancer vs. normal) or for three-class setups (normal, benign, malignant). Most studies rely on deep or machine learning methods, where feature extraction is typically performed with pre-trained models and then classified using deep or machine learning classifiers. Hybrid strategies that combine multiple models are often employed to achieve higher accuracy, and more recent approaches, such as the vision transformer, divide images into patches to capture long-range dependencies. Segmentation-based methods have also become prominent, supported by lesion masks available in the BUSI dataset, with architectures such as U-Net++, EfficientUNet, Attention-driven U-Net, and CMU-Net being widely used. While binary classification setups frequently achieve very high accuracies, the three-class scenario remains more challenging and requires ROI-focused preprocessing, stronger regularization, and class balancing. ROI-based approaches are particularly critical as they enable the model to focus on diagnostically relevant lesion regions while reducing the influence of noisy background tissue, thereby improving both accuracy and clinical interpretability. Over the past five years, transformer-based models, hybrid architectures, and optimized U-Net derivatives have increasingly dominated BUSI-related research. These developments formed the motivation for our proposed method, which explicitly combines ROI cropping to suppress background noise, Vision Transformer architectures to capture global contextual information, and focal loss to alleviate the inherent class imbalance in the BUSI dataset.

1.2. Contribution and Novelty

The main contributions of this study to the literature can be summarized as follows.

ROI-Based Transformer Classification: Lesion regions (ROIs) were automatically extracted with mask files based on expert annotations, which were directly input to the model. Thus, only meaningful regions were classified.

The use of vision transformer (ViT) models over traditional CNN-based approaches to capture global dependencies in breast ultrasound images, which are often overlooked by local receptive field structures in CNNs.

A hybrid architecture combining ViT and a custom-designed MLP head, which enhances classification capability by capturing both global and non-linear patterns.

The integration of an ensemble of ViT variants (ViT-Base, DeiT, and ViT-Small), which boosts robustness and generalization across different folds.

The use of focal loss with a customized α coefficient to mitigate class imbalance—especially relevant due to the natural distribution of benign, malignant, and normal cases in the BUSI dataset.

Advanced data augmentation techniques (CutMix, ElasticTransform) and test-time augmentation (TTA) strategies to prevent overfitting and improve generalization.

Comprehensive Evaluation: The model was tested with 10-fold stratified cross-validation; many metrics, such as accuracy, precision, recall, F1-score, specificity, and AUC, were reported in detail on a class basis.

High Applicability: High recall and AUC values were obtained for the clinically critical class malignant, demonstrating a reliable decision support potential for early diagnosis.

With these aspects, the study aims to fill the gap in the literature by addressing the applicability of ViT-based architectures in medical image classification in an ROI-aware and ensemble structure.

2. Materials and Methods

In this study, a new classification approach based on mask-based ROI segmentation and ViT architecture is proposed to distinguish benign, malignant, and normal tissues in breast ultrasound images. The lesion region was extracted from each ultrasound image using expert annotation-based mask images. The obtained ROIs are enriched with various data augmentation techniques and given as input to pre-trained vision transformer (ViT) models. The representations obtained from the Transformer are classified using a multilayer MLP header structure. In addition, the results obtained from three different ViT models are combined with the ensemble method to improve the overall prediction performance. Focal loss and CutMix regularization strategies are applied to address class imbalance. The proposed method was tested with a 10-fold cross-validation, and its reliability was verified. The details of this approach are presented under headings in this section.

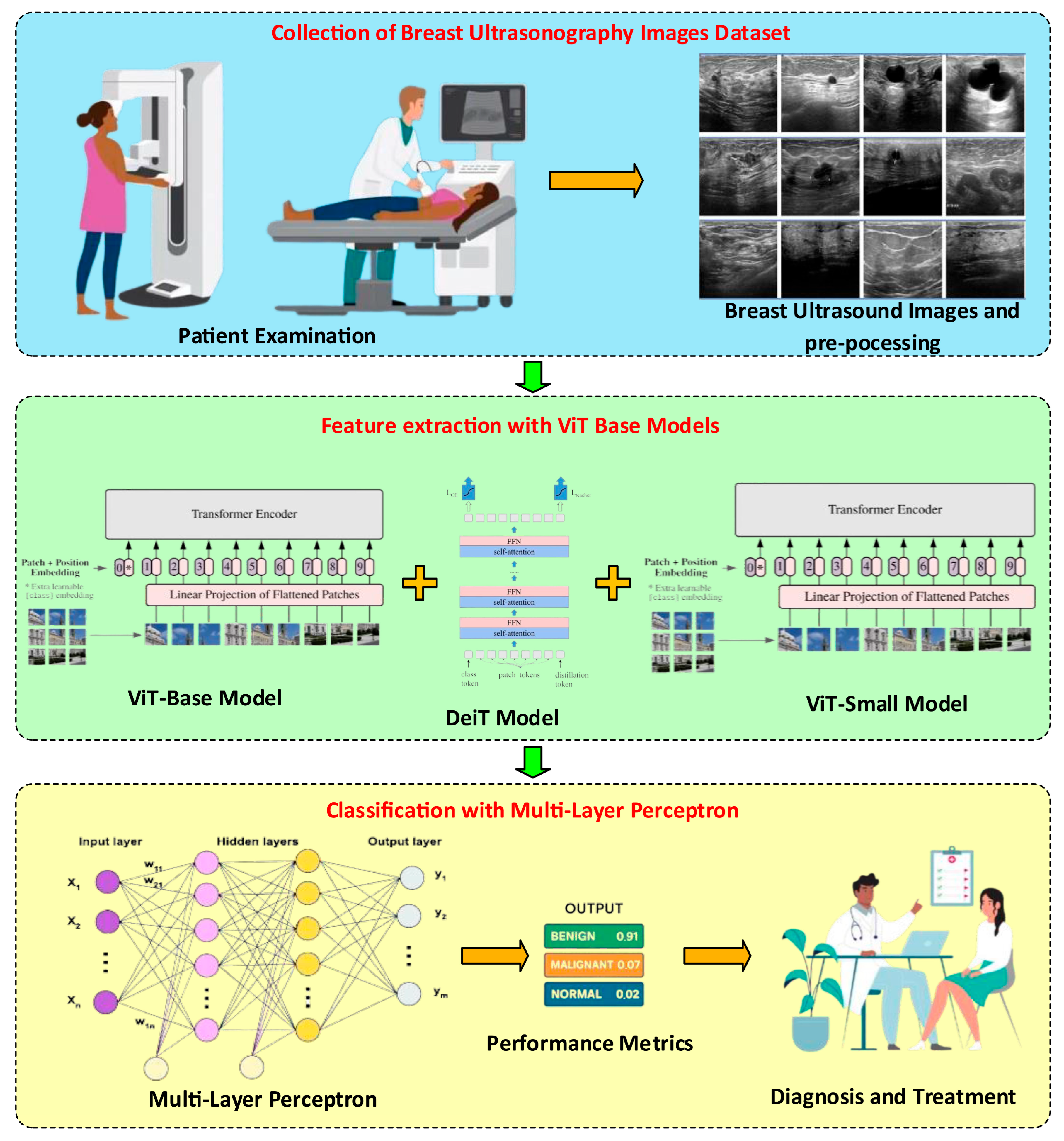

Figure 1 summarizes the general architecture of the proposed method.

Figure 1 shows that the proposed method comprises three basic steps. In the first step, we investigated the BUSI dataset. Image preprocessing was performed on the BUSI dataset images. In the image preprocessing steps, the lesion region (ROI) was automatically extracted using the method. The purpose of determining and cropping the lesion regions and cropping them from the main image is to ensure that the model is trained on the real image. In particular, it provides feature extraction on lesion images instead of the details in the non-lesion regions. The number of images was then increased using general image augmentation algorithms. After the preprocessing step, feature extraction was performed on the normal, benign, and malignant images obtained. ViT-based (ViT_base, DeiT, and ViT_small) models were used for feature extraction. Due to the different structures of these three models, three different feature extractions were performed, and the obtained features were combined and used for classification. The multilayer perceptron model was used for the classification process.

2.1. BUSI Dataset Features

In this study, the BUSI breast ultrasound images dataset published by Al-Dhabyani et al. and made publicly available [

3] was used. The dataset consists of

780 ultrasound images belonging to three different classes:

benign, malignant, and normal (

Table 2). The expert-created lesion area is shown in the binary mask files for each image. Because no lesion exists in the images belonging to the “Normal” class, there is no mask file for this class. This section may be divided by subheadings. It should provide a concise and precise description of the experimental results, their interpretation, as well as the experimental conclusions that can be drawn.

The images are in png format and their resolutions vary. Mask files show each lesion area in binary format and are indicated with the _mask extension in the file names. These masks are used to determine the tumor area, allowing only meaningful (lesion-containing) regions to be included in the model input. This approach is important in terms of focusing the model’s attention only on relevant regions during the learning process. Sample images from the BUSI dataset are given in

Figure 2.

2.2. ROI Segmentation and Image Preprocessing

For each ultrasound image belonging to the

benign and malignant classes in the dataset,

the lesion region (ROI) was automatically extracted using matching mask files. In this process, if multiple mask files corresponding to each image were used, these masks were combined by finding the maximum value pixel-by-pixel. The resulting combined mask defines the region where the tumor area.

The active pixel coordinates of the obtained mask (i.e., pixels with values different from zero) were determined, and

a 5% horizontal and vertical buffer margin was added around the area where the lesion was located, thus taking into account the tissues around the lesion. The following equation illustrates the establishment of the ROI boundaries were established in Equation (1).

Here (x

min,x

max) and (y

min,y

max) represent the boundaries of the masked lesion region, wpad, and hpad represent the buffer ratios calculated over the image width and height, respectively. Using these boundaries, only the region of interest (ROI) was cropped from the images, ensuring that the model learned only from tumor-containing tissues. This process both increases the generalization ability of the model and reduces the effect of noisy background data. After the ROI cropping process, all images were resized to 224 × 224 for model compatibility. During the normalization process, the transformation was made as in Equation (2) so that the mean μ = 0.5 and the standard deviation σ = 0.5 for the RGB channels:

These preprocessing steps ensure that the data are processed more consistently and decisively by the model. This entire process was performed automatically during data loading, without any manual intervention. It was embedded in the custom BUSI Dataset PyTorch 2.5.0 class, and applies the same logic consistently for all images during both training and evaluation. This automated pipeline ensured that the model focuses only on the relevant lesion region, improving convergence and reducing background noise. Sample images from the ROI segmentation process are given in

Figure 3.

2.3. Data Augmentation Techniques

To enhance the generalization capabilities of deep learning models and mitigate the risk of overfitting during the training process,

multiple data augmentation techniques were applied to the training images. These methods were developed to modify the spatial arrangement of images (location, size, direction) and their photometric properties (luminance, sharpness, defocus). Thus, the model was made more robust to variations in real-world conditions. All augmentation operations were implemented using the

Albumentations library, which is renowned for its powerful and flexible structure. During the training process, each image was subjected to the transformations shown in

Table 3.

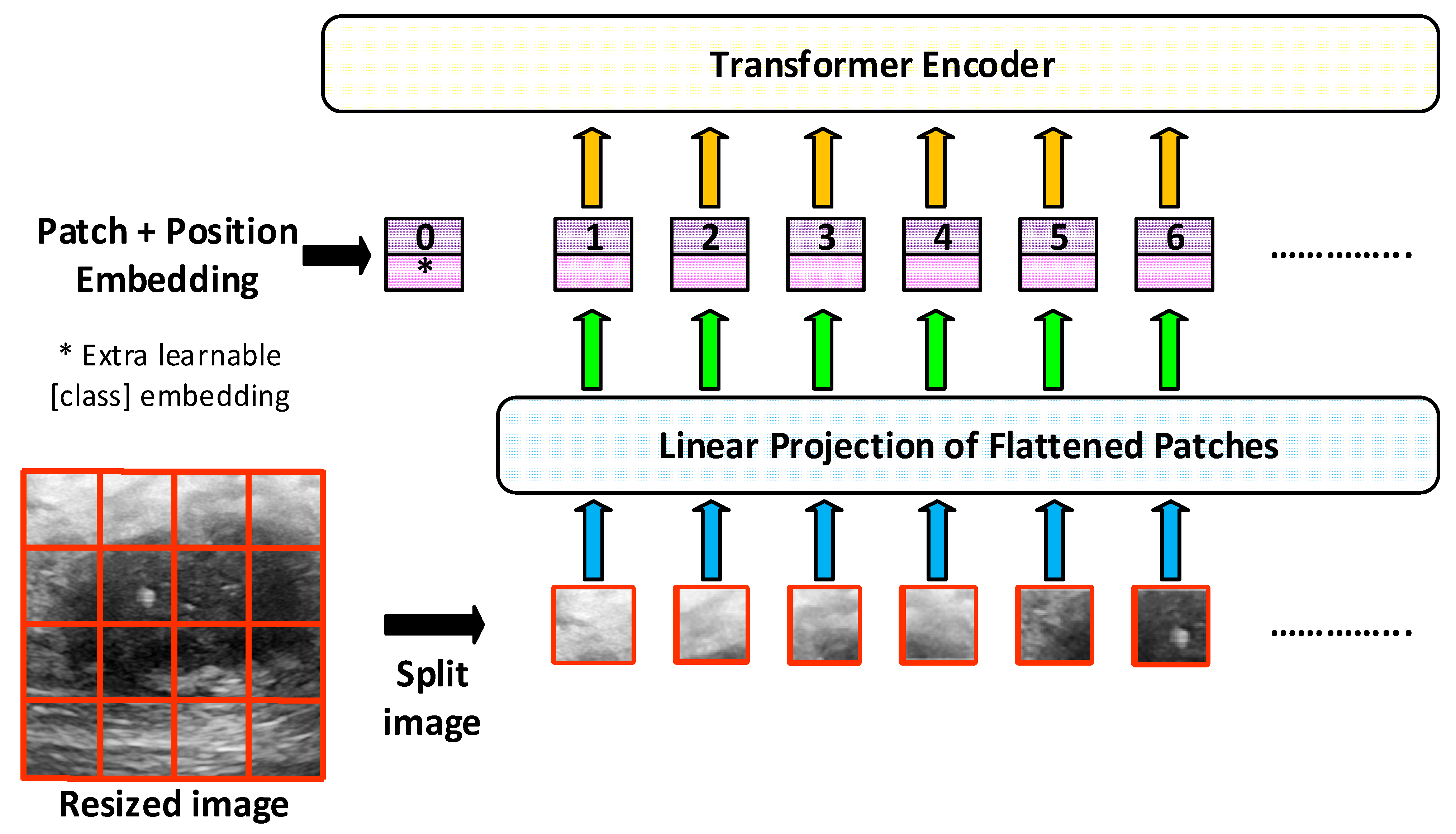

2.4. Vision Transformer-Based Feature Extraction

In this study, the vision transformer (ViT) architecture is used as an image-based classification model. Unlike traditional convolutional neural networks (CNNs), ViT divides images into small fixed-sized patches and feeds each one to a Transformer in turn. The Transformer architecture is notable for its ability to learn global context relationships between all patches and shows strong performance, especially in complex structures, such as medical images. The basic steps of the model can be summarized as follows:

Patch generation: The input image is of size

and is divided into small pieces of size

P × P. The number of each patch is calculated in Equation (3).

Flattening and Embedding: Each patch is converted to a fixed-size vector using the linear layer and Equation (4):

Here, E is the embedding weight matrix and represents the (pi)th patch.

Positional Embedding: The transformers lack position information; therefore, the fixed or learnable

positional embedding vectors are summed with P (Equation (5)):

Transformer Encoder Layers: Input vectors are passed through blocks consisting of multi-head self-attention (MHSA) and feedforward network layers (Equation (6)):

Here, LN represents the layer normalization process and MLP represents the two-layer fully connected network.

Classification Vector (CLS token): The [CLS] vector, which is specially placed at the end of the transformer, is used to represent the entire image. This vector is transferred to the classification head (MLP).

Vision transformer (ViT) is a deep learning architecture used in the field of image processing and is generally used in image classification [

26]. Transformer architectures, which were first used in the field of natural language processing, have been adapted to the field of image processing thanks to ViT. The purpose of this model is to divide the image into pieces. It usually divides the 224 × 224 image into small squares of 16 × 16. Each divided small piece is flattened and turned into a vector. Then, positional encoding is added for the location information in the image. Thus, feature extraction is performed on the image.

In this study, pre-trained ViT models such as vit_base_patch16_224, deit_base_distilled_patch16_224, and vit_small_patch16_224 are used. The general architecture of the ViT model is shown in

Figure 4.

In this study, the base and small types of the basic ViT model given in

Figure 3 are used. ViT-Small and ViT-Base models generally have the same vision transformer (ViT) architecture. However, they differ in terms of the parameter size, number of layers, and computational power. The ViT-Base model is preferred for deep analysis and strong classification performance in large datasets, where higher accuracy is required [

26,

27,

28,

29,

30]. The ViT-Small model is used for rapid prototyping, resource-limited environments, and developing practical applications in smaller datasets [

26].

Table 4 summarizes the general features of ViT-Base and ViT-Small models used in this study.

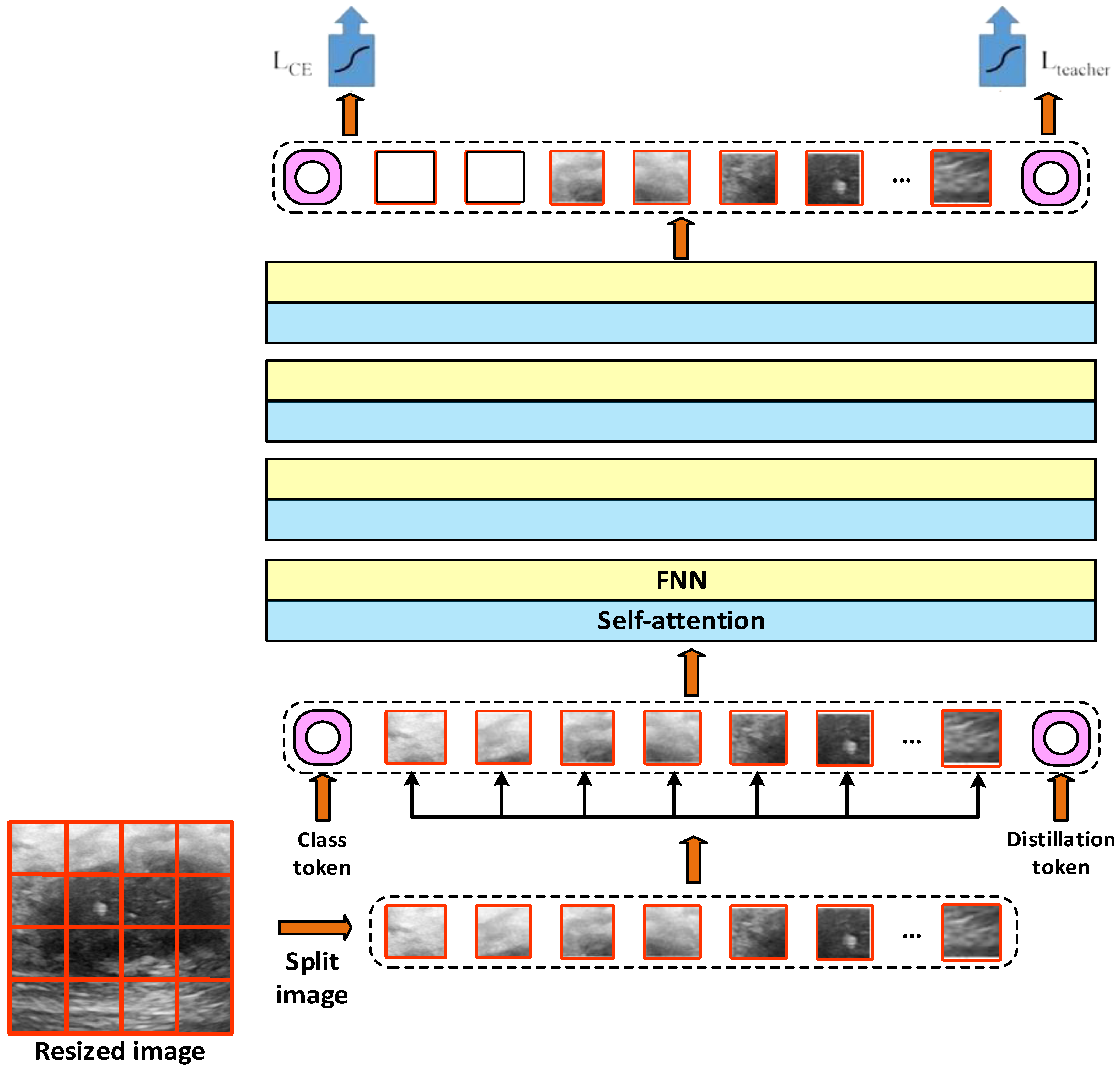

In addition to the features obtained with ViT-Base and ViT-Small, we performed feature extraction using the DeiT (data-efficient image transformer) model. DeiT is similar to the classical vision transformer (ViT) architecture. However, it is optimized for less data and higher accuracy [

31,

32,

33,

34,

35,

36]. The general architecture of the DeiT model is presented in

Figure 5 [

32].

Figure 4 shows that according to the DeiT model, the images are arranged according to a size of 224 × 224. These fixed-size images are divided into small pieces of 16 × 16 size. Each patch is flattened and converted to a fixed-size vector. The class token and the DeiT-specific distillation token are also included. The position information is added to the patch vectors and tokens. It is passed through multilayer self-attention and feedforward network (FNN) blocks. Classification is performed using the Class and Distillation token. Information from both the image and teaching model is blended thanks to the distillation token. The outputs of these models are then transferred to the defined multilayer perceptron (MLP) classifier.

2.5. Multi-Layer Perceptron (MLP) Classifier

Although the Transformer architecture is quite successful in extracting image representations, a final layer structure is usually needed to adapt these representations to the classification task. In this study, a three-class classifier is designed by adding

a multilayer perceptron (MLP) header structure to the end of the vision transformer (ViT) model. This MLP header takes the [CLS] vector obtained from the transformer as the input and produces the final class predictions. The layer structure of the MLP classifier header is listed in

Table 5.

The general structure of the model can be represented mathematically as in Equation (7):

Here, x represents the transformer output vector, W1,W2 weight matrices, b1,b2 represent bias terms. Thanks to this structure, high-dimensional representations of the transformer are classified through nonlinear transformations, allowing more precise distinctions to be made between different types of lesions.

2.6. Loss Function with Focal Loss

One of the most important difficulties frequently encountered in medical image classification problems is

class imbalance. In the BUSI dataset, there is a significant difference in the number of samples between the classes benign (437 samples), malignant (210 samples), and normal (133 samples). This situation may cause the model to be biased towards the majority class. In order to reduce this problem,

focal loss is used instead of the traditional cross-entropy loss in this study. Focal loss encourages the model to learn these classes by increasing the weight of the samples belonging to rare classes in the loss function. Its basic formula is given in Equation (8).

Here, pt is the probability that the model predicts the correct class. αt is the class-weight coefficient used to balance class imbalance. γ is the focusing parameter that reduces the contribution of correctly classified examples. When γ > 0, the model learns more about difficult examples. In this study, α = [1.0, 2.0, 1.0] is selected as the class weights (the malignant class is weighted twice as much), and γ = 2 is used as the focusing parameter. Thanks to this structure, the model can effectively recognize examples from minority classes without overfitting in the majority classes. Focal loss plays a critical role in improving performance, especially in the distinction between benign and malignant classes.

2.7. Editing with CutMix (Regularization)

In order to reduce overfitting in deep learning models and make the decision boundaries of the model more generalizable, a data augmentation-based regularization method called CutMix was applied in this study. The CutMix method combines two different images and performs a mixture at both the image and label level, allowing the model to learn more flexible representations. The CutMix method steps;

Two images (xA and xB) were randomly selected from the training samples.

A random rectangular region is determined from these images.

The pixels xB in the same region are pasted onto the selected region xA (Equation (9)):

Here, M represents the binary mask matrix and ⊙ represents element-wise multiplication.

- 4.

Similarly, the target labels are also shuffled according to the area covered by the region (Equation (10)):

Here, λ∈[0, 1] denotes the area ratio of the pasted region in the image and is usually sampled by the Beta distribution (e.g., Beta(α,α). In this study, α = 1.0 is chosen. Thanks to the CutMix application, the model learns not only from complementary examples for each class but also from mixed examples, making the decision boundaries smoother and more generalizable. This increases the learning capacity of the model, especially in classes with low sample numbers.

2.8. Training Strategy and Cross Validation

In order to evaluate the reliability of the model and to use the limited size of the dataset more efficiently,

the Stratified k-Fold Cross Validation method was applied in this study. This method divides the entire dataset into kkk subsets. One subset is used as validation data for each fold, and the remaining k − 1 subsets are used as training data. In addition, thanks to the

stratified approach preserves the distribution of classes within each fold according to the ratios in the original dataset. The parameters used in the training process are shown in

Table 6.

2.9. Ensemble Modeling Approach

In order to increase the classification performance of deep learning-based models and to strengthen model generalization, the

ensemble modeling approach is adopted in this study. Ensemble learning combines the predictions of multiple models to form a single decision mechanism, thus reducing the impact of individual errors while benefiting from the strengths of each model. In this study,

the average ensemble is created by combining the outputs of the three different

vision transformer (ViT)-based models listed below (vit_base_patch16_224, vit_small_patch16_224, deit_base_distilled_patch16_224). Each model was trained on the same training data and the prediction probabilities (softmax scores) for the input image were obtained during validation. Then, the outputs of these models are specified in Equation (11).

Here represents the prediction probability vector of the i-th model, N represents the total number of models (3 in this study), represents the final prediction. The average prediction vector obtained is then assigned to the class with the highest probability using the argmax operation, and the final decision is made. Thanks to this method, the learning differences of each model are balanced, the over-learning effect caused by random variations is reduced, and the discrimination performance between classes is improved. The application of the ensemble strategy results in higher accuracy and F1-score than single models. These results demonstrate the effectiveness of ensemble modeling in medical image classification applications.

2.10. Performance Evaluation Metrics

In addition, to combat the class imbalance problem, a weighted random sampler was used during training, and samples belonging to minority classes were presented to the model more frequently during training. The sampling weights of each sample were inversely proportional to the class frequencies. The predictions and accuracy values obtained at the end of each fold were stored, and the average of the overall performance was calculated; thus, the generalizability of the model was evaluated in a statistically reliable manner.

3. Results

In this study, a comprehensive set of augmentation techniques was applied during training to improve the model’s generalization ability and to reduce the risk of overfitting. Using the Albumentations library, we implemented horizontal and vertical flipping, brightness and contrast adjustment, shift-scale-rotation, elastic transformation, Gaussian blur, and coarse dropout, each with carefully constrained parameter ranges to prevent unrealistic distortions while still introducing clinically meaningful variability. In addition to these transformations, CutMix was employed to generate mixed samples that smooth decision boundaries and improve minority-class learning, while test-time augmentation (TTA) was used to reduce prediction variance during inference. To quantify the effect of these augmentations, we conducted a comparative experiment against a baseline model trained on the original dataset without any augmentation. The results, summarized in

Table 7, clearly demonstrate the effectiveness of the augmentation strategy: accuracy increased from 87.92% to 94.03%, macro-averaged F1-score improved from 0.8614 to 0.9300, and recall values also showed substantial gains across classes. These findings highlight that augmentation techniques such as CutMix, elastic transformation, and brightness–rotation not only act as a safeguard against overfitting but also significantly enhance the robustness and generalization capability of the proposed model in the challenging three-class classification of breast ultrasound images.

Detailed evaluation results of the proposed ViT + MLP + Ensemble-based classification model on the BUSI dataset for three classes (benign, malignant, normal) are presented in

Table 8. The accuracy, precision, recall, specificity, and F1-score metrics calculated for each class comprehensively reveal the success of the model on a class basis. Additionally, training time and parameter size are reported, indicating that the model converges efficiently (~810 s) despite its relatively high capacity (~86 million parameters).

The results obtained for the

benign class show that the model recognizes images belonging to this class with high accuracy. The high

precision value of 0.9620 shows that nearly all the samples predicted as benign actually belong to the correct class. On the other hand, the

recall value of 0.8636 shows that the model may miss some benign samples (false negatives). Nevertheless, the

F1-score of 0.9102 indicates a balanced overall performance.

The malignant class is of particular importance as it is the most critical class from a clinical perspective.

The recall value obtained for this class is quite high at 0.9286, indicating that the model has a high rate of correctly recognizing malignant cases. However,

the precision value is relatively low at

0.7647; in other words, the model may occasionally incorrectly predict benign samples as malignant (false positive). This situation is also reflected in the relatively

low specificity value of 0.8947. However, the

F1-score of 0.8387 indicates a successful overall recall performance. The performance of the model for

the normal class is close to perfect. All metrics were calculated as

1.0000, indicating that the model correctly recognized all samples in the normal class and did not cause any confusion. This shows that the proposed method can reliably distinguish healthy tissues from other tissues. In general, the proposed method produced extremely successful results in the benign and normal classes and was successful in capturing clinically critical samples by maintaining high sensitivity in the malignant class. These results strongly support the applicability of the proposed method to medical decision support systems.

Figure 6 (loss curve and accuracy curve) visualizes how

the loss and accuracy values of the proposed model in the training process change with the number of epochs. When

the loss curve is examined, both training and validation losses generally show a decreasing trend, and the model makes fewer errors over time. In particular, the sudden jumps observed in validation loss are quite natural in cases such as medical imaging, where the data distribution is complex and the number of classes is unbalanced.

Despite these fluctuations, the low level of general loss shows that the model performs a balanced learning process away from the

overfitting tendency. The Validation Accuracy Curve shows that the model quickly reaches high accuracy levels after the first few epochs and largely maintains this level during the training period. The fact that the validation accuracy is fixed at 90% levels in the training conducted for 500 epochs shows that the model has learned

a stable and generalizable structure. Accuracy fluctuations observed in early epochs indicate local errors that may arise from imbalance, especially in minority classes. These curves show that the model

has high success on both training and validation data, avoids overfitting, and develops a robust generalization capacity.

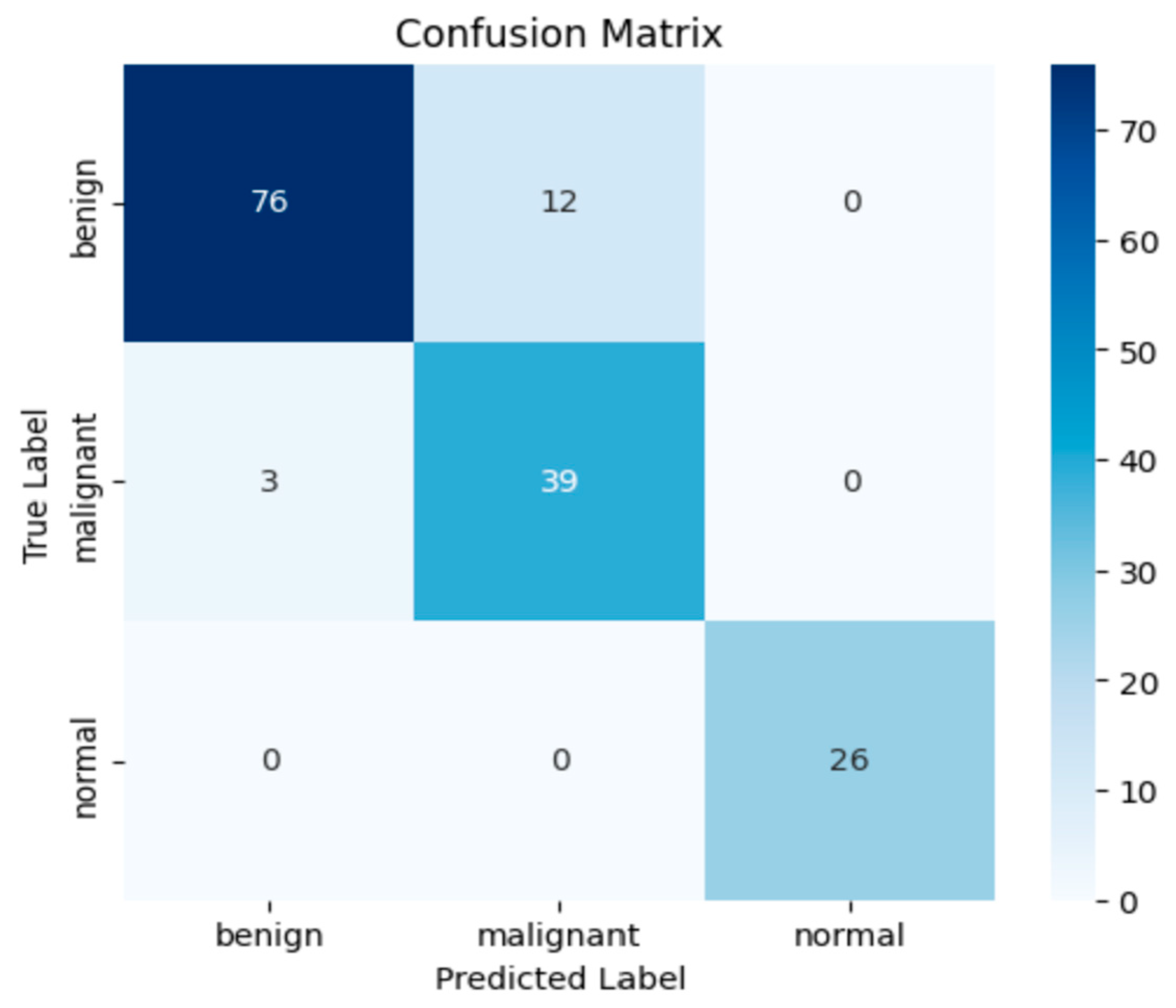

Figure 7 presents the confusion matrix results.

The confusion matrix presented in

Figure 6 provides a detailed insight into the classification performance of the proposed model. Out of 88 benign samples, 76 were correctly classified (accuracy: 86.4%), while 12 were misclassified as malignant. For the malignant class, the model achieved a correct classification rate of 92.9%, with 39 out of 42 samples accurately predicted and only 3 misclassified as benign. Most notably, the normal class exhibited perfect classification performance, with all 26 samples correctly identified, yielding a class accuracy of 100%. These results underline the model’s strong sensitivity and precision, particularly in detecting malignant lesions, which are clinically critical. The overall confusion matrix supports the model’s high discriminative power and robust generalization across all classes.

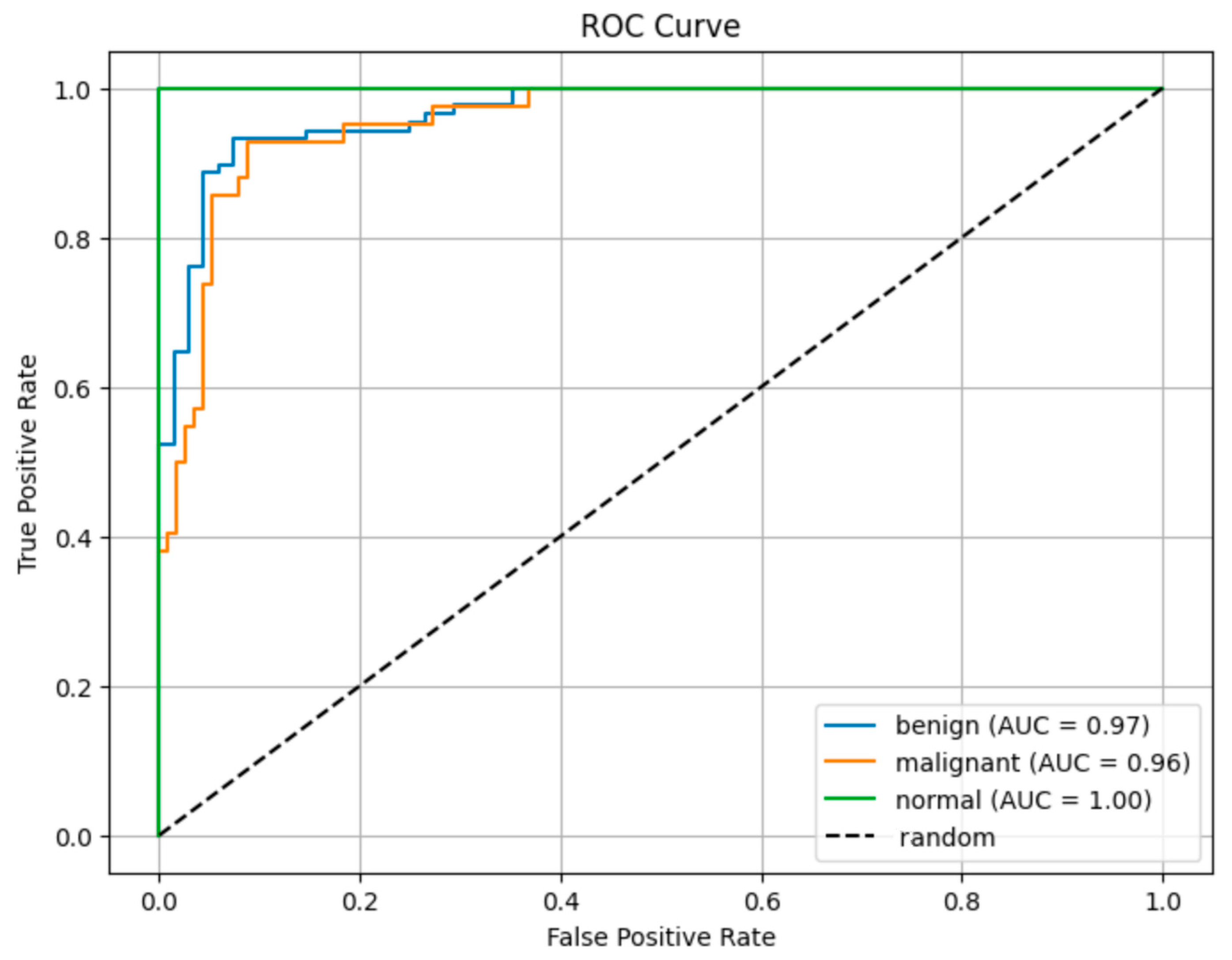

Figure 8 shows the ROC curve results.

The ROC curves in

Figure 7 show the class discrimination performance of the proposed model. A comparison of AUC values based on ROC curves reveals that the proposed model has high discrimination power among all classes.

The AUC value of 0.96 obtained in the malignant class shows that the model can successfully recognize this clinically critical class with high success.

The AUC value of 1.00 for the normal class proves that the model distinguishes the samples in this class without error. The AUC of 0.97 obtained in the benign class shows that the model can successfully distinguish benign cases from malignant ones. The fact that all AUC values are above 0.95 reveals that the model offers

a strong general discrimination capacity despite the unbalanced data distribution. 4. Discussion

In this study, the proposed model approach based on ViT, which was developed to classify benign, malignant, and normal tissues from breast ultrasound images, is supported by mask-based ROI segmentation, advanced data augmentation, focal loss, CutMix, and ensemble strategies.

To evaluate the contribution of the ensemble strategy, each individual ViT-based model was also trained and tested independently using the same experimental setup.

Table 9 compares the performance metrics of ViT-Base, DeiT-Base, and ViT-Small with the proposed ensemble model. While all standalone ViT architectures achieved relatively high classification accuracy (above 90%), the ensemble approach outperformed all individual models, achieving 94.03% accuracy, 0.94 precision, 0.93 recall, and an F1-score of 0.935. These results validate that combining complementary transformer architectures through an ensemble strategy significantly enhances model robustness and generalizability.

To comprehensively evaluate the performance of the proposed ViT + MLP ensemble architecture, we compared it with several widely adopted deep learning models in medical image classification, including ResNet50, DenseNet121, VGG19, and Xception (

Table 10). The results showed that our method achieved the highest overall accuracy (94.03%) and the highest macro-averaged F1-score (0.935) among all models evaluated. While DenseNet121 and Xception performed competitively in terms of F1-score and parameter efficiency, the ViT-based ensemble model demonstrated clear superiority in both global feature extraction and multi-class discrimination, confirming the advantage of incorporating Transformer-based architectures. In addition to outperforming CNN-based models, our ensemble design, despite its relatively higher parameter size (~258 million), provides a favorable trade-off between accuracy and computational complexity, as it significantly improves robustness and generalization across the three-class setup. Class-wise evaluations further highlight the strengths and limitations of the model: for the malignant class, recall was high at 0.9286, ensuring that clinically critical malignant lesions were rarely missed, but precision was relatively lower at 0.7647, indicating an increased false positive rate where some benign lesions were misclassified as malignant. In contrast, the normal class achieved perfect scores across all metrics (accuracy, precision, recall, F1 = 1.0000), and ROC/AUC values further validated the discriminative power of the model with 0.97 (benign), 0.96 (malignant), and 1.00 (normal). These strong results are attributed to the synergy of ROI cropping, which minimizes background noise; transformer-based attention, which captures long-range contextual dependencies; and focal loss with weighted sampling, which addresses the imbalance between benign, malignant, and normal cases. Nevertheless, several shortcomings remain: (i) the recall–precision trade-off in the malignant class, (ii) dependence on expert-provided ROI masks that may limit deployment in mask-free clinical workflows, (iii) the relatively large parameter size of the ensemble model, (iv) reliance on a single-center dataset with inherent class imbalance, and (v) the absence of patient-level aggregation strategies that could further reduce false positives. Future work will focus on addressing these limitations through multi-center validations, weakly supervised methods that remove ROI dependency, lightweight transformer architectures for clinical feasibility, and improved calibration and patient-level decision strategies.

The experimental results show that the proposed model exhibits high accuracy and discriminative performance in all three classes. The high sensitivity (recall: 0.9286) obtained in the malignant class shows that the model can successfully detect clinically critical malignant lesions to a large extent. However, the lower precision value (0.7647) indicates that some benign samples were incorrectly classified as malignant. Although this situation potentially increases the false positive rate of the model, it offers a significant advantage in terms of not missing malignant lesions. The focal loss function and class-weighted sampling play an important role in this result. Obtaining 100% accuracy and AUC (1.00) in the normal class shows that the model is extremely successful in distinguishing healthy tissues from lesions. This is quite valuable in terms of both the low error rate and reliability of decision support systems. The success of the model can be associated with the Vision Transformer architecture’s ability to learn global context relationships in medical images more effectively than CNN-based approaches. In addition, combining ViT models with the ensemble (multiplexing) method increased the generalization capacity of the model and provided consistent performance on validation sets. When the curve analyses (loss, accuracy) were examined, it was determined that the model performed stable learning during the training process and that there was no over-learning tendency. However, this study has some limitations. First, the fact that the dataset was single-center and had a limited number of samples may not fully reflect the performance of the developed model on real-world data. In addition, the imbalance in the class distribution (benign > malignant > normal) made learning minority classes difficult for the model. Future studies with multi-center, larger, and balanced data sets will allow a more reliable test of the external validity of the model. This study has shown that ViT-based approaches provide promising results in medical image classification. The developed model is a strong candidate for clinical decision support systems owing to its high accuracy and reliable classification performance. The comparison of the performance of the proposed method with studies in the literature is listed in

Table 11.

Recently, various deep learning-based approaches have been used in the classification of breast ultrasound images.

Table 11 shows that the success rates of the methods in the literature vary depending on the model architecture used, data augmentation strategies, and the addition of innovative components. In the studies conducted by Walid Al-Dhabyani et al. [

7] on the BUSI dataset, the classical CNN architecture AlexNet was used and the effect of data augmentation methods was investigated. The highest accuracy reached 94% with the integration of DAGAN augmentation and transfer learning were integrated. However, the model structure remained at the basic CNN level, and more modern Transformer-based architectures were not evaluated. Jabeen et al. [

1] tested different deep learning architectures together with traditional augmentation methods and reported the highest success rate in the literature as 99.1% with the combination of DarkNet-53 + RDE + RGWt + Fusion. However, the model complexity in this study was quite high, and region-based analysis or a transformer architecture was not used. A comprehensive study conducted by Pa [

6] compared both CNN-based (AlexNet, VGG16, ResNet series, EfficientNet) and transformer-based (Swin transformer, vision transformer) models. The use of the vision transformer achieved 88.6% accuracy. Techniques, such as ensemble learning and focal loss, that reduce class imbalance were also not used. Gheflati et al. [

2] calculated success rates of 82% and 83% by comparing the ViT-B/32 and ResNet models. Although this result shows the advantages of the ViT architecture, we did not try smaller ViT variants (e.g., ViT-Small), or strong optimization techniques were not tried. Liu et al. [

10] achieved 97.18% accuracy with a deep neural network (DNN) based structure, but they did not include transformer architectures and regional images. Ali et al. [

11] reported 90% accuracy with the ResNet50, DenseNet121, and ensemble meta-model approaches. However, the potential of the transformer structure was not evaluated in this study. Xiong et al. [

13] achieved 88.6% accuracy using UNet + ResNet combinations and SIFT-based methods. Although image segmentation integration is provided, transformer models are excluded.

Newer studies also demonstrate strong performance on BUSInusing diverse architectures and strategies. CNN-based methods with VGG16 backbones [

15], customized AlexNet variants [

16], and EfficientNet-B7 with explainable AI [

20] have reported accuracies exceeding 96%, with some reaching over 99%. Hybrid CNN–transformer approaches [

17] and advanced feature fusion techniques [

14] have also demonstrated strong performance, while other works explored alternative paradigms such as graph convolutional networks [

18] and meta-learning frameworks [

19].

In comparison, our proposed method (ROI extraction + ViT + MLP + Ensemble + focal loss) achieves 94.03% accuracy while addressing key limitations observed in many high-performing CNN-only models. By integrating transformer-based feature extraction, the model captures long-range dependencies and richer contextual information from lesion-focused regions, improving generalization across varied cases. The ensemble structure enhances robustness by combining complementary decision boundaries, while focal loss mitigates class imbalance—critical given the uneven class distribution in BUSI. Moreover, the ROI-based preprocessing ensures that the network focuses on diagnostically relevant regions, reducing background noise and improving interpretability. This balanced combination of accuracy, robustness, and clinical relevance positions our approach as a strong candidate for real-world deployment, with the added flexibility to incorporate explainable AI frameworks and multi-modal data in future work.

6. Conclusions

In this study, a ViT-based classification model was proposed, extensively tested, and evaluated on the BUSI breast ultrasound image dataset for three classes (benign, malignant, normal). Mask-based region of interest (ROI) cropping, data augmentation, CutMix, focal loss, and ensemble strategies were used to support the model. Performance evaluations were performed with 10-fold cross-validation (stratified 10-fold CV) and measured using metrics such as accuracy, precision, sensitivity, specificity, F1-score, and AUC. In the benign class, the model exhibited high reliability performance with 96.2% precision and 86.3% recall. In the malignant class, a 92.9% recall was obtained, demonstrating that the model can detect malignant lesions with high sensitivity. In the normal class, 100% success was achieved in all metrics, and the model could clearly distinguish healthy tissues from others. According to the confusion matrix analysis, the model occasionally confused benign samples with malignant samples, but it successfully maintained its discrimination power between classes. ROC curves also supported this success, with AUC = 0.97 and AUC = 0.96 for benign and malignant classes, respectively, and AUC = 1.00 for the normal class. Additionally, the validation accuracy curve observed throughout the training process shows that the model provides stable learning, whereas the validation loss curve shows that overlearning is not encountered. With the contribution of the ensemble structure, the generalization power of the model against different variations has increased, and stable success has been achieved, especially in minority classes.