SHAP-Based Identification of Potential Acoustic Biomarkers in Patients with Post-Thyroidectomy Voice Disorder

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design and Ethical Approval

Sample Size and Power Analysis

2.2. Timing of Voice Recordings

2.3. Voice Handicap Index (VHI-10) Assessment

2.4. Videolaryngostroboscopic Evaluation

2.5. Voice Data Acquisition and Preprocessing

2.6. Acoustic Feature Extraction

2.6.1. Noise Filtering

2.6.2. Frequency Band-Based Acoustic Feature Extraction

2.6.3. Power Spectral Density (PSD) and the Burg Algorithm

2.6.4. Comprehensive Extraction of Acoustic, Spectral, and Cepstral Features

2.7. Classification Using Machine Learning

2.7.1. Data Partitioning and 3-Fold Cross-Validation

2.7.2. Feature Selection and Normalization

2.7.3. Definition and Theoretical Foundations of the Models Used

2.7.4. Model Hyperparameters

2.7.5. Performance Evaluation Metrics

2.8. Model Explainability via SHAP Analysis

2.9. Evaluation of the Clinical Relevance of Stable Features Identified via SHAP Analysis

- (1) Features that demonstrated high contribution and stability in the classification model were identified via SHAP analysis;

- (2) The degree to which these features produced clinically meaningful group differences in PTVD diagnosis was assessed using Cohen’s d.
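The effect-size step in item (2) can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the authors' implementation (the study reports using MATLAB): it assumes the pooled-standard-deviation form of Cohen's d, and the function names and the 1.2 threshold for "very large" are hypothetical choices made so that the labels agree with those reported in the results table.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d using the pooled sample standard deviation (assumed form)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


def effect_size_label(d):
    """Map |d| to a verbal label.

    0.2 / 0.5 / 0.8 are Cohen's conventional thresholds; the 1.2 threshold
    for "very large" is an assumption made for this illustration.
    """
    magnitude = abs(d)
    if magnitude >= 1.2:
        return "very large"
    if magnitude >= 0.8:
        return "large"
    if magnitude >= 0.5:
        return "medium"
    if magnitude >= 0.2:
        return "small"
    return "negligible"
```

The sign of d depends only on which group is passed first, so the labelling works on the absolute value.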

2.10. Determining Clinical Discriminative Power via ROC Analysis

2.11. TRIPOD-AI Compliance and Reporting

2.11.1. Stability and Confidence Interval Analysis

2.11.2. Statistical Analysis

2.12. Computational Environment

2.13. Model Deployment Considerations

- Quad-core CPU;

- 8 GB RAM;

- 256 GB SSD storage;

- 64-bit Windows 10 or 11 operating system.

2.14. Data and Code Availability

2.15. Use of AI Tools

3. Results

3.1. Patient Characteristics and PTVD Group Definition

3.2. Outlier Analysis

3.3. Machine Learning Findings

3.3.1. ROC-Based Model Comparisons

3.3.2. Accuracy-Based Model Comparisons

3.3.3. F1-Score-Based Model Comparisons

3.3.4. Precision-Based Model Comparisons

3.3.5. Recall-Based Model Comparisons

3.3.6. Specificity-Based Model Comparisons

3.3.7. Confusion Matrix-Based Model Comparisons

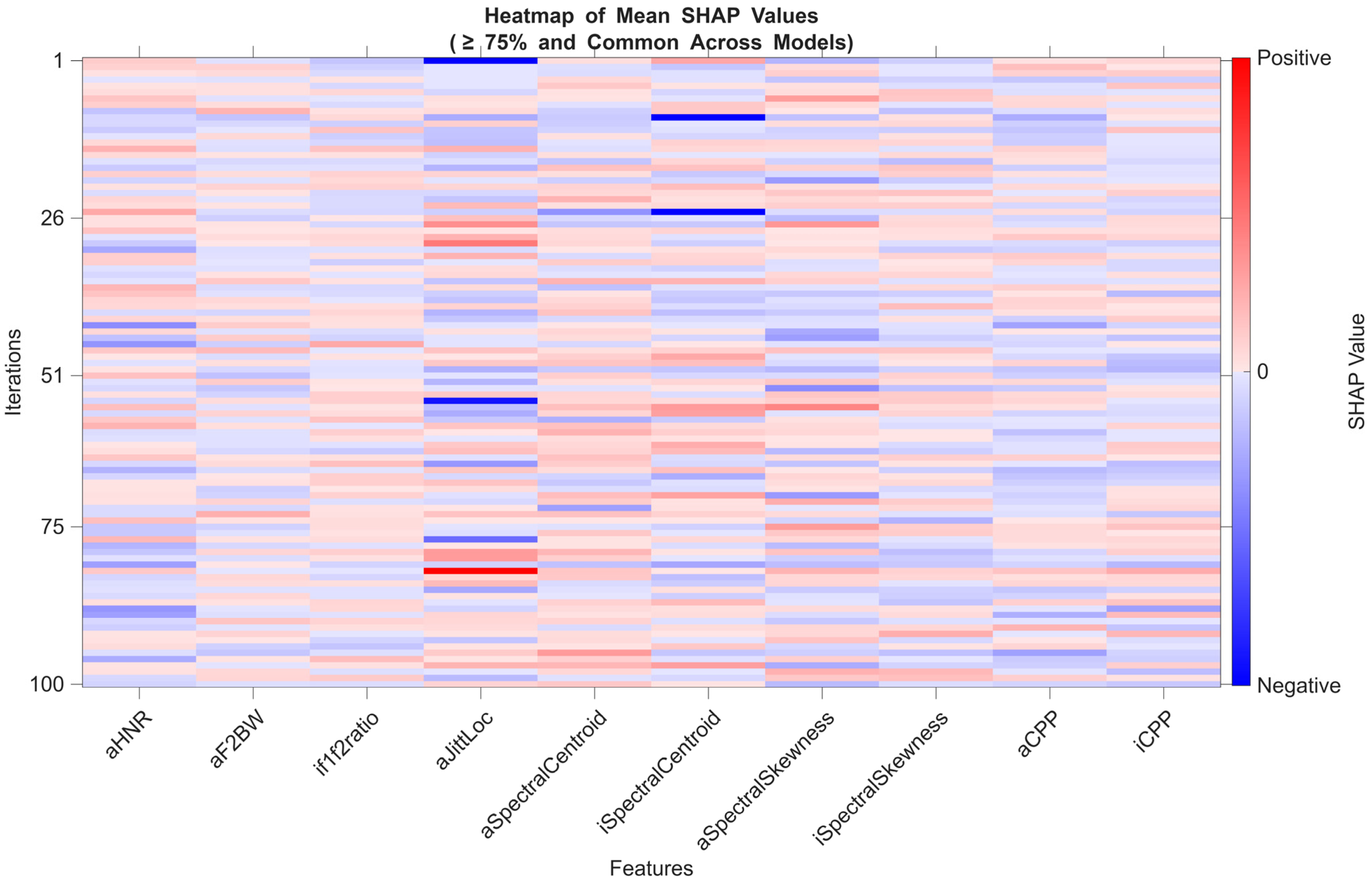

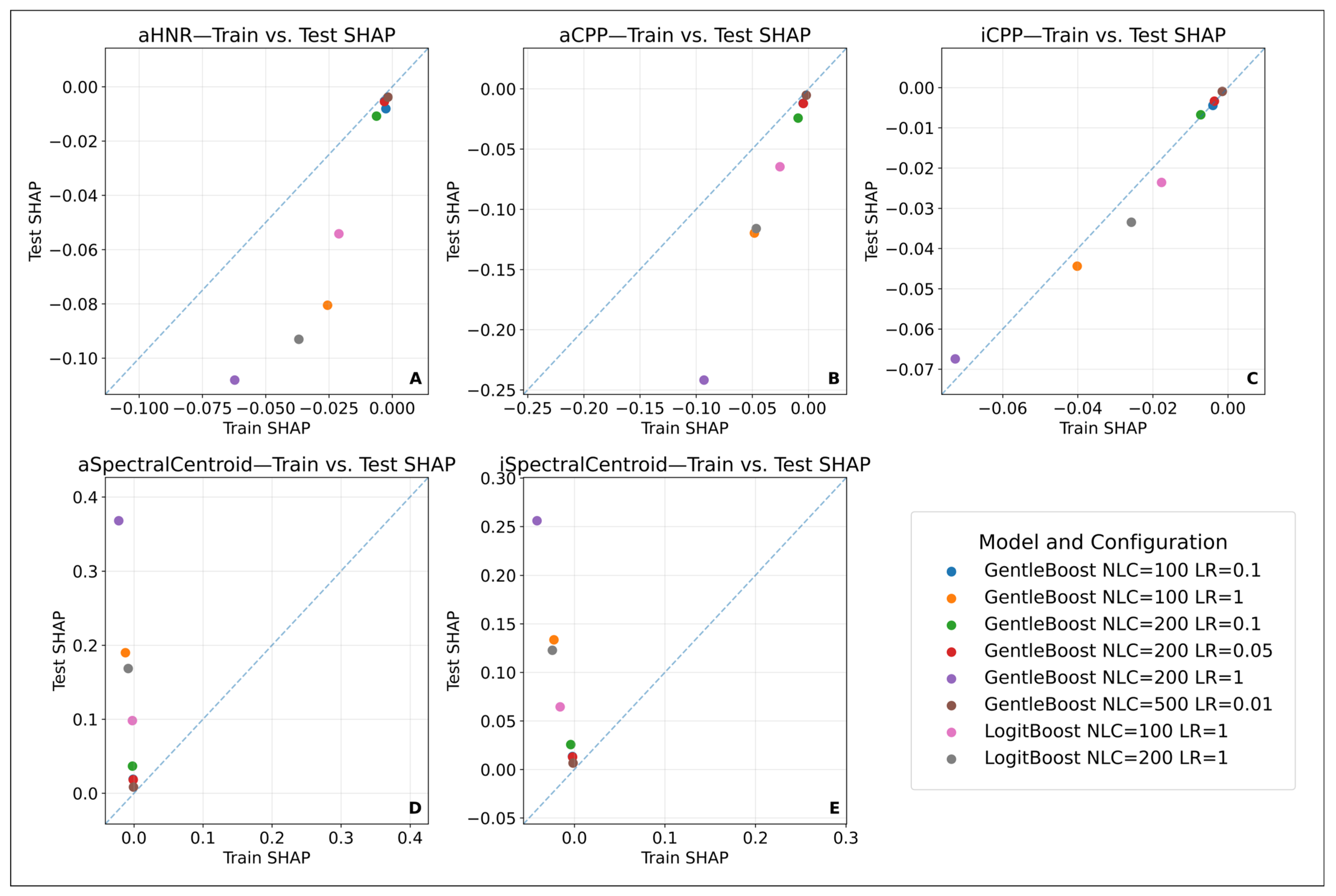

3.3.8. Model Explainability: SHAP-Based Feature Evaluation

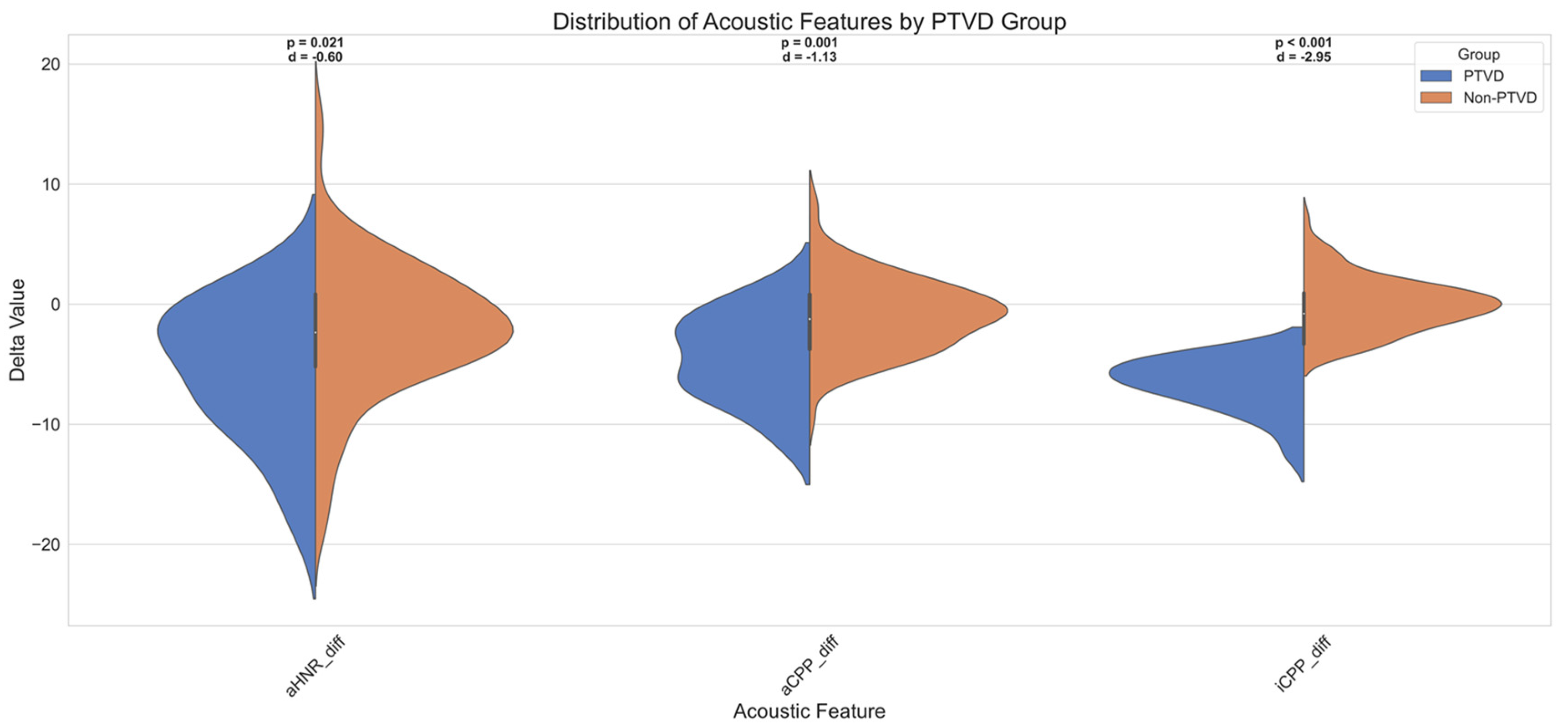

3.3.9. Diagnostic Utility of SHAP-Based Features in PTVD Identification

4. Discussion

4.1. Diagnostic Challenges of PTVD and Acoustic–Machine Learning Approaches

4.2. Classification Performance of Machine Learning Models

4.2.1. The Effect of Small Sample Size and Modelling Strategies

4.2.2. Model Configurations and the Bias–Variance Trade-Off

4.2.3. Eliminating Randomness and Ensuring Model Consistency

4.2.4. Areas of Agreement and Discrepancy Between the Findings and the Existing Literature

4.3. VHI-10 Correlation and Clinical Validation

4.4. SHAP-Based Explainability and Potential Stable Acoustic Biomarker Candidates

4.5. Clinical Implications of Stable Acoustic Biomarkers: Personalized Therapy and Early Monitoring

5. Conclusions

6. Strengths, Generalizability, Reproducibility, and Limitations

6.1. Strengths

- (1) After assessing normality, Cohen’s d was calculated to evaluate the effect size of the features;

- (2) Between-group differences were analysed using the Mann–Whitney U test;

- (3) Post hoc power analysis was conducted using G*Power software to assess the power of these tests.

6.2. Generalizability

6.2.1. Factors Supporting Generalizability

6.2.2. Limitations of Generalizability

- Data were collected solely at Gülhane Training and Research Hospital, which limits direct generalization to patient groups from other geographic regions or clinical practices.

- Only the /a/ and /i/ sounds were used; spontaneous speech and sentence-based recordings were not included, which may restrict model performance in natural speech scenarios.

- The dataset, consisting of a total of 252 recordings, may have limited generalizability to voice data collected using different devices, microphones, or clinical settings.

- The participant group consisted of Turkish-speaking individuals with similar clinical profiles, which may reduce the applicability of the identified biomarkers to other linguistic or cultural populations.

- The reference standard relies on a self-report scale (the VHI-10) and on single-observer videolaryngostroboscopic evaluations, both of which are subject to differences in individual interpretation.

- Some features, such as aSpectralCentroid and iSpectralCentroid, exhibited high variance between training and test sets, which limits their consistency and generalizability.

- The models were trained on the MATLAB platform, and their integration with Electronic Health Record (EHR) systems has not yet been tested, so practical obstacles to clinical use may remain.

- Although the model was proposed to be compatible with clinical decision support systems, this has not yet been validated in real-world environments and remains only a theoretical suggestion.

6.3. Reproducibility

6.4. Limitations

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AAO-HNS | American Academy of Otolaryngology—Head and Neck Surgery |
| aCPP | /a/ phonation cepstral peak prominence |
| aHNR | /a/ phonation harmonic-to-noise ratio |
| APQ3 | Amplitude Perturbation Quotient (3 cycles) |
| APQ5 | Amplitude Perturbation Quotient (5 cycles) |
| ATA | American Thyroid Association |
| AUC | Area Under the Curve |
| AVQI | Acoustic Voice Quality Index |
| BTA | British Thyroid Association |
| BTT | Bilateral Total Thyroidectomy |
| CI | Confidence interval |
| CPP | Cepstral peak prominence |
| CPU | Central processing unit |
| EBSLN | External branch of the superior laryngeal nerve |
| EHR | Electronic Health Record |
| f0 | Fundamental frequency |
| F1, F2 | Formant 1, formant 2 |
| F1BW, F2BW | Bandwidth of formant 1, 2 |
| FN | False negative |
| FP | False positive |
| GPU | Graphical processing unit |
| HNR | Harmonic-to-noise ratio |
| INMSG | International Neural Monitoring Study Group |
| iCPP | /i/ phonation cepstral peak prominence |
| KVKK | Kişisel Verilerin Korunması Kanunu (Turkish Personal Data Protection Law) |
| LASSO | Least Absolute Shrinkage and Selection Operator |
| L-EMG | Laryngeal electromyography |
| ML | Machine learning |
| PPQ5 | Pitch Perturbation Quotient (5 cycles) |
| PSD | Power spectral density |
| PTVD | Post-thyroidectomy vocal dysfunction |
| RAP | Relative Average Perturbation |
| RBF | Radial Basis Function |
| RLN | Recurrent laryngeal nerve |
| ROC | Receiver operating characteristic |
| SD | Standard deviation |
| SHAP | SHapley Additive exPlanations |
| SMO | Sequential Minimal Optimization |
| SSD | Solid-State Drive |
| SVM | Support Vector Machine |
| TN | True negative |
| TP | True positive |
| TRIPOD-AI | Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis—Artificial Intelligence extension |
| UTT | Unilateral Total Thyroidectomy |
| VHI-10 | Voice Handicap Index-10 |
| VLS | Videolaryngostroboscopy |


| Model | Hyperparameter | Value |
|---|---|---|
| Cubic SVM | Kernel Function | Polynomial |
| | Polynomial Degree | 3 |
| | Box Constraint (C) | 0.1/1/0.01 |
| | Kernel Scale | Auto |
| Quadratic SVM | Kernel Function | Polynomial |
| | Polynomial Degree | 2 |
| | Box Constraint (C) | 0.1/1/0.01 |
| | Kernel Scale | Auto |
| RBF SVM | Kernel Function | Gaussian |
| | Box Constraint (C) | 0.1/1/0.01 |
| | Kernel Scale | Auto |
| GentleBoost | Number of Learning Cycles | 100/200/500 |
| | Learning Rate | 1/0.1/0.05/0.01 |
| | Weak Learner Type | Decision Tree |
| | Max Number of Splits | 10 |
| | Min Parent Size | 6 |
| | Min Leaf Size | 3 |
| | Pruning | Off |
| | Merge Leaves | Off |
| LogitBoost | Number of Learning Cycles | 100/200/500 |
| | Learning Rate | 1/0.1/0.05/0.01 |
| | Weak Learner Type | Decision Tree |
| | Max Number of Splits | 10 |
| | Min Parent Size | 6 |
| | Min Leaf Size | 3 |
| | Pruning | Off |
| | Merge Leaves | Off |
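If the slash-separated entries in the hyperparameter table are read as candidate settings, the resulting search space could be enumerated as in the sketch below. That reading is an assumption, as are the dictionary keys and the function name; the values themselves are copied from the table, and the sketch only lists configurations without training any model.

```python
from itertools import product

# Values copied from the hyperparameter table; treating them as a
# search grid is an assumption made for this illustration.
SVM_MODELS = {"Cubic SVM": 3, "Quadratic SVM": 2, "RBF SVM": None}
BOX_CONSTRAINTS = [0.1, 1, 0.01]
BOOSTING_MODELS = ["GentleBoost", "LogitBoost"]
LEARNING_CYCLES = [100, 200, 500]
LEARNING_RATES = [1, 0.1, 0.05, 0.01]
TREE_SETTINGS = {
    "weak_learner": "decision tree",
    "max_splits": 10,
    "min_parent_size": 6,
    "min_leaf_size": 3,
    "pruning": False,
    "merge_leaves": False,
}


def candidate_configs():
    """Return one dict per model/hyperparameter combination."""
    configs = []
    for (model, degree), c in product(SVM_MODELS.items(), BOX_CONSTRAINTS):
        configs.append({
            "model": model,
            "kernel": "gaussian" if degree is None else "polynomial",
            "degree": degree,
            "box_constraint": c,
            "kernel_scale": "auto",
        })
    for model, cycles, rate in product(
        BOOSTING_MODELS, LEARNING_CYCLES, LEARNING_RATES
    ):
        configs.append({
            "model": model,
            "learning_cycles": cycles,
            "learning_rate": rate,
            **TREE_SETTINGS,
        })
    return configs
```

Under this reading there are 3 × 3 SVM configurations and 2 × 3 × 4 boosting configurations to evaluate.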

| Characteristic | Female (n = 92) | Male (n = 34) | Total (n = 126) | p-Value * |
|---|---|---|---|---|
| Age (mean ± SD) | 47.85 ± 11.80 | 50.21 ± 11.10 | 48.48 ± 11.66 | 0.238 |
| Smoker (+) | 20 (21.74%) | 14 (41.18%) | 34 (26.98%) | 0.032 |
| Pathological Diagnosis | | | | |
| Malignant | 33 (35.87%) | 13 (38.24%) | 46 (36.51%) | 0.713 |
| Benign | 59 (64.13%) | 21 (61.76%) | 80 (63.49%) | |
| Thyroid Surgery Type | | | | |
| UTT | 10 (10.87%) | 4 (11.76%) | 14 (11.11%) | 0.710 |
| BTT | 82 (89.13%) | 30 (88.24%) | 112 (88.89%) | |

| Group | Preop VHI-10 (Mean ± SD) | Postop VHI-10 (Mean ± SD) | Preop VHI ≥ 11 n (%) | Postop VHI ≥ 11 n (%) | p-Value | PTVD n (%) |
|---|---|---|---|---|---|---|
| Female (n = 92) | 10.37 ± 5.10 | 13.29 ± 5.00 | 24 (26.09%) | 43 (46.74%) | 0.000 * | 19 (20.65%) |
| Male (n = 34) | 9.44 ± 4.04 | 12.68 ± 5.05 | 9 (26.47%) | 12 (35.29%) | 0.000 * | 3 (8.82%) |
| Total (n = 126) | 10.12 ± 4.87 | 13.13 ± 5.04 | 33 (26.98%) | 55 (39.68%) | 0.000 * | 22 (17.46%) |

| Feature | Cohen’s d | Effect Size | AUC | Optimal Cutoff | Sensitivity | Specificity | Youden Index |
|---|---|---|---|---|---|---|---|
| iCPP | −2.95 | Very Large | 0.66 | −10.10 | 0.39 | 0.98 | 0.37 |
| aCPP | −1.13 | Large | 0.64 | −16.45 | 0.89 | 0.34 | 0.22 |
| aHNR | −0.60 | Medium | 0.59 | −18.42 | 0.48 | 0.74 | 0.22 |
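The Youden index column follows the standard definition J = sensitivity + specificity − 1, and an optimal cutoff is commonly taken as the threshold that maximizes J. The Python sketch below illustrates both steps under stated assumptions: the function names are hypothetical, the scan treats values at or below the cutoff as positive (an assumed direction, suggested by the negative effect sizes), and the toy data in the usage note are invented for illustration only.

```python
def youden_index(sensitivity, specificity):
    """Youden's J statistic: sensitivity + specificity - 1."""
    return sensitivity + specificity - 1.0


def best_cutoff(values, is_positive):
    """Scan every observed value as a cutoff and keep the one maximizing J.

    Assumption: a value <= cutoff is classified as positive. Both classes
    must be present in `is_positive`.
    Returns (cutoff, J, sensitivity, specificity).
    """
    n_pos = sum(1 for y in is_positive if y)
    n_neg = len(is_positive) - n_pos
    best = None
    for cut in sorted(set(values)):
        tp = sum(1 for v, y in zip(values, is_positive) if y and v <= cut)
        tn = sum(1 for v, y in zip(values, is_positive) if not y and v > cut)
        sens, spec = tp / n_pos, tn / n_neg
        j = youden_index(sens, spec)
        if best is None or j > best[1]:
            best = (cut, j, sens, spec)
    return best
```

For example, `youden_index(0.39, 0.98)` reproduces the 0.37 reported for iCPP up to floating-point rounding. With the rounded aCPP values the formula gives 0.23 rather than the tabulated 0.22, a difference that would be consistent with the index having been computed from unrounded sensitivity and specificity. On perfectly separated toy data such as `best_cutoff([-20, -18, -15, -10, -8, -5], [True, True, True, False, False, False])`, the scan selects −15 with J = 1.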

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Celepli, S.; Bigat, I.; Karakas, B.; Tezcan, H.M.; Yar, M.D.; Celepli, P.; Aksahin, M.F.; Hancerliogullari, O.; Yilmaz, Y.F.; Erogul, O. SHAP-Based Identification of Potential Acoustic Biomarkers in Patients with Post-Thyroidectomy Voice Disorder. Diagnostics 2025, 15, 2065. https://doi.org/10.3390/diagnostics15162065
