Abstract

Background/Objectives: Renal cell carcinoma (RCC) is a malignant disease that requires rapid and reliable diagnosis to determine the correct treatment protocol and to manage the disease effectively. However, the textural and morphological features obtained from medical images often differ only slightly between tumor types, which poses a significant diagnostic challenge for radiologists. In addition, the subjective nature of expert visual assessment and interobserver variability can introduce uncertainty into the diagnostic process. Methods: In this study, a deep learning-based hybrid model using multiphase magnetic resonance imaging (MRI) data is proposed to classify RCC subtypes accurately and to provide a decision support mechanism for radiologists. The proposed model performs a more comprehensive analysis by combining the T2 phase, obtained before the administration of contrast material, with the arterial (A) and venous (V) phases recorded after contrast injection. Results: The model classifies RCC subtypes through a five-step process: region of interest (ROI) extraction, preprocessing, augmentation, feature extraction, and classification. A total of 1275 MRI images from different phases were classified with a support vector machine (SVM), and 90% accuracy was achieved. Conclusions: The findings indicate that integrating multiphase MRI data with deep learning-based models can significantly improve RCC subtype classification and contribute to clinical decision support.

1. Introduction

The kidney is a vital organ that performs essential functions such as removing harmful waste and regulating blood pressure. Tumors that form in the kidney disrupt these functions and pose life-threatening risks. Renal cell carcinoma (RCC) is among the ten most common types of cancer in adults and is the most common malignant tumor of the kidney. It constitutes approximately 2–3% of all malignant tumors globally [1,2]. In addition, according to GLOBOCAN 2020 data, more than 400,000 new kidney cancer cases were reported worldwide, and approximately 200,000 deaths occurred [3].

RCC is divided into three main subtypes based on histopathological features: clear cell renal cell carcinoma (ccRCC), papillary renal cell carcinoma (pRCC), and chromophobe renal cell carcinoma (chRCC). Clear cell RCC (ccRCC) is the most common type, accounting for 75% of all cases. This subtype is particularly aggressive and has a poor prognosis [4]. After clear cell carcinoma, the most common subtypes are papillary and chromophobe. Abdominal imaging is an important diagnostic tool for the detection and characterization of renal tumors. The widespread use of cross-sectional imaging techniques has led to significant progress in the detection of renal tumors. Computed tomography is the most commonly used screening method due to its short scanning time. MRI has many advantages over CT. It can be used for the initial diagnosis and staging of RCC. Radiation-free MRI provides high soft tissue contrast that visualizes the tumor’s internal structure or components. Due to soft tissue similarity, it is quite difficult to distinguish RCC subtypes with MRI alone [5]. To overcome this challenge, different MRI phases are analyzed. ccRCC lesions, due to their hyper-vascular nature, exhibit heterogeneous and intense enhancement relative to the renal parenchyma following contrast administration. They show marked enhancement in the corticomedullary phase and demonstrate washout in the nephrographic phase. In contrast, pRCC lesions display minimal, slow, and progressive enhancement, with less contrast uptake compared to renal parenchyma in the corticomedullary phase. Meanwhile, chRCC lesions, being hypo-vascular, show lower enhancement than ccRCC but greater than pRCC after contrast administration. In this study, the corticomedullary phase was denoted as “A,” and the nephrographic phase as “V”.

Although kidney biopsy is performed to distinguish malignant from benign masses, complications such as tumor cell seeding, bleeding, fistula formation, pseudoaneurysm and infection are possible along the biopsy path. The ability to distinguish tumor cells without taking tissue samples can be very valuable in facilitating diagnosis and ensuring timely treatment and can greatly assist clinicians in treatment planning [6].

1.1. Motivation

The use of deep learning-based models, especially convolutional neural networks (CNNs), in the classification of medical data has become widespread in the last decade. Various studies in this direction aim to provide decision support systems for radiologists. However, while the majority of current research focuses on distinguishing benign from malignant tumors and lesions, limited progress has been made in the classification of RCC subtypes [7,8]. In particular, textural and morphological features in MRI images often differ only slightly between tumor types, which poses a significant diagnostic challenge for radiologists. Because the treatment protocol depends on the subtype, the correct classification of RCC subtypes is of critical importance. This study aims to detect RCC subtypes using MRI data acquired in different phases from patients diagnosed with kidney cancer. Given the shortage of radiologists, an artificial intelligence-supported decision support system is increasingly necessary.

1.2. Contributions

The proposed method aims to increase diagnostic accuracy by performing feature fusion by combining multiple MRI sequences. Thus, details that cannot be detected or may be overlooked in a single image will be included in the model, providing a more reliable diagnostic process. The main contributions of this study are summarized below:

- A, T2, and V MRI phases were integrated in the evaluation process, and a comprehensive analysis was performed.

- More detailed and precise feature extraction was performed using deep convolutional neural networks (CNNs) with a dense feature set.

- Model training was performed by combining the features obtained separately from each phase, and the classification performance was evaluated in detail.

- While the literature generally focuses on Normal–Tumor distinction, this study considered Renal Cell Carcinoma as a multi-class classification and achieved high success rates.

- Studies in the literature generally use computed tomography (CT) images and a single image. In this study, a more detailed analysis was performed by evaluating MRI images acquired with different sequences. The results suggest that radiation exposure can be reduced by using MRI instead of CT.

1.3. Outline

The rest of the paper is organized as follows. Section 2 includes a summary of the studies in the literature. Section 3 presents the characteristics of the proposed dataset and the steps of the method in detail. Section 4 includes the evaluation of the results of the proposed method. Section 5 provides a brief evaluation of the paper.

2. Related Work

Advances in deep learning have significantly contributed to the increase in the number of studies on the diagnosis and classification of kidney tumors. These studies are generally divided into three main categories: classification, segmentation, and determination of the degree of the mass. The self-supervised learning method developed by Özbay et al. [7] was applied to a dataset consisting of computed tomography (CT) images. Accuracy rates of 99.82% and 95.24% were achieved in the distinction of normal and tumor tissues, respectively. Mehemedi et al. used a deep neural network (DNN) to detect kidney tumors. In the two-stage process, UNet and SegNet were used for segmentation, and MobileNetV2, VGG16, and InceptionV3 were used for classification [8]. Ghalib et al. classified normal and abnormal tissues by using patterns such as contrast, color, and volume [9]. Pande et al. used the YOLOv8 model for multi-class classification of kidney CT images and successfully distinguished cysts, stones, and tumors [10]. Zhou et al. applied the InceptionV3 model to CT images to distinguish benign from malignant kidney tumors and achieved 97% accuracy [11]. This study showed that transfer learning is effective in kidney tumor classification. In a similar study, cyst, stone, and tumor classification based on CT images was performed using low-parameter deep learning models, and high accuracy rates were achieved [12]. Abdullah et al. proposed a deep learning-based system that distinguishes tumors from stones and cysts in CT images. The proposed CNN-4 and CNN-6 models achieved 92% and 97% accuracy, respectively [13]. The most notable result across these studies is that the performance metrics for benign–malignant classification and for cyst, stone, and tumor classification are quite high.

On the other hand, a study conducted to determine tumor types focused on the distinction between clear cell renal cell carcinoma (ccRCC) and benign oncocytoma (ONC). CT images were used, and the highest accuracy rate was obtained in the EX phase, at 74% [14]. This success rate showed that distinguishing the subtypes of malignant tumors is a very difficult problem. When the current literature is examined, it is observed that classification studies are largely focused on the distinction between normal and abnormal, and that CT images are predominantly used. Studies on the distinction between different tumor types with multi-class and similar features are limited.

Sundaramoorthy et al. achieved 79% accuracy using VGG-16 and AlexNet architectures [15]. Gupta et al. used deep learning (DL) to detect renal cell carcinoma (RCC) and its subtypes (clear cell RCC (ccRCC) and non-ccRCC) from CT images. In this study, accuracy was 0.950, F1 score was 0.893, and AUC was 0.985 with CT images obtained from 196 patients [16]. Koçak et al. used artificial neural network (ANN) and SVM classifiers to classify cc-RCC, papillary cell RCC (pc-RCC), and chromophobe cell RCC (chc-RCC) kidney cancers. In their study using the TCGA dataset, a Matthews correlation coefficient (MCC) of 0.804 was obtained with SVM [17]. Uhm et al. proposed a deep learning model that can detect five major renal tumors, including both benign and malignant tumors, in CT images. The model was tested on CT images from 308 patients, and the AUC value was 0.889 [18]. Zhu et al. proposed a neural network model to distinguish clear cell RCC, papillary RCC, chromophobe RCC, renal oncocytoma, and normal kidney tissues from pathology images. The Cancer Genome Atlas (TCGA) dataset yielded an AUC of 0.95 [19]. Han et al. proposed an image-based deep learning framework to distinguish three major subtypes of renal cell carcinoma (clear cell, papillary, and chromophobe) from CT images. In this study, in which 169 cases were examined, images were acquired in three phases. The modified GoogLeNet architecture achieved 85% accuracy [6]. Pan et al. investigated the prediction of Fuhrman grade in clear cell renal cell carcinoma (ccRCC) using MRI (T1- and T2-weighted imaging) and functional MRI (fMRI) modalities, including Dixon-MRI, blood oxygen level-dependent (BOLD)-MRI, and susceptibility-weighted imaging (SWI). Their study, which analyzed histopathologically confirmed cases of 89 patients, achieved an average accuracy of 85.40% using logistic regression analysis [20].
This study highlights the potential of MRI-based radiomic features, combined with qualitative radiological assessments and machine learning (ML) models, to characterize solid renal neoplasms. Further research by Said et al. utilized random forest models to analyze MRI-based quantitative radiomic features for differentiating renal cell carcinoma (RCC) from benign lesions and subtypes of RCC, such as ccRCC and papillary RCC (pRCC). Their findings demonstrated high diagnostic performance, with areas under the curve (AUC) ranging from 0.73 to 0.77 [21]. In another study, Du et al. explored the use of multiparametric magnetic resonance imaging (mpMRI) in conjunction with convolutional neural network (CNN) fusion to predict the aggressiveness of RCC preoperatively. This non-invasive approach aimed to assess tumor behavior and provided promising insights into the potential application of deep learning for tumor characterization in RCC [22]. These studies collectively underscore the growing role of advanced MRI techniques and ML-based radiomics in the accurate diagnosis, classification, and prognostication of renal neoplasms. The integrated analysis of images obtained from different MRI phases has the potential to provide greater diagnostic and prognostic accuracy. However, the number of studies exploring this approach remains limited. This highlights a significant gap in the integration of multiphase MRI imaging and its application in clinical practice, underscoring the need for further research in this area.

Different from these studies in the literature, this article focuses on the classification of clear cell, chromophobe, and papillary renal cell carcinoma (RCC) tumors on MRI images. We propose a deep learning-based method that combines the T2 phase obtained before contrast medium administration with the arterial (A) and venous (V) phases recorded after contrast medium injection. Unlike previous studies that primarily focus on individual MRI phases or rely solely on tumor regions for feature extraction, our method employs the segmentation of the entire kidney as the region of interest (ROI). This enables the model to capture both tumor-specific and organ-level structural information, enhancing its diagnostic accuracy. Furthermore, the extracted deep features from DenseNet are subsequently classified using a support vector machine (SVM), which has demonstrated superior performance in leveraging high-dimensional features compared to conventional fully connected layers.

3. Materials and Methods

This study aims to develop a decision support system for radiologists in order to accelerate the medical diagnosis process and shorten the transition to treatment protocols. The proposed system is designed to reduce dependency on pathological evaluation and on the individual experience of radiologists when determining the type of kidney tumor. Multiple data sources are used to increase the accuracy of the system in decision-making. By combining the features obtained from different MRI phases, information that is missing or overlooked in a single image is supplemented with data from the other phases, which reduces the margin of error. The dataset and the details of the method are presented in the following sections.

3.1. Dataset

The dataset used in this article includes 1275 MRI images obtained from 62 patients (34 male, 28 female) with definite pathological diagnoses between August 2018 and March 2025 in City Hospital. The images belong to the clear cell, papillary, and chromophobe kidney cancer types, are in JPG format, and have a resolution of 512 × 512. T2-phase images were acquired without contrast material administration. The A phase was acquired 40 s after contrast material administration, and the V phase 100 s after administration. The cases consist entirely of retrospective images with confirmed histopathological diagnoses. The details of the data are given in Table 1.

Table 1.

Dataset properties.

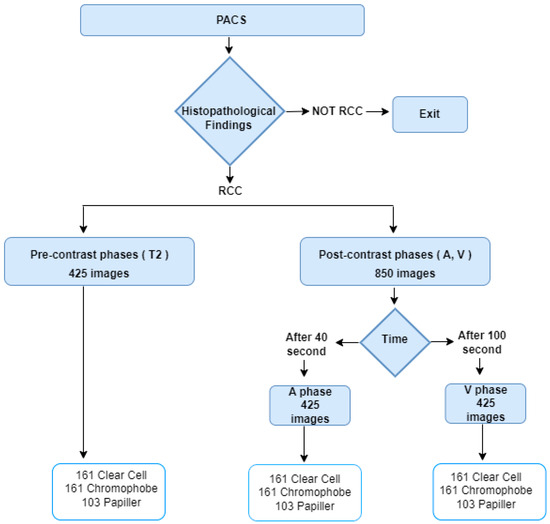

The data collection procedure is given in Figure 1. For the experiments, 80% of the data were used for training, 10% for validation, and 10% for testing. Accordingly, 42 of the 425 images in each phase were set aside as test data.
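The 80/10/10 partition described above can be illustrated with a short sketch. It is not the authors' code: the function name `split_indices`, the fixed seed, and the choice to give the rounding remainder to the validation set are assumptions made only for illustration. The source reports 42 test images per phase but does not state how the split was drawn.

```python
import random


def split_indices(n_images, train_frac=0.8, test_frac=0.1, seed=42):
    """Shuffle image indices and split them into train/validation/test lists."""
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)  # fixed seed for a reproducible split
    n_train = int(n_images * train_frac)  # 340 of 425 images
    n_test = int(n_images * test_frac)    # 42 of 425 images, as reported
    train = indices[:n_train]
    test = indices[n_train:n_train + n_test]
    val = indices[n_train + n_test:]      # assumption: remainder goes to validation
    return train, val, test


# One phase of the dataset holds 425 images (1275 images / 3 phases).
train_idx, val_idx, test_idx = split_indices(425)
print(len(train_idx), len(val_idx), len(test_idx))
```

This sketch splits by image. If images from the same patient must stay in the same subset, the same idea can be applied to patient identifiers instead of image indices.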

Figure 1.

Data Curation.

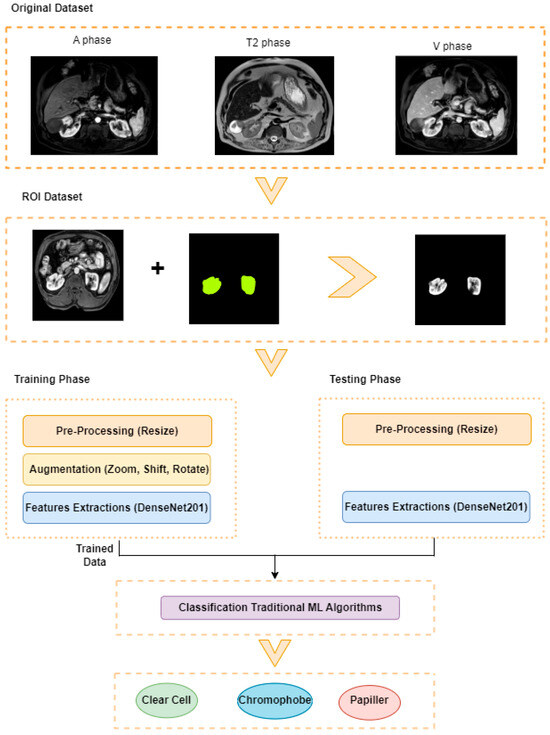

3.2. Proposed Model

This study aims to determine the subtypes of kidney tumors using data obtained from different magnetic resonance imaging (MRI) phases. The proposed approach consists of five basic steps: Region of Interest (ROI) extraction, pre-processing, data augmentation, feature extraction, and classification. The framework of the planned model is presented in Figure 2.

Figure 2.

Proposed Model.

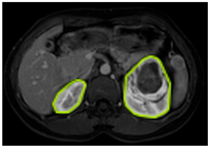

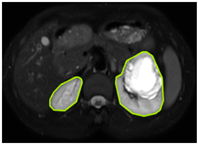

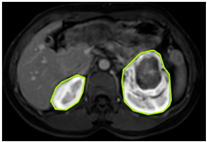

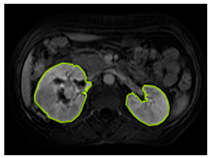

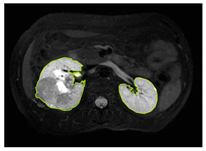

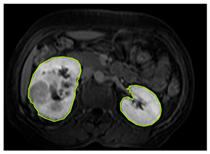

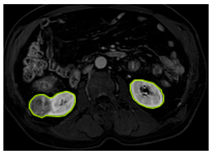

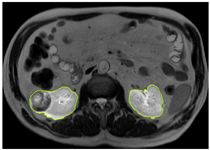

3.2.1. ROI Dataset

The images used in the study include different anatomical areas, such as the liver, spleen, and intestine. However, in order to process the data more quickly and provide more accurate results from the analysis, only the kidney areas (Regions of Interest, ROI) were extracted and evaluated. The Roboflow platform was used for spatial matching and segmentation of the kidneys. Polygonal ROI regions were defined by applying the semantic segmentation method, and then the transformations performed were verified by the radiologist, increasing the reliability of the system. In our study, MRI phase images were acquired at a resolution of 512 × 512 and the renal region of interest (ROI) was segmented and resized to 224 × 224 for input into the DenseNet architecture. In a similar study, Alhussaini et al. utilized 512 × 512 CT images and processed tumor-containing ROIs for their analysis; however, segmented region details were not provided [23]. Another study aimed to differentiate ccRCC from oncocytoma by extracting ROIs from T2-weighted images (T2-WI), pre-contrast T1-weighted images (T1-WI), and post-contrast arterial and venous phases. Tumor regions segmented at 100 × 100 mm were subsequently resized for input into the AlexNet model [24]. Unlike these studies, our approach involves segmenting the entire kidney, rather than solely the tumor region, to enable the deep learning model to leverage structural information for the organ as a whole.

Table 2 shows sample images containing ROI areas for different phases.

Table 2.

ROI areas in different phases.

In case of image and mask matching, the Region of Interest (ROI) is determined as the kidney area whereas, in case of no match, the relevant area is classified as non-kidney. In this context, the mathematical definition of ROI is given as follows:

Image = I(x,y)

Mask = C
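One plausible reading of these definitions is that a pixel I(x,y) is kept when it falls inside the mask C and is treated as non-kidney otherwise. The sketch below illustrates that reading on a toy image. Setting non-kidney pixels to zero and the helper name `apply_roi_mask` are assumptions, not details given in the source.

```python
def apply_roi_mask(image, mask):
    """Keep pixels where the mask is nonzero; set all other pixels to 0."""
    same_rows = len(image) == len(mask)
    if not same_rows or any(len(r) != len(m) for r, m in zip(image, mask)):
        raise ValueError("image and mask must have the same shape")
    return [
        [pixel if inside else 0 for pixel, inside in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


# Toy 3 x 3 grayscale image and a binary kidney mask (1 = kidney, 0 = non-kidney).
image = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
mask = [[0, 1, 1], [0, 1, 0], [0, 0, 0]]
roi = apply_roi_mask(image, mask)
print(roi)  # [[0, 20, 30], [0, 50, 0], [0, 0, 0]]
```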

3.2.2. Pre-Processing

In many studies conducted in the literature, a great deal of emphasis has been placed on preprocessing steps, and various filtering, normalization, contrast enhancement, and denoising methods have been applied in this process. In this study, the resizing process was performed only to ensure compliance with the input dimensions of the model. Thus, the images were transferred to the model in their raw form, without any filtering or artificial intervention, and the aim was to directly learn the features in the original structure of the data. This approach allows the model to learn generalizable features over real data and prevents the loss of information that may be caused by preprocessing steps.

3.2.3. Augmentation

To increase the learning capacity of the model and reduce the risk of overfitting, data augmentation techniques were applied to the region of interest data. In medical imaging, various features, particularly those linked to distinct tissue types, hold significant clinical value for diagnostic purposes. Geometric augmentation remains one of the most commonly employed techniques in this domain. In the present study, considering the diagnostic importance of texture and color, augmentation was restricted to 20% rotation, 0.2 shift, and 0.2 zoom. Geometric transformations, such as shifting images horizontally or vertically, serve as effective strategies to mitigate positional bias within the dataset [25]. The study primarily assessed the performance of dense layer architectures. In this context, the diversity of the dataset was increased by applying zoom, vertical shift, horizontal shift, and rotation operations to the images. Thanks to this method, the ability of the model to recognize different variations was improved.
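The augmentation settings can be made concrete by sampling one random transform per image. The sketch below assumes that the rotation setting corresponds to a range of ±20 degrees, that shifts are fractions of the image width and height, and that zoom is a scale factor in [0.8, 1.2]. These interpretations and the function name `sample_augmentation` are illustrative assumptions, since the source does not specify the units.

```python
import random


def sample_augmentation(rng, rotation_range=20.0, shift_range=0.2, zoom_range=0.2):
    """Draw one random geometric transform within the configured limits."""
    return {
        "rotation_deg": rng.uniform(-rotation_range, rotation_range),
        "shift_x": rng.uniform(-shift_range, shift_range),  # fraction of width
        "shift_y": rng.uniform(-shift_range, shift_range),  # fraction of height
        "zoom": rng.uniform(1.0 - zoom_range, 1.0 + zoom_range),
    }


rng = random.Random(0)  # seeded so the sampled transforms are reproducible
params = [sample_augmentation(rng) for _ in range(5)]
for p in params:
    print(p)
```

Only geometric parameters are sampled here, which matches the stated intent of leaving texture and intensity untouched.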

3.2.4. Extraction of Deep Features Using DenseNet

DenseNet is a family of pre-trained models that performs well in a variety of image classification tasks and is widely used in deep learning [26]. In this architecture, each layer is directly connected to all subsequent layers through feedforward connections [27]. This dense connectivity facilitates gradient flow and allows the model to be trained efficiently even at greater depths. It also helps the network learn fine details and perceive complex visual patterns more effectively. Concatenating the inputs in this way minimizes information loss by giving each layer direct access to the feature maps learned at previous levels. When pre-trained DenseNet models are fine-tuned, they can largely preserve the original and characteristic features of images [28]. In this study, transfer learning was applied using the DenseNet121, DenseNet169, and DenseNet201 models, initialized with weights pre-trained on the ImageNet dataset, in order to learn even the finest visual details effectively. The architecture of these models was modified by removing the final classification layers and adding GlobalAveragePooling2D and Dense layers. The pre-trained layers were frozen, so that only the newly added layers were trained. This approach was adopted to mitigate the risk of overfitting, particularly given the limited size of the dataset.

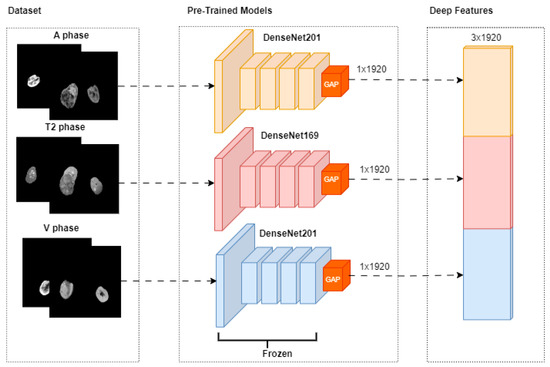

The fusion-based pipeline utilized in this study is presented in Figure 3. The pipeline incorporates three distinct magnetic resonance imaging (MRI) phases: A-phase, T2-phase, and V-phase. Each phase is processed through pre-trained models, specifically DenseNet201, DenseNet169, and DenseNet201, respectively. Feature extraction for each phase is performed using a Global Average Pooling (GAP) layer, resulting in deep feature vectors of size 1 × 1920 for each phase. These deep feature vectors are subsequently concatenated during the fusion phase to create a unified feature representation.

Figure 3.

Feature Extraction.

XA, XT2, and XV represent the A-phase, T2-phase, and V-phase imaging data, respectively. GAP represents the Global Average Pooling operation.

The fused output is a 3 × 1920 combined feature vector that brings together the deep features from the three phases.
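The two operations in this pipeline, Global Average Pooling and feature fusion, can be sketched in plain Python. This is a toy illustration, not the authors' implementation: the helper names `global_average_pool` and `fuse` are hypothetical, the feature values are placeholders, and flattening the three 1 × 1920 vectors into a single vector of 5760 values is an assumption about how the 3 × 1920 representation is passed to the classifier.

```python
def global_average_pool(feature_map):
    """Average an H x W x C feature map over all spatial positions -> C values."""
    height = len(feature_map)
    width = len(feature_map[0])
    channels = len(feature_map[0][0])
    sums = [0.0] * channels
    for row in feature_map:
        for cell in row:
            for c, value in enumerate(cell):
                sums[c] += value
    return [s / (height * width) for s in sums]


def fuse(*phase_vectors):
    """Concatenate per-phase feature vectors into one flat vector."""
    fused = []
    for vector in phase_vectors:
        fused.extend(vector)
    return fused


# Toy 2 x 2 feature map with 3 channels.
toy_map = [
    [[1.0, 2.0, 3.0], [3.0, 2.0, 1.0]],
    [[1.0, 0.0, 1.0], [3.0, 4.0, 3.0]],
]
print(global_average_pool(toy_map))  # [2.0, 2.0, 2.0]

# Placeholder 1 x 1920 vectors standing in for the A, T2, and V phase features.
feat_a, feat_t2, feat_v = [0.1] * 1920, [0.2] * 1920, [0.3] * 1920
fused = fuse(feat_a, feat_t2, feat_v)
print(len(fused))  # 5760
```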

Ablation studies were conducted with these models on the images of each MRI phase, and the best-performing model for each phase was identified. These models were then combined in a hybrid structure, and classification was performed on the features they extracted. All experiments were run on a T4 GPU in Google Colab Pro (Google LLC, Mountain View, CA, USA).

3.2.5. Classification

In the classification step, which is the last stage of the model, traditional machine learning methods were used to determine tumor subtypes. The previously extracted deep features were classified with Support Vector Machine (SVM), Random Forest (RF), K-Nearest Neighbors (KNN), XGBoost, and Logistic Regression (LR) algorithms. SVM maps the input space into a high-dimensional feature space and constructs an optimal separating hyperplane from the training examples [29]. Using the margin and the support vectors, it forms a decision boundary for both linearly and nonlinearly separable data [30]. Random Forest builds a large number of decision trees (DTs) and aggregates their predictions for classification [31]. KNN, another widely used classification algorithm, assigns a label based on the nearest data points, or neighbors, in the training dataset [32]. XGBoost is a gradient boosting framework that aims to improve generalization by adding new decision trees that learn from the errors of the previous ones and reduce the error rate. Using different classifiers allowed a comparative analysis of the methods and made it possible to identify the algorithm with the best performance.
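As a small illustration of the nearest-neighbor idea described above, the following sketch classifies a query vector by majority vote among its k closest training vectors. The two-dimensional toy features, the value k = 3, and the function name `knn_predict` are assumptions chosen for readability; the study itself works with deep feature vectors.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Return the majority label among the k training vectors closest to query."""
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Toy two-dimensional features forming two well-separated groups.
train_features = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
train_labels = ["clear_cell"] * 3 + ["chromophobe"] * 3

print(knn_predict(train_features, train_labels, [0.5, 0.5]))  # clear_cell
print(knn_predict(train_features, train_labels, [5.5, 5.5]))  # chromophobe
```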

4. Experimental Results and Discussion

To demonstrate the effectiveness of the hybrid approach and the use of multiple data, ablation experiments were first performed. In this direction, the proposed method was compared with scenarios where only single models and single images were used. All experimental studies were conducted with fixed hyperparameter settings, and each model was trained for 50 epochs. The Adam algorithm was selected as the optimization algorithm with a mini-batch size of 16 and a learning rate of 0.001. The results of the performance parameters obtained from the confusion matrix and specified in Equations (1)–(6) for each phase are given in Table 3, Table 4 and Table 5.

Table 3.

Performance of V-phase data.

Table 4.

Performance of T2-phase data.

Table 5.

Performance of A-phase data.

To extract detailed features from MRI phase V images, deep learning-based dense layered architectures were used. In this context, transfer learning-based DenseNet121, DenseNet169, and DenseNet201 architectures were compared, and their classification performances were evaluated. As a result of the experimental study, the highest overall accuracy rate was obtained with the DenseNet201 model. This result shows that the depth and densely connected structure of the model are effective in extracting more detailed and distinctive features from phase V images. Although low success was generally observed in the classification of papillary tumors, the DenseNet169 architecture exhibited the highest performance in distinguishing this tumor type.

In the classification experiments performed on MRI T2 phase images, similar difficulties were encountered in distinguishing the Papillary tumor class, as in phase V. However, in the classification analyses performed with T2 phase data, the highest overall accuracy rate was obtained with the DenseNet169 architecture.

According to the classification results based on MRI A phase data, DenseNet201 stands out as the most successful model when the overall accuracy and class-based F1 scores are taken into account. However, the Papillary tumor class was again the most difficult to distinguish. These findings emphasize the decisive role of the interaction between phase-based imaging and deep learning architectures on tumor classification performance. In order to demonstrate the effectiveness of the proposed model, V, T2, and A phase images were given as input to the model. Each phase image was checked by a radiologist. In the study, a total of 1275 MRI images belonging to 62 patients were labeled as clear cell, chromophobe, and papillary, three different kidney tumor subtypes, based on pathological findings. The 224 × 224 MRI phase image was given as input to the pre-trained DenseNet201 model. Deep feature vectors of fixed length 1 × 1920 were obtained for each image via the Global Average Pooling (GAP) layer at the end of each DenseNet201 model. The deep features obtained for each of the three phase images were combined and then subjected to the classification process using traditional machine learning algorithms. Performance Metrics of the Paired MRI Phase Groups are given in Table 6.

Table 6.

Performance Metrics of Paired MRI Phase Groups with SVM.

When MRI phases are evaluated in pairs, the results are better than those of single-phase evaluations. The combination of arterial and venous phases was effective across all classes. Notably, the T2-V group exhibited the most balanced performance, with consistently high F1-scores across all subtypes. These findings indicate that combining features derived from different MRI phases leads to more robust classification outcomes.

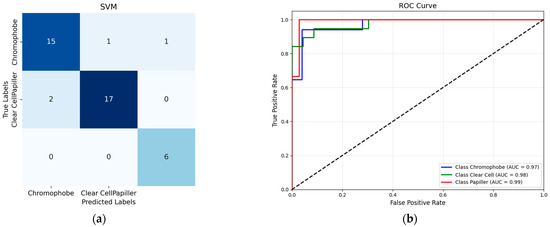

When the classification results obtained with the Support Vector Machine (SVM) are examined (Table 7), the model performs consistently well across all classes, with slight room for improvement in precision for the Papillary class. The high recall for the Papillary class (100%) and the balanced performance across the other classes indicate robustness, particularly in detecting all true cases. Overall metrics such as accuracy, MCC, and Kappa are above 0.84, suggesting a high-quality model with reliable predictions.

Table 7.

Hybrid CNN + SVM performance metrics.

According to the confusion matrix for the SVM classifier presented in Figure 4, 17 out of 19 Clear Cell tumor samples were accurately classified, while two samples were misclassified as Chromophobe tumors. Upon examining the classification performance of the Chromophobe class, it was observed that 15 out of 17 samples were correctly identified. Among the remaining two samples, one was misclassified as Clear Cell, and the other as Papillary. This misclassification shows potential visual similarities or a limited set of discriminatory features between the Chromophobe class and the other tumor classes. Notably, all samples belonging to the Papillary tumor class were correctly classified, demonstrating the robustness of the model for this class. These findings indicate that the tumor class with the highest degree of inter-class confusion under a single-method, single-dataset approach was effectively distinguished using the proposed methodology.
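The counts in this paragraph are enough to recompute the headline metrics. The sketch below does so with standard definitions of accuracy, per-class precision, recall, and F1, Cohen's kappa, and the multi-class Matthews correlation coefficient. The number of Papillary test samples is not stated in the text; it is assumed here to be 6, which is what remains of the 42 test samples after 19 Clear Cell and 17 Chromophobe samples. The function name `summarize` is also hypothetical.

```python
import math

LABELS = ["clear_cell", "chromophobe", "papillary"]
# Rows are true classes, columns are predicted classes.
CONFUSION = [
    [17, 2, 0],  # Clear Cell: 17 correct, 2 predicted as Chromophobe
    [1, 15, 1],  # Chromophobe: 15 correct, 1 Clear Cell, 1 Papillary
    [0, 0, 6],   # Papillary: all correct (assumed 6 = 42 - 19 - 17)
]


def summarize(cm):
    """Compute accuracy, per-class scores, Cohen's kappa, and multi-class MCC."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    true_counts = [sum(cm[i]) for i in range(n)]
    pred_counts = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    accuracy = correct / total

    per_class = []
    for i in range(n):
        precision = cm[i][i] / pred_counts[i] if pred_counts[i] else 0.0
        recall = cm[i][i] / true_counts[i] if true_counts[i] else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        per_class.append({"precision": precision, "recall": recall, "f1": f1})

    # Cohen's kappa: observed agreement corrected for chance agreement.
    cross = sum(t * p for t, p in zip(true_counts, pred_counts))
    expected = cross / total ** 2
    kappa = (accuracy - expected) / (1 - expected)

    # Multi-class MCC computed directly from the confusion-matrix marginals.
    mcc_denom = math.sqrt(
        (total ** 2 - sum(p * p for p in pred_counts))
        * (total ** 2 - sum(t * t for t in true_counts))
    )
    mcc = (correct * total - cross) / mcc_denom if mcc_denom else 0.0
    return accuracy, per_class, kappa, mcc


accuracy, per_class, kappa, mcc = summarize(CONFUSION)
print(f"accuracy={accuracy:.3f} kappa={kappa:.3f} mcc={mcc:.3f}")
for label, scores in zip(LABELS, per_class):
    print(label, {name: round(value, 3) for name, value in scores.items()})
```

Under the stated assumption, the accuracy is 38/42, or about 0.905, and both kappa and MCC come out slightly above 0.84, in line with the values reported for the SVM classifier.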

Figure 4.

(a) SVM confusion matrix; (b) SVM ROC Curve.

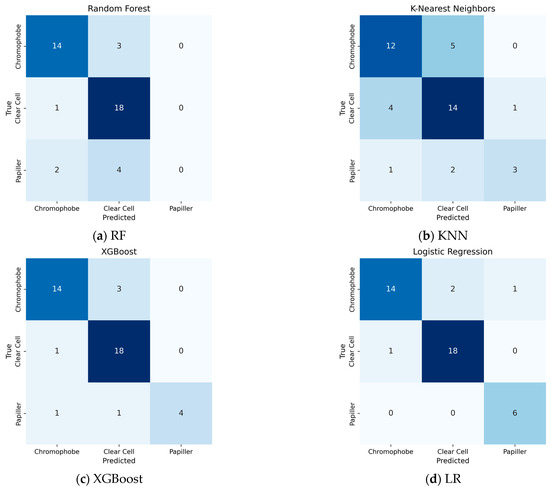

When traditional classification models other than SVM are evaluated (Figure 5), the confusion matrix obtained by the Logistic Regression algorithm is remarkable. Logistic Regression showed the most successful classification performance after the SVM model. This model managed to classify the Clear Cell tumor class with 94.7% accuracy, while this rate was 82.3% for the Chromophobe class. However, the discriminatory power of the model was significantly lower in the Papillary class, with only 66.6% accuracy. When the results of the K-Nearest Neighbor (KNN) algorithm are examined, it is observed that the model correctly classified Clear Cell tumors in 14 samples, but incorrectly classified 4 samples of Clear Cell tumors and assigned them to the Chromophobe class. This shows that Clear Cell and Chromophobe tumor classes may have structural similarities in terms of neighborhood relations that form the basis of the k-NN algorithm. On the other hand, the model was insufficient in distinguishing the Papillary class.

Figure 5.

Performance of traditional ML algorithms: (a) RF; (b) KNN; (c) XGBoost; (d) LR.

XGBoost produced a better result in the Papillary class than the previous models, especially RF. However, the confusion observed in the Chromophobe class shows that the model has difficulty distinguishing samples of this class.

RF achieved an overall accuracy of 76% in this classification. The Clear Cell subtype was detected with 81% accuracy and the Chromophobe subtype with 82% accuracy, but the model had difficulty detecting the Papillary subtype.

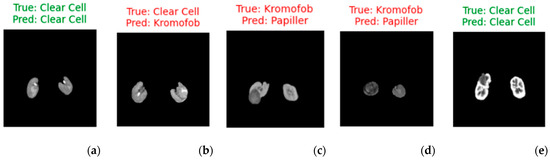

Figure 6 shows five sample images classified with the hybrid method using the SVM classifier. Clear cell RCC shows more contrast than papillary and chromophobe RCC (Figure 6a,e), but the other two types are difficult to distinguish because they have lower and similar contrast (Figure 6c,d). Clear cell RCC contains more cystic, necrotic, and hemorrhagic areas, while chromophobe RCC is more solid. In Figure 6b, the lesion contains more solid areas, and the model therefore misclassified it. Table 8 presents the statistical analyses.

Figure 6.

Visualized predictions with true labels (for five randomly selected images). Panels (a,e) show correct predictions, while panels (b–d) show incorrect predictions.

Table 8.

Statistical analysis.

In McNemar analysis, i → j denotes instances where class i is misclassified as class j, whereas j → i represents cases where class j is misclassified as class i. The McNemar statistic assesses whether the difference between these asymmetric misclassifications is statistically significant. In the case of the Clear Cell and Papillary classes, no misclassifications occurred between them (i.e., both i → j and j → i are zero). Consequently, the test could not be performed, indicating that the model made no errors between these two classes. For the other class pairs, no statistically significant differences were observed. This suggests that the model distinguishes between these classes with comparable accuracy and that the misclassification errors are relatively balanced. It is also important to acknowledge the potential impact of the small sample size, which may reduce the statistical power of the test and limit the ability to detect meaningful differences.
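The pairwise test described above depends only on the two discordant counts, i → j and j → i. The sketch below is one possible formulation, not necessarily the variant used in the study: it computes an exact two-sided binomial p-value and, for comparison, the continuity-corrected chi-square statistic. The function names and the example counts are hypothetical. When both counts are zero, as for the Clear Cell and Papillary pair, the functions return `None` because the test is undefined.

```python
import math
from typing import Optional


def mcnemar_exact(b: int, c: int) -> Optional[float]:
    """Two-sided exact McNemar p-value.

    b: number of class-i samples misclassified as class j (i -> j)
    c: number of class-j samples misclassified as class i (j -> i)
    Returns None when there are no discordant samples.
    """
    n = b + c
    if n == 0:
        return None  # the test cannot be performed
    k = min(b, c)
    # Under the null hypothesis, each discordant sample is equally
    # likely to fall in either direction (binomial with p = 0.5).
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


def mcnemar_chi2(b: int, c: int) -> Optional[float]:
    """Continuity-corrected McNemar chi-square statistic (1 degree of freedom)."""
    n = b + c
    if n == 0:
        return None
    return (abs(b - c) - 1) ** 2 / n


# Hypothetical discordant counts, for illustration only.
print(mcnemar_exact(0, 0))   # None: no misclassifications between the pair
print(mcnemar_exact(4, 3))   # 1.0: errors are balanced
print(mcnemar_exact(5, 0))   # 0.0625
print(mcnemar_chi2(5, 0))    # 3.2
```

With discordant counts this small, the exact form is usually preferred over the chi-square approximation, and even a fully one-sided pattern such as 5 versus 0 does not reach the conventional 0.05 threshold, which is consistent with the caution about statistical power.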

The comparison of our results with studies in the literature that aim to distinguish kidney cancers is presented in Table 9. The most important feature that distinguishes our study from other studies is the use of MRI images. The second is the determination of kidney cancer subtypes from different phase images. The third is the determination of cancer type by combining feature vectors obtained from three-phase images. Finally, the classification success on this multi-class problem is higher than that reported in the literature.

Table 9.

Literature comparison.

A review of the literature reveals that most existing studies use CT and histopathological images for renal tumor classification, while MRI, despite being a less harmful modality in terms of radiation exposure, has been comparatively underexplored. In the present study, we emphasize the diagnostic value of multiparametric MRI and demonstrate that integrating features from different imaging phases of the same lesion can enhance classification performance. This approach allows for a more comprehensive representation of tumor heterogeneity. Furthermore, by leveraging transfer learning techniques, the proposed method addresses the challenge of class imbalance, particularly for underrepresented subtypes such as chromophobe and papillary RCC. Reducing dependence on radiologist expertise and using features extracted across multiple MRI phases lowers the likelihood of diagnostic error. These findings suggest a meaningful advancement over previous studies that rely heavily on single-phase data or on modalities associated with higher radiation risk.

However, our study has several limitations. The first is the complexity of the decision-making process. Although the system architecture is complex, it achieved higher accuracy than the simpler architectures evaluated in the ablation tests. Other challenges the model faces in accurately classifying the rarer subtypes can be attributed to several factors. Firstly, clear cell carcinoma accounts for approximately 75% of renal cell carcinoma (RCC) cases, making the other subtypes significantly less prevalent. This inherent imbalance in the dataset leads to a scarcity of training examples for the rarer subtypes, thereby limiting the model’s ability to learn representative features effectively. Secondly, the limited dataset size necessitates techniques for effective learning from small data samples, which remains a significant challenge in deep learning. The lack of sufficient training data makes the model more susceptible to overfitting and reduces its generalizability to unseen cases. Additionally, obtaining definitive diagnoses often requires invasive, time-intensive, and costly procedures, such as biopsies or surgical interventions. These constraints not only hinder the availability of labeled data but also highlight the critical need for accurate and reliable decision-support systems.
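The fusion idea discussed in this section, combining feature vectors from the T2, arterial, and venous phases of the same lesion before classification, can be sketched as follows. This is an illustrative outline under stated assumptions rather than the study's implementation: random arrays stand in for the deep features, the feature length of 1920 corresponds to a globally pooled DenseNet201 output, and the standardization step, the RBF kernel, and all variable names are assumptions not taken from the text.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

FEATURE_DIM = 1920  # length of a globally pooled DenseNet201 feature vector


def fuse_phase_features(t2: np.ndarray, arterial: np.ndarray, venous: np.ndarray) -> np.ndarray:
    """Concatenate per-phase feature matrices of shape (n_lesions, d) along the feature axis."""
    if not (len(t2) == len(arterial) == len(venous)):
        raise ValueError("each phase must contribute one feature vector per lesion")
    return np.concatenate([t2, arterial, venous], axis=1)


rng = np.random.default_rng(0)
n_lesions = 30

# Random stand-ins for the deep features of each phase (NOT real data).
t2 = rng.normal(size=(n_lesions, FEATURE_DIM))
arterial = rng.normal(size=(n_lesions, FEATURE_DIM))
venous = rng.normal(size=(n_lesions, FEATURE_DIM))

# Hypothetical labels: 0 = clear cell, 1 = chromophobe, 2 = papillary.
labels = np.repeat([0, 1, 2], n_lesions // 3)

fused = fuse_phase_features(t2, arterial, venous)
print(fused.shape)  # (30, 5760)

# Scaling and kernel choice are assumptions made for this sketch.
classifier = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
classifier.fit(fused, labels)
predictions = classifier.predict(fused)
```

Because the three vectors are concatenated rather than averaged, information that is weak or missing in one phase can still be represented through the other two, which is the motivation given for the multiphase design. With so few samples relative to 5760 features, any real evaluation would need held-out data rather than the training-set predictions shown here.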

5. Conclusions

This study highlights the complexity and subjectivity involved in identifying kidney tumor subtypes. The application of deep learning plays a pivotal role in accurately differentiating papillary, clear cell, and chromophobe subtypes. It is difficult to distinguish these subtypes from a single MRI image due to textural similarities. To overcome this problem, this article proposes a hybrid deep learning method in which images obtained from different MRI phases are used together. Experimental findings have shown that an effective decision support system can be created by combining features obtained from different MRI phases and that clinical experts can thus be supported in the diagnosis process. When the images of each phase were analyzed with densely connected deep neural networks, the highest performance was obtained with the DenseNet201 architecture. Accordingly, the images of each MRI phase were processed separately with the DenseNet201 model, and the resulting deep features were then combined to perform the classification. This approach not only increased the representation power of the features but also reduced the error rate, because information that may be missed in one image is compensated for by the images from the other phases. The results obtained support the effectiveness of the method. In addition, using magnetic resonance imaging (MRI) data instead of the frequently preferred computed tomography (CT) images reduces the amount of ionizing radiation to which patients are exposed, which is another important contribution of the study. In future studies, we aim to integrate attention-based mechanisms so that more meaningful, weighted features are processed instead of all features. In this way, the computational load can be reduced and the model can learn the distinctive features more effectively.

Moreover, the performance of the model is influenced by both the hyperparameters and the scale of data augmentation. Identifying the most effective parameter configurations and augmentation strategies through optimization techniques and applying them to medical images constitutes a promising direction for future work.

Author Contributions

Conceptualization, G.K., D.C., T.T. and M.Y.; methodology, G.K., D.C., T.T. and M.Y.; software, D.C., T.T. and M.Y.; validation, G.K., D.C., T.T. and M.Y.; formal analysis, G.K., D.C., T.T. and M.Y.; investigation, G.K., D.C., T.T. and M.Y.; resources, G.K., D.C., T.T. and M.Y.; data curation, G.K. and D.C.; writing—original draft preparation, G.K., D.C., T.T. and M.Y.; writing—review and editing, G.K., D.C., T.T. and M.Y.; visualization, G.K. and D.C.; supervision, G.K., D.C., T.T. and M.Y.; project administration, G.K., D.C., T.T. and M.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The dataset used in this study was collected in accordance with the decision of the ethics committee of Elazığ Fethi Sekin City Hospital (dated 24 April 2025 and numbered 2025/8).

Informed Consent Statement

Not applicable.

Data Availability Statement

Data used in this research are available upon request from the corresponding author.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this article.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).