Automated Landmark Detection and Lip Thickness Classification Using a Convolutional Neural Network in Lateral Cephalometric Radiographs

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection and Preprocessing

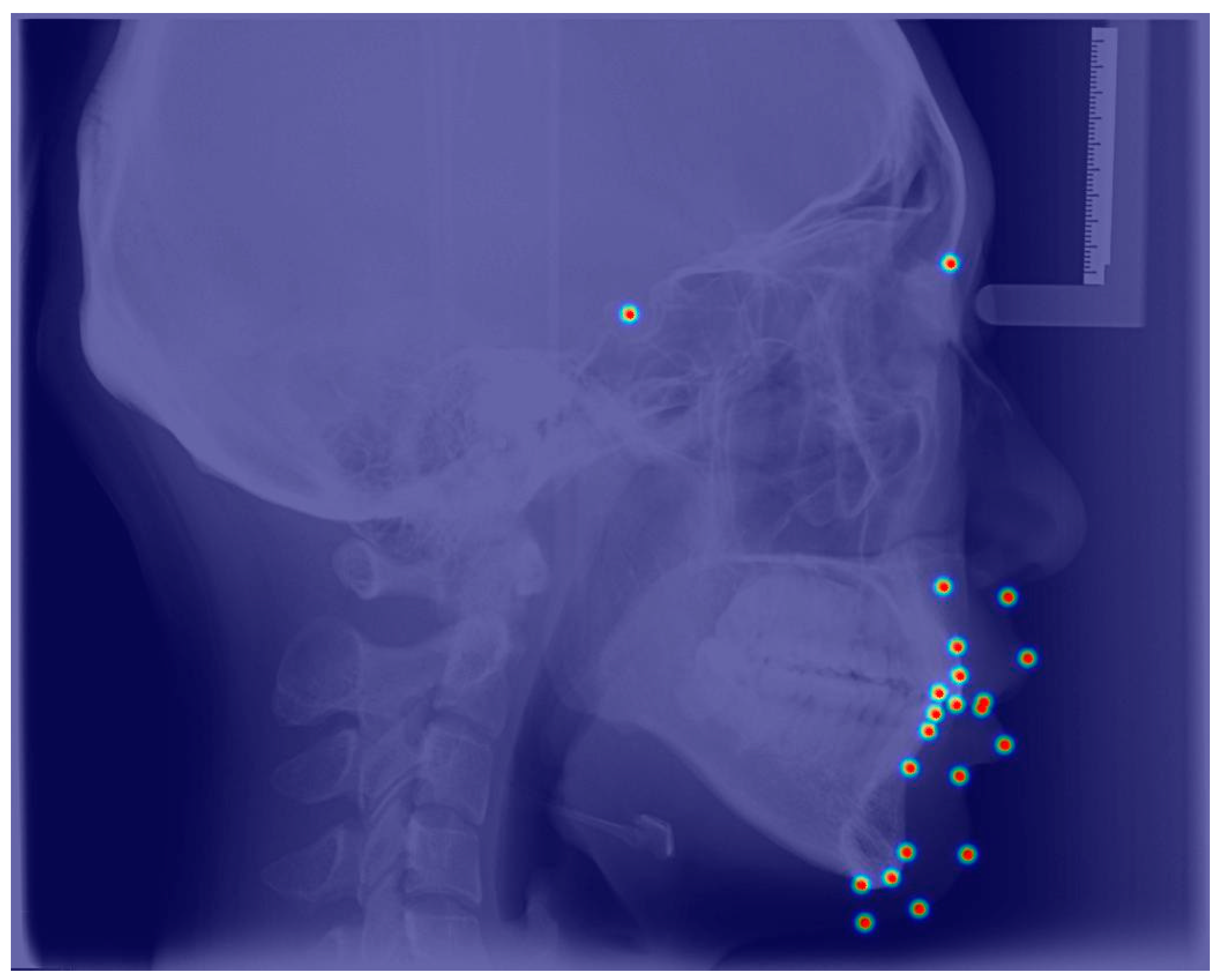

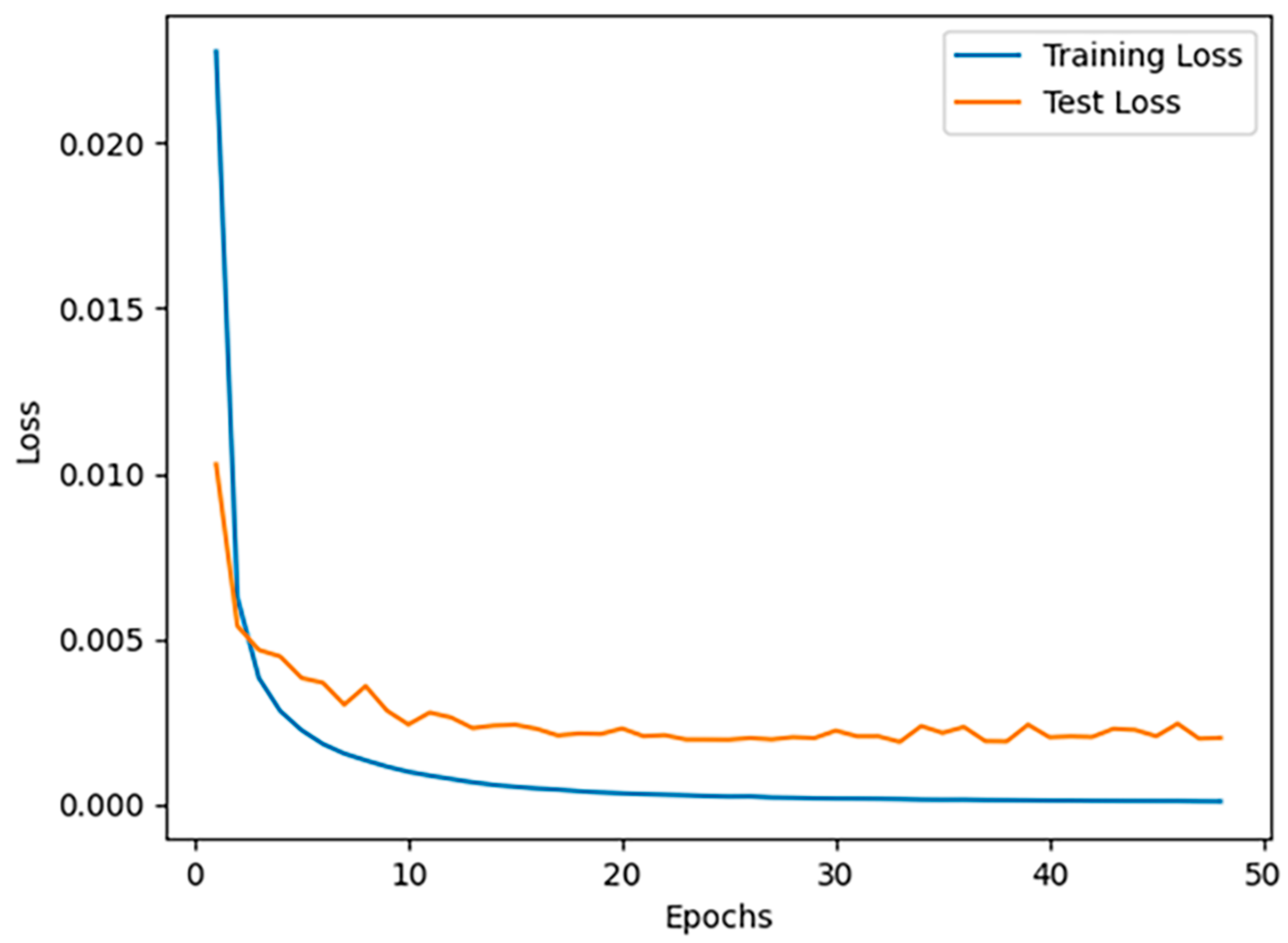

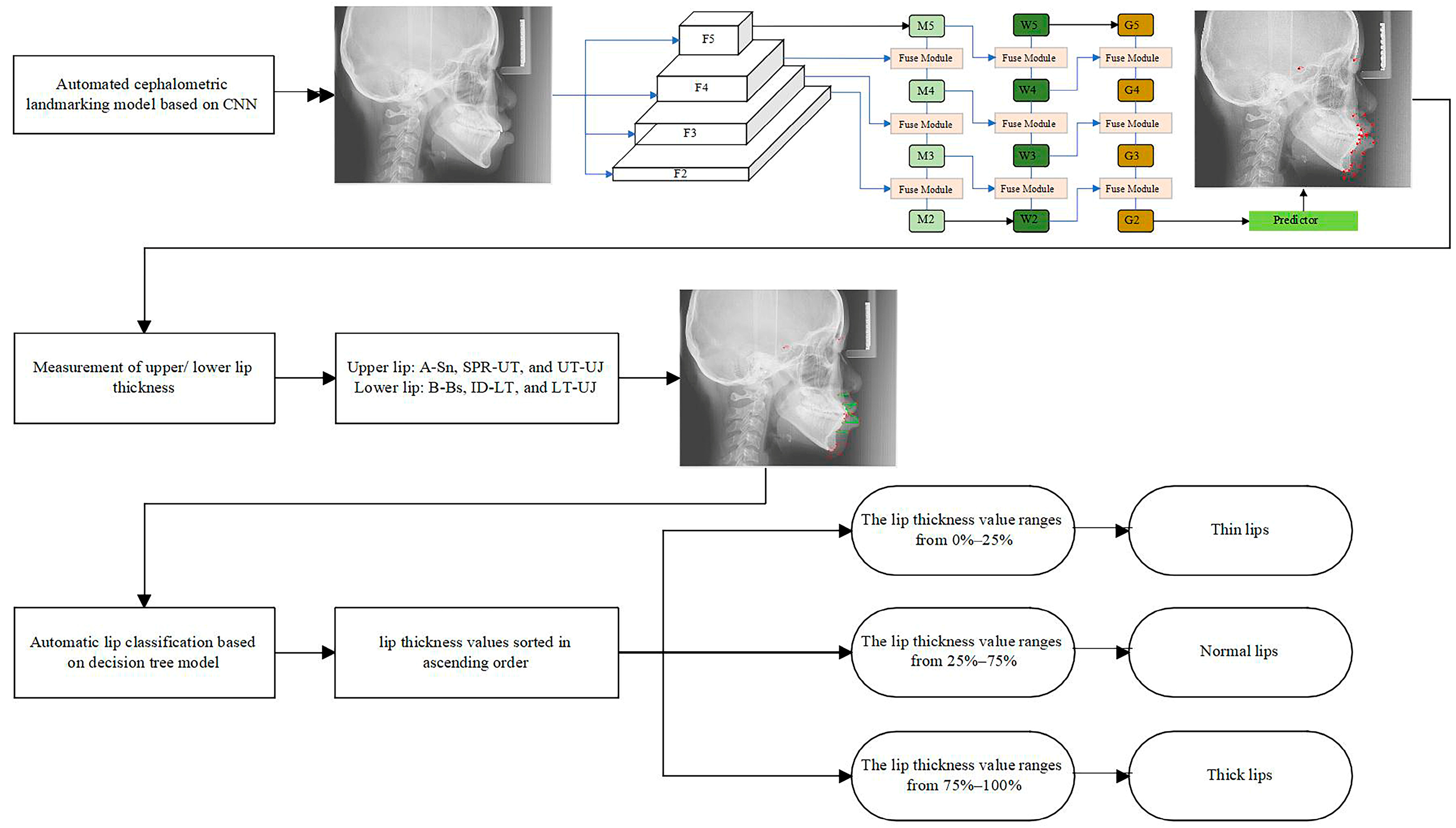

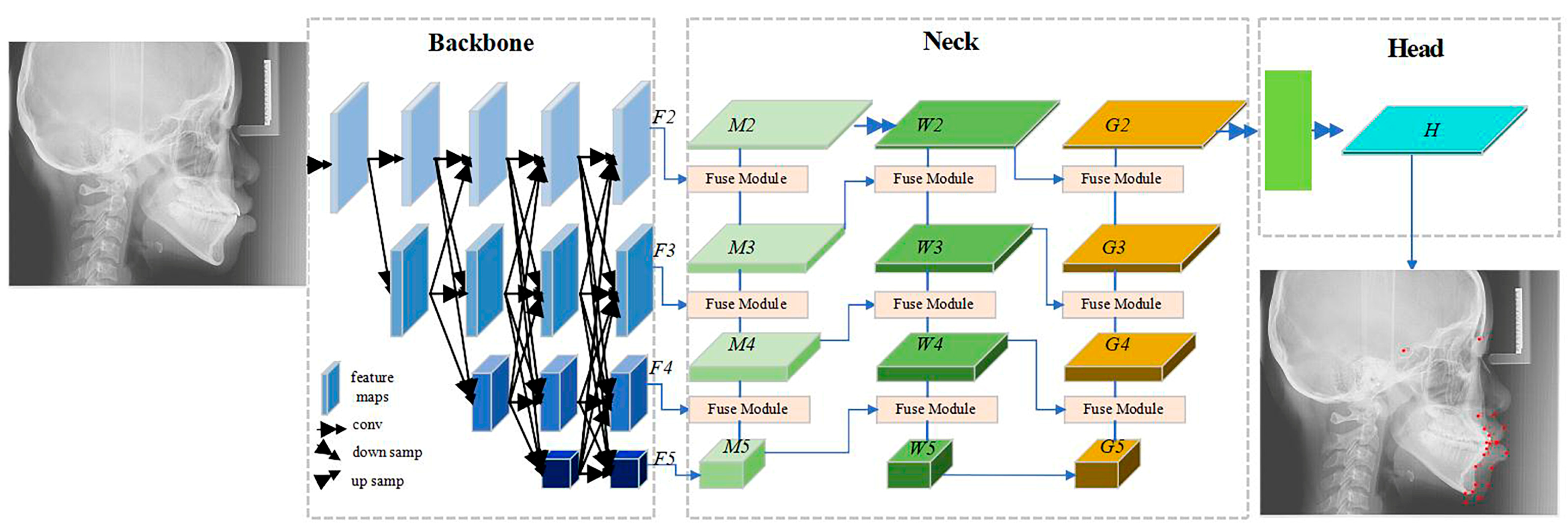

2.2. The Automatic Cephalometric Landmark Detection Model

2.3. Model Evaluation and Accuracy Assessment

2.4. The Decision Tree Model

3. Results

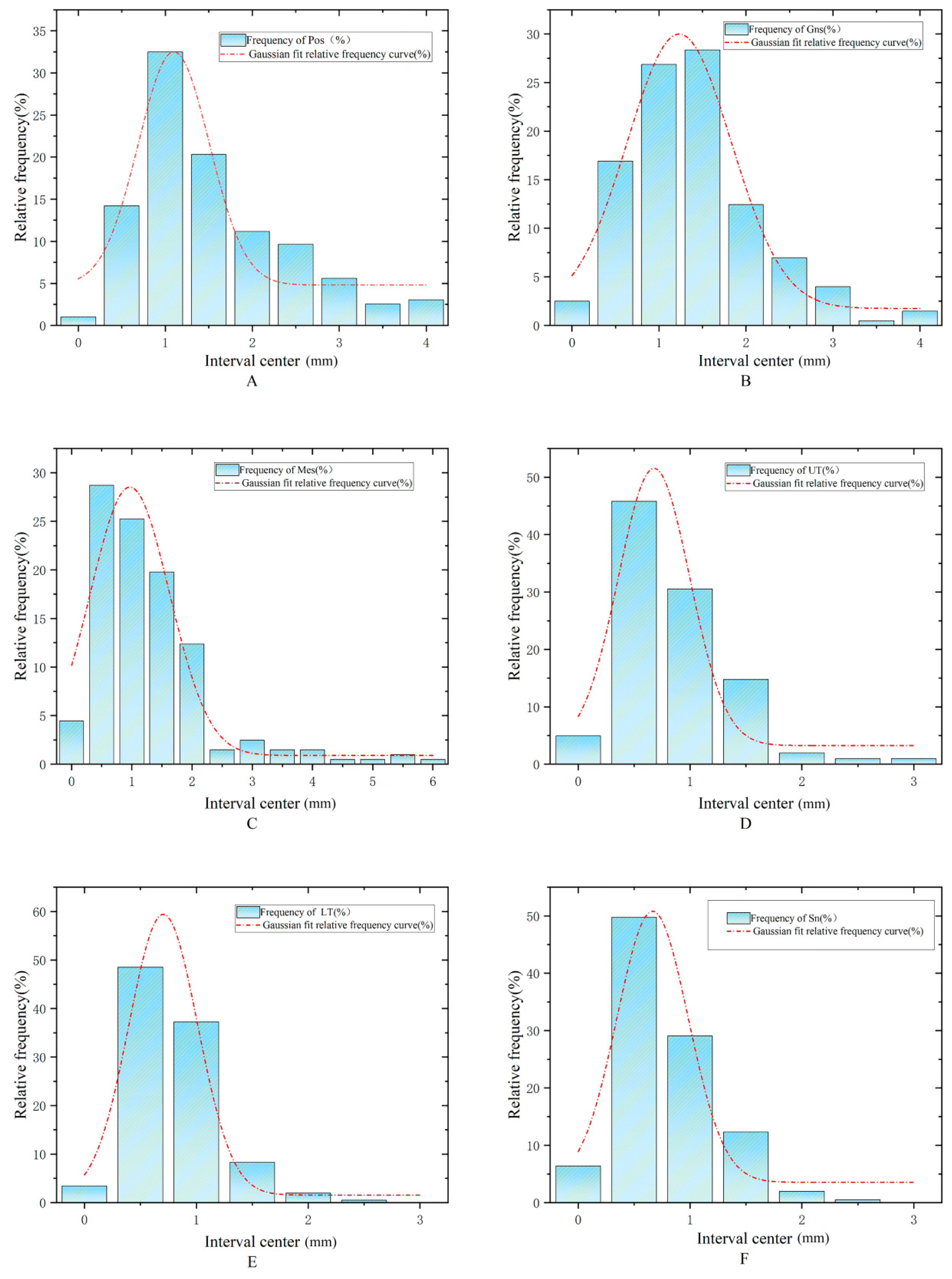

3.1. Accuracy of Automatic Landmark Localization

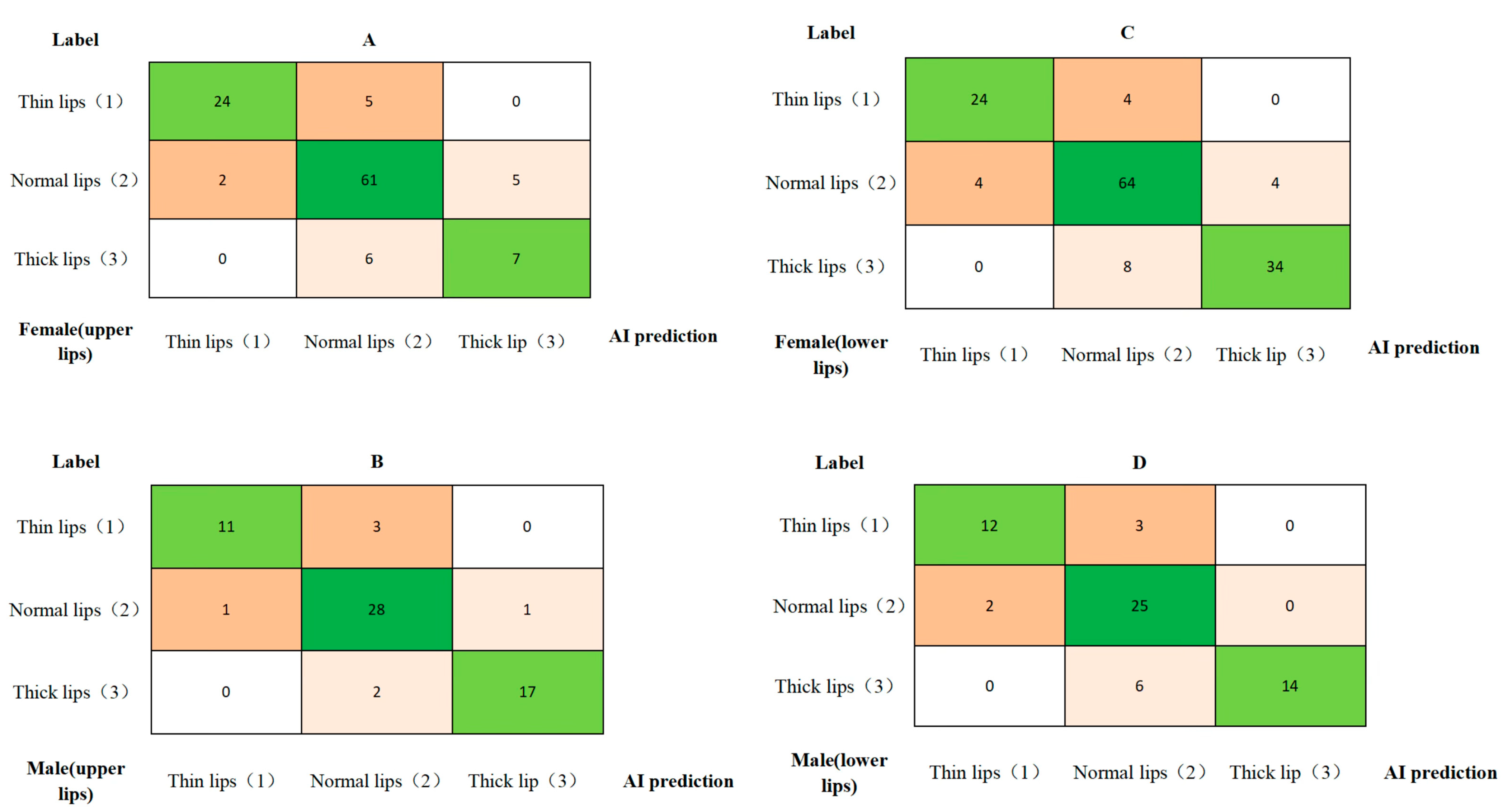

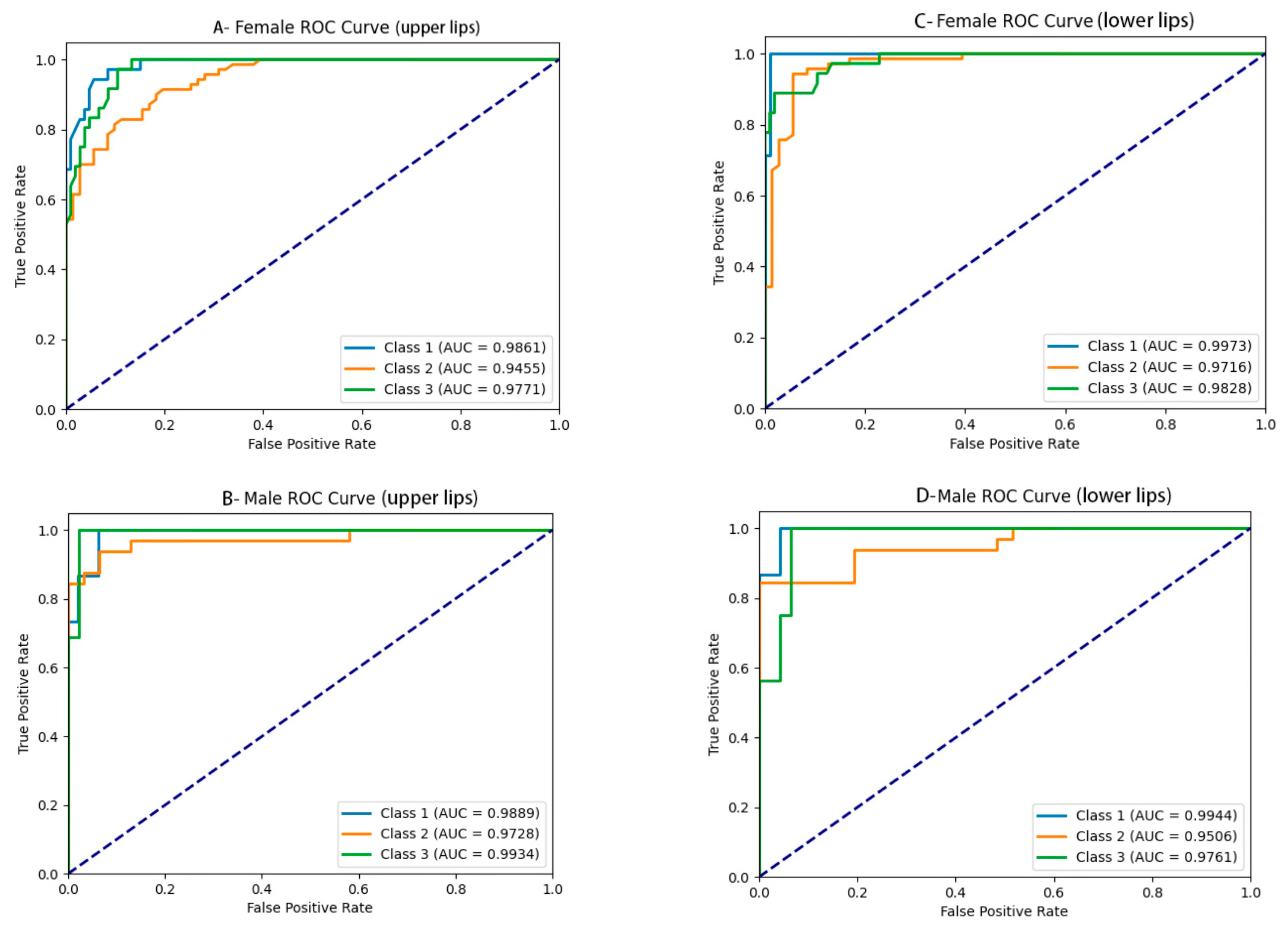

3.2. Lip Thickness Classification Performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A


| No. | Landmark |
|---|---|
| 1 | Sella (S) |
| 2 | Nasion (N) |
| 3 | Subspinale (A) |
| 4 | Superior prosthion (Spr) |
| 5 | The most labial surface of the upper incisor (UJ) |
| 6 | Upper incisor (UI) |
| 7 | Lower incisor (LI) |
| 8 | The most labial surface of the lower incisor (LJ) |
| 9 | Infradentale (Id) |
| 10 | Supramental (B) |
| 11 | Pogonion (Po) |
| 12 | Gnathion (Gn) |
| 13 | Menton (Me) |
| 14 | Subnasale (Sn) |
| 15 | Labrale superius (UT) |
| 16 | Stomion superius (UL) |
| 17 | Stomion inferius (LL) |
| 18 | Labrale inferius (LT) |
| 19 | Inferior labial sulcus (Bs) |
| 20 | Pogonion of soft tissue (Pos) |
| 21 | Gnathion of soft tissue (Gns) |
| 22 | Menton of soft tissue (Mes) |
| 23 | Ruler point 1 |
| 24 | Ruler point 2 |
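The landmark list ends with two ruler points, and localization errors elsewhere in the article are reported in millimetres. One plausible use of such points is pixel-to-millimetre calibration. The sketch below assumes that the two ruler points mark a known physical distance on the radiograph ruler; that purpose, the function names `mm_per_pixel` and `distance_mm`, and all coordinates are illustrative assumptions, not details taken from the study.

```python
import math


def mm_per_pixel(ruler_p1, ruler_p2, known_distance_mm):
    """Scale factor from two ruler points a known physical distance apart (assumed use)."""
    pixel_distance = math.dist(ruler_p1, ruler_p2)
    if pixel_distance == 0:
        raise ValueError("ruler points coincide; cannot calibrate")
    return known_distance_mm / pixel_distance


def distance_mm(point_a, point_b, scale):
    """Distance between two landmarks in millimetres, given a mm-per-pixel scale."""
    return math.dist(point_a, point_b) * scale


# Hypothetical pixel coordinates: ruler points 100 px apart, assumed to span 10 mm.
scale = mm_per_pixel((120.0, 40.0), (120.0, 140.0), known_distance_mm=10.0)

# Hypothetical pair of landmarks 50 px apart.
length = distance_mm((0.0, 0.0), (30.0, 40.0), scale)
print(f"scale = {scale:.3f} mm/px, distance = {length:.2f} mm")
```

Calibrating per image in this way would keep measurements comparable across radiographs taken at different magnifications, provided the ruler lies in the same plane as the anatomy being measured.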

| Landmark | Success detection rate (%) at 1 mm | 1.5 mm | 2 mm | 2.5 mm | 3 mm | 4 mm | Mean ± SD (mm) |
|---|---|---|---|---|---|---|---|
| Soft tissue | | | | | | | |
| Sn | 75.98 | 95.59 | 99.02 | 100.00 | 100.00 | 100.00 | 0.71 ± 0.41 |
| UL | 75.49 | 92.65 | 98.04 | 98.53 | 99.51 | 100.00 | 0.76 ± 0.46 |
| UT | 76.96 | 95.10 | 96.57 | 97.55 | 99.02 | 99.51 | 0.85 ± 0.47 |
| LL | 79.41 | 93.63 | 98.04 | 99.51 | 100.00 | 100.00 | 0.70 ± 0.45 |
| LT | 83.82 | 95.10 | 99.51 | 99.51 | 100.00 | 100.00 | 0.73 ± 0.37 |
| Bs | 78.92 | 92.65 | 96.57 | 100.00 | 100.00 | 100.00 | 0.79 ± 0.49 |
| Pos | 34.31 | 61.27 | 78.92 | 85.78 | 91.18 | 96.57 | 1.54 ± 1.09 |
| Gns | 38.73 | 66.18 | 83.82 | 91.67 | 95.59 | 98.53 | 1.34 ± 0.83 |
| Mes | 50.00 | 75.00 | 86.76 | 89.71 | 92.16 | 95.59 | 2.50 ± 1.27 |
| Hard tissue | | | | | | | |
| S | 84.31 | 97.55 | 99.51 | 100.00 | 100.00 | 100.00 | 0.66 ± 0.37 |
| N | 72.55 | 90.69 | 96.08 | 97.55 | 99.02 | 99.02 | 1.95 ± 1.56 |
| A | 59.31 | 84.31 | 95.59 | 98.04 | 98.53 | 100.00 | 0.96 ± 0.56 |
| Spr | 87.75 | 98.04 | 99.51 | 100.00 | 100.00 | 100.00 | 0.64 ± 0.32 |
| UJ | 86.76 | 99.02 | 100.00 | 100.00 | 100.00 | 100.00 | 0.65 ± 0.30 |
| UI | 86.27 | 96.57 | 99.02 | 99.51 | 100.00 | 100.00 | 0.65 ± 0.38 |
| LI | 79.41 | 94.12 | 96.57 | 99.02 | 99.02 | 99.51 | 0.78 ± 0.52 |
| LJ | 84.31 | 95.59 | 98.53 | 99.02 | 99.51 | 99.51 | 0.72 ± 0.43 |
| Id | 85.78 | 96.08 | 98.53 | 100.00 | 100.00 | 100.00 | 0.69 ± 0.35 |
| B | 61.27 | 83.82 | 90.69 | 96.08 | 98.04 | 100.00 | 1.02 ± 0.78 |
| Po | 60.29 | 81.86 | 93.14 | 99.51 | 100.00 | 100.00 | 0.98 ± 0.55 |
| Gn | 75.49 | 95.59 | 98.04 | 99.51 | 100.00 | 100.00 | 0.77 ± 0.41 |
| Me | 72.55 | 90.69 | 96.57 | 98.04 | 99.51 | 100.00 | 0.83 ± 0.52 |
| Average | 72.26 | 89.59 | 95.41 | 97.66 | 98.69 | 99.47 | 0.97 ± 0.52 |
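The table above reports, for each landmark, a success detection rate at several distance thresholds and a mean ± SD error in millimetres. A minimal sketch of how such figures are commonly computed from predicted and reference coordinates follows. It assumes the error is the Euclidean (radial) distance, that a prediction counts as a success when its error is at or below the threshold, and that SD is the sample standard deviation; the study's exact conventions are not stated here, and the coordinates are made up.

```python
import math
from statistics import mean, stdev


def radial_errors_mm(predicted, reference, mm_per_pixel=1.0):
    """Euclidean distance between paired points, converted to millimetres."""
    return [math.dist(p, r) * mm_per_pixel for p, r in zip(predicted, reference)]


def success_detection_rate(errors_mm, threshold_mm):
    """Percentage of errors at or below the threshold (inclusive bound is an assumption)."""
    hits = sum(1 for e in errors_mm if e <= threshold_mm)
    return 100.0 * hits / len(errors_mm)


# Hypothetical coordinates for one landmark on four radiographs (already in mm).
reference = [(100.0, 100.0), (200.0, 150.0), (50.0, 75.0), (10.0, 10.0)]
predicted = [(100.0, 100.5), (203.0, 154.0), (50.0, 76.2), (10.0, 12.6)]

errors = radial_errors_mm(predicted, reference)
for threshold in (1.0, 1.5, 2.0, 2.5, 3.0, 4.0):
    rate = success_detection_rate(errors, threshold)
    print(f"SDR at {threshold} mm: {rate:.2f}%")
print(f"Mean ± SD: {mean(errors):.2f} ± {stdev(errors):.2f} mm")
```

Because the rate is cumulative, each row of the table should be non-decreasing from left to right, which gives a quick sanity check on the reported values.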

| Classification | Upper lip, female (mm) | Upper lip, male (mm) | Lower lip, female (mm) | Lower lip, male (mm) |
|---|---|---|---|---|
| Thin lip | 6.69–10.14 | 6.84–10.81 | 7.72–11.51 | 8.88–11.47 |
| Normal lip | 10.15–12.64 | 10.83–13.93 | 11.52–13.74 | 11.49–14.61 |
| Thick lip | 12.67–15.98 | 13.96–20.88 | 13.75–17.07 | 14.67–20.35 |
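The ranges above can be read as sex-specific and lip-specific cut-offs. The sketch below turns them into a simple lookup. It is not the decision tree model named in Section 2.4: it only copies the upper bounds of the thin and normal ranges from the table, and it assumes that a value falling in a gap between two reported ranges (for example 10.82 for the male upper lip) belongs to the higher class. The names `UPPER_BOUNDS_MM` and `classify_lip` are hypothetical.

```python
# Upper bounds (mm) of the "thin" and "normal" ranges, copied from the table above.
UPPER_BOUNDS_MM = {
    ("upper", "female"): (10.14, 12.64),
    ("upper", "male"): (10.81, 13.93),
    ("lower", "female"): (11.51, 13.74),
    ("lower", "male"): (11.47, 14.61),
}


def classify_lip(thickness_mm, lip, sex):
    """Return 'thin', 'normal' or 'thick' for a thickness in millimetres."""
    thin_max, normal_max = UPPER_BOUNDS_MM[(lip, sex)]
    if thickness_mm <= thin_max:
        return "thin"
    if thickness_mm <= normal_max:
        return "normal"
    return "thick"


# Hypothetical measurements.
print(classify_lip(9.0, "upper", "female"))
print(classify_lip(11.5, "lower", "male"))
print(classify_lip(15.0, "lower", "male"))
```

This lookup also labels values outside the observed minimum and maximum of each range, so it extrapolates beyond the reported data; a stricter version could raise an error for such inputs.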

| Group | Accuracy | Sensitivity | Specificity | Precision | F1-Score | AUC |
|---|---|---|---|---|---|---|
| Upper lip thickness | | | | | | |
| Female | 0.91 ± 0.04 | 0.86 ± 0.04 | 0.92 ± 0.07 | 0.88 ± 0.04 | 0.87 ± 0.01 | 0.97 ± 0.02 |
| Class 1 | 0.95 | 0.82 | 0.98 | 0.92 | 0.87 | 0.98 |
| Class 2 | 0.87 | 0.89 | 0.84 | 0.84 | 0.87 | 0.94 |
| Class 3 | 0.92 | 0.86 | 0.94 | 0.88 | 0.87 | 0.97 |
| Male | 0.92 ± 0.03 | 0.87 ± 0.08 | 0.93 ± 0.07 | 0.90 ± 0.05 | 0.88 ± 0.04 | 0.98 ± 0.02 |
| Class 1 | 0.93 | 0.78 | 0.97 | 0.91 | 0.84 | 0.98 |
| Class 2 | 0.88 | 0.93 | 0.84 | 0.84 | 0.88 | 0.97 |
| Class 3 | 0.95 | 0.89 | 0.97 | 0.94 | 0.91 | 0.99 |
| Lower lip thickness | | | | | | |
| Female | 0.90 ± 0.04 | 0.85 ± 0.02 | 0.91 ± 0.07 | 0.86 ± 0.03 | 0.85 ± 0.01 | 0.98 ± 0.01 |
| Class 1 | 0.94 | 0.85 | 0.96 | 0.85 | 0.85 | 0.99 |
| Class 2 | 0.85 | 0.88 | 0.82 | 0.84 | 0.86 | 0.97 |
| Class 3 | 0.91 | 0.80 | 0.96 | 0.89 | 0.85 | 0.98 |
| Male | 0.88 ± 0.05 | 0.80 ± 0.11 | 0.90 ± 0.14 | 0.86 ± 0.13 | 0.82 ± 0.04 | 0.98 ± 0.02 |
| Class 1 | 0.91 | 0.80 | 0.95 | 0.85 | 0.82 | 0.99 |
| Class 2 | 0.82 | 0.92 | 0.74 | 0.73 | 0.81 | 0.95 |
| Class 3 | 0.90 | 0.70 | 1.00 | 1.00 | 0.82 | 0.97 |
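The per-class rows above are consistent with one-vs-rest metrics for a three-class problem, and the Female and Male rows appear to be the mean ± sample SD of the three class rows. The sketch below shows both steps under those assumptions. The confusion matrix is hypothetical and not taken from the study, AUC is omitted because it needs predicted scores rather than counts, and `one_vs_rest_metrics` is an illustrative name.

```python
from statistics import mean, stdev


def one_vs_rest_metrics(confusion, k):
    """Metrics for class k from a square confusion matrix (rows: true, columns: predicted)."""
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp
    fp = sum(row[k] for row in confusion) - tp
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn)
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


# Hypothetical 3-class confusion matrix, not data from the study.
confusion = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 1, 9],
]
per_class = [one_vs_rest_metrics(confusion, k) for k in range(3)]

# Summarise one metric across classes as mean ± sample SD.
accuracies = [m["accuracy"] for m in per_class]
summary = f"{mean(accuracies):.2f} ± {stdev(accuracies):.2f}"

# The same summary applied to the per-class accuracies reported
# for the female upper lip in the table above.
reported = [0.95, 0.87, 0.92]
reported_summary = f"{mean(reported):.2f} ± {stdev(reported):.2f}"
print(summary, reported_summary)
```

The function divides by zero if a class is never predicted or never present, so a production version would need to guard those cases.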

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, M.; Huo, Z.; Ren, J.; Zhu, H.; Li, H.; Li, J.; Mei, L. Automated Landmark Detection and Lip Thickness Classification Using a Convolutional Neural Network in Lateral Cephalometric Radiographs. Diagnostics 2025, 15, 1468. https://doi.org/10.3390/diagnostics15121468
