Prediction of 123I-FP-CIT SPECT Results from First Acquired Projections Using Artificial Intelligence

Abstract

1. Introduction

1.1. Clinical Context

1.2. Artificial Intelligence in Nuclear Imaging

1.3. Objective

2. Materials and Methods

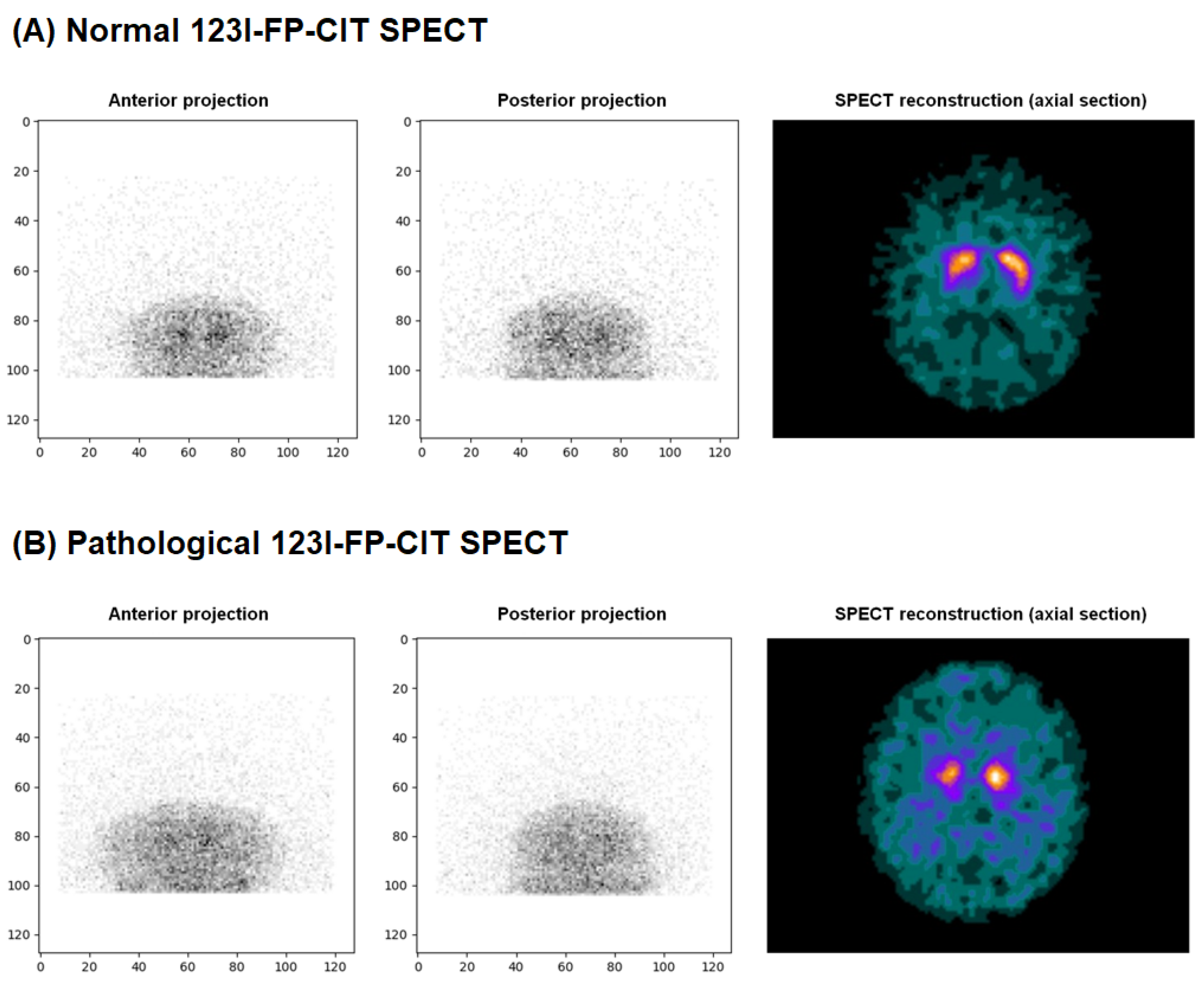

2.1. Data Collection and Labeling

- 0: No loss of dopaminergic activity

- 1: Doubtful exam, leaning towards no loss of dopaminergic activity

- 2: Doubtful exam, leaning towards a loss of dopaminergic activity

- 3: Loss of dopaminergic activity

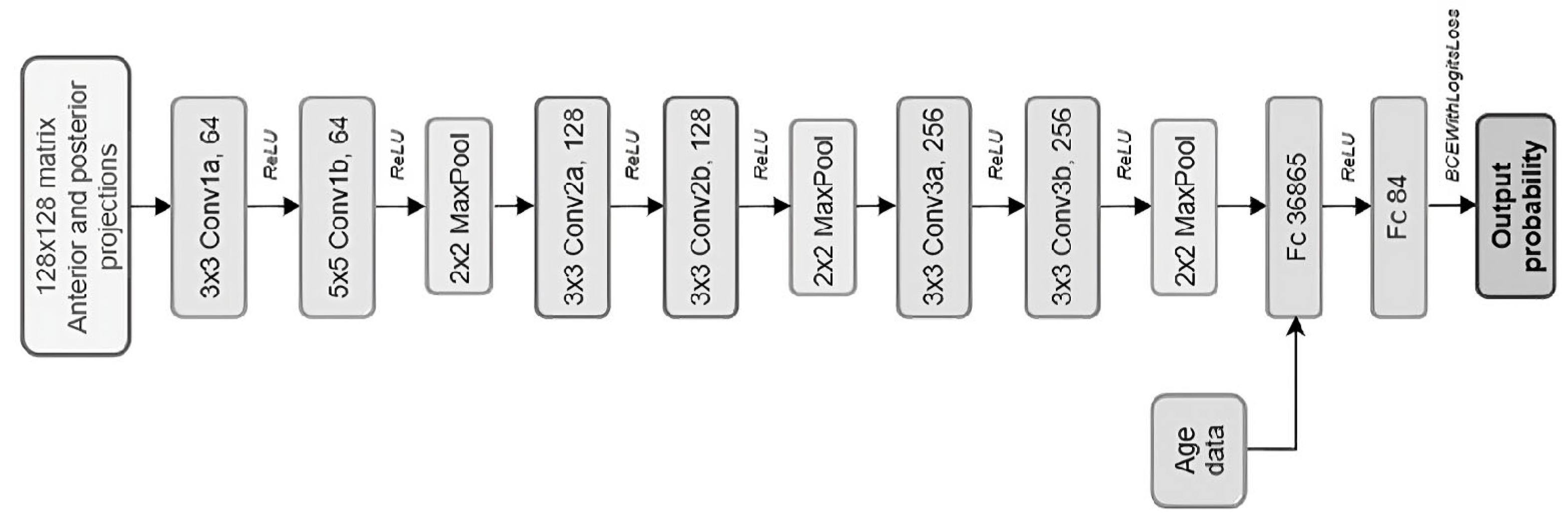

2.2. CNN Model Development

- (1) The model evaluates all DaT-SPECT exams in the training set, making predictions based on the current weights of the neural network.

- (2) The discrepancy between these predictions and the actual status is measured by the loss function. The Adam optimizer then adjusts the network weights to reduce this discrepancy.

- (3) Accuracy (i.e., the rate of correct predictions) is calculated using these adjusted parameters, completing the epoch.
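The three steps of an epoch can be illustrated with a minimal sketch. This is not the study's network: a toy logistic model with two input features stands in for the CNN, the data are synthetic, and the names `run_epoch`, `predict_logits`, and `state`, as well as the learning rate, are assumptions chosen for illustration. The loss is a binary cross-entropy computed on raw logits and the update rule is a hand-written Adam step, so the block only shows the order of operations within one epoch.

```python
import math
import random


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def bce_with_logits(z, y):
    """Binary cross-entropy computed directly on the raw logit z."""
    return max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))


def predict_logits(weights, features):
    """Linear score per sample; the last entry of weights is the bias."""
    return [sum(w * x for w, x in zip(weights, row)) + weights[-1]
            for row in features]


def run_epoch(weights, state, features, labels, lr=0.05,
              beta1=0.9, beta2=0.999, eps=1e-8):
    n = len(labels)
    # Step (1): evaluate the whole training set with the current weights.
    logits = predict_logits(weights, features)
    # Step (2): measure the discrepancy with the loss function ...
    loss = sum(bce_with_logits(z, y) for z, y in zip(logits, labels)) / n
    grads = [0.0] * len(weights)
    for z, y, row in zip(logits, labels, features):
        err = (sigmoid(z) - y) / n          # d(mean loss) / d(logit)
        for j, x in enumerate(row):
            grads[j] += err * x
        grads[-1] += err                    # bias gradient
    # ... then let Adam adjust the weights to reduce it.
    state["t"] += 1
    for j, g in enumerate(grads):
        state["m"][j] = beta1 * state["m"][j] + (1 - beta1) * g
        state["v"][j] = beta2 * state["v"][j] + (1 - beta2) * g * g
        m_hat = state["m"][j] / (1 - beta1 ** state["t"])
        v_hat = state["v"][j] / (1 - beta2 ** state["t"])
        weights[j] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    # Step (3): accuracy with the adjusted weights closes the epoch.
    hits = sum((z > 0) == (y == 1)
               for z, y in zip(predict_logits(weights, features), labels))
    return loss, hits / n


# Hypothetical toy data: two features per "exam", label 1 when their sum is positive.
rng = random.Random(0)
features = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(200)]
labels = [1 if a + b > 0 else 0 for a, b in features]

weights = [0.0, 0.0, 0.0]                   # two weights plus a bias
state = {"t": 0, "m": [0.0] * 3, "v": [0.0] * 3}
history = [run_epoch(weights, state, features, labels) for _ in range(100)]
print(f"first epoch loss {history[0][0]:.4f}, last epoch loss {history[-1][0]:.4f}")
```

Each entry of `history` is a `(loss, accuracy)` pair for one epoch; plotting both against the epoch index gives the kind of training curve typically inspected to decide when to stop.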

2.3. Threshold and Statistics

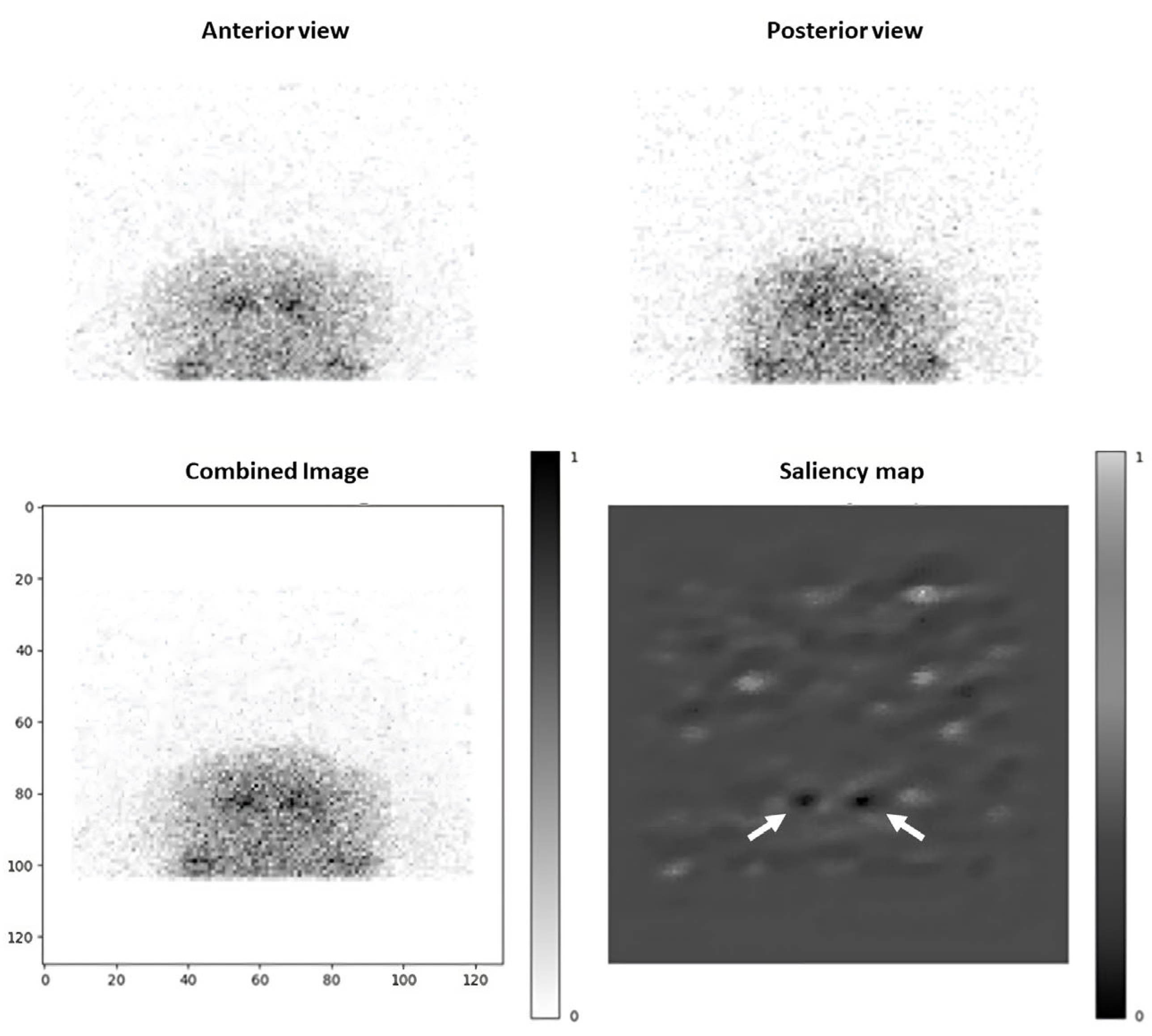

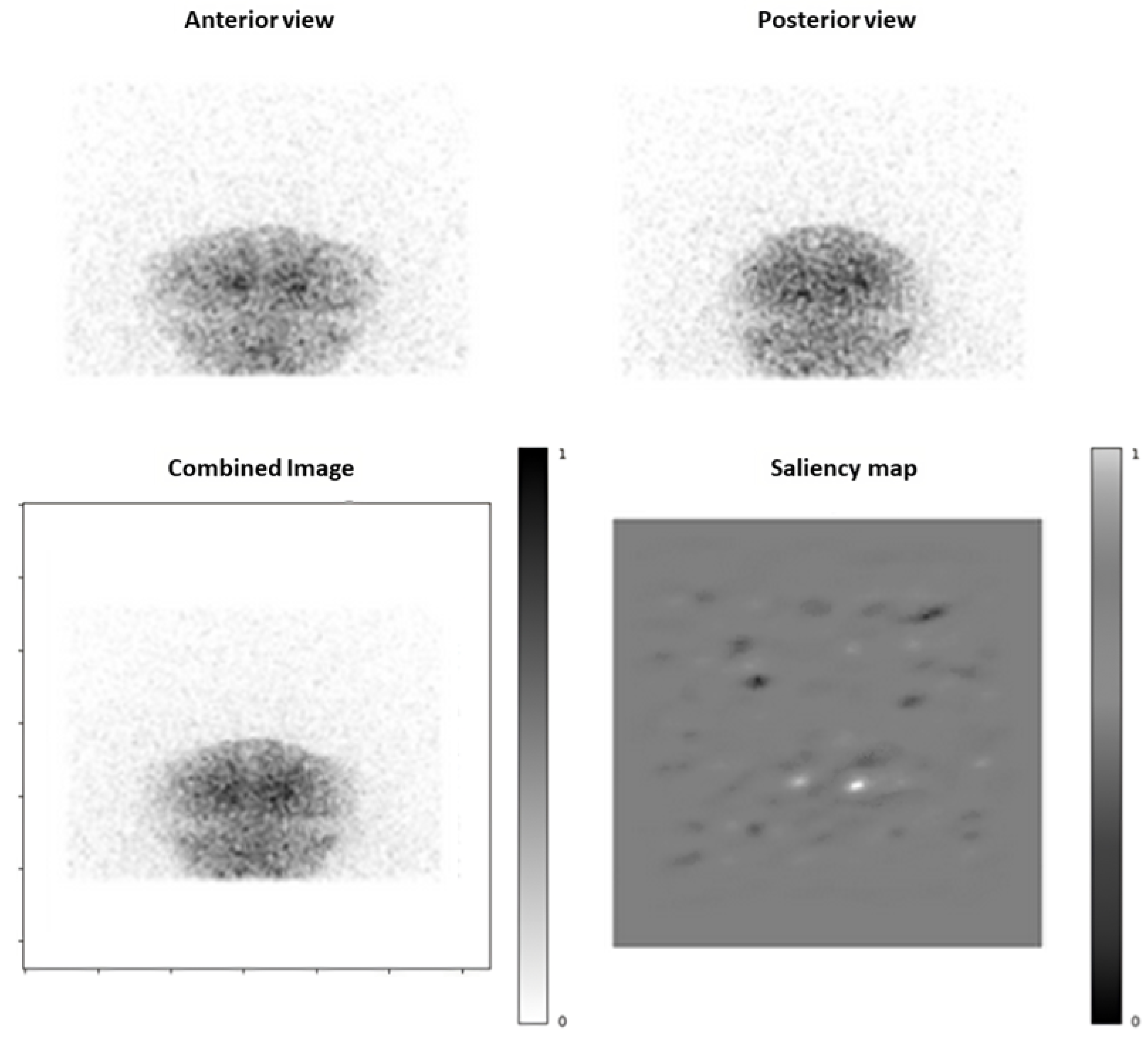

2.4. Saliency Maps

3. Results

3.1. Included Patients

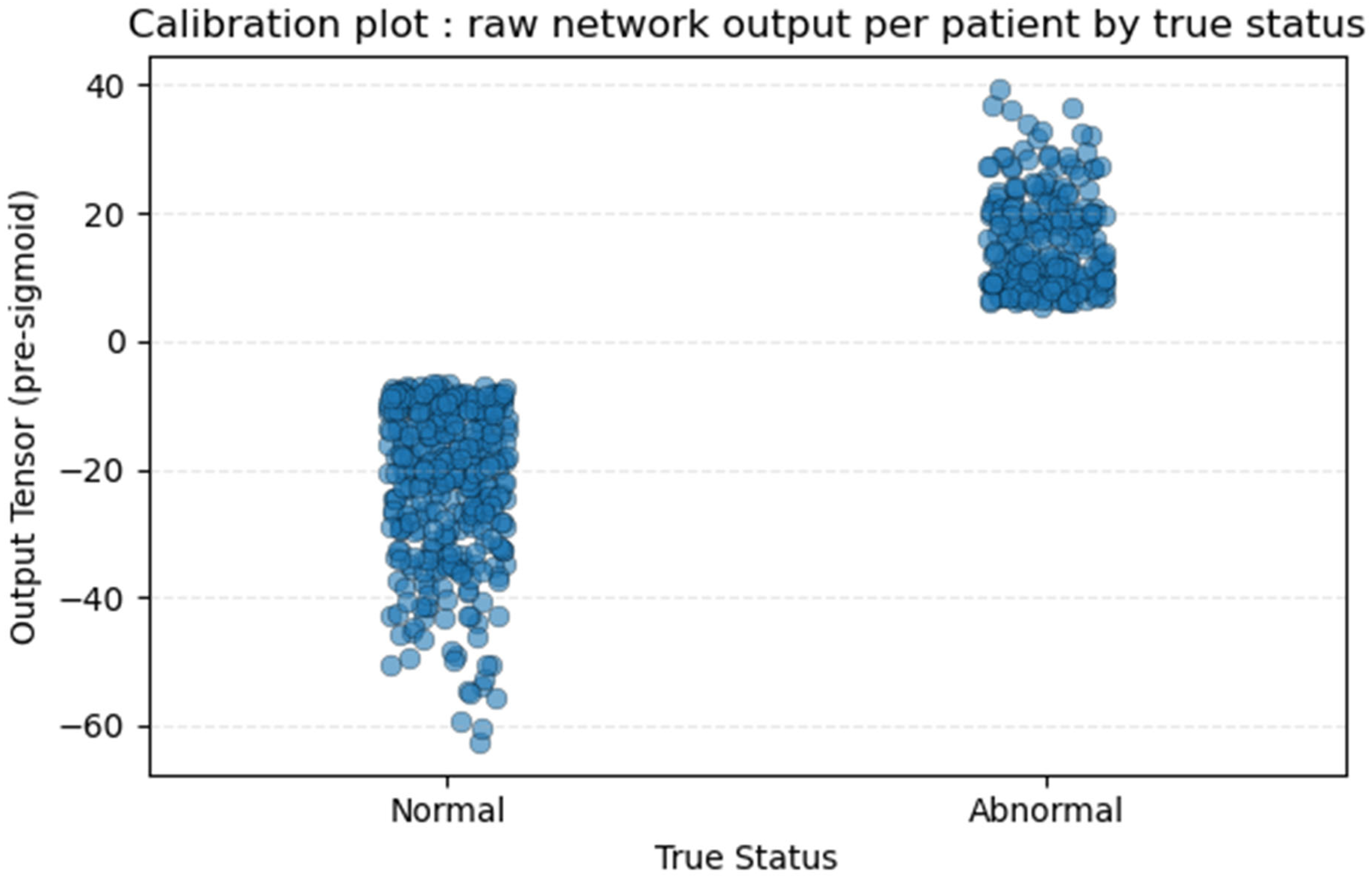

3.2. Training and Validation Outcomes

3.3. Model Performances

- Sensitivity: 98.0% [89.5–99.6]

- Specificity: 52.0% [38.5–65.2]

- Accuracy: 75.0% [65.7–82.5]

- Negative Predictive Value: 96.3% [81.7–99.3]

- Positive Predictive Value: 67.1% [55.7–76.8]
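As a worked check, the figures above can be recomputed from the confusion-matrix counts reported for the test set (TN = 26, FN = 1, FP = 24, TP = 49). The sketch below assumes a Wilson score interval with z = 1.96 for the bracketed ranges; the method used for the intervals is not stated in this section, so that choice and the helper names `wilson_interval` and `diagnostic_metrics` are assumptions.

```python
import math


def wilson_interval(successes, total, z=1.96):
    """Wilson score interval for a binomial proportion (assumed CI method)."""
    p = successes / total
    denom = 1 + z * z / total
    centre = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return centre - half, centre + half


def diagnostic_metrics(tn, fn, fp, tp):
    """Return {metric: (estimate, ci_low, ci_high)} from confusion-matrix counts."""
    counts = {
        "sensitivity": (tp, tp + fn),            # TP / all actual positives
        "specificity": (tn, tn + fp),            # TN / all actual negatives
        "accuracy": (tp + tn, tn + fn + fp + tp),
        "npv": (tn, tn + fn),                    # TN / all predicted negatives
        "ppv": (tp, tp + fp),                    # TP / all predicted positives
    }
    return {name: (k / n, *wilson_interval(k, n)) for name, (k, n) in counts.items()}


# Counts taken from the test-set confusion matrix reported in the article.
metrics = diagnostic_metrics(tn=26, fn=1, fp=24, tp=49)
for name, (value, low, high) in metrics.items():
    print(f"{name}: {100 * value:.1f}% [{100 * low:.1f}-{100 * high:.1f}]")
```

Under this assumption the recomputed bounds agree with the reported intervals to within rounding, which suggests (but does not prove) that a Wilson-type interval was used.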

3.4. Saliency Maps Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CI | Confidence Interval |
| CNN | Convolutional Neural Network |
| DaT | Dopamine Transporter ligands |
| FN | False Negative |
| FP | False Positive |
| PCI | Pathological Confidence Index |
| SPECT | Single-Photon Emission Computed Tomography |
| ReLU | Rectified Linear Unit |
| Se | Sensitivity |
| Sp | Specificity |
| TN | True Negative |
| TP | True Positive |
| VGG16 | Visual Geometry Group of the University of Oxford, 16 layers |

Appendix A

| Section | Topic | Item | Development/Evaluation | Checklist Item | Page and Comments |
|---|---|---|---|---|---|
| TITLE | Title | 1 | D;E | Identify the study as developing or evaluating the performance of a multivariable prediction model, the target population, and the outcome to be predicted. | p1 |
| ABSTRACT | Abstract | 2 | D;E | Report an abstract addressing each item in the TRIPOD+AI for Abstracts checklist. | p1 |
| INTRODUCTION | Background | 3a | D;E | Explain the healthcare context (including whether diagnostic or prognostic) and rationale for developing or evaluating the prediction model, including references to existing models. | p2–3 §1.1 and §1.2, Table 1 |
| | | 3b | D;E | Describe the target population and the intended purpose of the prediction model in the care pathway, including its intended users. | p2 §1.1 and §1.3 |
| | | 3c | D;E | Describe any known health inequalities between sociodemographic groups. | NA |
| | Objectives | 4 | D;E | Specify the study objectives, including whether the study describes development or validation of the prediction model (or both). | p2 §1.3 |
| METHODS | Data | 5a | D;E | Describe the sources of data separately for the development and evaluation datasets (e.g., randomised trial, cohort, routine care or registry data), the rationale for using these data, and representativeness of the data. | p4 §2.1 |
| | | 5b | D;E | Specify the dates of the collected participant data, including start and end of participant accrual; and, if applicable, end of follow-up. | p4 §2.1 |
| | Participants | 6a | D;E | Specify key elements of the study setting (e.g., primary care, secondary care, general population) including the number and location of centres. | p4 §2.1 |
| | | 6b | D;E | Describe the eligibility criteria for study participants. | p4 §2.1 |
| | | 6c | D;E | Give details of any treatments received, and how they were handled during model development or evaluation, if relevant. | NA (diagnostic imaging) |
| | Data preparation | 7 | D;E | Describe any data pre-processing and quality checking, including whether this was similar across relevant sociodemographic groups. | p5–6 §2.2 |
| | Outcome | 8a | D;E | Clearly define the outcome that is being predicted and the time horizon, including how and when assessed, the rationale for choosing this outcome, and whether the method of outcome assessment is consistent across sociodemographic groups. | p4 §2.1 |
| | | 8b | D;E | If outcome assessment requires subjective interpretation, describe the qualifications and demographic characteristics of the outcome assessors. | p4 §2.1 |
| | | 8c | D;E | Report any actions to blind assessment of the outcome to be predicted. | p4 §2.1 |
| | Predictors | 9a | D | Describe the choice of initial predictors (e.g., literature, previous models, all available predictors) and any pre-selection of predictors before model building. | p2 §1.2 and Table 1 |
| | | 9b | D;E | Clearly define all predictors, including how and when they were measured (and any actions to blind assessment of predictors for the outcome and other predictors). | p5–7 §2.2 and §2.3 |
| | | 9c | D;E | If predictor measurement requires subjective interpretation, describe the qualifications and demographic characteristics of the predictor assessors. | NA |
| | Sample size | 10 | D;E | Explain how the study size was arrived at (separately for development and evaluation), and justify that the study size was sufficient to answer the research question. Include details of any sample size calculation. | p4 §2.1 |
| | Missing data | 11 | D;E | Describe how missing data were handled. Provide reasons for omitting any data. | p4 §2.1 |
| | Analytical methods | 12a | D | Describe how the data were used (e.g., for development and evaluation of model performance) in the analysis, including whether the data were partitioned, considering any sample size requirements. | p5–6 §2.2 |
| | | 12b | D | Depending on the type of model, describe how predictors were handled in the analyses (functional form, rescaling, transformation, or any standardisation). | p5–7 §2.2 and §2.3 |
| | | 12c | D | Specify the type of model, rationale, all model-building steps, including any hyperparameter tuning, and method for internal validation. | p5–7 §2.2 and §2.3, Figure 2 |
| | | 12d | D;E | Describe if and how any heterogeneity in estimates of model parameter values and model performance was handled and quantified across clusters (e.g., hospitals, countries). See TRIPOD-Cluster for additional considerations. | NA (single-centre study) |
| | | 12e | D;E | Specify all measures and plots used (and their rationale) to evaluate model performance (e.g., discrimination, calibration, clinical utility) and, if relevant, to compare multiple models. | p6–7 §2.3; p8 §3.2, Figure 3 and Figure A1 calibration plot |
| | | 12f | E | Describe any model updating (e.g., recalibration) arising from the model evaluation, either overall or for particular sociodemographic groups or settings. | NA |
| | | 12g | E | For model evaluation, describe how the model predictions were calculated (e.g., formula, code, object, application programming interface). | p5–6 §2.2 |
| | Class imbalance | 13 | D;E | If class imbalance methods were used, state why and how this was done, and any subsequent methods to recalibrate the model or the model predictions. | NA |
| | Fairness | 14 | D;E | Describe any approaches that were used to address model fairness and their rationale. | NA |
| | Model output | 15 | D | Specify the output of the prediction model (e.g., probabilities, classification). Provide details and rationale for any classification and how the thresholds were identified. | p6–7 §2.3 |
| | Development versus evaluation | 16 | D;E | Identify any differences between the development and evaluation data in healthcare setting, eligibility criteria, outcome, and predictors. | p4 §2.1 |
| | Ethical approval | 17 | D;E | Name the institutional research board or ethics committee that approved the study and describe the participant-informed consent or the ethics committee waiver of informed consent. | p13 |
| OPEN SCIENCE | Funding | 18a | D;E | Give the source of funding and the role of the funders for the present study. | p13 |
| | Conflicts of interest | 18b | D;E | Declare any conflicts of interest and financial disclosures for all authors. | p13 |
| | Protocol | 18c | D;E | Indicate where the study protocol can be accessed or state that a protocol was not prepared. | p13 |
| | Registration | 18d | D;E | Provide registration information for the study, including register name and registration number, or state that the study was not registered. | p1 |
| | Data sharing | 18e | D;E | Provide details of the availability of the study data. | p13 |
| | Code sharing | 18f | D;E | Provide details of the availability of the analytical code. | p13 |
| PATIENT AND PUBLIC INVOLVEMENT | Patient and public involvement | 19 | D;E | Provide details of any patient and public involvement during the design, conduct, reporting, interpretation, or dissemination of the study or state no involvement. | p4 and p13 |
| RESULTS | Participants | 20a | D;E | Describe the flow of participants through the study, including the number of participants with and without the outcome and, if applicable, a summary of the follow-up time. | p7 §3.1, Table 2 |
| | | 20b | D;E | Report the characteristics overall and, where applicable, for each data source or setting, including the key dates, key predictors (including demographics), treatments received, sample size, number of outcome events, follow-up time, and amount of missing data. A table may be helpful. Report any differences across key demographic groups. | p7 §3.1, Table 2 |
| | | 20c | E | For model evaluation, show a comparison with the development data of the distribution of important predictors (demographics, predictors, and outcome). | NA (no other model predicting from only 2 projections) |
| | Model development | 21 | D;E | Specify the number of participants and outcome events in each analysis (e.g., for model development, hyperparameter tuning, model evaluation). | p7 |
| | Model specification | 22 | D | Provide details of the full prediction model (e.g., formula, code, object, API) to allow predictions in new individuals and to enable third-party evaluation and implementation, including any restrictions to access or re-use (e.g., freely available, proprietary). | p6, Figure 2 |
| | Model performance | 23a | D;E | Report model performance estimates with confidence intervals, including for any key subgroups (e.g., sociodemographic). | p8–9 §3.2 and §3.3, Table 3 |
| | | 23b | D;E | If examined, report results of any heterogeneity in model performance across clusters. See TRIPOD-Cluster for additional details. | NA |
| | Model updating | 24 | E | Report the results from any model updating, including the updated model and subsequent performance. | NA |
| DISCUSSION | Interpretation | 25 | D;E | Give an overall interpretation of the main results, including issues of fairness in the context of the objectives and previous studies. | p11–12 |
| | Limitations | 26 | D;E | Discuss any limitations of the study (such as a non-representative sample, sample size, overfitting, missing data) and their effects on any biases, statistical uncertainty, and generalizability. | p11–12 |
| | Usability of the model in the context of current care | 27a | D | Describe how poor quality or unavailable input data (e.g., predictor values) should be assessed and handled when implementing the prediction model. | p12 |
| | | 27b | D | Discuss whether users will be required to interact in the handling of the input data or use of the model, and what level of expertise is required of users. | p11 |
| | | 27c | D;E | Discuss any next steps for future research, with a specific view to applicability and generalizability of the model. | p12 |

References

- Buchert, R.; Buhmann, C.; Apostolova, I.; Meyer, P.T.; Gallinat, J. Nuclear Imaging in the Diagnosis of Clinically Uncertain Parkinsonian Syndromes. Dtsch. Ärztebl. Int. 2019, 116, 747–754.

- Seibyl, J.P.; Kupsch, A.; Booij, J.; Grosset, D.G.; Costa, D.C.; Hauser, R.A.; Darcourt, J.; Bajaj, N.; Walker, Z.; Marek, K.; et al. Individual-Reader Diagnostic Performance and Between-Reader Agreement in Assessment of Subjects with Parkinsonian Syndrome or Dementia Using 123I-Ioflupane Injection (DaTscan) Imaging. J. Nucl. Med. 2014, 55, 1288–1296.

- Van Laere, K.; Everaert, L.; Annemans, L.; Gonce, M.; Vandenberghe, W.; Vander Borght, T. The cost effectiveness of 123I-FP-CIT SPECT imaging in patients with an uncertain clinical diagnosis of parkinsonism. Eur. J. Nucl. Med. Mol. Imaging 2008, 35, 1367–1376.

- Morbelli, S.; Esposito, G.; Arbizu, J.; Barthel, H.; Boellaard, R.; Bohnen, N.I.; Brooks, D.J.; Darcourt, J.; Dickson, J.C.; Douglas, D.; et al. EANM practice guideline/SNMMI procedure standard for dopaminergic imaging in Parkinsonian syndromes 1.0. Eur. J. Nucl. Med. Mol. Imaging 2020, 47, 1885–1912.

- O’Brien, J.T.; Oertel, W.H.; McKeith, I.G.; Grosset, D.G.; Walker, Z.; Tatsch, K.; Tolosa, E.; Sherwin, P.F.; Grachev, I.D. Is ioflupane I123 injection diagnostically effective in patients with movement disorders and dementia? Pooled analysis of four clinical trials. BMJ Open 2014, 4, e005122.

- Isaacson, J.R.; Brillman, S.; Chhabria, N.; Isaacson, S.H. Impact of DaTscan Imaging on Clinical Decision Making in Clinically Uncertain Parkinson’s Disease. J. Park. Dis. 2021, 11, 885–889.

- Visvikis, D.; Lambin, P.; Mauridsen, K.B.; Hustinx, R.; Lassmann, M.; Rischpler, C.; Shi, K.; Pruim, J. Application of artificial intelligence in nuclear medicine and molecular imaging: A review of current status and future perspectives for clinical translation. Eur. J. Nucl. Med. Mol. Imaging 2022, 49, 4452–4463.

- Prashanth, R.; Dutta Roy, S.; Mandal, P.K.; Ghosh, S. High-Accuracy Detection of Early Parkinson’s Disease through Multimodal Features and Machine Learning. Int. J. Med. Inform. 2016, 90, 13–21.

- Ortiz, A.; Munilla, J.; Martínez-Ibañez, M.; Górriz, J.M.; Ramírez, J.; Salas-Gonzalez, D. Parkinson’s Disease Detection Using Isosurfaces-Based Features and Convolutional Neural Networks. Front. Neuroinform. 2019, 13, 48.

- Chien, C.Y.; Hsu, S.W.; Lee, T.L.; Sung, P.S.; Lin, C.C. Using Artificial Neural Network to Discriminate Parkinson’s Disease from Other Parkinsonisms by Focusing on Putamen of Dopamine Transporter SPECT Images. Biomedicines 2021, 9, 12.

- Magesh, P.R.; Myloth, R.D.; Tom, R.J. An Explainable Machine Learning Model for Early Detection of Parkinson’s Disease using LIME on DaTSCAN Imagery. Comput. Biol. Med. 2020, 126, 104041.

- Hathaliya, J.; Parekh, R.; Patel, N.; Gupta, R.; Tanwar, S.; Alqahtani, F.; Elghatwary, M.; Ivanov, O.; Raboaca, M.S.; Neagu, B.-C. Convolutional Neural Network-Based Parkinson Disease Classification Using SPECT Imaging Data. Mathematics 2022, 10, 2566.

- Thakur, M.; Kuresan, H.; Dhanalakshmi, S.; Lai, K.W.; Wu, X. Soft Attention Based DenseNet Model for Parkinson’s Disease Classification Using SPECT Images. Front. Aging Neurosci. 2022, 14, 908143.

- Kurmi, A.; Biswas, S.; Sen, S.; Sinitca, A.; Kaplun, D.; Sarkar, R. An Ensemble of CNN Models for Parkinson’s Disease Detection Using DaTscan Images. Diagnostics 2022, 12, 1173.

- Buddenkotte, T.; Apostolova, I.; Opfer, R.; Krüger, J.; Klutmann, S.; Buchert, R. Automated Identification of Uncertain Cases in Deep Learning-Based Classification of Dopamine Transporter SPECT to Improve Clinical Utility and Acceptance. Eur. J. Nucl. Med. Mol. Imaging 2024, 51, 1333–1344.

- Yoon, H.; Kang, D.-Y.; Kim, S. Enhancement and Evaluation for Deep Learning-Based Classification of Volumetric Neuroimaging with 3D-to-2D Knowledge Distillation. Sci. Rep. 2024, 14, 29611.

- Nazari, M.; Kluge, A.; Apostolova, I.; Klutmann, S.; Kimiaei, S.; Schroeder, M.; Buchert, R. Explainable AI to improve acceptance of convolutional neural networks for automatic classification of dopamine transporter SPECT in the diagnosis of clinically uncertain parkinsonian syndromes. Eur. J. Nucl. Med. Mol. Imaging 2022, 49, 1176–1186.

- Olsen, B.; Peck, D.; Voslar, A. Comparison of spatial resolution and sensitivity of fan beam and parallel hole collimators in brain imaging. J. Nucl. Med. 2016, 57, 2831.

- van Dyck, C.H.; Seibyl, J.P.; Malison, R.T.; Laruelle, M.; Zoghbi, S.S.; Baldwin, R.M.; Innis, R.B. Age-related decline in dopamine transporters: Analysis of striatal subregions, nonlinear effects, and hemispheric asymmetries. Am. J. Geriatr. Psychiatry 2002, 10, 36–43.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015.

- BCEWithLogitsLoss - PyTorch 2.3 Documentation. Available online: https://pytorch.org/docs/stable/generated/torch.nn.BCEWithLogitsLoss.html (accessed on 10 October 2024).

- Saahil, A.; Smitha, R. Significance of Epochs on Training a Neural Network. Int. J. Sci. Technol. Res. 2020, 9, 485–488.

- Power, M.; Fell, G.; Wright, M. Principles for high-quality, high-value testing. BMJ Evid-Based Med. 2013, 18, 5–10.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Dobbin, K.K.; Simon, R.M. Optimally splitting cases for training and testing high dimensional classifiers. BMC Med. Genom. 2011, 4, 31.

- Parikh, R.; Mathai, A.; Parikh, S.; Chandra Sekhar, G.; Thomas, R. Understanding and using sensitivity, specificity and predictive values. Indian J. Ophthalmol. 2008, 56, 45–50.

- Buddenkotte, T.; Buchert, R. Unrealistic Data Augmentation Improves the Robustness of Deep Learning-Based Classification of Dopamine Transporter SPECT Against Variability Between Sites and Between Cameras. J. Nucl. Med. 2024, 65, 1463–1466.

- Mumuni, A.; Mumuni, F. Data augmentation: A comprehensive survey of modern approaches. Array 2022, 16, 100258.

- Saboury, B.; Bradshaw, T.; Boellaard, R.; Buvat, I.; Dutta, J.; Hatt, M.; Jha, A.K.; Li, Q.; Liu, C.; McMeekin, H.; et al. Artificial Intelligence in Nuclear Medicine: Opportunities, Challenges, and Responsibilities Toward a Trustworthy Ecosystem. J. Nucl. Med. 2023, 64, 188–196.

- Benamer, T.S.; Patterson, J.; Grosset, D.G. Accurate differentiation of parkinsonism and essential tremor using visual assessment of [123I]-FP-CIT SPECT imaging: The [123I]-FP-CIT study group. Mov. Disord. 2000, 15, 503–510.

- Booij, J.; Dubroff, J.; Pryma, D.; Yu, J.Q.; Agarwal, R.; Lakhani, P.; Kuo, P.H. Diagnostic Performance of the Visual Reading of 123I-Ioflupane SPECT Images With or Without Quantification in Patients with Movement Disorders or Dementia. J. Nucl. Med. 2017, 58, 1821–1826.

- Şengöz, N.; Yiğit, T.; Özmen, Ö.; Isık, A.H. Importance of Preprocessing in Histopathology Image Classification Using Deep Convolutional Neural Network. Adv. Artif. Intell. Res. 2022, 2, 1–6.

- Missir, E.; Begley, P. Quantitative [123]I-Ioflupane DaTSCAN single-photon computed tomography-computed tomography in Parkinsonism. Nucl. Med. Commun. 2023, 44, 843–853.

- Chen, Y.; Goorden, M.; Beekman, F.J. Convolutional neural network based attenuation correction for 123I-FP-CIT SPECT with focused striatum imaging. Phys. Med. Biol. 2021, 66, 195007.

- Massari, R.; Mok, G.S.P. New trends in single photon emission computed tomography (SPECT). Front. Med. 2023, 10, 1349877.

- Adams, M.P.; Rahmim, A.; Tang, J. Improved motor outcome prediction in Parkinson’s disease applying deep learning to DaTscan SPECT images. Comput. Biol. Med. 2021, 132, 104312.

| Study (Year) | Imaging Modality (Projections) | AI Model Type | Input Features | Output Task | Performance |
|---|---|---|---|---|---|
| Prashanth et al. (2016) [8] | DaT-SPECT (full 3D volume) | SVM (support vector machine) | Parkinson’s Progression Markers Initiative (PPMI) database: striatal shape and surface features | Normal vs. PD | Accuracy 96.1%, Se 95.7%, Sp 77.3% |
| Ortiz et al. (2019) [9] | DaT-SPECT (3D isosurfaces) | CNN (AlexNet/LeNet) | Isosurface-derived features | Normal vs. PD | Accuracy 95.1%, Se 95.5%, Sp 94.8% |
| Chien et al. (2020) [10] | DaT-SPECT (full 3D volume) | ANN (transfer learning) | Segmented putamen ROI | PD vs. other Parkinsonisms | Accuracy 86%, Se 81.8%, Sp 88.6% |
| Magesh et al. (2020) [11] | DaT-SPECT (2D slices from all projections) | CNN (VGG16 + LIME) | Striatal ROI in slices | Normal vs. PD | Accuracy 95.2%, Se 97.5%, Sp 90.9% |
| Hathaliya et al. (2022) [12] | DaT-SPECT (full 3D volume) | CNN (custom) | Parkinson’s Progression Markers Initiative (PPMI) database: striatal ROI from slices | Normal vs. PD | Accuracy 88.9% |
| Thakur et al. (2022) [13] | DaT-SPECT (augmented slices from 3D volumes) | CNN (DenseNet-121) | Full slices with attention | Normal vs. PD | Accuracy 99.2%, Se 99.2%, Sp 99.4% |
| Kurmi et al. (2022) [14] | DaT-SPECT (full 3D volume) | Ensemble of 4 CNNs | Slice inputs | Normal vs. PD | Accuracy 98.4%, Se 98.8%, Sp 97.7% |
| Buddenkotte et al. (2024) [15] | DaT-SPECT (full 3D volume) | Ensemble of 5 ResNet-style CNNs + Uncertainty-Detection Module | Full pre-processed SPECT aggregated to 12 mm axial slabs | Normal vs. PD | Accuracy 98.0% |
| Yoon et al. (2024) [16] | DaT-SPECT and 18F-AV133 PET (all projections) | 3D-to-2D Knowledge-Distillation framework (from Teacher 3D-CNN to Student 2D-CNN) | Full 3D; stacked maximum-intensity-projection and representative 2D slices | Normal vs. PD | Accuracy 98.3% |

| | Negative DaT-SPECT: Normal | Positive DaT-SPECT: Abnormal | Total |
|---|---|---|---|
| Training set | Score 0: 359 | Score 1: 9; Score 2: 9; Score 3: 241 | 618 |
| Validation set | Score 0: 157 | Score 1: 4; Score 2: 3; Score 3: 100 | 264 |
| Testing set | Score 0: 50 | Score 1: 0; Score 2: 0; Score 3: 50 | 100 |
| Total | 566 | 416 | 982 |
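The table above implies a binarization of the four-level visual score: score 0 is counted as a negative exam and scores 1 to 3 as positive. The short sketch below applies that rule, which is inferred from the column layout rather than stated explicitly here, and checks that the per-score counts add up to the reported totals. The names `binarize` and `summarize` are hypothetical helpers.

```python
from collections import Counter

# Per-score counts for each subset, copied from the table above.
score_counts = {
    "training": {0: 359, 1: 9, 2: 9, 3: 241},
    "validation": {0: 157, 1: 4, 2: 3, 3: 100},
    "testing": {0: 50, 1: 0, 2: 0, 3: 50},
}


def binarize(score):
    """Map the 4-level score to a binary label (assumed rule: only 0 is negative)."""
    return 0 if score == 0 else 1


def summarize(counts_by_score):
    """Aggregate per-score counts into negative/positive totals for one subset."""
    tally = Counter()
    for score, count in counts_by_score.items():
        tally[binarize(score)] += count
    total = tally[0] + tally[1]
    return {
        "negative": tally[0],
        "positive": tally[1],
        "total": total,
        "positive_fraction": tally[1] / total,
    }


summary = {name: summarize(counts) for name, counts in score_counts.items()}
grand_total = sum(s["total"] for s in summary.values())

for name, s in summary.items():
    print(f"{name}: {s['negative']} negative, {s['positive']} positive "
          f"({100 * s['positive_fraction']:.1f}% positive) of {s['total']}")
print(f"all subsets: {grand_total} exams")
```

The check also makes the class balance visible: the testing set is balanced by construction (50 negative, 50 positive), whereas the training and validation sets contain more negative than positive exams.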

| | Actual Negative | Actual Positive | Total | |
|---|---|---|---|---|
| Predicted Negative | 26 (TN) | 1 (FN) | 27 | NPV = 96.3%, 95% CI [81.7–99.3] |
| Predicted Positive | 24 (FP) | 49 (TP) | 73 | PPV = 67.1%, 95% CI [55.7–76.8] |
| Total | 50 | 50 | 100 | |
| | Sp = 52.0%, 95% CI [38.5–65.2] | Se = 98.0%, 95% CI [89.5–99.6] | | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Othmani, W.; Coste, A.; Papathanassiou, D.; Morland, D. Prediction of 123I-FP-CIT SPECT Results from First Acquired Projections Using Artificial Intelligence. Diagnostics 2025, 15, 1407. https://doi.org/10.3390/diagnostics15111407
