Explainable Artificial Intelligence in Radiological Cardiovascular Imaging—A Systematic Review

Abstract

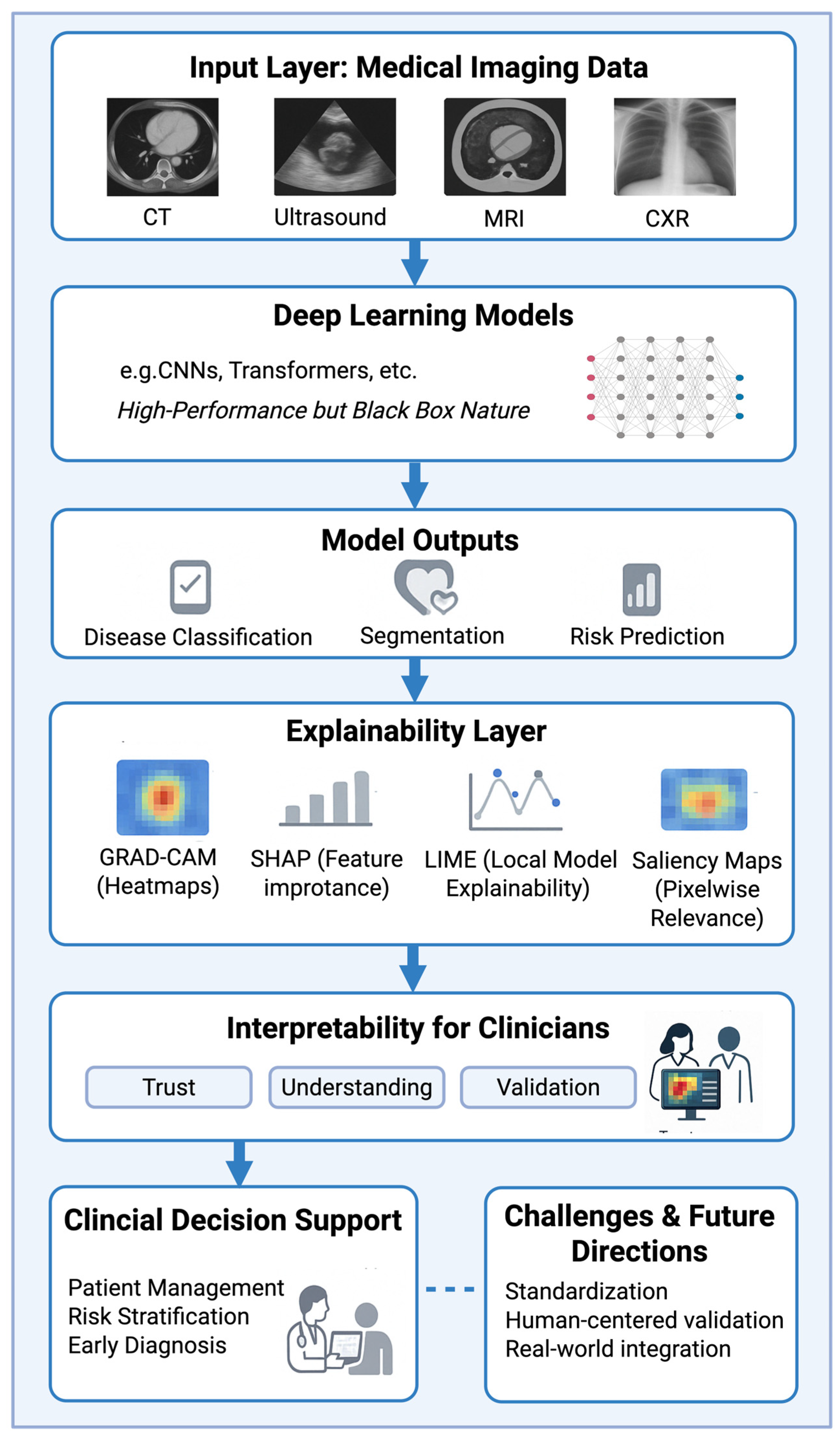

1. Introduction

2. Methods

2.1. Literature Search Strategy

2.2. Study Selection

3. Results

3.1. Overview of Included Studies

3.2. Computed Tomography

| Author | Year | XAI Method(s) | Imaging Modality | Aim of the Study | Ref. |
|---|---|---|---|---|---|
| Gerbasi et al. | 2024 | Deep SHAP | CCTA | To develop a fully automated, visually explainable deep learning pipeline using a Multi-Axis Vision Transformer for CAD-RADS scoring of CCTA scans, aiming to classify patients based on the need for further investigations and severity of coronary stenosis. | [12] |
| Sakai et al. | 2024 | SHAP | Contrast-enhanced CT angiography | To classify culprit versus non-culprit calcified carotid plaques in embolic stroke of undetermined source using an explainable ML model with clinically interpretable imaging features. | [15] |
| Fu et al. | 2024 | SHAP | Non-contrast abdominal CT | To predict early outcomes after percutaneous transluminal renal angioplasty in patients with severe atherosclerotic renal artery stenosis using CT-based radiomics. | [20] |
| Lo Iacono et al. | 2023 | SHAP | Contrast-enhanced cardiac CT | To differentiate cardiac amyloidosis from aortic stenosis using radiomic features and machine learning. | [18] |
| Penso et al. | 2023 | Grad-CAM | CCTA | To classify coronary stenosis from MPR images using a ConvMixer-based token-mixer architecture according to CAD-RADS scoring. | [13] |
| Lopes et al. | 2022 | SHAP | CCTA | To use machine learning models to predict insufficient contrast enhancement in coronary CT angiography and interpret predictive features using SHAP. | [14] |
| Candemir et al. | 2020 | Grad-CAM | CCTA | Automated detection and weakly supervised localization of coronary artery atherosclerosis using a 3D-CNN. | [16] |
| Wang et al. | 2021 | Grad-CAM | Contrast-enhanced cardiac CT | To develop an explainable AI model for recognizing Tetralogy of Fallot in cardiovascular CT images. | [17] |
| Huo et al. | 2019 | Grad-CAM | Non-contrast chest CT | To detect coronary artery calcium using 3D attention-based deep learning with weakly supervised learning and to visualize predictions using 3D Grad-CAM. | [19] |

3.3. Magnetic Resonance Imaging

| Author | Year | XAI Method(s) | Imaging Modality | Aim of the Study | Ref. |
|---|---|---|---|---|---|
| Zhang et al. | 2025 | SHAP | Cardiac MRI | To predict microvascular obstruction using a machine learning model based on angio-based microvascular resistance and clinical data during PPCI in STEMI patients and to interpret the model using SHAP. | [22] |
| Wang et al. | 2024 | Grad-CAM, SHAP | Cardiac MRI | Development and evaluation of AI models for screening and diagnosis of multiple cardiovascular diseases using cardiac MRI, with explainability analysis. | [25] |
| Sufian et al. | 2024 | LIME, SHAP | Cardiovascular MRI | To address algorithmic bias in AI-driven cardiovascular imaging using fairness-aware machine learning methods and explainability techniques such as SHAP and LIME. | [26] |
| Paciorek et al. | 2024 | Grad-CAM | Cardiac MRI | To develop and compare deep learning models using DenseNet-161 for automated assessment of cardiac pathologies on T1-mapping and LGE PSIR cardiac MRI sequences. | [21] |
| Cai et al. | 2023 | SHAP | Brain MRI and carotid ultrasound | To predict cerebral perfusion status based on internal carotid artery blood flow using machine learning models and explain predictions with SHAP. | [24] |
| Mouches et al. | 2022 | Saliency maps (SmoothGrad) | Brain MRI | To predict biological brain age using multimodal MRI data and identify predictive brain and vascular regions. | [23] |

3.4. Echocardiography and Other Ultrasound Examinations

| Author | Year | XAI Method(s) | Imaging Modality | Aim of the Study | Ref. |
|---|---|---|---|---|---|
| Day et al. | 2024 | Grad-CAM | Fetal Cardiac Ultrasound | To evaluate whether AI advice improves the diagnostic performance of clinicians in detecting fetal atrioventricular septal defect (AVSD) and assess the effect of displaying additional AI model information (confidence and Grad-CAM) on collaborative performance. | [33] |
| Ragnarsdottir et al. | 2024 | Grad-CAM | Echocardiography | Automated and explainable prediction of pulmonary hypertension and classification of its severity in newborns. | [30] |
| Holste et al. | 2023 | Grad-CAM, Saliency Maps | Echocardiography | To develop and validate an AI model for severe aortic stenosis detection using single-view transthoracic echocardiography without Doppler imaging. | [27] |
| Chao et al. | 2023 | Grad-CAM | Echocardiography | Deep learning model (ResNet50) to differentiate constrictive pericarditis from cardiac amyloidosis based on apical four-chamber echocardiographic views. | [34] |
| Sakai et al. | 2022 | Graph Chart Diagram | Fetal Cardiac Ultrasound | To improve fetal cardiac ultrasound screening using a novel interpretable deep learning representation to enhance examiner performance. | [32] |
| Wang et al. | 2022 | Grad-CAM | 2D Echocardiography | To develop a CNN-based cardiac segmentation method incorporating coordinate attention and domain knowledge to improve segmentation accuracy and interpretability. | [29] |
| Vafaeezadeh et al. | 2022 | Grad-CAM | Transthoracic Echocardiography | To automatically classify mitral valve morphologies using explainable deep learning based on Carpentier’s functional classification. | [28] |
| Nurmaini et al. | 2022 | Grad-CAM, Guided Backpropagation | Prenatal Fetal Ultrasound | To improve prenatal screening for congenital heart disease using deep learning and explain classification via visualization methods. | [31] |
| Lee et al. | 2022 | CAM | 2D Echocardiography | To distinguish incomplete Kawasaki disease from pneumonia in children using echocardiographic imaging and explainable deep learning. | [35] |

3.5. Chest X-Ray (CXR)

| Author | Year | XAI Method(s) | Imaging Modality | Aim of the Study | Ref. |
|---|---|---|---|---|---|
| Bhave et al. | 2024 | Saliency Mapping, CAM | CXR | To develop and evaluate a deep learning model to detect structural heart abnormalities (SLVH and DLV) from chest X-rays. | [36] |
| Ueda et al. | 2023 | Grad-CAM | CXR | Simultaneous classification of cardiac function and valvular diseases from chest X-rays. | [38] |
| Matsumoto et al. | 2022 | Grad-CAM, Saliency Maps | CXR | To detect atrial fibrillation using a deep learning model trained on chest X-rays and to visualize the regions of interest using saliency maps. | [37] |
| Kusunose et al. | 2022 | Grad-CAM | CXR | To predict exercise-induced pulmonary hypertension in patients with scleroderma using DL on CXR. | [39] |
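
Grad-CAM appears in all four tables above. As a rough illustration of what the method computes, the following is a minimal NumPy sketch of the standard Grad-CAM combination step: each feature map of a convolutional layer is weighted by the spatial average of its gradient, the weighted maps are summed, and negative values are clipped. The function name `grad_cam`, its arguments, and the toy arrays are assumptions made for illustration only; they are not taken from any of the reviewed studies, and in practice the activations and gradients would come from a trained network via a deep learning framework.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Combine feature maps into a Grad-CAM heatmap.

    activations: feature maps A_k of one conv layer, shape (K, H, W)
    gradients:   d(class score)/dA_k, same shape as activations
    Returns a (H, W) heatmap scaled to [0, 1] (all zeros if nothing is positive).
    """
    if activations.ndim != 3 or activations.shape != gradients.shape:
        raise ValueError("expected two arrays of identical shape (K, H, W)")

    # Channel weights: global average of the gradients over the spatial axes.
    weights = gradients.mean(axis=(1, 2))              # shape (K,)

    # Weighted sum of the feature maps over the channel axis.
    cam = np.tensordot(weights, activations, axes=1)   # shape (H, W)

    # ReLU keeps only regions that support the class score.
    cam = np.maximum(cam, 0.0)

    # Scale to [0, 1] for display; skip if the map is entirely zero.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy input (hypothetical): two 2x2 feature maps with hand-picked gradients.
acts = np.zeros((2, 2, 2))
acts[0, 0, 0] = 1.0   # channel 0 fires in the top-left corner
acts[1, 1, 1] = 1.0   # channel 1 fires in the bottom-right corner
grads = np.stack([np.full((2, 2), 2.0), np.full((2, 2), -1.0)])

heatmap = grad_cam(acts, grads)
print(heatmap)
```

In this toy case the channel with a positive average gradient dominates, so only the top-left location is highlighted; the location driven by the negatively weighted channel is removed by the ReLU.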

4. Discussion

5. Conclusions and Perspective

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chong, B.; Jayabaskaran, J.; Jauhari, S.M.; Chan, S.P.; Goh, R.; Kueh, M.T.W.; Li, H.; Chin, Y.H.; Kong, G.; Anand, V.V.; et al. Global Burden of Cardiovascular Diseases: Projections from 2025 to 2050. Eur. J. Prev. Cardiol. 2024.

2. Salih, A.M.; Galazzo, I.B.; Gkontra, P.; Rauseo, E.; Lee, A.M.; Lekadir, K.; Radeva, P.; Petersen, S.E.; Menegaz, G. A Review of Evaluation Approaches for Explainable AI with Applications in Cardiology. Artif. Intell. Rev. 2024, 57, 240.

3. Borys, K.; Schmitt, Y.A.; Nauta, M.; Seifert, C.; Krämer, N.; Friedrich, C.M.; Nensa, F. Explainable AI in Medical Imaging: An Overview for Clinical Practitioners—Beyond Saliency-Based XAI Approaches. Eur. J. Radiol. 2023, 162, 110786.

4. Gala, D.; Behl, H.; Shah, M.; Makaryus, A.N. The Role of Artificial Intelligence in Improving Patient Outcomes and Future of Healthcare Delivery in Cardiology: A Narrative Review of the Literature. Healthcare 2024, 12, 481.

5. Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy 2020, 23, 18.

6. Szabo, L.; Raisi-Estabragh, Z.; Salih, A.; McCracken, C.; Pujadas, E.R.; Gkontra, P.; Kiss, M.; Maurovich-Horvath, P.; Vago, H.; Merkely, B.; et al. Clinician’s Guide to Trustworthy and Responsible Artificial Intelligence in Cardiovascular Imaging. Front. Cardiovasc. Med. 2022, 9, 1016032.

7. Marey, A.; Arjmand, P.; Alerab, A.D.S.; Eslami, M.J.; Saad, A.M.; Sanchez, N.; Umair, M. Explainability, Transparency and Black Box Challenges of AI in Radiology: Impact on Patient Care in Cardiovascular Radiology. Egypt. J. Radiol. Nucl. Med. 2024, 55, 183.

8. Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting Black-Box Models: A Review on Explainable Artificial Intelligence. Cogn. Comput. 2024, 16, 45–74.

9. Muhammad, D.; Bendechache, M. Unveiling the Black Box: A Systematic Review of Explainable Artificial Intelligence in Medical Image Analysis. Comput. Struct. Biotechnol. J. 2024, 24, 542–560.

10. Salahuddin, Z.; Woodruff, H.C.; Chatterjee, A.; Lambin, P. Transparency of Deep Neural Networks for Medical Image Analysis: A Review of Interpretability Methods. Comput. Biol. Med. 2022, 140, 105111.

11. Hassan, S.U.; Abdulkadir, S.J.; Zahid, M.S.M.; Al-Selwi, S.M. Local Interpretable Model-Agnostic Explanation Approach for Medical Imaging Analysis: A Systematic Literature Review. Comput. Biol. Med. 2024, 185, 109569.

12. Gerbasi, A.; Dagliati, A.; Albi, G.; Chiesa, M.; Andreini, D.; Baggiano, A.; Mushtaq, S.; Pontone, G.; Bellazzi, R.; Colombo, G. CAD-RADS Scoring of Coronary CT Angiography with Multi-Axis Vision Transformer: A Clinically-Inspired Deep Learning Pipeline. Comput. Methods Programs Biomed. 2023, 244, 107989.

13. Penso, M.; Moccia, S.; Caiani, E.G.; Caredda, G.; Lampus, M.L.; Carerj, M.L.; Babbaro, M.; Pepi, M.; Chiesa, M.; Pontone, G. A Token-Mixer Architecture for CAD-RADS Classification of Coronary Stenosis on Multiplanar Reconstruction CT Images. Comput. Biol. Med. 2022, 153, 106484.

14. Lopes, R.R.; van den Boogert, T.P.W.; Lobe, N.H.J.; Verwest, T.A.; Henriques, J.P.S.; Marquering, H.A.; Planken, R.N. Machine Learning-Based Prediction of Insufficient Contrast Enhancement in Coronary Computed Tomography Angiography. Eur. Radiol. 2022, 32, 7136–7145.

15. Sakai, Y.; Kim, J.; Phi, H.Q.; Hu, A.C.; Balali, P.; Guggenberger, K.V.; Woo, J.H.; Bos, D.; Kasner, S.E.; Cucchiara, B.L.; et al. Explainable Machine-Learning Model to Classify Culprit Calcified Carotid Plaque in Embolic Stroke of Undetermined Source. MedRxiv 2024.

16. Candemir, S.; White, R.D.; Demirer, M.; Gupta, V.; Bigelow, M.T.; Prevedello, L.M.; Erdal, B.S. Automated Coronary Artery Atherosclerosis Detection and Weakly Supervised Localization on Coronary CT Angiography with a Deep 3-Dimensional Convolutional Neural Network. Comput. Med. Imaging Graph. 2020, 83, 101721.

17. Wang, S.H.; Wu, K.; Chu, T.; Fernandes, S.L.; Zhou, Q.; Zhang, Y.D.; Sun, J. SOSPCNN: Structurally Optimized Stochastic Pooling Convolutional Neural Network for Tetralogy of Fallot Recognition. Wirel. Commun. Mob. Comput. 2021, 2021, 5792975.

18. Iacono, F.L.; Maragna, R.; Pontone, G.; Corino, V.D.A. A Robust Radiomic-Based Machine Learning Approach to Detect Cardiac Amyloidosis Using Cardiac Computed Tomography. Front. Radiol. 2023, 3, 1193046.

19. Huo, Y.; Terry, J.G.; Wang, J.; Nath, V.; Bermudez, C.; Bao, S.; Parvathaneni, P.; Carr, J.J.; Landman, B.A. Coronary Calcium Detection Using 3D Attention Identical Dual Deep Network Based on Weakly Supervised Learning. In Proceedings of the SPIE 10949, Medical Imaging 2019: Image Processing, San Diego, CA, USA, 16–21 February 2019; p. 42.

20. Fu, J.; Fang, M.; Lin, Z.; Qiu, J.; Yang, M.; Tian, J.; Dong, D.; Zou, Y. CT-Based Radiomics: Predicting Early Outcomes after Percutaneous Transluminal Renal Angioplasty in Patients with Severe Atherosclerotic Renal Artery Stenosis. Vis. Comput. Ind. Biomed. Art 2024, 7, 1.

21. Paciorek, A.M.; von Schacky, C.E.; Foreman, S.C.; Gassert, F.G.; Gassert, F.T.; Kirschke, J.S.; Laugwitz, K.-L.; Geith, T.; Hadamitzky, M.; Nadjiri, J. Automated Assessment of Cardiac Pathologies on Cardiac MRI Using T1-Mapping and Late Gadolinium Phase Sensitive Inversion Recovery Sequences with Deep Learning. BMC Med. Imaging 2024, 24, 43.

22. Zhang, Z.; Dai, Y.; Xue, P.; Bao, X.; Bai, X.; Qiao, S.; Gao, Y.; Guo, X.; Xue, Y.; Dai, Q.; et al. Prediction of Microvascular Obstruction from Angio-Based Microvascular Resistance and Available Clinical Data in Percutaneous Coronary Intervention: An Explainable Machine Learning Model. Sci. Rep. 2025, 15, 3045.

23. Mouches, P.; Wilms, M.; Rajashekar, D.; Langner, S.; Forkert, N.D. Multimodal Biological Brain Age Prediction Using Magnetic Resonance Imaging and Angiography with the Identification of Predictive Regions. Hum. Brain Mapp. 2022, 43, 2554.

24. Cai, L.; Zhao, E.; Niu, H.; Liu, Y.; Zhang, T.; Liu, D.; Zhang, Z.; Li, J.; Qiao, P.; Lv, H.; et al. A Machine Learning Approach to Predict Cerebral Perfusion Status Based on Internal Carotid Artery Blood Flow. Comput. Biol. Med. 2023, 164, 107264.

25. Wang, Y.R.; Yang, K.; Wen, Y.; Wang, P.; Hu, Y.; Lai, Y.; Wang, Y.; Zhao, K.; Tang, S.; Zhang, A.; et al. Screening and Diagnosis of Cardiovascular Disease Using Artificial Intelligence-Enabled Cardiac Magnetic Resonance Imaging. Nat. Med. 2024, 30, 1471–1480.

26. Sufian, A.; Alsadder, L.; Hamzi, W.; Zaman, S.; Sagar, A.S.M.S.; Hamzi, B. Mitigating Algorithmic Bias in AI-Driven Cardiovascular Imaging for Fairer Diagnostics. Diagnostics 2024, 14, 2675.

27. Holste, G.; Oikonomou, E.K.; Mortazavi, B.J.; Coppi, A.; Faridi, K.F.; Miller, E.J.; Forrest, J.K.; McNamara, R.L.; Ohno-Machado, L.; Yuan, N.; et al. Severe Aortic Stenosis Detection by Deep Learning Applied to Echocardiography. Eur. Heart J. 2023, 44, 4592–4604.

28. Vafaeezadeh, M.; Behnam, H.; Hosseinsabet, A.; Gifani, P. Automatic Morphological Classification of Mitral Valve Diseases in Echocardiographic Images Based on Explainable Deep Learning Methods. Int. J. Comput. Assist. Radiol. Surg. 2021, 17, 413–425.

29. Wang, Y.; Chen, W.; Tang, T.; Xie, W.; Jiang, Y.; Zhang, H.; Zhou, X.; Yuan, K. Cardiac Segmentation Method Based on Domain Knowledge. Ultrason. Imaging 2022, 44, 105–117.

30. Ragnarsdottir, H.; Ozkan, E.; Michel, H.; Chin-Cheong, K.; Manduchi, L.; Wellmann, S.; Vogt, J.E. Deep Learning Based Prediction of Pulmonary Hypertension in Newborns Using Echocardiograms. Int. J. Comput. Vis. 2024, 132, 2567–2584.

31. Nurmaini, S.; Partan, R.U.; Bernolian, N.; Sapitri, A.I.; Tutuko, B.; Rachmatullah, M.N.; Darmawahyuni, A.; Firdaus, F.; Mose, J.C. Deep Learning for Improving the Effectiveness of Routine Prenatal Screening for Major Congenital Heart Diseases. J. Clin. Med. 2022, 11, 6454.

32. Sakai, A.; Komatsu, M.; Komatsu, R.; Matsuoka, R.; Yasutomi, S.; Dozen, A.; Shozu, K.; Arakaki, T.; Machino, H.; Asada, K.; et al. Medical Professional Enhancement Using Explainable Artificial Intelligence in Fetal Cardiac Ultrasound Screening. Biomedicines 2022, 10, 551.

33. Day, T.G.; Matthew, J.; Budd, S.F.; Venturini, L.; Wright, R.; Farruggia, A.; Vigneswaran, T.V.; Zidere, V.; Hajnal, J.V.; Razavi, R.; et al. Interaction between Clinicians and Artificial Intelligence to Detect Fetal Atrioventricular Septal Defects on Ultrasound: How Can We Optimize Collaborative Performance? Ultrasound Obstet. Gynecol. 2024, 64, 28–35.

34. Chao, C.-J.; Jeong, J.; Arsanjani, R.; Kim, K.; Tsai, Y.-L.; Yu, W.-C.; Farina, J.M.; Mahmoud, A.K.; Ayoub, C.; Grogan, M.; et al. Echocardiography-Based Deep Learning Model to Differentiate Constrictive Pericarditis and Restrictive Cardiomyopathy. JACC Cardiovasc. Imaging 2023, 17, 349–360.

35. Lee, H.; Eun, Y.; Hwang, J.Y.; Eun, L.Y. Explainable Deep Learning Algorithm for Distinguishing Incomplete Kawasaki Disease by Coronary Artery Lesions on Echocardiographic Imaging. Comput. Methods Programs Biomed. 2022, 223, 106970.

36. Bhave, S.; Rodriguez, V.; Poterucha, T.; Mutasa, S.; Aberle, D.; Capaccione, K.M.; Chen, Y.; Dsouza, B.; Dumeer, S.; Goldstein, J.; et al. Deep Learning to Detect Left Ventricular Structural Abnormalities in Chest X-Rays. Eur. Heart J. 2024, 45, 2002–2012.

37. Matsumoto, T.; Ehara, S.; Walston, S.L.; Mitsuyama, Y.; Miki, Y.; Ueda, D. Artificial Intelligence-Based Detection of Atrial Fibrillation from Chest Radiographs. Eur. Radiol. 2022, 32, 5890–5897.

38. Ueda, D.; Matsumoto, T.; Ehara, S.; Yamamoto, A.; Walston, S.L.; Ito, A.; Shimono, T.; Shiba, M.; Takeshita, T.; Fukuda, D.; et al. Artificial Intelligence-Based Model to Classify Cardiac Functions from Chest Radiographs: A Multi-Institutional, Retrospective Model Development and Validation Study. Lancet Digit. Health 2023, 5, e525–e533.

39. Kusunose, K.; Hirata, Y.; Yamaguchi, N.; Kosaka, Y.; Tsuji, T.; Kotoku, J.; Sata, M. Deep Learning for Detection of Exercise-Induced Pulmonary Hypertension Using Chest X-Ray Images. Front. Cardiovasc. Med. 2022, 9, 891703.

40. Lo, S.H.; Yin, Y. A Novel Interaction-Based Methodology towards Explainable AI with Better Understanding of Pneumonia Chest X-Ray Images. Discov. Artif. Intell. 2021, 1, 16.

41. McNamara, S.L.; Yi, P.H.; Lotter, W. The Clinician-AI Interface: Intended Use and Explainability in FDA-Cleared AI Devices for Medical Image Interpretation. NPJ Digit. Med. 2024, 7, 80.

42. Panigutti, C.; Hamon, R.; Hupont, I.; Llorca, D.F.; Yela, D.F.; Junklewitz, H.; Scalzo, S.; Mazzini, G.; Sanchez, I.; Garrido, J.S.; et al. The Role of Explainable AI in the Context of the AI Act. In ACM International Conference Proceeding Series, Proceedings of the FAccT ′23: 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 1139–1150.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Haupt, M.; Maurer, M.H.; Thomas, R.P. Explainable Artificial Intelligence in Radiological Cardiovascular Imaging—A Systematic Review. Diagnostics 2025, 15, 1399. https://doi.org/10.3390/diagnostics15111399
