A Narrative Review of Artificial Intelligence in MRI-Guided Prostate Cancer Diagnosis: Addressing Key Challenges

Abstract

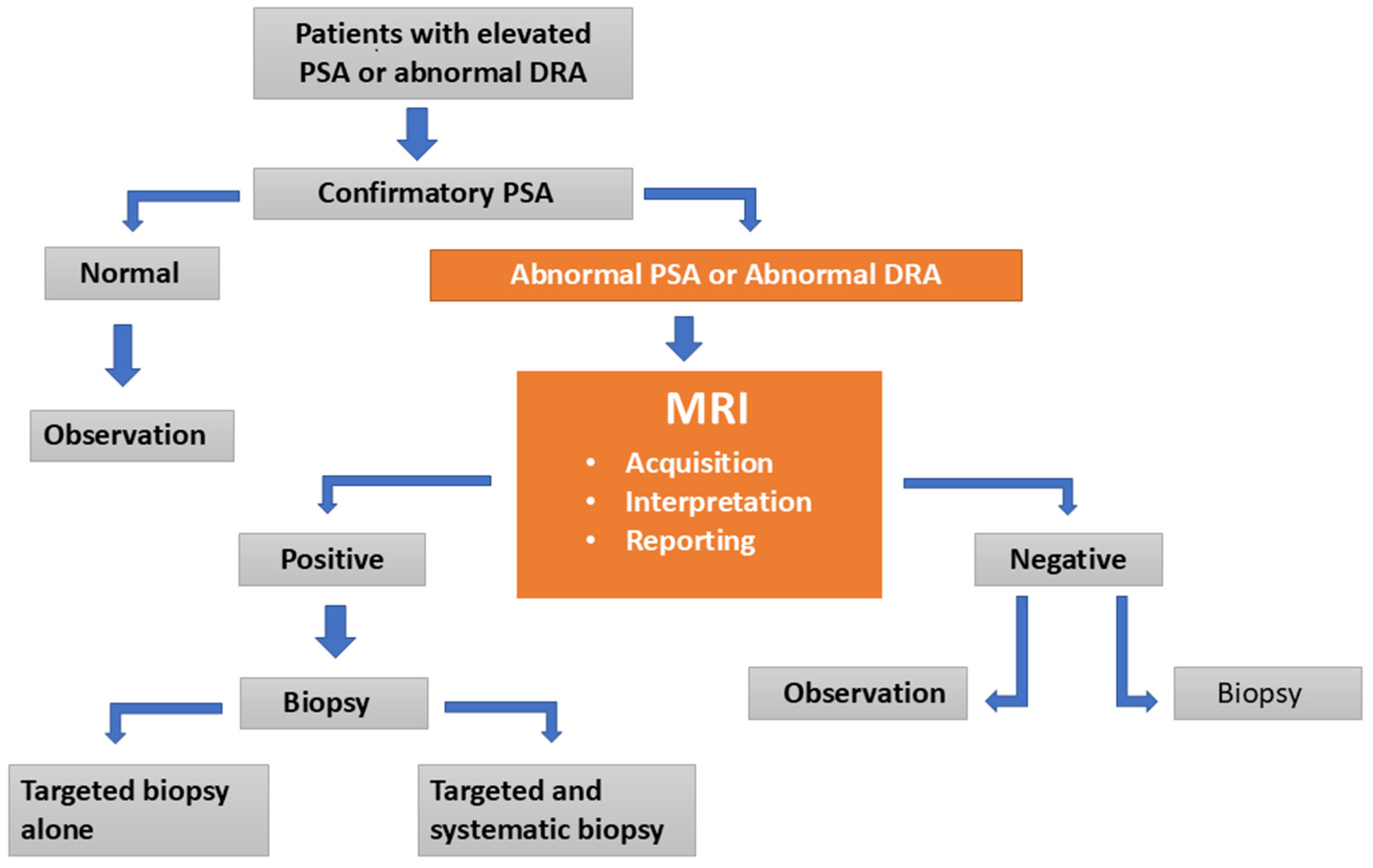

1. Introduction

Literature Search

Inclusion criteria:

- Peer-reviewed full-text articles in English.

- Human studies involving participants diagnosed with, or clinically suspected of having, PCa/csPCa.

- Studies utilizing mpMRI or bi-parametric MRI (bpMRI).

- Studies confirming csPCa through pathological reference standards (biopsy or radical prostatectomy).

- Articles explicitly focused on AI applications in MRI-guided PCa diagnosis, specifically covering: AI-driven enhancements in MRI acquisition (image quality and acquisition time); AI-based assessment and standardization of MRI image quality; AI performance metrics in lesion detection, PI-RADS scoring, diagnostic accuracy, and radiologist comparisons; and AI-assisted reduction in inter-reader variability.

Exclusion criteria:

- Non-human studies (animal models, phantoms).

- Conference abstracts, commentaries, editorials, letters, case reports, or articles lacking primary data.

- Articles without direct clinical relevance or those unavailable in full text.

- Studies not explicitly aligned with the predefined thematic focus of this review.

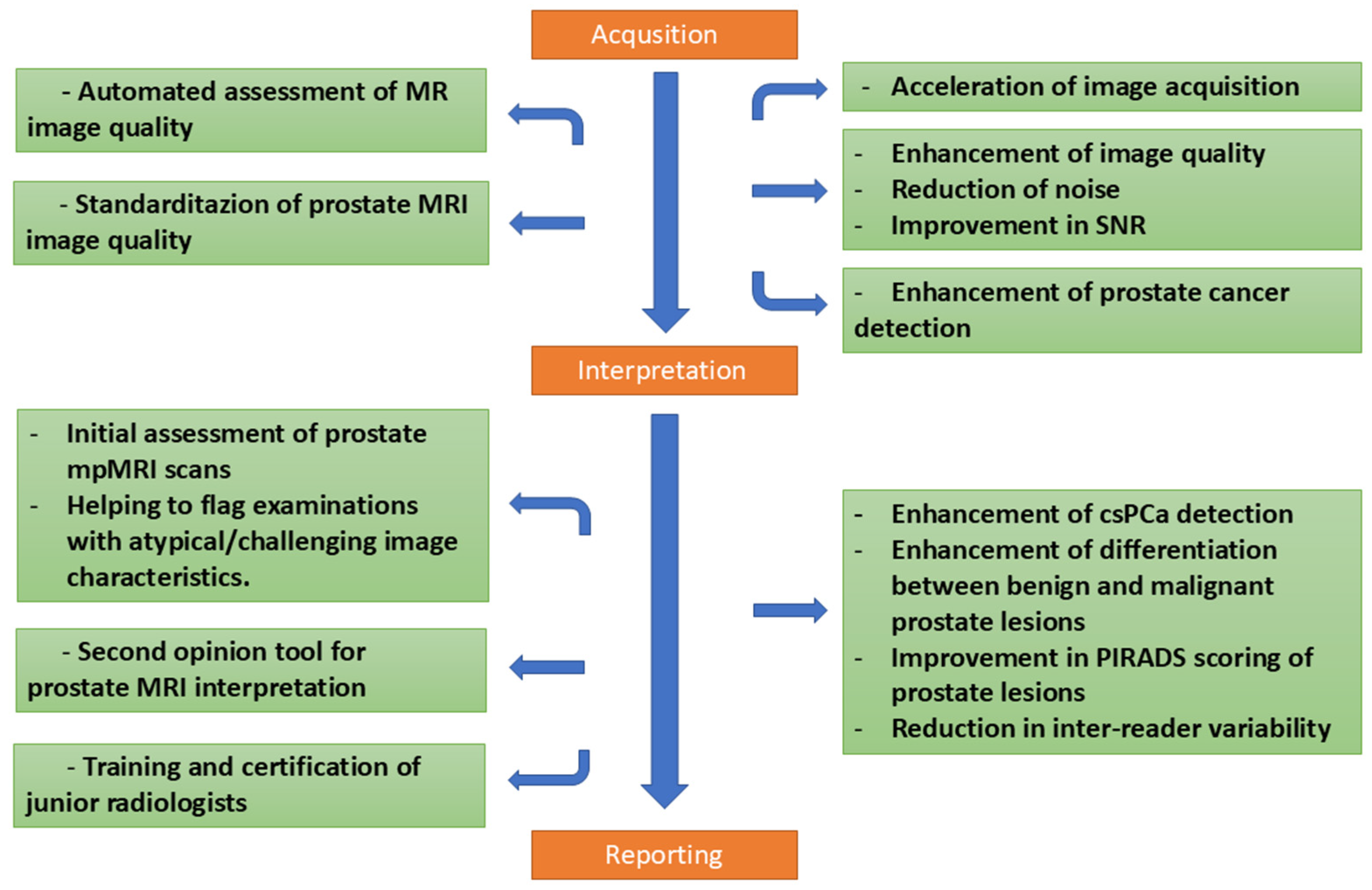

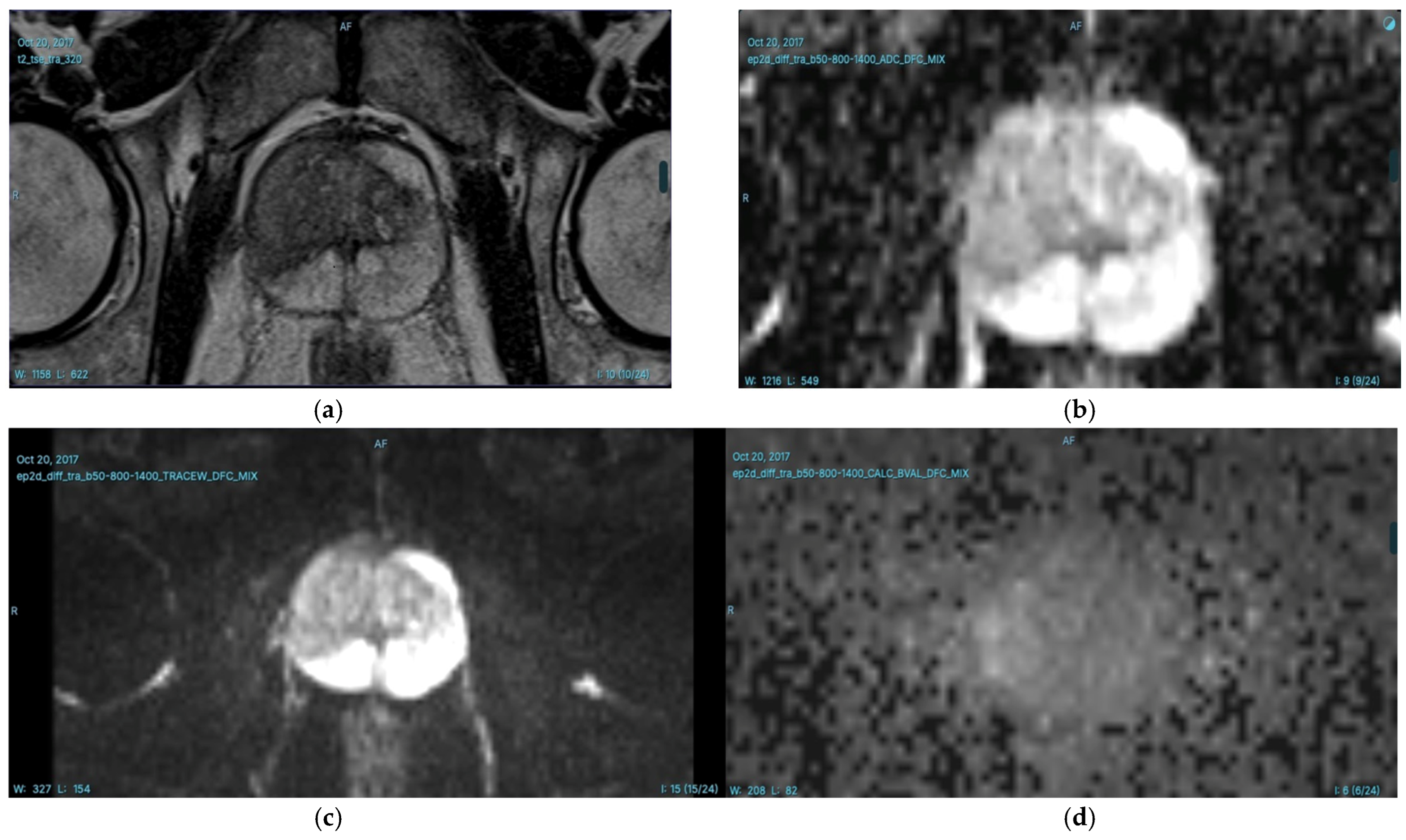

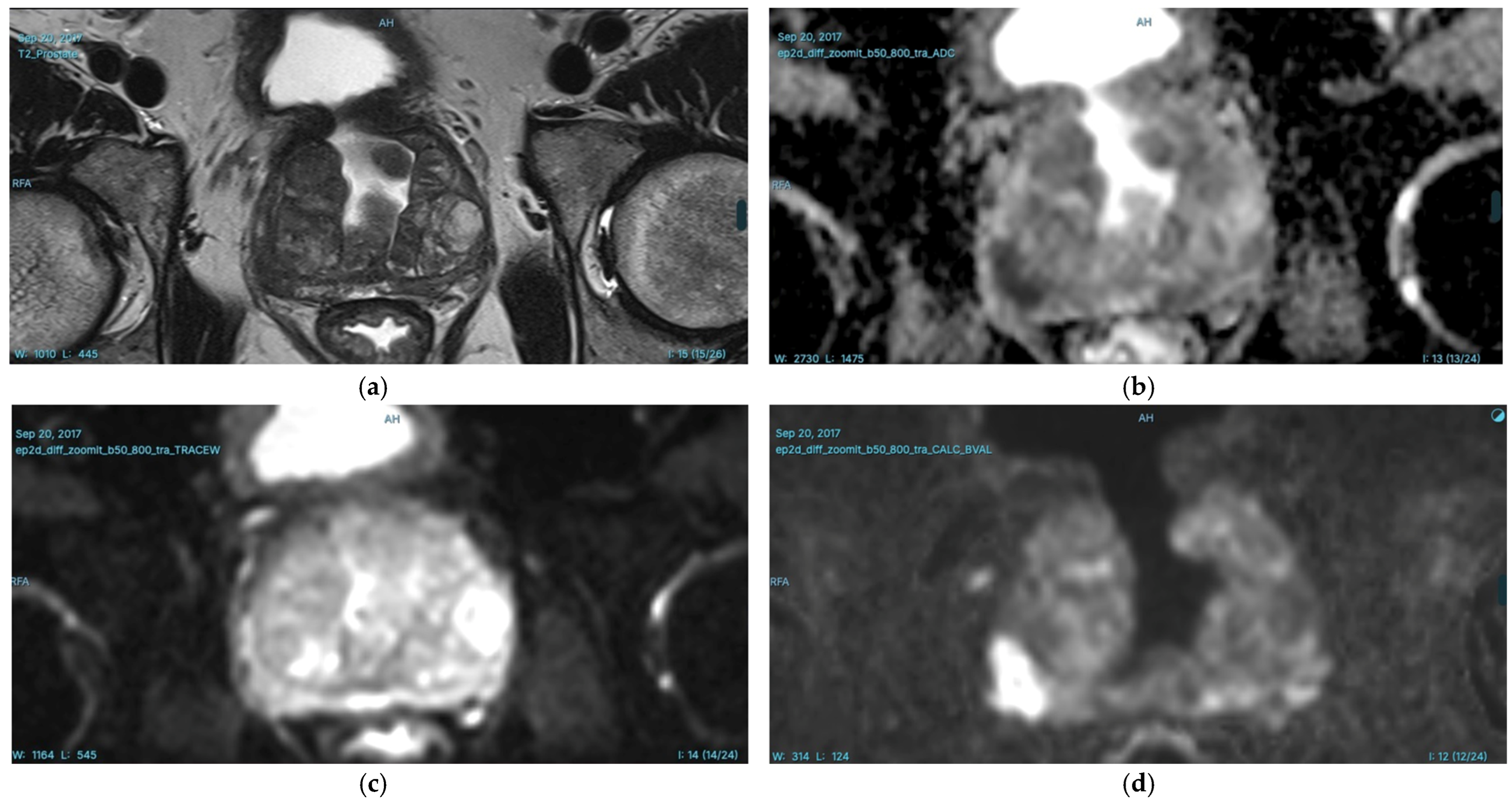

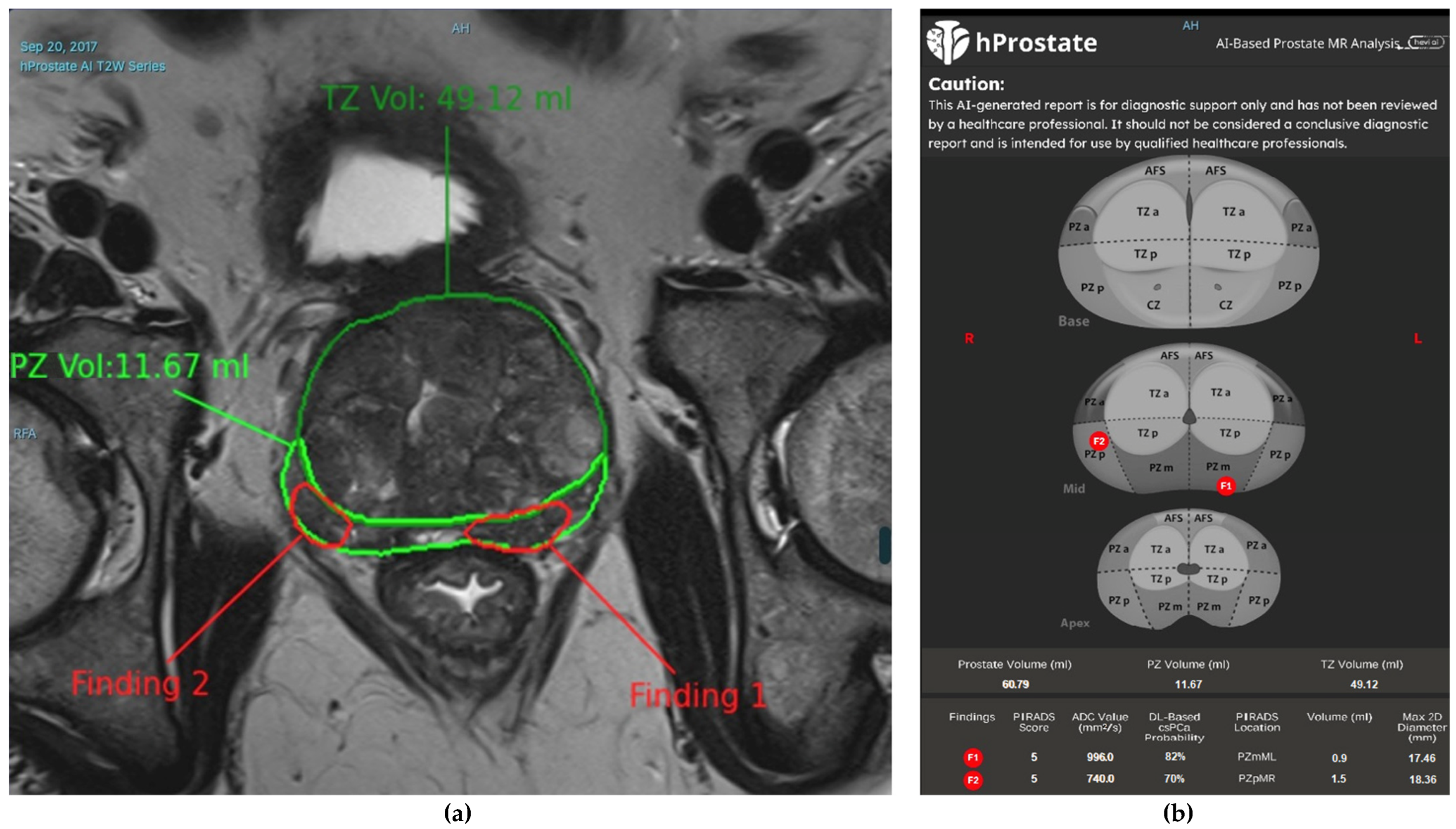

2. AI in Prostate MRI Acquisition and Interpretation

2.1. The Potential of AI in Prostate MRI: Advancements and Opportunities

2.2. AI and Enhancing Image Quality and Reducing Acquisition Time

2.3. AI and Image Quality Assessment

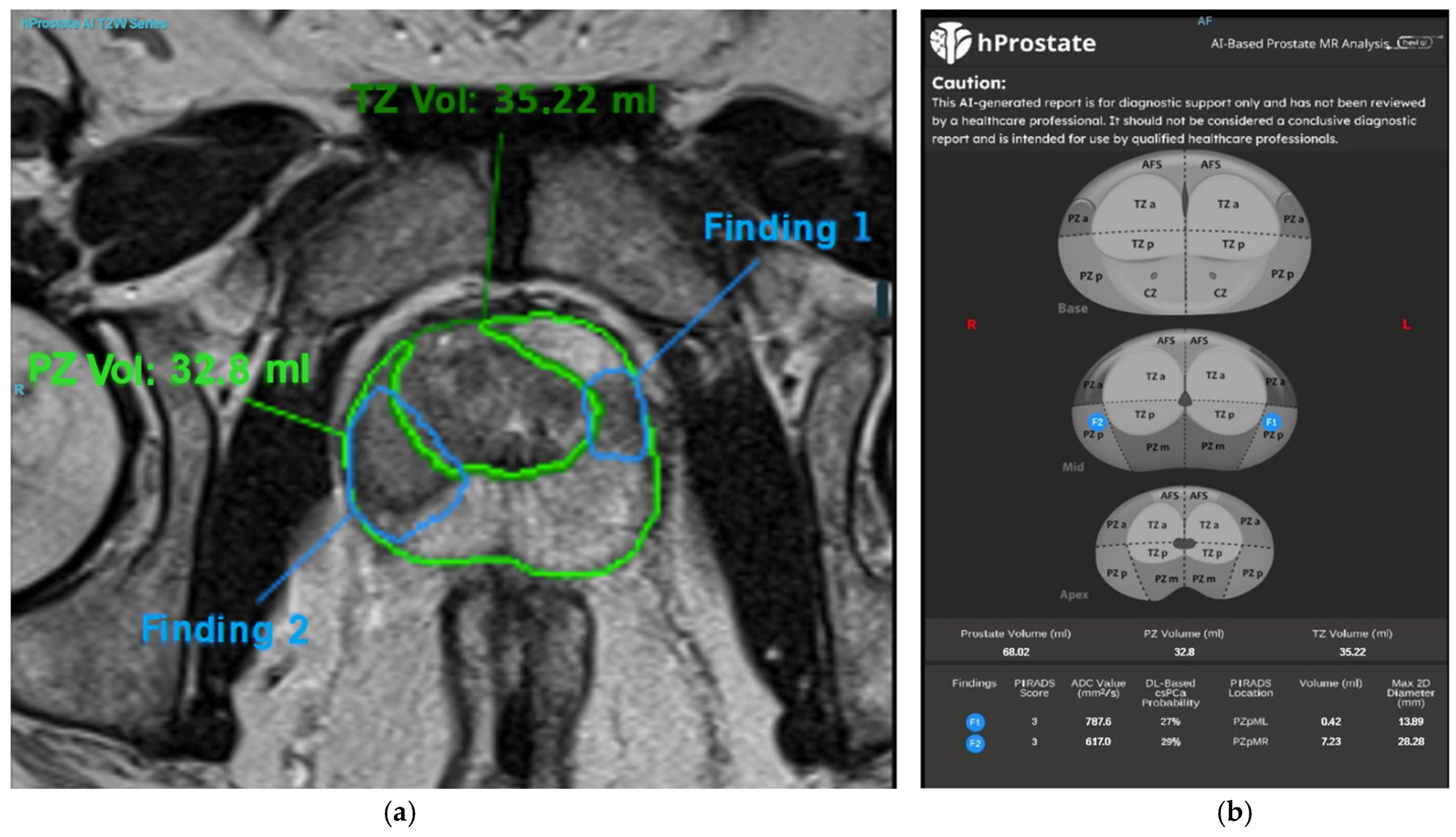

2.4. Prostate MRI Interpretation and the Role of AI

2.5. Approaches to AI-Based Detection of PCa

2.5.1. ML Approaches

2.5.2. DL Approaches

2.6. Performance of AI-Based Detection in PCa

3. Challenges and Future Directions

3.1. Challenges in AI-Based Quality Control of Prostate MRI Acquisition

3.2. Challenges and Standards in AI-Based Prostate MRI Interpretation

4. Discussion

5. Conclusions

5.1. Main Findings

5.2. Limitations and Biases

5.3. Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADC | Apparent diffusion coefficient |
| AI | Artificial intelligence |
| AUA | American Urological Association |
| AUC | Area under the curve |
| AUROC | Area under the receiver operating characteristic curve |
| bpMRI | Bi-parametric MRI |
| CAD | Computer-aided diagnosis |
| CLAIM | Checklist for artificial intelligence in medical imaging |
| CNN | Convolutional neural network |
| csPCa | Clinically significant prostate cancer |
| DCE | Dynamic contrast-enhanced |
| DL | Deep learning |
| DWI | Diffusion-weighted imaging |
| EAU | European Association of Urology |
| EPE | Extraprostatic extension |
| ESUR | European Society of Urogenital Radiology |
| FROC | Free-response receiver operating characteristic |
| GAN | Generative adversarial network |
| IV | Intravenous |
| ISUP | International Society of Urological Pathology |
| ML | Machine learning |
| mpMRI | Multi-parametric MRI |
| MRI | Magnetic resonance imaging |
| NICE | National Institute for Health and Care Excellence |
| NPV | Negative predictive value |
| PACS | Picture archiving and communication system |
| PCa | Prostate cancer |
| PI-CAI | Prostate imaging: cancer AI |
| PI-QUAL | Prostate imaging quality |
| PI-RADS | Prostate imaging reporting and data system |
| PPV | Positive predictive value |
| PRECISION | Prostate evaluation for clinically important disease: sampling using image guidance or not? |
| PSA | Prostate-specific antigen |
| PZ | Peripheral zone |
| RNN | Recurrent neural network |
| ROC | Receiver operating characteristic |
| SNR | Signal-to-noise ratio |
| STARD | Standards for reporting of diagnostic accuracy studies |
| SVM | Support vector machine |
| T1WI | T1-weighted imaging |
| T2WI | T2-weighted imaging |
| TRUS | Transrectal ultrasound |
| TSE | Turbo spin echo |

References

1. Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 2021, 71, 209–249.

2. Bell, K.J.L.; Del Mar, C.; Wright, G.; Dickinson, J.; Glasziou, P. Prevalence of incidental prostate cancer: A systematic review of autopsy studies. Int. J. Cancer 2015, 137, 1749–1757.

3. Heidenreich, A.; Aus, G.; Bolla, M.; Joniau, S.; Matveev, V.B.; Schmid, H.P.; Zattoni, F. EAU Guidelines on Prostate Cancer. Eur. Urol. 2008, 53, 68–80.

4. Gillessen, S.; Armstrong, A.; Attard, G.; Beer, T.M.; Beltran, H.; Bjartell, A.; Bossi, A.; Briganti, A.; Bristow, R.G.; Bulbul, M.; et al. Management of Patients with Advanced Prostate Cancer: Report from the Advanced Prostate Cancer Consensus Conference 2021. Eur. Urol. 2022, 82, 115–141.

5. Saad, F.; Hotte, S.J.; Finelli, A.; Malone, S.; Niazi, T.; Noonan, K.; Shayegan, B.; So, A.I.; Danielson, B.; Basappa, N.S.; et al. Results from a Canadian consensus forum of key controversial areas in the management of advanced prostate cancer: Recommendations for Canadian healthcare providers. Can. Urol. Assoc. J. 2021, 15, 353–358.

6. Tan, E.H.; Burn, E.; Barclay, N.L.; Delmestri, A.; Man, W.Y.; Golozar, A.; Serrano, À.R.; Duarte-Salles, T.; Cornford, P.; Prieto Alhambra, D.; et al. Incidence, Prevalence, and Survival of Prostate Cancer in the UK. JAMA Netw. Open 2024, 7, e2434622.

7. Kasivisvanathan, V.; Rannikko, A.S.; Borghi, M.; Panebianco, V.; Mynderse, L.A.; Vaarala, M.H.; Briganti, A.; Budäus, L.; Hellawell, G.; Hindley, R.G.; et al. MRI-Targeted or Standard Biopsy for Prostate-Cancer Diagnosis. N. Engl. J. Med. 2018, 378, 1767–1777.

8. Drost, F.J.H.; Roobol, M.J.; Nieboer, D.; Bangma, C.H.; Steyerberg, E.W.; Hunink, M.G.M.; Schoots, I.G. MRI pathway and TRUS-guided biopsy for detecting clinically significant prostate cancer. Cochrane Database Syst. Rev. 2017, 5, CD012663.

9. Ahmed, H.U.; El-Shater Bosaily, A.; Brown, L.C.; Gabe, R.; Kaplan, R.; Parmar, M.K.; Collaco-Moraes, Y.; Ward, K.; Hindley, R.G.; Freeman, A.; et al. Diagnostic accuracy of multi-parametric MRI and TRUS biopsy in prostate cancer (PROMIS): A paired validating confirmatory study. Lancet 2017, 389, 815–822.

10. Mottet, N.; van den Bergh, R.C.N.; Briers, E.; Van den Broeck, T.; Cumberbatch, M.G.; De Santis, M.; Fanti, S.; Fossati, N.; Gandaglia, G.; Gillessen, S.; et al. EAU-EANM-ESTRO-ESUR-SIOG Guidelines on Prostate Cancer–2020 Update. Part 1: Screening, Diagnosis, and Local Treatment with Curative Intent. Eur. Urol. 2021, 79, 243–262.

11. National Institute for Health and Care Excellence (NICE). Prostate Cancer: Diagnosis and Management. NICE Guideline NG131. 2019. Available online: https://www.nice.org.uk/guidance/ng131 (accessed on 14 May 2025).

12. Wei, J.T.; Barocas, D.; Carlsson, S.; Coakley, F.; Eggener, S.; Etzioni, R.; Fine, S.W.; Han, M.; Konety, B.R.; Miner, M.; et al. Early detection of prostate cancer: AUA/SUO guideline part I: Prostate cancer screening. J. Urol. 2023, 210, 45–53.

13. Padhani, A.R.; Schoots, I.G.; Turkbey, B.; Giannarini, G.; Barentsz, J.O. A multifaceted approach to quality in the MRI-directed biopsy pathway for prostate cancer diagnosis. Eur. Radiol. 2021, 31, 4386–4389.

14. Turkbey, B.; Oto, A. Factors Impacting Performance and Reproducibility of PI-RADS. Can. Assoc. Radiol. J. 2021, 72, 337–338.

15. Stabile, A.; Giganti, F.; Kasivisvanathan, V.; Giannarini, G.; Moore, C.M.; Padhani, A.R.; Panebianco, V.; Rosenkrantz, A.B.; Salomon, G.; Turkbey, B.; et al. Factors Influencing Variability in the Performance of Multiparametric Magnetic Resonance Imaging in Detecting Clinically Significant Prostate Cancer: A Systematic Literature Review. Eur. Urol. Oncol. 2020, 3, 145–167.

16. Caglic, I.; Barrett, T. Optimising prostate mpMRI: Prepare for success. Clin. Radiol. 2019, 74, 831–840.

17. Turkbey, B.; Rosenkrantz, A.B.; Haider, M.A.; Padhani, A.R.; Villeirs, G.; Macura, K.J.; Tempany, C.M.; Choyke, P.L.; Cornud, F.; Margolis, D.J.; et al. Prostate Imaging Reporting and Data System Version 2.1: 2019 Update of Prostate Imaging Reporting and Data System Version 2. Eur. Urol. 2019, 76, 340–351.

18. Giganti, F.; Allen, C.; Emberton, M.; Moore, C.M.; Kasivisvanathan, V. Prostate Imaging Quality (PI-QUAL): A New Quality Control Scoring System for Multiparametric Magnetic Resonance Imaging of the Prostate from the PRECISION trial. Eur. Urol. Oncol. 2020, 3, 615–619.

19. de Rooij, M.; Allen, C.; Twilt, J.J.; Thijssen, L.C.P.; Asbach, P.; Barrett, T.; Brembilla, G.; Emberton, M.; Gupta, R.T.; Haider, M.A.; et al. PI-QUAL version 2: An update of a standardised scoring system for the assessment of image quality of prostate MRI. Eur. Radiol. 2024, 34, 7068–7079.

20. Barentsz, J.O.; Richenberg, J.; Clements, R.; Choyke, P.; Verma, S.; Villeirs, G.; Rouviere, O.; Logager, V.; Fütterer, J.J. ESUR prostate MR guidelines 2012. Eur. Radiol. 2012, 22, 746–757.

21. Turkbey, B.; Purysko, A.S. PI-RADS: Where Next? Radiology 2023, 307, e223128.

22. Saha, A.; Bosma, J.S.; Twilt, J.J.; van Ginneken, B.; Bjartell, A.; Padhani, A.R.; Bonekamp, D.; Villeirs, G.; Salomon, G.; Giannarini, G.; et al. Artificial intelligence and radiologists in prostate cancer detection on MRI (PI-CAI): An international, paired, non-inferiority, confirmatory study. Lancet Oncol. 2024, 25, 879–887.

23. Belue, M.J.; Turkbey, B. Tasks for artificial intelligence in prostate MRI. Eur. Radiol. Exp. 2022, 6, 33.

24. Jia, H.; Zhang, J.; Ma, K.; Qiao, X.; Ren, L.; Shi, X. Application of convolutional neural networks in medical images: A bibliometric analysis. Quant. Imaging Med. Surg. 2024, 14, 3501–3518.

25. Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA 2016, 316, 2402–2410.

26. Shin, H.C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298.

27. Pereira, S.; Pinto, A.; Alves, V.; Silva, C.A. Brain Tumor Segmentation Using Convolutional Neural Networks in MRI Images. IEEE Trans. Med. Imaging 2016, 35, 1240–1251.

28. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

29. Lin, K.; Jie, B.; Dong, P.; Ding, X.; Bian, W.; Liu, M. Convolutional Recurrent Neural Network for Dynamic Functional MRI Analysis and Brain Disease Identification. Front. Neurosci. 2022, 16, 933660.

30. Figueira, A.; Vaz, B. Survey on Synthetic Data Generation, Evaluation Methods and GANs. Mathematics 2022, 10, 2733.

31. Mir, N.; Fransen, S.J.; Wolterink, J.M.; Fütterer, J.J.; Simonis, F.F.J. Recent Developments in Speeding up Prostate MRI. J. Magn. Reson. Imaging 2024, 60, 813–826.

32. Huang, H.; Mo, J.; Ding, Z.; Peng, X.; Liu, R.; Zhuang, D.; Zhang, Y.; Hu, G.; Huang, B.; Qiu, Y. Deep Learning to Simulate Contrast-Enhanced MRI for Evaluating Suspected Prostate Cancer. Radiology 2025, 314, e240238.

33. Gassenmaier, S.; Afat, S.; Nickel, D.; Mostapha, M.; Herrmann, J.; Othman, A.E. Deep learning-accelerated T2-weighted imaging of the prostate: Reduction of acquisition time and improvement of image quality. Eur. J. Radiol. 2021, 137, 109600.

34. Kordbacheh, H.; Seethamraju, R.T.; Weiland, E.; Kiefer, B.; Nickel, M.D.; Chulroek, T.; Cecconi, M.; Baliyan, V.; Harisinghani, M.G. Image quality and diagnostic accuracy of complex-averaged high b value images in diffusion-weighted MRI of prostate cancer. Abdom. Radiol. 2019, 44, 2244–2253.

35. Ueda, T.; Ohno, Y.; Yamamoto, K.; Murayama, K.; Ikedo, M.; Yui, M.; Hanamatsu, S.; Tanaka, Y.; Obama, Y.; Ikeda, H.; et al. Deep Learning Reconstruction of Diffusion-weighted MRI Improves Image Quality for Prostatic Imaging. Radiology 2022, 303, 373–381.

36. Lee, K.-L.; Kessler, D.A.; Dezonie, S.; Chishaya, W.; Shepherd, C.; Carmo, B.; Graves, M.J.; Barrett, T. Assessment of deep learning-based reconstruction on T2-weighted and diffusion-weighted prostate MRI image quality. Eur. J. Radiol. 2023, 166, 111017.

37. Jung, W.; Kim, E.H.; Ko, J.; Jeong, G.; Choi, M.H. Convolutional neural network-based reconstruction for acceleration of prostate T2 weighted MR imaging: A retro- and prospective study. Br. J. Radiol. 2022, 95, 20211378.

38. Jendoubi, S.; Wagner, M.; Montagne, S.; Ezziane, M.; Mespoulet, J.; Comperat, E.; Estellat, C.; Baptiste, A.; Renard-Penna, R. MRI for prostate cancer: Can computed high b-value DWI replace native acquisitions? Eur. Radiol. 2019, 29, 5197–5204.

39. Ursprung, S.; Herrmann, J.; Joos, N.; Weiland, E.; Benkert, T.; Almansour, H.; Lingg, A.; Afat, S.; Gassenmaier, S. Accelerated diffusion-weighted imaging of the prostate using deep learning image reconstruction: A retrospective comparison with standard diffusion-weighted imaging. Eur. J. Radiol. 2023, 165, 110953.

40. Park, J.C.; Park, K.J.; Park, M.Y.; Kim, M.-h.; Kim, J.K. Fast T2-Weighted Imaging With Deep Learning-Based Reconstruction: Evaluation of Image Quality and Diagnostic Performance in Patients Undergoing Radical Prostatectomy. J. Magn. Reson. Imaging 2022, 55, 1735–1744.

41. Kim, E.H.; Choi, M.H.; Lee, Y.J.; Han, D.; Mostapha, M.; Nickel, D. Deep learning-accelerated T2-weighted imaging of the prostate: Impact of further acceleration with lower spatial resolution on image quality. Eur. J. Radiol. 2021, 145, 110012.

42. de Rooij, M.; Barentsz, J.O. PI-QUAL v.1: The first step towards good-quality prostate MRI. Eur. Radiol. 2022, 32, 876–878.

43. Woernle, A.; Englman, C.; Dickinson, L.; Kirkham, A.; Punwani, S.; Haider, A.; Freeman, A.; Kasivisvanathan, V.; Emberton, M.; Hines, J.; et al. Picture Perfect: The Status of Image Quality in Prostate MRI. J. Magn. Reson. Imaging 2024, 59, 1930–1952.

44. Giganti, F.; Dinneen, E.; Kasivisvanathan, V.; Haider, A.; Freeman, A.; Kirkham, A.; Punwani, S.; Emberton, M.; Shaw, G.; Moore, C.M.; et al. Inter-reader agreement of the PI-QUAL score for prostate MRI quality in the NeuroSAFE PROOF trial. Eur. Radiol. 2022, 32, 879–889.

45. Basar, Y.; Alis, D.; Seker, M.E.; Kartal, M.S.; Guroz, B.; Arslan, A.; Sirolu, S.; Kurtcan, S.; Denizoglu, N.; Karaarslan, E. Inter-reader agreement of the prostate imaging quality (PI-QUAL) score for basic readers in prostate MRI: A multi-center study. Eur. J. Radiol. 2023, 165, 110923.

46. Fleming, H.; Dias, A.B.; Talbot, N.; Li, X.; Corr, K.; Haider, M.A.; Ghai, S. Inter-reader variability and reproducibility of the PI-QUAL score in a multicentre setting. Eur. J. Radiol. 2023, 168, 111091.

47. Ponsiglione, A.; Cereser, L.; Spina, E.; Mannacio, L.; Negroni, D.; Russo, L.; Muto, F.; Di Costanzo, G.; Stanzione, A.; Cuocolo, R.; et al. PI-QUAL version 2: A Multi-Reader reproducibility study on multiparametric MRI from a tertiary referral center. Eur. J. Radiol. 2024, 181, 111716.

48. Coelho, F.M.A.; Baroni, R.H. Strategies for improving image quality in prostate MRI. Abdom. Radiol. 2024, 49, 4556–4573.

49. Cipollari, S.; Guarrasi, V.; Pecoraro, M.; Bicchetti, M.; Messina, E.; Farina, L.; Paci, P.; Catalano, C.; Panebianco, V. Convolutional Neural Networks for Automated Classification of Prostate Multiparametric Magnetic Resonance Imaging Based on Image Quality. J. Magn. Reson. Imaging 2022, 55, 480–490.

50. Lin, Y.; Belue, M.J.; Yilmaz, E.C.; Harmon, S.A.; An, J.; Law, Y.M.; Hazen, L.; Garcia, C.; Merriman, K.M.; Phelps, T.E.; et al. Deep Learning-Based T2-Weighted MR Image Quality Assessment and Its Impact on Prostate Cancer Detection Rates. J. Magn. Reson. Imaging 2024, 59, 2215–2223.

51. Lin, Y.; Belue, M.J.; Yilmaz, E.C.; Law, Y.M.; Merriman, K.M.; Phelps, T.E.; Gelikman, D.G.; Ozyoruk, K.B.; Lay, N.S.; Merino, M.J.; et al. Deep learning-based image quality assessment: Impact on detection accuracy of prostate cancer extraprostatic extension on MRI. Abdom. Radiol. 2024, 49, 2891–2901.

52. Urase, Y.; Ueno, Y.; Tamada, T.; Sofue, K.; Takahashi, S.; Hinata, N.; Harada, K.; Fujisawa, M.; Murakami, T. Comparison of prostate imaging reporting and data system v2.1 and 2 in transition and peripheral zones: Evaluation of interreader agreement and diagnostic performance in detecting clinically significant prostate cancer. Br. J. Radiol. 2021, 95, 20201434.

53. Westphalen, A.C.; McCulloch, C.E.; Anaokar, J.M.; Arora, S.; Barashi, N.S.; Barentsz, J.O.; Bathala, T.K.; Bittencourt, L.K.; Booker, M.T.; Braxton, V.G.; et al. Variability of the Positive Predictive Value of PI-RADS for Prostate MRI across 26 Centers: Experience of the Society of Abdominal Radiology Prostate Cancer Disease-focused Panel. Radiology 2020, 296, 76–84.

54. Taya, M.; Behr, S.C.; Westphalen, A.C. Perspectives on technology: Prostate Imaging-Reporting and Data System (PI-RADS) interobserver variability. BJU Int. 2024, 134, 510–518.

55. Greer, M.D.; Choyke, P.L.; Turkbey, B. PI-RADSv2: How we do it. J. Magn. Reson. Imaging 2017, 46, 11–23.

56. Barrett, T.; Padhani, A.R.; Patel, A.; Ahmed, H.U.; Allen, C.; Bardgett, H.; Belfield, J.; Brizmohun Appayya, M.; Harding, T.; Hoch, O.-S.; et al. Certification in reporting multiparametric magnetic resonance imaging of the prostate: Recommendations of a UK consensus meeting. BJU Int. 2021, 127, 304–306.

57. Syer, T.; Mehta, P.; Antonelli, M.; Mallett, S.; Atkinson, D.; Ourselin, S.; Punwani, S. Artificial Intelligence Compared to Radiologists for the Initial Diagnosis of Prostate Cancer on Magnetic Resonance Imaging: A Systematic Review and Recommendations for Future Studies. Cancers 2021, 13, 3318.

58. Nsugbe, E. Towards Unsupervised Learning Driven Intelligence for Prediction of Prostate Cancer. Artif. Intell. Appl. 2024, 2, 263–270.

59. Li, H.; Liu, H.; von Busch, H.; Grimm, R.; Huisman, H.; Tong, A.; Winkel, D.; Penzkofer, T.; Shabunin, I.; Choi, M.H.; et al. Deep Learning–based Unsupervised Domain Adaptation via a Unified Model for Prostate Lesion Detection Using Multisite Biparametric MRI Datasets. Radiol. Artif. Intell. 2024, 6, e230521.

60. Li, W.; Zhang, Y.; Zhou, H.; Yang, W.; Xie, Z.; He, Y. CLMS: Bridging domain gaps in medical imaging segmentation with source-free continual learning for robust knowledge transfer and adaptation. Med. Image Anal. 2025, 100, 103404.

61. Zhao, H.; Wang, B.; Dohopolski, M.; Bai, T.; Jiang, S.; Nguyen, D. Deep unsupervised clustering for prostate auto-segmentation with and without hydrogel spacer. Mach. Learn. Sci. Technol. 2025, 6, 015015.

62. Javed, H.; El-Sappagh, S.; Abuhmed, T. Robustness in deep learning models for medical diagnostics: Security and adversarial challenges towards robust AI applications. Artif. Intell. Rev. 2025, 58, 12.

63. Li, H.; Lee, C.H.; Chia, D.; Lin, Z.; Huang, W.; Tan, C.H. Machine Learning in Prostate MRI for Prostate Cancer: Current Status and Future Opportunities. Diagnostics 2022, 12, 289.

64. Twilt, J.J.; van Leeuwen, K.G.; Huisman, H.J.; Fütterer, J.J.; de Rooij, M. Artificial Intelligence Based Algorithms for Prostate Cancer Classification and Detection on Magnetic Resonance Imaging: A Narrative Review. Diagnostics 2021, 11, 959.

65. Turkbey, B.; Haider, M.A. Artificial Intelligence for Automated Cancer Detection on Prostate MRI: Opportunities and Ongoing Challenges, From the AJR Special Series on AI Applications. Am. J. Roentgenol. 2022, 219, 188–194.

66. hProstate. 2024. Available online: https://hevi.ai (accessed on 14 May 2025).

67. Abbasian Ardakani, A.; Bureau, N.J.; Ciaccio, E.J.; Acharya, U.R. Interpretation of radiomics features—A pictorial review. Comput. Methods Programs Biomed. 2022, 215, 106609.

68. Michaely, H.J.; Aringhieri, G.; Cioni, D.; Neri, E. Current Value of Biparametric Prostate MRI with Machine-Learning or Deep-Learning in the Detection, Grading, and Characterization of Prostate Cancer: A Systematic Review. Diagnostics 2022, 12, 799.

69. Ferro, M.; de Cobelli, O.; Musi, G.; del Giudice, F.; Carrieri, G.; Busetto, G.M.; Falagario, U.G.; Sciarra, A.; Maggi, M.; Crocetto, F.; et al. Radiomics in prostate cancer: An up-to-date review. Ther. Adv. Urol. 2022, 14, 17562872221109020.

70. Zaharchuk, G.; Gong, E.; Wintermark, M.; Rubin, D.; Langlotz, C.P. Deep Learning in Neuroradiology. Am. J. Neuroradiol. 2018, 39, 1776–1784.

71. Eklund, A.; Dufort, P.; Forsberg, D.; LaConte, S.M. Medical image processing on the GPU—Past, present and future. Med. Image Anal. 2013, 17, 1073–1094.

72. Flannery, B.T.; Sandler, H.M.; Lal, P.; Feldman, M.D.; Santa-Rosario, J.C.; Pathak, T.; Mirtti, T.; Farre, X.; Correa, R.; Chafe, S.; et al. Stress testing deep learning models for prostate cancer detection on biopsies and surgical specimens. J. Pathol. 2025, 265, 146–157.

73. Chen, T.; Hu, W.; Zhang, Y.; Wei, C.; Zhao, W.; Shen, X.; Zhang, C.; Shen, J. A Multimodal Deep Learning Nomogram for the Identification of Clinically Significant Prostate Cancer in Patients with Gray-Zone PSA Levels: Comparison with Clinical and Radiomics Models. Acad. Radiol. 2025, 32, 864–876.

74. Zheng, B.; Mo, F.; Shi, X.; Li, W.; Shen, Q.; Zhang, L.; Liao, Z.; Fan, C.; Liu, Y.; Zhong, J.; et al. An Automatic Deep-Radiomics Framework for Prostate Cancer Diagnosis and Stratification in Patients with Serum Prostate-Specific Antigen of 4.0-10.0 ng/mL: A Multicenter Retrospective Study. Acad. Radiol. 2025, 32, 2709–2722.

75. Cuocolo, R.; Cipullo, M.B.; Stanzione, A.; Romeo, V.; Green, R.; Cantoni, V.; Ponsiglione, A.; Ugga, L.; Imbriaco, M. Machine learning for the identification of clinically significant prostate cancer on MRI: A meta-analysis. Eur. Radiol. 2020, 30, 6877–6887.

76. Alqahtani, S. Systematic Review of AI-Assisted MRI in Prostate Cancer Diagnosis: Enhancing Accuracy Through Second Opinion Tools. Diagnostics 2024, 14, 2576.

77. Yu, R.; Jiang, K.-w.; Bao, J.; Hou, Y.; Yi, Y.; Wu, D.; Song, Y.; Hu, C.-H.; Yang, G.; Zhang, Y.-D. PI-RADSAI: Introducing a new human-in-the-loop AI model for prostate cancer diagnosis based on MRI. Br. J. Cancer 2023, 128, 1019–1029.

78. Hosseinzadeh, M.; Saha, A.; Brand, P.; Slootweg, I.; de Rooij, M.; Huisman, H. Deep learning–assisted prostate cancer detection on bi-parametric MRI: Minimum training data size requirements and effect of prior knowledge. Eur. Radiol. 2022, 32, 2224–2234.

79. Khosravi, P.; Lysandrou, M.; Eljalby, M.; Li, Q.; Kazemi, E.; Zisimopoulos, P.; Sigaras, A.; Brendel, M.; Barnes, J.; Ricketts, C.; et al. A Deep Learning Approach to Diagnostic Classification of Prostate Cancer Using Pathology–Radiology Fusion. J. Magn. Reson. Imaging 2021, 54, 462–471.

80. Winkel, D.J.; Tong, A.; Lou, B.; Kamen, A.; Comaniciu, D.; Disselhorst, J.A.; Rodríguez-Ruiz, A.; Huisman, H.; Szolar, D.; Shabunin, I.; et al. A Novel Deep Learning Based Computer-Aided Diagnosis System Improves the Accuracy and Efficiency of Radiologists in Reading Biparametric Magnetic Resonance Images of the Prostate: Results of a Multireader, Multicase Study. Invest. Radiol. 2021, 56, 605–613.

81. Bayerl, N.; Adams, L.C.; Cavallaro, A.; Bäuerle, T.; Schlicht, M.; Wullich, B.; Hartmann, A.; Uder, M.; Ellmann, S. Assessment of a fully-automated diagnostic AI software in prostate MRI: Clinical evaluation and histopathological correlation. Eur. J. Radiol. 2024, 181, 111790.

82. Saha, A.; Twilt, J.J.; Bosma, J.S.; van Ginneken, B.; Yakar, D.; Elschot, M.; Veltman, J.; Fütterer, J.; de Rooij, M.; Huisman, H. The PI-CAI Challenge: Public Training and Development Dataset. 2022. Available online: https://zenodo.org/records/6517398 (accessed on 14 May 2025).

83. Bressem, K.; Adams, L.; Engel, G. Prostate158—Training data. 2022. Available online: https://zenodo.org/records/6481141 (accessed on 14 May 2025).

84. Armato, S.G.; Huisman, H.; Drukker, K.; Hadjiiski, L.; Kirby, J.S.; Petrick, N.; Redmond, G.; Giger, M.; Cha, K.; Mamonov, A.; et al. PROSTATEx Challenges for computerized classification of prostate lesions from multiparametric magnetic resonance images. J. Med. Imaging 2018, 5, 044501.

85. Netzer, N.; Eith, C.; Bethge, O.; Hielscher, T.; Schwab, C.; Stenzinger, A.; Gnirs, R.; Schlemmer, H.-P.; Maier-Hein, K.H.; Schimmöller, L.; et al. Application of a validated prostate MRI deep learning system to independent same-vendor multi-institutional data: Demonstration of transferability. Eur. Radiol. 2023, 33, 7463–7476.

86. Zhao, L.; Bao, J.; Qiao, X.; Jin, P.; Ji, Y.; Li, Z.; Zhang, J.; Su, Y.; Ji, L.; Shen, J.; et al. Predicting clinically significant prostate cancer with a deep learning approach: A multicentre retrospective study. Eur. J. Nucl. Med. Mol. Imaging 2023, 50, 727–741.

87. Karagoz, A.; Alis, D.; Seker, M.E.; Zeybel, G.; Yergin, M.; Oksuz, I.; Karaarslan, E. Anatomically guided self-adapting deep neural network for clinically significant prostate cancer detection on bi-parametric MRI: A multi-center study. Insights Imaging 2023, 14, 110.

88. Li, Y.; Wynne, J.; Wang, J.; Qiu, R.L.J.; Roper, J.; Pan, S.; Jani, A.B.; Liu, T.; Patel, P.R.; Mao, H.; et al. Cross-shaped windows transformer with self-supervised pretraining for clinically significant prostate cancer detection in bi-parametric MRI. Med. Phys. 2025, 52, 993–1004.

89. Molière, S.; Hamzaoui, D.; Ploussard, G.; Mathieu, R.; Fiard, G.; Baboudjian, M.; Granger, B.; Roupret, M.; Delingette, H.; Renard-Penna, R. A Systematic Review of the Diagnostic Accuracy of Deep Learning Models for the Automatic Detection, Localization, and Characterization of Clinically Significant Prostate Cancer on Magnetic Resonance Imaging. Eur. Urol. Oncol. 2024.

90. Seetharaman, A.; Bhattacharya, I.; Chen, L.C.; Kunder, C.A.; Shao, W.; Soerensen, S.J.C.; Wang, J.B.; Teslovich, N.C.; Fan, R.E.; Ghanouni, P.; et al. Automated detection of aggressive and indolent prostate cancer on magnetic resonance imaging. Med. Phys. 2021, 48, 2960–2972.

91. Giganti, F.; Kirkham, A.; Kasivisvanathan, V.; Papoutsaki, M.-V.; Punwani, S.; Emberton, M.; Moore, C.M.; Allen, C. Understanding PI-QUAL for prostate MRI quality: A practical primer for radiologists. Insights Imaging 2021, 12, 59.

92. Hötker, A.M.; Da Mutten, R.; Tiessen, A.; Konukoglu, E.; Donati, O.F. Improving workflow in prostate MRI: AI-based decision-making on biparametric or multiparametric MRI. Insights Imaging 2021, 12, 112.

93. Wang, X.; Ma, J.; Bhosale, P.; Ibarra Rovira, J.J.; Qayyum, A.; Sun, J.; Bayram, E.; Szklaruk, J. Novel deep learning-based noise reduction technique for prostate magnetic resonance imaging. Abdom. Radiol. 2021, 46, 3378–3386.

94. Sunoqrot, M.R.S.; Nketiah, G.A.; Selnæs, K.M.; Bathen, T.F.; Elschot, M. Automated reference tissue normalization of T2-weighted MR images of the prostate using object recognition. Magn. Reson. Mater. Phys. Biol. Med. 2021, 34, 309–321.

95. Zhu, L.; Gao, G.; Zhu, Y.; Han, C.; Liu, X.; Li, D.; Liu, W.; Wang, X.; Zhang, J.; Zhang, X.; et al. Fully automated detection and localization of clinically significant prostate cancer on MR images using a cascaded convolutional neural network. Front. Oncol. 2022, 12, 958065.

96. Cai, J.C.; Nakai, H.; Kuanar, S.; Froemming, A.T.; Bolan, C.W.; Kawashima, A.; Takahashi, H.; Mynderse, L.A.; Dora, C.D.; Humphreys, M.R.; et al. Fully Automated Deep Learning Model to Detect Clinically Significant Prostate Cancer at MRI. Radiology 2024, 312, e232635.

97. Sun, Z.; Wu, P.; Cui, Y.; Liu, X.; Wang, K.; Gao, G.; Wang, H.; Zhang, X.; Wang, X. Deep-Learning Models for Detection and Localization of Visible Clinically Significant Prostate Cancer on Multi-Parametric MRI. J. Magn. Reson. Imaging 2023, 58, 1067–1081.

98. Shao, L.; Liu, Z.; Liu, J.; Yan, Y.; Sun, K.; Liu, X.; Lu, J.; Tian, J. Patient-level grading prediction of prostate cancer from mp-MRI via GMINet. Comput. Biol. Med. 2022, 150, 106168.

99. Hu, L.; Fu, C.; Song, X.; Grimm, R.; von Busch, H.; Benkert, T.; Kamen, A.; Lou, B.; Huisman, H.; Tong, A.; et al. Automated deep-learning system in the assessment of MRI-visible prostate cancer: Comparison of advanced zoomed diffusion-weighted imaging and conventional technique. Cancer Imaging 2023, 23, 6.

100. Weißer, C.; Netzer, N.; Görtz, M.; Schütz, V.; Hielscher, T.; Schwab, C.; Hohenfellner, M.; Schlemmer, H.-P.; Maier-Hein, K.H.; Bonekamp, D. Weakly Supervised MRI Slice-Level Deep Learning Classification of Prostate Cancer Approximates Full Voxel- and Slice-Level Annotation: Effect of Increasing Training Set Size. J. Magn. Reson. Imaging 2024, 59, 1409–1422.

101. Bashkanov, O.; Rak, M.; Meyer, A.; Engelage, L.; Lumiani, A.; Muschter, R.; Hansen, C. Automatic detection of prostate cancer grades and chronic prostatitis in biparametric MRI. Comput. Methods Programs Biomed. 2023, 239, 107624.

102. Saha, A.; Hosseinzadeh, M.; Huisman, H. End-to-end prostate cancer detection in bpMRI via 3D CNNs: Effects of attention mechanisms, clinical priori and decoupled false positive reduction. Med. Image Anal. 2021, 73, 102155.

103. Mongan, J.; Moy, L.; Kahn, C.E. Checklist for Artificial Intelligence in Medical Imaging (CLAIM): A Guide for Authors and Reviewers. Radiol. Artif. Intell. 2020, 2, e200029.

104. Thomas, M.; Murali, S.; Simpson, B.S.S.; Freeman, A.; Kirkham, A.; Kelly, D.; Whitaker, H.C.; Zhao, Y.; Emberton, M.; Norris, J.M. Use of artificial intelligence in the detection of primary prostate cancer in multiparametric MRI with its clinical outcomes: A protocol for a systematic review and meta-analysis. BMJ Open 2023, 13, e074009.

105. Penzkofer, T.; Padhani, A.R.; Turkbey, B.; Haider, M.A.; Huisman, H.; Walz, J.; Salomon, G.; Schoots, I.G.; Richenberg, J.; Villeirs, G.; et al. ESUR/ESUI position paper: Developing artificial intelligence for precision diagnosis of prostate cancer using magnetic resonance imaging. Eur. Radiol. 2021, 31, 9567–9578.

T2-weighted (T2W) Imaging | DWI | DCE Imaging

| Study/Technology | Findings | AUC/Performance Metrics |
|---|---|---|
| Cuocolo et al. (12 Studies) [75] | Non-DL models outperformed DL models (AUC = 0.90 vs. 0.78). | Machine learning (ML) models using biopsy as reference: AUC = 0.85; radical prostatectomy specimens: AUC = 0.88. |
| Michaely et al. (29 Studies) [68] | No clear performance advantage between ML and DL methods; AI detection comparable to trained radiologists. | AUC values across studies ranged widely from 0.70 to 0.99, with some studies not providing AUC values. |
| Yu et al. (DL-assisted PI-RADS) [77] | Outperformed 70% of radiologists in MRI-based PCa diagnosis. | AUC not specified, but outperformed radiologists. |
| Hosseinzadeh et al. (Patient-Based * Results) [78] | DL-computer-aided diagnosis (DL-CAD) trained with larger sets (1586 scans) performed significantly better. The inclusion of zonal segmentations as prior knowledge improved performance. | AUC: 0.85 (with zonal segmentation and largest training set); Sensitivity: 91% (PI-RADS); Specificity: 77% (PI-RADS); Cohen’s kappa (κc) agreement: 0.53 (DL-CAD vs. radiologists), 0.50 (DL-CAD vs. pathologists), 0.61 (radiologists vs. pathologists). |
| Hosseinzadeh et al. (Lesion-Based ** Results) [78] | Larger training sets (50–1586 cases) improved performance. Adding zonal segmentation increased sensitivity at similar FP levels. | Sensitivity at 1 FP per patient: 83% (without zonal segmentation), 87% (with zonal segmentation) (95% CI: 82–91); free-response receiver operating characteristic (FROC) curve sensitivity: 85% (DL-CAD, with zonal segmentation, 95% CI: 77–83) at 1 FP per patient, compared to expert radiologists’ 91% (95% CI: 84–96) at 0.30 FP per patient. |
| Khosravi et al. (AI-aided biopsy model) [79] | AUC of 0.89 for distinguishing cancerous vs. benign, AUC of 0.78 for high-risk vs. low-risk disease. | AUC: 0.89 (cancerous vs. benign), 0.78 (high-risk vs. low-risk). |
| Winkel et al. (AI impact on bpMRI in 100 patients) [80] | AI improved radiologists’ accuracy in detecting lesions: AUC increased from 0.84 to 0.88; inter-reader agreement improved from 0.22 to 0.36; 21% reduction in reading times. | AUC: 0.88; Fleiss’ kappa (κF): improved from 0.22 to 0.36; reading time reduced by 21%. |
| Bayerl et al. (mdprostate, a commercial AI tool integrated into the picture archiving and communication system (PACS)) [81] | 100% sensitivity at PI-RADS ≥ 2 cutoff; 85.5% sensitivity and 63.2% specificity at PI-RADS ≥ 4 cutoff; AUC of 0.803 for cancers of any grade. | Sensitivity: 100% (PI-RADS ≥ 2), 85.5% (PI-RADS ≥ 4); Specificity: 63.2%; AUC: 0.803. |
| Saha et al. (Prostate Imaging: Cancer AI (PI-CAI) study, 10,000+ MRI exams) [22] | In detecting Gleason grade group 2 or higher PCa, AI reduced false positives by 50.4%, detected fewer indolent cancers, and improved patient outcomes. | Area under the receiver operating characteristic curve (AUROC): 0.91 (AI) vs. 0.86 (radiologists). |
| Netzer et al. (DL system from 2 external institutions) [85] | Comparable performance across external datasets, with receiver operating characteristic (ROC) AUC values of 0.80, 0.87, 0.82. | ROC AUC: 0.80, 0.87, 0.82. |
| Zhao et al. (Multicenter bpMRI from 7 centers) [86] | DL models showed comparable performance to expert radiologists’ PI-RADS assessment; integration with PI-RADS increased specificity. | Comparable to expert radiologists, increased specificity with PI-RADS integration. |
| Karagoz et al. (PI-CAI dataset) [87] | AUC of 0.888 and 0.889 on external validation; AUC of 0.870 using transfer learning. | AUROC: 0.888, 0.889 (external validation), 0.870 (transfer learning). |
| Li et al. (self-supervised learning-transformer-based model) [88] | High performance on external datasets, improving network generalization. | Cross-validation (PI-CAI dataset): AUC: 0.888 ± 0.010, Average Precision (AP): 0.545 ± 0.060; External dataset (model generalizability): AUC: 0.79, AP: 0.45. |
| Molière et al. (Meta-analysis of 25 Studies) [89] | AI performance ranged from AUC of 0.573 to 0.892 at lesion level, 0.82 to 0.875 at patient level; AI sensitivity approached experienced radiologists. | AUC: 0.573–0.892 (lesion level), 0.82–0.875 (patient level); sensitivity comparable to radiologists. |
| Seetharaman et al. [90] | AI slightly underperformed in sensitivity but outperformed junior radiologists. | AUC: 0.75 (radical prostatectomy), 0.80 (biopsy); AI detected 18% of the lesions missed by radiologists. |
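
The metrics reported in the table above (sensitivity, specificity, AUC, and Cohen's kappa) can all be derived from per-patient reference labels and model outputs. The following is a minimal sketch in plain Python, assuming binary labels (1 = csPCa, 0 = no csPCa). The function names and the toy data are hypothetical and are not taken from any of the cited studies.

```python
from itertools import product

def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels (1 = csPCa)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

def auc(y_true, scores):
    """AUC as the probability that a positive case outscores a negative one.

    Ties between a positive and a negative score count as 0.5.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))

def cohen_kappa(a, b):
    """Cohen's kappa for two raters' categorical labels.

    Undefined (division by zero) when chance agreement equals 1.
    """
    n = len(a)
    p_observed = sum(1 for x, y in zip(a, b) if x == y) / n
    p_chance = sum((a.count(k) / n) * (b.count(k) / n) for k in set(a) | set(b))
    return (p_observed - p_chance) / (1 - p_chance)

# Toy data (invented for illustration only).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.2, 0.1, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 threshold

sens, spec = sensitivity_specificity(y_true, y_pred)
print(round(sens, 3), round(spec, 3))         # 0.75 0.833
print(round(auc(y_true, scores), 3))          # 0.875
print(round(cohen_kappa(y_true, y_pred), 3))  # 0.583
```

Sensitivity and specificity depend on the chosen threshold, whereas AUC does not. This is one reason the table entries are not directly comparable when studies report different metrics or cutoffs.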

| Challenge | Description |
|---|---|
| Lack of Cohort Diversity | Most AI models are developed using data from single centers or specific protocols, leading to inconsistent performance across different distributions. |
| Subjectivity in Image Quality Evaluation | The evaluation of MRI image quality, such as through the PI-QUAL scoring system, is subjective and dependent on the reader’s experience, leading to variability. |
| Interobserver Variability | There is moderate agreement among readers with different experience levels, leading to inconsistency in the evaluation of MRI images. |
| AI Model Overfitting | AI models using DL techniques may suffer from overfitting, especially when trained on small, non-representative datasets. |
| Dataset Bias and Generalizability | AI models trained on limited or private databases may not generalize well to other populations or settings, reducing their effectiveness. |
| Limited Experience in AI Model Training | Developing AI models requires extensive training with experienced readers, but the subjective nature of annotation can lead to inconsistent results across different readers. |
| Standardization of MRI Quality | Standardizing and improving MRI quality for AI models remains challenging, as variations in acquisition methods can impact model performance. |
| Multidisciplinary Collaboration Challenges | In lesion contouring, collaboration between different specialists (e.g., radiologists, pathologists) is required, but it remains a challenge to ensure consistency. |
| Clinical Integration and Approval | Broader clinical integration of AI faces challenges in clinical approval, adherence to safety standards, and the need for diverse, large datasets for training. |
| Data Privacy and Database Limitations | Many AI studies use private or small-scale databases, which limits the applicability and generalization of AI models, especially for larger or multi-center studies. |
| Training Protocols and Model Updates | AI models need continual updates and improvements to ensure they remain accurate and effective in the clinical setting. |
| Bias in PCa Detection | AI models may be biased towards the disease prevalence seen in specific populations, limiting their effectiveness in diverse global settings. |
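
One common way to probe the cohort-diversity and generalizability challenges listed above is to hold out each contributing center in turn and evaluate a model trained on the remaining centers. The sketch below shows only the data-splitting step and is not a method described in this review. The `(case_id, center)` record layout and the function name are assumptions made for illustration.

```python
from collections import defaultdict

def leave_one_center_out(cases):
    """Yield (held_out_center, train_ids, test_ids) once per center.

    `cases` is a list of (case_id, center) pairs; this layout is a
    hypothetical stand-in for whatever a real dataset provides.
    """
    by_center = defaultdict(list)
    for case_id, center in cases:
        by_center[center].append(case_id)
    for held_out in sorted(by_center):
        test_ids = list(by_center[held_out])
        # Training set: every case from every other center.
        train_ids = [cid
                     for center, ids in sorted(by_center.items())
                     if center != held_out
                     for cid in ids]
        yield held_out, train_ids, test_ids

# Toy example with three invented centers.
cases = [("p1", "A"), ("p2", "A"), ("p3", "B"), ("p4", "C"), ("p5", "C")]
splits = list(leave_one_center_out(cases))
for center, train_ids, test_ids in splits:
    print(center, train_ids, test_ids)
```

A large gap between pooled cross-validation performance and the per-center held-out results would suggest that a model has adapted to site-specific acquisition protocols rather than to disease features.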

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alis, D.; Onay, A.; Colak, E.; Karaarslan, E.; Bakir, B. A Narrative Review of Artificial Intelligence in MRI-Guided Prostate Cancer Diagnosis: Addressing Key Challenges. Diagnostics 2025, 15, 1342. https://doi.org/10.3390/diagnostics15111342
