Skin Cancer Detection and Classification Using Neural Network Algorithms: A Systematic Review

Abstract

1. Introduction

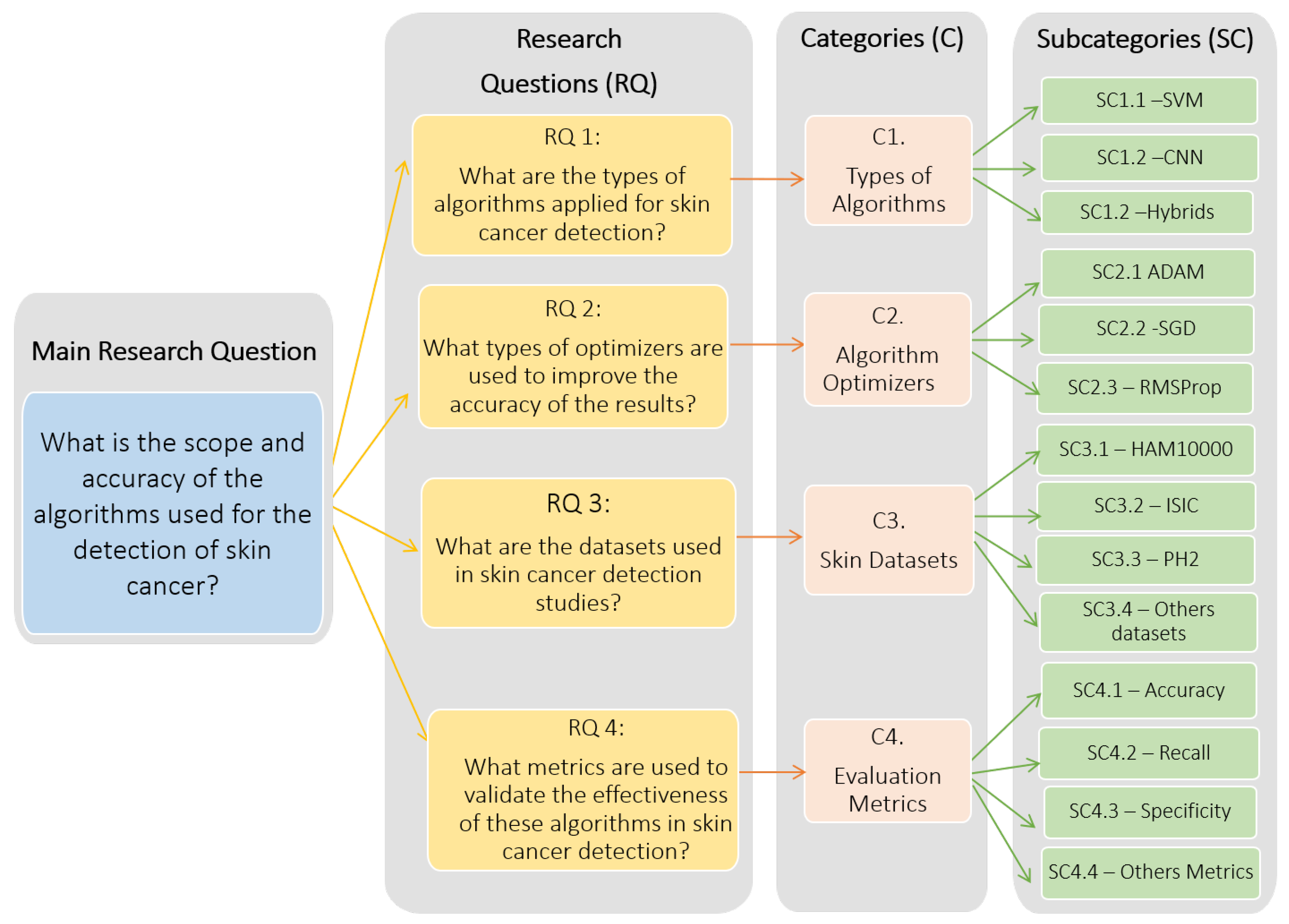

- RQ 1: What are the types of algorithms applied to detect skin cancer?

- RQ 2: What types of optimizers are used to improve the accuracy of the results?

- RQ 3: What are the datasets used in skin cancer detection studies?

- RQ 4: What metrics are used to validate the effectiveness of these algorithms in skin cancer detection?

2. Materials and Methods

2.1. Methodology

2.2. Search Strategy

2.3. Analysis Categories

3. Results

3.1. Main Features of the Selected Articles

3.2. Findings per Analysis Category

3.2.1. Types of Algorithms

3.2.2. Model Optimizers

3.2.3. Skin Datasets

3.2.4. Evaluation Metrics

3.3. Summary

4. Discussion

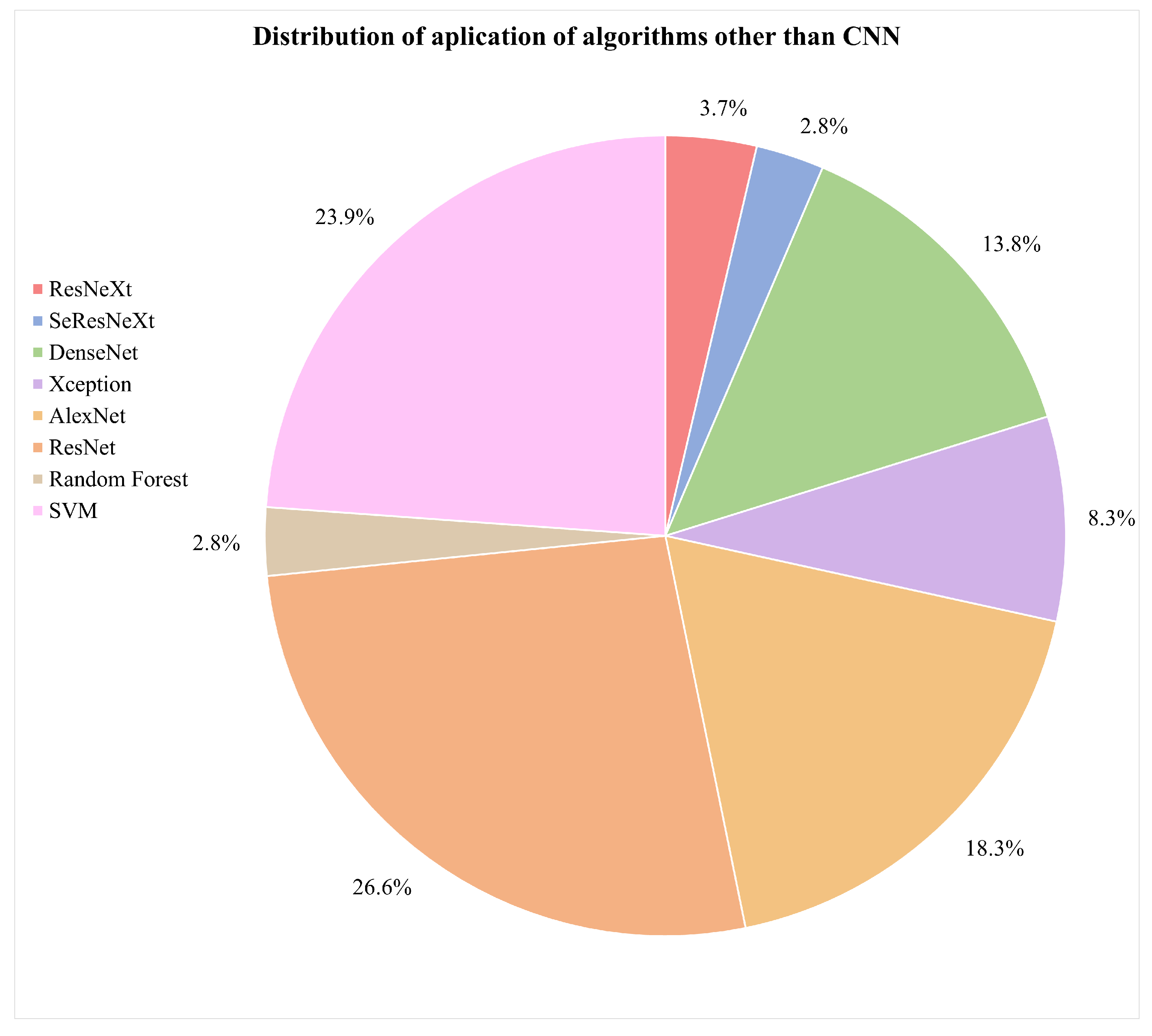

- What are the types of algorithms applied to detect skin cancer?

According to the reviewed studies, the most commonly used algorithms in this field are convolutional neural networks (CNNs). In general terms, CNNs comprise a series of convolutional layers, which extract the primary features by applying various kernels to generate feature maps. Pooling layers then gradually reduce the image size while retaining the distinctive features that will be used to train the model. These layers are applied sequentially, starting from the original input image in the first layer of the network.

The architecture of a CNN can vary in the number of convolutional or pooling layers, and it can also incorporate fully connected (FC) layers. FC layers take the previously extracted features and carry out the classification phase, which may span several successive layers until the data are prepared for the final output [75,76]. These layers can be complemented with activation layers to introduce nonlinearity. Additionally, techniques such as “dropout” are employed to prevent overfitting by randomly deactivating certain neurons during training. “Early stopping” identifies the point at which the model stops improving and halts training there, which saves computational resources. In summary, these techniques complement the architecture, improving classification and mitigating overfitting.

The reviewed studies also showed that applying CNNs in conjunction with pretrained models for skin cancer detection, especially melanoma, has achieved improved accuracy. The predefined algorithms reviewed in the studies include ResNeXt, SeResNeXt, DenseNet, Xception, AlexNet, ResNet, SVMs, and random forests [31,33,43,45,60,74].
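
The convolution and pooling stages described above can be sketched in plain Python. This is a minimal illustration and not the implementation used in any reviewed study: the function names, the toy 6 × 6 image, and the vertical-edge kernel are all assumptions chosen for readability, and real models would rely on a library such as TensorFlow or PyTorch.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Activation layer: replace negative responses with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 window."""
    h = len(feature_map) // 2 * 2
    w = len(feature_map[0]) // 2 * 2
    return [
        [
            max(feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1])
            for j in range(0, w, 2)
        ]
        for i in range(0, h, 2)
    ]


# Toy 6x6 "image" (assumption): dark left half, bright right half.
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]

# Hand-written kernel that responds to dark-to-bright vertical edges (assumption).
kernel = [[-1.0, 0.0, 1.0],
          [-1.0, 0.0, 1.0],
          [-1.0, 0.0, 1.0]]

features = relu(conv2d_valid(image, kernel))  # 4x4 feature map
pooled = max_pool2x2(features)                # 2x2 map after pooling
```

In a trained CNN the kernel values are learned rather than hand-written, and many kernels are applied in parallel to produce a stack of feature maps.
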
Furthermore, the examined literature offers little detailed documentation of the software libraries used to implement the algorithms, a gap that warrants attention in future research. Within the scope of the reviewed studies, however, TensorFlow and PyTorch were identified as the predominant libraries for developing and training neural networks. The following graph illustrates the presence of the mentioned algorithms in the articles that are part of this research, where they were considered either as part of the proposed experimentation or as a general reference in the presented conceptual framework [65,77,78,79].

Figure 9 illustrates the distribution of various machine learning algorithms used instead of CNNs. The majority of applications focused on advanced algorithms such as ResNet and SVMs, which together represented more than half of the total. Algorithms like AlexNet and DenseNet also had a significant share. Conversely, methods such as ResNeXt, SeResNeXt, and random forests were applied to a lesser extent, indicating a preference for more established or possibly more effective models in the set of applications under consideration.

Approaches that combine pretrained models with CNNs have also been prominent in the literature. Figure 10 compares purely pretrained models (orange) with hybrid pretrained models (blue).
In this regard, hybrid designs led by algorithms such as ResNet have been recurrently complemented by CNNs, mostly because the two work well together and yield good results.

The use of hybrid algorithms also brings in the concepts of transfer learning and fine-tuning, two techniques worth exploring in this area, since the main idea is to take a pretrained model and make fine adjustments for a specific task.

Transfer learning refers to a technique where a model developed for one task is reused as the starting point for a model in a second task. Often, the initial layers of the pretrained network are frozen, meaning that the weights of these layers are not updated during further training. The frozen layers act as generic feature extractors.

Fine-tuning is an additional step in transfer learning. After a model is initialized with weights from a pretrained model, training continues on the new dataset, finely adjusting the weights of some or all layers so that the model adapts more specifically to the characteristics of the new dataset, which can result in better performance for a particular situation [1,8,76,80].

CNNs applied in conjunction with optimization algorithms constitute a powerful approach in the field of deep learning, particularly in computer vision tasks such as image recognition, classification, and segmentation. Optimization algorithms are essential for efficiently training a CNN. During training, the goal is to minimize a loss function, which measures how far the model’s predictions are from the actual outcomes. Optimization adjusts the weights of the neural network to reduce this loss. Among the most used algorithms are the following:

- Stochastic gradient descent (SGD): This is a classic method that updates the weights after viewing each training sample or a small batch of samples; the noise this introduces into the updates can help the optimizer escape shallow local minima [43].

- Momentum: This improves SGD by taking into account the gradient from the previous update to smooth oscillations and speed up training [43].

- Optimization algorithms in the learning process of CNNs: This approach enables the adjustment of weights and biases in pretrained CNN models to enhance accuracy in the detection and diagnosis of skin cancer [81].
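
The update rules listed above, together with the layer freezing described for transfer learning, can be sketched as follows. This is a hedged toy example rather than a method taken from the reviewed studies: the parameter names `backbone.w` and `head.w`, the quadratic toy loss, and the hyperparameter values are assumptions made purely for illustration.

```python
def sgd_momentum_step(params, grads, velocity, lr=0.1, momentum=0.9,
                      frozen=frozenset()):
    """Apply one momentum-SGD update in place, skipping frozen parameters."""
    for name, grad in grads.items():
        if name in frozen:
            continue  # frozen (pretrained) weights keep their values
        # The velocity blends the previous update with the current gradient.
        velocity[name] = momentum * velocity[name] - lr * grad
        params[name] += velocity[name]


def toy_gradients(params, targets):
    """Gradient of the toy loss sum((w - target)^2) for each weight."""
    return {name: 2.0 * (params[name] - targets[name]) for name in params}


# Hypothetical two-parameter "model": a pretrained backbone weight and a new head weight.
params = {"backbone.w": 1.0, "head.w": 0.0}
targets = {"backbone.w": 5.0, "head.w": 3.0}  # minimizers of the toy loss
velocity = {name: 0.0 for name in params}

for _ in range(200):
    grads = toy_gradients(params, targets)
    sgd_momentum_step(params, grads, velocity, frozen={"backbone.w"})

# params["backbone.w"] is unchanged; params["head.w"] has moved close to 3.0.
```

Removing a name from `frozen` corresponds to fine-tuning that parameter as well; setting `momentum=0.0` reduces the step to plain gradient descent on the toy loss.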

- What types of optimizers are used to improve the accuracy of the results?

Throughout the extensive analysis of the literature, a range of algorithms designed for skin cancer detection was evident, featuring a variety of network architectures and tuning parameters and the integration of optimizers within their training routines. Optimizers are crucial in a CNN framework, as they serve to refine and augment key aspects such as the following:

- Stability and convergence: Different optimizers possess properties that can impact the stability and rate of convergence during training. Selecting the appropriate optimizer can aid in circumventing issues such as training stagnation or divergence.

- Efficient learning: Optimizers enable the efficient adjustment of weights and biases in a CNN throughout the training process. This is vital for developing a model that can effectively learn from a dataset of skin images, which is often large and intricate.

- Overcoming local minima: Optimizers assist in navigating past local minima in the loss function, which is particularly crucial in complex problems like skin cancer detection, where the objective function may have multiple local minima [58].
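
As one concrete illustration of how an optimizer adjusts a weight during training, the sketch below applies the standard Adam update (adaptive moment estimation, one of the optimizers used in the reviewed studies) to a one-dimensional toy loss. The loss function, starting point, learning rate, and step count are assumptions chosen for the example, not values reported in the literature.

```python
import math


def adam_minimize(grad_fn, w0, lr=0.1, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=500):
    """Minimize a scalar function with Adam, given its gradient `grad_fn`."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Toy loss (w - 3)^2, whose gradient is 2 * (w - 3); the minimum is at w = 3.
w_star = adam_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
```

Dividing by the running root mean square of the gradient gives each weight its own effective step size, which is the property that distinguishes adaptive optimizers such as Adam and RMSProp from plain SGD.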

Considering the points discussed, optimizers appear to be critical to the effectiveness of CNN algorithms for detecting skin cancer [74], as they facilitate efficient training, support proper convergence, and help the model overcome optimization challenges when the network’s weights are adjusted. The choice of an optimizer, along with its hyperparameter tuning, is an essential aspect of developing a detection algorithm. In the literature analyzed, Adaptive Moment Estimation (ADAM), stochastic gradient descent (SGD), and root mean square propagation (RMSProp) emerged as the most frequently employed optimizers. Table 8 summarizes the advantages and disadvantages of each of these optimizers.

In the reviewed papers, it was possible to discern the significance of the outcomes achieved for various algorithm configurations combined with an optimizer, with ADAM being the most commonly employed one. Figure 11 outlines three selected papers, which illustrate three different stages of contributions within the research domain in recent years. For instance, the first work reported a design based solely on CNNs that achieved 68% accuracy, compared with the 88% accuracy yielded by hybrid pretrained approaches, which represents a notable improvement.

In the first study, entitled “Deep learning outperformed 11 pathologists in the classification of histopathological melanoma images” [31], a deep learning method based solely on CNNs surpassed 11 pathologists in classifying histopathological melanoma images, achieving 68% accuracy.
The second paper, “An approach for multiclass skin lesion classification based on ensemble learning” [65], employed an ensemble learning approach to classify skin lesions into multiple categories, using specific algorithms such as ResNeXt, SeResNeXt, DenseNet, Xception, and ResNet to achieve an average accuracy of 88% on a dataset of 18,730 dermoscopy images. This result significantly exceeds that of the first study, which solely used CNNs. Finally, the third article, “Deep learning techniques for skin lesion analysis and melanoma cancer detection: a survey of state-of-the-art” [40], summarizes a variety of deep learning techniques for skin lesion analysis and melanoma cancer detection, highlighting a CNN model optimized with a deep residual network and CDCNN that achieved 99.2% accuracy in classifying 11,720 images from the ISIC 2018 database, and provides an overview of advancements in this field up to the year 2020.

Overall, the use of optimizers seems to be a consistent strategy for improving network performance, with ADAM standing out in several studies, suggesting its popularity and efficacy in optimizing various CNN architectures. However, in some cases, SGD or RMSProp may perform equally well or even better, especially when the hyperparameters are properly adjusted. The final selection often involves experimentation and fine-tuning, meaning that the choice of optimizer depends greatly on the specific problem and the architecture of the neural network.

- What are the datasets used in skin cancer detection studies?

The most commonly used datasets in the reviewed studies were the Human Against Machine (HAM10000) dataset, which is publicly distributed through the Harvard Dataverse, and the International Skin Imaging Collaboration (ISIC) dataset.
Both contain a large number of high-quality dermatoscopic images of skin lesions, including skin cancer (10,015 and 11,720 images, respectively). The HAM10000 dataset includes seven diagnostic categories, with nevus, melanoma, and carcinoma being among the most frequent ones. These images have been extensively used for training machine learning models, specifically for classification tasks through CNNs [46,48]. The ISIC dataset represents a global contribution initiative, featuring a vast collection of images that include various categories but with a focus on melanoma-type cancer [2,82,83,84,85,86].

Both datasets have also been used for the research and development of automatic diagnostic tools, serving as a standard reference in international challenges and competitions. They include metadata related to clinical diagnosis, lesion type, and body location, among other factors. Their open access and the diversity of data they offer make them highly valuable to the scientific community, in dermatological research, and in the development of artificial intelligence tools for the diagnosis of skin diseases as a significant complement to expert diagnosis [87,88,89,90,91]. Additionally, the PH2 dataset consists of a compilation of 200 images, focusing on a local objective rather than being broadly applicable to other case studies [69,74]. Regarding known issues with data sources, a minority of researchers use non-public databases and internet images, which complicates the replication of results because the data are unavailable and the selection of internet images may be biased [92,93,94,95]. In addition, most of the datasets currently available focus on skin lesions in lighter skin tones, with many of the images in the ISIC dataset originating mainly from the United States, Europe, and Australia.
Furthermore, achieving higher accuracy and effectiveness in classification requires attention to details such as accounting for the intensity of skin color when training the model [43,47]. The size of the lesion also plays a crucial role, as lesions smaller than 6 mm tend to be more challenging to identify. As previously addressed in the first question, the treatment or preprocessing of images [33] prior to their training with convolutional neural networks (CNNs) is a critical step for enhancing the efficacy and efficiency of a model. The following key points are suggested for consideration in order to enhance image processing:

- Quantity

  - Generative adversarial networks (GANs): This synthetic data generation method aims to produce image samples that appear real through a minimax game between two players: a generator and a discriminator. The generator transforms a distribution of random noise into realistic images, while the discriminator learns to differentiate between these generated images and real training data [32].

- Quality

  - Color standardization: Ensuring that all images have the same color space (for example, RGB or grayscale) is crucial for maintaining data consistency [98].

  - Contrast adjustment: Enhancing the contrast of an image can help to highlight important features.

- Size

  - Resizing: Images should be of a uniform size before being fed into a CNN. Therefore, it is important to check the image sizes across the entire dataset and choose a target size that preserves important information without being excessively large, which helps to reduce computational requirements [38,57].

- Processing

  - Normalization: This involves scaling pixel values to a common range, such as from 0 to 1 or from −1 to 1, which helps the network train more efficiently. Normalization to the range from 0 to 1 is typically carried out by dividing the pixel values by 255 (the maximum value of an 8-bit pixel) [6].

  - Noise reduction: In some cases, images may contain noise that can be detrimental to the model. Applying filters to reduce or eliminate this noise can be useful [100].

- Transformation

  - Whitening: This transforms the image so that it has a mean close to zero and uniform variance. This can enhance convergence during training.

  - Edge detection and feature extraction: In some cases, it may be useful to preprocess the image to extract specific features, such as edges, using techniques like Sobel or Canny filters. The Sobel filter uses convolutions with two 3 × 3 matrices: one to detect changes in pixel intensity in the horizontal direction (Sobel X) and another for the vertical direction (Sobel Y) [101]. The Canny filter is a more sophisticated, multi-step approach to edge detection and is considered one of the best due to its accuracy [102]. Both filters have their own strengths: the Sobel filter is simpler and faster to compute, while the Canny filter is more robust and effective in precise edge detection, especially in the presence of noise [103].
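
Several of the preprocessing steps listed above can be sketched in plain Python. The example is a simplified illustration under stated assumptions: images are nested lists of grayscale values, the function names are hypothetical, per-image standardization is used as a simple stand-in for the whitening step, and the Canny filter is not implemented. In practice, libraries such as OpenCV or scikit-image would normally be used.

```python
def normalize_0_1(image):
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return [[p / 255.0 for p in row] for row in image]


def standardize(image):
    """Shift an image to zero mean and scale it to unit variance."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    var = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    std = var ** 0.5 or 1.0  # fall back to 1.0 for a completely flat image
    return [[(p - mean) / std for p in row] for row in image]


# The two 3x3 Sobel kernels: horizontal and vertical intensity changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image):
    """Gradient magnitude from both Sobel kernels (valid region only)."""
    out = []
    for i in range(len(image) - 2):
        row = []
        for j in range(len(image[0]) - 2):
            gx = sum(image[i + a][j + b] * SOBEL_X[a][b]
                     for a in range(3) for b in range(3))
            gy = sum(image[i + a][j + b] * SOBEL_Y[a][b]
                     for a in range(3) for b in range(3))
            row.append((gx * gx + gy * gy) ** 0.5)
        out.append(row)
    return out


# Toy 4x4 grayscale image (assumption): dark left half, bright right half.
raw = [[0, 0, 255, 255] for _ in range(4)]

scaled = normalize_0_1(raw)          # values now in [0.0, 1.0]
standardized = standardize(scaled)   # zero mean, unit variance
edges = sobel_magnitude(scaled)      # strong response along the vertical edge
```

Here `edges` has a large magnitude everywhere because every 3 × 3 window of the toy image straddles the dark-to-bright boundary; on a real dermoscopic image the response would concentrate along the lesion border.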

The selection of preprocessing techniques depends largely on the nature of the problem and the particular dataset in question [104,105]. In addition to the aforementioned points, including clinical data such as race, age, gender, and skin type in classification systems could substantially improve their precision, offering dermatologists valuable supplementary information for decision-making. It is therefore essential to consider the metadata of the available datasets and to analyze how this information relates to the images. These aspects are crucial for future research in this application domain. Moreover, it has been noted that deep learning tends to be more effective than traditional machine learning methods, especially on datasets with a substantial number of images per class. This efficacy can also be achieved on smaller datasets by using data augmentation techniques.

- What metrics are used to validate the effectiveness of these algorithms in skin cancer detection?

- Recall: This measures the proportion of malignant lesions that are correctly detected, which is essential because failing to identify a melanoma can have severe consequences [110].

- Specificity: This measures the proportion of benign lesions that are correctly identified; high specificity reduces false positives, which is crucial to avoid unnecessary biopsies and the anxiety associated with a misdiagnosis [111].

- F1 score: This is important for achieving a balance between sensitivity and precision, especially when working with imbalanced data, as is common in skin lesions where melanoma cases may be less frequent than benign ones [112].

- AUC-ROC: This provides a comprehensive measure of model performance across all classification thresholds, aiding in selecting the most appropriate threshold for malignant lesion detection [113].
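
The metrics above can be computed directly from predicted and true labels. The following is a minimal sketch using only the Python standard library: the labels, scores, and 0.5 threshold are made-up illustrative values (1 = malignant, 0 = benign), not results from any reviewed study, and the AUC is computed with the rank-based definition rather than by integrating an explicit ROC curve.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, tn, fp, fn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    """Recall, specificity, precision, F1 score, and accuracy as a dict."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / len(y_true)
    return {"recall": recall, "specificity": specificity,
            "precision": precision, "f1": f1, "accuracy": accuracy}


def auc_roc(y_true, scores):
    """Probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up example: 3 malignant and 5 benign lesions with model scores.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1, 0.4, 0.05]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # 0.5 threshold (assumption)

metrics = classification_metrics(y_true, y_pred)
auc = auc_roc(y_true, scores)
```

With these values one malignant lesion is missed and one benign lesion is flagged, so accuracy (0.75) looks reasonable while recall (2/3) shows the missed melanoma, which is why accuracy alone is a weak criterion on imbalanced data.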

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Manimurugan, S. Hybrid high performance intelligent computing approach of CACNN and RNN for skin cancer image grading. Soft Comput. 2023, 27, 579–589.

- Girdhar, N.; Sinha, A.; Gupta, S. DenseNet-II: An improved deep convolutional neural network for melanoma cancer detection. Soft Comput. 2023, 27, 13285–13304.

- Balaha, H.M.; Hassan, A.E.S. Skin cancer diagnosis based on deep transfer learning and sparrow search algorithm. Neural Comput. Appl. 2023, 35, 815–853.

- Dascalu, A.; Walker, B.N.; Oron, Y.; David, E.O. Non-melanoma skin cancer diagnosis: A comparison between dermoscopic and smartphone images by unified visual and sonification deep learning algorithms. J. Cancer Res. Clin. Oncol. 2022, 148, 2497–2505.

- Hribernik, N.; Huff, D.T.; Studen, A.; Zevnik, K.; Žan, K.; Emamekhoo, H.; Škalic, K.; Jeraj, R.; Reberšek, M. Quantitative imaging biomarkers of immune-related adverse events in immune-checkpoint blockade-treated metastatic melanoma patients: A pilot study. Eur. J. Nucl. Med. Mol. Imaging 2022, 49, 1857–1869.

- Yang, G.; Luo, S.; Greer, P. A Novel Vision Transformer Model for Skin Cancer Classification. Neural Process. Lett. 2023, 55, 9335–9351.

- Majumder, S.; Ullah, M.A. Feature extraction from dermoscopy images for melanoma diagnosis. SN Appl. Sci. 2019, 1, 753.

- Qureshi, A.S.; Roos, T. Transfer Learning with Ensembles of Deep Neural Networks for Skin Cancer Detection in Imbalanced Data Sets. Neural Process. Lett. 2023, 55, 4461–4479.

- Pour, M.P.; Seker, H. Transform domain representation-driven convolutional neural networks for skin lesion segmentation. Expert Syst. Appl. 2020, 144, 113129.

- Hasan, M.K.; Roy, S.; Mondal, C.; Alam, M.A.; Elahi, M.T.E.; Dutta, A.; Raju, S.M.U.; Jawad, M.T.; Ahmad, M. Dermo-DOCTOR: A framework for concurrent skin lesion detection and recognition using a deep convolutional neural network with end-to-end dual encoders. Biomed. Signal Process. Control 2021, 68, 102661.

- Ahmedt-Aristizabal, D.; Nguyen, C.; Tychsen-Smith, L.; Stacey, A.; Li, S.; Pathikulangara, J.; Petersson, L.; Wang, D. Monitoring of Pigmented Skin Lesions Using 3D Whole Body Imaging. Comput. Methods Programs Biomed. 2023, 232, 107451.

- Fischman, S.; Pérez-Anker, J.; Tognetti, L.; Naro, A.D.; Suppa, M.; Cinotti, E.; Viel, T.; Monnier, J.; Rubegni, P.; del Marmol, V.; et al. Non-invasive scoring of cellular atypia in keratinocyte cancers in 3D LC-OCT images using Deep Learning. Sci. Rep. 2022, 12, 481.

- Liu, X.; Ouellette, S.; Jamgochian, M.; Liu, Y.; Rao, B. One-class machine learning classification of skin tissue based on manually scanned optical coherence tomography imaging. Sci. Rep. 2023, 13, 867.

- Gorris, M.A.; van der Woude, L.L.; Kroeze, L.I.; Bol, K.; Verrijp, K.; Amir, A.L.; Meek, J.; Textor, J.; Figdor, C.G.; de Vries, I.J.M. Paired primary and metastatic lesions of patients with ipilimumab-treated melanoma: High variation in lymphocyte infiltration and HLA-ABC expression whereas tumor mutational load is similar and correlates with clinical outcome. J. Immunother. Cancer 2022, 10, e004329.

- Alsaade, F.W.; Aldhyani, T.H.; Al-Adhaileh, M.H. Developing a Recognition System for Diagnosing Melanoma Skin Lesions Using Artificial Intelligence Algorithms. Comput. Math. Methods Med. 2021, 2021, 9998379.

- Khan, M.M.; Tazin, T.; Hussain, M.Z.; Mostakim, M.; Rehman, T.; Singh, S.; Gupta, V.; Alomeir, O. Breast Tumor Detection Using Robust and Efficient Machine Learning and Convolutional Neural Network Approaches. Comput. Intell. Neurosci. 2022.

- Haggenmüller, S.; Maron, R.C.; Hekler, A.; Utikal, J.S.; Barata, C.; Barnhill, R.L.; Beltraminelli, H.; Berking, C.; Betz-Stablein, B.; Blum, A.; et al. Skin cancer classification via convolutional neural networks: Systematic review of studies involving human experts. Eur. J. Cancer 2021, 156, 202–216.

- Kassem, M.A.; Hosny, K.M.; Damaševičius, R.; Eltoukhy, M.M. Machine learning and deep learning methods for skin lesion classification and diagnosis: A systematic review. Diagnostics 2021, 11, 1390.

- Rai, H.M. Cancer detection and segmentation using machine learning and deep learning techniques: A review. Multimed. Tools Appl. 2023.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. Declaración PRISMA 2020: Una guía actualizada para la publicación de revisiones sistemáticas. Rev. Española Cardiol. 2021, 74, 790–799.

- Ain, Q.U.; Al-Sahaf, H.; Xue, B.; Zhang, M. Generating Knowledge-Guided Discriminative Features Using Genetic Programming for Melanoma Detection. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 5, 554–569.

- Kumar, A.; Vatsa, A. Untangling Classification Methods for Melanoma Skin Cancer. Front. Big Data 2022, 5, 848614.

- Usmani, U.A.; Watada, J.; Jaafar, J.; Aziz, I.A.; Roy, A. A reinforcement learning algorithm for automated detection of skin lesions. Appl. Sci. 2021, 11, 9367.

- Ruini, C.; Schlingmann, S.; Žan, J.; Avci, P.; Padrón-Laso, V.; Neumeier, F.; Koveshazi, I.; Ikeliani, I.U.; Patzer, K.; Kunrad, E.; et al. Machine learning based prediction of squamous cell carcinoma in ex vivo confocal laser scanning microscopy. Cancers 2021, 13, 5522.

- Alzahrani, S.; Al-Bander, B.; Al-Nuaimy, W. A comprehensive evaluation and benchmarking of convolutional neural networks for melanoma diagnosis. Cancers 2021, 13, 4494.

- Wang, G.; Yan, P.; Tang, Q.; Yang, L.; Chen, J. Multiscale Feature Fusion for Skin Lesion Classification. BioMed Res. Int. 2023, 2023, 5146543.

- Albraikan, A.A.; Nemri, N.; Alkhonaini, M.A.; Hilal, A.M.; Yaseen, I.; Motwakel, A. Automated Deep Learning Based Melanoma Detection and Classification Using Biomedical Dermoscopic Images. Comput. Mater. Contin. 2023, 74, 2443–2459.

- Felmingham, C.; MacNamara, S.; Cranwell, W.; Williams, N.; Wada, M.; Adler, N.R.; Ge, Z.; Sharfe, A.; Bowling, A.; Haskett, M.; et al. Improving Skin cancer Management with ARTificial Intelligence (SMARTI): Protocol for a preintervention/postintervention trial of an artificial intelligence system used as a diagnostic aid for skin cancer management in a specialist dermatology setting. BMJ Open 2022, 12, e050203.

- Alabduljabbar, R.; Alshamlan, H. Intelligent multiclass skin cancer detection using convolution neural networks. Comput. Mater. Contin. 2021, 69, 831–847.

- Haenssle, H.A.; Fink, C.; Schneiderbauer, R.; Toberer, F.; Buhl, T.; Blum, A.; Kalloo, A.; Hassen, A.B.H.; Thomas, L.; Enk, A.; et al. Man against Machine: Diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann. Oncol. 2018, 29, 1836–1842.

- Brinker, T.J.; Hekler, A.; Enk, A.H.; Klode, J.; Hauschild, A.; Berking, C.; Schilling, B.; Haferkamp, S.; Schadendorf, D.; Holland-Letz, T.; et al. Deep learning outperformed 136 of 157 dermatologists in a head-to-head dermoscopic melanoma image classification task. Eur. J. Cancer 2019, 113, 47–54.

- Munir, K.; Elahi, H.; Ayub, A.; Frezza, F.; Rizzi, A. Cancer diagnosis using deep learning: A bibliographic review. Cancers 2019, 11, 1235.

- Al-masni, M.A.; Kim, D.H.; Kim, T.S. Multiple skin lesions diagnostics via integrated deep convolutional networks for segmentation and classification. Comput. Methods Programs Biomed. 2020, 190, 105351.

- Saba, T.; Khan, M.A.; Rehman, A.; Marie-Sainte, S.L. Region Extraction and Classification of Skin Cancer: A Heterogeneous framework of Deep CNN Features Fusion and Reduction. J. Med. Syst. 2019, 43, 289.

- Goyal, M.; Oakley, A.; Bansal, P.; Dancey, D.; Yap, M.H. Skin Lesion Segmentation in Dermoscopic Images with Ensemble Deep Learning Methods. IEEE Access 2020, 8, 4171–4181.

- Brinker, T.J.; Hekler, A.; Enk, A.H.; Klode, J.; Hauschild, A.; Berking, C.; Schilling, B.; Haferkamp, S.; Schadendorf, D.; Fröhling, S.; et al. A convolutional neural network trained with dermoscopic images performed on par with 145 dermatologists in a clinical melanoma image classification task. Eur. J. Cancer 2019, 111, 148–154.

- Brinker, T.J.; Hekler, A.; Enk, A.H.; Berking, C.; Haferkamp, S.; Hauschild, A.; Weichenthal, M.; Klode, J.; Schadendorf, D.; Holland-Letz, T.; et al. Deep neural networks are superior to dermatologists in melanoma image classification. Eur. J. Cancer 2019, 119, 11–17.

- Mahbod, A.; Schaefer, G.; Wang, C.; Dorffner, G.; Ecker, R.; Ellinger, I. Transfer learning using a multi-scale and multi-network ensemble for skin lesion classification. Comput. Methods Programs Biomed. 2020, 193, 105475.

- Hekler, A.; Utikal, J.S.; Enk, A.H.; Solass, W.; Schmitt, M.; Klode, J.; Schadendorf, D.; Sondermann, W.; Franklin, C.; Bestvater, F.; et al. Deep learning outperformed 11 pathologists in the classification of histopathological melanoma images. Eur. J. Cancer 2019, 118, 91–96.

- Adegun, A.; Viriri, S. Deep learning techniques for skin lesion analysis and melanoma cancer detection: A survey of state-of-the-art. Artif. Intell. Rev. 2021, 54, 811–841.

- Zhang, N.; Cai, Y.X.; Wang, Y.Y.; Tian, Y.T.; Wang, X.L.; Badami, B. Skin cancer diagnosis based on optimized convolutional neural network. Artif. Intell. Med. 2020, 102, 101756.

- Kadampur, M.A.; Riyaee, S.A. Skin cancer detection: Applying a deep learning based model driven architecture in the cloud for classifying dermal cell images. Inform. Med. Unlocked 2020, 18, 100282.

- Mahbod, A.; Schaefer, G.; Ellinger, I.; Ecker, R.; Pitiot, A.; Wang, C. Fusing fine-tuned deep features for skin lesion classification. Comput. Med. Imaging Graph. 2019, 71, 19–29.

- Hekler, A.; Utikal, J.S.; Enk, A.H.; Berking, C.; Klode, J.; Schadendorf, D.; Jansen, P.; Franklin, C.; Holland-Letz, T.; Krahl, D.; et al. Pathologist-level classification of histopathological melanoma images with deep neural networks. Eur. J. Cancer 2019, 115, 79–83.

- Ashraf, R.; Afzal, S.; Rehman, A.U.; Gul, S.; Baber, J.; Bakhtyar, M.; Mehmood, I.; Song, O.Y.; Maqsood, M. Region-of-Interest Based Transfer Learning Assisted Framework for Skin Cancer Detection. IEEE Access 2020, 8, 147858–147871.

- Maron, R.C.; Weichenthal, M.; Utikal, J.S.; Hekler, A.; Berking, C.; Hauschild, A.; Enk, A.H.; Haferkamp, S.; Klode, J.; Schadendorf, D.; et al. Systematic outperformance of 112 dermatologists in multiclass skin cancer image classification by convolutional neural networks. Eur. J. Cancer 2019, 119, 57–65.

- Albahar, M.A. Skin Lesion Classification Using Convolutional Neural Network with Novel Regularizer. IEEE Access 2019, 7, 38306–38313.

- Kumar, M.; Alshehri, M.; AlGhamdi, R.; Sharma, P.; Deep, V. A DE-ANN Inspired Skin Cancer Detection Approach Using Fuzzy C-Means Clustering. Mobile Netw. Appl. 2020, 25, 1319–1329.

- Nawaz, M.; Mehmood, Z.; Nazir, T.; Naqvi, R.A.; Rehman, A.; Iqbal, M.; Saba, T. Skin cancer detection from dermoscopic images using deep learning and fuzzy k-means clustering. Microsc. Res. Tech. 2022, 85, 339–351.

- Khan, M.A.; Sharif, M.I.; Raza, M.; Anjum, A.; Saba, T.; Shad, S.A. Skin lesion segmentation and classification: A unified framework of deep neural network features fusion and selection. Expert Syst. 2022, 39, e12497.

- Turani, Z.; Fatemizadeh, E.; Blumetti, T.; Daveluy, S.; Moraes, A.F.; Chen, W.; Mehregan, D.; Andersen, P.E.; Nasiriavanaki, M. Optical radiomic signatures derived from optical coherence tomography images improve identification of melanoma. Cancer Res. 2019, 79, 2021–2030.

- Dey, N.; Rajinikanth, V.; Ashour, A.S.; Tavares, J.M.R. Social group optimization supported segmentation and evaluation of skin melanoma images. Symmetry 2018, 10, 51.

- Tan, T.Y.; Zhang, L.; Lim, C.P.; Fielding, B.; Yu, Y.; Anderson, E. Evolving Ensemble Models for Image Segmentation Using Enhanced Particle Swarm Optimization. IEEE Access 2019, 7, 34004–34019.

- Öztürk, Ş.; Özkaya, U. Skin Lesion Segmentation with Improved Convolutional Neural Network. J. Digit. Imaging 2020, 33, 958–970.

- Gu, Y.; Ge, Z.; Bonnington, C.P.; Zhou, J. Progressive Transfer Learning and Adversarial Domain Adaptation for Cross-Domain Skin Disease Classification. IEEE J. Biomed. Health Inform. 2020, 24, 1379–1393.

- Thanh, D.N.; Prasath, V.B.; Hieu, L.M.; Hien, N.N. Melanoma Skin Cancer Detection Method Based on Adaptive Principal Curvature, Colour Normalisation and Feature Extraction with the ABCD Rule. J. Digit. Imaging 2020, 33, 574–585.

- Amin, J.; Sharif, A.; Gul, N.; Anjum, M.A.; Nisar, M.W.; Azam, F.; Bukhari, S.A.C. Integrated design of deep features fusion for localization and classification of skin cancer. Pattern Recognit. Lett. 2020, 131, 63–70.

- Bakkouri, I.; Afdel, K. Computer-aided diagnosis (CAD) system based on multi-layer feature fusion network for skin lesion recognition in dermoscopy images. Multimed. Tools Appl. 2020, 79, 20483–20518.

- Wei, L.; Ding, K.; Hu, H. Automatic Skin Cancer Detection in Dermoscopy Images Based on Ensemble Lightweight Deep Learning Network. IEEE Access 2020, 8, 99633–99647.

- Kaymak, R.; Kaymak, C.; Ucar, A. Skin lesion segmentation using fully convolutional networks: A comparative experimental study. Expert Syst. Appl. 2020, 161, 113742.

- Khan, M.A.; Akram, T.; Sharif, M.; Javed, K.; Rashid, M.; Bukhari, S.A.C. An integrated framework of skin lesion detection and recognition through saliency method and optimal deep neural network features selection. Neural Comput. Appl. 2020, 32, 15929–15948.

- Anand, V.; Gupta, S.; Koundal, D.; Singh, K. Fusion of U-Net and CNN model for segmentation and classification of skin lesion from dermoscopy images. Expert Syst. Appl. 2023, 213, 119230.

- Okur, E.; Turkan, M. A survey on automated melanoma detection. Eng. Appl. Artif. Intell. 2018, 73, 50–67.

- Abbas, Q.; Celebi, M.E. DermoDeep-A classification of melanoma-nevus skin lesions using multi-feature fusion of visual features and deep neural network. Multimed. Tools Appl. 2019, 78, 23559–23580.

- Rahman, Z.; Hossain, M.S.; Islam, M.R.; Hasan, M.M.; Hridhee, R.A. An approach for multiclass skin lesion classification based on ensemble learning. Inform. Med. Unlocked 2021, 25, 100659. [Google Scholar] [CrossRef]

- Oskal, K.R.; Risdal, M.; Janssen, E.A.; Undersrud, E.S.; Gulsrud, T.O. A U-net based approach to epidermal tissue segmentation in whole slide histopathological images. SN Appl. Sci. 2019, 1, 672. [Google Scholar] [CrossRef]

- Sreelatha, T.; Subramanyam, M.V.; Prasad, M.N. Early Detection of Skin Cancer Using Melanoma Segmentation technique. J. Med. Syst. 2019, 43, 190. [Google Scholar] [CrossRef]

- Olugbara, O.O.; Taiwo, T.B.; Heukelman, D. Segmentation of Melanoma Skin Lesion Using Perceptual Color Difference Saliency with Morphological Analysis. Math. Probl. Eng. 2018, 2018, 1524286. [Google Scholar] [CrossRef]

- Alizadeh, S.M.; Mahloojifar, A. Automatic skin cancer detection in dermoscopy images by combining convolutional neural networks and texture features. Int. J. Imaging Syst. Technol. 2021, 31, 695–707. [Google Scholar] [CrossRef]

- Wu, Z.; Zhao, S.; Peng, Y.; He, X.; Zhao, X.; Huang, K.; Wu, X.; Fan, W.; Li, F.; Chen, M.; et al. Studies on Different CNN Algorithms for Face Skin Disease Classification Based on Clinical Images. IEEE Access 2019, 7, 66505–66511. [Google Scholar] [CrossRef]

- Shetty, B.; Fernandes, R.; Rodrigues, A.P.; Chengoden, R.; Bhattacharya, S.; Lakshmanna, K. Skin lesion classification of dermoscopic images using machine learning and convolutional neural network. Sci. Rep. 2022, 12, 18134. [Google Scholar] [CrossRef]

- Nasr-Esfahani, E.; Rafiei, S.; Jafari, M.H.; Karimi, N.; Wrobel, J.S.; Samavi, S.; Soroushmehr, S.M.R. Dense pooling layers in fully convolutional network for skin lesion segmentation. Comput. Med. Imaging Graph. 2019, 78, 101658. [Google Scholar] [CrossRef] [PubMed]

- Mohakud, R.; Dash, R. Designing a grey wolf optimization based hyper-parameter optimized convolutional neural network classifier for skin cancer detection. J. King Saud Univ.—Comput. Inf. Sci. 2022, 34, 6280–6291. [Google Scholar] [CrossRef]

- Abunadi, I.; Senan, E.M. Deep learning and machine learning techniques of diagnosis dermoscopy images for early detection of skin diseases. Electronics 2021, 10, 3158. [Google Scholar] [CrossRef]

- Spyridonos, P.; Gaitanis, G.; Likas, A.; Bassukas, I.D. A convolutional neural network based system for detection of actinic keratosis in clinical images of cutaneous field cancerization. Biomed. Signal Process. Control 2023, 79, 104059. [Google Scholar] [CrossRef]

- Iqbal, S.; Qureshi, A.N.; Mustafa, G. Hybridization of CNN with LBP for Classification of Melanoma Images. Comput. Mater. Contin. 2022, 71, 4915–4939. [Google Scholar] [CrossRef]

- Mazoure, B.; Mazoure, A.; Bédard, J.; Makarenkov, V. DUNEScan: A web server for uncertainty estimation in skin cancer detection with deep neural networks. Sci. Rep. 2022, 12, 179. [Google Scholar] [CrossRef]

- Imran, A.; Nasir, A.; Bilal, M.; Sun, G.; Alzahrani, A.; Almuhaimeed, A. Skin Cancer Detection Using Combined Decision of Deep Learners. IEEE Access 2022, 10, 118198–118212. [Google Scholar] [CrossRef]

- Mabrouk, M.S.; Sayed, A.Y.; Afifi, H.M.; Sheha, M.A.; Sharwy, A. Fully Automated Approach for Early Detection of Pigmented Skin Lesion Diagnosis Using ABCD. J. Healthc. Inform. Res. 2020, 4, 151–173. [Google Scholar] [CrossRef]

- Shehzad, K.; Zhenhua, T.; Shoukat, S.; Saeed, A.; Ahmad, I.; Bhatti, S.S.; Chelloug, S.A. A Deep-Ensemble-Learning-Based Approach for Skin Cancer Diagnosis. Electronics 2023, 12, 1342. [Google Scholar] [CrossRef]

- Zhang, L.; Gao, H.J.; Zhang, J.; Badami, B. Optimization of the Convolutional Neural Networks for Automatic Detection of Skin Cancer. Open Med. 2020, 15, 27–37. [Google Scholar] [CrossRef] [PubMed]

- Mukadam, S.B.; Patil, H.Y. Skin Cancer Classification Framework Using Enhanced Super Resolution Generative Adversarial Network and Custom Convolutional Neural Network. Appl. Sci. 2023, 13, 1210. [Google Scholar] [CrossRef]

- Tahir, M.; Naeem, A.; Malik, H.; Tanveer, J.; Naqvi, R.A.; Lee, S.W. DSCC-Net: Multi-Classification Deep Learning Models for Diagnosing of Skin Cancer Using Dermoscopic Images. Cancers 2023, 15, 2179. [Google Scholar] [CrossRef] [PubMed]

- Nie, Y.; Sommella, P.; Carratù, M.; O’Nils, M.; Lundgren, J. A Deep CNN Transformer Hybrid Model for Skin Lesion Classification of Dermoscopic Images Using Focal Loss. Diagnostics 2023, 13, 72. [Google Scholar] [CrossRef] [PubMed]

- Saba, T.; Javed, R.; Rahim, M.S.M.; Rehman, A.; Bahaj, S.A. IoMT Enabled Melanoma Detection Using Improved Region Growing Lesion Boundary Extraction. Comput. Mater. Contin. 2022, 71, 6219–6237. [Google Scholar] [CrossRef]

- Alam, M.J.; Mohammad, M.S.; Hossain, M.A.F.; Showmik, I.A.; Raihan, M.S.; Ahmed, S.; Mahmud, T.I. S2C-DeLeNet: A parameter transfer based segmentation-classification integration for detecting skin cancer lesions from dermoscopic images. Comput. Biol. Med. 2022, 150, 106148. [Google Scholar] [CrossRef] [PubMed]

- Abbas, Q.; Daadaa, Y.; Rashid, U.; Ibrahim, M.E. Assist-Dermo: A Lightweight Separable Vision Transformer Model for Multiclass Skin Lesion Classification. Diagnostics 2023, 13, 2531. [Google Scholar] [CrossRef] [PubMed]

- Ahmad, N.; Shah, J.H.; Khan, M.A.; Baili, J.; Ansari, G.J.; Tariq, U.; Kim, Y.J.; Cha, J.H. A novel framework of multiclass skin lesion recognition from dermoscopic images using deep learning and explainable AI. Front. Oncol. 2023, 13, 1151257. [Google Scholar] [CrossRef]

- Bistroń, M.; Piotrowski, Z. Comparison of Machine Learning Algorithms Used for Skin Cancer Diagnosis. Appl. Sci. 2022, 12, 9960. [Google Scholar] [CrossRef]

- Kaur, R.; GholamHosseini, H.; Sinha, R. Hairlines removal and low contrast enhancement of melanoma skin images using convolutional neural network with aggregation of contextual information. Biomed. Signal Process. Control 2022, 76, 103653. [Google Scholar] [CrossRef]

- El-Shafai, W.; El-Fattah, I.A.; Taha, T.E. Deep learning-based hair removal for improved diagnostics of skin diseases. Multimed. Tools Appl. 2023. [Google Scholar] [CrossRef]

- Ho, C.J.; Calderon-Delgado, M.; Lin, M.Y.; Tjiu, J.W.; Huang, S.L.; Chen, H.H. Classification of squamous cell carcinoma from FF-OCT images: Data selection and progressive model construction. Comput. Med. Imaging Graph. 2021, 93, 101992. [Google Scholar] [CrossRef] [PubMed]

- Hong, Y.; Zhang, G.; Wei, B.; Cong, J.; Xu, Y.; Zhang, K. Weakly supervised semantic segmentation for skin cancer via CNN superpixel region response. Multimed. Tools Appl. 2023, 82, 6829–6847. [Google Scholar] [CrossRef]

- Yaqoob, M.M.; Alsulami, M.; Khan, M.A.; Alsadie, D.; Saudagar, A.K.J.; AlKhathami, M. Federated Machine Learning for Skin Lesion Diagnosis: An Asynchronous and Weighted Approach. Diagnostics 2023, 13, 1964. [Google Scholar] [CrossRef] [PubMed]

- Gomathi, E.; Jayasheela, M.; Thamarai, M.; Geetha, M. Skin cancer detection using dual optimization based deep learning network. Biomed. Signal Process. Control 2023, 84, 104968. [Google Scholar] [CrossRef]

- Alheejawi, S.; Berendt, R.; Jha, N.; Maity, S.P.; Mandal, M. Automated proliferation index calculation for skin melanoma biopsy images using machine learning. Comput. Med. Imaging Graph. 2021, 89, 101893. [Google Scholar] [CrossRef] [PubMed]

- Hameed, A.; Umer, M.; Hafeez, U.; Mustafa, H.; Sohaib, A.; Siddique, M.A.; Madni, H.A. Skin lesion classification in dermoscopic images using stacked Convolutional Neural Network. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 3551–3565. [Google Scholar] [CrossRef]

- Serrano, C.; Lazo, M.; Serrano, A.; Toledo-Pastrana, T.; Barros-Tornay, R.; Acha, B. Clinically Inspired Skin Lesion Classification through the Detection of Dermoscopic Criteria for Basal Cell Carcinoma. J. Imaging 2022, 8, 197. [Google Scholar] [CrossRef]

- Kousis, I.; Perikos, I.; Hatzilygeroudis, I.; Virvou, M. Deep Learning Methods for Accurate Skin Cancer Recognition and Mobile Application. Electronics 2022, 11, 1294. [Google Scholar] [CrossRef]

- Naeem, A.; Anees, T.; Fiza, M.; Naqvi, R.A.; Lee, S.W. SCDNet: A Deep Learning-Based Framework for the Multiclassification of Skin Cancer Using Dermoscopy Images. Sensors 2022, 22, 5652. [Google Scholar] [CrossRef]

- Ranjan, R.; Avasthi, V. Edge Detection Using Guided Sobel Image Filtering. Wirel. Pers. Commun. 2023, 132, 651–677. [Google Scholar] [CrossRef]

- Mbaidin, A.; Cernadas, E.; Al-Tarawneh, Z.A.; Fernández-Delgado, M.; Domínguez-Petit, R.; Rábade-Uberos, S.; Hassanat, A. MSCF: Multi-Scale Canny Filter to Recognize Cells in Microscopic Images. Sustainability 2023, 15, 3693. [Google Scholar] [CrossRef]

- Guaragnella, C.; Rizzi, M. Simple and accurate border detection algorithm for melanoma computer aided diagnosis. Diagnostics 2020, 10, 423. [Google Scholar] [CrossRef] [PubMed]

- Kalpana, B.; Reshmy, A.K.; Pandi, S.S.; Dhanasekaran, S. OESV-KRF: Optimal ensemble support vector kernel random forest based early detection and classification of skin diseases. Biomed. Signal Process. Control 2023, 85, 104779. [Google Scholar] [CrossRef]

- Namboodiri, T.S.; Jayachandran, A. Multi-class skin lesions classification system using probability map based region growing and DCNN. Int. J. Comput. Intell. Syst. 2020, 13, 77–84. [Google Scholar] [CrossRef]

- Karuppiah, S.P.; Sheeba, A.; Padmakala, S.; Subasini, C.A. An Efficient Galactic Swarm Optimization Based Fractal Neural Network Model with DWT for Malignant Melanoma Prediction. Neural Process. Lett. 2022, 54, 5043–5062. [Google Scholar] [CrossRef]

- Kränke, T.; Tripolt-Droschl, K.; Röd, L.; Hofmann-Wellenhof, R.; Koppitz, M.; Tripolt, M. New AI-algorithms on smartphones to detect skin cancer in a clinical setting—A validation study. PLoS ONE 2023, 18, e0280670. [Google Scholar] [CrossRef] [PubMed]

- Sikandar, S.; Mahum, R.; Ragab, A.E.; Yayilgan, S.Y.; Shaikh, S. SCDet: A Robust Approach for the Detection of Skin Lesions. Diagnostics 2023, 13, 1824. [Google Scholar] [CrossRef] [PubMed]

- Singh, S.K.; Abolghasemi, V.; Anisi, M.H. Fuzzy Logic with Deep Learning for Detection of Skin Cancer. Appl. Sci. 2023, 13, 8927. [Google Scholar] [CrossRef]

- Shahsavari, A.; Khatibi, T.; Ranjbari, S. Skin lesion detection using an ensemble of deep models: SLDED. Multimed. Tools Appl. 2023, 82, 10575–10594. [Google Scholar] [CrossRef]

- Batista, L.G.; Bugatti, P.H.; Saito, P.T. Classification of Skin Lesion through Active Learning Strategies. Comput. Methods Programs Biomed. 2022, 226, 107122. [Google Scholar] [CrossRef]

- Vani, R.; Kavitha, J.C.; Subitha, D. Novel approach for melanoma detection through iterative deep vector network. J. Ambient. Intell. Humaniz. Comput. 2021. [Google Scholar] [CrossRef]

- Alix-Panabieres, C.; Magliocco, A.; Cortes-Hernandez, L.E.; Eslami-S, Z.; Franklin, D.; Messina, J.L. Detection of cancer metastasis: Past, present and future. Clin. Exp. Metastasis 2022, 39, 21–28. [Google Scholar] [CrossRef] [PubMed]

- Zhou, B.; Arandian, B. An Improved CNN Architecture to Diagnose Skin Cancer in Dermoscopic Images Based on Wildebeest Herd Optimization Algorithm. Comput. Intell. Neurosci. 2021, 2021, 7567870. [Google Scholar] [CrossRef] [PubMed]

- Talavera-Martínez, L.; Bibiloni, P.; Giacaman, A.; Taberner, R.; Hernando, L.J.D.P.; González-Hidalgo, M. A novel approach for skin lesion symmetry classification with a deep learning model. Comput. Biol. Med. 2022, 145, 105450. [Google Scholar] [CrossRef]

| Criteria | Indicates | Description |

|---|---|---|

| A | Asymmetry | Most melanomas are asymmetric: the two halves of the lesion do not match |

| B | Border | The borders of melanomas are usually uneven and may have irregular or scalloped edges |

| C | Color | The presence of multiple colors within a melanoma is a warning sign |

| D | Diameter | Melanomas tend to be larger, approximately the size of a pencil eraser (around 6 mm in diameter) or larger |

| E | Evolution | This considers any alteration in the shape, size, color, or elevation of a skin spot as a warning sign of melanoma |

| N° | Criteria: Inclusion (IC) and Exclusion (EC) | Description |

|---|---|---|

| IC1 | Area of knowledge | Computer science information systems, oncology, computer science artificial intelligence, computer science theory methods, engineering biomedical, computer science software engineering, computer science interdisciplinary applications, medical informatics, multidisciplinary engineering, multidisciplinary sciences |

| IC2 | Language | English |

| IC3 | Document type | Article |

| EC1 | Year | ≤2018 |

| EC2 | Key words | Systematic review |

| Id SQ | Description | WoS | Scopus |

|---|---|---|---|

| SQ1 | Algorithm CNN to skin cancer | 126 | 274 |

| | | 61 | 93 |

| SQ2 | CNN in skin cancer detection | 185 | 88 |

| | | 436 | 171 |

| SQ3 | Melanoma cancer detection algorithms | 6641 | 127 |

| | | 180 | 65 |

| SQ4 | Optimization of CNN algorithms for skin cancer detection | 23 | 10 |

| | | 31 | 19 |

| Preliminary totals | | 6975 | 286 |

| | | 921 | 348 |

| Final Results | | 634 | |

| Refinements | WoS | Scopus |

|---|---|---|

| First refinement: duplicate removal | 187 | 235 |

| Second refinement: filtering by quartiles Q1 and Q2 | 174 | 157 |

| Final Results | 331 | |

| Categories | Subcategories | Description |

|---|---|---|

| Types of Algorithms | CNN | A convolutional neural network (CNN) is a type of deep neural network specifically designed for the efficient processing of two-dimensional data, such as images and videos. CNNs are inspired by the organization and functioning of the biological visual system, where individual neurons respond to overlapping regions of the visual field. This type of network has been particularly successful in medical image analysis [13]. |

| | SVM | The support vector machine (SVM) is a widely used machine learning model for classification and regression tasks. It is considered a supervised learning algorithm, which means that it is trained using a labeled dataset where the classes or values to be predicted are known [21]. |

| | Hybrids | Other classification algorithms have been identified for skin cancer detection. Deep neural network-based algorithms stand out, particularly recurrent neural networks (RNNs), along with XGBoost, a gradient-boosted decision tree algorithm [7,22]. |

| Model Optimizers | ADAM | The ADAM optimization algorithm builds on stochastic gradient descent (SGD) by combining momentum with per-parameter adaptive learning rates in the style of RMSprop. It provides fast and efficient convergence across a wide range of problems [23,24]. |

| | SGD | Stochastic gradient descent is a simple algorithm that updates the network’s weights by using the gradient of the loss function at each iteration. It is the basic optimizer used in many machine learning applications [22]. |

| | RMSprop | RMSprop adapts the learning rate individually for each parameter during training. This means that parameters with large gradients will experience a smaller learning rate, while parameters with small gradients will have a larger learning rate [25]. |

| Skin Datasets | HAM10000 | HAM10000 (Human Against Machine) is a dataset consisting of 10,015 dermoscopic images, each of which is a 600 × 450 three-channel RGB image. It provides properly categorized training images [23,26]. |

| | ISIC | ISIC (International Skin Imaging Collaboration) is a publicly available international collaboration dataset of skin images that contains a variety of properly classified skin lesions for research purposes [25]. |

| | PH2 | PH2 is a dermoscopic image database acquired at the Dermatology Service of Hospital Pedro Hispano in Matosinhos, Portugal. It includes 200 images: 40 melanomas and 160 nevi (both atypical and typical) [23]. |

| | Other datasets | Additional datasets, such as the Cutaneous Squamous Cell Carcinoma (cSCC) dataset, involve patients in the study and feature confocal laser scanning microscopy (CLSM) images, each approximately 10,000 × 10,000 pixels in size [23]. |

| Evaluation Metrics | Accuracy | Accuracy is the ratio of correctly predicted observations to the total observations. In the context of this study, it is the number of images correctly classified, both positive and negative, divided by the total number of images [14,27]. |

| | Recall | Recall or sensitivity measures the proportion of actual positives that are correctly identified as such. This is particularly important in medical image processing, where it is crucial to identify as many true cases as possible. In this context, it would be the proportion of images that are correctly identified as belonging to a particular class out of all the images that actually belong to that class [24,28]. |

| | Specificity | Specificity, or the true negative rate, measures the proportion of actual negatives that are correctly identified as such, that is, TN / (TN + FP). In this context, it is the proportion of images that do not belong to a particular class and are correctly classified as not belonging to it. It complements recall: a model can reach high recall by flagging most lesions as positive, but only at the cost of low specificity [29]. |

| | Other metrics | Metrics such as the receiver operating characteristic (ROC), F1 score, and FNR are crucial in the evaluation of classification algorithms. The ROC curve, along with the area under the curve (AUC), provides a visual and numerical representation of the algorithm’s ability to distinguish between different classes. The F1 score is the harmonic mean of precision and sensitivity, which makes it particularly valuable in scenarios with class imbalances. Precision, also known as the positive predictive value, measures the accuracy of positive predictions: it reflects how many of the items identified as positive are actually positive [23]. The false negative rate (FNR), which quantifies the proportion of actual positives incorrectly identified as negatives, is critically important in fields where false negatives carry significant consequences, such as in medical diagnostics [24,29]. |
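The evaluation metrics described in the table above can all be derived from the four counts of a binary confusion matrix. The following is a minimal sketch, not the procedure used by any of the reviewed studies: the function name `classification_metrics` and the example counts are hypothetical, specificity is taken in its standard diagnostic sense (true negative rate), and every denominator is assumed to be non-zero.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute common binary-classification metrics from confusion-matrix counts.

    tp/fn: positive images classified correctly/incorrectly.
    tn/fp: negative images classified correctly/incorrectly.
    Assumes every denominator below is non-zero.
    """
    total = tp + fp + tn + fn
    precision = tp / (tp + fp)   # positive predictive value
    recall = tp / (tp + fn)      # sensitivity, true positive rate
    return {
        "accuracy": (tp + tn) / total,
        "recall": recall,
        "specificity": tn / (tn + fp),   # true negative rate
        "precision": precision,
        "f1": 2 * precision * recall / (precision + recall),
        "fnr": fn / (tp + fn),           # false negative rate, equals 1 - recall
    }


# Hypothetical example: 40 melanomas and 160 nevi, with 4 missed melanomas
# and 8 nevi wrongly flagged as melanoma.
m = classification_metrics(tp=36, fp=8, tn=152, fn=4)
for name, value in m.items():
    print(f"{name}: {value:.3f}")
```

With these made-up counts the accuracy is 0.94 while the FNR is 0.10, which illustrates why studies on imbalanced lesion datasets tend to report recall and specificity alongside accuracy.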

| | Algorithms | | | Optimizers | | | Datasets | | | | Metrics | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ID | SVM | CNN | Hybrids | ADAM | SGD | RMSProp | HAM10000 | ISIC | PH2 | Other Datasets | Accuracy | Recall | Specificity | Other Metrics |

| Haenssle et al. [30] | x | 88.9% | 75.7% | x | ||||||||||

| Brinker et al. [31] | x | x | 96% | 95% | 95.18% | x | ||||||||

| Munir et al. [32] | x | x | 76% | 81.7% | ||||||||||

| Al-masni et al. [33] | x | |||||||||||||

| Saba et al. [34] | x | x | x | x | x | 98.4% | 98.25% | 98.5% | x | |||||

| Goyal et al. [35] | x | x | x | x | x | 95.67% | 92.08% | 98.58% | x | |||||

| Brinker et al. [36] | x | x | x | x | 92.8% | 68.2% | ||||||||

| Brinker et al. [37] | x | x | 82.3% | 77.9% | ||||||||||

| Mahbod et al. [38] | x | x | x | x | 96.3% | x | ||||||||

| Hekler et al. [39] | x | 68% | 76% | 60% | ||||||||||

| Adegun and Viriri [40] | x | x | x | x | x | 99.2% | 83.3% | 98.6% | x | |||||

| Zhang et al. [41] | x | 97% | 93.5% | 92% | ||||||||||

| Kadampur and Riyaee [42] | x | 98.99% | x | |||||||||||

| Mahbod et al. [43] | x | x | x | x | x | x | ||||||||

| Hekler et al. [44] | x | |||||||||||||

| Ashraf et al. [45] | 97.9% | |||||||||||||

| Maron et al. [46] | x | 74.4% | 98.8% | |||||||||||

| Albahar [47] | x | x | 97.49% | x | ||||||||||

| Kumar et al. [48] | x | x | x | x | 97.4% | |||||||||

| Nawaz et al. [49] | x | x | x | x | 95.6% | |||||||||

| Khan et al. [50] | x | x | x | x | 96.5% | |||||||||

| Turani et al. [51] | x | 98% | 97% | 98% | x | |||||||||

| Dey et al. [52] | x | 96.19% | 98.41% | 91.16% | x | |||||||||

| Tan et al. [53] | x | x | x | x | x | x | x | |||||||

| Öztürk et al. [54] | x | x | x | x | 96.92% | 96.88% | 95.31% | x | ||||||

| Gu et al. [55] | x | x | x | 82.9% | 58.9% | 97.1% | x | |||||||

| Thanh et al. [56] | x | 96.6% | 96.1% | 96.8% | x | |||||||||

| Amin et al. [57] | x | x | x | x | x | x | 99.9% | 99.52% | 99.62% | x | ||||

| Bakkouri and Afdel [58] | x | x | x | x | 98.09% | 93.35% | 98.88% | x | ||||||

| Wei et al. [59] | x | x | x | x | x | 96.2% | 93.9% | 97.4% | x | |||||

| Kaymak et al. [60] | x | x | 94.81% | |||||||||||

| Khan et al. [61] | x | x | x | x | 97.74% | 97.39% | 100% | x | ||||||

| Anand et al. [62] | x | x | x | x | 97.96% | |||||||||

| Okur and Turkan [63] | x | x | x | 94% | ||||||||||

| Abbas and Celebi [64] | x | x | 93% | 95% | x | |||||||||

| Rahman et al. [65] | x | x | x | x | x | x | 88% | x | ||||||

| Oskal et al. [66] | x | 92.01% | ||||||||||||

| Sreelatha et al. [67] | x | 98.64% | 99.22% | |||||||||||

| Olugbara et al. [68] | x | x | ||||||||||||

| Alizadeh and Mahloojifar [69] | x | x | x | x | x | x | 97.5% | 100% | 96.88% | x | ||||

| Wu et al. [70] | x | 87.25% | x | |||||||||||

| Shetty et al. [71] | x | x | x | 95.18% | x | |||||||||

| Nasr-Esfahani et al. [72] | x | x | x | 95.7% | 92.77% | 96.3% | x | |||||||

| Mohakud and Dash [73] | x | x | 98.33% | |||||||||||

| Abunadi and Senan [74] | x | x | x | 97.91% | ||||||||||

| Presence in the literature review (%) | 20% | 80% | 24% | 22% | 13% | 4% | 18% | 49% | 24% | 29% | 71% | 53% | 53% | 56% |

| Dataset Name | Link to Dataset (accessed on 23 October 2023) |

|---|---|

| HAM10000 | https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/DBW86T |

| ISIC | https://challenge.isic-archive.com/data |

| PH2 | https://www.fc.up.pt/addi/ph2%20database.html |

| Optimizer Characteristics | ADAM | SGD | RMSprop |

|---|---|---|---|

| Advantages | | | Adapts the learning rate individually for each parameter, making it effective. |

| Disadvantages | | | |
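To make the differences between the three optimizers compared above more concrete, the sketch below writes out their textbook update rules for a single scalar weight. It is an illustration under stated assumptions rather than the configuration of any reviewed study: the toy loss f(w) = (w - 3)^2, the function names, and the hyperparameter values (learning rates, `rho`, `beta1`, `beta2`, `eps`) are all chosen here for demonstration.

```python
import math


def grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2 (an assumed example loss)."""
    return 2.0 * (w - 3.0)


def sgd_step(w, g, lr=0.1):
    """Plain gradient descent: move against the gradient with a fixed step size."""
    return w - lr * g


def rmsprop_step(w, g, v, lr=0.01, rho=0.9, eps=1e-8):
    """RMSprop: scale the step by a running average of squared gradients."""
    v = rho * v + (1.0 - rho) * g * g
    return w - lr * g / (math.sqrt(v) + eps), v


def adam_step(w, g, m, v, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: momentum (m) plus RMSprop-style scaling (v), with bias correction."""
    m = beta1 * m + (1.0 - beta1) * g
    v = beta2 * v + (1.0 - beta2) * g * g
    m_hat = m / (1.0 - beta1 ** t)   # correct the bias from zero initialisation
    v_hat = v / (1.0 - beta2 ** t)
    return w - lr * m_hat / (math.sqrt(v_hat) + eps), m, v


# One step of each optimizer, starting from w = 0 (gradient is -6).
w_sgd = sgd_step(0.0, grad(0.0))
w_rms, v_rms = rmsprop_step(0.0, grad(0.0), v=0.0)
w_adam, m_adam, v_adam = adam_step(0.0, grad(0.0), m=0.0, v=0.0, t=1)

# Repeated SGD steps approach the minimum at w = 3.
w_sgd_final = 0.0
for _ in range(100):
    w_sgd_final = sgd_step(w_sgd_final, grad(w_sgd_final))
```

On the first step, SGD moves by the learning rate times the gradient, whereas the Adam step has a magnitude close to the learning rate itself regardless of the gradient scale. This is one reason adaptive methods are often less sensitive to how the loss is scaled.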

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hermosilla, P.; Soto, R.; Vega, E.; Suazo, C.; Ponce, J. Skin Cancer Detection and Classification Using Neural Network Algorithms: A Systematic Review. Diagnostics 2024, 14, 454. https://doi.org/10.3390/diagnostics14040454
