Conversational LLM Chatbot ChatGPT-4 for Colonoscopy Boston Bowel Preparation Scoring: An Artificial Intelligence-to-Head Concordance Analysis

Abstract

1. Introduction

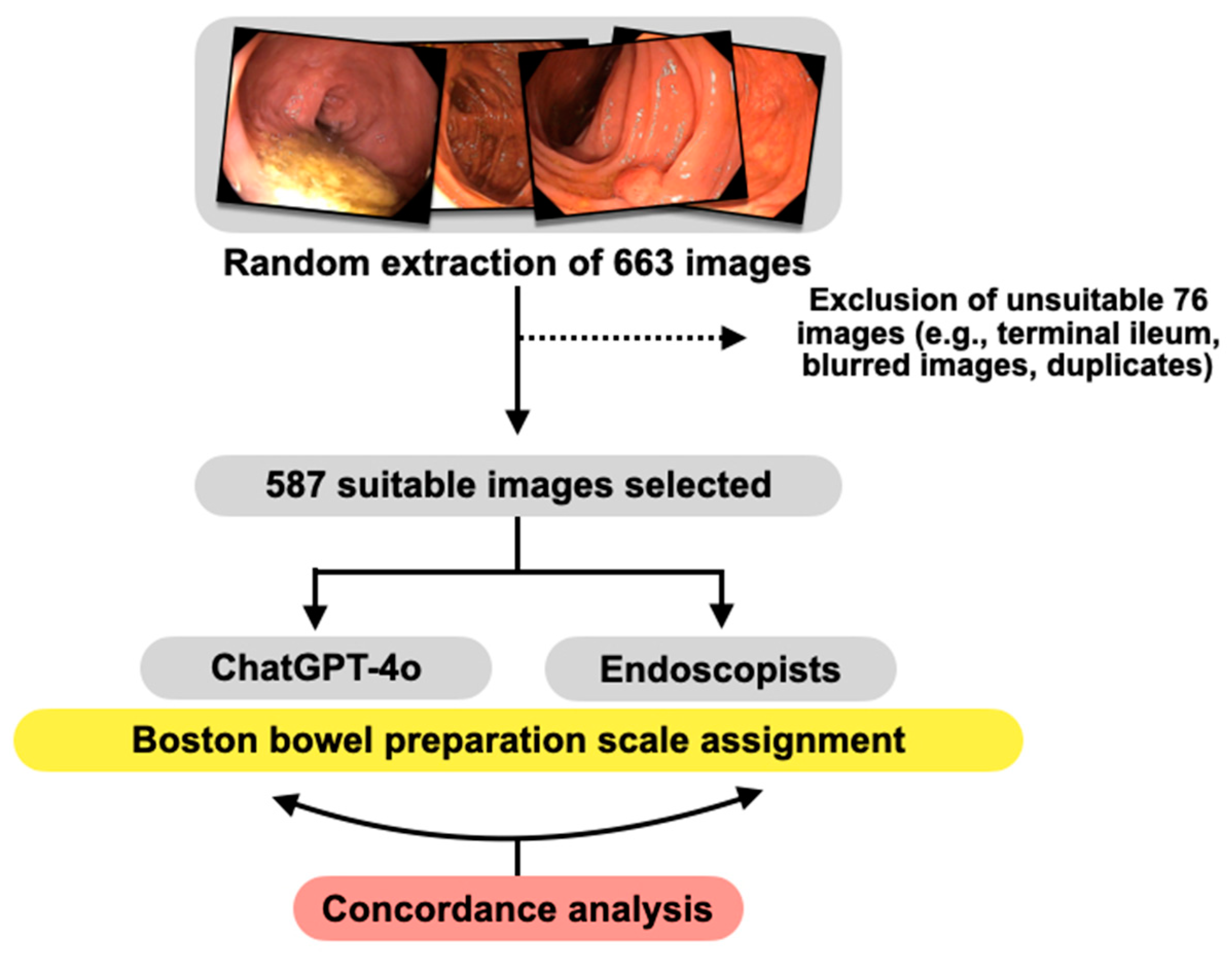

2. Materials and Methods

2.1. Study Design

2.2. Characteristics and Technical Specifications of the Images Included in the Analysis

2.3. Images Processing by ChatGPT-4 and Human Evaluators

2.4. Study Outcomes

2.5. Statistical Analysis

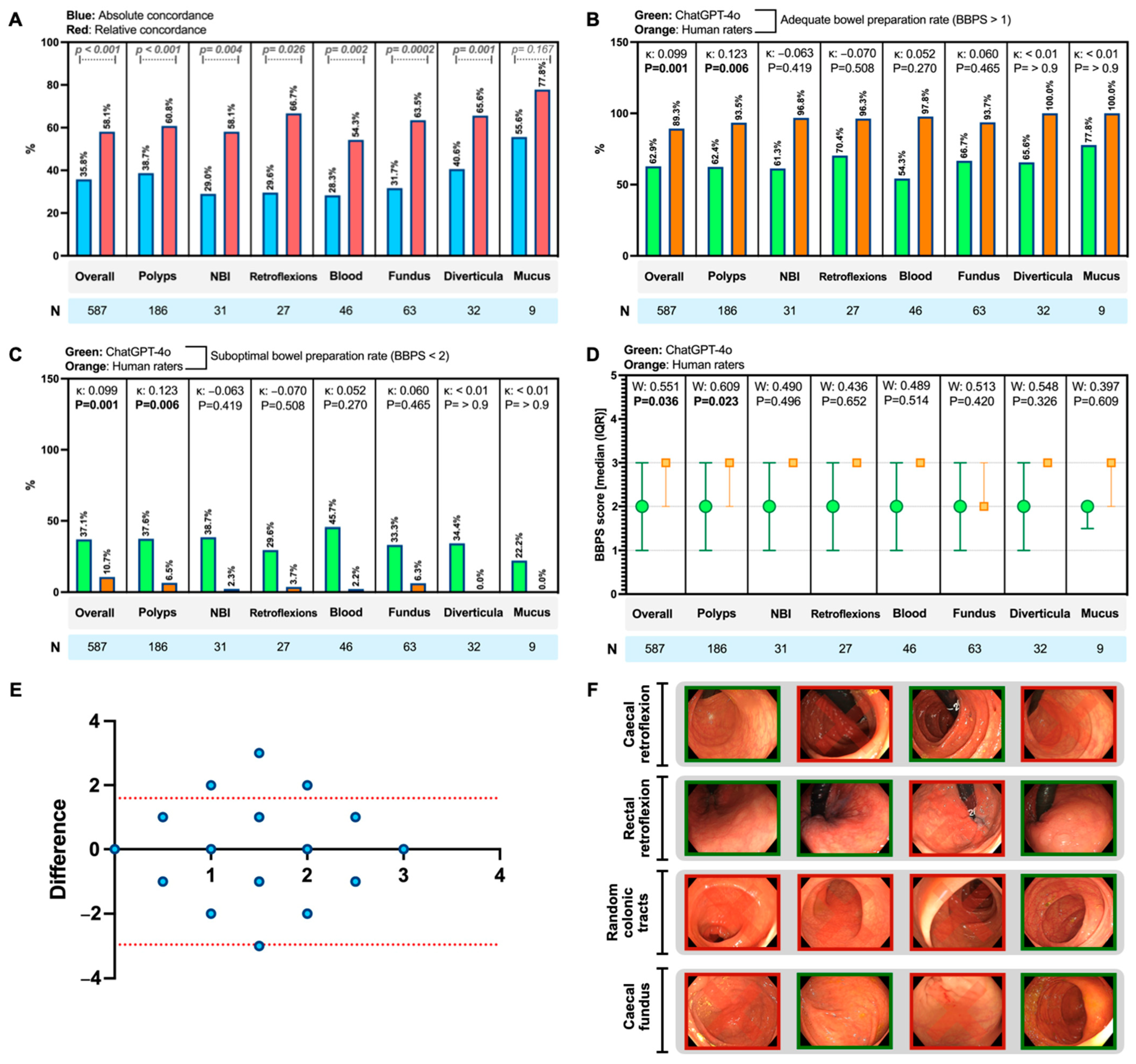

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Alawida, M.; Mejri, S.; Mehmood, A.; Chikhaoui, B.; Isaac Abiodun, O. A Comprehensive Study of ChatGPT: Advancements, Limitations, and Ethical Considerations in Natural Language Processing and Cybersecurity. Information 2023, 14, 462. [Google Scholar] [CrossRef]

- Gravina, A.G.; Pellegrino, R.; Cipullo, M.; Palladino, G.; Imperio, G.; Ventura, A.; Auletta, S.; Ciamarra, P.; Federico, A. May ChatGPT Be a Tool Producing Medical Information for Common Inflammatory Bowel Disease Patients’ Questions? An Evidence-Controlled Analysis. World J. Gastroenterol. 2024, 30, 17–33. [Google Scholar] [CrossRef] [PubMed]

- Gravina, A.G.; Pellegrino, R.; Palladino, G.; Imperio, G.; Ventura, A.; Federico, A. Charting New AI Education in Gastroenterology: Cross-Sectional Evaluation of ChatGPT and Perplexity AI in Medical Residency Exam. Dig. Liver Dis. 2024, 56, 1304–1311. [Google Scholar] [CrossRef] [PubMed]

- Klang, E.; Sourosh, A.; Nadkarni, G.N.; Sharif, K.; Lahat, A. Evaluating the Role of ChatGPT in Gastroenterology: A Comprehensive Systematic Review of Applications, Benefits, and Limitations. Ther. Adv. Gastroenterol. 2023, 16, 17562848231218618. [Google Scholar] [CrossRef]

- Lee, T.-C.; Staller, K.; Botoman, V.; Pathipati, M.P.; Varma, S.; Kuo, B. ChatGPT Answers Common Patient Questions About Colonoscopy. Gastroenterology 2023, 165, 509–511.e7. [Google Scholar] [CrossRef]

- Hassan, C.; East, J.; Radaelli, F.; Spada, C.; Benamouzig, R.; Bisschops, R.; Bretthauer, M.; Dekker, E.; Dinis-Ribeiro, M.; Ferlitsch, M.; et al. Bowel Preparation for Colonoscopy: European Society of Gastrointestinal Endoscopy (ESGE) Guideline—Update 2019. Endoscopy 2019, 51, 775–794. [Google Scholar] [CrossRef]

- Millien, V.O.; Mansour, N.M. Bowel Preparation for Colonoscopy in 2020: A Look at the Past, Present, and Future. Curr. Gastroenterol. Rep. 2020, 22, 28. [Google Scholar] [CrossRef]

- Fevrier, H.B.; Liu, L.; Herrinton, L.J.; Li, D. A Transparent and Adaptable Method to Extract Colonoscopy and Pathology Data Using Natural Language Processing. J. Med. Syst. 2020, 44, 151. [Google Scholar] [CrossRef]

- Lee, J.K.; Jensen, C.D.; Levin, T.R.; Zauber, A.G.; Doubeni, C.A.; Zhao, W.K.; Corley, D.A. Accurate Identification of Colonoscopy Quality and Polyp Findings Using Natural Language Processing. J. Clin. Gastroenterol. 2019, 53, e25–e30. [Google Scholar] [CrossRef]

- Lu, Y.-B.; Lu, S.-C.; Huang, Y.-N.; Cai, S.-T.; Le, P.-H.; Hsu, F.-Y.; Hu, Y.-X.; Hsieh, H.-S.; Chen, W.-T.; Xia, G.-L.; et al. A Novel Convolutional Neural Network Model as an Alternative Approach to Bowel Preparation Evaluation Before Colonoscopy in the COVID-19 Era: A Multicenter, Single-Blinded, Randomized Study. Am. J. Gastroenterol. 2022, 117, 1437–1443. [Google Scholar] [CrossRef]

- Temsah, M.-H.; Jamal, A.; Alhasan, K.; Aljamaan, F.; Altamimi, I.; Malki, K.H.; Temsah, A.; Ohannessian, R.; Al-Eyadhy, A. Transforming Virtual Healthcare: The Potentials of ChatGPT-4omni in Telemedicine. Cureus 2024, 16, e61377. [Google Scholar] [CrossRef] [PubMed]

- Lai, E.J.; Calderwood, A.H.; Doros, G.; Fix, O.K.; Jacobson, B.C. The Boston Bowel Preparation Scale: A Valid and Reliable Instrument for Colonoscopy-Oriented Research. Gastrointest. Endosc. 2009, 69, 620–625. [Google Scholar] [CrossRef] [PubMed]

- McHugh, M.L. Interrater Reliability: The Kappa Statistic. Biochem. Med. 2012, 22, 276–282. [Google Scholar] [CrossRef]

- Landis, J.R.; Koch, G.G. The Measurement of Observer Agreement for Categorical Data. Biometrics 1977, 33, 159. [Google Scholar] [CrossRef]

- Ranganathan, P.; Pramesh, C.S.; Aggarwal, R. Common Pitfalls in Statistical Analysis: Measures of Agreement. Perspect. Clin. Res. 2017, 8, 187–191. [Google Scholar] [CrossRef]

- Alkaissi, H.; McFarlane, S.I. Artificial Hallucinations in ChatGPT: Implications in Scientific Writing. Cureus 2023, 15, e35179. [Google Scholar] [CrossRef]

- Sallam, M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare 2023, 11, 887. [Google Scholar] [CrossRef]

- Gabor-Siatkowska, K.; Sowański, M.; Rzatkiewicz, R.; Stefaniak, I.; Kozłowski, M.; Janicki, A. AI to Train AI: Using ChatGPT to Improve the Accuracy of a Therapeutic Dialogue System. Electronics 2023, 12, 4694. [Google Scholar] [CrossRef]

- Gödde, D.; Nöhl, S.; Wolf, C.; Rupert, Y.; Rimkus, L.; Ehlers, J.; Breuckmann, F.; Sellmann, T. A SWOT (Strengths, Weaknesses, Opportunities, and Threats) Analysis of ChatGPT in the Medical Literature: Concise Review. J. Med. Internet Res. 2023, 25, e49368. [Google Scholar] [CrossRef]

- Arruzza, E.S.; Evangelista, C.M.; Chau, M. The Performance of ChatGPT-4.0o in Medical Imaging Evaluation: A Preliminary Investigation. J. Educ. Eval. Health Prof. 2024, 21, 29. [Google Scholar] [CrossRef]

- Elek, A.; Ekizalioğlu, D.D.; Güler, E. Evaluating Microsoft Bing with ChatGPT-4 for the Assessment of Abdominal Computed Tomography and Magnetic Resonance Images. Diagn. Interv. Radiol. 2024. [Google Scholar] [CrossRef] [PubMed]

- Noda, R.; Izaki, Y.; Kitano, F.; Komatsu, J.; Ichikawa, D.; Shibagaki, Y. Performance of ChatGPT and Bard in Self-Assessment Questions for Nephrology Board Renewal. Clin. Exp. Nephrol. 2024, 28, 465–469. [Google Scholar] [CrossRef] [PubMed]

- Koga, S. Advancing Large Language Models in Nephrology: Bridging the Gap in Image Interpretation. Clin. Exp. Nephrol. 2024. [Google Scholar] [CrossRef] [PubMed]

| Images Code | ChatGPT-4o Outputs 1 |

|---|---|

| Images 61–67 | Based on the provided BBPS (Boston Bowel Preparation Scale) criteria, I’ve analyzed the images and assigned the scores as follows: Image61.png 1; Image62.png 1; Image63.png 3; Image64.png 3; Image65.png 2; Image66.png 2; Image67.png 3; Image68.png 3; Image69.png 1; Image70.png 1. Here is the table with the file names and their corresponding BBPS scores. If you have any further analysis or additional instructions, please let me know! |

| Images 161–170 | Here are the BBPS scores for the additional images provided: Image 161: Minor amount of residual staining, small fragments of stool and/or opaque liquid, but mucosa of colon segment seen well. Score: 2; Image 162: Entire mucosa of colon segment seen well with no residual staining, small fragments of stool or opaque liquid. Score: 3; Image 163: Entire mucosa of colon segment seen well with no residual staining, small fragments of stool or opaque liquid. Score: 3; Image 164: Entire mucosa of colon segment seen well with no residual staining, small fragments of stool or opaque liquid. Score: 3; Image 165: Entire mucosa of colon segment seen well with no residual staining, small fragments of stool or opaque liquid. Score: 3; Image 166: Minor amount of residual staining, small fragments of stool and/or opaque liquid, but mucosa of colon segment seen well. Score: 2; Image 167: Minor amount of residual staining, small fragments of stool and/or opaque liquid, but mucosa of colon segment seen well. Score: 2; Image 168: Portion of mucosa of the colon segment seen, but other areas of the colon segment not well seen due to staining, residual stool and/or opaque liquid. Score: 1; Image 169: Portion of mucosa of the colon segment seen, but other areas of the colon segment not well seen due to staining, residual stool and/or opaque liquid. Score: 1; Image 170: Portion of mucosa of the colon segment seen, but other areas of the colon segment not well seen due to staining, residual stool and/or opaque liquid. Score: 1 Here is the table with the file names and their corresponding BBPS scores: Image161.png 2; Image162.png 3; Image163.png 3; Image164.png 3; Image165.png 3; Image166.png 2; Image167.png 2; Image168.png 1; Image169.png 1; Image170.png 1. |

| Images 261–270 | Based on the Boston Bowel Preparation Scale (BBPS), here are the scores assigned to each new image: Image261.BMP: Score = 0 (Unprepared colon segment with mucosa not seen due to solid stool that cannot be cleared); Image262.BMP: Score = 0 (Unprepared colon segment with mucosa not seen due to solid stool that cannot be cleared); Image263.BMP: Score = 0 (Unprepared colon segment with mucosa not seen due to solid stool that cannot be cleared); Image264.BMP: Score = 0 (Unprepared colon segment with mucosa not seen due to solid stool that cannot be cleared); Image265.BMP: Score = 2 (Minor amount of residual staining, small fragments of stool and/or opaque liquid, but mucosa of colon segment seen well); Image266.BMP: Score = 1 (Portion of mucosa of the colon segment seen, but other areas of the colon segment not well seen due to staining, residual stool and/or opaque liquid); Image267.BMP: Score = 3 (Entire mucosa of colon segment seen well with no residual staining, small fragments of stool or opaque liquid); Image268.BMP: Score = 3 (Entire mucosa of colon segment seen well with no residual staining, small fragments of stool or opaque liquid); Image269.BMP: Score = 3 (Entire mucosa of colon segment seen well with no residual staining, small fragments of stool or opaque liquid); Image270.BMP: Score = 3 (Entire mucosa of colon segment seen well with no residual staining, small fragments of stool or opaque liquid). Here’s the table with the file names and the assigned scores: Image261.BMP 0; Image262.BMP 0; Image263.BMP 0; Image264.BMP 0; Image265.BMP 2; Image266.BMP 1; Image267.BMP 3; Image268.BMP 3; Image269.BMP 3; Image270.BMP 3. If you need further assistance or have any specific requirements, please let me know! |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pellegrino, R.; Federico, A.; Gravina, A.G. Conversational LLM Chatbot ChatGPT-4 for Colonoscopy Boston Bowel Preparation Scoring: An Artificial Intelligence-to-Head Concordance Analysis. Diagnostics 2024, 14, 2537. https://doi.org/10.3390/diagnostics14222537

Pellegrino R, Federico A, Gravina AG. Conversational LLM Chatbot ChatGPT-4 for Colonoscopy Boston Bowel Preparation Scoring: An Artificial Intelligence-to-Head Concordance Analysis. Diagnostics. 2024; 14(22):2537. https://doi.org/10.3390/diagnostics14222537

Chicago/Turabian StylePellegrino, Raffaele, Alessandro Federico, and Antonietta Gerarda Gravina. 2024. "Conversational LLM Chatbot ChatGPT-4 for Colonoscopy Boston Bowel Preparation Scoring: An Artificial Intelligence-to-Head Concordance Analysis" Diagnostics 14, no. 22: 2537. https://doi.org/10.3390/diagnostics14222537

APA StylePellegrino, R., Federico, A., & Gravina, A. G. (2024). Conversational LLM Chatbot ChatGPT-4 for Colonoscopy Boston Bowel Preparation Scoring: An Artificial Intelligence-to-Head Concordance Analysis. Diagnostics, 14(22), 2537. https://doi.org/10.3390/diagnostics14222537