Explainable AI for Interpretation of Ovarian Tumor Classification Using Enhanced ResNet50

Abstract

1. Introduction

- A modified ResNet50 architecture (a ResNet60 when counted by convolutional layers) is proposed for classifying ovarian tumors as benign or malignant. The modified classifier reaches 97.5% accuracy on the test dataset, higher than the other state-of-the-art architectures implemented for comparison: GoogLeNet (Inception-v1), Inception-v4, ResNeXt, Inception-ResNet, ResNet16, Xception, VGG16, VGG19, ResNet50, and EfficientNetB0.

- The outputs of the modified ResNet50 are then passed to six explainable AI methods for interpretation: LIME, Saliency Map, Grad-CAM, Occlusion Analysis, SHAP, and SmoothGrad. To obtain a meaningful and accurate interpretation of the classification output, these methods were applied to the modified ResNet50 architecture, since it had the best classification accuracy on the test dataset.

- The explainable AI methods highlighted the features and regions of interest to which the proposed model gave the most importance in reaching its final classification, such as the shape and neighboring area of the tumor. Qualitatively, tumors with a fixed boundary and a localized nature are classified as benign by the proposed model, while those with an irregular shape and boundary are classified as malignant. This indicates that localization, boundary, and shape are important image characteristics for judging whether a tumor tends to metastasize to neighboring organs and tissues, and hence whether it is benign or malignant.
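
Occlusion Analysis, one of the methods listed above, can be illustrated with a minimal sketch: mask one patch of the input at a time and record how much the model's score drops. The `toy_predict` function below is a hypothetical stand-in for a trained classifier, and the patch size, stride, and fill value are illustrative assumptions, not the settings used in this work.

```python
import numpy as np

def occlusion_map(predict, image, patch=4, stride=4, fill=0.0):
    """Return a heat map of score drops; larger values mark more important regions."""
    h, w = image.shape[:2]
    baseline = predict(image)                 # score on the unmodified image
    heat = np.zeros((h, w), dtype=float)
    counts = np.zeros((h, w), dtype=float)
    for top in range(0, h - patch + 1, stride):
        for left in range(0, w - patch + 1, stride):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = fill  # mask one patch
            drop = baseline - predict(occluded)
            heat[top:top + patch, left:left + patch] += drop
            counts[top:top + patch, left:left + patch] += 1
    # Average over overlapping patches (relevant when stride < patch).
    return heat / np.maximum(counts, 1)

# Hypothetical stand-in for a classifier: it responds only to one 4x4 region.
def toy_predict(image):
    return float(image[8:12, 8:12].mean())

image = np.ones((16, 16))
heat = occlusion_map(toy_predict, image)
print(np.unravel_index(heat.argmax(), heat.shape))
```

In this toy case only the patch that covers the region the model responds to produces a score drop, so the heat map is nonzero there and zero elsewhere. With a real classifier, `predict` would return the probability of the class being explained.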

2. Related Work

2.1. Existing Work on Explainability of Classification Models in the Medical Imaging Domain

2.2. Existing Work on Explainability of CNN Models in the Medical Imaging Domain

3. Research Gap and Motivation

4. Methodology

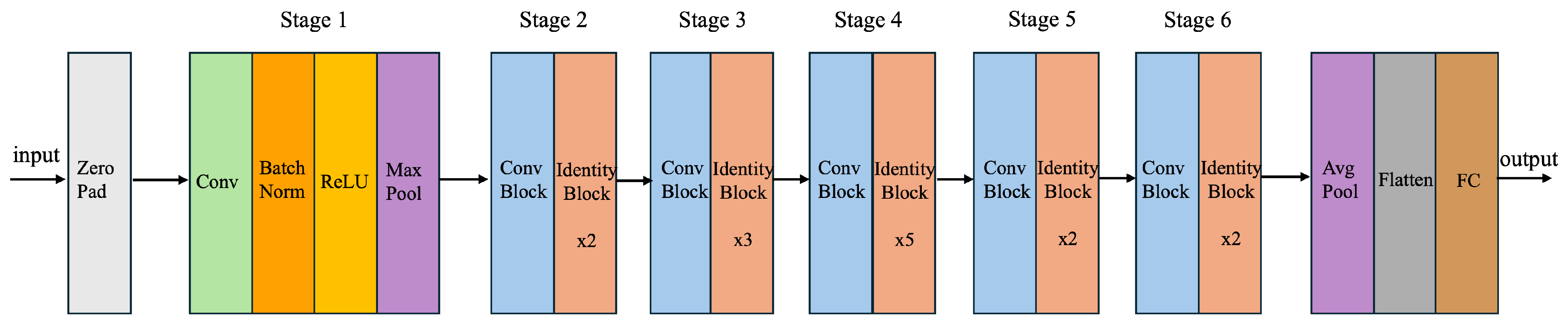

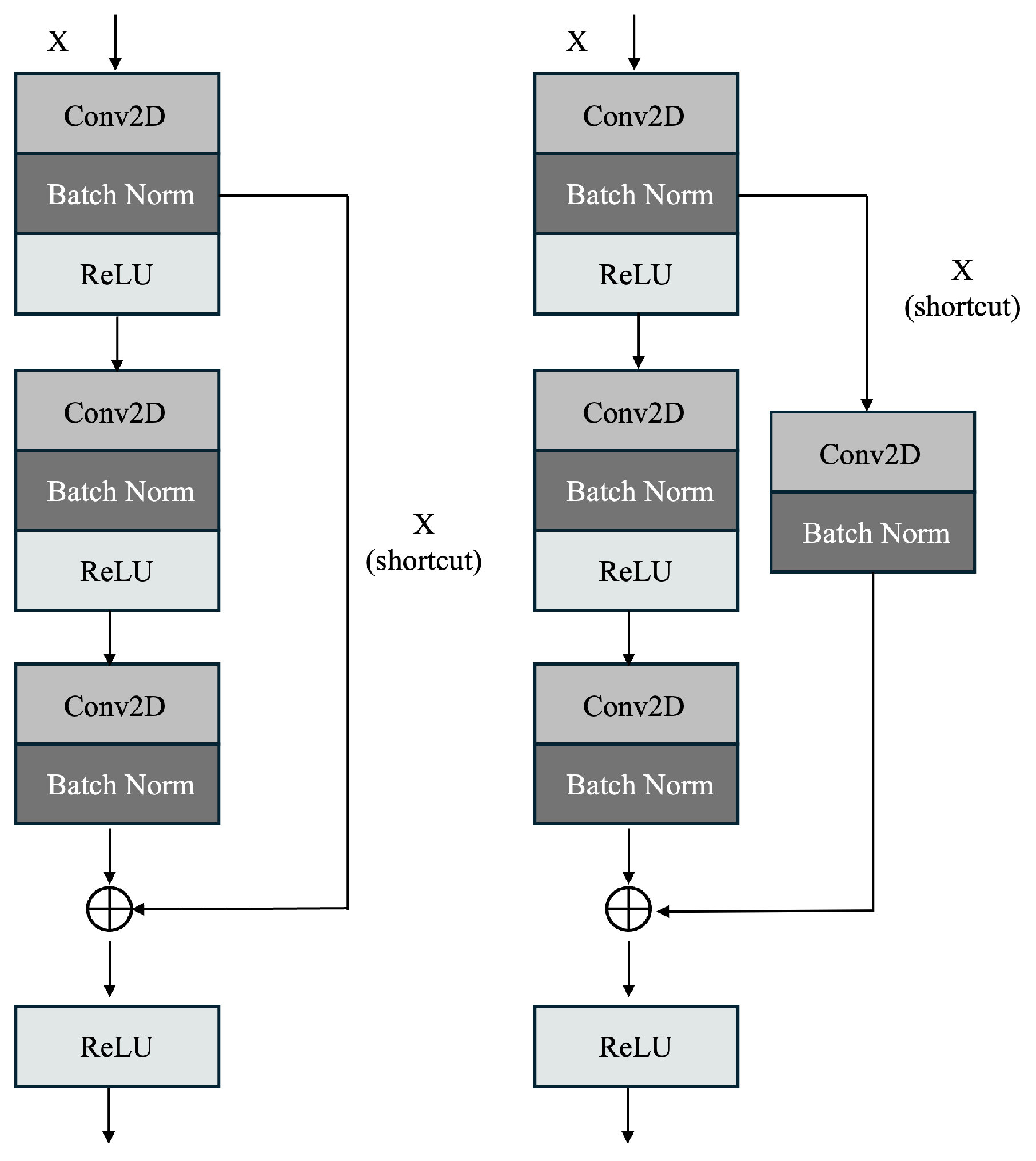

4.1. Enhanced ResNet50 (Proposed ResNet60) Architecture

Algorithm 1: Image classification using enhanced ResNet50 architecture.

4.2. Explainable AI Methods

5. Results and Discussion

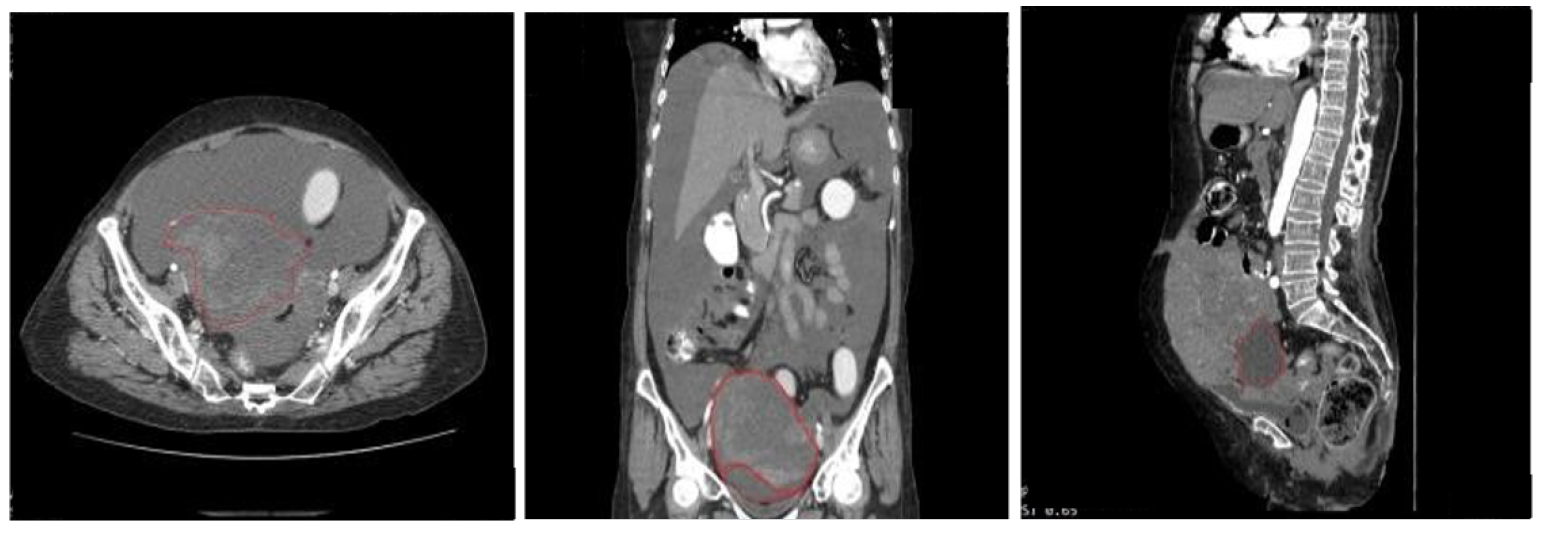

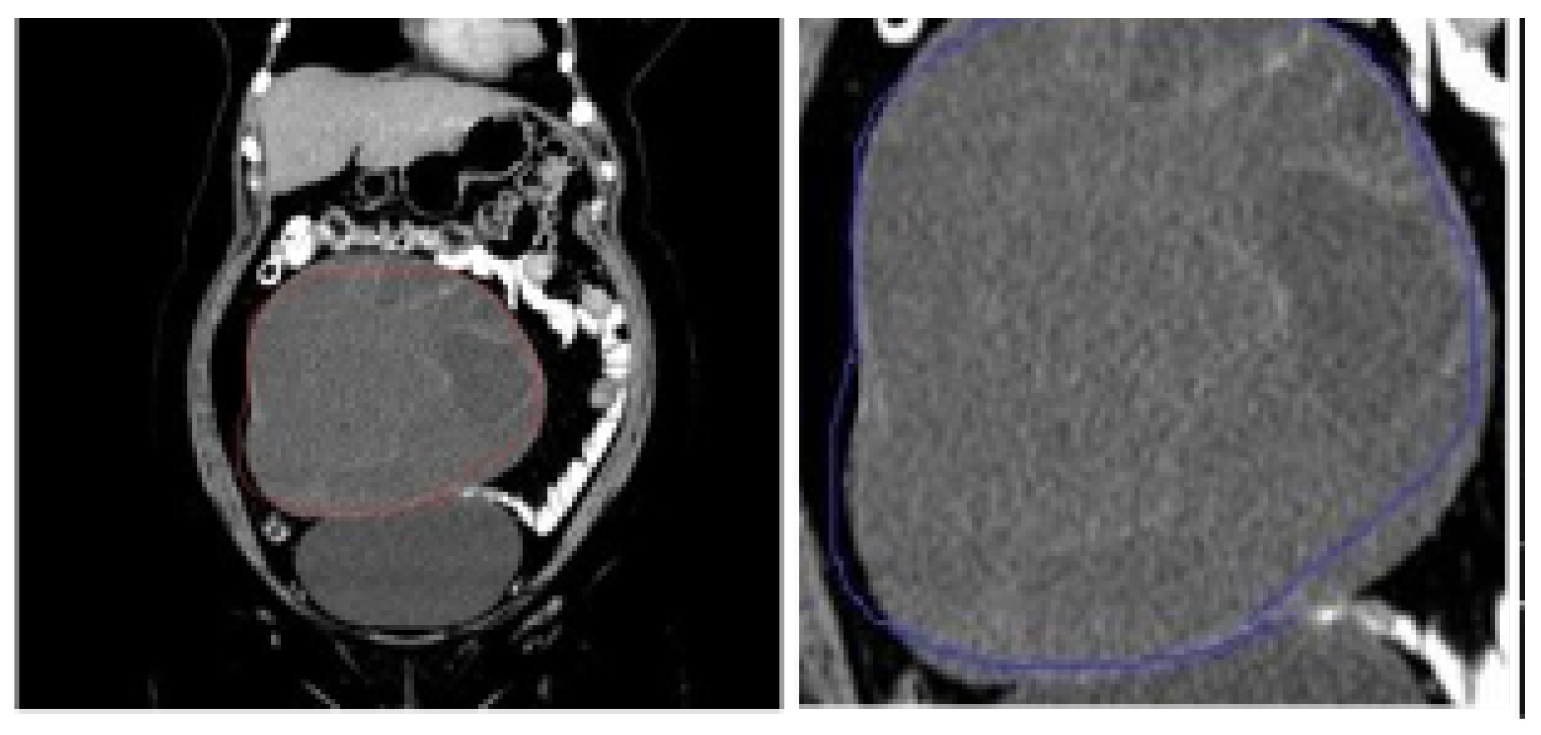

5.1. Data Source and Description

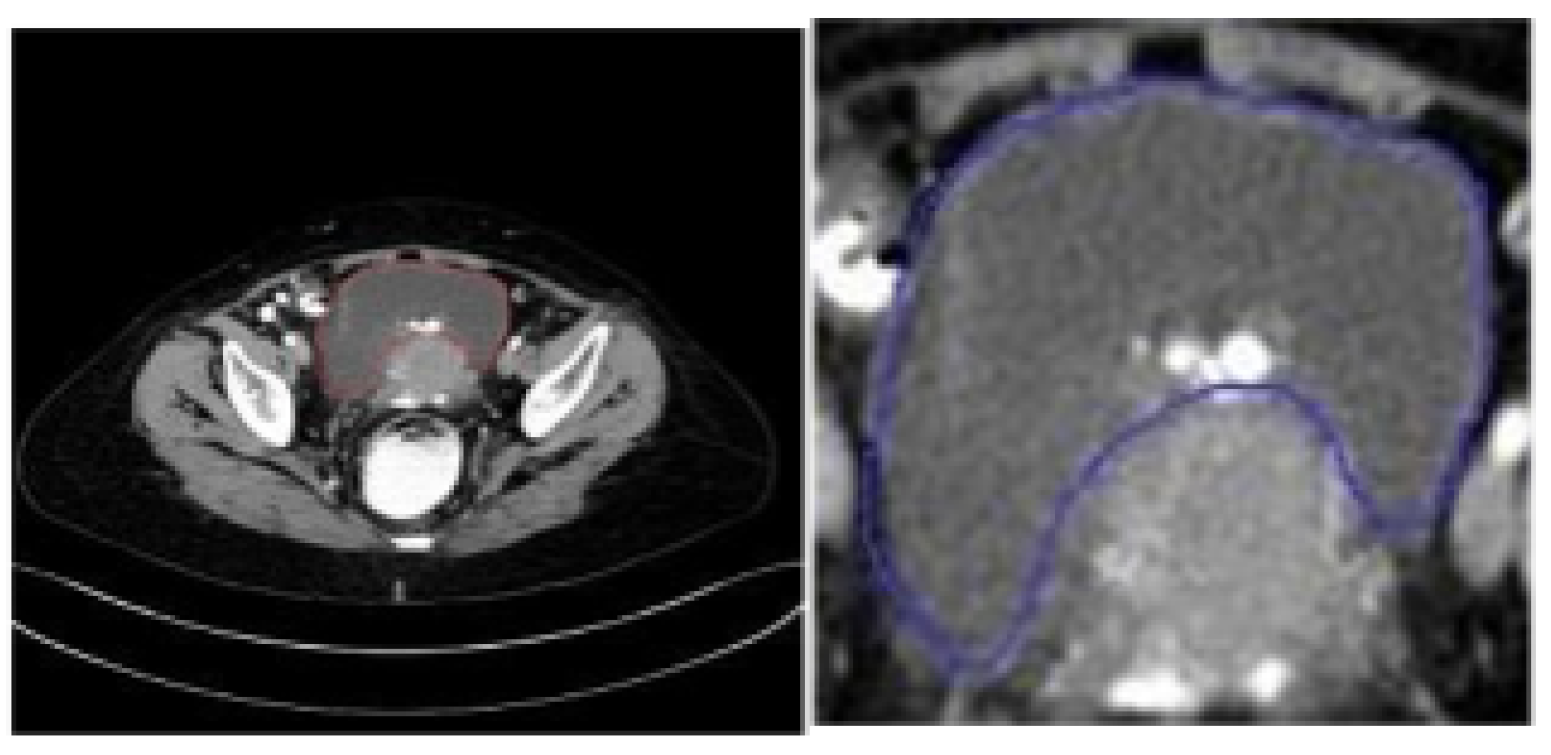

5.2. Data Preprocessing and Dataset Preparation for Training and Evaluation

5.3. Classification Results

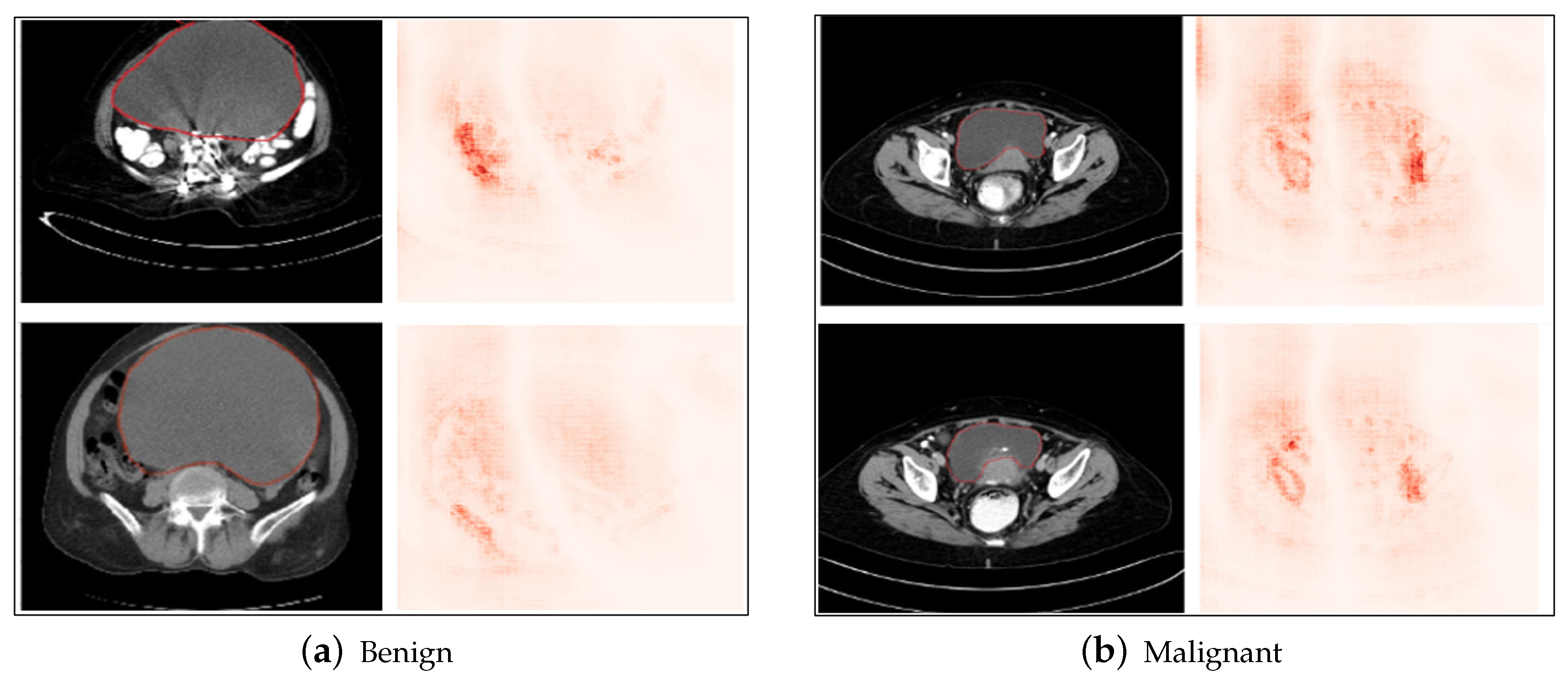

5.4. LIME Results

5.5. Saliency Map Results

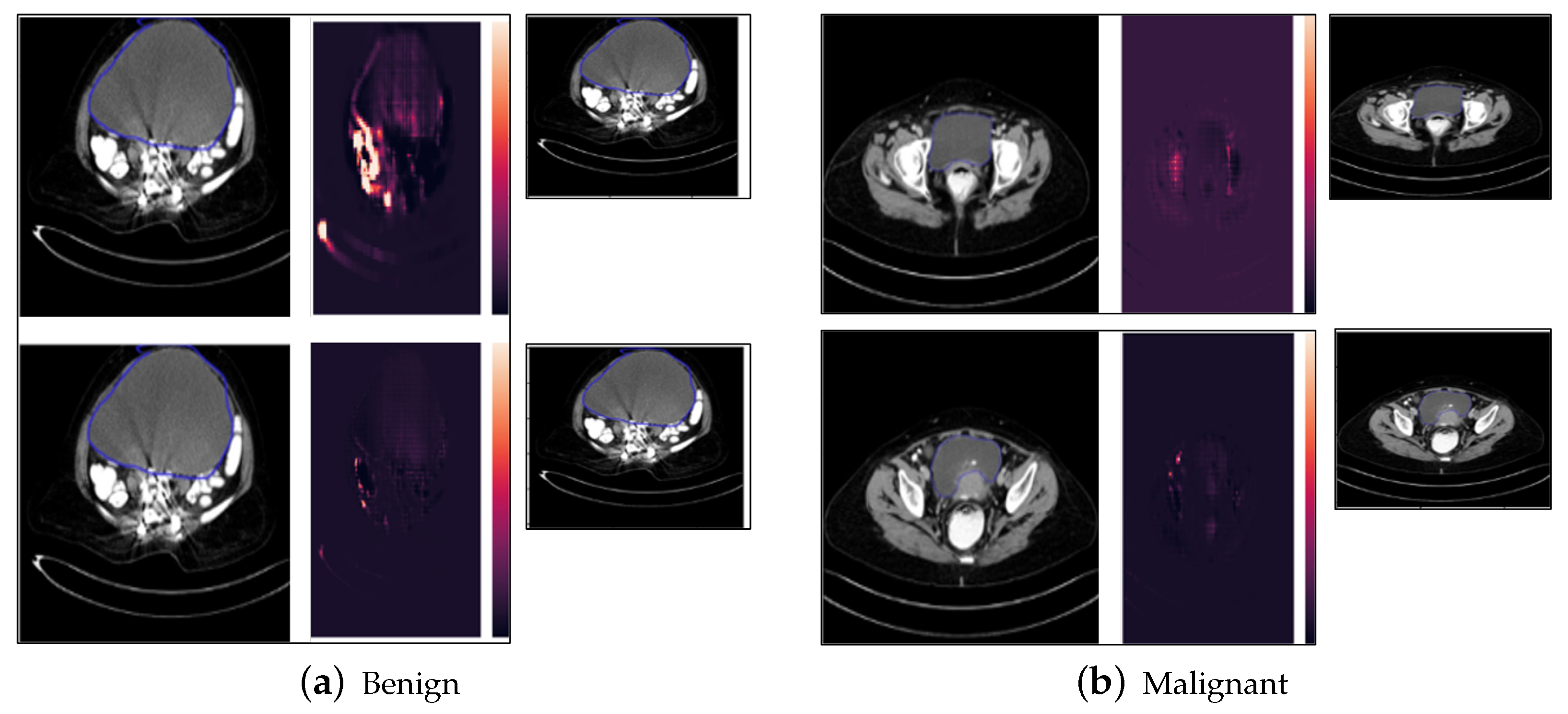

5.6. Occlusion Analysis Results

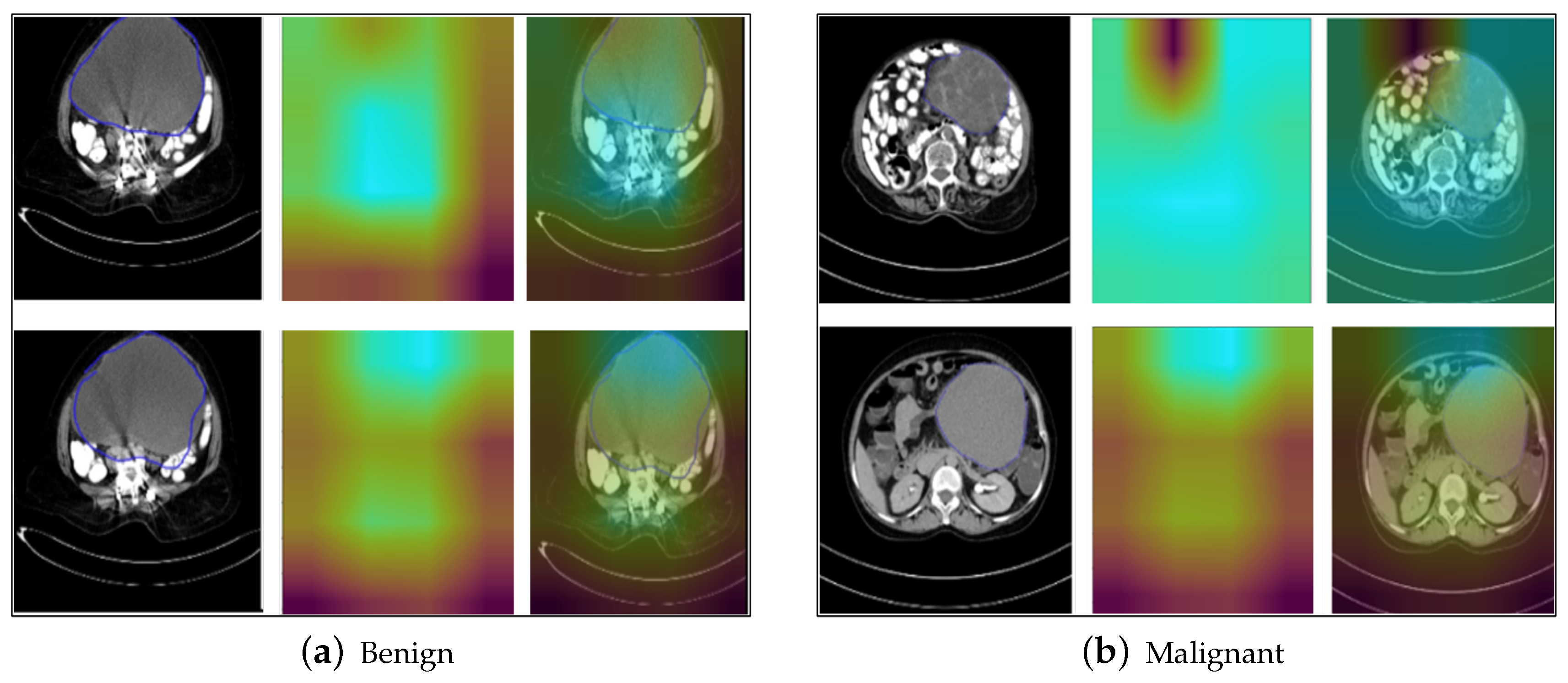

5.7. Grad-CAM Results

5.8. SHAP and SmoothGrad Results

5.9. Comparison of the Explainable AI Results

5.10. Scope of Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wojtyła, C.; Bertuccio, P.; Giermaziak, W.; Santucci, C.; Odone, A.; Ciebiera, M.; Negri, E.; Wojtyła, A.; La Vecchia, C. European trends in ovarian cancer mortality, 1990–2020 and predictions to 2025. Eur. J. Cancer 2023, 194, 113350.

2. Asangba, A.E.; Chen, J.; Goergen, K.M.; Larson, M.C.; Oberg, A.L.; Casarin, J.; Multinu, F.; Kaufmann, S.H.; Mariani, A.; Chia, N.; et al. Diagnostic and prognostic potential of the microbiome in ovarian cancer treatment response. Sci. Rep. 2023, 13, 730.

3. Jan, Y.T.; Tsai, P.S.; Huang, W.H.; Chou, L.Y.; Huang, S.C.; Wang, J.Z.; Lu, P.H.; Lin, D.C.; Yen, C.S.; Teng, J.P.; et al. Machine learning combined with radiomics and deep learning features extracted from CT images: A novel AI model to distinguish benign from malignant ovarian tumors. Insights Imaging 2023, 14, 68.

4. Vela-Vallespín, C.; Medina-Perucha, L.; Jacques-Aviñó, C.; Codern-Bové, N.; Harris, M.; Borras, J.M.; Marzo-Castillejo, M. Women’s experiences along the ovarian cancer diagnostic pathway in Catalonia: A qualitative study. Health Expect. 2023, 26, 476–487.

5. Zacharias, J.; von Zahn, M.; Chen, J.; Hinz, O. Designing a feature selection method based on explainable artificial intelligence. Electron. Mark. 2022, 32, 2159–2184.

6. Gupta, M.; Sharma, S.K.; Sampada, G.C. Classification of Brain Tumor Images Using CNN. Comput. Intell. Neurosci. 2023, 2023, 2002855.

7. Yadav, S.S.; Jadhav, S.M. Deep convolutional neural network based medical image classification for disease diagnosis. J. Big Data 2019, 6, 113.

8. Liang, J. Image classification based on RESNET. J. Phys. Conf. Ser. 2020, 1634, 012110.

9. Saranya, A.; Subhashini, R. A systematic review of Explainable Artificial Intelligence models and applications: Recent developments and future trends. Decis. Anal. J. 2023, 7, 100230.

10. Baehrens, D.; Schroeter, T.; Harmeling, S.; Kawanabe, M.; Hansen, K.; Müller, K.R. How to explain individual classification decisions. J. Mach. Learn. Res. 2010, 11, 1803–1831.

11. Xu, F.; Uszkoreit, H.; Du, Y.; Fan, W.; Zhao, D.; Zhu, J. Explainable AI: A brief survey on history, research areas, approaches and challenges. In Natural Language Processing and Chinese Computing: 8th CCF International Conference, NLPCC 2019, Dunhuang, China, 9–14 October 2019, Proceedings, Part II 8; Springer International Publishing: Basel, Switzerland, 2019; pp. 563–574.

12. Yang, W.; Wei, Y.; Wei, H.; Chen, Y.; Huang, G.; Li, X.; Li, R.; Yao, N.; Wang, X.; Gu, X.; et al. Survey on explainable AI: From approaches, limitations and Applications aspects. Hum.-Centric Intell. Syst. 2023, 3, 161–188.

13. Samek, W.; Wiegand, T.; Müller, K.R. Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models. arXiv 2017, arXiv:1708.08296.

14. Singh, A.; Sengupta, S.; Lakshminarayanan, V. Explainable deep learning models in medical image analysis. J. Imaging 2020, 6, 52.

15. Van der Velden, B.H.; Kuijf, H.J.; Gilhuijs, K.G.; Viergever, M.A. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470.

16. Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A review of machine learning interpretability methods. Entropy 2020, 23, 18.

17. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

18. An, J.; Zhang, Y.; Joe, I. Specific-Input LIME Explanations for Tabular Data Based on Deep Learning Models. Appl. Sci. 2023, 13, 8782.

19. Alqaraawi, A.; Schuessler, M.; Weiß, P.; Costanza, E.; Berthouze, N. Evaluating saliency map explanations for convolutional neural networks: A user study. In Proceedings of the 25th International Conference on Intelligent User Interfaces, Cagliari, Italy, 17–20 March 2020; pp. 275–285.

20. Simonyan, K.; Vedaldi, A.; Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv 2013, arXiv:1312.6034.

21. Li, X.H.; Shi, Y.; Li, H.; Bai, W.; Song, Y.; Cao, C.C.; Chen, L. Quantitative evaluations on saliency methods: An experimental study. arXiv 2020, arXiv:2012.15616.

22. Resta, M.; Monreale, A.; Bacciu, D. Occlusion-based explanations in deep recurrent models for biomedical signals. Entropy 2021, 23, 1064.

23. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

24. Cao, Q.H.; Nguyen, T.T.H.; Nguyen, V.T.K.; Nguyen, X.P. A Novel Explainable Artificial Intelligence Model in Image Classification problem. arXiv 2023, arXiv:2307.04137.

25. Fu, R.; Hu, Q.; Dong, X.; Guo, Y.; Gao, Y.; Li, B. Axiom-based Grad-CAM: Towards accurate visualization and explanation of CNNs. arXiv 2020, arXiv:2008.02312.

26. Kakogeorgiou, I.; Karantzalos, K. Evaluating explainable artificial intelligence methods for multi-label deep learning classification tasks in remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102520.

27. Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 1–10.

28. Bach, S.; Binder, A.; Montavon, G.; Klauschen, F.; Müller, K.R.; Samek, W. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE 2015, 10, e0130140.

29. Hooker, S.; Erhan, D.; Kindermans, P.J.; Kim, B. A benchmark for interpretability methods in deep neural networks. Adv. Neural Inf. Process. Syst. 2019, 32, 1–12.

30. Ishikawa, S.N.; Todo, M.; Taki, M.; Uchiyama, Y.; Matsunaga, K.; Lin, P.; Ogihara, T.; Yasui, M. Example-based explainable AI and its application for remote sensing image classification. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 103215.

31. Shivhare, I.; Jogani, V.; Purohit, J.; Shrawne, S.C. Analysis of Explainable Artificial Intelligence Methods on Medical Image Classification. In Proceedings of the 2023 Third International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT), Bhilai, India, 5–6 January 2023; pp. 1–5.

32. Montavon, G.; Lapuschkin, S.; Binder, A.; Samek, W.; Müller, K.R. Explaining nonlinear classification decisions with deep Taylor decomposition. Pattern Recognit. 2017, 65, 211–222.

33. Shrikumar, A.; Greenside, P.; Kundaje, A. Learning important features through propagating activation differences. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 3145–3153.

34. Smilkov, D.; Thorat, N.; Kim, B.; Viégas, F.; Wattenberg, M. SmoothGrad: Removing noise by adding noise. arXiv 2017, arXiv:1706.03825.

35. Soltani, S.; Kaufman, R.A.; Pazzani, M.J. User-centric enhancements to explainable AI algorithms for image classification. In Proceedings of the Annual Meeting of the Cognitive Science Society, Toronto, ON, Canada, 27–30 July 2022; Volume 44.

36. Springenberg, J.T.; Dosovitskiy, A.; Brox, T.; Riedmiller, M. Striving for simplicity: The all convolutional net. arXiv 2015, arXiv:1412.6806.

37. Sundararajan, M.; Taly, A.; Yan, Q. Axiomatic attribution for deep networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 3319–3328.

38. Vermeire, T.; Brughmans, D.; Goethals, S.; de Oliveira, R.M.B.; Martens, D. Explainable image classification with evidence counterfactual. Pattern Anal. Appl. 2022, 25, 315–335.

39. Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014, Proceedings, Part I; Springer International Publishing: Basel, Switzerland, 2014; Volume 13, pp. 818–833.

40. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

41. Wu, B.; Fan, Y.; Mao, L. Large-scale image classification with explainable deep learning scheme. 2021; under review.

42. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

43. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

| References | Problem Statement | Dataset Used | Algorithms | Performance | Comparative Analysis with Proposed System |
|---|---|---|---|---|---|
| Jan, Y.T. et al. [3] | Classification of ovarian tumors into benign and malignant using a proposed AI model | CT images of 185 ovarian tumors from 149 patients | Ensemble AI model | Accuracy: 82%, Specificity: 89%, Sensitivity: 68% | The authors achieved an accuracy of 82% for classification on this dataset, which is higher than that of the existing system used by junior radiologists. In our present work, we used a dataset of 53 patients from a hospital in India. The results show a higher accuracy of 97.5% on the test dataset for classification using the proposed modified ResNet50 model. |
| Gupta, M. et al. [6] | Brain tumor classification using CNN | Publicly available MRI images from the UCI repository | Custom CNN and VGG16 | Custom CNN achieved 100% accuracy, while VGG16 achieved 96% accuracy | The authors trained a highly accurate custom CNN model for brain tumor classification and also tested a pre-trained VGG16 model. In our paper, compared with VGG16 and other SOTA architectures, the proposed modified ResNet50 achieves the highest accuracy of 97.5% on the ovarian tumor test dataset. |
| Yadav, S.S. et al. [7] | Medical image classification using deep CNNs | Chest X-ray images classified as Normal and Pneumonia | Custom CNN and VGG16 | Custom CNN achieved 88.3% accuracy, while VGG16 achieved 92.4% accuracy | The authors implemented a custom CNN and VGG16 on a chest X-ray image dataset and achieved an accuracy greater than 90% for VGG16. In our paper, compared with VGG16, the proposed modified ResNet50 achieves higher classification performance on the ovarian tumor dataset. |

| References | Problem Statement | Dataset Used | Algorithms | Comparative Analysis with Proposed System |
|---|---|---|---|---|
| An, J. et al. [18], Soltani, S. et al. [35] | Explainability of CNN model output using LIME explanations | Wine Quality dataset, Pima Indians Diabetes dataset, and a publicly available dataset comprising 14,380 images of 66 bird species from Flickr | LIME | An, J. et al. [18] used LIME to explain feature importance in a binary classification problem with numerical and categorical attributes. Soltani, S. et al. [35] evaluated LIME-generated explanations on bird species images through study trials with expert bird-watchers, who can distinguish bird species by specific characteristics and distinguishing features. In our research work, the concept of LIME, or local explanations, was extended to identify the characteristics of a tumor that cause it to be benign or malignant. |
| Alqaraawi, A. et al. [19] | Explainability of CNN models using Saliency Map and evaluation of performance | ILSVRC-2013 test dataset, PASCAL VOC dataset, COCO dataset | Saliency Map | The authors of these research papers presented class saliency as a method for the explainability of CNN output, and evaluated these methods using scores for the saliency features, class sensitivity, and stability of the predicted confidence. In our research work, we implemented the Saliency Map on the ovarian tumor dataset by constructing class Saliency Maps as described in the papers. However, evaluation of the explanation is beyond the scope of this paper and is a prospective topic for continuation of this work. |
| Selvaraju, R.R. et al. [23], Fu, R. et al. [25], Cao, Q.H. et al. [24] | Explainability of CNN classification output using Class Activation Mapping (Grad-CAM, Segmentation CAM) | ImageNet Large Scale Visual Recognition Challenge (ILSVRC) dataset, publicly available dog and cat binary classification dataset | Grad-CAM | Selvaraju, R.R. et al. [23] proposed Gradient Class Activation Mapping (Grad-CAM), which highlights the regions of interest in an image for a target class based on the gradients. Fu, R. et al. [25] provided an enhanced version of Grad-CAM, called XGrad-CAM, that takes into account two axioms, sensitivity and conservation, for evaluating the Grad-CAM explanations. Cao, Q.H. et al. [24] proposed Segmentation-Class Activation Mapping (SeCAM), which combines the capabilities of both LIME and Grad-CAM. |
| Resta, M. et al. [22] | Explainability using Occlusion Analysis for biomedical dataset | Cuff-Less Blood Pressure Estimation Data Set (CBPEDS) pertaining to physiological signals, the Combined measurement of ECG, Breathing and Seismocardiograms Database (CEBSDB), and the PTB Diagnostic ECG Database (PTBDB) | Occlusion Analysis | The authors proposed occlusion-based explanations that determine the effect of each input feature on the final classification output. Similar occlusion-based experiments were conducted on the ovarian tumor dataset in our study. |
| Smilkov, D. et al. [34] | Explainability of CNN output using SmoothGrad | ILSVRC-2013 dataset | SmoothGrad | The authors proposed the SmoothGrad method, an enhancement of gradient-based sensitivity maps. In our paper, we followed a similar approach to implement SmoothGrad for explanation of ResNet60 output on the ovarian tumor dataset. |
| Lundberg, S.M. et al. [27], Zacharias, J. et al. [5] | Explainability and feature selection for classification using SHAP | Publicly available datasets: Taiwanese bankruptcy prediction, German credit, diabetes, credit card fraud detection, Spam base, breast cancer Wisconsin (diagnostic) | SHAP | The authors demonstrated a method that uses SHAP to eliminate less important features before training the classification model. The feature importance scores generated by the SHAP model were used to select the top features (selected by the user through elimination) important for classification. However, the features considered there are numerical or categorical. In our paper, we demonstrated the use of SHAP on the ovarian tumor dataset to highlight the important pixels. Since the input dataset comprises images, the highlighted pixels give a qualitative idea of the important features considered by the model. |
| Singh, A. et al. [14], Van der Velden, B.H. et al. [15], Linardatos, P. et al. [16], Shivhare, I. et al. [31] | Comparative studies demonstrating the use of explainable AI methods in the medical imaging domain | Medical imaging datasets like X-ray, CT-scan, etc. | Backpropagation-based approaches like backpropagation, deconvolution, guided backpropagation, Class Activation Mapping (CAM), Grad-CAM, LRP, SHAP, Trainable attention, Occlusion sensitivity, LIME, Textual Explanation, Testing with Concept Activation Vectors (TCAV) | The authors discussed attribution-based, occlusion-based and backpropagation-based explainability methods that can be used for a variety of datasets in the medical imaging domain, including X-rays, CT-scans, breast imaging, and skin imaging. We used backpropagation, activation mapping, Shapley feature importance values, and local explanations using LIME and occlusion analysis in our present work for a qualitative analysis of ovarian tumor classification. Apart from the above methods, the authors also explored attention-based methods, concept vectors, textual justification, intrinsic explainability, etc. as future scope for this research domain. |
| Bach, S. et al. [28], Ishikawa, S.N. et al. [30], Montavon, G. et al. [32], Vermeire, T. et al. [38], Shrikumar, A. et al. [33], Wu, B. et al. [41] | Other explainability methods, apart from the ones mentioned above | MNIST dataset, remote sensing image dataset from the Sentinel-2 satellite, ILSVRC dataset, DNA genome sequence dataset, ImageNet-1K, Cifar-100 | Pixel-wise explanations using layer-wise propagation, example-based explainable AI, deep Taylor decomposition, evidence counterfactual, DeepLift, channel and spatial attention model | The authors of these research papers proposed further methods to explain CNN predictions using pixel contributions, example-based AI, decomposition of deep neural networks, counterfactual explanations, deep learning-based feature selection, and attention models. These methods have not been implemented in our paper; implementing them on the ovarian tumor dataset is a prospect for expanding the scope of our future research. |
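
The SmoothGrad row above describes averaging gradient-based sensitivity maps over noisy copies of the input. A minimal sketch of that idea follows, assuming a hypothetical toy model with an analytic gradient (`toy_grad`) in place of the trained network. The sample count and noise level are illustrative defaults, not values taken from this paper.

```python
import numpy as np

def smoothgrad(grad_fn, x, n_samples=50, noise_frac=0.1, seed=0):
    """Average grad_fn over Gaussian-perturbed copies of x."""
    rng = np.random.default_rng(seed)
    # Noise scale expressed as a fraction of the input's value range.
    sigma = noise_frac * (x.max() - x.min())
    total = np.zeros_like(x, dtype=float)
    for _ in range(n_samples):
        noisy = x + rng.normal(0.0, sigma, size=x.shape)
        total += grad_fn(noisy)
    return total / n_samples

# Hypothetical toy model: score(x) = tanh(sum(w * x)).
w = np.array([[1.0, -2.0], [0.5, 0.0]])

def toy_grad(x):
    s = np.tanh(np.sum(w * x))
    return (1.0 - s ** 2) * w      # d score / d x, derived by hand

x = np.array([[0.2, 0.1], [0.4, 0.3]])
plain = toy_grad(x)                # vanilla gradient (saliency) map
smooth = smoothgrad(toy_grad, x)   # SmoothGrad map
print(plain)
print(smooth)
```

For a real CNN, `grad_fn` would be replaced by the gradient of the class score with respect to the input pixels, obtained from the deep learning framework's automatic differentiation.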

| Split | Benign | Malignant |
|---|---|---|
| Train | 2633 | 1011 |
| Validation | 1128 | 433 |
| Test | 376 | 144 |
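
The split sizes and class balance implied by these counts can be worked out with a short calculation. The counts come from the table above, while the dictionary layout and the `summarize` helper are illustrative names introduced here.

```python
# Image counts per subset and class, copied from the table above.
split = {
    "train": {"benign": 2633, "malignant": 1011},
    "validation": {"benign": 1128, "malignant": 433},
    "test": {"benign": 376, "malignant": 144},
}

def summarize(split):
    """Return the total image count and per-subset size, share, and benign fraction."""
    total = sum(sum(counts.values()) for counts in split.values())
    rows = {}
    for name, counts in split.items():
        n = sum(counts.values())
        rows[name] = {
            "images": n,
            "share_pct": round(100 * n / total, 1),
            "benign_pct": round(100 * counts["benign"] / n, 1),
        }
    return total, rows

total, rows = summarize(split)
print(total)  # 5725
for name, row in rows.items():
    print(name, row)
```

Under these counts the train, validation, and test subsets hold about 63.7%, 27.3%, and 9.1% of the 5725 images, and each subset is about 72.3% benign, so the class ratio is preserved across subsets. It also follows that a classifier that always predicts benign would score about 72.3% accuracy on the test subset, which is a useful baseline when reading the accuracy figures.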

| Stage | Layers | Conv1 Filters | Conv2 Filters | Conv3 Filters | Total Conv Filters | Stride | Padding and ReLU | Batch Norm |
|---|---|---|---|---|---|---|---|---|
| 1 | Conv | 64 | 64 | 256 | 384 | (2, 2) | Valid | Yes |
| 1 | Identity (x2) | 64 | 64 | 256 | 384 | (1, 1) | Valid | Yes |
| 2 | Conv | 128 | 128 | 512 | 768 | (2, 2) | Valid | Yes |
| 2 | Identity (x3) | 128 | 128 | 512 | 768 | (1, 1) | Valid | Yes |
| 3 | Conv | 256 | 256 | 1024 | 1536 | (2, 2) | Valid | Yes |
| 3 | Identity (x5) | 256 | 256 | 1024 | 1536 | (1, 1) | Valid | Yes |
| 4 | Conv | 512 | 512 | 2048 | 3072 | (2, 2) | Valid | Yes |
| 4 | Identity (x2) | 512 | 512 | 2048 | 3072 | (1, 1) | Valid | Yes |
| 5 | Conv | 512 | 512 | 2048 | 3072 | (1, 1) | Valid | Yes |
| 5 | Identity (x2) | 512 | 512 | 2048 | 3072 | (1, 1) | Valid | Yes |
| 6 | Conv | 1024 | 1024 | 4096 | 6144 | (2, 2) | Valid | Yes |
| 6 | Identity (x2) | 1024 | 1024 | 4096 | 6144 | (1, 1) | Valid | Yes |

| Architecture | Input Image Size | Optimizer | Learning Rate | Batch Size | Maximum Number of Filters | Filter Size | Activation Function | Pooling Layers | Flatten Layer | Padding | Strides | Epochs | Steps per Epoch | Validation Steps | Loss |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GoogLeNet | 224 × 224 × 3 | Adam | 0.001 | 64 | 192 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 200 | 10 | 5 | Sparse Categorical Cross Entropy |
| Inception v4 | 224 × 224 × 3 | Adam | 0.0007 | 64 | 192 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 150 | 10 | 5 | Sparse Categorical Cross Entropy |
| VGG16 | 224 × 224 × 3 | Adam | 0.0005 | 64 | 512 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 100 | 10 | 5 | Binary Cross Entropy |
| VGG19 | 224 × 224 × 3 | Adam | 0.001 | 64 | 256 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 150 | 10 | 5 | Binary Cross Entropy |
| ResNet16 | 224 × 224 × 3 | Adam | 0.001 | 64 | 256 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 200 | 10 | 5 | Binary Cross Entropy |
| ResNeXt | 224 × 224 × 3 | Adam | 0.001 | 64 | 512 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 200 | 10 | 5 | Binary Cross Entropy |
| Inception-ResNet | 224 × 224 × 3 | Adam | 0.001 | 64 | 256 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 200 | 10 | 5 | Sparse Categorical Cross Entropy |
| Xception | 224 × 224 × 3 | Adam | 0.001 | 64 | 728 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 200 | 10 | 5 | Sparse Categorical Cross Entropy |
| ResNet50 | 224 × 224 × 3 | Adam | 0.001 | 64 | 128 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 200 | 10 | 5 | Binary Cross Entropy |
| ResNet60 (enhanced ResNet50) | 224 × 224 × 3 | Adam | 0.001 | 64 | 1024 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 200 | 10 | 5 | Binary Cross Entropy |
| EfficientNetB0 | 224 × 224 × 3 | Adam | 0.001 | 64 | 512 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 150 | 10 | 5 | Binary Cross Entropy |
| DenseNet121 | 224 × 224 × 3 | Adam | 0.001 | 64 | 1024 | 3 × 3 | ReLU (Softmax for final layer) | Max Pooling, Average Pooling | Flatten | Same | 2 | 200 | 10 | 5 | Binary Cross Entropy |

| Model Name | Variant Name | Train Accuracy | Train Loss | Test Accuracy | Test Loss |
|---|---|---|---|---|---|
| Inception | GoogLeNet (Inception v1) | 91.2% | 0.24 | 92.5% | 0.25 |
| | Inception v4 | 93.8% | 0.12 | 80% | 42.60 |
| | Xception | 93.75% | 0.17 | 95% | 0.07 |
| | Inception-ResNet | 97.5% | 0.09 | 95% | 0.09 |
| VGG | VGG16 | 71.8% | 2.46 | 72.5% | 2.42 |
| | VGG19 | 74.4% | 0.56 | 74.37% | 0.57 |
| ResNet | ResNet16 | 98.75% | 0.03 | 92.5% | 0.17 |
| | ResNeXt | 95% | 0.02 | 90% | 0.40 |
| | ResNet50 | 96.2% | 0.16 | 90% | 0.52 |
| | ResNet60 (proposed) | 95% | 0.19 | 97.5% | 0.14 |
| EfficientNet | EfficientNetB0 | 71.8% | 0.60 | 72.5% | 0.58 |
| DenseNet | DenseNet121 | 70% | 2.25 | 70.63% | 0.54 |

| Accuracy | Precision | Recall (TPR) | F1-Score | Specificity (TNR) | FPR | FNR | Error |
|---|---|---|---|---|---|---|---|
| 97.5% | 96.66% | 100% | 98.30% | 90.97% | 9.03% | 0% | 2.5% |
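
As a consistency check, the values in this table can be reproduced from a single confusion matrix. The counts below are not stated in the source. They are inferred from the 520-image test split (376 benign, 144 malignant) and the reported rates, under the assumption that benign is treated as the positive class, which is the assignment under which the numbers agree.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)            # true positive rate
    specificity = tn / (tn + fp)       # true negative rate
    accuracy = (tp + tn) / total
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "specificity": specificity,
        "fpr": fp / (fp + tn),
        "fnr": fn / (fn + tp),
        "error": 1 - accuracy,
    }

# Inferred counts (assumption): all 376 benign images correct,
# 13 of the 144 malignant images predicted as benign.
m = binary_metrics(tp=376, fp=13, tn=131, fn=0)
for name, value in m.items():
    print(f"{name}: {100 * value:.2f}%")
```

With these counts every metric matches the table to two decimal places. Under this reading, all 13 test errors are malignant images classified as benign.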

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guha, S.; Kodipalli, A.; Fernandes, S.L.; Dasar, S. Explainable AI for Interpretation of Ovarian Tumor Classification Using Enhanced ResNet50. Diagnostics 2024, 14, 1567. https://doi.org/10.3390/diagnostics14141567
