Research on Artificial-Intelligence-Assisted Medicine: A Survey on Medical Artificial Intelligence

Abstract

1. Introduction

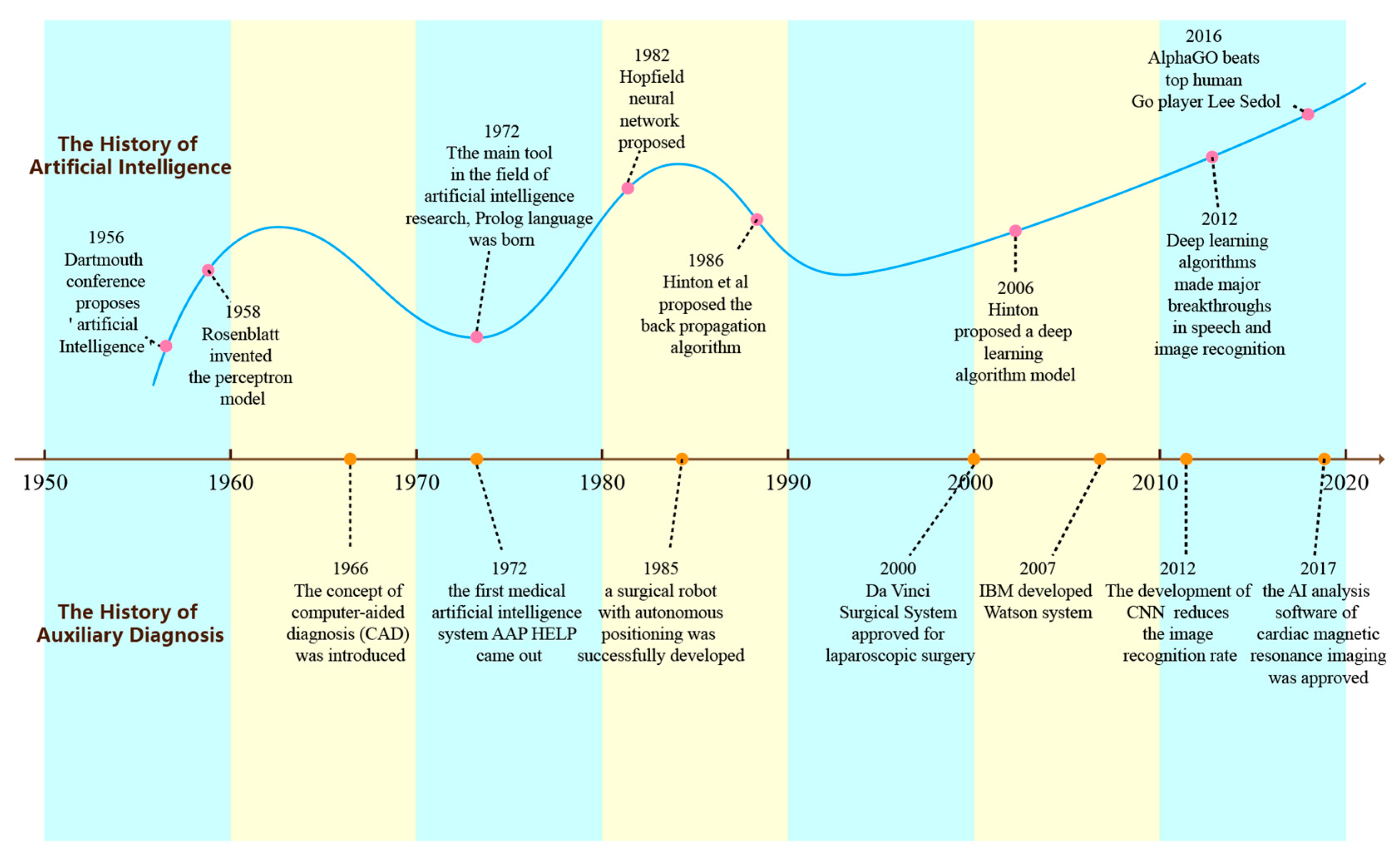

1.1. The Development History of Artificial Intelligence

1.2. The Development of AI in the Medical Field

1.3. Status Quo of AI in the Field of Assisted Medicine

2. AI in Healthcare Informatics

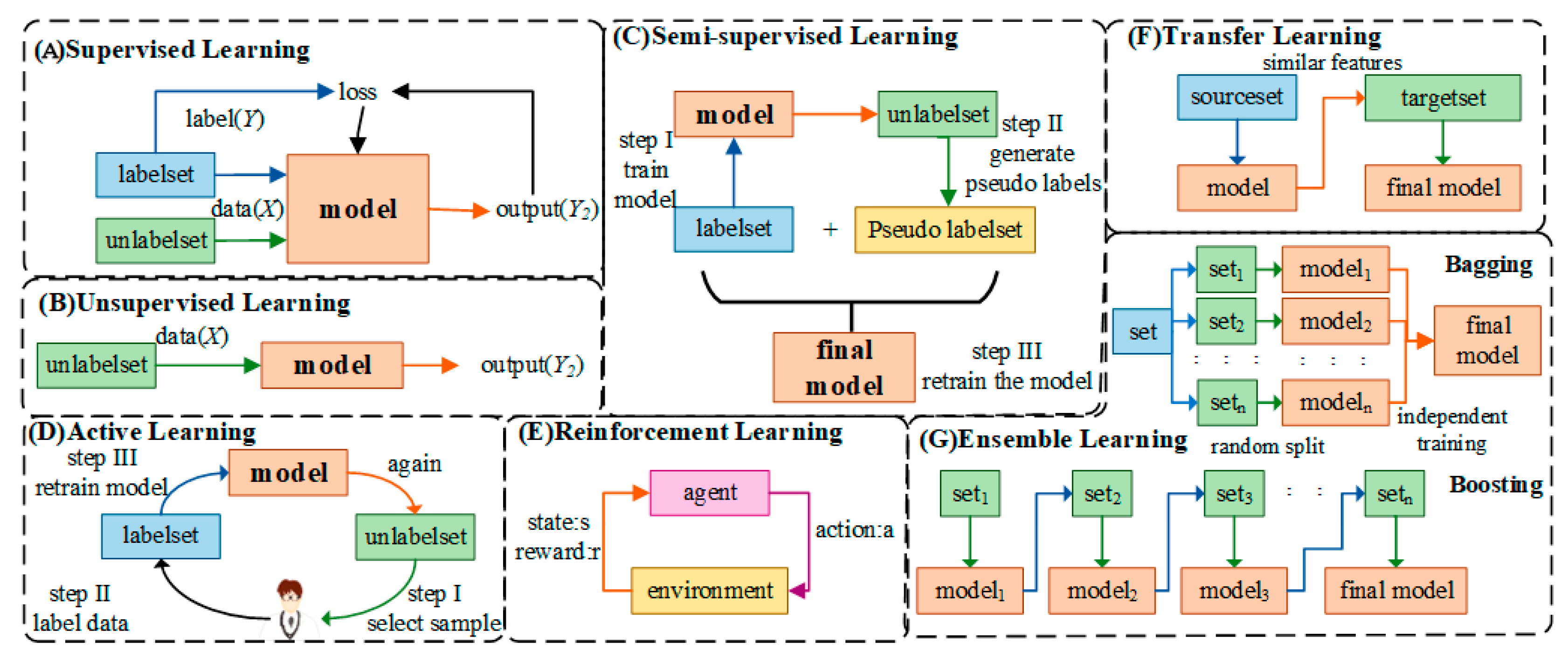

2.1. Classification of Artificial Intelligence

2.2. Artificial Intelligence Frameworks

2.3. Modeling Methods of Artificial Intelligence

3. The Application of AI in the Medical Field

3.1. The Application of AI in Genomics

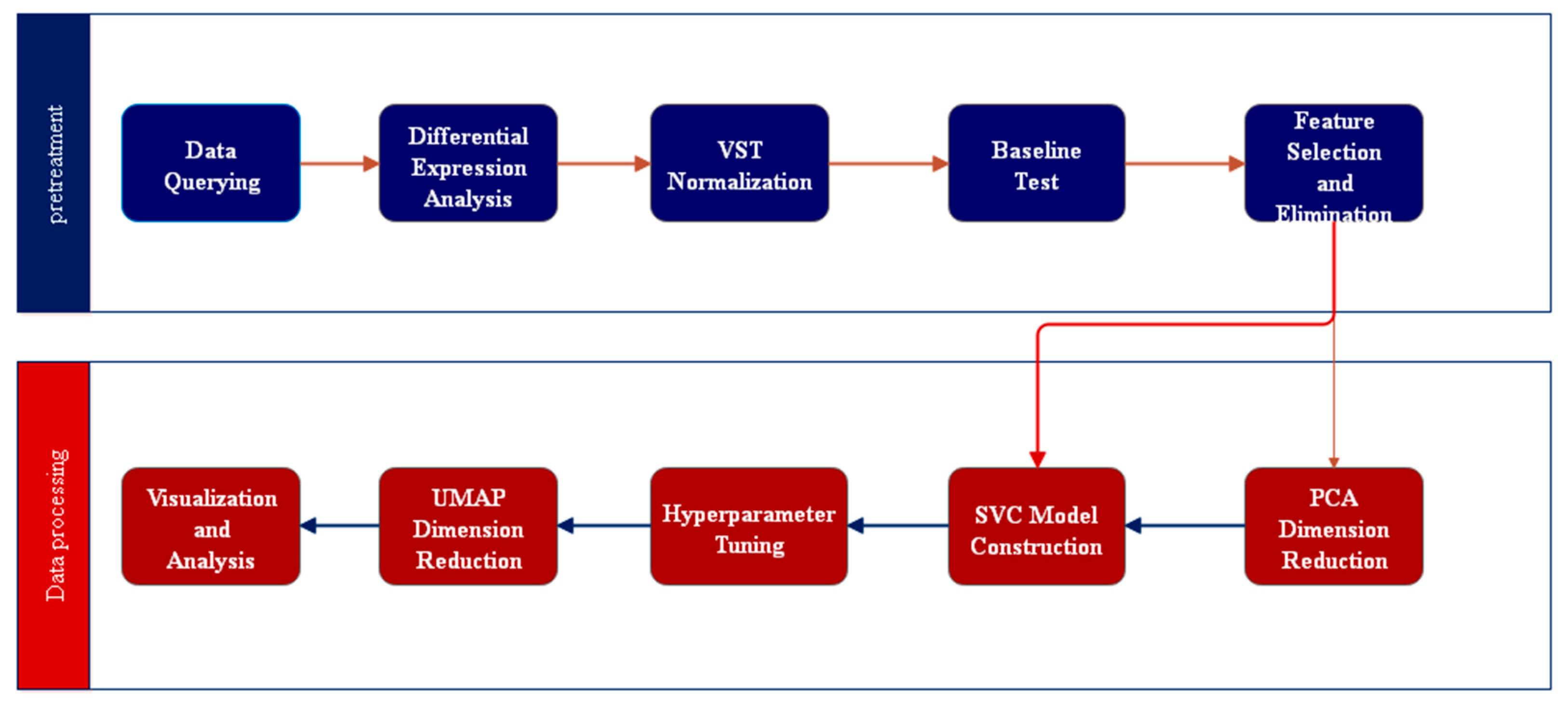

3.1.1. Disease Prediction and Analysis

3.1.2. Analysis of Drugs and Pathogenesis

- (1) Reliable and interpretable algorithms: multivariate methods compatible with polygenic properties, polygenic risk scores (PRS), and data-driven multivariate multimodal methods.

- (2) Improved diagnosis algorithms: multi-level and multi-dimensional frameworks, etc.

- (3) Improved treatment algorithms: biomarker prediction algorithms, treatment response prediction, etc.

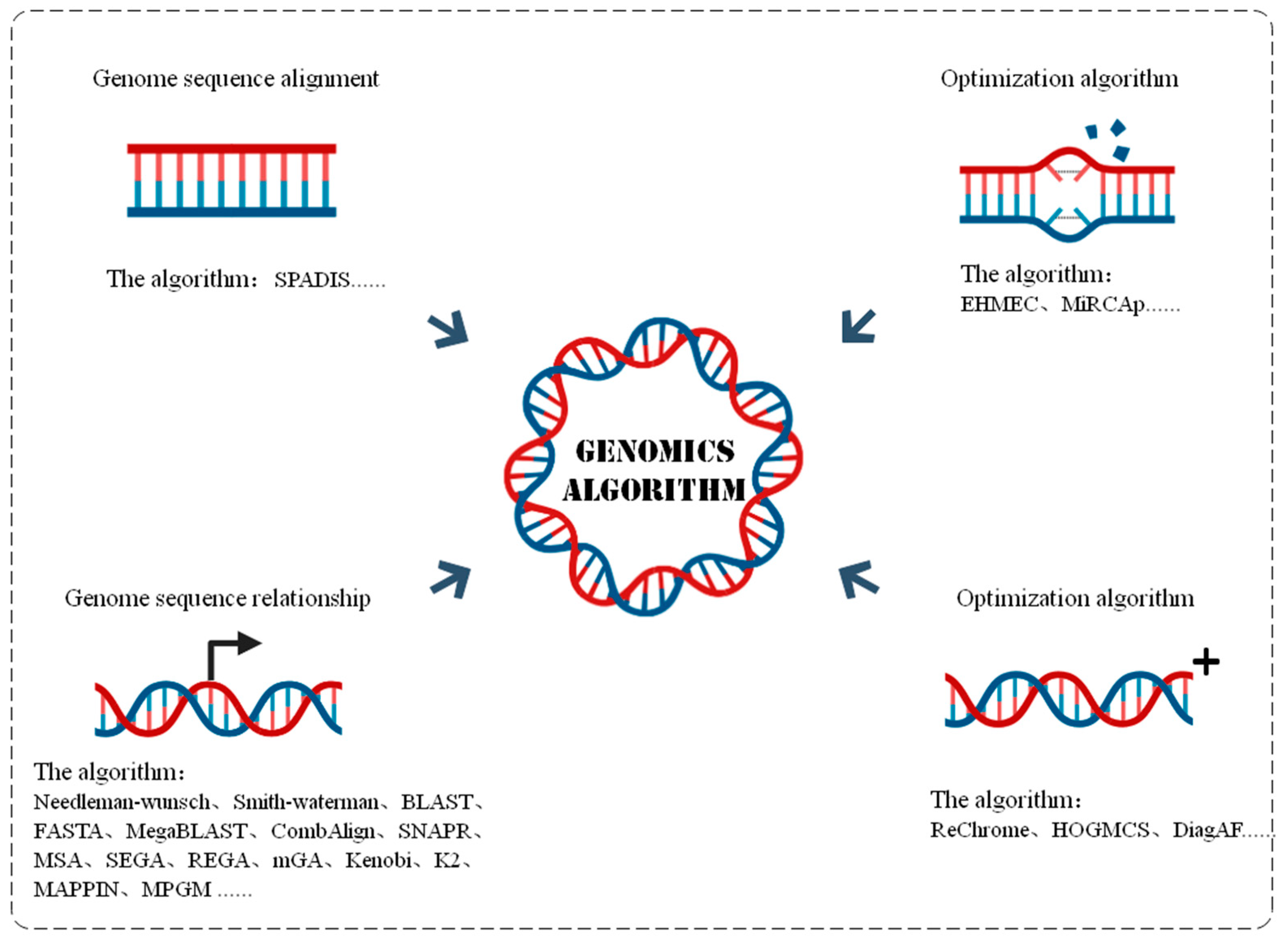

3.1.3. Genome Algorithm

3.2. The Application of AI in Drug Discovery

3.2.1. Drug Target Discovery

3.2.2. Drug Screening

3.2.3. Drug Design

3.2.4. Drug Synthesis

3.2.5. Drug Repurposing

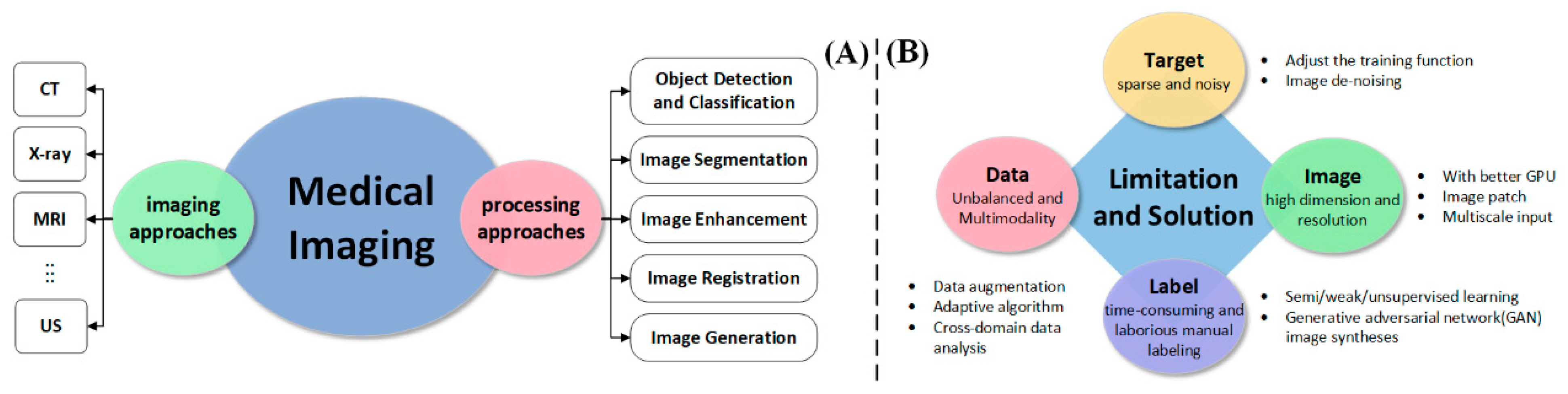

3.3. The Application of AI in Medical Imaging

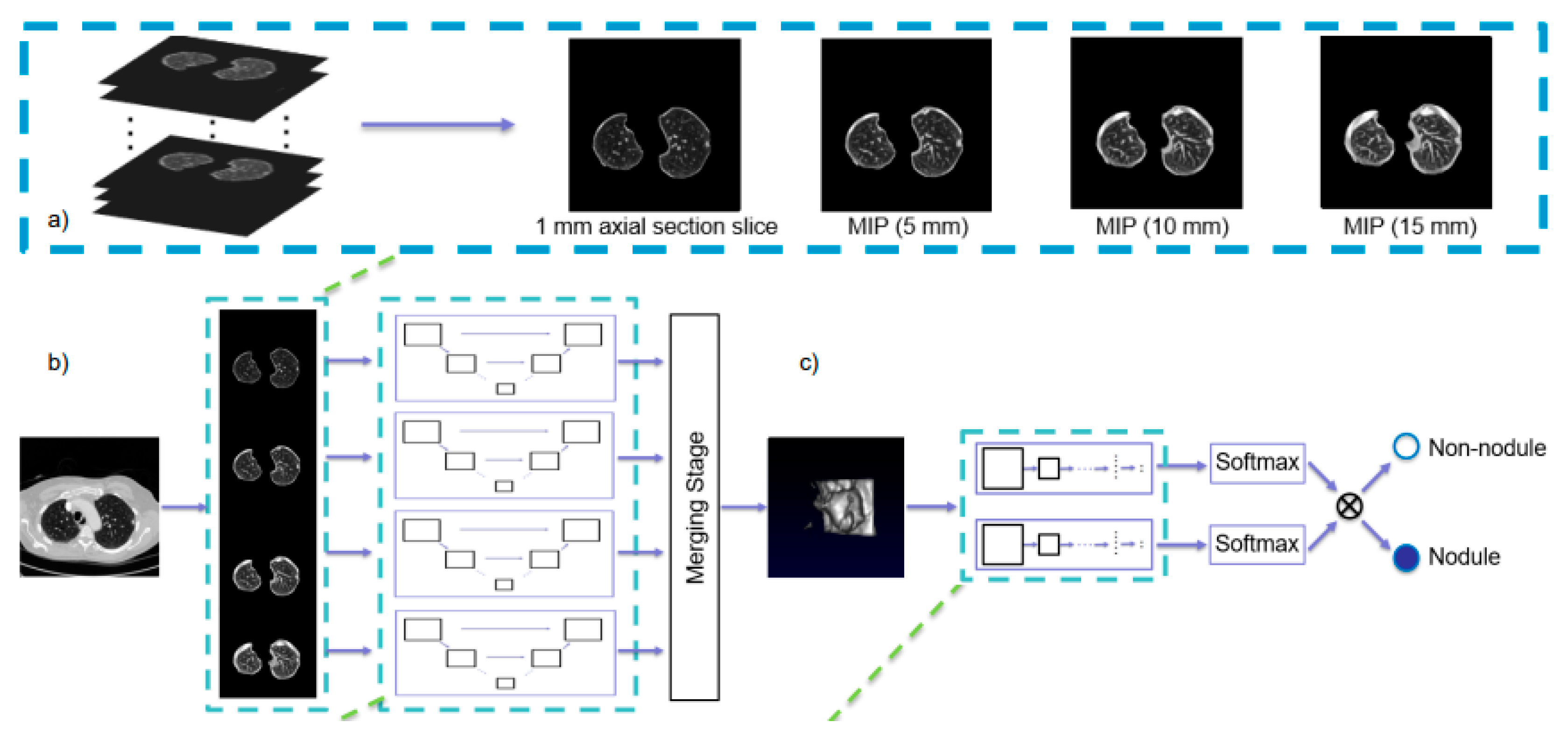

3.3.1. Detection and Classification

3.3.2. Medical Image Segmentation

- (1) Unlike natural images, medical images often have blurred, discontinuous boundaries, which can cause adjacent regions to be misidentified.

- (2) Medical images often have few annotations, so making use of large amounts of unlabeled data is a key direction for the development of image segmentation.

3.3.3. Medical Image Registration

3.3.4. Other Applications of AI in Medical Imaging

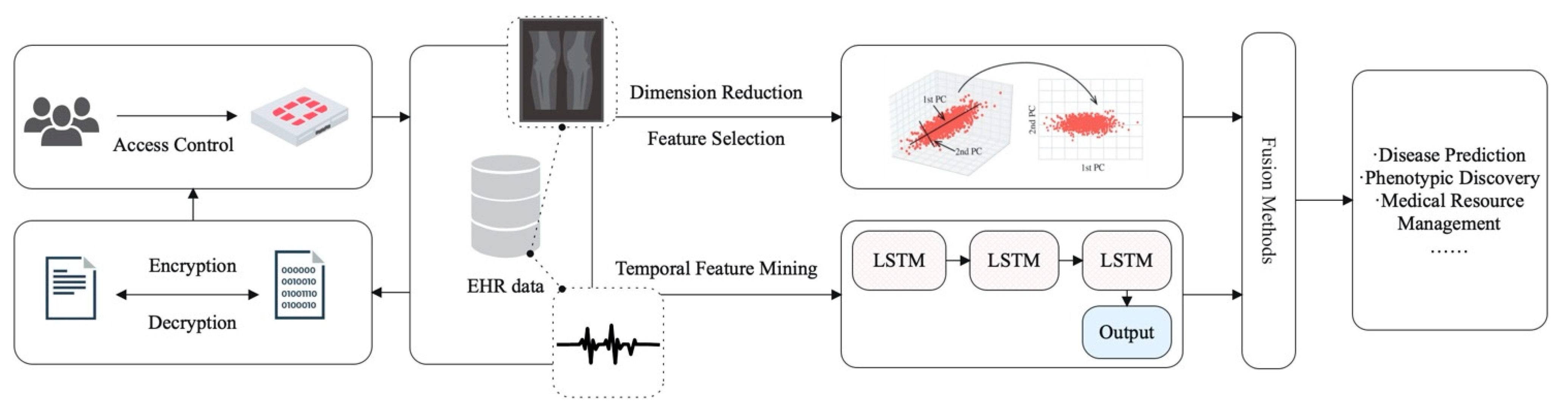

3.4. The Application of AI in Electronic Health Records

3.4.1. EHR Data Characteristics

- (1) Patient representation based on discrete medical concepts extracts patient features from discrete concepts such as International Classification of Diseases (ICD) codes or medical texts. The main difficulties of this type of representation are relation extraction from high-dimensional medical text data and the alignment of medical codes across different standards.

- (2) Patient representation based on time series medical data represents the patient through time series of vital signs such as respiratory rate, heart rate, and blood pressure. The difficulty of this type of representation is building a model that automatically extracts associations between a patient's signs and disease symptoms across different time series. The uneven and asynchronous sampling of time series data must also be taken into account.

- (3) Patient representation based on multimodal data fuses diagnostic codes, medical texts, vital signs data, medical images, and other data from different modalities to express patient characteristics. The main difficulties are handling the heterogeneity of the data and learning associations across EHR data from different modalities.
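The uneven and asynchronous sampling mentioned in item (2) can be illustrated with a minimal sketch. It assumes one simple and common remedy, last-observation-carried-forward (LOCF) resampling onto a shared time grid; the survey does not prescribe this method, and the function names (`locf_resample`, `align_vitals`) and the toy readings are hypothetical.

```python
from bisect import bisect_right
from typing import Dict, List, Optional, Tuple

# One vital-sign series: (time in minutes, value) pairs sorted by time.
Series = List[Tuple[float, float]]


def locf_resample(series: Series, grid: List[float]) -> List[Optional[float]]:
    """Return, for each grid time, the last value observed at or before it.

    Grid points that precede the first observation yield None.
    """
    times = [t for t, _ in series]
    out: List[Optional[float]] = []
    for g in grid:
        idx = bisect_right(times, g)  # count of observations with time <= g
        out.append(series[idx - 1][1] if idx > 0 else None)
    return out


def align_vitals(
    vitals: Dict[str, Series], start: float, stop: float, step: float
) -> Dict[str, List[Optional[float]]]:
    """Resample every series onto the same regular grid [start, stop]."""
    n = int((stop - start) / step) + 1
    grid = [start + i * step for i in range(n)]
    return {name: locf_resample(s, grid) for name, s in vitals.items()}


if __name__ == "__main__":
    # Toy data (illustrative only): the two signs are recorded at different,
    # irregular times, i.e. unevenly and asynchronously.
    vitals = {
        "heart_rate": [(0, 82.0), (7, 88.0), (31, 91.0)],
        "resp_rate": [(12, 18.0), (45, 22.0)],
    }
    for name, values in align_vitals(vitals, start=0, stop=60, step=15).items():
        print(name, values)
```

After alignment every sign has one value per grid step, so the series can be stacked into a fixed-size matrix for a downstream model. LOCF is only one option; interpolation or models that consume irregular timestamps directly are alternatives with different trade-offs.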

3.4.2. EHR Data-Assisted Disease Diagnosis and Prediction

- (1) Disease diagnosis and prediction based on discrete medical concepts often face the challenges of high dimensionality and sparsity. Reference [120] proposes a semi-supervised multi-task learning method that treats prediction of the estimated glomerular filtration rate (eGFR) state at a single time point as one task, using eGFR, pathological classification, and other structured EHR data to predict the short-term evolution of chronic kidney disease.

- (2) For disease diagnosis and prediction based on time series medical data, reference [121] proposes a model based on third-order tensor decomposition that captures ternary correlations involving additional clinical attributes or temporal features in EHR diagnostic records, and uses them to predict the incidence of chronic diseases. Reference [85] uses an attention-based Bi-LSTM model to capture the temporal information in time series EHR data and predict patients' risk of cardiovascular disease.

- (3) Disease diagnosis and prediction based on multimodal data typically fuses physiological signal features with structured EHR data, or medical images with structured EHR data. Advanced signal processing techniques, such as Taut String estimation and the dual-tree complex wavelet packet transform, are used to extract features from ECG signals to predict the onset of complications in cardiovascular surgery. In addition, chest X-rays have been used together with clinician confidence levels in the diagnosis, which serve as a measure of label uncertainty, to diagnose acute respiratory distress syndrome (ARDS) [122].
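To make the attention idea in item (2) concrete, the following is a minimal sketch of attention pooling over per-time-step hidden states. It is not the model of reference [85]: the hidden states here are hard-coded toy vectors rather than Bi-LSTM outputs, the dot-product scoring function is an assumption, and the `query` vector (which would normally be learned) and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax: non-negative weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(
    hidden: Sequence[Sequence[float]], query: Sequence[float]
) -> Tuple[List[float], List[float]]:
    """Pool a sequence of hidden states into one context vector.

    Each time step is scored by its dot product with `query` (an assumed
    scoring function); the context is the softmax-weighted sum of states.
    """
    scores = [sum(h_i * q_i for h_i, q_i in zip(h, query)) for h in hidden]
    weights = softmax(scores)
    dim = len(hidden[0])
    context = [sum(w * h[d] for w, h in zip(weights, hidden)) for d in range(dim)]
    return weights, context


if __name__ == "__main__":
    # Toy hidden states for three visits (illustrative values only).
    hidden_states = [[0.1, 0.3], [0.9, 0.2], [0.4, 0.8]]
    query = [1.0, 0.0]  # hypothetical query emphasising the first feature
    weights, context = attention_pool(hidden_states, query)
    print("attention weights:", [round(w, 3) for w in weights])
    print("context vector:", [round(c, 3) for c in context])
```

The weights indicate which time steps contribute most to the pooled representation, which is one reason attention is often described as aiding interpretability in risk prediction; the pooled context vector would then feed a classifier.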

3.4.3. Other Applications of EHR Data

3.4.4. Challenges Faced by EHR Data-Assisted Health Care

3.5. The Application of AI in Health Management

3.5.1. Wireless Mobile Treatment

3.5.2. Medical Data Fusion and Analytics

3.5.3. Medical Data Privacy Protection

3.5.4. Health Management Platform

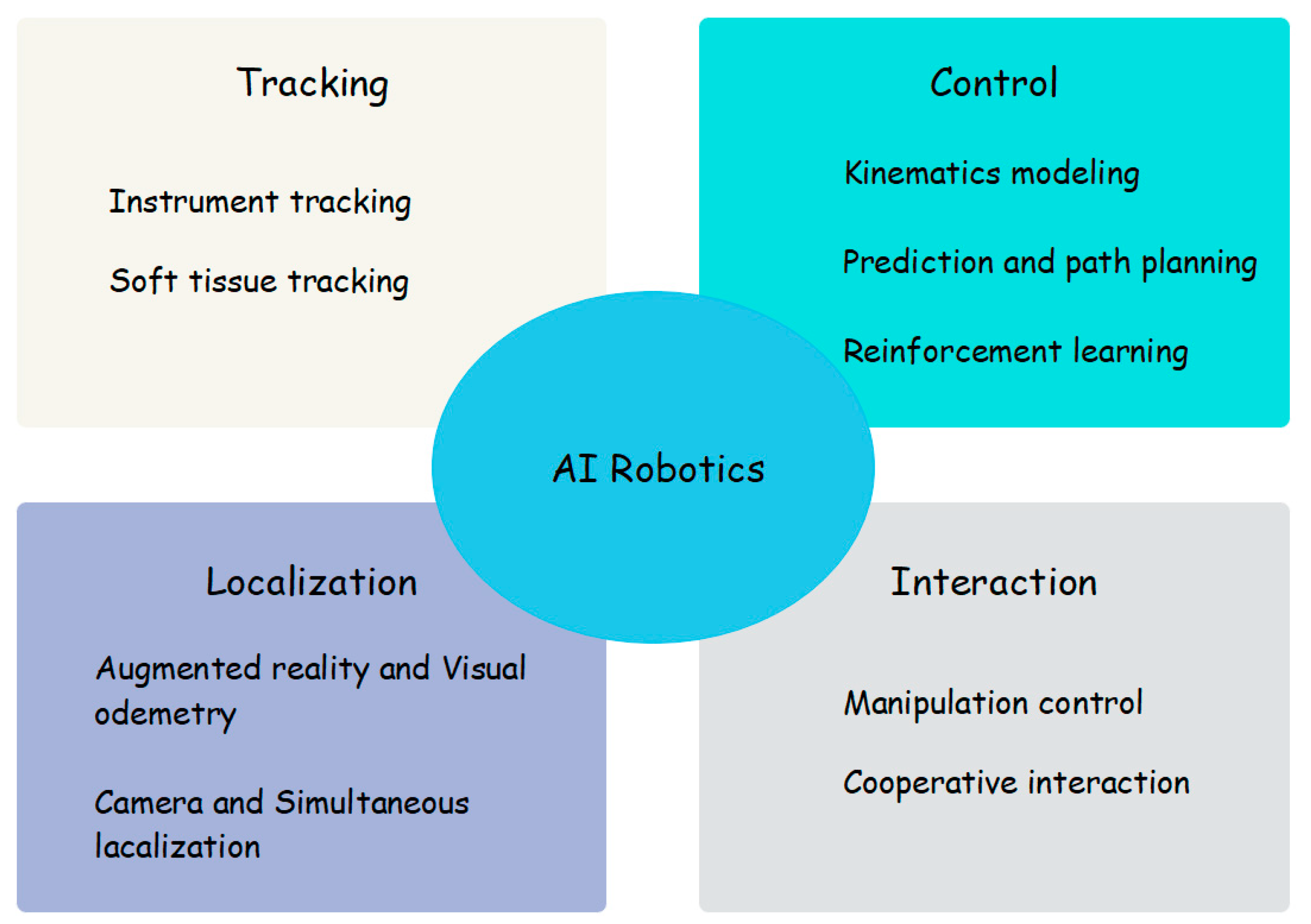

3.6. The Application of AI in Medical Robots

4. Opportunities and Challenges for the Development of Artificial Intelligence in the Field of Medicine

4.1. Opportunities for AI-Assisted Healthcare

- (1) Breakthroughs in AI technology

- (2) Supported by public health data

- (3) Commercial market push

- (4) The impact of artificial intelligence in the COVID-19 pandemic

4.2. Challenges of AI-Assisted Healthcare

- (1) Market fragmentation

- (2) Traditional thinking and ethics

- (3) Limitations of AI technology

- (4) Data sharing issues

- (5) Shortage of professionals

5. Conclusions and Outlook

5.1. Summary

5.2. Future Outlook

Funding

Conflicts of Interest

References

- Mekki, Y.M.; Zughaier, S.M. Teaching artificial intelligence in medicine. Nat. Rev. Bioeng. 2024, 2, 450–451.

- Peltier, J.W.; Dahl, A.J.; Schibrowsky, J.A. Artificial intelligence in interactive marketing: A conceptual framework and research agenda. J. Res. Interact. Mark. 2024, 18, 54–90.

- Lv, B.; Liu, F.; Li, Y.; Nie, J. Artificial Intelligence-Aided Diagnosis Solution by Enhancing the Edge Features of Medical Images. Diagnostics 2023, 13, 1063.

- Wu, J.; Yang, S.; Gou, F.; Zhou, Z.; Xie, P.; Xu, N.; Dai, Z. Intelligent Segmentation Medical Assistance System for MRI Images of Osteosarcoma in Developing Countries. Comput. Math. Methods Med. 2022, 2022, 6654946.

- Moor, M.; Banerjee, O.; Abad, Z.S.H.; Krumholz, H.M.; Leskovec, J.; Topol, E.J.; Rajpurkar, P. Foundation models for generalist medical artificial intelligence. Nature 2023, 616, 259–265.

- Zhan, X.; Liu, J.; Long, H.; Zhu, J.; Tang, H.; Gou, F.; Wu, J. An Intelligent Auxiliary Framework for Bone Malignant Tumor Lesion Segmentation in Medical Image Analysis. Diagnostics 2023, 13, 223.

- Peng, Y.; Liu, E.; Peng, S.; Chen, Q.; Li, D.; Lian, D. Using artificial intelligence technology to fight COVID-19: A review. Artif. Intell. Rev. 2022, 55, 4941–4977.

- Saxena, A.; Misra, D.; Ganesamoorthy, R.; Gonzales, J.L.A.; Almashaqbeh, H.A.; Tripathi, V. Artificial Intelligence Wireless Network Data Security System for Medical Records Using Cryptography Management. In Proceedings of the 2022 2nd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), Greater Noida, India, 28–29 April 2022; pp. 2555–2559.

- Zhang, J.; Zhang, Z.-M. Ethics and governance of trustworthy medical artificial intelligence. BMC Med. Inform. Decis. Mak. 2023, 23, 7.

- Manickam, P.; Mariappan, S.A.; Murugesan, S.M.; Hansda, S.; Kaushik, A.; Shinde, R.; Thipperudraswamy, S.P. Artificial Intelligence (AI) and Internet of Medical Things (IoMT) Assisted Biomedical Systems for Intelligent Healthcare. Biosensors 2022, 12, 562.

- Arasteh, S.T.; Isfort, P.; Saehn, M.; Mueller-Franzes, G.; Khader, F.; Kather, J.N.; Kuhl, C.; Nebelung, S.; Truhn, D. Collaborative training of medical artificial intelligence models with non-uniform labels. Sci. Rep. 2023, 13, 6046.

- Ahuja, A.S.; Polascik, B.W.; Doddapaneni, D.; Byrnes, E.S.; Sridhar, J. The digital metaverse: Applications in artificial intelligence, medical education, and integrative health. Integr. Med. Res. 2023, 12, 100917.

- Babichev, S.; Yasinska-Damri, L.; Liakh, I. A Hybrid Model of Cancer Diseases Diagnosis Based on Gene Expression Data with Joint Use of Data Mining Methods and Machine Learning Techniques. Appl. Sci. 2023, 13, 6022.

- Guan, P.; Yu, K.; Wei, W.; Tan, Y.; Wu, J. Big Data Analytics on Lung Cancer Diagnosis Framework with Deep Learning. IEEE/ACM Trans. Comput. Biol. Bioinform. 2023, 1–12.

- Wu, J.; Gou, F.; Tian, X. Disease Control and Prevention in Rare Plants Based on the Dominant Population Selection Method in Opportunistic Social Networks. Comput. Intell. Neurosci. 2022, 2022, 1489988.

- Liu, F.; Gou, F.; Wu, J. An Attention-Preserving Network-Based Method for Assisted Segmentation of Osteosarcoma MRI Images. Mathematics 2022, 10, 1665.

- Zhang, S.; Li, J.; Zhou, W.; Li, T.; Zhang, Y.; Wang, J. Higher-Order Proximity-Based MiRNA-Disease Associations Prediction. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020, 19, 501–512.

- Huang, J.; Gou, F.; Wu, J. An effective data communication community establishment scheme in opportunistic networks. IET Commun. 2023, 17, 1354–1367.

- He, K.; Zhu, J.; Li, L.; Gou, F.; Wu, J. Two-stage coarse-to-fine method for pathological images in medical decision-making systems. IET Image Process. 2023, 18, 175–193.

- Wu, J.; Zhou, L.; Gou, F.; Tan, Y. A Residual Fusion Network for Osteosarcoma MRI Image Segmentation in Developing Countries. Comput. Intell. Neurosci. 2022, 2022, 7285600.

- Zhang, W.; Li, Z.; Guo, W.; Yang, W.; Huang, F. A Fast Linear Neighborhood Similarity-Based Network Link Inference Method to Predict MicroRNA-Disease Associations. IEEE/ACM Trans. Comput. Biol. Bioinform. 2019, 18, 405–415.

- Mahdi, S.S.; Battineni, G.; Khawaja, M.; Allana, R.; Siddiqui, M.K.; Agha, D. How does artificial intelligence impact digital healthcare initiatives? A review of AI applications in dental healthcare. Int. J. Inf. Manag. Data Insights 2023, 3, 100144.

- Wang, J.; Wang, Y.; Chen, Y. Inverse design of materials by machine learning. Materials 2022, 15, 1811.

- Baxi, V.; Edwards, R.; Montalto, M.; Saha, S. Digital pathology and artificial intelligence in translational medicine and clinical practice. Mod. Pathol. 2021, 35, 23–32.

- Moingeon, P.; Kuenemann, M.; Guedj, M. Artificial intelligence-enhanced drug design and development: Toward a computational precision medicine. Drug Discov. Today 2022, 27, 215–222.

- He, T.; Heidemeyer, M.; Ban, F.; Cherkasov, A.; Ester, M. SimBoost: A read-across approach for predicting drug–target binding affinities using gradient boosting machines. J. Cheminform. 2017, 9, 24.

- Öztürk, H.; Özgür, A.; Ozkirimli, E. DeepDTA: Deep drug–target binding affinity prediction. Bioinformatics 2018, 34, i821–i829.

- Debnath, S.; Debnath, T.; Bhaumik, S.; Majumdar, S.; Kalle, A.M.; Aparna, V. Discovery of novel potential selective HDAC8 inhibitors by combine ligand-based, structure-based virtual screening and in-vitro biological evaluation. Sci. Rep. 2019, 9, 17174.

- Al-Masni, M.A.; Park, J.-M.; Gi, G.; Rivera, P.; Valarezo, E.; Choi, M.-T.; Han, S.-M.; Kim, T.-S. Simultaneous Detection and Classification of Breast Masses in Digital Mammograms via a Deep Learning YOLO-Based CAD System. Comput. Methods Programs Biomed. 2018, 157, 85–94.

- Lv, B.; Liu, F.; Gou, F.; Wu, J. Multi-Scale Tumor Localization Based on Priori Guidance-Based Segmentation Method for Osteosarcoma MRI Images. Mathematics 2022, 10, 2099.

- Liu, W.; Liu, X.; Li, H.; Li, M.; Zhao, X.; Zhu, Z. Integrating Lung Parenchyma Segmentation and Nodule Detection with Deep Multi-Task Learning. IEEE J. Biomed. Health Inform. 2021, 25, 3073–3081.

- Shen, Y.; Gou, F.; Wu, J. Node Screening Method Based on Federated Learning with IoT in Opportunistic Social Networks. Mathematics 2022, 10, 1669.

- Gou, F.; Liu, J.; Zhu, J.; Wu, J. A Multimodal Auxiliary Classification System for Osteosarcoma Histopathological Images Based on Deep Active Learning. Healthcare 2022, 10, 2189.

- Li, L.; Gou, F.; Long, H.; He, K.; Wu, J. Effective data optimization and evaluation based on social communication with AI-assisted in opportunistic social networks. Wirel. Commun. Mob. Comput. 2022, 2022, 4879557.

- Kiemen, A.L.; Braxton, A.M.; Grahn, M.P.; Han, K.S.; Babu, J.M.; Reichel, R.; Jiang, A.C.; Kim, B.; Hsu, J.; Amoa, F.; et al. CODA: Quantitative 3D reconstruction of large tissues at cellular resolution. Nat. Methods 2022, 19, 1490–1499.

- Sekhar, A.; Biswas, S.; Hazra, R.; Sunaniya, A.K.; Mukherjee, A.; Yang, L. Brain Tumor Classification Using Fine-Tuned GoogLeNet Features and Machine Learning Algorithms: IoMT Enabled CAD System. IEEE J. Biomed. Health Inform. 2021, 26, 983–991.

- Wu, W.; Gao, L.; Duan, H.; Huang, G.; Ye, X.; Nie, S. Segmentation of pulmonary nodules in CT images based on 3D-UNET combined with three-dimensional conditional random field optimization. Med. Phys. 2020, 47, 4054–4063.

- Xue, P.; Dong, E.; Ji, H. Lung 4D CT Image Registration Based on High-Order Markov Random Field. IEEE Trans. Med. Imaging 2019, 39, 910–921.

- Gou, F.; Wu, J. Message transmission strategy based on recurrent neural network and attention mechanism in IoT system. J. Circuits Syst. Comput. 2022, 31, 2250126.

- Gou, F.; Wu, J. Triad link prediction method based on the evolutionary analysis with IoT in opportunistic social networks. Comput. Commun. 2022, 181, 143–155.

- Meng, Y.; Speier, W.; Ong, M.K.; Arnold, C.W. Bidirectional representation learning from transformers using multimodal electronic health record data to predict depression. IEEE J. Biomed. Health Inform. 2021, 25, 3121–3129.

- Zhang, X.; Qian, B.; Li, Y.; Cao, S.; Davidson, I. Context-aware and Time-aware Attention-based Model for Disease Risk Prediction with Interpretability. IEEE Trans. Knowl. Data Eng. 2021, 35, 3551–3562.

- Bardak, B.; Tan, M. Improving clinical outcome predictions using convolution over medical entities with multimodal learning. Artif. Intell. Med. 2021, 117, 102112.

- Zhou, J.; Li, X.; Wang, X.; Chai, Y.; Zhang, Q. Locally weighted factorization machine with fuzzy partition for elderly readmission prediction. Knowl. Based Syst. 2022, 242, 108326.

- Luo, X.; Gandhi, P.; Storey, S.; Zhang, Z.; Han, Z.; Huang, K. A computational framework to analyze the associations between symptoms and cancer patient attributes post chemotherapy using EHR data. IEEE J. Biomed. Health Inform. 2021, 25, 4098–4109.

- Baman, J.R.; Mathew, D.T.; Jiang, M.; Passman, R.S. Mobile Health for Arrhythmia Diagnosis and Management. J. Gen. Intern. Med. 2021, 37, 188–197.

- Tan, L.; Yu, K.; Bashir, A.K.; Cheng, X.; Ming, F.; Zhao, L.; Zhou, X. Toward real-time and efficient cardiovascular monitoring for COVID-19 patients by 5G-enabled wearable medical devices: A deep learning approach. Neural Comput. Appl. 2021, 35, 13921–13934.

- Wu, C.; Lam, C.H.; Xhafa, F.; Tang, V.; Ip, W. Artificial Intelligence and Data Mining Techniques for the Well-Being of Elderly. In IoT for Elderly, Aging and eHealth; Springer: Cham, Switzerland, 2022; pp. 51–66.

- Vrontis, D.; Christofi, M.; Pereira, V.; Tarba, S.; Makrides, A.; Trichina, E. Artificial intelligence, robotics, advanced technologies and human resource management: A systematic review. Int. J. Hum. Resour. Manag. 2021, 33, 1237–1266.

- Battineni, G.; Mittal, M.; Jain, S. Data Visualization in the Transformation of Healthcare Industries. In Advanced Prognostic Predictive Modelling in Healthcare Data Analytics; Springer: Singapore, 2021; pp. 1–23.

- Wen, H.; Wei, M.; Du, D.; Yin, X. A Blockchain-Based Privacy Preservation Scheme in Mobile Medical. Secur. Commun. Netw. 2022, 2022, 9889263.

- Bahmani, A.; Alavi, A.; Buergel, T.; Upadhyayula, S.; Wang, Q.; Ananthakrishnan, S.K.; Alavi, A.; Celis, D.; Gillespie, D.; Young, G.; et al. A scalable, secure, and interoperable platform for deep data-driven health management. Nat. Commun. 2021, 12, 5757.

- Peters, B.S.; Armijo, P.R.; Krause, C.; Choudhury, S.A.; Oleynikov, D. Review of emerging surgical robotic technology. Surg. Endosc. 2018, 32, 1636–1655.

- Ren, J.-L.; Chien, Y.-H.; Chia, E.-Y.; Fu, L.-C.; Lai, J.-S. Deep learning based motion prediction for exoskeleton robot control in upper limb rehabilitation. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5076–5082.

- Thananjeyan, B.; Garg, A.; Krishnan, S.; Chen, C.; Miller, L.; Goldberg, K. Multilateral surgical pattern cutting in 2D orthotropic gauze with deep reinforcement learning policies for tensioning. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 2371–2378.

- Li, L.; Gou, F.; Wu, J. Modified Data Delivery Strategy Based on Stochastic Block Model and Community Detection in Opportunistic Social Networks. Wirel. Commun. Mob. Comput. 2022, 2022, 5067849.

- Guo, F.; Wu, J. Novel data transmission technology based on complex IoT system in opportunistic social networks. Peer-to-Peer Netw. Appl. 2022, 16, 571–588.

- Gou, F.; Wu, J. Data Transmission Strategy Based on Node Motion Prediction IoT System in Opportunistic Social Networks. Wirel. Pers. Commun. 2022, 126, 1751–1768.

- Cheng, K.; Li, Z.; He, Y.; Guo, Q.; Lu, Y.; Gu, S.; Wu, H. Potential Use of Artificial Intelligence in Infectious Disease: Take ChatGPT as an Example. Ann. Biomed. Eng. 2023, 51, 1130–1135.

- Oyedotun, O.K.; Khashman, A. Deep learning in vision-based static hand gesture recognition. Neural Comput. Appl. 2016, 28, 3941–3951.

- Eberhart, R.; Dobbins, R. Early neural network development history: The age of Camelot. IEEE Eng. Med. Biol. Mag. 1990, 9, 15–18.

- Degadwala, S.; Vyas, D.; Dave, H. Classification of COVID-19 cases using Fine-Tune Convolution Neural Network (FT-CNN). In Proceedings of the 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; pp. 609–613.

- Adday, B.N.; Shaban, F.A.J.; Jawad, M.R.; Jaleel, R.A.; Zahra, M.M.A. Enhanced Vaccine Recommender System to prevent COVID-19 based on Clustering and Classification. In Proceedings of the 2021 International Conference on Engineering and Emerging Technologies (ICEET), Istanbul, Turkey, 27–28 October 2021; pp. 1–6.

- Yang, K.; Wang, R.; Liu, G.; Shu, Z.; Wang, N.; Zhang, R.; Yu, J.; Chen, J.; Li, X.; Zhou, X. HerGePred: Heterogeneous Network Embedding Representation for Disease Gene Prediction. IEEE J. Biomed. Health Inform. 2018, 23, 1805–1815.

- Wang, C.; Guo, J.; Zhao, N.; Liu, Y.; Liu, X.; Liu, G.; Guo, M. A Cancer Survival Prediction Method Based on Graph Convolutional Network. IEEE Trans. NanoBioscience 2019, 19, 117–126.

- Nguyen, T.; Lee, S.C.; Quinn, T.P.; Truong, B.; Li, X.; Tran, T.; Venkatesh, S.; Le, T.D. PAN: Personalized Annotation-Based Networks for the Prediction of Breast Cancer Relapse. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021, 18, 2841–2847.

- Siqueira, G.; Brito, K.L.; Dias, U.; Dias, Z. Heuristics for Genome Rearrangement Distance with Replicated Genes. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021, 18, 2094–2108.

- Yilmaz, S.; Tastan, O.; Cicek, A.E. SPADIS: An Algorithm for Selecting Predictive and Diverse SNPs in GWAS. IEEE/ACM Trans. Comput. Biol. Bioinform. 2019, 18, 1208–1216.

- Hossain, M.; Abdullah, A.-B.M.; Shill, P.C. An Extension of Heuristic Algorithm for Reconstructing Multiple Haplotypes with Minimum Error Correction. In Proceedings of the 2020 Emerging Technology in Computing, Communication and Electronics (ETCCE), Dhaka, Bangladesh, 21–22 December 2020; pp. 1–6.

- Kchouk, M.; Elloumi, M. Error correction and DeNovo genome Assembly for the MinIon sequencing reads mixing Illumina short reads. In Proceedings of the 2015 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Washington, DC, USA, 9–12 November 2015; p. 1785.

- Ishaq, M.; Khan, A.; Khan, M.; Imran, M. Current Trends and Ongoing Progress in the Computational Alignment of Biological Sequences. IEEE Access 2019, 7, 68380–68391.

- Djeddi, W.E.; Ben Yahia, S.; Nguifo, E.M. A Novel Computational Approach for Global Alignment for Multiple Biological Networks. IEEE/ACM Trans. Comput. Biol. Bioinform. 2018, 15, 2060–2066.

- Battistella, E.; Vakalopoulou, M.; Sun, R.; Estienne, T.; Lerousseau, M.; Nikolaev, S.; Andres, E.A.; Carre, A.; Niyoteka, S.; Robert, C.; et al. COMBING: Clustering in Oncology for Mathematical and Biological Identification of Novel Gene Signatures. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021; 3317–3331.

- Chow, K.; Sarkar, A.; Elhesha, R.; Cinaglia, P.; Ay, A.; Kahveci, T. ANCA: Alignment-Based Network Construction Algorithm. IEEE/ACM Trans. Comput. Biol. Bioinform. 2019, 18, 512–524.

- Symeonidi, A.; Nicolaou, A.; Johannes, F.; Christlein, V. Recursive Convolutional Neural Networks for Epigenomics. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 2567–2574.

- Chen, J.; Han, G.; Xu, A.; Akutsu, T.; Cai, H. Identifying miRNA-gene common and specific regulatory modules for cancer subtyping by a high-order graph matching model. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022.

- Wang, Z.; Sun, H.; Shen, C.; Hu, X.; Gao, J.; Li, D.; Cao, D.; Hou, T. Combined strategies in structure-based virtual screening. Phys. Chem. Chem. Phys. 2020, 22, 3149–3159.

- Gentile, F.; Yaacoub, J.C.; Gleave, J.; Fernandez, M.; Ton, A.-T.; Ban, F.; Stern, A.; Cherkasov, A. Artificial intelligence–enabled virtual screening of ultra-large chemical libraries with deep docking. Nat. Protoc. 2022, 17, 672–697.

- Gonczarek, A.; Tomczak, J.M.; Zaręba, S.; Kaczmar, J.; Dąbrowski, P.; Walczak, M.J. Interaction prediction in structure-based virtual screening using deep learning. Comput. Biol. Med. 2018, 100, 253–258.

- Gysi, D.M.; Do Valle, Í.; Zitnik, M.; Ameli, A.; Gan, X.; Varol, O.; Ghiassian, S.D.; Patten, J.J.; Davey, R.A.; Loscalzo, J.; et al. Network medicine framework for identifying drug-repurposing opportunities for COVID-19. Proc. Natl. Acad. Sci. USA 2021, 118, e2025581118.

- Rajput, A.; Thakur, A.; Mukhopadhyay, A.; Kamboj, S.; Rastogi, A.; Gautam, S.; Jassal, H.; Kumar, M. Prediction of repurposed drugs for Coronaviruses using artificial intelligence and machine learning. Comput. Struct. Biotechnol. J. 2021, 19, 3133–3148.

- He, K.; Gou, F.; Wu, J. Image segmentation technology based on transformer in medical decision-making system. IET Image Process. 2023, 17, 3040–3054.

- Wu, J.; Xia, J.; Gou, F. Information transmission mode and IoT community reconstruction based on user influence in opportunistic social networks. Peer-to-Peer Netw. Appl. 2022, 15, 1398–1416.

- Shen, Y.; Gou, F.; Dai, Z. Osteosarcoma MRI Image-Assisted Segmentation System Base on Guided Aggregated Bilateral Network. Mathematics 2022, 10, 1090.

- Ouyang, T.; Yang, S.; Gou, F.; Dai, Z.; Wu, J. Rethinking U-Net from an Attention Perspective with Transformers for Osteosarcoma MRI Image Segmentation. Comput. Intell. Neurosci. 2022, 2022, 7973404.

- Wu, J.; Xiao, P.; Huang, H.; Gou, F.; Zhou, Z.; Dai, Z. An Artificial Intelligence Multiprocessing Scheme for the Diagnosis of Osteosarcoma MRI Images. IEEE J. Biomed. Health Inform. 2022, 26, 4656–4667.

- Liu, F.; Zhu, J.; Lv, B.; Yang, L.; Sun, W.; Dai, Z.; Gou, F.; Wu, J. Auxiliary Segmentation Method of Osteosarcoma MRI Image Based on Transformer and U-Net. Comput. Intell. Neurosci. 2022, 2022, 9990092.

- Wu, J.; Guo, Y.; Gou, F.; Dai, Z. A medical assistant segmentation method for MRI images of osteosarcoma based on DecoupleSegNet. Int. J. Intell. Syst. 2022, 37, 8436–8461.

- Liu, Z.; Zhu, J.; Tang, H.; Zhou, X.; Xiong, W. BA-GCA Net: Boundary Aware Grid Contextual Attention Net in Osteosarcoma MRI Image Segmentation. Comput. Intell. Neurosci. 2022, 2022, 3881833.

- Gou, F.; Wu, J. An Attention-based AI-assisted Segmentation System for Osteosarcoma MRI Images. In Proceedings of the 2022 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Las Vegas, NV, USA, 6–8 December 2022; pp. 1539–1543.

- Al-Antari, M.A.; Han, S.-M.; Kim, T.-S. Evaluation of deep learning detection and classification towards computer-aided diagnosis of breast lesions in digital X-ray mammograms. Comput. Methods Programs Biomed. 2020, 196, 105584.

- Zheng, S.; Guo, J.; Cui, X.; Veldhuis, R.N.J.; Oudkerk, M.; van Ooijen, P.M.A. Automatic Pulmonary Nodule Detection in CT Scans Using Convolutional Neural Networks Based on Maximum Intensity Projection. IEEE Trans. Med. Imaging 2019, 39, 797–805.

- Khosravan, N.; Bagci, U. S4ND: Single-Shot Single-Scale Lung Nodule Detection. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2018: 21st International Conference, Granada, Spain, September 16–20, 2018, Proceedings, Part II; Springer International Publishing: Berlin/Heidelberg, Germany, 2018.

- Ayana, G.; Park, J.; Choe, S.-W. Patchless Multi-Stage Transfer Learning for Improved Mammographic Breast Mass Classification. Cancers 2022, 14, 1280.

- Yu, B.; Zhou, L.; Wang, L.; Yang, W.; Yanga, M.; Bourgeat, P.; Fripp, J. SA-LuT-Nets: Learning Sample-Adaptive Intensity Lookup Tables for Brain Tumor Segmentation. IEEE Trans. Med. Imaging 2021, 40, 1417–1427.

- Gegundez-Arias, M.E.; Marin-Santos, D.; Perez-Borrero, I.; Vasallo-Vazquez, M.J. A new deep learning method for blood vessel segmentation in retinal images based on convolutional kernels and modified U-Net model. Comput. Methods Programs Biomed. 2021, 205, 106081.

- Usman, M.; Lee, B.-D.; Byon, S.-S.; Kim, S.-H.; Lee, B.-I.; Shin, Y.-G. Volumetric lung nodule segmentation using adaptive ROI with multi-view residual learning. Sci. Rep. 2020, 10, 12839.

- Singh, V.K.; Rashwan, H.A.; Romani, S.; Akram, F.; Pandey, N.; Sarker, M.K.; Saleh, A.; Arenas, M.; Arquez, M.; Puig, D.; et al. Breast tumor segmentation and shape classification in mammograms using generative adversarial and convolutional neural network. Expert Syst. Appl. 2019, 139, 112855.

- Feng, Z.; Wang, Z.; Wang, X.; Zhang, X.; Cheng, L.; Lei, J.; Wang, Y.; Song, M. Edge-competing pathological liver vessel segmentation with limited labels. Proc. AAAI Conf. Artif. Intell. 2021, 35, 1325–1333.

- Shakibapour, E.; Cunha, A.; Aresta, G.; Mendonça, A.M.; Campilho, A. An unsupervised metaheuristic search approach for segmentation and volume measurement of pulmonary nodules in lung CT scans. Expert Syst. Appl. 2018, 119, 415–428.

- Gur, S.; Wolf, L.; Golgher, L.; Blinder, P. Unsupervised Microvascular Image Segmentation Using an Active Contours Mimicking Neural Network. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10721–10730.

- Houssein, E.H.; Emam, M.M.; Ali, A.A. An efficient multilevel thresholding segmentation method for thermography breast cancer imaging based on improved chimp optimization algorithm. Expert Syst. Appl. 2021, 185, 115651.

- Cai, N.; Chen, H.; Li, Y.; Peng, Y.; Li, J. Adaptive Weighting Landmark-Based Group-Wise Registration on Lung DCE-MRI Images. IEEE Trans. Med. Imaging 2020, 40, 673–687.

- Hansen, L.; Heinrich, M.P. GraphRegNet: Deep Graph Regularisation Networks on Sparse Keypoints for Dense Registration of 3D Lung CTs. IEEE Trans. Med. Imaging 2021, 40, 2246–2257.

- Huang, W.; Yang, H.; Liu, X.; Li, C.; Zhang, I.; Wang, R.; Zheng, H.; Wang, S. A Coarse-to-Fine Deformable Transformation Framework for Unsupervised Multi-Contrast MR Image Registration with Dual Consistency Constraint. IEEE Trans. Med. Imaging 2021, 40, 2589–2599.

- Huang, Y.; Ahmad, S.; Fan, J.; Shen, D.; Yap, P.-T. Difficulty-aware hierarchical convolutional neural networks for deformable registration of brain MR images. Med. Image Anal. 2020, 67, 101817.

- Ding, Y.; Yu, X.; Yang, Y. RFNet: Region-aware Fusion Network for Incomplete Multi-modal Brain Tumor Segmentation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 3955–3964.

- Fu, Y.; Lei, Y.; Wang, T.; Patel, P.; Jani, A.B.; Mao, H.; Curran, W.J.; Liu, T.; Yang, X. Biomechanically constrained non-rigid MR-TRUS prostate registration using deep learning based 3D point cloud matching. Med. Image Anal. 2020, 67, 101845.

- Sood, R.R.; Shao, W.; Kunder, C.; Teslovich, N.C.; Wang, J.B.; Soerensen, S.J.; Madhuripan, N.; Jawahar, A.; Brooks, J.D.; Ghanouni, P.; et al. 3D Registration of pre-surgical prostate MRI and histopathology images via super-resolution volume reconstruction. Med. Image Anal. 2021, 69, 101957.

- Zhou, Y.; Wang, B.; He, X.; Cui, S.; Shao, L. DR-GAN: Conditional Generative Adversarial Network for Fine-Grained Lesion Synthesis on Diabetic Retinopathy Images. IEEE J. Biomed. Health Inform. 2022, 26, 56–66.

- Huang, Y.; Zheng, F.; Cong, R.; Huang, W.; Scott, M.R.; Shao, L. MCMT-GAN: Multi-Task Coherent Modality Transferable GAN for 3D Brain Image Synthesis. IEEE Trans. Image Process. 2020, 29, 8187–8198. [Google Scholar] [CrossRef] [PubMed]

- You, D.; Liu, F.; Ge, S.; Xie, X.; Zhang, J.; Wu, X. AlignTransformer: Hierarchical Alignment of Visual Regions and Disease Tags for Medical Report Generation. In Proceedings of the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Virtual Event, 27 September–1 October 2021; Volume 12903, pp. 72–82. [Google Scholar]

- Chen, Z.; Shen, Y.; Song, Y.; Wan, X. Cross-modal Memory Networks for Radiology Report Generation. arXiv 2021, arXiv:2204.13258. [Google Scholar]

- Fang, J.; Fu, H.; Liu, J. Deep triplet hashing network for case-based medical image retrieval. Med. Image Anal. 2021, 69, 101981. [Google Scholar] [CrossRef] [PubMed]

- Gu, Y.; Vyas, K.; Shen, M.; Yang, J.; Yang, G.-Z. Deep Graph-Based Multimodal Feature Embedding for Endomicroscopy Image Retrieval. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 481–492. [Google Scholar] [CrossRef] [PubMed]

- Shang, Y.; Tian, Y.; Zhou, M.; Zhou, T.; Lyu, K.; Wang, Z.; Xin, R.; Liang, T.; Zhu, S.; Li, J. EHR-Oriented Knowledge Graph System: Toward Efficient Utilization of Non-Used Information Buried in Routine Clinical Practice. IEEE J. Biomed. Health Inform. 2021, 25, 2463–2475. [Google Scholar] [CrossRef] [PubMed]

- Wang, H.; Tan, X.; Huang, Z.; Pan, B.; Tian, J. Mining incomplete clinical data for the early assessment of Kawasaki disease based on feature clustering and convolutional neural networks. Artif. Intell. Med. 2020, 105, 101859. [Google Scholar] [CrossRef] [PubMed]

- Pokharel, S.; Zuccon, G.; Li, X.; Utomo, C.P.; Li, Y. Temporal tree representation for similarity computation between medical patients. Artif. Intell. Med. 2020, 108, 101900. [Google Scholar] [CrossRef] [PubMed]

- Yin, K.; Cheung, W.; Fung, B.C.M.; Poon, J. Learning Inter-Modal Correspondence and Phenotypes from Multi-Modal Electronic Health Records. IEEE Trans. Knowl. Data Eng. 2020, 34, 4328–4341. [Google Scholar] [CrossRef]

- Bernardini, M.; Romeo, L.; Frontoni, E.; Amini, M.R. A semi-supervised multi-task learning approach for predicting short-term kidney disease evolution. IEEE J. Biomed. Health Inform. 2021, 25, 3983–3994. [Google Scholar] [CrossRef] [PubMed]

- Wang, H.; Zhang, Q.; Chen, F.Y.; Leung, E.Y.; Wong, E.L.; Yeoh, E.K. Tensor Factorization-Based Prediction with an Application to Estimate the Risk of Chronic Diseases. IEEE Intell. Syst. 2021, 36, 53–61. [Google Scholar] [CrossRef]

- An, Y.; Huang, N.; Chen, X.; Wu, F.; Wang, J. High-risk prediction of cardiovascular diseases via attention-based deep neural networks. IEEE/ACM Trans. Comput. Biol. Bioinform. 2019, 18, 1093–1105. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Zhu, Z.; Wu, H.; Ding, S.; Zhao, Y. CCAE: Cross-field categorical attributes embedding for cancer clinical endpoint prediction. Artif. Intell. Med. 2020, 107, 101915. [Google Scholar] [CrossRef] [PubMed]

- Chushig-Muzo, D.; Soguero-Ruiz, C.; de Miguel-Bohoyo, P.; Mora-Jiménez, I. Interpreting clinical latent representations using autoencoders and probabilistic models. Artif. Intell. Med. 2021, 122, 102211. [Google Scholar] [CrossRef] [PubMed]

- Beniczky, S.; Karoly, P.; Nurse, E.; Ryvlin, P.; Cook, M. Machine learning and wearable devices of the future. Epilepsia 2020, 62, S116–S124. [Google Scholar] [CrossRef] [PubMed]

- Gadaleta, M.; Radin, J.M.; Baca-Motes, K.; Ramos, E.; Kheterpal, V.; Topol, E.J.; Steinhubl, S.R.; Quer, G. Passive detection of COVID-19 with wearable sensors and explainable machine learning algorithms. NPJ Digit. Med. 2021, 4, 166. [Google Scholar] [CrossRef] [PubMed]

- Koh, A.; Kang, D.; Xue, Y.; Lee, S.; Pielak, R.M.; Kim, J.; Hwang, T.; Min, S.; Banks, A.; Bastien, P.; et al. A soft, wearable microfluidic device for the capture, storage, and colorimetric sensing of sweat. Sci. Transl. Med. 2016, 8, 366ra165. [Google Scholar] [CrossRef]

- Kahan, S.; Look, M.; Fitch, A. The benefit of telemedicine in obesity care. Obesity 2022, 30, 577–586. [Google Scholar] [CrossRef]

- Persaud, Y.K. Using Telemedicine to Care for the Asthma Patient. Curr. Allergy Asthma Rep. 2022, 22, 43–52. [Google Scholar] [CrossRef]

- Zhou, L.; Fan, M.; Hansen, C.; Johnson, C.R.; Weiskopf, D. A Review of Three-Dimensional Medical Image Visualization. Health Data Sci. 2022. [Google Scholar] [CrossRef] [PubMed]

- Hossain, F.; Islam-Maruf, R.; Osugi, T.; Nakashima, N.; Ahmed, A. A Study on Personal Medical History Visualization Tools for Doctors. In Proceedings of the 2022 IEEE 4th Global Conference on Life Sciences and Technologies (LifeTech), Osaka, Japan, 7–9 March 2022; pp. 547–551. [Google Scholar] [CrossRef]

- Attarian, R.; Hashemi, S. An anonymity communication protocol for security and privacy of clients in IoT-based mobile health transactions. Comput. Netw. 2021, 190, 107976. [Google Scholar] [CrossRef]

- Alzubaidy, H.K.; Al-Shammary, D.; Abed, M.H. A Survey on Patients Privacy Protection with Stganography and Visual Encryption. arXiv 2022, arXiv:2201.09388. [Google Scholar]

- Liu, X.; Zhao, J.; Li, J.; Cao, B.; Lv, Z. Federated Neural Architecture Search for Medical Data Security. IEEE Trans. Ind. Inform. 2022, 18, 5628–5636. [Google Scholar] [CrossRef]

- Zhang, L.; Zhong, S. A Health Management Platform based on CGM Device. In Proceedings of the 2019 IEEE 3rd Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chengdu, China, 15–17 March 2019; pp. 822–826. [Google Scholar]

- Wang, X.; Chen, Y.; Wang, J.; Yu, A. Design of Doctor-Patient Management Platform Based on Microservice Architecture. In Proceedings of the 2020 2nd International Conference on Applied Machine Learning (ICAML), Changsha, China, 16–18 October 2020; pp. 99–102. [Google Scholar] [CrossRef]

- Wang, S. Research on the Design of Community Health Management Service for the Aged under the Background of Medical-nursing Combined Service in Big Data Era. In Proceedings of the 2021 International Conference on Forthcoming Networks and Sustainability in AIoT Era (FoNeS-AIoT), Nicosia, Turkey, 27–28 December 2021; pp. 296–300. [Google Scholar] [CrossRef]

- Salter, K.; Jutai, J.; Hartley, M.; Foley, N.; Bhogal, S.; Bayona, N.; Teasell, R. Impact of early vs delayed admission to rehabilitation on functional outcomes in persons with stroke. J. Rehabil. Med. 2006, 38, 113–117. [Google Scholar] [CrossRef] [PubMed]

- Komalasari, R. The Ethical Consideration of Using Artificial Intelligence (AI) in Medicine. In Advanced Bioinspiration Methods for Healthcare Standards, Policies, and Reform; IGI Global: Hershey, PA, USA, 2023; pp. 1–16. [Google Scholar]

- Dharmarajan, K.; Panitch, W.; Shi, B.; Huang, H.; Chen, L.Y.; Low, T.; Fer, D.; Goldberg, K. A Trimodal Framework for Robot-Assisted Vascular Shunt Insertion When a Supervising Surgeon is Local, Remote, or Unavailable. In Proceedings of the 2023 International Symposium on Medical Robotics (ISMR), Atlanta, GA, USA, 19–21 April 2023; pp. 1–8. [Google Scholar] [CrossRef]

- Rivero-Moreno, Y.; Echevarria, S.; Vidal-Valderrama, C.; Pianetti, L.; Cordova-Guilarte, J.; Navarro-Gonzalez, J.; Acevedo-Rodríguez, J.; Dorado-Avila, G.; Osorio-Romero, L.; Chavez-Campos, C.; et al. Robotic Surgery: A Comprehensive Review of the Literature and Current Trends. Cureus 2023, 15. [Google Scholar] [CrossRef] [PubMed]

- Sun, X.; Ding, J.; Dong, Y.; Ma, X.; Wang, R.; Jin, K.; Zhang, H.; Zhang, Y. A survey of technologies facilitating home and community-based stroke rehabilitation. Int. J. Hum. Comput. Interact. 2022, 39, 1016–1042. [Google Scholar] [CrossRef]

- Guo, C.; Li, H. Application of G network combined with AI robots in personalized nursing in China: A. In Perspectives in Digital Health and Big Data in Medicine: Current Trends, Professional Challenges, and Ethical, Legal, and Social Implications; 2023; Volume 90.

- Nwoye, C.I.; Mutter, D.; Marescaux, J.; Padoy, N. Weakly supervised convolutional LSTM approach for tool tracking in laparoscopic videos. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1059–1067. [Google Scholar] [CrossRef]

- Aviles, A.I.; Alsaleh, S.M.; Hahn, J.K.; Casals, A. Towards retrieving force feedback in robotic-assisted surgery: A supervised neuro-recurrent-vision approach. IEEE Trans. Haptics 2016, 10, 431–443. [Google Scholar] [CrossRef]

- De Momi, E.; Kranendonk, L.; Valenti, M.; Enayati, N.; Ferrigno, G. A neural network-based approach for trajectory planning in robot–human handover tasks. Front. Robot. AI 2016, 3, 34. [Google Scholar] [CrossRef]

- Celina, A.; Raj, V.H.; Ajay, V.; Ramachandran, G.; Kumar, C.; Muthumanickam, T. Artificial Intelligence for Development of Variable Power Biomedical Electronics Gadgets Applications. In Proceedings of the 2023 Second International Conference on Augmented Intelligence and Sustainable Systems (ICAISS), Trichy, India, 23–25 August 2023; pp. 145–149. [Google Scholar] [CrossRef]

- He, Z.; Liu, J.; Gou, F.; Wu, J. An Innovative Solution Based on TSCA-ViT for Osteosarcoma Diagnosis in Resource-Limited Settings. Biomedicines 2023, 11, 2740. [Google Scholar] [CrossRef] [PubMed]

- Wang, L.; Yu, L.; Zhu, J.; Tang, H.; Gou, F.; Wu, J. Auxiliary Segmentation Method of Osteosarcoma in MRI Images Based on Denoising and Local Enhancement. Healthcare 2022, 10, 1468. [Google Scholar] [CrossRef] [PubMed]

- Gou, F.; Wu, J. Optimization of edge server group collaboration architecture strategy in IoT smart cities application. Peer-to-Peer Netw. Appl. 2024. [Google Scholar] [CrossRef]

- Huang, Z.; Ling, Z.; Gou, F.; Wu, J. Medical Assisted-Segmentation System Based on Global Feature and Stepwise Feature Integration for Feature Loss Problem. Biomed. Signal Process. Control 2024, 89, 105814. [Google Scholar] [CrossRef]

- Werbos, P.J. Applications of advances in nonlinear sensitivity analysis. In System Modeling and Optimization; Lecture Notes in Control and Information Sciences; Drenick, R.F., Kozin, F., Eds.; Springer: Berlin/Heidelberg, Germany, 1982; Volume 38. [Google Scholar] [CrossRef]

- Gou, F.; Tang, X.; Liu, J.; Wu, J. Artificial intelligence multiprocessing scheme for pathology images based on transformer for nuclei segmentation. Complex Intell. Syst. 2024. [Google Scholar] [CrossRef]

- Lucas, A.; Revell, A.; Davis, K.A. Artificial intelligence in epilepsy—Applications and pathways to the clinic. Nat. Rev. Neurol. 2024, 20, 319–336. [Google Scholar] [CrossRef]

- Zhong, X.; Gou, F.; Wu, J. An intelligent MRI assisted diagnosis and treatment system for osteosarcoma based on super-resolution. Complex Intell. Syst. 2024. [Google Scholar] [CrossRef]

- Zhan, X.; Long, H.; Gou, F.; Wu, J. A semantic fidelity interpretable-assisted decision model for lung nodule classification. Int. J. Comput. Assist. Radiol. Surg. 2024, 19, 625–633. [Google Scholar] [CrossRef]

- Chen, T.; Zhang, G.; Hu, X.; Xiao, J. Artificial Intelligence Auxiliary Diagnosis and Treatment System for Breast Cancer in Developing Countries. J. X-ray Sci. Technol. 2024, 32, 395–413. [Google Scholar] [CrossRef]

- Karargyris, A.; Umeton, R.; Sheller, M.J.; Aristizabal, A.; George, J.; Wuest, A.; Pati, S.; Kassem, H.; Zenk, M.; Baid, U.; et al. Federated benchmarking of medical artificial intelligence with MedPerf. Nat. Mach. Intell. 2023, 5, 799–810. [Google Scholar] [CrossRef]

- Wu, J.; Luo, T.; Zeng, J.; Gou, F. Continuous Refinement-Based Digital Pathology Image Assistance Scheme in Medical Decision-Making Systems. IEEE J. Biomed. Health Inform. 2024, 28, 2091–2102. [Google Scholar] [CrossRef]

- Kumar, K.; Kumar, P.; Deb, D.; Unguresan, M.-L.; Muresan, V. Artificial Intelligence and Machine Learning Based Intervention in Medical Infrastructure: A Review and Future Trends. Healthcare 2023, 11, 207. [Google Scholar] [CrossRef]

- Li, B.; Liu, F.; Lv, B.; Zhang, Y.; Gou, F.; Wu, J. Cytopathology image analysis method based on high-resolution medical representation learning in medical decision-making system. Complex Intell. Syst. 2024, 10, 4253–4274. [Google Scholar] [CrossRef]

- Cadario, R.; Longoni, C.; Morewedge, C.K. Understanding, explaining, and utilizing medical artificial intelligence. Nat. Hum. Behav. 2021, 5, 1636–1642. [Google Scholar] [CrossRef] [PubMed]

- Moses, M.E.; Rankin, S.M.G. Medical artificial intelligence should do no harm. Nat. Rev. Electr. Eng. 2024, 1, 280–281. [Google Scholar] [CrossRef]

- Ibuki, T.; Ibuki, A.; Nakazawa, E. Possibilities and ethical issues of entrusting nursing tasks to robots and artificial intelligence. Nurs. Ethics 2023. [Google Scholar] [CrossRef] [PubMed]

- Kolbinger, F.R.; Veldhuizen, G.P.; Zhu, J.; Truhn, D.; Kather, J.N. Reporting guidelines in medical artificial intelligence: A systematic review and meta-analysis. Commun. Med. 2024, 4, 71. [Google Scholar] [CrossRef]

- Xu, K.; Feng, H.; Zhang, H.; He, C.; Kang, H.; Yuan, T.; Shi, L.; Zhou, C.; Hua, G.; Cao, Y.; et al. Structure-guided discovery of highly efficient cytidine deaminases with sequence-context independence. Nat. Biomed. Eng. 2024, 24, 427–441. [Google Scholar] [CrossRef]

- Zhou, H.; Liu, Q.; Liu, H.; Chen, Z.; Li, Z.; Zhuo, Y.; Li, K.; Wang, C.; Huang, J. Healthcare facilities management: A novel data-driven model for predictive maintenance of computed tomography equipment. Artif. Intell. Med. 2024, 149, 102807. [Google Scholar] [CrossRef]

- Perez-Lopez, R.; Laleh, N.G.; Mahmood, F.; Kather, J.N. A guide to artificial intelligence for cancer researchers. Nat. Rev. Cancer 2024, 24, 427–441. [Google Scholar] [CrossRef]

- Costello, J.; Kaur, M.; Reformat, M.Z.; Bolduc, F.V. Leveraging knowledge graphs and natural language processing for automated web resource labeling and knowledge mobilization in neurodevelopmental disorders: Development and usability study. J. Med. Internet Res. 2023, 25, e45268. [Google Scholar]

- Stamate, E.; Piraianu, A.-I.; Ciobotaru, O.R.; Crassas, R.; Duca, O.; Fulga, A.; Grigore, I.; Vintila, V.; Fulga, I.; Ciobotaru, O.C. Revolutionizing Cardiology through Artificial Intelligence—Big Data from Proactive Prevention to Precise Diagnostics and Cutting-Edge Treatment—A Comprehensive Review of the Past 5 Years. Diagnostics 2024, 14, 1103. [Google Scholar] [CrossRef]

- Wu, Y.; Hu, K.; Chen, D.Z.; Wu, J. AI-Enhanced Virtual Reality in Medicine: A Comprehensive Survey. arXiv 2024, arXiv:2402.03093. [Google Scholar]

| Algorithm | Property | Description | Advantage | Limitation |
|---|---|---|---|---|
| Linear regression | Supervised learning | Models the relationship between independent and dependent variables. | 1. Easy to implement. 2. Good interpretability, which supports decision analysis. | Unable to handle highly complex or non-linear data. |
| Naive Bayes | Supervised learning | Based on Bayes’ theorem and the assumption of feature independence, it classifies using probability and statistics. | 1. Robust, easy to implement, and interpretable. 2. Supports incremental training. | The feature-independence assumption is too strict. |
| K-nearest neighbor (KNN) | Supervised learning | Finds the K nearest nodes in the high-dimensional feature space. | 1. Easy to implement; supports incremental training. 2. Can be used for classification or regression tasks. 3. Not sensitive to outliers. | 1. High computational complexity. 2. Not suitable for imbalanced data. 3. Needs enough nodes. |
| Decision tree | Supervised learning | Builds probability functions and tree structures to achieve layer-by-layer prediction. | 1. Strong interpretability. 2. Handles both numerical and Boolean data. | 1. Prone to overfitting. 2. Ignores associations between data. |
| Clustering | Unsupervised learning | Based on similarity, maximizes the distance between clusters and reduces the distance within clusters. | Can handle complex high-dimensional data. | 1. Cannot perform incremental training. 2. Sensitive to hyperparameters, which complicates training. |
| Support vector machines | Supervised learning | Sets the maximum-margin hyperplane as the decision boundary (nonlinear data can be processed by kernel methods). | 1. Can handle high-dimensional data. 2. Strong generalization. 3. Can solve the small-sample problem. | 1. Difficult to find a suitable kernel function. 2. Sensitive to missing data. 3. Weak interpretability. |
| Principal component analysis | Unsupervised learning | Uses fewer features to reflect the original feature space to achieve dimensionality reduction. | 1. Reduces data complexity. 2. Can de-noise to a certain extent. 3. No hyperparameter limit. | 1. For non-Gaussian distributions, the results may not be optimal. 2. Cannot handle irregular data. |
| Artificial neural networks | All seven categories | Connects a large number of nodes to each other according to different connection methods. | 1. Capable of self-learning and generalization. 2. High accuracy. | 1. Not interpretable. 2. Huge computational complexity; needs sufficient hardware support. |
| Multi-layer perceptron (MLP) | Supervised learning | A type of artificial neural network model. | 1. Universal approximation. 2. High fault tolerance. 3. Able to learn complex relationships. 4. Can quickly process large-scale data. | 1. Prone to overfitting. 2. Requires a large amount of training data. 3. Low interpretability. |
| Conditional Random Field (CRF) | Supervised learning | A probabilistic graphical model used for modeling and predicting sequential data. | 1. Handles sequence data. 2. Capable of capturing long-term dependencies in sequence data. 3. Flexible model structure. | 1. High computational complexity when dealing with long sequential data. 2. Parameter tuning is difficult. 3. Poor interpretability. |
| Convolutional Neural Network (CNN) | Supervised learning | A neural network consisting of multiple convolutional, pooling, and fully connected layers. | 1. No need to manually design features. 2. Weights can be shared. | 1. Higher computational complexity. 2. Prone to overfitting. 3. Poor interpretability. |
| Generative Adversarial Network (GAN) | Unsupervised learning | A model consisting of a generator and a discriminator. | 1. Highly readable and understandable. 2. Does not require labeled data. 3. Highly flexible: can be used for various types of data and tasks. | 1. The training process is more difficult and requires tuning of multiple hyperparameters. 2. Instability. 3. Evaluation is more difficult. 4. Poor interpretability. |
| Deep Belief Network (DBN) | Unsupervised learning | A model consisting of multiple Restricted Boltzmann Machines. | 1. Can learn the distribution of complex data and multi-layer feature representations. 2. Can generate new data samples. 3. High flexibility. | 1. Higher computational complexity. 2. Difficult training process. 3. Poor model interpretability. 4. Prone to overfitting. |
| Gradient Boosting | Supervised learning | An ensemble learning algorithm that improves prediction accuracy by combining multiple weak learners. | 1. High accuracy. 2. Higher flexibility. 3. Higher robustness. 4. Decision-making process is relatively easy to explain and understand. | 1. Parameter tuning is more difficult. 2. Higher computational complexity. 3. Prone to overfitting. 4. Needs a suitable weak learner. |
| Boosting | Supervised learning | An ensemble learning method that combines weak classifiers into one strong classifier through training. | 1. Reduces the risk of overfitting. 2. Applicable to various types of data. | 1. Easily affected by outliers. 2. Complicated tuning. 3. High computational complexity. |
| Random trees | Supervised learning | An ensemble learning approach consisting of multiple decision trees. | 1. Applicable to high-dimensional data. 2. Insensitive to outliers. 3. Can be computed in parallel. | 1. Poor model interpretability. 2. High resource consumption. |
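
To make the first row of the table concrete, the following is a minimal sketch of single-variable linear regression fitted by ordinary least squares. The function name `fit_simple_linear_regression` and the toy dose/response values are illustrative assumptions and do not come from any of the surveyed works.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two or more paired observations")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Slope is the covariance of x and y divided by the variance of x.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up toy data lying exactly on y = 2x + 1 (hypothetical values).
doses = [0.0, 1.0, 2.0, 3.0]
responses = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_simple_linear_regression(doses, responses)
print(f"slope={slope:.2f}, intercept={intercept:.2f}")
```

The two fitted numbers can be read directly as "change in response per unit of input" and "baseline response", which is the interpretability advantage the table attributes to this model; the same closed form cannot capture curved relationships, matching the stated limitation.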

| Major Frameworks | Advantages | Disadvantages | Language | Source Code |
|---|---|---|---|---|
| TensorFlow | 1. It has a powerful computing cluster and can run models on mobile platforms such as iOS and Android; 2. It offers better visualization of the computational graph. | 1. Lacks many pre-trained models; 2. Does not support OpenCL. | C++/Python/Java/R, etc. | https://github.com/tensorflow/tensorflow (accessed on 1 February 2024). |
| Keras | 1. Highly modular, so building a network is very simple; 2. Simple API with a uniform style; 3. Easy to extend: new modules are added by writing new classes or functions modeled after existing modules. | 1. Slow speed; 2. The program occupies a lot of GPU memory. | Python/R | https://github.com/keras-team/keras (accessed on 1 February 2024). |
| Caffe | 1. C++/CUDA/Python code, fast and high performance; 2. Factory design pattern; the code structure is clear, readable, and extensible; 3. Supports command-line, Python, and Matlab interfaces and is easy to use; 4. Switching between CPU and GPU is convenient, as is multi-GPU training. | 1. The barrier to modifying the source code is high, since forward and backward propagation must be implemented by hand; 2. Automatic differentiation is not supported. | C++/Python/Matlab | https://github.com/BVLC/caffe (accessed on 1 February 2024). |
| PyTorch | 1. API design is very simple and consistent; 2. Uses dynamic graphs and can be debugged just like normal Python code; 3. Its error messages are usually easy to read. | 1. Visualization requires a third party; 2. Production deployment requires an API server. | C/C++/Python | https://github.com/pytorch/pytorch (accessed on 1 February 2024). |
| MXNet | 1. Supports both imperative and symbolic programming models; 2. Supports distributed training on multi-CPU/GPU devices to make full use of the scale advantages of cloud computing. | Interface documentation is disorganized. | C++/Python/R, etc. | https://github.com/apache/incubator-mxnet (accessed on 1 February 2024). |

| Reference | Application Object | AI/ML Technology | Advantages | Disadvantage |
|---|---|---|---|---|
| gcForest [13] | Prediction of breast cancer subtype | Convolutional neural network, spectral clustering algorithm, inductive clustering technique | High prediction accuracy | Discretization leads to information loss |
| HOPA_MDA [17] | miRNA–disease association prediction | Higher-order proximity | Performance is further improved on the basis of HOP_MD | The association between unmarked miRNAs and diseases is difficult to measure |
| FLNSNLI [21] | Predicting the association between miRNA and disease | Fast linear neighborhood similarity | High-precision performance, lower data requirements | Initial miRNA–disease association data are required |
| RW-RDGN [64] | Disease gene prediction | Network embedding representation, heterogeneous networks | Excellent performance beyond existing similar methods | Application to heterogeneous disease genes remains to be developed |
| GCGCN [66] | Survival prediction of breast cancer and lung squamous cell carcinoma | Graph convolution network | Excellent prediction effect and expansion performance | A large sample size is needed to achieve better prediction results |
| PAN [67] | Prediction of breast cancer recurrence | Annotation-based networks | Addresses the limitations of personalized gene networks | High computational cost |

| Type | Reference | AI/ML Techniques | Application Example | Features |
|---|---|---|---|---|
| Sequence alignment | SPADIS [67] | Approximation algorithms | SNP dataset analysis | Wide application range. Incomplete data annotations easily lead to large deviations. |
| Sequence correction | EHMEC [69] | Heuristic algorithms | Polyploid reconstruction haplotype | Minimizes the number of errors between DNA reading arrays. |
| | MiRCAp [70] | Next-Generation Sequencing | DNA sequencing instrument | Corrects deletion, insertion, and substitution errors by forming multiple sequence alignments, without requiring large storage space. |
| Sequence relationship | Needleman-Wunsch [71], Smith-Waterman [71] | Dynamic programming optimization, Bayesian method, Genetic Algorithms | Comparison of nucleic acid or protein sequences | Identifies the homology between proteins to track evolution. Searches sequence pairs and compares optimized or closely related fragments. |
| | BLAST [71], FASTA [71] | | Paired sequence alignment and search | Depending on the frequency of amino acid distribution, the local similarity of alignment optimization is approximated directly. It can also be used to discover potential homologues. |
| | MegaBLAT/CombAlign [71] | | Structure-based pairwise comparison | Sequence alignment and search programs derived from BLAST, showing the relationship between single residues and identifying the similar and different regions between the aligned proteins. |
| | MSA [71] | | Parallel alignment of multi-genome sequences | Explores the similarity and relationship between sequences and finds sequence-specific motifs. |
| | SEGA/REGA/mGA/Kenobi/K2 [71] | | Tracing the origin of sequence evolution | Evolutionary or genetic algorithms can be used to trace the origin of sequence evolution. |
| | MAPPIN [72] | Bipartite graph | Globally aligned multiprotein interaction networks and analysis | The PPI network was analyzed, and regions of topological and functional similarity between molecular networks of different species were found. |
| Optimistic algorithm | ReChrome [75] | Convolutional neural networks | Histone analysis and gene expression prediction | Can be applied to any size of genomic data, reducing the number of parameters, not affected by any type of overfitting. |
| | HOGMCS [76] | High-order graph matching | Molecular mechanism of cancer | Improves the accuracy and reliability of miRNA–gene interaction recognition. |
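
The dynamic-programming idea behind the Needleman-Wunsch entry in the table can be sketched as follows. This is a minimal illustration that returns only the optimal global alignment score; the scoring values (`match=1`, `mismatch=-1`, `gap=-1`) and the function name are assumptions chosen for the example, not parameters taken from [71].

```python
def needleman_wunsch_score(seq_a, seq_b, match=1, mismatch=-1, gap=-1):
    """Return the optimal global alignment score of two sequences."""
    rows, cols = len(seq_a) + 1, len(seq_b) + 1
    # score[i][j] holds the best score for aligning seq_a[:i] with seq_b[:j].
    score = [[0] * cols for _ in range(rows)]
    # Aligning a prefix against the empty string costs one gap per symbol.
    for i in range(1, rows):
        score[i][0] = i * gap
    for j in range(1, cols):
        score[0][j] = j * gap
    for i in range(1, rows):
        for j in range(1, cols):
            same = seq_a[i - 1] == seq_b[j - 1]
            diagonal = score[i - 1][j - 1] + (match if same else mismatch)
            up = score[i - 1][j] + gap      # gap inserted in seq_b
            left = score[i][j - 1] + gap    # gap inserted in seq_a
            score[i][j] = max(diagonal, up, left)
    return score[-1][-1]


# Hypothetical toy sequences: "ACGT" vs "AGT" aligns best as ACGT / A-GT.
print(needleman_wunsch_score("ACGT", "AGT"))
```

Each table cell depends only on its three already-computed neighbors, so the score is found in time proportional to the product of the two sequence lengths. Recovering the alignment itself would additionally require a traceback through the matrix, which is omitted here.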

| Algorithm Type | Mainstream Algorithm | Research Status | Prospect |
|---|---|---|---|
| Supervised learning | Machine learning, DTINet, etc. | AUC (Area Under Curve) value of 0.75 | Lack of adequate labels and low label quality limit development |
| Unsupervised learning | Clustering algorithm, MANTRA, etc. | Accuracy is usually moderate | Promising for discovering new drug–disease associations that are missing from the current understanding of pharmacology |
| Semi-supervised learning | LapRLS, LPMIHN, NetCBP, etc. | There are many successful cases | Balances accuracy against generalization to new examples; high research value |

| Region | References | Modality | Dimension | Method | Performance |
|---|---|---|---|---|---|
| Breast | Mohammed et al. [29] (2018) | X-ray | 2D | CNN | DDSM: 99.7% Acc(D)/97% Acc(C) |
| | Antari et al. [91] (2020) | X-ray | 2D | CNN | DDSM: 99.17% Acc(D)/97.5% Acc(C); Inbreast: 97.27% Acc(D)/95.32% Acc(C) |
| | Sekhar et al. [36] (2022) | X-ray | 2D | TL, CNN | DDSM: 100% AUC(C); Inbreast: 99.94% AUC(C); MIAS: 99.93% AUC(C) |
| Lung | Khosravan et al. [93] (2018) | CT | 3D | DCNN | LUNA: 0.897 CPM(D) |
| | Zheng et al. [92] (2020) | CT | 2.5D | MIP, CNN | LIDC: 92.7% Sen/1 FPs(D) |
| | Liu et al. [31] (2021) | CT | 3D | MTL, CNN | LUNA: 0.939 CPM(D) |
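
Several entries in the table report accuracy (Acc) and sensitivity (Sen). Assuming the usual confusion-matrix definitions, these ratios can be sketched as below; the function name and the example counts are hypothetical and are not taken from any of the cited studies.

```python
def detection_metrics(tp, fp, tn, fn):
    """Return (sensitivity, specificity, accuracy) from confusion counts."""
    total = tp + fp + tn + fn
    if total == 0:
        raise ValueError("at least one sample is required")
    # Sensitivity: fraction of true lesions that were detected.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    # Specificity: fraction of healthy cases correctly left unflagged.
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    # Accuracy: fraction of all cases classified correctly.
    accuracy = (tp + tn) / total
    return sensitivity, specificity, accuracy


# Hypothetical counts for 200 cases.
sen, spe, acc = detection_metrics(tp=90, fp=5, tn=95, fn=10)
print(f"Sen={sen:.3f}, Spe={spe:.3f}, Acc={acc:.3f}")
```

Because accuracy mixes both classes, it can look high on imbalanced screening data even when sensitivity is poor, which is one reason detection studies often report sensitivity at a fixed number of false positives instead.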

| Region | References | Modality | Dimension | Method | Performance |
|---|---|---|---|---|---|
| Breast | Singh et al. [98] (2020) | X-ray | 2D | GAN, CNN, Semi-supervised | DDSM: 94% DSC, 87% IoU |
| | Essam et al. [102] (2021) | Infrared Image | 2D | MH, ML | Private. It is better than the other nine metaheuristics (MH). |
| Brain tumor | Yu et al. [95] (2021) | MRI | 3D | CNN | BRATS2018: 86.45% mDSC, 3.67 mHD; BRATS2019: 84.61% mDSC, 3.69 mHD |
| Vessel | Gur et al. [101] (2019) | Microscopic Image | 2D | Unsupervised-DL, Morphology | VessINN: 82.9% DSC, 99.2% Sen; DeepVess: 77.6% DSC, 92.3% Sen |
| | Feng et al. [99] (2021) | Pathological Image | 2D | GAN | Private: 99.5% DSC, 97.25% mIoU |
| | Arias et al. [96] (2021) | OCT | 2D | CNN | DRIVE: 89.97% Sen, 96.90% Spe, 95.63% Acc |
| Lung | Shakibapour et al. [100] (2018) | CT | 3D | CNN, Unsupervised | LUNA: 82.35% DSC; LIDC: 71.05% DSC |
| | Wu et al. [37] (2020) | CT | 3D | CNN, CRF | LIDC: 83.3% DSC |
| | Usman et al. [97] (2020) | CT | 2.5D | Semi-Automatic, CNN | LIDC: 87.5% DSC |
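
Most segmentation results in the table are reported as the Dice similarity coefficient (DSC) or intersection over union (IoU). A minimal sketch of both overlap measures for flattened binary masks is given below; the function name, the flat-list mask representation, and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
def dice_and_iou(pred_mask, true_mask):
    """Return (DSC, IoU) for two flat binary masks of equal length."""
    if len(pred_mask) != len(true_mask):
        raise ValueError("masks must have the same number of pixels")
    # Pixels labeled foreground in both the prediction and the ground truth.
    intersection = sum(1 for p, t in zip(pred_mask, true_mask) if p and t)
    pred_size = sum(1 for p in pred_mask if p)
    true_size = sum(1 for t in true_mask if t)
    union = pred_size + true_size - intersection
    if union == 0:
        # Both masks are empty; treated here as perfect agreement (assumed).
        return 1.0, 1.0
    dice = 2 * intersection / (pred_size + true_size)
    iou = intersection / union
    return dice, iou


# Hypothetical 4-pixel masks: one pixel overlaps out of three marked in total.
dsc, iou = dice_and_iou([1, 1, 0, 0], [1, 0, 1, 0])
print(f"DSC={dsc:.3f}, IoU={iou:.3f}")
```

The two measures are monotonically related (DSC = 2·IoU / (1 + IoU)), so DSC is never lower than IoU for the same prediction; this should be kept in mind when comparing rows that report different metrics.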

| Region | Reference | Modality | Method | Contributions |
|---|---|---|---|---|
| Lung | Cai et al. [103] (2021) | MRI | Landmark-based | An adaptive landmark weighting strategy reduces the error caused by landmark mismatch. |
| | Hansen and Heinrich [104] (2021) | CT | GCN and CNN | CNN and GCN are used to extract discrete displacement space and spatial dimension. |
| | Xue et al. [38] (2019) | CT | MRF-based | Designs a higher-order energy function to maintain the topology. |
| Brain | Huang et al. [105] (2021) | MRI | STN-based | (1) Integration of multimodal affine and deformable transformations. (2) Derivation of reversible deformation. |
| | Huang et al. [106] (2021) | MRI | DNN-based | (1) A new multi-scale cascade network. (2) A difficulty-sensing module that gradually feeds the hard regions forward to subsequent subnetworks. |
| | Fan et al. [107] (2019) | MRI | FCN-based | (1) Uses the deformation field to guide ground truth. (2) Uses the difference between the images after registration to guide the image heterogeneity. |
| Prostate | Fu et al. [108] (2021) | US and MRI | CNN-based | Combines with point cloud matching for registration. |
| | Sood et al. [109] (2021) | MRI and Histopathology images | GAN-based | (1) Introduces a new super-resolution generative adversarial network. (2) Does not require interpolation. |

| Type | Reference | Method | Deficiency |
|---|---|---|---|
| Patient representation based on discrete medical concepts | [45] | Clustering and association rules | Negation detection and word sense disambiguation in the model may cause some symptom concepts to be missed. |
| Patient representation based on time series medical data | [41] | Improved BERT model | The extracted semantic features may affect the performance of the model due to the constraints of the bag-of-words assumption. |
| | [42] | Attention-based predictive model | The model does not take the alignment of ICD codes in different standards into account. |
| | [118] | Temporal tree model | The obtained patient representations may only perform well on upstream tasks such as computing similarity. |
| Patient representation based on multimodal data | [117] | Clustering and CNN | The models account for imputation on incomplete datasets, but also introduce data bias and noise. |
| | [12] | CNN-LSTM | The models are difficult to interpret and may not be clinically acceptable. |
| | [119] | Collective Hidden Interaction Tensor Decomposition Model | The process of reconstructing hidden interaction tensors to infer unobserved modes is difficult to explain. |

| References | Technical Tools | Protection Links (Transmission / Storage / Visualization) | Description |
|---|---|---|---|
| [50] | Game theory | √ | Proposes a Markov theory-based game model for privacy protection in e-health applications. |
| [51] | Blockchain | √ √ | Proposes a blockchain-based authentication scheme for securing medical data. |
| [132] | Anonymous communication protocol | √ | Proposes an anonymous communication protocol for mobile health to protect data security and identity privacy. |
| [133] | Visual encryption | √ | Reviews more than 30 steganography and visual cryptography models. |
| [134] | Federated learning | √ √ | Proposes a federated learning framework that transmits only the parameters of the training results, not the medical data. |
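
The last row above ([134]) describes a framework in which the medical data stay at each site and only the parameters of the training results are transmitted. A minimal sketch of that idea follows, assuming federated averaging (FedAvg) as the aggregation rule and a toy least-squares model; the survey does not state which rule or model [134] uses, and the names `local_update`, `federated_average`, `train`, `hospital_a`, and `hospital_b` are hypothetical.

```python
from typing import List, Tuple

Vector = List[float]
Dataset = List[Tuple[Vector, float]]  # (features, target) pairs held by one site


def local_update(weights: Vector, data: Dataset,
                 lr: float = 0.1, epochs: int = 5) -> Vector:
    """Run a few gradient-descent steps of least-squares regression on one site."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


def federated_average(updates: List[Tuple[Vector, int]]) -> Vector:
    """Average client weights, weighted by each client's sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[j] * n for w, n in updates) / total for j in range(dim)]


def train(clients: List[Dataset], dim: int, rounds: int = 100) -> Vector:
    """Alternate local training and server-side averaging for a fixed number of rounds."""
    global_w = [0.0] * dim
    for _ in range(rounds):
        # Each site trains locally; only (weights, sample count) leaves the site.
        updates = [(local_update(global_w, data), len(data)) for data in clients]
        global_w = federated_average(updates)
    return global_w


# Two hypothetical hospitals whose records follow y = 2*x1 + 1.
# Each feature vector is [x1, 1.0], where the constant 1.0 acts as a bias term.
hospital_a: Dataset = [([0.0, 1.0], 1.0), ([1.0, 1.0], 3.0)]
hospital_b: Dataset = [([2.0, 1.0], 5.0), ([3.0, 1.0], 7.0), ([1.5, 1.0], 4.0)]

model = train([hospital_a, hospital_b], dim=2)
print(model)  # the weights should approach [2.0, 1.0]
```

In this sketch the raw records in `hospital_a` and `hospital_b` are only ever read inside `local_update`; the server function `federated_average` sees weight vectors and sample counts. Exchanged parameters can still leak information in practice, so a real deployment would likely combine this with further safeguards.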

| Classification | Application Type | Application Example |
|---|---|---|
| Surgical robots | Orthopaedic robots | Pelvic fracture repositioning robot. |
| | Surgical robots | Automatic suture surgical robot; soft tissue surgery robot. |
| Rehabilitation robots | Rehabilitation training robots | Portable planar passive rehabilitation robot; ankle rehabilitation robot. |
| | Exoskeleton robots | Upper limb rehabilitation exoskeleton robot. |
| Assistive robots | Positioning and diagnostic robots | Spinal injection needle positioning robot; soft surgery robot for lung cancer diagnosis and treatment; airbag endoscopy robot; magnetic positioning robot. |
| | Robot assistant | MRI-guided low back pain injection with a fully driven body robotic assistant. |
| | Telemedicine robots | Telemedicine robot based on standard imaging technology robotic arm control. |

| Literature | Specific Application Areas |
|---|---|
| References [13,15,17,21,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,166] | Genomics |
| References [23,24,25,26,27,28,76,77,78,79,80,81] | Drug discovery |
| References [3,4,6,14,16,19,20,29,30,31,33,35,36,37,38,82,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,130,149,151,153,155,156,157,159,161] | Medical imaging |
| References [41,42,43,44,45,50,116,117,118,119,120,121,122,123,124,154] | Electronic health records |
| References [46,47,48,51,52,59,60,61,125,126,127,128,129,131,135,136,137,138,140,167] | Health management |
| References [53,54,55,141,142,143,144,145,146,147,164] | Medical robots |
| References [1,12,168] | Artificial intelligence medical assisted teaching |
| References [5,7,9,10,12,22,53,130,139,160,162,163,165,169] | Comprehensive review |
| References [8,132,133,134] | Medical data security |
| References [11,61] | Medical AI models and frameworks |
| References [2,18,24,32,34,39,40,49,56,57,58,83,148,150,152,158,164,170,171] | Other |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gou, F.; Liu, J.; Xiao, C.; Wu, J. Research on Artificial-Intelligence-Assisted Medicine: A Survey on Medical Artificial Intelligence. Diagnostics 2024, 14, 1472. https://doi.org/10.3390/diagnostics14141472
