External Quality Assessment (EQA) for SARS-CoV-2 RNA Point-of-Care Testing in Primary Healthcare Services: Analytical Performance over Seven EQA Cycles

Abstract

1. Introduction

2. Methods

2.1. Molecular SARS-CoV-2 POC Testing Network

2.2. SARS-CoV-2 POC Testing Operator Training and Competency

2.3. EQA Survey Organisation and Samples

2.4. EQA Dispatch and Storage

2.5. Testing of EQA Specimens and Result Interpretation

2.6. Submission and Assessment of Results

2.7. EQA Participant Reports

2.8. Corrective Action and Re-Training

3. Results

3.1. Participating Health Services

3.2. Quality Assurance Performance

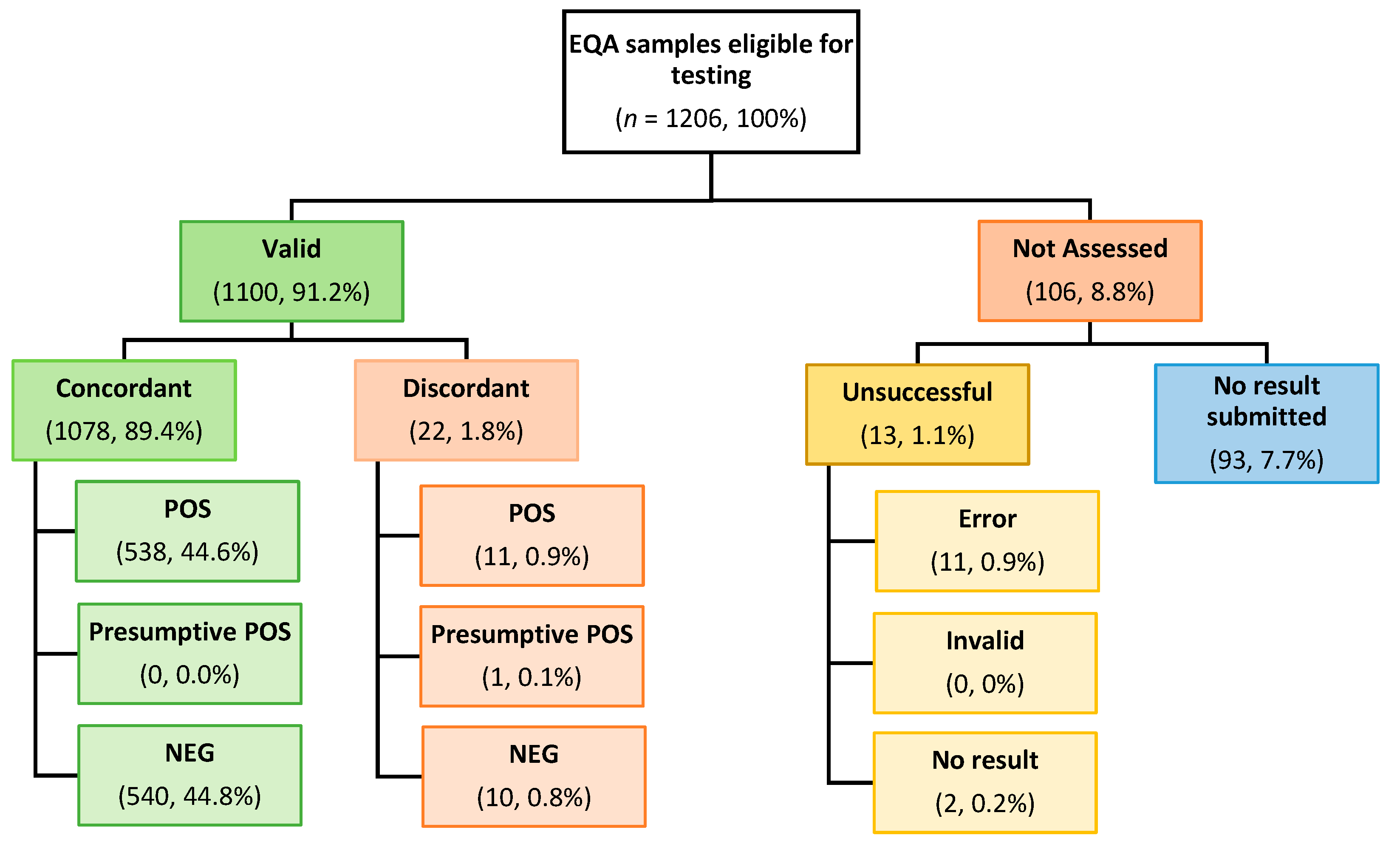

3.2.1. Overall EQA Performance

3.2.2. Inter-Site Performance by Specimen

3.2.3. Reporting of Discordant Results

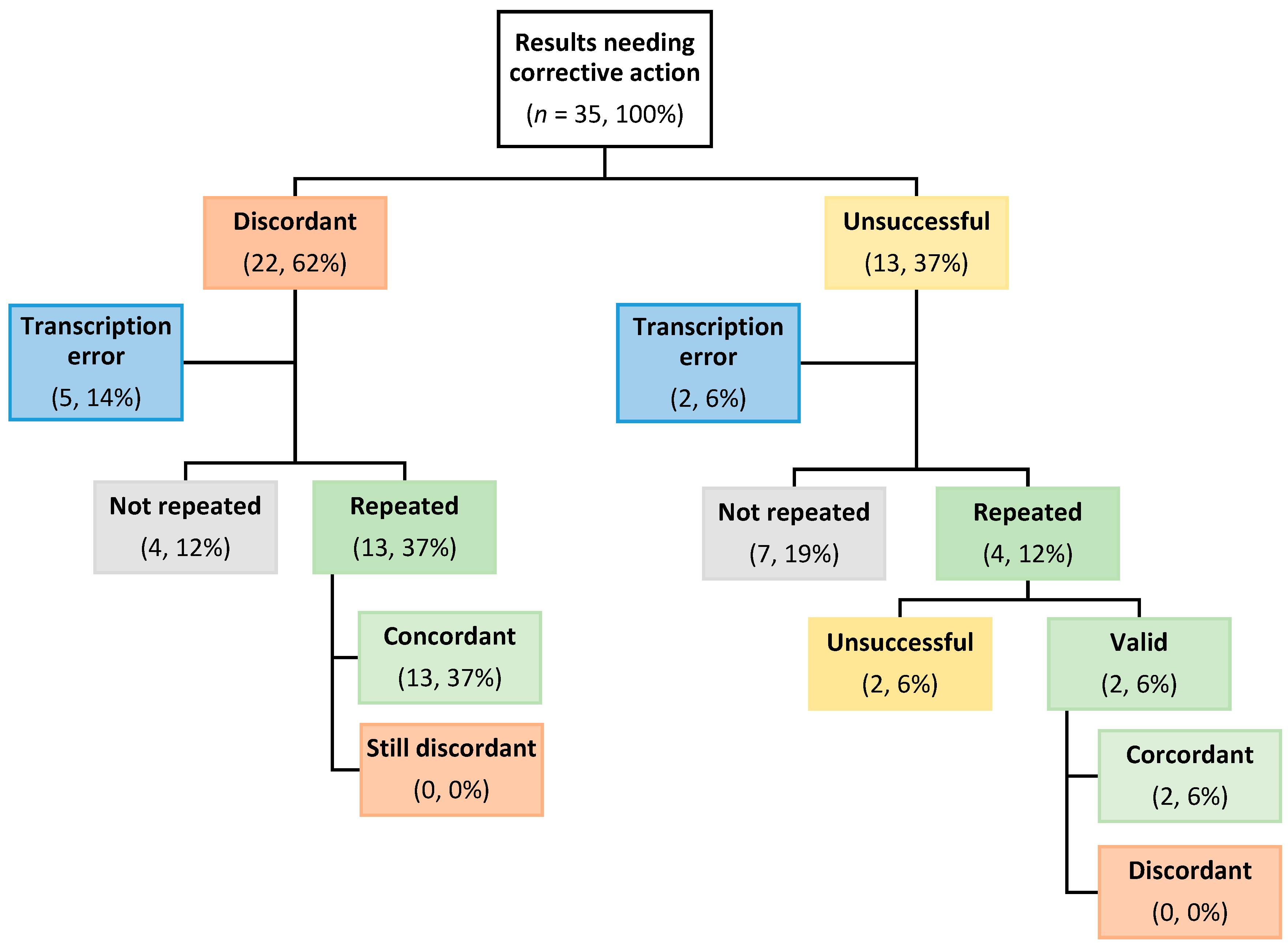

3.2.4. Post-Report Corrective Actioning of Discordant Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| EQA Specification | Description |
|---|---|
| Deactivated material | Gamma irradiated to allow safe product transportation and reduce infectivity risk to operator |
| Liquid-stable | No reconstitution required by operator. Vial size facilitated safe handling with the assay manufacturer’s sampling pipette (i.e., no additional equipment required) |
| Temperature-stable | Product shipped at ambient temperature |
| Variable viral loads | Survey included negative and positive specimens, with positive viral loads varied across survey production |
| Inclusion of variants of concern | Delta and Omicron variants of concern were included in EQA specimen manufacture as they became prominent through the pandemic |
| Sample volume | Sample volume was adequate to allow for repeat testing, if required for corrective action |
| Vial identification | Survey-specific coloured vial lids for easy specimen number identification |
| Instructions for Use | Printed, customised layperson instructions for use, with reference to colour-coded specimens |
| Survey Frequency | Minimised to two samples per survey at quarterly intervals to reduce burden on remote health service staff |
| Dispatch Lists | Customised survey-specific product dispatch lists to maximise successful delivery to remote health services |
| Result Submission | Options for simple, program-specific, paper-based (and later web-based) result submission using the POC testing resources or website with the same operator ID and password |
| Reports | Simplified, layperson feedback reports for health services |

| Survey Number and Issue Date (Month, Year) | Specimen ID | Specimen Constituent | SARS-CoV-2 Gene Target/s and Expected Cycle Threshold (Ct) | Expected Specimen Qualitative Result |
|---|---|---|---|---|
| 1 (Jul 2020) | MCoV-2020-01 | Coronavirus (SARS-CoV-2) | E gene Ct 17.7; N2 gene Ct 20.2 | Positive |
| 1 (Jul 2020) | MCoV-2020-02 | MDCK (negative) | E gene Ct 0; N2 gene Ct 0 | Negative |
| 2 (Nov 2020) | MCoV-2020-03 | MDCK (negative) | E gene Ct 0; N2 gene Ct 0 | Negative |
| 2 (Nov 2020) | MCoV-2020-04 | Coronavirus (SARS-CoV-2) | E gene Ct 21.3; N2 gene Ct 23.8 | Positive |
| 3 (Feb 2021) | MCoV-2021-01 | Coronavirus (SARS-CoV-2) | E gene Ct 21.7; N2 gene Ct 24.4 | Positive |
| 3 (Feb 2021) | MCoV-2021-02 | MDCK (negative) | E gene Ct 0; N2 gene Ct 0 | Negative |
| 4 (Jun 2021) | MCoV-2021-03 | MDCK (negative) | E gene Ct 0; N2 gene Ct 0 | Negative |
| 4 (Jun 2021) | MCoV-2021-04 | Coronavirus (SARS-CoV-2) | E gene Ct 25.8; N2 gene Ct 28.3 | Positive |
| 5 (Sep 2021) | MCoV-2021-05 | Coronavirus (SARS-CoV-2) | E gene Ct 26.3; N2 gene Ct 28.7 | Positive |
| 5 (Sep 2021) | MCoV-2021-06 | MDCK (negative) | E gene Ct 0; N2 gene Ct 0 | Negative |
| 6 (Feb 2022) | MCoV-2022-01 | Coronavirus (SARS-CoV-2 Delta variant) | E gene Ct 27.6; N2 gene Ct 30.2 | Positive |
| 6 (Feb 2022) | MCoV-2022-02 | MDCK (negative) | E gene Ct 0; N2 gene Ct 0 | Negative |
| 7 (May 2022) | MCoV-2022-03 | MDCK (negative) | E gene Ct 0; N2 gene Ct 0 | Negative |
| 7 (May 2022) | MCoV-2022-04 | Coronavirus (SARS-CoV-2 Omicron variant) | E gene Ct 29.3; N2 gene Ct 31.6 | Positive |

| Survey | 1 | 1 | 2 | 2 | 3 | 3 | 4 | 4 | 5 | 5 | 6 | 6 | 7 | 7 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sample | 2020-01 | 2020-02 | 2020-03 | 2020-04 | 2021-01 | 2021-02 | 2021-03 | 2021-04 | 2021-05 | 2021-06 | 2022-01 | 2022-02 | 2022-03 | 2022-04 |
| Number of eligible sites | 80 | 80 | 86 | 86 | 88 | 88 | 88 | 88 | 87 | 87 | 89 | 89 | 85 | 85 |
| % of eligible sites submitted results | 96% | 95% | 93% | 93% | 100% | 100% | 97% | 97% | 99% | 99% | 94% | 94% | 94% | 94% |

| Survey | Specimen ID | Expected Result | Inter-Site Concordance % (n) | Inter-Site Discordance % (n) | Inter-Site Not Assessed % (n) |
|---|---|---|---|---|---|
| 1 (Jul 2020) | MCoV-2020-01 | Positive | 80.0% (64) | 7.5% (6) | 12.5% (10) |
| 1 (Jul 2020) | MCoV-2020-02 | Negative | 83.7% (67) | 6.3% (5) | 10.0% (8) |
| 2 (Nov 2020) | MCoV-2020-03 | Negative | 89.5% (77) | 1.2% (1) | 9.3% (8) |
| 2 (Nov 2020) | MCoV-2020-04 | Positive | 90.7% (78) | 1.2% (1) | 8.1% (7) |
| 3 (Feb 2021) | MCoV-2021-01 | Positive | 90.9% (80) | 0.0% (0) | 9.1% (8) |
| 3 (Feb 2021) | MCoV-2021-02 | Negative | 89.8% (79) | 1.1% (1) | 9.1% (8) |
| 4 (Jun 2021) | MCoV-2021-03 | Negative | 92.0% (81) | 2.3% (2) | 5.7% (5) |
| 4 (Jun 2021) | MCoV-2021-04 | Positive | 92.0% (81) | 2.3% (2) | 5.7% (5) |
| 5 (Sep 2021) | MCoV-2021-05 | Positive | 89.7% (78) | 1.1% (1) | 9.2% (8) |
| 5 (Sep 2021) | MCoV-2021-06 | Negative | 90.8% (79) | 1.2% (1) | 8.0% (7) |
| 6 (Feb 2022) | MCoV-2022-01 | Positive | 91.0% (81) | 0.0% (0) | 9.0% (8) |
| 6 (Feb 2022) | MCoV-2022-02 | Negative | 89.9% (80) | 1.1% (1) | 9.0% (8) |
| 7 (May 2022) | MCoV-2022-03 | Negative | 90.6% (77) | 1.2% (1) | 8.2% (7) |
| 7 (May 2022) | MCoV-2022-04 | Positive | 89.4% (76) | 0.0% (0) | 10.6% (9) |
| Median (interquartile range, IQR) | | | 90.2% (89.6–90.9%) | 1.2% (1.1–2.0%) | 9.0% (8.2–9.3%) |
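
The median and interquartile range for inter-site concordance can be recomputed from the concordant site counts in the table and the number of eligible sites per survey. The Python sketch below is illustrative only: the variable names are ours, and the quantile convention (linear interpolation over the closed data range, `method="inclusive"` in `statistics.quantiles`) is an assumption, since the source does not state which method was used. Under that assumption the recomputed values agree with the reported 90.2% (89.6–90.9%).

```python
from statistics import median, quantiles

# (concordant sites, eligible sites) for each of the 14 specimens,
# copied from the concordance table and the eligible-site counts.
concordance_counts = [
    (64, 80), (67, 80),  # survey 1
    (77, 86), (78, 86),  # survey 2
    (80, 88), (79, 88),  # survey 3
    (81, 88), (81, 88),  # survey 4
    (78, 87), (79, 87),  # survey 5
    (81, 89), (80, 89),  # survey 6
    (77, 85), (76, 85),  # survey 7
]

# Concordance rate per specimen, as a percentage of eligible sites.
rates = [100.0 * n / total for n, total in concordance_counts]

# Assumption: quartiles use the "inclusive" interpolation method;
# the paper does not say which convention was applied.
q1, _, q3 = quantiles(rates, n=4, method="inclusive")

summary = (round(median(rates), 1), round(q1, 1), round(q3, 1))
print("median %.1f%% (IQR %.1f-%.1f%%)" % summary)
```

Working from the raw counts rather than the rounded percentages avoids compounding rounding error across the 14 specimens.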

| Survey Number | Specimen ID | Expected Result | POS % (n) | PP % (n) | NEG % (n) | Unsuccessful % (n) | No Result Submitted % (n) |
|---|---|---|---|---|---|---|---|
| 1 (Jul 2020) | MCoV-2020-01 | Positive | 80.0% (64) | 0.0% (0) | 7.5% (6) | 3.8% (3) Error, 1.2% (1) No Result | 7.5% (6) |
| 1 (Jul 2020) | MCoV-2020-02 | Negative | 6.2% (5) | 0.0% (0) | 83.8% (67) | 1.2% (1) Error | 8.8% (7) |
| 2 (Nov 2020) | MCoV-2020-03 | Negative | 1.2% (1) | 0.0% (0) | 89.5% (77) | 1.2% (1) Error | 8.1% (7) |
| 2 (Nov 2020) | MCoV-2020-04 | Positive | 90.7% (78) | 0.0% (0) | 1.2% (1) | 0.0% (0) | 8.1% (7) |
| 3 (Feb 2021) | MCoV-2021-01 | Positive | 90.9% (80) | 0.0% (0) | 0.0% (0) | 1.1% (1) Error | 8.0% (7) |
| 3 (Feb 2021) | MCoV-2021-02 | Negative | 1.1% (1) | 0.0% (0) | 89.8% (79) | 1.1% (1) No Result | 8.0% (7) |
| 4 (Jun 2021) | MCoV-2021-03 | Negative | 2.3% (2) | 0.0% (0) | 92.0% (81) | 0.0% (0) | 5.7% (5) |
| 4 (Jun 2021) | MCoV-2021-04 | Positive | 92.0% (81) | 0.0% (0) | 2.3% (2) | 0.0% (0) | 5.7% (5) |
| 5 (Sep 2021) | MCoV-2021-05 | Positive | 89.7% (78) | 0.0% (0) | 1.1% (1) | 1.1% (1) Error | 8.1% (7) |
| 5 (Sep 2021) | MCoV-2021-06 | Negative | 1.1% (1) | 0.0% (0) | 90.8% (79) | 0.0% (0) | 8.1% (7) |
| 6 (Feb 2022) | MCoV-2022-01 | Positive | 91.0% (81) | 0.0% (0) | 0.0% (0) | 0.0% (0) | 9.0% (8) |
| 6 (Feb 2022) | MCoV-2022-02 | Negative | 0.0% (0) | 1.1% (1) | 89.9% (80) | 0.0% (0) | 9.0% (8) |
| 7 (May 2022) | MCoV-2022-03 | Negative | 1.2% (1) | 0.0% (0) | 90.5% (77) | 1.2% (1) Error | 7.1% (6) |
| 7 (May 2022) | MCoV-2022-04 | Positive | 89.4% (76) | 0.0% (0) | 0.0% (0) | 3.5% (3) Error | 7.1% (6) |
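
The result categories in this table collapse into the concordant, discordant and not-assessed counts given in the inter-site concordance table. The Python sketch below shows one way to do that tally. The class and function names are hypothetical, and treating a presumptive positive (PP) result as discordant is an assumption: it is inferred from specimen MCoV-2022-02, where the single PP result coincides with the single discordant result, and the source does not show how a PP on a positive specimen would be scored.

```python
from dataclasses import dataclass


@dataclass
class SpecimenResults:
    """Site counts for one EQA specimen (hypothetical container)."""
    expected: str       # "Positive" or "Negative"
    pos: int            # sites reporting positive
    pp: int             # sites reporting presumptive positive
    neg: int            # sites reporting negative
    unsuccessful: int   # error or no-result test runs
    not_submitted: int  # sites that submitted nothing


def tally(r: SpecimenResults) -> tuple[int, int, int]:
    """Return (concordant, discordant, not_assessed) site counts."""
    if r.expected == "Positive":
        # Assumption: PP on a positive specimen is scored as discordant.
        concordant, discordant = r.pos, r.neg + r.pp
    else:
        # Inferred from MCoV-2022-02: PP on a negative is discordant.
        concordant, discordant = r.neg, r.pos + r.pp
    not_assessed = r.unsuccessful + r.not_submitted
    return concordant, discordant, not_assessed


# Two rows from the table: the first positive specimen and the PP case.
mcov_2020_01 = SpecimenResults("Positive", pos=64, pp=0, neg=6,
                               unsuccessful=4, not_submitted=6)
mcov_2022_02 = SpecimenResults("Negative", pos=0, pp=1, neg=80,
                               unsuccessful=0, not_submitted=8)

print(tally(mcov_2020_01))  # (64, 6, 10)
print(tally(mcov_2022_02))  # (80, 1, 8)
```

Both outputs match the corresponding rows of the concordance table, 80.0% (64) / 7.5% (6) / 12.5% (10) and 89.9% (80) / 1.1% (1) / 9.0% (8).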


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Matthews, S. J., Miller, K., Andrewartha, K., Milic, M., Byers, D., Santosa, P., Kaufer, A., Smith, K., Causer, L. M., Hengel, B., Gow, I., Applegate, T., Rawlinson, W. D., Guy, R., & Shephard, M. (2024). External Quality Assessment (EQA) for SARS-CoV-2 RNA Point-of-Care Testing in Primary Healthcare Services: Analytical Performance over Seven EQA Cycles. Diagnostics, 14(11), 1106. https://doi.org/10.3390/diagnostics14111106