Abstract

Brain tumor diagnosis at an early stage can improve the chances of successful treatment and better patient outcomes. In the biomedical industry, non-invasive diagnostic procedures, such as magnetic resonance imaging (MRI), can be used to diagnose brain tumors. Deep learning, a type of artificial intelligence, can analyze MRI images in a matter of seconds, reducing the time it takes for diagnosis and potentially improving patient outcomes. Furthermore, an ensemble model can help increase the accuracy of classification by combining the strengths of multiple models and compensating for their individual weaknesses. Therefore, in this research, a weighted average ensemble deep learning model is proposed for the classification of brain tumors. For the weighted ensemble classification model, three different feature spaces are taken from the transfer learning VGG19 model, Convolution Neural Network (CNN) model without augmentation, and CNN model with augmentation. These three feature spaces are ensembled with the best combination of weights, i.e., weight1, weight2, and weight3 by using grid search. The dataset used for simulation is taken from The Cancer Genome Atlas (TCGA), having a lower-grade glioma collection with 3929 MRI images of 110 patients. The ensemble model helps reduce overfitting by combining multiple models that have learned different aspects of the data. The proposed ensemble model outperforms the three individual models for detecting brain tumors in terms of accuracy, precision, and F1-score. Therefore, the proposed model can act as a second opinion tool for radiologists to diagnose the tumor from MRI images of the brain.

1. Introduction

A brain tumor, regarded as one of the most serious illnesses of the nervous system, is an abnormal and uncontrolled growth of brain cells. The Tumor Society estimates that approximately 400,000 (4 lakh) people worldwide are affected by brain tumors every year [1,2]. Brain tumors can cause a range of complications, including seizures, cognitive problems, and physical disabilities. Early detection and treatment can help reduce the risk of these complications. Detection at an early stage allows for a wider range of treatment options that can help improve the patient’s quality of life by reducing the need for more invasive treatments and minimizing the impact of the tumor [3,4].

The use of deep learning for the detection of brain tumors is an active field of research that shows significant potential for enhancing the precision and timeliness of brain tumor diagnosis [5,6,7]. A subfield of machine learning, deep learning entails training a neural network to recognize structures within large datasets. To detect brain tumors, for example, deep learning algorithms can be trained on large collections of medical images to recognize the telltale signs of these diseases.

Brain tumor detection using deep learning faces several challenges, including the need for large, high-quality datasets and the difficulty of interpreting the output of the neural network [8]. Nevertheless, the availability of large medical image datasets, along with recent advancements in deep learning algorithms, has led to encouraging outcomes in this area. Future research and development into deep learning-based techniques for brain tumor detection may increase the accuracy and efficiency of brain tumor diagnosis and ultimately improve patient outcomes.

In deep learning, ensemble models can be used to improve the accuracy and robustness of predictions. In the context of brain tumor classification, an ensemble model can increase classification accuracy by combining the strengths of multiple models and compensating for their individual weaknesses. For example, if a particular model is more prone to overfitting, the ensemble model can compensate by giving less weight to its predictions.

Overall, the use of an ensemble model can improve the accuracy and robustness of brain tumor classification by leveraging the strengths of multiple models and mitigating their individual weaknesses. This can ultimately help clinicians make more accurate and informed decisions about the diagnosis and treatment of brain tumors.

In this research, a weighted average ensemble deep learning model for brain tumor detection is presented. The article’s most significant contributions are as follows:

- A weighted average ensemble model is proposed for the classification of brain tumors, using a grid search to find the best combination of weights, i.e., weight1, weight2, and weight3, which are assigned to the transfer learning model, the Convolution Neural Network (CNN) model without augmentation, and the CNN model with augmentation, respectively;

- The results of the weighted average ensemble model are compared with those of the individual models, i.e., the transfer learning model, the CNN model without augmentation, and the CNN model with augmentation; the proposed ensemble model outperforms each of the individual models;

- The Adam optimizer and a batch size of 32 were used to evaluate the proposed weighted average ensemble model for brain tumor classification from MRI scans.

2. Related Work

The existing methods in the literature are reviewed here. Gill et al. [9] used a VGG19 architecture to classify brain tumors and achieved an accuracy of 73.0%, precision of 87.0%, sensitivity of 75.0%, and an F1-score of 81.0% on a dataset of 3000 brain MRI images. Rajinikanth [10] also used the VGG19 architecture and achieved a higher performance, with an accuracy of 98.17%, precision of 98.50%, sensitivity of 98.75%, and specificity of 97% on a dataset of 1400 MRI images. Khan [11] used both VGG16 and VGG19 architectures and achieved high accuracy on different datasets, with 98.16% on BraTs2015, 97.26% on BraTs2017, and 93.40% on BraTs2018. Khan [12] used a CNN and attained an accuracy of 97.8% on a dataset of 3216 images. Asiri et al. [13] used the VGG19 architecture and attained an accuracy of 98.0% on a dataset of 2870 images. Raj et al. [14] in 2020 used a recurrent neural network technique and achieved an accuracy of 96%, specificity of 98%, and sensitivity of 97%. Poonguzhali et al. [15] in 2019 analyzed 20 patient images using RCNN and SVM classifiers and achieved a sensitivity of 82% and specificity of 99%. Pandian et al. [16] in 2017 analyzed 1000 images using Convnet techniques and attained an accuracy of 97%. Joshi et al. [17] in 2019 used a CNN technique for image analysis and achieved an accuracy of 79.07%. Rao et al. [18] selected patches in each voxel’s plane and trained a CNN on each. The softmax outputs of each CNN’s final fully connected (FC) layer were then combined and used to build a random forest (RF) classifier.

Kao et al. [19] suggested a CNN model that uses block location data. Combining tumor data taken from several advanced networks can decrease ambiguity and significantly increase accuracy. To gain more precise anatomical data on brain tumors, Nassar et al. [20,21] fed the CNN model by integrating the image features of long skip-linked lesions. W. Chen et al. [22] presented a separable 3D U-Net model that circumvented the memory limit by using separable 3D convolutions. Wang et al. [23] proposed TransBTS, a structure built on a transformer. Liu et al. [24,25] suggested a customized deep 3D V-Net model based on encoders and decoders that had fewer parameters and used less memory and computing power. An attention module with group cross-channel was used to keep track of the most important features [25,26,27]. The suggested work used standard 2017 and 2018 records for research studies. From these two datasets, 2D slices with only the tumorous area were taken.

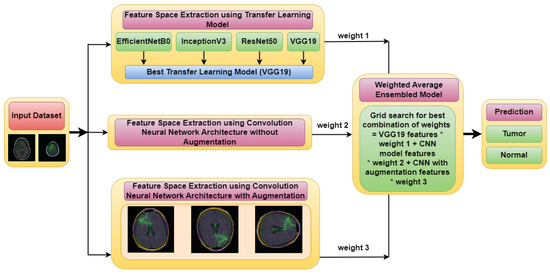

3. Proposed Weighted Average Ensemble Deep Learning Model Architecture

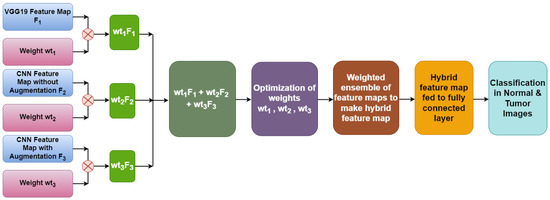

Figure 1 illustrates the architecture of the proposed Weighted Average Ensemble Deep Learning Model for classifying MRI images of brain tumors. The whole methodology is divided into two phases. The classification is performed using a weighted average ensemble of three models: the first is a transfer learning-based model, the second is a Convolution Neural Network (CNN) model without augmentation, and the third is a CNN model with augmentation. From these three models, three different feature spaces are extracted and ensembled to form an optimized feature space. For this, three different weights, i.e., weight 1, weight 2, and weight 3, are assigned to the three models, and a grid search over weight combinations is used to find the best-optimized classification model. By merging the results of several models, an ensemble model can provide more precise predictions. It is also more robust than the individual models because, if one of the models in the ensemble makes an incorrect prediction, the other models can compensate and provide a correct prediction.

Figure 1.

Proposed Weighted Average Ensemble Deep Learning Model for classification of brain tumor.

3.1. Input Dataset

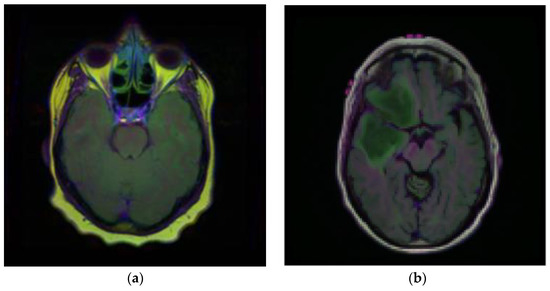

The dataset includes 3929 brain MRI images from 110 patients, characterized using FLAIR abnormalities. Of the 3929 images, 90% are used for training and 10% are used for testing. Of the 90% training data, 15% are then held out as the validation set. Figure 2 illustrates the brain MRI images taken from Kaggle [28,29]. Figure 2a displays the normal image and Figure 2b displays the tumor image of the brain, in which two tumor regions are shown with a break in between. It is difficult to segment this break region in the tumor part. The proposed methodology shown in Figure 3 also segments this break part accurately.
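The split described above can be turned into approximate image counts. The following is a minimal sketch, assuming that the fractions are applied with simple rounding; the function name `split_counts` and the rounding rule are illustrative assumptions, and the exact counts used in the experiments may differ slightly.

```python
def split_counts(n_images, test_fraction=0.10, val_fraction=0.15):
    """Return (train, validation, test) sizes for a two-stage split.

    The test set is taken from the whole dataset first; the validation
    set is then taken from the remaining training portion.
    Rounding to the nearest integer is an assumption of this sketch.
    """
    n_test = round(n_images * test_fraction)
    n_train_full = n_images - n_test            # the 90% training portion
    n_val = round(n_train_full * val_fraction)  # 15% of the training portion
    n_train = n_train_full - n_val
    return n_train, n_val, n_test


n_train, n_val, n_test = split_counts(3929)
print(n_train, n_val, n_test)
```

Under these assumptions, the 3929 images divide into roughly 3006 training, 530 validation, and 393 test images.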

Figure 2.

Samples of Brain MRI Images (a) Normal Image, (b) Tumor Image.

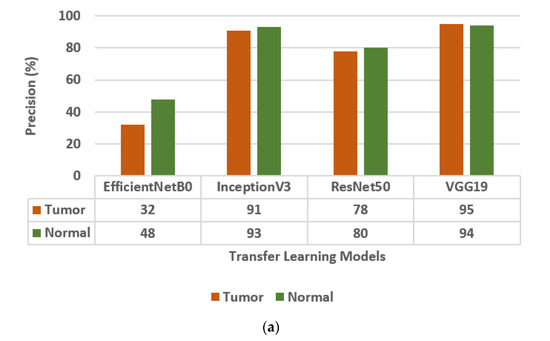

Figure 3.

Confusion matrix parameter values of different transfer learning models, i.e., EfficientNetB0, InceptionV3, ResNet50, and VGG19. (a) Precision, (b) Sensitivity, (c) F1-score.

3.2. Feature Space Extraction Using Three Different Models

In this section, classification is performed with three models. In model 1, the classification is performed using four different transfer learning models, from which the best one is selected. In model 2, the classification is performed using the Convolution Neural Network (CNN) architecture without augmentation, and in model 3, the classification is performed using the CNN architecture with augmentation.

3.2.1. Model 1: Classification Using Transfer Learning Models and Evaluation of Best Transfer Learning Model

The different Transfer Learning (TL) models that are used for the classification of brain tumors are EfficientNetB0, InceptionV3, ResNet50 [30], and VGG19. The values of the confusion matrix parameters, such as Precision (PR), Sensitivity (SN), and F1-score (FS) are obtained on all four transfer learning models and are shown in Figure 3. From the analysis of PR, SN, and FS as presented in Figure 3a–c, respectively, it is concluded that the VGG19 model outperforms the other three TL models, i.e., EfficientNetB0, InceptionV3, and ResNet50. The VGG19 model has obtained a precision of 95%, sensitivity of 96%, and F1-score of 95% for brain tumor classes.
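For reference, the three confusion matrix parameters reported above follow the standard definitions based on true positives (TP), false positives (FP), and false negatives (FN). The sketch below shows these definitions in Python; the function name and the example counts are hypothetical and are not taken from the reported experiments.

```python
def confusion_metrics(tp, fp, fn):
    """Compute precision (PR), sensitivity (SN), and F1-score (FS).

    Assumes tp + fp > 0 and tp + fn > 0; no zero-division handling
    is included in this sketch.
    """
    precision = tp / (tp + fp)      # fraction of predicted tumors that are tumors
    sensitivity = tp / (tp + fn)    # fraction of actual tumors that are detected
    f1_score = 2 * precision * sensitivity / (precision + sensitivity)
    return precision, sensitivity, f1_score


# Hypothetical counts for the tumor class, used only for illustration.
pr, sn, fs = confusion_metrics(tp=90, fp=10, fn=5)
print(f"PR={pr:.3f}, SN={sn:.3f}, FS={fs:.3f}")
```

Because the F1-score is the harmonic mean of precision and sensitivity, it always lies between the two values.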

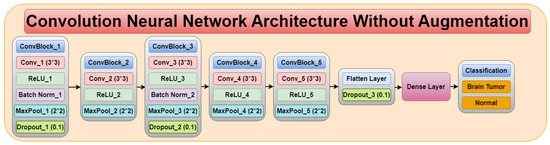

3.2.2. Model 2: Classification Using Convolution Neural Network (CNN) Architecture without Augmentation

The Convolution Neural Network (CNN) architecture consists of five convolution blocks, as shown in Figure 4. Each convolution block is built from a convolution layer, a ReLU layer, and a max pool layer, with batch normalization and dropout layers included in some of the blocks. In total, the CNN architecture consists of five convolution layers, five ReLU layers, two batch normalization layers, five max pool layers, three dropout layers, a flatten layer, and a dense layer.
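The layer totals above can be checked against a block-by-block layout. The sketch below lists one possible arrangement in plain Python and tallies the layers. The text gives only the totals, so which blocks carry the batch normalization and dropout layers is an assumption made here for illustration; Figure 4 remains the reference for the actual arrangement.

```python
from collections import Counter

# Hypothetical per-block layout: the positions of batch normalization and
# dropout are assumed, chosen only so that the totals match the text.
BLOCKS = [
    ["conv", "relu", "batch_norm", "max_pool"],
    ["conv", "relu", "max_pool", "dropout"],
    ["conv", "relu", "batch_norm", "max_pool"],
    ["conv", "relu", "max_pool", "dropout"],
    ["conv", "relu", "max_pool", "dropout"],
]
HEAD = ["flatten", "dense"]  # classifier head after the convolution blocks


def layer_counts(blocks, head):
    """Count how many layers of each type the architecture contains."""
    counts = Counter(layer for block in blocks for layer in block)
    counts.update(head)
    return counts


counts = layer_counts(BLOCKS, HEAD)
for layer_type, n in sorted(counts.items()):
    print(layer_type, n)
```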

Figure 4.

Convolution neural network architecture without augmentation.

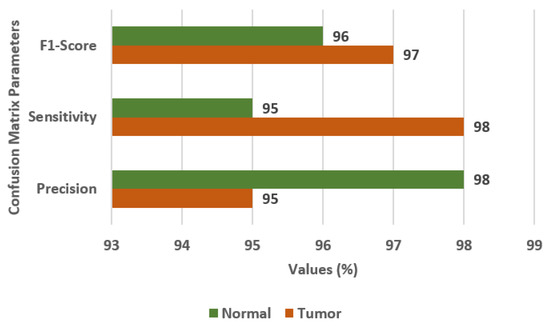

Figure 5 displays the confusion matrix parameter values obtained using the CNN model. The values of FS, SN, and PR are 97%, 98%, and 95%, respectively, for the tumor class. The CNN model outperformed the VGG19 transfer learning model.

Figure 5.

Confusion matrix parameter values for the CNN model.

3.2.3. Model 3: Classification Using Convolution Neural Network (CNN) Architecture with Augmentation

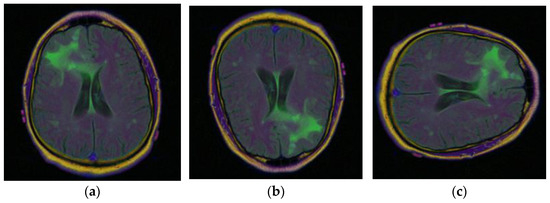

To obtain a larger and more varied set of brain tumor images, data augmentation is applied to the existing images. The data augmentation techniques [31,32,33] applied are vertical flipping and horizontal flipping. Figure 6a displays the original sample of the brain tumor image, Figure 6b displays the vertically flipped image, and Figure 6c displays the horizontally flipped image.

Figure 6.

Samples of Brain MRI Images [31]: (a) Original Image, (b) Vertical Flip, (c) Horizontal Flip.
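The two flipping operations can be illustrated on a small array. The sketch below is a minimal example, assuming that an image is stored as a list of pixel rows and that a vertical flip reverses the row order (top to bottom) while a horizontal flip reverses each row (left to right); the helper names are hypothetical and do not correspond to the library used in the experiments.

```python
def vertical_flip(image):
    """Flip top to bottom by reversing the order of the rows."""
    return [list(row) for row in reversed(image)]


def horizontal_flip(image):
    """Flip left to right by reversing the pixels within each row."""
    return [list(reversed(row)) for row in image]


def augment(image):
    """Return the original image together with its two flipped copies."""
    return [image, vertical_flip(image), horizontal_flip(image)]


# Toy 2 x 3 "image" used only for illustration.
toy = [[1, 2, 3],
       [4, 5, 6]]
print(vertical_flip(toy))    # [[4, 5, 6], [1, 2, 3]]
print(horizontal_flip(toy))  # [[3, 2, 1], [6, 5, 4]]
```

With these two operations, each original image yields up to three training samples.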

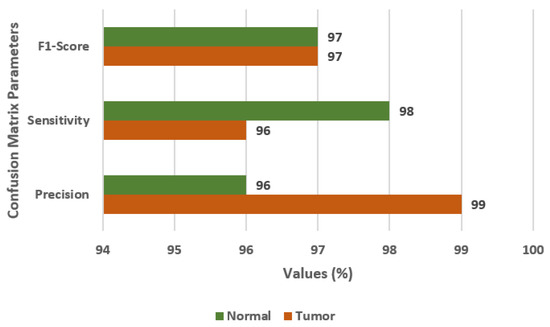

In this section, the results are obtained using the CNN model with augmented images. Figure 7 displays the confusion matrix parameter values for the CNN model with data augmentation. The values of FS, SN, and PR are 97%, 96%, and 99%, respectively. The CNN model with data augmentation outperformed the previous models.

Figure 7.

Confusion matrix parameter values for CNN model with data augmentation.

3.3. Classification Using Ensembling of Three Different Models

The proposed weighted average ensemble model is designed by combining the three feature spaces obtained from the TL model, the CNN model without augmentation, and the CNN model with augmentation. For this, a grid search is performed to find the best combination of weights assigned to the three feature spaces. Weight 1 (wt1) is assigned to the VGG19 TL model, weight 2 (wt2) to the CNN model without augmentation, and weight 3 (wt3) to the CNN model with data augmentation [34,35,36].

Figure 8 illustrates the weighted ensemble of three feature maps extracted from three different models. These weights are further optimized by using a grid search combination to achieve the maximum accuracy value of the ensemble model. Equation (1) shows the formula of the hybrid feature map for the best combination of weights.

$$F_{\text{hybrid}} = F_1 \times wt_1 + F_2 \times wt_2 + F_3 \times wt_3 \qquad (1)$$

where $F_1$ is the VGG19 feature map, $F_2$ is the CNN feature map without augmentation, and $F_3$ is the CNN feature map with augmentation.

Figure 8.

Hybrid feature map generation.

With the help of optimized weights, a hybrid feature map is generated which is further fed to a fully connected layer to determine the classified output.
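Equation (1) amounts to an element-wise weighted sum of the three feature maps. The following minimal sketch illustrates it with flat Python lists, assuming the three feature maps have already been brought to the same length; the function name and the toy values are hypothetical.

```python
def hybrid_feature_map(f1, f2, f3, wt1, wt2, wt3):
    """Element-wise weighted sum of three equally sized feature maps."""
    if not (len(f1) == len(f2) == len(f3)):
        raise ValueError("feature maps must have the same length")
    return [a * wt1 + b * wt2 + c * wt3 for a, b, c in zip(f1, f2, f3)]


# Toy feature vectors used only for illustration.
f_vgg19 = [1.0, 0.0]
f_cnn = [0.5, 0.5]
f_cnn_aug = [0.0, 1.0]

hybrid = hybrid_feature_map(f_vgg19, f_cnn, f_cnn_aug, 0.3, 0.4, 0.4)
print(hybrid)
```

In the proposed model, the resulting hybrid feature map would then be passed to the fully connected layer for classification.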

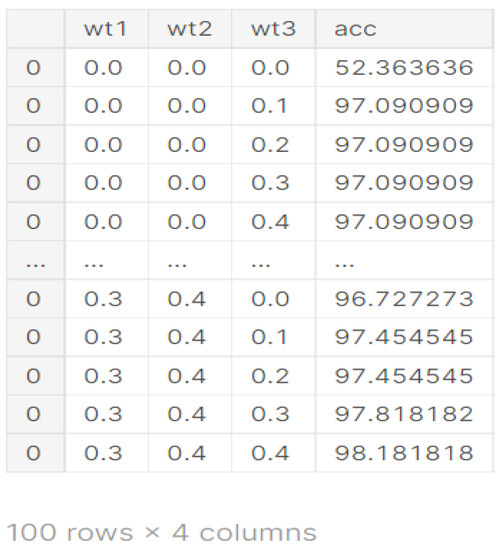

From Figure 9, it can be seen that different values of wt1, wt2, and wt3 yield different accuracies. The best accuracy, 98.18%, is obtained with weights of 0.3, 0.4, and 0.4, as shown in Figure 9.

Figure 9.

Weights obtained from different models.
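The grid search over weight combinations can be sketched as an exhaustive loop that scores each combination on held-out data and keeps the best one. The example below is a simplified illustration, not the implementation used in this work: it averages per-model tumor probabilities rather than feature maps, it normalizes by the sum of the weights, and the weight grid, the 0.5 decision threshold, and the toy predictions are all assumptions.

```python
from itertools import product


def ensemble_accuracy(probs_per_model, weights, labels):
    """Accuracy of the weighted average of per-model tumor probabilities."""
    total = sum(weights)
    correct = 0
    for i, label in enumerate(labels):
        score = sum(w * probs[i] for w, probs in zip(weights, probs_per_model))
        predicted = 1 if score / total >= 0.5 else 0
        correct += int(predicted == label)
    return correct / len(labels)


def grid_search_weights(probs_per_model, labels, grid):
    """Try every weight combination on the grid and return the best one."""
    best_weights, best_acc = None, -1.0
    for weights in product(grid, repeat=len(probs_per_model)):
        if sum(weights) == 0:
            continue  # skip the all-zero combination
        acc = ensemble_accuracy(probs_per_model, weights, labels)
        if acc > best_acc:
            best_weights, best_acc = weights, acc
    return best_weights, best_acc


# Hypothetical validation labels (1 = tumor) and per-model probabilities.
labels = [1, 0, 1, 0, 1, 0]
probs = [
    [0.90, 0.60, 0.40, 0.20, 0.70, 0.10],  # transfer learning model
    [0.60, 0.30, 0.70, 0.60, 0.40, 0.20],  # CNN without augmentation
    [0.80, 0.40, 0.60, 0.30, 0.45, 0.55],  # CNN with augmentation
]
grid = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]

best_weights, best_acc = grid_search_weights(probs, labels, grid)
print(best_weights, best_acc)
```

In this toy example each model alone misclassifies two of the six samples, while a suitable weight combination classifies all of them correctly, which illustrates how the ensemble can compensate for individual errors.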

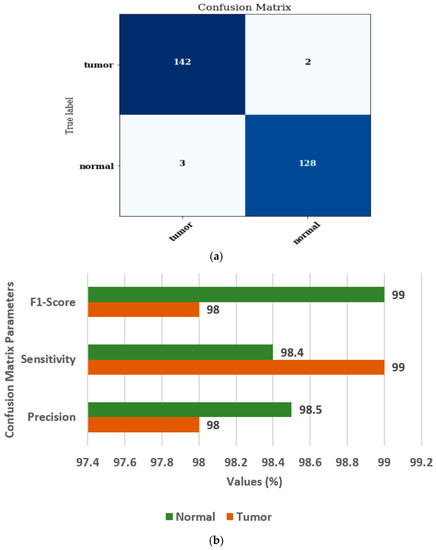

Figure 10 shows the confusion matrix and confusion matrix parameters of the ensemble model [37,38]. Figure 10a displays the confusion matrix for normal and brain tumor classes. Figure 10b displays the values of FS, SN, and PR as 98%, 99%, and 98%, respectively, for the tumor class.

Figure 10.

Ensembled model (a) Confusion matrix, (b) Confusion matrix parameters.

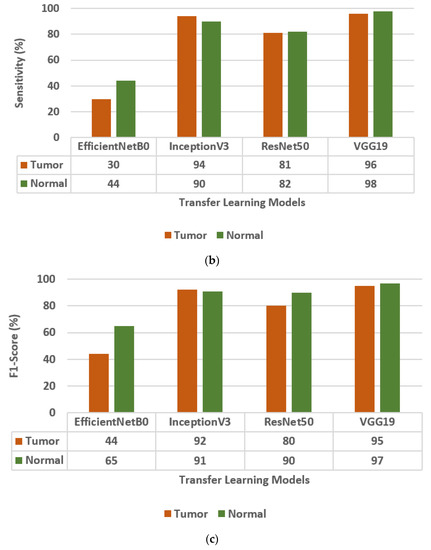

3.4. Comparison of Ensembled Model with Individual Models

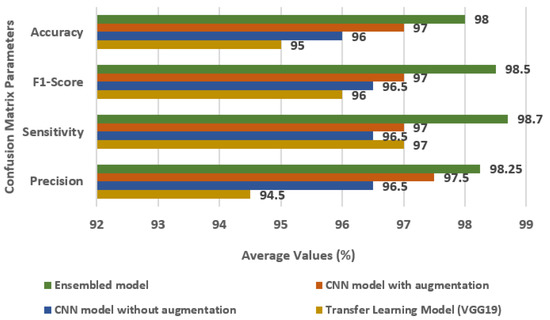

Figure 11 displays the comparison of the Ensembled model with individual models, i.e., transfer learning model, CNN model without augmentation, and CNN model with augmentation in terms of accuracy, FS, SN, and PR. For the ensemble model, the values of accuracy, FS, SN, and PR are 98%, 98.5%, 98.7%, and 98.25%, respectively.

Figure 11.

Comparison of Ensembled model with individual models.

3.5. Comparison of Ensembled Model with State-of-Art

Table 1 provides a summary of different research studies on medical image analysis, along with the number of images used, the technique employed, and the performance parameters achieved by each study.

Raj et al. [14] used a recurrent neural network and achieved an accuracy of 96%, specificity of 98%, and sensitivity of 97%. Poonguzhali et al. [15] used a RCNN and SVM classifier on 20 patient images and achieved a sensitivity of 82% and specificity of 99%. Pandian et al. [16] used convnet, slicenet, and VGNet on 1000 images and achieved an accuracy of 97%. Joshi et al. [17] used a CNN and achieved an accuracy of 79.07%. Gill et al. [9] used VGG19 on 3000 images and achieved an accuracy of 73.0%, precision of 87.0%, sensitivity of 75.0%, and F1-score of 81.0%. Rajinikanth [10] used VGG19 on 1400 images and achieved an accuracy of 98.17%, precision of 98.50%, sensitivity of 98.75%, and specificity of 97%. Khan [11] used VGG16 and VGG19 on various datasets and achieved accuracies ranging from 93.40% to 98.16%. Khan [12] used a CNN on 3216 images and achieved an accuracy of 97.8%. Asiri et al. [13] used VGG19 on 2870 images and achieved an accuracy of 98.0%.

Finally, the proposed model used a weighted average ensemble model on 3929 images and achieved an accuracy of 98.00%, sensitivity of 98.7%, F1-Score of 98.5%, and precision of 98.25%.

Table 1.

Review of State-of-the-Art Methods Compared to the Proposed Model.

| Ref. | Year | Images | Technique Used | Performance Parameters |
|---|---|---|---|---|
| Raj et al. [14] | 2020 | - | Recurrent Neural Network | Accuracy = 96%; Specificity = 98%; Sensitivity = 97% |
| Poonguzhali et al. [15] | 2019 | 20 patients | RCNN and SVM classifier | Sensitivity = 82%; Specificity = 99% |
| Pandian et al. [16] | 2017 | 1000 | Convnet, slicenet, and VGNet | Accuracy = 97% |
| Joshi et al. [17] | 2019 | - | CNN | Accuracy = 79.07% |
| Gill et al. [9] | 2022 | 3000 | VGG19 | Accuracy = 73.0%; Precision = 87.0%; Sensitivity = 75.0%; F1-score = 81.0% |
| Rajinikanth [10] | 2020 | 1400 | VGG19 | Accuracy = 98.17%; Precision = 98.50%; Sensitivity = 98.75%; Specificity = 97% |
| Khan [11] | 2020 | - | VGG16, VGG19 | Accuracy on BraTs2015 = 98.16%; BraTs2017 = 97.26%; BraTs2018 = 93.40% |
| Khan [12] | 2022 | 3216 | CNN | Accuracy = 97.8% |
| Asiri et al. [13] | 2022 | 2870 | VGG19 | Accuracy = 98.0% |
| Proposed model | 2023 | 3929 | Weighted average ensemble model | Accuracy = 98.00%; Sensitivity = 98.7%; F1-Score = 98.5%; Precision = 98.25% |

4. Conclusions

Deep learning models can be sensitive to the random initialization of weights, the choice of hyperparameters, and the randomness in the training data. By combining several models trained on distinct portions of the data with varying hyperparameters, an ensemble model can help reduce this variability. To classify brain tumors from MRI scans, this research offers a weighted average ensemble deep learning model. The presented work has been evaluated on the brain MRI dataset. It performs classification by using a grid search to find the best combination of weights, i.e., weight1, weight2, and weight3, which are assigned to the VGG19 TL model, the CNN model without augmentation, and the CNN model with augmentation, respectively. The proposed ensemble model outperforms the three individual models in terms of accuracy, precision, and F1-score, with values of 98%, 98.25%, and 98.5%, respectively. Accordingly, radiologists can use this model as a second opinion resource when diagnosing brain tumors from MRI images.

A significant limitation of the study is that its findings cannot be generalized to other forms of cancer seen in MRI images. A number of image modalities and segmentation techniques, including the Pyramid Scene Parsing Network (PSPNet), UNet, DeepLab, and Feature Pyramid Network (FPN), can be used in future studies to achieve a good enough approximation of affected brain regions to separate them from healthy ones. A combination of modalities, each with its own approach to image registration, may be required to properly capture the missing image features in the patterns over time and to perform classification. Using ensembles may also allow for greater precision and accuracy.

Author Contributions

Conceptualization, V.A., S.G., D.G., Q.X. and Y.G.; methodology, V.A., S.G., Y.G., Q.X. and S.J.; software, V.A., S.G. and D.G.; validation, S.G., D.G., Y.G. and Q.X.; formal analysis, V.A., S.G. and D.G.; investigation, S.G., D.G. and Y.G.; resources, V.A., S.G. and D.G.; data curation, V.A., S.G., D.G., Y.G. and S.J.; writing – original draft preparation, V.A., S.G. and D.G.; writing – review and editing, V.A., S.G., D.G., Y.G., Q.X., S.J., A.S. (Asadullah Shah) and A.S. (Asadullah Shaikh); visualization, V.A., S.G., D.G. and Y.G.; supervision, Y.G. and S.J.; project administration, Y.G. and Q.X.; funding acquisition, Y.G. and Q.X. All authors have read and agreed to the published version of the manuscript.

Funding

The publication of this work was supported by the Deanship of Scientific Research, Vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Saudi Arabia, under Project GRANT2,792.

Data Availability Statement

Data will be available from the first author on request.

Acknowledgments

The publication of this work was supported by the Deanship of Scientific Research, Vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Saudi Arabia, under Project GRANT2,792. The authors are also thankful to International Islamic University, Malaysia.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ghaffari, M.; Samarasinghe, G.; Jameson, M.; Aly, F.; Holloway, L.; Chlap, P.; Koh, E.S.; Sowmya, A.; Oliver, R. Automated post-operative brain tumour segmentation: A deep learning model based on transfer learning from pre-operative images. Magn. Reson. Imaging 2022, 86, 28–36.

- Ahmadi, A.; Kashefi, M.; Shahrokhi, H.; Nazari, M.A. Computer aided diagnosis system using deep convolutional neural networks for ADHD subtypes. Biomed. Signal Process. Control 2021, 63, 102227.

- Kumar, T.S.; Arun, C.; Ezhumalai, P. An approach for brain tumor detection using optimal feature selection and optimized deep belief network. Biomed. Signal Process. Control 2022, 73, 103440.

- Akter, S.; Das, D.; Haque, R.U.; Tonmoy, M.I.Q.; Hasan, M.R.; Mahjabeen, S.; Ahmed, M. AD-CovNet: An exploratory analysis using a hybrid deep learning model to handle data imbalance, predict fatality, and risk factors in Alzheimer’s patients with COVID-19. Comput. Biol. Med. 2022, 146, 105657.

- Ma, Q.; Zhou, S.; Li, C.; Liu, F.; Liu, Y.; Hou, M.; Zhang, Y. DGRUnit: Dual graph reasoning unit for brain tumor segmentation. Comput. Biol. Med. 2022, 149, 106079.

- Li, Z.; Sun, Y.; Zhang, L.; Tang, J. Ctnet: Context-based tandem network for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 9904–9917.

- Sun, Y.; Li, Z. Ssa: Semantic structure aware inference for weakly pixel wise dense predictions without cost. arXiv 2021, arXiv:2111.03392.

- Ghaffari, M.; Sowmya, A.; Oliver, R. Automated brain tumour segmentation using cascaded 3d densely-connected u-net. In International MICCAI Brainlesion Workshop; Springer: Berlin/Heidelberg, Germany, 2020; pp. 481–491.

- Gill, K.S.; Sharma, A.; Anand, V.; Gupta, R. Brain Tumor Detection using VGG19 model on Adadelta and SGD Optimizer. In Proceedings of the 2022 6th International Conference on Electronics, Communication and Aerospace Technology, Coimbatore, India, 1–3 December 2022; pp. 1407–1412.

- Rajinikanth, V.; Joseph Raj, A.N.; Thanaraj, K.P.; Naik, G.R. A customized VGG19 network with concatenation of deep and handcrafted features for brain tumor detection. Appl. Sci. 2020, 10, 3429.

- Khan, M.A.; Ashraf, I.; Alhaisoni, M.; Damaševičius, R.; Scherer, R.; Rehman, A.; Bukhari, S.A.C. Multimodal brain tumor classification using deep learning and robust feature selection: A machine learning application for radiologists. Diagnostics 2020, 10, 565.

- Khan, M.S.I.; Rahman, A.; Debnath, T.; Karim, M.R.; Nasir, M.K.; Band, S.S.; Mosavi, A.; Dehzangi, I. Accurate brain tumor detection using deep convolutional neural network. Comput. Struct. Biotechnol. J. 2022, 20, 4733–4745.

- Asiri, A.A.; Aamir, M.; Shaf, A.; Ali, T.; Zeeshan, M.; Irfan, M.; Alshamrani, K.A.; Alshamrani, H.A.; Alqahtani, F.F.; Alshehri, A.H. Block-Wise Neural Network for Brain Tumor Identification in Magnetic Resonance Images. Comput. Mater. Contin. 2022, 73, 5735–5753.

- Raj, A.; Anil, A.; Deepa, P.L.; Aravind Sarma, H.; Naveen Chandran, R. BrainNET: A Deep Learning Network for Brain Tumor Detection and Classification. In Advances in Communication Systems and Networks; Springer: Singapore, 2020; pp. 577–589.

- Poonguzhali, N.; Rajendra, K.R.; Mageswari, T.; Pavithra, T. Heterogeneous deep neural network for healthcare using metric learning. In Proceedings of the 2019 IEEE International Conference on System, Computation, Automation and Networking (ICSCAN), Pondicherry, India, 29–30 March 2019.

- Pandian, A.A.; Balasubramanian, R. Fusion of contourlet transform and zernike moments using content based image retrieval for M.R.I. brain tumor images. Indian J. Sci. Technol. 2016, 9, 1–8.

- Joshi, S.R.; Headley, D.B.; Ho, K.C.; Paré, D.; Nair, S.S. Classification of brainwaves using convolutional neural network. In Proceedings of the 2019 27th European Signal Processing Conference (EUSIPCO), A Coruña, Spain, 2–6 September 2019; pp. 1–5.

- Rao, V.; Sharifi, M.; Jaiswal, A. Brain tumor segmentation with deep learning. Multimodal Brain Tumor Segm. Chall. 2015, 59, 56–59.

- Kao, P.Y.; Shailja, S.; Jiang, J.; Zhang, A.; Khan, A.; Chen, J.W.; Manjunath, B.S. Improving Patch-Based Convolutional Neural Networks for MRI Brain Tumor Segmentation by Leveraging Location Information. Front. Neurosci. 2020, 13, 1449.

- Nassar, S.E.; Mohamed, M.A.; Elnakib, A. MRI Brain Tumor Segmentation Using Deep Learning. Mansoura Eng. J. 2021, 45, 45–54.

- Kayalibay, B.; Jensen, G.; Smagt, P. CNN-based Segmentation of Medical Imaging Data. arXiv 2017, arXiv:1701.03056.

- Chen, W.; Liu, B.; Peng, S.; Sun, J.; Qiao, X. S3D-UNet: Separable 3D U-Net for Brain Tumor Segmentation. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries; Crimi, A., Bakas, S., Kuijf, H., Keyvan, F., Reyes, M., van Walsum, T., Eds.; BrainLes 2018, Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; Volume 11384.

- Wang, W.; Chen, C.; Ding, M.; Yu, H.; Zha, S.; Li, J. TransBTS: Multimodal brain tumor segmentation using transformer. In Proceedings of the 24th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2021), Strasbourg, France, 27 September–1 October 2021.

- Liu, P.; Dou, Q.; Wang, Q.; Heng, P.A. An encoder-decoder neural network with 3D squeeze-and-excitation and deep supervision for brain tumor segmentation. IEEE Access 2020, 8, 34029–34037.

- Huang, Z.; Zhao, Y.; Liu, Y.; Song, G. GCAUNet: A group cross-channel attention residual UNet for slice based brain tumor segmentation. Biomed. Signal Process. Control 2021, 70, 102958.

- Soumya, T.R.; Manohar, S.S.; Ganapathy, N.B.S.; Nelson, L.; Mohan, A.; Pandian, M.T. Profile Similarity Recognition in Online Social Network using Machine Learning Approach. In Proceedings of the 2022 4th International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 21–23 September 2022; pp. 805–809.

- Singh, S.; Aggarwal, A.K.; Ramesh, P.; Nelson, L.; Damodharan, P.; Pandian, M.T. COVID-19: Identification of Masked Face using CNN Architecture. In Proceedings of the 2022 3rd International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 17–19 August 2022; pp. 1045–1051.

- Buda, M.; Saha, A.; Mazurowski, M.A. Association of genomic subtypes of lower-grade gliomas with shape features automatically extracted by a deep learning algorithm. Comput. Biol. Med. 2019, 109, 218–225.

- Mazurowski, M.A.; Clark, K.; Czarnek, N.M.; Shamsesfandabadi, P.; Peters, K.B.; Saha, A. Radiogenomics of lower-grade glioma: Algorithmically-assessed tumor shape is associated with tumor genomic subtypes and patient outcomes in a multi-institutional study with The Cancer Genome Atlas data. J. Neurooncol. 2017, 133, 27–35.

- Anand, V.; Gupta, S.; Nayak, S.R.; Koundal, D.; Prakash, D.; Verma, K.D. An automated deep learning models for classification of skin disease using Dermoscopy images: A comprehensive study. Multimed. Tools Appl. 2022, 81, 37379–37401.

- Anand, V.; Gupta, S.; Koundal, D.; Singh, K. Fusion of U-Net and CNN model for segmentation and classification of skin lesion from dermoscopy images. Expert Syst. Appl. 2023, 213, 119230.

- Gulzar, Y.; Khan, S.A. Skin Lesion Segmentation Based on Vision Transformers and Convolutional Neural Networks: A Comparative Study. Appl. Sci. 2022, 12, 5990.

- Khan, S.A.; Gulzar, Y.; Turaev, S.; Peng, Y.S. A modified HSIFT Descriptor for medical image classification of anatomy objects. Symmetry 2021, 13, 1987.

- Aggarwal, S.; Gupta, S.; Kannan, R.; Ahuja, R.; Gupta, D.; Juneja, S.; Belhaouari, S.B. A convolutional neural network-based framework for classification of protein localization using confocal microscopy images. IEEE Access 2022, 10, 83591–83611.

- Sharma, S.; Gupta, S.; Gupta, D.; Rashid, J.; Juneja, S.; Kim, J.; Elarabawy, M.M. Performance evaluation of the deep learning based convolutional neural network approach for the recognition of chest X-ray images. Front. Oncol. 2022, 12, 932496.

- Sharma, S.; Gupta, S.; Gupta, D.; Juneja, S.; Mahmoud, A.; El-Sappagh, S.; Kwak, K.S. Transfer learning-based modified inception model for the diagnosis of Alzheimer’s disease. Front. Comput. Neurosci. 2022, 16, 1000435.

- Aggarwal, S.; Juneja, S.; Rashid, J.; Gupta, D.; Gupta, S.; Kim, J. Protein Subcellular Localization Prediction by Concatenation of Convolutional Blocks for Deep Features Extraction from Microscopic Images. IEEE Access 2022, 11, 1057–1073.

- Aggarwal, S.; Gupta, S.; Gupta, D.; Gulzar, Y.; Juneja, S.; Alwan, A.A.; Nauman, A. An Artificial Intelligence-Based Stacked Ensemble Approach for Prediction of Protein Subcellular Localization in Confocal Microscopy Images. Sustainability 2023, 15, 1695.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).