Abstract

(1) Background: Three-dimensional (3D) facial anatomical landmarks are the premise and foundation of facial morphology analysis. At present, there is no ideal automatic determination method for 3D facial anatomical landmarks. This research aims to realize the automatic determination of 3D facial anatomical landmarks based on the non-rigid registration algorithm developed by our research team and to evaluate its landmark localization accuracy. (2) Methods: A 3D facial scanner, Face Scan, was used to collect 3D facial data of 20 adult males without significant facial deformities. Using the radial basis function optimized non-rigid registration algorithm, TH-OCR, developed by our research team (experimental group: TH group) and the non-rigid registration algorithm, MeshMonk (control group: MM group), a 3D face template constructed in our previous research was deformed and registered to each participant’s data. The automatic determination of 3D facial anatomical landmarks was realized according to the index of 32 facial anatomical landmarks determined on the 3D face template. Considering these 32 facial anatomical landmarks manually selected by experts on the 3D facial data as the gold standard, the distance between the automatically determined and the corresponding manually selected facial anatomical landmarks was calculated as the “landmark localization error” to evaluate the effect and feasibility of the automatic determination method (template method). (3) Results: The mean landmark localization error of all facial anatomical landmarks in the TH and MM groups was 2.34 ± 1.76 mm and 2.16 ± 1.97 mm, respectively. The automatic determination of the anatomical landmarks in the middle face was better than that in the upper and lower face in both groups. Further, the automatic determination of anatomical landmarks in the center of the face was better than in the marginal part. (4) Conclusions: In this study, the automatic determination of 3D facial anatomical landmarks was realized based on non-rigid registration algorithms. There is no significant difference in the automatic landmark localization accuracy between the TH-OCR algorithm and the MeshMonk algorithm, and both can meet the needs of oral clinical applications to a certain extent.

1. Introduction

The anatomical and morphological features of the human face are often considered facial anatomical landmarks during the diagnosis and treatment of oral craniomaxillofacial diseases [1]. The clinical diagnosis, treatment planning, and evaluation of treatment outcomes for oral and maxillofacial surgery, orthodontic, and prosthodontic patients are often based on the morphological analysis of facial anatomical landmarks [2,3,4,5,6,7,8,9,10,11]. The traditional methods mostly employ anatomical landmarks on the two-dimensional (2D) image of the patient’s face for measurement. There are also reports of directly marking the anatomical landmarks on the patient’s face for measurement [12]. However, 2D image measurement lacks the depth information of 3D facial morphology, and directly marking landmarks on the face has the potential risk of facial trauma. With the development of three-dimensional (3D) optical non-invasive scanning technology, the application of facial 3D morphological data has increasingly gained clinical attention [13]. Automatic, accurate, and rapid identification of facial anatomical landmarks based on 3D facial data is currently a hot topic of research.

The existing automatic algorithms to determine anatomical landmarks using 3D facial data mainly include geometric feature algorithms [14,15,16,17,18] and artificial intelligence algorithms [19,20,21,22,23,24]. Geometric feature algorithms help determine facial anatomical landmarks by analyzing the changes in facial curvature characteristics and combining the prior knowledge of facial geometrical morphology. This method is suitable when there are significant changes in facial morphological features, and therefore, the number of anatomical landmarks that can be automatically determined is limited. The artificial intelligence algorithm uses training set data for deep learning to automatically determine facial anatomical landmarks. Based on the training set data with different annotation information, the corresponding number of anatomical landmarks can be automatically determined. The number and location of anatomical landmarks that can be determined by each algorithm model lack flexibility, and improvements are necessary for clinical universality.

Our research team had previously reported a method to automatically determine facial anatomical landmarks by combining 3D face templates and the non-rigid registration algorithm MeshMonk [25] (referred to as “template method”). We preliminarily tested the effect and feasibility of the “template method” using the 3D facial data of five individuals with no significant facial deformity and that of five with mild mandibular deviation [26]. Compared with geometric feature algorithms and artificial intelligence algorithms, the number of 3D facial anatomical landmarks that can be automatically determined by the template method is not limited by facial anatomical features and has good flexibility. Therefore, the template method has good application potential and clinical suitability in an oral clinic. However, in our previous study, we did not conduct an in-depth evaluation of the landmark localization accuracy of the template method.

Therefore, our research objectives are as follows: (1) Based on the non-rigid registration algorithm (TH-OCR) developed by our research team, realize automatic determination of 3D facial anatomical landmarks; (2) compare and analyze the automatic landmark localization accuracy of TH-OCR and MeshMonk, and provide corresponding reference for the application of the template method in an oral clinic.

2. Materials and Methods

2.1. Subjects

Twenty adult males who came to Peking University School and Hospital of Stomatology were recruited. The inclusion criteria were as follows: (1) The facial morphology was normal without obvious facial deformities. (2) No facial defects, traumas, no obvious facial asymmetry. The exclusion criteria were as follows: (1) After oral clinical diagnosis, suffering from facial deformities, traumas, defects, etc. (2) Patients who do not accept or are not comfortable with optical scanning of the face. This study was approved by the Bioethics Committee of Peking University Hospital of Stomatology (PKUSSIRB-202164079). The purpose and procedures of this study were fully explained to all subjects, and written informed consent was obtained before participation.

2.2. Experimental Equipment and Software

Face Scan (3D-Shape Corp, Erlangen, Germany), a 3D optical sensor, was used to collect the 3D facial data of the participants, using the following parameters: scanning speed, 0.8 s; scanning accuracy, 0.2 mm; scanning angle, 270°–320°; the imaging principle was raster scanning using 5 million charge-coupled device pixels, with approximately 10,000 data points and 20,000 triangular meshes.

The reverse engineering software Geomagic Studio 2013 (3D Systems, Morrisville, NC, USA) was used to preprocess each participant’s 3D facial data and manually select the anatomical landmarks. The Procrustes analysis (PA) algorithm in MATLAB R2019b (MathWorks, Natick, MA, USA) was used to calculate the scaling factor of the 3D face template. Meshlab 2020 (Open source, Tuscany, Italy) was used for data preparation before applying the non-rigid registration procedures. The non-rigid registration algorithm MeshMonk was used for deformation registration between the 3D face template of the control group (MM group) and the 3D facial data of the participants. The non-rigid registration algorithm TH-OCR developed by our research team was used for deformation registration between the 3D face template of the experimental group (TH group) and 3D facial data of the participants.

The algorithm MeshMonk runs in MATLAB R2019b, and TH-OCR runs in python 3.8. The hardware configuration for the algorithm to run was Intel Xeon Silver 4210R, 2.40 GHz GPU, and 192 GB of RAM.

2.3. Three-Dimensional Facial Data Collection and Processing

Face Scan was used to collect 3D facial data of adult males without significant facial deformities. Instrument calibration was performed before scanning to ensure accurate imaging. In accordance with the investigators’ instructions, the participants sat 135 cm in front of the instrument with a natural head position while looking straight ahead with both eyes and maintaining the Frankfort plane parallel to the ground. The participants’ face was completely exposed up to the hairline and until the ears on the left and right, without glasses or hair covering the face. Their facial expressions were naturally relaxed. After scanning, the 3D facial data was saved in the OBJ format.

In the reverse engineering software Geomagic Studio 2013, the 3D facial data of the participants were processed. Redundant data were deleted, and the range of retained data included that up to the hairline, left and right to the tragus, bypassing the mandibular angle, and along the mandible to the submental chin, with repair of the defect area on the facial margin. The spatial pose of the 3D facial data was adjusted in the software to ensure that the mid-sagittal plane was parallel to the YZ plane to achieve a natural head position. The data were saved as OBJ files (FaceModel_Patient).

A single operator with clinical experience, who was also skilled in the operation of Geomagic Studio 2013 software, selected 32 facial anatomical landmarks routinely used in dental clinics, including the trichion, glabella, and pronasale, on the 3D facial data of each participant. These included 10 midline and 22 bilateral points. The number, name, and abbreviation of these anatomical landmarks are presented in Table 1. Three consecutive selections were made, and the average coordinate value of the landmarks was considered the reference value for manual selection (Point_Ref).

Table 1.

Number, name, abbreviation, and index of facial anatomical landmarks used in this study.

2.4. Determination of 3D Facial Anatomical Landmarks

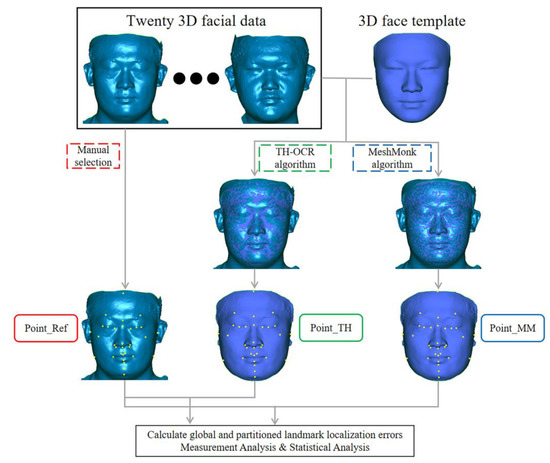

A flow chart depicting the experiment method is shown in Figure 1.

Figure 1.

Flow chart depicting the experiment method.

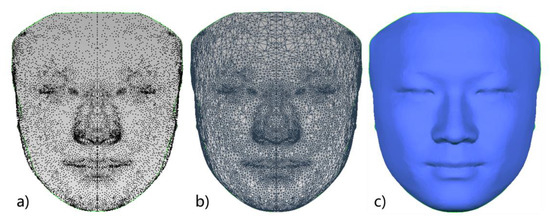

In a previous study, our research team had constructed a 3D face template (FaceModel_Mask) based on the average 3D facial data of 30 Chinese adult males with good facial symmetry, as shown in Figure 2. This 3D face template has 19,534 triangular faces and 9856 vertices, of which 216 vertices are on the midline of the face, and the X coordinate of all midline points is zero. There are 4820 vertices on the left and right sides, and the vertices on both sides are symmetric based on the midline of the face and have a one-to-one correspondence [26]. Compared with the 3D face templates constructed in previous related studies [25,27,28], the 3D face template in this study has the Chinese 3D facial anatomical features. Its data range covers the whole face, including the positions of facial anatomical landmarks commonly used in oral clinical practice, which provides the necessary data basis for this study. Thirty-two facial anatomical landmarks shown in Table 1 were selected from the 9856 vertices of the 3D face template, as shown in Figure 3. The vertex indices were recorded, as shown in Table 1.

Figure 2.

Three-dimensional face template: (a) 3D face template in point cloud form; (b) 3D face template in triangular mesh form; (c) 3D face template in smooth surface form.

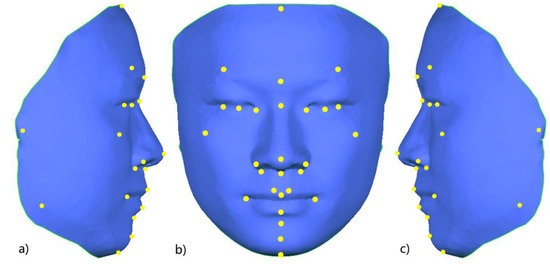

Figure 3.

Schematic diagram of 32 facial anatomical landmarks on the 3D face template; (a) right lateral view; (b) frontal view; (c) left lateral view.

Based on the above-mentioned 3D face template and the open-source non-rigid registration algorithm (MeshMonk), we proposed a “template method” to automatically determine the 3D facial anatomical landmarks. The principle of the template method was as follows: MeshMonk was used to deform and register the 3D face template to the target 3D facial data. The vertex indexes before and after the deformation of the 3D face template remained unchanged. Therefore, based on the recorded vertex indexes, the 3D coordinates of the corresponding vertices on the deformed 3D face template can be automatically obtained. In this way, the effect of automatically determining the anatomical landmarks of the target 3D facial data was realized.

In this study, based on the process of the template method, the non-rigid registration algorithm (TH-OCR) developed by our research team was used to realize the automatic determination of the 3D facial anatomical landmarks. Taking TH-OCR as the experimental group and MeshMonk as the control group, the automatic landmark localization accuracy of the two algorithms was analyzed and evaluated. The automatic landmark localization steps of the TH-OCR algorithm were as follows (the data of one participant were used to illustrate the process):

Step 1: Using the Meshlab 2020 software, a total of 8 facial anatomical landmarks, including the bilateral tragion, endocanthion, pronasale, cheilion, and gnathion, were selected on the 3D face template (FaceModel_Mask). The 3D coordinate data of the landmarks was set as .pp and .csv files. Similarly, the above 8 anatomical landmarks were selected on the participant’s 3D facial data (FaceModel_Patient), and the 3D coordinate data of the landmarks was again saved as .pp and .csv files. The above 8 facial anatomical landmarks were used for the initialization process of non-rigid registration.

Step 2: The landmark set coordinate data (.csv format) of FaceModel_Mask and FaceModel_Patient was imported in MATLAB R2019b software. The Procrustes analysis algorithm was used to calculate the overall scaling factor for FaceModel_Mask and to save the scaling factor in .txt format.

Step 3: The FaceModel_Patient, FaceModel_Mask, their landmark set data (.pp format), and the overall scaling factor of FaceModel_Mask were imported into the TH-OCR algorithm. The algorithm first performed rigid registration based on the 8 landmarks of the two models and unified FaceModel_Patient and FaceModel_Mask to the same spatial scale based on the overall scaling factor of FaceModel_Mask. Then, based on the radial basis function, according to the corresponding relationship between the two sets of landmark data, FaceModel_Mask was elastically deformed, and the 3D shape of FaceModel_Mask was initially close to that of FaceModel_Patient. Then, based on the non-rigid ICP algorithm, FaceModel_Mask was registered to FaceModel_Patient, so that it was further approximated to the 3D shape of FaceModel_Patient. Based on the pre-determined 32 anatomical landmark indexes on FaceModel_Mask, the coordinates of these anatomical landmarks on the deformed FaceModel_Mask were obtained. This resulted in the automatic determination of 3D facial anatomical landmarks by the TH-OCR algorithm.

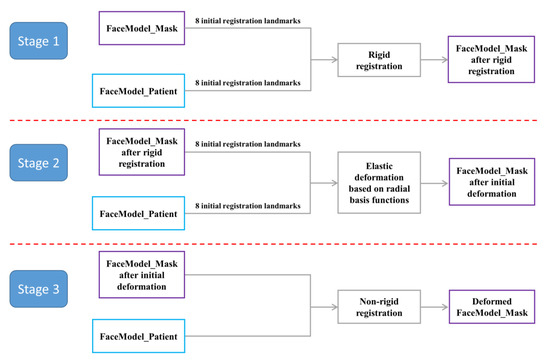

The algorithm flow in step 3 is shown in Figure 4. Part of the function formula involved in step 3 was as follows:

Figure 4.

Flow chart of the TH-OCR algorithm.

Rigid registration part:

where is the set of landmarks of FaceModel_Patient; is the set of landmarks of FaceModel_Mask; stands for rotation matrix; stands for translation vector; is the number of landmarks. Rigid registration of FaceModel_Patient and FaceModel_Mask was realized based on this function formula.

Preliminary elastic deformation based on radial basis function was as follows:

where = (xi,yi,zi) is the ith vertex of FaceModel_Mask; is the ith vertex of FaceModel_Mask after the initial elastic deformation; is the number of vertices of FaceModel_Mask; is the deformation parameter; is the affine transformation parameter; is the radial basis function, where is a positive parameter. The preliminary elastic deformation of FaceModel_Mask was realized based on the correspondence between the landmark sets of FaceModel_Patient and FaceModel_Mask and this function formula.

Non-rigid registration part. Objective function for the non-rigid registration procedure was as follows:

where is the data term error; is the smoothing term error; and is the feature point registration error; is the weighting parameter.

was defined as follows:

where represents the affine transformation matrix; is the closest vertex on FaceModel_Patient to ; is the weight. If a corresponding point for could not be found on FaceModel_Patient, was set to 0, otherwise it was set to 1. takes the 2 norm.

was defined as follows:

where is the line segment connecting and ; is the set of all edges in the FaceModel_Mask after the initial elastic deformation.

was defined as follows:

where stands for landmarks.

The process of non-rigid registration was as follows:

- Initialize Xi0(i = 1, …, N), k = 0;

- Based on a fixed set of parameters (in this study, α = 1, the iteration parameters of β were: 20, 10, 10, 10, 5, 1, and the iteration parameters of γ were: 100, 100, 20, 5, 0.05, 0.005),

- ①.

- For each vertex k on FaceModel_Mask, find the closest vertex on FaceModel_Patient as the corresponding point k;

- ②.

- Calculate Xik (i = 1, …, N) to minimize error E;

- ③.

- Update FaceModel_Mask, the new FaceModel_Mask = {k+1|i = 1, …, N} = {Xikk| i = 1, …, N};

- ④.

- Repeat ①–③ until ;

- Repeat step 2 after changing the parameters. Output the deformed FaceModel_Mask.

The above operation was repeated, and the TH-OCR algorithm was used to complete the determination of anatomical landmarks on the 3D facial data of the 20 participants. The coordinate values of 32 anatomical landmarks for each participant’s data were recorded as the experimental group of this study (Point_TH).

Based on the above data, the non-rigid registration algorithm MeshMonk was used in the control group to achieve the registration of FaceModel_Patient and FaceModel_Mask. The determination of anatomical landmarks using the 3D facial data of 20 participants was completed, and the coordinate values of 32 anatomical landmarks for each participant’s data were recorded as the control group (Point_MM).

2.5. Measurement Analysis

For the 3D facial data of 20 adult males without significant facial deformities included in this study, 32 facial anatomical landmarks manually determined by experts were used as reference values (Point_Ref). The Euclidean distance between the coordinate values of the 32 facial anatomical landmarks determined in the Point_TH group, Point_MM group, and Point_Ref group were calculated for each participant and defined as the “landmark localization error”. The mean and standard deviation of the landmark localization error of the 32 facial anatomical landmarks in the Point_TH group and the Point_MM group were calculated (average of 20 patients’ eponymous landmarks). A quantitative analysis of the effect of the two algorithms in determining facial anatomical landmarks was performed.

The face is divided into three parts based on the horizontal plane passing through the eyebrow point and the nasal base point: the upper face, middle face, and lower face. There are 4 anatomical landmarks in the upper face, 17 in the middle face, and 11 in the lower face. The mean and standard deviation of the landmark localization error of the anatomical landmarks in the three face regions was calculated for each participant. In addition, the facial area comprising the trichion, bilateral tragion, bilateral gonion, and gnathion was defined as the facial marginal area in this study, and the area comprising the remaining 26 landmarks was defined as the facial central area. The mean and standard deviation of the landmark localization error of the anatomical landmarks in the marginal and central areas of the face was calculated to investigate the effect of two algorithms on the determination of anatomical landmarks in different regions of the face.

2.6. Statistical Analysis

To investigate the consistency and reproducibility of manually selected facial anatomical landmarks by the same operator, the intra-class coefficient (ICC) was calculated.

Using SPSS 21.0 software, the S-W normality test was performed on the mean values of the landmark localization error of the 32 facial anatomical landmarks in the Point_TH and Point_MM groups. The Friedman rank sum test was used to make statistical inferences on the effect of determination of facial anatomical landmarks by the two algorithms. The test level α of 0.05 indicated a statistically significant difference. We analyzed whether there was statistical difference between the two methods.

3. Results

The ICC of the facial anatomical landmarks manually selected by the same operator were all >0.95 (0.98–1.00), demonstrating high intra-operator reproducibility.

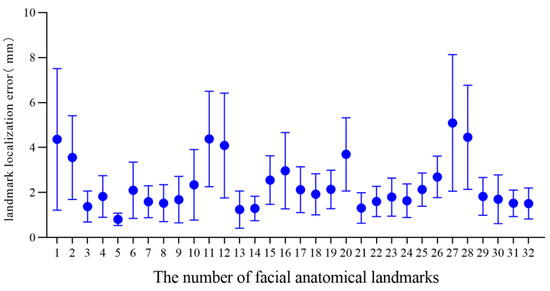

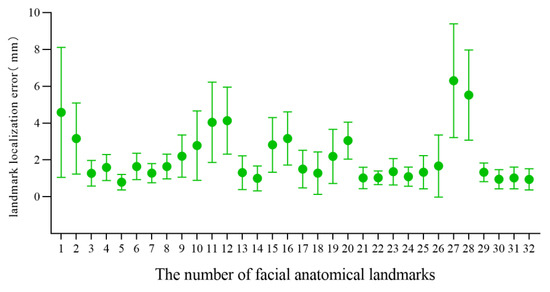

For the 3D facial data of 20 individuals without significant facial deformity in this study, the mean and standard deviation of the landmark localization error of the 32 facial anatomical landmarks in the TH and MM groups was calculated, as shown in Table 2 and Figure 5 and Figure 6. The average landmark localization error of the 32 landmarks in the Point_TH group was 2.34 ± 1.76 mm; the error for the subnasale was the lowest (0.81 ± 0.27 mm) and that for the right gonion was the greatest (5.10 ± 3.04 mm). The landmark localization error was less than 2 mm for 53.1% landmarks and less than 4 mm for 84.4%. The average landmark localization error of the 32 landmarks in the Point_MM group was 2.16 ± 1.97 mm; the error for the subnasale was the lowest (0.80 ± 0.42 mm) and that for the right gonion was the greatest (6.30 ± 3.09 mm). The landmark localization error was less than 2 mm for 62.5% landmarks and less than 4 mm for 84.4%.

Table 2.

Landmark localization errors for the 3D facial anatomical landmarks (mm).

Figure 5.

Landmark localization error for each facial anatomical landmark in the TH group.

Figure 6.

Landmark localization error for each facial anatomical landmark in the MM group.

Statistical analysis showed that the S-W normality test p-values in the Point_TH and Point_MM group were all less than 0.05, which did not obey the normal distribution. The Friedman rank sum test results showed that there was no significant difference in the landmark localization error of the two algorithms for determining facial anatomical landmarks (p > 0.05).

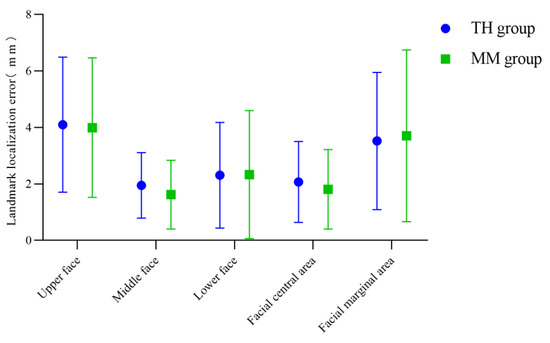

The mean and standard deviation of the landmark localization error of the anatomical landmarks in each facial region of the Point_TH and Point_MM group were calculated, as shown in Table 3 and Figure 7. According to the measurement results, both algorithms are more effective in determining facial anatomical landmarks in the middle face than in the upper and lower face, and the determination of landmarks in the central area of the face was better than that in the marginal area. The determination of landmarks in the central area of the face was slightly better in the Point_MM group than in the Point_TH group, and that in the marginal area was slightly better in the Point_TH group.

Table 3.

Landmark localization error for the 3D facial anatomical landmarks based on facial area (mm).

Figure 7.

Landmark localization errors in the TH and MM groups based on facial area.

4. Discussion

4.1. Related Studies on Automatic Determination of 3D Facial Anatomical Landmarks

Facial anatomical landmarks play an important role in oral clinical diagnosis and treatment, including preoperative disease diagnosis, treatment planning, or postoperative evaluation of treatment outcomes. Therefore, determination of facial anatomical landmarks has always been a subject of interest. With advances in medical technology, the facial data of oral clinical patients has gradually transitioned from 2D images to 3D digital models, and the determination of facial anatomical landmarks has gradually shifted from 2D to 3D. The traditional method to determine facial anatomical landmarks based on 3D facial data mainly involves manual selection, which requires effort and lacks adequate repeatability and consistency [29,30].

In recent years, algorithm-based methods for determining facial anatomical landmarks have been reported. These algorithms can be broadly classified as geometric feature algorithms and artificial intelligence algorithms, as shown in Table 4. In 2009, Sun et al. [14] proposed a 3D facial landmark determination method based on Shape Index features and geometric constraints, which can automatically determine five facial anatomical landmarks, including the endocanthion and pronasale. The average localization accuracy of this method for facial landmarks is higher than 90%. In 2017, Liang et al. [15] proposed a 3D facial landmark determination method involving HK curvature analysis combined with prior knowledge of facial geometry, which can determine eight facial anatomical landmarks, including the pronasale and cheilion. The method was tested on 3D facial data of neutral expressions, and the average landmark localization error was 4.17 ± 2.53 mm. In 2019, Arpah et al. [17] described the automatic determination of 10 facial anatomical landmarks in the nasolabial region, including the pronasale and cheilion, based on the geometric feature information of 3D facial data, and the average landmark localization error was 2.23 mm. This method is mainly suitable for obvious facial features. The determination of anatomical landmarks, such as the pronasale and cheilion, is good, but that of insignificant facial features is not ideal. A limited number of facial anatomical landmarks can be determined using these methods.

Table 4.

Results of related studies on the automatic determination of 3D facial anatomical landmarks.

In 2017, Gilani et al. [21] proposed a method to determine facial landmarks based on a Deep landmark identification network. It helped realize the automatic determination of 11 3D facial anatomical landmarks. The FRGC v2.0 face database was used for testing, and the average landmark localization error was 3.0 mm. In 2018, Wang et al. [22] proposed a coarse-to-fine approach to automatically locate the facial landmarks using deep feature fusion on 3D facial geometry data, with an average landmark localization error of 3.96 ± 2.55 mm in determining 14 facial landmarks according to the BU3DFE dataset. In 2019, Wang [19] proposed a method for determining facial landmarks based on denoising auto-encoder networks. This method can determine 22 landmarks on the face, with an average landmark localization error of 3.71 mm. The above-mentioned automatic determination method of facial anatomical landmarks based on an artificial intelligence algorithm is mainly realized through data training. For the training set, face data with a certain amount of anatomical landmark information are manually selected, and the intelligent algorithm trained using it can output the same landmark information as the training set. The number of facial anatomical landmarks that can be determined by such methods is not limited by the 3D morphological features of the face. However, an intelligent algorithm trained by the training set data of a single number of landmarks has limited flexibility. It is mainly reflected in the following: it is impossible to determine the landmarks that were not included during training, and the number of landmarks output by the algorithm is fixed.

4.2. Evaluation and Analysis of Automatic Landmark Localization Accuracy of the Template Method

MeshMonk is a non-rigid registration algorithm reported in 2019 [25]. This algorithm can gradually deform and register the 3D face template to the target 3D facial data and make them as similar as possible in 3D shape and spatial position. The number and index of vertices of the 3D face template before and after deformation remain unchanged, but the 3D coordinates of the vertices are changed. Claes P’s research team has used MeshMonk for 3D morphological analysis of the face and disease diagnosis analysis in related research, with an initial attempt to automatically determine 19 anatomical landmarks of the 3D facial data [31,32,33,34]. In this study, a radial basis function optimized non-rigid registration algorithm (TH-OCR) was developed by our research team. The TH-OCR algorithm can deform and register the 3D face template onto the 3D facial data of the subject, and the RMS value of the 3D deviation between the registered template and 3D facial data of the subject was less than 0.2 mm.

In this study, TH-OCR and MeshMonk were used to automatically determine 32 facial anatomical landmarks for 20 samples of three-dimensional facial data without significant facial deformities. The measurement results show that the automatic landmark localization accuracy of TH-OCR and MeshMonk were 2.34 ± 1.76 mm and 2.16 ± 1.97 mm, respectively. The stability of automatic landmark localization accuracy of TH-OCR was slightly better, while the average landmark localization accuracy of MeshMonk was slightly higher. The statistical results showed that there was no statistically significant difference between the automatic landmark localization accuracy of TH-OCR and MeshMonk. By evaluating the automatic landmark localization accuracy of the two algorithms in each area of the face, it was found that the performance of the landmark localization accuracy of the two algorithms was consistent. The landmark localization error in the middle face was the smallest (1.95 ± 1.16 mm; 1.62 ± 1.22 mm), and the landmark localization error in the upper face was the largest (4.10 ± 2.39 mm; 3.99 ± 2.47 mm). As can be seen from Figure 5 and Figure 6, the mean and standard deviation of the landmark localization errors in the TH and MM groups were lower in the nasolabial region. The “template method” is suggested to have good accuracy and robustness in determining the landmarks of significant facial anatomical features (curvature changes significantly), indicating good clinical feasibility. Relevant studies have shown that a landmark localization error within 2 mm is the limit that an operator can achieve [23,35]. For the 32 facial anatomical landmarks in this study, there were 17 and 20 landmarks in the TH and MM groups, respectively, and the landmark localization error was less than 2 mm, which indicated that the “template method” could be applicable in an oral clinic to a certain extent.

Compared with the above-mentioned geometric feature algorithms and artificial intelligence algorithms, the template method has the following advantages: ① According to different needs, the index of the facial anatomical landmarks to be determined can be flexibly recorded on the 3D face template, and the corresponding anatomical landmarks of the target 3D facial data can be determined. Therefore, the number of landmarks that can be automatically determined by the template method is not limited, and the flexibility is good; ② The template method does not require algorithm training and has lower requirements for data and operating environment. Its automatic landmark localization has high efficiency and good accuracy. Therefore, the template method may possibly be applied and popularized in oral clinics.

4.3. Advantages and Disadvantages of the TH-OCR Algorithm

The “template method” can help to automatically determine 3D facial anatomical landmarks, including two key parts: 3D face template and non-rigid registration algorithm. In this study, the same 3D face template was used to test the effects of the TH-OCR algorithm developed by our research team and the MeshMonk algorithm to determine 3D facial anatomical landmarks. The measurement results showed that the mean and standard deviation of the landmark localization errors in the TH group (3.52 ± 2.43 mm) were less than those in the MM group (3.70 ± 3.04 mm) when determining landmarks in the facial marginal area (Table 3). This may be because the radial basis function in the TH-OCR algorithm optimizes the non-rigid ICP algorithm. Before deforming and registering the 3D face template to the 3D face data of the individual, the TH-OCR algorithm of our research team preliminarily deforms the 3D face template based on the radial basis function according to the eight manually selected landmarks. The 3D face template is preliminarily close to the 3D shape of the subject’s 3D facial data to improve the speed and accuracy of the subsequent non-rigid ICP algorithm in identifying the corresponding points between the 3D face template and the subject’s 3D facial data. When deforming and registering the 3D face template to the 3D face data of the subject, the TH-OCR algorithm is mainly based on the non-rigid ICP algorithm. In the early stage of deformation registration, the manually selected eight landmarks guide the deformation of the 3D face template. Later, the TH-OCR algorithm controls the data term error, smoothing term error, and feature point registration error of deformation registration by adjusting the weighting parameters of the objective function terms (the constraints on feature point registration errors are from strong to weak, and the constraints on data term errors and smoothing term errors are from weak to strong), and finally completes the deformation registration of the 3D face template. The addition of radial basis functions and the preliminary guidance of manually selected marginal landmarks (bilateral tragion and gnathion) to the deformation of the 3D face template improve the deformation registration effect of the TH-OCR algorithm on the marginal area of the 3D face template to a certain extent. Therefore, the TH-OCR algorithm is slightly superior to the MeshMonk algorithm in determining the landmarks in the facial marginal area. However, the measurement results of this study also showed that when determining the 17 landmarks in the middle area and 26 landmarks in the central area of the face, the mean landmark localization errors in the MM group were 1.62 mm and 1.81 mm, respectively, which were both better than those of the TH group. This reflects that the accuracy of the MeshMonk algorithm in determining the landmarks in the central area of the face is slightly higher than that of the TH-OCR algorithm. The optimization and improvement of the TH-OCR algorithm in this regard requires further research.

4.4. Limitations

The “template method” used in this study also has certain limitations in determining facial anatomical landmarks. First, the determination of anatomical landmarks on the edge of the face needs to be improved. Second, this study only evaluated the landmark localization accuracy of the template method on 3D facial data without significant facial deformities. The application effect of the template method on the 3D facial data with different facial deformities needs to be studied further.

5. Conclusions

In this study, based on the TH-OCR algorithm developed by our research team, the automatic determination of 3D facial anatomical landmarks was completed. For 3D facial data without significant facial deformities, there was no significant difference between the landmark localization accuracy (2.34 ± 1.76 mm) of the TH-OCR algorithm and the landmark localization accuracy (2.16 ± 1.97 mm) of the MeshMonk algorithm, which can meet the application requirements of an oral clinic to a certain extent. However, the landmark localization accuracy in the facial upper and marginal area of the template method was slightly poor, and it is necessary to optimize the 3D face template and non-rigid registration algorithm. The applicability of the “template method” to patients with different facial deformities needs further study.

Author Contributions

Conceptualization, A.W., Y.W., S.W. and Y.Z. (Yijiao Zhao); methodology, A.W.; software, S.W.; validation, resources and data curation, A.W., Y.Z. (Yujia Zhu), N.X., Z.G. and Y.Z. (Yun Zhang); formal analysis, A.W.; writing—original draft preparation, A.W.; writing—review and editing, Y.W., S.W. and Y.Z. (Yijiao Zhao); supervision and funding acquisition, Y.W. and Y.Z. (Yijiao Zhao). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (82071171, 81870815), Key R&D Program of Gansu (21YF5FA165), and Open Subject Foundation of Peking University School and Hospital of Stomatology (PKUSS20210202).

Institutional Review Board Statement

The current study was conducted in accordance with the Declaration of Helsinki and approved by the Biomedical Ethics Committee of Peking University School and Hospital of Stomatology (No: PKUSSIRB-202164079).

Informed Consent Statement

Written informed consent was obtained from all participants included in the study.

Data Availability Statement

The datasets used and analyzed during the current study are available from the corresponding author on reasonable request.

Acknowledgments

We thank the Peking University School and Hospital of Stomatology for providing 3D facial data. We also thank Lei for his help in algorithm debugging.

Conflicts of Interest

The authors declare that they have no competing interest.

Abbreviations

3D: three-dimensional; 2D: two-dimensional; ICP: iterative closest point; PA: Procrustes analysis.

References

- Hicks, J.L. Important landmarks of the orofacial complex. Emerg. Med. Clin. N. Am. 2000, 18, 379–391. [Google Scholar] [CrossRef]

- Kook, M.; Jung, S.; Park, H.; Oh, H.; Ryu, S.; Cho, J.; Lee, J.; Yoon, S.; Kim, M.; Shin, H. A comparison study of different facial soft tissue analysis methods. J. Cranio-Maxillo-Facial Surg. 2013, 42, 648–656. [Google Scholar] [CrossRef]

- Germec-Cakan, D.; Canter, H.I.; Nur, B.; Arun, T. Comparison of Facial Soft Tissue Measurements on Three-Dimensional Images and Models Obtained with Different Methods. J. Craniofac. Surg. 2010, 21, 1393–1399. [Google Scholar] [CrossRef]

- Ferrario, V.F.; Sforza, C.; Poggio, C.E.; Serrao, G. Facial three-dimensional morphometry. Am. J. Orthod. Dentofac. Orthop. 1996, 109, 86–93. [Google Scholar] [CrossRef]

- Dalal, A.B.; Phadke, S.R. Morphometric analysis of face in dysmorphology. Comput. Meth. Prog. Bio. 2007, 85, 165–172. [Google Scholar] [CrossRef]

- Storms, A.S.; Miclotte, A.; Grosjean, L.; Cadenas De Llano-Pérula, M.; Alqerban, A.; Fieuws, S.; Sun, Y.; Politis, C.; Verdonck, A.; Willems, G. Short-term hard and soft tissue changes after mandibular advancement surgery in Class II patients: A retrospective cephalometric study. Eur. J. Orthodont. 2017, 39, 567–576. [Google Scholar] [CrossRef]

- Salloum, E.; Millett, D.T.; Kelly, N.; McIntyre, G.T.; Cronin, M.S. Soft tissue changes: A comparison between changes caused by the construction bite and by successful treatment with a modified Twin-block appliance. Eur. J. Orthodont. 2018, 40, 512–518. [Google Scholar] [CrossRef]

- Ubaya, T.; Sherriff, A.; Ayoub, A.; Khambay, B. Soft tissue morphology of the naso-maxillary complex following surgical correction of maxillary hypoplasia. Int. J. Oral. Maxillofac. Surg. 2012, 41, 727–732. [Google Scholar] [CrossRef]

- Rosati, R.; De Menezes, M.; Da, S.A.; Rossetti, A.; Lanza, A.G.; Sforza, C. Stereophotogrammetric evaluation of tooth-induced labial protrusion. J. Prosthodont. 2014, 23, 347–352. [Google Scholar] [CrossRef]

- Allam, E.; Mpofu, P.; Ghoneima, A.; Tuceryan, M.; Kula, K. The Relationship Between Hard Tissue and Soft Tissue Dimensions of the Nose in Children: A 3D Cone Beam Computed Tomography Study. J. Forensic. Sci. 2018, 63, 1652–1660. [Google Scholar] [CrossRef]

- Zhu, Y.; Fu, X.; Zhang, L.; Zheng, S.; Wen, A.; Xiao, N.; Wang, Y.; Zhao, Y. A mathematical algorithm of the facial symmetry plane: Application to mandibular deformity 3D facial data. J. Anat. 2022, 240, 556–566. [Google Scholar] [CrossRef]

- Farkas, L.G.; Bryson, W.; Klotz, J. Is photogrammetry of the face reliable? Plast. Reconstr. Surg. 1980, 66, 346. [Google Scholar] [CrossRef]

- Ma, L.; Xu, T.; Lin, J. Validation of a three-dimensional facial scanning system based on structured light techniques. Comput. Meth. Prog. Biomed. 2009, 94, 290–298. [Google Scholar] [CrossRef]

- Sun, H.; Bi, D.; Tao, J. Markers localization of 3D face based on curvature and geometric constraints. Microcomput. Inf. 2009, 25, 264–266. [Google Scholar]

- Liang, Y.; Zhang, Y. 3D facial landmark localization under pose and expression variations. Control. Theory Appl. 2017, 34, 820–828. [Google Scholar]

- Vezzetti, E.; Moos, S.; Marcolin, F.; Stola, V. A pose-independent method for 3D face landmark formalization. Comput. Meth. Prog. Biomed. 2012, 108, 1078–1096. [Google Scholar] [CrossRef]

- Abu, A.; Ngo, C.G.; Abu-Hassan, N.; Othman, S.A. Automated craniofacial landmarks detection on 3D image using geometry characteristics information. BMC Bioinform. 2019, 19, 65–80. [Google Scholar] [CrossRef]

- Liang, S.; Wu, J.; Weinberg, S.M.; Shapiro, L.G. Improved detection of landmarks on 3D human face data. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2013, 2013, 6482–6485. [Google Scholar]

- Wang, L. Research of Robust 3d Facial Landmarking Techniques; Southeast University: Dhaka, Bangladesh, 2019. [Google Scholar]

- Cheng, X.; Da, F.; Deng, X. Coarse-to-fine 3D facial landmark localization based on keypoints. Chin. J. Sci. Instrum. 2018, 39, 256–264. [Google Scholar]

- Gilani, S.Z.; Mian, A.; Eastwood, P. Deep, dense and accurate 3D face correspondence for generating population specific deformable models. Pattern Recogn. 2017, 69, 238–250. [Google Scholar] [CrossRef]

- Wang, K.; Zhao, X.; Gao, W.; Zou, J. A coarse-to-fine approach for 3D facial landmarking by using deep feature fusion. Symmetry 2018, 10, 308. [Google Scholar] [CrossRef]

- Paulsen, R.R.; Juhl, K.A.; Haspang, T.M.; Hansen, T.; Ganz, M.; Einarsson, G. Multi-view Consensus CNN for 3D Facial Landmark Placement. In Proceedings of the Computer Vision–ACCV 2018: 14th Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Springer International Publishing: Cham, Switzerland, 2019; pp. 706–719. [Google Scholar]

- Zhu, Y.; Xu, Q.; Zhao, Y.; Zhang, L.; Fu, Z.; Wen, A.; Gao, Z.; Zhang, Y.; Fu, X.; Wang, Y. Deep learning-assisted construction of three-demensional facial midsagittal plane. J. Peking Univ. (Health Sci.) 2022, 54, 134–139. [Google Scholar]

- White, J.D.; Ortega-Castrillón, A.; Matthews, H.; Zaidi, A.A.; Ekrami, O.; Snyders, J.; Fan, Y.; Penington, T.; Van Dongen, S.; Shriver, M.D.; et al. MeshMonk: Open-source large-scale intensive 3D phenotyping. Sci. Rep. 2019, 9, 6085. [Google Scholar] [CrossRef]

- Wen, A.; Zhu, Y.; Zheng, S.; Xiao, N.; Gao, Z.; Fu, X.; Wang, Y.; Zhao, Y. Preliminary study on the method of automatically determining facial landmarks based on three-dimensional face template. Chin. J. Stomatol. 2022, 57, 358–365. [Google Scholar]

- Li, M.; Cole, J.B.; Manyama, M.; Larson, J.R.; Liberton, D.K.; Riccardi, S.L.; Ferrara, T.M.; Santorico, S.A.; Bannister, J.J.; Forkert, N.D.; et al. Rapid automated landmarking for morphometric analysis of three-dimensional facial scans. J. Anat. 2017, 230, 607–618. [Google Scholar] [CrossRef]

- Gilani, S.Z.; Shafait, F.; Mian, A. Shape-based automatic detection of a large number of 3D facial landmarks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Li, Z.; Giunta, R.E.; Frank, K.; Schenck, T.L.; Koban, K.C. Reproducibility of Novel Soft-Tissue Landmarks on Three-Dimensional Human Facial Scan Images in Caucasian and Asian. Aesthet. Plast. Surg. 2022, 46, 719–731. [Google Scholar] [CrossRef]

- Baysal, A.; Sahan, A.O.; Ozturk, M.A.; Uysal, T. Reproducibility and reliability of three-dimensional soft tissue landmark identification using three-dimensional stereophotogrammetry. Angle Orthod. 2016, 86, 1004–1009. [Google Scholar] [CrossRef]

- Kung, S.; Walters, M.; Claes, P.; Goldblatt, J.; Le Souef, P.; Baynam, G. A Dysmorphometric Analysis to Investigate Facial Phenotypic Signatures as a Foundation for Non-invasive Monitoring of Lysosomal Storage Disorders. Jimd. Rep. 2013, 8, 31–39. [Google Scholar]

- Farnell, D.J.J.; Richmond, S.; Galloway, J.; Zhurov, A.I.; Pirttiniemi, P.; Heikkinen, T.; Harila, V.; Matthews, H.; Claes, P. An exploration of adolescent facial shape changes with age via multilevel partial least squares regression. Comput. Meth. Prog. Bio 2021, 200, 105935. [Google Scholar] [CrossRef]

- Ekrami, O.; Claes, P.; White, J.D.; Zaidi, A.A.; Shriver, M.D.; Van Dongen, S. Measuring asymmetry from high-density 3D surface scans: An application to human faces. PLoS ONE 2018, 13, e207895. [Google Scholar] [CrossRef]

- Claes, P.; Walters, M.; Vandermeulen, D.; Clement, J.G. Spatially-dense 3D facial asymmetry assessment in both typical and disordered growth. J. Anat. 2011, 219, 444–455. [Google Scholar] [CrossRef]

- Fagertun, J.; Harder, S.; Rosengren, A.; Moeller, C.; Werge, T.; Paulsen, R.R.; Hansen, T.F. 3D facial landmarks: Inter-operator variability of manual annotation. BMC Med. Imaging 2014, 14, 35. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).