Auditory Electrophysiological and Perceptual Measures in Student Musicians with High Sound Exposure

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Noise Exposure Questionnaire

2.3. First Session

2.4. Second Session

2.5. Electrophysiological Waveform Analysis

2.6. Statistical Analysis

3. Results

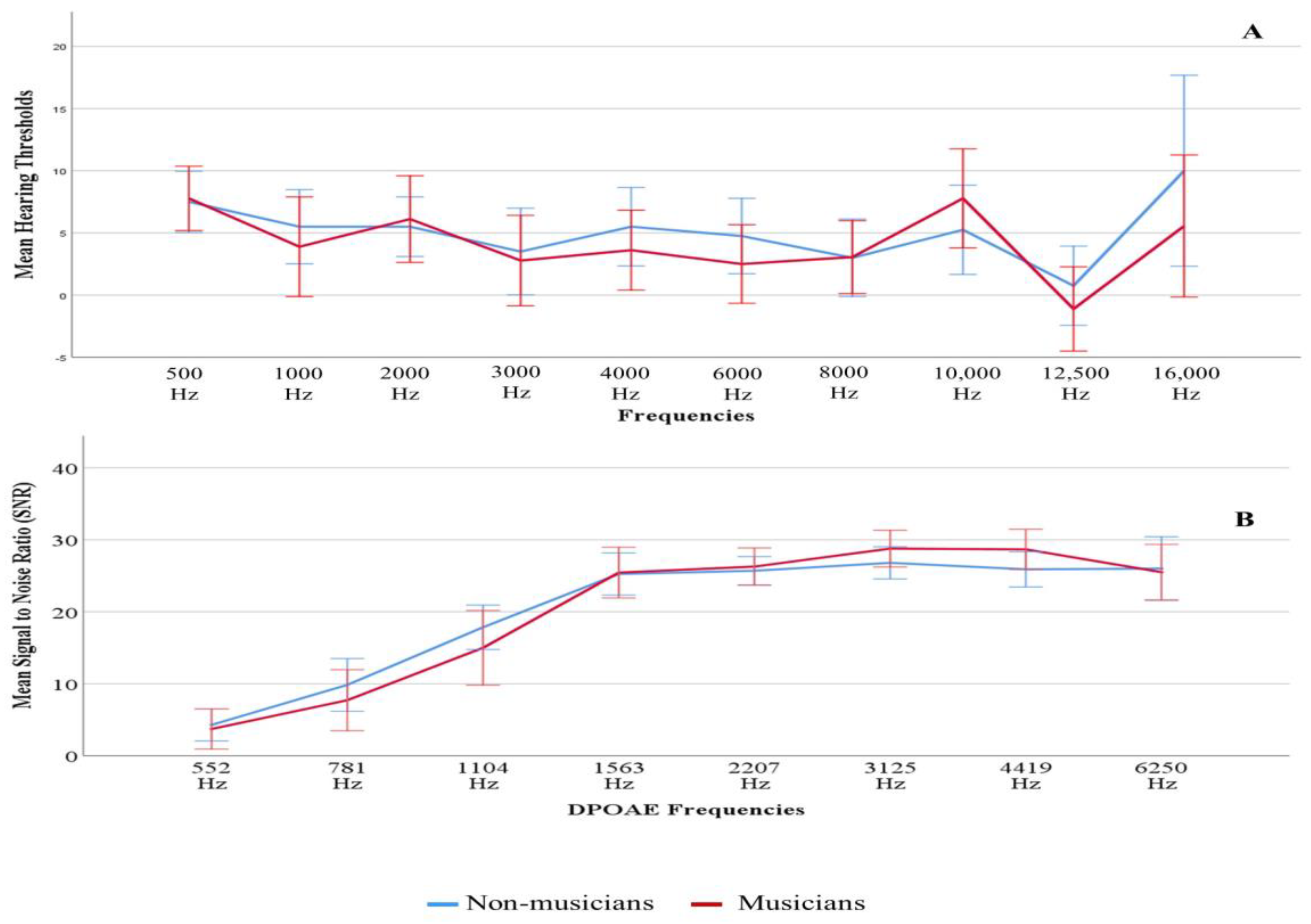

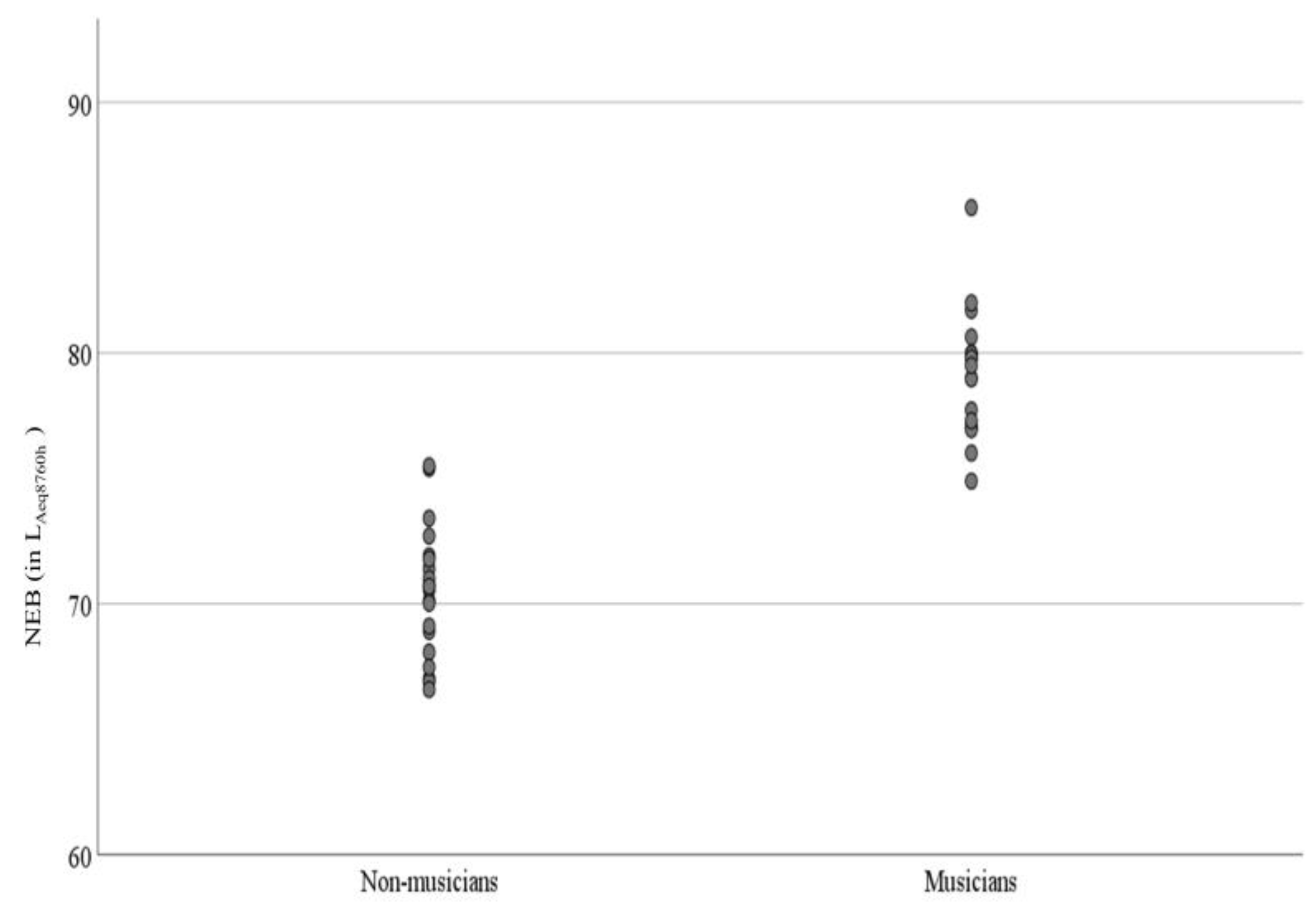

3.1. Descriptive Statistics

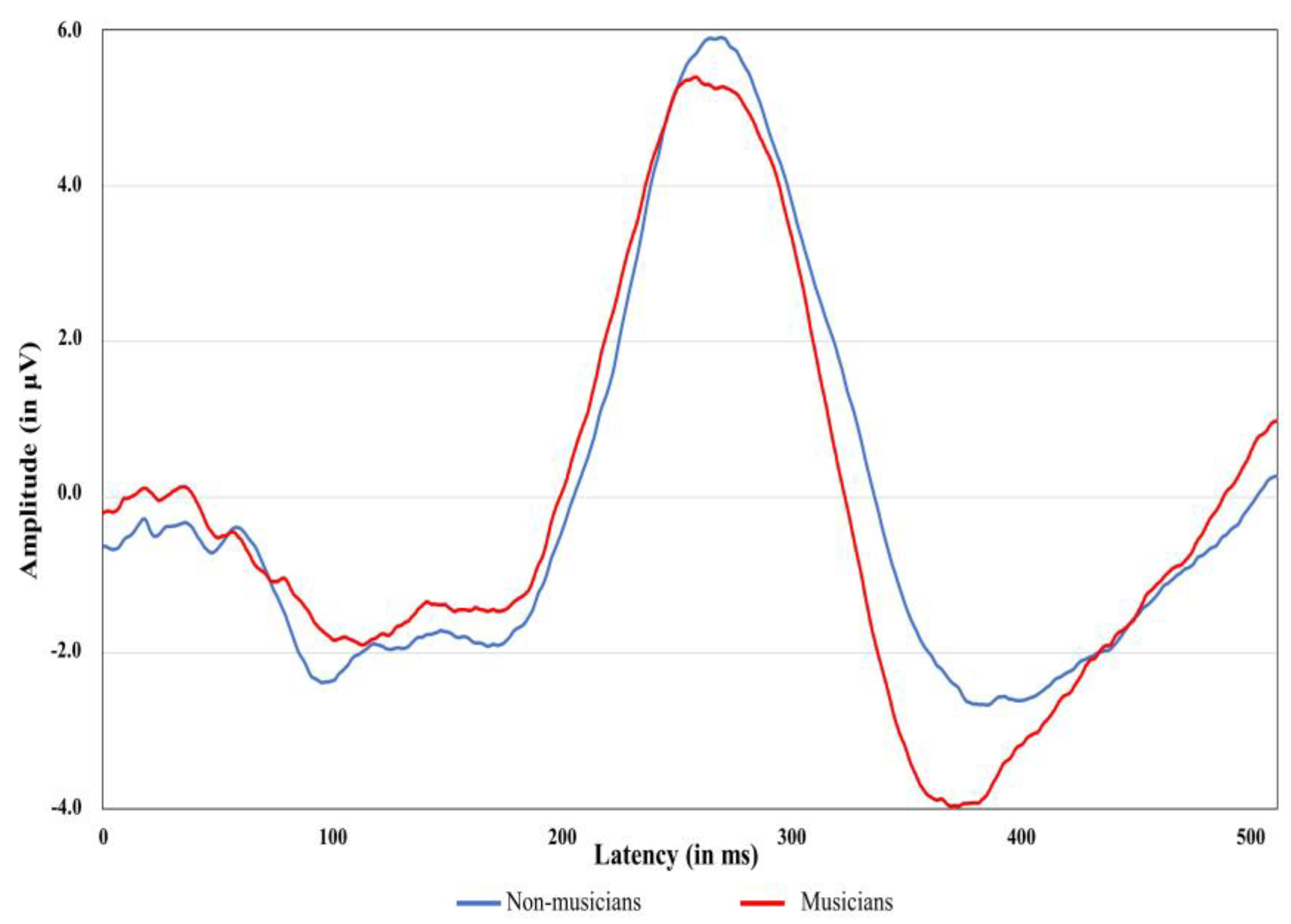

3.2. Electrophysiological Measures

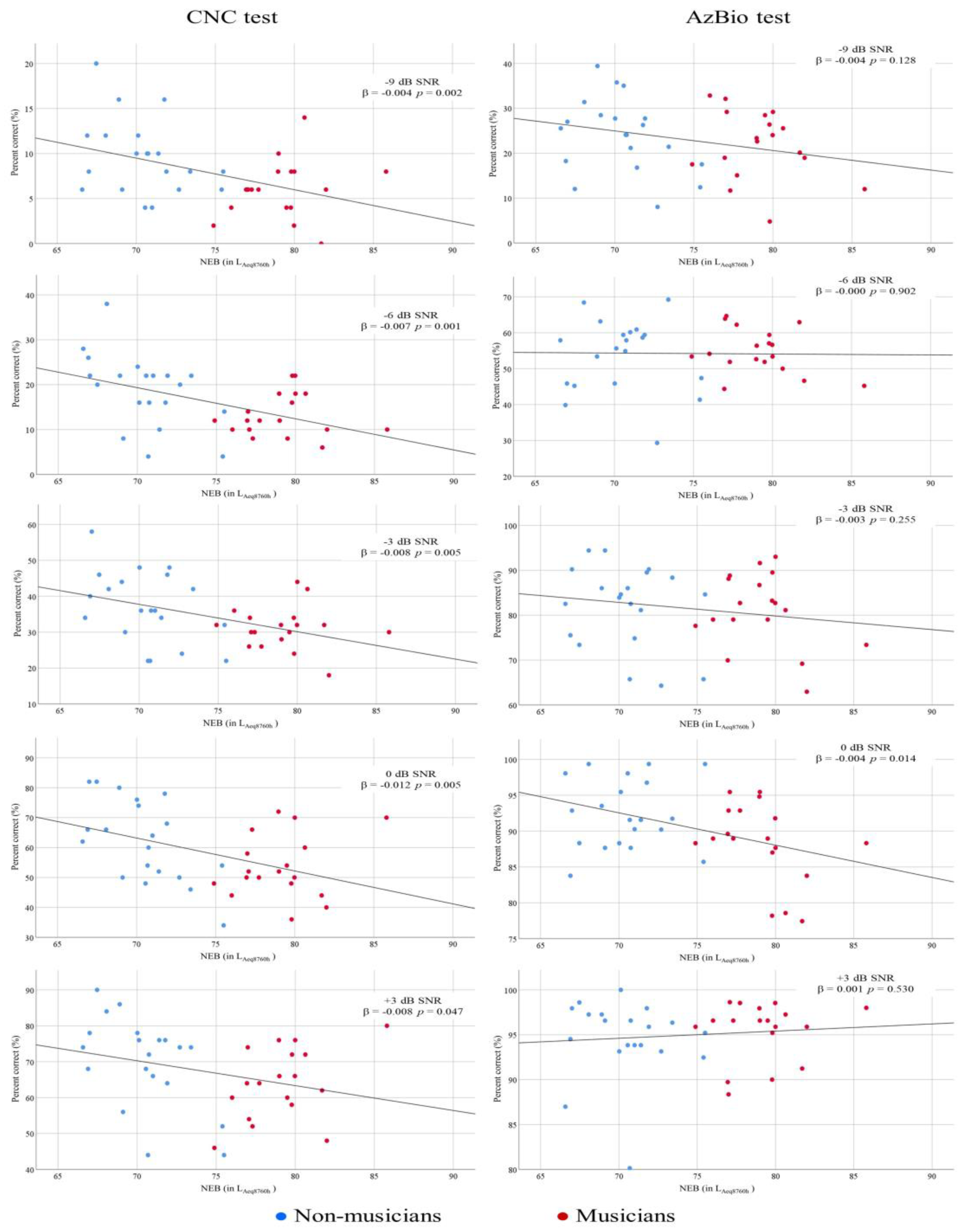

3.3. Word Recognition in Noise

3.4. Sentence Recognition in Noise

4. Discussion

4.1. The Relationship between NEB and Performances on Speech-in-Noise Tasks

4.2. The Relationship between NEB and Electrophysiological Measures

4.3. Speech-in-Noise and Electrophysiological Measures in Musicians

4.4. Study Limitations and Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | P300 | ABR |
|---|---|---|
| Stimulus parameters | | |
| Stimulus | /ba/ frequent (80%), /ta/ infrequent (20%) | 100 µs click |
| Intensity | 80 dB nHL | 80 dB nHL |
| Stimulation rate | 1.10/s | 11.3, 51.3, and 81.3/s |
| Transducer | ER-3A insert earphones | ER-3A insert earphones |
| Presentation | Monaural (left ear) | Monaural (left ear) |
| Recording parameters | | |
| Filter setting | 1–30 Hz | 100–3000 Hz |
| Electrode montage | Vertical, 2-channel. Channel A: positive Cz, negative left mastoid. Channel B: positive above left eye, negative below left eye. Ground: Fpz | Vertical, 1-channel. Positive Cz, negative left mastoid. Ground: Fpz |
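Stimulation rate is easier to reason about as the interval between successive stimulus onsets (1000 divided by the rate, in ms), since that interval is the time each response has before the next stimulus arrives. The following is a minimal sketch; the dictionary layout and the function name `onset_interval_ms` are illustrative assumptions, not part of the study's software.

```python
"""Convert the stimulation rates in the parameter table to onset-to-onset
intervals. Illustrative sketch; names are hypothetical."""

# Stimulation rates (stimuli per second) copied from the parameter table.
RATES_PER_SECOND = {
    "P300 (/ba/, /ta/ oddball)": [1.10],
    "ABR (100 µs click)": [11.3, 51.3, 81.3],
}


def onset_interval_ms(rate_per_second: float) -> float:
    """Return the time between successive stimulus onsets in milliseconds."""
    if rate_per_second <= 0:
        raise ValueError("rate must be positive")
    return 1000.0 / rate_per_second


if __name__ == "__main__":
    for paradigm, rates in RATES_PER_SECOND.items():
        for rate in rates:
            print(f"{paradigm}: {rate}/s -> {onset_interval_ms(rate):.1f} ms")
```

At 81.3/s the clicks arrive roughly every 12.3 ms, compared with about 88.5 ms at 11.3/s and about 909 ms between P300 stimuli at 1.10/s.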

| Stimulus rate (/s) | Group | Gender | Wave I amplitude (µV), mean (SD) | Wave V amplitude (µV), mean (SD) | Wave I latency (ms), mean (SD) | Wave V latency (ms), mean (SD) |
|---|---|---|---|---|---|---|
| 11.3 | Non-musician | Male | 0.32 (0.14) | 0.46 (0.14) | 1.51 (0.11) | 5.72 (0.17) |
| | | Female | 0.35 (0.11) | 0.54 (0.11) | 1.59 (0.09) | 5.64 (0.22) |
| | Musician | Male | 0.30 (0.10) | 0.44 (0.12) | 1.58 (0.10) | 5.72 (0.17) |
| | | Female | 0.38 (0.13) | 0.61 (0.12) | 1.59 (0.03) | 5.59 (0.25) |
| 51.3 | Non-musician | Male | 0.15 (0.08) | 0.41 (0.14) | 1.63 (0.13) | 5.92 (0.17) |
| | | Female | 0.18 (0.10) | 0.53 (0.06) | 1.66 (0.10) | 5.92 (0.19) |
| | Musician | Male | 0.19 (0.09) | 0.43 (0.11) | 1.66 (0.12) | 5.99 (0.23) |
| | | Female | 0.21 (0.04) | 0.49 (0.13) | 1.66 (0.08) | 5.88 (0.18) |
| 81.3 | Non-musician | Male | 0.12 (0.10) | 0.40 (0.14) | 1.71 (0.10) | 6.10 (0.13) |
| | | Female | 0.15 (0.08) | 0.54 (0.09) | 1.70 (0.11) | 6.11 (0.19) |
| | Musician | Male | 0.11 (0.07) | 0.38 (0.11) | 1.68 (0.15) | 6.17 (0.18) |
| | | Female | 0.17 (0.04) | 0.44 (0.10) | 1.72 (0.07) | 6.05 (0.14) |
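The ABR table reports wave I and wave V latencies separately but not the wave I–V interpeak interval. Because a mean of differences equals the difference of the means, a reader can derive the group-mean interval by subtraction, assuming both peaks were measured in every ear of a cell; the interval's SD cannot be recovered from the table. A hypothetical helper (the names `MEAN_LATENCY_MS` and `interpeak_i_v_ms` are illustrative):

```python
"""Derive mean wave I-V interpeak intervals from the ABR descriptive table.

Hypothetical helper: assumes wave I and wave V were both measured in every
ear of a cell, so the difference of means equals the mean difference.
"""

# (rate per second, group, gender) -> (mean wave I, mean wave V) latency in ms
MEAN_LATENCY_MS = {
    (11.3, "Non-musician", "Male"): (1.51, 5.72),
    (11.3, "Non-musician", "Female"): (1.59, 5.64),
    (11.3, "Musician", "Male"): (1.58, 5.72),
    (11.3, "Musician", "Female"): (1.59, 5.59),
    (51.3, "Non-musician", "Male"): (1.63, 5.92),
    (51.3, "Non-musician", "Female"): (1.66, 5.92),
    (51.3, "Musician", "Male"): (1.66, 5.99),
    (51.3, "Musician", "Female"): (1.66, 5.88),
    (81.3, "Non-musician", "Male"): (1.71, 6.10),
    (81.3, "Non-musician", "Female"): (1.70, 6.11),
    (81.3, "Musician", "Male"): (1.68, 6.17),
    (81.3, "Musician", "Female"): (1.72, 6.05),
}


def interpeak_i_v_ms(wave_i_ms: float, wave_v_ms: float) -> float:
    """Wave I-V interpeak interval, rounded to the table's 0.01 ms precision."""
    return round(wave_v_ms - wave_i_ms, 2)


if __name__ == "__main__":
    for (rate, group, gender), (wave_i, wave_v) in MEAN_LATENCY_MS.items():
        interval = interpeak_i_v_ms(wave_i, wave_v)
        print(f"{rate:>5}/s  {group:<13} {gender:<7} I-V = {interval:.2f} ms")
```

For example, non-musician males at 11.3/s have a mean I–V interval of 5.72 − 1.51 = 4.21 ms.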

| Group | Gender | P300 amplitude (µV), mean (SD) | P300 latency (ms), mean (SD) |
|---|---|---|---|
| Non-musician | Male | 9.22 (4.54) | 274.78 (14.70) |
| | Female | 11.84 (6.03) | 263.89 (23.31) |
| Musician | Male | 11.38 (3.33) | 276.70 (15.08) |
| | Female | 10.33 (6.17) | 267.83 (27.39) |

| Predictor | Statistic | Wave I, 11.3/s | Wave I, 51.3/s | Wave I, 81.3/s | Wave V, 11.3/s | Wave V, 51.3/s | Wave V, 81.3/s |
|---|---|---|---|---|---|---|---|
| NEB | β value | 0.003 | 0.004 | 0.001 | 0.005 | 0.000 | −0.005 |
| | Std. error | 0.004 | 0.003 | 0.002 | 0.004 | 0.004 | 0.004 |
| | p-value | 0.417 | 0.132 | 0.670 | 0.262 | 0.990 | 0.220 |
| Gender | β value | 0.064 | 0.025 | 0.045 | 0.129 | 0.110 | 0.100 |
| | Std. error | 0.038 | 0.027 | 0.025 | 0.040 | 0.037 | 0.038 |
| | p-value | 0.106 | 0.355 | 0.077 | 0.003 | 0.006 | 0.012 |
| Model | Adjusted R² | 0.028 | 0.023 | 0.035 | 0.236 | 0.156 | 0.174 |
| | p-value | 0.229 | 0.251 | 0.203 | 0.009 | 0.020 | 0.013 |
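Each column of the regression tables appears to summarize a linear model with two predictors, NEB and gender. The pure-Python sketch below shows how a β value, its standard error, and adjusted R² arise for such a model under ordinary least squares. It assumes gender is dummy-coded 0/1; that coding, the function names, and the toy data are assumptions for illustration, not the study's analysis code. It also shows why adjusted R² can fall below zero, as in several AzBio columns. p-values would additionally need the t distribution with n − 3 degrees of freedom and are omitted to keep the sketch dependency-free.

```python
"""Ordinary least squares with two predictors (NEB and gender), pure Python.

Hypothetical sketch: gender is assumed to be dummy-coded 0/1 and the toy
data below are invented, not taken from the study.
"""
from math import sqrt


def solve(a, b):
    """Solve the square linear system a @ x = b by Gaussian elimination."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))  # partial pivoting
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def adjusted_r2(r2, n, p):
    """Penalize R^2 for p predictors and n observations; can be negative."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


def fit_neb_gender(neb, gender, y):
    """Fit y = b0 + b1*NEB + b2*gender; return coefficients, SEs, adjusted R^2."""
    design = [[1.0, float(a), float(g)] for a, g in zip(neb, gender)]
    k = 3  # intercept + two predictors
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(design, y)) for i in range(k)]
    beta = solve(xtx, xty)

    fitted = [sum(b * v for b, v in zip(beta, row)) for row in design]
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    mean_y = sum(y) / len(y)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    n = len(y)

    # Residual variance times the diagonal of (X'X)^-1 gives squared SEs.
    sigma2 = ss_res / (n - k)
    inv_diag = [solve(xtx, [1.0 if i == j else 0.0 for i in range(k)])[j] for j in range(k)]
    std_err = [sqrt(sigma2 * d) for d in inv_diag]

    r2 = 1 - ss_res / ss_tot
    return beta, std_err, adjusted_r2(r2, n, k - 1)


if __name__ == "__main__":
    neb = [0, 1, 2, 3, 4, 5]
    gender = [0, 1, 0, 1, 0, 1]
    y = [1 + 2 * a + 3 * g for a, g in zip(neb, gender)]  # exactly linear toy data
    beta, se, adj = fit_neb_gender(neb, gender, y)
    print([round(b, 6) for b in beta], round(adj, 6))
    print(adjusted_r2(0.0, 30, 2))  # no explanatory power -> negative value
```

On exactly linear toy data the fit recovers the coefficients 1, 2, and 3; with an R² of zero, the adjustment for two predictors pushes the value below zero, which is how negative adjusted R² entries can occur.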

| CNC test | Statistic | −9 dB | −6 dB | −3 dB | 0 dB | +3 dB |
|---|---|---|---|---|---|---|
| NEB | β value | −0.004 ** | −0.007 ** | −0.008 ** | −0.012 ** | −0.008 * |
| | Std. error | 0.001 | 0.002 | 0.003 | 0.004 | 0.004 |
| | p-value | 0.002 | 0.001 | 0.005 | 0.005 | 0.047 |
| Gender | β value | −0.025 * | −0.033 | −0.019 | −0.033 | −0.047 |
| | Std. error | 0.012 | 0.021 | 0.027 | 0.039 | 0.037 |
| | p-value | 0.040 | 0.117 | 0.477 | 0.398 | 0.218 |
| Model | Adjusted R² | 0.237 ** | 0.245 ** | 0.160 * | 0.158 * | 0.079 * |
| | p-value | 0.003 | 0.003 | 0.018 | 0.019 | 0.090 |

| AzBio test | Statistic | −9 dB | −6 dB | −3 dB | 0 dB | +3 dB |
|---|---|---|---|---|---|---|
| NEB | β value | −0.004 | 0.000 | −0.003 | −0.004 * | 0.001 |
| | Std. error | 0.003 | 0.003 | 0.003 | 0.002 | 0.001 |
| | p-value | 0.128 | 0.902 | 0.255 | 0.014 | 0.530 |
| Gender | β value | 0.027 | −0.006 | −0.018 | 0.004 | 0.002 |
| | Std. error | 0.025 | 0.028 | 0.029 | 0.017 | 0.013 |
| | p-value | 0.304 | 0.841 | 0.537 | 0.833 | 0.885 |
| Model | Adjusted R² | 0.054 | −0.056 | −0.013 | 0.120 * | −0.045 |
| | p-value | 0.143 | 0.975 | 0.470 | 0.040 | 0.818 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Washnik, N.J.; Bhatt, I.S.; Sergeev, A.V.; Prabhu, P.; Suresh, C. Auditory Electrophysiological and Perceptual Measures in Student Musicians with High Sound Exposure. Diagnostics 2023, 13, 934. https://doi.org/10.3390/diagnostics13050934
