Light-Dermo: A Lightweight Pretrained Convolution Neural Network for the Diagnosis of Multiclass Skin Lesions

Abstract

1. Introduction

1.1. Motivations

1.2. Major Contributions

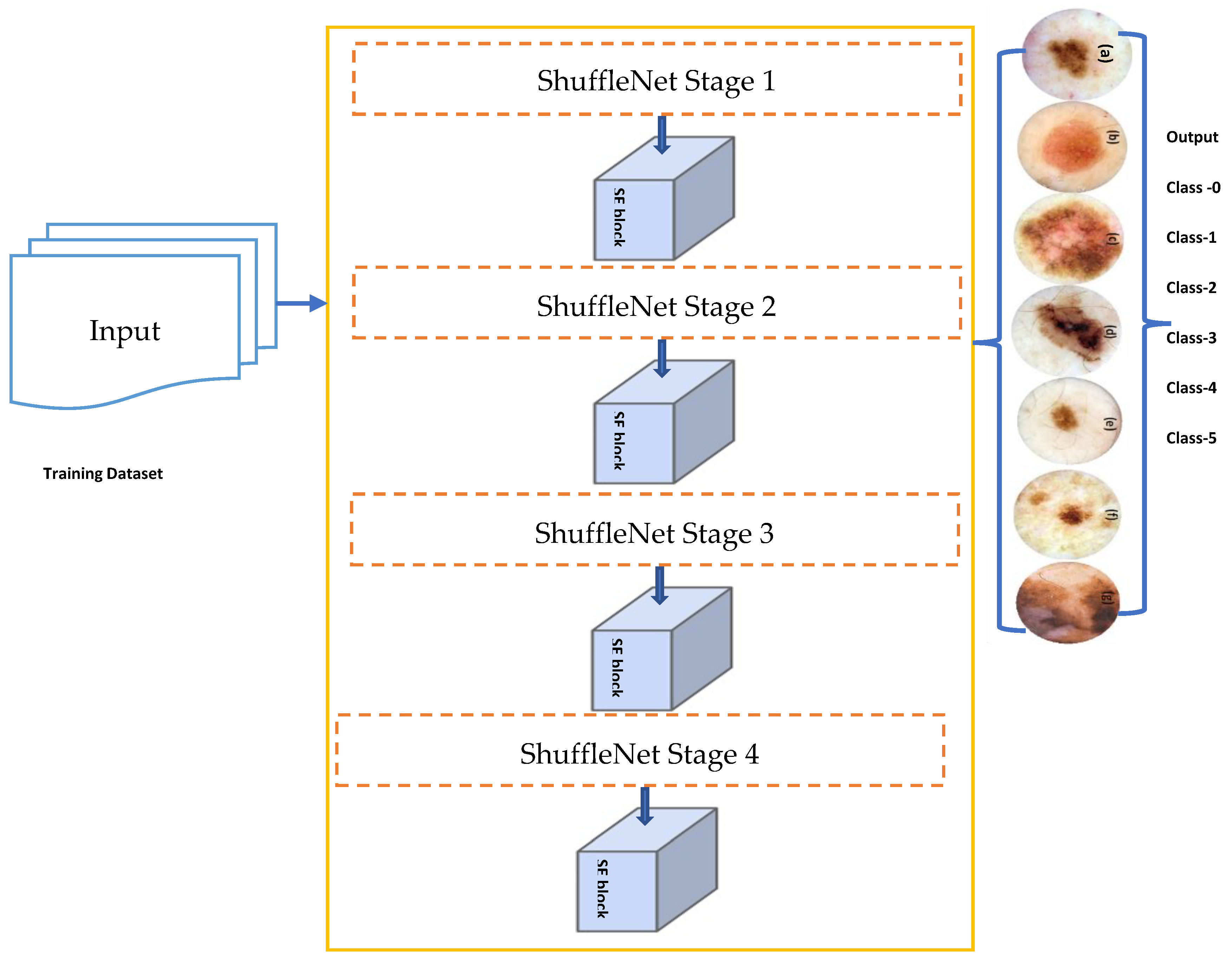

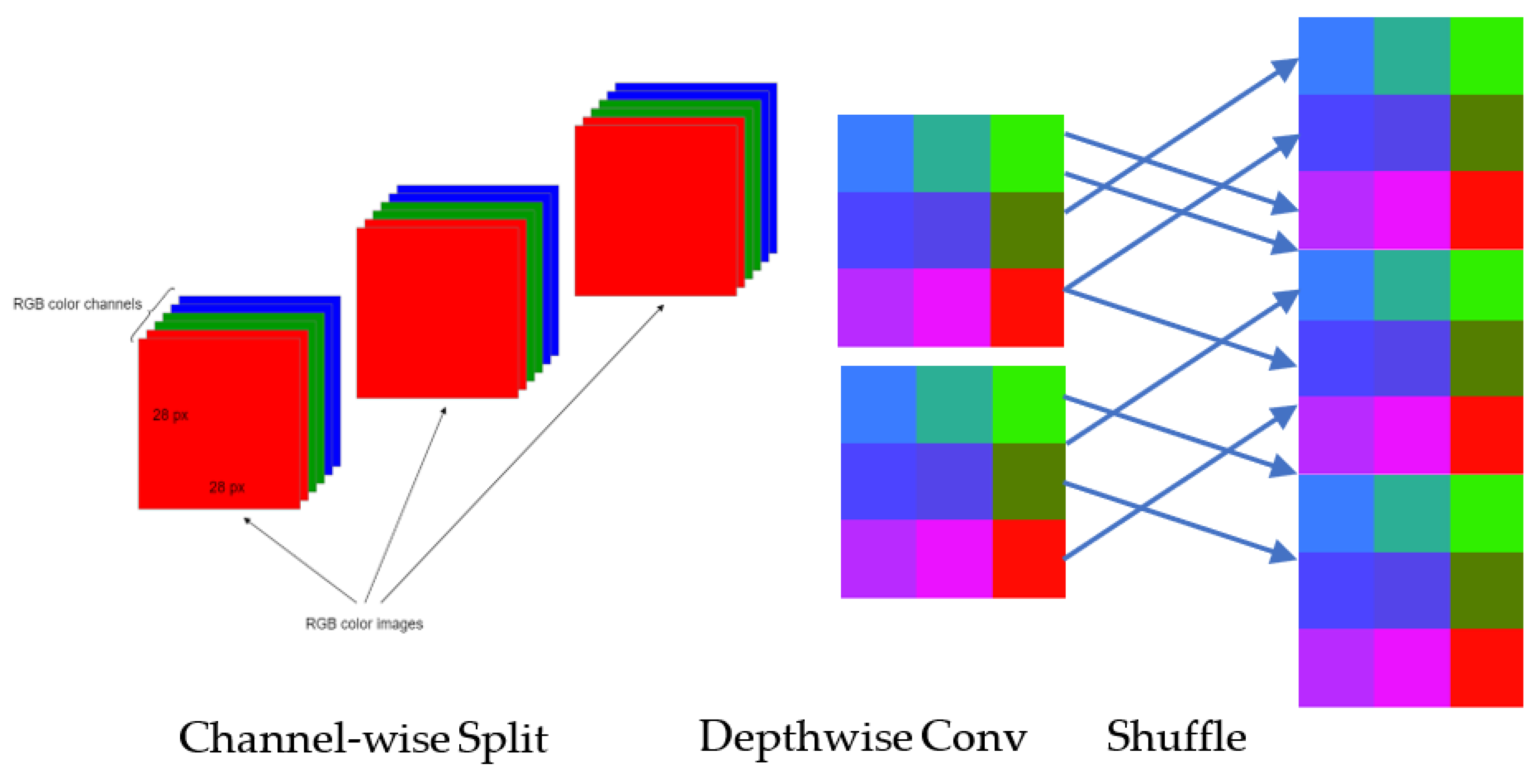

- A new lightweight pretrained model based on the ShuffleNet architecture is developed in this paper. It incorporates different layers, the GELU nonlinear activation function, and a channel shuffling technique.

- Squeeze-and-excitation (SE) blocks are integrated into the ShuffleNet architecture to develop the lightweight Light-Dermo model, which is designed to work within resource constraints (latency, memory size, etc.).

- The Light-Dermo model is able to reduce overfitting. The ShuffleNet network’s connections create short pathways from the bottom layers to the top ones. As a result, the loss function gives each layer more direct supervision. These connections therefore protect better against overfitting, especially when learning from small amounts of data.

- The Light-Dermo model is a computationally inexpensive solution for the diagnosis of multiple classes of pigmented skin lesions (PSLs) compared to state-of-the-art approaches when tested on mobile devices.
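As a rough illustration of the squeeze-and-excitation idea named in the contributions above, the following minimal sketch follows the generic formulation of Hu et al. (listed in the References) rather than the exact Light-Dermo layers. The function name `se_block`, the flat-list tensor layout, and the bias-free weight matrices `w1` and `w2` are assumptions made for this example only.

```python
import math


def se_block(feature_map, w1, w2):
    """Generic squeeze-and-excitation recalibration (illustrative sketch).

    feature_map: list of C channels, each a flat list of activations.
    w1: reduction weights, shape (C // r) x C (biases omitted, assumption).
    w2: expansion weights, shape C x (C // r) (biases omitted, assumption).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(channel) / len(channel) for channel in feature_map]

    # Excitation, part 1: fully connected reduction followed by ReLU.
    hidden = [
        max(0.0, sum(w * z for w, z in zip(row, squeezed)))
        for row in w1
    ]

    # Excitation, part 2: fully connected expansion followed by a sigmoid gate.
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]

    # Scale: each channel is multiplied by its gate in (0, 1).
    return [[gate * value for value in channel]
            for gate, channel in zip(gates, feature_map)]


# Two channels with two activations each; all-zero weights give gates of 0.5.
example = se_block([[1.0, 3.0], [2.0, 2.0]], [[0.0, 0.0]], [[0.0], [0.0]])
print(example)
```

The block adds only two small fully connected layers per stage, which is why SE recalibration is usually considered cheap relative to the convolutions it wraps.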

2. Literature Review

| Ref. | Description | Classification | Dataset | Augment? | Results (%) | Limitations |

|---|---|---|---|---|---|---|

| [8] | Classifies pigmented skin lesions (PSLs) into seven classes using a TL approach. | AlexNet | ISIC2018 | Yes | ACC: 89.7 | Evaluated on a single dataset, classifies seven classes, but computationally expensive, classifier overfitting. |

| [10] | Combined work of TL and DL. | MobileNet V2 and LSTM | HAM10000 | Yes | ACC: 85 | Class imbalance problem, binary classification, tested on a single dataset, and computationally expensive. |

| [11] | The approach employs FixCaps for dermoscopic image classification. | FixCaps | HAM10000 | Yes | ACC: 96.49 | Class imbalance problem, evaluated on a single dataset, classifies only two classes, and computationally expensive. |

| [12] | A multiclass EfficientNet TL classifier to recognize different classes of PSLs. | EfficientNet | HAM10000 | Yes | ACC: 87.91 | Single dataset, limited detection accuracy, six classes only, overfitting, and computationally expensive. |

| [13] | Another technique based on CNN and nonlinear activation functions. | Linear and nonlinear activation functions in either the hidden layers or output layers | PH2, ISIC | Yes | ACC: 97.5 | Evaluated on two datasets, classifies only two classes, classifier overfitting, and computationally expensive. |

| [14] | CNN model along with activation functions. | Multiple CNN models | HAM10000 | Yes | ACC: 97.85 | Three classes of PSLs, reduced hyperparameters, computationally expensive, classifier overfitting, and evaluated on a single dataset. |

| [15] | A deep convolutional neural network (DCNN) model to accurately classify malignant skin lesions. | DCNN | HAM10000 | Yes | ACC: 91.3 | Class imbalance problem, evaluated on a single dataset, classifies seven classes, computationally expensive, and classifier overfitting. |

| [16] | Differentiates only benign and malignant lesions. | VGG16 | Skin Cancer: Malignant vs. Benign 1 | Yes | ACC: 89 | Class imbalance problem, binary classification (two classes only), not a generalized solution, and computationally expensive. |

| [17] | The classifier is based on a deep learning algorithm. | ResNet-152 | ASAN, Edinburgh, Hallym | Yes | ACC: 96 | Class imbalance problem, evaluated on a single dataset, classifies only two classes, and computationally expensive. |

| [18] | Uses a feature fusion approach to recognize PSLs. | PDFFEM | ISBI 2016, ISIC 2017, and PH2 | Yes | ISBI 2016 ACC: 99.8 | Image processing, handcrafted feature extraction approach, which limits the detection accuracy, six classes only, classifier underfitting, and computationally expensive. |

| [19] | Different classifiers are utilized to evaluate the approach. | DT, KNN, LR and LAD | HAM10000 | Yes | ACC: 95.18 | Class imbalance problem, evaluated on a single dataset, classifies seven classes, computationally expensive, and classifier overfitting. |

| [20] | A heterogeneous framework of deep CNN feature fusion and reduction. | SVM, KNN and NN | PH2, ISBI 2016, ISBI 2017 | Yes | ACC: 95.1 | Image processing, handcrafted feature extraction approach, which limits the detection accuracy, six classes only, and computationally expensive. |

| [22] | Segmentation and classification of melanoma and nevi. | KNN, CNN | ISIC, DermNet NZ | Yes | -- | Class imbalance problem, evaluated on two datasets, classifies only two classes, and computationally expensive. |

| [24] | Segmentation and classification approach. | Pretrained CNN, improved moth flame optimization (IMFO) | ISBI 2016, ISBI 2017, ISIC 2018, PH2, and HAM10000 | Yes | ACC: 91 | Image processing, handcrafted feature extraction approach, which limits the detection accuracy, six classes only, and computationally expensive. |

| [25] | Dermo-Deep is developed for classification based on two classes. | Five-layer pretrained CNN architecture | ISBI 2016, ISBI 2017, ISIC 2018, PH2, and HAM10000 | No | ACC: 96 | Class imbalance problem, classifies seven classes, computationally expensive, and classifier overfitting. |

| [26] | Classification of seven classes to recognize PSLs. | Google’s Inception-v3 | HAM10000 | Yes | ACC: 90 | Class imbalance problem, binary classification (two classes only), and computationally expensive. |

| [27] | A DCNN model constructed with several layers, various filter sizes, and fewer filters and parameters. | DCNN | ISIC-17, ISIC-18, ISIC-19 | Yes | ACC: 94 | Class imbalance problem, classifies only two classes, and computationally expensive. |

| [29] | Different pretrained models based on transfer learning techniques were evaluated in a recent study. | DenseNet201 | ISIC | Yes | -- | Limited detection accuracy, six classes only, and computationally expensive. |

3. Background of Cutting-Edge TL Networks

3.1. AlexNet TL

3.2. MobileNet TL

3.3. SqueezeNet TL

3.4. Inception TL

3.5. ResNet TL

3.6. Xception TL

3.7. ShuffleNet TL

4. Materials and Methods

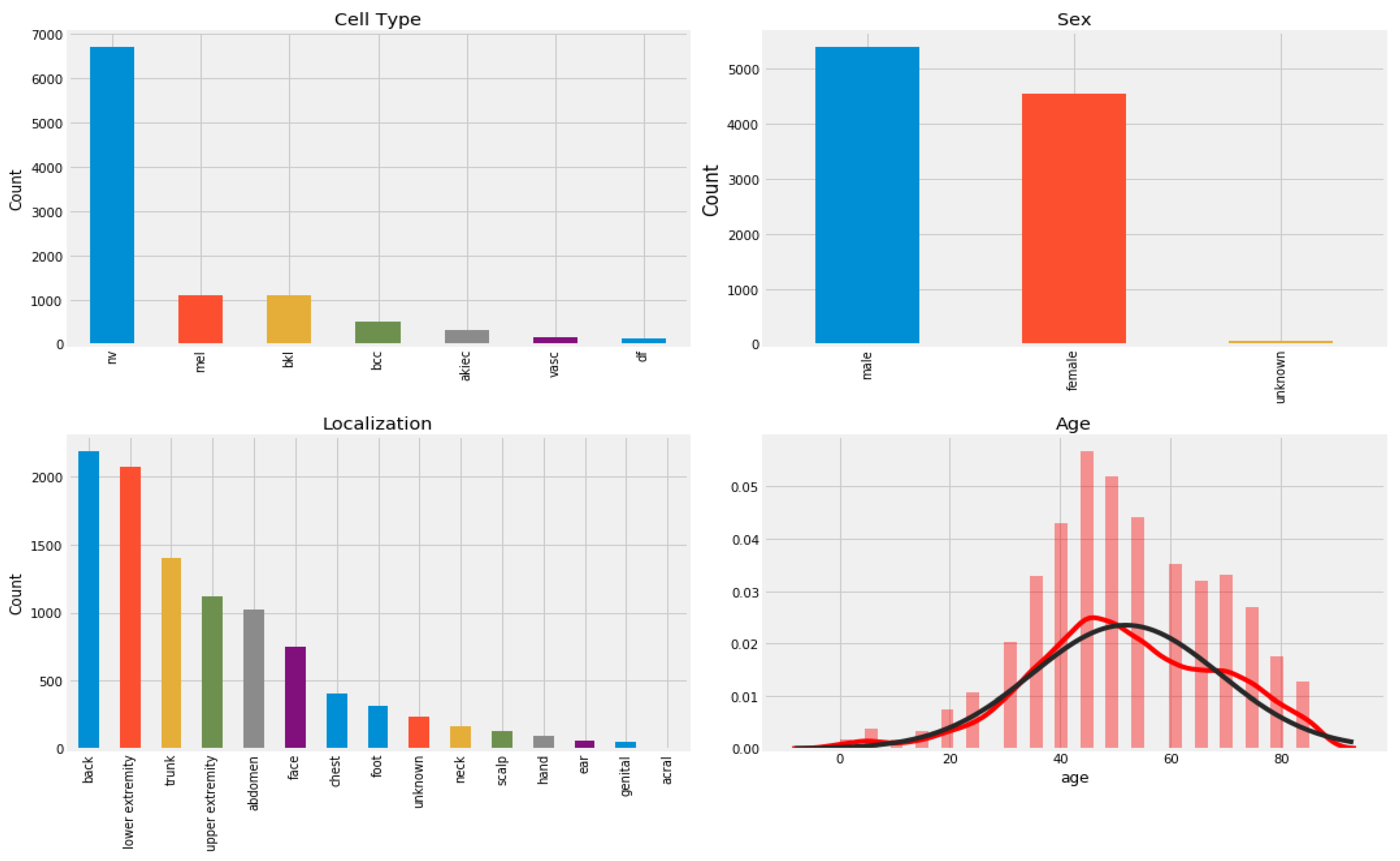

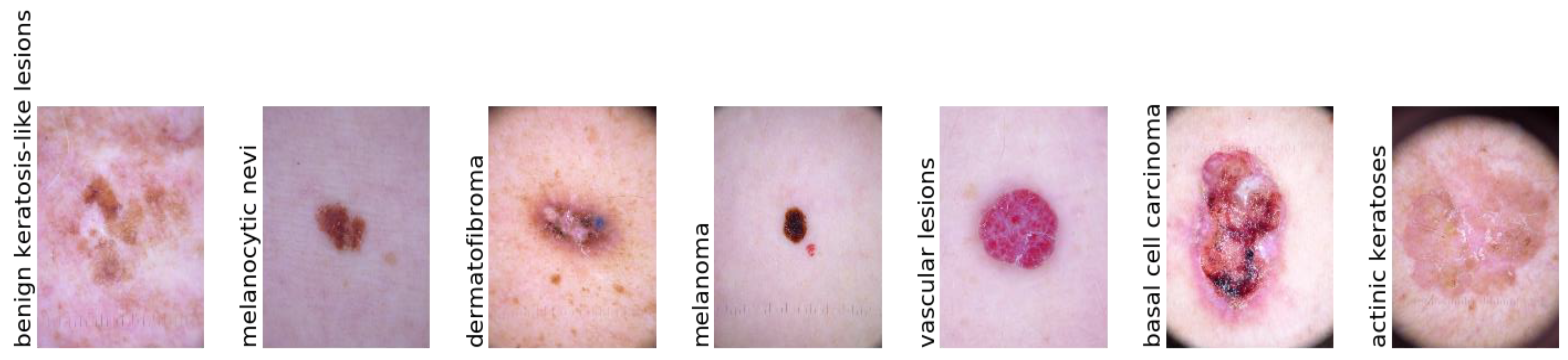

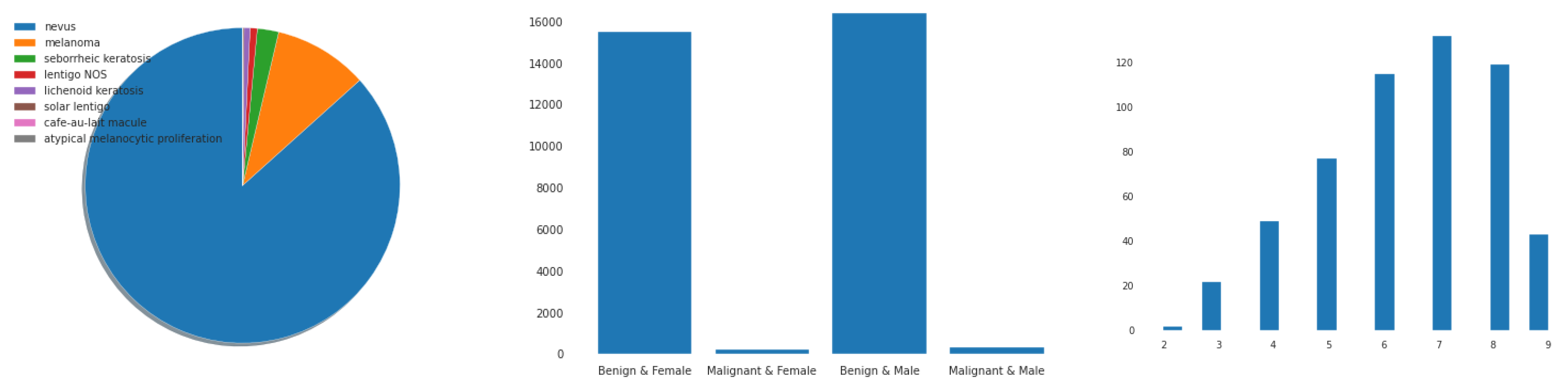

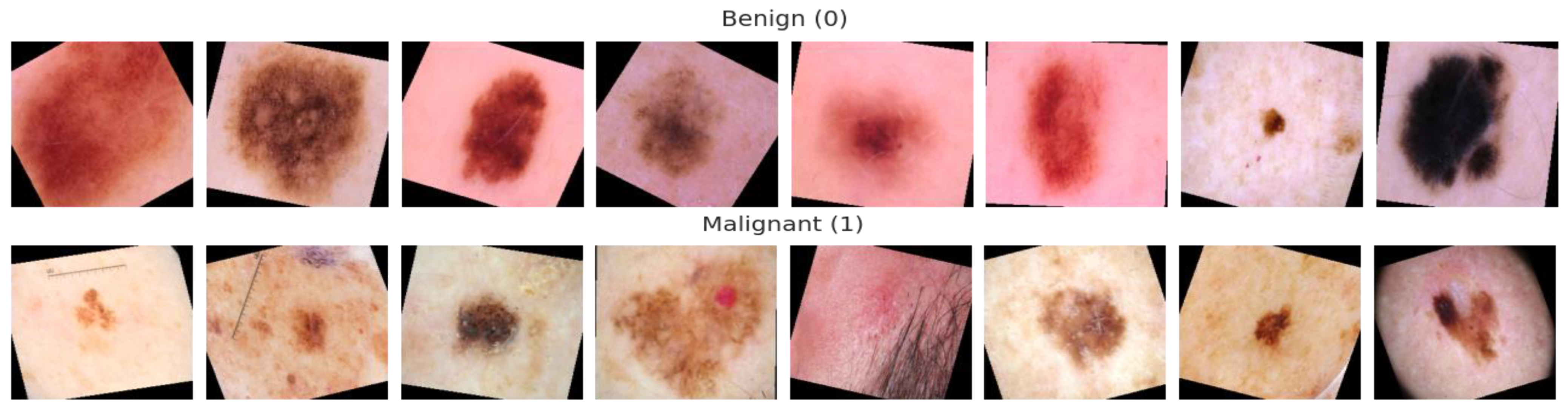

4.1. Data Acquisition

4.2. Proposed Methodology

4.2.1. Data Imbalance and Augmentation

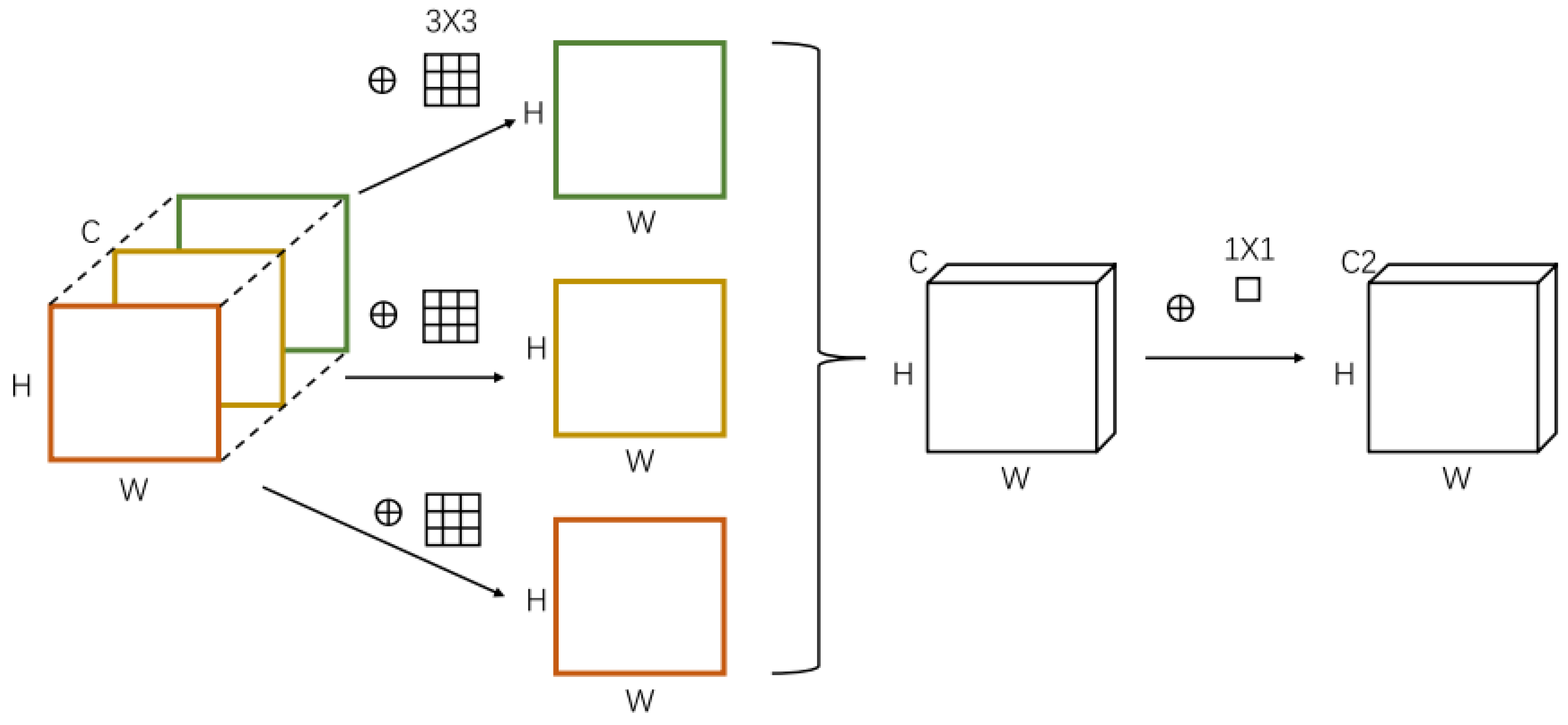

4.2.2. Improved ShuffleNet-Light Architecture

Algorithm 1: ShuffleNet-Light Architecture for Feature Extraction and Classification of PSLs

Input: Input tensor (), 2-D of (256 × 256 × 3) PSLs training dataset.

Output: Obtained and classified feature map augmented 2-D image.

Main Process:

- Step 1. Define number of stages = 4

- Step 2. Iterate for each stage

- Step 4. Fcat(i) = concatenation (# feature maps)

- Step 5. channel = shuffle (x)

- [End Step 2]

- Step 6. Model construction

- Step 8. Test samples are predicted to the class label using the decision function of the below equation.
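Steps 4 and 5 of Algorithm 1 (concatenation followed by channel shuffle) can be illustrated with a small sketch that operates on channel labels instead of real tensors. The helper names `concat_channels` and `channel_shuffle` are hypothetical, the group count of 2 is an assumption, and the convolution branches that would produce the feature maps are not modelled.

```python
def concat_channels(*branches):
    """Step 4 (sketch): concatenate feature maps along the channel axis."""
    merged = []
    for branch in branches:
        merged.extend(branch)
    return merged


def channel_shuffle(channels, groups):
    """Step 5 (sketch): interleave channels so every group sees every branch.

    Equivalent to reshaping the channel axis to (groups, n // groups),
    transposing, and flattening again.
    """
    if len(channels) % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = len(channels) // groups
    return [
        channels[group * per_group + index]
        for index in range(per_group)
        for group in range(groups)
    ]


# Two branches of three channels each, labelled by origin for readability.
left_branch = ["a0", "a1", "a2"]
right_branch = ["b0", "b1", "b2"]
mixed = channel_shuffle(concat_channels(left_branch, right_branch), groups=2)
print(mixed)  # ['a0', 'b0', 'a1', 'b1', 'a2', 'b2']
```

Without the shuffle, a subsequent grouped convolution would only ever see channels from one branch; interleaving them is what lets information flow across groups.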

4.2.3. Design of Network Structure

4.2.4. Transfer Learning

5. Results

5.1. Experimental Setup

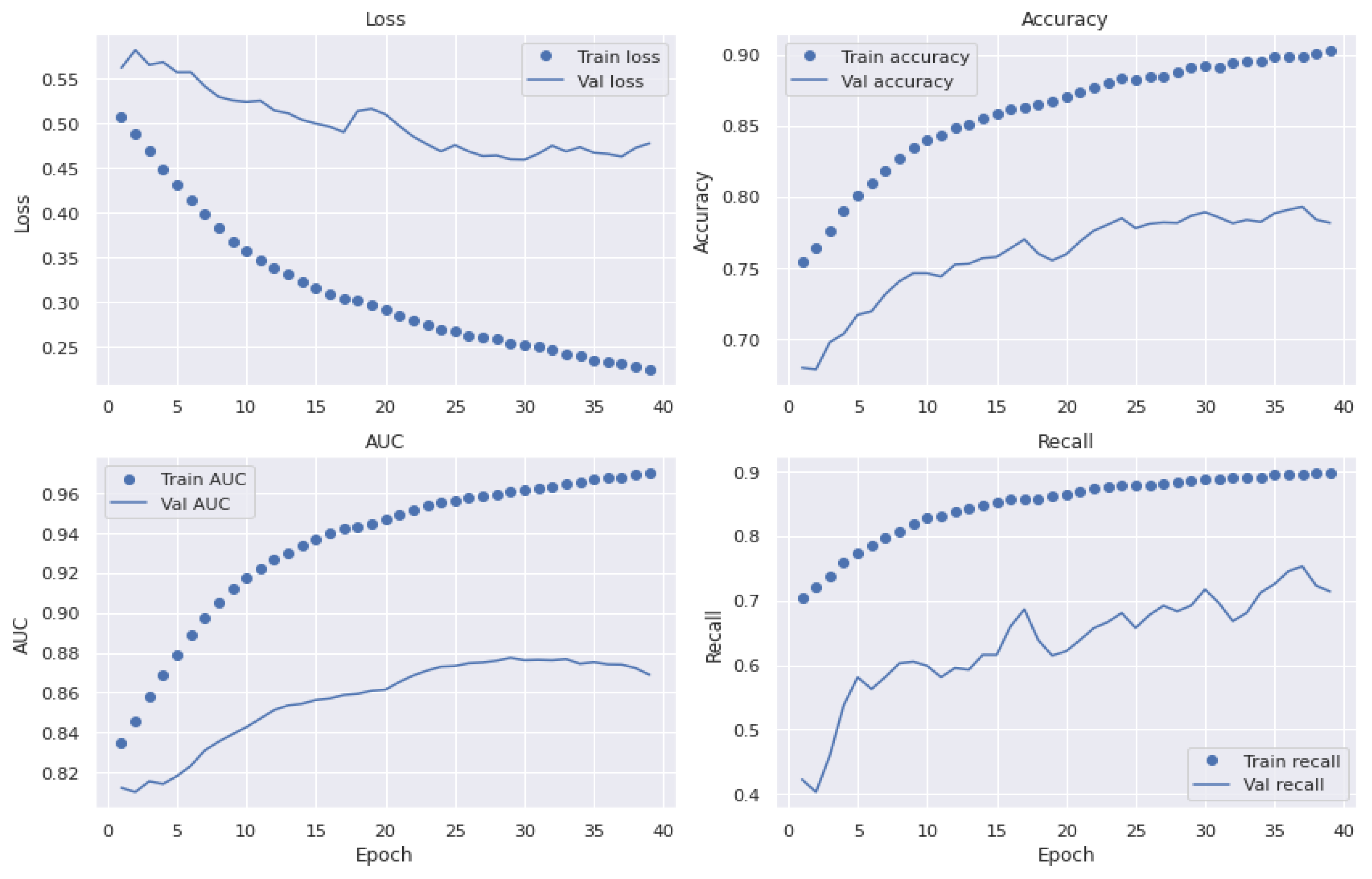

5.2. Model Training

5.3. Model Evaluation

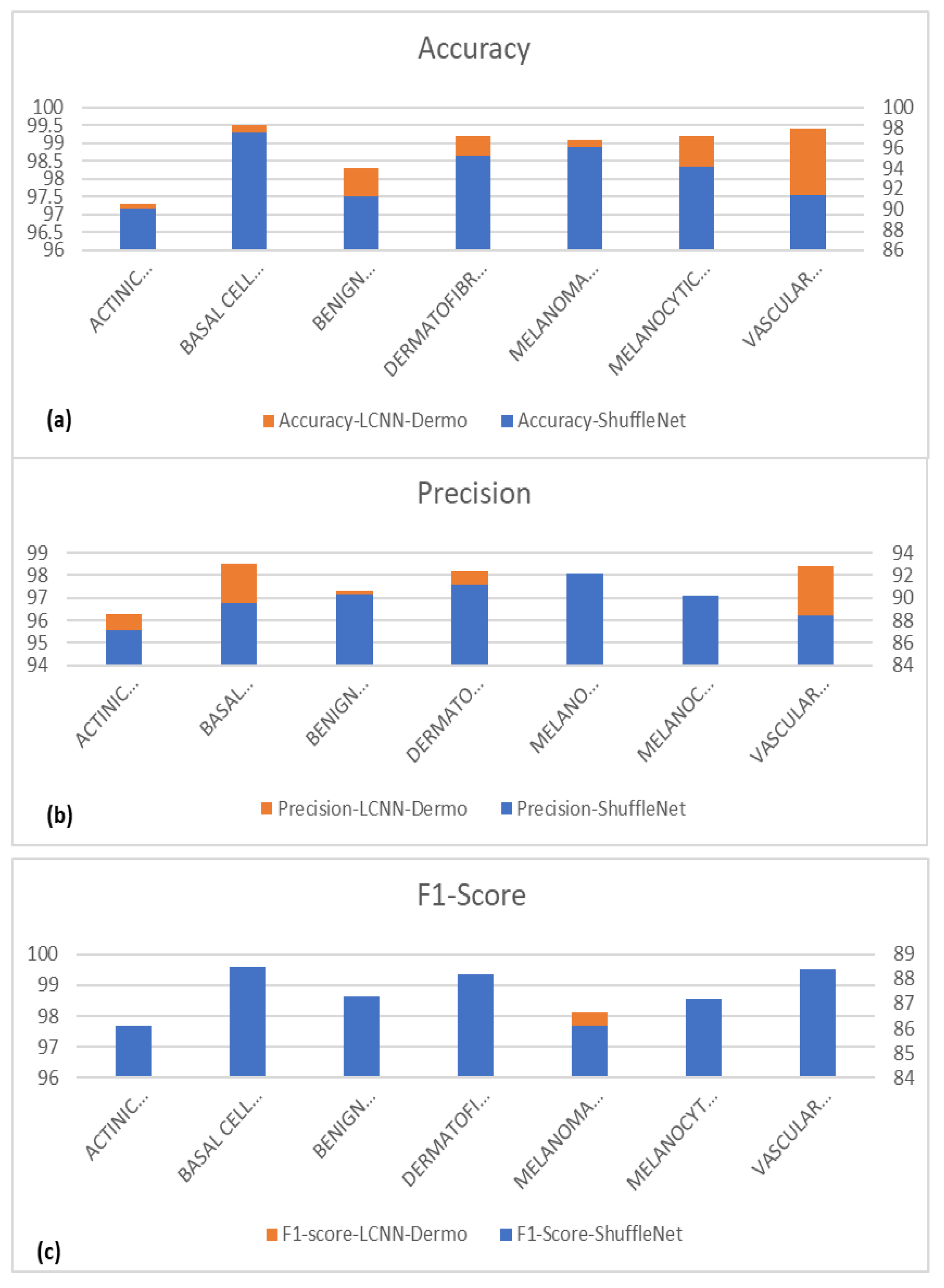

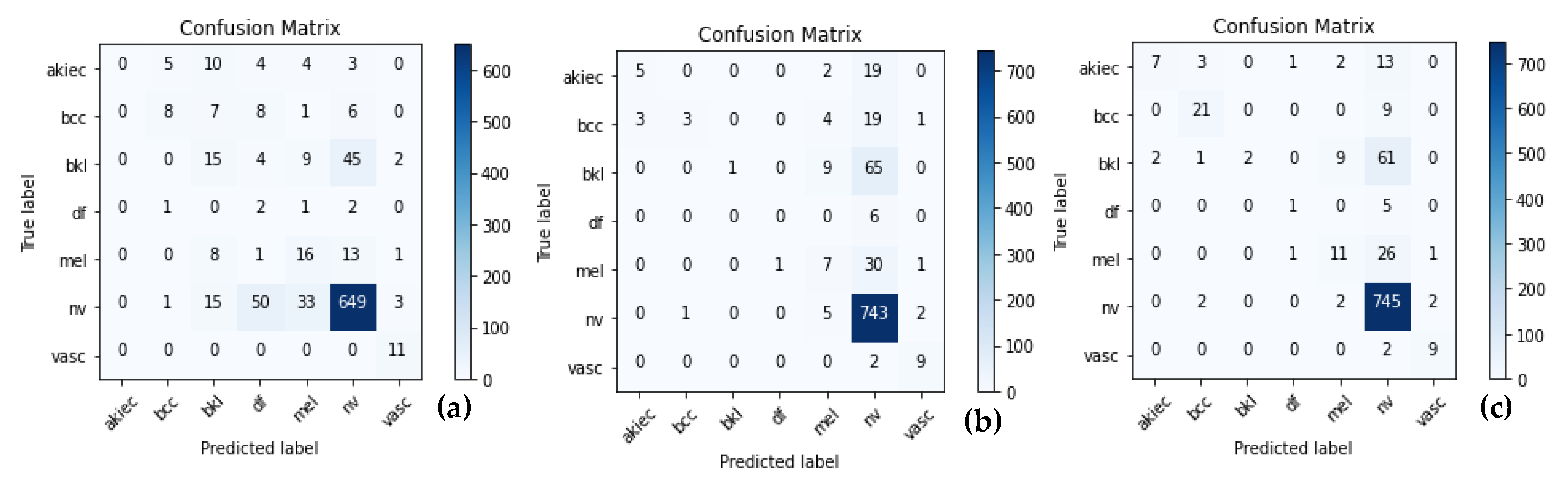

5.4. Results Analysis

5.5. Comparisons in Terms of Computational Time

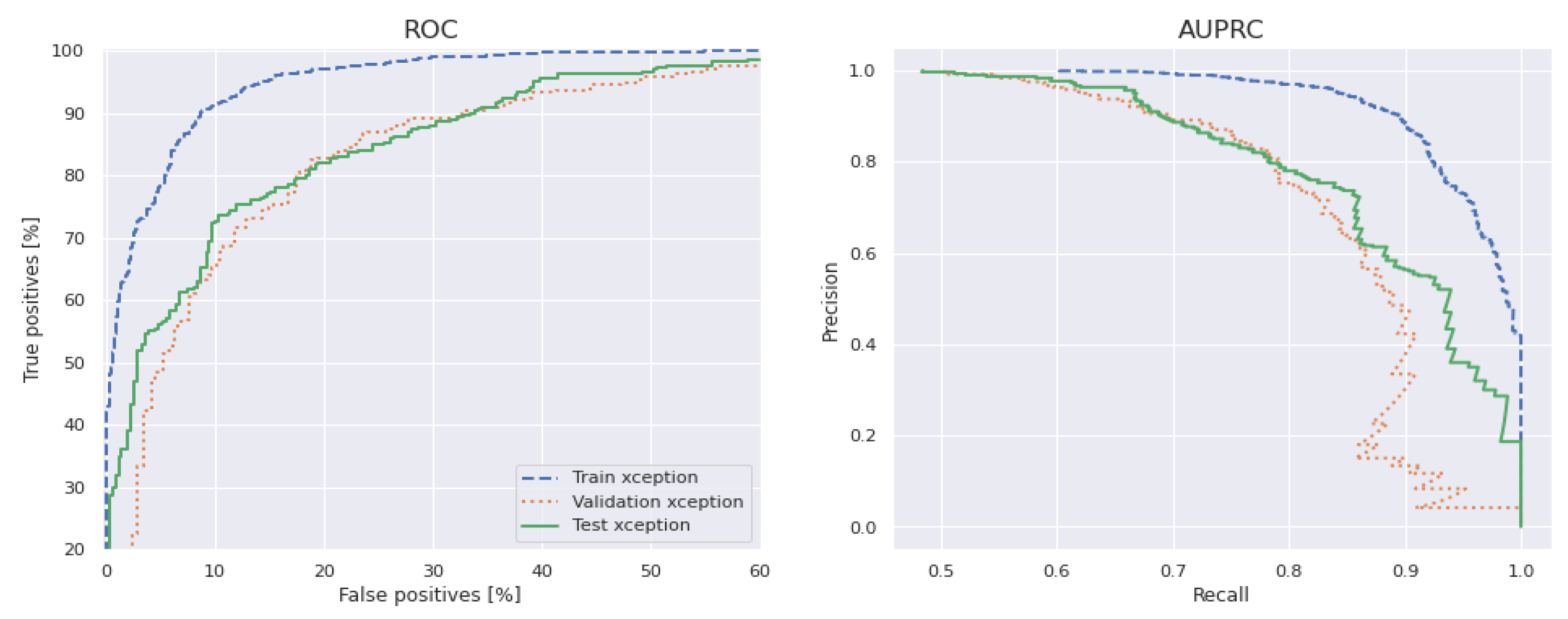

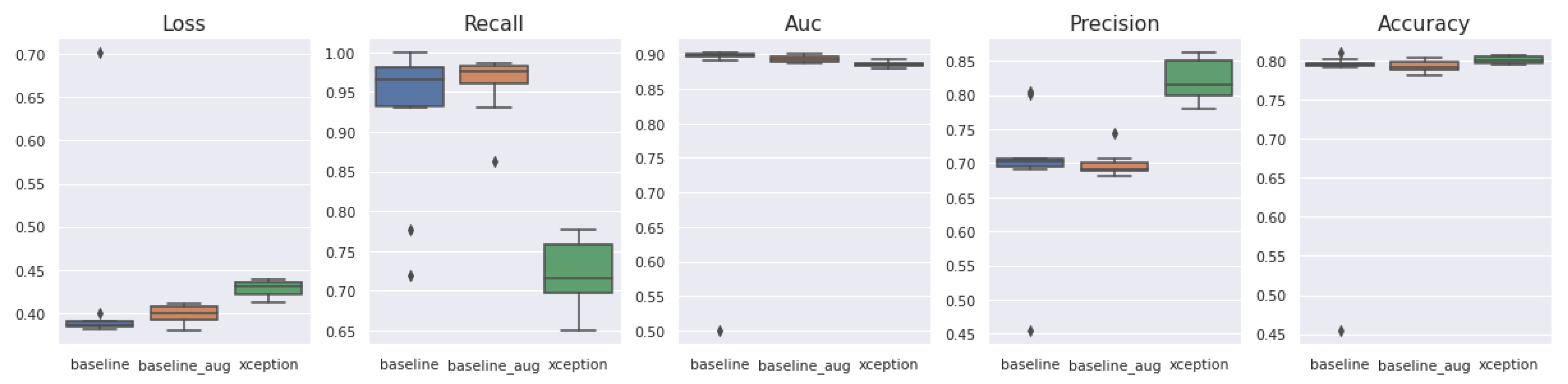

5.6. Generalized Model: No Overfitting or Underfitting

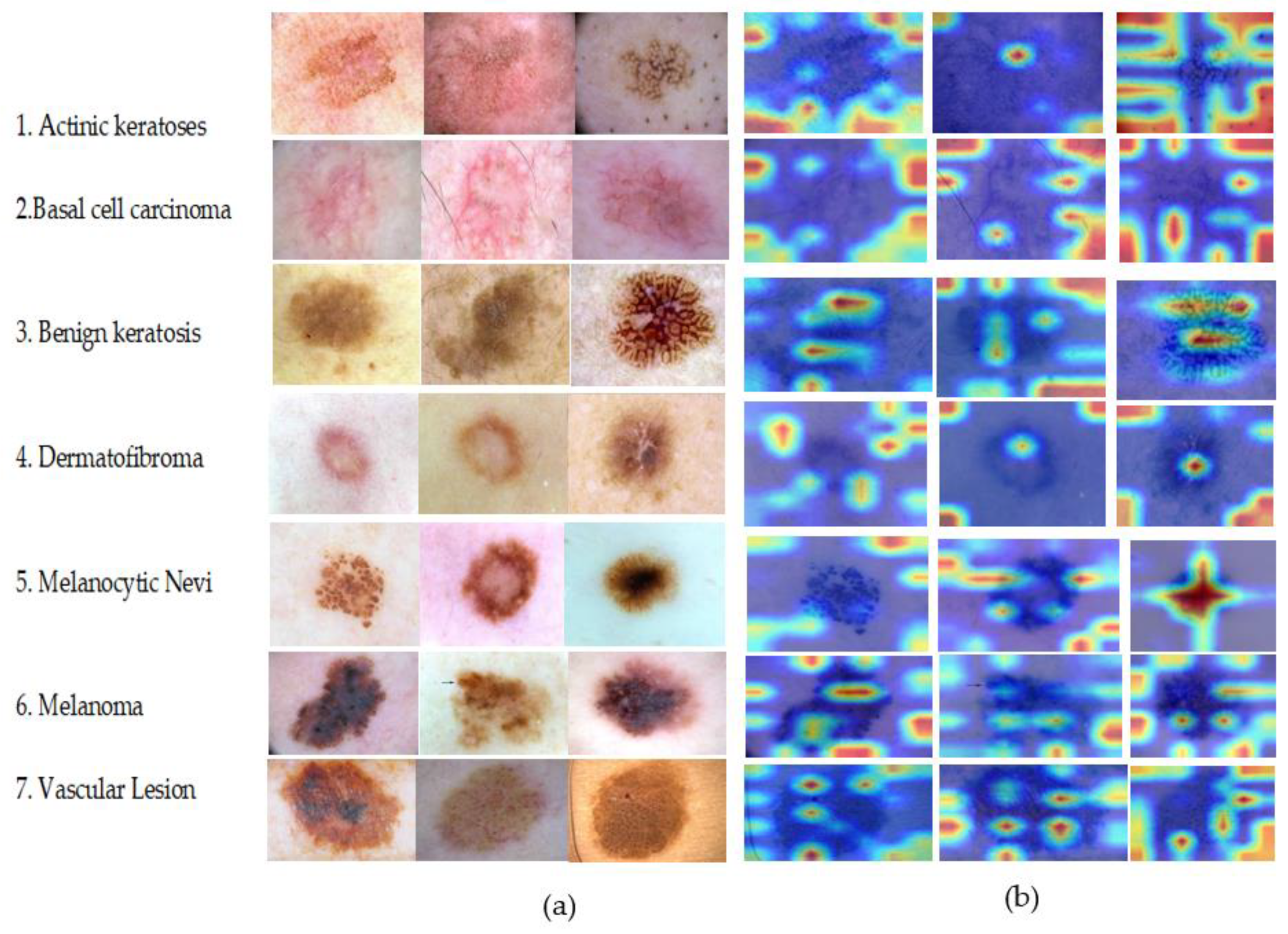

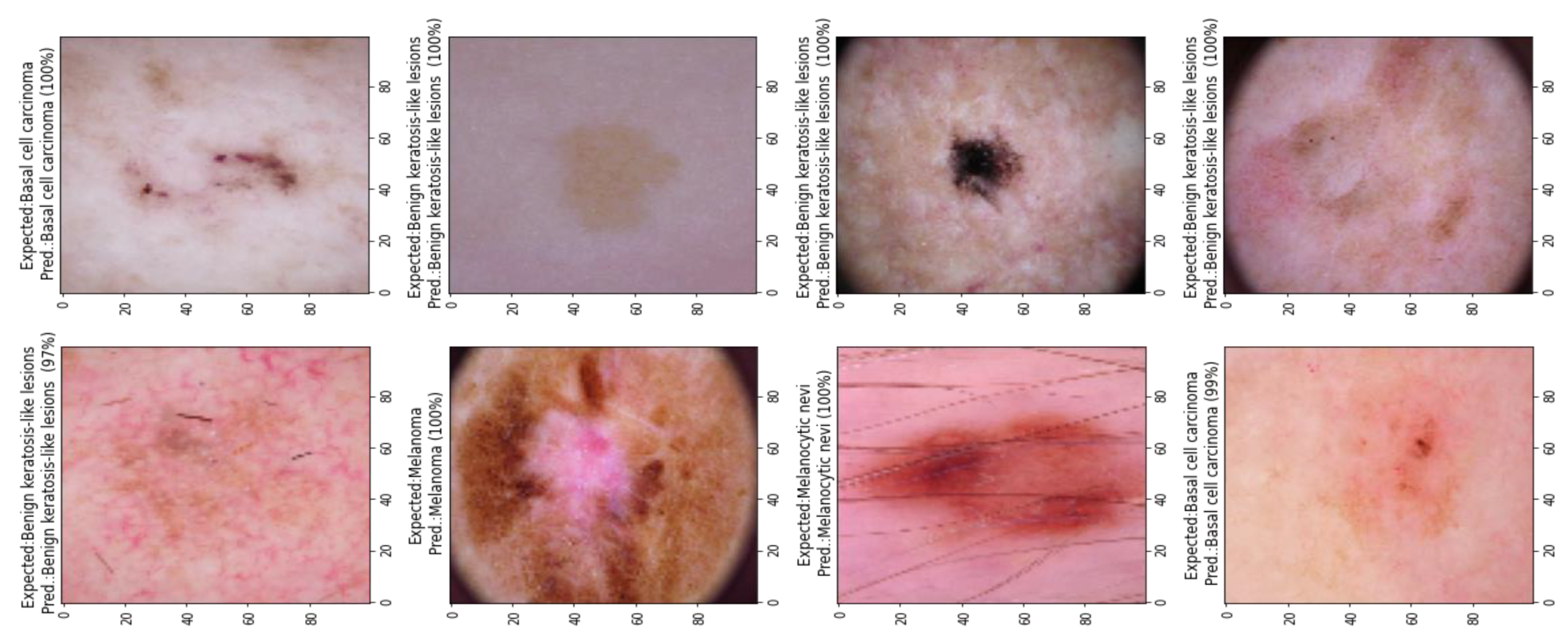

5.7. Model Interpretability

6. Discussion

6.1. Advantages of Proposed Approach

- (1) The main contribution of our study is a new, highly optimized, lightweight CNN model that can classify seven types of pigmented skin lesions (PSLs).

- (2) The ShuffleNet-Light design reduces network complexity and increases accuracy and recognition speed compared with currently available deep learning (DL) architectures. The SE block added to the ShuffleNet architecture contributes to this accuracy without much impact on the model’s complexity or recognition rate.

- (3) ShuffleNet-Light generalizes well, with no overfitting or underfitting problems.

- (4) We replaced the original ReLU activation function in the proposed model with the GELU function, which, in our analysis, improved accuracy. The choice of activation function therefore influences how well the model classifies skin lesions.

- (5) We think that our research shows that it might be possible to recognize and track PSLs in real time when mobile phones are used in outreach settings.
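To make the ReLU-to-GELU substitution in point (4) concrete, the sketch below compares the two activations using the exact GELU definition, x·Φ(x), where Φ is the standard normal cumulative distribution function. It is a standalone illustration and not the authors' training code; the function names are chosen for this example.

```python
import math


def relu(x):
    """Rectified linear unit: zero for negative inputs, identity otherwise."""
    return max(0.0, x)


def gelu(x):
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


# GELU is smooth and lets small negative values pass through slightly,
# whereas ReLU clips every negative input to exactly zero.
for value in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={value:+.1f}  relu={relu(value):.4f}  gelu={gelu(value):.4f}")
```

For large positive inputs the two functions nearly coincide, so the difference matters mostly around zero, where GELU keeps a non-zero gradient for slightly negative activations.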

6.2. Limitations of Proposed Approach and Future Works

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| PSLs | pigmented skin lesions |

| CAD | Computer-Aided Diagnosis |

| DT | Decision Tree |

| DL | Deep Learning |

| PDFFEM | Pigmented deep fused features extraction method |

| LDA | Linear Discriminant Analysis |

| CNN | Convolutional Neural Network |

| TL | Transfer Learning |

| ML | Machine Learning |

| SVM | support vector machine |

| KNN | k-nearest neighbor |

| NN | Neural Network |

| ResNet | Residual Network |

| DCNN | Deep convolutional neural network |

| FixCaps | Improved Capsule network |

| LSTM | Long short-term memory |

| ACC | Accuracy |

| SE | Sensitivity |

| AUC | area under the receiver operating characteristic curve |

| MCC | Matthews correlation coefficient |

| SP | Specificity |

| PR | Precision |

| RC | Recall |

| M | millions |

| SGD | Stochastic gradient descent |

| RMSprop | Root mean square propagation |

| Adagrad | Adaptive gradient algorithm |

| Adadelta | An extension of Adagrad |

| Adam | Adaptive moment estimation |

| AdaMax | A variant of Adam |

| Nadam | Nesterov-accelerated adaptive moment estimation |

| MS | Milliseconds |

| CPU | Central processing unit |

| GPU | Graphics processing unit |

| TPU | Tensor processing unit |

| CA | Channel-wise attention |

| FLOPs | Floating-point operations |

| AKIEC | Actinic keratoses |

| BCC | Basal cell carcinoma |

| BKL | Benign keratosis |

| DF | Dermatofibroma |

| NV | Melanocytic Nevi |

| MEL | Melanoma |

| VASC | Vascular Lesion |

References

- Nie, Y.; Sommella, P.; Carratù, M.; Ferro, M.; O’nils, M.; Lundgren, J. Recent Advances in Diagnosis of Skin Lesions using Dermoscopic Images based on Deep Learning. IEEE Access 2022, 10, 95716–95747.

- Pacheco, A.G.; Krohling, R.A. Recent advances in deep learning applied to skin cancer detection. arXiv 2019, arXiv:1912.03280.

- Jones, O.T.; Matin, R.N.; van der Schaar, M.; Bhayankaram, K.P.; Ranmuthu, C.K.I.; Islam, M.S.; Behiyat, D.; Boscott, R.; Calanzani, N.; Emery, J.; et al. Artificial intelligence and machine learning algorithms for early detection of skin cancer in community and primary care settings: A systematic review. Lancet Digit. Health 2022, 4, e466–e476.

- Qureshi, I.; Yan, J.; Abbas, Q.; Shaheed, K.; Riaz, A.B.; Wahid, A.; Khan, M.W.J.; Szczuko, P. Medical Image Segmentation Using Deep Semantic-based Methods: A Review of Techniques, Applications and Emerging Trends. Inf. Fusion 2022, 90, 316–352.

- Alqudah, A.M.; Qazan, S.; Al-Ebbini, L.; Alquran, H.; Qasmieh, I.A. ECG heartbeat arrhythmias classification: A comparison study between different types of spectrum representation and convolutional neural networks architectures. J. Ambient. Intell. Humaniz. Comput. 2022, 13, 4877–4907.

- Popescu, D.; El-Khatib, M.; Ichim, L. Skin Lesion Classification Using Collective Intelligence of Multiple Neural Networks. Sensors 2022, 22, 4399.

- Manimurugan, S. Hybrid high performance intelligent computing approach of CACNN and RNN for skin cancer image grading. Soft Comput. 2022, 27, 579–589.

- Hosny, K.M.; Kassem, M.A.; Fouad, M.M. Classification of Skin Lesions into Seven Classes Using Transfer Learning with AlexNet. J. Digit. Imaging 2020, 33, 1325–1334.

- Jinnai, S.; Yamazaki, N.; Hirano, Y.; Sugawara, Y.; Ohe, Y.; Hamamoto, R. The Development of a Skin Cancer Classification System for Pigmented Skin Lesions Using Deep Learning. Biomolecules 2020, 10, 1123.

- Srinivasu, P.N.; SivaSai, J.G.; Ijaz, M.F.; Bhoi, A.K.; Kim, W.; Kang, J.J. Classification of skin disease using deep learning neural networks with MobileNet V2 and LSTM. Sensors 2021, 21, 2852.

- Lan, Z.; Cai, S.; He, X.; Wen, X. FixCaps: An Improved Capsules Network for Diagnosis of Skin Cancer. IEEE Access 2022, 10, 76261–76267.

- Ali, K.; Shaikh, Z.A.; Khan, A.A.; Laghari, A.A. Multiclass skin cancer classification using EfficientNets—A first step towards preventing skin cancer. Neurosci. Inform. 2021, 2, 100034.

- Rasel, M.A.; Obaidellah, U.H.; Kareem, S.A. Convolutional neural network-based skin lesion classification with Variable Nonlinear Activation Functions. IEEE Access 2022, 10, 83398–83414.

- Aldhyani, T.H.; Verma, A.; Al-Adhaileh, M.H.; Koundal, D. Multi-Class Skin Lesion Classification Using a Lightweight Dynamic Kernel Deep-Learning-Based Convolutional Neural Network. Diagnostics 2022, 12, 2048.

- Shahin, A.; Sipon, M.; Jahurul, H.; Mahbubur, R.; Khairul, I. An enhanced technique of skin cancer classification using deep convolutional neural network with transfer learning models. J. Mach. Learn. Appl. 2021, 5, 100036.

- Anand, V.; Gupta, S.; Altameem, A.; Nayak, S.R.; Poonia, R.C.; Saudagar, A.K.J. An Enhanced Transfer Learning Based Classification for Diagnosis of Skin Cancer. Diagnostics 2022, 12, 1628.

- Han, S.S.; Kim, M.S.; Lim, W.; Park, G.H.; Park, I.; Chang, S.E. Classification of the clinical images for benign and malignant cutaneous tumors using a deep learning algorithm. J. Investig. Dermatol. 2018, 138, 1529–1538.

- Javed, R.; Shafry Mohd Rahim, M.; Saba, T.; Sahar, G.; Javed Awan, M. An Accurate Skin Lesion Classification Using Fused Pigmented Deep Feature Extraction Method. In Prognostic Models in Healthcare: AI and Statistical Approaches; Springer: Singapore, 2022; Volume 109, pp. 47–78.

- Shetty, B.; Fernandes, R.; Rodrigues, A.P.; Chengoden, R.; Bhattacharya, S.; Lakshmanna, K. Skin lesion classification of dermoscopic images using machine learning and convolutional neural network. Sci. Rep. 2022, 12, 18134.

- Saba, T.; Khan, M.A.; Rehman, A.; Marie-Sainte, S.L. Region extraction and classification of skin cancer: A heterogeneous framework of deep CNN features fusion and reduction. J. Med. Syst. 2019, 43, 289.

- Mahbod, A.; Schaefer, G.; Wang, C.; Dorffner, G.; Ecker, R.; Ellinger, I. Transfer learning using a multi-scale and multi-network ensemble for skin lesion classification. Comput. Methods Programs Biomed. 2020, 193, 105475.

- Rezk, E.; Eltorki, M.; El-Dakhakhni, W. Leveraging Artificial Intelligence to Improve the Diversity of Dermatological Skin Color Pathology: Protocol for an Algorithm Development and Validation Study. JMIR Res. Protoc. 2022, 11, e34896.

- Hameed, N.; Shabut, A.M.; Ghosh, M.K.; Hossain, M.A. Multi-class multi-level classification algorithm for skin lesions classification using machine learning techniques. Expert Syst. Appl. 2020, 141, 112961.

- Khan, M.A.; Sharif, M.; Akram, T.; Damaševičius, R.; Maskeliūnas, R. Skin Lesion Segmentation and Multiclass Classification Using Deep Learning Features and Improved Moth Flame Optimization. Diagnostics 2021, 11, 811.

- Abbas, Q.; Celebi, M.E. DermoDeep—A classification of melanoma-nevus skin lesions using multi-feature fusion of visual features and deep neural network. Multimed. Tools Appl. 2019, 78, 23559–23580.

- Harangi, B.; Baran, A.; Hajdu, A. Assisted deep learning framework for multi-class skin lesion classification considering a binary classification support. Biomed. Signal Process. Control 2020, 62, 102–115.

- Iqbal, I.; Younus, M.; Walayat, K.; Kakar, M.U.; Ma, J. Automated multi-class classification of skin lesions through deep convolutional neural network with dermoscopic images. Comput. Med. Imaging Graph. 2021, 88, 101843.

- Khan, M.A.; Sharif, M.I.; Raza, M.; Anjum, A.; Saba, T.; Shad, S.A. Skin lesion segmentation and classification: A unified framework of deep neural network features fusion and selection. Expert Syst. 2022, 39, e12497.

- Arora, G.; Dubey, A.K.; Jaffery, Z.A.; Rocha, A. A comparative study of fourteen deep learning networks for multi skin lesion classification (MSLC) on unbalanced data. Neural Comput. Appl. 2022, 1–27.

- Alfi, I.A.; Rahman, M.M.; Shorfuzzaman, M.; Nazir, A. A Non-Invasive Interpretable Diagnosis of Melanoma Skin Cancer Using Deep Learning and Ensemble Stacking of Machine Learning Models. Diagnostics 2022, 12, 726.

- Abbas, Q.; Qureshi, I.; Yan, J.; Shaheed, K. Machine Learning Methods for Diagnosis of Eye-Related Diseases: A Systematic Review Study Based on Ophthalmic Imaging Modalities. Arch. Comput. Methods Eng. 2022, 29, 3861–3918.

- Kassem, M.A.; Hosny, K.M.; Damaševičius, R.; Eltoukhy, M.M. Machine Learning and Deep Learning Methods for Skin Lesion Classification and Diagnosis: A Systematic Review. Diagnostics 2021, 11, 1390.

- Hasan, M.K.; Elahi, M.T.E.; Alam, M.A.; Jawad, M.T.; Martí, R. DermoExpert: Skin lesion classification using a hybrid convolutional neural network through segmentation, transfer learning, and augmentation. Inform. Med. Unlocked 2022, 28, 100819.

- Yu, X.; Wang, J.; Hong, Q.Q.; Teku, R.; Wang, S.H.; Zhang, Y.D. Transfer learning for medical images analyses: A survey. Neurocomputing 2022, 489, 230–254.

- Nguyen, D.M.; Nguyen, T.T.; Vu, H.; Pham, Q.; Nguyen, M.D.; Nguyen, B.T.; Sonntag, D. TATL: Task agnostic transfer learning for skin attributes detection. Med. Image Anal. 2022, 78, 102359.

- Panthakkan, A.; Anzar, S.M.; Jamal, S.; Mansoor, W. Concatenated Xception-ResNet50—A novel hybrid approach for accurate skin cancer prediction. Comput. Biol. Med. 2022, 150, 106170.

- Jasil, S.P.; Ulagamuthalvi, V. Deep learning architecture using transfer learning for classification of skin lesions. J. Ambient. Intell. Humaniz. Comput. 2021, 1–8.

- Tang, Z.; Yang, J.; Li, Z.; Qi, F. Grape disease image classification based on lightweight convolution neural networks and channelwise attention. Comput. Electron. Agric. 2020, 178, 105735.

- Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-sources dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161.

- Rotemberg, V.; Kurtansky, N.; Betz-Stablein, B.; Caffery, L.; Chousakos, E.; Codella, N.; Combalia, M.; Dusza, S.; Guitera, P.; Gutman, D.; et al. A patient-centric dataset of images and metadata for identifying melanomas using clinical context. Sci. Data 2021, 8, 34.

- Kassem, M.A.; Hosny, K.M.; Fouad, M.M. Skin lesions classification into eight classes for isic 2019 using deep convolutional neural network and transfer learning. IEEE Access 2020, 8, 114822–114832.

- Gessert, N.; Nielsen, M.; Shaikh, M.; Werner, R.; Schlaefer, A. Skin lesion classification using ensembles of multi-resolution EfficientNets with meta data. MethodsX 2020, 7, 100864.

- Ravikumar, A.; Sriraman, H.; Saketh, P.M.S.; Lokesh, S.; Karanam, A. Effect of neural network structure in accelerating performance and accuracy of a convolutional neural network with GPU/TPU for image analytics. PeerJ Comput. Sci. 2022, 8, e909.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 14 June 2018; pp. 7132–7141.

- Fraiwan, M.; Faouri, E. On the Automatic Detection and Classification of Skin Cancer Using Deep Transfer Learning. Sensors 2022, 22, 4963.

- Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 6.

- Chattopadhyay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM: Improved visual explanations for deep convolutional networks. arXiv 2017, arXiv:1710.11063.

- Manipur, I.; Manzo, M.; Granata, I.; Giordano, M.; Maddalena, L.; Guarracino, M.R. Netpro2vec: A graph embedding framework for biomedical applications. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021, 19, 729–740.

| Classes | No. of Images | Data Augmentation |

|---|---|---|

| AK | 500 | 4000 |

| BCC | 2000 | 4000 |

| BK | 2000 | 4000 |

| DF | 200 | 4000 |

| NV | 6000 | 4000 |

| MEL | 3000 | 4000 |

| VASC | 300 | 4000 |

| Total | 14,000 | 28,000 |
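The class counts in the table above imply very different resampling factors per class. The sketch below computes them; the counts are taken from the table, while the function name `resampling_plan` and the reading that classes above the 4000 target (such as NV) are undersampled are assumptions, since the procedure itself is not described here.

```python
# Original image counts per class, as listed in the table.
ORIGINAL_COUNTS = {
    "AK": 500, "BCC": 2000, "BK": 2000, "DF": 200,
    "NV": 6000, "MEL": 3000, "VASC": 300,
}
TARGET_PER_CLASS = 4000  # per-class count after balancing, from the table


def resampling_plan(counts, target):
    """Return the multiplicative factor and assumed action for each class."""
    plan = {}
    for name, count in counts.items():
        if count < target:
            action = "augment"
        elif count > target:
            action = "undersample"  # assumption: the source does not say how
        else:
            action = "keep"
        plan[name] = {"factor": target / count, "action": action}
    return plan


plan = resampling_plan(ORIGINAL_COUNTS, TARGET_PER_CLASS)
for name, entry in plan.items():
    print(f"{name:5s} x{entry['factor']:.2f}  {entry['action']}")
```

The smallest class (DF) needs roughly twenty synthetic variants per original image, which is worth keeping in mind when reading the per-class results, since heavily augmented classes contain many near-duplicates.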

| Parameters | Angle | Brightness | Zoom | Shear | Mode | Horizontal | Vertical | Rescale | Noise |

|---|---|---|---|---|---|---|---|---|---|

| values | 30° | [0.9, 1.1] | 0.1 | 0.1 | Constant | Flip | Flip | 1./255 | 0.45 |
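The parameter names in the table above resemble the keyword arguments of Keras's `ImageDataGenerator`. Assuming that is the tool that was used (the text here does not say), the settings could be collected as follows. The `NOISE_LEVEL` entry has no built-in counterpart in that class and is kept as a separate, hypothetical setting; `rescale_pixels` is a small helper written only for this example.

```python
# Keyword names follow tf.keras.preprocessing.image.ImageDataGenerator,
# which is an assumed tool; values are copied from the table.
AUGMENTATION_KWARGS = {
    "rotation_range": 30,            # Angle: 30 degrees
    "brightness_range": [0.9, 1.1],  # Brightness
    "zoom_range": 0.1,               # Zoom
    "shear_range": 0.1,              # Shear
    "fill_mode": "constant",         # Mode
    "horizontal_flip": True,         # Horizontal: Flip
    "vertical_flip": True,           # Vertical: Flip
    "rescale": 1.0 / 255,            # Rescale
}

# Noise: 0.45 in the table; how it was applied is not specified (hypothetical).
NOISE_LEVEL = 0.45


def rescale_pixels(pixels, factor=AUGMENTATION_KWARGS["rescale"]):
    """Map 8-bit intensities to [0, 1], mirroring the 'rescale' setting."""
    return [pixel * factor for pixel in pixels]


print(rescale_pixels([0, 128, 255]))

# With TensorFlow installed, the dictionary could be passed as
# ImageDataGenerator(**AUGMENTATION_KWARGS).
```

Keeping the settings in one dictionary makes it easy to log them alongside each experiment and to reuse the same values for every class.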

| Classes | ACC | PR | SE | SP | F1-Score | MCC |

|---|---|---|---|---|---|---|

| (1) ACTINIC KERATOSES (AKIEC) | 96.1 ± 1.3 | 94.2 ± 2.0 | 96.2 ± 1.4 | 94.0 ± 2.2 | 95.2 ± 1.2 | 97.4 |

| (2) BASAL CELL CARCINOMA (BCC) | 97.5 ± 0.8 | 96.1 ± 1.4 | 97.0 ± 0.2 | 95.9 ± 1.5 | 97.5 ± 0.8 | 98.4 |

| (3) BENIGN KERATOSIS (BKL) | 98.3 ± 0.8 | 97.2 ± 1.2 | 99.6 ± 0.3 | 97.1 ± 1.4 | 98.4 ± 0.7 | 96.0 |

| (4) DERMATOFIBROMA (DF) | 99.2 ± 0.3 | 99.1 ± 0.5 | 99.4 ± 0.5 | 99.1 ± 0.5 | 98.2 ± 0.3 | 98.0 |

| (5) MELANOMA (MEL) | 98.1 ± 0.5 | 99.0 ± 0.5 | 98.3 ± 0.5 | 98.9 ± 0.6 | 99.1 ± 0.5 | 100.0 |

| (6) MELANOCYTIC NEVI (NV) | 98.2 ± 0.3 | 99.2 ± 0.4 | 97.3 ± 0.5 | 99.2 ± 0.4 | 98.2 ± 0.3 | 96.0 |

| (7) VASCULAR SKIN LESIONS (VASC) | 99.4 ± 0.4 | 99.6 ± 0.3 | 99.1 ± 0.6 | 99.6 ± 0.3 | 99.4 ± 0.4 | 99.5 |
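For reference, the metrics reported in the table above (ACC, PR, SE, SP, F1-score, MCC) have standard definitions in terms of confusion-matrix counts. The sketch below assumes a one-vs-rest reading per class, which is a common convention but is not confirmed here; the function name and the example counts are illustrative and are not taken from the paper.

```python
import math


def binary_metrics(tp, fp, tn, fn):
    """Standard metrics from one-vs-rest confusion counts.

    Assumes every denominator except the MCC one is non-zero.
    """
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)   # also called recall
    specificity = tn / (tn + fp)
    f1 = 2 * tp / (2 * tp + fp + fn)
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denominator if denominator else 0.0
    return {
        "ACC": accuracy, "PR": precision, "SE": sensitivity,
        "SP": specificity, "F1": f1, "MCC": mcc,
    }


# Illustrative counts only, not results from the study.
metrics = binary_metrics(tp=90, fp=10, tn=85, fn=15)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

MCC ranges from -1 to 1 and uses all four confusion counts, so it is less easily inflated by class imbalance than accuracy alone.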

| TL Architectures | Complexity (FLOPs) | # Parameters (M) | Model Size (MB) | GPU Speed (MS) |

|---|---|---|---|---|

| ShuffleNet-Light | 67.3 M | 1.9 | 9.3 | 0.6 |

| ShuffleNet | 98.9 M | 2.5 | 14.5 | 1.7 |

| SqueezeNet | 94.4 M | 2.4 | 12.3 | 1.2 |

| ResNet18 | 275.8 M | 2.7 | 15.2 | 2.6 |

| MobileNet | 285.8 M | 3.4 | 16.3 | 2.7 |

| Inception-v3 | 654.3 M | 3.9 | 17.5 | 2.9 |

| Xception | 66.9 M | 2.5 | 14.5 | 2.7 |

| AlexNet | 295.8 M | 2.5 | 12.3 | 3.3 |
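The savings of ShuffleNet-Light relative to the baseline ShuffleNet can be read directly from the table above. The sketch below does that arithmetic; the two rows are copied from the table, and the dictionary keys and the helper `percent_reduction` are names chosen for this example.

```python
# Rows copied from the table: FLOPs (M), parameters (M), size (MB), GPU time (ms).
SHUFFLENET = {"flops_m": 98.9, "params_m": 2.5, "size_mb": 14.5, "gpu_ms": 1.7}
SHUFFLENET_LIGHT = {"flops_m": 67.3, "params_m": 1.9, "size_mb": 9.3, "gpu_ms": 0.6}


def percent_reduction(baseline, value):
    """Relative reduction of `value` with respect to `baseline`, in percent."""
    return (baseline - value) / baseline * 100.0


for key, baseline in SHUFFLENET.items():
    saving = percent_reduction(baseline, SHUFFLENET_LIGHT[key])
    print(f"{key:9s} {saving:5.1f}% lower than ShuffleNet")
```

With the table's values this works out to roughly 32% fewer FLOPs, 24% fewer parameters, a model about 36% smaller, and GPU time about 65% lower than the ShuffleNet baseline.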

| State-of-the-Art | Classes | Augment | Epochs | Time (S) | ACC | F1-Score | MCC |

|---|---|---|---|---|---|---|---|

| Light-Dermo Model | 7 | Yes | 40 | 2.4 | 98.1% | 98.1% | 98.1% |

| 9CNN models [6] | 7 | Yes | 40 | 12 | 80.5% | 80.5% | 82.5% |

| AlexNet [8] | 7 | Yes | 40 | 17 | 81.9% | 81.9% | 81.9% |

| MobileNet-LSTM [10] | 7 | Yes | 40 | 13 | 82.3% | 82.3% | 80.3% |

| FixCaps [11] | 7 | Yes | 40 | 15 | 84.8% | 84.8% | 83.8% |

| EfficientNet [12] | 7 | Yes | 40 | 18 | 75.4% | 75.4% | 74.4% |

| CNN-Leaky [13] | 7 | Yes | 40 | 20 | 76.5% | 76.5% | 75.5% |

| DCNN [15] | 7 | Yes | 40 | 22 | 77.9% | 77.9% | 76.9% |

| Deep Learning Models | ACC | PR | SE | SP | F1-Score | MCC |

|---|---|---|---|---|---|---|

| ShuffleNet-Light | 99.1% | 98.3% | 97.4% | 98.2% | 98.1% | 98% |

| ShuffleNet | 88.5% | 87.3% | 88.3% | 88.7% | 87.7% | 85% |

| SqueezeNet | 87.9% | 84.5% | 90.8% | 85.4% | 87.6% | 84% |

| ResNet18 | 89.3% | 87.1% | 93.1% | 87.9% | 90.1% | 89% |

| MobileNet | 90.8% | 90.2% | 90.0% | 91.4% | 90.1% | 88% |

| Inception-v3 | 89.4% | 87.7% | 90.0% | 88.9% | 88.8% | 88% |

| Xception | 89.5% | 88.3% | 89.3% | 90.7% | 88.7% | 88% |

| AlexNet [17] | 88.9% | 87.6% | 88.8% | 88.9% | 87.7% | 86% |

| CNN-TL Architectures | Complexity (FLOPs) | # Parameters (M) | Model Size (MB) | GPU Speed (MS) |

|---|---|---|---|---|

| ShuffleNet-Light | 67.3 M | 1.9 | 9.3 | 0.6 |

| ShuffleNet | 98.9 M | 2.5 | 14.5 | 1.7 |

| SqueezeNet | 94.4 M | 2.4 | 12.3 | 1.2 |

| ResNet18 | 275.8 M | 2.7 | 15.2 | 2.6 |

| MobileNet | 285.8 M | 3.4 | 16.3 | 2.7 |

| Inception-v3 | 654.3 M | 3.9 | 17.5 | 2.9 |

| Xception | 66.9 M | 2.5 | 14.5 | 2.7 |

| AlexNet | 295.8 M | 2.5 | 12.3 | 3.3 |

| Batch Size | Epochs | CPU/TPU/GPU (MS) |

|---|---|---|

| 64 | 40 | 600/400/500 |

| 128 | 40 | 800/450/550 |

| 256 | 40 | 900/500/600 |

| 512 | 40 | 900/500/600 |

| 1024 | 40 | 900/500/600 |

| Model | FLOPs | 256 × 256 | 300 × 300 | 400 × 400 |

|---|---|---|---|---|

| ShuffleNet | 80 M | 50.2 ms | 54.4 ms | 54.4 ms |

| ShuffleNet-Light+Inception-v3 | 60 M | 34.5 ms | 35.9 ms | 35.9 ms |

| ShuffleNet-Light+AlexNet | 220 M | 40.8 ms | 45.4 ms | 45.4 ms |

| ShuffleNet-Light+MobileNet | 120 M | 47.7 ms | 50.7 ms | 50.7 ms |

| Model | Shuffle | No Shuffle | %Err |

|---|---|---|---|

| ShuffleNet | 40% | 50% | 2.3 |

| ShuffleNet-Light+Inception-v3 | 55% | 65% | 1.4 |

| ShuffleNet-Light+AlexNet | 60% | 70% | 3.2 |

| ShuffleNet-Light+MobileNet | 40% | 50% | 2.2 |

| Model | Adam | RMSprop | SGD | AdaMax | Adadelta | Nadam |

|---|---|---|---|---|---|---|

| ShuffleNet | 2.9 m | 3.9 m | 2.9 m | 3.2 m | 3.2 m | 3.0 m |

| ShuffleNet-Light+Inception-v3 | 2.0 m | 2.1 m | 1.9 m | 2.1 m | 2.1 m | 2.0 m |

| ShuffleNet-Light+AlexNet | 2.4 m | 3.4 m | 2.4 m | 3.4 m | 3.4 m | 2.5 m |

| ShuffleNet-Light+MobileNet | 2.5 m | 3.5 m | 2.5 m | 4.5 m | 3.5 m | 2.7 m |

| Model | Sigmoid | Tanh | ReLU | Leaky-ReLU | GELU |

|---|---|---|---|---|---|

| ShuffleNet | 89% | 89% | 88% | 92% | 90% |

| ShuffleNet-Light+Inception-v3 | 96% | 96% | 97% | 96% | 99% |

| ShuffleNet-Light+AlexNet | 90% | 91% | 89% | 89% | 90% |

| ShuffleNet-Light+MobileNet | 90% | 90% | 88% | 87% | 89% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Baig, A.R.; Abbas, Q.; Almakki, R.; Ibrahim, M.E.A.; AlSuwaidan, L.; Ahmed, A.E.S. Light-Dermo: A Lightweight Pretrained Convolution Neural Network for the Diagnosis of Multiclass Skin Lesions. Diagnostics 2023, 13, 385. https://doi.org/10.3390/diagnostics13030385