1. Introduction

Health care is based on high-quality patient treatment, and to ensure this quality, the competence of health-care professionals needs to be systematically evaluated [1]. In medical imaging, the radiological report plays an important role in patient treatment [2] and helps general practitioners treat the patient. Radiographs are important in evaluating patients with upper- or lower-extremity trauma [3,4]. Thus, the radiology report based on radiographs has an important role in patient treatment.

Extremity fractures are the second-most missed diagnosis when reporting on radiographs [5]. This is especially relevant now that cross-sectional imaging represents a growing proportion of the teaching material during radiology residency training. Missed findings in radiographs may result in several complications for the patient [6]. Identifying mistakes made in radiograph interpretation is an important way to improve interpretation competence [7]. Up to 80% of diagnostic errors in radiology are classified as perceptual errors, in which the abnormal finding is not seen [2,8]. These errors are more frequent during the evening and nighttime [9,10,11]. In skeletal radiology, most malpractice claims against radiologists are related to errors in fracture interpretation [12,13,14].

In summary, radiographs are still used as first-line studies to evaluate patients with possible fractures. Therefore, interpretation competence should be evaluated continuously. Interpretation errors in radiographs are frequently associated with worse patient outcomes. Data remain limited on the diagnostic performance of specialists and residents in musculoskeletal (MSK) radiograph interpretation, especially with regard to time of day and subtle vs. obvious findings. In this study, we evaluated only upper- and lower-extremity MSK regions because of their frequency and their limited number of imaging outcomes (e.g., fracture or no fracture).

The purpose of this study was to determine radiology specialists' and residents' performance in radiograph interpretation and the rate of discrepancy between them. We hypothesized that (1) radiology specialists' performance is superior to residents' performance, (2) residents miss more subtle radiographic findings than specialists do, and (3) missed findings increase during the evening and night.

2. Materials and Methods

This retrospective cross-sectional study received ethical approval from the Ethics Committee of the University of Turku (ETMK Dnro: 38/1801/2020). This study complied with the Declaration of Helsinki and was performed according to the ethics committee approval. Because of the retrospective nature of the study, the need for informed consent was waived by the Ethics Committee of the Hospital District of Southwest Finland.

This retrospective study reviewed appendicular radiographs (N = 1006) interpreted by radiology specialists (n = 506) and residents (n = 500) between 2018 and 2021. This study design allowed us to collect the reports at a single time point and was less time-consuming than a longitudinal or prospective design. Different MSK body parts were included, and the same number of patient cases was included in every MSK region for both radiology specialists and residents. Cases were selected with the following inclusion criteria: (a) trauma indication, (b) original radiology report made by either a radiology specialist or a resident, and (c) primary radiographs. The exclusion criteria were (a) non-trauma indication, (b) no original report found in the picture archiving and communication system (PACS), and (c) control study. All radiographs were re-interpreted by two subspecialty-level MSK radiologists with 20 and 25 years of experience. Double (dual) reading was used; it has been shown to be an effective but time-consuming way of finding discrepancies in radiology reports [15]. The two radiologists were blinded to the original report and to whether it had been made by a radiology specialist or a resident. Their consensus interpretation was compared against the original report. All radiographs were viewed in the PACS on diagnostic monitors. To improve the generalizability of the results, data from various imaging devices were included.

Interpretation error was defined as disagreement between the original report and the consensus of the two subspecialty-level MSK radiologists. Each interpretation error was evaluated and subcategorized. In addition, the implications of each interpretation error for patient treatment were graded by severity, based on the consensus of the two subspecialty-level MSK radiologists: Grade 1, no clinical importance; Grade 2, unable to determine whether the error had clinical importance; and Grade 3, clear clinical effect on patient treatment. Finally, all abnormal radiographs were labeled as having subtle (n = 103) or obvious (n = 310) findings based on the two radiologists' consensus.
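The error definition above can be sketched as a comparison of each original report with the consensus reading. This is a simplified illustration, not the authors' procedure: it assumes each case reduces to two booleans (report abnormal, consensus abnormal), so it only separates misses from overcalls and treats a report that is abnormal but describes a different finding as agreement. The function names are hypothetical.

```python
from collections import Counter


def classify_case(report_abnormal: bool, consensus_abnormal: bool) -> str:
    """Compare the original report with the consensus reference reading.

    Simplification (assumption): only misses and overcalls are distinguished.
    """
    if consensus_abnormal and not report_abnormal:
        return "miss"       # abnormal finding present but not reported
    if report_abnormal and not consensus_abnormal:
        return "overcall"   # finding reported that the consensus did not confirm
    return "agree"


def error_rates(cases):
    """Percentage of misses and overcalls among all (report, consensus) pairs."""
    counts = Counter(classify_case(report, consensus) for report, consensus in cases)
    total = len(cases)
    return {kind: 100 * counts[kind] / total for kind in ("miss", "overcall")}
```

With the overall totals from the Results (67 misses and 32 overcalls among 1006 radiographs), these percentages round to 6.7% and 3.2%.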

Patient age, sex, time of interpretation, and date of interpretation were recorded. Data were collected and managed using REDCap (Research Electronic Data Capture) electronic data capture tools hosted at Turku University.

Patients were divided into three age groups (Table 1) representing pediatric (1–16 years), adult (17–64 years), and elderly (>65 years) patients. The distributions of age group (p = 0.66) and sex (p = 0.53) did not differ significantly between the cases interpreted by radiology specialists and those interpreted by residents. In addition, the time of interpretation was classified to represent morning, evening, and night shifts.
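A minimal sketch of the two groupings described above. The age boundaries follow the text. One assumption: age 65 is counted as elderly, because the stated groups leave it unassigned. The shift cut-off times are hypothetical placeholders; the study does not report its exact shift boundaries.

```python
from datetime import time


def age_group(age_years: int) -> str:
    """Pediatric 1-16, adult 17-64, elderly otherwise.

    Assumption: 65 counts as elderly (the text leaves it unassigned).
    """
    if age_years <= 16:
        return "pediatric"
    if age_years <= 64:
        return "adult"
    return "elderly"


# Hypothetical shift boundaries; not reported in the study.
MORNING_START = time(8, 0)
EVENING_START = time(16, 0)
NIGHT_START = time(22, 0)


def shift(t: time) -> str:
    """Map a time of interpretation to a shift label."""
    if MORNING_START <= t < EVENING_START:
        return "morning"
    if EVENING_START <= t < NIGHT_START:
        return "evening"
    return "night"  # 22:00-07:59, wrapping past midnight
```

Keeping the cut-offs as named constants makes it easy to rerun the grouping with a department's actual shift schedule.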

Categorical variables were summarized with counts and percentages, and continuous age with means and ranges. Associations between two categorical variables were evaluated with the chi-squared test or Fisher's exact test (with Monte Carlo simulation when needed). Two-tailed p-values less than 0.05 were considered statistically significant. Sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) were calculated together with their 95% confidence intervals (CIs).
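The four accuracy measures follow from a 2 × 2 table of the original reports against the consensus reading. The sketch below is an assumption-laden illustration: the text does not state which CI method was used, so the Wilson score interval is swapped in here as one common choice (SAS also offers, for example, exact Clopper-Pearson intervals). Function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def wilson_ci(successes: int, n: int, level: float = 0.95):
    """Wilson score interval for a binomial proportion (assumed CI method)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


def diagnostic_metrics(tp: int, fp: int, fn: int, tn: int):
    """Point estimate and 95% CI for each measure, with consensus as reference."""
    return {
        "sensitivity": (tp / (tp + fn), wilson_ci(tp, tp + fn)),
        "specificity": (tn / (tn + fp), wilson_ci(tn, tn + fp)),
        "ppv": (tp / (tp + fp), wilson_ci(tp, tp + fp)),
        "npv": (tn / (tn + fn), wilson_ci(tn, tn + fn)),
    }


# Hypothetical counts for illustration only (not study data).
metrics = diagnostic_metrics(tp=86, fp=8, fn=14, tn=92)
```

Here sensitivity is 86/100 = 0.86 with a Wilson interval of roughly 0.78 to 0.91. Unlike the simple Wald interval, the Wilson interval stays within [0, 1] for proportions near the boundaries, which matters for regions with few errors.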

Data were analyzed using SAS software version 9.4 for Windows (SAS Institute Inc., Cary, NC, USA).

3. Results

3.1. Overall Findings

Of the 1006 radiographs, 41% were abnormal. In total, 67 radiographic findings were missed (6.7%), and 32 findings were overcalled (3.2%). Of the missed fractures, 18% were in children, 60% in adults, and 22% in elderly patients. Of the overcalls, 28.1% were in children, 50% in adults, and 21.9% in elderly patients. Fracture was the most common finding involved in interpretation errors (58%). Interpretation errors occurred most often in wrist (18%) and foot (17%) radiographs.

The rates of subtle and obvious radiographic findings differed between MSK regions (p = 0.001). Subtle findings were most common in elbow (31%) and wrist (30%) radiographs. Subtle radiographic findings were most common at 15:00–16:00 (44%), 17:00–18:00 (38%), and 21:00–22:00 (38%).

Figure 1 shows the distribution of interpretation errors, subtle and obvious findings, and abnormal radiographs across the morning, evening, and night shifts.

There were no statistically significant differences in errors between the morning, evening, and night shifts (p = 0.57) (Table 2). During the evening and night, radiology specialists diagnosed radiographs correctly more often than radiology residents (93% vs. 87%), but the difference was not statistically significant. Error rates for radiology specialists were higher during 07:00–08:00, 11:00–12:00, 15:00–17:00, and 23:00–00:00. The highest error rates for radiology residents were found during 01:00–04:00, 06:00–07:00, and 16:00–18:00. Misses and overcalls did not differ significantly by weekday (p = 0.31). Most misses were made on Monday (22%) or Saturday (22%), and most overcalls were made on Friday (28%).
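Hour-by-hour error rates like those above can be tabulated by grouping reports on the hour of interpretation. A minimal sketch, assuming each report is reduced to a hypothetical `(hour_of_day, is_error)` pair:

```python
from collections import defaultdict


def hourly_error_rates(records):
    """records: iterable of (hour_of_day, is_error) pairs, hour in 0-23.

    Returns {hour: percentage of reports with an interpretation error}.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for hour, is_error in records:
        totals[hour] += 1
        errors[hour] += int(is_error)
    return {hour: 100 * errors[hour] / totals[hour] for hour in sorted(totals)}


# Hypothetical records for illustration only.
rates = hourly_error_rates([(7, True), (7, False), (12, False), (12, False)])
```

Splitting roughly 1000 reports into 24 hourly bins leaves small denominators per bin. A single error can therefore move an hourly rate by several percentage points, which is consistent with these peaks not reaching statistical significance.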

3.2. Discrepancies between Radiology Specialists and Residents

No statistically significant differences (p = 0.44) were found in interpretation errors between the radiology specialists and the residents. The radiology specialists missed findings in 5.7% of the radiographs they interpreted, while the residents missed findings in 7.6%. The radiology specialists overcalled findings in 2.8% of their radiographs and the residents in 3.6%. The overall sensitivity, specificity, positive predictive value, and negative predictive value were 0.86, 0.92, 0.88, and 0.91, respectively (Table 3). Patient age was similar (p = 0.29) in the correct-diagnosis group and the interpretation-error group. However, performance varied between MSK regions and between radiology specialists and residents.
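Group comparisons such as specialists vs. residents rest on tests of association in contingency tables. The analysis itself was run in SAS; the sketch below only illustrates the two-sided Fisher's exact test for a 2 × 2 table (for example, rows = reader group, columns = error / no error). It does not cover larger tables or the Monte Carlo variant mentioned in the Methods, and the function name is hypothetical.

```python
from math import comb


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the table [[a, b], [c, d]].

    Conditions on the row and column margins and sums the hypergeometric
    probabilities of every table no more likely than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    col2 = n - col1

    # Integer numerator of P(top-left cell = x); the denominator is comb(n, row1).
    def weight(x: int) -> int:
        return comb(col1, x) * comb(col2, row1 - x)

    observed = weight(a)
    lo, hi = max(0, row1 - col2), min(row1, col1)
    tail = sum(w for w in (weight(x) for x in range(lo, hi + 1)) if w <= observed)
    return tail / comb(n, row1)
```

Working with exact integer weights avoids floating-point ties when deciding which tables are "no more likely" than the observed one. For the textbook table [[8, 2], [1, 5]], the function returns 280/8008 ≈ 0.035.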

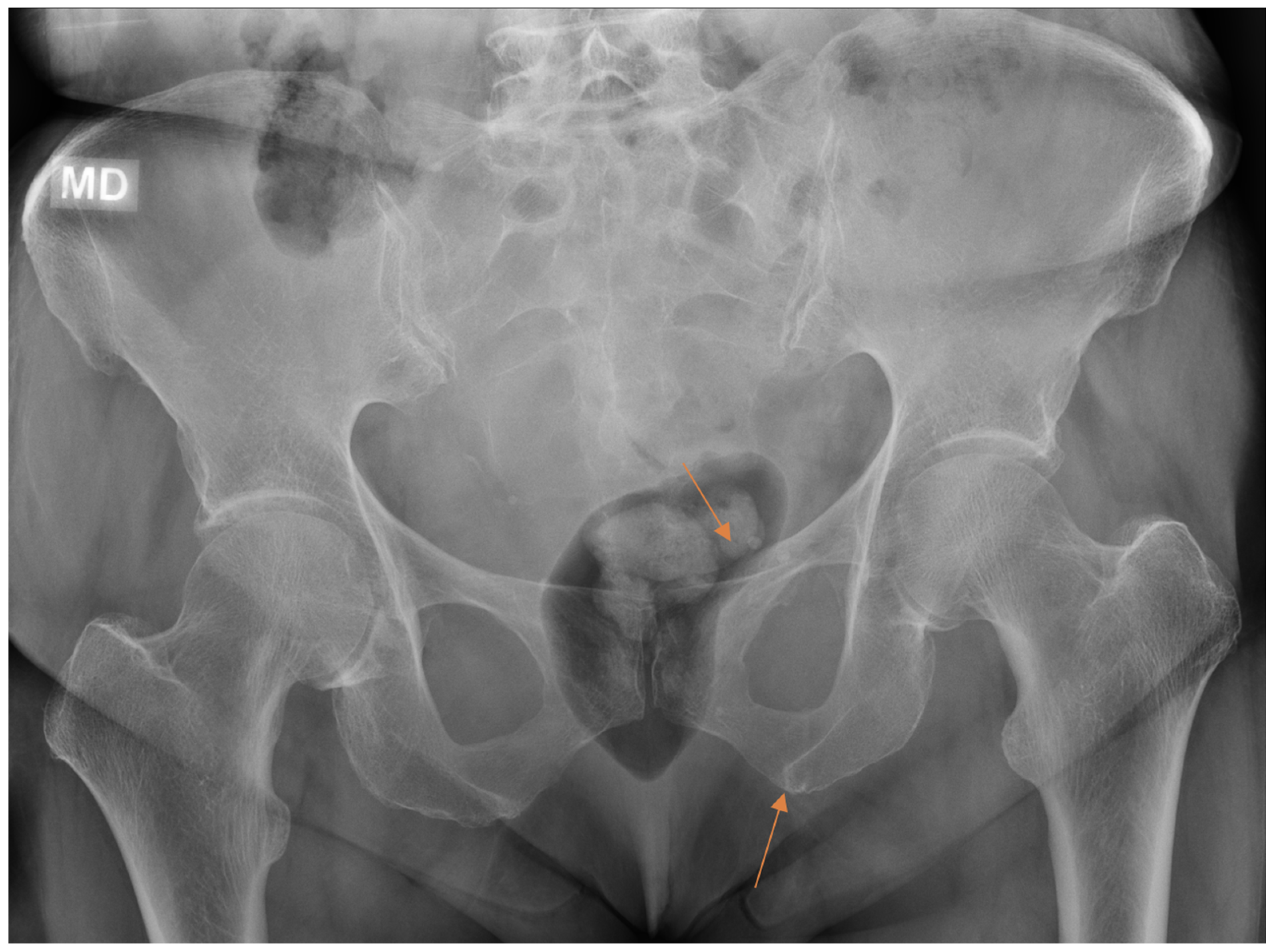

Diagnostic accuracy varied widely between MSK regions (Table 4). The highest sensitivity (0.97), specificity (0.95), negative predictive value (0.97), and positive predictive value (0.95) were found for pelvic radiographs, while wrist radiographs had the lowest overall values (sensitivity 0.82, specificity 0.83, negative predictive value 0.80, and positive predictive value 0.85). The lowest single sensitivity value (0.78), however, was found for foot radiographs. For the shoulder, the radiology specialists made the correct diagnosis in 95% of cases, compared to 83% for the residents; for the knee, the radiology specialists made the correct diagnosis in 89% of cases, compared to 97% for the residents. However, there were no statistically significant differences between the radiology specialists and the residents in any MSK region.

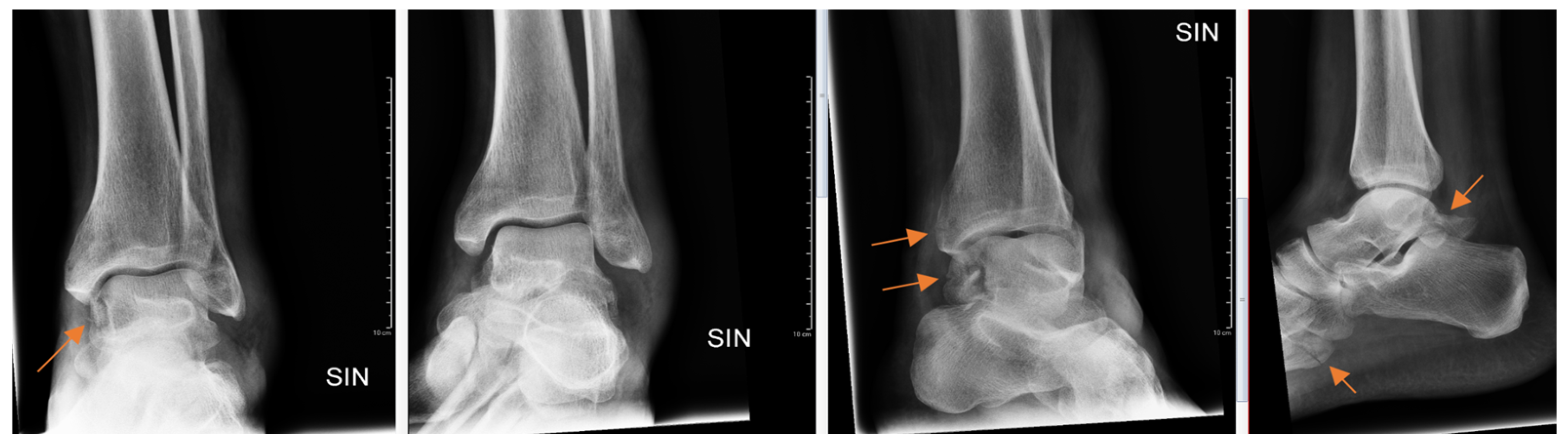

Radiology specialists interpreted more radiographs with subtle findings than residents did (p = 0.04). The proportion of subtle and obvious cases did not differ between age groups (p = 0.89). Radiology specialists missed the correct diagnosis in 33% of subtle and 4.9% of obvious radiographs. In contrast, residents missed the correct diagnosis in 51% of subtle (Figure 2) and 8.4% of obvious (Figure 3) radiographs.

Of all the missed findings, 70% (n = 44) were judged to have an impact on patient care (p = 0.02), but this proportion did not differ between the radiology specialists and the residents. Of the findings missed by the radiology specialists (Figure 4 and Figure 5), 71% affected patient care, as did 31% of their overcalls. Of the findings missed by the residents (Figure 6), 69% affected patient care, as did 47% of their overcalls. Of all the overcalls, 40% (n = 12) appeared to have an impact on patient care. The most common impact on patient care was the lack of a necessary control study (40%), followed by an unnecessary control study (14%). Interpretation errors rarely led to unnecessary operative treatment (1%).

4. Discussion

4.1. Overall Findings

We found similar rates of misses and overcalls between the radiology specialists and the residents, with both groups having lower sensitivity than specificity, yet competence differed between MSK regions. Neither the day of the week nor the time of day showed statistically significant differences in interpretation competence. These results suggest that there are no major differences between radiology specialists and residents in MSK radiograph interpretation. However, some MSK regions need more attention in the future regarding competence in radiograph interpretation, which has direct implications for resident training programs. Importantly, the age distribution did not differ significantly between the resident and specialist groups, suggesting that the main conclusions are not biased by patient age.

For the upper and lower extremities, we found a sensitivity of 0.86 and a specificity of 0.92, which are lower than those reported in previous studies [16]. In contrast to previous studies [16,17], we did not find any statistically significant increase in radiology specialist or resident interpretation errors during the evening or night shifts compared to the daytime shifts. However, the residents, who can be more prone to fatigue-related errors [18,19], made more interpretation errors during the night shift than during the morning or evening shift. Radiology specialists are also prone to fatigue-related problems in interpretation [17], and in this study, 18% of missed diagnoses occurred between 15:00 and 17:00, which may reflect fatigue-related errors. As in previous studies [20,21,22], most missed diagnoses in this study were missed fractures. The prevalence of abnormality in our study was 41%, which is in line with the prevalence in clinical practice [23] and does not overestimate the ability to detect abnormal cases [24].
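Prevalence matters here because PPV and NPV, unlike sensitivity and specificity, depend on how common abnormal cases are. A short sketch using Bayes' theorem with the overall sensitivity (0.86), specificity (0.92), and prevalence (0.41) reported in this study. The function name is hypothetical, and the calculation assumes the rounded published values.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float):
    """PPV and NPV implied by sensitivity, specificity and prevalence (Bayes' theorem)."""
    tp = sensitivity * prevalence               # true positives per case
    fp = (1 - specificity) * (1 - prevalence)   # false positives (overcalls)
    fn = (1 - sensitivity) * prevalence         # false negatives (misses)
    tn = specificity * (1 - prevalence)         # true negatives
    return tp / (tp + fp), tn / (tn + fn)


ppv, npv = predictive_values(0.86, 0.92, 0.41)
ppv_low_prev, _ = predictive_values(0.86, 0.92, 0.10)
```

This gives a PPV of about 0.88 and an NPV of about 0.90, close to the reported 0.88 and 0.91; the small NPV gap may reflect rounding of the inputs. At a hypothetical prevalence of 10%, the same readers would have a PPV of only about 0.54. This is why a case mix with a realistic prevalence matters when comparing predictive values across studies.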

4.2. Discrepancies between Radiology Specialists and Residents

We found that the overall interpretation error rates for radiology specialists and residents ranged from 0 to 10% and from 0 to 12%, respectively, indicating slightly lower competence levels than in previous studies [1,7,21,25,26,27,28]. Earlier studies show that, when evaluated with normal and abnormal cases, interpretation error rates for radiology specialists range from 0.65% [1] to 5% [29,30]. Interpretation competence differs between individual radiology specialists, which can increase interpretation error rates to as high as 8% [31]. One of the largest studies showed a radiology specialist interpretation error rate of 3% to 4% [1].

We did not find any statistically significant differences between the radiology specialists and the residents, in contrast to a previous study in which radiology specialists showed better diagnostic accuracy than residents (p = 0.02) [32]. However, other studies have also shown no significant differences between radiology specialists and residents [1,20,25]. In addition, in contrast to a previous study [32], we did not find statistically significant differences in the interpretation of subtle or obvious radiographic findings. Nevertheless, in this study, the radiology specialists had higher detection rates and higher diagnostic accuracy for subtle findings than the residents, which is consistent with previous studies [18]. Because we excluded reports initially signed by both a resident and a specialist (a signal of consultation), the potential bias from specialists influencing resident reports is probably low. In addition, consistent with a previous study [33], we did not find statistically significant differences between the radiology specialists and residents in the different MSK regions. In previous studies [16,30], ankle interpretation showed the highest sensitivity (0.98) and specificity (0.95). In this study, the ankle sensitivity (0.83) and specificity (0.93) were lower. Furthermore, in this study, sensitivity was lower than specificity in all MSK regions except the pelvis. This pattern is well recognized in radiology and may be related to litigation over missed findings [34].

The wrist showed the lowest overall diagnostic accuracy among the MSK regions. This is worrying because the wrist is the most frequently injured MSK region [35,36], and missed findings can lead to complications such as nonunion, osteonecrosis, and osteoarthritis [6]. The radiology specialists and residents had similar miss rates (9.5% and 9.7%, respectively), but the radiology specialists had fewer overcalls than the residents (3.6% vs. 8.1%). These wrist miss and overcall rates are higher than those reported in previous studies [37]. Foot injuries are also very common, and diagnostic accuracy can have serious implications for patient care [38]. In our study, foot interpretation showed the lowest sensitivity and specificity in the lower extremity. These findings should prompt radiology departments to pay special attention to these MSK regions in resident training. Most interpretation errors affected patient care, regardless of whether the radiograph was interpreted by a radiology specialist or a resident.

4.3. Limitations

First, due to the retrospective nature of the study, we were unable to verify the level of clinical competence of the radiology specialists (e.g., years in practice) or the residents (e.g., year of residency). However, we can reasonably assume that every radiology specialist or resident has the required clinical competence when dictating radiology reports that guide patient treatment. Second, there is a possibility of undetected selection bias, because different types of fractures tend to occur at different times of the year in Finland. To reduce this selection bias, data collection spanned several time periods. Finally, follow-up studies were not obtained to verify possible missed fractures unless the patient had a follow-up assessment at the same hospital that could be found in the PACS. Our gold standard was the consensus of two MSK radiology specialists, and any errors in their interpretations could also affect the results of this study.

5. Conclusions

In conclusion, this study found no major discrepancies between radiology specialists and residents in radiograph interpretation, although there were differences between MSK regions and between subtle and obvious radiographic findings. Pelvic radiograph interpretation showed the highest sensitivity, specificity, negative predictive value (NPV), and positive predictive value (PPV), whereas wrist radiograph interpretation showed the lowest values for these metrics. No statistically significant differences were observed between radiology specialists and residents during evening or night shifts, although radiology specialists made fewer interpretation errors. Missed findings in this study often affected patient treatment. Finally, MSK regions where sensitivity or specificity is below 90% should raise concern, highlight the need for double reading, and be taken into consideration in radiology education. Further prospective studies are needed in these specific MSK regions. In addition, future studies could compare artificial intelligence-based image interpretation with that of radiology specialists and residents to highlight possible differences.

Author Contributions

J.T.H., S.K., P.N. and J.H., conceptualization and methodology; J.T.H., investigation; E.L., formal analysis and validation; J.T.H., writing—original draft and data curation; M.N., R.B.S., S.K.K. and T.K.P., writing—review and editing; H.J.A., S.K. and J.H., supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Radiological Society of Finland.

Institutional Review Board Statement

This study received ethical approval from the Ethics Committee of the University of Turku (ETMK Dnro: 38/1801/2020). This study complied with the Declaration of Helsinki and was performed according to ethics committee approval.

Informed Consent Statement

Because of the retrospective nature of the study, need for informed consent was waived by the Ethics Committee of the Hospital District of Southwest Finland.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Borgstede, J.P.; Lewis, R.S.; Bhargavan, M.; Sunshine, J.H. RADPEER quality assurance program: A multifacility study of interpretive disagreement rates. J. Am. Coll. Radiol. 2004, 1, 59–65.

- Bruno, M.A.; Walker, E.A.; Abujudeh, H.H. Understanding and Confronting Our Mistakes: The Epidemiology of Error in Radiology and Strategies for Error Reduction. Radiographics 2015, 35, 1668–1676.

- Gyftopoulos, S.; Chitkara, M.; Bencardino, J.T. Misses and errors in upper extremity trauma radiographs. Am. J. Roentgenol. 2014, 203, 477–491.

- Mattijssen-Horstink, L.; Langeraar, J.J.; Mauritz, G.J.; van der Stappen, W.; Baggelaar, M.; Tan, E.C.T.H. Radiologic discrepancies in diagnosis of fractures in a Dutch teaching emergency department: A retrospective analysis. Scand. J. Trauma Resusc. Emerg. Med. 2020, 28, 38.

- Porrino, J.A.; Maloney, E.; Scherer, K.; Mulcahy, H.; Ha, A.S.; Allan, C. Fracture of the distal radius: Epidemiology and premanagement radiographic characterization. Am. J. Roentgenol. 2014, 203, 551–559.

- Shahabpour, M.; Abid, W.; van Overstraeten, L.; de Maeseneer, M. Wrist Trauma: More Than Bones. J. Belg. Soc. Radiol. 2021, 105, 90.

- Itri, J.N.; Kang, H.C.; Krishnan, S.; Nathan, D.; Scanlon, M.H. Using Focused Missed-Case Conferences to Reduce Discrepancies in Musculoskeletal Studies Interpreted by Residents on Call. Am. J. Roentgenol. 2011, 197, W696–W705.

- Donald, J.J.; Barnard, S.A. Common patterns in 558 diagnostic radiology errors. J. Med. Imaging Radiat. Oncol. 2012, 56, 173–178.

- Janjua, K.J.; Sugrue, M.; Deane, S.A. Prospective evaluation of early missed injuries and the role of tertiary trauma survey. J. Trauma Acute Care Surg. 1998, 44, 1000–1007.

- Hallas, P.; Ellingsen, T. Errors in fracture diagnoses in the emergency department—Characteristics of patients and diurnal variation. BMC Emerg. Med. 2006, 6, 4.

- Alshabibi, A.S.; Suleiman, M.E.; Tapia, K.A.; Brennan, P.C. Effects of time of day on radiological interpretation. Clin. Radiol. 2020, 75, 148–155.

- Guly, H.R. Diagnostic errors in an accident and emergency department. Emerg. Med. J. 2001, 18, 263–269.

- Whang, J.S.; Baker, S.R.; Patel, R.; Luk, L.; Castro, A. The causes of medical malpractice suits against radiologists in the United States. Radiology 2013, 266, 548–554.

- Festekjian, A.; Kwan, K.Y.; Chang, T.P.; Lai, H.; Fahit, M.; Liberman, D.B. Radiologic discrepancies in children with special healthcare needs in a pediatric emergency department. Am. J. Emerg. Med. 2018, 36, 1356–1362.

- Geijer, H.; Geijer, M. Added value of double reading in diagnostic radiology, a systematic review. Insights Imaging 2018, 9, 287–301.

- York, T.; Franklin, C.; Reynolds, K.; Munro, G.; Jenney, H.; Harland, W.; Leong, D. Reporting errors in plain radiographs for lower limb trauma—A systematic review and meta-analysis. Skelet. Radiol. 2022, 51, 171–182.

- Hanna, T.N.; Loehfelm, T.; Khosa, F.; Rohatgi, S.; Johnson, J.O. Overnight shift work: Factors contributing to diagnostic discrepancies. Emerg. Radiol. 2016, 23, 41–47.

- Krupinski, E.A.; Berbaum, K.S.; Caldwell, R.T.; Schartz, K.M.; Madsen, M.T.; Kramer, D.J. Do long radiology workdays affect nodule detection in dynamic CT interpretation? J. Am. Coll. Radiol. 2012, 9, 191–198.

- Bertram, R.; Kaakinen, J.; Bensch, F.; Helle, L.; Lantto, E.; Niemi, P.; Lundbom, N. Eye Movements of Radiologists Reflect Expertise in CT Study Interpretation: A Potential Tool to Measure Resident Development. Radiology 2016, 281, 805–815.

- Kung, J.W.; Melenevsky, Y.; Hochman, M.G.; Didolkar, M.M.; Yablon, C.M.; Eisenberg, R.L.; Wu, J.S. On-Call Musculoskeletal Radiographs: Discrepancy Rates Between Radiology Residents and Musculoskeletal Radiologists. Am. J. Roentgenol. 2013, 200, 856–859.

- Tomich, J.; Retrouvey, M.; Shaves, S. Emergency imaging discrepancy rates at a level 1 trauma center: Identifying the most common on-call resident “misses”. Emerg. Radiol. 2013, 20, 499–505.

- Halsted, M.J.; Kumar, H.; Paquin, J.J.; Poe, S.A.; Bean, J.A.; Racadio, J.M.; Strife, J.L.; Donnelly, L.F. Diagnostic errors by radiology residents in interpreting pediatric radiographs in an emergency setting. Pediatr. Radiol. 2004, 34, 331–336.

- Hardy, M.; Snaith, B.; Scally, A. The impact of immediate reporting on interpretive discrepancies and patient referral pathways within the emergency department: A randomised controlled trial. Br. J. Radiol. 2013, 86, 20120112.

- Pusic, M.V.; Andrews, J.S.; Kessler, D.O.; Teng, D.C.; Pecaric, M.R.; Ruzal-Shapiro, C.; Boutis, K. Prevalence of abnormal cases in an image bank affects the learning of radiograph interpretation. Med. Educ. 2012, 46, 289–298.

- Ruchman, R.B.; Jaeger, J.; Wiggins, E.F.; Seinfeld, S.; Thakral, V.; Bolla, S.; Wallach, S. Preliminary Radiology Resident Interpretations Versus Final Attending Radiologist Interpretations and the Impact on Patient Care in a Community Hospital. Am. J. Roentgenol. 2007, 189, 523–526.

- Cooper, V.F.; Goodhartz, L.A.; Nemcek, A.A.; Ryu, R.K. Radiology resident interpretations of on-call imaging studies: The incidence of major discrepancies. Acad. Radiol. 2008, 15, 1198–1204.

- Weinberg, B.D.; Richter, M.D.; Champine, J.G.; Morriss, M.C.; Browning, T. Radiology resident preliminary reporting in an independent call environment: Multiyear assessment of volume, timeliness, and accuracy. J. Am. Coll. Radiol. 2015, 12, 95–100.

- McWilliams, S.R.; Smith, C.; Oweis, Y.; Mawad, K.; Raptis, C.; Mellnick, V. The Clinical Impact of Resident-attending Discrepancies in On-call Radiology Reporting: A Retrospective Assessment. Acad. Radiol. 2018, 25, 727–732.

- Soffa, D.J.; Lewis, R.S.; Sunshine, J.H.; Bhargavan, M. Disagreement in interpretation: A method for the development of benchmarks for quality assurance in imaging. J. Am. Coll. Radiol. 2004, 1, 212–217.

- Bisset, G.S.; Crowe, J. Diagnostic errors in interpretation of pediatric musculoskeletal radiographs at common injury sites. Pediatr. Radiol. 2014, 44, 552–557.

- Siegle, R.L.; Baram, E.M.; Reuter, S.R.; Clarke, E.A.; Lancaster, J.L.; McMahan, C.A. Rates of disagreement in imaging interpretation in a group of community hospitals. Acad. Radiol. 1998, 5, 148–154.

- Wood, G.; Knapp, K.M.; Rock, B.; Cousens, C.; Roobottom, C.; Wilson, M.R. Visual expertise in detecting and diagnosing skeletal fractures. Skelet. Radiol. 2013, 42, 165–172.

- Bent, C.; Chicklore, S.; Newton, A.; Habig, K.; Harris, T. Do emergency physicians and radiologists reliably interpret pelvic radiographs obtained as part of a trauma series? Emerg. Med. J. 2013, 30, 106–111.

- Halpin, S.F.S. Medico-legal claims against English radiologists: 1995-2006. Br. J. Radiol. 2009, 82, 982–988.

- Vabo, S.; Steen, K.; Brudvik, C.; Hunskaar, S.; Morken, T. Fractures diagnosed in primary care—A five-year retrospective observational study from a Norwegian rural municipality with a ski resort. Scand. J. Prim. Health Care 2019, 37, 444–451.

- Hruby, L.A.; Haider, T.; Laggner, R.; Gahleitner, C.; Erhart, J.; Stoik, W.; Hajdu, S.; Thalhammer, G. Standard radiographic assessments of distal radius fractures miss involvement of the distal radioulnar joint: A diagnostic study. Arch. Orthop. Trauma Surg. 2021, 1, 3.

- Wei, C.J.; Tsai, W.C.; Tiu, C.M.; Wu, H.T.; Chiou, H.J.; Chang, C.Y. Systematic analysis of missed extremity fractures in emergency radiology. Acta Radiol. 2006, 47, 710–717.

- Rasmussen, C.G.; Jørgensen, S.B.; Larsen, P.; Horodyskyy, M.; Kjær, I.L.; Elsoe, R. Population-based incidence and epidemiology of 5912 foot fractures. Foot Ankle Surg. 2021, 27, 181–185.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).