Harnessing Machine Learning in Vocal Arts Medicine: A Random Forest Application for “Fach” Classification in Opera

Abstract

1. Introduction

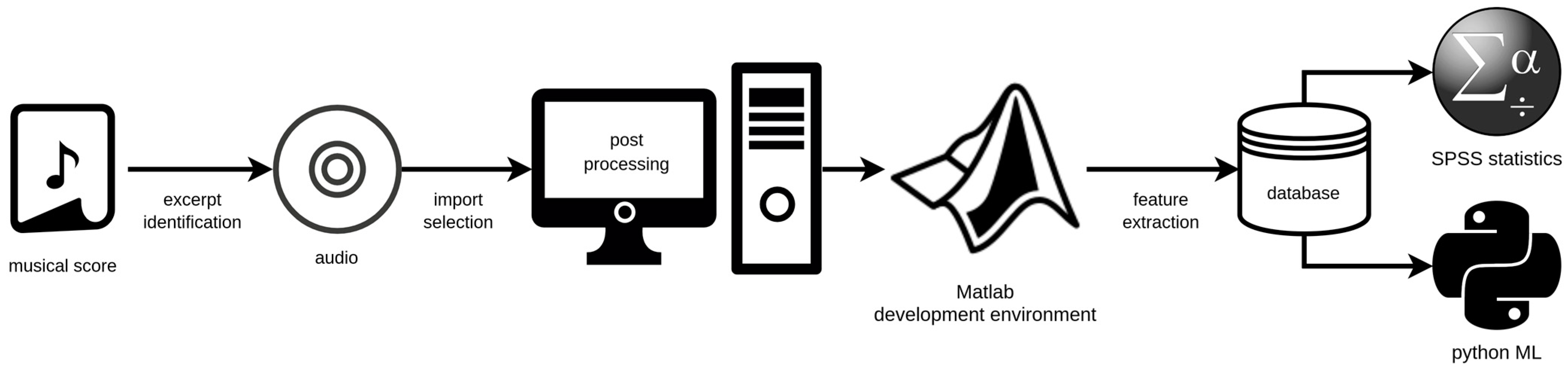

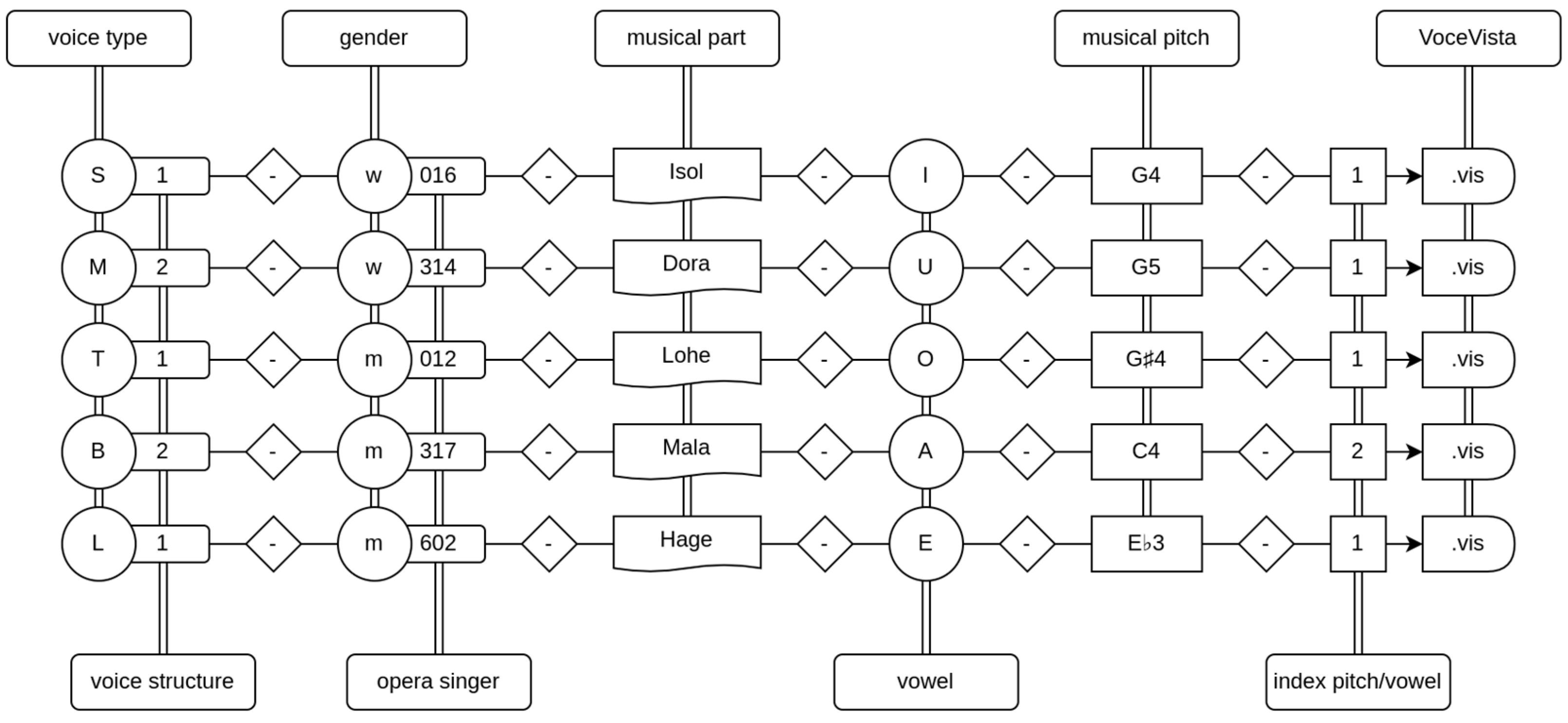

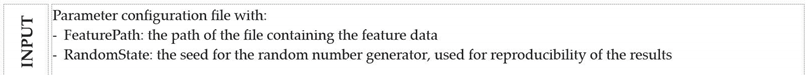

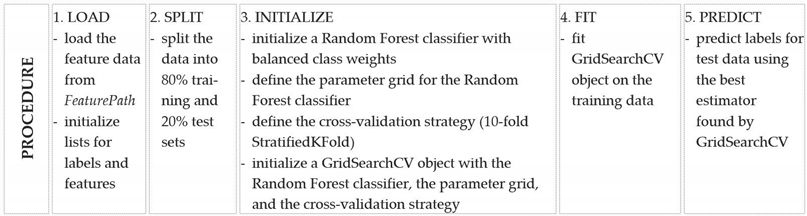

2. Materials and Methods

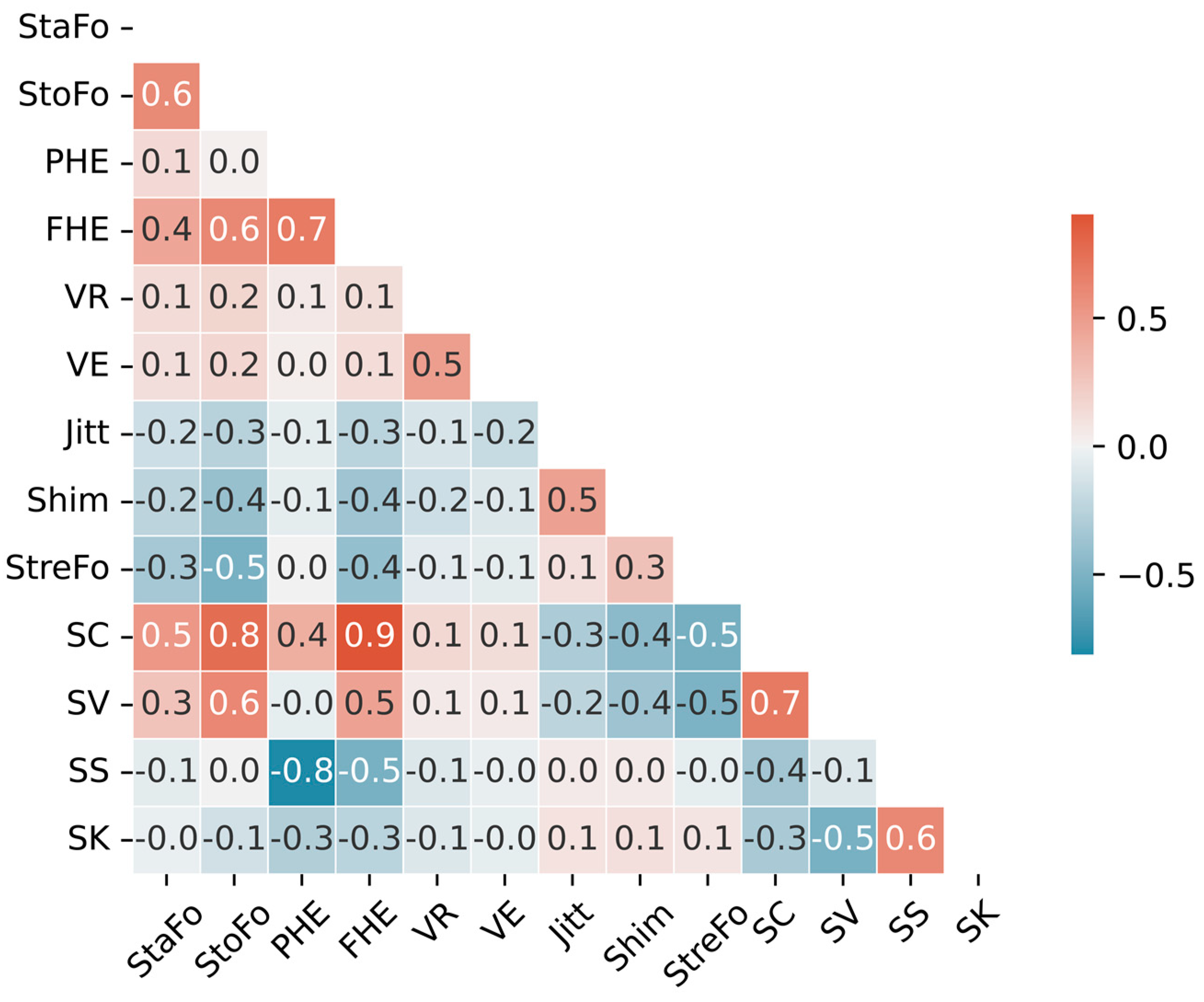

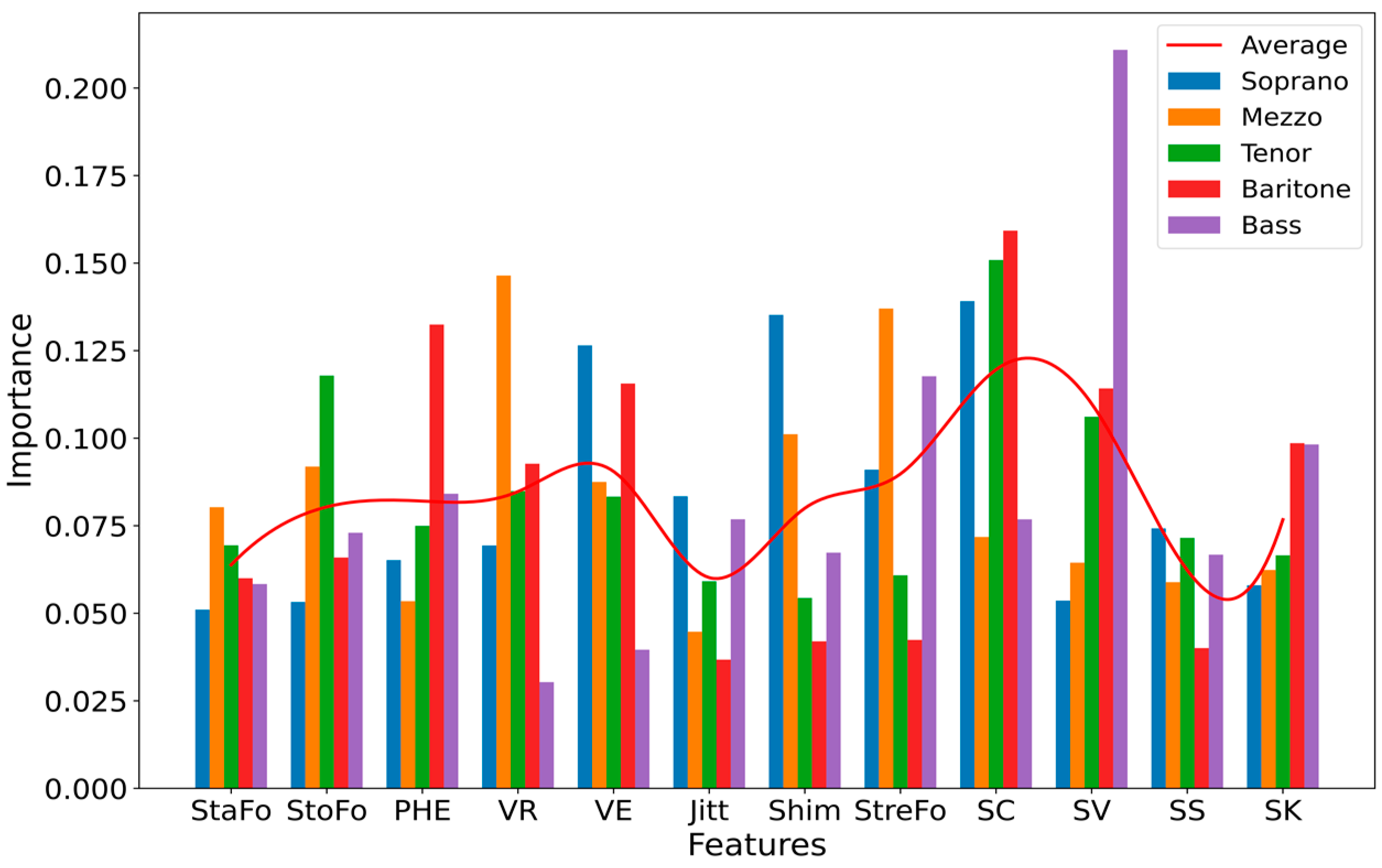

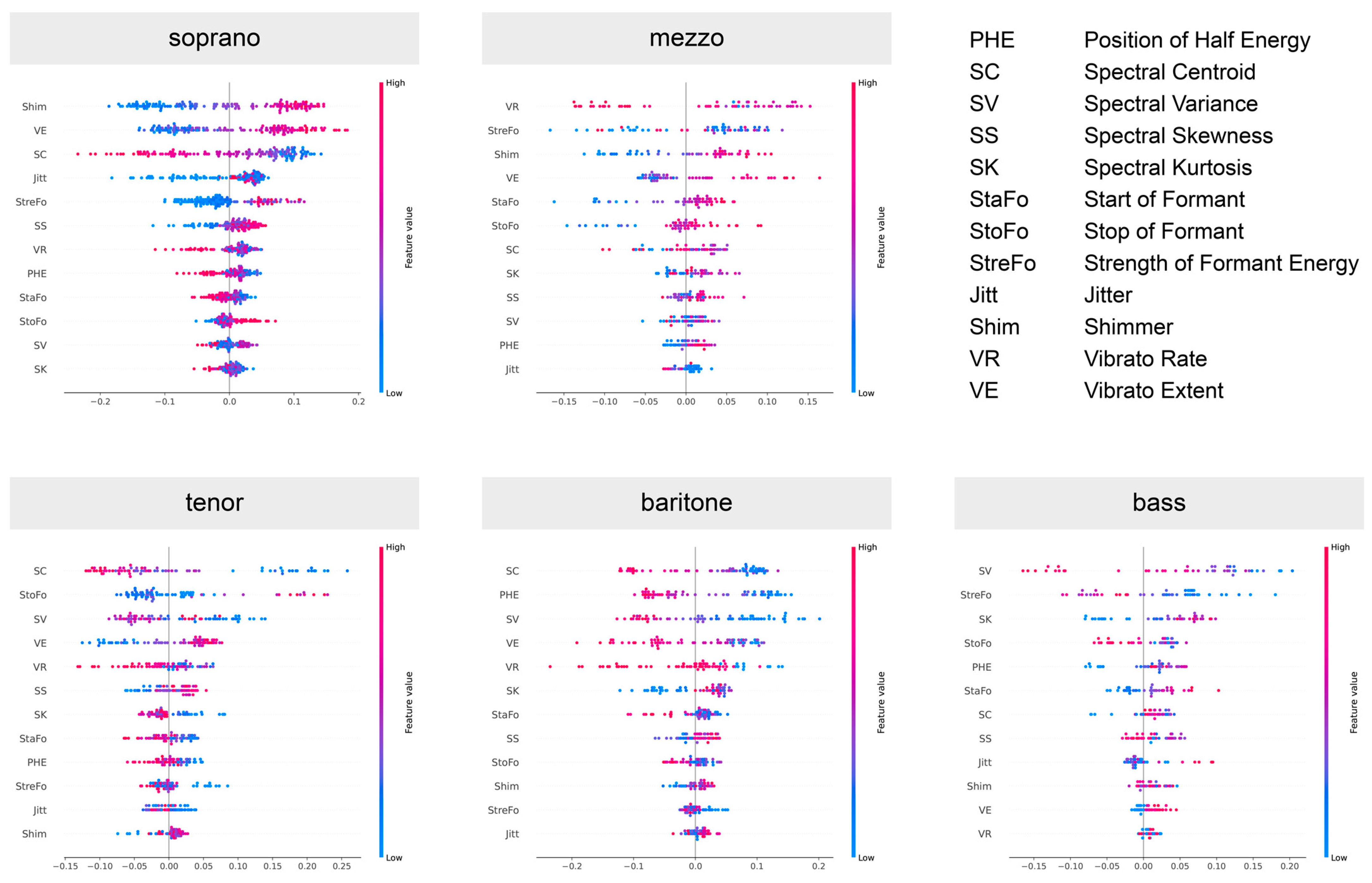

- Start and Stop of Formant (StaFo and StoFo), marking the lower and upper limits of the frequency range in which vocal tract resonance most strongly amplifies the voice;

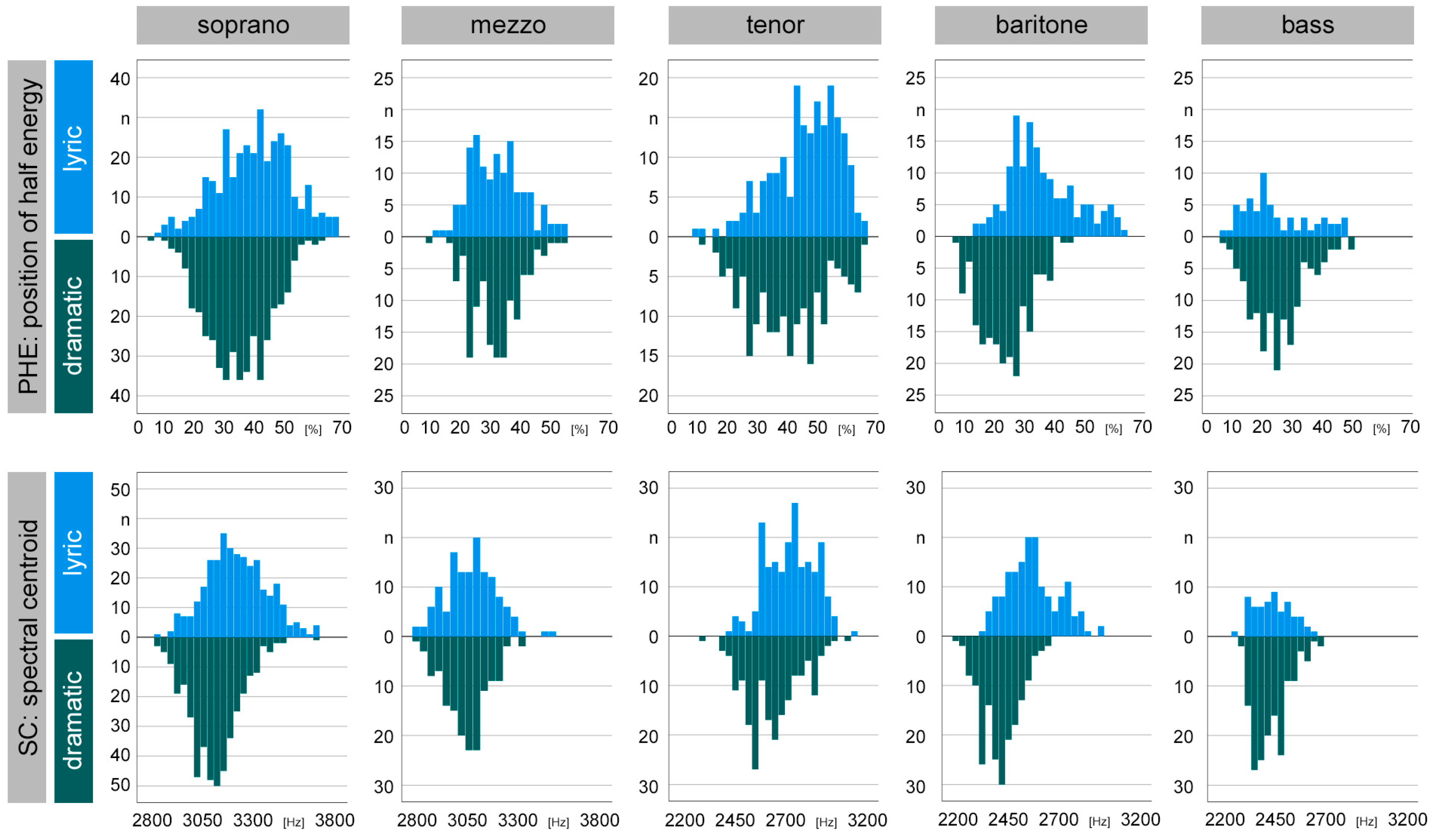

- Position of Half Energy (PHE) and Frequency of Half Energy (FHE), which characterize the spectral balance of the voice by locating the midpoint of its energy distribution, i.e., the point below which half of the spectral energy lies;

- Vibrato Rate (VR) and Vibrato Extent (VE), which describe the speed and extent of the periodic pitch oscillation;

- Perturbation measures Jitter (Jitt) and Shimmer (Shim), which quantify voice stability by calculating cycle-to-cycle variations of the fundamental frequency and amplitude;

- Strength of Formant Energy (StreFo), signifying the energy intensity within the singer’s formant frequency bands;

- Further spectral features, namely Spectral Centroid (SC), Variance (SV), Skewness (SS), and Kurtosis (SK), which describe the location and shape of the energy distribution in the singer’s formant frequency bands.
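The half-energy and moment-based descriptors listed above can be illustrated on a single analysis band. The following Python sketch is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a pre-computed power spectrum of the singer's formant band (ascending bin frequencies in Hz and non-negative bin energies), takes FHE as the first bin at which the cumulative energy reaches half of the total, and assumes PHE is the position of FHE within the band expressed as a percentage of the band width. SC, SV, SS, and SK are computed as energy-weighted moments, with SK as non-excess kurtosis (a normal distribution would give 3). The function name `spectral_descriptors` is hypothetical.

```python
import math


def spectral_descriptors(freqs, power):
    """Half-energy point and energy-weighted spectral moments of one band.

    freqs: ascending bin frequencies in Hz; power: non-negative bin energies.
    """
    if len(freqs) != len(power) or len(freqs) < 2:
        raise ValueError("need matching freqs/power with at least two bins")
    total = sum(power)
    if total <= 0:
        raise ValueError("band contains no energy")

    # Frequency of Half Energy: first bin where cumulative energy reaches 50 %.
    cumulative = 0.0
    fhe = freqs[-1]
    for f, p in zip(freqs, power):
        cumulative += p
        if cumulative >= total / 2:
            fhe = f
            break
    # ASSUMPTION: PHE = relative position of FHE inside the band, in percent.
    phe = 100.0 * (fhe - freqs[0]) / (freqs[-1] - freqs[0])

    # Normalize energies to weights and compute energy-weighted moments.
    weights = [p / total for p in power]
    centroid = sum(f * w for f, w in zip(freqs, weights))

    def central_moment(k):
        return sum(w * (f - centroid) ** k for f, w in zip(freqs, weights))

    variance = central_moment(2)
    std = math.sqrt(variance)
    skewness = central_moment(3) / std ** 3 if std > 0 else 0.0
    kurtosis = central_moment(4) / variance ** 2 if variance > 0 else 0.0  # non-excess
    return {"FHE": fhe, "PHE": phe, "SC": centroid,
            "SV": variance, "SS": skewness, "SK": kurtosis}
```

For a symmetric toy band such as `spectral_descriptors([1000, 2000, 3000], [1, 2, 1])`, the half-energy point and the centroid coincide at the middle bin and the skewness is zero; a band whose energy is concentrated at its lower edge yields a positive skewness.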

3. Results

4. Discussion

- During a phoniatric check-up, how can an AI-supported evaluation of my objective vocal parameters help assess my current prospects in solo opera singing?

- Is my voice suitable for a desired change of fach?

- Are my recent vocal fatigue symptoms due to the selection of my current roles?

- Are my persistent vocal problems related to the fach change I have made?

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Predicted Dramatic | Predicted Lyric |
|---|---|---|
| Actual Dramatic | True Positive (TP) | False Negative (FN) |
| Actual Lyric | False Positive (FP) | True Negative (TN) |
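With dramatic treated as the positive class, as in the confusion matrix, accuracy and the balanced error rate (BER) follow directly from the four cell counts. The sketch below assumes the common BER definition, the mean of the two per-class error rates; the function name `confusion_metrics` is hypothetical, and the counts used with it are invented for illustration.

```python
def confusion_metrics(tp, fn, fp, tn):
    """Accuracy and balanced error rate for a dramatic (positive) vs. lyric
    (negative) confusion matrix."""
    if tp + fn == 0 or fp + tn == 0:
        raise ValueError("both classes need at least one actual sample")
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    false_negative_rate = fn / (tp + fn)  # actual dramatic predicted as lyric
    false_positive_rate = fp / (fp + tn)  # actual lyric predicted as dramatic
    ber = 0.5 * (false_negative_rate + false_positive_rate)
    return accuracy, ber


# Balanced example and a degenerate "always dramatic" classifier.
balanced = confusion_metrics(tp=40, fn=10, fp=5, tn=45)
always_dramatic = confusion_metrics(tp=90, fn=0, fp=10, tn=0)
```

In the second case the classifier labels every sample as dramatic: it reaches 90% accuracy, yet its BER is 50%, no better than chance. This is why BER is the more informative figure when lyric and dramatic samples are unevenly represented.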

Vocal Characteristics of Investigated Opera Singers

| Voice Type | Voice Structure (n Sound Samples) | FHE (Hz) Mean (SD) | FHE 95% RR | FHE p-Value (MWU Test) | PHE (%) Mean (SD) | PHE 95% RR | PHE p-Value (MWU Test) | SC (Hz) Mean (SD) | SC 95% RR | SC p-Value (MWU Test) |
|---|---|---|---|---|---|---|---|---|---|---|
| soprano | all (n = 774) | 3092 (284) | 3072; 3112 | | 35.98 (12.92) | 35.07; 36.89 | | 3207 (154) | 3196; 3218 | |
| | lyric (n = 352) | 3169 (307) | 3136; 3201 | p < 0.001 | 39.46 (13.94) | 38.00; 40.92 | p < 0.001 | 3271 (159) | 3255; 3288 | p < 0.001 |
| | dramatic (n = 422) | 3028 (247) | 3004; 3052 | | 33.08 (11.23) | 32.00; 34.15 | | 3153 (128) | 3141; 3166 | |
| mezzo-soprano | all (n = 281) | 2985 (206) | 2960; 3009 | | 31.10 (9.36) | 30.00; 32.20 | | 3097 (121) | 3083; 3111 | |
| | lyric (n = 134) | 2990 (217) | 2953; 3027 | p = 0.766 | 31.36 (9.85) | 29.68; 33.04 | p = 0.767 | 3110 (132) | 3088; 3133 | p = 0.096 |
| | dramatic (n = 147) | 2979 (196) | 2947; 3011 | | 30.86 (8.92) | 29.41; 32.31 | | 3085 (108) | 3067; 3103 | |
| tenor | all (n = 389) | 2705 (221) | 2683; 2727 | | 44.05 (13.82) | 42.67; 45.43 | | 2740 (143) | 2725; 2754 | |
| | lyric (n = 199) | 2760 (205) | 2732; 2789 | p < 0.001 | 47.49 (12.77) | 45.70; 49.28 | p < 0.001 | 2789 (129) | 2771; 2807 | p < 0.001 |
| | dramatic (n = 190) | 2648 (224) | 2616; 2680 | | 40.45 (13.99) | 38.45; 42.45 | | 2688 (139) | 2668; 2708 | |
| baritone | all (n = 343) | 2454 (206) | 2432; 2476 | | 28.34 (12.86) | 26.98; 29.71 | | 2532 (144) | 2516; 2547 | |
| | lyric (n = 157) | 2576 (206) | 2543; 2608 | p < 0.001 | 35.94 (12.86) | 33.91; 37.97 | p < 0.001 | 2625 (135) | 2604; 2646 | p < 0.001 |
| | dramatic (n = 186) | 2351 (140) | 2331; 2371 | | 21.93 (8.72) | 20.67; 23.20 | | 2453 (97) | 2439; 2467 | |
| bass | all (n = 217) | 2384 (164) | 2363; 2406 | | 24.01 (10.24) | 22.64; 25.38 | | 2480 (92) | 2468; 2493 | |
| | lyric (n = 60) | 2379 (200) | 2327; 2430 | p = 0.270 | 23.65 (12.46) | 20.43; 26.87 | p = 0.265 | 2491 (98) | 2466; 2516 | p = 0.252 |
| | dramatic (n = 157) | 2387 (149) | 2363; 2410 | | 24.15 (9.30) | 22.68; 25.61 | | 2476 (89) | 2462; 2490 | |
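The Mean (SD) and 95% RR columns can be cross-checked, and the lyric-versus-dramatic comparisons re-run on raw per-sample data, with a few lines of code. The tabulated bounds appear consistent with a normal-approximation interval for the mean, mean ± 1.96·SD/√n: for all sopranos, 3092 ± 1.96·284/√774 gives approximately 3072 to 3112 Hz. The Mann-Whitney U (MWU) function below is a textbook two-sided version with average ranks for ties, a tie-corrected variance, and a normal approximation without continuity correction. The authors' exact test settings are not stated here, both function names are hypothetical, and in practice a library routine such as `scipy.stats.mannwhitneyu` would normally be used instead.

```python
import math


def mean_ci95(mean, sd, n):
    """Normal-approximation 95% interval of the mean: mean +/- 1.96*SD/sqrt(n)."""
    half_width = 1.96 * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    x, y: lists of per-sample values (e.g., FHE of lyric vs. dramatic samples).
    Returns (U statistic for sample x, two-sided p-value).
    """
    n1, n2 = len(x), len(y)
    pooled = sorted(x + y)
    N = n1 + n2
    # Assign average 1-based ranks to tied values and accumulate tie sizes.
    rank_of, tie_term, i = {}, 0, 0
    while i < N:
        j = i
        while j + 1 < N and pooled[j + 1] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + j) / 2 + 1
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    u_x = sum(rank_of[v] for v in x) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((N + 1) - tie_term / (N * (N - 1)))
    if var_u <= 0:  # all values identical: no evidence of a difference
        return u_x, 1.0
    z = (u_x - mean_u) / math.sqrt(var_u)
    # Two-sided p-value 2*(1 - Phi(|z|)), written via the complementary error function.
    return u_x, min(1.0, math.erfc(abs(z) / math.sqrt(2)))
```

Because only summary statistics are tabulated, the interval function can be checked against the table directly, whereas the MWU test needs the underlying per-sample values.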

| Voice Type | Accuracy | Balanced Error Rate (BER) |
|---|---|---|
| Soprano | 81.94% | 16.91% |
| Mezzo-soprano | 70.18% | 29.44% |
| Tenor | 82.05% | 17.91% |
| Baritone | 79.71% | 22.83% |
| Bass | 79.55% | 28.39% |
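To make the classification step concrete, the following is a toy, pure-Python random forest in the spirit of Breiman's algorithm. Each tree is grown on a bootstrap sample; each split considers only a random subset of about √(number of features) features and picks the threshold with the lowest weighted Gini impurity; the forest predicts by majority vote. It is a teaching sketch, not the authors' model: the hyperparameters (25 trees, depth 4) and all function names are assumptions, and a library implementation such as scikit-learn's `RandomForestClassifier` would be used in practice.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(X, y, feature_ids):
    """Return (feature, threshold) minimizing weighted Gini impurity, or None."""
    best, best_score = None, gini(y)
    for f in feature_ids:
        for threshold in sorted({row[f] for row in X}):
            left = [label for row, label in zip(X, y) if row[f] <= threshold]
            right = [label for row, label in zip(X, y) if row[f] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, threshold), score
    return best


def build_tree(X, y, depth, n_candidates, rng):
    """Grow one tree; leaves are class labels, inner nodes are dicts."""
    majority = Counter(y).most_common(1)[0][0]
    if depth == 0 or len(set(y)) == 1:
        return majority
    candidates = rng.sample(range(len(X[0])), n_candidates)  # random feature subset
    split = best_split(X, y, candidates)
    if split is None:
        return majority
    f, t = split
    go_left = [row[f] <= t for row in X]
    left_X = [row for row, g in zip(X, go_left) if g]
    left_y = [label for label, g in zip(y, go_left) if g]
    right_X = [row for row, g in zip(X, go_left) if not g]
    right_y = [label for label, g in zip(y, go_left) if not g]
    return {"feature": f, "threshold": t,
            "left": build_tree(left_X, left_y, depth - 1, n_candidates, rng),
            "right": build_tree(right_X, right_y, depth - 1, n_candidates, rng)}


def predict_tree(node, row):
    """Follow splits until a leaf (a class label) is reached."""
    while isinstance(node, dict):
        node = node["left"] if row[node["feature"]] <= node["threshold"] else node["right"]
    return node


def fit_forest(X, y, n_trees=25, max_depth=4, seed=0):
    """Train n_trees trees, each on a bootstrap sample of the training rows."""
    rng = random.Random(seed)
    n_candidates = max(1, round(len(X[0]) ** 0.5))  # about sqrt(n_features)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # sample with replacement
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 max_depth, n_candidates, rng))
    return forest


def predict_forest(forest, row):
    """Majority vote over all trees."""
    return Counter(predict_tree(tree, row) for tree in forest).most_common(1)[0][0]
```

Plain majority voting optimizes overall accuracy, so its performance on an imbalanced group is better judged by BER. The bass row illustrates this: with 60 lyric against 157 dramatic sound samples, the 79.55% accuracy (a 20.45% overall error) coexists with a BER of 28.39%.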

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Müller, M.; Caffier, F.; Caffier, P.P. Harnessing Machine Learning in Vocal Arts Medicine: A Random Forest Application for “Fach” Classification in Opera. Diagnostics 2023, 13, 2870. https://doi.org/10.3390/diagnostics13182870
