Artificial Intelligence in Neurosurgery: A State-of-the-Art Review from Past to Future

Abstract

1. Introduction

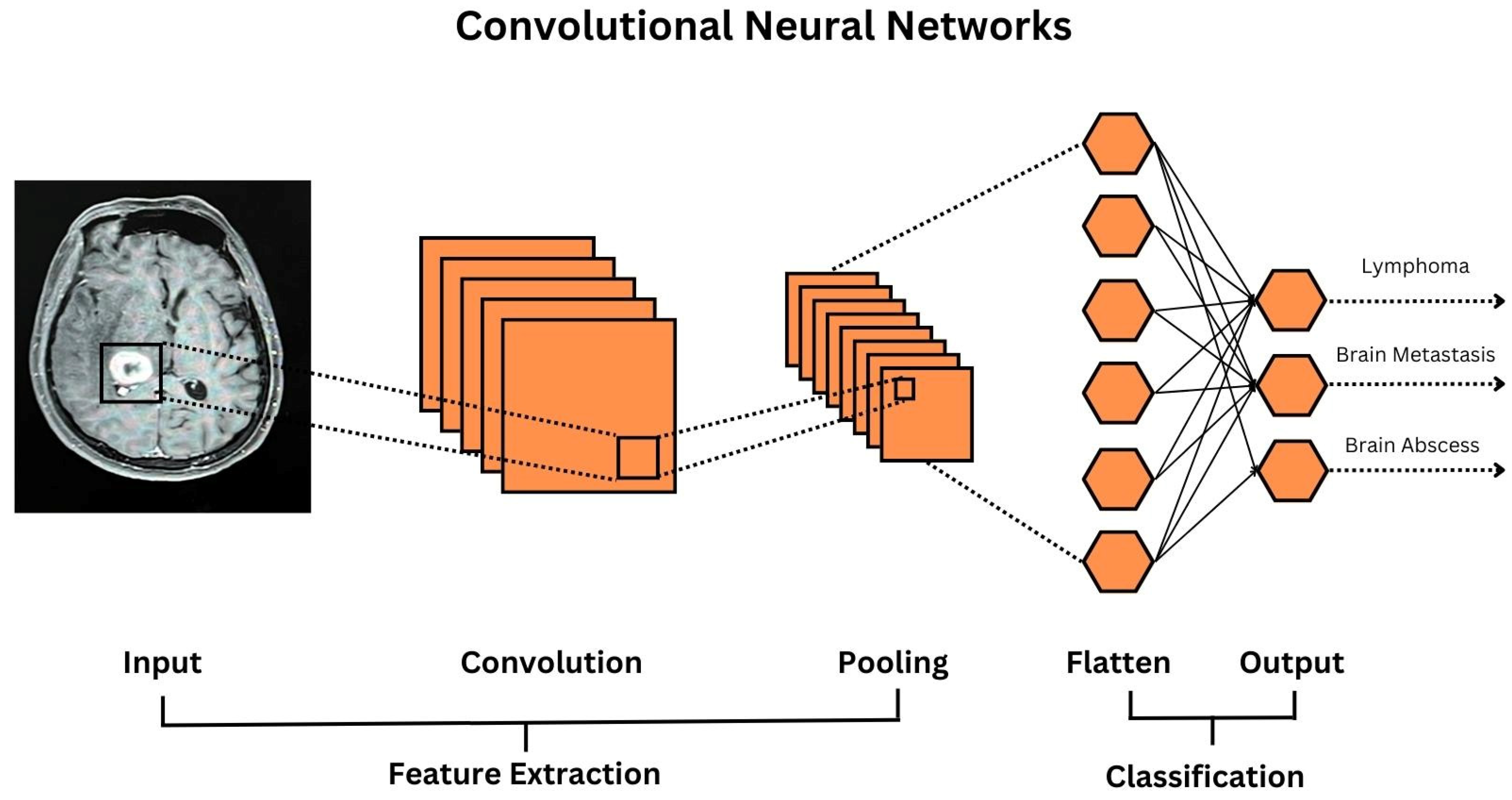

2. Tumor

Current Challenges

3. Spine

Current Challenges

4. Epilepsy

Current Challenges

5. Vascular

Current Challenges

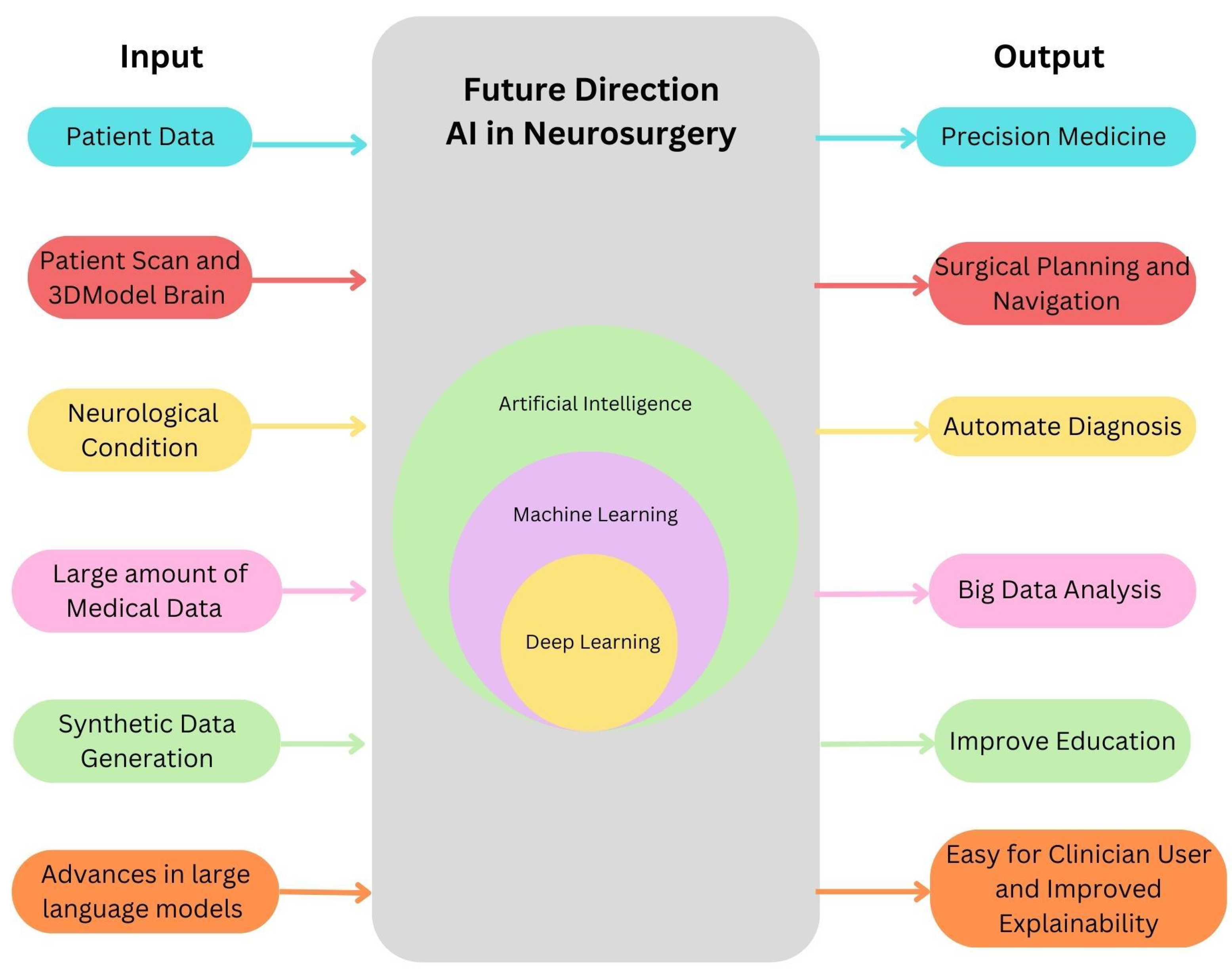

6. Future Directions

7. Limitations

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, L.; Delgado-Baquerizo, M.; Wang, D.; Isbell, F.; Liu, J.; Feng, C.; Liu, J.; Zhong, Z.; Zhu, H.; Yuan, X.; et al. Diversifying Livestock Promotes Multidiversity and Multifunctionality In Managed Grasslands. PNAS 2019, 116, 6187–6192. [Google Scholar] [CrossRef] [PubMed]

- Obermeyer, Z.; Emanuel, E.J. Predicting the Future—Big Data, Machine Learning, and Clinical Medicine. N. Engl. J. Med. 2016, 375, 1216–1219. [Google Scholar] [CrossRef]

- Senders, J.T.; Staples, P.C.; Karhade, A.V.; Zaki, M.M.; Gormley, W.B.; Broekman, M.L.; Smith, T.R.; Arnaout, O. Machine Learning and Neurosurgical Outcome Prediction: A Systematic Review. World Neurosurg. 2018, 109, 476–486.e1. [Google Scholar] [CrossRef] [PubMed]

- Senders, J.T.; Arnaout, O.; Karhade, A.V.; Dasenbrock, H.H.; Gormley, W.B.; Broekman, M.L.; Smith, T.R. Natural and Artificial Intelligence in Neurosurgery: A Systematic Review. Neurosurgery 2017, 83, 181–192. [Google Scholar] [CrossRef]

- Buchlak, Q.D.; Esmaili, N.; Leveque, J.-C.; Farrokhi, F.; Bennett, C.; Piccardi, M.; Sethi, R.K. Machine Learning Applications to Clinical Decision Support in Neurosurgery: An Artificial Intelligence Augmented Systematic Review. Neurosurg. Rev. 2019, 43, 1235–1253. [Google Scholar] [CrossRef]

- Elfanagely, O.; Toyoda, Y.; Othman, S.; Mellia, J.A.; Basta, M.; Liu, T.; Kording, K.; Ungar, L.; Fischer, J.P. Machine Learning and Surgical Outcomes Prediction: A Systematic Review. J. Surg. Res. 2021, 264, 346–361. [Google Scholar] [CrossRef] [PubMed]

- Raj, J.D.; Nelson, J.A.; Rao, K.S.P. A Study on the Effects of Some Reinforcers to Improve Performance of Employees in a Retail Industry. Behav. Modif. 2006, 6, 848–866. [Google Scholar] [CrossRef] [PubMed]

- Noble, W.S. What Is a Support Vector Machine? Nat. Biotechnol. 2006, 24, 1565–1567. [Google Scholar] [CrossRef] [PubMed]

- Raschka, S.; Mirjalili, V. Python Machine Learning: Machine Learning and Deep Learning with Python, Scikit-Learn, and TensorFlow, 2nd ed.; Packt Publishing Ltd.: Birmingham, UK, 2017. [Google Scholar]

- Deo, R.C. Machine Learning in Medicine. Circulation 2015, 132, 1920–1930. [Google Scholar] [CrossRef] [PubMed]

- Munsell, B.C.; Wee, C.-Y.; Keller, S.S.; Weber, B.; Elger, C.; da Silva, L.A.T.; Nesland, T.; Styner, M.; Shen, D.; Bonilha, L. Evaluation Of Machine Learning Algorithms for Treatment Outcome Prediction in Patients With Epilepsy Based on Structural Connectome Data. Neuroimage 2015, 118, 219–230. [Google Scholar] [CrossRef]

- Staartjes, V.E.; de Wispelaere, M.P.; Vandertop, W.P.; Schröder, M.L. Deep Learning-Based Preoperative Predictive Analytics for Patient-Reported Outcomes Following Lumbar Discectomy: Feasibility of Center-Specific Modeling. Spine J. 2019, 19, 853–861. [Google Scholar] [CrossRef] [PubMed]

- Izadyyazdanabadi, M.; Belykh, E.; Mooney, M.; Martirosyan, N.; Eschbacher, J.; Nakaji, P.; Preul, M.C.; Yang, Y. Convolutional Neural Networks: Ensemble Modeling, Fine-Tuning and Unsupervised Semantic Localization for Neurosurgical CLE Images. J. Vis. Commun. Image Represent. 2018, 54, 10–20. [Google Scholar] [CrossRef]

- Chauhan, N.K.; Singh, K. A Review on Conventional Machine Learning vs Deep Learning. In Proceedings of the 2018 International Conference on Computing, Power and Communication Technologies, GUCON 2018, Greater Noida, India, 28–29 September 2018; pp. 347–352. [Google Scholar] [CrossRef]

- Doppalapudi, S.; Qiu, R.G.; Badr, Y. Lung Cancer Survival Period Prediction and Understanding: Deep Learning Approaches. Int. J. Med. Informatics 2020, 148, 104371. [Google Scholar] [CrossRef]

- Corso, J.J.; Sharon, E.; Dube, S.; El-Saden, S.; Sinha, U.; Yuille, A. Efficient Multilevel Brain Tumor Segmentation With Integrated Bayesian Model Classification. IEEE Trans. Med. Imaging 2008, 27, 629–640. [Google Scholar] [CrossRef]

- Bauer, S.; Nolte, L.-P.; Reyes, M. Fully Automatic Segmentation of Brain Tumor Images Using Support Vector Machine Classification in Combination with Hierarchical Conditional Random Field Regularization. Med. Image Comput. Comput. Assist. Interv. 2011, 14, 354–361. [Google Scholar] [CrossRef] [PubMed]

- Ismael, S.A.A.; Mohammed, A.; Hefny, H. An Enhanced Deep Learning Approach for Brain Cancer MRI Images Classification Using Residual Networks. Artif. Intell. Med. 2019, 102, 101779. [Google Scholar] [CrossRef] [PubMed]

- Lukas, L.; Devos, A.; Suykens, J.; Vanhamme, L.; Howe, F.; Majós, C.; Moreno-Torres, A.; Van Der Graaf, M.; Tate, A.; Arús, C.; et al. Brain Tumor Classification Based On Long Echo Proton MRS Signals. Artif. Intell. Med. 2004, 31, 73–89. [Google Scholar] [CrossRef] [PubMed]

- Akkus, Z.; Ali, I.; Sedlář, J.; Agrawal, J.P.; Parney, I.F.; Giannini, C.; Erickson, B.J. Predicting Deletion of Chromosomal Arms 1p/19q in Low-Grade Gliomas from MR Images Using Machine Intelligence. J. Digit. Imaging 2017, 30, 469–476. [Google Scholar] [CrossRef] [PubMed]

- Díaz-Pernas, F.; Martínez-Zarzuela, M.; Antón-Rodríguez, M.; González-Ortega, D. A Deep Learning Approach for Brain Tumor Classification and Segmentation Using a Multiscale Convolutional Neural Network. Healthcare 2021, 9, 153. [Google Scholar] [CrossRef] [PubMed]

- Buchlak, Q.D.; Esmaili, N.; Leveque, J.C.; Bennett, C.; Farrokhi, F.; Piccardi, M. Machine learning applications to neuroimaging for glioma detection and classification: An artificial intelligence augmented systematic review. J. Clin. Neurosci. 2021, 89, 177–198. [Google Scholar] [CrossRef]

- McAvoy, M.; Prieto, P.C.; Kaczmarzyk, J.R.; Fernández, I.S.; McNulty, J.; Smith, T.; Yu, K.H.; Gormley, W.B.; Arnaout, O. Classification of glioblastoma versus primary central nervous system lymphoma using convolutional neural networks. Sci. Rep. 2021, 11, 15219. [Google Scholar] [CrossRef] [PubMed]

- Boaro, A.; Kaczmarzyk, J.R.; Kavouridis, V.K.; Harary, M.; Mammi, M.; Dawood, H.; Shea, A.; Cho, E.Y.; Juvekar, P.; Noh, T.; et al. Deep neural networks allow expert-level brain meningioma segmentation and present potential for improvement of clinical practice. Sci. Rep. 2022, 12, 15462. [Google Scholar] [CrossRef] [PubMed]

- Zhou, H.; Chang, K.; Bai, H.X.; Xiao, B.; Su, C.; Bi, W.L.; Zhang, P.J.; Senders, J.T.; Vallières, M.; Kavouridis, V.K.; et al. Machine learning reveals multimodal MRI patterns predictive of isocitrate dehydrogenase and 1p/19q status in diffuse low- and high-grade gliomas. J. Neurooncol. 2019, 142, 299–307. [Google Scholar] [CrossRef]

- Huang, H.; Yang, G.; Zhang, W.; Xu, X.; Yang, W.; Jiang, W.; Lai, X. A Deep Multi-Task Learning Framework for Brain Tumor Segmentation. Front. Oncol. 2021, 11. [Google Scholar] [CrossRef]

- Yousef, R.; Khan, S.; Gupta, G.; Siddiqui, T.; Albahlal, B.M.; Alajlan, S.A.; Haq, M.A. U-Net-Based Models towards Optimal MR Brain Image Segmentation. Diagnostics 2023, 13, 1624. [Google Scholar] [CrossRef] [PubMed]

- Juarez-Chambi, R.M.; Kut, C.; Rico-Jimenez, J.J.; Chaichana, K.L.; Xi, J.; Campos-Delgado, D.U.; Rodriguez, F.J.; Quinones-Hinojosa, A.; Li, X.; Jo, J.A. AI-Assisted In Situ Detection of Human Glioma Infiltration Using a Novel Computational Method for Optical Coherence Tomography. Clin. Cancer Res. 2019, 25, 6329–6338. [Google Scholar] [CrossRef] [PubMed]

- Jermyn, M.; Desroches, J.; Mercier, J.; St-Arnaud, K.; Guiot, M.-C.; Leblond, F.; Petrecca, K. Raman Spectroscopy Detects Distant Invasive Brain Cancer Cells Centimeters Beyond MRI Capability in Humans. Biomed. Opt. Express 2016, 7, 5129–5137. [Google Scholar] [CrossRef] [PubMed]

- Schucht, P.; Mathis, A.M.; Murek, M.; Zubak, I.; Goldberg, J.; Falk, S.; Raabe, A. Exploring Novel Innovation Strategies to Close a Technology Gap in Neurosurgery: HORAO Crowdsourcing Campaign. J. Med. Internet Res. 2023, 25, e42723. [Google Scholar] [CrossRef] [PubMed]

- Achkasova, K.A.; Moiseev, A.A.; Yashin, K.S.; Kiseleva, E.B.; Bederina, E.L.; Loginova, M.M.; Medyanik, I.A.; Gelikonov, G.V.; Zagaynova, E.V.; Gladkova, N.D. Nondestructive Label-Free Detection of Peritumoral White Matter Damage Using Cross-Polarization Optical Coherence Tomography. Front. Oncol. 2023, 13, 1133074. [Google Scholar] [CrossRef] [PubMed]

- Tonutti, M.; Gras, G.; Yang, G.-Z. A Machine Learning Approach For Real-Time Modelling of Tissue Deformation in Image-Guided Neurosurgery. Artif. Intell. Med. 2017, 80, 39–47. [Google Scholar] [CrossRef]

- Shen, B.; Zhang, Z.; Shi, X.; Cao, C.; Zhang, Z.; Hu, Z.; Ji, N.; Tian, J. Real-time intraoperative glioma diagnosis using fluorescence imaging and deep convolutional neural networks. Eur. J. Nucl. Med. Mol. Imaging 2021, 48, 3482–3492. [Google Scholar] [CrossRef] [PubMed]

- Hollon, T.; Orringer, D.A. Label-Free Brain Tumor Imaging Using Raman-Based Methods. J. Neuro-Oncology 2021, 151, 393–402. [Google Scholar] [CrossRef] [PubMed]

- Emblem, K.E.; Pinho, M.C.; Zöllner, F.G.; Due-Tonnessen, P.; Hald, J.K.; Schad, L.R.; Meling, T.R.; Rapalino, O.; Bjornerud, A. A Generic Support Vector Machine Model for Preoperative Glioma Survival Associations. Radiology 2015, 275, 228–234. [Google Scholar] [CrossRef]

- Akbari, H.; Macyszyn, L.; Da, X.; Bilello, M.; Wolf, R.L.; Martinez-Lage, M.; Biros, G.; Alonso-Basanta, M.; O’Rourke, D.M.; Davatzikos, C. Imaging Surrogates of Infiltration Obtained Via Multiparametric Imaging Pattern Analysis Predict Subsequent Location of Recurrence of Glioblastoma. Neurosurgery 2016, 78, 572–580. [Google Scholar] [CrossRef]

- Emblem, K.E.; Due-Tonnessen, P.; Hald, J.K.; Bjornerud, A.; Pinho, M.C.; Scheie, D.; Schad, L.R.; Meling, T.R.; Zoellner, F.G. Machine Learning In Preoperative Glioma MRI: Survival Associations by Perfusion-Based Support Vector Machine Outperforms Traditional MRI. J. Magn. Reson. Imaging 2013, 40, 47–54. [Google Scholar] [CrossRef] [PubMed]

- Knoll, M.A.; Oermann, E.K.; Yang, A.I.; Paydar, I.; Steinberger, J.; Collins, B.; Collins, S.; Ewend, M.; Kondziolka, D. Survival of Patients With Multiple Intracranial Metastases Treated With Stereotactic Radiosurgery. Am. J. Clin. Oncol. 2018, 41, 425–431. [Google Scholar] [CrossRef] [PubMed]

- Azimi, P.; Shahzadi, S.; Sadeghi, S. Use Of Artificial Neural Networks to Predict the Probability of Developing New Cerebral Metastases After Radiosurgery Alone. J. Neurosurg. Sci. 2020, 64, 52–57. [Google Scholar] [CrossRef]

- Tewarie, I.A.; Senko, A.W.; Jessurun, C.A.C.; Zhang, A.T.; Hulsbergen, A.F.C.; Rendon, L.; McNulty, J.; Broekman, M.L.D.; Peng, L.C.; Smith, T.R.; et al. Predicting leptomeningeal disease spread after resection of brain metastases using machine learning. J. Neurosurg. 2022, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Blonigen, B.J.; Steinmetz, R.D.; Levin, L.; Lamba, M.A.; Warnick, R.E.; Breneman, J.C. Irradiated Volume as a Predictor of Brain Radionecrosis After Linear Accelerator Stereotactic Radiosurgery. Int. J. Radiat. Oncol. 2010, 77, 996–1001. [Google Scholar] [CrossRef]

- Chang, E.L.; Wefel, J.S.; Hess, K.R.; Allen, P.K.; Lang, F.F.; Kornguth, D.G.; Arbuckle, R.B.; Swint, J.M.; Shiu, A.S.; Maor, M.H.; et al. Neurocognition in Patients With Brain Metastases Treated With Radiosurgery or Radiosurgery Plus Whole-Brain Irradiation: A Randomised Controlled Trial. Lancet Oncol. 2009, 10, 1037–1044. [Google Scholar] [CrossRef]

- Mardor, Y.; Roth, Y.; Ocherashvilli, A.; Spiegelmann, R.; Tichler, T.; Daniels, D.; Maier, S.E.; Nissim, O.; Ram, Z.; Baram, J.; et al. Pretreatment Prediction of Brain Tumors Response to Radiation Therapy Using High b-Value Diffusion-Weighted MRI. Neoplasia 2004, 6, 136–142. [Google Scholar] [CrossRef] [PubMed]

- Hulsbergen, A.F.C.; Lo, Y.T.; Awakimjan, I.; Kavouridis, V.K.; Phillips, J.G.; Smith, T.R.; Verhoeff, J.J.C.; Yu, K.H.; Broekman, M.L.D.; Arnaout, O. Survival Prediction After Neurosurgical Resection of Brain Metastases: A Machine Learning Approach. Neurosurgery 2022, 91, 381–388. [Google Scholar] [CrossRef] [PubMed]

- Lacroix, M.; Abi-Said, D.; Fourney, D.R.; Gokaslan, Z.L.; Shi, W.; DeMonte, F.; Lang, F.F.; McCutcheon, I.E.; Hassenbusch, S.J.; Holland, E.; et al. A Multivariate Analysis Of 416 Patients with Glioblastoma Multiforme: Prognosis, Extent of Resection, and Survival. J. Neurosurg. 2001, 95, 190–198. [Google Scholar] [CrossRef] [PubMed]

- Cairncross, J.G.; Ueki, K.; Zlatescu, M.C.; Lisle, D.K.; Finkelstein, D.M.; Hammond, R.R.; Silver, J.S.; Stark, P.C.; Macdonald, D.R.; Ino, Y.; et al. Specific Genetic Predictors of Chemotherapeutic Response and Survival in Patients with Anaplastic Oligodendrogliomas. J. Natl. Cancer Inst. 1998, 90, 1473–1479. [Google Scholar] [CrossRef]

- Eckel-Passow, J.E.; Lachance, D.H.; Molinaro, A.M.; Walsh, K.M.; Decker, P.A.; Sicotte, H.; Pekmezci, M.; Rice, T.W.; Kosel, M.L.; Smirnov, I.V.; et al. Glioma Groups Based on 1p/19q, IDH, and TERT Promoter Mutations in Tumors. N. Engl. J. Med. 2015, 372, 2499–2508. [Google Scholar] [CrossRef]

- Weller, M.; Stupp, R.; Reifenberger, G.; Brandes, A.A.; Bent, M.J.V.D.; Wick, W.; Hegi, M.E. MGMT Promoter Methylation in Malignant Gliomas: Ready for Personalized Medicine? Nat. Rev. Neurol. 2009, 6, 39–51. [Google Scholar] [CrossRef]

- Senders, J.T.; Staples, P.; Mehrtash, A.; Cote, D.J.; Taphoorn, M.J.B.; Reardon, D.A.; Gormley, W.B.; Smith, T.R.; Broekman, M.L.; Arnaout, O. An Online Calculator for the Prediction of Survival in Glioblastoma Patients Using Classical Statistics and Machine Learning. Neurosurgery 2020, 86, E184–E192. [Google Scholar] [CrossRef] [PubMed]

- Law, M.; Young, R.J.; Babb, J.S.; Peccerelli, N.; Chheang, S.; Gruber, M.L.; Miller, D.C.; Golfinos, J.G.; Zagzag, D.; Johnson, G. Gliomas: Predicting Time to Progression or Survival with Cerebral Blood Volume Measurements at Dynamic Susceptibility-weighted Contrast-enhanced Perfusion MR Imaging. Radiology 2008, 247, 490–498. [Google Scholar] [CrossRef]

- Price, S.J.; Jena, R.; Burnet, N.G.; Carpenter, T.A.; Pickard, J.D.; Gillard, J.H. Predicting Patterns of Glioma Recurrence Using Diffusion Tensor Imaging. Eur. Radiol. 2007, 17, 1675–1684. [Google Scholar] [CrossRef]

- Chang, K.; Beers, A.L.; Bai, H.X.; Brown, J.M.; Ly, K.I.; Li, X.; Senders, J.T.; Kavouridis, V.K.; Boaro, A.; Su, C.; et al. Automatic Assessment of Glioma Burden: A Deep Learning Algorithm for Fully Automated Volumetric and Bidimensional Measurement. Neuro-Oncology 2019, 21, 1412–1422. [Google Scholar] [CrossRef] [PubMed]

- Senders, J.T.; Zaki, M.M.; Karhade, A.V.; Chang, B.; Gormley, W.B.; Broekman, M.L.; Smith, T.R.; Arnaout, O. An Introduction and Overview of Machine Learning in Neurosurgical Care. Acta Neurochir. 2017, 160, 29–38. [Google Scholar] [CrossRef] [PubMed]

- Winkler-Schwartz, A.; Bissonnette, V.; Mirchi, N.; Ponnudurai, N.; Yilmaz, R.; Ledwos, N.; Siyar, S.; Azarnoush, H.; Karlik, B.; Del Maestro, R.F. Artificial Intelligence in Medical Education: Best Practices Using Machine Learning to Assess Surgical Expertise in Virtual Reality Simulation. J. Surg. Educ. 2019, 76, 1681–1690. [Google Scholar] [CrossRef]

- Celtikci, E. A Systematic Review on Machine Learning in Neurosurgery: The Future of Decision Making in Patient Care. Turk. Neurosurg. 2017, 28, 167–173. [Google Scholar] [CrossRef] [PubMed]

- Staartjes, V.E.; Stumpo, V.; Kernbach, J.M.; Klukowska, A.M.; Gadjradj, P.S.; Schröder, M.L.; Veeravagu, A.; Stienen, M.N.; van Niftrik, C.H.B.; Serra, C.; et al. Machine Learning in Neurosurgery: A Global Survey. Acta Neurochir. 2020, 162, 3081–3091. [Google Scholar] [CrossRef] [PubMed]

- Azimi, P.; Benzel, E.C.; Shahzadi, S.; Azhari, S.; Mohammadi, H.R. Use of Artificial Neural Networks to Predict Surgical Satisfaction in Patients With Lumbar Spinal Canal Stenosis. J. Neurosurg. Spine 2014, 20, 300–305. [Google Scholar] [CrossRef] [PubMed]

- Hoffman, H.; Lee, S.I.; Garst, J.H.; Lu, D.S.; Li, C.H.; Nagasawa, D.T.; Ghalehsari, N.; Jahanforouz, N.; Razaghy, M.; Espinal, M.; et al. Use of Multivariate Linear Regression and Support Vector Regression to Predict Functional Outcome After Surgery for Cervical Spondylotic Myelopathy. J. Clin. Neurosci. 2015, 22, 1444–1449. [Google Scholar] [CrossRef] [PubMed]

- Shamim, M.S.; Enam, S.A.; Qidwai, U. Fuzzy Logic in Neurosurgery: Predicting Poor Outcomes After Lumbar Disk Surgery in 501 Consecutive Patients. Surg. Neurol. 2009, 72, 565–572. [Google Scholar] [CrossRef]

- Azimi, P.; Benzel, E.C.; Shahzadi, S.; Azhari, S.; Zali, A.R. Prediction of Successful Surgery Outcome in Lumbar Disc Herniation Based on Artificial Neural Networks. Glob. Spine J. 2014, 4. [Google Scholar] [CrossRef]

- Azimi, P.; Mohammadi, H.R.; Benzel, E.C.; Shahzadi, S.; Azhari, S. Use of Artificial Neural Networks to Predict Recurrent Lumbar Disk Herniation. J. Spinal Disord. Tech. 2015, 28, E161–E165. [Google Scholar] [CrossRef]

- Fatima, N.; Zheng, H.; Massaad, E.; Hadzipasic, M.; Shankar, G.M.; Shin, J.H. Development and Validation of Machine Learning Algorithms for Predicting Adverse Events After Surgery for Lumbar Degenerative Spondylolisthesis. World Neurosurg. 2020, 140, 627–641. [Google Scholar] [CrossRef] [PubMed]

- Karhade, A.V.; Thio, Q.C.B.S.; Ogink, P.T.; Shah, A.A.; Bono, C.M.; Oh, K.S.; Saylor, P.J.; Schoenfeld, A.J.; Shin, J.H.; Harris, M.B.; et al. Development of Machine Learning Algorithms for Prediction of 30-Day Mortality After Surgery for Spinal Metastasis. Neurosurgery 2018, 85, E83–E91. [Google Scholar] [CrossRef]

- Ames, C.P.; Smith, J.S.; Pellisé, F.; Kelly, M.; Alanay, A.; Acaroğlu, E.; Pérez-Grueso, F.J.S.; Kleinstück, F.; Obeid, I.; Vila-Casademunt, A.; et al. Artificial Intelligence Based Hierarchical Clustering of Patient Types and Intervention Categories in Adult Spinal Deformity Surgery. Spine 2019, 44, 915–926. [Google Scholar] [CrossRef]

- Xia, X.-P.; Chen, H.-L.; Cheng, H.-B. Prevalence of Adjacent Segment Degeneration After Spine Surgery. Spine 2013, 38, 597–608. [Google Scholar] [CrossRef]

- Wang, F.; Hou, H.-T.; Wang, P.; Zhang, J.-T.; Shen, Y. Symptomatic Adjacent Segment Disease After Single-Lever Anterior Cervical Discectomy and Fusion. Medicine 2017, 96, e8663. [Google Scholar] [CrossRef]

- Zhang, J.T.; Cao, J.M.; Meng, F.T.; Shen, Y. Cervical Canal Stenosis and Adjacent Segment Degeneration After Anterior Cervical Arthrodesis. Eur. Spine J. 2015, 24, 1590–1596. [Google Scholar] [CrossRef] [PubMed]

- Kong, L.; Cao, J.; Wang, L.; Shen, Y. Prevalence of Adjacent Segment Disease Following Cervical Spine Surgery. Medicine 2016, 95, e4171. [Google Scholar] [CrossRef]

- Yang, X.; Bartels, R.H.M.A.; Donk, R.; Arts, M.P.; Goedmakers, C.M.W.; Vleggeert-Lankamp, C.L.A. The Association of Cervical Sagittal Alignment With Adjacent Segment Degeneration. Eur. Spine J. 2019, 29, 2655–2664. [Google Scholar] [CrossRef] [PubMed]

- Goedmakers, C.M.W.; Lak, A.M.; Duey, A.H.; Senko, A.W.; Arnaout, O.; Groff, M.W.; Smith, T.R.; Vleggeert-Lankamp, C.L.A.; Zaidi, H.A.; Rana, A.; et al. Deep Learning for Adjacent Segment Disease at Preoperative MRI for Cervical Radiculopathy. Radiology 2021, 301, 664–671. [Google Scholar] [CrossRef] [PubMed]

- Karhade, A.V.; Bongers, M.E.; Groot, O.Q.; Kazarian, E.R.; Cha, T.D.; Fogel, H.A.; Hershman, S.H.; Tobert, D.G.; Schoenfeld, A.J.; Bono, C.M.; et al. Natural Language Processing for Automated Detection of Incidental Durotomy. Spine J. 2020, 20, 695–700. [Google Scholar] [CrossRef] [PubMed]

- Karhade, A.V.; Bongers, M.E.; Groot, O.Q.; Cha, T.D.; Doorly, T.P.; Fogel, H.A.; Hershman, S.H.; Tobert, D.G.; Srivastava, S.D.; Bono, C.M.; et al. Development of Machine Learning and Natural Language Processing Algorithms for Preoperative Prediction and Automated Identification of Intraoperative Vascular Injury in Anterior Lumbar Spine Surgery. Spine J. 2021, 21, 1635–1642. [Google Scholar] [CrossRef] [PubMed]

- Benyamin, R.; Trescot, A.M.; Datta, S.; Buenaventura, R.; Adlaka, R.; Sehgal, N.; Glaser, S.E.; Vallejo, R. Opioid Complications and Side Effects. Pain Physician 2008, 11, S105–S120. [Google Scholar] [CrossRef]

- Schofferman, J. Long-Term Use of Opioid Analgesics for the Treatment of Chronic Pain of Nonmalignant Origin. J. Pain Symptom Manag. 1993, 8, 279–288. [Google Scholar] [CrossRef] [PubMed]

- Schofferman, J. Long-Term Opioid Analgesic Therapy for Severe Refractory Lumbar Spine Pain. Clin. J. Pain 1999, 15, 136–140. [Google Scholar] [CrossRef]

- Bartleson, J.D. Evidence For and Against the Use of Opioid Analgesics for Chronic Nonmalignant Low Back Pain: A Review. Pain Med. 2002, 3, 260–271. [Google Scholar] [CrossRef] [PubMed]

- Jamison, R.N.; Raymond, S.A.; Slawsby, E.A.; Nedeljkovic, S.S.; Katz, N.P. Opioid Therapy for Chronic Noncancer Back Pain. Spine 1998, 23, 2591–2600. [Google Scholar] [CrossRef]

- Paulozzi, L.J.; Budnitz, D.S.; Xi, Y. Increasing Deaths from Opioid Analgesics in the United States. Pharmacoepidemiol. Drug Saf. 2006, 15, 618–627. [Google Scholar] [CrossRef]

- Karhade, A.V.; Ogink, P.T.; Thio, Q.C.; Cha, T.D.; Gormley, W.B.; Hershman, S.H.; Smith, T.R.; Mao, J.; Schoenfeld, A.J.; Bono, C.M.; et al. Development of Machine Learning Algorithms for Prediction of Prolonged Opioid Prescription After Surgery for Lumbar Disc Herniation. Spine J. 2019, 19, 1764–1771. [Google Scholar] [CrossRef] [PubMed]

- Stopa, B.M.; Robertson, F.C.; Karhade, A.V.; Chua, M.; Broekman, M.L.D.; Schwab, J.H.; Smith, T.R.; Gormley, W.B. Predicting Nonroutine Discharge After Elective Spine Surgery: External Validation of Machine Learning Algorithms. J. Neurosurg. Spine 2019, 31, 742–747. [Google Scholar] [CrossRef]

- Huang, K.T.; Silva, M.A.; See, A.P.; Wu, K.C.; Gallerani, T.; Zaidi, H.A.; Lu, Y.; Chi, J.H.; Groff, M.W.; Arnaout, O.M. A Computer Vision Approach to Identifying the Manufacturer and Model of Anterior Cervical Spinal Hardware. J. Neurosurg. Spine 2019, 31, 844–850. [Google Scholar] [CrossRef]

- Grigsby, J.; Kramer, R.E.; Schneiders, J.L.; Gates, J.R.; Smith, W.B. Predicting Outcome of Anterior Temporal Lobectomy Using Simulated Neural Networks. Epilepsia 1998, 39, 61–66. [Google Scholar] [CrossRef] [PubMed]

- Antony, A.R.; Alexopoulos, A.V.; González-Martínez, J.A.; Mosher, J.C.; Jehi, L.; Burgess, R.C.; So, N.K.; Galán, R.F. Functional Connectivity Estimated from Intracranial EEG Predicts Surgical Outcome in Intractable Temporal Lobe Epilepsy. PLoS ONE 2013, 8, e77916. [Google Scholar] [CrossRef] [PubMed]

- Arle, J.E.; Perrine, K.; Devinsky, O.; Doyle, W.K. Neural Network Analysis of Preoperative Variables and Outcome in Epilepsy Surgery. J. Neurosurg. 1999, 90, 998–1004. [Google Scholar] [CrossRef]

- Armañanzas, R.; Alonso-Nanclares, L.; DeFelipe-Oroquieta, J.; Kastanauskaite, A.; de Sola, R.G.; DeFelipe, J.; Bielza, C.; Larrañaga, P. Machine Learning Approach for the Outcome Prediction of Temporal Lobe Epilepsy Surgery. PLoS ONE 2013, 8, e62819. [Google Scholar] [CrossRef] [PubMed]

- Bernhardt, B.C.; Hong, S.-J.; Bernasconi, A.; Bernasconi, N. Magnetic Resonance Imaging Pattern Learning in Temporal Lobe Epilepsy: Classification and Prognostics. Ann. Neurol. 2015, 77, 436–446. [Google Scholar] [CrossRef] [PubMed]

- Feis, D.-L.; Schoene-Bake, J.-C.; Elger, C.; Wagner, J.; Tittgemeyer, M.; Weber, B. Prediction of Post-Surgical Seizure Outcome in Left Mesial Temporal Lobe Epilepsy. NeuroImage Clin. 2013, 2, 903–911. [Google Scholar] [CrossRef]

- Njiwa, J.Y.; Gray, K.; Costes, N.; Mauguiere, F.; Ryvlin, P.; Hammers, A. Advanced [18F]FDG and [11C]flumazenil PET Analysis For Individual Outcome Prediction After Temporal Lobe Epilepsy Surgery for Hippocampal Sclerosis. NeuroImage Clin. 2014, 7, 122–131. [Google Scholar] [CrossRef] [PubMed]

- Memarian, N.; Kim, S.; Dewar, S.; Engel, J.; Staba, R.J. Multimodal Data and Machine Learning for Surgery Outcome Prediction In Complicated Cases of Mesial Temporal Lobe Epilepsy. Comput. Biol. Med. 2015, 64, 67–78. [Google Scholar] [CrossRef]

- Torlay, L.; Perrone-Bertolotti, M.; Thomas, E.; Baciu, M. Machine Learning–Xgboost Analysis of Language Networks to Classify Patients with Epilepsy. Brain Inform. 2017, 4, 159–169. [Google Scholar] [CrossRef]

- Abbasi, B.; Goldenholz, D.M. Machine Learning Applications in Epilepsy. Epilepsia 2019, 60, 2037–2047. [Google Scholar] [CrossRef]

- A Reinforcement Learning-Based Framework for the Generation and Evolution of Adaptation Rules. 2017 IEEE International Conference on Autonomic Computing (ICAC), Columbus, OH, USA, 17–21 July 2017. [CrossRef]

- Wiebe, S.; Blume, W.T.; Girvin, J.P.; Eliasziw, M.; Effectiveness and Efficiency of Surgery for Temporal Lobe Epilepsy Study Group. A Randomized, Controlled Trial of Surgery for Temporal-Lobe Epilepsy. N. Engl. J. Med. 2001, 345, 311–318. [Google Scholar] [CrossRef]

- Larivière, S.; Weng, Y.; De Wael, R.V.; Royer, J.; Frauscher, B.; Wang, Z.; Bernasconi, A.; Bernasconi, N.; Schrader, D.; Zhang, Z.; et al. Functional Connectome Contractions in Temporal Lobe Epilepsy: Microstructural Underpinnings and Predictors Of Surgical Outcome. Epilepsia 2020, 61, 1221–1233. [Google Scholar] [CrossRef] [PubMed]

- Faron, A.; Sichtermann, T.; Teichert, N.; Luetkens, J.A.; Keulers, A.; Nikoubashman, O.; Freiherr, J.; Mpotsaris, A.; Wiesmann, M. Performance of a Deep-Learning Neural Network to Detect Intracranial Aneurysms from 3D TOF-MRA Compared to Human Readers. Clin. Neuroradiol. 2019, 30, 591–598. [Google Scholar] [CrossRef] [PubMed]

- Zhu, W.; Li, W.; Tian, Z.; Zhang, Y.; Wang, K.; Zhang, Y.; Liu, J.; Yang, X. Stability Assessment of Intracranial Aneurysms Using Machine Learning Based on Clinical and Morphological Features. Transl. Stroke Res. 2020, 11, 1287–1295. [Google Scholar] [CrossRef] [PubMed]

- Park, A.; Chute, C.; Rajpurkar, P.; Lou, J.; Ball, R.L.; Shpanskaya, K.; Jabarkheel, R.; Kim, L.H.; McKenna, E.; Tseng, J.; et al. Deep Learning–Assisted Diagnosis of Cerebral Aneurysms Using the HeadXNet Model. JAMA Netw. Open 2019, 2, e195600. [Google Scholar] [CrossRef]

- Silva, M.A.; Patel, J.; Kavouridis, V.; Gallerani, T.; Beers, A.; Chang, K.; Hoebel, K.V.; Brown, J.; See, A.P.; Gormley, W.B.; et al. Machine Learning Models can Detect Aneurysm Rupture and Identify Clinical Features Associated with Rupture. World Neurosurg. 2019, 131, e46–e51. [Google Scholar] [CrossRef]

- Sahlein, D.H.; Gibson, D.; Scott, J.A.; De Nardo, A.; Amuluru, K.; Payner, T.; Rosenbaum-Halevi, D.; Kulwin, C. Artificial Intelligence Aneurysm Measurement Tool Finds Growth in all Aneurysms that Ruptured During Conservative Management. J. NeuroInterventional Surg. 2022. [Google Scholar] [CrossRef] [PubMed]

- Wiebers, D.O. Unruptured Intracranial Aneurysms: Natural History, Clinical Outcome, and Risks of Surgical and Endovascular Treatment. Lancet 2003, 362, 103–110. [Google Scholar] [CrossRef]

- Brunozzi, D.; Theiss, P.; Andrews, A.; Amin-Hanjani, S.; Charbel, F.T.; Alaraj, A. Correlation Between Laminar Wall Shear Stress and Growth of Unruptured Cerebral Aneurysms: In Vivo Assessment. World Neurosurg. 2019, 131, e599–e605. [Google Scholar] [CrossRef]

- Nomura, S.; Kunitsugu, I.; Ishihara, H.; Koizumi, H.; Yoneda, H.; Shirao, S.; Oka, F.; Suzuki, M. Relationship between Aging and Enlargement of Intracranial Aneurysms. J. Stroke Cerebrovasc. Dis. 2015, 24, 2049–2053. [Google Scholar] [CrossRef] [PubMed]

- Liu, Q.; Jiang, P.; Jiang, Y.; Ge, H.; Li, S.; Jin, H.; Li, Y. Prediction of Aneurysm Stability Using a Machine Learning Model Based on PyRadiomics-Derived Morphological Features. Stroke 2019, 50, 2314–2321. [Google Scholar] [CrossRef]

- Hop, J.W.; Rinkel, G.J.; Algra, A.; van Gijn, J. Case-Fatality Rates and Functional Outcome After Subarachnoid Hemorrhage. Stroke 1997, 28, 660–664. [Google Scholar] [CrossRef] [PubMed]

- Nieuwkamp, D.J.; Setz, L.E.; Algra, A.; Linn, F.H.; de Rooij, N.K.; Rinkel, G.J. Changes in Case Fatality of Aneurysmal Subarachnoid Haemorrhage over Time, According to Age, Sex, and Region: A Meta-Analysis. Lancet Neurol. 2009, 8, 635–642. [Google Scholar] [CrossRef] [PubMed]

- Al-Khindi, T.; Macdonald, R.L.; Schweizer, T.A. Cognitive and Functional Outcome After Aneurysmal Subarachnoid Hemorrhage. Stroke 2010, 41, e519–e536. [Google Scholar] [CrossRef]

- Roos, Y.B.W.E.M.; Zarranz, J.J.; Rouco, I.; Gómez-Esteban, J.C.; Corral, J. Complications and Outcome in Patients With Aneurysmal Subarachnoid Haemorrhage: A Prospective Hospital Based Cohort Study in the Netherlands. J. Neurol. Neurosurg. Psychiatry 2000, 68, 337–341. [Google Scholar] [CrossRef]

- Koch, M.; Acharjee, A.; Ament, Z.; Schleicher, R.; Bevers, M.; Stapleton, C.; Patel, A.; Kimberly, W.T. Machine Learning-Driven Metabolomic Evaluation of Cerebrospinal Fluid: Insights Into Poor Outcomes After Aneurysmal Subarachnoid Hemorrhage. Neurosurgery 2021, 88, 1003–1011. [Google Scholar] [CrossRef] [PubMed]

- Vergouwen, M.D.; Vermeulen, M.; van Gijn, J.; Rinkel, G.J.; Wijdicks, E.F.; Muizelaar, J.P.; Mendelow, A.D.; Juvela, S.; Yonas, H.; Terbrugge, K.G.; et al. Definition of Delayed Cerebral Ischemia After Aneurysmal Subarachnoid Hemorrhage as an Outcome Event in Clinical Trials and Observational Studies. Stroke 2010, 41, 2391–2395. [Google Scholar] [CrossRef]

- Ramos, L.A.; Van Der Steen, W.E.; Barros, R.S.; Majoie, C.B.L.M.; Berg, R.V.D.; Verbaan, D.; Vandertop, W.P.; Zijlstra, I.J.A.J.; Zwinderman, A.H.; Strijkers, G.; et al. Machine Learning Improves Prediction of Delayed Cerebral Ischemia in Patients With Subarachnoid Hemorrhage. J. NeuroInterventional Surg. 2018, 11, 497–502. [Google Scholar] [CrossRef]

- Asadi, H.; Kok, H.K.; Looby, S.; Brennan, P.; O’Hare, A.; Thornton, J. Outcomes and Complications After Endovascular Treatment of Brain Arteriovenous Malformations: A Prognostication Attempt Using Artificial Intelligence. World Neurosurg. 2016, 96, 562–569.e1. [Google Scholar] [CrossRef]

- Gonzalez-Romo, N.I.; Hanalioglu, S.; Mignucci-Jiménez, G.; Koskay, G.; Abramov, I.; Xu, Y.; Park, W.; Lawton, M.T.; Preul, M.C. Quantification of Motion During Microvascular Anastomosis Simulation Using Machine Learning Hand Detection. Neurosurg. Focus 2023, 54, E2. [Google Scholar] [CrossRef]

| 1st Author Paper, Year | Output | Input | Output Measures | ML Model | Number of Enrollment | Model Performance | Limitation |

|---|---|---|---|---|---|---|---|

| Tumor | |||||||

| Buchlak et al., 2021 [22] | Disease Diagnosis, Outcome | Glioma MRI data | AUC, Sensitivity, Specificity, Accuracy | CNN, SVM, RF | 153 | AUC = 0.87 ± 0.09 Sensitivity = 0.87 ± 0.10; Specificity = 0.0.86 ± 0.10; Precision = 0.88 ± 0.11 | - Large sample size influences NLP classification models. - Conference papers were excluded from the review. - Optimized deep language models are suggested for improved performance. - Readers are referred to specific papers for further information. |

| McAvoy et al., 2021 [23] | Disease Diagnosis | GBM and PCNSL MRI data | AUC | CNN | 320 | AUC = 0.94 (95% CI: 0.91–0.97) for GBM AUC = 0.95 (95% CI: 0.92–0.98) for PCNL. | - Retrospective design with a small number of patients from two academic institutions. - The findings may have limited generalizability to other settings. - The use of PNG exports of DICOM images results in data loss. - There is no direct comparison between the classification outcomes of CNNs and radiologists. - Further research is needed to determine the clinical value of the tool. |

| Boaro et al., 2021 [24] | Automatically segment meningiomas from MRI scan | Meningioma MRI data | Dice score, Hausdorff distance, Inter-expert variability | 3D-CNN | 806 | Dice score of 85.2% (mean Hausdorff = 8.8 mm; mean average Hausdorff distance = 0.4) Median of 88.2% (median Hausdorff = 5.0 mm; median average Hausdorff distance = 0.2 mm) Inter-expert variability in segmenting the same tumors with means ranging from 80.0 to 90.3% | - Limited in its ability to evaluate post-operative residuals, tumor recurrence, or tumor growth due to the inclusion of single pre-operative scans. - Model’s detection performance was not tested on brain MRI scans without meningioma. - Algorithm has not been integrated into the hospital informatics system. |

| Zhou et al., 2019 [25] | IDH genotype and 1p19q codeletion in gliomas | Preoperative MRI of glioma patients | AUC, Accuracy | ML, RF | 538 | IDH AUC: training 0.921, validation 0.919; Accuracy = 78.2% | - Retrospective design focused specifically on known gliomas, limiting its applicability to different tumor types and non-tumor mimickers. |

| Tonutti et al., 2017 [32] | Tumor deformation | Load-driven FEM simulations of tumor | Accuracy, Specificity | ANN, SVR | - | ANN model predicted node positions with errors < 0.3 mm; SVR models had positional errors < 0.2 mm | - Use of generic mechanical parameters and exclusion of certain brain structures. |

| Shen et al., 2021 [33] | Intraoperative glioma diagnosis | Fluorescence of glioma tissue | AUC, Sensitivity, Specificity | FL-CNN | 1874 | AUC = 0.945; FL-CNN showed higher sensitivity (93.8% vs. 82.0%, p < 0.001); predicted grade and Ki-67 level (AUC 0.810 and 0.625) | - Reliance on NIR-II fluorescence imaging. - While NIR-II offers advantages over NIR-I, it may still have lower specificity compared to clinically available methods. |

| Hollon et al., 2021 [34] | Diagnose glioma molecular classes intraoperatively | Raman spectroscopy, coherent anti-Stokes Raman scattering (CARS) microscopy, Stimulated Raman histology (SRH) | Accuracy | CNN | - | Accuracy = 92%; Sensitivity = 93%; Specificity = 91% | |

| Tewarie et al., 2022 [40] | Predict outcomes of LMD patients in Brain Metastasis | Clinical characteristics of patients with brain metastasis | Risk ratio, p value | Conditional survival forest, Cox proportional hazards model, Extreme gradient boosting (XGBoost), Extra trees, LR, Synthetic Minority Oversampling Technique (SMOTE) | 1054 | XGBoost AUC = 0.83; RF and Cox proportional hazards model C-index = 0.76 | - Wide time span for patient inclusion. - Including lymph node metastasis as an LMD risk factor is novel and requires more investigation. - Patients receiving only radiation therapy were excluded from the study. - Use of SMOTE reduced data variability. - LMD prognostication at brain metastases (BM) diagnosis is theoretical and not yet widely used in clinical care. |

| Hulsbergen et al., 2022 [44] | Predicts 6-month survival after neurosurgical resection for BM | Data of brain metastasis patients | AUC, Calibration, Brier score | Gradient boosting, K-nearest neighbors, LR, NB, RF, SVM | 1062 | AUC of 0.71 predicted both 6-month and longitudinal overall survival (p < 0.0005) | - Use of retrospective data for internal validation. - The study focuses on survival at a 6-month cutoff rather than overall median survival. - Intraoperative and postoperative factors can influence survival prediction. |

| Senders et al., 2018 [49] | Predict Survival in GBM patients | Demographic, Socioeconomic, Radiographical, Therapeutic Characteristics | C-index | AFT, Boosted decision trees survival, CPHR, RF, recursive partitioning algorithms | 20,821 | C-index = 0.70 | - Being restricted to continuous and binary models. - Unable to compute subject-level survival curves and lacks interpretability. - Computational inefficiency - Evaluating models based on multiple criteria - Factors unrelated to prediction performance. |

| Chang et al., 2019 [52] | Evaluation of treatment response | Preoperative MRI of low- or high-grade gliomas, Postoperative MRI with newly diagnosed glioblastoma | Sørensen–Dice coefficient, Sensitivity, Specificity, Dunnett’s test, Spearman’s rank correlation coefficient, intraclass correlation coefficient (ICC) | Deep Learning, Hybrid Watershed Algorithm, Robust Learning-Based Brain Extraction, Brain Extraction Tool, 3dSkullStrip, Brain Surface Extractor | 843 preoperative MRIs from 843 patients with gliomas; 713 longitudinal postoperative MRIs from 54 patients with newly diagnosed glioblastomas | Agreement between manually and automatically derived longitudinal changes in tumor burden: 0.917, 0.966, and 0.850 | - Patient cohort is small and from a single institution. - Lack of comparison with other approaches. - Smaller tumors were excluded from the study. - Variability in MR imaging availability. - Confidence assessment in segmentations is absent. |

| Senders et al., 2018 [53] | Presurgical planning, Intraoperative guidance, Neurophysiological monitoring, and Neurosurgical outcome prediction | Neurosurgical treatment | Median accuracy, Dice similarity coefficient, Median sensitivity | ANN, SVM, Fuzzy C-means, Bayesian Learning, RF, Quadratic discriminant analysis, LDA, Gaussian mixture models, LR, K-nearest neighbor, NLP, K-means | 6402 | Brain tumor: Median Accuracy = 92%, Dice similarity coefficient = 88%; Radiological of critical/target brain: Median Accuracy = 94%, Dice similarity coefficient = 91%; Predict epileptogenic focus: Median Accuracy = 86%; Detect seizure by iEEG: Median Sensitivity = 96%; Intraop tumor demarcation: Median Accuracy = 89% | - Need for more detailed analysis of all studies and a focus on perioperative care applications. - Caution is advised when interpreting the quantitative performance summary. |

| Spine | |||||||

| Fatima et al., 2020 [62] | Clinical decision-making, Patient outcomes | Gender, age, American Society of Anesthesiologists grade, Autogenous iliac bone graft, Instrumented fusion, Levels of surgery, Surgical approach, Functional status, Preoperative serum albumin (g/dL), Serum alkaline phosphatase (IU/mL) | Discrimination, Calibration, Brier score, Decision analysis | LR and LASSO | 3965 | AUC = 0.7; Brier score = 0.08; Predicting overall AEs: Logistic regression = 0.70 (95% CI, 0.62–0.74), LASSO = 0.65 (95% CI, 0.61–0.69) | - Variation in patient and surgical characteristics within the database used. - Limited postoperative outcome data beyond 30 days. - Potential missing variables and coding errors in the data are additional limitations. |

| Karhade et al., 2019 [63] | Postoperative outcome | Preoperative prognostic factors | Discrimination (c-statistic), Calibration (assessed by calibration slope and intercept), Brier score, Decision analysis | SVM, Neural Network (NN) | 1790 | c-statistic: SVM = 0.760; NN = 0.769 | - Variable data veracity. - Limited availability of pertinent predictors. - Unable to capture the overall trajectory of metastatic disease. - Lack of explanatory capability. - No examination of multivariate logistic regression or proportional hazard models. |

| Ames et al., 2019 [64] | Predict surgical outcome | Patient, Surgical factor | p-Value | Unsupervised hierarchical clustering | 570 | overall p-value 0.004 | - Dependency on sample size - Observation heterogeneity for determining patient and operative clusters. |

| Goedmakers et al., 2021 [70] | Predicting Adjacent Segment Disease (ASD) | Preoperative Cervical MRI | Accuracy, Sensitivity, Specificity, PPV, NPV, F1-score, Matthews correlation coefficient, Informedness, Markedness | VGGNet19, ResNet18, ResNet50 | 344 | Predict ASD: Accuracy = 95%; Sensitivity = 80%; Specificity = 97% | - Reliance on the last available follow up. - Clinical and demographic characteristics were not considered in the analysis. - Variability in surgical techniques and outcomes. - Small number of MRI scans limited the study. - Distribution of ASD cases was imbalanced. |

| Karhade et al., 2020 [71] | Incidental durotomies in free-text operative notes | operative notes of patients undergoing lumbar spine surgery | AUC-ROC, Precision-recall curve, Brier score | NLP | 1000 | AUC-ROC = 0.99 Sensitivity = 0.89 Specificity = 0.99 PPV = 0.89 NPV = 0.99. | - Retrospective nature within a single healthcare system - Influence of shared surgical practices on documentation could affect the results. - Unrecognized or unrecorded incidental durotomies may have been overlooked. - Impracticality of multiple reviews by different researchers or spine surgeons is a limitation of the current work. |

| Karhade et al., 2021 [72] | Intraoperative vascular injury | Age, male sex, body mass index, diabetes, L4-L5 exposure, and infection-related surgery (discitis, osteomyelitis) | C-statistic, Sensitivity, Specificity, PPV, NPV, F1-score | NLP | 1035 | C-statistic = 0.92; Sensitivity = 0.86; Specificity = 0.93; PPV = 0.51; NPV = 0.99; F1-score = 0.64 | - Retrospective design from a single healthcare entity. - Prospective and multi-institutional validation is needed to confirm the findings. - Lack of a rigorous gold standard for intraoperative vascular injury is a limitation. - NLP algorithm used in the study may be prone to overfitting. |

| Karhade et al., 2019 [79] | Prediction of prolonged opioid prescription after surgery for lumbar disc herniation | Chart review of patients undergoing surgery for lumbar disc herniation | C-statistic or AUC, Calibration, Brier Score | Elastic-net penalized LR, RF, Stochastic Gradient Boosting, NN, SVM | 5413 | C-statistic = 0.81; AUC = 0.81; calibration (slope = 1.13, intercept = 0.13); overall performance (Brier = 0.064) | - Unavailability of opioid dose data and exclusion of illicit opioid use. - Opioid use approximation was based on medical record data. - Patient-reported outcomes were not included in the study. - Changing surgical techniques over the study period could have influenced the results. - The study included a limited diversity of institutions. |

| Stopa et al., 2019 [80] | Nonroutine discharge | Age, Sex, BMI, ASA class, Preoperative functional status, Number of fusion levels, Comorbidities, Preoperative laboratory findings, Discharge disposition | AUC, Discrimination (c-statistic), Calibration, and Positive and Negative predictive values (PPVs and NPVs) | Python (version 3.6) and the R programming language (version 3.5.1). | 144 | AUC = 0.89; calibration slope = 1.09; calibration intercept = −0.08; PPV = 0.50; NPV = 0.97 | - Positive findings in terms of external validation. - Different algorithms have shown varying levels of performance in discrimination and calibration. |

| Huang et al., 2019 [81] | Identification of implanted spinal hardware | AP film cervical radiography after ACDF | Cross-validation analysis, Accuracy | KAZE feature detector, K-means clustering, MATLAB software (Vision System Toolbox and Statistics and Machine Learning Toolbox) | 321 | Top choice: 91.5% ± 3.8%; top 2 choices: 97.1% ± 2.0%; top 3 choices: 98.4% ± 1.3% | - Limited number of available hardware systems for training. - Additional datasets are needed to evaluate visual artifacts and overlapping radiopaque “noise.” - Prospective data is required to assess the clinical utility of the model. - Potential applications of hardware classification beyond revision ACDF surgery. |

| Epilepsy | |||||||

| Grigsby et al., 1998 [82] | Predict seizure outcomes | History, Demographics, Clinical examination, Routine scalp EEG, Video-scalp EEG monitoring, Intracranial EEG monitoring, Intracarotid amobarbital (Wada) testing, CT, MRI, Neuropsychological assessment | Accuracy | SNN | 87 | Accuracy = 81.3% and 95.4% | - Retrospective design with patient records. - Prospective validation with new patients is needed for further validation. |

| Torlay et al., 2017 [90] | Atypical language patterns; differentiating patients with epilepsy from healthy people | fMRI | AUC | ML, XGBoost | 55 | AUC = 91 ± 5% | |

| Hosseini et al., 2017 [92] | Epilepsy Seizure Localization | Electroencephalography (EEG), Resting state-functional Magnetic Resonance Imaging (rs-fMRI), Diffusion Tensor Imaging (DTI) | Multiple t-test, Differential connectivity graph (DCG) | CNN | 9 | p-value: Normal 1.85 × 10⁻¹⁴; Seizure 4.64 × 10⁻²⁷ | - Limitations in reliably identifying preictal periods. - Need for an autonomic method that accurately detects and localizes epileptogenicity. |

| Memarian et al., 2015 [89] | Predict surgery outcome | Clinical, Electrophysiological, Structural magnetic resonance imaging (MRI) features | Accuracy | LDA, NB, SVM with radial basis function kernel (SVM-rbf), SVM with multilayer perceptron kernel (SVM-mlp), Least-Square SVM (LS-SVM). | 20 | Accuracy = 95% | - The limited spatial coverage of depth electrodes in intracranial EEG recordings poses a constraint. - Depth electrodes are not consistently implanted in all brain areas among patients. |

| Larivière et al., 2020 [94] | Predict postsurgical seizure outcome | Multimodal MRI imaging | Accuracy | Supervised machine learning with fivefold cross-validation | 30 | Accuracy = 76 ± 4% | - Limitations in sample size. - Regularization techniques were used. - Variability in follow-up times and lack of generalizability to other types of drug-resistant focal epilepsies. |

| Vascular | |||||||

| Park et al., 2019 [97] | Clinician performance with and without model augmentation | CTA examinations | Sensitivity, Specificity, Accuracy, time, interrater agreement | CNN | 818 | mean Sensitivity increased = 95%, mean Accuracy increased = 95%, mean Interrater agreement (Fleiss κ) increased = 0.060, from 0.799 to 0.859 (adjusted p = 0.05) mean Specificity = 95% Time to Diagnosis 95% | - Exclusion of ruptured aneurysms and aneurysms associated with other conditions. - Performance of the model in the presence of surgical hardware or devices remains uncertain. - Potential interpretation bias may exist - Conducted using data from a single institution. |

| Silva et al., 2019 [98] | Clinical Features, Detection of Aneurysm Rupture | Vascular imaging data of cerebral aneurysms | p value, AUC, Sensitivity, Specificity, PPV, NPV | RF, Linear SVM, Radial basis function kernel SVM | 845 | AUC: Linear SVM = 0.77; Radial basis function kernel SVM = 0.78 | - Single institution for the patient cohort. - The retrospective nature of the data comparing ruptured and unruptured cases is a limitation. - Long-term follow-up data on untreated aneurysms is lacking, which affects the analysis. |

| Liu et al., 2019 [103] | Predicting Aneurysm Stability | Morphological features of aneurysms | p value, Odds ratio, AUC, chi square test, t test | Lasso regression | 1139 | Flatness (OR, 0.584; 95% CI, 0.374–0.894); Spherical Disproportion (OR, 1.730; 95% CI, 1.143–2.658); Surface Area (OR, 0.697; 95% CI, 0.476–0.998); AUC = 0.853 (95% CI, 0.767–0.940) | - Single-center nature. - Reliance on post-rupture morphology as a surrogate for rupture risk evaluation. - Potential misclassification of unstable aneurysms without definite symptoms. - Limited focus on aneurysms within a specific size range, hindering analysis of smaller aneurysms. |

| Koch et al., 2021 [108] | Vasoactive molecules that predict poor outcome | CSF of aSAH patients | p value, 2-tailed Student’s t-test, Fisher’s exact test | Elastic net (EN) ML, Orthogonal partial least squares-discriminant analysis (OPLS-DA) | 138 | Poor mRS at discharge (p = 0.0005, 0.002, and 0.0001); at 90 days (p = 0.0036, 0.0001, and 0.004) | - Biased patient cohort. - No correlation found between metabolite levels and vasospasm. - Effect sizes observed were moderate. - Possibility of changes in metabolite profiles over time. |

| Ramos et al., 2019 [110] | Prediction of Delayed Cerebral Ischemia | Clinical and CT image data | AUC | LR, SVM, RF, MLP, Stacked Convolutional Denoising Auto-encoder, PCA | 317 | Logistic regression models: AUC = 0.63 (95% CI 0.62 to 0.63); ML with clinical data: AUC = 0.68 (95% CI 0.65 to 0.69); ML with clinical data and image features: AUC = 0.74 (95% CI 0.72 to 0.75) | - LR model used in the study had a limitation of a low number of events per feature, making it prone to overfitting. - ML algorithms used in the study can handle high-dimensional feature spaces with less risk of overfitting but still require external validation. - Determining the best parameter configurations for ML models can be computationally expensive. |

| Asadi et al., 2016 [111] | Outcome variables, Clinical outcome prediction | Study documented imaging, Clinical presentation, Procedure, complications, Outcomes | Accuracy | Supervised Machine learning MATLAB Neural Network Toolbox | 199 | Accuracy = 97.5% | - ML algorithms depend on large training datasets for improved performance and accuracy. - Uncovering the true underlying relationships between factors can be challenging for ML algorithms. - There is a risk of overfitting when irrelevant data is included in the training process. |

| Gonzalez-Romo et al., 2023 [112] | Microvascular anastomosis hand motion | 21 tracked hand landmarks from 6 participants | Mean (SD), One-way ANOVA | Python programming language and Mediapipe; CNN | 6 | 600 s: 4 nonexperts, 26 bites total; 2 experts, 33 bites (18 bites and 15 bites). 180 s: experts, 13 bites with mean latencies of 22.2 (4.4) and 23.4 (10.1) seconds; 2 intermediates, 9 bites with mean latencies of 31.5 (7.1) and 34.4 (22.1) seconds per bite | - Small sample size. - Prospective follow up was not conducted. - Assessment of other technique domains was limited. - The relationship between motion analysis and learning curves using different simulators is not well understood. |

| Trial or Registry | Number Enrolled | Condition | Interventions | Outcome Measure | Status |

|---|---|---|---|---|---|

| NCT04671368 | 141 | Central Nervous System Neoplasms | Diagnostic Test: Artificial Intelligence Diagnostic Test: Practicing Pathologists Diagnostic Test: Gold Standard | Diagnostic Accuracy of Study Arms Sensitivity and specificity of Study Arms Spearman Coefficient of Study Arms related to Gold Standard | Unknown status |

| NCT04220424 | 500 | Glioma | Diagnostic Test: MR and Histopathology images based prediction of molecular pathology and patient survival | AUC of Prediction performance | Unknown status |

| NCT04216550 | 600 | Recurrent Glioma | Drug: Apatinib | Changes of Response to Treatment Progression-Free Survival (PFS) Overall Survival (OS) Incidence of treatment-related adverse events | Recruiting |

| NCT04215211 | 2500 | Glioma | Diagnostic Test: Survival prediction for glioma patients | AUC of survival prediction performance | Recruiting |

| NCT04217018 | 3000 | Glioma | Diagnostic Test: Prediction of molecular pathology | AUC of prediction performance | Recruiting |

| NCT04215224 | 3500 | Glioma | Diagnostic Test: Histopathology images based survival prediction for glioma patients | AUC of survival prediction performance | Recruiting |

| NCT04217044 | 3000 | Glioma | Diagnostic Test: Histopathology images based prediction of molecular pathology | AUC of prediction performance | Recruiting |

| NCT04872842 | 1000 | Intracranial Aneurysm | Other: Observation | Aneurysm rupture Aneurysm growth | Completed |

| NCT05608122 | 1000 | Intracranial Aneurysm | Other: Observation | Aneurysm rupture Aneurysm growth | Recruiting |

| NCT04733638 | 500 | Intracerebral Hemorrhage | Device: Viz ICH VOLUME | Algorithm Performance Algorithm Processing Time Time to Notification Time to Treatment Length of Stay In Hospital Complications Modified Rankin Scale (mRS) at Discharge and 90 Days | Enrolling by invitation |

| NCT05804474 | 1500 | Intracranial Aneurysm | Other: Observational study | Intracranial aneurysm size Intracranial aneurysm volume Intracranial aneurysm height Intracranial aneurysm neck diameter Parent artery diameter Intracranial aneurysm width Aspect ratio Size ratio | Completed |

| NCT04608617 | 1000 | Stroke, Ischemic | Device: Viz LVO (De Novo Number DEN170073) | Transfer patients: Time from spoke CT/CTA to door-out Non-transfer patients: Time from Hub door to groin puncture Time from Spoke Door-In to Door-Out (DIDO) Time from Spoke CT/CTA to Specialist Notification Time from Spoke CT/CTA to Groin Puncture Time from Spoke Door to Groin Puncture Length of ICU Stay/Total Length of Stay Modified Rankin Scale (mRS) at Discharge and 90 Days National Institutes of Health Stroke Scale (NIHSS) at Discharge Patient Disposition at Discharge and 90 Days | Recruiting |

| NCT05099627 | 300 | Cervical Myelopathy | Other: The questionnaire “Cervical myelopathy treatment outcome questionnaire” Other: The retrospective questionnaire “CSM early diagnosis questionnaire” Other: JOACMEQ questionnaire and mJOA | JOACMEQ questionnaire; mJOA questionnaire; “Cervical myelopathy treatment outcome questionnaire”; “CSM early diagnosis questionnaire”; Nurick score; DOR (diagnostic odds ratio) | Not yet recruiting |

| NCT05161130 | 1115 | Low Back Pain Disk Herniated Lumbar Spinal Stenosis Lumbar | Procedure: Lumbar Spinal Fusion | Visual Analogue Scale for Back Pain Visual Analogue Scale for Leg Pain Oswestry Disability Index | Completed |

| NCT04359745 | 500 | Glioblastoma | | Accuracy of the artificial intelligence model Failure rate of the artificial intelligence model | Recruiting |

| NCT04467489 | 1040 | Cerebral Cavernous Malformation Cavernous Angioma Hemorrhagic Microangiopathy | Other: observational | Circulating Diagnostic and Prognostic Biomarkers of CASH Correlation of Imaging and Plasma Biomarkers of CASH Confounders of CASH Biomarkers | Recruiting |

| NCT04819074 | 4000 | Aneurysm, Brain | Procedure: Microsurgery | modified Rankin Scale Sensorimotor neurological deficits Clavien Dindo Complication Grading | Recruiting |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tangsrivimol, J.A.; Schonfeld, E.; Zhang, M.; Veeravagu, A.; Smith, T.R.; Härtl, R.; Lawton, M.T.; El-Sherbini, A.H.; Prevedello, D.M.; Glicksberg, B.S.; et al. Artificial Intelligence in Neurosurgery: A State-of-the-Art Review from Past to Future. Diagnostics 2023, 13, 2429. https://doi.org/10.3390/diagnostics13142429

APA Style: Tangsrivimol, J. A., Schonfeld, E., Zhang, M., Veeravagu, A., Smith, T. R., Härtl, R., Lawton, M. T., El-Sherbini, A. H., Prevedello, D. M., Glicksberg, B. S., & Krittanawong, C. (2023). Artificial Intelligence in Neurosurgery: A State-of-the-Art Review from Past to Future. Diagnostics, 13(14), 2429. https://doi.org/10.3390/diagnostics13142429