Detection of Hydroxychloroquine Retinopathy via Hyperspectral and Deep Learning through Ophthalmoscope Images

Abstract

1. Introduction

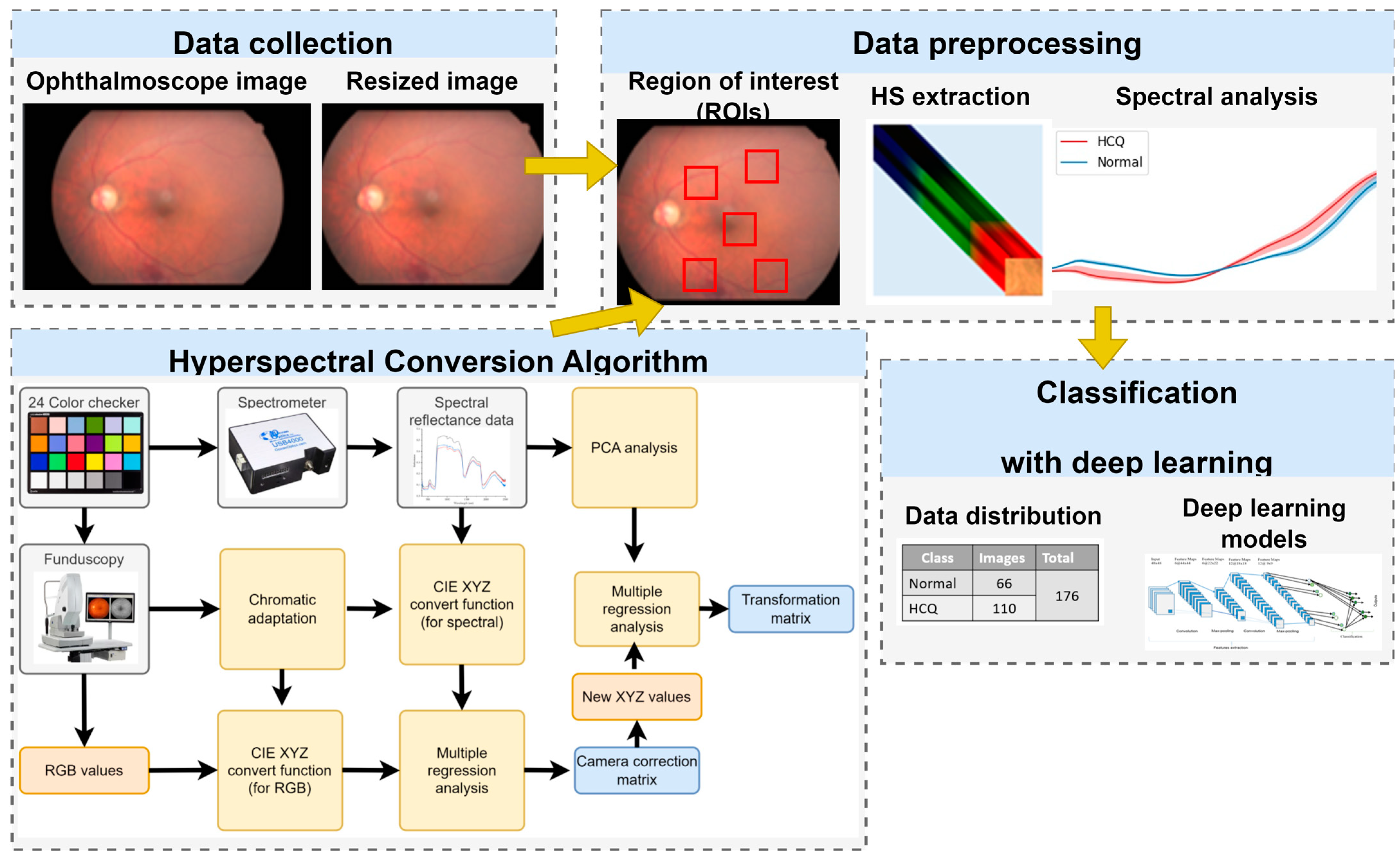

2. Materials and Methods

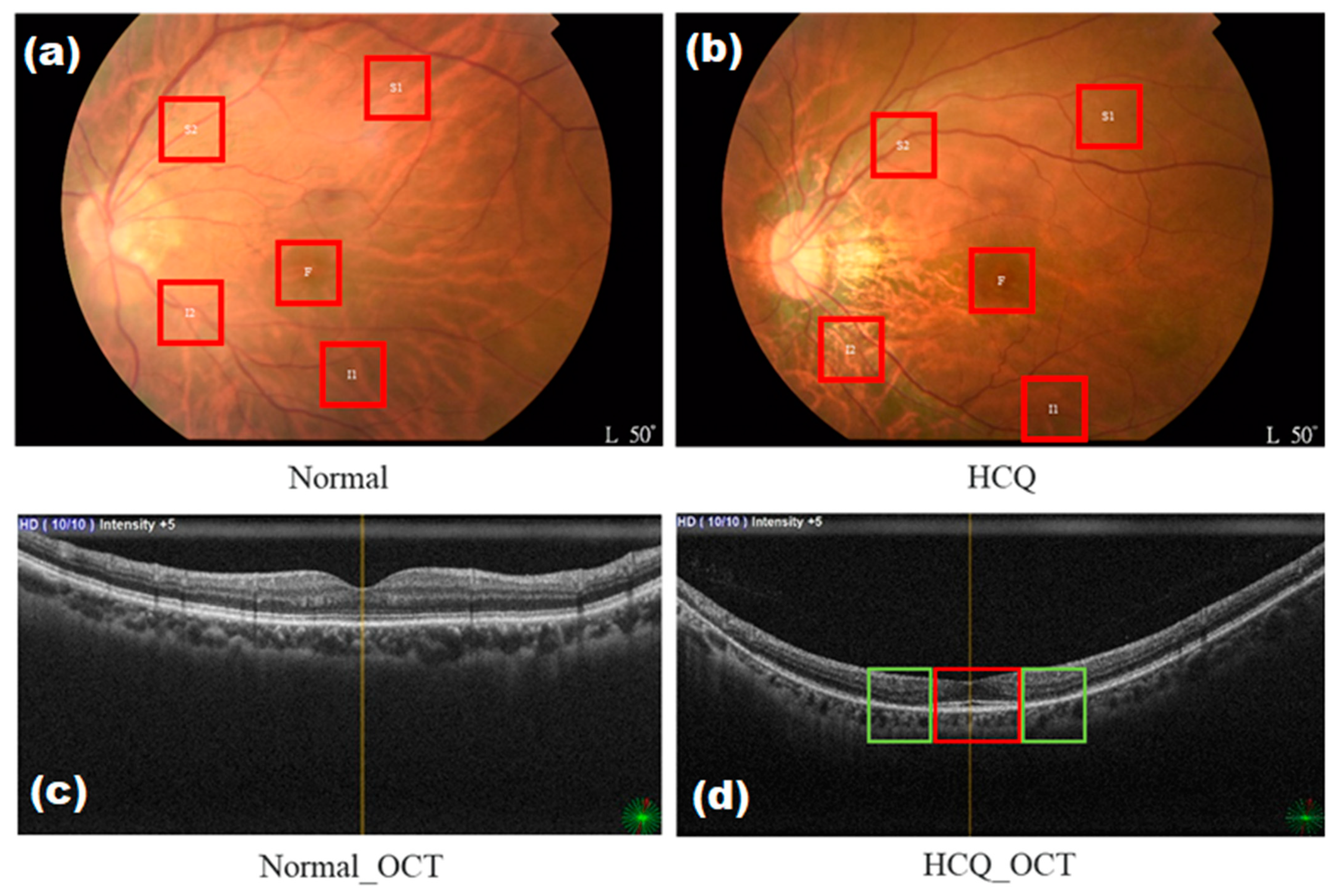

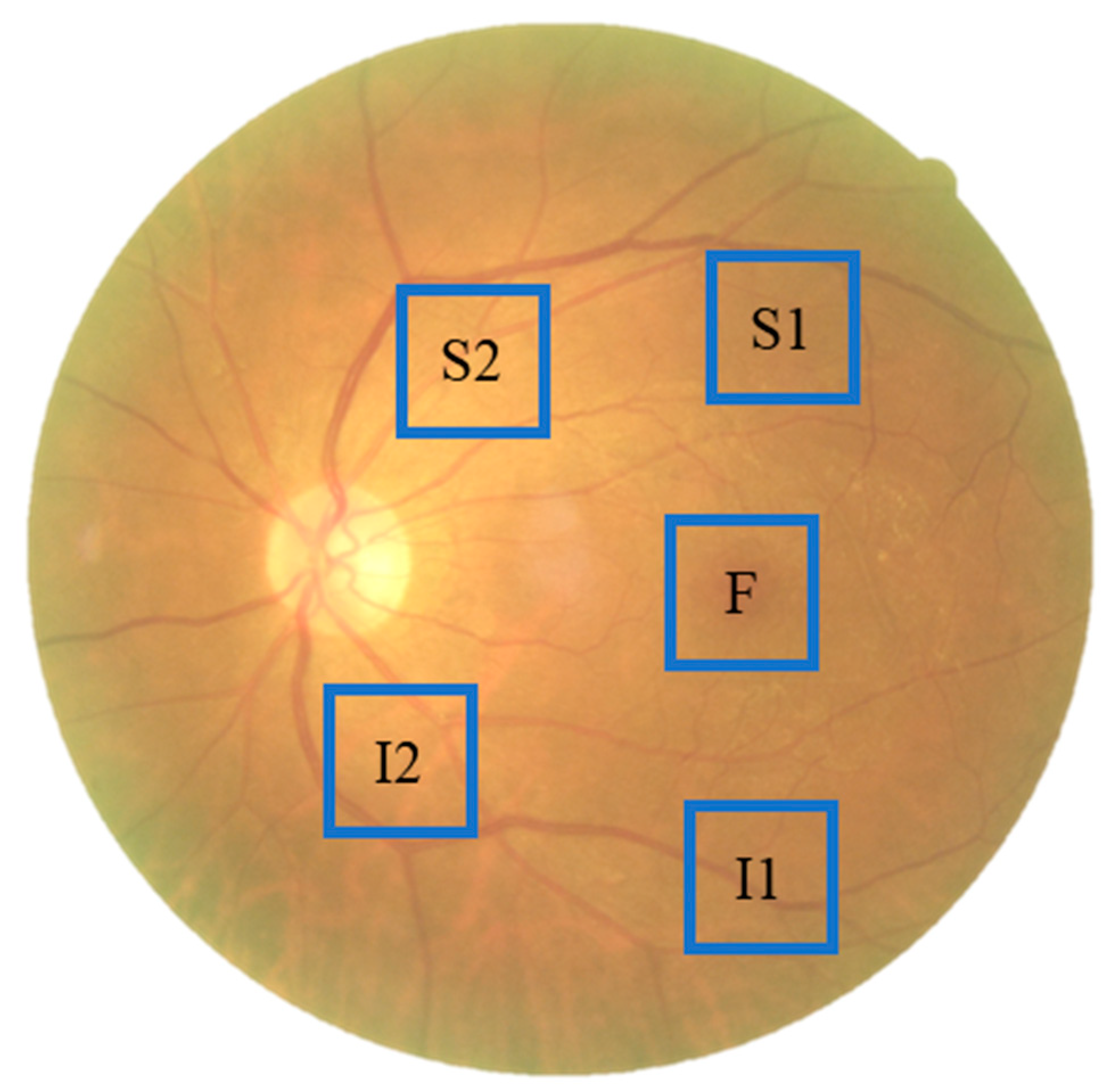

2.1. Data Collection

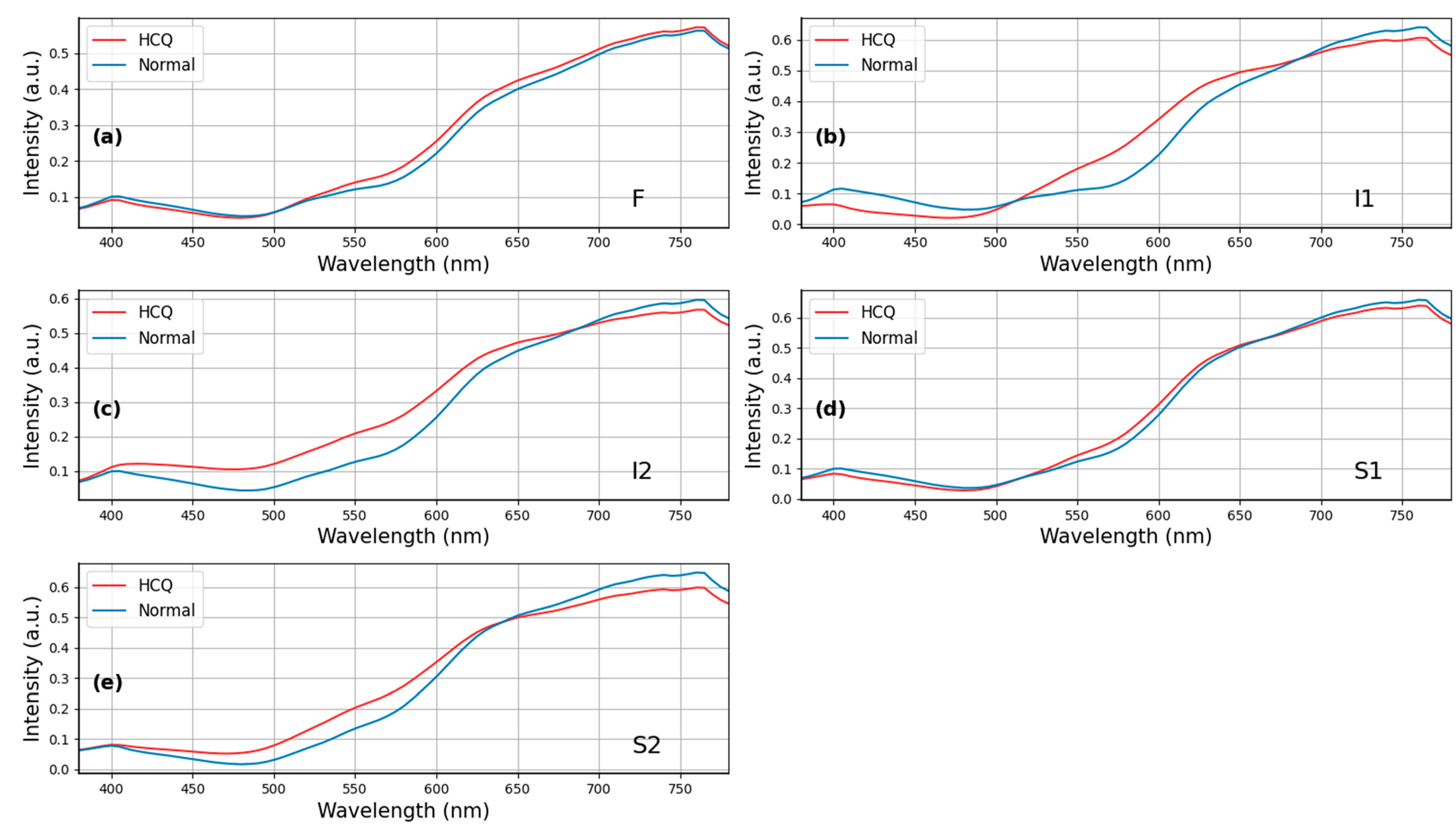

2.2. Data Preprocessing and Training Deep Learning Model

2.3. OCT System—Type B Ultrasonic Scanner (Nidek RS-3000)

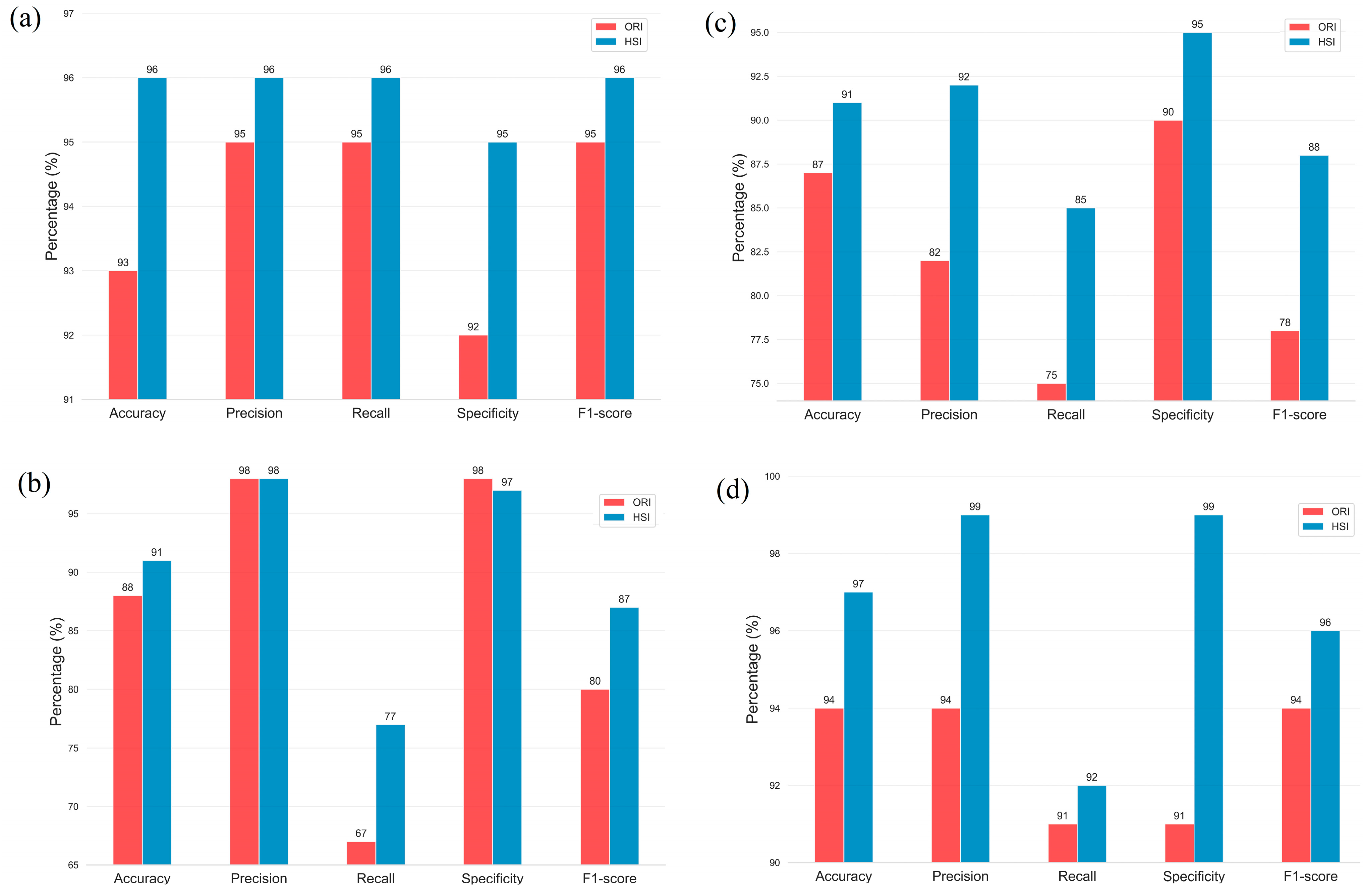

3. Results

4. Discussions

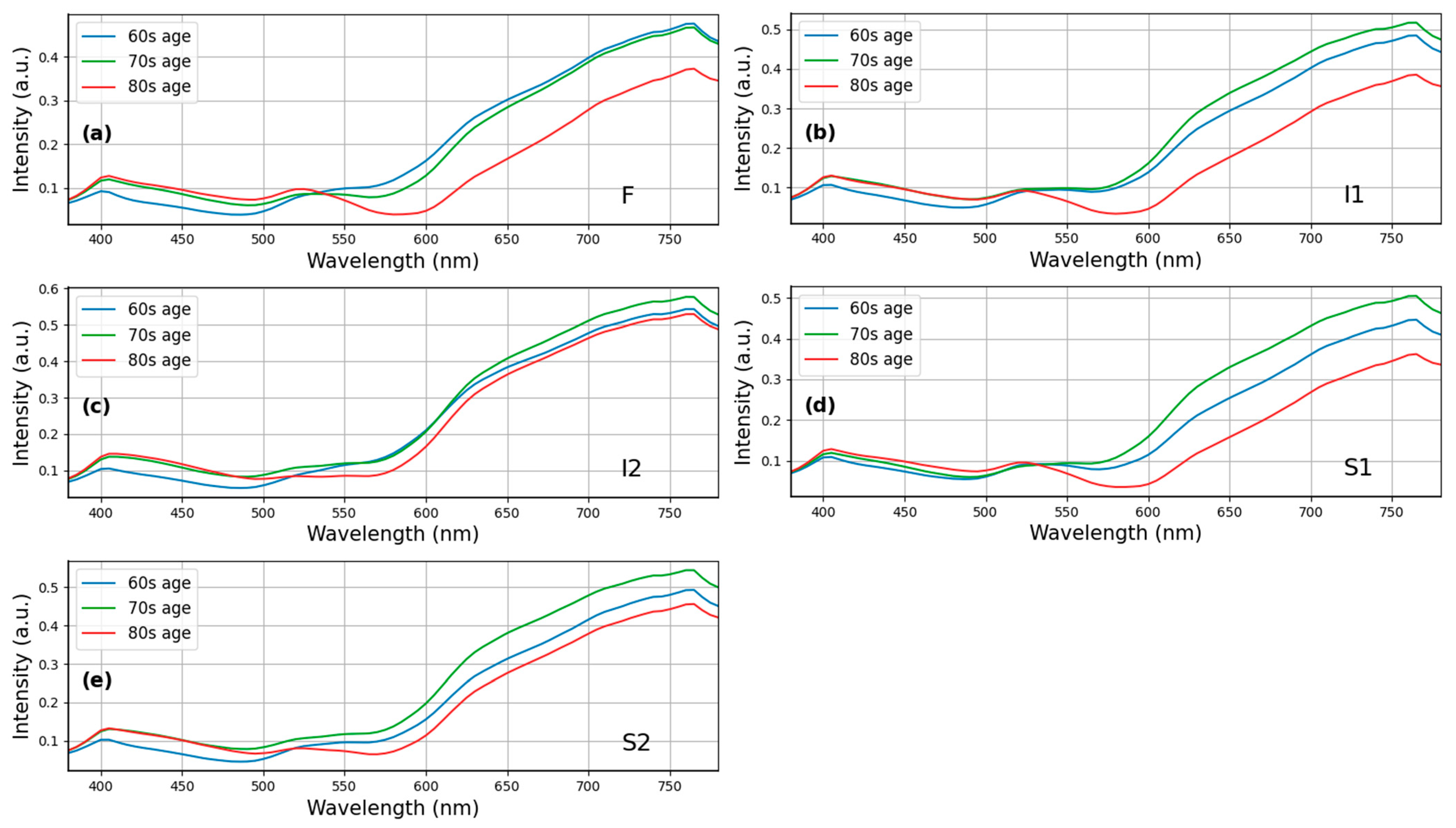

4.1. Effects of Aging on Hydroxychloroquine Retinopathy

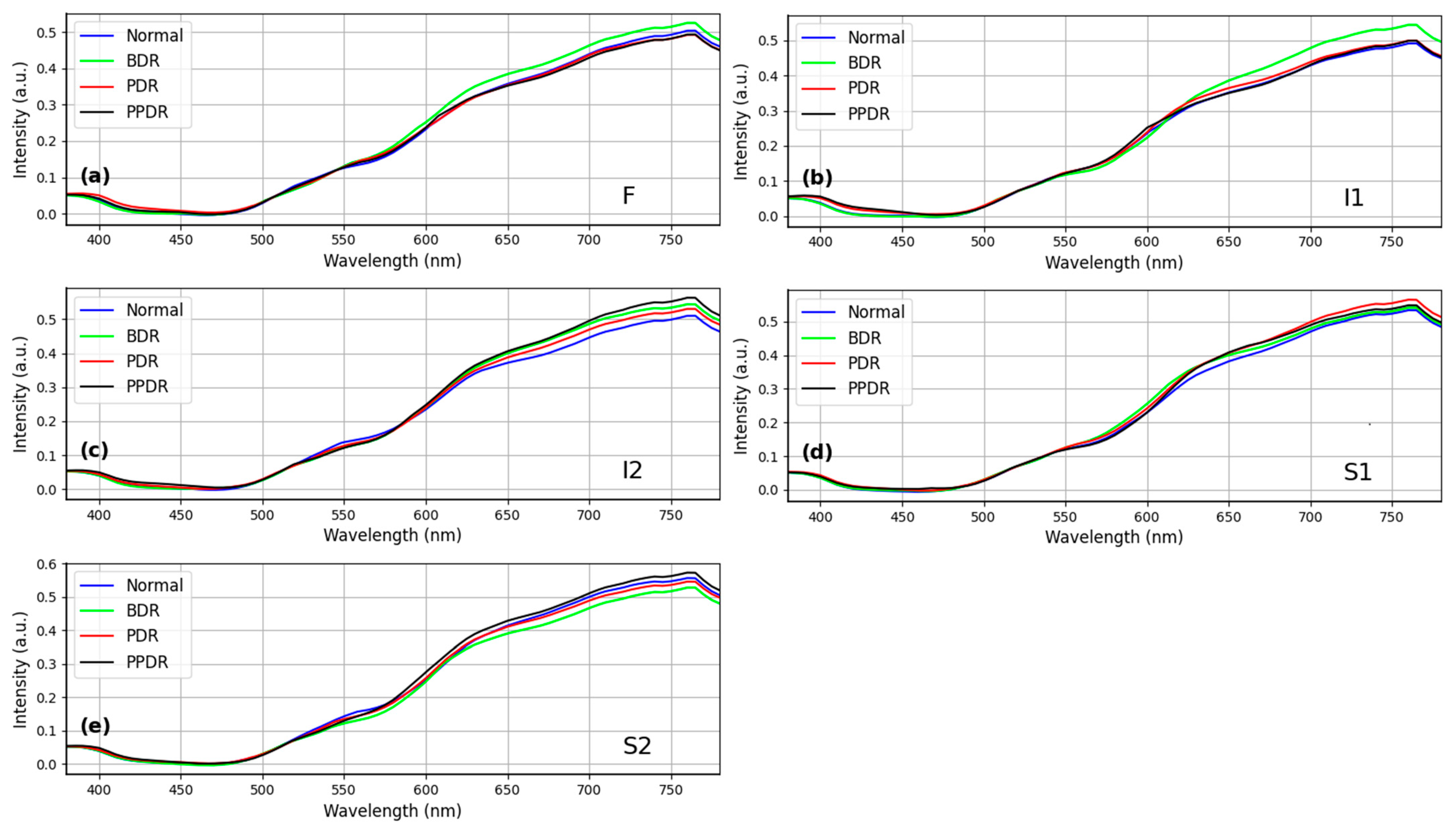

4.2. The Relationship of HCQ Retinopathy and Diabetes Remains Uncertain

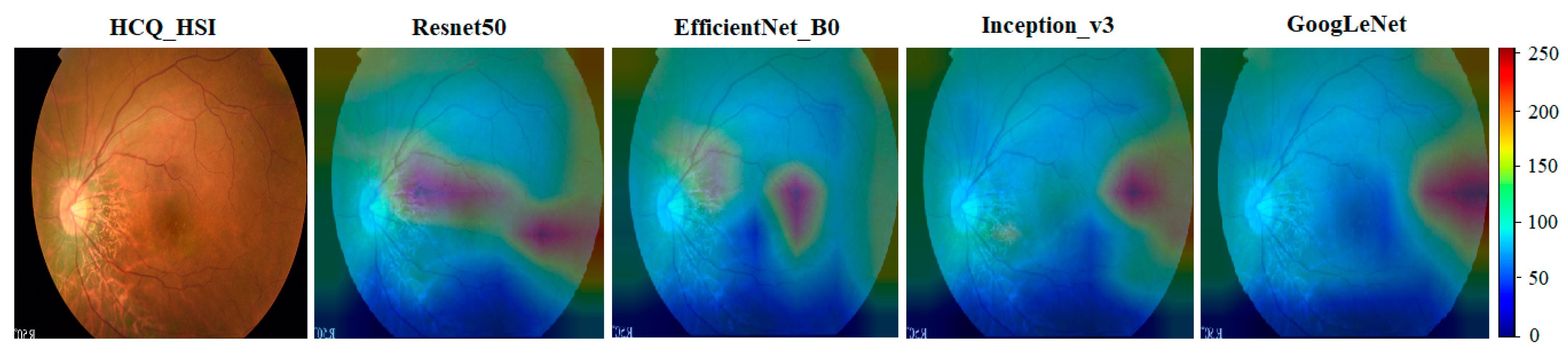

4.3. A Novel Screening Technique for HCQ Retinopathy

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | HCQ Cases (n = 25) | Normal (n = 66) | p-Value | 95% CI | Effect Size |
|---|---|---|---|---|---|
| Age ¹ | 75.24 ± 8.41 | 75.83 ± 7.52 | 0.75 | −5.51–4.86 | −0.07 |
| 60s age ³ | n = 15 | 62.90 ± 3.57 | <0.0001 | 60.69–65.11 | −4.03 |
| 70s age ³ | n = 47 | 74.97 ± 2.27 | | 74.23–75.72 | −6.83 |
| 80s age ³ | n = 29 | 82.50 ± 1.92 | | 81.61–83.39 | −3.58 |
| Sex (female/male) ² | 22/3 | 45/21 | 0.10 | −0.02–0.05 | 0.02 |
| HBP (pos/neg) ² | 17/8 | 59/7 | 0.03 | −0.02–0.07 | 0.02 |
| Glaucoma (pos/neg) ² | 6/19 | 12/54 | 0.74 | −0.003–0.011 | 0.003 |
| AMD (pos/neg) ² | 17/8 | 49/17 | 0.74 | −0.004–0.011 | 0.004 |
| DR (normal/BDR/PDR/PPDR) ² | 6/6/4/9 | 21/11/18/16 | 0.43 | 0.54–3.58 | 0.17 |

| | Train | Test | Total |
|---|---|---|---|
| Normal | 53 | 13 | 66 |
| HCQ | 88 | 22 | 110 |
| Total | 141 | 35 | 176 |
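The train/test counts in this table are consistent with a split of roughly 80/20 within each class (53/66 ≈ 0.80 and 88/110 = 0.80). The following is a minimal sketch of one way such a per-class (stratified) split could be produced. The function name `stratified_split`, the `train_frac` value, and the seed are assumptions made for illustration; the table does not state how the split was actually carried out.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_frac=0.8, seed=0):
    """Split indices into train/test sets, class by class.

    Hypothetical helper for illustration only; not the authors' procedure.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        # Round the per-class training size to the nearest whole image.
        n_train = round(len(idxs) * train_frac)
        train_idx.extend(idxs[:n_train])
        test_idx.extend(idxs[n_train:])
    return sorted(train_idx), sorted(test_idx)


# Class totals taken from the table: 66 Normal and 110 HCQ images.
labels = ["Normal"] * 66 + ["HCQ"] * 110
train_idx, test_idx = stratified_split(labels)

train_counts = {c: sum(labels[i] == c for i in train_idx) for c in ("Normal", "HCQ")}
test_counts = {c: sum(labels[i] == c for i in test_idx) for c in ("Normal", "HCQ")}
print(train_counts, test_counts)
```

Splitting each class separately keeps the Normal-to-HCQ ratio nearly the same in both subsets, which matters when the classes are imbalanced as they are here.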

| Model | Metric | ORI | HSI |
|---|---|---|---|
| ResNet50 | Accuracy | 0.93 | 0.96 |
| | Precision | 0.96 | 0.96 |
| | Recall | 0.95 | 0.96 |
| | Specificity | 0.92 | 0.95 |
| | F1-score | 0.95 | 0.96 |
| Inception_v3 | Accuracy | 0.87 | 0.91 |
| | Precision | 0.82 | 0.92 |
| | Recall | 0.75 | 0.85 |
| | Specificity | 0.90 | 0.95 |
| | F1-score | 0.78 | 0.88 |
| GoogLeNet | Accuracy | 0.88 | 0.91 |
| | Precision | 0.98 | 0.98 |
| | Recall | 0.67 | 0.77 |
| | Specificity | 0.98 | 0.97 |
| | F1-score | 0.80 | 0.87 |
| EfficientNet_B0 | Accuracy | 0.94 | 0.97 |
| | Precision | 0.94 | 0.99 |
| | Recall | 0.91 | 0.92 |
| | Specificity | 0.91 | 0.99 |
| | F1-score | 0.94 | 0.96 |
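For readers less familiar with the five metrics reported in this table, the sketch below shows their standard definitions for a binary classifier in terms of confusion-matrix counts. It assumes HCQ retinopathy is treated as the positive class, which the table itself does not state, and the counts passed in are made-up values for illustration, not results from this study. The function name `classification_metrics` is likewise hypothetical.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard binary-classification metrics from confusion-matrix counts.

    tp/fn: positive cases predicted positive/negative.
    fp/tn: negative cases predicted positive/negative.
    Assumes every denominator is non-zero.
    """
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)      # share of predicted positives that are correct
    recall = tp / (tp + fn)         # sensitivity: share of true positives found
    specificity = tn / (tn + fp)    # share of true negatives found
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


# Made-up counts for illustration only (not taken from the paper).
example = classification_metrics(tp=40, fp=5, fn=10, tn=45)
for name, value in example.items():
    print(f"{name}: {value:.2f}")
```

Because F1 is the harmonic mean of precision and recall, a model with high precision but low recall (as in the GoogLeNet rows) ends up with an F1-score well below its precision.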


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fan, W.-S.; Nguyen, H.-T.; Wang, C.-Y.; Liang, S.-W.; Tsao, Y.-M.; Lin, F.-C.; Wang, H.-C. Detection of Hydroxychloroquine Retinopathy via Hyperspectral and Deep Learning through Ophthalmoscope Images. Diagnostics 2023, 13, 2373. https://doi.org/10.3390/diagnostics13142373
