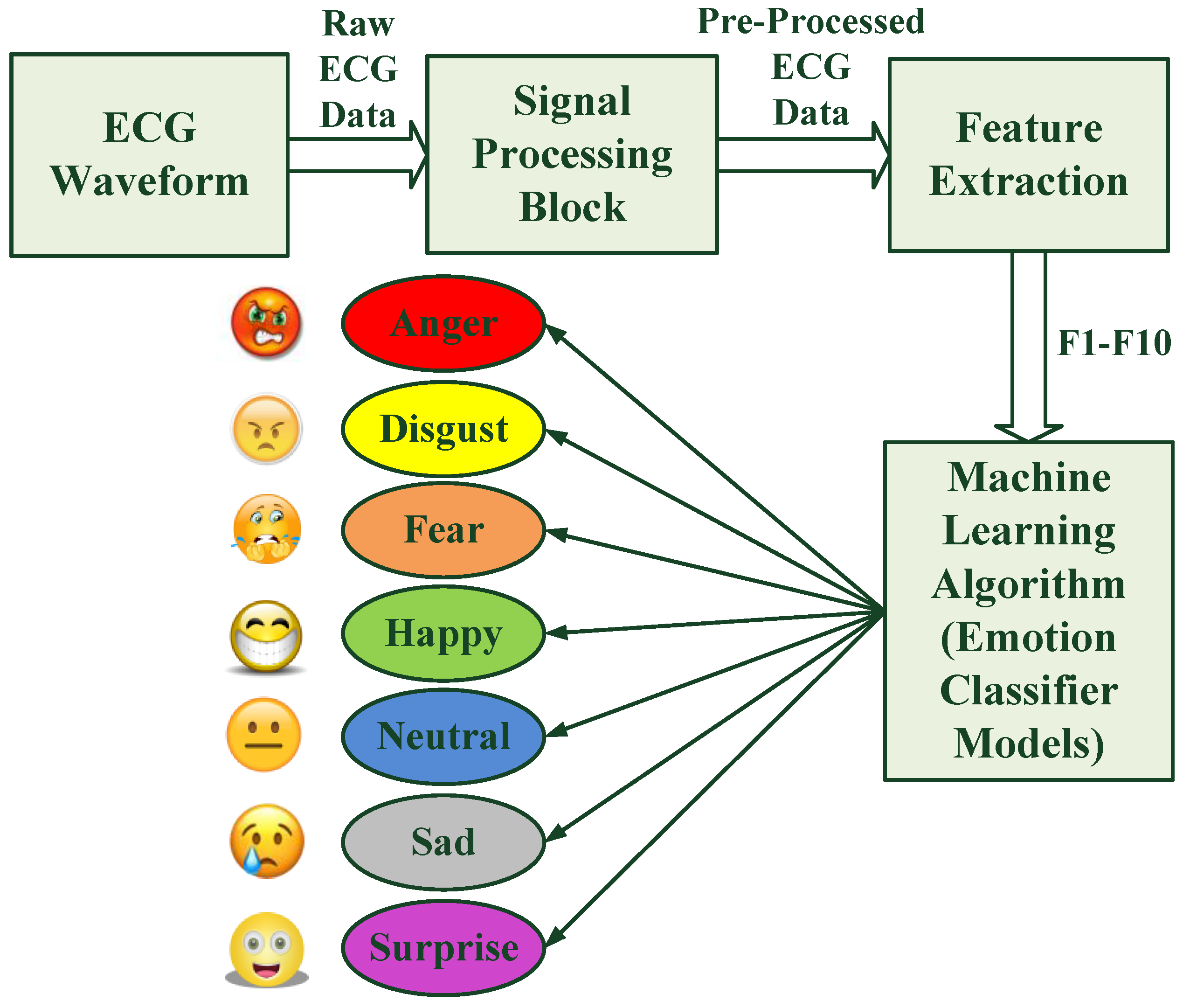

Design and Development of a Non-Contact ECG-Based Human Emotion Recognition System Using SVM and RF Classifiers

Abstract

1. Introduction

2. Related Work

2.1. Non-Physiological Signal-Based Emotion Classifiers

2.2. Physiological Signal-Based Emotion Classifiers

- A hardware system for accurate ECG acquisition through the subject's clothing has been designed.

- Ten statistical features of the ECG data have been extracted using the developed feature extraction algorithms.

- Experiments have been conducted on a total of 45 subjects, and seven categories of emotions have been classified using emotion classifier models (SVM and RF) developed in MATLAB.

- The developed models classify human emotions with reasonably high accuracy for a contactless, unimodal ECG-based emotion recognition system.

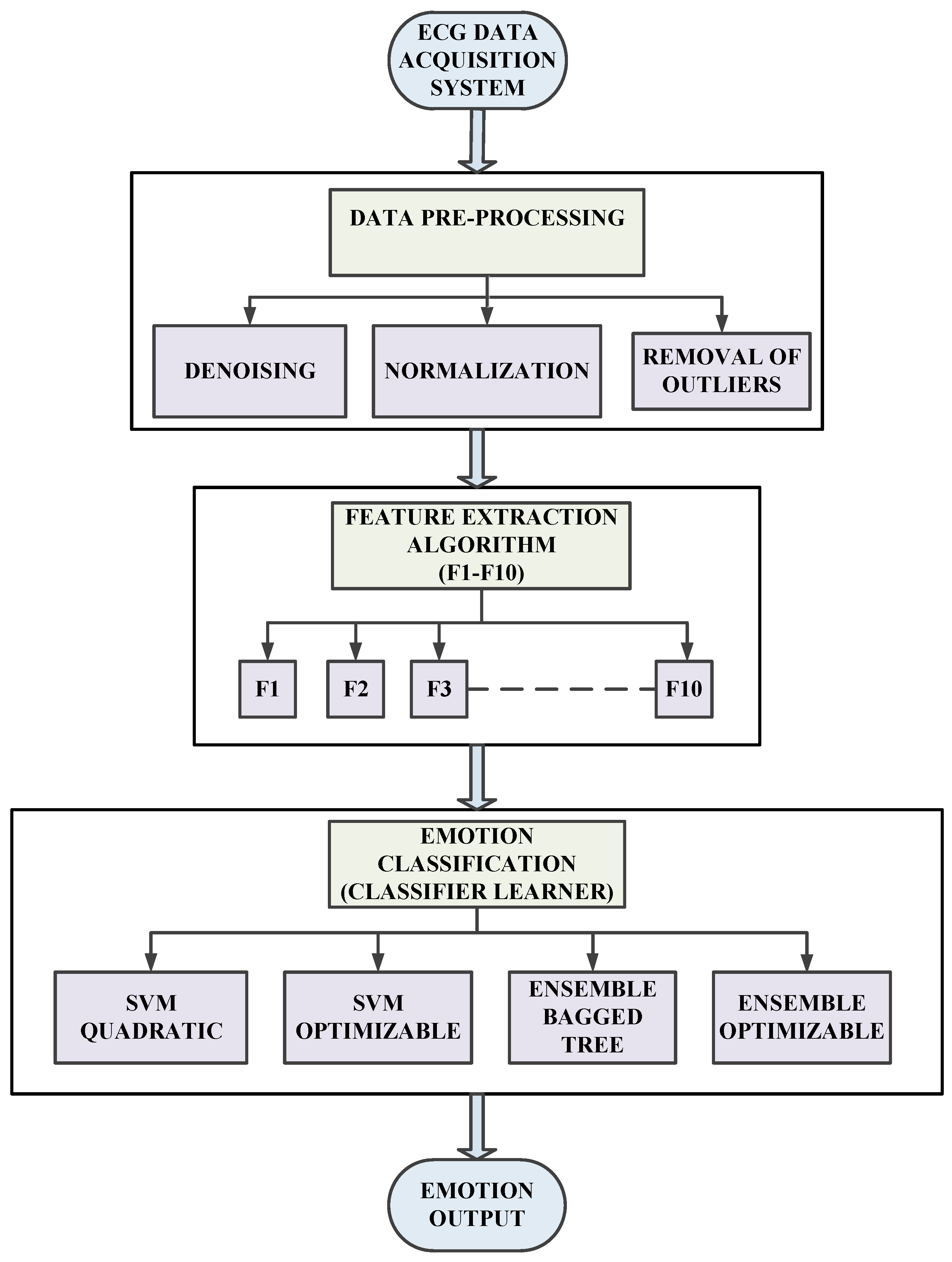

3. Methodology

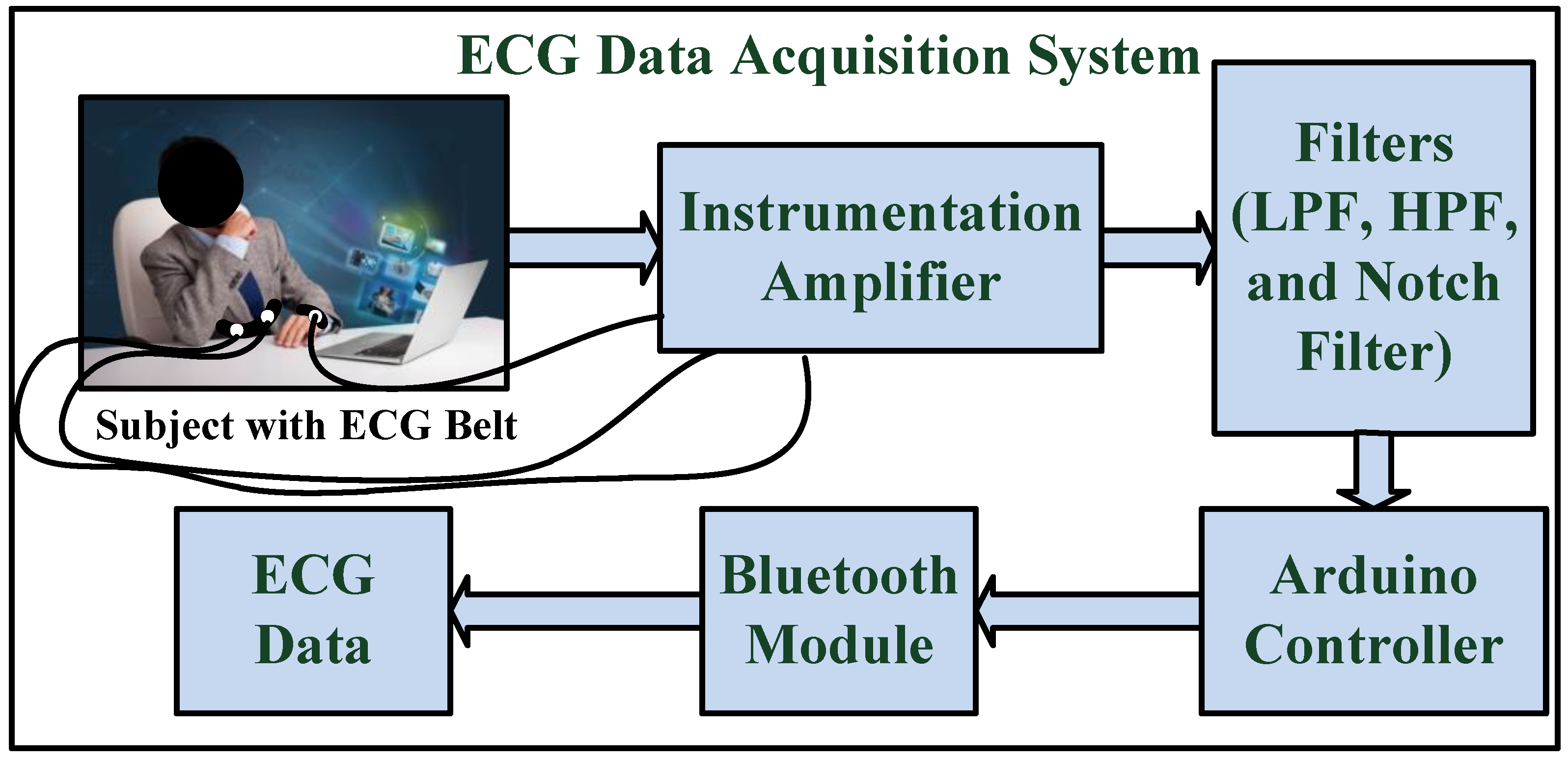

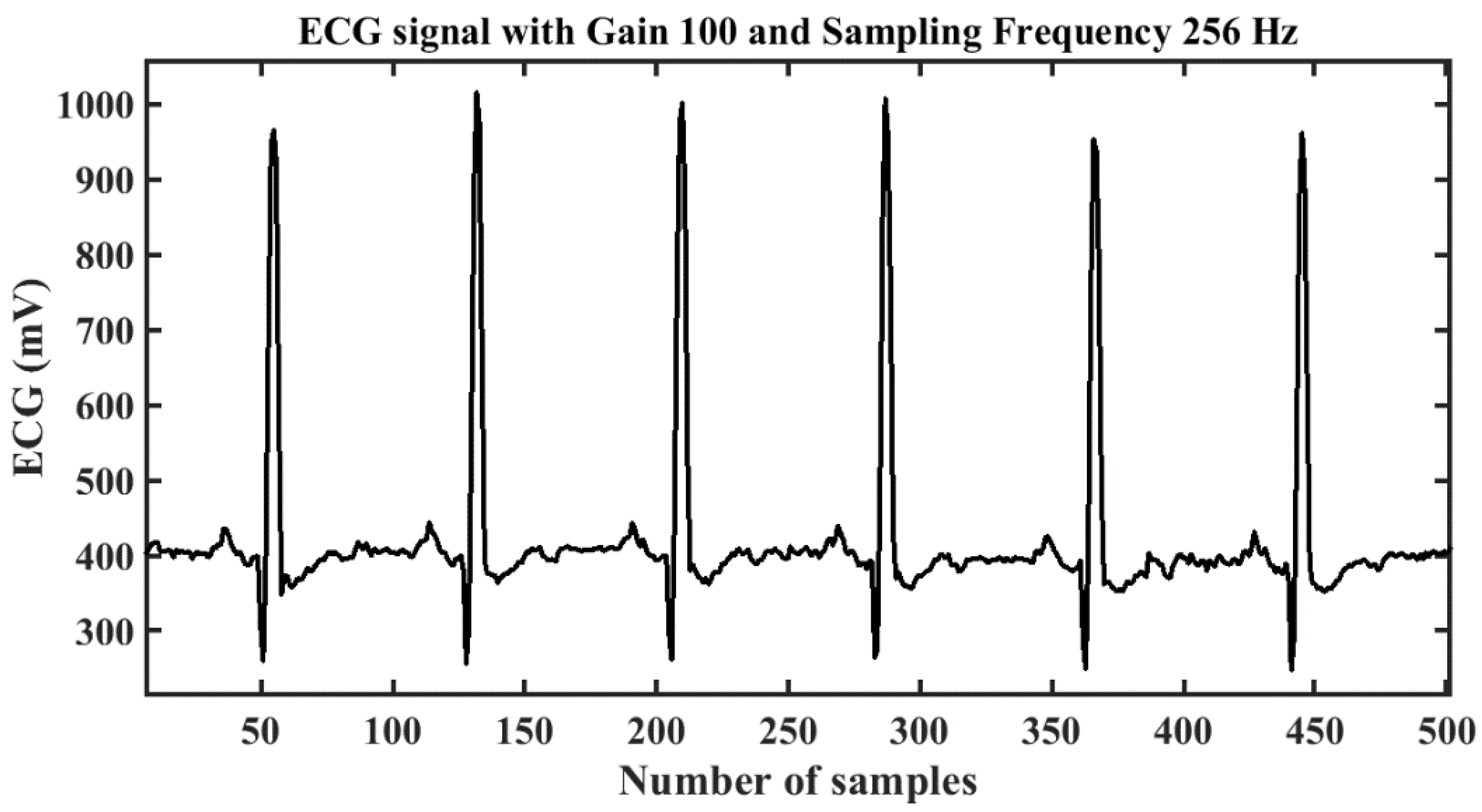

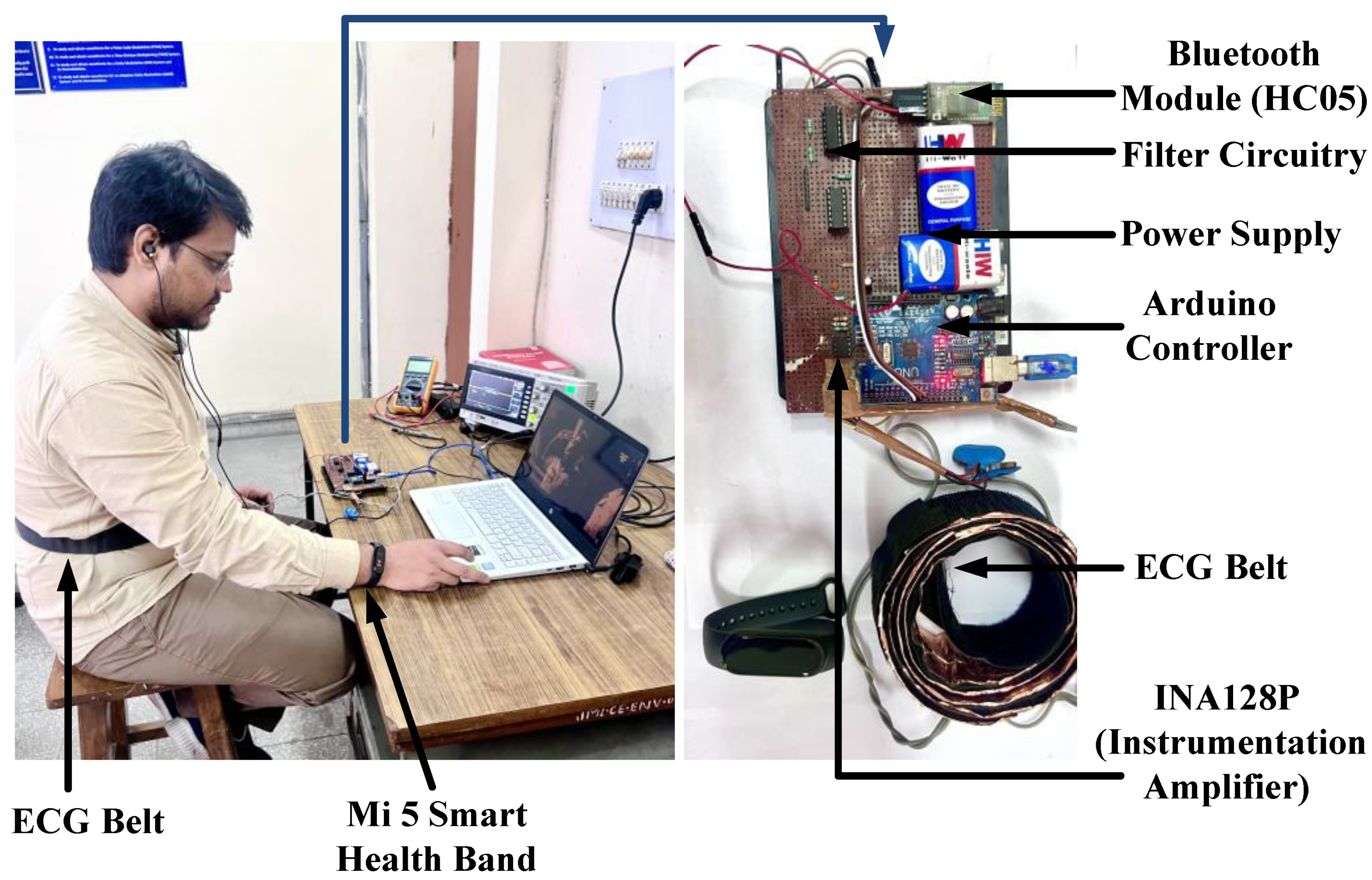

- Accurate contactless ECG acquisition is itself a challenging task. The designed hardware acquires the ECG through the subject's clothing. Noise was minimized using filters built with low-noise op-amps, and the acquired signal was then processed in the signal processing module.

- Maintaining a stable connection between the Bluetooth module (HC-05) and the PC was a challenge during data acquisition, and experiments had to be repeated in some cases.

- One of the extracted features, heart rate, was verified for each emotion against a Mi Band 5 worn simultaneously. In some cases, the experiment was repeated.

- The intensity of any emotion also depends on the diurnal baseline of an individual's mood; the baseline was therefore treated as the neutral case for each individual. This can be regarded as calibration before experimentation.

- The methodology was explained to the volunteers who participated in this experimental study, and the required consent was obtained. The volunteers were healthy subjects with no cardiac history.

- The volunteers were divided into three groups by age: 21–30 years, 31–40 years, and 41–50 years, each with a male-to-female ratio of 3:2.

- The audiovisual stimuli were prepared in advance as 60 s video clips, one for each of the seven emotions, each including 10 s of static image before and after the stimulus to obtain a baseline. The stimuli were selected with the help of the previous literature [39,40,41,42].

- A total of 45 volunteers (15 from each age group) were shown the audiovisual stimuli in random order, with an average gap of 10 min between two video stimuli or until the subject returned to their baseline level.
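
The clip timing described above can be sketched in a few lines. This is only an illustration in Python (the authors worked in MATLAB): it assumes the ECG is recorded for the full 60 s clip at the 256 Hz sampling frequency listed in the feature table, and that the 40 s analysis window is the part between the two 10 s static-image segments. The function names and the placeholder emotion labels are hypothetical.

```python
import random

FS_HZ = 256       # sampling frequency listed in the feature table
CLIP_S = 60       # total clip length, including both static-image segments
BASELINE_S = 10   # static image shown before and after the stimulus

# Placeholder labels: this section does not name the seven emotion categories.
EMOTIONS = ["emotion_%d" % i for i in range(1, 8)]


def stimulus_window(recording, fs=FS_HZ, clip_s=CLIP_S, baseline_s=BASELINE_S):
    """Split one clip-length recording into (pre_baseline, stimulus, post_baseline)."""
    expected = fs * clip_s
    if len(recording) != expected:
        raise ValueError("expected %d samples, got %d" % (expected, len(recording)))
    edge = fs * baseline_s
    pre = recording[:edge]
    stimulus = recording[edge:expected - edge]
    post = recording[expected - edge:]
    return pre, stimulus, post


def presentation_order(emotions, seed=None):
    """Return the clips in a random order, one order per volunteer."""
    rng = random.Random(seed)
    order = list(emotions)
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    fake_recording = [0.0] * (FS_HZ * CLIP_S)
    pre, stimulus, post = stimulus_window(fake_recording)
    print(len(pre), len(stimulus), len(post))
    print(presentation_order(EMOTIONS, seed=1))
```

Under these assumptions the stimulus segment holds 256 × 40 = 10,240 samples, which agrees with the per-emotion, per-subject count in the dataset size table.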

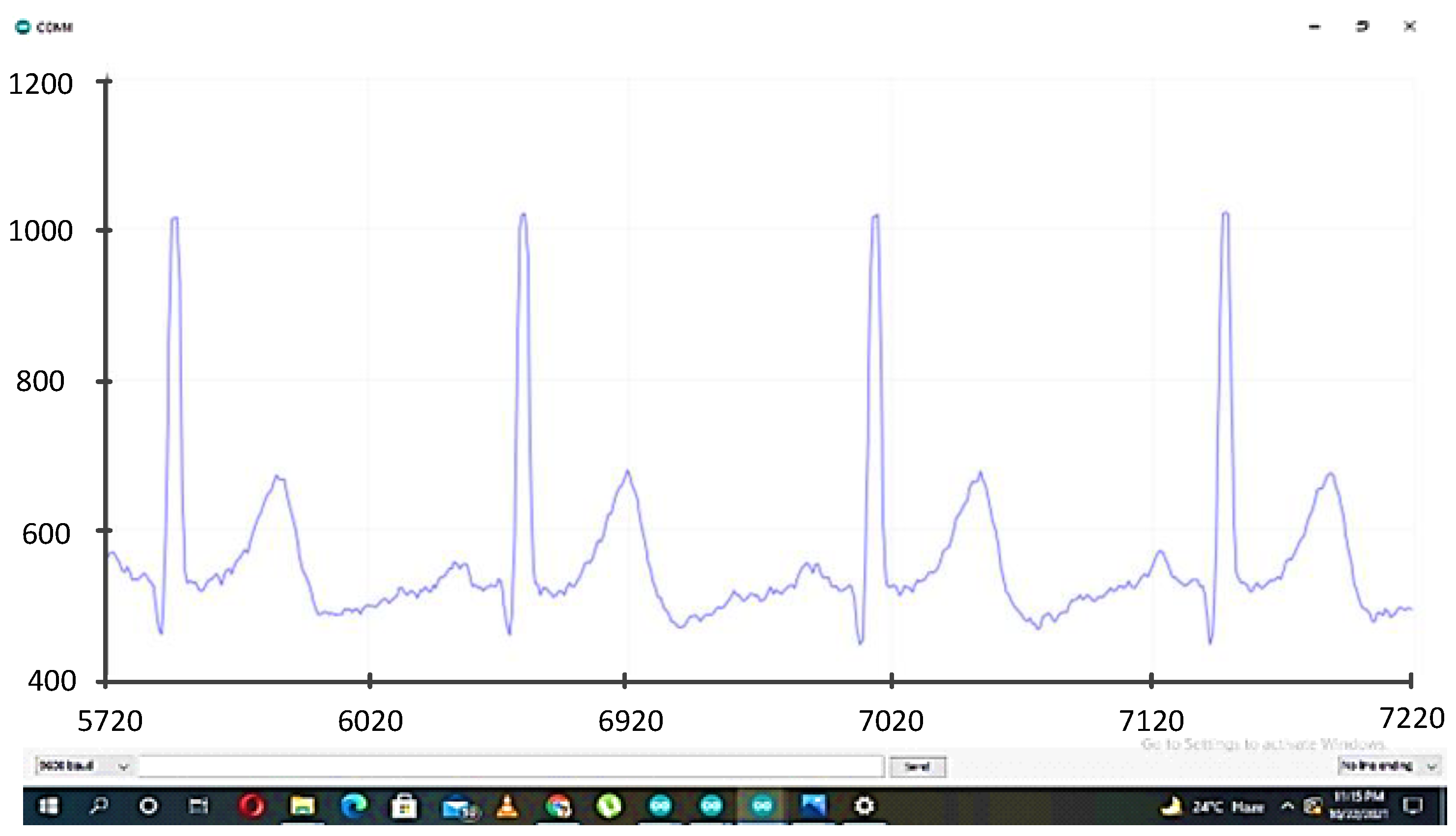

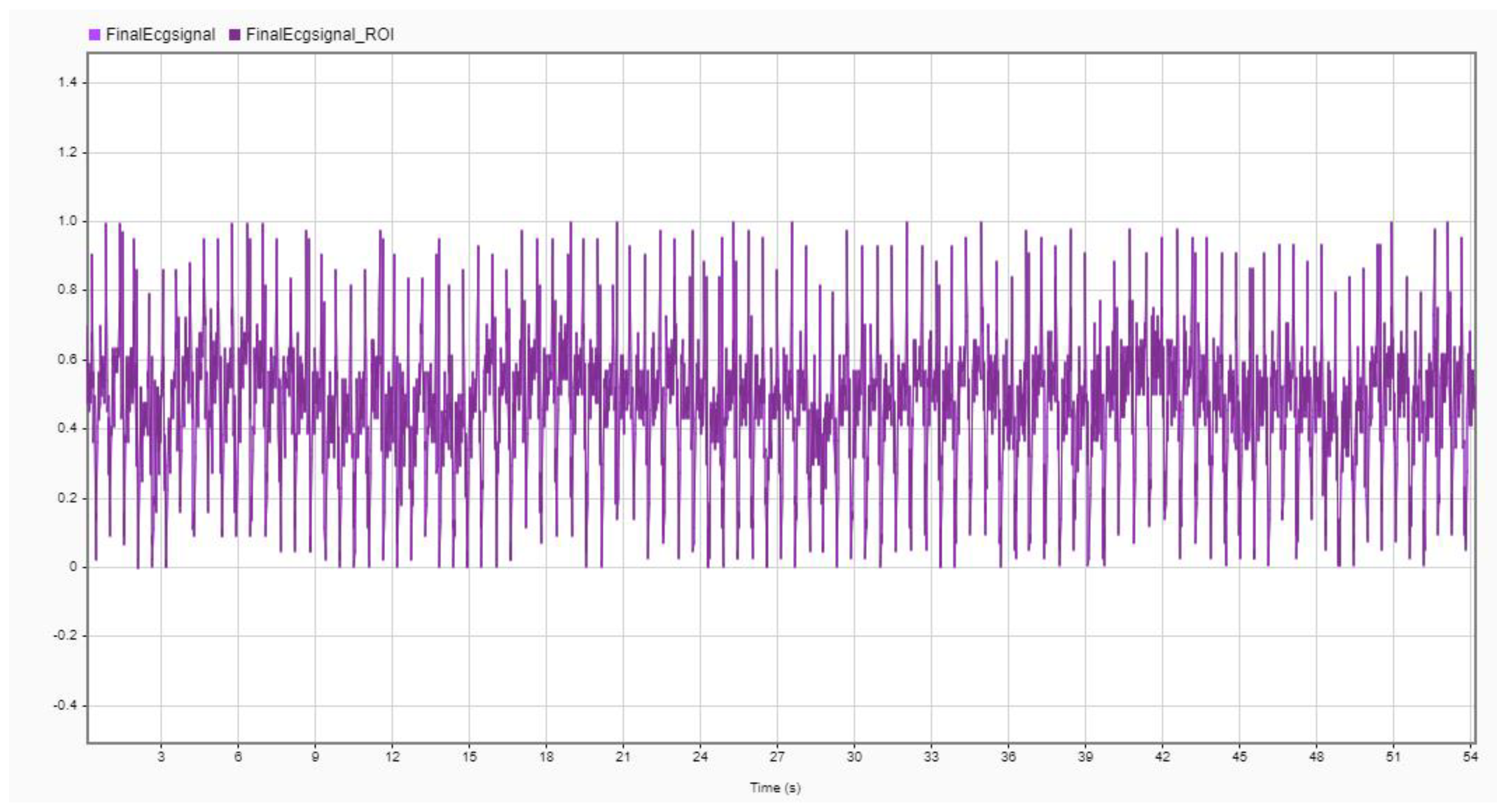

- While the subject watched the video eliciting a specific emotion, the ECG from the ECG belt system was recorded and simultaneously sent wirelessly to MATLAB through the serial port of the PC.

- An ECG feature extraction algorithm was developed as a MATLAB live script in which the acquired ECG signal was first processed for noise removal and normalization, after which the statistical features were extracted.

- The subjects were also asked to write down the emotions they felt while watching the audiovisual stimuli; only those cases were considered in which the category of the clip matched the emotion reported by the subject.

- One of the primary features, heart rate, as calculated by the feature extraction algorithm, was verified against the heart rate measured by the PPG sensor of a Mi Smart Band 5. The experiment was repeated whenever the two heart rates differed by more than 3–5 bpm.

- The ECG data for all seven emotions and the corresponding feature datasets were prepared for the selected age groups as three final feature datasets (Set A, Set B, and Set C).
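
A minimal Python sketch of the feature extraction and heart-rate check described above follows. It is not the authors' MATLAB live script: the R-peak picker is a deliberately naive threshold detector, the population (1/N) forms of the moments are assumed, the first-degree difference is taken as the mean absolute first difference, and the 5 bpm default tolerance is one reading of the "3–5 bpm" criterion. All function and key names are hypothetical.

```python
import statistics

FS_HZ = 256  # sampling frequency listed in the feature table


def normalize(signal):
    """Min-max scale to [0, 1]; a flat signal maps to all zeros."""
    lo, hi = min(signal), max(signal)
    if hi == lo:
        return [0.0] * len(signal)
    return [(x - lo) / (hi - lo) for x in signal]


def detect_r_peaks(signal, fs=FS_HZ, threshold=0.6, refractory_s=0.25):
    """Naive R-peak picker (an assumption): local maxima of the min-max
    scaled signal above `threshold`, at least `refractory_s` apart."""
    scaled = normalize(signal)
    min_gap = int(refractory_s * fs)
    peaks = []
    for i in range(1, len(scaled) - 1):
        is_local_max = scaled[i - 1] < scaled[i] >= scaled[i + 1]
        if not is_local_max or scaled[i] < threshold:
            continue
        if peaks and i - peaks[-1] < min_gap:
            continue
        peaks.append(i)
    return peaks


def extract_features(signal, fs=FS_HZ):
    """Compute F1..F10 for one pre-processed ECG segment (at least 2 samples)."""
    x = list(signal)
    n = len(x)
    mean = statistics.fmean(x)
    var = statistics.pvariance(x, mu=mean)
    std = var ** 0.5
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    diffs = [abs(b - a) for a, b in zip(x, x[1:])]
    peaks = detect_r_peaks(x, fs)
    rr = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]  # RR intervals in seconds
    rr_mean = statistics.fmean(rr) if rr else float("nan")
    return {
        "F1_mean": mean,
        "F2_std": std,
        "F3_variance": var,
        "F4_min": min(x),
        "F5_max": max(x),
        "F6_skewness": m3 / std ** 3 if std else float("nan"),
        "F7_kurtosis": m4 / std ** 4 if std else float("nan"),
        "F8_first_difference": statistics.fmean(diffs),
        "F9_heart_rate_bpm": 60.0 / rr_mean if rr else float("nan"),
        "F10_rr_mean_s": rr_mean,
    }


def needs_repeat(hr_ecg_bpm, hr_band_bpm, tolerance_bpm=5.0):
    """True when the ECG-derived and wrist-band heart rates disagree too much."""
    return abs(hr_ecg_bpm - hr_band_bpm) > tolerance_bpm
```

For example, a synthetic 40 s spike train with one spike every 192 samples (0.75 s) yields a heart rate of 80 bpm, and `needs_repeat(80.0, 90.0)` would flag the trial for repetition.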

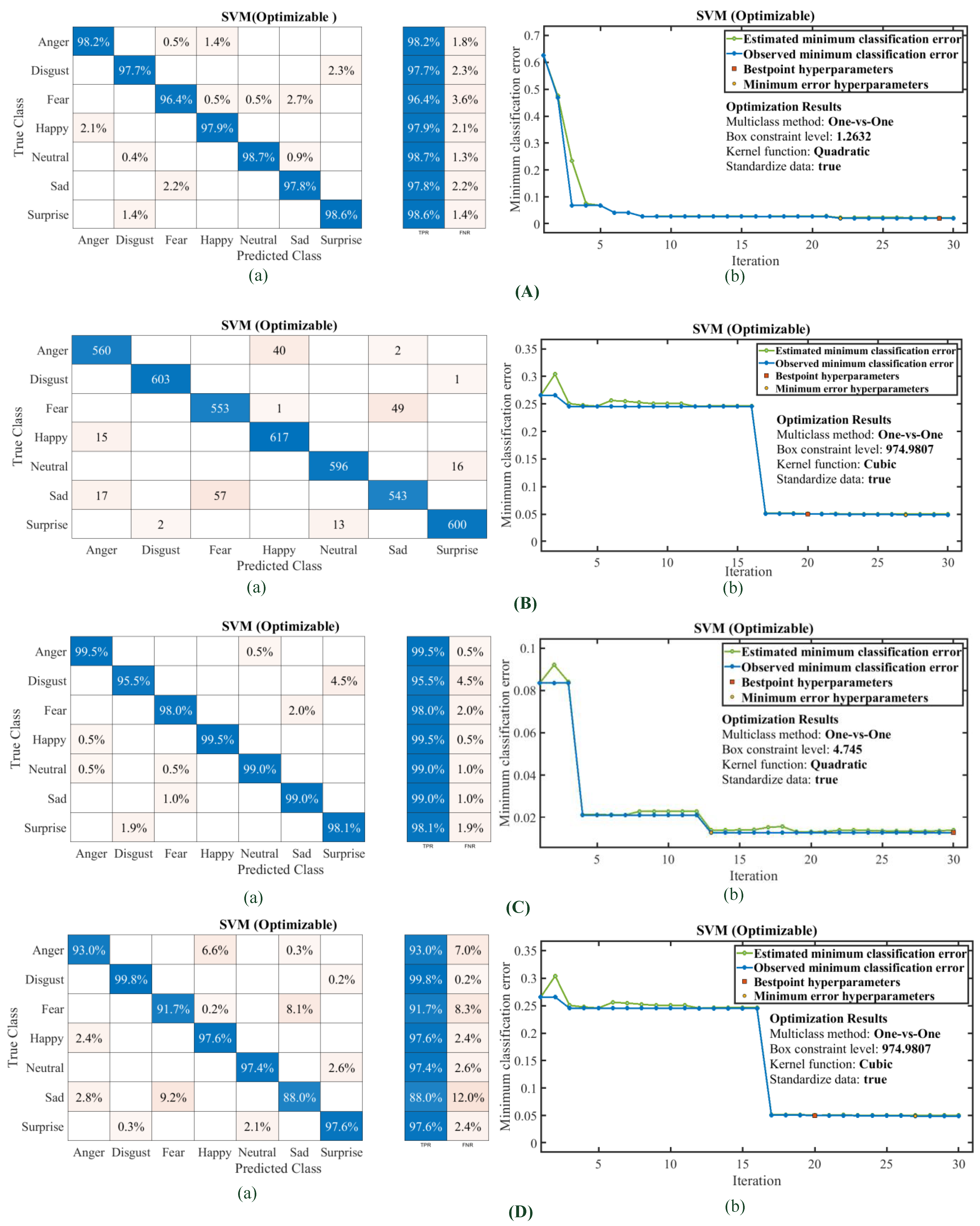

- Each dataset was used individually to train SVM and RF classifier models with 10-fold cross-validation and PCA enabled.

- Two principal components were selected for each dataset to avoid overfitting. The significant features were selected using the wrapper method.

- The hyperparameters of both models were tuned by Bayesian optimization using optimizable classifier learners, which recommend the best hyperparameters.

- The classification accuracy obtained was validated and compared with that of available techniques.
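
To make the 10-fold cross-validation step concrete, the sketch below builds the fold indices in plain Python. It covers only the data splitting, not PCA, the SVM and RF learners, or the Bayesian tuning, all of which the authors ran in MATLAB. Stratifying the folds by emotion label is an assumption, as is the evenly balanced 4200-row, seven-class label vector used in the demonstration; `stratified_kfold` is a hypothetical helper name.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, k=10, seed=0):
    """Yield (train_indices, test_indices) for k folds, keeping the class
    proportions of `labels` roughly equal in every fold."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        members = by_class[label]
        rng.shuffle(members)
        # Deal the shuffled members of each class round-robin into the folds.
        for pos, idx in enumerate(members):
            folds[pos % k].append(idx)

    for i in range(k):
        test = sorted(folds[i])
        train = sorted(idx for j in range(k) if j != i for idx in folds[j])
        yield train, test


if __name__ == "__main__":
    # Assumed layout: 4200 feature rows, 600 per emotion class (labels 0..6).
    labels = [emotion for emotion in range(7) for _ in range(600)]
    for fold_no, (train, test) in enumerate(stratified_kfold(labels), start=1):
        print(fold_no, len(train), len(test))
```

Each model is then trained on the nine training folds and scored on the held-out fold, and the ten scores are averaged to give the reported accuracy.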

3.1. Data Acquisition

3.2. Preparation of ECG Datasets

3.3. ECG Data Processing

3.4. Feature Extraction

3.5. Classifier Learners

3.5.1. SVM

3.5.2. Random Forest

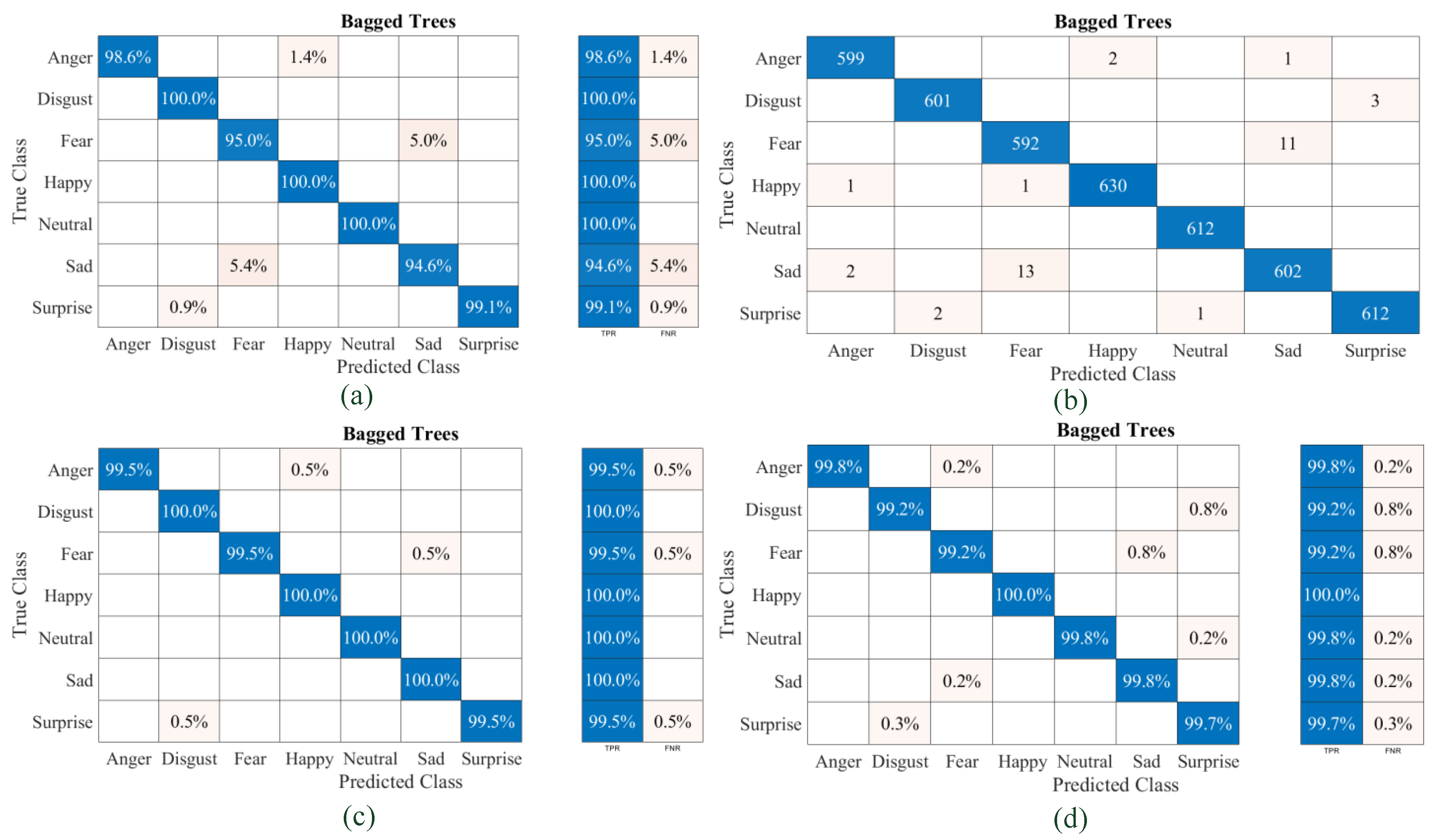

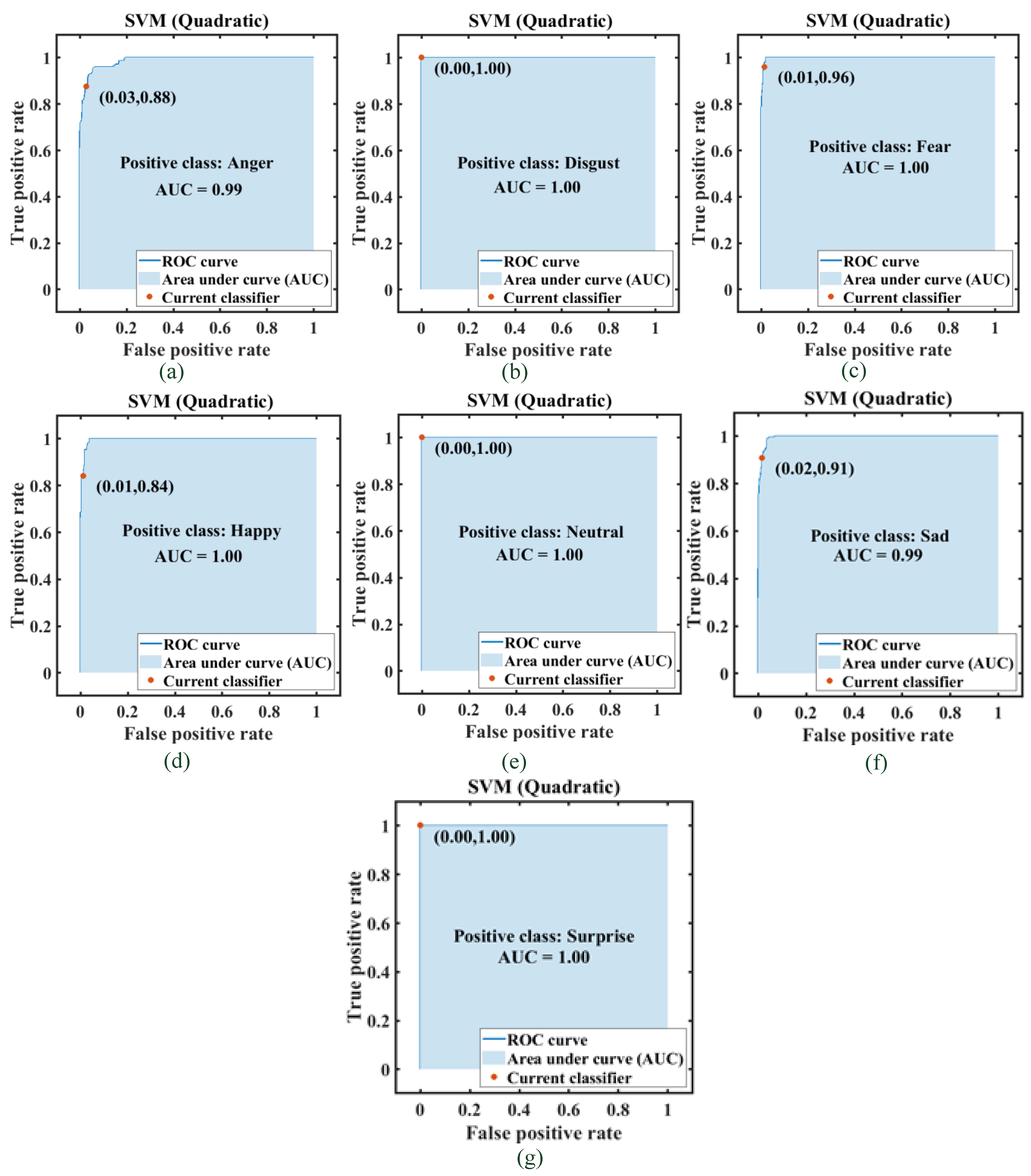

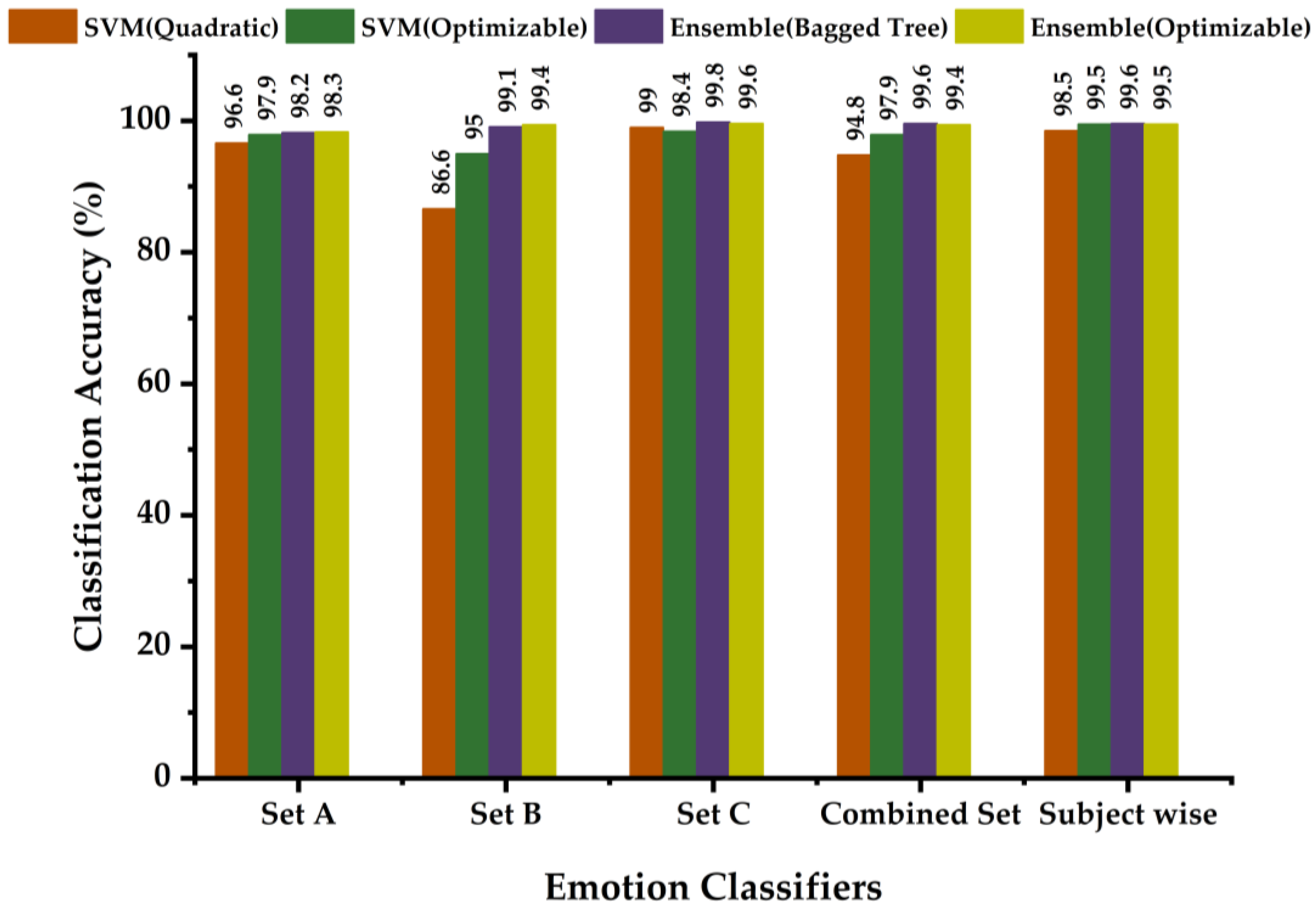

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhu, H.; Xue, M.; Wang, Y.; Yuan, G.; Li, X. Fast Visual Tracking With Siamese Oriented Region Proposal Network. IEEE Signal Process. Lett. 2022, 29, 1437–1441.

- Nie, W.; Bao, Y.; Zhao, Y.; Liu, A. Long Dialogue Emotion Detection Based on Commonsense Knowledge Graph Guidance. IEEE Trans. Multimed. 2023, 1–15.

- Xiong, Z.; Weng, X.; Wei, Y. SandplayAR: Evaluation of psychometric game for people with generalized anxiety disorder. Arts Psychother. 2022, 80, 101934.

- Rayan, R.A.; Zafar, I.; Rajab, H.; Zubair, M.A.M.; Maqbool, M.; Hussain, S. Impact of IoT in Biomedical Applications Using Machine and Deep Learning. In Machine Learning Algorithms for Signal and Image Processing; Wiley: Hoboken, NJ, USA, 2022; pp. 339–360. ISBN 9781119861850.

- Peruzzi, G.; Galli, A.; Pozzebon, A. A Novel Methodology to Remotely and Early Diagnose Sleep Bruxism by Leveraging on Audio Signals and Embedded Machine Learning. In Proceedings of the 2022 IEEE International Symposium on Measurements & Networking (M&N), Padua, Italy, 18–20 July 2022; pp. 1–6.

- Kutsumi, Y.; Kanegawa, N.; Zeida, M.; Matsubara, H.; Murayama, N. Automated Bowel Sound and Motility Analysis with CNN Using a Smartphone. Sensors 2022, 23, 407.

- Redij, R.; Kaur, A.; Muddaloor, P.; Sethi, A.K.; Aedma, K.; Rajagopal, A.; Gopalakrishnan, K.; Yadav, A.; Damani, D.N.; Chedid, V.G.; et al. Practicing Digital Gastroenterology through Phonoenterography Leveraging Artificial Intelligence: Future Perspectives Using Microwave Systems. Sensors 2023, 23, 2302.

- Zgheib, R.; Chahbandarian, G.; Kamalov, F.; El Messiry, H.; Al-Gindy, A. Towards an ML-based semantic IoT for pandemic management: A survey of enabling technologies for COVID-19. Neurocomputing 2023, 528, 160–177.

- Jain, Y.; Gandhi, H.; Burte, A.; Vora, A. Mental and Physical Health Management System Using ML, Computer Vision and IoT Sensor Network. In Proceedings of the 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 5–7 November 2020; pp. 786–791.

- Seng, K.P.; Ang, L.-M.; Ooi, C.S. A Combined Rule-Based & Machine Learning Audio-Visual Emotion Recognition Approach. IEEE Trans. Affect. Comput. 2018, 9, 3–13.

- Wani, T.M.; Gunawan, T.S.; Qadri, S.A.A.; Kartiwi, M.; Ambikairajah, E. A Comprehensive Review of Speech Emotion Recognition Systems. IEEE Access 2021, 9, 47795–47814.

- Hossain, M.S.; Muhammad, G. An Emotion Recognition System for Mobile Applications. IEEE Access 2017, 5, 2281–2287.

- Hamsa, S.; Iraqi, Y.; Shahin, I.; Werghi, N. An Enhanced Emotion Recognition Algorithm Using Pitch Correlogram, Deep Sparse Matrix Representation and Random Forest Classifier. IEEE Access 2021, 9, 87995–88010.

- Hamsa, S.; Shahin, I.; Iraqi, Y.; Werghi, N. Emotion Recognition from Speech Using Wavelet Packet Transform Cochlear Filter Bank and Random Forest Classifier. IEEE Access 2020, 8, 96994–97006.

- Chen, L.; Li, M.; Su, W.; Wu, M.; Hirota, K.; Pedrycz, W. Adaptive Feature Selection-Based AdaBoost-KNN With Direct Optimization for Dynamic Emotion Recognition in Human–Robot Interaction. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 5, 205–213.

- Thuseethan, S.; Rajasegarar, S.; Yearwood, J. Deep Continual Learning for Emerging Emotion Recognition. IEEE Trans. Multimed. 2021, 24, 4367–4380.

- Hameed, H.; Usman, M.; Tahir, A.; Ahmad, K.; Hussain, A.; Imran, M.A.; Abbasi, Q.H. Recognizing British Sign Language Using Deep Learning: A Contactless and Privacy-Preserving Approach. IEEE Trans. Comput. Soc. Syst. 2022, 1–9.

- Yang, D.; Huang, S.; Liu, Y.; Zhang, L. Contextual and Cross-Modal Interaction for Multi-Modal Speech Emotion Recognition. IEEE Signal Process. Lett. 2022, 29, 2093–2097.

- Aljuhani, R.H.; Alshutayri, A.; Alahdal, S. Arabic Speech Emotion Recognition from Saudi Dialect Corpus. IEEE Access 2021, 9, 127081–127085.

- Samadiani, N.; Huang, G.; Hu, Y.; Li, X. Happy Emotion Recognition from Unconstrained Videos Using 3D Hybrid Deep Features. IEEE Access 2021, 9, 35524–35538.

- Islam, M.R.; Moni, M.A.; Islam, M.M.; Rashed-Al-Mahfuz, M.; Islam, M.S.; Hasan, M.K.; Hossain, M.S.; Ahmad, M.; Uddin, S.; Azad, A.; et al. Emotion Recognition from EEG Signal Focusing on Deep Learning and Shallow Learning Techniques. IEEE Access 2021, 9, 94601–94624.

- Torres, E.P.; Torres, E.A.; Hernandez-Alvarez, M.; Yoo, S.G. Emotion Recognition Related to Stock Trading Using Machine Learning Algorithms With Feature Selection. IEEE Access 2020, 8, 199719–199732.

- Song, T.; Zheng, W.; Lu, C.; Zong, Y.; Zhang, X.; Cui, Z. MPED: A Multi-Modal Physiological Emotion Database for Discrete Emotion Recognition. IEEE Access 2019, 7, 12177–12191.

- Sharma, L.D.; Bhattacharyya, A. A Computerized Approach for Automatic Human. IEEE Sens. J. 2021, 21, 26931–26940.

- Li, G.; Ouyang, D.; Yuan, Y.; Li, W.; Guo, Z.; Qu, X.; Green, P. An EEG Data Processing Approach for Emotion Recognition. IEEE Sens. J. 2022, 22, 10751–10763.

- Goshvarpour, A.; Abbasi, A.; Goshvarpour, A. An accurate emotion recognition system using ECG and GSR signals and matching pursuit method. Biomed. J. 2017, 40, 355–368.

- Yin, H.; Yu, S.; Zhang, Y.; Zhou, A.; Wang, X.; Liu, L.; Ma, H.; Liu, J.; Yang, N. Let IoT Knows You Better: User Identification and Emotion Recognition through Millimeter Wave Sensing. IEEE Internet Things J. 2022, 10, 1149–1161.

- Sepúlveda, A.; Castillo, F.; Palma, C.; Rodriguez-Fernandez, M. Emotion Recognition from ECG Signals Using Wavelet Scattering and Machine Learning. Appl. Sci. 2021, 11, 4945.

- Hasnul, M.A.; Aziz, N.A.A.; Alelyani, S.; Mohana, M.; Aziz, A.A. Electrocardiogram-Based Emotion Recognition Systems and Their Applications in Healthcare—A Review. Sensors 2021, 21, 5015.

- Subramanian, R.; Wache, J.; Abadi, M.K.; Vieriu, R.L.; Winkler, S.; Sebe, N. Ascertain: Emotion and personality recognition using commercial sensors. IEEE Trans. Affect. Comput. 2018, 9, 147–160.

- Zhang, X.; Liu, J.; Shen, J.; Li, S.; Hou, K.; Hu, B.; Gao, J.; Zhang, T. Emotion Recognition from Multimodal Physiological Signals Using a Regularized Deep Fusion of Kernel Machine. IEEE Trans. Cybern. 2021, 51, 4386–4399.

- Cimtay, Y.; Ekmekcioglu, E.; Caglar-Ozhan, S. Cross-subject multimodal emotion recognition based on hybrid fusion. IEEE Access 2020, 8, 168865–168878.

- Albraikan, A.; Tobon, D.P.; El Saddik, A. Toward User-Independent Emotion Recognition Using Physiological Signals. IEEE Sens. J. 2019, 19, 8402–8412.

- Awais, M.; Raza, M.; Singh, N.; Bashir, K.; Manzoor, U.; Islam, S.U.; Rodrigues, J.J.P.C. LSTM-Based Emotion Detection Using Physiological Signals: IoT Framework for Healthcare and Distance Learning in COVID-19. IEEE Internet Things J. 2021, 8, 16863–16871.

- Feng, L.; Cheng, C.; Zhao, M.; Deng, H.; Zhang, Y. EEG-Based Emotion Recognition Using Spatial-Temporal Graph Convolutional LSTM With Attention Mechanism. IEEE J. Biomed. Health Inform. 2022, 26, 5406–5417.

- Lopez-Gil, J.M.; Garay-Vitoria, N. Photogram Classification-Based Emotion Recognition. IEEE Access 2021, 9, 136974–136984.

- Zhang, Z.; Wang, X.; Li, P.; Chen, X.; Shao, L. Research on emotion recognition based on ECG signal. J. Phys. Conf. Ser. 2020, 1678, 012091.

- Alam, A.; Ansari, A.Q.; Urooj, S. Design of Contactless Capacitive Electrocardiogram (ECG) Belt System. In Proceedings of the 2022 IEEE Delhi Section Conference (DELCON), New Delhi, India, 11–13 February 2022.

- Miranda-Correa, J.A.; Abadi, M.K.; Sebe, N.; Patras, I. AMIGOS: A Dataset for Affect, Personality and Mood Research on Individuals and Groups. IEEE Trans. Affect. Comput. 2021, 12, 479–493.

- Katsigiannis, S.; Ramzan, N. DREAMER: A Database for Emotion Recognition Through EEG and ECG Signals from Wireless Low-cost off-the-Shelf Devices. IEEE J. Biomed. Health Inform. 2018, 22, 98–107.

- Abadi, M.K.; Subramanian, R.; Kia, S.M.; Avesani, P.; Patras, I.; Sebe, N. DECAF: MEG-Based Multimodal Database for Decoding Affective Physiological Responses. IEEE Trans. Affect. Comput. 2015, 6, 209–222.

- Qadri, S.F.; Lin, H.; Shen, L.; Ahmad, M.; Qadri, S.; Khan, S.; Khan, M.; Zareen, S.S.; Akbar, M.A.; Bin Heyat, M.B.; et al. CT-Based Automatic Spine Segmentation Using Patch-Based Deep Learning. Int. J. Intell. Syst. 2023, 2023, 1–14.

| Reference | Technique | No. of Features | No. of Emotions | Max Accuracy | Contribution | Limitations | Year |
|---|---|---|---|---|---|---|---|
| [13] | RF | Speech datasets | 8 | 89.60% | Emotion recognition from speech signals using WPT cochlear filter bank and random forest classifier | A non-physiological signal can be manipulated by the subject | 2021 |
| [15] | AdaBoost-KNN | Facial expression dataset | 7 | 94.28% | AFS-AdaBoost-KNN-DO; a dynamic facial emotion recognition | Emotions can be masked in a facial expression-based technique | 2021 |
| [25] | KNN, SVM, RF, LR, BN, MLP, CNN, etc. | 2 (PSD and DE) | 3 (positive, neutral, and negative) | 89.63% when using the LR classifier | Real-time emotion recognition system using an EEG signal | Averaging in preprocessing resulted in a loss of emotional information | 2022 |
| [26] | LDA, Neural Network | 5 | 4 | 94.75% | An accurate emotion recognition using ECG and GSR | Few features | 2017 |
| [36] | SGCN (CNN) | 4 | 4 | 96.72% | A deep hybrid network called ST-GCLSTM for EEG-based emotion recognition | Fewer features | 2022 |
| [37] | Parameterized photograms and machine learning | Facial expression dataset | 7 | 99.80% | High accuracy using weak classifiers on publicly available datasets | Facial expression can be controlled by the subject | 2021 |
| [38] | SVM, CART, and KNN | 8 | 4 | 97% (angry) | ECG signal correlation features in emotion recognition | Accuracies are lower for all emotions except ‘angry’ | 2020 |
| Proposed work | SVM and RF | 10 | 7 | 98% | ECG-based emotion classifier model, highly accurate, high prediction speed | Signals such as EEG, EMG, BR, and skin conductance can be explored as a unimodal feature | - |

| Dataset | Number of Subjects | Male:Female Ratio | Average Age (yrs.) |
|---|---|---|---|
| A | 15 | 3:2 | 23 |
| B | 15 | 3:2 | 36 |
| C | 15 | 3:2 | 44 |
| Combined | 45 | 3:2 | 34 |

| Dataset Type (Typical Sizes) | ECG Data Points (per Emotion per Subject) | ECG Data Points (per Subject) | ECG Data Points (for All Subjects) | ECG Feature Dataset |
|---|---|---|---|---|
| Set A | 10,240 | 71,680 | 1,075,200 | 4200 × 10 |
| Set B | 10,240 | 71,680 | 1,075,200 | 4200 × 10 |
| Set C | 10,240 | 71,680 | 1,075,200 | 4200 × 10 |
| Combined Set | 10,240 | 71,680 | 3,225,600 | 12,600 × 10 |
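
The sizes in this table follow from the acquisition parameters stated elsewhere in the article (256 Hz sampling, 40 s signal duration, seven emotions, 15 subjects per set, three sets). The short Python check below reproduces them. The last step is an inference rather than something the source states: 4200 feature rows per set divided by 105 recordings gives 40 rows per recording, which would be consistent with one feature vector per second of signal.

```python
FS_HZ = 256            # sampling frequency
STIMULUS_S = 40        # analysed signal duration per clip
N_EMOTIONS = 7
SUBJECTS_PER_SET = 15
N_SETS = 3
FEATURE_ROWS_PER_SET = 4200   # from the table (4200 x 10)

points_per_recording = FS_HZ * STIMULUS_S                 # per emotion per subject
points_per_subject = points_per_recording * N_EMOTIONS
points_per_set = points_per_subject * SUBJECTS_PER_SET
points_combined = points_per_set * N_SETS

recordings_per_set = SUBJECTS_PER_SET * N_EMOTIONS
# Inferred, not stated in the source: feature rows per recording.
rows_per_recording = FEATURE_ROWS_PER_SET // recordings_per_set

print(points_per_recording)   # 10240
print(points_per_subject)     # 71680
print(points_per_set)         # 1075200
print(points_combined)        # 3225600
print(rows_per_recording)     # 40
```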

| S. No. | ECG Feature Name | Parameter | Mathematical Expression (N = number of samples; sampling frequency fs = 256 Hz; signal duration T = 40 s) |
|---|---|---|---|
| 1 | F1 | Mean | |
| 2 | F2 | Standard Deviation | |
| 3 | F3 | Variance | |
| 4 | F4 | Minimum Value | |
| 5 | F5 | Maximum Value | |
| 6 | F6 | Skewness | |
| 7 | F7 | Kurtosis | |
| 8 | F8 | First-Degree Difference | |
| 9 | F9 | Heart Rate (BPM) | |
| 10 | F10 | RRmean | |
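
The expression column of this table did not survive extraction. For orientation only, the conventional definitions of the ten features are given below for a signal $x_1, \dots, x_N$ with $M$ RR intervals $\mathrm{RR}_j$ measured in seconds. These are textbook forms, not necessarily the authors' exact expressions: the $1/N$ normalization, the use of the mean absolute difference for the first-degree difference, and the symbol names are all assumptions.

```latex
\begin{align*}
F_1 &= \mu = \frac{1}{N}\sum_{i=1}^{N} x_i
  && \text{(mean)} \\
F_2 &= \sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N} (x_i - \mu)^2}
  && \text{(standard deviation)} \\
F_3 &= \sigma^2
  && \text{(variance)} \\
F_4 &= \min_{1 \le i \le N} x_i
  && \text{(minimum value)} \\
F_5 &= \max_{1 \le i \le N} x_i
  && \text{(maximum value)} \\
F_6 &= \frac{1}{N}\sum_{i=1}^{N} \left(\frac{x_i - \mu}{\sigma}\right)^{3}
  && \text{(skewness)} \\
F_7 &= \frac{1}{N}\sum_{i=1}^{N} \left(\frac{x_i - \mu}{\sigma}\right)^{4}
  && \text{(kurtosis)} \\
F_8 &= \frac{1}{N-1}\sum_{i=1}^{N-1} \left| x_{i+1} - x_i \right|
  && \text{(first-degree difference)} \\
F_{10} &= \mathrm{RR}_{\mathrm{mean}} = \frac{1}{M}\sum_{j=1}^{M} \mathrm{RR}_j
  && \text{(mean RR interval, s)} \\
F_9 &= \frac{60}{\mathrm{RR}_{\mathrm{mean}}}
  && \text{(heart rate, BPM)}
\end{align*}
```

With $f_s = 256$ Hz and $T = 40$ s, a full recording contains $N = f_s T = 10{,}240$ samples.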

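| Dataset Type | Classifier | Model Type | Average Accuracy in Emotion Recognition (%) |
|---|---|---|---|
| Set A ECG Feature Data | Support Vector Machine (SVM) | Quadratic | 96.6 |
| Set A ECG Feature Data | Support Vector Machine (SVM) | Optimizable | 97.9 |
| Set A ECG Feature Data | Random Forest (RF) | Ensemble Bagged Tree | 98.2 |
| Set A ECG Feature Data | Random Forest (RF) | Ensemble Optimizable | 98.3 |
| Set B ECG Feature Data | Support Vector Machine (SVM) | Quadratic | 86.6 |
| Set B ECG Feature Data | Support Vector Machine (SVM) | Optimizable | 95.0 |
| Set B ECG Feature Data | Random Forest (RF) | Ensemble Bagged Tree | 99.1 |
| Set B ECG Feature Data | Random Forest (RF) | Ensemble Optimizable | 99.4 |
| Set C ECG Feature Data | Support Vector Machine (SVM) | Quadratic | 99.0 |
| Set C ECG Feature Data | Support Vector Machine (SVM) | Optimizable | 98.4 |
| Set C ECG Feature Data | Random Forest (RF) | Ensemble Bagged Tree | 99.8 |
| Set C ECG Feature Data | Random Forest (RF) | Ensemble Optimizable | 99.6 |
| Set A, B, and C combined ECG Feature Data | Support Vector Machine (SVM) | Quadratic | 94.8 |
| Set A, B, and C combined ECG Feature Data | Support Vector Machine (SVM) | Optimizable | 97.9 |
| Set A, B, and C combined ECG Feature Data | Random Forest (RF) | Ensemble Bagged Tree | 99.6 |
| Set A, B, and C combined ECG Feature Data | Random Forest (RF) | Ensemble Optimizable | 99.4 |
| Subject-wise ECG Feature Data | Support Vector Machine (SVM) | Quadratic | 98.5 |
| Subject-wise ECG Feature Data | Support Vector Machine (SVM) | Optimizable | 99.5 |
| Subject-wise ECG Feature Data | Random Forest (RF) | Ensemble Bagged Tree | 99.6 |
| Subject-wise ECG Feature Data | Random Forest (RF) | Ensemble Optimizable | 99.5 |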

| Dataset Type | Classifier | Model Type | Average Training Time (s), 10-Fold Cross-Validation | Prediction Speed (obs/s), 10-Fold Cross-Validation | Minimum Classification Error, 10-Fold Cross-Validation |
|---|---|---|---|---|---|
| Set A ECG Feature Data | Support Vector Machine (SVM) | Quadratic | 5.078 | 12,000 | - |
| Set A ECG Feature Data | Support Vector Machine (SVM) | Optimizable | 113.56 | 20,000 | 0.03 |
| Set A ECG Feature Data | Random Forest (RF) | Ensemble Bagged Tree | 3.4884 | 8900 | - |
| Set A ECG Feature Data | Random Forest (RF) | Ensemble Optimizable | 111.58 | 21,000 | 0.00127 |
| Set B ECG Feature Data | Support Vector Machine (SVM) | Quadratic | 1.7669 | 16,000 | - |
| Set B ECG Feature Data | Support Vector Machine (SVM) | Optimizable | 666.76 | 18,000 | 0.02 |
| Set B ECG Feature Data | Random Forest (RF) | Ensemble Bagged Tree | 2.1453 | 10,000 | - |
| Set B ECG Feature Data | Random Forest (RF) | Ensemble Optimizable | 119.88 | 1900 | 0.0001 |
| Set C ECG Feature Data | Support Vector Machine (SVM) | Quadratic | 1.8198 | 14,000 | - |
| Set C ECG Feature Data | Support Vector Machine (SVM) | Optimizable | 492.72 | 20,000 | 0.015 |
| Set C ECG Feature Data | Random Forest (RF) | Ensemble Bagged Tree | 2.2686 | 11,000 | - |
| Set C ECG Feature Data | Random Forest (RF) | Ensemble Optimizable | 108.32 | 2100 | 0.0007 |
| Set A, B, and C combined ECG Feature Data | Support Vector Machine (SVM) | Quadratic | 8.5107 | 22,000 | - |
| Set A, B, and C combined ECG Feature Data | Support Vector Machine (SVM) | Optimizable | 2110.8 | 34,000 | 0.002 |
| Set A, B, and C combined ECG Feature Data | Random Forest (RF) | Ensemble Bagged Tree | 5.0359 | 16,000 | - |
| Set A, B, and C combined ECG Feature Data | Random Forest (RF) | Ensemble Optimizable | 181.74 | 7200 | 0.002 |
| Subject-wise ECG Feature Data | Support Vector Machine (SVM) | Quadratic | 1.4402 | 5900 | - |
| Subject-wise ECG Feature Data | Support Vector Machine (SVM) | Optimizable | 237.31 | 3700 | 0.0137 |
| Subject-wise ECG Feature Data | Random Forest (RF) | Ensemble Bagged Tree | 2.093 | 2900 | - |
| Subject-wise ECG Feature Data | Random Forest (RF) | Ensemble Optimizable | 98.879 | 5600 | 0.0125 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alam, A.; Urooj, S.; Ansari, A.Q. Design and Development of a Non-Contact ECG-Based Human Emotion Recognition System Using SVM and RF Classifiers. Diagnostics 2023, 13, 2097. https://doi.org/10.3390/diagnostics13122097
