Hybrid Deep Learning Approach for Stress Detection Using Decomposed EEG Signals

Abstract

1. Introduction

1.1. Machine Learning/Deep Learning for Classification of EEG Signal

| Classifier | Volunteers/Subjects | Feature Engineering (Domain) | Pros of Classifier | Cons of Classifier | Accuracy |
|---|---|---|---|---|---|
| SVM | 15 volunteers [41] | Correlation analysis (Time) | Works effectively when classes are well separated | Unsuitable for large data sets | 86.94% |
| MLP | 33 subjects, eyes-open and eyes-closed conditions [44] | Neurophysiological features (Time) | Handles non-linear data efficiently | Classification is computationally complex and time-consuming | 85.20% |
| SVM | EEG dataset of 6 subjects [45] | Hilbert-Huang transform (Time-Frequency) | - | Performs poorly when target classes overlap due to noise | 89.07% |
| LR | Features from 4 EEG channels of 27 subjects [38] | Band power (Frequency) | Works well when data are linearly separable | Requires little or no multicollinearity among independent variables | 98.76% |
| SVM | 17 patients [46] | NIL | - | - | 90.0% |
| SVM | 34 patients [47] | Band power (Frequency) | - | Needs extensive testing, such as cross-validation | 85.0% |
| NB | 48 practice patterns [48] | DWT (Time and frequency) | Processes high-dimensional data efficiently | Struggles to predict minority-class data | 91.60% |
| DL Network | 32 samples [49] | Power spectral density (Frequency) | Learns relevant features without manual feature engineering | Performance depends on the training data and can decline across diverse hardware resources | 53.42% |
| RF | 17 scalp and 10 intracranial patients [50] | DWT (Time and frequency) | Automatically selects a subset of features at each split, mitigating the curse of dimensionality and the effect of irrelevant features | Low-cardinality features may be less important or require preprocessing to avoid bias | 62.00% |
| FL | 19 patients [51] | Band power (Frequency) | Permits rules that account for varying degrees of uncertainty and exceptions | Complex fuzzy systems demand more processing and memory, making them unsuitable for real-time or resource-constrained applications | 91.80% |
| KNN | 32 healthy subjects only [52] | DWT (Time and frequency) | Detects linear and non-linear relationships in the data | Struggles with class imbalance | 95.69% |
| Long Short-Term Memory (LSTM) | 32 EEG channels of 32 subjects [53] | Band power (Frequency) | Captures long-term dependencies and alleviates the vanishing gradient problem typical of standard RNNs, making training and optimization simpler | Susceptible to overfitting, especially when the model has many parameters and the training data are limited | 94.69% |
| Bidirectional LSTM-LSTM (BiLSTM-LSTM) | 14 EEG channels of 48 subjects [54] | Power spectral density (Frequency) | Processes data in both directions, capturing both past and future context | Requires a large amount of data to train successfully, which is a problem when labelled data are scarce | 97.80% |
| LR, NN, RNN | 1488 abnormal and 1529 normal patients [55] | Raw EEG | RNNs can learn context from previous inputs | RNNs suffer from the vanishing gradient problem | RNN achieved 3.47% higher |
| VGG16-CNN | 16 and 19 channels from 45 and 28 subjects, respectively [35] | Continuous wavelet transform (Time-Frequency) | Can serve as a feature extractor or as a starting point for transfer learning | Requires more computation than more streamlined CNN architectures such as ResNet or Inception | 98% |
| 2D-CNN-LSTM | 5 channels from 60 subjects [36] | Raw EEG | In the hybrid model, CNNs automatically learn hierarchical features from the input, while LSTM networks handle temporal variations and long-term dependencies in sequential data | Susceptible to overfitting, especially when the model has many parameters and the training data are limited | 72.55% |
| CNN + LSTM | Features from 60 EEG channels of 54 subjects [27] | Fuzzy entropy and fast Fourier transform (Frequency) | The hybrid DL model performs well on high-dimensional data | The hybrid DL model requires long training time | 99.22% |

1.2. Research Contributions

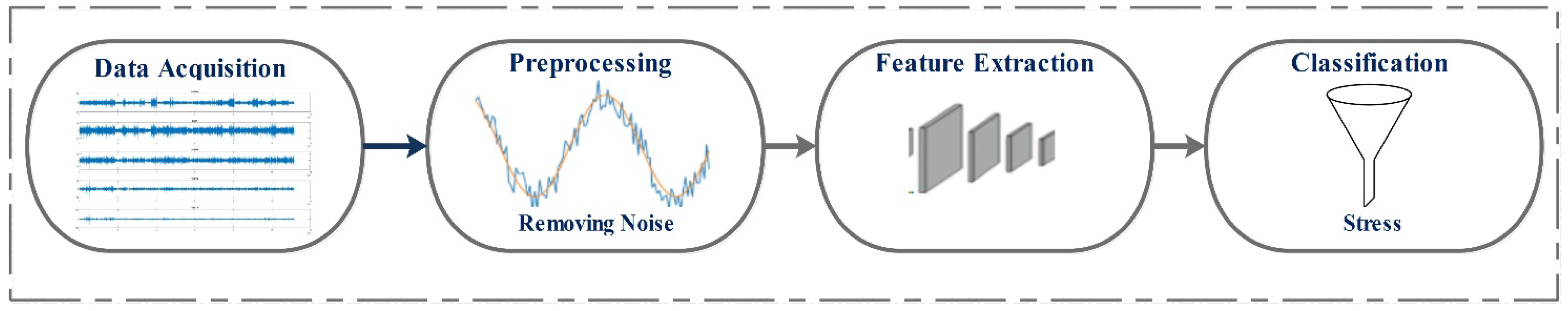

2. Approaches and Data Description

2.1. Dataset Descriptions

- (a) Apply a 1 Hz high-pass filter to the raw data.
- (b) Remove the line noise.
- (c) Perform artifact subspace reconstruction (ASR).
- (d) Re-reference the data to the common average.
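The four preprocessing steps can be sketched in NumPy as follows. This is a simplified illustration, not the pipeline used to prepare the data: it assumes a 128 Hz sampling rate and 50 Hz line noise (both assumptions here), replaces the filters of standard EEG toolboxes with an ideal FFT-domain mask, and omits step (c), since artifact subspace reconstruction needs a calibrated clean reference segment. All function names are hypothetical.

```python
import numpy as np


def fft_highpass_notch(eeg, fs, low_cut=1.0, notch_hz=50.0, notch_width=1.0):
    """Steps (a) and (b): zero every FFT bin below `low_cut` Hz and every bin
    within `notch_width` Hz of the assumed line frequency `notch_hz`.
    `eeg` has shape (channels, samples)."""
    n_samples = eeg.shape[-1]
    spectrum = np.fft.rfft(eeg, axis=-1)
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    keep = (freqs >= low_cut) & (np.abs(freqs - notch_hz) > notch_width)
    return np.fft.irfft(spectrum * keep, n=n_samples, axis=-1)


def average_reference(eeg):
    """Step (d): subtract the mean across channels at every sample."""
    return eeg - eeg.mean(axis=0, keepdims=True)


def preprocess(eeg, fs=128.0):
    filtered = fft_highpass_notch(eeg, fs)
    # Step (c), artifact subspace reconstruction, is not implemented here.
    return average_reference(filtered)


# Synthetic 3-channel, 10 s recording: DC offset, 0.3 Hz drift,
# a 10 Hz rhythm whose amplitude differs per channel, and 50 Hz hum.
fs = 128.0
t = np.arange(int(10 * fs)) / fs
amplitudes = np.array([1.0, 2.0, 3.0])[:, None]
raw = (5.0 + np.sin(2 * np.pi * 0.3 * t)
       + amplitudes * np.sin(2 * np.pi * 10.0 * t)
       + 0.5 * np.sin(2 * np.pi * 50.0 * t))
clean = preprocess(raw, fs)
```

Because the synthetic 10 Hz rhythm differs in amplitude across channels, its channel-specific part survives the average reference, while the offset, drift, and 50 Hz hum are removed. An ideal spectral mask causes ringing on real, non-periodic recordings, which is why toolboxes use designed FIR or IIR filters instead.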

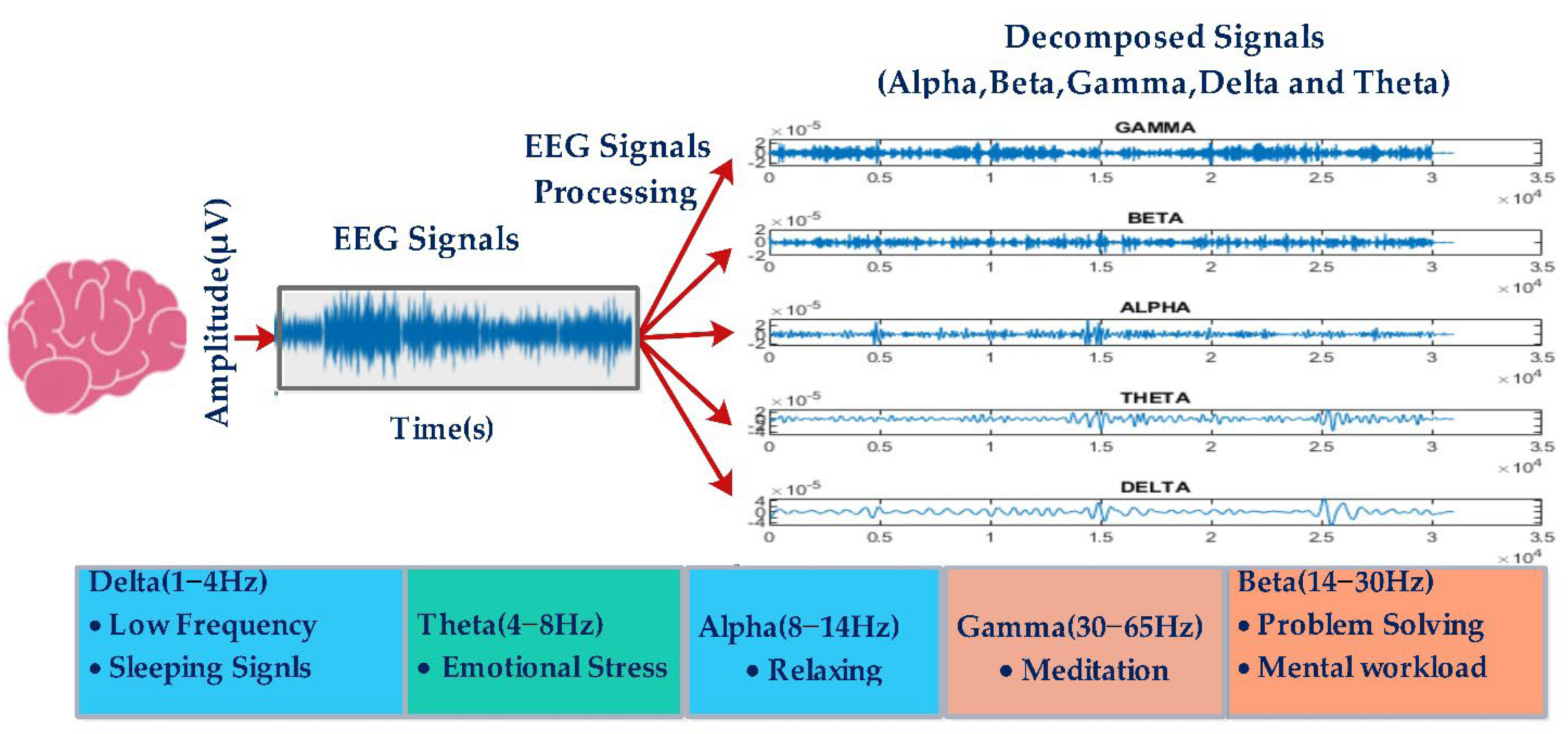

2.2. Discrete Wavelet Transform
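As an illustration of how a DWT splits an EEG signal into sub-bands, the sketch below implements a multi-level Haar decomposition in NumPy. The Haar wavelet, the four-level depth, and the 128 Hz sampling rate are assumptions made for this example only; the mother wavelet and decomposition level used in the study are not restated here. In practice a library routine such as PyWavelets' `pywt.wavedec` would normally be used, and the function names below are hypothetical.

```python
import numpy as np


def haar_dwt_level(x):
    """One level of the orthonormal Haar DWT along the last axis.
    Returns (approximation, detail); the input length must be even."""
    even, odd = x[..., 0::2], x[..., 1::2]
    approx = (even + odd) / np.sqrt(2.0)  # low-pass half-band
    detail = (even - odd) / np.sqrt(2.0)  # high-pass half-band
    return approx, detail


def haar_wavedec(x, levels):
    """Multi-level decomposition returning [cA_L, cD_L, ..., cD_1],
    the same coefficient ordering used by pywt.wavedec."""
    coeffs = []
    approx = np.asarray(x, dtype=float)
    for _ in range(levels):
        approx, detail = haar_dwt_level(approx)
        coeffs.append(detail)
    coeffs.append(approx)
    return coeffs[::-1]


fs = 128.0
t = np.arange(512) / fs
signal = np.sin(2 * np.pi * 10.0 * t)  # 10 Hz test tone
cA4, cD4, cD3, cD2, cD1 = haar_wavedec(signal, levels=4)
```

At 128 Hz and four levels, cD1 to cD4 nominally cover 32–64, 16–32, 8–16, and 4–8 Hz, and cA4 covers 0–4 Hz, roughly the gamma, beta, alpha, theta, and delta bands. The Haar filters have wide transition regions, so these band edges are only approximate; smoother wavelets separate the bands more cleanly.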

2.3. Convolutional Neural Network (CNN)

2.4. Recurrent Neural Networks (RNN)

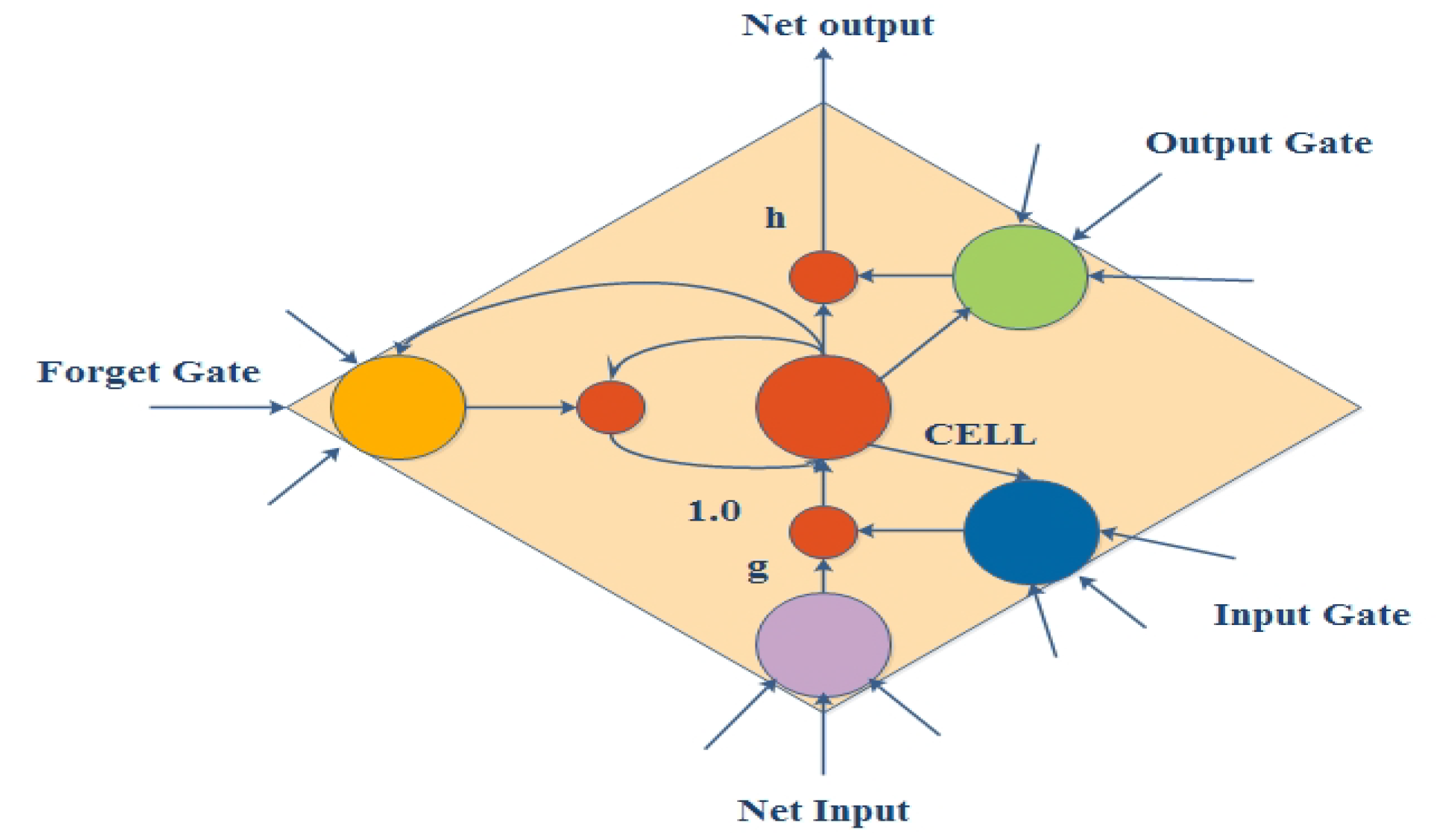

2.5. Long Short-Term Memory (LSTM)

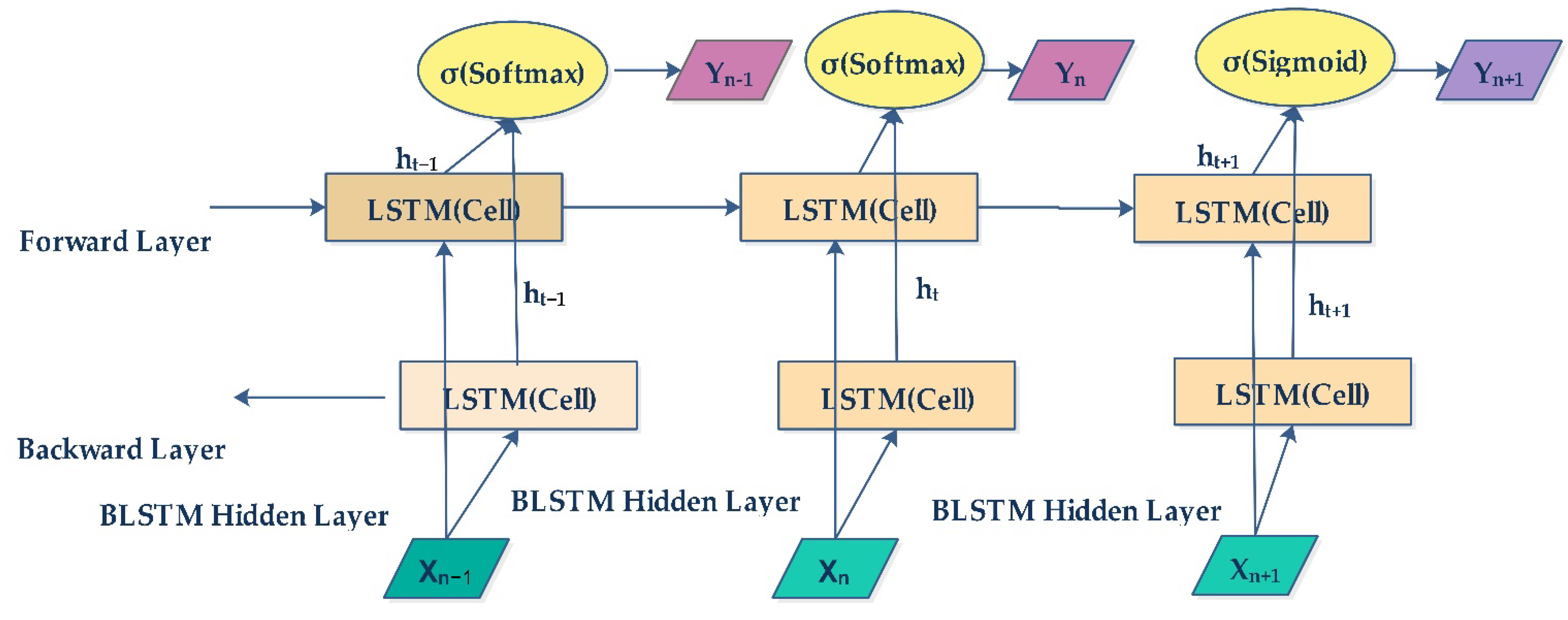

2.6. Bidirectional LSTM

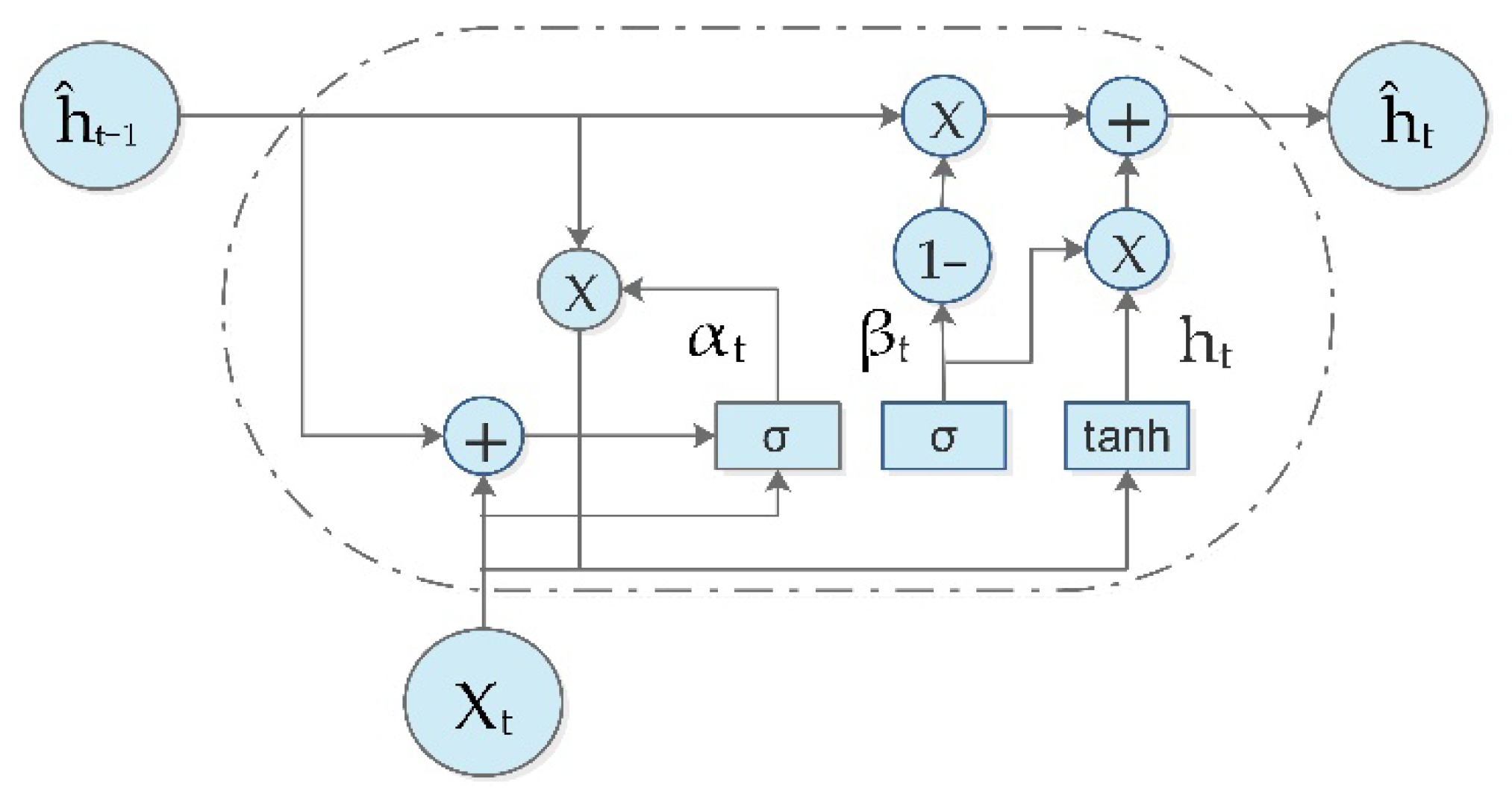

2.7. Gated Recurrent Units (GRU)

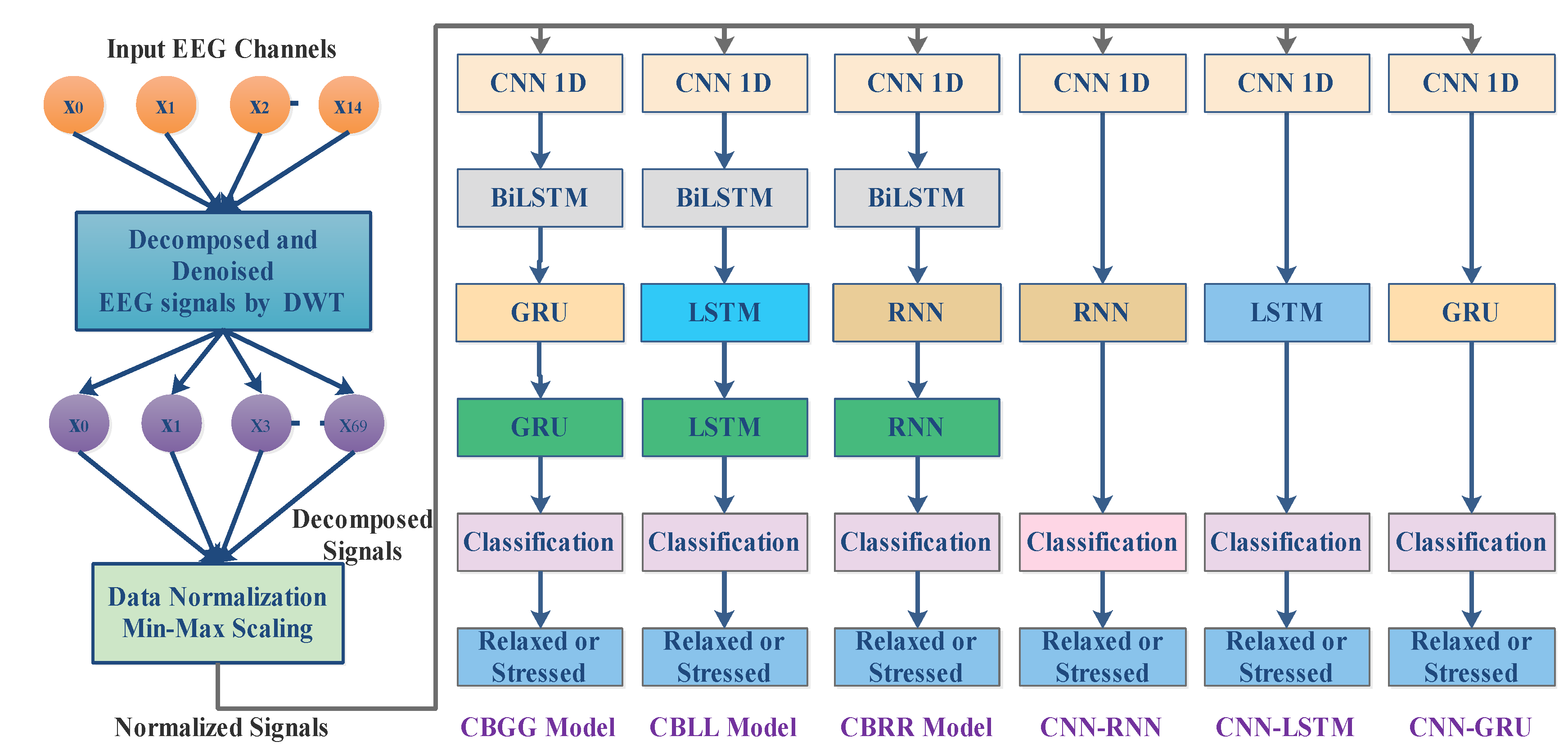

3. The DWT-Based Hybrid DL Models

4. Results and Analysis

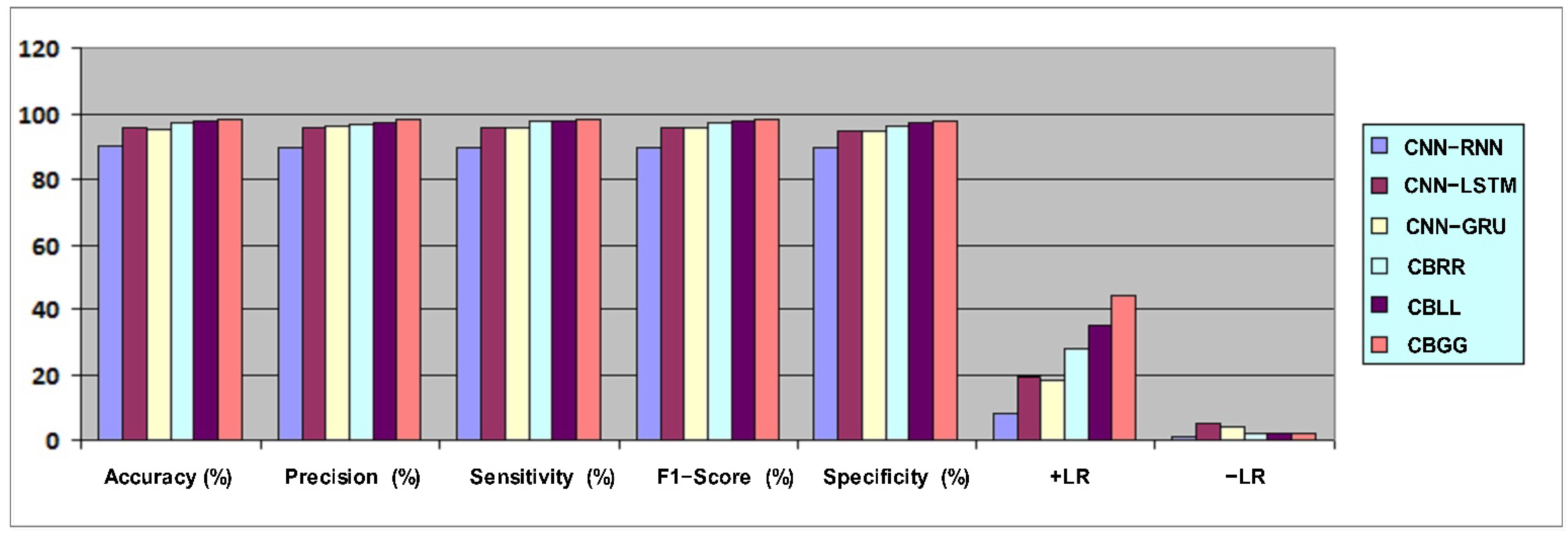

4.1. Metrics-Based Performance

4.2. Performance Evaluation

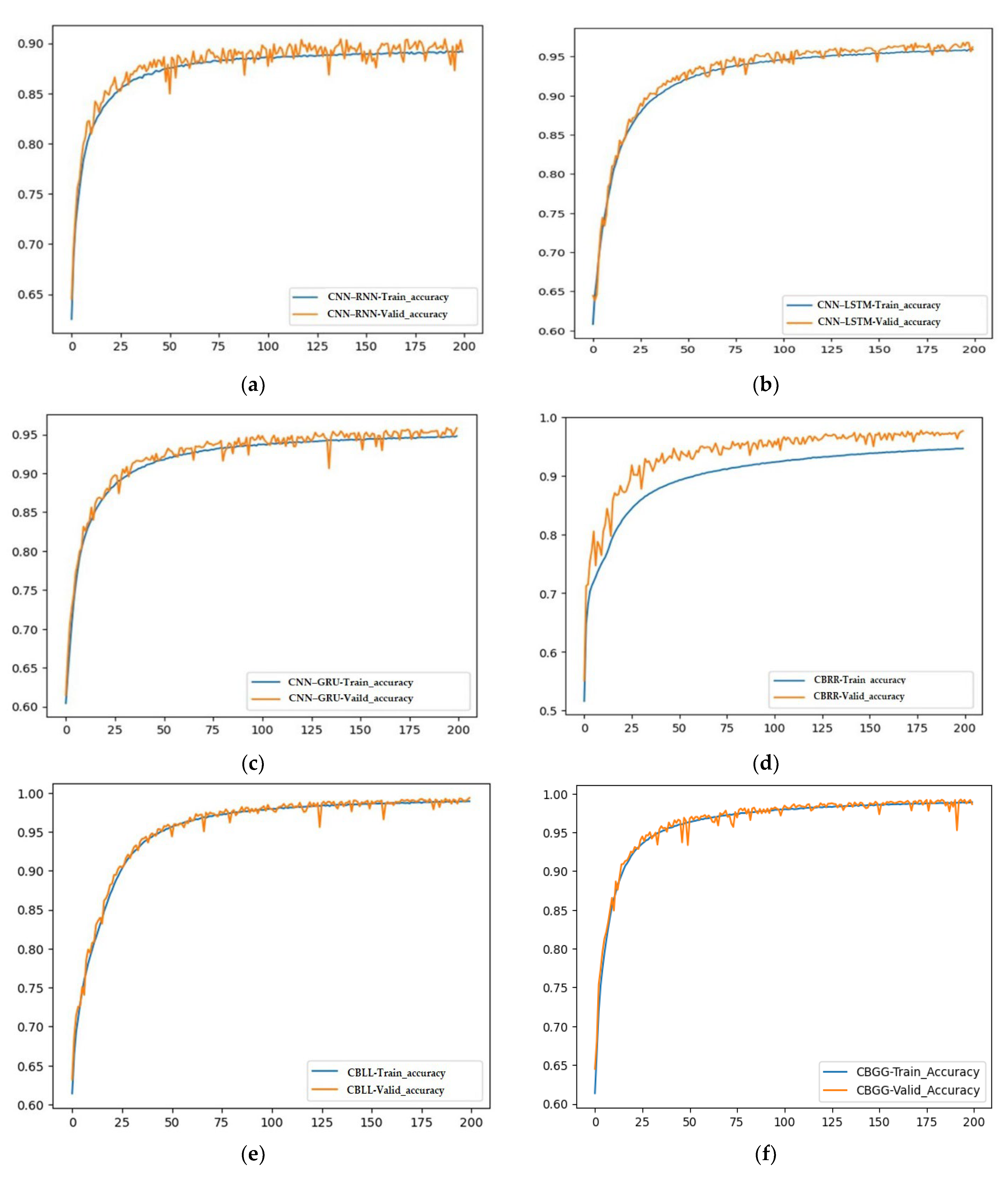

4.3. Convergence Curve Analysis

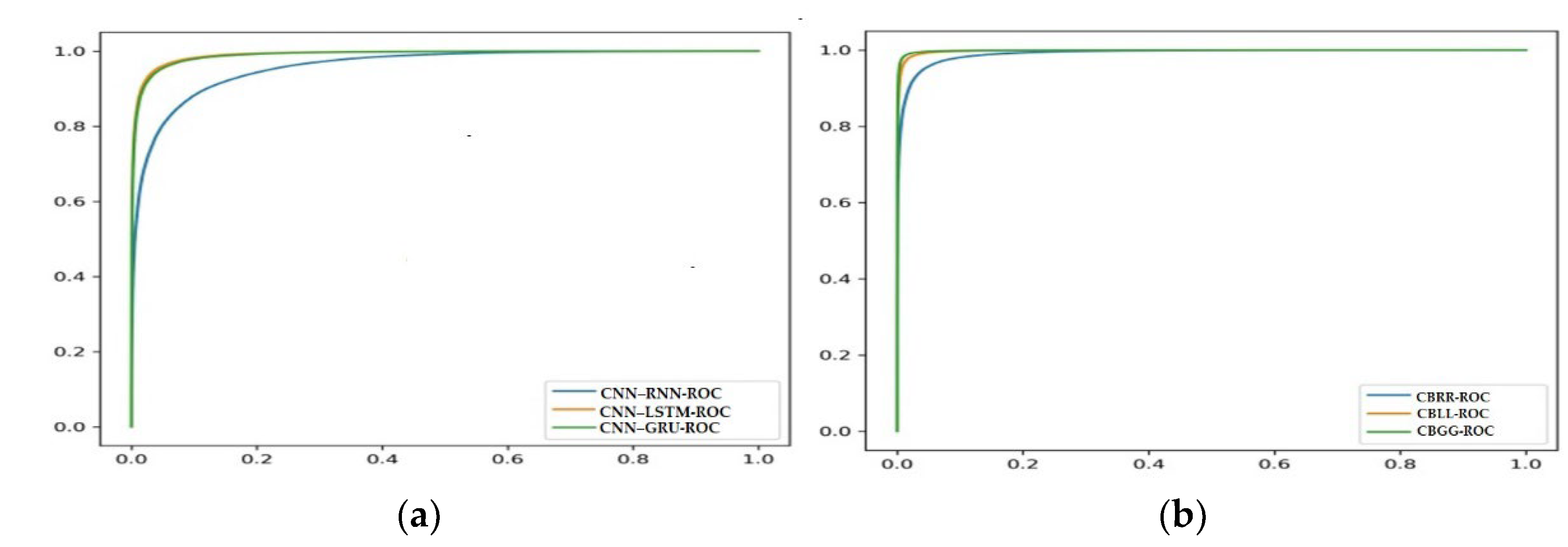

4.4. Receiver Operating Characteristic (ROC) Curve Analysis of Models

4.5. Comparison of Proposed and Existing Works

4.6. Validation of Proposed Model

4.7. Limitations of the Proposed Hybrid DL Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sharma, R.; Chopra, K. EEG signal analysis and detection of stress using classification techniques. J. Inf. Optim. Sci. 2020, 41, 229–238.

- Mikhno, I.; Koval, V.; Ternavskyi, A. Strategic management of healthcare institution development of the national medical services market. ACCESS Access Sci. Bus. Innov. Digit. Econ. 2020, 1, 157–170.

- Qadri, A.; Yan, H. To promote entrepreneurship: Factors that influence the success of women entrepreneurs in Pakistan. Access J. 2023, 4, 155–167.

- Singh, S.K.; Singh, S.S.; Singh, V.L. Predicting adoption of next generation digital technology utilizing the adoption-diffusion model fit: The case of mobile payments interface in an emerging economy. Access J. 2023, 4, 130–148.

- Cheema, A.; Singh, M. Psychological stress detection using phonocardiography signal: An empirical mode decomposition approach. Biomed. Signal Process. Control. 2019, 49, 493–505.

- Petrova, M.; Tairov, I. Solutions to Manage Smart Cities’ Risks in Times of Pandemic Crisis. Risks 2022, 10, 240.

- Salankar, N.; Koundal, D.; Qaisar, S.M. Stress classification by multimodal physiological signals using variational mode decomposition and machine learning. J. Health Eng. 2021, 2021, 2146369.

- AlShorman, O.; Masadeh, M.; Bin Heyat, B.; Akhtar, F.; Almahasneh, H.; Ashraf, G.; Alexiou, A. Frontal lobe real-time EEG analysis using machine learning techniques for mental stress detection. J. Integr. Neurosci. 2022, 21, 20.

- Hasan, M.J.; Kim, J.M. A hybrid feature pool-based emotional stress state detection algorithm using EEG signals. Brain Sci. 2019, 9, 376.

- AlShorman, O.; Alshorman, B.; Alkahtani, F. A review of wearable sensors based monitoring with daily physical activity to manage type 2 diabetes. Int. J. Electr. Comput. Eng. 2021, 11, 646–653.

- Dushanova, J.; Christov, M. The effect of aging on EEG brain oscillations related to sensory and sensorimotor functions. Adv. Med. Sci. 2014, 59, 61–67.

- Mason, A.E.; Adler, J.M.; Puterman, E.; Lakmazaheri, A.; Brucker, M.; Aschbacher, K.; Epel, E.S. Stress resilience: Narrative identity may buffer the longitudinal effects of chronic caregiving stress on mental health and telomere shortening. Brain Behav. Immun. 2019, 77, 101–109.

- Belleau, E.L.; Treadway, M.T.; Pizzagalli, D.A. The Impact of Stress and Major Depressive Disorder on Hippocampal and Medial Prefrontal Cortex Morphology. Biol. Psychiatry 2019, 85, 443–453.

- Fernández, J.R.; Anishchenko, L. Mental stress detection using bioradar respiratory signals. Biomed. Signal Process. Control. 2018, 43, 244–249.

- Heyat, M.B.; Hasan, Y.M.; Siddiqui, M.M. EEG signals and wireless transfer of EEG Signals. Int. J. Adv. Res. Comput. Commun. Eng. 2015, 4, 10–12.

- Bakhshayesh, H.; Fitzgibbon, S.; Janani, A.S.; Grummett, T.S.; Pope, K. Detecting synchrony in EEG: A comparative study of functional connectivity measures. Comput. Biol. Med. 2019, 105, 1–15.

- Heyat, M.B.; Siddiqui, M.M. Recording of EEG, ECG, EMG signal. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2015, 5, 813–815.

- Pal, R.; Heyat, M.B.; You, Z.; Pardhan, B.; Akhtar, F.; Abbas, S.J.; Guragai, B.; Acharya, K. Effect of Maha Mrityunjaya HYMN recitation on human brain for the analysis of single EEG channel C4-A1 using machine learning classifiers on yoga practitioner. In Proceedings of the 2020 17th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP), Chengdu, China, 18–20 December 2020; pp. 89–92.

- Cea-Canas, B.; Gomez-Pilar, J.; Nunez, P.; Rodriguez-Vazquez, E.; de Uribe, N.; Diez, A.; Perez-Escudero, A.; Molina, V. Connectivity strength of the EEG functional network in schizophrenia and bipolar disorder. Prog. Neuro-Psychopharmacol. Biol. Psychiatry 2020, 98, 109801.

- Dushanova, J.A.; Tsokov, S.A. Small-world EEG network analysis of functional connectivity in developmental dyslexia after visual training intervention. J. Integr. Neurosci. 2020, 19, 601–618.

- Olson, E.A.; Cui, J.; Fukunaga, R.; Nickerson, L.D.; Rauch, S.L.; Rosso, I.M. Disruption of white matter structural integrity and connectivity in posttraumatic stress disorder: A TBSS and tractography study. Depress. Anxiety 2017, 34, 437–445.

- Zubair, M.; Yoon, C. Multilevel mental stress detection using ultra-short pulse rate variability series. Biomed. Signal Process. Control. 2020, 57, 101736.

- Goodman, R.N.; Rietschel, J.C.; Lo, L.-C.; Costanzo, M.E.; Hatfield, B.D. Stress, emotion regulation and cognitive performance: The predictive contributions of trait and state relative frontal EEG alpha asymmetry. Int. J. Psychophysiol. 2013, 87, 115–123.

- Luján, M.; Jimeno, M.V.; Sotos, J.M.; Ricarte, J.J.; Borja, A.L. A Survey on EEG Signal Processing Techniques and Machine Learning: Applications to the Neurofeedback of Autobiographical Memory Deficits in Schizophrenia. Electronics 2021, 10, 3037.

- Hosseini, M.-P.; Hosseini, A.; Ahi, K. A Review on Machine Learning for EEG Signal Processing in Bioengineering. IEEE Rev. Biomed. Eng. 2020, 14, 204–218.

- Aggarwal, S.; Chugh, N. Review of machine learning techniques for EEG based brain computer interface. Arch. Comput. Methods Eng. 2022, 29, 3001–3020.

- Sun, J.; Cao, R.; Zhou, M.; Hussain, W.; Bin Wang, B.; Xue, J.; Xiang, J. A hybrid deep neural network for classification of schizophrenia using EEG Data. Sci. Rep. 2021, 11, 4706.

- Zuo, X.-N. A machine learning window into brain waves. Neuroscience 2020, 436, 167–169.

- Najafzadeh, H.; Esmaeili, M.; Farhang, S.; Sarbaz, Y.; Rasta, S.H. Automatic classification of schizophrenia patients using resting-state EEG signals. Phys. Eng. Sci. Med. 2021, 44, 855–870.

- Barros, C.; Silva, C.A.; Pinheiro, A.P. Advanced EEG-based learning approaches to predict schizophrenia: Promises and pitfalls. Artif. Intell. Med. 2021, 114, 102039.

- Vázquez, M.A.; Maghsoudi, A.; Mariño, I.P. An Interpretable Machine Learning Method for the Detection of Schizophrenia Using EEG Signals. Front. Syst. Neurosci. 2021, 15, 652662.

- Mortaga, M.; Brenner, A.; Kutafina, E. Towards interpretable machine learning in EEG analysis. In German Medical Data Sciences 2021: Digital Medicine: Recognize–Understand–Heal 2021; IOS Press: Amsterdam, The Netherlands, 2021; pp. 32–38.

- da Silva Lourenço, C.; Tjepkema-Cloostermans, M.C.; van Putten, M.J. Machine learning for detection of interictal epileptiform discharges. Clin. Neurophysiol. 2021, 132, 1433–1443.

- Gao, Z.; Dang, W.; Wang, X.; Hong, X.; Hou, L.; Ma, K.; Perc, M. Complex networks and deep learning for EEG signal analysis. Cogn. Neurodyn. 2021, 15, 369–388.

- Aslan, Z.; Akin, M. A deep learning approach in automated detection of schizophrenia using scalogram images of EEG signals. Phys. Eng. Sci. Med. 2022, 45, 83–96.

- Ahmedt-Aristizabal, D.; Fernando, T.; Denman, S.; Robinson, J.E.; Sridharan, S.; Johnston, P.J.; Laurens, K.R.; Fookes, C. Identification of Children at Risk of Schizophrenia via Deep Learning and EEG Responses. IEEE J. Biomed. Health Inform. 2020, 25, 69–76.

- Nikolaev, D.; Petrova, M. Application of Simple Convolutional Neural Networks in Equity Price Estimation. In Proceedings of the 2021 IEEE 8th International Conference on Problems of Infocommunications, Science and Technology (PIC S&T), Kharkiv, Ukraine, 5–7 October 2021; pp. 147–150.

- Asif, A.; Majid, M.; Anwar, S.M. Human stress classification using EEG signals in response to music tracks. Comput. Biol. Med. 2019, 107, 182–196.

- Ranjith, C.; Arunkumar, B. An improved elman neural network based stress detection from EEG signals and reduction of stress using music. Int. J. Eng. Res. Technol. 2019, 12, 16–23.

- Dyachenko, Y.; Nenkov, N.; Petrova, M.; Skarga-Bandurova, I.; Soloviov, O. Approaches to cognitive architecture of autonomous intelligent agent. Biol. Inspired Cogn. Arch. 2018, 26, 130–135.

- Betti, S.; Lova, R.M.; Rovini, E.; Acerbi, G.; Santarelli, L.; Cabiati, M.; Del Ry, S.; Cavallo, F. Evaluation of an Integrated System of Wearable Physiological Sensors for Stress Monitoring in Working Environments by Using Biological Markers. IEEE Trans. Biomed. Eng. 2017, 65, 1748–1758.

- Attallah, O. An Effective Mental Stress State Detection and Evaluation System Using Minimum Number of Frontal Brain Electrodes. Diagnostics 2020, 10, 292.

- Ahani, A.; Wahbeh, H.; Nezamfar, H.; Miller, M.; Erdogmus, D.; Oken, B. Quantitative change of EEG and respiration signals during mindfulness meditation. J. Neuroeng. Rehabil. 2014, 11, 87.

- Şeker, M.; Özbek, Y.; Yener, G.; Özerdem, M.S. Complexity of EEG dynamics for early diagnosis of Alzheimer’s disease using permutation entropy neuromarker. Comput. Methods Programs Biomed. 2021, 206, 106116.

- Vanitha, V.; Krishnan, P. Real time stress detection system based on EEG signals. Biomed. Res. 2016, 2017 (Suppl. S1), S271–S275.

- Aghajani, H.; Garbey, M.; Omurtag, A. Measuring mental workload with EEG+ fNIRS. Front. Hum. Neurosci. 2017, 11, 359.

- Aydin, S.; Arica, N.; Ergul, E.; Tan, O. Classification of obsessive compulsive disorder by EEG complexity and hemispheric dependency measurements. Int. J. Neural Syst. 2015, 25, 1550010.

- Amin, H.U.; Mumtaz, W.; Subhani, A.R.; Saad, M.N.M.; Malik, A.S. Classification of EEG Signals Based on Pattern Recognition Approach. Front. Comput. Neurosci. 2017, 11, 103.

- Jirayucharoensak, S.; Pan-Ngum, S.; Israsena, P. EEG-Based Emotion Recognition Using Deep Learning Network with Principal Component Based Covariate Shift Adaptation. Sci. World J. 2014, 2014, 627892.

- Le Douget, J.E.; Fouad, A.; Filali, M.M.; Pyrzowski, J.; Le Van Quyen, M. Surface and intracranial EEG spike detection based on discrete wavelet decomposition and random forest classification. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Republic of Korea, 11–15 July 2017; pp. 475–478.

- Amezquita-Sanchez, J.P.; Mammone, N.; Morabito, F.C.; Adeli, H. A New dispersion entropy and fuzzy logic system methodology for automated classification of dementia stages using electroencephalograms. Clin. Neurol. Neurosurg. 2021, 201, 106446.

- Li, M.; Xu, H.; Liu, X.; Lu, S. Emotion recognition from multichannel EEG signals using K-nearest neighbor classification. Technol. Health Care 2018, 26, 509–519.

- Nath, D.; Singh, M.; Sethia, D.; Kalra, D.; Indu, S. An efficient approach to EEG-based emotion recognition using LSTM network. In Proceedings of the 2020 16th IEEE International Colloquium on Signal Processing & Its Applications (CSPA), Langkawi, Malaysia, 28–29 February 2020; pp. 88–92.

- Das Chakladar, D.; Dey, S.; Roy, P.P.; Dogra, D.P. EEG-based mental workload estimation using deep BLSTM-LSTM network and evolutionary algorithm. Biomed. Signal Process. Control. 2020, 60, 101989.

- Roy, S.; Kiral-Kornek, I.; Harrer, S. ChronoNet: A deep recurrent neural network for abnormal EEG identification. In Proceedings of the Artificial Intelligence in Medicine: 17th Conference on Artificial Intelligence in Medicine, AIME 2019, Poznan, Poland, 26–29 June 2019; pp. 47–56.

- Nicolas-Alonso, L.F.; Gomez-Gil, J. Brain computer interfaces, a review. Sensors 2012, 12, 1211–1279.

- Lim, W.L.; Sourina, O.; Wang, L.P. STEW: Simultaneous task EEG workload data set. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2106–2114.

- Lakshmi, M.R.; Prasad, T.V.; Prakash, D.V. Survey on EEG signal processing methods. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2014, 4, 84–91.

- Malviya, L.; Mal, S. CIS feature selection based dynamic ensemble selection model for human stress detection from EEG signals. Clust. Comput. 2023, 1–15.

- Ji, N.; Ma, L.; Dong, H.; Zhang, X. EEG Signals Feature Extraction Based on DWT and EMD Combined with Approximate Entropy. Brain Sci. 2019, 9, 201.

- Aamir, M.; Pu, Y.-F.; Rahman, Z.; Tahir, M.; Naeem, H.; Dai, Q. A Framework for Automatic Building Detection from Low-Contrast Satellite Images. Symmetry 2018, 11, 3.

- Wu, H.; Niu, Y.; Li, F.; Li, Y.; Fu, B.; Shi, G.; Dong, M. A Parallel Multiscale Filter Bank Convolutional Neural Networks for Motor Imagery EEG Classification. Front. Neurosci. 2019, 13, 1275.

- Mohseni, M.; Shalchyan, V.; Jochumsen, M.; Niazi, I.K. Upper limb complex movements decoding from pre-movement EEG signals using wavelet common spatial patterns. Comput. Methods Programs Biomed. 2020, 183, 105076.

- Smagulova, K.; James, A.P. Overview of long short-term memory neural networks. In Deep Learning Classifiers with Memristive Networks: Theory and Applications; Springer: Cham, Switzerland, 2020; pp. 139–153.

- Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.

- Kumar, D.; Singh, A.; Samui, P.; Jha, R.K. Forecasting monthly precipitation using sequential modelling. Hydrol. Sci. J. 2019, 64, 690–700.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Yang, J.; Huang, X.; Wu, H.; Yang, X. EEG-based emotion classification based on Bidirectional Long Short-Term Memory Network. Procedia Comput. Sci. 2020, 174, 491–504.

- Malviya, L.; Mal, S. A novel technique for stress detection from EEG signal using hybrid deep learning model. Neural Comput. Appl. 2022, 34, 19819–19830.

- Abuqaddom, I.; Mahafzah, B.A.; Faris, H. Oriented stochastic loss descent algorithm to train very deep multi-layer neural networks without vanishing gradients. Knowl.-Based Syst. 2021, 230, 107391.

- Graves, A. Supervised Sequence Labelling. In Supervised Sequence Labelling with Recurrent Neural Networks; Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2012; pp. 37–45.

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Geldiev, E.M.; Nenkov, N.V.; Petrova, M.M. Exercise of machine learning using some python tools and techniques. CBU Int. Conf. Proc. 2018, 6, 1062–1070.

- Faust, O.; Acharya, U.R.; Adeli, H.; Adeli, A. Wavelet-based EEG processing for computer-aided seizure detection and epilepsy diagnosis. Seizure 2015, 26, 56–64.

- Ismail, A.R.; Asfour, S.S. Discrete wavelet transform: A tool in smoothing kinematic data. J. Biomech. 1999, 32, 317–321.

- Acharya, U.R.; Oh, S.L.; Hagiwara, Y.; Tan, J.H.; Adeli, H. Deep convolutional neural network for the automated detection and diagnosis of seizure using EEG signals. Comput. Biol. Med. 2018, 100, 270–278.

| Model | Sequential Layers | Layer Parameters | Total Parameters |
|---|---|---|---|
| CBRR | CNN-1D → MaxPooling1D → BiLSTM → RNN → RNN → Dropout | CNN-1D: filters = 128, kernel size = 1, padding = valid, activation = softmax; MaxPooling1D: pool size = 1; BiLSTM: units = 64; RNN: units = 32; RNN: units = 16; Dropout: rate = 0.2 | 112,927 |
| CBLL | CNN-1D → MaxPooling1D → BiLSTM → LSTM → LSTM → Dropout | CNN-1D: filters = 128, kernel size = 1, padding = valid, activation = softmax; MaxPooling1D: pool size = 1; BiLSTM: units = 64; LSTM: units = 32; LSTM: units = 16; Dropout: rate = 0.2 | 153,681 |
| CBGG | CNN-1D → MaxPooling1D → BiLSTM → GRU → GRU → Dropout | CNN-1D: filters = 128, kernel size = 1, padding = valid, activation = softmax; MaxPooling1D: pool size = 1; BiLSTM: units = 64; GRU: units = 32; GRU: units = 16; Dropout: rate = 0.2 | 117,657 |
| CNN-RNN | CNN-1D → MaxPooling1D → RNN → Dropout | CNN-1D: filters = 128, kernel size = 1, padding = valid, activation = softmax; MaxPooling1D: pool size = 1; RNN: units = 64; Dropout: rate = 0.2 | 12,673 |
| CNN-LSTM | CNN-1D → MaxPooling1D → LSTM → Dropout | CNN-1D: filters = 128, kernel size = 1, padding = valid, activation = softmax; MaxPooling1D: pool size = 1; LSTM: units = 64; Dropout: rate = 0.2 | 49,729 |
| CNN-GRU | CNN-1D → MaxPooling1D → GRU → Dropout | CNN-1D: filters = 128, kernel size = 1, padding = valid, activation = softmax; MaxPooling1D: pool size = 1; GRU: units = 64; Dropout: rate = 0.2 | 37,569 |
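The totals for the three single-recurrent-layer baselines can be checked by hand. The sketch below rests on assumptions not stated in the table: each time step carries one input feature, the recurrent layer has 64 units, the network ends in a one-unit dense output layer, and the GRU follows the Keras default `reset_after=True` convention (two bias vectors per gate). Pooling with a pool size of 1 and dropout add no trainable weights. The helper names are hypothetical.

```python
def conv1d_params(in_channels, filters, kernel_size=1):
    # One kernel per (input channel, filter) pair plus one bias per filter.
    return kernel_size * in_channels * filters + filters


def simple_rnn_params(in_dim, units):
    # Input weights + recurrent weights + bias.
    return in_dim * units + units * units + units


def lstm_params(in_dim, units):
    # Four gates, each shaped like a simple RNN layer.
    return 4 * simple_rnn_params(in_dim, units)


def gru_params(in_dim, units):
    # Three gates; reset_after=True keeps separate input and recurrent biases.
    return 3 * (in_dim * units + units * units + 2 * units)


def dense_params(in_dim, units):
    return in_dim * units + units


def baseline_total(recurrent_params):
    """Conv1D(128, k=1) on 1 feature -> MaxPooling1D(1) -> recurrent(64)
    -> Dropout(0.2) -> Dense(1)."""
    return (conv1d_params(1, 128)
            + recurrent_params(128, 64)
            + dense_params(64, 1))


totals = {
    "CNN-RNN": baseline_total(simple_rnn_params),
    "CNN-LSTM": baseline_total(lstm_params),
    "CNN-GRU": baseline_total(gru_params),
}
for name, count in totals.items():
    print(f"{name}: {count:,}")
```

Under these assumptions the counts come to 12,673, 49,729, and 37,569, matching the table. The CBRR, CBLL, and CBGG totals depend on layer details that the table does not give, so they are not reconstructed here.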

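| Parameter | Formula |
|---|---|
| Precision | $\frac{TP}{TP + FP}$ |
| Sensitivity | $\frac{TP}{TP + FN}$ |
| Specificity | $\frac{TN}{TN + FP}$ |
| F1-Score | $\frac{2 \times \text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}$ |
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Positive Likelihood Ratio (+LR) | $\frac{\text{Sensitivity}}{1 - \text{Specificity}}$ |
| Negative Likelihood Ratio (−LR) | $\frac{1 - \text{Sensitivity}}{\text{Specificity}}$ |

Here TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.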
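The metric definitions can be evaluated directly from confusion-matrix counts, as in the sketch below. It assumes a binary task with the stressed class treated as positive; the counts in the example are invented for illustration and are not results from the study.

```python
import math


def binary_metrics(tp, fp, tn, fn):
    """Compute the tabulated metrics from confusion-matrix counts
    (tp/fp/tn/fn = true/false positives and true/false negatives)."""
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)   # true-positive rate (recall)
    specificity = tn / (tn + fp)   # true-negative rate
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    # +LR is unbounded when no negative is misclassified (specificity = 1).
    pos_lr = sensitivity / (1 - specificity) if specificity < 1 else math.inf
    neg_lr = (1 - sensitivity) / specificity
    return {
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
        "accuracy": accuracy,
        "+LR": pos_lr,
        "-LR": neg_lr,
    }


# Hypothetical counts: 50 positive and 50 negative test segments.
metrics = binary_metrics(tp=45, fp=10, tn=40, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these counts, sensitivity is 0.9 and specificity 0.8, so +LR = 0.9 / 0.2 = 4.5 and −LR = 0.1 / 0.8 = 0.125.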

| Model | Accuracy (%) | Precision (%) | Sensitivity (%) | F1-Score (%) | Specificity (%) | +LR | −LR |
|---|---|---|---|---|---|---|---|
| CNN–RNN | 89.91 | 89.70 | 89.83 | 89.80 | 89.80 | 8 | 0.12 |
| CNN–LSTM | 95.60 | 95.90 | 95.64 | 95.90 | 94.90 | 19 | 0.05 |
| CNN–GRU | 95.20 | 96.09 | 95.77 | 95.50 | 94.70 | 18 | 0.04 |
| CBRR | 97.10 | 96.53 | 97.74 | 97.14 | 96.45 | 28 | 0.02 |
| CBLL | 97.54 | 97.32 | 97.80 | 97.56 | 97.27 | 35 | 0.02 |
| CBGG | 98.10 | 98.27 | 98.08 | 98.20 | 97.76 | 44 | 0.02 |
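As a consistency check, the likelihood ratios can be recomputed from the sensitivity and specificity columns using the definitions of +LR and −LR. This sketch assumes the tabulated ratios were derived from those same two columns; the recomputed values agree with the table to within about one unit for +LR and 0.01 for −LR, and the remaining gaps presumably reflect rounding or truncation of the reported figures.

```python
# Sensitivity (%), specificity (%), tabulated +LR, tabulated -LR.
reported = {
    "CNN-RNN": (89.83, 89.80, 8, 0.12),
    "CNN-LSTM": (95.64, 94.90, 19, 0.05),
    "CNN-GRU": (95.77, 94.70, 18, 0.04),
    "CBRR": (97.74, 96.45, 28, 0.02),
    "CBLL": (97.80, 97.27, 35, 0.02),
    "CBGG": (98.08, 97.76, 44, 0.02),
}


def likelihood_ratios(sens_pct, spec_pct):
    """Return (+LR, -LR) from sensitivity and specificity in percent."""
    sens, spec = sens_pct / 100.0, spec_pct / 100.0
    return sens / (1.0 - spec), (1.0 - sens) / spec


recomputed = {}
for model, (sens, spec, table_pos, table_neg) in reported.items():
    pos_lr, neg_lr = likelihood_ratios(sens, spec)
    recomputed[model] = (pos_lr, neg_lr)
    print(f"{model:9s} +LR {pos_lr:6.2f} (table {table_pos})  "
          f"-LR {neg_lr:.3f} (table {table_neg})")
```

The recomputed +LR values also preserve the table's ranking, with CBGG highest.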

| Feature Extraction/Selection | Classifier | Accuracy | Cross-Validation |
|---|---|---|---|
| Particle Swarm Optimization (PSO) [46] | BiLSTM, LSTM | 86.33% | - |
| PSD features via FFT [48] | KNN, SVM | 69.00% | - |
| CNN-based features from DWT signals | CBGG | 98.10% | 97.60% (Stratified 10-Fold) |
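Stratified k-fold cross-validation keeps the class proportions of each test fold close to those of the full dataset. The NumPy sketch below illustrates the idea: it shuffles each class separately and deals its samples round-robin across folds. It is not necessarily how the study's folds were built; scikit-learn's `StratifiedKFold` provides a maintained implementation, and the function name and example labels here are hypothetical.

```python
import numpy as np


def stratified_kfold_indices(labels, n_splits=10, seed=0):
    """Yield (train_idx, test_idx) pairs whose test folds preserve the
    class proportions of `labels` as closely as possible."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(labels), dtype=int)
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        # Deal this class's samples round-robin so each fold gets an equal share.
        fold_of[idx] = np.arange(len(idx)) % n_splits
    for k in range(n_splits):
        yield np.flatnonzero(fold_of != k), np.flatnonzero(fold_of == k)


# Hypothetical labels: 60 "relaxed" (0) and 40 "stressed" (1) segments.
labels = np.array([0] * 60 + [1] * 40)
folds = list(stratified_kfold_indices(labels, n_splits=10))
for train, test in folds[:2]:
    print(len(train), len(test), np.bincount(labels[test]))
```

Here every test fold holds 6 relaxed and 4 stressed segments, mirroring the 60:40 split of the whole set, and each sample appears in exactly one test fold.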

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Roy, B.; Malviya, L.; Kumar, R.; Mal, S.; Kumar, A.; Bhowmik, T.; Hu, J.W. Hybrid Deep Learning Approach for Stress Detection Using Decomposed EEG Signals. Diagnostics 2023, 13, 1936. https://doi.org/10.3390/diagnostics13111936
